18

Case Studies: Deep Learning in Remote Sensing

Emily Jenifer A. and Sudha N.*

SASTRA Deemed University, Thanjavur, India

Abstract

Interpreting the data captured by earth observation satellites is a recent and interesting application of deep learning in remote sensing. The satellite data may be one or more of the following: (i) a synthetic aperture radar image, (ii) a panchromatic image with high spatial resolution, (iii) a multispectral image with good spectral resolution, and (iv) hyperspectral data with high spatial and spectral resolution. Traditional approaches based on standard image processing techniques are limited in their ability to process huge volumes of high-resolution remote sensing data. Machine learning has become a powerful alternative for processing such data. With the advent of GPUs, computational power has increased severalfold, which, in turn, supports the training of deep neural networks with many layers. This chapter presents the different deep learning networks applied to remotely sensed image processing. While individual deep networks have shown promise for remote sensing, there are scenarios where combining two networks is valuable. Of late, hybrid deep neural network architectures for processing multi-sensor data have attracted interest as a way to improve performance. This chapter details a few hybrid architectures as well.

Keywords: Deep learning, remote sensing, neural network, satellite image, fusion

18.1 Introduction

Remote sensing involves the acquisition of earth observation data using a satellite or an unmanned aerial vehicle (UAV). Interpreting remotely sensed images provides essential information in a variety of applications such as land cover classification, damage assessment during disasters, monitoring crop growth, and urban planning.

Traditional approaches for interpreting remotely sensed data apply standard image processing techniques such as texture analysis and transformations. The huge volume of remote sensing data limits these approaches. Deep learning is a powerful alternative for dealing with such data volumes and has shown great potential in improving segmentation and classification performance in remote sensing [1]. Deep learning is achieved using deep neural networks. With the advent of graphics processing units (GPUs), computational power has increased severalfold, allowing deep neural networks to be trained in a short period of time.

A variety of deep neural networks have found applications in remote sensing. One of them is the convolutional neural network (CNN). A CNN convolves a remotely sensed image with learnt filter kernels to compute feature maps. CNNs are used for land cover classification, crop classification, super-resolution, pan-sharpening, and denoising hyperspectral images. Another category of deep networks, namely, recurrent neural networks (RNNs), has recurrent feedback connections and is largely used for analysing time-series data. In remote sensing, RNNs are useful for change detection in multi-temporal images. Studying crop growth dynamics and assessing damage during disasters are typical applications of these networks. Autoencoders (AEs) perform excellent feature abstraction. They are used for remote sensing image classification to achieve high accuracy and also in pan-sharpening. Deep networks such as generative adversarial networks (GANs) are used to generate super-resolution images, pan-sharpened images, and cloud-free images.
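As a minimal sketch of how a convolutional layer turns an image into feature maps, the NumPy snippet below slides a bank of kernels over a multi-band patch and applies a ReLU. The function name `conv2d_valid`, the patch size, and the random kernels (standing in for learnt ones) are illustrative assumptions and are not taken from any of the cited works.

```python
import numpy as np

def conv2d_valid(image, kernels):
    """Cross-correlate an (H, W, C) image with (K, kh, kw, C) kernels.

    Returns an (H - kh + 1, W - kw + 1, K) stack of feature maps after ReLU.
    """
    H, W, C = image.shape
    K, kh, kw, kc = kernels.shape
    assert kc == C, "kernel depth must match the number of image bands"
    out = np.zeros((H - kh + 1, W - kw + 1, K))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            window = image[i:i + kh, j:j + kw, :]
            # Sum over kernel height, width and bands for every kernel at once.
            out[i, j, :] = np.tensordot(kernels, window, axes=([1, 2, 3], [0, 1, 2]))
    return np.maximum(out, 0.0)  # ReLU non-linearity

rng = np.random.default_rng(0)
patch = rng.random((8, 8, 4))            # hypothetical 4-band image patch
kernels = rng.normal(size=(2, 3, 3, 4))  # two 3x3 kernels (random, not learnt)
feature_maps = conv2d_valid(patch, kernels)
print(feature_maps.shape)  # (6, 6, 2)
```

In a trained CNN the kernel values are learnt by backpropagation, and deep learning frameworks replace the explicit loops with optimised routines.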

Although individual networks have shown promise for remote sensing, there are certain scenarios where combining two different networks is valuable. In assessing the crop damage caused by a cyclone, a CNN can be used to identify the agricultural land while an RNN can be used to detect the change due to damage. Further, fusing images from different sensors helps to obtain better accuracy, as one modality can complement the other. Recent research focuses on fusing evidence from different deep neural network architectures as well as data from multiple sensors to improve performance.

Section 18.2 provides the motivation behind the application of deep learning in remote sensing. Section 18.3 presents various deep neural networks used for interpreting remote sensing data. Section 18.4 describes the hybrid architectures for processing data from multiple sensors. Section 18.5 concludes the chapter.

18.2 Need for Deep Learning in Remote Sensing

In recent years, deep learning has proved to be a very successful technique. Deep networks sometimes surpass even humans in solving computationally intensive tasks. Due to these successes and the availability of high-performance computational resources, deep learning has become the model of choice in many application areas. In the field of remote sensing, satellite data poses some new challenges for deep learning. The data is multimodal, geo-tagged, time-stamped, and voluminous. Remote sensing scientists have exploited the power of deep learning to address these challenges.

Satellite images are captured with a variety of sensors. Each data modality has its own merits and demerits. Performance improves considerably when data from multiple sensors are fused in a way that meets the needs of a particular application. Traditionally, fusion is achieved by extracting features from the images. Feature extraction is, however, a complex procedure. Image fusion algorithms based on machine learning have been developed in recent years to overcome this complexity, and they have given promising results. Among the various methods available for fusion, deep learning is a recent and highly effective one. The various architectures developed so far for deep fusion in remote sensing are reviewed in this chapter.

Deep learning has played a key role in data processing in many remote sensing applications. The applications involve agriculture, geology, disaster assessment, urban planning, and remote-sensed image processing in general. The different deep learning architectures and their roles in remote-sensed data processing are discussed next.

18.3 Deep Neural Networks for Interpreting Earth Observation Data

18.3.1 Convolutional Neural Network

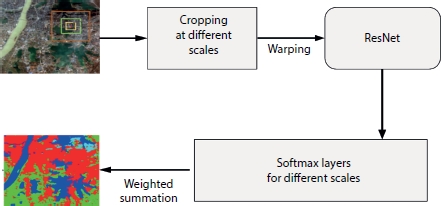

The CNN is the most successful deep learning network for image classification and segmentation, and it has emerged as a powerful tool by providing outstanding performance in these computer vision tasks. As the number of remote sensing satellites equipped with a variety of sensors increases, CNN-based approaches can benefit from the large amount of data available. The CNN has been effectively applied to remote sensing for object detection and image segmentation. Building [2] and aircraft [3] detection have been achieved using CNNs. The final fully connected layer generally performs the classification task. The CNN has, therefore, been largely used for land use and land cover classification [4–7]. One such multi-scale classification using ResNet [8] is shown in Figure 18.1. Ground objects such as buildings, farmlands, water areas, and forests show different characteristics at different resolutions; thus, single-scale observation cannot exploit the features effectively. ResNet-50 was adopted by the authors due to its low computational complexity compared to other pre-trained models. It has 16 residual blocks, each with three convolutional layers, and its default input size is 224 × 224 × 3. The multi-scale patches are assigned land cover category labels through the pre-trained ResNet-50, and the labels are combined with the boundary segmentation for an efficient final projection of the land cover. Pan-sharpening is another important application of the CNN [9].

Figure 18.1 Land cover classification using CNN.
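The multi-scale idea can be illustrated with a small NumPy sketch that crops square patches of several sizes around one pixel and resizes each to the 224 × 224 input expected by ResNet-50. The scale values, the nearest-neighbour resizing, the reflect padding, and the function names are assumptions made for illustration; they are not the settings reported in [8].

```python
import numpy as np

def resize_nearest(patch, size):
    """Nearest-neighbour resize of an (h, w, c) patch to (size, size, c)."""
    h, w = patch.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return patch[rows[:, None], cols[None, :], :]

def multiscale_patches(image, row, col, scales=(56, 112, 224), out_size=224):
    """Crop square patches of several sizes centred on (row, col).

    Every patch is resized to (out_size, out_size, c) so that the same
    pre-trained network can process all scales.
    """
    pad = max(scales) // 2
    # Reflect-pad so that patches near the image border stay inside the array.
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    r, c = row + pad, col + pad
    patches = []
    for s in scales:
        half = s // 2
        crop = padded[r - half:r - half + s, c - half:c - half + s, :]
        patches.append(resize_nearest(crop, out_size))
    return np.stack(patches)  # (n_scales, out_size, out_size, c)

rng = np.random.default_rng(0)
image = rng.random((300, 300, 3))   # hypothetical 3-band scene
patches = multiscale_patches(image, row=10, col=290)
print(patches.shape)  # (3, 224, 224, 3)
```

Each resized patch would then be passed to the pre-trained classifier, and the per-scale predictions combined; that step is omitted here.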

18.3.2 Autoencoder

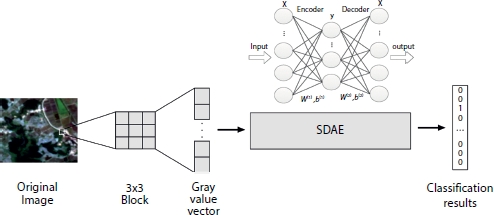

The autoencoder (AE) is an unsupervised deep learning model that performs a non-linear transformation. The non-linear function learns the hidden structure of the unlabeled data. An AE consists of an encoder network that reduces the dimension of the given input and a decoder network that reconstructs the input. Typical applications of AEs in remote sensing are dimensionality reduction [10] and feature learning [11]. AEs also perform classification efficiently with unlabeled data [12]. Specifically, they have been applied to snow cover mapping [13]. An image classifier using a stacked denoising autoencoder (SDAE) [14] is shown in Figure 18.2. The SDAE is based on the concept of adding noise to the input of each encoder layer and forcing the network to learn all the important features. This creates a stable network that is robust to noise. The SDAE has a two-stage learning process. Initially, the unlabeled inputs are sent to the SDAE, and the features are learnt with a greedy layer-wise approach for unsupervised training. This is followed by supervised training of a back-propagation layer with labeled data for classification.

Figure 18.2 Remote sensing image classifier using stacked denoising autoencoder.
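The unsupervised stage can be sketched in NumPy as follows: each layer is trained to reconstruct the clean input from a noise-corrupted copy, and the layers are trained greedily, one after another. This is a minimal illustration with assumed hyperparameters (`noise_std`, `lr`, `epochs`), synthetic spectra, and hypothetical function names; it is not the network of [14], and the supervised fine-tuning stage is omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_denoising_layer(X, n_hidden, noise_std=0.1, lr=0.5, epochs=300, seed=0):
    """Train one denoising autoencoder layer on the rows of X (values in [0, 1])."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.1, (d, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.1, (n_hidden, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        X_noisy = X + rng.normal(0.0, noise_std, X.shape)  # corrupt the input
        H = sigmoid(X_noisy @ W1 + b1)                     # encode
        X_hat = sigmoid(H @ W2 + b2)                       # decode
        err = X_hat - X                                    # target is the CLEAN input
        losses.append(float(0.5 * np.mean(np.sum(err ** 2, axis=1))))
        # Backpropagate the reconstruction error through both sigmoid layers.
        d_out = err * X_hat * (1.0 - X_hat) / n
        d_hid = (d_out @ W2.T) * H * (1.0 - H)
        W2 -= lr * (H.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * (X_noisy.T @ d_hid)
        b1 -= lr * d_hid.sum(axis=0)
    return (W1, b1), losses

def encode(X, params):
    W, b = params
    return sigmoid(X @ W + b)

# Synthetic 4-band "spectra" drawn around two hypothetical class prototypes.
rng = np.random.default_rng(1)
prototypes = np.array([[0.1, 0.2, 0.7, 0.9], [0.2, 0.1, 0.8, 0.6]])
labels = rng.integers(0, 2, size=200)
X = np.clip(prototypes[labels] + rng.normal(0.0, 0.02, (200, 4)), 0.0, 1.0)

# Greedy layer-wise training: layer 2 is trained on the codes of layer 1.
layer1, losses1 = train_denoising_layer(X, n_hidden=3)
layer2, losses2 = train_denoising_layer(encode(X, layer1), n_hidden=2)
features = encode(encode(X, layer1), layer2)
```

The resulting `features` would be the input to the supervised classification layer described above.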

18.3.3 Restricted Boltzmann Machine and Deep Belief Network

A restricted Boltzmann machine (RBM) is a generative stochastic model that can learn a probability distribution over its set of inputs. It has two types of nodes, hidden and visible, and the connections between nodes form a bipartite graph. A deep belief network (DBN) is formed by stacking RBMs. For remote sensing images, the RBM is applied to classification [15, 16] and feature learning [17]. Figure 18.3 shows an application of the RBM to hyperspectral image classification [15]. In a conventional RBM, every visible-layer node is connected to every hidden-layer node, but nodes within the visible layer or within the hidden layer are not connected to each other. In [15], however, the authors replaced the binary visible units with Gaussian ones for effective land cover classification. The Gaussian RBM extracts the features in an unsupervised way, and these extracted land cover features are given to a logistic regression unit for classification.

Figure 18.3 Gaussian-Bernoulli RBM for hyperspectral image classification.
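A Gaussian-Bernoulli RBM can be sketched in a few lines of NumPy. The version below assumes unit-variance visible units (inputs standardised per band) and uses one step of contrastive divergence (CD-1) for training; the class name, learning rate, and synthetic data are illustrative assumptions and do not reproduce the parallel implementation of [15].

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class GaussianBernoulliRBM:
    """RBM with real-valued (Gaussian, unit-variance) visible units
    and binary (Bernoulli) hidden units."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = self.rng.normal(0.0, 0.01, (n_visible, n_hidden))
        self.b = np.zeros(n_visible)   # visible biases
        self.c = np.zeros(n_hidden)    # hidden biases

    def hidden_prob(self, v):
        # p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i W_ij)
        return sigmoid(v @ self.W + self.c)

    def visible_mean(self, h):
        # Mean of p(v | h) = N(b + W h, I)
        return h @ self.W.T + self.b

    def cd1_step(self, v0, lr=0.01):
        """One contrastive-divergence (CD-1) update on a batch v0."""
        h0 = self.hidden_prob(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        v1 = self.visible_mean(h0_sample)   # mean-field reconstruction
        h1 = self.hidden_prob(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b += lr * (v0 - v1).mean(axis=0)
        self.c += lr * (h0 - h1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))  # reconstruction error

rng = np.random.default_rng(1)
spectra = rng.normal(size=(100, 6))                        # hypothetical 6-band pixels
spectra = (spectra - spectra.mean(0)) / spectra.std(0)     # standardise each band
rbm = GaussianBernoulliRBM(n_visible=6, n_hidden=4)
for _ in range(20):
    recon_error = rbm.cd1_step(spectra)
features = rbm.hidden_prob(spectra)   # unsupervised features for a classifier
```

The hidden probabilities in `features` play the role of the extracted features that would be passed to a logistic regression unit.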

18.3.4 Generative Adversarial Network

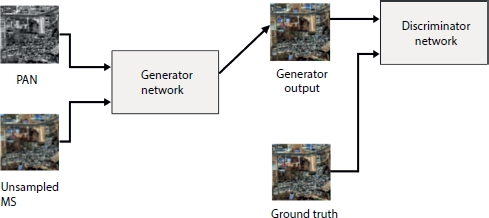

The GAN is a generative model that automatically discovers and learns the patterns in the input data so that the learnt model can generate new examples similar to the data seen during training. A GAN is realized using two CNNs: a generator and a discriminator. In remote sensing, GANs are largely used in image synthesis for data augmentation [18, 19]. They are also used to generate super-resolution images [20]. In [21], semi-supervised detection of airplanes from unlabeled airport images is performed using a GAN. GANs have been effectively employed in pan-sharpening [22], as shown in Figure 18.4. Pan-sharpening is the process of merging a high-resolution panchromatic image and a lower-resolution multispectral image to create a single high-resolution color image. The authors in [22] aimed to solve the pan-sharpening problem by image generation with a GAN. The generator was trained to learn an adaptive loss function so as to produce high-quality pan-sharpened images.

Figure 18.4 GAN for pan-sharpening with multispectral and panchromatic images.
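The interplay between the generator and the discriminator can be sketched through the standard adversarial losses. The snippet below uses the generic binary cross-entropy formulation with the non-saturating generator loss; it is not the specific objective used in [22], and the function names are illustrative.

```python
import numpy as np

def bce(prob, target):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    prob = np.clip(prob, 1e-7, 1.0 - 1e-7)
    return float(-np.mean(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob)))

def discriminator_loss(d_real, d_fake):
    """The discriminator should output 1 for real images and 0 for generated ones."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    """The generator is rewarded when the discriminator labels its output as real."""
    return bce(d_fake, np.ones_like(d_fake))

# d_real / d_fake stand for discriminator outputs on reference and
# generated (e.g. pan-sharpened) images; the values here are made up.
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.2, 0.1, 0.3])
print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```

During training the two losses are minimised alternately, so the generator improves exactly where the discriminator can still tell generated images from real ones.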

18.3.5 Recurrent Neural Network

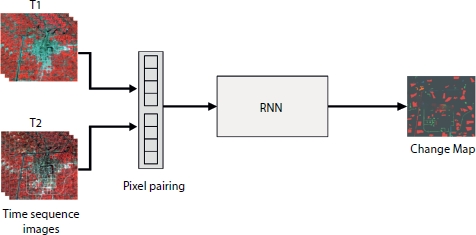

An RNN takes a time-series input and captures the temporal pattern present in it. A plain RNN usually suffers from short-term memory, which is addressed by the long short-term memory (LSTM) and gated recurrent unit (GRU) variants. LSTM and GRU networks are capable of processing long sequential inputs. In the field of remote sensing, the RNN and its variants have been used for image classification [23], satellite image correction [24], and other processing of temporal data [25]. Change detection performed on multi-temporal images [26] is illustrated in Figure 18.5. During the learning process, the RNN captures the relationship between successive inputs in the temporal data and remembers the sequence. Learning the dependencies among the time-series inputs makes effective change detection possible. The RNN learns the change rule of the time-sequence images efficiently through the transferability of its memory. The RNN learns not only bi-temporal changes but also multi-temporal changes in land cover.

Change detection in crops and in the after-effects of natural disasters is efficiently performed using RNNs [27]. While a single deep neural network has been largely applied to various remote sensing applications, a few recent works focus on combining more than one type of network and processing multi-sensor data to improve results. The next section reviews these hybrid architectures in remote sensing.

Figure 18.5 Change detection on multi-temporal images using RNN.
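The way a recurrent network carries information across acquisition dates can be sketched with a plain (Elman) RNN applied to the spectral time series of a single pixel. The weights below are random and untrained, and the function name, layer sizes, and sigmoid change score are assumptions for illustration; the networks in [26, 27] are not reproduced here.

```python
import numpy as np

def rnn_change_score(sequence, Wx, Wh, bh, w_out, b_out):
    """Run a plain RNN over a (T, bands) pixel time series.

    The hidden state h is updated at every date, so the final state depends
    on the whole sequence; a sigmoid output turns it into a change probability.
    """
    h = np.zeros(Wh.shape[0])
    for x_t in sequence:
        h = np.tanh(x_t @ Wx + h @ Wh + bh)   # recurrent update
    logit = h @ w_out + b_out
    return float(1.0 / (1.0 + np.exp(-logit)))

rng = np.random.default_rng(0)
bands, hidden = 4, 8
Wx = rng.normal(0.0, 0.3, (bands, hidden))    # input-to-hidden weights
Wh = rng.normal(0.0, 0.3, (hidden, hidden))   # hidden-to-hidden (recurrent) weights
bh = np.zeros(hidden)
w_out = rng.normal(0.0, 0.3, hidden)

series = rng.random((5, bands))               # one pixel observed at 5 dates
p_multi = rnn_change_score(series, Wx, Wh, bh, w_out, 0.0)      # multi-temporal
p_bi = rnn_change_score(series[:2], Wx, Wh, bh, w_out, 0.0)     # bi-temporal
```

The same function handles two dates or many, which mirrors the observation that an RNN can learn bi-temporal as well as multi-temporal changes. In practice, the weights would be trained on labeled change maps, and an LSTM or GRU cell would replace the plain update.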

18.4 Hybrid Architectures for Multi-Sensor Data Processing

A single deep architecture applied on its own to remote sensing often gives only moderate performance. Recent developments in combining different deep architectures yield better results, as each deep learning architecture shows some advantage for a particular application. Hybrid architectures can be very useful in change detection and classification. Hybrid deep neural networks also achieve image fusion. In remote sensing, applications such as classification and change detection deal with massive data which, in turn, increases the processing time. To address this challenge efficiently, different deep learning models are combined to leverage their respective strengths. The hybrid approach improves speed and accuracy through parallel processing with multiple networks. Table 18.1 lists a few existing hybrid deep architectures that process multi-sensor data.

The CNN-GAN combination has been applied to change detection [28]. The relationship between the multi-temporal images and their change maps can be learnt through a generative learning process. The learning process is as follows. The CNN first extracts the features to remove redundancy and noise from the multispectral images. The GAN is then applied to learn the mapping function.

Another application of this hybrid architecture is cloud removal. Critical information in an image is lost in the presence of cloud, and the missing information needs to be reconstructed. An efficient CNN-GAN combination, proposed in [29], removes the clouds in an optical image using the corresponding SAR image in a two-step procedure. In the first step, the SAR image is converted to a simulated optical image with coarse texture and less spectral information. In the second step, the simulated optical image is fused with the SAR image and the cloudy optical image by a GAN to produce an optical image free from clouds.

The RNN has shown great potential in analysing time-series images. A variant of the RNN, namely, the LSTM, performs better in change detection, and its fusion with a CNN has shown further improvement. The CNN-LSTM network for change detection works as follows. The CNN extracts the spatial and spectral features from patches of the input image, while the classification using these features is performed by the LSTM network. The city expansion study done with a CNN-LSTM hybrid network [30] achieved an overall accuracy of 98.75%, as listed in Table 18.1.

Table 18.1 Hybrid deep architectures for remote sensing.

| Publication | Hybrid architecture | Inputs | Application | Results |
| --- | --- | --- | --- | --- |
| [28] | CNN-GAN | MS images | Change detection | Overall error = 5.76% |
| [29] | CNN-GAN | SAR and MS | Cloud removal | SAM = 3.1621 |
| [30] | CNN-LSTM | MS images | Change detection | Overall accuracy = 98.75% |
| [31] | CNN-SAE | PAN and MS | Pan-sharpening | Overall accuracy = 94.82% |
| [32] | CNN-GAN | Near-infrared (NIR) and short-wave infrared (SWIR) | Image fusion | ERGAS = 0.69; SAM = 0.72 |
| [33] | CNN-RNN | MS and PAN | Ocean oil spill detection | mIoU = 82.1% |

SAM - Spectral Angle Mapper; ERGAS - Relative Dimensionless Global Error in Synthesis; mIoU - mean Intersection over Union.
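For readers unfamiliar with these indices, the NumPy sketch below computes them from their commonly used definitions. The function names are illustrative, SAM is returned in degrees (the cited papers may report other units), and `ratio` in ERGAS is assumed to be the ratio of the high-resolution pixel size to the low-resolution pixel size.

```python
import numpy as np

def spectral_angle_mapper(reference, estimate, eps=1e-12):
    """Mean spectral angle (degrees) between two (H, W, B) images."""
    ref = reference.reshape(-1, reference.shape[-1]).astype(float)
    est = estimate.reshape(-1, estimate.shape[-1]).astype(float)
    norms = np.linalg.norm(ref, axis=1) * np.linalg.norm(est, axis=1)
    cos = np.sum(ref * est, axis=1) / (norms + eps)
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))

def ergas(reference, estimate, ratio):
    """ERGAS = 100 * ratio * sqrt(mean over bands of (RMSE_b / mean_b)^2)."""
    ref = reference.reshape(-1, reference.shape[-1]).astype(float)
    est = estimate.reshape(-1, estimate.shape[-1]).astype(float)
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=0))   # per-band RMSE
    mean = np.mean(ref, axis=0)                         # per-band reference mean
    return float(100.0 * ratio * np.sqrt(np.mean((rmse / mean) ** 2)))

def mean_iou(y_true, y_pred, n_classes):
    """Mean intersection over union across classes present in either map."""
    ious = []
    for k in range(n_classes):
        inter = np.sum((y_true == k) & (y_pred == k))
        union = np.sum((y_true == k) | (y_pred == k))
        if union > 0:
            ious.append(inter / union)
    return float(np.mean(ious))

y_true = np.array([0, 0, 1, 1])
y_pred = np.array([0, 1, 1, 1])
print(mean_iou(y_true, y_pred, n_classes=2))  # IoU 1/2 for class 0, 2/3 for class 1
```

Lower SAM and ERGAS values indicate a fused image closer to the reference, whereas a higher mIoU indicates a better segmentation.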

Pan-sharpening has been achieved using a combination of a CNN and a stacked autoencoder (SAE) in order to generate a fused image from panchromatic and multispectral data. The hybrid architecture proposed in [31] deals with the spatial and spectral contents separately. The spectral features of the multispectral image are extracted by the successive layers of the SAE. The spatial information, which is abundant in the panchromatic image, is extracted by the CNN. The spatial and spectral features are fused, and the resulting pan-sharpened image is given to a fully connected layer for classification of water and urban areas. The overall accuracy of the pan-sharpened image classification by the hybrid architecture is 94.82%, which is much higher than the classification accuracies provided by the individual architectures.
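The fusion step can be pictured as feature-level concatenation followed by a fully connected softmax layer. The sketch below assumes that the two branches have already produced one feature vector per sample; the feature sizes, random weights, and function names are hypothetical, and the code is not the architecture of [31].

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row maximum for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def fuse_and_classify(spatial_feats, spectral_feats, W, b):
    """Concatenate spatial (CNN) and spectral (SAE) features, then apply
    a fully connected layer with softmax to obtain class probabilities."""
    fused = np.concatenate([spatial_feats, spectral_feats], axis=1)
    return softmax(fused @ W + b)

rng = np.random.default_rng(0)
n_samples, n_spatial, n_spectral, n_classes = 5, 16, 8, 2
spatial = rng.random((n_samples, n_spatial))     # stand-in for CNN features (PAN)
spectral = rng.random((n_samples, n_spectral))   # stand-in for SAE features (MS)
W = rng.normal(0.0, 0.1, (n_spatial + n_spectral, n_classes))   # untrained weights
b = np.zeros(n_classes)
probs = fuse_and_classify(spatial, spectral, W, b)   # e.g. water vs. urban
```

Concatenation keeps the two kinds of evidence separate until the final layer, which then learns how much weight to give to each.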

A hybrid architecture combining a CNN and an RNN has been developed and applied to ocean oil spill detection [33]. A single deep CNN can extract the features for oil spill segmentation, but it misses most of the finer details. By incorporating conditional random fields in the RNN, the hybrid architecture efficiently captures the finer details of the oil spill in SAR images that the CNN misses. The segmentation evaluation index, namely, mean intersection over union (mIoU), is computed to be 82.1%, which is much better than that of a CNN-only architecture. Moreover, the run time of the CNN-RNN network is only 0.8 s, considerably less than the 1.4 s taken by the fully convolutional network architecture.

The studies discussed above indicate that a hybrid architecture can give much better performance than a single architecture.

18.5 Conclusion

In recent years, deep learning has played a major role in enhancing performance and accuracy in remote sensing, given the challenges posed by the limited availability of ground truth and cumbersome preprocessing. A review of various deep learning-based approaches to remote-sensed image processing has been presented in this chapter. Deep architectures such as the CNN, RNN, AE, and GAN applied to remote sensing have been discussed. The combination of two different architectures for fusing data from multiple sensors has also been discussed. The role of fusion in applications such as cloud removal, change detection, pan-sharpening, land use and land cover classification, and oil spill detection has been highlighted.

Although remote sensing is a growing application area for deep learning, supervised classification has so far been the most widely used approach. The enormous volume of data available from satellites and the dependence on large labeled datasets pose challenges in the generation of ground truth. The design of deep unsupervised and semi-supervised neural networks is an important future direction to explore. Such models can learn the spatial patterns from the unlabeled data by themselves and classify them without any prior information.

References

1. Zhu, X.X., Tuia, D., Mou, L., Xia, G.S., Zhang, L., Xu, F., Fraundorfer, F., Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag., 5, 4, 8–36, 2017.

2. Konstantinidis, D., Argyriou, V., Stathaki, T., Grammalidis, N., A modular CNN-based building detector for remote sensing images. Comput. Networks, 168, 107034, 2020.

3. Liu, Q., Xiang, X., Wang, Y., Luo, Z., Fang, F., Aircraft detection in remote sensing image based on corner clustering and deep learning. Eng. Appl. Artif. Intell., 87, 103333, 2020.

4. Jin, B., Ye, P., Zhang, X., Song, W., Li, S., Object-oriented method combined with deep convolutional neural networks for land-use-type classification of remote sensing images. J. Indian Soc. Remote Sens., 47, 6, 951–965, 2019.

5. Rajesh, S., Nisia, T.G., Arivazhagan, S., Abisekaraj, R., Land Cover/Land Use Mapping of LISS IV Imagery Using Object-Based Convolutional Neural Network with Deep Features. J. Indian Soc. Remote Sens., 48, 1, 145–154, 2020.

6. Sharma, A., Liu, X., Yang, X., Land cover classification from multi-temporal, multi-spectral remotely sensed imagery using patch-based recurrent neural networks. Neural Networks, 105, 346–355, 2018.

7. Sharma, A., Liu, X., Yang, X., Shi, D., A patch-based convolutional neural network for remote sensing image classification. Neural Networks, 95, 19–28, 2017.

8. Tong, X.Y., Xia, G.S., Lu, Q., Shen, H., Li, S., You, S., Zhang, L., Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ., 237, 111322, 2020.

9. Masi, G., Cozzolino, D., Verdoliva, L., Scarpa, G., Pansharpening by convolutional neural networks. Remote Sens., 8, 7, 594, 2016.

10. Zabalza, J., Ren, J., Zheng, J., Zhao, H., Qing, C., Yang, Z., Marshall, S., Novel segmented stacked autoencoder for effective dimensionality reduction and feature extraction in hyperspectral imaging. Neurocomputing, 185, 1–10, 2016.

11. Abdi, G., Samadzadegan, F., Reinartz, P., Spectral–spatial feature learning for hyperspectral imagery classification using deep stacked sparse autoencoder. J. Appl. Remote Sens., 11, 4, 042604, 2017.

12. Mughees, A. and Tao, L., Efficient deep auto-encoder learning for the classification of hyperspectral images, in: 2016 International Conference on Virtual Reality and Visualization (ICVRV), 2016, September, IEEE, pp. 44–51.

13. Mughees, A. and Tao, L., Efficient deep auto-encoder learning for the classification of hyperspectral images, in: 2016 International Conference on Virtual Reality and Visualization (ICVRV), 2016, September, IEEE, pp. 44–51.

14. Liang, P., Shi, W., Zhang, X., Remote sensing image classification based on stacked denoising autoencoder. Remote Sens., 10, 1, 16, 2018.

15. Tan, K., Wu, F., Du, Q., Du, P., Chen, Y., A parallel Gaussian–Bernoulli restricted Boltzmann machine for mining area classification with hyperspectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens., 12, 2, 627–636, 2019.

16. Qin, F., Guo, J., Sun, W., Object-oriented ensemble classification for polarimetric SAR imagery using restricted Boltzmann machines. Remote Sens. Lett., 8, 3, 204–213, 2017.

17. Taherkhani, A., Cosma, G., McGinnity, T.M., Deep-FS: A feature selection algorithm for Deep Boltzmann Machines. Neurocomputing, 322, 22–37, 2018.

18. Lin, D.Y., Wang, Y., Xu, G.L., Fu, K., Synthesizing remote sensing images by conditional adversarial networks, in: 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), 2017, July, IEEE, pp. 48–50.

19. Wang, G., Dong, G., Li, H., Han, L., Tao, X., Ren, P., Remote sensing image synthesis via graphical generative adversarial networks, in: IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, 2019, July, IEEE, pp. 10027–10030.

20. Ma, W., Pan, Z., Guo, J., Lei, B., Super-resolution of remote sensing images based on transferred generative adversarial network, in: IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, 2018, July, IEEE, pp. 1148–1151.

21. Chen, G., Liu, L., Hu, W., Pan, Z., Semi-Supervised Object Detection in Remote Sensing Images Using Generative Adversarial Networks, in: IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, 2018, July, IEEE, pp. 2503–2506.

22. Liu, X., Wang, Y., Liu, Q., PSGAN: A generative adversarial network for remote sensing image pan-sharpening, in: 2018 25th IEEE International Conference on Image Processing (ICIP), 2018, October, IEEE, pp. 873–877.

23. Mou, L., Ghamisi, P., Zhu, X.X., Deep recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens., 55, 7, 3639–3655, 2017.

24. Maggiori, E., Charpiat, G., Tarabalka, Y., Alliez, P., Recurrent neural networks to correct satellite image classification maps. IEEE Trans. Geosci. Remote Sens., 55, 9, 4962–4971, 2017.

25. Pelletier, C., Webb, G.I., Petitjean, F., Temporal convolutional neural network for the classification of satellite image time series. Remote Sens., 11, 5, 523, 2019.

26. Lyu, H., Lu, H., Mou, L., Learning a transferable change rule from a recurrent neural network for land cover change detection. Remote Sens., 8, 6, 506, 2016.

27. Vignesh, T., Thyagharajan, K.K., Ramya, K., Change detection using deep learning and machine learning techniques for multispectral satellite images. Int. J. Innov. Tech. Exploring Eng., 9, 1S, 90–93, 2019.

28. Gong, M., Niu, X., Zhang, P., Li, Z., Generative adversarial networks for change detection in multispectral imagery. IEEE Geosci. Remote Sens. Lett., 14, 12, 2310–2314, 2017.

29. Gao, J., Yuan, Q., Li, J., Zhang, H., Su, X., Cloud Removal with Fusion of High Resolution Optical and SAR Images Using Generative Adversarial Networks. Remote Sens., 12, 191, 1–17, 2020.

30. Mou, L. and Zhu, X.X., A recurrent convolutional neural network for land cover change detection in multispectral images, in: IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, 2018, July, IEEE, pp. 4363–4366.

31. Liu, X., Jiao, L., Zhao, J., Zhao, J., Zhang, D., Liu, F., Tang, X., Deep multiple instance learning-based spatial–spectral classification for PAN and MS imagery. IEEE Trans. Geosci. Remote Sens., 56, 1, 461–473, 2017.

32. Palsson, F., Sveinsson, J.R., Ulfarsson, M.O., Single sensor image fusion using a deep convolutional generative adversarial network, in: 2018 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), 2018, September, IEEE, pp. 1–5.

33. Chen, Y., Li, Y., Wang, J., An end-to-end oil-spill monitoring method for multisensory satellite images based on deep semantic segmentation. Sensors, 20, 3, 1–14, 2020.

- *Corresponding author: [email protected]; [email protected]