Racial inequality in the 21st century: the declining significance of discrimination

Fryer Roland G. Jr.1, Harvard University, EdLabs, NBER

Abstract

There are large and important differences between blacks and whites in nearly every facet of life: earnings, unemployment, incarceration, health, and so on. This chapter contains three themes. First, relative to the 20th century, the significance of discrimination as an explanation for racial inequality across economic and social indicators has declined. Racial differences in social and economic outcomes are greatly reduced when one accounts for educational achievement; therefore, the new challenge is to understand the obstacles undermining the development of skill in black and Hispanic children in primary and secondary school. Second, analyzing ten large datasets that include children ranging in age from eight months old to seventeen years old, we demonstrate that the racial achievement gap is remarkably robust across time, samples, and particular assessments used. The gap does not exist in the first year of life, but black students fall behind quickly thereafter and observables cannot explain differences between racial groups after kindergarten. Third, we provide a brief history of efforts to close the achievement gap.

There are several programs (various early childhood interventions, more flexibility and stricter accountability for schools, data-driven instruction, smaller class sizes, certain student incentives, and bonuses for effective teachers to teach in high-need schools) that have a positive return on investment, but they cannot close the achievement gap in isolation. More promising are results from a handful of high-performing charter schools, which combine many of the investments above in a comprehensive framework and provide an “existence proof,” demonstrating that a few simple investments can dramatically increase the achievement of even the poorest minority students. The challenge for the future is to take these examples to scale.

Keywords

Racial achievement gap; Charter schools; Racial inequality

“In the 21st Century, the best anti-poverty program around is a world-class education.”

President Barack Obama, State of the Union Address (January 27, 2010)

1 Introduction

Racial inequality is an American tradition. Relative to whites, blacks earn twenty-four percent less, live five fewer years, and are six times more likely to be incarcerated on a given day. Hispanics earn twenty-five percent less than whites and are three times more likely to be incarcerated.2 At the end of the 1990s, there were one-third more black men under the jurisdiction of the corrections system than there were enrolled in colleges or universities (Ziedenberg and Schiraldi, 2002). While the majority of barometers of economic and social progress have increased substantially since the passage of the Civil Rights Act, large disparities between racial groups have been and continue to be an everyday part of American life.

Understanding the causes of current racial inequality is a subject of intense debate. A wide variety of explanations—which range from genetics (Jensen, 1973; Rushton, 1995) to personal and institutional discrimination (Darity and Mason, 1998; Pager, 2007; Krieger and Sidney, 1996) to the cultural backwardness of minority groups (Reuter, 1945; Shukla, 1971)—have been put forth. Renowned sociologist William Julius Wilson argues that a potent interaction between poverty and racial discrimination can explain current disparities (Wilson, 2010).

Decomposing the share of inequality attributable to these explanations is exceedingly difficult, as experiments (field, quasi-, or natural) or other means of credible identification are rarely available.3 Even in cases where experiments are used (i.e., audit studies), it is unclear precisely what is being measured (Heckman, 1998). The lack of success in convincingly identifying root causes of racial inequality has often reduced the debate to a competition of “name that residual”—arbitrarily assigning identity to unexplained differences between racial groups in economic outcomes after accounting for a set of confounding factors. The residuals are often interpreted as “discrimination,” “culture,” “genetics,” and so on. Gaining a better understanding of the root causes of racial inequality is of tremendous importance for social policy, and the purpose of this chapter.

This chapter contains three themes. First, relative to the 20th century, the significance of discrimination as an explanation for racial inequality across economic and social indicators has declined. Racial differences in social and economic outcomes are greatly reduced when one accounts for educational achievement; therefore, the new challenge is to understand the obstacles undermining the achievement of black and Hispanic children in primary and secondary school. Second, analyzing ten large datasets that include children ranging in age from eight months old to seventeen years old, we demonstrate that the racial achievement gap is remarkably robust across time, samples, and particular assessments used. The gap does not exist in the first year of life, but black students fall behind quickly thereafter and observables cannot explain differences between racial groups after kindergarten.

Third, we provide a brief history of efforts to close the achievement gap. There are several programs (various early childhood interventions, more flexibility and stricter accountability for schools, data-driven instruction, smaller class sizes, certain student incentives, and bonuses for effective teachers to teach in high-need schools) that have a positive return on investment, but they cannot close the achievement gap in isolation.4 More promising are results from a handful of high-performing charter schools, which combine many of the investments above in a comprehensive model and provide a powerful “existence proof,” demonstrating that a few simple investments can dramatically increase the achievement of even the poorest minority students.

An important set of questions is: (1) whether one can boil the success of these charter schools down to a form that can be taken to scale in traditional public schools; (2) whether we can create a competitive market in which only high-quality schools can thrive; and (3) whether alternative reforms can be developed to eliminate achievement gaps. Closing the racial achievement gap has the potential to substantially reduce or eliminate many of the social ills that have plagued minority communities for centuries.

2 The declining significance of discrimination

One of the most important developments in the study of racial inequality has been the quantification of the importance of pre-market skills in explaining differences in labor market outcomes between blacks and whites (Neal and Johnson, 1996; O’Neill, 1990). Using the National Longitudinal Survey of Youth 1979 (NLSY79), a nationally representative sample of 12,686 individuals aged 14 to 22 in 1979, Neal and Johnson (1996) find that educational achievement among 15- to 18-year-olds explains all of the black-white gap in wages among young women and 70% of the gap among men. Accounting for pre-market skills also eliminates the Hispanic-white gap. Important critiques such as racial bias in the achievement measure (Darity and Mason, 1998; Jencks, 1998), labor market dropouts, or the potential that forward-looking minorities underinvest in human capital because they anticipate discrimination in the market cannot explain the stark results.5

We begin by replicating the seminal work of Neal and Johnson (1996) and extending their work in four directions. First, the most recent cohort of NLSY79 is between 42 and 44 years old (15 years older than in the original analysis), which provides a better representation of the lifetime gap. Second, we perform a similar analysis with the National Longitudinal Survey of Youth 1997 cohort (NLSY97). Third, we extend the set of outcomes to include unemployment, incarceration, and measures of physical health. Fourth, we investigate the importance of pre-market skills among graduates of thirty-four elite colleges and universities in the College and Beyond database, 1976 cohort.

To understand the importance of academic achievement in explaining life outcomes, we follow the lead of Neal and Johnson (1996) and estimate least squares models of the form:

$$\text{outcome}_i = R_i \beta + X_i \Gamma + \varepsilon_i \tag{1}$$

where $i$ indexes individuals, $X_i$ denotes a set of control variables, $R_i$ is a full set of racial identifiers, and $\varepsilon_i$ is an error term.
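The logic of comparing a specification without skill controls to one with them can be illustrated with a minimal sketch. It uses a small synthetic dataset, not the NLSY, in which the outcome depends on skill alone and the two groups differ only in average skill. The `ols` helper, the variable names, and all numbers are assumptions made for illustration; the sketch does not reproduce any estimate in this chapter.

```python
def ols(X, y):
    """Least squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic data (not NLSY): group 1 has lower average skill, and the
# outcome depends on skill only, so there is no direct group effect.
skill_by_group = {0: [0.0, 1.0, 2.0, 3.0], 1: [-1.0, 0.0, 1.0, 2.0]}
rows = [(float(g), s, 2.0 + 0.5 * s)
        for g, skills in skill_by_group.items() for s in skills]
y = [outcome for _, _, outcome in rows]

# Specification without skill controls: intercept and group indicator only.
raw = ols([[1.0, g] for g, _, _ in rows], y)
# Specification with the skill measure added as a control.
adjusted = ols([[1.0, g, s] for g, s, _ in rows], y)

print("raw group coefficient:     ", round(raw[1], 6))
print("adjusted group coefficient:", round(adjusted[1], 6))
```

In this constructed example the raw group coefficient is negative purely because of the skill difference, and it vanishes once skill enters the regression. Real data need not behave this cleanly.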

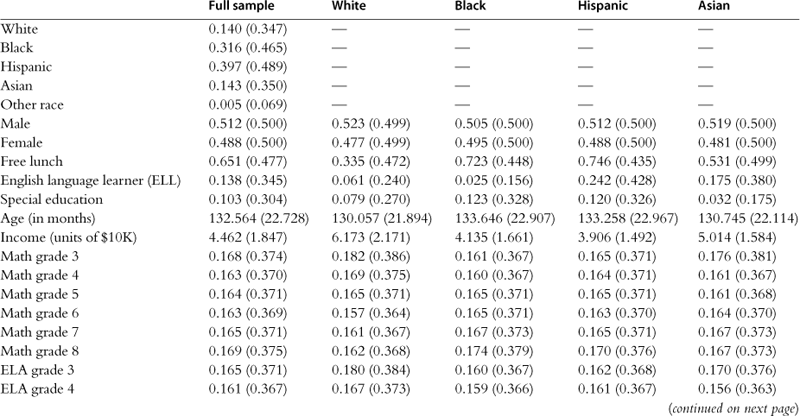

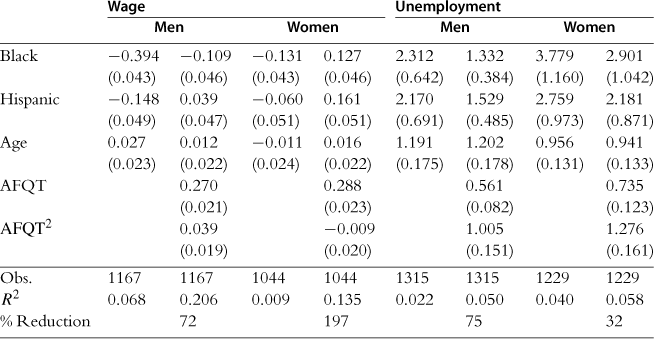

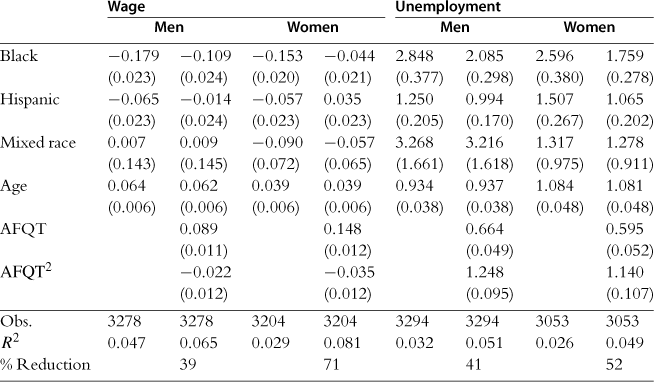

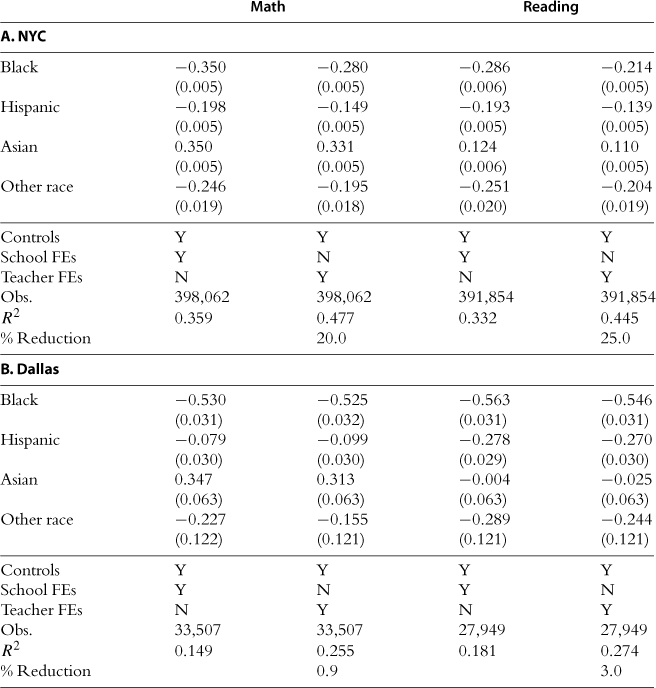

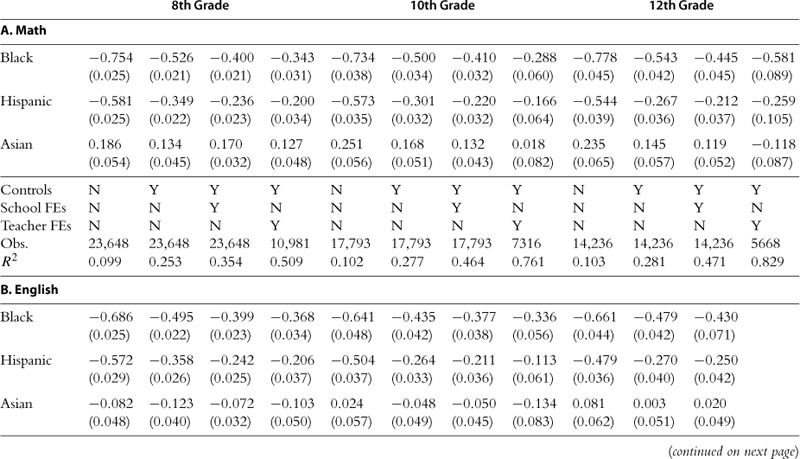

Table 1 presents racial disparities in wage and unemployment for men and women, separately.6 The odd-numbered columns present racial differences on our set of outcomes controlling only for age. The even-numbered columns add controls for the Armed Forces Qualifying Test (AFQT)—a measure of educational achievement that has been shown to be racially unbiased (Wigdor and Green, 1991)—and its square. Black men earn 39.4% less than white men; black women earn 13.1% less than white women. Accounting for educational achievement drastically reduces these inequalities—39.4% to 10.9% for black men and 13.1% lower than whites to 12.7% higher for black women.7 An eleven percent difference between white and black men with similar educational achievement is a large and important number, but a small fraction of the original gap. Hispanic men earn 14.8% less than whites in the raw data—62% less than the raw black-white gap—which reduces to 3.9% more than whites when we account for AFQT. The latter is not statistically significant. Hispanic women earn six percent less than white women (not significant) without accounting for achievement. Adding controls for AFQT, Hispanic women earn sixteen percent more than comparable white women and these differences are statistically significant.
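The size of these reductions can be checked with simple arithmetic. The sketch below applies a hypothetical helper, `percent_reduction`, to the percentages quoted in the paragraph above; it works from the rounded figures in the text rather than from the underlying regression coefficients, so it may differ slightly from the values reported in the table.

```python
def percent_reduction(raw_gap, adjusted_gap):
    """Share of the raw gap (in percent) that disappears after adding controls."""
    return 100.0 * (raw_gap - adjusted_gap) / raw_gap


# Wage gaps for black men quoted in the text: 39.4% raw, 10.9% with AFQT.
black_men_reduction = percent_reduction(39.4, 10.9)

# Raw Hispanic-white gap for men (14.8%) relative to the raw black-white gap.
hispanic_vs_black = percent_reduction(39.4, 14.8)

print(round(black_men_reduction, 1))  # 72.3
print(round(hispanic_vs_black, 1))    # 62.4
```

The second figure matches the statement that the raw Hispanic-white gap is 62% smaller than the raw black-white gap.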

Table 1

The importance of educational achievement on racial differences in labor market outcomes (NLSY79).

The dependent variable in columns 1 through 4 is the log of hourly wages of workers. The wage observations come from 2006. All wages are measured in 2006 dollars. The wage measure is created by multiplying the hourly wage at each job by the number of hours worked at each job that the person reported as a current job and then dividing that number by the total number of hours worked during a week at all current jobs. Wage observations below $1 per hour or above $115 per hour are eliminated from the data. The dependent variable in columns 5 through 8 is a binary variable indicating whether the individual is unemployed. The unemployment variable is taken from the individual’s reported employment status in the raw data. In both sets of regressions, the sample consists of the NLSY79 cross-section sample plus the supplemental samples of blacks and Hispanics. Respondents who did not take the ASVAB test are included in the sample and a dummy variable is included in the regressions that include AFQT variables to indicate if a person did not have a valid AFQT score. This includes 134 respondents who had a problem with their test according to the records. All included individuals were born after 1961. The percent reduction reported in even-numbered columns represents the reduction in the coefficient on black when controls for AFQT are added. Standard errors are in parentheses.
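The wage construction described in the note amounts to an hours-weighted average across current jobs followed by trimming. A minimal sketch, assuming each job is recorded as an (hourly wage, weekly hours) pair; the function name `hourly_wage` and the example values are hypothetical:

```python
def hourly_wage(jobs):
    """Hours-weighted average hourly wage across current jobs.

    jobs: list of (hourly_wage, weekly_hours) pairs.
    Returns None when no hours are reported or when the resulting wage falls
    outside the $1 to $115 range, mirroring the trimming rule in the note.
    """
    total_hours = sum(hours for _, hours in jobs)
    if total_hours <= 0:
        return None
    wage = sum(w * hours for w, hours in jobs) / total_hours
    return wage if 1.0 <= wage <= 115.0 else None


two_jobs = hourly_wage([(20.0, 30.0), (10.0, 10.0)])  # (600 + 100) / 40
too_low = hourly_wage([(0.5, 40.0)])                  # below $1, dropped
too_high = hourly_wage([(200.0, 20.0)])               # above $115, dropped
print(two_jobs, too_low, too_high)  # 17.5 None None
```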

Labor force participation follows a similar pattern. Black men are more than twice as likely as white men to be unemployed in the raw data and thirty percent more likely after controlling for AFQT. Black women are 3.8 times more likely than white women to be unemployed in the raw data and 2.9 times more likely after controlling for AFQT. Hispanic-white differences in unemployment, with and without controls for AFQT, are strikingly similar to the black-white gaps.

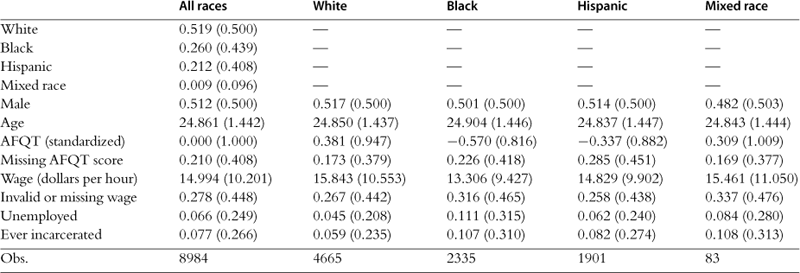

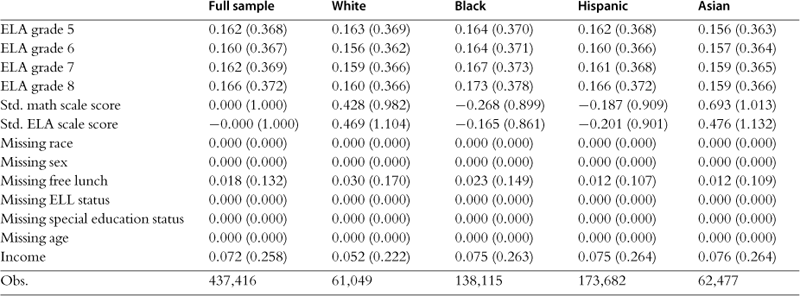

Table 2 replicates Table 1 using the NLSY97.8 The NLSY97 includes 8,984 youths between the ages of 12 and 16 at the beginning of 1997; these individuals are 21 to 27 years old in 2006–2007, the most recent years for which wage measures are available. In this sample, black men earn 17.9% less than white men and black women earn 15.3% less than white women. When we account for educational achievement, racial differences in wages measured in the NLSY97 are strikingly similar to those measured in NLSY79: 10.9% for black men and 4.4% for black women. The raw gaps, however, are much smaller in the NLSY97, which could be due either to the younger age of the workers and a steeper trajectory for white males (Farber and Gibbons, 1996) or to real gains made by blacks in recent years. After adjusting for age, Hispanic men earn 6.5% less than white men and Hispanic women earn 5.7% less than white women, but accounting for AFQT eliminates the Hispanic-white gap for both men and women.

Table 2

The importance of educational achievement on racial differences in labor market outcomes (NLSY97).

The dependent variable in columns 1 through 4 is the log of hourly wages of workers. The wage observations come from 2006 and 2007. All wages are measured in 2006 dollars. The wage measure for each year is created by multiplying the hourly wage at each job by the number of hours worked at each job that the person reported as a current job and then dividing that number by the total number of hours worked during a week at all current jobs. If a person worked in both years, the wage is the average of the two wage observations. Otherwise the reported wage is from the year for which the individual has valid wage data. Wage observations below $1 per hour or above $115 per hour are eliminated from the data. The dependent variable in columns 5 through 8 is a binary variable indicating whether the individual is unemployed. The unemployment variable is taken from the individual’s reported employment status in the raw data. The employment status from 2006 is used for determining unemployment. The coefficients in columns 5 through 8 are odds ratios from logistic regressions. Respondents who did not take the ASVAB test are included in the sample and a dummy variable is included to indicate if a person did not have a valid AFQT score in the regressions that include AFQT variables. The percent reduction reported in even-numbered columns represents the reduction in the coefficient on black when controls for AFQT are added. Standard errors are in parentheses.

Black men in the NLSY97 are almost three times as likely to be unemployed, which reduces to twice as likely when we account for educational achievement. Black women are roughly two and a half times more likely to be unemployed than white women, but controlling for AFQT reduces this gap to seventy-five percent more likely. Hispanic men are twenty-five percent more likely to be unemployed in the raw data, but when we control for AFQT, this difference is eliminated. Hispanic women are fifty percent more likely than white women to be unemployed and this too is eliminated by controlling for AFQT. Similar to the NLSY79, controlling for AFQT has less of an impact on racial differences in unemployment than on wages.
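Because the unemployment coefficients are odds ratios, it may help to see how an odds ratio relates to a ratio of probabilities. The sketch below uses hypothetical counts (not NLSY data) for two groups and compares the two measures. With a single binary regressor and no other controls, the odds ratio from a logistic regression equals the cross-product ratio computed here; the regressions in the tables also control for age, so this is only a simplified illustration.

```python
def odds_ratio(a_yes, a_no, b_yes, b_no):
    """Odds of the outcome in group A divided by the odds in group B."""
    return (a_yes / a_no) / (b_yes / b_no)


def probability_ratio(a_yes, a_no, b_yes, b_no):
    """Probability of the outcome in group A divided by that in group B."""
    return (a_yes / (a_yes + a_no)) / (b_yes / (b_yes + b_no))


# Hypothetical counts: 20 of 100 unemployed in group A, 10 of 100 in group B.
counts = (20, 80, 10, 90)
print(odds_ratio(*counts))         # close to 2.25
print(probability_ratio(*counts))  # close to 2.0
```

The two measures are close when the outcome is rare and diverge as it becomes more common, which matters when an odds ratio is read as "times as likely."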

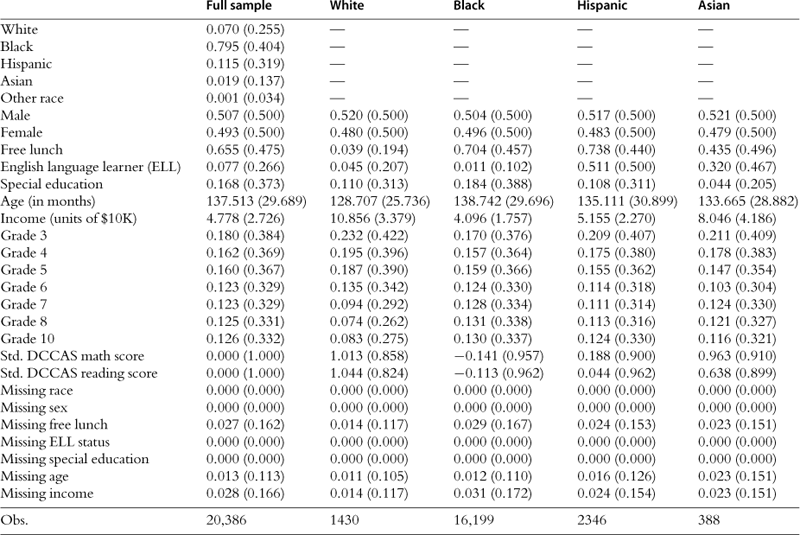

Table 3 employs a Neal and Johnson specification on two social outcomes: incarceration and physical health. The NLSY79 asks the “type of residence” in which the respondent is living during each administration of the survey, which allows us to construct a measure of whether the individual was ever incarcerated when the survey was administered across all years of the sample.9 The NLSY97 asks individuals if they have been sentenced to jail, an adult corrections institution, or a juvenile corrections institution in the past year for each yearly follow-up survey of participants. In 2006, the NLSY79 included a 12-Item Short Form Health Survey (SF-12) for all individuals over age 40. The SF-12 consists of twelve self-reported health questions ranging from whether the respondent’s health limits him from climbing several flights of stairs to how often the respondent has felt calm and peaceful in the past four weeks. The responses to these questions are combined to create physical and mental component summary scores.

Table 3

The importance of educational achievement on racial differences in incarceration and health outcomes.

The dependent variable in columns 1 through 8 is a measure of whether the individual was ever incarcerated. In the NLSY79 data, this variable is equal to one if the individual reported their residence as jail during any of the yearly follow-up surveys or if they reported having been sentenced to a corrective institution before the baseline survey and is equal to zero otherwise. In the NLSY97 data, this variable is equal to one if the person reports having been sentenced to jail, an adult corrections institution, or a juvenile corrections institution in the past year during any of the yearly administrations of the survey and is equal to zero otherwise. The coefficients in columns 1 through 8 are odds ratios from logistic regressions. The dependent variable in columns 9 through 12 is the physical component score (PCS) reported in the NLSY79 derived from the 12-Item Short Form Health Survey of individuals over age 40. The PCS is standardized to have a mean of zero and a standard deviation of one. Individuals who do not have valid PCS data are not included in these regressions. In the NLSY79 regressions, included individuals were born after 1961. Respondents who did not take the ASVAB test are included in the sample and a dummy variable is included in the regressions that include AFQT variables to indicate if a person did not have a valid AFQT score. For NLSY79, this includes 134 respondents who had a problem with their test according to the records. The percent reduction reported in even-numbered columns represents the reduction in the coefficient on black when controls for AFQT are added. Standard errors are in parentheses.

Adjusting for age, black males are about three and a half times and Hispanics are about two and a half times more likely to have ever been incarcerated when surveyed.10 Controlling for AFQT, this is reduced to about eighty percent more likely for blacks and fifty percent more likely for Hispanics. Again, the racial difference in incarceration after controlling for achievement is a large and important number that deserves considerable attention in current discussions of racial inequality in the United States. Yet, the importance of educational achievement in the teenage years in explaining racial differences is no less striking.
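The treatment of respondents without a valid AFQT score can be sketched as follows. The note says only that such respondents are kept and flagged with a dummy variable; filling the missing score with zero is an assumption made here so that the flag absorbs the difference, and the helper name `afqt_controls` and the example scores are hypothetical.

```python
def afqt_controls(afqt):
    """Return [AFQT, AFQT squared, missing-score dummy] for one respondent.

    A missing score (None) is filled with 0.0 (an assumption of this sketch)
    and flagged, so the respondent stays in the regression sample.
    """
    if afqt is None:
        return [0.0, 0.0, 1.0]
    return [afqt, afqt ** 2, 0.0]


# Hypothetical standardized scores for three respondents; the second is missing.
scores = [0.5, None, -1.5]
# Each design row: intercept, AFQT, AFQT squared, missing-score dummy.
design = [[1.0] + afqt_controls(s) for s in scores]
for row in design:
    print(row)
```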

The final two columns of Table 3 display estimates from similar regression equations for the SF-12 physical health measure, which has been standardized to have a mean of zero and standard deviation of one for ease of interpretation. Without accounting for achievement, there is a black-white disparity of 0.15 standard deviations in self-reported physical health for men and 0.23 standard deviations for women. For Hispanics, the differences are −0.140 for men and 0.030 for women. Accounting for educational achievement eliminates the gap for men and cuts the gap in half for black women [−0.111 (0.076)]. The remaining difference for black women is not statistically significant. Hispanic women report better health than white women with or without accounting for AFQT.

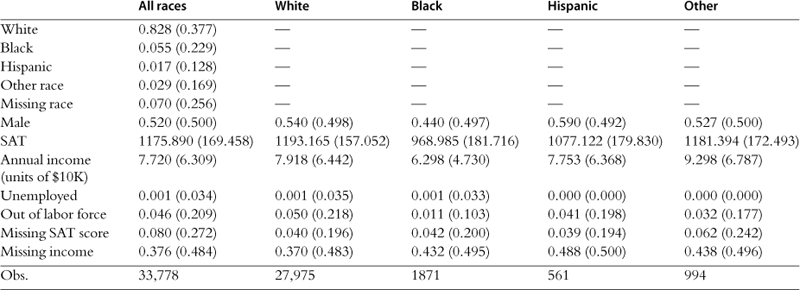

Extending Neal and Johnson (1996) further, we turn our attention to the College and Beyond (C&B) Database, which contains data on 93,660 full-time students who entered thirty-four elite colleges and universities in the fall of 1951, 1976, or 1989. We focus on the cohort from 1976.11 The C&B data contain information drawn from students’ applications and transcripts, Scholastic Aptitude Test (SAT) and the American College Test (ACT) scores, standardized college admissions exams that are designed to assess a student’s readiness for college, as well as information on family demographics and socioeconomic status in their teenage years.12 The C&B database also includes responses to a survey administered in 1995 or 1996 to all three cohorts that provides detailed information on post-college labor market outcomes. Wage data were collected when the respondents were approximately 38 years old, and reported as a series of ranges. We assigned individuals the midpoint value of their reported income range as their annual income.13 The response rate to the 1996 survey was approximately 80%. Table A.3 contains summary statistics used in our analysis.
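The midpoint assignment can be sketched in a few lines. The bracket codes and dollar ranges below are hypothetical, since the survey's actual ranges are not listed in the text, and the sketch does not address how an open-ended top range would be handled.

```python
import math

# Hypothetical income brackets in dollars (lower bound, upper bound).
brackets = {"A": (30_000, 50_000), "B": (50_000, 75_000)}


def midpoint_income(code):
    """Annual income assigned to a respondent who reported bracket `code`."""
    lower, upper = brackets[code]
    return (lower + upper) / 2.0


def log_income(code):
    """Log of the assigned midpoint income, the form used as a dependent variable."""
    return math.log(midpoint_income(code))


print(midpoint_income("A"), midpoint_income("B"))  # 40000.0 62500.0
```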

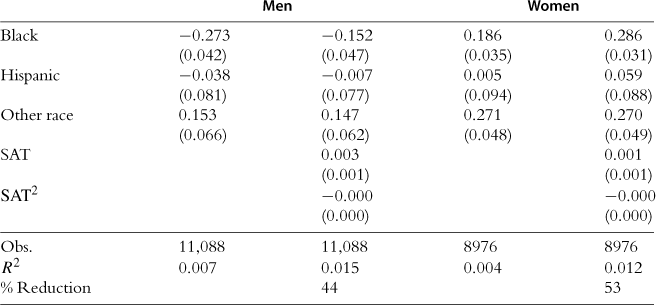

Table 4 presents racial disparities in income for men and women from the 1976 cohort of the C&B Database.14 The odd-numbered columns present raw racial differences. The even-numbered columns add controls for performance on the SAT and its square.15 Black men from this sample earn 27.3% less than white men, but when we account for educational achievement, the gap shrinks to 15.2%. Black women earn more than white women by 18.6%, which increases to an advantage of 28.6% when accounting for SAT scores. There are no differences in income between Hispanics and whites with or without accounting for achievement.

Table 4

The importance of educational achievement on racial differences in labor market outcomes (C&B 76).

The dependent variable is the log of annual income. Annual income is reported as a series of ranges; each individual is assigned the midpoint of their reported income range as their annual income. Income data were collected for either 1994 or 1995. Individuals who report earning less than $1000 annually or who were students at the time of data collection are excluded from these regressions. Those individuals with missing SAT scores are included in the sample and a dummy variable is included in the regressions that include SAT variables to indicate that a person did not have a valid SAT score. All regressions use institution weights and standard errors are clustered at the institution level. Standard errors are in parentheses.

In developing countries, eradicating poverty takes a large and diverse set of strategies: battling disease, fighting corruption, building schools, providing clean water, and so on (Schultz and Strauss, 2008). In the United States, important progress toward racial equality can be made if one ensures that black and white children obtain the same skills. This is an enormous improvement over the battles for basic access and equality that were fought in the 20th century, but we must now work to close the racial achievement gaps in education—high-quality education is the new civil rights battleground.16

3 Basic facts about racial differences in achievement before kids enter school

We begin our exploration of the racial achievement gap with data on mental function in the first year of life. This approach has two virtues. First, nine months is one of the earliest ages at which one can reliably test cognitive achievement in infants. Second, data on the first year of life provide us with a rare opportunity to potentially understand whether genetics is an important factor in explaining racial differences later in life.17

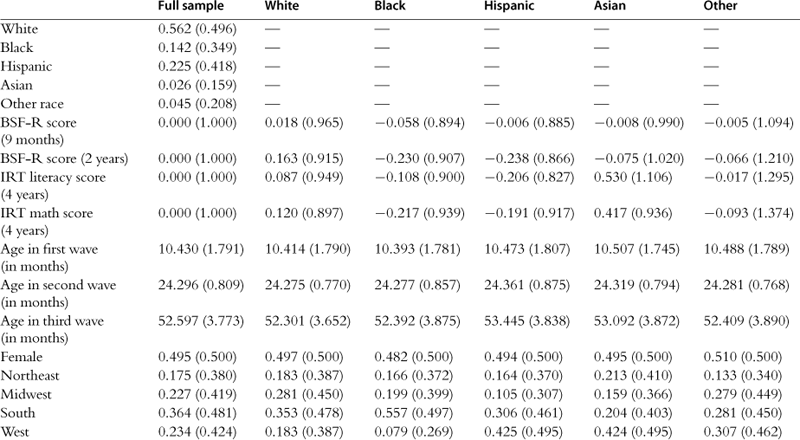

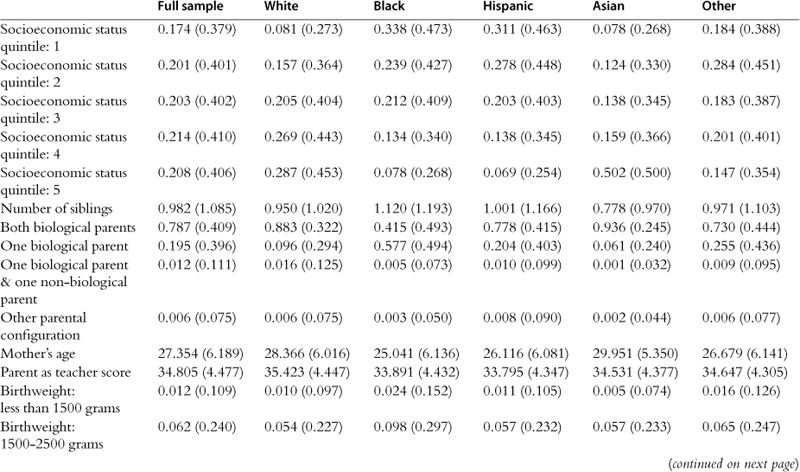

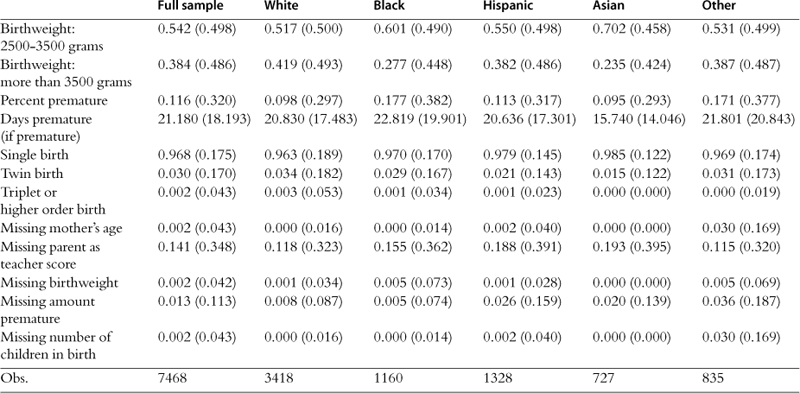

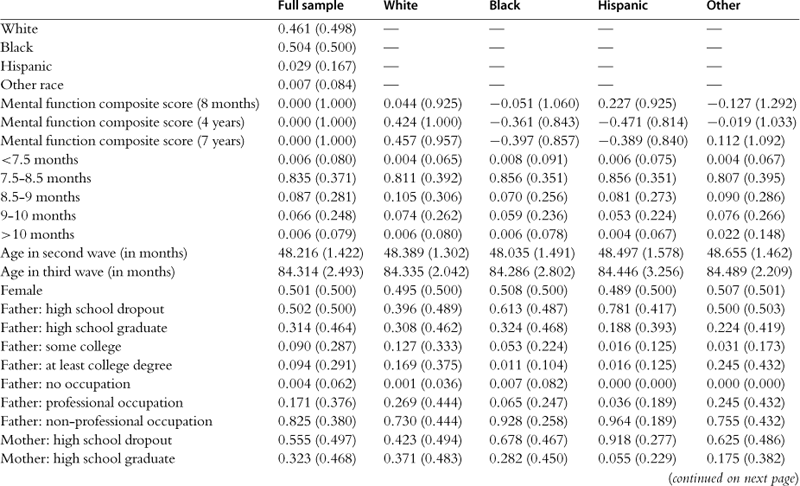

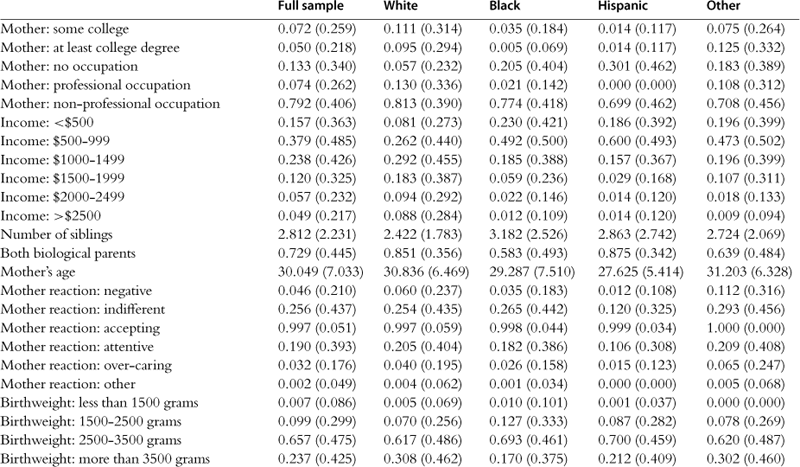

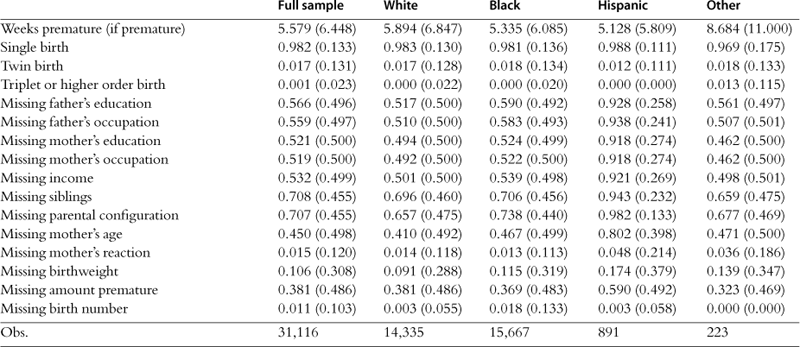

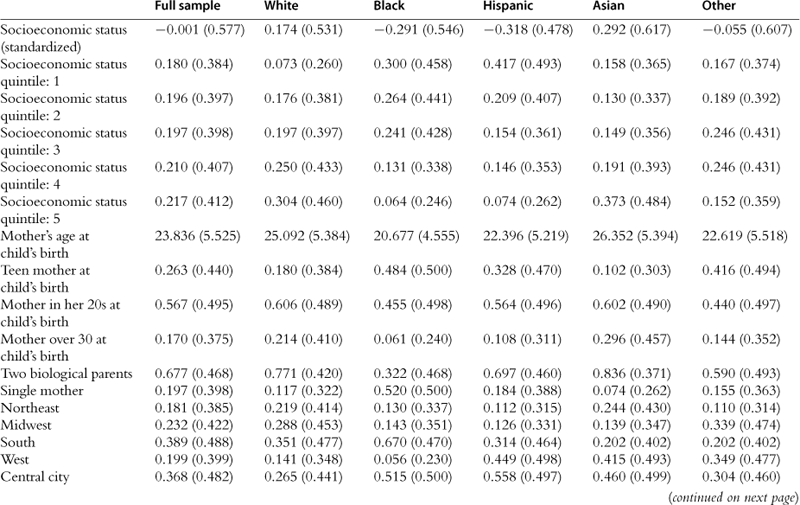

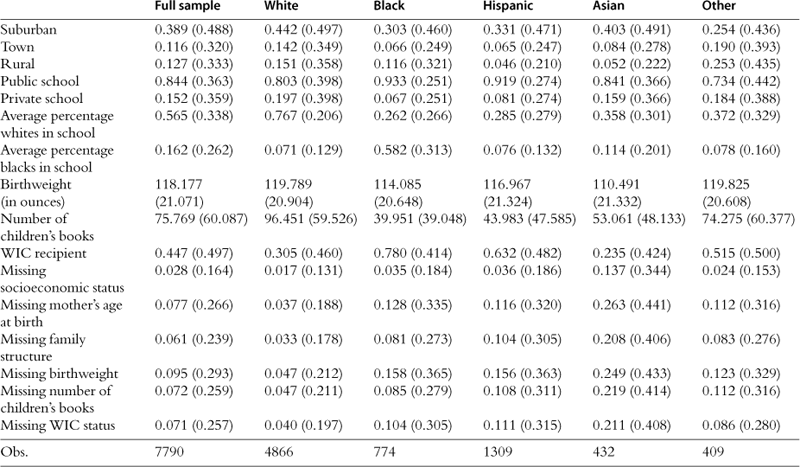

There are only two datasets that are both nationally representative and contain assessments of mental function in the first year of life. The first is the US Collaborative Perinatal Project (CPP) (Bayley, 1965), which includes over 31,000 women who gave birth in twelve medical centers between 1959 and 1965. The second dataset is the Early Childhood Longitudinal Study, Birth Cohort (ECLS-B), a nationally representative sample with measures of mental functioning (a shortened version of the Bayley Scale of Infant Development) for over 10,000 children aged one and under. Summary statistics for the variables we use in our core specifications are displayed by race in Table A.4 (CPP) and Table A.5 (ECLS-B).

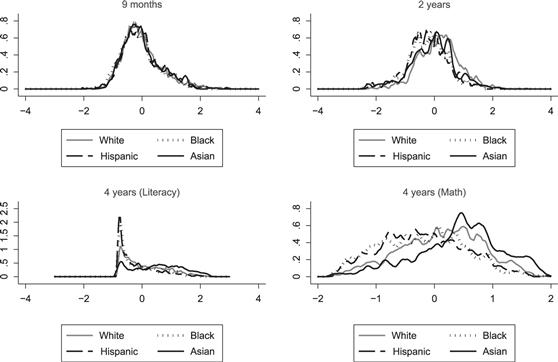

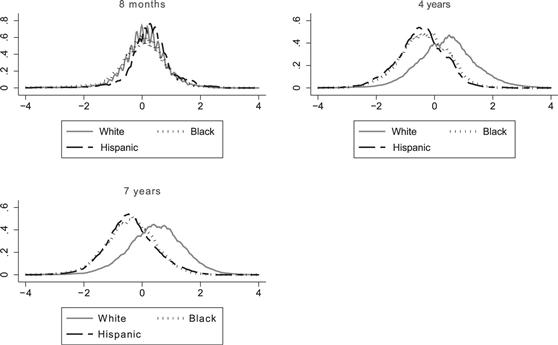

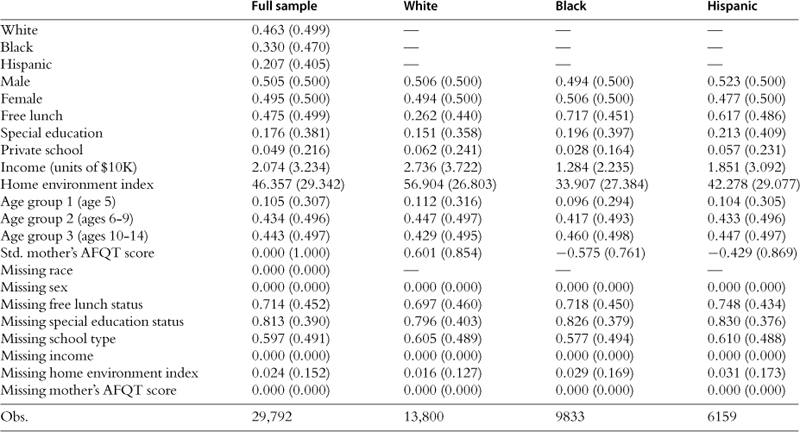

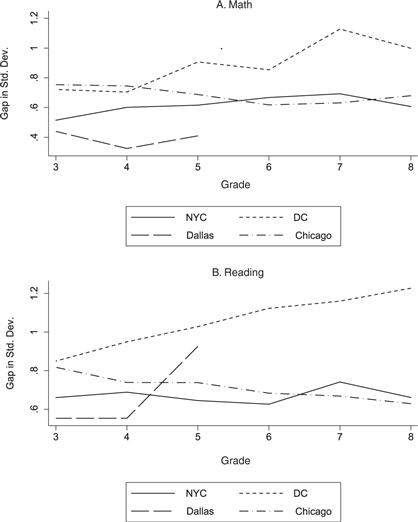

Figures 1 and 2 plot the density of mental test scores by race at various ages in the ECLS-B and CPP data sets, respectively.18 In Fig. 1, the test score distributions on the Bayley Scale at age nine months for children of different races are visually indistinguishable. By age two, the white distribution has demonstrably shifted to the right. At age four, the cognitive score is separated into two components: literacy (which measures early language and literacy skills) and math (which measures early mathematics skills and math readiness). Gaps in literacy are similar to disparities at age two; early math skills differences are more pronounced. Figure 2 shows a similar pattern using the CPP data. At age eight months, all races look similar. By age four, whites are far ahead of blacks and Hispanics and these differences continue to grow over time. Figures 1 and 2 make one of the key points of this section: the commonly observed racial achievement gap only emerges after the first year of life.

To get a better sense of the magnitude (and standard errors) of the change from nine months to seven years old, we estimate least squares models of the following form:

$$\text{outcome}_{i,a} = R_i \beta + X_i \Gamma + \varepsilon_{i,a} \tag{2}$$

where $i$ indexes individuals, $a$ indexes age in years, and $R_i$ corresponds to the racial group to which an individual belongs. The vector $X_i$ captures a wide range of possible control variables including demographics, home and prenatal environment; $\varepsilon_{i,a}$ is an error term. The variables in the ECLS-B and CPP datasets are similar, but with some important differences.19 In the ECLS-B dataset, demographic variables include the gender of the child, the age of the child at the time of assessment (in months), and the region of the country in which the child lives. Home environment variables include a single socioeconomic status measure (by quintile), the mother’s age, the number of siblings, and the family structure (child lives with: “two biological parents,” “one biological parent,” and so on). There is also a “parent as teacher” variable included in the home environment variables. The “parent as teacher” score is coded based on interviewer observations of parent-child interactions in a structured problem-solving environment and is based on the Nursing Child Assessment Teaching Scale (NCATS). Our set of prenatal environment controls includes: the birthweight of the child (in 1000-gram ranges), the amount premature that the child was born (in 7-day ranges), and a set of dummy variables representing whether the child was a single birth, a twin, or one in a birth of three or more.

In the CPP dataset, demographic variables include the age of the child at the time of assessment (in months) and the gender of the child. Our set of home environment variables provides rich proxies of the environment in which children were reared. The set of home variables includes: parental education (both mother’s and father’s, which have been transformed to dichotomous variables ranging from “high school dropout” to “college degree or more”), parental occupation (a set of mutually exclusive and collectively exhaustive dummy variables: “no occupation,” “professional occupation,” or “non-professional occupation”), household income during the first three months of pregnancy (in $500 ranges), mother’s age, number of siblings, and each mother’s reaction to and interactions with the child, which are assessed by the interviewer (we indicate whether a mother is indifferent, accepting, attentive, over-caring, or if she behaves in another manner). The set of prenatal environment controls for the CPP is the same as the set of prenatal environment controls in the ECLS-B dataset. Also included in the analysis of both datasets are interviewer fixed effects, which adjust for any mean differences in scoring of the test across interviewers.20 It is important to stress that a causal interpretation of the coefficients on the covariates is likely to be inappropriate; we view these particular variables as proxies for a broader set of environmental and behavioral factors.

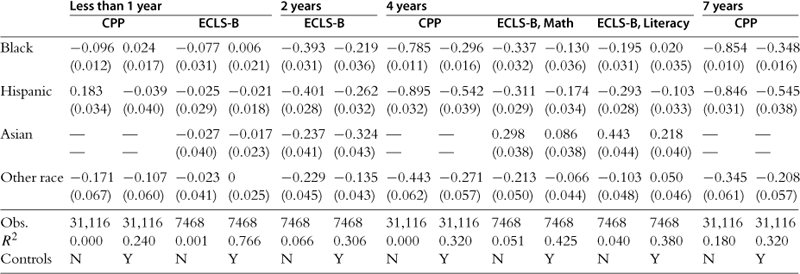

The coefficients on the race variables across the first three waves of ECLS-B and CPP datasets are presented in Table 5. The omitted race category is non-Hispanic white, so the other race coefficients are relative to that omitted group. Each column reflects a different regression and potentially a different dataset. The odd-numbered columns have no controls. The even-numbered columns control for interviewer fixed effects, age at which the test was administered, the gender of the child, region, socioeconomic status, variables to proxy for a child’s home environment (family structure, mother’s age, number of siblings, and parent-as-teacher measure) and prenatal condition (birth weight, premature birth, and multiple births).21 Even-numbered columns for CPP data omit region and the parent-as-teacher measure, which are unique to ECLS-B.22

Table 5

Racial differences in the mental function composite score, ECLS-B and CPP.

The dependent variable is the mental composite score, which is normalized to have a mean of zero and a standard deviation of one in each wave for the full, unweighted sample in CPP and the full sample with wave 3 weights in ECLS-B. Non-Hispanic whites are the omitted race category in each regression and all race coefficients are relative to that group. The unit of observation is a child. Estimation is done using weighted least squares for the ECLS-B sample (columns 3–6 and 9–12) using sample weights provided in the third wave of the data set. Estimation is done using ordinary least squares for the CPP sample (columns 1–2, 7–8, and 13–14). In addition to the variables included in the table, indicator variables for children with missing values on each covariate are also included in the regressions. Standard errors are in parentheses. Columns 1 through 4 present results for children under one year; Columns 5 and 6 present results for 2-year-olds; Columns 7 through 12 present results for 4-year-olds; Columns 13 and 14 present results for 7-year-olds.
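The normalization described in the note can be sketched as a weighted standardization within one wave. Setting every weight to one gives the unweighted case described for the CPP. Dividing the weighted sum of squares by the total weight (rather than applying a degrees-of-freedom correction) is an assumption of this sketch, and the scores and weights are hypothetical.

```python
import math


def weighted_standardize(scores, weights):
    """Rescale scores to weighted mean zero and weighted standard deviation one."""
    total = sum(weights)
    mean = sum(w * s for s, w in zip(scores, weights)) / total
    variance = sum(w * (s - mean) ** 2 for s, w in zip(scores, weights)) / total
    sd = math.sqrt(variance)
    return [(s - mean) / sd for s in scores]


# Hypothetical raw composite scores and sample weights for one wave.
scores = [90.0, 100.0, 110.0, 120.0]
weights = [1.0, 2.0, 2.0, 1.0]
z = weighted_standardize(scores, weights)

# Check: the weighted mean and variance of the standardized scores.
z_mean = sum(w * v for v, w in zip(z, weights)) / sum(weights)
z_var = sum(w * (v - z_mean) ** 2 for v, w in zip(z, weights)) / sum(weights)
print(round(z_mean, 6), round(z_var, 6))
```

With scores on this scale, a race coefficient in the table reads directly as a gap in standard deviation units.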

In infancy, blacks lag whites by 0.077 (0.031) standard deviations in the raw ECLS-B data. Hispanics and Asians also slightly trail whites by 0.025 (0.029) and 0.027 (0.040), respectively. Adding our set of controls eliminates these trivial differences. The patterns in the CPP data are strikingly similar. Yet, raw gaps of almost 0.4 standard deviations between blacks and whites are present on the test of mental function in the ECLS-B at age two. Even after including extensive controls, a black-white gap of 0.219 (0.036) standard deviations remains. Hispanics look similar to blacks. Asians lag whites by a smaller margin than blacks or Hispanics in the raw data but after including controls they are the worst-performing ethnic group. By age four, a large test score gap has emerged for blacks and Hispanics in both datasets, but especially in the CPP. In the raw CPP data, blacks lag whites by almost 0.8 standard deviations and Hispanics fare even worse. The inclusion of controls reduces the gap to roughly 0.3 standard deviations for blacks and 0.5 standard deviations for Hispanics. In the ECLS-B, black math scores trail white scores by 0.337 (0.032) in the raw data and trail by 0.130 (0.036) with controls. Black-white differences in literacy are −0.195 (0.031) without controls and 0.020 (0.035) with controls. The corresponding estimates for Hispanics are −0.311 (0.029) and −0.174 (0.034) in math; −0.293 (0.028) and −0.103 (0.033) in literacy. Asians are the highest-performing ethnic group in both subjects on the age four tests. Racial disparities at age seven, available only in CPP, are generally similar to those at age four.

There are at least three possible explanations for the emergence of racial differences with age. The first is that the skills tested in one-year-olds are not the same as those required of older children, and there are innate racial differences only in the skills that are acquired later. For instance, an infant scores high if she babbles expressively or looks around to find the source of the noise when a bell rings, while older children are tested directly on verbal skills and puzzle-solving ability. Despite these clear differences in the particular tasks undertaken, the outcomes of these early and subsequent tests are correlated by about 0.30, suggesting that they are, to some degree, measuring a persistent aspect of a child’s ability.23 Also relevant is the fact that the Bayley Scales of Infant Development (BSID) score is nearly as highly correlated with measures of parental IQ as childhood aptitude tests.

Racial differences in rates of development are a second possible explanation for the patterns in our data. If black infants mature earlier than whites, then black performance on early tests may be artificially inflated relative to their long-term levels. On the other hand, if blacks are less likely to be cognitively stimulated at home or more likely to be reared in environments that Shonkoff (2006) would label as characterized by “toxic stress,” disruptions in brain development may occur, which may significantly retard cognitive growth.

A third possible explanation for the emerging pattern of racial gaps is that the relative importance of genes and environmental factors in determining test outcomes varies over time. In contrast to the first two explanations mentioned above, under this interpretation, the measured differences in test scores are real, and the challenge is to construct a model that can explain the racial divergence in test scores with age.

To better understand the third explanation, Fryer and Levitt (forthcoming) provide two statistical models that are consistent with the data presented above. Here we provide a brief overview of the models and their predictions.

The first parameter of interest is the correlation between test scores early on and later in life. Fryer and Levitt (forthcoming) assign a value of 0.30 to that correlation. The measured correlations of test scores early and late in life with parental test scores are also necessary for the analysis. Based on prior research (e.g., Yeates et al., 1983), we take these two correlations as 0.36 and 0.39, respectively.24 The estimated black-white test score gap at young ages is taken as 0.077 based on our findings in ECLS-B, compared to a gap of 0.78 at later ages based on our findings in CPP.

The primary puzzle raised by our results is the following: how does one explain small racial gaps on the BSID test scores administered at ages 8 to 12 months and large racial gaps in tests of mental ability later in life, despite the fact that these two test scores are reasonably highly correlated with one another (ρ = 0.3), and both test scores are similarly correlated with parental test scores (ρ ≥ 0.3)?

The basic building blocks

Let θa denote the measured test score of an individual at age a. We assume that test scores are influenced by an individual’s genetic make-up (G) and his environment (Ea) at age a. The simplest version of the canonical model of genes and environment takes the following form:

$$\theta_a = \alpha_a G + \beta_a E_a + \varepsilon_a \tag{3}$$

In this model, the individual’s genetic endowment is fixed over time, but environmental factors vary and their influence may vary. θa, G, and Ea are all normalized into standard deviation units. Initially we will assume that G, Ea, and εa are uncorrelated for an individual at any point in time (this assumption will be relaxed below), and that Ea and the error terms for an individual at different ages are also uncorrelated.25 There will, however, be a positive correlation between an individual’s genetic endowment G and the genetic endowment of his or her mother (which we denote Gm). We will further assume, in accord with the simplest models of genetic transmission, that the correlation between G and Gm is 0.50.26

We are interested in matching two different aspects of the data: (1) correlations between test scores, and (2) racial test score gaps at different ages. The test score correlations of interest are those of an individual at the age of one (for which we use the subscript b for baby) and later in childhood (denoted with subscript c).

Under the assumptions above, these correlations are as follows:

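$$\mathrm{corr}(\theta_b, \theta_m) = 0.5\,\alpha_b \alpha_m = 0.36 \tag{4}$$

$$\mathrm{corr}(\theta_c, \theta_m) = 0.5\,\alpha_c \alpha_m = 0.39 \tag{5}$$

$$\mathrm{corr}(\theta_b, \theta_c) = \alpha_b \alpha_c = 0.30 \tag{6}$$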

where the 0.5 in the first two equations reflects the assumed genetic correlation between mother and child, and the values 0.36, 0.39, and 0.30 are our best estimates of the empirical values of these correlations based on past research cited above.

The racial test score gaps in this model are given by:

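$$\Delta\theta_b = \alpha_b \Delta G + \beta_b \Delta E_b = 0.077 \tag{7}$$

$$\Delta\theta_c = \alpha_c \Delta G + \beta_c \Delta E_c = 0.854 \tag{8}$$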

where the symbol Δ in front of a variable signifies the mean racial gap between blacks and whites for that variable. The values 0.077 and 0.854 represent our estimates of the black-white test score gap at ages nine months and seven years from Table 5.27 For Hispanics, these differences are 0.025 and 0.846, respectively.

Solving Eqs (4)–(6), this simple model yields a value of 1.87 for $\alpha_m^2$. Under the assumptions of the model, however, the squared values of the coefficients α and β represent the share of the variance in the measured test score explained by genetic and environmental factors, respectively, meaning that $\alpha_m^2$ is bounded at one. Thus, this simple model is not consistent with the observed correlations in the data. The correlation between child and mother test scores observed in the data is too large relative to the correlation between the child’s own test scores at different ages.
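The inconsistency can be verified with a few lines of arithmetic. The sketch below assumes, following the description in the text, that Eqs (4)–(6) take the form 0.5·αb·αm = 0.36, 0.5·αc·αm = 0.39, and αb·αc = 0.30; the function name is illustrative and not part of the original analysis.

```python
def implied_alpha_m_squared(corr_bm=0.36, corr_cm=0.39, corr_bc=0.30, corr_g_gm=0.5):
    """Solve the three correlation equations for alpha_m squared.

    Assumed system (taken from the text's description of Eqs 4-6):
        corr_g_gm * alpha_b * alpha_m = corr_bm
        corr_g_gm * alpha_c * alpha_m = corr_cm
        alpha_b * alpha_c             = corr_bc
    Multiplying the first two equations and dividing by the third
    isolates alpha_m ** 2.
    """
    alpha_b_times_alpha_m = corr_bm / corr_g_gm
    alpha_c_times_alpha_m = corr_cm / corr_g_gm
    return alpha_b_times_alpha_m * alpha_c_times_alpha_m / corr_bc


alpha_m_sq = implied_alpha_m_squared()
print(round(alpha_m_sq, 2))   # about 1.87
print(alpha_m_sq <= 1.0)      # False: the variance-share bound is violated
```

Because a variance share cannot exceed one, any result above 1.0 signals that the three observed correlations cannot all be matched by this model.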

Consequently, we consider two extensions to this simple model that can reproduce these correlations in the data: assortative mating and allowing for a mother’s test score to influence the child’s environment.28

Assortative mating

If women with high G mate with men who also have high G, then the parent-child corr(G, Gm) is likely to exceed 0.50. Assuming a value of αm = 0.80, which is consistent with prior research, the necessary corr(G, Gm) to solve the system of equations above is roughly 0.76, which requires the correlation between parents on G to be around 0.50, not far from the 0.45 value reported for that coefficient in a literature review (Jensen, 1978).29 With that degree of assortative mating, the other parameters that emerge from the model are αb = 0.53 and αc = 0.57. Using these values of αb and αc, it is possible to generate the observed racial gaps in (7) and (8). If we assume as an upper bound that environments for black and Hispanic babies are the same as those for white babies (i.e., ΔEb = 0) in Eq. (7), then the implied racial gap in G is a modest 0.145 standard deviations for blacks and 0.04 for Hispanics.30

To fit Eq. (8) requires βcΔEc = 0.77. If βc = 0.77 (implying that environmental factors explain about half of the variance in test scores), then a one standard deviation gap in environment between black and white children and a 1.14 standard deviation gap between Hispanic and white children would be needed to generate the observed childhood racial test score gap.31 If environmental factors explain less of the variance, a larger racial gap in environment would be needed. Taking a simple non-weighted average across environmental proxies available in the ECLS yields a 1.2 standard deviation gap between blacks and whites.32
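The black-white figures in the two preceding paragraphs follow from simple substitution. The sketch below assumes that Eqs (7) and (8) have the form Δθb = αbΔG + βbΔEb and Δθc = αcΔG + βcΔEc, and it takes αb = 0.53, αc = 0.57, βc = 0.77, and the gaps 0.077 and 0.854 directly from the text; the helper names are hypothetical.

```python
def implied_genetic_gap(gap_baby, alpha_b, env_term_baby=0.0):
    """Solve the assumed Eq. (7) for Delta G.

    env_term_baby is beta_b * Delta E_b; it is zero under the
    upper-bound assumption that baby environments do not differ.
    """
    return (gap_baby - env_term_baby) / alpha_b


def required_env_gap(gap_child, alpha_c, delta_g, beta_c):
    """Solve the assumed Eq. (8) for Delta E_c given Delta G."""
    return (gap_child - alpha_c * delta_g) / beta_c


# Values quoted in the text for the black-white comparison.
delta_g = implied_genetic_gap(gap_baby=0.077, alpha_b=0.53)
env_contribution = 0.854 - 0.57 * delta_g          # beta_c * Delta E_c
delta_e_child = required_env_gap(0.854, 0.57, delta_g, beta_c=0.77)

print(round(delta_g, 3))           # about 0.145
print(round(env_contribution, 2))  # about 0.77
print(round(delta_e_child, 2))     # about 1.0
```

Lowering `beta_c` in the last call shows the point made in the text: the smaller the share of variance attributed to environment, the larger the environmental gap needed to fit the childhood test score gap.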

Allowing parental test scores to influence the child’s environment

A second class of model consistent with our empirical findings is one in which the child’s environment is influenced by the parent’s test score, as in Dickens and Flynn (2001). One example of such a model would be

$$\theta_a = \alpha_a G + \beta_a E_a(\theta_m, \tilde{E}_a) + \varepsilon_a \tag{9}$$

where Eq. (9) differs from the original Eq. (3) by allowing the child’s environment to be a function of the mother’s test score, as well as factors $\tilde{E}_a$ that are uncorrelated with the mother’s test score. In addition, we relax the earlier assumption that the environments an individual experiences as a baby and as a child are uncorrelated. We do not, however, allow for assortative mating in this model. Under these assumptions, Eq. (9) produces the following three equations for our three key test score correlations:

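$$\mathrm{corr}(\theta_b, \theta_m) = 0.5\,\alpha_b \alpha_m + \beta_b\,\mathrm{corr}(E_b, \theta_m) = 0.36 \tag{10}$$

$$\mathrm{corr}(\theta_c, \theta_m) = 0.5\,\alpha_c \alpha_m + \beta_c\,\mathrm{corr}(E_c, \theta_m) = 0.39 \tag{11}$$

$$\mathrm{corr}(\theta_b, \theta_c) = \alpha_b \alpha_c + \beta_b \beta_c\,\mathrm{corr}(E_b, E_c) = 0.30 \tag{12}$$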

Allowing parental ability to influence the child’s environment introduces extra degrees of freedom; indeed, this model is so flexible that it can match the data both under the assumption of very small and large racial differences in G (e.g., ΔG ≤ 1 standard deviation). In order for our findings to be consistent with small racial differences in G, the importance of environmental factors must start low and grow sharply with age. In the most extreme case (where environment has no influence early in life: βb = 0), solving Eqs (10) and (12) implies αb = 0.80 and αc = 0.37. If βc = 0.77 (as in the assortative mating model discussed above), then a correlation of 0.29 between the mother’s test score and the child’s environment is necessary to solve Eq. (11). The mean racial gap in G implied by Eq. (7) is 0.096 standard deviations. To match the test score gap for children requires a mean racial difference in environmental factors of approximately one standard deviation.

A model in which parents’ scores influence their offspring’s environment is, however, equally consistent with mean racial gaps in G of one standard deviation. For this to occur, G must exert little influence on the baby’s test score, but be an important determinant of the test scores of children. Take the most extreme case in which G has no influence on the baby’s score (i.e., αb = 0). If genetic factors are not directly determining the baby’s test outcomes, then environmental factors must be important. Assuming βb = 0.80, Eq. (10) implies a correlation between the mother’s test score and the baby’s environment of 0.45. If we assume that the correlation between the baby’s environment and the child’s environment is 0.70, then Eq. (12) implies a value of βc = 0.54. If we maintain the earlier assumption of αm = 0.80, as well as a correlation between the mother’s test score and the child’s environment of 0.32, then a value of αc = 0.49 is required to close the model. If there is a racial gap of one standard deviation in G, then Eqs (7) and (8) imply 0.096 and 0.67 standard deviation racial gaps in environment factors for babies and children, respectively, to fit our data.
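The arithmetic of this second extreme case can be traced step by step. The sketch below is an illustration under stated assumptions: it takes Eq. (10) to be 0.5·αb·αm + βb·corr(Eb, θm) = 0.36 and Eq. (12) to be αb·αc + βb·βc·corr(Eb, Ec) = 0.30, uses the gap equations Δθ = αΔG + βΔE with the values 0.077 and 0.854, and takes αc = 0.49 from the text rather than re-deriving it. Variable names are hypothetical.

```python
# Extreme case: genes play no role in the baby's score.
alpha_b = 0.0
beta_b = 0.80          # assumed in the text
corr_eb_ec = 0.70      # assumed correlation of baby and child environments

# Assumed Eq. (10) with alpha_b = 0: beta_b * corr(E_b, theta_m) = 0.36
corr_eb_mother = 0.36 / beta_b

# Assumed Eq. (12) with alpha_b = 0: beta_b * beta_c * corr(E_b, E_c) = 0.30.
# The result is rounded to two decimals, as it is reported in the text.
beta_c = round(0.30 / (beta_b * corr_eb_ec), 2)

# Gap equations with a one standard deviation gap in G.
delta_g = 1.0
alpha_c = 0.49         # value reported in the text, not re-derived here
delta_e_baby = (0.077 - alpha_b * delta_g) / beta_b
delta_e_child = (0.854 - alpha_c * delta_g) / beta_c

print(round(corr_eb_mother, 2))  # about 0.45
print(beta_c)                    # 0.54
print(round(delta_e_baby, 3))    # about 0.096
print(round(delta_e_child, 2))   # about 0.67
```

Changing `delta_g` toward zero raises both required environmental gaps, which is the sense in which this class of model can accommodate a wide range of values for the gap in G.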

Putting the pieces together, the above analysis shows that the simplest genetic models are not consistent with the evidence presented on racial differences in the cognitive ability of infants. These inconsistencies can be resolved in two ways: incorporating assortative mating or allowing parental ability to affect the offspring’s environment. With assortative mating, our data imply a minimal racial gap in intelligence (0.11 standard deviations as an upper bound), but a large racial gap in environmental factors. When parents’ ability influences the child’s environment, our results can be made consistent with almost any value for a racial gap in G (from roughly zero to a full standard deviation), depending on the other assumptions that are made. Thus, despite stark empirical findings, our data cannot resolve these difficult questions; much depends on the underlying model.

4 Interventions to foster human capital before children enter school

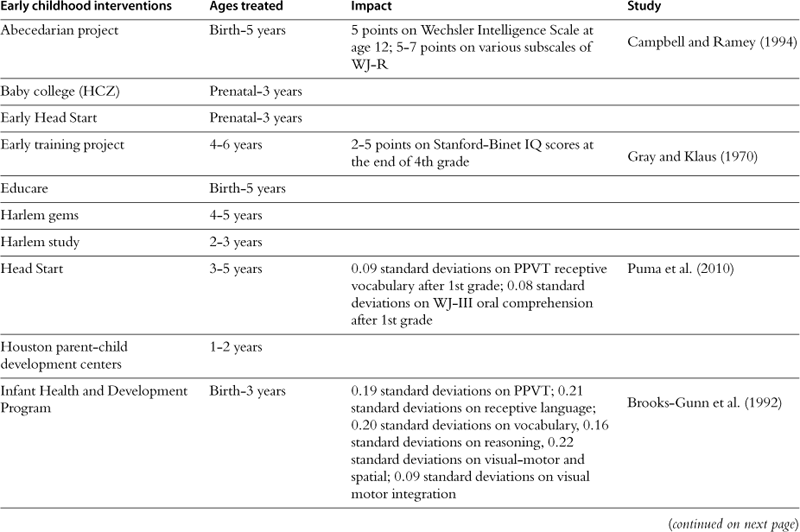

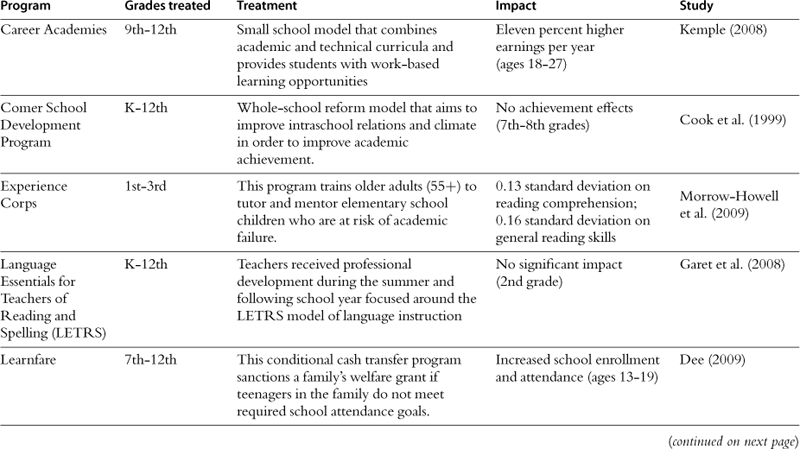

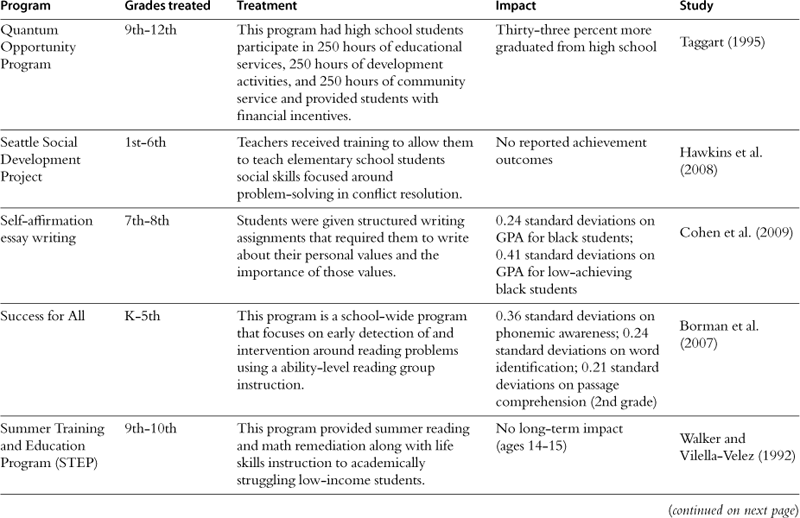

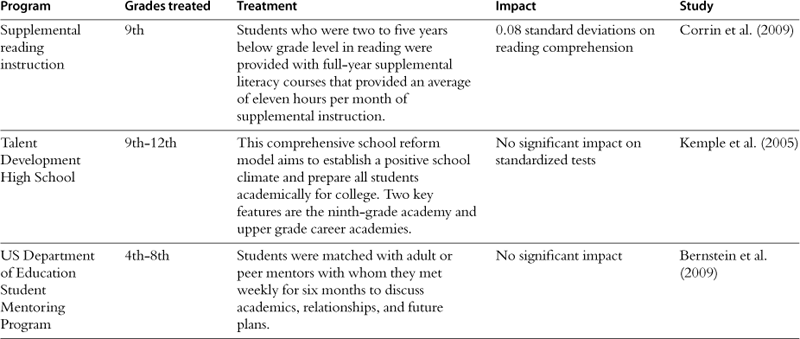

In the past five decades there have been many attempts to close the racial achievement gap before kids enter school.33 Table 6 provides an overview of twenty well-known programs, the ages they serve, and their treatment effects (in the cases in which they have been credibly evaluated).

Table 6

Early childhood interventions to increase achievement.

The set of interventions included in this table was generated in two ways. First, we used Heckman (1999) and Heckman et al. (2009) as the basis for a thorough literature review on early childhood intervention programs. We investigated all of the programs included in these papers, and then examined the papers written on this list of programs for additional programs. Second, we examined all of the relevant reports available through the IES What Works Clearinghouse. From this original list, we included twenty of the most credibly evaluated, largest scale programs in our final list.

Perhaps the most famous early intervention program for children involved 64 students in Ypsilanti, Michigan, who attended the Perry Preschool program in 1962. The program consisted of a 2.5-hour daily preschool program and weekly home visits by teachers, and targeted children from disadvantaged socioeconomic backgrounds with IQ scores in the range of 70–85. An active learning curriculum, High/Scope, was used in the preschool program in order to support both the cognitive and non-cognitive development of the children over the course of two years beginning when the children were three years old. Schweinhart et al. (1993) find that students in the Perry Preschool program had higher test scores between the ages of 5 and 27, 21% less grade retention or special services required, 21% higher graduation rates, and half the number of lifetime arrests in comparison to children in the control group. Considering the financial benefits that are associated with the positive outcomes of the Perry Preschool, Heckman et al. (2009) estimated that the rate of return on the program is between 7 and 10%, passing a cost-benefit analysis.

Another important intervention, initiated three years after the Perry Preschool program, is Head Start. Head Start is a preschool program funded by federal matching grants that is designed to serve 3- to 5-year-old children living at or below the federal poverty level.34 The program varies across states in terms of the scope of services provided, with some centers providing full-day programs and others only half-day. In 2007, Head Start served over 900,000 children at an average annual cost of about $7300 per child.

Evaluations of Head Start have often been difficult to perform due to the non-random nature of enrollment in the program. Currie and Thomas (1995) use a national sample of children and compare children who attended a Head Start program with siblings who did not attend Head Start, based on the assumption that examining effects within the family unit will reduce selection bias. They find that those children who attended Head Start scored higher on preschool vocabulary tests but that for black students, these gains were lost by age ten. Using the same analysis method with updated data, Garces et al. (2002) find several positive outcomes associated with Head Start attendance. They conclude that there is a positive effect from Head Start on the probability of attending college and—for whites—the probability of graduating from high school. For black children, Head Start led to a lower likelihood of being arrested or charged with a crime later in life.

Puma et al. (2005), in response to the 1998 reauthorization of Head Start, conduct an evaluation using randomized admission into Head Start.35 The impact of being offered admission into Head Start for 3- and 4-year-olds is 0.10 to 0.34 standard deviations in the areas of early language and literacy. For 3-year-olds, there were also small positive effects in the social-emotional domain (0.13 to 0.18 standard deviations) and on overall health status (0.12 standard deviations). Yet, by the time the children who received Head Start services have completed first grade, almost all of the positive impact on initial school readiness has faded. The only remaining impacts in the cognitive domain are a 0.08 standard deviation increase in oral comprehension for 3-year-old participants and a 0.09 standard deviation increase in receptive vocabulary for the 4-year-old cohort (Puma et al., 2010).36

A third, and categorically different, program is the Nurse Family Partnership. Through this program, low-income first-time mothers receive home visits from a registered nurse beginning early in the pregnancy that continue until the child is two years old—a total of fifty visits over the first two years. The program aims to encourage preventive health practices, reduce risky health behaviors, foster positive parenting practices, and improve the economic self-sufficiency of the family. In a study of the program in Denver in 1994–95, Olds et al. (2002) find that those children whose mothers had received home visits from nurses (but not those who received home visits from paraprofessionals) were less likely to display language delays and had superior mental development at age two. In a long-term evaluation of the program, Olds et al. (1998) find that children born to women who received nurse home visits during their pregnancy between 1978 and 1980 have fewer juvenile arrests, convictions, and violations of probation by age fifteen than those whose mothers did not receive treatment.

Other early childhood interventions—many based on the early success of the Perry Preschool, Head Start, and the Nurse Family Partnership—include the Abecedarian Project, the Early Training Project, the Infant Health and Development Program, the Milwaukee Project, and Tulsa’s universal pre-kindergarten program. The Abecedarian Project provided full-time, high-quality center-based childcare services for four cohorts of children from low-income families from infancy through age five between 1971 and 1977. Campbell and Ramey (1994) find that at age twelve, those children who were randomly assigned to the project scored 5 points higher on the Wechsler Intelligence Scale and 5–7 points higher on various subscales of the Woodcock-Johnson Psycho-Educational Battery achievement test. The Early Training Project provided children from low-income homes with summertime experiences and weekly home visits during the three summers before entering first grade in an attempt to improve the children’s school readiness. Gray and Klaus (1970) report that children who received these intervention services maintained higher Stanford-Binet IQ scores (2–5 points) at the end of fourth grade. The Infant Health and Development Program specifically targeted families with low birthweight, preterm infants and provided them with weekly home visits during the child’s first year and biweekly visits through age three, as well as enhanced early childhood educational care and bimonthly parent group meetings. Brooks-Gunn et al. (1992) report that this program had positive effects on language development at the end of first grade, with participant children scoring 0.09 standard deviations higher on receptive vocabulary and 0.08 standard deviations higher on oral comprehension. 
The Milwaukee Project targeted newborns born to women with IQs lower than 80; mothers received education, vocational rehabilitation, and child care training while their children received high-quality educational programming and three balanced meals daily at “infant stimulation centers” for seven hours a day, five days a week until the children were six years old. Garber (1988) finds that this program resulted in an increase of 23 points on the Stanford-Binet IQ test at age six for treatment children compared to control children.

Unlike the other programs described, Tulsa’s preschool program is open to all 4-year-old children. It is a basic preschool program that has high standards for teacher qualification (a college degree and early childhood certification are both required) and a comparatively high rate of penetration (63% of eligible children are served). Gormley et al. (2005) use a birthday cutoff regression discontinuity design to evaluate the program and find that participation improves scores on the Woodcock-Johnson achievement test significantly (from 0.38 to 0.79 standard deviations).

Beyond these highly effective programs, Table 6 demonstrates that there is large variance in the effectiveness of well-known early childhood programs. The Parents as Teachers Program, for instance, shows mixed and generally insignificant effects on initial measures of cognitive development (Wagner and Clayton, 1999). In an evaluation of the Houston Parent-Child Development Centers, Andrews et al. (1982) find no significant impact on children’s cognitive skills at age one and mixed impacts on cognitive development at age two. Even so, the typical early childhood intervention passes a simple cost-benefit analysis.37

There are two potentially important caveats going forward. First, most of the programs are built on the insights gained from Perry and Head Start, yet what we know about infant development has increased dramatically over the past five decades. For example, psychologists used to assume that there was a relatively equal degree of early attachment across children, but they now acknowledge that there is a great deal of variance in the stability of early attachment (Thompson, 2000). Tying new programs to the lessons learned from previously successful programs while incorporating new insights from biology and developmental psychology is both the challenge and opportunity going forward.

Second, and more important for our purposes here, even the most successful early interventions cannot close the achievement gap in isolation. If we truly want to eliminate the racial achievement gap, early interventions may or may not be necessary but the evidence forces one to conclude that they are not sufficient.

5 The racial achievement gap in kindergarten through 12th grade

As we have seen, children begin life on equal footing, but important differences emerge by age two and their paths quickly diverge. In this section, we describe basic facts about the racial achievement gap from the time children enter kindergarten to the time they exit high school. Horace Mann famously argued that schools were “the great equalizer,” designed to eliminate differences between children that are present when they enter school because of different background characteristics. As this section will show, if anything, schools currently tend to exacerbate group differences.

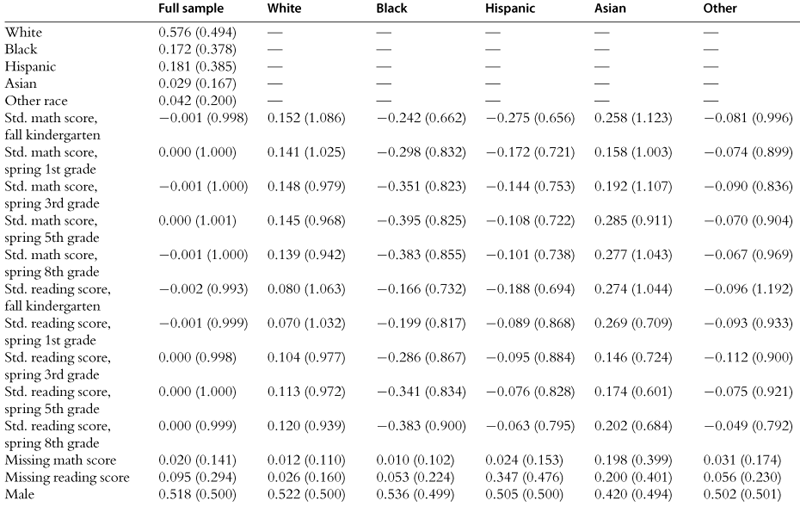

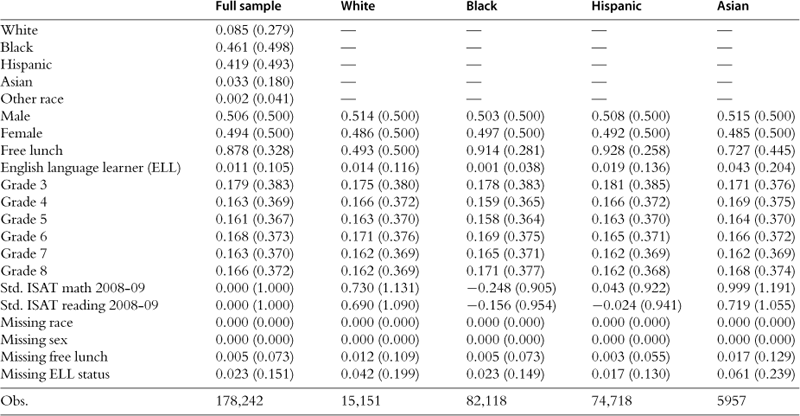

Basic facts about racial differences in educational achievement using ECLS-K

The Early Childhood Longitudinal Study, Kindergarten Cohort (ECLS-K) is a nationally representative sample of over 20,000 children entering kindergarten in 1998. Information on these children has been gathered at six separate points in time. The full sample was interviewed in the fall and spring of kindergarten, and the spring of first, third, fifth, and eighth grades. Roughly 1000 schools are included in the sample, with an average of more than twenty children per school in the study. As a consequence, it is possible to conduct within-school or even within-teacher analyses.

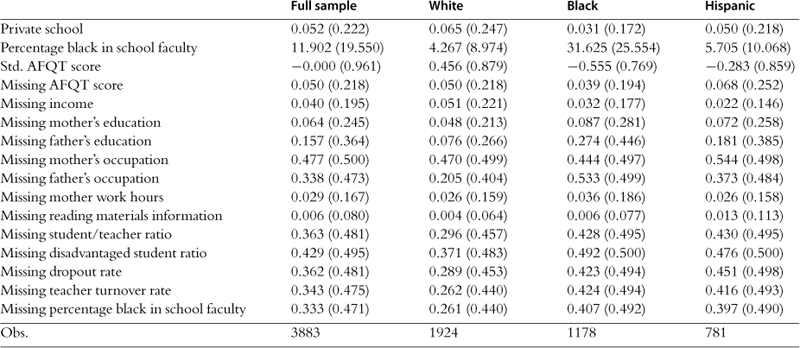

A wide range of data is gathered on the children in the study, which is described in detail at the ECLS website http://nces.ed.gov/ecls. We utilize just a small subset of the available information in our baseline specifications, the most important of which are cognitive assessments administered in kindergarten, first, third, fifth, and eighth grades. The tests were developed especially for the ECLS, but are based on existing instruments including Children’s Cognitive Battery (CCB); Peabody Individual Assessment Test—Revised (PIAT-R); Peabody Picture Vocabulary Test-3 (PPVT-3); Primary Test of Cognitive Skills (PTCS); and Woodcock-Johnson Psycho-Educational Battery—Revised (WJ-R). The questions are administered orally through spring of first grade, as it is not assumed that students know how to read until then. Students who are missing data on test scores, race, or gender are dropped from our sample. Summary statistics for the variables we use in our core specifications are displayed by race in Table A.6.

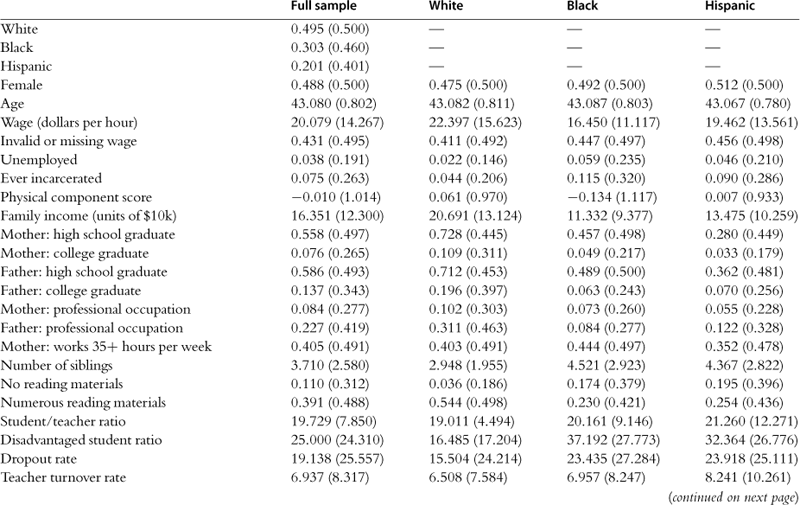

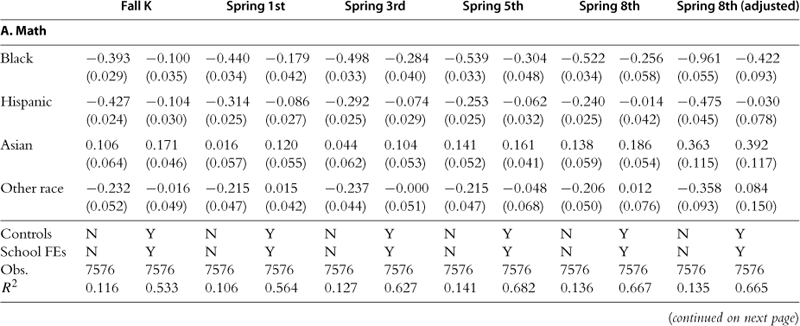

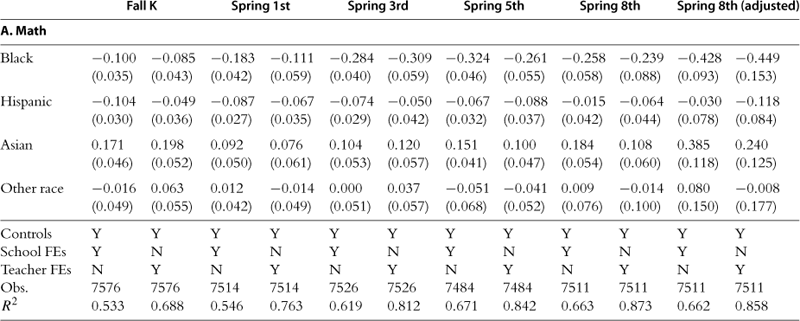

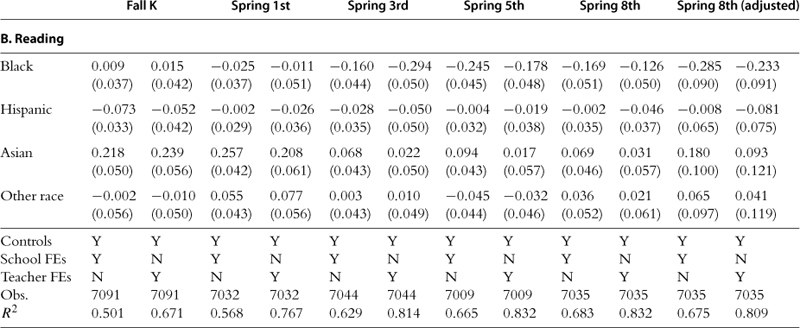

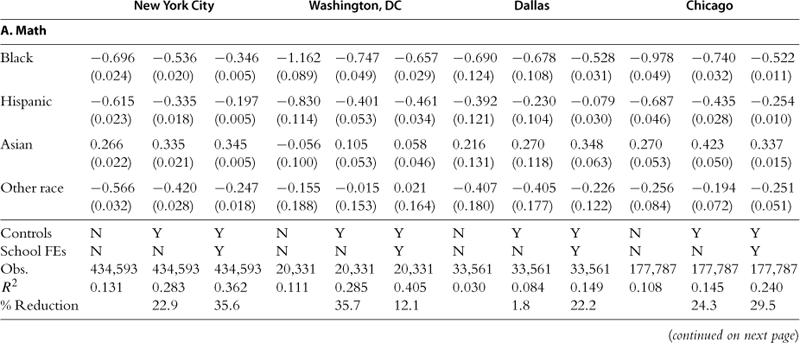

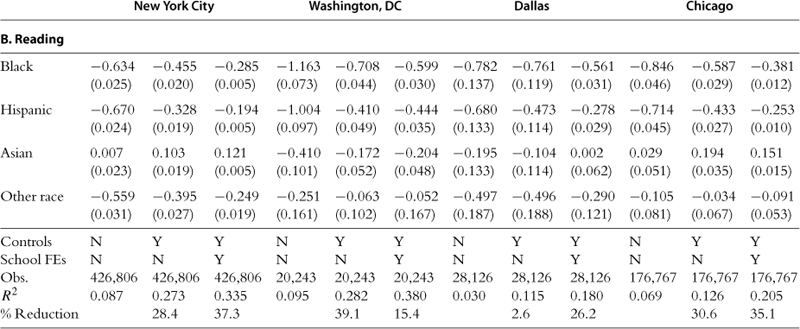

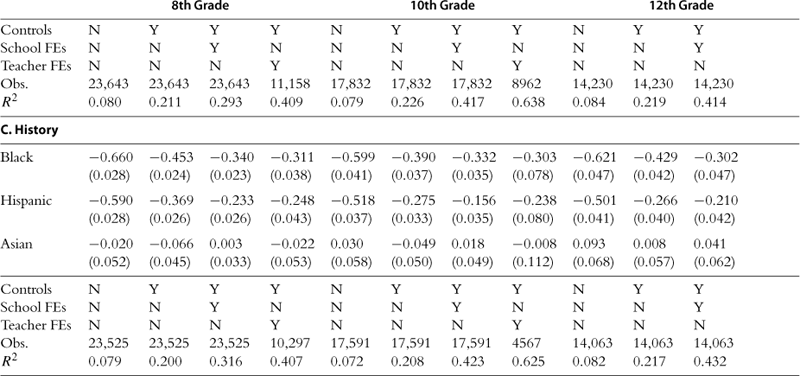

Table 7 presents a series of estimates of the racial test score gap in math (Panel A) and reading (Panel B) for the tests taken over the first nine years of school. Similar to our analysis of younger children in the previous section, the specifications estimated are least squares regressions of the form:

Table 7

The evolution of the achievement gap (ECLS), K-8.

The dependent variable in each column is test score from the designated subject and grade. Odd-numbered columns estimate the raw racial test score gaps and do not include any other controls. Specifications in the even-numbered columns include controls for socioeconomic status, number of books in the home (linear and quadratic terms), gender, age, birth weight, dummies for mother’s age at first birth (less than twenty years old and at least thirty years old), a dummy for being a Women, Infants, Children (WIC) participant, missing dummies for all variables with missing data, and school fixed effects. Test scores are IRT scores, normalized to have mean zero and standard deviation one in the full, weighted sample. Non-Hispanic whites are the omitted race category, so all of the race coefficients are gaps relative to that group. The sample is restricted to students from whom data were collected in every wave from fall kindergarten through spring eighth grade, as well as students who have non-missing race and non-missing gender. Panel weights are used. The unit of observation is a student. Robust standard errors are located in parentheses.

$$\text{outcome}_{i,g} = R_i \Gamma_g + X_i \Theta_g + \varepsilon_{i,g} \tag{13}$$

where outcomei,g denotes individual i’s test score in grade g and Xi represents an array of student-level social and economic variables describing each student’s environment. The variable Ri is a full set of race dummies included in the regression, with non-Hispanic white as the omitted category. In all instances, we use sampling weights provided in the dataset.

The vector Xi contains a parsimonious set of controls, the most important of which is a composite measure of socio-economic status constructed by the researchers conducting the ECLS survey. The components used in the SES measure are parental education, parental occupational status, and household income. Other variables included as controls are gender, child’s age at the time of enrollment in kindergarten, WIC participation (a nutrition program aimed at relatively low income mothers and children), mother’s age at first birth, birth weight, and the number of children’s books in the home.38 When there are multiple observations of social and economic variables (SES, number of books in the home, and so on), for all specifications, we only include the value recorded in the fall kindergarten survey.39 While this particular set of covariates might seem idiosyncratic, Fryer and Levitt (2004) have shown that results one obtains with this small set of variables mirror the findings when they include an exhaustive set of over 100 controls. Again, we stress that a causal interpretation is unwarranted; we view these variables as proxies for a broader set of environmental and behavioral factors. The odd-numbered columns of Table 7 present the differences in means, not including any covariates. The even-numbered columns mirror the main specification in Fryer and Levitt (2004).
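To make the structure of these specifications concrete, the following sketch runs a weighted least squares regression of a score on group dummies, first without and then with a control, on purely synthetic data. It assumes the specification has the form outcome = (group dummies)·Γ + (controls)·Θ + error; the group labels, the single SES-like control, the weights, and all numeric values are hypothetical and are not drawn from the ECLS-K.

```python
import numpy as np


def weighted_ols(y, X, weights):
    """Weighted least squares: minimize sum_i w_i * (y_i - x_i'b)^2.

    Implemented by scaling each row by sqrt(w_i) and calling lstsq.
    """
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return coef


rng = np.random.default_rng(0)
n = 5000

# Synthetic sample: group 0 plays the role of the omitted category.
groups = rng.integers(0, 3, size=n)
# Hypothetical SES-like composite, lower on average for group 1.
ses = rng.normal(size=n) - 0.5 * (groups == 1)
# Hypothetical sampling weights.
weights = rng.uniform(0.5, 2.0, size=n)
# Synthetic score: conditional gap of -0.10 for group 1, +0.20 for group 2.
score = (-0.10 * (groups == 1) + 0.20 * (groups == 2)
         + 0.5 * ses + rng.normal(scale=0.8, size=n))

intercept = np.ones((n, 1))
dummies = np.column_stack([(groups == g).astype(float) for g in (1, 2)])

# "Odd-numbered column": raw gaps, no controls.
raw = weighted_ols(score, np.hstack([intercept, dummies]), weights)
# "Even-numbered column": gaps after adding the control.
controlled = weighted_ols(
    score, np.hstack([intercept, dummies, ses[:, None]]), weights)

raw_gap_group1 = raw[1]
controlled_gap_group1 = controlled[1]
print(raw_gap_group1, controlled_gap_group1)
```

In this synthetic setup the raw gap for group 1 is larger in magnitude than the controlled gap because part of the raw difference operates through the control, which mirrors how the odd- and even-numbered columns of Table 7 are meant to be read. The sketch omits school fixed effects, missing-data dummies, and robust standard errors.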

The raw black-white gap in math when kids enter school is 0.393 (0.029), shown in column one of Panel A. Adding our set of controls decreases this difference to 0.100 (0.035). By fifth grade, Asians outperform other racial groups and Hispanics have gained ground relative to whites, but blacks have lost significant ground. The black-white achievement gap in fifth grade is 0.539 (0.033) standard deviations without controls and 0.304 (0.048) with controls. Disparities in eighth grade look similar, but a peculiar aspect of ECLS-K (very similar tests from kindergarten through eighth grade with different weights on the components of the test) masks potentially important differences between groups. If one restricts attention on the eighth grade exam to subsections of the test which are not mastered by everyone (eliminating the counting and shapes subsection, for example), a large racial gap emerges. Specifically, blacks are trailing whites by 0.961 (0.055) in the raw data and 0.422 (0.093) with the inclusion of controls.

The black-white test score gap grows, on average, roughly 0.60 standard deviations in the raw data and 0.30 when we include controls between the fall of kindergarten and spring of eighth grade. The table also illustrates that the control variables included in the specification shrink the gap by a roughly constant amount of approximately 0.30 standard deviations regardless of the year of testing. In other words, although blacks systematically differ from whites on these background characteristics, the impact of these variables on test scores is remarkably stable over time. Whatever factor is causing blacks to lose ground is likely operating through a different channel.40

In contrast to blacks, Hispanics gain substantial ground relative to whites, despite the fact that they are plagued with many of the social problems that exist among blacks (low socioeconomic status, inferior schools, and so on). One explanation for Hispanic convergence is an increase in English proficiency, though we have little direct evidence on this question.41 Calling into question that hypothesis is the fact that after controlling for other factors Hispanics do not test particularly poorly on reading, even upon school entry. Controlling for whether or not English is spoken in the home does little to affect the initial gap or the trajectory of Hispanics.42 The large advantage enjoyed by Asians in the first two years of school is maintained. We also observe striking losses by girls relative to boys in math (over two-tenths of a standard deviation over the four-year period), which is consistent with other research (Becker and Forsyth, 1994; Fryer and Levitt, forthcoming).

Panel B of Table 7 is identical to Panel A, but estimates racial differences in reading scores rather than math achievement. After adding our controls, black children score very similarly to whites in reading in the fall of kindergarten. As in math, however, blacks lose substantial ground relative to other racial groups over the first nine years of school. The coefficient on the indicator variable black is 0.009 standard deviations above whites in the fall of kindergarten and 0.246 standard deviations below whites in the spring of fifth grade, or a loss of over 0.25 standard deviations for the typical black child relative to the typical white child. In eighth grade, the gap seems to shrink to 0.168 (0.051), but accounting for the fact that a large fraction of students master the most basic parts of the exam left over from the early elementary years gives a raw gap of 0.918 (0.060) and 0.284 (0.090) with controls. The impact of covariates—explaining about 0.2 to 0.25 of a standard deviation gap between blacks and whites across most grades—is slightly smaller than in the math regressions. Hispanics experience a much smaller gap relative to whites, and it does not grow over time. The early edge enjoyed by Asians diminishes by third grade.

One potential explanation for such large racial achievement gaps, even after accounting for differences in the schools that racial minorities attend, is the possibility that they are assigned inferior teachers within schools. If whites and Asians are more likely to be in advanced classes with more skilled teachers, then this sorting could exacerbate differences and explain the divergence over time. Moreover, with such an intense focus on teacher quality as a remedy for racial achievement gaps, it is useful to understand whether, and to what extent, gaps exist when minorities and non-minorities have the same teacher. This analysis is possible in ECLS-K: the data contain, on average, 3.3 students per teacher within each year of data collection (note that because the ECLS surveys subsamples within each classroom, this does not reflect the true student-teacher ratios in these classrooms).

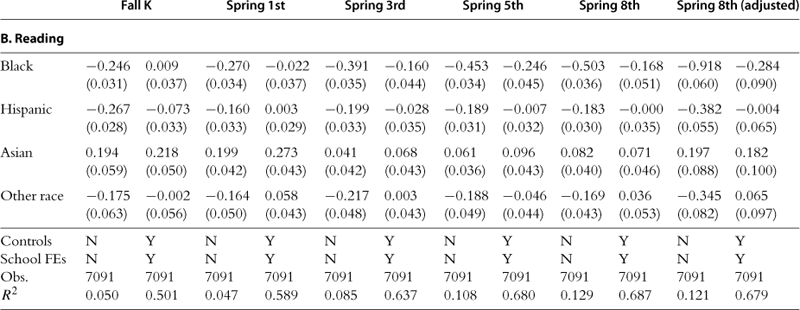

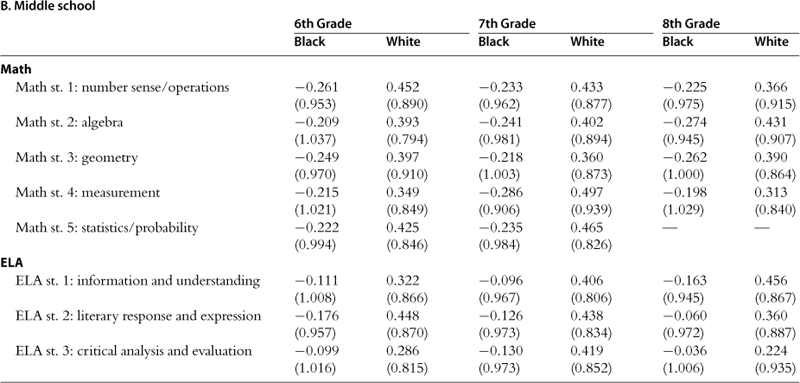

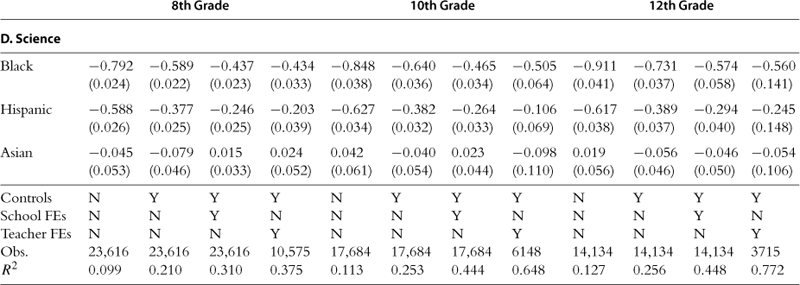

Table 8 estimates the racial achievement gap in math and reading over the first nine years of school including teacher fixed effects. For each grade, there are two columns. The first column estimates racial differences with school fixed effects on a sample of students for whom we have valid information on their teacher. This restriction reduces the sample by approximately one percent relative to the original sample in Table 7. The second column replaces the school fixed effects with teacher fixed effects. Across all grades and both subjects, accounting for sorting into classrooms has very little marginal impact on the racial achievement gap beyond including school fixed effects. The average gain in standard deviations from including teacher fixed effects is only about 0.014. The minimum marginal gain from including the teacher controls is 0.006 and the maximum difference is 0.072; however, in several cases the gap is not actually reduced by including teacher fixed effects. There are two important takeaways. First, differential sorting within schools does not seem to be an important contributor to the racial achievement gap. Second, although much has been made of the importance of teacher quality in eliminating racial disparities (Levin and Quinn, 2003; Barton, 2003), the above analysis suggests that racial gaps among students with the same teacher are stark.
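The logic of comparing students who share a teacher can be illustrated with a minimal sketch. It is not the specification behind Table 8: it uses a single regressor (a black indicator) with no other controls or weights, and the function names and the six-student dataset are hypothetical. Demeaning scores and the indicator within each teacher is one standard way to compute a fixed-effects estimate.

```python
from collections import defaultdict


def raw_gap(rows):
    """Difference in mean score, black minus white, ignoring teachers.

    rows: list of (teacher_id, black_indicator, score) tuples (hypothetical layout).
    """
    black = [score for _, is_black, score in rows if is_black == 1]
    white = [score for _, is_black, score in rows if is_black == 0]
    return sum(black) / len(black) - sum(white) / len(white)


def within_teacher_gap(rows):
    """Coefficient on the black indicator after removing teacher means.

    This is the within (fixed-effects) estimator for a single regressor:
    demean x and y inside each teacher group, then regress y on x.
    """
    groups = defaultdict(list)
    for teacher, is_black, score in rows:
        groups[teacher].append((is_black, score))

    sum_xy = 0.0
    sum_xx = 0.0
    for members in groups.values():
        x_mean = sum(x for x, _ in members) / len(members)
        y_mean = sum(y for _, y in members) / len(members)
        for x, y in members:
            sum_xy += (x - x_mean) * (y - y_mean)
            sum_xx += (x - x_mean) ** 2
    return sum_xy / sum_xx


# Made-up data: teacher "A" has higher-scoring students overall, and black
# students are over-represented with teacher "B".
example_rows = [
    ("A", 1, 0.2), ("A", 0, 0.5), ("A", 0, 0.5),
    ("B", 1, -0.6), ("B", 1, -0.6), ("B", 0, -0.3),
]
```

In this made-up example the raw gap is larger in magnitude than the within-teacher gap because of sorting across teachers. The finding reported above is that, in the ECLS-K, moving from school to teacher fixed effects changes the estimated gap very little.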

Table 8

The evolution of the achievement gap (ECLS), K-8: accounting for teachers.

The dependent variable in each column is test score from the designated subject and grade. All specifications include controls for race, socioeconomic status, number of books in the home (linear and quadratic terms), gender, age, birth weight, dummies for mother’s age at first birth (less than twenty years old and at least thirty years old), a dummy for being a Women, Infants, Children (WIC) participant, and missing dummies for all variables with missing data. Odd-numbered columns include school fixed effects, whereas even-numbered columns include teacher fixed effects. Test scores are IRT scores, normalized to have mean zero and standard deviation one in the full, weighted sample. Non-Hispanic whites are the omitted race category, so all of the race coefficients are gaps relative to that group. The sample is restricted to students from whom data were collected in every wave from fall kindergarten through spring eighth grade and students for whom teacher data was available in the relevant grade, as well as students who have non-missing race and non-missing gender. Panel weights are used. The unit of observation is a student. Robust standard errors are located in parentheses.
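The normalization described in the note (mean zero and standard deviation one in the weighted sample) can be sketched as follows. The function name and the toy inputs are hypothetical, and the sketch assumes the weights enter as simple frequency-style weights; the actual ECLS-K panel weights and any degrees-of-freedom adjustment are not reproduced here.

```python
import math


def standardize_weighted(scores, weights):
    """Rescale scores to weighted mean zero and weighted standard deviation one."""
    total_weight = sum(weights)
    mean = sum(w * s for s, w in zip(scores, weights)) / total_weight
    variance = sum(w * (s - mean) ** 2 for s, w in zip(scores, weights)) / total_weight
    sd = math.sqrt(variance)
    return [(s - mean) / sd for s in scores]


# Toy example: three students with unequal weights.
z_scores = standardize_weighted([10.0, 20.0, 30.0], [1.0, 2.0, 1.0])
```

With scores on this scale, a race coefficient of, say, -0.25 reads directly as a gap of a quarter of a standard deviation relative to the omitted group.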

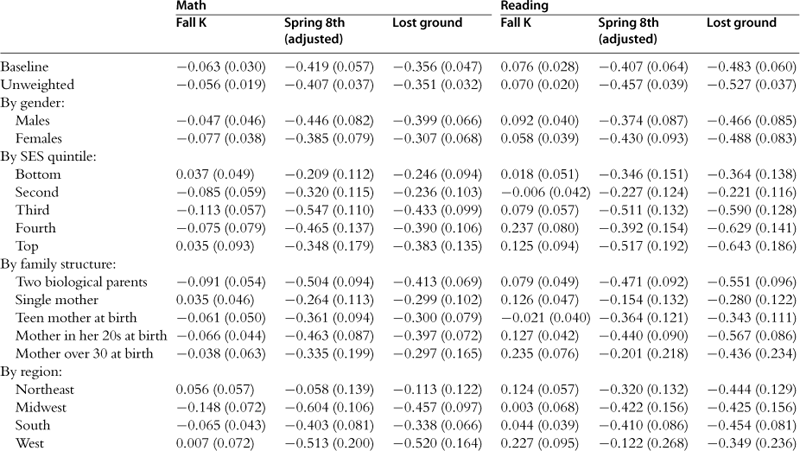

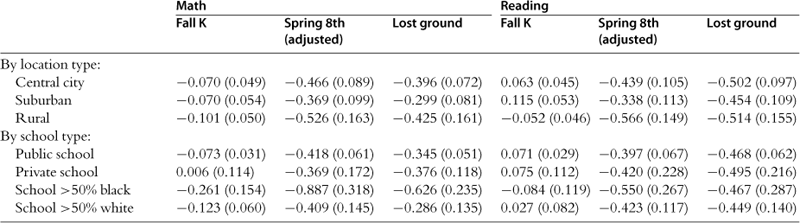

In an effort to uncover the factors that are associated with the divergent trajectories of blacks and whites, Table 9 explores the sensitivity of these “losing ground” estimates across a wide variety of subsamples of the data. We report only the coefficients on the black indicator variable and associated standard errors in the table. The top row of the table presents the baseline results using the full sample and our parsimonious set of controls (the full set of controls used in Tables 7 and 8, but omitting fixed effects). For the eighth grade scores, we restrict the test to components that are not mastered by all students.43 In that specification, blacks lose an average of 0.356 (0.047) standard deviations in math and 0.483 (0.060) in reading relative to whites over the first nine years of school.

Table 9

Sensitivity analysis for losing ground, ECLS (Fall K vs. Spring 8th).

Specifications in this table include controls for race, socioeconomic status, number of books in the home (linear and quadratic terms), gender, age, birth weight, dummies for mother’s age at first birth (less than twenty years old and at least thirty years old), a dummy for being a Women, Infants, Children (WIC) participant, and missing dummies for all variables with missing data. Only the coefficients on black are reported. The sample is restricted to students from whom data were collected in every wave from fall kindergarten through spring eighth grade, as well as students who have non-missing race and non-missing gender. Panel weights are used (except in the unweighted specification). The top row shows results from the baseline specification across the entire sample, the second row shows the results when panel weights are omitted, and the remaining rows correspond to the baseline specification restricted to particular subsets of the data.

Surprisingly, blacks lose similar amounts of ground across many subsets of the data, including by sex, location type, and whether or not a student attends private school. The results vary quite a bit across the racial composition of schools, quintiles of the socioeconomic status distribution, and by family structure. Blacks in schools that are more than fifty percent black lose substantially more ground in math than do blacks in schools that are more than fifty percent white. In reading, their divergence follows similar paths. Black students in the top three SES quintiles lose more ground than those in the lower two quintiles in both math and reading, but the differences are particularly stark in reading. The two largest losing ground coefficients in the table are for the fourth and fifth quintiles of SES in reading. Black students in these categories lose ground at an alarming rate, roughly 0.6 standard deviations over nine years. This latter result could be related to the fact that, in the ECLS-K, a host of variables which are broad proxies for parenting practices differ between blacks and whites. For instance, black college graduates have the same number of children’s books for their kids as white high school graduates. A similar phenomenon emerges with respect to family structure; the most ground is lost, relative to whites, by black students who have both biological parents. Investigating within-race regressions, Fryer and Levitt (2004) show that the partial correlation between SES and test scores is about half the magnitude for blacks relative to whites. In other words, there is something that higher income buys whites that is not fully realized among blacks. The limitation of this argument is that including these variables as controls does not substantially alter the divergence in black-white achievement over the first nine years of school. This issue is beyond the scope of this chapter but deserves further exploration.

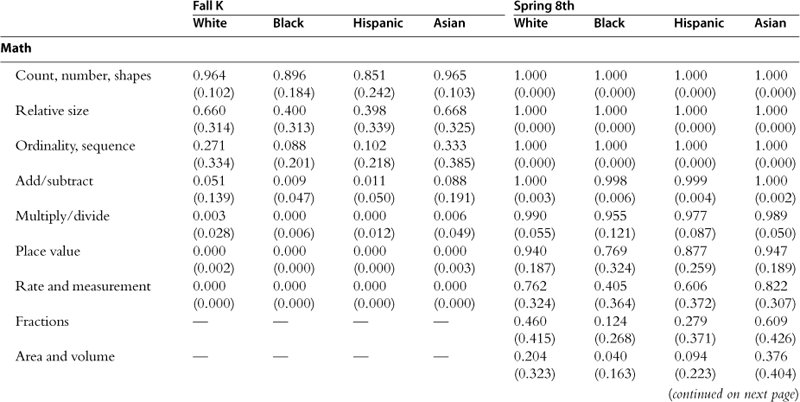

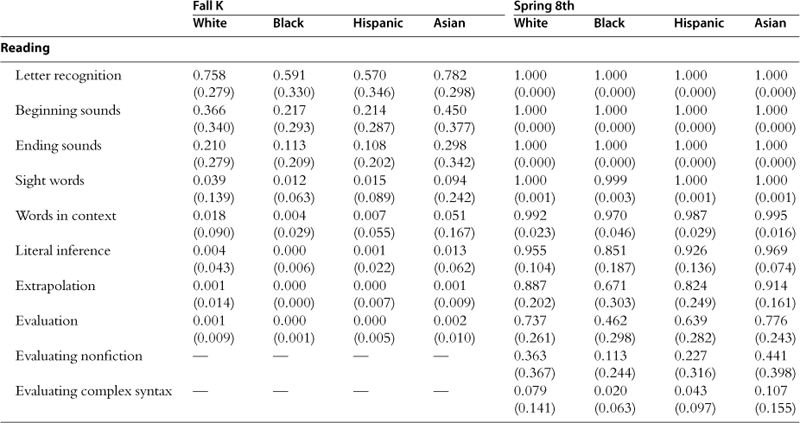

We conclude our analysis of ECLS-K by investigating racial achievement gaps on questions assessing specific skills in kindergarten and eighth grade. Table 10 contains unadjusted means on questions tested in each subsample of the test. The entries in the table are means of probabilities that students have mastered the material in that subtest. Math sections include: counting, numbers, and shapes; relative size; ordinality and sequence; adding and subtracting; multiplying and dividing; place value; rate and measurement; fractions; and area and volume. Reading sections include: letter recognition, beginning sounds, ending sounds, sight words, words in context, literal inference, extrapolation, evaluation, nonfiction evaluation, and complex syntax evaluation. In kindergarten, the test excluded fractions and area and volume (in math) as well as nonfiction evaluation and complex syntax evaluation (in reading).

Table 10

Unadjusted means on questions assessing specific sets of skills, ECLS.

Entries are unadjusted mean scores on specific areas of questions in kindergarten fall and eighth grade spring. They are proficiency probability scores, which are constructed using IRT scores and provide the probability of mastery of a specific set of skills. Dashes indicate areas that were not included in kindergarten fall exams. Standard deviations are located in parentheses.

All students enter kindergarten with a basic understanding of counting, numbers, and shapes. Black students have a probability of 0.896 (0.184) of having mastered this material and the corresponding probability for whites is 0.964 (0.102). Whites outpace blacks on all other dimensions. Hispanics are also outpaced by whites on all dimensions, while Asians actually fare better than whites on all dimensions. By eighth grade, students have essentially mastered six out of the nine areas tested in math, and six out of the ten in reading. Interestingly, on every dimension where there is room for growth, whites outpace blacks—and by roughly a constant amount. Blacks only begin to close the gap after white students have demonstrated mastery of a specific area and therefore can improve no more. While it is possible that this implies that blacks will master the same material as whites but on a longer timeline, there is a more disconcerting possibility—as skills become more difficult, a non-trivial fraction of black students may never master the skills. If these skills are inputs into future subject matter, then this could lead to an increasing black-white achievement gap. The same may apply to Hispanic children, although they are closer to closing the gap with white students than blacks are.

In summary, using the ECLS-K, a recent and rich nationally representative dataset that follows students from the beginning of kindergarten through their eighth grade year, we demonstrate an important and remarkably robust racial achievement gap that seems to grow as children age. Blacks underperform whites in the same schools, the same classrooms, and on every aspect of each cognitive assessment. Hispanics follow a similar, though less stark, pattern.

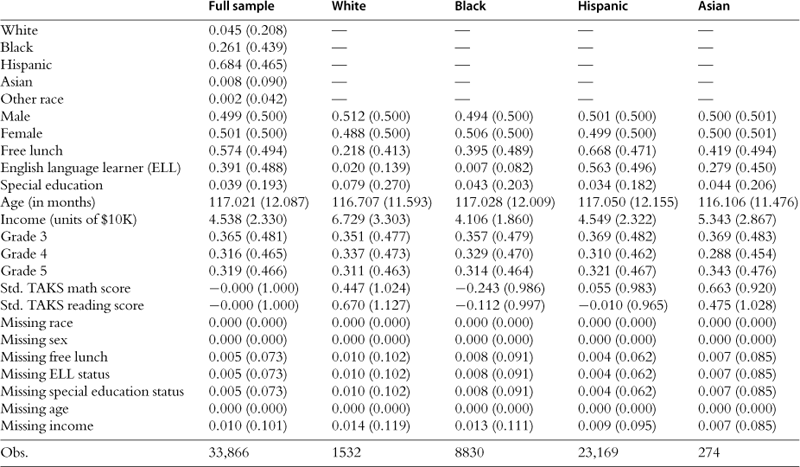

Basic facts about racial differences in educational achievement using CNLSY79

Having exhausted possibilities in the ECLS-K, we now turn to the Children of the National Longitudinal Survey of Youth 1979 (CNLSY79). The CNLSY79 is a survey of children born to NLSY79 female respondents that began in 1986. The children of these female respondents are estimated to represent over 90% of all the children ever to be born to this cohort of women. As of 2006, a total of 11,466 children have been identified as having been born to the original 6,283 NLSY79 female respondents, mostly during years in which they were interviewed. In addition to all the mother’s information from the NLSY79, the child survey includes assessments of each child as well as additional demographic and development information collected from either the mother or child. The CNLSY79 includes the Home Observation for Measurement of Environment (HOME), an inventory of measures related to the quality of the home environment, as well as three subtests from the full Peabody Individual Achievement Test (PIAT) battery: the Mathematics, Reading Recognition, and Reading Comprehension assessments. We use the Mathematics and Reading Recognition assessments for our analysis.44

Most children for whom these assessments are available are between the ages of five and fourteen. Administration of the PIAT Mathematics assessment is relatively straightforward. Children enter the assessment at an age-appropriate item (although this is not essential to the scoring) and establish a “basal” by attaining five consecutive correct responses. If no basal is achieved then a basal of “1” is assigned. A “ceiling” is reached when five of seven items are answered incorrectly. The non-normalized raw score is equivalent to the ceiling item minus the number of incorrect responses between the basal and the ceiling scores. The PIAT Reading Recognition subtest measures word recognition and pronunciation ability, essential components of reading achievement. Children read a word silently, then say it aloud. PIAT Reading Recognition contains 84 items, each with four options, which increase in difficulty from preschool to high school levels. Skills assessed include matching letters, naming names, and reading single words aloud. Table A.7 contains summary statistics for variables used in our analysis.
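The PIAT Mathematics scoring rule described above can be sketched in a few lines. This is an illustration under stated assumptions, not the official scoring procedure: the function name is hypothetical, responses are assumed to be recorded for consecutive items starting at the basal item (or at item 1 when no basal is established), and the ceiling item is assumed to be the item at which the fifth error within the most recent seven items occurs.

```python
from collections import deque


def piat_raw_score(basal_item, responses):
    """Raw score = ceiling item minus errors between basal and ceiling.

    basal_item: item number where scoring starts (1 if no basal was achieved).
    responses:  list of booleans; responses[k] is True when item
                basal_item + k was answered correctly.
    Returns None when no ceiling is reached in the recorded responses.
    """
    recent = deque(maxlen=7)  # the seven most recently administered items
    errors = 0
    for offset, correct in enumerate(responses):
        recent.append(correct)
        if not correct:
            errors += 1
        # Ceiling: five of the last seven items answered incorrectly.
        if recent.count(False) >= 5:
            ceiling_item = basal_item + offset
            return ceiling_item - errors
    return None


# Hypothetical child: basal established at item 10, then a mix of
# correct and incorrect answers until the ceiling rule triggers.
example = [True] * 5 + [False, True, False, False, True, False, False]
score = piat_raw_score(10, example)
```

In this example the ceiling is reached at item 21 with five errors, so the raw score is 16. That equals the nine items below the basal (treated as correct) plus the seven correct answers between the basal and the ceiling.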

To our knowledge, the CNLSY is the only large nationally representative sample that contains achievement tests both for mothers and their children, allowing one to control for maternal academic achievement in investigating racial disparities in achievement. Beyond the simple transmission of any genetic component of achievement, more educated mothers are more likely to spend time with their children engaging in achievement-enhancing activities such as reading, using academically stimulating toys, encouraging young children to learn the alphabet and numbers, and so on (Klebanov, 1994).

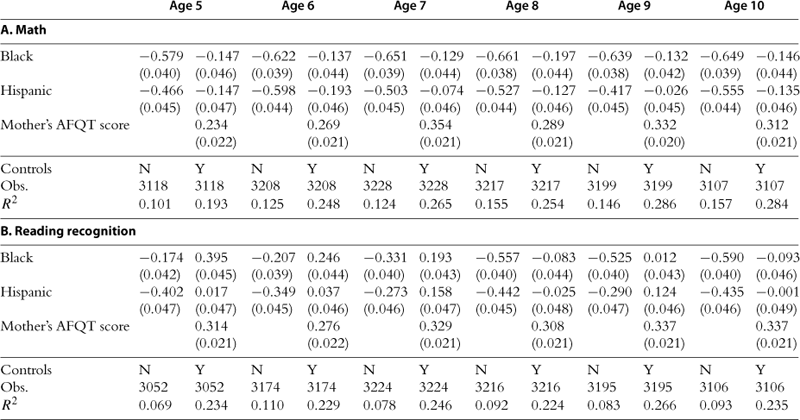

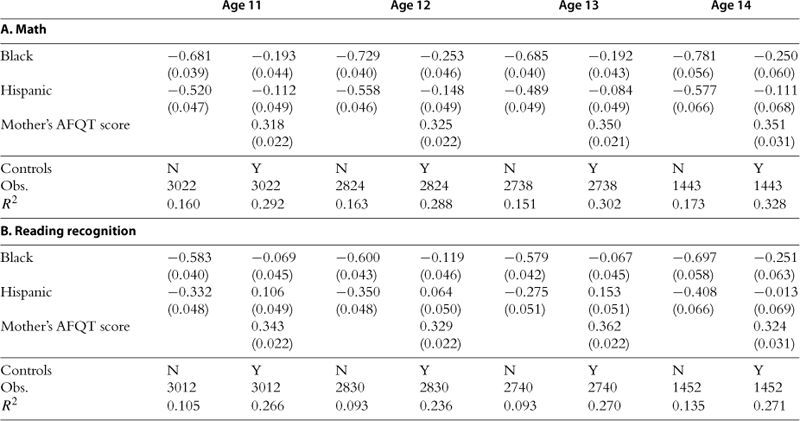

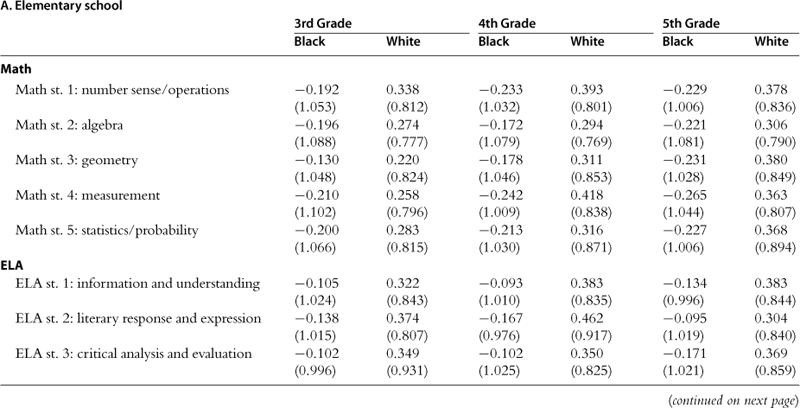

Tables 11 and 12 provide estimates of the racial achievement gap, by age, for children between the ages of five and fourteen.45 Table 11 provides estimates for elementary school ages and Table 12 provides similar estimates for middle school aged children. Both tables contain two panels: Panel A presents results for math achievement and Panel B presents results for reading achievement. The first column under each age presents raw racial differences (and includes dummies for the child’s age in months and for the year in which the assessment was administered). The second column adds controls for race, gender, free lunch status, special education status, whether the child attends a private school, family income, the HOME inventory, mother’s standardized AFQT score, and dummies for the mother’s birth year. Most important of these controls, and unique relative to other datasets, is maternal AFQT.
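One way to write the two specifications described above, estimated separately at each age $a$, is sketched below. The notation is introduced here for illustration and is an assumption, not taken from the tables: $y_{ia}$ is the PIAT score of child $i$ at age $a$, $\text{Race}_{ir}$ are race indicators with non-Hispanic whites omitted, $\mu$ and $\tau$ are dummies for age in months and assessment year, and $X_i$ collects the additional controls listed in the text, including maternal AFQT.

```latex
% First column: raw gaps
y_{ia} = \sum_{r} \beta^{\text{raw}}_{r,a}\,\text{Race}_{ir}
       + \mu_{\text{months}(i)} + \tau_{\text{year}(i)} + \varepsilon_{ia}

% Second column: gaps with controls
y_{ia} = \sum_{r} \beta_{r,a}\,\text{Race}_{ir}
       + X_{i}'\gamma_{a}
       + \mu_{\text{months}(i)} + \tau_{\text{year}(i)} + \varepsilon_{ia}
```

Under this reading, the difference between $\beta^{\text{raw}}_{r,a}$ and $\beta_{r,a}$ is the part of the raw gap for group $r$ that is accounted for by the controls.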

Table 11

Determinants of PIAT math and reading recognition scores, elementary school (CNLSY79).