High availability

This chapter covers the high availability (HA)-related enhancements of IBM i.

4.1 PowerHA SystemMirror for i

This section describes the enhancements included with IBM PowerHA® SystemMirror® for i, which is the strategic IBM high availability product for IBM i.

4.1.1 New PowerHA packaging

IBM PowerHA for i was renamed to IBM PowerHA SystemMirror for i to align with the corresponding Power Systems PowerHA family product PowerHA SystemMirror for AIX.

IBM PowerHA SystemMirror for i is offered in two editions for IBM i 7.1:

•IBM PowerHA SystemMirror for i Standard Edition (5770-HAS *BASE) for local data center replication only

•IBM PowerHA SystemMirror for i Enterprise Edition (5770-HAS option 1) for local or multi-site replication

Customers already using PowerHA for i with IBM i 6.1 are entitled to an upgrade to PowerHA SystemMirror for i Enterprise Edition with IBM i 7.1.

The functional differences between the IBM PowerHA SystemMirror for i Standard and Enterprise Edition are summarized in Figure 4-1.

Figure 4-1 PowerHA SystemMirror for i editions

4.1.2 PowerHA versions

To use any of the new PowerHA SystemMirror for i enhancements, all nodes in the cluster must be upgraded to IBM i 7.1. After all nodes are upgraded, both the cluster version and the PowerHA version must be updated to the current cluster Version 7 and PowerHA Version 2 by running the following CL command:

CHGCLUVER CLUSTER(cluster_name) CLUVER(*UP1VER) HAVER(*UP1VER)

Because PowerHA SystemMirror for i now provides N-2 support for clustering, you can skip one IBM i release and then run the command above twice to raise the cluster version by two levels. For example, a V5R4M0 node in a clustered environment can be upgraded directly to IBM i 7.1, skipping IBM i 6.1.
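The effect of CLUVER(*UP1VER) can be pictured with a small Python model: each run raises the cluster version by exactly one level, and never beyond a level that every node supports. This is a sketch under stated assumptions, not an IBM i API. The release-to-version mapping (V5R4 = 5, V6R1 = 6, V7R1 = 7) and the function name `up1ver` are assumptions for illustration; only cluster Version 7 for IBM i 7.1 comes from the text above.

```python
# Assumed highest cluster version that a node at each IBM i release can run
# (illustrative mapping; only Version 7 for 7.1 is stated in the text).
POTENTIAL_CLUSTER_VERSION = {"V5R4": 5, "V6R1": 6, "V7R1": 7}


def up1ver(current_version: int, node_releases: list[str]) -> int:
    """Hypothetical model of CLUVER(*UP1VER): raise the cluster version by one.

    The new version must be supported by every node, so the ceiling is the
    lowest potential version among the nodes in the cluster.
    """
    ceiling = min(POTENTIAL_CLUSTER_VERSION[r] for r in node_releases)
    new_version = current_version + 1
    if new_version > ceiling:
        raise ValueError(f"cluster version {new_version} is not supported by every node")
    return new_version


if __name__ == "__main__":
    nodes = ["V7R1", "V7R1"]  # all nodes upgraded from V5R4, skipping 6.1
    version = 5               # cluster version still at the assumed V5R4 level
    version = up1ver(version, nodes)
    version = up1ver(version, nodes)
    print(version)            # 7
```

In this model, a third call on the same nodes raises an error, which mirrors the idea that the cluster version cannot run ahead of the node releases.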

4.1.3 PowerHA SystemMirror for i enhancements

This section describes the enhancements in IBM i 7.1 for PowerHA SystemMirror for i.

Enhancements that are delivered with 5799-HAS PRPQ

In April 2012, IBM PowerHA SystemMirror for i added support for the following functions. Some of them were previously available only through the 5799-HAS Program Request Pricing Quotation (PRPQ); the 5799-HAS PRPQ is no longer required to use them:

•Support for managing IBM System Storage® SAN Volume Controller and IBM Storwize® V7000 Copy Services with IBM PowerHA SystemMirror for i 5770-HAS PTF SI45741

•IBM i command-line command for configuring an independent auxiliary storage pool (CFGDEVASP) with 5770-SS1 PTF SI44141

•IBM i command-line command for configuring geographic mirroring (CFGGEOMIR) with 5770-HAS PTF SI44148

•PowerHA GUI in IBM Navigator for i

The following subsections provide a brief overview of these enhancements. For more information, see PowerHA SystemMirror for IBM i Cookbook, SG24-7994.

Support for SAN Volume Controller and V7000 Copy Services

PowerHA SystemMirror for i now also supports Metro Mirror, Global Mirror, IBM FlashCopy®, and LUN level switching for the IBM System Storage SAN Volume Controller and IBM Storwize V7000.

The available commands are similar to the ones that you use for IBM DS8000® Copy Services, but some parameters are different:

•Add SVC ASP Copy Description (ADDSVCCPYD): This command is used to describe a single physical copy of an auxiliary storage pool (ASP) that exists within a SAN Volume Controller and to assign a name to the description.

•Change SVC Copy Description (CHGSVCCPYD): This command changes an existing auxiliary storage pool (ASP) copy description.

•Remove SVC Copy Description (RMVSVCCPYD): This command removes an existing ASP copy description. It does not remove the disk configuration.

•Display SVC Copy Description (DSPSVCCPYD): This command displays an ASP copy description.

•Work with ASP Copy Description (WRKASPCPYD): This command shows both DS8000 and SAN Volume Controller/V7000 copy descriptions.

•Start SVC Session (STRSVCSSN): This command assigns a name to the Metro Mirror, Global Mirror, or FlashCopy session that links the two ASP copy descriptions for the source and target IASP volumes and starts an ASP session for them.

•Change SVC Session (CHGSVCSSN): This command is used to change an existing Metro Mirror, Global Mirror, or FlashCopy session.

•End SVC ASP Session (ENDSVCSSN): This command ends an existing ASP session.

•Display SVC Session (DSPSVCSSN): This command displays an ASP session.

Configure Device ASP (CFGDEVASP) command

The Configure Device ASP (CFGDEVASP) command is part of the IBM i 7.1 base operating system and is available with PTF SI44141. It is used to create or delete an independent auxiliary storage pool (IASP).

If you use this command with the *CREATE action, it performs the following actions:

•Creates the IASP using the specified non-configured disk units.

•Creates an ASP device description with the same name if one does not exist yet.

If you use this command with the *DELETE action, it performs the following actions:

•Deletes the IASP.

•Deletes the ASP device description if it was created by this command.

Figure 4-2 shows the command prompt for creating an IASP through the command-line interface (CLI).

|

Configure Device ASP (CFGDEVASP)

Type choices, press Enter.

ASP device . . . . . . . . . . . ASPDEV > IASP1

Action . . . . . . . . . . . . . ACTION > *CREATE

ASP type . . . . . . . . . . . . TYPE *PRIMARY

Primary ASP device . . . . . . . PRIASPDEV

Protection . . . . . . . . . . . PROTECT *NO

Encryption . . . . . . . . . . . ENCRYPT *NO

Disk units . . . . . . . . . . . UNITS *SELECT

+ for more values

Additional Parameters

Confirm . . . . . . . . . . . . CONFIRM *YES

|

Figure 4-2 Configure Device ASP (CFGDEVASP) command

CFGGEOMIR command

The Configure Geographic Mirror (CFGGEOMIR) command that is shown in Figure 4-3 can be used to create a geographic mirror copy of an existing IASP in a device cluster resource group (CRG).

The command can also create ASP copy descriptions if they do not exist yet and can start an ASP session. It performs all the configuration steps that are needed to take an existing stand-alone IASP and create a geographic mirror copy of it. This command requires 5770-HAS PTF SI44148 on the system that is running IBM i 7.1.

|

Configure Geographic Mirror (CFGGEOMIR)

Type choices, press Enter.

ASP device . . . . . . . . . . . ASPDEV

Action . . . . . . . . . . . . . ACTION

Source site . . . . . . . . . . SRCSITE *

Target site . . . . . . . . . . TGTSITE *

Session . . . . . . . . . . . . SSN

Source ASP copy description .

Target ASP copy description .

Transmission delivery . . . . . DELIVERY *SYNC

Disk units . . . . . . . . . . . UNITS *SELECT

+ for more values

Additional Parameters

Confirm . . . . . . . . . . . . CONFIRM *YES

Cluster . . . . . . . . . . . . CLUSTER *

Cluster resource group . . . . . CRG *

More...

|

Figure 4-3 Configure Geographic Mirror (CFGGEOMIR) command

PowerHA GUI in IBM Navigator for i

The PowerHA GUI is available in IBM Navigator for i. It supports switched disk and geographic mirroring environments, and DS8000 FlashCopy, Metro Mirror, and Global Mirror technologies. The PowerHA GUI provides an easy-to-use interface to configure and manage a PowerHA high availability environment, including cluster nodes, the administrative domain, and independent auxiliary storage pools (IASPs).

Section 4.1.4, “PowerHA SystemMirror for i graphical interfaces” on page 131 introduces the GUIs that are associated with high availability functions: the two existing interfaces and the PowerHA GUI.

N-2 support for clustering

Before 5770-SS1 PTF SI42297, PowerHA and clustering supported a difference of only one release: all nodes in a cluster had to be within one release of each other, and a cluster node could be upgraded only one release at a time.

After this PTF is installed, a 7.1 node can be added to a 5.4 cluster. A node can also be upgraded from a 5.4 cluster node directly to a 7.1 cluster node if this PTF is installed during the upgrade.

The main intent of this enhancement is to ensure that nodes can be upgraded directly from 5.4 to 7.1. PowerHA replication of the IASP still does not allow replication to an earlier release, so for a complete high availability solution, other than during an upgrade of the HA environment, keep all nodes at the same release level.
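To make the N-1 versus N-2 rule concrete, the following Python sketch checks whether a mix of node releases stays within the allowed gap. It is a hypothetical model, not an IBM API, and it does not query a real cluster. The release list and the gap constants are assumptions derived from the description above (one release of difference before PTF SI42297, two after).

```python
# Illustrative ordering of releases, oldest to newest.
RELEASE_ORDER = ["V5R4", "V6R1", "V7R1"]

# Assumption: N-1 before PTF SI42297, N-2 after it is installed.
MAX_GAP_WITHOUT_PTF = 1
MAX_GAP_WITH_PTF = 2


def release_gap(releases: list[str]) -> int:
    """Return the number of releases between the oldest and newest node."""
    positions = [RELEASE_ORDER.index(r) for r in releases]
    return max(positions) - min(positions)


def mix_is_supported(releases: list[str], ptf_installed: bool) -> bool:
    """True if the node releases fall within the allowed clustering gap."""
    limit = MAX_GAP_WITH_PTF if ptf_installed else MAX_GAP_WITHOUT_PTF
    return release_gap(releases) <= limit


if __name__ == "__main__":
    cluster = ["V5R4", "V5R4", "V7R1"]  # a 7.1 node added to a 5.4 cluster
    print(mix_is_supported(cluster, ptf_installed=False))  # False
    print(mix_is_supported(cluster, ptf_installed=True))   # True
```

A gap check like this covers only cluster membership; it says nothing about IASP replication, which still cannot target an earlier release.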

Duplicate library error handling

Duplicate libraries and spool files are not allowed between SYSBAS and an IASP. When the IASP is available, the operating system prevents a duplicate library or spool file from being created. However, if a duplicate library or spool file is created in SYSBAS while the IASP is unavailable, the next varyon of the IASP fails.

With this enhancement, messages CPDB8EB and CPF9898, shown in Figure 4-4, are sent to the QSYSOPR message queue at IASP vary-on time when a library with the same name is found in both SYSBAS and the IASP. After the duplicate library issue is resolved, the vary-on of the IASP can be continued or canceled.

This enhancement is available in both 6.1 with 5761-SS1 PTF SI44564 and 7.1 with 5770-SS1 PTF SI44326.

|

Additional Message Information

Message ID . . . . . . : CPDB8EB Severity . . . . . . . : 30

Message type . . . . . : Information

Date sent . . . . . . : 10/10/12 Time sent . . . . . . : 09:30:30

Message . . . . : Library TESTLIB01 exists in *SYSBAS and ASP device IASP01.

Cause . . . . . : Auxiliary storage pool (ASP) device IASP01 cannot be

varied on to an available status because the ASP contains a library named

TESTLIB01 and a library by the same name already exists in the system ASP or

a basic user ASP (*SYSBAS).

Recovery . . . : Use the Rename Object (RNMOBJ) or Delete Library (DLTLIB)

command to rename or delete library TESTLIB01 from ASP IASP01 or *SYSBAS to

remove the duplicate library condition. Vary ASP device IASP01 off and

retry the vary on.

Bottom

Press Enter to continue.

Additional Message Information

Message ID . . . . . . : CPF9898 Severity . . . . . . . : 40

Message type . . . . . : Inquiry

Date sent . . . . . . : 10/10/12 Time sent . . . . . . : 09:30:30

Message . . . . : See previous CPDB8EB messages in MSGQ QSYSOPR. Respond (C G).

Cause . . . . . : This message is used by application programs as a general

escape message.

Bottom

Type reply below, then press Enter.

Reply . . . .

F3=Exit F6=Print F9=Display message details F12=Cancel

F21=Select assistance level

|

Figure 4-4 CPDB8EB and CPF9898 messages for duplicate library
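The duplicate-library condition that blocks the vary-on is essentially a set intersection between the library names in *SYSBAS and those in the IASP. The Python sketch below models that check with hypothetical library lists. It does not read real IBM i objects, and the printed text only imitates message CPDB8EB.

```python
def find_duplicate_libraries(sysbas_libs: set[str], iasp_libs: set[str]) -> list[str]:
    """Return the library names that exist in both *SYSBAS and the IASP, sorted."""
    return sorted(sysbas_libs & iasp_libs)


def can_vary_on(asp_device: str, sysbas_libs: set[str], iasp_libs: set[str]) -> bool:
    """Model of the vary-on check: report each duplicate and block until resolved."""
    duplicates = find_duplicate_libraries(sysbas_libs, iasp_libs)
    for lib in duplicates:
        # Text modeled on CPDB8EB; illustrative only.
        print(f"Library {lib} exists in *SYSBAS and ASP device {asp_device}.")
    return not duplicates


if __name__ == "__main__":
    sysbas = {"QGPL", "TESTLIB01"}      # hypothetical *SYSBAS libraries
    iasp = {"APPDTA", "TESTLIB01"}      # hypothetical IASP01 libraries
    print(can_vary_on("IASP01", sysbas, iasp))  # reports TESTLIB01, then False
```

Renaming or deleting one copy of the library, as the CPDB8EB recovery text suggests, empties the intersection, and the modeled vary-on is then allowed.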

4.1.4 PowerHA SystemMirror for i graphical interfaces

With IBM i 7.1, there are three different graphical user interfaces (GUIs) within IBM Navigator for i:

•Cluster Resource Services GUI

•High Availability Solutions Manager GUI

•PowerHA GUI

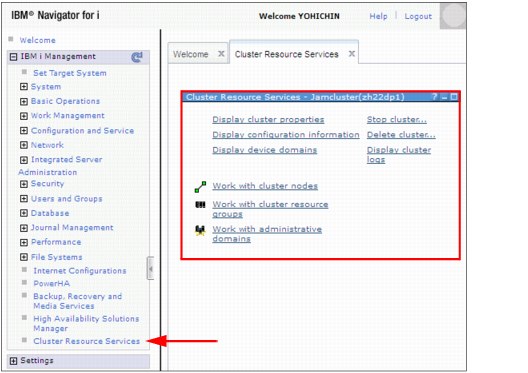

Cluster Resource Services GUI

You can access this GUI by completing the steps shown in Figure 4-5:

1. Expand IBM i Management.

2. Select Cluster Resource Services.

Figure 4-5 Cluster Resource Services GUI

The Cluster Resource Services GUI has the following characteristics:

•Supports the existing environment

•Limited Independent ASP (IASP) function

•Cannot manage from one node

•Difficult to determine the status

|

Removed feature: The clustering GUI plug-in for System i Navigator from the High Availability Switchable Resources licensed program (IBM i option 41) was removed in IBM i 7.1.

|

High Availability Solutions Manager GUI

You can access this GUI by completing the steps shown in Figure 4-6:

1. Expand IBM i Management.

2. Select High Availability Solutions Manager.

Figure 4-6 High Availability Solutions Manager GUI

The High Availability Solutions Manager GUI has the following characteristics:

•“Dashboard” interface

•No support for existing environments

•Cannot choose names

•Limited to four configurations

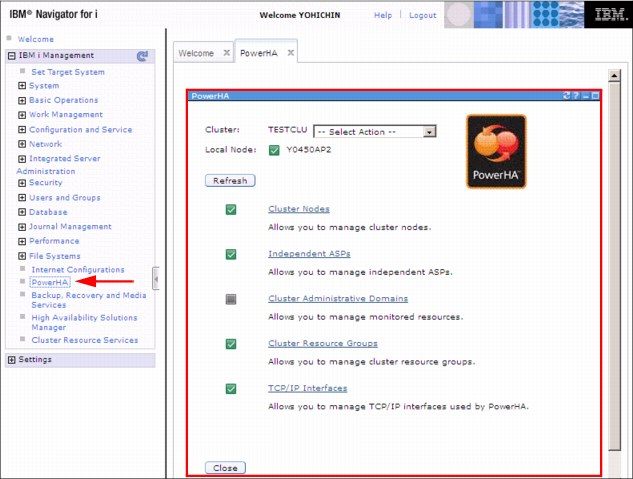

New PowerHA SystemMirror for i GUI

IBM PowerHA SystemMirror for i provides a new PowerHA interface within IBM Navigator for i. This PowerHA GUI was first delivered with the October 2011 announcement of the 5799-HAS PRPQ, but only in English at that level. With the April 2012 enhancements of IBM PowerHA SystemMirror for i, the GUI supports multiple languages.

You can access the new GUI by completing the following steps, as shown in Figure 4-7:

1. Expand IBM i Management.

2. Select PowerHA.

Figure 4-7 PowerHA SystemMirror for i GUI

The PowerHA GUI manages the high availability solution from a single window. It supports the following items:

•Geographic mirroring

•Switched disk (IOA)

•SVC/V7000/DS6000/DS8000 Metro Mirror

•SVC/V7000/DS6000/DS8000 Global Mirror

•SVC/V7000/DS6000/DS8000 FlashCopy

•SVC/V7000/DS6000/DS8000 LUN level switching

For more information about the PowerHA GUI, see Chapter 9, “PowerHA User Interfaces” in the PowerHA SystemMirror for IBM i Cookbook, SG24-7994.

Differences between the three graphical interfaces

Figure 4-8 shows the main differences between the three available graphical interfaces.

Figure 4-8 Main differences between the graphical interfaces

|

Note: Because the PowerHA GUI combines the functions of the other two GUIs, those GUIs will be withdrawn in a later release.

|

4.1.5 N_Port ID virtualization support

The new N_Port ID Virtualization (NPIV) support made available with IBM i 6.1.1 or later is fully supported by IBM PowerHA SystemMirror for i for IBM System Storage DS8000 series storage-based replication.

Using NPIV with PowerHA SystemMirror for i does not require dedicated Fibre Channel IOAs for SYSBAS and each IASP, because the (virtual) IOP reset that occurs when you switch the IASP affects only the virtual Fibre Channel client adapter, instead of all ports of the physical Fibre Channel IOA, which are reset in a native-attached storage environment.

For an overview of the new NPIV support by IBM i, see Chapter 7, “Virtualization” on page 319.

For more information about NPIV implementation in an IBM i environment, see DS8000 Copy Services for IBM i with VIOS, REDP-4584.

4.1.6 Asynchronous geographic mirroring

Asynchronous geographic mirroring is a new function supported by PowerHA SystemMirror for i Enterprise Edition with IBM i 7.1. It extends the previously available synchronous geographic mirroring option, which, for performance reasons, is limited to metro area distances of up to 30 km.

The asynchronous delivery of geographic mirroring (not to be confused with the asynchronous mirroring mode of synchronous geographic mirroring) allows IP-based replication beyond the distance limits of synchronous geographic mirroring.

Asynchronous delivery, which also requires the asynchronous mirroring mode, works by duplicating any changed IASP disk pages in the *BASE memory pool on the source system and sending them asynchronously to the target system while preserving the write order. Therefore, although the data on the target system might not be current, at any time it represents a so-called crash-consistent copy of the source system.
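The following Python sketch models the idea behind asynchronous delivery: each changed page is copied into a FIFO queue on the source (standing in for the copies held in the *BASE pool), sent oldest first, and applied on the target in the same order. Because of that ordering, the target always equals the source as it was after some earlier write, which is what crash-consistent means here. The class and method names are hypothetical, and the model does not describe SLIC internals.

```python
from collections import deque


class AsyncGeoMirror:
    """Hypothetical model of write-order-preserving asynchronous delivery."""

    def __init__(self) -> None:
        self.source: dict[int, bytes] = {}  # page number -> contents on the source IASP
        self.target: dict[int, bytes] = {}  # page number -> contents on the mirror copy
        self.in_transit: deque = deque()    # FIFO of (page, data); preserves write order

    def write(self, page: int, data: bytes) -> None:
        """Apply a write on the source and queue a copy of the changed page."""
        self.source[page] = data
        self.in_transit.append((page, data))

    def deliver(self, count: int = 1) -> None:
        """Send up to `count` queued pages, oldest first, and apply them on the target."""
        for _ in range(min(count, len(self.in_transit))):
            page, data = self.in_transit.popleft()
            self.target[page] = data

    def bytes_in_transit(self) -> int:
        """Rough analogue of the 'Total data in transit' value shown by DSPASPSSN."""
        return sum(len(data) for _, data in self.in_transit)


if __name__ == "__main__":
    m = AsyncGeoMirror()
    m.write(1, b"A")
    m.write(2, b"B")
    m.write(1, b"C")
    m.deliver(2)
    print(m.target)              # {1: b'A', 2: b'B'}: the source state after two writes
    print(m.bytes_in_transit())  # 1
```

The queue length in this model also shows why asynchronous delivery costs memory on the source: unsent pages stay queued until the target catches up.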

While the source system is available, you can check how current the target system is and how much memory asynchronous geographic mirroring uses on the source system. Use the Display ASP Session (DSPASPSSN) command to show the total data in transit, as shown in Figure 4-9.

|

Display ASP Session

04/09/10 15:53:50

Session . . . . . . . . . . . . . . . . . . : GEO

Type . . . . . . . . . . . . . . . . . . : *GEOMIR

Transmission Delivery . . . . . . . . . . : *ASYNC

Mirroring Mode . . . . . . . . . . . . . : *ASYNC

Total data in transit . . . . . . . . . . : 0.02 MB

Suspend timeout . . . . . . . . . . . . . : 240

Synchronization priority . . . . . . . . : *MEDIUM

Tracking space allocated . . . . . . . . : 100%

Copy Descriptions

ASP ASP Data

Device Copy Role State State Node

GEO001 GEO001S2 PRODUCTION AVAILABLE USABLE RCHASHAM

GEO001 GEO001S1 MIRROR ACTIVE UNUSABLE RCHASEGS

|

Figure 4-9 DSPASPSSN command data in transit information

For ASP sessions of type *GEOMIR, changing geographic mirroring options requires that the IASP be varied off. Asynchronous delivery can be enabled with the new DELIVERY(*ASYNC) parameter of the Change ASP Session (CHGASPSSN) command, as shown in Figure 4-10.

|

Change ASP Session (CHGASPSSN)

Type choices, press Enter.

Session . . . . . . . . . . . . SSN

Option . . . . . . . . . . . . . OPTION

ASP copy: ASPCPY

Preferred source . . . . . . . *SAME

Preferred target . . . . . . . *SAME

+ for more values

Suspend timeout . . . . . . . . SSPTIMO *SAME

Transmission delivery . . . . . DELIVERY *ASYNC

Mirroring mode . . . . . . . . . MODE *SAME

Synchronization priority . . . . PRIORITY *SAME

Tracking space . . . . . . . . . TRACKSPACE *SAME

FlashCopy type . . . . . . . . . FLASHTYPE *SAME

Persistent relationship . . . . PERSISTENT *SAME

ASP device . . . . . . . . . . . ASPDEV *ALL

+ for more values

Track . . . . . . . . . . . . . TRACK *YES

More...

|

Figure 4-10 CHGASPSSN command - *ASYNC Transmission delivery parameter

You can also view and change this setting in the IBM Navigator for i GUI: click PowerHA → Independent ASPs, click the menu next to the IASP name, and select Details. Then click the menu under the large arrow in the middle of the topic pane and select Mirroring Properties, as shown in Figure 4-11.

|

Note: You must stop the geographic mirroring session by running the ENDASPSSN command before you change this setting.

|

Figure 4-11 Independent ASP details in PowerHA GUI

Figure 4-12 shows the changed setting.

Figure 4-12 Independent ASP Mirroring Properties in PowerHA GUI

Geographic mirroring synchronization priority

You can now adjust the synchronization priority while the IASP is in use. Previously, the IASP had to be varied off to change it.

4.1.7 LUN level switching

LUN level switching is a new function that is provided by PowerHA SystemMirror for i in IBM i 7.1. It provides a local high availability solution with IBM System Storage DS8000 or DS6000™ series, IBM Storwize V7000 or V3700, or IBM System Storage SAN Volume Controller similar to what used to be available as switched disks for IBM i internal storage.

With LUN level switching, single-copy (that is, nonreplicated) IASPs that are managed by a cluster resource group device domain and reside on supported storage can be switched between IBM i systems in a cluster.

A typical implementation scenario for LUN level switching uses multi-site replication through Metro Mirror or Global Mirror for disaster recovery and protection against storage subsystem outages. In this scenario, additional LUN level switching at the production site provides local high availability protection, so an IBM i server outage does not require a site switch.

Implementing on IBM System Storage DS8000 or DS6000

To implement LUN level switching on an IBM System Storage DS8000 or DS6000, create an ASP copy description for each switchable IASP using the Add ASP Copy Description (ADDASPCPYD) command, which is enhanced with recovery domain information for LUN level switching, as shown in Figure 4-13.

|

Add ASP Copy Description (ADDASPCPYD)

Type choices, press Enter.

Logical unit name: LUN

TotalStorage device . . . . . *NONE

Logical unit range . . . . . .

+ for more values

Consistency group range . . .

+ for more values

Recovery domain: RCYDMN

Cluster node . . . . . . . . . *NONE

Host identifier . . . . . . .

+ for more values

Volume group . . . . . . . . .

+ for more values

+ for more values

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

|

Figure 4-13 IBM i ADDASPCPYD enhancement for DS8000, DS6000 LUN level switching

An ASP session is not required for LUN level switching, as there is no replication for the IASP involved.

|

Important: For LUN level switching, the backup node host connection on the DS8000 or DS6000 storage system must not have a volume group (VG) assigned. PowerHA automatically unassigns the VG from the production node and assigns it to the backup node at site-switches or failovers.

|
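The volume group (VG) handover that PowerHA performs at a switch can be sketched as a small state change on a table of host connections. This Python model is hypothetical: the host connection names, the VG ID, and the class are illustrative, and no DS CLI calls are made. It only encodes the two rules stated above, that the backup host connection must have no VG assigned and that the VG moves from the production node to the backup node.

```python
from typing import Optional


class LunSwitchModel:
    """Hypothetical model of moving a volume group between host connections."""

    def __init__(self, assignments: dict[str, Optional[str]]) -> None:
        # host connection name -> assigned VG ID, or None when no VG is assigned
        self.assignments = dict(assignments)

    def switch(self, production: str, backup: str) -> None:
        """Unassign the VG from the production connection and assign it to the backup."""
        if self.assignments.get(backup) is not None:
            raise ValueError(f"backup host connection {backup} must not have a VG assigned")
        vg = self.assignments.get(production)
        if vg is None:
            raise ValueError(f"production host connection {production} has no VG to switch")
        self.assignments[production] = None
        self.assignments[backup] = vg


if __name__ == "__main__":
    model = LunSwitchModel({"PRODHOST": "V10", "BKUPHOST": None})
    model.switch("PRODHOST", "BKUPHOST")
    print(model.assignments)  # {'PRODHOST': None, 'BKUPHOST': 'V10'}
```

The precondition check explains the Important note: if the backup connection already had a VG, the model (like the real configuration) could not take over the production VG cleanly.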

Implementing on IBM Storwize V7000, V3700, or IBM System Storage SAN Volume Controller

To implement LUN level switching on an IBM Storwize V7000, V3700, or IBM System Storage SAN Volume Controller, create an ASP copy description for each switchable IASP by using the Add SVC Copy Description (ADDSVCCPYD) command. This command is enhanced with recovery domain information for LUN level switching, as shown in Figure 4-14.

|

Add SVC ASP Copy Description (ADDSVCCPYD)

Type choices, press Enter.

Virtual disk range: VRTDSKRNG

Range start . . . . . . . . .

Range end . . . . . . . . . .

Host identifier . . . . . . . *ALL

+ for more values

+ for more values

Device domain . . . . . . . . . DEVDMN *

Recovery domain: RCYDMN

Cluster node . . . . . . . . . *NONE

Host identifier . . . . . . .

+ for more values

+ for more values

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

|

Figure 4-14 IBM i ADDSVCCPYD enhancement for V7000, V3700, SVC LUN level switching

An ASP session is not required for LUN level switching, as there is no replication for the IASP involved.

|

Important: For LUN level switching, the IASP volumes on the V7000, V3700, or SAN Volume Controller storage system must not be mapped to the backup node host connection. PowerHA automatically removes the host mapping from the production node and assigns it to the backup node at site-switches or failovers.

|

4.1.8 IBM System Storage SAN Volume Controller and IBM Storwize V7000 split cluster

Support has also been added for the split cluster function of the IBM System Storage SAN Volume Controller and IBM Storwize V7000. Split cluster environments are commonly used on other platforms, and this support enables IBM i customers to implement the same mechanisms that they use on those platforms.

A split cluster setup uses a pair of storage units in a cluster arrangement. Each storage unit presents a copy of the IASP to the server at its local site, and PowerHA manages the system side of the takeover. As with any split cluster environment, you can end up with a “split brain” or partitioned state. To avoid this state, split cluster support requires a quorum device, preferably at a third site with a separate power supply. The storage unit automatically uses this quorum device to resolve the split brain condition.

For more information, see IBM i and IBM Storwize Family: A Practical Guide to Usage Scenarios, SG24-8197.
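How a quorum device breaks a split brain can be illustrated with a generic first-to-reserve tie-break, sketched below in Python. This is a textbook technique chosen for illustration, not the actual SAN Volume Controller quorum algorithm; the class, function, and site names are hypothetical.

```python
import threading
from typing import Optional


class QuorumDevice:
    """Generic tie-breaker: the first site to reserve the device keeps running."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.owner: Optional[str] = None

    def reserve(self, site: str) -> bool:
        """Atomically reserve the device; True if this site holds the reservation."""
        with self._lock:
            if self.owner is None:
                self.owner = site
            return self.owner == site


def site_may_continue(quorum: QuorumDevice, site: str, reaches_quorum: bool) -> bool:
    """A site that cannot reach the quorum device, or loses the race, stops serving I/O."""
    return reaches_quorum and quorum.reserve(site)


if __name__ == "__main__":
    q = QuorumDevice()
    # The inter-site link is down, but both sites can still reach the third-site device.
    print(site_may_continue(q, "SITE_A", True))  # True: first to reserve
    print(site_may_continue(q, "SITE_B", True))  # False: SITE_A already holds it
```

Placing the quorum device at a third site with separate power matters in this model because a site that loses access to it can never win the reservation, so only one side continues.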

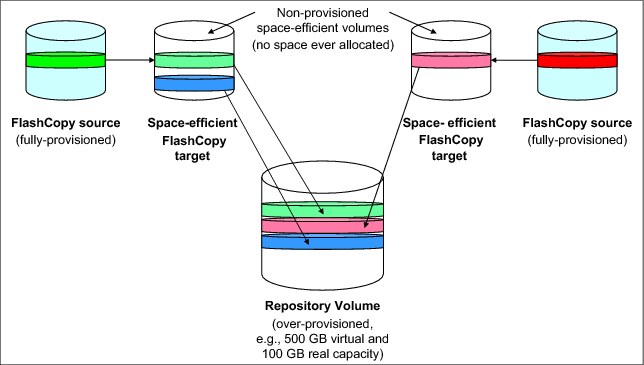

4.1.9 Space-efficient FlashCopy

PowerHA SystemMirror for i with IBM i 7.1 now supports space-efficient FlashCopy of the IBM System Storage DS8000 series.

You can use the IBM System Storage DS8000 series FlashCopy SE licensed feature to create space-efficient FlashCopy target volumes that can help you reduce the required physical storage space for the FlashCopy target volumes. These volumes are typically needed only for a limited time (such as during a backup to tape).

A space-efficient FlashCopy target volume reports to the host a virtual capacity that matches the physical capacity of the fully provisioned FlashCopy source volume, but no physical storage space is allocated for it when it is created. Instead, physical storage space for space-efficient FlashCopy target volumes is allocated on demand for host write operations, in 64-KB track granularity, from a configured repository volume that is shared by all space-efficient FlashCopy target volumes within the same DS8000 extent pool, as shown in Figure 4-15.

Figure 4-15 DS8000 Space-Efficient FlashCopy
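On-demand allocation in 64-KB tracks from a shared repository can be modeled in a few lines of Python. The sketch below is an assumption-laden illustration, not DS8000 microcode: the class names and the repository size are hypothetical, and only host writes trigger allocation, as described above.

```python
TRACK_SIZE = 64 * 1024  # 64-KB allocation granularity, as described above


class Repository:
    """Shared physical pool for all space-efficient targets in one extent pool (model)."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity_tracks = capacity_bytes // TRACK_SIZE
        self.used_tracks = 0

    def allocate_track(self) -> None:
        if self.used_tracks >= self.capacity_tracks:
            raise RuntimeError("repository full")
        self.used_tracks += 1


class SpaceEfficientTarget:
    """Reports the full virtual size to the host but allocates tracks only on write."""

    def __init__(self, virtual_capacity: int, repo: Repository) -> None:
        self.virtual_capacity = virtual_capacity
        self.repo = repo
        self.allocated: set[int] = set()  # indexes of tracks that have physical space

    def write(self, offset: int, length: int) -> None:
        """Allocate each 64-KB track touched by a host write that is not yet allocated."""
        if offset < 0 or length <= 0 or offset + length > self.virtual_capacity:
            raise ValueError("write outside virtual capacity")
        first = offset // TRACK_SIZE
        last = (offset + length - 1) // TRACK_SIZE
        for track in range(first, last + 1):
            if track not in self.allocated:
                self.repo.allocate_track()
                self.allocated.add(track)

    def physical_bytes(self) -> int:
        return len(self.allocated) * TRACK_SIZE


if __name__ == "__main__":
    repo = Repository(10 * TRACK_SIZE)
    target = SpaceEfficientTarget(100 * 1024**3, repo)  # reports 100 GiB to the host
    target.write(0, 4096)                # touches track 0
    target.write(60 * 1024, 8 * 1024)    # spans tracks 0 and 1; only track 1 is new
    print(target.physical_bytes())       # 131072
    print(repo.used_tracks)              # 2
```

The gap between the reported 100 GiB and the 128 KiB actually consumed is the saving that makes FlashCopy SE attractive for short-lived targets such as backup copies.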

From a user perspective, the PowerHA setup (not the DS8000 FlashCopy setup) for space-efficient FlashCopy is identical to the setup for traditional FlashCopy with the nocopy option. This is because PowerHA SystemMirror for i internally queries the DS8000 to determine the type of FlashCopy relationship and then uses the corresponding DS CLI command syntax, for either traditional FlashCopy or FlashCopy SE, when it runs the mkflash and rmflash commands.

For more information about using IBM System Storage DS8000 FlashCopy SE with IBM i, see IBM System Storage Copy Services and IBM i: A Guide to Planning and Implementation, SG24-7103.

4.1.10 Reverse FlashCopy

Reverse FlashCopy is now supported from a FlashCopy target to a FlashCopy source that is also the source of a Metro Mirror or Global Mirror relationship. Any changes that result from this operation are then replicated to the target of the replication relationship.

The FlashCopy is reversed by using the Change ASP Session (CHGASPSSN) command with OPTION(*REVERSE), as shown in Figure 4-16.

|

Change ASP Session (CHGASPSSN)

Type choices, press Enter.

Session . . . . . . . . . . . . > FLASHCOPY Name

Option . . . . . . . . . . . . . > *REVERSE *CHGATTR, *SUSPEND...

Device domain . . . . . . . . . * Name, *

Bottom

F3=Exit F4=Prompt F5=Refresh F10=Additional parameters F12=Cancel

F13=How to use this display F24=More keys

|

Figure 4-16 IBM i command CHGASPSSN

|

Note: The ability to reverse the FlashCopy is also available with the “no copy” option, in which case the FlashCopy relationship is removed as well.

|

4.1.11 FlashCopy at a Global Mirror target

You can now take a FlashCopy at the target of a Global Mirror relationship with a single command. Use the Start ASP Session (STRASPSSN) command with TYPE(*FLASHCOPY) to run the FlashCopy operation.

This improvement removes the need, which existed in previous releases, to manually detach and reattach the Global Mirror session. PowerHA now handles the entire process in a single command.

4.1.12 Better detection of cluster node outages

There are situations where a sudden cluster node outage, such as a main storage memory dump, an HMC immediate partition power-off, or a system hardware failure, results in a partitioned cluster. If so, you are alerted by the failed cluster communication message CPFBB22 sent to QHST and the automatic failover not started message CPFBB4F sent to the QSYSOPR message queue on the first backup node of the CRG.

You must determine the reason for the cluster partition condition. This condition can be caused either by a network problem or by a sudden cluster node outage. You must either solve the network communication problem or declare the cluster node as failed, which can be done by running the Change Cluster Node Entry (CHGCLUNODE) command in preparation for a cluster failover.

With IBM i 7.1, PowerHA SystemMirror for i provides advanced node failure detection by cluster nodes. The cluster nodes register with an HMC or, on IVM-managed systems, with the Virtual I/O Server (VIOS) management partition. The cluster is then notified when a severe partition or system failure occurs, which triggers a cluster failover event instead of causing a cluster partition condition.

For LPAR failure conditions, the IBM POWER® Hypervisor™ (PHYP) notifies the HMC that an LPAR failed. For system failure conditions other than a sudden system power loss, the flexible service processor (FSP) notifies the HMC of the failure. The CIM server on the HMC or VIOS can then generate a power state change CIM event for any registered CIM clients.

Whenever a cluster node is started, for each configured cluster monitor, IBM i CIM client APIs are used to subscribe to the particular power state change CIM event. The HMC CIM server generates such a CIM event and actively sends it to any registered CIM clients (that is, no heartbeat polling is involved with CIM). On the IBM i cluster nodes, the CIM event listener compares the events with available information about the nodes that constitute the cluster to determine whether it is relevant for the cluster to act upon. For relevant power state change CIM events, the cluster heartbeat timer expiration is ignored (that is, IBM i clustering immediately triggers a failover condition in this case).
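The relevance check that the CIM event listener performs can be sketched as a small Python filter. The event fields, the state strings, and the function name are hypothetical simplifications, not the real CIM class or property names. The sketch only encodes the logic described above: ignore events for partitions that are not cluster nodes, and for relevant failure events trigger a failover immediately instead of waiting for heartbeat expiration.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative failure states; real CIM property values are not modeled here.
FAILURE_STATES = frozenset({"failed", "powered_off"})


@dataclass(frozen=True)
class PowerStateEvent:
    """Simplified power state change event pushed by the HMC or VIOS CIM server."""
    partition: str  # name of the partition the event refers to
    new_state: str  # new power state (hypothetical values)


def handle_event(event: PowerStateEvent,
                 cluster_nodes: dict[str, str]) -> tuple[str, Optional[str]]:
    """Decide what the cluster does with one event in this model.

    cluster_nodes maps partition names to cluster node names.
    Returns ("failover", node) for a relevant failure, else ("ignore", None).
    """
    node = cluster_nodes.get(event.partition)
    if node is None or event.new_state not in FAILURE_STATES:
        return ("ignore", None)
    # Relevant failure: act now instead of waiting for the heartbeat timer.
    return ("failover", node)


if __name__ == "__main__":
    nodes = {"PRODLPAR": "CTCV71"}  # hypothetical partition-to-node mapping
    print(handle_event(PowerStateEvent("PRODLPAR", "failed"), nodes))   # ('failover', 'CTCV71')
    print(handle_event(PowerStateEvent("OTHERLPAR", "failed"), nodes))  # ('ignore', None)
```

Because the HMC pushes these events, the model needs no polling loop; each event is evaluated once when it arrives.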

Using advanced node failure detection requires SSH and CIMOM TCP/IP communication to be set up between the IBM i cluster nodes and the HMC or VIOS. Also, a cluster monitor must be added to the IBM i cluster nodes, for example, through the new Add Cluster Monitor (ADDCLUMON) command, as shown in Figure 4-17. This command enables communication with the CIM server on the HMC or VIOS.

|

Add Cluster Monitor (ADDCLUMON)

Type choices, press Enter.

Cluster . . . . . . . . . . . . HASM_CLU Name

Node identifier . . . . . . . . CTCV71 Name

Monitor type . . . . . . . . . . *CIMSVR *CIMSVR

CIM server:

CIM server host name . . . . . HMC1

CIM server user id . . . . . . hmcuser

CIM server user password . . . password

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

|

Figure 4-17 Add Cluster Monitor (ADDCLUMON) command

For more information about configuring clustering advanced node failure detection, see the Advanced node failure detection topic in the IBM i 7.1 Knowledge Center:

4.1.13 Improved geographic mirroring full synchronization performance

Performance improvements were implemented in IBM i 7.1 for geographic mirroring full synchronization.

Changes within the System Licensed Internal Code (SLIC) provide more efficient processing of the data that is sent to the target system during a full resynchronization. Even with source-side and target-side tracking, some situations require a full synchronization of the production copy, for example, whenever the IASP cannot be varied off normally because of a sudden cluster node outage.

The achievable performance improvement varies based on the IASP data. IASPs with many small objects see more benefit than those IASPs with a smaller number of large objects.

4.1.14 Geographic mirroring in an IBM i hosted IBM i client partition environment

In an IBM i hosted IBM i client partition environment, you can replicate the entire storage of an IBM i client partition by placing its network server storage spaces (*NWSSTG) in an IASP of the IBM i host partition and using geographic mirroring between the IBM i host partitions, as shown in Figure 4-18.

Figure 4-18 Geographic mirroring for an IBM i hosted IBM i client partition environment

The advantage of this solution is that no IASP is needed on the production (client) partition, so no application changes are required.

However, consider the following two points for this solution:

•It is a cold-standby solution, so an IPL on the backup IBM i client partition is required after you switch to the backup node.

•Temporary storage spaces on the IBM i client partition are also transferred to the backup node side.

Starting in October 2012, geographic mirroring in PowerHA SystemMirror for i can skip the transfer of temporary storage spaces for the IBM i client partition. This enhancement reduces network traffic between the IBM i host partitions on the production node side and the backup node side.

4.1.15 Support virtualization capabilities on an active cluster node

IBM i 7.1 supports Suspend/Resume and Live Partition Mobility, which are provided by the IBM PowerVM® virtualization technology stack. If your IBM i 7.1 partition is a member of an active cluster, you cannot suspend this partition, but can move it to another Power Systems server by using Live Partition Mobility.

For more information about Suspend/Resume and Live Partition Mobility, see Chapter 7, “Virtualization” on page 319.

4.1.16 Cluster administrative domain enhancements

The IBM cluster administrative domain support was enhanced in IBM i 7.1 with the following two new monitored resource entries (MREs):

•Authorization lists (*AUTL)

•Printer device descriptions (*PRTDEV) for LAN or virtual printers

PowerHA SystemMirror for i is required to support these two new administrative domain monitored resource entries.

For a complete list of attributes that can be monitored and synchronized among cluster nodes by the cluster administrative domain, see the “Attributes that can be monitored” topic in the IBM i 7.1 Knowledge Center:

Additional enhancements were made to how monitored resource entries are added to and removed from the cluster administrative domain:

•New entries can now be added to the administrative domain even if the object cannot be created on all nodes. If the object cannot be created on every node in the administrative domain, the MRE is placed in an inconsistent state as a reminder that the object must still be created manually.

•Entries can now be removed from the cluster administrative domain even when some of the nodes in the administrative domain are not active.

The processing that is associated with cluster administrative domains was also enhanced by the use of the QCSTJOBD job description, which allows IBM-initiated jobs to run in the QSYSWRK subsystem from the QSYSNOMAX job queue. This improves processing by eliminating potential contention with customer jobs that use the QBATCH subsystem and job queue.

4.1.17 Working with cluster administrative domain monitored resources

A new CL command, Work with Monitored Resources (WRKCADMRE), has been introduced to provide easier management of cluster administrative domain MREs, including sorting and searching capabilities. See Figure 4-19.

Work with Monitored Resources (WRKCADMRE)

Type choices, press Enter.

Cluster administrative domain . *          Name, *

                                                                  Bottom
F3=Exit   F4=Prompt   F5=Refresh   F12=Cancel   F13=How to use this display
F24=More keys

Figure 4-19 Work with Monitored Resources (WRKCADMRE) command

The default for the ADMDMN parameter is to use the administrative domain that the current node is a part of. You are then presented with the panel shown in Figure 4-20. From this list, you can sort the entries or go to an entry of interest.

Work with Monitored Resources
                                                       System: DEMOAC710
Administrative domain . . . . . . . . . : ADMDMN
Consistent information in cluster . . . : Yes
Domain status . . . . . . . . . . . . . : Active

Type options, press Enter.
  1=Add   4=Remove   5=Display details   6=Print   7=Display attributes

                                     Resource   Global
Opt   Resource     Type              Library    Status
      CHRIS        *PRTDEV           QSYS       Consistent
      LILYROSE     *PRTDEV           QSYS       Consistent
      NEAL         *PRTDEV           QSYS       Consistent
      NELLY        *PRTDEV           QSYS       Consistent
      DPAINTER     *USRPRF           QSYS       Consistent
                                                                  Bottom
Parameters for option 1 or command
===>
F1=Help   F3=Exit   F4=Prompt   F5=Refresh   F9=Retrieve
F11=Order by type and name   F12=Cancel   F24=More Keys

Figure 4-20 Example of output from WRKCADMRE command

4.1.18 IPv6 support

PowerHA SystemMirror for i on IBM i 7.1 now fully supports IPv6 or a mix of IPv6 and IPv4. All HA-related APIs, commands, and GUIs are extended so that fields that hold an IP address accept either a 32-bit IPv4 or a 128-bit IPv6 address, as shown for the Change Cluster Node Entry (CHGCLUNODE) command in Figure 4-21. An IPv6 address is specified in the form x:x:x:x:x:x:x:x, where each x is a hexadecimal number from 0 to FFFF, and “::” can be used once in an address to stand for one or more consecutive groups of 16-bit zeros.

Change Cluster Node Entry (CHGCLUNODE)

Type choices, press Enter.

Cluster . . . . . . . . . . . . > HASM_CLU      Name
Node identifier . . . . . . . . > CTCV71        Name
Option  . . . . . . . . . . . . > *CHGIFC       *ADDIFC, *RMVIFC, *CHGIFC...
Old IP address  . . . . . . . .
New IP address  . . . . . . . .

..................................................................
: New IP address (NEWINTNETA) - Help                             :
:                                                                :
: Specifies the cluster interface address which is being         :
: added to the node information or replacing an old cluster      :
: interface address. The interface address may be an IPv4        :
: address (for any cluster version) or IPv6 address (if          :
: current cluster version is 7 or greater).                      :
:                                                                :
:                                                       More...  :
: F2=Extended help   F10=Move to top   F12=Cancel                :
: F13=Information Assistant   F20=Enlarge   F24=More keys        :
:................................................................:

F3=Exit   F24=More keys

Figure 4-21 IBM i change cluster node entry
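The IPv6 notation described above (eight hexadecimal groups, with “::” allowed once for a run of zero groups) can be illustrated with a minimal sketch that expands a compressed address into its full eight-group form. The function name expand_ipv6 is hypothetical and is not part of PowerHA or IBM i; the sketch also assumes plain hexadecimal groups and does not handle embedded dotted IPv4 suffixes.

```python
def expand_ipv6(address: str) -> str:
    """Expand x:x:x:x:x:x:x:x notation, where a single '::' stands for one or
    more 16-bit zero groups, into eight zero-padded hexadecimal groups.
    Hypothetical helper; embedded IPv4 suffixes are not supported."""
    if address.count("::") > 1:
        raise ValueError("'::' may appear at most once")
    if "::" in address:
        head, tail = address.split("::")
        head_groups = head.split(":") if head else []
        tail_groups = tail.split(":") if tail else []
        missing = 8 - len(head_groups) - len(tail_groups)
        if missing < 1:
            raise ValueError("'::' must replace at least one group")
        groups = head_groups + ["0"] * missing + tail_groups
    else:
        groups = address.split(":")
    if len(groups) != 8:
        raise ValueError("an IPv6 address has exactly 8 groups")
    for group in groups:
        # Each x is a hexadecimal number 0 - FFFF: one to four hex digits.
        if not 1 <= len(group) <= 4 or any(c not in "0123456789abcdefABCDEF" for c in group):
            raise ValueError(f"invalid group {group!r}")
    return ":".join(f"{int(group, 16):04x}" for group in groups)
```

For example, expand_ipv6("2001:db8::1") returns "2001:0db8:0000:0000:0000:0000:0000:0001", and an address with two “::” sequences is rejected.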

4.1.19 New CL commands for programming cluster automation

With PowerHA SystemMirror for i, the following new CL commands are introduced in IBM i 7.1 to better support CL programming for cluster automation management:

•Retrieve Cluster (RTVCLU) command

•Retrieve Cluster Resource Group (RTVCRG) command

•Retrieve ASP Copy Description (RTVASPCPYD) command

•Retrieve ASP Session (RTVASPSSN) command

•Print Cluster Administrative Domain Monitored Resource Entry (PRTCADMRE) command

4.1.20 Removal of existing command processing restrictions

A number of commands were changed so that they can run from any active node in the cluster, provided that at least one eligible node is active. A parameter was added to each command to specify the device domain to which the command applies; the default is the device domain of the current node.

The commands that are listed in Table 4-1 are enhanced by this change.

Table 4-1 Cluster commands enabled to run from any active cluster node

ADDASPCPYD    ADDSVCCPYD    CHGASPSSN    CHGSVCSSN
CHGASPCPYD    CHGSVCCPYD    DSPASPSSN    ENDSVCSSN
DSPASPCPYD    DSPSVCCPYD    ENDASPSSN    STRSVCSSN
RMVASPCPYD    RMVSVCCPYD    STRASPSSN
WRKASPCPYD

In addition, the administrative domain commands listed in Table 4-2 were enhanced to run from any active node in the cluster, provided that at least one eligible node is active. A parameter was added to these commands to specify the active cluster node to be used as the source for synchronization to the other nodes in the administrative domain.

Table 4-2 Administrative domain commands enabled to run from any active cluster node

ADDCADMRE    RMVCADMRE
WRKCADMRE    PRTCADMRE

4.2 Journaling and commitment control enhancements

Journaling and commitment control are the base building blocks for any HA solution, as they ensure database consistency and recoverability.

Several enhancements were made in the areas of integrity preservation and journaling. Their main objective is to provide easier interfaces for setting up and monitoring database persistence, including in HA setups.

4.2.1 Journal management

Journals (more familiarly known as logs on other platforms) are used to track changes to various objects. Although the OS has built-in functions to protect the integrity of certain objects, you can use journaling to protect changes to objects, to reduce the recovery time of a system after an abnormal end, to provide powerful recovery and audit functions, and to enable the replication of journal entries on a remote system.

The Start Journal Library (STRJRNLIB) command was introduced in IBM i 6.1. This command defines one or more rules at a library or schema level. These rules are used, or inherited, for journaling objects.

In the IBM i 7.1 release, STRJRNLIB (see Figure 4-22) now provides two new rules:

•Whether the objects are eligible for remote journal filtering by object (*OBJDFT, *NO, or *YES).

•A name filter to associate with the inherit rule. This filter can be specified as a specific or generic name. By default, the rule applies to all objects that match the other criteria that are specified in the inherit rule, regardless of the object name. For example, if temporary work files have distinctive names, you can use this filter to start journaling new production work files automatically while not journaling the temporary work files.

Start Journal Library (STRJRNLIB)

Type choices, press Enter.

Library  . . . . . . . . . . . . > LIBA          Name, generic*
               + for more values
Journal  . . . . . . . . . . . . > QSQJRN        Name
  Library  . . . . . . . . . . . > AJRNLIB       Name, *LIBL, *CURLIB
Inherit rules:
  Object type  . . . . . . . . . > *FILE         *ALL, *FILE, *DTAARA, *DTAQ
  Operation  . . . . . . . . . .   *ALLOPR       *ALLOPR, *CREATE, *MOVE...
  Rule action  . . . . . . . . .   *INCLUDE      *INCLUDE, *OMIT
  Images . . . . . . . . . . . .   *OBJDFT       *OBJDFT, *AFTER, *BOTH
  Omit journal entry . . . . . .   *OBJDFT       *OBJDFT, *NONE, *OPNCLO
  Remote journal filter  . . . .   *OBJDFT       *OBJDFT, *NO, *YES
  Name filter  . . . . . . . . .   *ALL          Name, generic*, *ALL
               + for more values
Logging level  . . . . . . . . .   *ERRORS       *ERRORS, *ALL

Figure 4-22 STRJRNLIB command prompt
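The name filter of an inherit rule accepts a specific name, a generic name, or *ALL, and the prompt allows more than one value. The following minimal sketch models that matching, assuming the usual IBM i generic-name convention (a trailing asterisk matches any name that starts with the preceding characters) and assuming that a rule applies when any of its name filters matches. The function names are hypothetical; the system evaluates inherit rules itself.

```python
from typing import Iterable


def name_filter_matches(object_name: str, name_filter: str) -> bool:
    """Model of one name filter value: *ALL, a generic name (ABC*), or a
    specific name. Hypothetical helper for illustration only."""
    if name_filter == "*ALL":
        return True
    if name_filter.endswith("*"):
        # Generic name: match on the characters before the asterisk.
        return object_name.startswith(name_filter[:-1])
    return object_name == name_filter


def rule_applies(object_name: str, name_filters: Iterable[str]) -> bool:
    """Assumed semantics: the rule applies if any of its name filters match."""
    return any(name_filter_matches(object_name, f) for f in name_filters)
```

With a hypothetical naming convention in which production work files start with PRD and temporary work files start with TMP, a name filter of PRD* selects only the production files: of the new files PRDORD01, PRDINV02, and TMPWRK01, only the first two match.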

If the library is already journaled and you want to define one of the new inherit rules, run the Change Journaled Object (CHGJRNOBJ) command. If the library is not yet journaled, you can specify the new rules when you run the Start Journal Library (STRJRNLIB) command. To view the inherit rules that are associated with a journaled library, run the Display Library Description (DSPLIBD) command, and then press F10 (Display inherit rules).

You can do the same task in IBM Navigator for i: expand File Systems, select Integrated File System, and then select QSYS.LIB. Select the library that you want to journal, as shown in Figure 4-23.

Figure 4-23 Select a library for Journaling

Select the Journaling action, as shown in Figure 4-24.

Figure 4-24 Setting a rule

4.2.2 Remote journaling

When a remote journal connection ends with a recoverable error, you can now specify that the operating system try to restart the connection automatically. To do so, you specify the number of restart attempts and the time, in seconds, between attempts. These settings can be made by running the Change Remote Journal (CHGRMTJRN) command or by calling the Change Journal State (QJOCHANGEJOURNALSTATE) API. For a list of errors for which an automatic restart attempt is made, see the Journal management topic collection in the IBM i Knowledge Center at:
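The automatic restart behavior (a fixed number of attempts with a fixed delay in seconds between them) can be pictured with the following minimal sketch. It is a generic retry loop, not the operating system's implementation: restart_remote_journal and the connect callback are hypothetical, and the sketch assumes that the first attempt is made immediately, which the text does not state.

```python
import time
from typing import Callable


def restart_remote_journal(connect: Callable[[], bool],
                           max_attempts: int,
                           delay_seconds: float,
                           sleep: Callable[[float], None] = time.sleep) -> int:
    """Retry a connection up to max_attempts times, waiting delay_seconds
    between attempts. Returns the number of the attempt that succeeded,
    or 0 if all attempts failed. Hypothetical model for illustration."""
    for attempt in range(1, max_attempts + 1):
        if connect():
            return attempt
        if attempt < max_attempts:
            # Wait the configured interval before the next restart attempt.
            sleep(delay_seconds)
    return 0
```

Passing the sleep function as a parameter lets the example be exercised without real waiting; for instance, a connection that succeeds on its third try returns 3 after two waits.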

You can also use the CHGRMTJRN command to filter remote journals. Filtering out journal entries that are not needed on the target system can decrease the amount of data that is sent across the communication line.

This remote journal filtering feature is available with option 42 of IBM i, that is, feature 5117 (HA Journal Performance). Ensure that critical data is not filtered when you define remote journal filtering. Three criteria can be used to filter entries sent to the remote system:

•Before images

•Individual objects

•Name of the program that deposited the journal entry on the source system

The filtering criteria are specified when you activate a remote journal. Different remote journals that are associated with the same local journal can have different filtering criteria. Remote journal filtering can be specified only for asynchronous remote journal connections. Because journal entries might be missing, filtered remote journal receivers cannot be used with the Remove Journaled Changes (RMVJRNCHG) command. Similarly, journal receivers that filtered journal entries by object or by program cannot be used with the Apply Journaled Changes (APYJRNCHG) command or the Apply Journaled Changes Extend (APYJRNCHGX) command.
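The three filtering criteria and the asynchronous-only restriction can be summarized in a minimal sketch. This is a model of the rules stated above, not IBM code: the class and function names are hypothetical, objects are represented as plain "LIB/OBJECT" strings, and "*ASYNC" is used as the label for an asynchronous connection.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class JournalEntry:
    object_name: str      # hypothetical "LIB/OBJECT" form
    program: str          # program that deposited the entry on the source system
    before_image: bool    # True if this entry is a before image


@dataclass
class RemoteJournalFilter:
    before_images: bool = False                      # filter out before images
    objects: Set[str] = field(default_factory=set)   # objects whose entries are filtered
    programs: Set[str] = field(default_factory=set)  # programs whose entries are filtered

    def is_active(self) -> bool:
        return self.before_images or bool(self.objects) or bool(self.programs)

    def keeps(self, entry: JournalEntry) -> bool:
        if self.before_images and entry.before_image:
            return False
        if entry.object_name in self.objects:
            return False
        return entry.program not in self.programs


def entries_to_send(entries: List[JournalEntry], flt: RemoteJournalFilter,
                    delivery: str) -> List[JournalEntry]:
    """Return the entries that would cross the line to the remote system."""
    # Filtering is allowed only on asynchronous remote journal connections.
    if flt.is_active() and delivery != "*ASYNC":
        raise ValueError("remote journal filtering requires asynchronous delivery")
    return [e for e in entries if flt.keeps(e)]
```

Because filtered entries never reach the target, the model also makes clear why receivers on the target cannot be trusted by RMVJRNCHG or, when filtered by object or program, by APYJRNCHG: some entries are simply absent.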

The Work with Journal Attributes (WRKJRNA) command can now show, on the target system, how many seconds the target is behind in receiving journal entries from the source system. Also new in IBM i 7.1 is the ability to view, on the source system, the number of retransmissions that occur for a remote journal connection.

4.2.3 DISPLAY_JOURNAL (easier searches of a journal)

Displaying journal entries from a GUI requires either using APIs or writing the journal entries to an outfile. The APIs are labor-intensive, and the outfile approach is restrictive and slower because a copy of the data is required.

QSYS2.Display_Journal is a new table function that you can use to view entries in a journal by running a query.

The table function has many input parameters, and for best performance you should use them to return only the journal entries that are of interest. For more information about the special values, see the “QjoRetrieveJournalEntries API” topic in the Knowledge Center at:

Unlike many other UDTFs in QSYS2, this one has no corresponding view provided by DB2 for i.

Here is a brief summary of the parameters:

•Journal_Library and Journal_Name

The Journal_Library and Journal_Name must identify a valid journal. *LIBL and *CURLIB are not allowed as values of the Journal_Library.

•Starting_Receiver_Library and Starting_Receiver_Name

If the specified Starting_Receiver_Name is the null value, an empty string, or a blank string, *CURRENT is used and the Starting_Receiver_Library is ignored. If the specified Starting_Receiver_Name contains the special values *CURRENT, *CURCHAIN, or *CURAVLCHN, the Starting_Receiver_Library is ignored. Otherwise, the Starting_Receiver_Name and Starting_Receiver_Library must identify a valid journal receiver. *LIBL and *CURLIB can be used as a value of the Starting_Receiver_Library. The ending journal receiver cannot be specified and is always *CURRENT.

•Starting_Timestamp

If the specified Starting_Timestamp is the null value, no starting time stamp is used. Starting_Timestamp and Starting_Sequence cannot both be specified in the same call. However, the time stamp and the sequence number of each entry are both available in the result table, so either can be used in the query.

•Starting_Sequence

If the specified Starting_Sequence is the null value, no starting sequence number is used. If the specified Starting_Sequence is not found in the receiver range, an error is returned. Starting_Timestamp and Starting_Sequence cannot both be specified in the same call. However, the time stamp and the sequence number of each entry are both available in the result table, so either can be used in the query.

•Journal_Codes

If the specified Journal_Codes is the null value, an empty string, or a blank string, *ALL is used. Otherwise, the string can consist of the special value *ALL, the special value *CTL, or a string that contains one or more journal codes. Journal codes can be separated by one or more separators. The separator characters are the blank and comma. For example, a valid string might be 'RJ', 'R J', 'R,J', or 'R, J'.

•Journal_Entry_Types

If the specified Journal_Entry_Types is the null value, an empty string, or a blank string, *ALL is used. Otherwise, the string can consist of the special value *ALL, the special value *RCD, or a string that contains one or more two-character journal entry types. Journal entry types can be separated by one or more separators. The separator characters are the blank and comma. For example, a valid string might be 'PT', 'PT PX', 'PT,PX', or 'PT, PX'.

•Object_Library, Object_Name, Object_ObjType, and Object_Member

If the specified Object_Name is the null value, an empty string, or a blank string, no object name is used and the Object_Library, Object_ObjType, and Object_Member are ignored.

Otherwise, if the specified Object_Name contains the special value *ALL, the Object_Library must contain a library name and Object_ObjType must contain a valid object type (for example, *FILE).

Otherwise, only one object can be specified and the Object_Library, Object_Name, Object_ObjType, and Object_Member must identify a valid object. *LIBL and *CURLIB can be used as a value of the Object_Library.

The Object_ObjType must be one of *DTAARA, *DTAQ, *FILE, or *LIB (*LIB is 6.1 only). The Object_Member can be *FIRST, *ALL, *NONE, or a valid member name. If the specified object type is not *FILE, the member name is ignored.

•User

If the specified user is the null value, an empty string, or a blank string, *ALL is used. Otherwise, the user must identify a valid user profile name.

•Job

If the specified job is the null value, an empty string, or a blank string, *ALL is used. Otherwise, the job must identify a valid, specific job in qualified form: the first 10 characters are the job name, the next 10 characters are the user name, and the last six characters are the job number.

•Program

If the specified program is the null value, an empty string, or a blank string, *ALL is used. Otherwise, the program must identify a valid program name.
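Two of the parameter rules above, the code and entry type selection strings and the 26-character qualified job name, can be illustrated with the following minimal sketch. The helpers are hypothetical models written for this explanation; DISPLAY_JOURNAL performs its own parsing. The sketch assumes that, as the 'RJ' journal code example suggests, values written without separators are read one fixed-width value at a time (one character for journal codes, two for entry types).

```python
from typing import List, Optional, Union

SEPARATORS = {" ", ","}


def parse_selection(value: Optional[str], width: int,
                    specials: set) -> Union[str, List[str]]:
    """Model of Journal_Codes (width=1) and Journal_Entry_Types (width=2)."""
    if value is None or value.strip() == "":
        return "*ALL"                      # null, empty, or blank string
    text = value.strip()
    if text in specials:
        return text                        # e.g. *ALL, *CTL, or *RCD
    # Blanks and commas are separators; drop them, then split by width.
    compact = "".join(ch for ch in text if ch not in SEPARATORS)
    if len(compact) % width:
        raise ValueError(f"{value!r} is not a list of {width}-character values")
    return [compact[i:i + width] for i in range(0, len(compact), width)]


def qualified_job_name(job: str, user: str, number: str) -> str:
    """Build the qualified form: 10-character job name, 10-character user
    name (both blank-padded), and a six-digit job number."""
    if not (1 <= len(job) <= 10 and 1 <= len(user) <= 10):
        raise ValueError("job and user names are 1 to 10 characters")
    if len(number) != 6 or not number.isdigit():
        raise ValueError("the job number is exactly six digits")
    return f"{job:<10}{user:<10}{number}"
```

For example, parse_selection("R, J", 1, {"*ALL", "*CTL"}) returns ["R", "J"], and qualified_job_name("QPADEV0001", "DPAINTER", "123456") returns a 26-character string in which DPAINTER is padded with two blanks (the job number here is a made-up value).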

Example 4-1 gives a possible usage of the DISPLAY_JOURNAL function.

Example 4-1 Possible usage of DISPLAY_JOURNAL function

set path system path, jsochr; -- QSYS2 is in SYSTEM PATH; JSOCHR is an example library

-- Select all entries from the *CURRENT receiver of journal JSOCHR/QSQJRN.
select * from table (
  Display_Journal(
    'JSOCHR', 'QSQJRN',            -- Journal library and name
    '', '',                        -- Receiver library and name (blank = *CURRENT)
    CAST(null as TIMESTAMP),       -- Starting timestamp
    CAST(null as DECIMAL(21,0)),   -- Starting sequence number
    '',                            -- Journal codes (blank = *ALL)
    '',                            -- Journal entry types (blank = *ALL)
    '', '', '', '',                -- Object library, object name, object type, object member
    '',                            -- User (blank = *ALL)
    '',                            -- Job (blank = *ALL)
    ''                             -- Program (blank = *ALL)
  ) ) as x;

This function returns a result table with data similar to what the Display Journal (DSPJRN) command shows.

4.2.4 Commitment control and independent ASPs

You can use commitment control to define the boundaries of a business or logical transaction, identifying where it starts and where it ends, and to ensure that all of its database changes are either applied permanently or removed permanently. Furthermore, if a process that performs such transactions, or even the entire system, ends abnormally, commitment control recovers the pending transactions by bringing the database contents to a committed state, and identifies the last transactions that were pending and recovered.

With commitment control, you have assurance that when the application starts again, no partial updates are in the database because of incomplete transactions from a prior failure. As such, it is one of the building blocks of any highly available setup and it identifies the recovery point for any business process.

If your application was deployed using independent ASPs (IASPs), you are using a database instance that is in that IASP. This situation has an impact on how commitment control works.

When a process starts commitment control, a commitment definition is created in a schema (QRECOVERY) that is stored in the database to which the process is connected. If your process is connected to an IASP, commitment control is started in the database that is managed by the IASP. When your process is running commitment control from an IASP (that is, it has resources registered with commitment control on that disk pool), an attempt to switch to another disk pool fails with message CPDB8EC (The thread has an uncommitted transaction).

However, if you switch from the system disk pool (ASP group *NONE), commitment control is not affected, and the commitment definitions stay on the system disk pool. New in IBM i 7.1, if you later place independent disk pool resources under commitment control before any system disk pool resources, the commitment definition is moved to the independent disk pool. As a result, if your job is not associated with an independent ASP, the commitment definition is created in *SYSBAS; otherwise, it is created in the independent ASP. If the job is associated with an independent ASP, you can open files under commitment control that are in the current library name space, that is, files in either the independent ASP or *SYSBAS.

If the first resource that is placed under commitment control is not in the same ASP as the commitment definition, the commitment definition is moved to the resource's ASP. If both *SYSBAS and independent ASP resources are registered in the same commitment definition, the system implicitly uses a two-phase commit protocol to ensure that the resources are committed atomically in the event of a system failure. Therefore, transactions that involve data in both *SYSBAS and an independent ASP have a small performance degradation versus transactions that are isolated to a single ASP group.

When recovery is required for a commitment definition that contains resources that are in both *SYSBAS and an independent ASP, the commitment definition is split into two commitment definitions during the recovery. One is in *SYSBAS and one in the independent ASP, as though there were a remote database connection between the two ASP groups. Resynchronization can be initiated by the system during the recovery to ensure that the data in both ASP groups is committed or rolled back atomically.
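The placement rules described above can be condensed into a small model: the commitment definition starts in the job's ASP group (or *SYSBAS), moves if the first registered resource lives in a different ASP, and uses an implicit two-phase commit once *SYSBAS and independent ASP resources are mixed. This is a minimal sketch under those stated rules only; the class is hypothetical, ASP names such as IASP1 are made up, and it does not model recovery or QRECOVERY storage.

```python
from typing import List, Optional

SYSBAS = "*SYSBAS"


class CommitmentDefinition:
    """Toy model of where a commitment definition lives (not an IBM API)."""

    def __init__(self, job_asp_group: Optional[str] = None) -> None:
        # Created in the job's independent ASP, or in *SYSBAS if it has none.
        self.location = job_asp_group or SYSBAS
        self.resource_asps: List[str] = []

    def register(self, asp: str) -> None:
        # If the first resource is in a different ASP, the commitment
        # definition is moved to that resource's ASP.
        if not self.resource_asps and asp != self.location:
            self.location = asp
        self.resource_asps.append(asp)

    @property
    def uses_two_phase_commit(self) -> bool:
        # Mixing *SYSBAS and independent ASP resources implies an implicit
        # two-phase commit so both sides commit atomically.
        asps = set(self.resource_asps)
        return SYSBAS in asps and len(asps) > 1
```

For a job that starts without an ASP group, registering an IASP1 resource first moves the definition to IASP1; registering a *SYSBAS resource afterwards leaves it there but switches the transaction to two-phase commit, which is where the small performance cost mentioned above comes from.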

4.2.5 System Managed Access Path Protection

You can use System Managed Access Path Protection (SMAPP) to reduce the time for the system or independent disk pool to restart after an abnormal end. When the system must rebuild access paths, the next restart takes longer to complete than if the system ended normally. When you use SMAPP, the system protects the access paths implicitly and eliminates the rebuild of the access paths after an abnormal end.

SMAPP affects the overall system performance. The lower the target recovery time that you specify for access paths, the greater this effect can be. Typically, the effect is not noticeable, unless the processor is nearing its capacity.

Processor consumption can also increase when local journals are in standby state and large access paths that are built over files journaled to those journals are modified. Pressing F16=Display details on the Display Recovery for Access Paths (DSPRCYAP) or Edit Recovery for Access Paths (EDTRCYAP) panel shows the internal threshold that SMAPP uses (see Figure 4-25). This panel was added in IBM i 7.1. SMAPP protects all access paths whose estimated rebuild time is greater than the internal threshold. The internal threshold value might change if the number of exposed access paths changes, if the estimated rebuild times for exposed access paths change, or if the target recovery time changes.

                         Display Details                        CTCV71
                                                    03/15/10  12:46:18
ASP  . . . . . . . . . . . . : *SYSTEM
Internal threshold . . . . . : 00:52:14
Last retune:
  Date . . . . . . . . . . . : 03/09/10
  Time . . . . . . . . . . . : 06:54:58
Last recalibrate:
  Date . . . . . . . . . . . : 02/24/10
  Time . . . . . . . . . . . : 08:19:44

Figure 4-25 Display Details from the Edit and Display Recovery for Access Paths panel
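The protection rule (every access path whose estimated rebuild time is greater than the internal threshold is protected) is easy to check by hand. The following minimal sketch uses the threshold value 00:52:14 from Figure 4-25; the access path names and their estimated rebuild times are invented for illustration, and the functions are hypothetical helpers, not part of SMAPP.

```python
from datetime import timedelta
from typing import Dict, List


def parse_hms(text: str) -> timedelta:
    """Convert an hh:mm:ss display value such as '00:52:14' to a timedelta."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def smapp_protected(estimates: Dict[str, timedelta], threshold: timedelta) -> List[str]:
    """Names of access paths whose estimated rebuild time exceeds the threshold."""
    return sorted(name for name, rebuild in estimates.items() if rebuild > threshold)
```

With a threshold of 00:52:14, an access path estimated at 01:10:00 is protected, while one estimated at 00:05:00 is not; one estimated at exactly 00:52:14 is also not protected, because the rule requires the estimate to be greater than the threshold.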

4.2.6 Journaling and disk arm usage

Starting in IBM i 7.1 with PTF MF51614, journal receivers are spread across all disk arms in the disk pool. Journaling no longer directs writes to specific arms.

The journal receiver threshold value influences the number of parallel writes that journaling allows. The higher the journal receiver threshold value, the more parallel I/O requests are allowed, which can improve performance.

For more information, see the TechDocs “IBM i 7.1 and changes for journaling” at:

4.2.7 Range of journal receivers parameter and *CURAVLCHN

In IBM i 6.1 with DB2 PTF Group SF99601 Level 28 and IBM i 7.1 with DB2 PTF Group SF99701 Level 18, the Range of Journal Receivers (RCVRNG) parameter is extended to allow a *CURAVLCHN option on the RTVJRNE, RCVJRNE, and DSPJRN commands.

If you saved any journal receivers using SAVOBJ or SAVLIB with the STG(*FREE) option, the receiver chain is effectively broken and the *CURCHAIN option fails to retrieve journal entries.

If you specify the *CURAVLCHN option and the receiver chain contains journal receivers that are not available because they were saved with the storage freed option, those journal receivers are ignored, and the entries are retrieved starting with the first available journal receiver in the chain.

The QjoRetrieveJournalEntries() API already supported the *CURAVLCHN option. With these enhancements, the three journal commands have the same capability.

4.2.8 Journal management functions: IBM Navigator for i

IBM Navigator for i now supports more journal management functions. With IBM i 7.1, the following functions were added:

•Change journal receivers and attributes that are associated with a journal.

•View the properties that are associated with a journal receiver.

•View the objects that are journaled to a specific journal.

•Add and remove remote journals.

•View the list of remote journals that are associated with a specific journal.

•Activate and deactivate remote journals.

•View the details of a remote journal connection.

For more information, see Chapter 17, “IBM Navigator for i 7.1” on page 671.

4.3 Other availability improvements

Other availability enhancements were made to the Reorganize Physical File Member (RGZPFM) command and to Lightweight Directory Access Protocol (LDAP).

4.3.1 Reorganize physical file member

In addition to the improvements described earlier in this chapter, RGZPFM was changed so that it can run concurrently with normal system operations.

For more information, see 5.3.25, “Database reorganization” on page 205.

4.3.2 LDAP enhancements

LDAP now allows its configuration libraries and IFS directories, in addition to the instance database and change log libraries that were already supported, to reside in an independent auxiliary storage pool.

As a result, the entire LDAP instance can be switched by PowerHA.

4.4 Additional information

For more information and in-depth details about the latest enhancements in IBM PowerHA SystemMirror for i, see the PowerHA SystemMirror for IBM i Cookbook, SG24-7994.

For more information about external storage capabilities with IBM i, see IBM i and IBM Storwize Family: A Practical Guide to Usage Scenarios, SG24-8197.
