IBM DB2 for i

This chapter describes what is new for DB2 for i and discusses the following topics:

5.1 Introduction: Getting around with data

DB2 for i is a member of the IBM leading-edge family of DB2 products. It has always been known and appreciated for its ease of use and simplicity. It supports a broad range of applications and development environments.

Because of its unique and self-managing computing features, the cost of ownership of DB2 for i is a valuable asset. The sophisticated cost-based query optimizer, the unique single level store architecture of the OS, and the database parallelism feature of DB2 for i allow it to scale almost linearly. Rich SQL support makes not only it easier for software vendors to port their applications and tools to IBM i, but it also enables developers to use industry-standard SQL for their data access and programming. The IBM DB2 Family has this focus on SQL standards with DB2 for i, so investment in SQL enables DB2 for i to use the relational database technology leadership position of IBM and maintain close compatibility with the other DB2 Family products.

Reading through this chapter, you find many modifications and improvements as part of the new release. All of these features are available to any of the development and deployment environments that are supported by the IBM Power platforms on which IBM i 7.1 can be installed.

Many DB2 enhancements for IBM i 7.1 are also available for Version 6.1. If you must verify their availability, go to:

This link takes you to the DB2 for i section of the IBM i Technology Updates wiki.

5.2 SQL data description and data manipulation language

There are several changes and additions to the SQL language:

5.2.1 XML support

Extensible Markup Language (XML) is a simple and flexible text format that is derived from SGML (ISO 8879). Originally designed to meet the challenges of large-scale electronic publishing, XML is also playing an increasingly important role in the exchange of a wide variety of data on the web and elsewhere.

For more information about XML, go to:

Previously, XML data types were supported only through user-defined types and any handling of XML data was done using user-defined functions. In IBM i 7.1, the DB2 component is complemented with support for XML data types and publishing functions. It also supports XML document and annotation, document search (IBM OmniFind®) without decomposition, and client and language API support for XML (CLI, ODBC, JDBC, and so on).

For more information about moving from the user-defined function support provided through the XML Extenders product to the built-in operating support, see the Replacing DB2 XML Extender With integrated IBM DB2 for i XML capabilities white paper:

XML data type

An XML value represents well-formed XML in the form of an XML document, XML content, or an XML sequence. An XML value that is stored in a table as a value of a column that is defined with the XML data type must be a well-formed XML document. XML values are processed in an internal representation that is not comparable to any string value, including another XML value. The only predicate that can be applied to the XML data type is the IS NULL predicate.

An XML value can be transformed into a serialized string value that represents an XML document using the XMLSERIALIZE (see “XML serialization” on page 163) function. Similarly, a string value that represents an XML document can be transformed into an XML value using the XMLPARSE (see “XML publishing functions” on page 162) function. An XML value can be implicitly parsed or serialized when exchanged with application string and binary data types.

The XML data type has no defined maximum length. It does have an effective maximum length of 2 GB when treated as a serialized string value that represents XML, which is the same as the limit for Large Object (LOB) data types. Like LOBs, there are also XML locators and XML file reference variables.

With a few exceptions, you can use XML values in the same contexts in which you can use other data types. XML values are valid in the following circumstances:

•CAST a parameter marker, XML, or NULL to XML

•XMLCAST a parameter marker, XML, or NULL to XML

•IS NULL predicate

•COUNT and COUNT_BIG aggregate functions

•COALESCE, IFNULL, HEX, LENGTH, CONTAINS, and SCORE scalar functions

•XML scalar functions

•A SELECT list without DISTINCT

•INSERT VALUES clause, UPDATE SET clause, and MERGE

•SET and VALUES INTO

•Procedure parameters

•User-defined function arguments and result

•Trigger correlation variables

•Parameter marker values for a dynamically prepared statement

XML values cannot be used directly in the following places. Where expressions are allowed, an XML value can be used, for example, as the argument of XMLSERIALIZE.

•A SELECT list that contains the DISTINCT keyword

•A GROUP BY clause

•An ORDER BY clause

•A subselect of a fullselect that is not UNION ALL

•A basic, quantified, BETWEEN, DISTINCT, IN, or LIKE predicate

•An aggregate function with the DISTINCT keyword

•A primary, unique, or foreign key

•A check constraint

•An index column

No host languages have a built-in data type for the XML data type.

XML data can be defined with any EBCDIC single byte or mixed CCSID or a Unicode CCSID of 1208 (UTF-8), 1200 (UTF-16), or 13488 (Unicode-specific version). 65535 (no conversion) is not allowed as a CCSID value for XML data. The CCSID can be explicitly specified when you define an XML data type. If it is not explicitly specified, the CCSID is assigned using the value of the SQL_XML_DATA_CCSID QAQQINI file parameter (5.3.17, “QAQQINI properties” on page 200). If this value is not set, the default is 1208. The CCSID is established for XML data types that are used in SQL schema statements when the statement is run.

XML host variables that do not have a DECLARE VARIABLE that assigns a CCSID have their CCSID assigned as follows:

•If it is XML AS DBCLOB, the CCSID is 1200.

•If it is XML AS CLOB and the SQL_XML_DATA_CCSID QAQQINI value is 1200 or 13488, the CCSID is 1208.

•Otherwise, the SQL_XML_DATA_CCSID QAQQINI value is used as the CCSID.

Because all implicit and explicit XMLPARSE functions are run by using UTF-8 (1208), defining data in this CCSID removes the need to convert the data to UTF-8.

XML publishing functions

Table 5-1 describes the functions that are directly used in a SQL query.

Table 5-1 XML publishing functions

|

Function

|

Description

|

|

xmlagg

|

Combines a collection of rows, each containing a single XML value to create an XML sequence that contains an item for each non-null value in a set of XML values.

|

|

xmlattributes

|

Returns XML attributes from columns, using the name of each column as the name of the corresponding attribute.

|

|

xmlcomment

|

Returns an XML value with the input argument as the content.

|

|

xmlconcat

|

Returns a sequence that contains the concatenation of a variable number of XML input arguments.

|

|

xmldocument

|

Returns an XML document.

|

|

xmlelement

|

Returns an XML element.

|

|

xmforest

|

Returns an XML value that is a sequence of XML element nodes.

|

|

xmlgroup

|

Returns a single top-level element to represent a table or the result of a query.

|

|

xmlnamespaces

|

Constructs namespace declarations from the arguments.

|

|

xmlparse

|

Parses the arguments as an XML document and returns an XML value.

|

|

xmlpi

|

Returns an XML value with a single processing instruction.

|

|

xmlrow

|

Returns a sequence of row elements to represent a table or the result of a query.

|

|

xmlserialize

|

Returns a serialized XML value of the specified data type generated from the XML-expression argument.

|

|

xmltext

|

Returns an XML value that has the input argument as the content.

|

|

xmlvalidate

|

Returns a copy of the input XML value that is augmented with information obtained from XML schema validation, including default values and type annotations.

|

|

xsltransform

|

Converts XML data into other forms, accessible for the XSLT processor, including but not limited to XML, HTML, and plain text.

|

You can use the SET CURRENT IMPLICIT XMLPARSE OPTION statement to change the value of the CURRENT IMPLICIT XMLPARSE OPTION special register to STRIP WHITESPACE or to PRESERVE WHITESPACE for your connection. You can either remove or maintain any white space on an implicit XMLPARSE function. This statement is not a committable operation.

XML serialization

XML serialization is the process of converting XML data from the format that it has in a DB2 database to the serialized string format that it has in an application.

You can allow the DB2 database manager to run serialization implicitly, or you can start the XMLSERIALIZE function to request XML serialization explicitly. The most common usage of XML serialization is when XML data is sent from the database server to the client.

Implicit serialization is the preferred method in most cases because it is simpler to code, and sending XML data to the client allows the DB2 client to handle the XML data properly. Explicit serialization requires extra handling, which is automatically handled by the client during implicit serialization.

In general, implicit serialization is preferable because it is more efficient to send data to the client as XML data. However, under certain circumstances (for example, if the client does not support XML data) it might be better to do an explicit XMLSERIALIZE.

With implicit serialization for DB2 CLI and embedded SQL applications, the DB2 database server adds an XML declaration with the appropriate encoding specified to the data. For .NET applications, the DB2 database server also adds an XML declaration. For Java applications, depending on the SQLXML object methods that are called to retrieve the data from the SQLXML object, the data with an XML declaration added by the DB2 database server is returned.

After an explicit XMLSERIALIZE invocation, the data has a non-XML data type in the database server, and is sent to the client as that data type. You can use the XMLSERIALIZE scalar function to specify the SQL data type to which the data is converted when it is serialized (character, graphic, or binary data type) and whether the output data includes the explicit encoding specification (EXCLUDING XMLDECLARATION or INCLUDING XMLDECLARATION). The best data type to which to convert XML data is the BLOB data type because retrieval of binary data results in fewer encoding issues. If you retrieve the serialized data into a non-binary data type, the data is converted to the application encoding, but the encoding specification is not modified. Therefore, the encoding of the data most likely does not agree with the encoding specification. This situation results in XML data that cannot be parsed by application processes that rely on the encoding name.

Although implicit serialization is preferable because it is more efficient, you can send data to the client as XML data. When the client does not support XML data, you can consider doing an explicit XMLSERIALIZE. If you use implicit XML serialization for this type of client, the DB2 database server then converts the data to a CLOB (Example 5-1) or DBCLOB before it sends the data to the client.

Example 5-1 XMLSERIALIZE

SELECT e.empno, e.firstnme, e.lastname,

XMLSERIALIZE(XMLELEMENT(NAME "xmp:Emp",

XMLNAMESPACES('http://www.xmp.com' as "xmp"),

XMLATTRIBUTES(e.empno as "serial"),

e.firstnme, e.lastname

OPTION NULL ON NULL))

AS CLOB(1000) CCSID 1208

INCLUDING XMLDECLARATION) AS "Result"

FROM employees e WHERE e.empno = 'A0001'

Managing XML schema repositories (XSR)

The XML schema repository (XSR) is a set of tables that contain information about XML schemas. XML instance documents might contain a reference to a Uniform Resource Identifier (URI) that points to an associated XML schema. This URI is required to process the instance documents. The DB2 database system manages dependencies on externally referenced XML artifacts with the XSR without requiring changes to the URI location reference.

Without this mechanism to store associated XML schemas, an external resource might not be accessible when needed by the database. The XSR also removes the additional impact that is required to locate external documents, along with the possible performance impact.

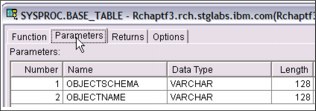

An XML schema consists of a set of XML schema documents. To add an XML schema to the DB2 XSR, you register XML schema documents to DB2 by calling the DB2 supplied stored procedure SYSPROC.XSR_REGISTER to begin registration of an XML schema.

The SYSPROC.XSR_ADDSCHEMADOC procedure adds more XML schema documents to an XML schema that you are registering. You can call this procedure only for an existing XML schema that is not yet complete.

Calling the SYSPROC.XSR_COMPLETE procedure completes the registration of an XML schema. During XML schema completion, DB2 resolves references inside XML schema documents to other XML schema documents. An XML schema document is not checked for correctness when you register or add documents. Document checks are run only when you complete the XML schema registration.

To remove an XML schema from the DB2 XML schema repository, you can call the SYSPROC.XSR_REMOVE stored procedure or use the DROP XSROBJECT SQL statement.

|

More considerations: Because an independent auxiliary storage pool (IASP) can be switched between multiple systems, there are more considerations for administering XML schemas on an IASP. Use of an XML schema must be contained on the independent ASP where it was registered. You cannot reference an XML schema that is defined in an independent ASP group or in the system ASP when the job is connected to the independent ASP.

|

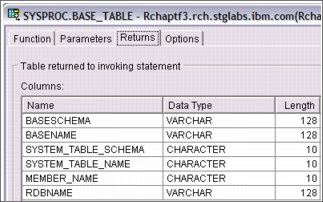

Annotated XML schema decomposition

Annotated XML schema decomposition, also referred to as decomposition or shredding, is the process of storing content from an XML document in columns of relational tables. Annotated XML schema decomposition operates based on annotations that are specified in an XML schema. After an XML document is decomposed, the inserted data has the SQL data type of the column into which it is inserted.

An XML schema consists of one or more XML schema documents. In annotated XML schema decomposition, or schema-based decomposition, you control decomposition by annotating a document’s XML schema with decomposition annotations. These annotations specify the following details:

•The name of the target table and column in which the XML data is to be stored

•The default SQL schema for when an SQL schema is not identified

•Any transformation of the content before it is stored

The annotated schema documents must be stored in and registered with the XSR. The schema must then be enabled for decomposition. After the successful registration of the annotated schema, decomposition can be run by calling the decomposition stored procedure SYSPROC.XDBDECOMPXML.

The data from the XML document is always validated during decomposition. If information in an XML document does not comply with its specification in an XML schema, the data is not inserted into the table.

Annotated XML schema decomposition can become complex. To make the task more manageable, take several things into consideration. Annotated XML schema decomposition requires you to map possible multiple XML elements and attributes to multiple columns and tables in the database. This mapping can also involve transforming the XML data before you insert it, or apply conditions for insertion.

Here are items to consider when you annotate your XML schema:

•Understand what decomposition annotations are available to you.

•Ensure, during mapping, that the type of the column is compatible with the XML schema type of the element or attribute to which it is being mapped.

•Ensure complex types that are derived by restriction or extension are properly annotated.

•Confirm that no decomposition limits and restrictions are violated.

•Ensure that the tables and columns that are referenced in the annotation exist at the time the schema is registered with the XSR.

XML decomposition enhancements (order of result rows)

In IBM i 7.1, a series of decomposition annotations are provided to define how to decompose an XML document into relational database tables, such as db2-xdb:defaultSQLSchema or db2-xdb:rowSet, db2-xdb:column.

In one XSR, multiple target tables can be specified, so data in an XML document can be shredded to more than one target tables using one XSR. But the order of insertion into tables cannot be specified with existing decomposition annotations. Therefore, if the target tables have a reference relationship, the insertion of dependent row fails if its parent row is not inserted before it.

Two new annotations are supported:

•db2-xdb:order

The db2-xdb:order annotation specifies the insertion order of rows among different tables.

•db2-xdb:rowSetOperationOrder

The db2-xdb:rowSetOperationOrder annotation is a parent for one or more

db2-xdb:order elements.

db2-xdb:order elements.

Using db2-xdb:order and db2-xdb:rowSetOperationOrder is needed only when referential integrity constraints exist in target tables and you try to decompose to them using one XSR.

5.2.2 The MERGE statement

This statement enables the simplification of matching rows in tables so that you can use a single statement that updates a target (a table or view) using data from a source (result of a table reference). Rows might be inserted, updated, or deleted in the target row, as specified by the matching rules. If you insert, update, or delete rows in a view, without an INSTEAD OF trigger, it updates, deletes, or inserts the row into the tables on which the view is based.

More than one modification-operation (UPDATE, DELETE, or INSERT) or signal-statement can be specified in a single MERGE statement. However, each row in the target can be operated on only once. A row in the target can be identified only as MATCHED with one row in the result table of the table-reference. A nested SQL operation (RI or trigger except INSTEAD OF trigger) cannot specify the target table (or a table within the same hierarchy) as a target of an UPDATE, DELETE, INSERT, or MERGE statement. This statement is also often referred to as an upsert.

Using the MERGE statement is potentially good in a Business Intelligence data load scenario, where it can be used to populate the data in both the fact and the dimension tables upon a refresh of the data warehouse. It can also be used for archiving data.

In Example 5-2, the MERGE statement updates the list of activities that are organized by Group A in the archive table. It deletes all outdated activities and updates the activities information (description and date) in the archive table if they were changed. It inserts new upcoming activities into the archive, signals an error if the date of the activity is not known, and requires that the date of the activities in the archive table be specified.

Example 5-2 UPDATE or INSERT activities

MERGE INTO archive ar

USING (SELECT activity, description, date, last_modified

FROM activities_groupA) ac

ON (ar.activity = ac.activity) AND ar.group = 'A'

WHEN MATCHED AND ac.date IS NULL THEN

SIGNAL SQLSTATE '70001'

SET MESSAGE_TEXT =

ac.activity CONCAT ' cannot be modified. Reason: Date is not known'

WHEN MATCHED AND ac.date < CURRENT DATE THEN

DELETE

WHEN MATCHED AND ar.last_modified < ac.last_modified THEN

UPDATE SET

(description, date, last_modified) = (ac.description, ac.date, DEFAULT)

WHEN NOT MATCHED AND ac.date IS NULL THEN

SIGNAL SQLSTATE '70002'

SET MESSAGE_TEXT =

ac.activity CONCAT ' cannot be inserted. Reason: Date is not known'

WHEN NOT MATCHED AND ac.date >= CURRENT DATE THEN

INSERT

(group, activity, description, date)

VALUES ('A', ac.activity, ac.description, ac.date)

ELSE IGNORE

Each group has an activities table. For example, activities_groupA contains all activities Group A organizes, and the archive table contains all upcoming activities that are organized by groups in the company. The archive table has (group, activity) as the primary key, and date is not nullable. All activities tables have activity as the primary key. The last_modified column in the archive is defined with CURRENT TIMESTAMP as the default value.

There is a difference in how many updates are done depending on whether a NOT ATOMIC MERGE or an ATOMIC MERGE was specified:

•In an ATOMIC MERGE, the source rows are processed as though a set of rows is processed by each WHEN clause. Thus, if five rows are updated, any row level update trigger is fired five times for each WHEN clause. This situation means that n statement level update triggers are fired, where n is the number of WHEN clauses that contain an UPDATE, including any WHEN clause that contains an UPDATE that did not process any of the source rows.

•In a NOT ATOMIC MERGE setting, each source row is processed independently as though a separate MERGE statement ran for each source row, meaning that, in the previous case, the triggers are fired only five times.

After running a MERGE statement, the ROW_COUNT statement information item in the SQL Diagnostics Area (or SQLERRD(3) of the SQLCA) is the number of rows that are operated on by the MERGE statement, excluding rows that are identified by the ELSE IGNORE clause.

The ROW_COUNT item and SQLERRD(3) do not include the number of rows that were operated on as a result of triggers. The value in the DB2_ROW_COUNT_SECONDARY statement information item (or SQLERRD(5) of the SQLCA) includes the number of these rows.

No attempt is made to update a row in the target that did not exist before the MERGE statement ran. No updates of rows were inserted by the MERGE statement.

If COMMIT(*RR), COMMIT(*ALL), COMMIT(*CS), or COMMIT(*CHG) is specified, one or more exclusive locks are acquired during the execution of a successful insert, update, or delete. Until the locks are released by a commit or rollback operation, an inserted or updated row can be accessed only by either the application process that ran the insert or update or by another application process using COMMIT(*NONE) or COMMIT(*CHG) through a read-only operation.

If an error occurs during the operation for a row of source data, the row being processed at the time of the error is not inserted, updated, or deleted. Processing of an individual row is an atomic operation. Any other changes that are previously made during the processing of the MERGE statement are not rolled back. If CONTINUE ON EXCEPTION is specified, execution continues with the next row to be processed.

5.2.3 Dynamic compound statements

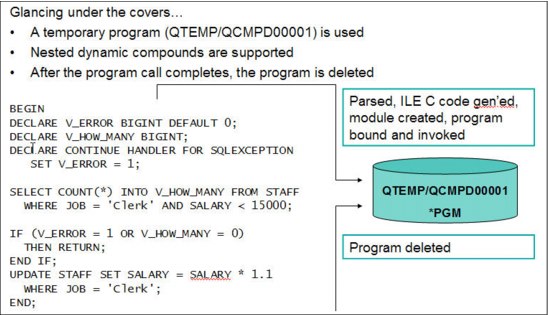

A dynamic compound statement starts with a BEGIN, has a middle portion similar to an SQL procedure, and ends with an END. It can be executed through any SQL dynamic interface such as the Run SQL Statements (RUNSQLSTM) command or IBM i Navigator's Run SQL scripts. It can also be executed dynamically with PREPARE/EXECUTE or EXECUTE IMMEDIATE. Variables, handlers, and all normal control statements can be included within this statement. Both ATOMIC and NOT ATOMIC are supported.

Example 5-3 shows a code of example of a dynamic compound statement.

Example 5-3 Dynamic compound statement code sample

BEGIN

DECLARE V_ERROR BIGINT DEFAULT 0;

DECLARE V_HOW_MANY BIGINT;

DECLARE CONTINUE HANDLER FOR SQLEXCEPTION

SET V_ERROR = 1;

SET V_ERROR = 1;

SELECT COUNT(*) INTO V_HOW_MANY FROM STAFF

WHERE JOB = 'Clerk' AND SALARY < 15000;

IF (V_ERROR = 1 OR V_HOW_MANY = 0)

THEN RETURN;

END IF;

THEN RETURN;

END IF;

UPDATE STAFF SET SALARY = SALARY * 1.1

WHERE JOB = 'Clerk';

WHERE JOB = 'Clerk';

END

Programming considerations are as follows:

•If your compound statement is frequently started, an SQL Procedure is the better choice.

•A Dynamic Compound statement is a great match for situations where you do not want to build, deploy, authorize, and manage a permanent program, but you do want to use the extensive SQL logic and handling that is possible within a compound statement.

•When a Dynamic Compound is prepared and executed, the statements within are processed as static statements. Because a DC statement is a compiled program, the parser options at the time of execution are used with the same rules in place for compile programs.

•Result sets can be consumed, but cannot be returned to the caller or client.

•The subset of SQL statements that are not allowed in triggers and routines are also not allowed within a Dynamic Compound statement. Additionally, the following statements are not allowed:

– SET SESSION AUTHORIZATION

– SET RESULT SET

•There is support for Parameter Markers on a DC statement.

•If you want to parameterize a DC statement, use Global Variables in place of the parameter markers.

•Within the Database Monitor, a Dynamic Compound statement surfaces with QQC21 = 'BE'.

•The SQL Reference for 7.1 is updated with information for this new statement. Search for “Compound (dynamic)”.

Figure 5-1 shows the dynamic compound statement implementation.

Figure 5-1 Dynamic compound statement implementation

5.2.4 Creating and using global variables

You can use global variables to assign specific variable values for a session. Use the CREATE VARIABLE statement to create a global variable at the server level.

Global variables have a session scope, which means that although they are available to all sessions that are active on the database, their value is private for each session. Modifications to the value of a global variable are not under transaction control. The value of the global variable is preserved when a transaction ends with either a COMMIT or a ROLLBACK statement.

When a global variable is instantiated for a session, changes to the global variable in another session (such as DROP or GRANT) might not affect the variable that is instantiated. An attempt to read from or to write to a global variable created by this statement requires that the authorization ID attempting this action holds the appropriate privilege on the global variable. The definer of the variable is implicitly granted all privileges on the variable.

A created global variable is instantiated to its default value when it is first referenced within its given scope. If a global variable is referenced in a statement, it is instantiated independently of the control flow for that statement.

A global variable is created as a *SRVPGM object. If the variable name is a valid system name but a *SRVPGM exists with that name, an error is generated. If the variable name is not a valid system name, a unique name is generated by using the rules for generating system table names.

If a global variable is created within a session, it cannot be used by other sessions until the unit of work is committed. However, the new global variable can be used within the session that created the variable before the unit of work commits.

Example 5-4 creates a global variable that defines a user class. This variable has its initial value set based on the result of starting a function that is called CLASS_FUNC. This function is assumed to assign a class value, such as administrator or clerk that is based on the USER special register value. The SELECT clause in this example lists all employees from department A00. Only a session that has a global variable with a USER_CLASS value of 1 sees the salaries for these employees.

Example 5-4 Creating and using global variables

CREATE VARIABLE USER_CLASS INT DEFAULT (CLASS_FUNC(USER))

GRANT READ ON VARIABLE USER_CLASS TO PUBLIC

SELECT

EMPNO,

LASTNAME,

CASE

WHEN USER_CLASS = 1

THEN SALARY

ELSE NULL

END

FROM

EMPLOYEE

WHERE

WORKDEPT = 'A00'

5.2.5 Support for arrays in procedures

An array is a structure that contains an ordered collection of data elements in which each element can be referenced by its ordinal position in the collection. If N is the cardinality (number of elements) of an array, the ordinal position that is associated with each element is an integer value greater than or equal to 1 and less than or equal to N. All elements in an array have the same data type.

An array type is a data type that is defined as an array of another data type. Every array type has a maximum cardinality, which is specified on the CREATE TYPE (Array) statement. If A is an array type with maximum cardinality M, the cardinality of a value of type A can be any value 0 - M inclusive. Unlike the maximum cardinality of arrays in programming languages such as C, the maximum cardinality of SQL arrays is not related to their physical representation. Instead, the maximum cardinality is used by the system at run time to ensure that subscripts are within bounds. The amount of memory that is required to represent an array value is proportional to its cardinality, and not to the maximum cardinality of its type.

SQL procedures support parameters and variables of array types. Arrays are a convenient way of passing transient collections of data between an application and a stored procedure or between two stored procedures.

Within SQL stored procedures, arrays can be manipulated as arrays in conventional programming languages. Furthermore, arrays are integrated within the relational model in such a way that data represented as an array can be easily converted into a table, and data in a table column can be aggregated into an array.

Example 5-5 shows two array data types (intArray and stringArray), and a persons table with two columns (ID and name). The processPersons procedure adds three more persons to the table, and returns an array with the person names that contain the letter ‘a’, ordered by ID. The IDs and names of the three persons to be added are represented as two arrays (IDs and names). These arrays are used as arguments to the UNNEST function, which turns the arrays into a two-column table, whose elements are then inserted into the persons table. Finally, the last set statement in the procedure uses the ARRAY_AGG aggregate function to compute the value of the output parameter.

Example 5-5 Support for arrays in procedures

CREATE TYPE intArray AS INTEGER ARRAY[100]

CREATE TYPE stringArray AS VARCHAR(10) ARRAY[100]

CREATE TABLE persons (id INTEGER, name VARCHAR(10))

INSERT INTO persons VALUES(2, 'Tom'),

(4, 'Gina'),

(1, 'Kathy'),

(3, 'John')

CREATE PROCEDURE processPersons(OUT witha stringArray)

BEGIN

DECLARE ids intArray;

DECLARE names stringArray;

SET ids = ARRAY[5,6,7];

SET names = ARRAY['Denise', 'Randy', 'Sue'];

INSERT INTO persons(id, name)

(SELECT t.i, t.n FROM UNNEST(ids, names) AS t(i, n));

SET witha = (SELECT ARRAY_AGG(name ORDER BY id)

FROM persons

WHERE name LIKE '%a%'),

END

If WITH ORDINALITY is specified, an extra counter column of type BIGINT is appended to the temporary table. The ordinality column contains the index position of the elements in the arrays. See Example 5-6.

The ARRAY UNNEST temporary table is an internal data structure and can be created only by the database manager.

Example 5-6 UNNEST temporary table WITH ORDINALITY

CREATE PROCEDURE processCustomers()

BEGIN

DECLARE ids INTEGER ARRAY[100];

DECLARE names VARCHAR(10) ARRAY[100];

set ids = ARRAY[5,6,7];

set names = ARRAY['Ann', 'Bob', 'Sue'];

INSERT INTO customerTable(id, name, order)

(SELECT Customers.id, Customers.name, Customers.order

FROM UNNEST(ids, names) WITH ORDINALITY

AS Customers(id, name, order) );

END

5.2.6 Result set support in embedded SQL

You can write a program in a high-level language (C, RPG, COBOL, and so on) to receive results sets from a stored procedure for either a fixed number of result sets, for which you know the contents, or a variable number of result sets, for which you do not know the contents.

Returning a known number of result sets is simpler. However, if you write the code to handle a varying number of result sets, you do not need to make major modifications to your program if the stored procedure changes.

The basic steps for receiving result sets are as follows:

1. Declare a locator variable for each result set that is returned. If you do not know how many result sets are returned, declare enough result set locators for the maximum number of result sets that might be returned.

2. Call the stored procedure and check the SQL return code. If the SQLCODE from the CALL statement is +466, the stored procedure returned result sets.

3. Determine how many result sets the stored procedure is returning. If you already know how many result sets the stored procedure returns, you can skip this step.

Use the SQL statement DESCRIBE PROCEDURE to determine the number of result sets. The DESCRIBE PROCEDURE places information about the result sets in an SQLDA or

SQL descriptor.

SQL descriptor.

For an SQL descriptor, when the DESCRIBE PROCEDURE statement completes, the following values can be retrieved:

– DB2_RESULT_SETS_COUNT contains the number of result sets returned by the stored procedure.

– One descriptor area item is returned for each result set:

• DB2_CURSOR_NAME

This item contains the name of the cursor that is used by the stored procedure to return the result set.

• DB2_RESULT_SET_ROWS

This item contains the estimated number of rows in the result set. A value of -1 indicates that no estimate of the number of rows in the result set is available.

• DB2_RESULT_SET_LOCATOR

This item contains the value of the result set locator that is associated with the result set.

For an SQLDA, make the SQLDA large enough to hold the maximum number of result sets that the stored procedure might return. When the DESCRIBE PROCEDURE statement completes, the fields in the SQLDA contain the following values:

– SQLDA contains the number of result sets returned by the stored procedure.

– Each SQLVAR entry gives information about a result set. In an SQLVAR entry, the following information is in effect:

• The SQLNAME field contains the name of the cursor that is used by the stored procedure to return the result set.

• The SQLIND field contains the estimated number of rows in the result set. A value of -1 indicates that no estimate of the number of rows in the result set is available.

• The SQLDATA field contains the value of the result set locator, which is the address of the result set.

4. Link result set locators to result sets.

You can use the SQL statement ASSOCIATE LOCATORS to link result set locators to result sets. The ASSOCIATE LOCATORS statement assigns values to the result set locator variables. If you specify more locators than the number of result sets returned, the extra locators are ignored.

If you ran the DESCRIBE PROCEDURE statement previously, the result set locator values can be retrieved from the DB2_RESULT_SET_LOCATOR in the SQL descriptor or from the SQLDATA fields of the SQLDA. You can copy the values from these fields to the result set locator variables manually, or you can run the ASSOCIATE LOCATORS statement to do it for you.

The stored procedure name that you specify in an ASSOCIATE LOCATORS or DESCRIBE PROCEDURE statement must be a procedure name that was used in the CALL statement that returns the result sets.

5. Allocate cursors for fetching rows from the result sets.

Use the SQL statement ALLOCATE CURSOR to link each result set with a cursor. Run one ALLOCATE CURSOR statement for each result set. The cursor names can differ from the cursor names in the stored procedure.

6. Determine the contents of the result sets. If you already know the format of the result set, you can skip this step.

Use the SQL statement DESCRIBE CURSOR to determine the format of a result set and put this information in an SQL descriptor or an SQLDA. For each result set, you need an SQLDA large enough to hold descriptions of all columns in the result set.

You can use DESCRIBE CURSOR only for cursors for which you ran

ALLOCATE CURSOR previously.

ALLOCATE CURSOR previously.

After you run DESCRIBE CURSOR, if the cursor for the result set is declared WITH HOLD, for an SQL descriptor DB2_CURSOR_HOLD can be checked. For an SQLDA, the high-order bit of the eighth byte of field SQLDAID in the SQLDA is set to 1.

Fetch rows from the result sets into host variables by using the cursors that you allocated with the ALLOCATE CURSOR statements. If you ran the DESCRIBE CURSOR statement, complete these steps before you fetch the rows:

a. Allocate storage for host variables and indicator variables. Use the contents of the SQL descriptor or SQLDA from the DESCRIBE CURSOR statement to determine how much storage you need for each host variable.

b. Put the address of the storage for each host variable in the appropriate SQLDATA field of the SQLDA.

c. Put the address of the storage for each indicator variable in the appropriate SQLIND field of the SQLDA.

Fetching rows from a result set is the same as fetching rows from a table.

7. Close the cursors.

Example 5-7 gives you an idea on how to implement this process in an RPG program.

Example 5-7 Result set support in an RPG program

D MYRS1 S SQLTYPE(RESULT_SET_LOCATOR)

D MYRS2 S SQLTYPE(RESULT_SET_LOCATOR)

…

C/EXEC SQL CALL P1(:parm1, :parm2, ...)

C/END-EXEC

…

C/EXEC SQL DESCRIBE PROCEDURE P1 USING DESCRIPTOR :MYRS2

C/END-EXEC

…

C/EXEC SQL ASSOCIATE LOCATORS (:MYRS1,:MYRS2) WITH PROCEDURE P1

C/END-EXEC

C/EXEC SQL ALLOCATE C1 CURSOR FOR RESULT SET :MYRS1

C/END-EXEC

C/EXEC SQL ALLOCATE C2 CURSOR FOR RESULT SET :MYRS2

C/END-EXEC

…

C/EXEC SQL ALLOCATE DESCRIPTOR ‘SQLDES1’

C/END-EXEC

C/EXEC SQL DESCRIBE CURSOR C1 INTO SQL DESCRIPTOR ‘SQLDES1’

C/END-EXEC

5.2.7 FIELDPROC support for encoding and encryption

You can now specify a FIELDPROC attribute for a column, designating an external program name as the field procedure exit routine for that column. It must be an ILE program that does not contain SQL. It cannot be a *SRVPGM, OPM *PGMs, or a Java object. Field procedures are assigned to a table by the FIELDPROC clause of the CREATE TABLE and ALTER TABLE statements. A field procedure is a user-written exit routine that transforms values in a single column.

This procedure allows for transparent encryption / decryption or encoding / decoding of data that is accessed through SQL or any other interface. It allows for transparent encryption or encoding of data that is accessed through SQL or natively.

When values in the column are changed, or new values are inserted, the field procedure is started for each value, and can transform that value (encode it) in any way. The encoded value is then stored. When values are retrieved from the column, the field procedure is started for each value, which is encoded, and must decode it back to the original value. Any indexes that are defined on a non-derived column that uses a field procedure are built with encoded values.

The transformation your field procedure performs on a value is called field-encoding. The same routine is used to undo the transformation when values are retrieved, which is called field-decoding. Values in columns with a field procedure are described to DB2 in two ways:

•The description of the column as defined in CREATE TABLE or ALTER TABLE appears in the catalog table QSYS2.SYSCOLUMNS. This description is the description of the field-decoded value, and is called the column description.

•The description of the encoded value, as it is stored in the database, appears in the catalog table QSYS2.SYSFIELDS. This description is the description of the field-encoded value, and is called the field description.

The field-decoding function must be the exact inverse of the field-encoding function. For example, if a routine encodes ALABAMA to 01, it must decode 01 to ALABAMA. A violation of this rule can lead to unpredictable results.

The field procedure is also started during the processing of the CREATE TABLE or ALTER TABLE statement. That operation is called a field-definition. When so started, the procedure provides DB2 with the column’s field description. The field description defines the data characteristics of the encoded values. By contrast, the information that is supplied for the column in the CREATE TABLE or ALTER TABLE statement defines the data characteristics of the decoded values.

The data type of the encoded value can be any valid SQL data type except ROWID or DATALINK. Also, a field procedure cannot be associated with any column that has values that are generated by IDENTITY or ROW CHANGE TIMESTAMP.

If a DDS-created physical file is altered to add a field procedure, the encoded attribute data type cannot be a LOB type or DataLink. If an SQL table is altered to add a field procedure, the encoded attribute precision field must be 0 if the encoded attribute data type is any of the integer types.

A field procedure cannot be added to a column that has a default value of CURRENT DATE, CURRENT TIME, CURRENT TIMESTAMP, or USER. A column that is defined with a user-defined data type can have a field procedure if the source type of the user-defined data type is any of the allowed SQL data types. DB2 casts the value of the column to the source type before it passes it to the field procedure.

Masking support in FIELDPROCs

FIELDPROCs were originally designed to transparently encode or decode data. Several third-party products use the support in 7.1 to provide transparent column level encryption. For example, to allow a credit card number or social security number to be transparently encrypted on disk.

The FIELDPROC support is extended to allow masking to occur to that same column data (typically based on what user is accessing the data). For example, only users that need to see the actual credit card number see the value, whereas other users might see masked data. For example, XXXX XXXX XXXX 1234.

The new support is enabled by allowing the FIELDPROC program to detect masked data on an update or write operation and returning that indication to the database manager. The database manager then ignores the update of that specific column value on an update operation and replaces it with the default value on a write.

A new parameter is also passed to the FIELDPROC program. For field procedures that mask data, the parameter indicates whether the caller is a system function that requires that the data are decoded without masking. For example, in some cases, RGZPFM and ALTER TABLE might need to copy data. If the field procedure ignores this parameter and masks data when these operations are run, the column data is lost. Hence, it is critical that a field procedure that masks data properly handles this parameter.

Parameter list for execution of field procedures

The field procedure parameter list communicates general information to a field procedure. It signals what operation is to be done and allows the field procedure to signal errors. DB2 provides storage for all parameters that are passed to the field procedure. Therefore, parameters are passed to the field procedure by address.

When you define and use the parameters in the field procedure, ensure that no more storage is referenced for a parameter than is defined for that parameter. The parameters are all stored in the same space and exceeding a parameter’s storage space can overwrite another parameter’s value. This action, in turn, can cause the field procedure to see invalid input data or cause the value returned to the database to be invalid. The following list details the parameters you can pass:

•A 2-byte integer that describes the function to be run. This parameter is input only.

•A structure that defines the field procedure parameter value list (FPPVL).

•The decoded data attribute that is defined by the Column Value Descriptor (CVD). These attributes are the column attributes that were specified at CREATE TABLE or ALTER TABLE time. This parameter is input only.

•The decoded data.

The exact structure depends on the function code.

– If the function code is 8, then the NULL value is used. This parameter is input only.

– If the function code is 0, then the data to be encoded is used. This parameter is input only.

– If the function code is 4, then the location to place the decoded data is used. This parameter is output only.

•The encoded data attribute that is defined by the Field Value Descriptor (FVD). This parameter is input only.

•The encoded data that is defined by the FVD. The exact structure depends on the function code. This parameter is input only.

•The SQLSTATE (character(5)). This parameter is input/output. This parameter is set by DB2 to 00000 before it calls the field procedure. It can be set by the field procedure. Although the SQLSTATE is not normally set by a field procedure, it can be used to signal an error to the database.

•The message text area (varchar(1000)). This parameter is input/output.

5.2.8 Miscellaneous

A number of functions are aggregated under this heading. Most are aimed at upscaling or improving the ease of use for existing functions.

Partitioned table support

A partitioned table is a table where the data is contained in one or more local partitions (members). This release allows you to partition tables that use referential integrity or identity columns.

If you specify a referential constraint where the parent is a partitioned table, the unique index that is used for the unique index that enforces the parent unique constraint must be non-partitioned. Likewise, the identity column cannot be a partitioned key.

Partitioned tables with referential constraints or identity columns cannot be restored to a previous release.

Parameter markers

You can use this function to simplify the definition of variables in a program. Example 5-8 shows how you can write it.

Example 5-8 Parameter markers

SELECT stmt1 =

‘SELECT * FROM t1

WHERE c1 = CAST(? AS DECFLOAT(34)) + CAST(? AS DECFLOAT(34));

PREPARE prestmt1 FROM STMT1;

#Replace this with:

SET STMT1 = ‘SELECT * FROM T1 WHERE C1 > ? + ? ’;

PREPARE PREPSTMT1 FROM STMT;

Expressions in a CALL statement

You can now call a procedure and pass as arguments an expression that does not include an aggregate function or column name. If extended indicator variables are enabled, the extended indicator variable values of DEFAULT and UNASSIGNED must not be used for that expression. In Example 5-9, PARAMETER1 is folded and PARAMETER2 is divided by 100.

Example 5-9 Expressions in a CALL statement

CALL PROC1 ( UPPER(PARAMETER1), PARAMETER2/100 )

Three-part names support

You can use three-part names to bypass the explicit CONNECT or SET CONNECTION. Statements that use three-part names and see distributed data result in IBM DRDA access to the remote relational database. When an application program uses three-part name aliases for remote objects and DRDA access, the application program must be bound at each location that is specified in the three-part names. Also, each alias must be defined at the local site. An alias at a remote site can see yet another server if a referenced alias eventually refers to a table or view.

All object references in a single SQL statement must be in a single relational database. When you create an alias for a table on a remote database, the alias name must be the same as the remote name, but can point to another alias on the remote database. See Example 5-10.

Example 5-10 Three-part alias

CREATE ALIAS shkspr.phl FOR wllm.shkspr.phl

SELECT * FROM shkspr.phl

RDB alias support for 3-part SQL statements

As an alternative to using the CREATE ALIAS SQL statement to deploy database transparency, the Relational Database Directory Entry Alias name can be used instead.

To do this, the SQL statement is coded to refer to the RDB directory entry alias name as the first portion (RDB target) of a 3-part name. By changing the RDB directory entry to have a different destination database using the Remote location (RMTLOCNAME) parameter, the SQL application can target a different database without having to change the application.

Figure 5-2 shows the effect of redefining an RDB alias target.

Figure 5-2 Effect of redefining an RDB alias target

Example 5-11 shows some sample code that pulls daily sales data from different locations.

Example 5-11 Sample code pulling data from different locations

ADDRDBDIRE RDB(X1423P2 MYALIAS) RMTLOCNAME(X1423P2 *IP)

INSERT INTO WORKTABLE SELECT * FROM MYALIAS.SALESLIB.DAILY_SALES

CHGRDBDIRE RDB(LP13UT16 MYALIAS) RMTLOCNAME(LP13UT16 *IP)

INSERT INTO WORKTABLE SELECT * FROM MYALIAS.SALESLIB.DAILY_SALES

Concurrent access resolution

The concurrent access resolution option can be used to minimize transaction wait time. This option directs the database manager how to handle record lock conflicts under certain isolation levels.

The concurrent access resolution option can have one of the following values:

•Wait for outcome

This value is the default. This value directs the database manager to wait for the commit or rollback when it encounters locked data that is being updated or deleted. Locked rows that are being inserted are not skipped. This option does not apply for read-only queries that are running under COMMIT(*NONE) or COMMIT(*CHG).

•Use currently committed

This value allows the database manager to use the currently committed version of the data for read-only queries when it encounters locked data being updated or deleted. Locked rows that are being inserted can be skipped. This option applies where possible when it is running under COMMIT(*CS) and is ignored otherwise. It is what is referred to as “Readers do not block writers and writers do not block readers.”

•Skip locked data

This value directs the database manager to skip rows in the case of record lock conflicts. This option applies only when the query is running under COMMIT(*CS) or COMMIT(*ALL).

The concurrent access resolution values of USE CURRENTLY COMMITTED and SKIP LOCKED DATA can be used to improve concurrency by avoiding lock waits. However, care must be used when you use these options because they might affect application functions.

You can specify the usage for concurrent access resolution in several ways:

•By using the concurrent-access-resolution clause at the statement level for a select-statement, SELECT INTO, searched UPDATE, or searched DELETE

•By using the CONACC keyword on the CRTSQLxxx or RUNSQLSTM commands

•With the CONACC value in the SET OPTION statement

•In the attribute-string of a PREPARE statement

•Using the CREATE or ALTER statement for a FUNCTION, PROCEDURE, or TRIGGER

If the concurrent access resolution option is not directly set by the application, it takes on the value of the SQL_CONCURRENT_ACCESS_RESOLUTION option in the QAQQINI query options file.

CREATE statement

Specifying the CREATE OR REPLACE statement makes it easier to create an object without having to drop it when it exists. This statement can be applied to the following objects:

•ALIAS

•FUNCTION

•PROCEDURE

•SEQUENCE

•TRIGGER

•VARIABLE

•VIEW

To replace an object, the user must have both *OBJEXIST rights to the object and *EXECUTE rights for the schema or library, and privileges to create the object. All existing privileges on the replaced object are preserved.

BIT scalar functions

The bitwise scalar functions BITAND, BITANDNOT, BITOR, BITXOR, and BITNOT operate on the “two’s complement” representation of the integer value of the input arguments. They return the result as a corresponding base 10 integer value in a data type based on the data type of the input arguments. See Table 5-2.

Table 5-2 Bit scalar functions

|

Function

|

Description

|

A bit in the two's complement representation of the result is:

|

|

BITAND

|

Runs a bitwise AND operation.

|

1 only if the corresponding bits in both arguments are 1

|

|

BITANDNOT

|

Clears any bit in the first argument that is in the second argument.

|

Zero if the corresponding bit in the second argument is 1; otherwise, the result is copied from the corresponding bit in the first argument

|

|

BITOR

|

Runs a bitwise OR operation.

|

1 unless the corresponding bits in both arguments are zero

|

|

BITXOR

|

Runs a bitwise exclusive OR operation.

|

1 unless the corresponding bits in both arguments are the same

|

|

BITNOT

|

Runs a bitwise NOT operation.

|

Opposite of the corresponding bit in the argument

|

The arguments must be integer values that are represented by the data types SMALLINT, INTEGER, BIGINT, or DECFLOAT. Arguments of type DECIMAL, REAL, or DOUBLE are cast to DECFLOAT. The value is truncated to a whole number.

The bit manipulation functions can operate on up to 16 bits for SMALLINT, 32 bits for INTEGER, 64 bits for BIGINT, and 113 bits for DECFLOAT. The range of supported DECFLOAT values includes integers -2112 - 2112 -1, and special values such as NaN (Not a Number) or INFINITY are not supported (SQLSTATE 42815). If the two arguments have different data types, the argument that is supporting fewer bits is cast to a value with the data type of the argument that is supporting more bits. This cast affects the bits that are set for negative values. For example, -1 as a SMALLINT value has 16 bits set to 1, which when cast to an INTEGER value has 32 bits set to 1.

The result of the functions with two arguments has the data type of the argument that is highest in the data type precedence list for promotion. If either argument is DECFLOAT, the data type of the result is DECFLOAT(34). If either argument can be null, the result can be null. If either argument is null, the result is the null value.

The result of the BITNOT function has the same data type as the input argument, except that DECIMAL, REAL, DOUBLE, or DECFLOAT(16) returns DECFLOAT(34). If the argument can be null, the result can be null. If the argument is null, the result is the null value.

Because of differences in internal representation between data types and on different hardware platforms, using functions (such as HEX) or host language constructs to view or compare internal representations of BIT function results and arguments is data type dependent and not portable. The data type- and platform-independent way to view or compare BIT function results and arguments is to use the actual integer values.

Use the BITXOR function to toggle bits in a value. Use the BITANDNOT function to clear bits. BITANDNOT(val, pattern) operates more efficiently than BITAND(val, BITNOT(pattern)). Example 5-12 is an example of the result of these operations.

Example 5-12 BIT scalar functions

# Return all items for which the third property bit is set.

SELECT ITEMID FROM ITEM

WHERE BITAND(PROPERTIES, 4) = 4

# Return all items for which the fourth or the sixth property bit is set.

SELECT ITEMID FROM ITEM

WHERE BITAND(PROPERTIES, 40) <> 0

# Clear the twelfth property of the item whose ID is 3412.

UPDATE ITEM

SET PROPERTIES = BITANDNOT(PROPERTIES, 2048)

WHERE ITEMID = 3412

# Set the fifth property of the item whose ID is 3412.

UPDATE ITEM

SET PROPERTIES = BITOR(PROPERTIES, 16)

WHERE ITEMID = 3412

# Toggle the eleventh property of the item whose ID is 3412.

UPDATE ITEM

SET PROPERTIES = BITXOR(PROPERTIES, 1024)

WHERE ITEMID = 3412

# Switch all the bits in a 16-bit value that has only the second bit on.

VALUES BITNOT(CAST(2 AS SMALLINT))

#returns -3 (with a data type of SMALLINT)

Encoded vector index

When you create an encoded vector index (EVI), you can now use an INCLUDE statement in the index option of the CREATE ENCODED VECTOR INDEX command, specifying an aggregate function to be included in the index. These aggregates make it possible for the index to be used directly to return aggregate results for a query. The aggregate function name must be one of the built-in functions AVG, COUNT, COUNT_BIG, SUM, STDDEV, STDDEV_SAMP, VARIANCE, or VARIANCE_SAMP, or a sourced function that is based on one of these built-in functions.

INCLUDE is only allowed for an encoded vector index.

This change has the potential of improving performance on queries that make this type of calculations. Example 5-13 shows the syntax for constructing a simple INCLUDE statement when you create such an index.

Example 5-13 Aggregate function support for EVI

CREATE ENCODED VECTOR INDEX GLDSTRN.RSNKRNZ_EVI1

ON GLDSTRN.HMLT (JOB_TYPE, JOB_CATEGORY)

INCLUDE (AVG(WORK_TIME))

Inlining of scalar functions

In cases of simple SQL scalar functions, instead of starting the function as part of a query, the expression in the RETURN statement of the function can be copied (inlined) into the query itself. Such a function is called an inline function. A function is an inline function if the following criteria are met:

•The SQL function is deterministic.

•The SQL-routine-body contains only a RETURN statement.

•The RETURN statement does not contain a scalar subselect or fullselect.

INSERT with remote SUBSELECT

INSERT with SUBSELECT is enhanced to allow the select to reference a single remote database that is different from the current server connection

An implicit remote connection is established and used by DB2 for i.

This enhancement is the second installment in extending DB2 for i on 7.1 to use implicit or explicit remote three-part names within SQL.

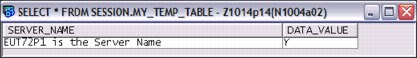

Example 5-14 declares the global temporary table from a remote subselect, which is followed by the insert.

Example 5-14 Usage of INSERT with remote SUBSELECT

DECLARE GLOBAL TEMPORARY TABLE SESSION.MY_TEMP_TABLE (SERVER_NAME VARCHAR(40), DATA_VALUE CHAR(1)) WITH REPLACE

INSERT INTO SESSION.MY_TEMP_TABLE (SELECT CURRENT_SERVER CONCAT ‘is the server name’, IBMREQD FROM EUT72P1.SYSIBM.SYSDUMMY1)

SELECT * FROM SESSION.MY_TEMP_TABLE;

Figure 5-3 displays the output that is generated from Example 5-14 on page 181.

Figure 5-3 Clearly showing the result was from a remote subselect

SQL procedure and function obfuscation

Obfuscation provides the capability of optionally obscuring proprietary SQL statements and logic within SQL procedures or functions.

ISVs can use this support to prevent their customers from seeing or changing SQL routines that are delivered as part of their solution.

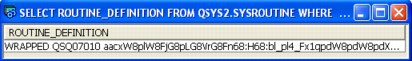

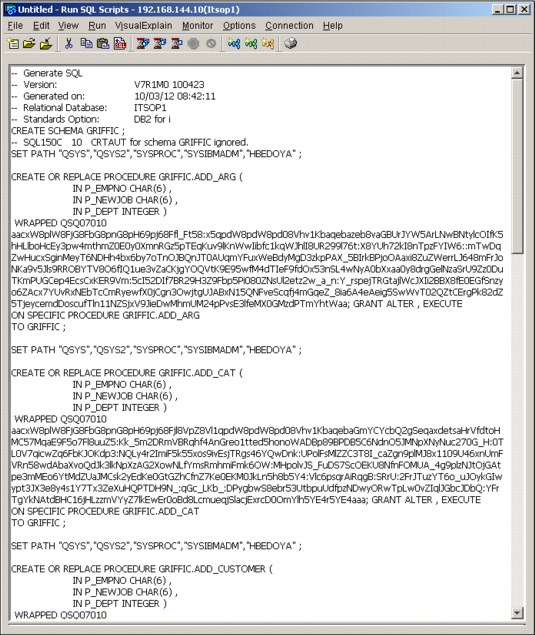

Example 5-15 demonstrates how obfuscation is performed.

Example 5-15 Example of obfuscation

CALL SYSIBMADM.CREATE_WRAPPED('CREATE PROCEDURE UPDATE_STAFF (

IN P_EmpNo CHAR(6),

IN P_NewJob CHAR(5),

IN P_Dept INTEGER)

LANGUAGE SQL

TR : BEGIN

UPDATE STAFF

SET JOB = P_NewJob

WHERE DEPT = P_Dept and ID = P_EmpNo; END TR '),

SELECT ROUTINE_DEFINITION FROM QSYS2.SYSROUTINE WHERE

ROUTINE_NAME = 'UPDATE_STAFF';

The output in Figure 5-4 clearly shows the result of obfuscation.

Figure 5-4 Output of routine clearly showing obfuscation

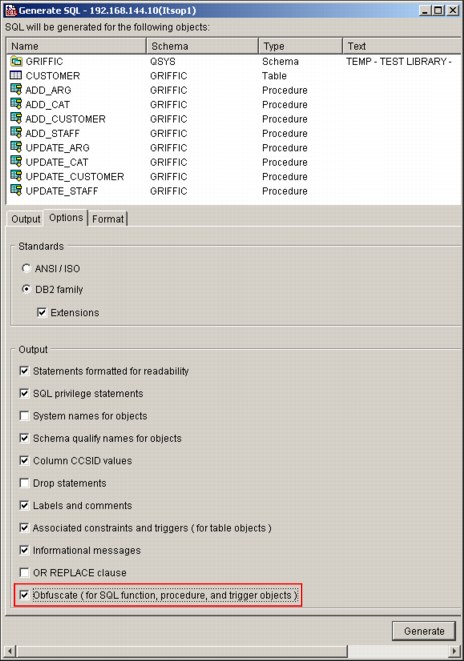

System i Navigator can also be used to obfuscate SQL through the Generate SQL option by selecting Obfuscate (for SQL function, and procedures) as shown in Figure 5-5.

Figure 5-5 Obfuscate (for SQL function and procedure objects) check box

Figure 5-6 shows an example of obfuscated code after the option to obfuscate is selected.

|

Important: The obfuscate option is not available for triggers.

|

Figure 5-6 Example of obfuscated code

CREATE TABLE with remote SUBSELECT

One of the enhancements in the CREATE TABLE command in IBM i 7.1 is the capability of creating a table based on a remote table.

•CREATE TABLE AS and DECLARE GLOBAL TEMPORARY TABLE are enhanced to allow the select to reference a single remote database that is different from the current server connection.

•An implicit remote connection is established and used by DB2 for i.

•The remote query can reference a single remote homogeneous or heterogeneous table.

This enhancement is the third installment for extending DB2 for i on 7.1 to use implicit or explicit remote three-part names within SQL.

A table is created based on a remote table, as shown in Example 5-16.

Example 5-16 Create a table in the local database that references a remote database with the AS clause

CREATE TABLE DATALIB.MY_TEMP_TABLE AS (SELECT CURRENT_SERVER CONCAT ' is the Server Name', IBMREQD

FROM X1423P2.SYSIBM.SYSDUMMY1) WITH DATA

SELECT * FROM DATALIB.MY_TEMP_TABLE

Running the example SQL produces the output that shows that a remote table was accessed, as shown in Figure 5-7.

Figure 5-7 Output from the SQL showing that the remote table was accessed

5.2.9 Generating field reference detail on CREATE TABLE AS

Impact Analysis tools use reference field information to identify tables and programs that need to be modified. Before this enhancement, SQL tables did not propagate reference information.

CREATE TABLE AS is enhanced to store the originating column and table as the reference information in the file object.

When using LIKE to copy columns from another table, REFFLD information is copied for each column that has a REFFLD in the original table.

When using AS to define a new column, any column that directly references a table or view (not used in an expression) has a REFFLD defined that refers to that column. A simple CAST also generates REFFLD information (that is, CAST (PARTNAME as varchar(50)))

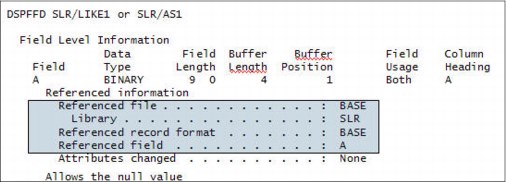

As an example, if the following table is created:

create table slr/base (a int, b int, c int)

create table slr/as1 as (select * from slr/base) with no data

create table slr/like1 like slr/as1

The improved field reference detail is as shown in Figure 5-8.

Figure 5-8 CREATE TABLE AS field reference detail

5.2.10 Qualified name option added to generate SQL

Today, generated SQL includes schema/library qualification of objects.

System i Navigator and the QSQGNDDL() are enhanced to include the qualified name option, making it easier to redeploy generated SQL. Any three-part names (object and column) are left unchanged. Any schema qualification within the object that does not match the database object library name are left unchanged.

The qualified name option specifies whether qualified or unqualified names should be generated for the specified database object. The valid values are:

‘0’ Qualified object names should be generated. Unqualified names within the body of SQL routines remain unqualified (by default).

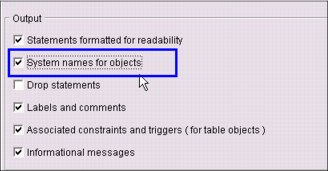

‘1’ Unqualified object names should be generated when a library is found that matches the database object library name. Any SQL object or column reference that is RDB qualified is generated in its fully qualified form. For example, rdb-name.schema-name.table-name and rdb-name.schema-name.table-name.column-name references retain their full qualification. This option also appears on the Generate SQL dialog within System i Navigator, as shown in Figure 5-9 on page 187. The default behavior is to continue to generate SQL with schema qualification.

Figure 5-9 Schema qualified names for objects check box

5.2.11 New generate SQL option for modernization

Today, an SQL view is generated for DDS-created keyed physical, keyed logical, or join logical files. With this enhancement, IBM i Navigator has more generate SQL options. The options are mutually exclusive options and have been added to QSQGNDDL and IBM i Navigator.

This enhancement makes it easier to proceed with DDS to SQL DDL modernization.

The following examples provide samples of the new generate SQL option for modernization. There is a generate additional indexes option for keyed physical and logical files whether more CREATE INDEX statements are generated for DDS created keyed physical, keyed logical, or join logical files.

Example 5-17 shows a sample DDS for join logical file.

Example 5-17 Sample DDS for a join logical file

R FMT JFILE(MJATST/GT MJATST/GT2)

J JFLD(F1_5A F1_5A)

F1_5A JREF(1)

F2_5A JREF(2)

K F1_5A

The resulting v statement after using the generate SQL for modernization option is shown in Example 5-18.

Example 5-18 Resulting CREATE VIEW statement after using Generate SQL for modernization option

CREATE VIEW MJATST.GVJ (

F1_5A , F2_5A )

AS

SELECT

Q01.F1_5A , Q02.F2_5A

FROM MJATST.GT AS Q01 INNER JOIN

MJATST.GT2 AS Q02 ON ( Q01.F1_5A = Q02.F1_5A )

RCDFMT FMT;

CREATE INDEX MJATST.GVJ_QSQGNDDL_00001

ON MJATST.GT ( F1_5A ASC );

CREATE INDEX MJATST.GVJ_QSQGNDDL_00002

ON MJATST.GT2 ( F1_5A ASC );

There is also a generate index instead of view option, which specifies whether a CREATE INDEX or CREATE VIEW statement is generated for a DDS-created keyed logical file. Example 5-19 shows the DDS created keyed logical file.

Example 5-19 DDS created keyed logical file

R FMT PFILE(MJATST/GT)

F3_10A

F2_5A

F1_5A

K F3_10A

Example 5-20 shows the resulting CREATE INDEX statement

Example 5-20 Resulting CREATE INDEX statement

CREATE INDEX MJATST.GV2

ON MJATST.GT ( F3_10A ASC )

RCDFMT FMT;

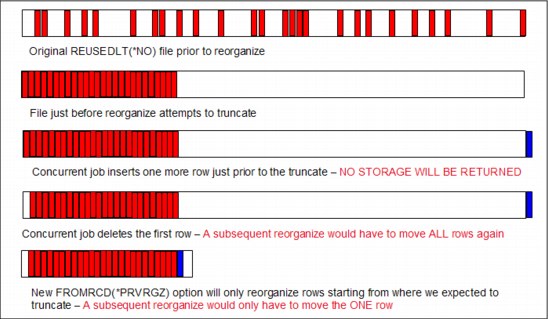

5.2.12 OVRDBF SEQONLY(YES, buffer length)

OVRDBF adds support to allow the user to specify the buffer length rather than the number of records for OVRDBF SEQONLY(*YES N). N can be:

•*BUF32KB

•*BUF64KB

•*BUF128KB

•*BUF256KB

This setting means that the number of records are the number of records that fit into a 32 KB,

64 KB, 128 KB, or 256 KB buffer.

64 KB, 128 KB, or 256 KB buffer.

5.3 Performance and query optimization

In the IBM i 7.1 release of DB2 for i, a considerable effort was undertaken to enhance the runtime performance of the database, either by extending existing functions or by introducing new mechanisms.

Runtime performance is affected by many issues, such as the database design (the entity-relationship model, which is a conceptual schema or semantic data model of a relational database), the redundancy between functional environments in composite application environment, the level of normalization, and the size and volumes processed. All of these items influence the run time, throughput, or response time, which is supported by the IT components and is defined by the needs of the business. Performance optimization for database access must address all the components that are used in obtained acceptable and sustainable results, covering the functional aspects and the technical components that support them.

This section describes the query optimization method. It describes what is behind the changes that are implemented in the database management components to relieve the burden that is associated with the tools and processes a database administrator uses or follows to realize the non-functional requirements about performance and scalability. These requirements include the following:

•Global Statistics Cache (GSC)

•Adaptive Query Processing

•Sparse indexes

•Encoded vector index-only access, symbol table scan, symbol table probe, and

INCLUDE aggregates

INCLUDE aggregates

•Keeping tables or indexes in memory

5.3.1 Methods and tools for performance optimization

Typically, the autonomous functions in IBM i, and the new ones in IBM i 7.1, all strive to obtain the best possible performance and throughput. However, you can tweak settings to pre-emptively enhance the tooling of IBM i.

In today’s business world, the dynamics of a business environment demand quick adaptation to changes. You might face issues by using a too generic approach in using these facilities. Consider that you made the architectural decision for a new application to use a stateless runtime environment and that your detailed component model has the infrastructure for it. If the business processes it supports are changing and require a more stateful design, you might face an issue if you want to preserve information to track the statefulness in your transactions. Then, the database where you store information about these transactions might quickly become the heaviest consumer of I/O operations. If your infrastructure model did not consider this factor, you have a serious issue. Having high volumes with a low latency is good. However, this situation must be balanced against the effort it takes to make it sustainable and manageable throughout all of the IT components you need to support the business.

When you define components for a database support, develop a methodology and use preferred practices to obtain the best results. Any methodology must be consistent, acceptable, measurable, and sustainable. You want to stay away from ad hoc measures or simple bypasses.

IBM i provides statistics about I/O operations, provided by the database management function. These statistics show accumulated values, from which you can derive averages, on the I/O operations on tables and indexes. These statistics do not take into account the variability and the dynamic nature of the business functions these objects support. So if you want to use these statistics to define those objects to be placed either in memory or on faster disks, you must consider a larger scope.

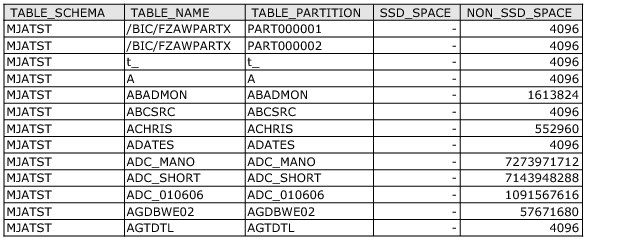

For example: Since the introduction of solid-state drives (SSD), which have a low latency, the IBM i storage manager has awareness about this technology and uses it as appropriate. Since release 6.1, you can specify the media preference on the CREATE TABLE/INDEX and ALTER TABLE/INDEX commands along with the DECLARE GLOBAL TEMPORARY TABLE (see 5.3.9, “SQE optimization for indexes on SSD” on page 196). The SYSTABLESTAT and SYSINDEXSTAT catalog tables provide more I/O statistics (SEQUENTIAL_READS and RANDOM_READS) in release 7.1 on these objects. These statistics, generated by the database manager, indicate only possible candidates to be housed on SSD hardware. Further investigation of the run time and the contribution to the performance and capacity or the infrastructure reveals whether they are eligible for those settings.

For more information about SSDs, see Chapter 8, “Storage and solid-state drives” on page 377.

Finally, and as a last resort, there is now a stored procedure available that you can use to cancel long running SQL jobs using the QSYS2.CANCEL_SQL procedure.

5.3.2 Query optimization

Whenever a query is submitted, the database engine creates an artifact that allows the query to trigger a set of events and processes that allows it to run the request with the lowest cost. In this context, cost is expressed as the shortest time possible to run the query. This cost calculation is done on a number of both fixed and variable elements. The fixed cost elements are attributes, such as both the hardware components (processor, memory, and disks) and in the instruments or methods that can be used to handle rows and columns in a (set of) database files. These methods are known as using indexes (binary radix index or encoded vector index), index or table scan, hashing, sorting, and so on. The variable elements are typically the volume of data (that is, the number or rows) to be handled and the join functions that are required by the query. Based on these methods, the database query engine builds an access plan that targets reduction of cost.

Even with all the technologies that are used, the access plans might still yield an incorrect (that is, not obeying the rule of capping the cost) result. This situation can, for example, be the result of not having an index to navigate correctly through the data. For that reason, IBM i supports the technology to create temporary indexes autonomically until the system undergoes an IPL. This index can be used by any query that might benefit from its existence. These autonomous indexes can be viewed and carry information that a database administrator can use to decide whether to make it a permanent object by using the definition of the temporary index.

Other elements that can contribute to incorrect access plans are as follows:

•Inclusion of complex or derivated predicates, which are hard to predict without running the query about the existence of stale statistics on busy systems

•Hidden correlations in the data, often because of a poor design, data skew, and data volatility

•Changes in the business or infrastructure environment

In the last case, this situation is more likely to happen with variations in both memory and processor allocations on partitioned systems, which are reconfigured using dynamic partitioning. It can also be caused when the data is changed frequently in bulk.

If you want to read more about the database query engine, see Preparing for and Tuning the SQL Query Engine on DB2 for i5/OS, SG24-6598.

5.3.3 Global statistics cache

There are several process models to reduce the impact of managing the dynamics of a database structure and its content. Moreover, this database is often deployed on a system that is subject to many changes. These tasks can be a wide array of non-automated interventions, including the setup of a validation process of access plans, manually tuning the query, or having the access plans invalidated and re-created. It can also include a reset of the statistics information or an extensive review of the query functions to achieve a higher degree of expected consumability by the system. These actions are typically post-mortem actions and are labor-intensive.

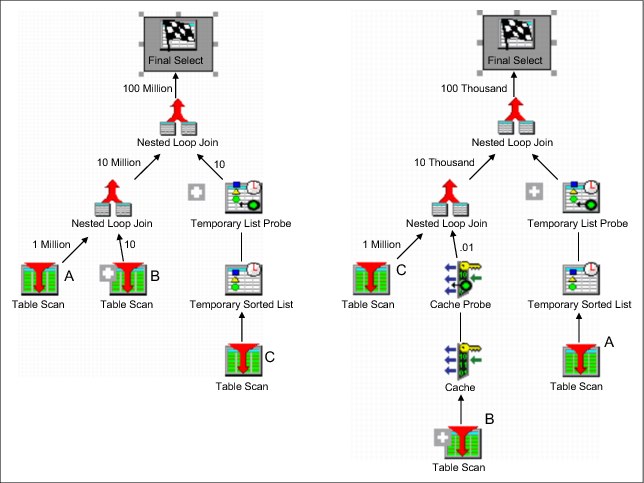

To reduce this labor-intensive work, the DB2 Statistics Manager was revised. By default, it now collects data about observed statistics in the database and from partially or fully completed queries. This data is stored in the Global Statistics Cache (GSC), which is a system-wide repository, containing those complex statistics. The adaptive query processing (AQP) (see 5.3.4, “Adaptive query processing” on page 192) inspects the results of queries and compares the estimated row counts with the actual row counts. All of the queries that are processed by the SQL Query Engine (SQE) use this information to increase overall efficiency. One of the typical actions the SQE can take is to use the live statistics in the GSC, compare the estimated row count with the actual row count, and reoptimize and restart the query using the new query plan. Furthermore, if another query asks for the same or a similar row count, the Storage Manager (SM) can return the stored actual row count from the GSC. This action allows generating faster query plans by the query optimizer.

Typically, observed statistics are for complex predicates, such as a join. A simple example is a query that joins three files, A, B, and C. There is a discrepancy between the estimate and actual row count of the join of A and B. The SM stores an observed statistic into the GSC. Later, if a join query of A, B, and Z is submitted, SM recalls the observed statistic of the A and B join. The SM considers that observed statistic in its estimate of the A, B, and Z join.

The GSC is an internal DB2 object, and the contents of it are not directly observable. You can harvest the I/O statistics in the database catalog tables SYSTABLESTAT and SYSINDEXSTAT or by looking at the I/O statistics using the Display File Description (DSPFD) command. This command provides only a limited number of I/O operations. Both counters (catalog tables and the object description) are reset at IPL time.

The statistics collection is defined by the system value Data Base file statistics collection (QDBFSTCCOL). The SM jobs that update the statistics carry the same name.

5.3.4 Adaptive query processing

The SQE uses statistics to build the mechanism to run an SQL statement. These statistics come from two sources:

•Information that is contained in the indexes on the tables that are used in the statement

•Information that is contained in the statistics tables (the GSC)

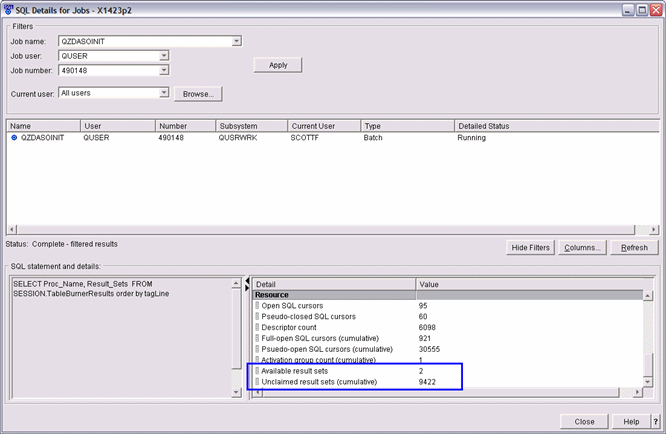

When the query compiler optimizes the query plans, its decisions are heavily influenced by statistical information about the size of the database tables, indexes, and statistical views. The optimizer also uses information about the distribution of data in specific columns of tables, indexes, and statistical views if these columns are used to select rows or join tables. The optimizer uses this information to estimate the costs of alternative access plans for each query.