An affine transformation is a geometric transformation that preserves points, lines and planes. It satisfies the following conditions:

•Collinearity: Points which lie on a line before the transformation continue to lie on the line after the transformation.

•Parallelism: Parallel lines will continue to be parallel after the transformation.

•Convexity: A convex set will continue to be convex after the transformation.

•Ratios of parallel line segments: The ratio of the length of parallel line segments will continue to be the same after transformation.
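These properties can be checked numerically. The sketch below is illustrative only: the matrix `A` and offset `t` are arbitrary values chosen for the example, and `affine` and `cross2` are hypothetical helpers, not library functions. It checks that the midpoint of a segment maps to the midpoint of the transformed segment and that two parallel segments remain parallel.

```python
import numpy as np

# Arbitrary illustrative affine map p -> A @ p + t (the values are assumptions)
A = np.array([[2.0, 1.0],
              [0.5, 3.0]])
t = np.array([4.0, -1.0])

def affine(p):
    """Apply the example affine map to a 2D point."""
    return A @ np.asarray(p, dtype=float) + t

def cross2(u, v):
    """z-component of the 2D cross product; zero when u and v are parallel."""
    return u[0] * v[1] - u[1] * v[0]

# Collinearity and ratios: the midpoint of a segment maps to the midpoint
p, q = np.array([0.0, 0.0]), np.array([4.0, 2.0])
mid = (p + q) / 2
mid_preserved = np.allclose(affine(mid), (affine(p) + affine(q)) / 2)

# Parallelism: the segment r-s is parallel to p-q before the transformation
r, s = np.array([1.0, 5.0]), np.array([5.0, 7.0])
d1 = affine(q) - affine(p)
d2 = affine(s) - affine(r)
parallel_preserved = np.isclose(cross2(d1, d2), 0.0)

print(mid_preserved, parallel_preserved)
```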

In this chapter, we will discuss the common affine transformations such as translation, rotation and scaling. We will begin the discussion with the mathematical process used to perform an affine transformation. We will follow that with specific examples and code for various affine transformations. Finally, we will discuss interpolation, which affects the image quality after an affine transformation.

The affine transformation is applied as follows:

•Consider every pixel coordinate in the image.

•Calculate the dot product of the pixel coordinate with a transformation matrix. The matrix depends on the type of transformation being performed, as discussed below. The dot product gives the pixel coordinate in the transformed image.

•Determine the pixel value in the transformed image using the pixel coordinate calculated from the previous step. Since the dot product may produce non-integer pixel coordinates, we will apply interpolation (discussed later).
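The three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the scikit-image implementation: `apply_affine_forward` is a hypothetical helper, coordinates are rounded to the nearest integer instead of being interpolated, and pixels that land outside the output are dropped.

```python
import numpy as np

def apply_affine_forward(image, matrix, fill=0):
    """Forward-map every pixel of a 2D image through a 3x3 affine matrix.

    Coordinates are rounded to the nearest integer instead of interpolated,
    and pixels that land outside the output are dropped.
    """
    rows, cols = image.shape
    out = np.full_like(image, fill)
    # Step 1: every pixel coordinate, stored as homogeneous columns (x, y, 1)
    ys, xs = np.mgrid[0:rows, 0:cols]
    xs, ys = xs.ravel(), ys.ravel()
    coords = np.stack([xs, ys, np.ones(rows * cols)])
    # Step 2: dot product with the transformation matrix
    new = matrix @ coords
    new_x = np.rint(new[0]).astype(int)
    new_y = np.rint(new[1]).astype(int)
    # Step 3: copy each value to its new location if that lies inside the image
    inside = (new_x >= 0) & (new_x < cols) & (new_y >= 0) & (new_y < rows)
    out[new_y[inside], new_x[inside]] = image[ys[inside], xs[inside]]
    return out

# Shift a tiny 3x3 image one pixel to the right (tx = 1, ty = 0)
image = np.arange(1, 10).reshape(3, 3)
shift = np.array([[1, 0, 1],
                  [0, 1, 0],
                  [0, 0, 1]])
shifted = apply_affine_forward(image, shift)
print(shifted)
```

Forward mapping of this kind can leave unfilled pixels in the output when the transformation enlarges the image, which is one reason interpolation is needed.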

We will discuss the following affine transformations in this chapter. There are other transformations as well, but these are the most commonly used.

•Translation

•Rotation

•Scaling

Translation is the process of shifting the image along the various axes (x-, y- and z-axis). For a 2D image, we can perform translation along one or both axes independently. The transformation matrix for translation is defined as:

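$$
T = \begin{bmatrix} 1 & 0 & t_x \\ 0 & 1 & t_y \\ 0 & 0 & 1 \end{bmatrix} \tag{6.1}
$$

If we consider a pixel coordinate (x, y, 1) and perform the dot product with the translation matrix in Equation 6.1, we will obtain the pixel coordinate (x', y', 1) in the transformed image.

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 & t_x \\ 0 & 1 & t_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \tag{6.2}
$$

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} x + t_x \\ y + t_y \\ 1 \end{bmatrix} \tag{6.3}
$$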

Thus every pixel in the transformed image is offset by tx and ty along x and y respectively. The value of tx and ty may be positive or negative.
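As a quick numerical check of the translation described above, the sketch below applies a translation matrix to a few pixel coordinates with NumPy. The offsets (10, 4) match the values used later in this section; the three sample coordinates are arbitrary and chosen only for illustration.

```python
import numpy as np

tx, ty = 10, 4  # translation offsets along x and y
T = np.array([[1, 0, tx],
              [0, 1, ty],
              [0, 0, 1]])

# Three arbitrary pixel coordinates stored as homogeneous columns (x, y, 1)
pixels = np.array([[0, 3, 7],
                   [0, 5, 2],
                   [1, 1, 1]])

# One matrix product translates all coordinates at once
moved = T @ pixels
print(moved[:2].T)  # one (x, y) pair per row
```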

The following code implements the translation transformation. The image is read and converted into a numpy array. The transformation matrix is created as an instance of the AffineTransform class. The translation value of (10, 4) is supplied as input to the AffineTransform class. If you need to visualize the value of the transformation matrix similar to the one in Equation 6.1, you can print out the content of ‘transformation.params’. The transformation is supplied to the warp function, which transforms the input image img1 to the output image img2.

```python
import numpy as np
from PIL import Image
from skimage.transform import AffineTransform, warp
from skimage.util import img_as_ubyte

# Read the image as grayscale and convert it to a NumPy array
img = Image.open('../Figures/angiogram1.png').convert('L')
img1 = np.asarray(img)

# translate by 10 pixels in x and 4 pixels in y
transformation = AffineTransform(translation=(10, 4))
img2 = warp(img1, transformation)

# warp returns a float image in [0, 1]; convert to 8-bit before saving
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/translate_output.png')
im4.show()
```

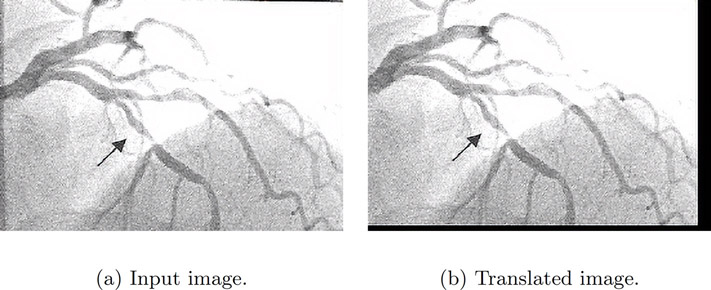

The image in Figure 6.1(a) is translated to produce the output image in Figure 6.1(b). Because the warp function treats the supplied transformation as an inverse map (from output coordinates to input coordinates), the transformed image is shifted by 10 pixels to the left and 4 pixels toward the top with reference to the input image.

The missing pixel values on the right and bottom are given a value of 0, hence the black pixels along the right and bottom edges. The warp function’s mode parameter can be used to modify this behavior. If the mode is “constant” (the default), the value of the cval parameter, which defaults to 0, is used for the missing pixels. If the mode is “edge”, “symmetric”, “reflect” or “wrap”, the missing pixels are filled from the image itself by replicating the edge pixels, mirroring the image, or wrapping it around. Readers are encouraged to consult the documentation for details. The choice of the padding value affects the quality of the image and, in some cases, further computation.
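The direction of the shift and the filling of missing pixels can be reproduced with plain NumPy. scikit-image documents the second argument of warp as an inverse map, so each output pixel is pulled from the input location that the transformation points to. The sketch below mimics that behavior for whole-pixel shifts only; `translate_inverse` and its `cval` argument are hypothetical names chosen to echo warp, not scikit-image code.

```python
import numpy as np

def translate_inverse(image, tx, ty, cval=0):
    """Pull-style translation: out[y, x] = image[y + ty, x + tx].

    Output pixels whose source falls outside the image receive cval.
    Only non-negative integer offsets are handled in this sketch.
    """
    rows, cols = image.shape
    out = np.full_like(image, cval)
    if ty < rows and tx < cols:
        out[:rows - ty, :cols - tx] = image[ty:, tx:]
    return out

image = np.arange(1, 13).reshape(3, 4)
moved = translate_inverse(image, tx=1, ty=1, cval=0)
print(moved)
```

The content moves up and to the left, and the last row and column are filled with the constant value, which matches the black border seen in the translated figure.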

Rotation is the process of changing the radial orientation of an image along the various axes with respect to a fixed point. The transformation matrix for a counter-clockwise rotation is defined as:

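$$
R = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \tag{6.4}
$$

If we consider a pixel coordinate (x, y, 1) and perform the dot product with the rotation matrix in Equation 6.4, where θ is the rotation angle, we will obtain the pixel coordinate (x', y', 1) in the rotated image.

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \tag{6.5}
$$

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} x\cos\theta - y\sin\theta \\ x\sin\theta + y\cos\theta \\ 1 \end{bmatrix} \tag{6.6}
$$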
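The rotation of a single coordinate can be checked with a few lines of NumPy. The sketch assumes the standard counter-clockwise rotation matrix in homogeneous coordinates, with the y-axis pointing up as in the usual mathematical convention; `rotation_matrix` is a hypothetical helper written for this example.

```python
import numpy as np

def rotation_matrix(theta):
    """3x3 homogeneous matrix for a counter-clockwise rotation by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

point = np.array([1.0, 0.0, 1.0])  # pixel coordinate (x, y, 1)
rotated = rotation_matrix(np.pi / 2) @ point
print(np.round(rotated, 6))

# A rotation about the origin does not change the distance from the origin
same_length = np.isclose(np.hypot(rotated[0], rotated[1]),
                         np.hypot(point[0], point[1]))
```

A quarter turn moves the point on the x-axis onto the y-axis, as expected for a counter-clockwise rotation.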

The following code implements the rotation transformation. The image is read and converted into a numpy array. The transformation matrix is created as an instance of the AffineTransform class. The rotation value of 0.1 radians is supplied as input to the AffineTransform class. If you need to visualize the value of the transformation matrix similar to the one in Equation 6.4, you can print out the content of ‘transformation.params’. The transformation is supplied to the warp function, which transforms the input image img1 to the output image img2 using the transformation.

```python
import numpy as np
from PIL import Image
from skimage.transform import AffineTransform, warp
from skimage.util import img_as_ubyte

# Read the image as grayscale and convert it to a NumPy array
img = Image.open('../Figures/angiogram1.png').convert('L')
img1 = np.asarray(img)

# rotation angle in radians
transformation = AffineTransform(rotation=0.1)
img2 = warp(img1, transformation)

# warp returns a float image in [0, 1]; convert to 8-bit before saving
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/rotate_output.png')
im4.show()
```

The image in Figure 6.2(a) is rotated to produce the output image in Figure 6.2(b). The transformed image is rotated by 0.1 radians with reference to the input image. The missing pixel values on the left and bottom are given a value of 0; this can be changed by supplying an appropriate value to the warp function’s mode parameter (as discussed in the previous section).

Scaling is the process of changing the distance (compression or elongation) between points along one or more axes. This change in distance causes the object in the image to appear larger or smaller than in the original input. The scaling factor may differ from one axis to another. The transformation matrix for scaling is defined as:

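$$
S = \begin{bmatrix} k_x & 0 & 0 \\ 0 & k_y & 0 \\ 0 & 0 & 1 \end{bmatrix} \tag{6.7}
$$

If the value of kx or ky is less than 1, then the objects in the image will appear smaller, and missing pixel values will be filled with 0 or according to the warp function’s mode parameter. If the value of kx or ky is greater than 1, then the objects in the image will appear larger. If the values of kx and ky are equal, the image is compressed or elongated by the same amount along both axes.

If we consider a pixel coordinate (x, y, 1) and perform the dot product with the scaling matrix in Equation 6.7, we will obtain the pixel coordinate (x', y', 1) in the scaled image.

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} k_x & 0 & 0 \\ 0 & k_y & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \tag{6.8}
$$

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} k_x x \\ k_y y \\ 1 \end{bmatrix} \tag{6.9}
$$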
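To see what the scaling matrix does to the extent of an image, the sketch below scales the four corner coordinates of an image. The 100 x 80 image size is a hypothetical value chosen for illustration, and the factors (0.5, 0.5) match those used later in this section.

```python
import numpy as np

kx, ky = 0.5, 0.5  # scaling factors along x and y
S = np.array([[kx, 0, 0],
              [0, ky, 0],
              [0, 0, 1]])

# Corners of a hypothetical 100 x 80 image as homogeneous columns (x, y, 1)
corners = np.array([[0, 100, 100, 0],
                    [0, 0, 80, 80],
                    [1, 1, 1, 1]])

scaled = S @ corners
print(scaled[:2].T)  # one (x, y) pair per row
```

With both factors equal to 0.5, the corners move to half their original distance from the origin, so the mapped region is half as wide and half as tall.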

The following code implements scaling transformation. The image is read and converted to a numpy array. The transformation matrix is created as an instance of the AffineTransform class. The scaling value of (0.5, 0.5) is supplied as input to the AffineTransform class corresponding to scaling along x and y axes. The transformation is supplied to the warp function, which transforms the input image img1 to the output image img2 using the transformation.

```python
import numpy as np
from PIL import Image
from skimage.transform import AffineTransform, warp
from skimage.util import img_as_ubyte

# Read the image as grayscale and convert it to a NumPy array
img = Image.open('../Figures/angiogram1.png').convert('L')
img1 = np.asarray(img)

# scale by 1/2 on both x and y
transformation = AffineTransform(scale=(0.5, 0.5))
img2 = warp(img1, transformation)

# warp returns a float image in [0, 1]; convert to 8-bit before saving
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/scale_output.png')
im4.show()
```

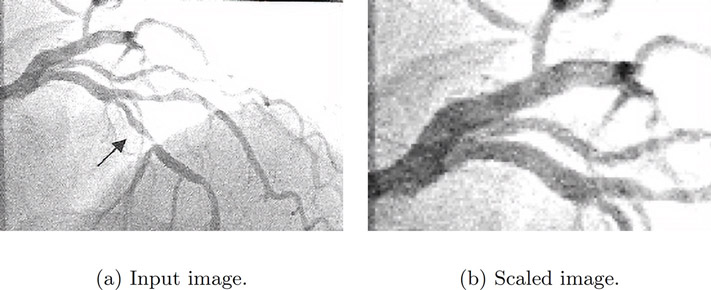

The image in Figure 6.3(a) is scaled to produce the output image in Figure 6.3(b). The image is scaled to 0.5 of its original size with reference to the input image along both axes.

To understand the use of interpolation, we will first perform a thought experiment. Consider an image of size 2x2. If this image is scaled to four times its size in all linear dimensions, the new image will be of size 8x8. The original image has only 4 pixel values while the new image needs 64 pixel values. The question is: How can we fill 64 pixels with values given that there are only 4 pixel values? The answer is interpolation.

The various interpolation schemes available are:

1.Nearest-neighbor (order = 0)

2.Bi-linear (order = 1)

3.Bi-quadratic (order = 2)

4.Bi-cubic (order = 3)

5.Bi-quartic (order = 4)

6.Bi-quintic (order = 5)

The order number specified in parentheses is the number used by scikit-image. We will learn about the first 4 interpolation schemes. In all these schemes, the aim is to fill the missing pixel value.

In nearest-neighbor interpolation, the missing pixel value is copied from the nearest pixel in the input image. For a scaling factor such as 2, 4 neighboring pixels in the output image are assigned the value of the same pixel in the input image, thus making the output image appear pixelated. This interpolation is not recommended even though it is the easiest to implement and also the fastest.
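The pixelation produced by nearest-neighbor interpolation is easy to see on a tiny array. The following is a minimal sketch for integer enlargement factors only; `upscale_nearest` is a hypothetical helper and not the scikit-image implementation.

```python
import numpy as np

def upscale_nearest(image, factor):
    """Enlarge a 2D image by an integer factor using nearest-neighbor lookup."""
    rows, cols = image.shape
    # Map each output index back to the input pixel it falls inside
    src_rows = np.arange(rows * factor) // factor
    src_cols = np.arange(cols * factor) // factor
    return image[src_rows[:, None], src_cols[None, :]]

small = np.array([[10, 20],
                  [30, 40]])
big = upscale_nearest(small, 2)
print(big)
```

Each input pixel becomes a 2x2 block of identical values in the output, which is the blocky appearance described above.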

In bi-linear interpolation, the missing pixel values are determined based on the 2x2 pixels around the missing pixels. This results in a smoother image with fewer artifacts compared to nearest-neighbor interpolation. Since nearest-neighbor interpolation does not produce a good-quality image compared to the other interpolations, it is recommended to use at least bi-linear interpolation. In scikit-image, bi-linear is the default interpolation.
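The weighting of the 2x2 neighborhood can be sketched as follows. This is an illustrative implementation for a single coordinate, assuming x indexes columns and y indexes rows; `bilinear` is a hypothetical helper and not how scikit-image computes the result internally.

```python
import numpy as np

def bilinear(image, x, y):
    """Interpolate a 2D image at the non-integer coordinate (x, y).

    x indexes columns and y indexes rows; the point must lie inside the image.
    """
    rows, cols = image.shape
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    # Clamp the far neighbors so points on the last row or column still work
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    # Blend along x on the two rows, then blend those results along y
    top = (1 - fx) * image[y0, x0] + fx * image[y0, x1]
    bottom = (1 - fx) * image[y1, x0] + fx * image[y1, x1]
    return (1 - fy) * top + fy * bottom

image = np.array([[10.0, 20.0],
                  [30.0, 40.0]])
center = bilinear(image, 0.5, 0.5)
print(center)
```

At the center of the 2x2 block all four pixels contribute equally, so the result is their average; at an integer coordinate the function returns the pixel value itself.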

In the bi-quadratic interpolation, the missing pixel values are determined based on 3x3 pixels around the missing pixels while in the bi-cubic interpolation, the missing pixel values are determined based on 4x4 pixels around the missing pixels. This results in a smoothed image with fewer artifacts compared to the bi-linear interpolation but at a higher computational cost.

The other two interpolations, bi-quartic and bi-quintic, result in smoother interpolation but at a higher computational cost.

The following code demonstrates the effect of various interpolations. The image is read and converted to a numpy array. The transformation matrix is created as an instance of the AffineTransform class. The scaling value of (0.3, 0.3) is supplied as input to the AffineTransform class corresponding to scaling along x and y axes.

The transformation is then supplied to the warp function, which transforms the input image img1 to the output image img2 using the transformation with various interpolation schemes specified using the value for the parameter order. The transformed image for each of the interpolations is then stored.

```python
import numpy as np
from PIL import Image
from skimage.transform import AffineTransform, warp
from skimage.util import img_as_ubyte

# Read the image as grayscale and convert it to a NumPy array
img = Image.open('../Figures/angiogram1.png').convert('L')
img1 = np.asarray(img)

transformation = AffineTransform(scale=(0.3, 0.3))

# nearest neighbor, order = 0
img2 = warp(img1, transformation, order=0)
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/interpolate_nn_output.png')
im4.show()

# bi-linear, order = 1 (default)
img2 = warp(img1, transformation, order=1)
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/interpolate_bilinear_output.png')
im4.show()

# bi-quadratic, order = 2
img2 = warp(img1, transformation, order=2)
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/interpolate_biquadratic_output.png')
im4.show()

# bi-cubic, order = 3
img2 = warp(img1, transformation, order=3)
im4 = Image.fromarray(img_as_ubyte(img2))
im4.save('../Figures/interpolate_bicubic_output.png')
im4.show()
```

The image in Figure 6.4(a) is scaled to produce all the other images. The image in Figure 6.4(b) used nearest-neighbor interpolation, the image in Figure 6.4(c) used bi-linear interpolation, the image in Figure 6.4(d) used bi-quadratic interpolation and the image in Figure 6.4(e) used bi-cubic interpolation. As can be seen in the images, nearest-neighbor interpolation performed poorly, as it exhibits pixelation compared to all the other methods. The quality of the image is similar in all the other cases, but the computational cost increases with the order of the interpolation.

•Affine transformation is a geometric transformation that preserves points, lines and planes.

•We discussed the commonly used affine transformations such as rotation, translation and scaling.

•We also discussed interpolation, its purpose and the various schemes. It is recommended not to use nearest-neighbor interpolation, as it results in pixelation artifacts.

1.Consider any of the images used in this chapter. Then, rotate or translate the image by various angles and distances, and for each case, study the histogram. Are the histograms of the input and output images different for different transformations?

2.What happens if you zoom (scale) into the image while keeping the image size the same? Try different zoom levels (2X, 3X, and 4X). Would the histograms of the input image and output image look different?