Investigating Windows Systems

The objective of this chapter is to help you understand the fundamental sources of evidence on a Windows system, and how you can apply them to answer the questions that typically arise during an incident response investigation. To be blunt, investigating Windows can be an intimidating challenge. The operating system is a complex beast on its own, and even more so when it’s part of an Active Directory domain, as is most often the case in corporate environments. The sheer volume of files, registry keys, log entries, and other artifacts generated by a system during normal day-to-day use can be overwhelming for a new analyst.

Fortunately, as the fields of forensics and incident response have matured over the past decade, so too have the tools available to investigate Windows systems. The net result is that it is easier than ever to collect and parse the sources of evidence that we’ll discuss throughout this chapter. However, we’ve often found that less experienced analysts can fall victim to relying upon a tool’s interpretation of evidence without understanding its true origin or behavior. Moreover, tools can have bugs and convey data in a way that is misleading or incomplete. This can significantly limit your ability to draw the correct conclusions about an intruder’s actions on a system—or worse yet, cause you to miss or misrepresent findings outright. No tool can be a complete substitute for a comprehensive understanding of how Windows behaves “under the hood.” We hope that this chapter can serve as a foundation for your analysis, as well as a launching-point for further research on more complex topics beyond our scope.

This chapter is divided into subsections that each focus on a specific source of evidence. Rather than attempting to cover every facet of the operating system, we have prioritized areas that are most beneficial for incident response investigations. These include the following:

• NTFS and file system analysis

• Windows prefetch

• Event logs

• Scheduled tasks

• The registry

• Other artifacts of interactive sessions

• Memory forensics

• Alternative persistence mechanisms

Within each section, we’ll provide information on how the evidence “works” (that is, its role in supporting operating system functionality), what you need to collect as part of your forensic-acquisition process, and how you can analyze or interpret the evidence (including suggested tools). Along the way, we’ll link the various sources of evidence to scenarios that are common to incident response investigations, and highlight how they are impacted by attacker behavior. And at the end of the chapter, we’ll include a review that summarizes all the Windows forensic artifacts you’ve learned about.

As of this writing, Windows 8 has yet to see widespread deployment in the majority of corporate environments. In fact, many organizations with which we have worked only recently upgraded their user endpoints to the latest edition of Windows 7. As a result, this chapter does not include analysis of artifacts or changes specific to Windows 8. Like all significant Windows upgrades, many of the forensic artifacts remain unchanged, some have been removed, and some have been added. Post-publication, we’ll be updating the website accompanying this book with additional information on Windows 8, so stay tuned.

NTFS AND FILE SYSTEM ANALYSIS

The Windows file system serves as the foundation for many of the sources of evidence we’ll discuss throughout this chapter. Today, the NT File System (NTFS) is the most common file system in use by all recent versions of Windows. NTFS is a proprietary file system developed by Microsoft in the early 1990s—it gained widespread usage during the Windows 2000 and XP era, eventually supplanting the legacy FAT file system that was common with the Microsoft Disk Operating System (MS-DOS). Because NTFS is now the most common file system we expect you will encounter on Windows systems, particularly for the disk volume on which the operating system resides, we will focus exclusively on NTFS in this chapter.

We’ll begin with a crash-course on the most important artifacts of NTFS: the Master File Table and associated metadata, INDX attributes, and change logs. We’ll also discuss several file system topics that are not exclusively related to NTFS, but remain essential knowledge for any investigator, including volume shadow copies and file system redirection.

The Master File Table

As with any file system, NTFS defines how disk space is allocated and utilized, how files are created and deleted, and how the metadata associated with these files is stored and updated. If you have any experience with “dead disk” forensics, you’ve probably performed routine tasks such as reviewing file timestamps and identifying deleted files that can be recovered—these are all possible due to NTFS artifacts that can be acquired and analyzed like any other source of evidence on a Windows system.

The Master File Table (MFT) is the primary source of metadata in NTFS. It contains or indirectly references everything about a file: its timestamps, size in bytes, attributes (such as permissions), parent directory, and contents. In other words, if you have a copy of the MFT from a Windows system, you have the authoritative catalog of all files that exist on that volume—as well as some that may have been recently deleted (more on that in a bit).

Each NTFS volume will contain its own MFT, stored within the volume root as a file named $MFT. If you’re analyzing a system with multiple partitions or drives, ensure you acquire the MFT from each volume—you never know what you might need. NTFS metadata files such as the $MFT are not accessible via Windows Explorer or other standard application programming interface (API) file-access methods. You’ll need raw disk access—such as that provided by any disk forensics software and its drivers—to interact with or acquire the contents of the $MFT file.

On a standard hard drive with 512-byte sectors, the MFT is structured as a series of 1,024-byte records, also known as “entries,” one for each file and directory on a volume. The first 16 MFT entries are reserved for essential NTFS artifacts, including the $MFT itself, $LogFile, and other special files we’ll cover later in this section. Each entry contains metadata and attributes that both describe a file or directory and indicate where its contents reside on the physical disk. The essential elements of an MFT entry include the following:

• Record type: Specifies whether a given entry represents a file or directory.

• Record #: An integer used to identify a given MFT entry. Record numbers grow sequentially as new entries are added.

• Parent record #: The record number of the parent directory. Each MFT entry only tracks the record number of its immediate parent, rather than its full path on disk. You can reconstruct a file or directory’s full path by following this sequence of record numbers until you reach the root entry for a volume.

• Active/Inactive flag: MFT entries for deleted files or directories are marked “Inactive.” NTFS will automatically reclaim and replace inactive entries with new active entries to keep the MFT from growing indefinitely.

• Attributes: Each MFT entry contains a number of “attributes” that hold metadata about a file, ranging from timestamps to the physical location of the file’s contents on disk. The three most important attributes we’ll detail in this section are $STANDARD_INFORMATION, $FILE_NAME, and $DATA.

Note that on advanced format (AF) drives with 4KB sectors, each MFT record will be 4,096 bytes instead. Additional information is available in the following blog articles:

GO GET IT ON THE WEB

Figure 12-1 provides a high-level depiction of an MFT record’s layout and key elements, including the FILE_RECORD_SEGMENT_HEADER (shown as “Record Header”), the $STANDARD_INFORMATION and $FILE_NAME attributes, and the $DATA attribute.
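To make the record header more concrete, here is a minimal Python sketch that decodes a few header fields from a single raw MFT record. It assumes the NTFS 3.1 layout used by Windows XP and later, in which the record number is stored at offset 44, and it assumes you have already extracted one raw record with a forensic tool. The `parse_record_header` and `make_record` names are hypothetical, and the sample record is synthetic.

```python
import struct

FLAG_IN_USE = 0x0001     # cleared when the file or directory is deleted
FLAG_DIRECTORY = 0x0002  # set when the entry describes a directory


def parse_record_header(record: bytes) -> dict:
    """Decode selected fields of an MFT record header."""
    if record[:4] != b"FILE":
        raise ValueError("not an MFT record (missing FILE signature)")
    # Offsets 16-31: sequence number, hard link count, first attribute
    # offset, flags, used size, allocated size.
    (sequence, links, first_attr,
     flags, used, allocated) = struct.unpack_from("<HHHHII", record, 16)
    (record_no,) = struct.unpack_from("<I", record, 44)
    return {
        "record_no": record_no,
        "sequence": sequence,
        "hard_links": links,
        "first_attribute_offset": first_attr,
        "active": bool(flags & FLAG_IN_USE),
        "directory": bool(flags & FLAG_DIRECTORY),
        "used_size": used,
        "allocated_size": allocated,
    }


def make_record(record_no: int, flags: int) -> bytes:
    """Build a synthetic 1,024-byte record for demonstration only."""
    buf = bytearray(1024)
    buf[0:4] = b"FILE"
    struct.pack_into("<HHHHII", buf, 16, 1, 1, 56, flags, 56, 1024)
    struct.pack_into("<I", buf, 44, record_no)
    return bytes(buf)


deleted_file = parse_record_header(make_record(9001, 0))
live_dir = parse_record_header(make_record(812, FLAG_IN_USE | FLAG_DIRECTORY))
print(deleted_file["record_no"], deleted_file["active"])
print(live_dir["record_no"], live_dir["active"], live_dir["directory"])
```

The sketch ignores the update sequence array (the per-sector "fixup" values), so it should be treated as a reading aid rather than a replacement for a tested parser.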

As we mentioned earlier, the MFT is among the most important sources of evidence that we use when conducting file system forensic analysis. In this section, we’ll focus on how to analyze the MFT to recover information about deleted files and directories, and to obtain timestamps—both of which are common tasks you’ll likely perform again and again during an investigation. We’ll also spend some time discussing two topics that can impact your ability to recover “hidden” data related to a file, given an MFT: resident records and alternate data streams.

Identifying Deleted Files

Just about any forensics practitioner knows that “deleting” a file or directory doesn’t actually mean it’s erased from disk and unrecoverable. But specific to NTFS (and ignoring factors such as the Recycle Bin), why is that the case? We already mentioned that each MFT entry contains a flag that can indicate whether a given record is “Active” or “Inactive.” When you delete a file or directory, NTFS sets the flag in the corresponding MFT entry to “Inactive,” meaning that the MFT entry is now available for reuse. After all, NTFS wouldn’t be very efficient if MFT entries for deleted files remained forever, because the MFT would continuously grow in size. But note that nothing else about the file, or the MFT entry, changes. Its actual contents still remain on disk, and are still “pointed to” by the inactive MFT entry (specifically, the $DATA attribute, which we’ll discuss in more detail shortly). As long as those clusters are not overwritten with other data, they can still be recovered. Similarly, as long as the deleted file’s inactive MFT entry is not reused, its metadata can be recovered.

How long will an inactive MFT entry remain present? It depends. NTFS will always try to replace an existing available entry before extending the size of the MFT. As a result, we often find that inactive entries only last for seconds or minutes on the primary operating system volume. On a secondary drive with less frequent activity, you may find that there is less “churn,” so inactive entries remain present for a longer period of time.

Analyzing Timestamps

File timestamps are among the most important metadata stored in the MFT. You’ll often hear forensic analysts refer to a file’s “MACE” times, which is short for the four types of NTFS timestamps: Modified, Accessed, Created, and Entry Modified. We’ll detail what each of these means shortly.

An MFT entry for a given file will have at least two attributes containing MACE timestamps. One set is contained within the “Standard Information” attribute (also known as $SIA, STANDARD_INFORMATION, or $SI). This attribute also contains various identifier codes, flags, and sequence numbers used by the operating system. The other set of MACE timestamps is contained within FileName attribute(s) (also known as FNA, FILE_NAME, or $FN). The $FN attribute stores the file name itself, its size, and its parent directory record number.

If you’ve ever looked at a file’s properties in Windows Explorer or in the default user interface for popular forensics tools such as EnCase, you’ve seen Standard Information timestamps. They’re accessible via the Windows API and thereby exposed to user-mode applications throughout the operating system. In Figure 12-2, note that the properties for cmd.exe illustrate its $SI Created, Accessed, and Modified times. Windows Explorer does not show the Entry Modified timestamp, but nearly any disk forensics tool, including The Sleuth Kit, EnCase, and FTK, will do so.

The definitions of the Standard Information ($SI) MACE timestamps are fairly intuitive (except perhaps for Entry Modified):

• Modified: When the contents of a file were last changed

• Accessed: When the contents of a file were last read

• Created: When the file was “born”

• Entry Modified: When the MFT entry associated with a file, rather than the contents of the file, was changed
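On disk, each of these timestamps is stored as a 64-bit Windows FILETIME value: the number of 100-nanosecond intervals since January 1, 1601 (UTC). The following Python sketch converts such values and decodes the four timestamps at the start of a $STANDARD_INFORMATION attribute's content, which are stored in the order Created, Modified, Entry Modified, Accessed. It assumes you already have the attribute content as bytes; the function names and the sample values are hypothetical.

```python
import struct
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(value: int) -> datetime:
    """Convert a FILETIME (100 ns ticks since 1601-01-01 UTC) to a datetime.

    Python datetimes hold microseconds, so the last decimal digit of the
    FILETIME value is dropped.
    """
    return FILETIME_EPOCH + timedelta(microseconds=value // 10)


def parse_si_timestamps(content: bytes) -> dict:
    """Decode the four timestamps that open $STANDARD_INFORMATION content."""
    created, modified, entry_modified, accessed = struct.unpack_from(
        "<4Q", content, 0)
    return {
        "modified": filetime_to_datetime(modified),
        "accessed": filetime_to_datetime(accessed),
        "created": filetime_to_datetime(created),
        "entry_modified": filetime_to_datetime(entry_modified),
    }


# Synthetic content for illustration: four values one second apart,
# starting at the FILETIME value that corresponds to 1970-01-01 00:00:00 UTC.
UNIX_EPOCH_AS_FILETIME = 116444736000000000
ONE_SECOND = 10_000_000
sample = struct.pack(
    "<4Q",
    UNIX_EPOCH_AS_FILETIME,                   # Created
    UNIX_EPOCH_AS_FILETIME + ONE_SECOND,      # Modified
    UNIX_EPOCH_AS_FILETIME + 2 * ONE_SECOND,  # Entry Modified
    UNIX_EPOCH_AS_FILETIME + 3 * ONE_SECOND,  # Accessed
)
for label, value in parse_si_timestamps(sample).items():
    print(label, value.isoformat())
```

Working in UTC throughout, as this sketch does, avoids a common source of confusion when timestamps from several systems are merged into one timeline.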

In order to improve performance, Windows Vista, 7, Server 2008, and later versions of Windows no longer update the Accessed timestamp by default. This behavior is controlled by the registry value NtfsDisableLastAccessUpdate under the key HKLM\SYSTEM\CurrentControlSet\Control\FileSystem. Even if this value is not set, NTFS may delay updates to the Accessed timestamp by up to an hour.

So how do the $FN MACE timestamps differ from $SI? They refer to the MFT entry for the file name itself. For instance, Filename Created refers to the date and time on which the MFT entry for a given file was created. Filename Modified refers to the date and time on which a file name attribute in the MFT last changed, and so on.

If, like this author, you’re old enough to remember using MS-DOS, you might recall that file names were once restricted to eight characters (plus a three-character extension). NTFS actually maintains multiple sets of file name attributes, including both the full, case-sensitive long file name and the MS-DOS 8.3 file name. That means you can recover another four MACE timestamps for the MS-DOS file name, although these will typically be identical to the “long” file name timestamp attribute.

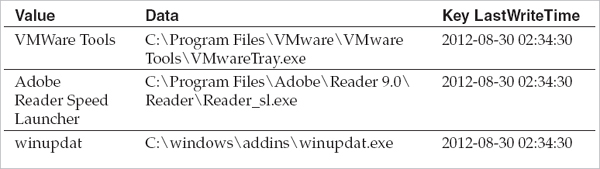

Why care about all of these timestamps—isn’t one set of MACE attributes enough? Recall that we mentioned that the $SI timestamps are available to user applications through the Windows API. That means that programs can both read and change any of these timestamps to a value that is intentionally not accurate—a process known as “time-stomping.” Utilities such as setmace can easily change any file’s $SI timestamps to an arbitrary value or to match those of another file. Malware droppers and installers will often automate this process as a counter-forensics technique, frequently cloning timestamps from legitimate system files in %SYSTEMROOT% to blend in and hinder timeline analysis.

Fortunately, it is a bit more difficult to manipulate the $FN MACE timestamps. Applications cannot read these values without obtaining access to the MFT itself, which requires raw disk access (typically through a device driver). Similarly, there are no supported methods for directly setting or changing the $FN timestamps—but there is an increasing number of indirect mechanisms to achieve this. For example, you can use setmace and other utilities to set both $SI and $FN to arbitrary values by performing the following steps:

1. Modify the Standard Information timestamps to the desired value.

2. Move the file to a new directory within the same volume.

3. Modify the Standard Information timestamps of the file in its new location.

4. Move the file back to its original path.

5. Modify the Standard Information timestamps once again.

This operation will result in a matching set of modified $SI and $FN attributes. Why does this work? When moving the file to a new destination on the same volume, Windows uses its $SI timestamps to set the $FN timestamps for the new MFT entry. Restoring the file to its original location and remodifying ensures that all eight MACE timestamps match the desired values. This trick is often referred to as “double-time-stomping.”

The setmace utility has yet another mechanism for manipulating the $FN attributes: writing to physical disk. This requires a kernel mode driver in Windows Vista and later versions, and as is the case with anything that directly tampers with NTFS metadata, it runs the risk of corrupting an MFT entry. In practice, most attackers rely on the previously cited double-stomping trick because it is simple, reliable, and does not require raw disk access.

Although it may be discouraging to learn about all the ways an attacker can tamper with NTFS metadata—fear not! There are several ways to defeat the most common counter-forensic techniques. We recommend incorporating $FN timestamps whenever conducting timeline analysis of NTFS metadata. If an attacker has simply tampered with a file’s $SI timestamps, its $FN attributes may reflect its true MACE times and thereby “fit” within a time frame of interest. Of course, reviewing eight timestamps per file ($SI and $FN) can make for a very cluttered timeline—so you may find it advantageous to just begin with Filename Created and Standard Information Created. As needed, you can then expand your “window” of analysis to include the remaining MACE timestamps. You can also rely on the other sources of time evidence we’ll discuss in this chapter, such as the registry, prefetch files, and event log entries, to help you determine time frames of interest that can help focus your analysis efforts.

Figure 12-3 depicts an example of time-stomping from a real-world case we investigated. An attacker’s backdoor installer modified the $SI timestamps of a malicious DLL (rasmon.dll) to match those of legitimate system files included with a standard installation of Windows. However, the $FN timestamps remained accurate (as confirmed through analysis of other corroborating sources of time evidence on the system).

Is a file intrinsically suspicious if its $FN timestamps don’t match its $SI timestamps? Unfortunately not—there are a number of cases where this can legitimately occur. For example, files installed from physical media (such as a CD), extracted from archives, or copied over network shares may retain some or all of their $SI timestamps from their source. In such cases, the $FN-created timestamps usually represent their true creation date and time on a given system.
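As a rough triage aid, the comparison between $SI and $FN timestamps can be sketched in Python. The two checks below are heuristics that we are assuming for illustration: a $SI Created time that precedes the $FN Created time, and a $SI Created time with no sub-second component (some tools write whole-second values). As noted above, both conditions also occur legitimately, so the output is a list of leads to review, not a verdict. The `FileTimes` type, the `stomp_indicators` function, and the sample timestamps are hypothetical.

```python
from datetime import datetime
from typing import List, NamedTuple


class FileTimes(NamedTuple):
    """Creation timestamps for one file, as parsed by some other tool."""
    path: str
    si_created: datetime
    fn_created: datetime


def stomp_indicators(entry: FileTimes) -> List[str]:
    """Return the reasons, if any, that an entry deserves a closer look."""
    reasons = []
    if entry.si_created < entry.fn_created:
        reasons.append("$SI created precedes $FN created")
    if entry.si_created.microsecond == 0:
        reasons.append("$SI created has no sub-second precision")
    return reasons


# Invented timestamps for illustration; they are not taken from a real case.
suspect = FileTimes(
    path="rasmon.dll",
    si_created=datetime(2008, 4, 14, 12, 0, 0),
    fn_created=datetime(2012, 3, 2, 9, 41, 17, 250000),
)
ordinary = FileTimes(
    path="notes.txt",
    si_created=datetime(2012, 3, 2, 9, 41, 17, 250000),
    fn_created=datetime(2012, 3, 2, 9, 41, 17, 250000),
)
for item in (suspect, ordinary):
    print(item.path, stomp_indicators(item))
```

Returning the reasons rather than a simple yes/no makes it easier to sort a large file listing by the number of indicators and to explain each lead in a report.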

It definitely can be challenging to keep track of the “rules” that Windows follows when updating these attributes during various operations. SANS maintains a useful Digital Forensics cheat sheet that summarizes how common file system tasks affect each of the $SI and $FN timestamps. This cheat sheet can be found at blogs.sans.org/computer-forensics/files/2012/06/SANS-Digital-Forensics-and-Incident-Response-Poster-2012.pdf.

Resident Data

We’ve spent a lot of time talking about the $SI and $FN attributes within a file’s MFT entry, but one of the most important attributes is named $DATA. This attribute typically provides a listing of the clusters on disk wherein a file’s contents reside. The clusters that contain the data for a given file may not all be sequential, or “contiguous.” When they are not, the file is said to be “fragmented,” and the $DATA attribute will list the “data runs” that, assembled together, contain the data for the file. You may wonder why NTFS would use this method for very small files, since it would be inefficient. In fact, NTFS stores very small files in a different way, which is referred to as “Resident Data.”

Recall that a typical MFT entry has a fixed length of 1,024 bytes. That’s a decent amount of headroom for most files—even given the plethora of attributes it tracks. So for efficiency’s sake, NTFS will actually store the complete contents of files under approximately 700–800 bytes within the $DATA attribute itself. If this happens, a special flag named the Resident flag is set in the MFT entry to indicate this condition. In other words, by acquiring the $MFT and locating the entry for a given file that falls under this size constraint, you can recover its contents directly from the MFT file itself. This will remain true for files that have been deleted but still retain an inactive MFT entry.

If a file is too large, the $DATA attribute will only contain its cluster runs—there’s no such thing as a “partially” resident file. However, an MFT entry may contain slack space that includes previously resident data. If a file is initially small enough to be resident, and subsequently grows to exceed the available space, the MFT entry will be flagged non-resident and the $DATA attribute will update to point to the file’s clusters on disk. However, leftover space in the attribute may still contain the previously resident data from older versions of the file. Analysts often encounter artifacts of resident data during string searches across a forensic disk image. Therefore, if you ever get a search hit for file contents within the $MFT, now you know how that might have occurred.
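The following Python sketch shows one way to pull resident contents out of a single raw MFT record. It walks the record's attributes, finds the unnamed $DATA attribute (type 0x80), and returns its content if the attribute header marks it as resident. The sketch assumes the record's update sequence ("fixup") values have already been applied, which matters when resident content spans a 512-byte sector boundary. The `resident_data` and `make_record` names are hypothetical, and the sample record is synthetic.

```python
import struct
from typing import Optional

ATTR_DATA = 0x80        # attribute type code for $DATA
ATTR_END = 0xFFFFFFFF   # marker that terminates the attribute list


def resident_data(record: bytes) -> Optional[bytes]:
    """Return the unnamed $DATA content if it is resident, else None."""
    (offset,) = struct.unpack_from("<H", record, 20)  # first attribute offset
    while offset + 24 <= len(record):
        attr_type, attr_len = struct.unpack_from("<II", record, offset)
        if attr_type == ATTR_END or attr_len == 0:
            break
        non_resident, name_len = struct.unpack_from("<BB", record, offset + 8)
        if attr_type == ATTR_DATA and name_len == 0:
            if non_resident:
                return None  # content lives in clusters, not in the MFT
            size, content_off = struct.unpack_from("<IH", record, offset + 16)
            start = offset + content_off
            return record[start:start + size]
        offset += attr_len
    return None


def make_record(payload: bytes) -> bytes:
    """Build a synthetic record holding one resident $DATA attribute."""
    buf = bytearray(1024)
    buf[0:4] = b"FILE"
    struct.pack_into("<H", buf, 20, 56)
    attr_len = (24 + len(payload) + 7) & ~7  # attributes are 8-byte aligned
    # type, length, non-resident flag, name length, name offset, flags, id
    struct.pack_into("<IIBBHHH", buf, 56, ATTR_DATA, attr_len, 0, 0, 24, 0, 0)
    struct.pack_into("<IH", buf, 56 + 16, len(payload), 24)
    buf[80:80 + len(payload)] = payload
    struct.pack_into("<I", buf, 56 + attr_len, ATTR_END)
    return bytes(buf)


print(resident_data(make_record(b"Hello World")))
```

Because the function only reads the bytes that the attribute header declares, it will not return older resident data left behind in slack; recovering that requires examining the unused remainder of the record separately.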

Alternate Data Streams

Earlier in this section, we explained that each MFT entry contains a $DATA attribute that holds either the contents of a resident file or the cluster runs of a non-resident file. NTFS also allows you to add additional named $DATA attributes, also known as alternate data streams (ADS), to a file’s MFT entry. Each of these attributes can point to a unique set of cluster runs, and thereby behave as files that are “attached” to a given primary file. The MFT entry will not contain separate sets of Standard Information or Filename attributes for an ADS, which means the ADS will not have its own distinct set of MACE timestamps.

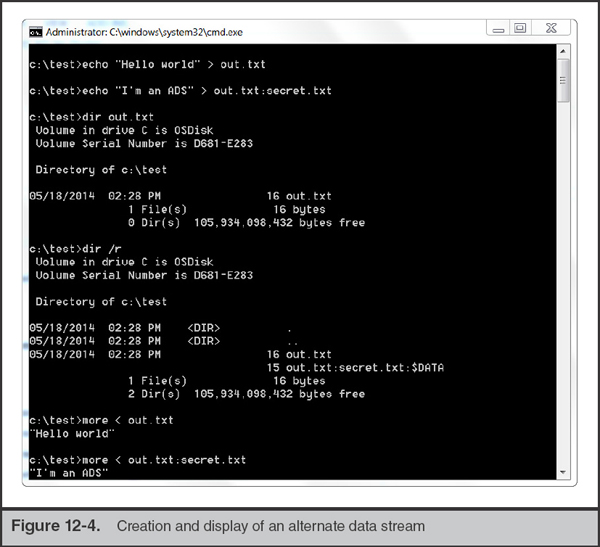

The standard naming convention for referencing an ADS is filename:streamname—most forensic utilities will follow this convention when displaying alternate data streams in a file listing. To better understand how such streams can be created, refer to the command-line sequence shown in Figure 12-4. In this example, we created a file named out.txt, containing the string “Hello World”, and then created an alternate data stream named out.txt:secret.txt, containing the string “I’m an ADS”. We used the dir command to list the file—both with and without the /r switch. Finally, we used the “more” command to display the contents of both out.txt and its alternate data stream.

Note that the contents of the “out.txt” file were unaffected by the addition of the alternate data stream. The size remained the same, and if we calculated an MD5 checksum, it too would have remained identical before and after the ADS was created. Streams effectively behave as separate files distinct from their “host” file. Also note that the standard dir command did not list out.txt:secret.txt:$DATA—we had to use the switch “dir /r” to display the stream. We also used the “more” command (rather than “type”) to display the contents of the ADS (the “type” command does not have the ability to read from an ADS).

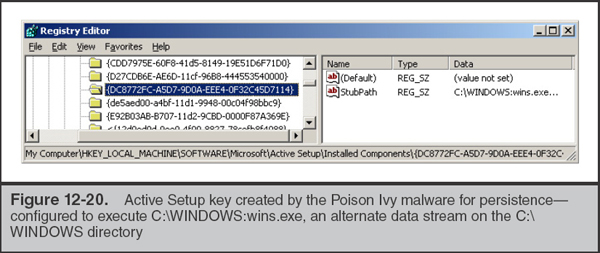

Hopefully you’re already thinking of ways that bad guys might abuse alternate data streams. In older versions of Windows, attackers would often hide malicious executable files in streams of legitimate files or folders (this is actually one of the default options available when building an instance of the Poison Ivy backdoor, for example). To make matters worse, initially there were no built-in mechanisms for listing alternate data streams via the command line or Explorer. The SysInternals “streams” and Frank Heyne’s LADS utilities are two free tools that provided the ability to list alternate data streams, until this functionality was included in later versions of Windows.

Beginning with Windows Vista, Microsoft implemented features and controls to limit malicious usage of alternate data streams. It’s no longer possible to directly run executable files out of an ADS, and the /r switch to the native dir command can list all available alternate data streams. However, be aware that it is still possible to store data in an ADS: this could include anything from RAR files to batch scripts or other interpreted code that’s loaded by a separate executable file.

If you’re using one of the popular commercial drive forensics tools, such as Guidance EnCase, X-Ways Forensics, or AccessData FTK, alternate data streams will be listed in their own rows as if they were separate files. EnCase 6 and 7, for example, use the display convention hostfile.ext·ADS rather than hostfile.ext:ADS. However, many free tools (such as FTK Imager) do not display ADS at all. Be sure to determine whether your toolkit of choice has this capability before examining a disk.

Windows continues to use alternate data streams for several legitimate purposes. For example, Internet Explorer and other web browsers append a stream named Zone.Identifier to downloaded files. Explorer refers to this stream to determine the origin of a file and enforce configured security controls if a user attempts to execute it. MSDN article “Known Alternate Stream Names” provides information on other valid ADS: msdn.microsoft.com/en-us/library/dn365326.aspx.
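The Zone.Identifier stream is a small INI-style text file with a [ZoneTransfer] section and a ZoneId value, so it is easy to interpret once exported. The Python sketch below parses such text and maps the ZoneId to the standard Windows security zone names. It assumes the stream contents have already been extracted (for example, by a forensic tool) and decoded to a string; the `parse_zone_identifier` function is a hypothetical helper, and the sample text is hand-written.

```python
import configparser

# Standard Windows URL security zones.
ZONES = {
    0: "Local machine",
    1: "Local intranet",
    2: "Trusted sites",
    3: "Internet",
    4: "Restricted sites",
}


def parse_zone_identifier(text: str) -> dict:
    """Interpret the contents of a Zone.Identifier alternate data stream."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(text.replace("\r\n", "\n"))
    zone_id = parser.getint("ZoneTransfer", "ZoneId")
    return {"zone_id": zone_id, "zone": ZONES.get(zone_id, "Unknown")}


# Hand-written sample resembling the stream on a downloaded file.
sample = "[ZoneTransfer]\r\nZoneId=3\r\n"
print(parse_zone_identifier(sample))
```

A ZoneId of 3 on an executable in an unusual location can help establish that the file arrived via a browser download, although the absence of the stream proves nothing, since it is easily removed.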

MFT Analysis Tools

Most, if not all, commercial forensic toolkits, including EnCase and FTK, are capable of extracting and parsing the contents of the $MFT and other NTFS structures. Fortunately, plenty of free and open source software is also available to help you analyze such evidence, and we’ve provided a few of our favorites:

• The Sleuth Kit www.sleuthkit.org/sleuthkit Comprehensive open source toolkit for analyzing disk images and file system metadata.

• mft2csv code.google.com/p/mft2csv Suite of tools for converting the MFT to a CSV file and dumping single MFT entries to console for a specified file/path.

• analyzeMFT github.com/dkovar/analyzeMFT Another MFT parsing utility, capable of converting entries to CSV and Sleuthkit body file formats. If mft2csv fails to convert a given MFT successfully, try using this tool (and vice versa).

• plaso plaso.kiddaland.net A powerful timeline analysis engine that can incorporate evidence from Sleuth Kit and numerous other sources of metadata. This tool was designed to supersede the popular log2timeline utility.

INDX Attributes

An efficient file system should be able to quickly look up the contents of a directory to determine whether a file is present. However, recall that MFT entries only record the record number of their immediate parent directory. This makes enumerating the contents of a given directory inefficient—at minimum, you’d need to identify the directory’s record number, and then review each MFT entry to identify anything with a matching parent record number.

In order to optimize this process, NTFS provides a special attribute, $INDX (aka $I30), that is only present for directories. The INDX attribute maintains a B+ tree whose nodes track the contents of a given directory; by walking this tree, one can quickly enumerate the contents of a directory or find a file of interest. An INDX record may be resident within the MFT entry for a directory. This is referred to as the INDEX_ROOT attribute and represents the root node of the B+ tree. Non-resident INDX records are referred to as INDEX_ALLOCATION and contain the subnodes that effectively describe the contents of a directory.

If you’ve ever done a string search for a file name across a forensic image, and ended up with “hits” within a directory, an $I30 file, or data in disk slack prefaced with the heading “INDX,” you’ve encountered INDX attributes at work.

So what’s the point of analyzing these obscure INDX attributes? Can’t we just identify the contents of any given directory by examining the MFT? Sure, but slack space within an INDX attribute may yield evidence of deleted files that are no longer tracked in the MFT. Specifically, the file system allocates INDX allocation attributes in fixed 4,096-byte chunks. As files are added to a directory, the allocation attribute must track more items and may grow to 8,192 bytes, and then 12,288 bytes, and so on. What happens when files are removed? The B+ tree subnodes are rebalanced, but remnants of the old files may remain in the resulting slack space—the allocation attribute does not dynamically shrink.

What’s particularly nice about INDX attribute entries (including those in slack) is that they contain the same metadata as a file name attribute in the MFT, including:

• File name

• Parent directory MFT record number

• All four MACE timestamps

• Physical and logical file size
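Because these fields mirror the file name attribute, the same decoding logic applies whether the structure comes from an MFT entry or from an INDX entry. The Python sketch below decodes one $FILE_NAME structure from a byte buffer. It assumes the caller already knows the offset at which the structure begins (locating entries inside an INDX record, especially in slack, is left to tools such as INDXParse). The `parse_file_name` and `make_file_name` names are hypothetical, and the sample data is synthetic.

```python
import struct
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def _filetime(value: int) -> datetime:
    """Convert 100 ns ticks since 1601-01-01 UTC to a datetime."""
    return FILETIME_EPOCH + timedelta(microseconds=value // 10)


def parse_file_name(buf: bytes, offset: int = 0) -> dict:
    """Decode a $FILE_NAME structure that starts at `offset` in `buf`."""
    (parent_ref, created, modified, entry_modified, accessed,
     physical_size, logical_size) = struct.unpack_from("<7Q", buf, offset)
    name_len, _namespace = struct.unpack_from("<BB", buf, offset + 0x40)
    name_start = offset + 0x42
    name = buf[name_start:name_start + name_len * 2].decode("utf-16-le")
    return {
        "name": name,
        # A file reference is a 48-bit record number plus a 16-bit sequence.
        "parent_record": parent_ref & 0xFFFFFFFFFFFF,
        "parent_sequence": parent_ref >> 48,
        "modified": _filetime(modified),
        "accessed": _filetime(accessed),
        "created": _filetime(created),
        "entry_modified": _filetime(entry_modified),
        "physical_size": physical_size,
        "logical_size": logical_size,
    }


def make_file_name(name: str, parent_record: int, when: int) -> bytes:
    """Build a synthetic $FILE_NAME structure for demonstration only."""
    header = struct.pack("<7QIIBB", (3 << 48) | parent_record,
                         when, when, when, when, 4096, 1234,
                         0, 0, len(name), 1)
    return header + name.encode("utf-16-le")


sample = make_file_name("stage.rar", 812, 116444736000000000)
entry = parse_file_name(sample)
print(entry["name"], entry["parent_record"], entry["logical_size"])
```

When carving entries from slack, it is worth validating the decoded values (plausible timestamps, a sensible name length) before trusting them, since partially overwritten entries will decode to garbage.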

The longevity of recoverable entries from INDX slack space will depend on the volume and frequency of file “churn” within a given directory. We’ve had tremendous success identifying remnants of everything from RAR archives staged for data theft to malware installers and other temporary files by parsing INDX records—but that’s typically true when the attacker’s working directory isn’t frequently used. If an attacker stages files within a user’s %TEMP% directory or paths such as %SYSTEMROOT%, you shouldn’t expect to recover nearly as much data from INDX slack.

For further reading on this admittedly complex topic, Mandiant’s Willi Ballenthin and Jeff Hamm have an excellent four-part blog post on acquiring and analyzing INDX records:

GO GET IT ON THE WEB

Willi also wrote a useful Python script, called INDXParse, that can parse NTFS INDX records and output CSV or bodyfile formats.

Figure 12-5 displays an example of the output generated by INDXParse.

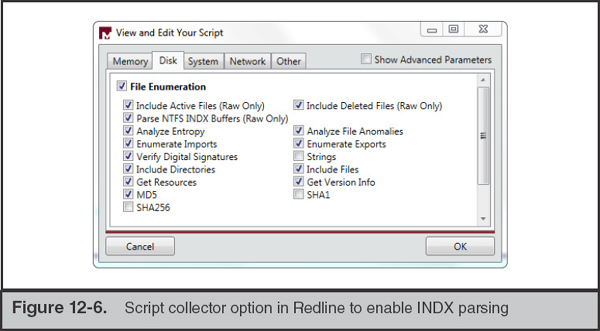

Redline, Mandiant’s free live-response analysis tool, can also be configured to collect and parse INDX records as part of its File Enumeration collector options (see Figure 12-6).

Change Logs

NTFS is a recoverable and journaled file system, so it maintains several logs designed to track changes to directories and files. The data within these logs can be used to reverse file system operations in the event of a failure. For a forensic investigator, they can also serve as a useful source of evidence for file system activity, particularly in cases where an attacker has attempted to delete files and disguise their activity. In this section, we provide a brief overview of the most important journaling logs: $LogFile and $UsnJrnl.

The NTFS log file, named $LogFile, tracks all transactions that change the structure of a volume. That includes file or directory creation/copy/deletes, changes to file metadata, and changes to INDX records. In fact, transaction entries within the $LogFile contain the same attributes that are present in the MFT. The $LogFile is located within the root of each NTFS volume and is typically 64MB by default. The log is circular and can roll over on a frequent basis, especially on system volumes. Like the MFT and other NTFS artifacts, you’ll need to use a forensic utility that provides raw disk access to copy the $LogFile from a running system.

The Update Sequence Number (USN) journal, named $UsnJrnl, provides a higher-level summary of changes to a volume. A given entry includes the type of change event that occurred, its corresponding timestamp, the file name, its attributes, and the MFT entry identifiers for the file and its parent directory. The journal is located in path $Extend\$UsnJrnl. You may not find the USN journal on every volume because it’s not strictly required by NTFS. However, a number of common Windows services, such as the Indexing/Search Service and File Replication Service, require and enable it. Like the $LogFile, $UsnJrnl is a circular log and may roll over frequently on a system volume. In practice, $UsnJrnl often holds a longer history of changes to the file system than $LogFile because its entries track much less data. Additional details on the USN journal and Microsoft’s fsutil utility can be found at the following links:

GO GET IT ON THE WEB

The following free tools can help you parse and decode the $LogFile and $UsnJrnl artifacts:

GO GET IT ON THE WEB

LogFileParser code.google.com/p/mft2csv/wiki/LogFileParser

TZWorks Journal Parser tzworks.net/prototype_page.php?proto_id=5

parser-usnjrnl code.google.com/p/parser-usnjrnl
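To illustrate what these parsers decode, the following Python sketch reads a single version 2 USN journal record (the USN_RECORD_V2 structure) from a byte buffer and translates a handful of its reason flags. It assumes the buffer offset already points at the start of a record; finding records within the sparse journal data is not shown. Only a subset of reason flags is listed, and the `parse_usn_v2` and `make_usn_v2` names are hypothetical. The sample record is synthetic.

```python
import struct
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)

# A subset of the documented USN reason flags.
REASONS = {
    0x00000001: "DATA_OVERWRITE",
    0x00000002: "DATA_EXTEND",
    0x00000004: "DATA_TRUNCATION",
    0x00000100: "FILE_CREATE",
    0x00000200: "FILE_DELETE",
    0x00001000: "RENAME_OLD_NAME",
    0x00002000: "RENAME_NEW_NAME",
    0x80000000: "CLOSE",
}
FIXED_FORMAT = "<IHHQQqQIIIIHH"  # 60-byte fixed portion of USN_RECORD_V2


def parse_usn_v2(buf: bytes, offset: int = 0) -> dict:
    """Decode one USN_RECORD_V2 structure starting at `offset`."""
    (length, major, _minor, file_ref, parent_ref, usn, timestamp, reason,
     _source, _security, attributes, name_len, name_off) = struct.unpack_from(
        FIXED_FORMAT, buf, offset)
    if major != 2:
        raise ValueError("unsupported USN record version: %d" % major)
    start = offset + name_off
    name = buf[start:start + name_len].decode("utf-16-le")  # length in bytes
    return {
        "record_length": length,
        "usn": usn,
        "name": name,
        "record_no": file_ref & 0xFFFFFFFFFFFF,
        "parent_record_no": parent_ref & 0xFFFFFFFFFFFF,
        "timestamp": FILETIME_EPOCH + timedelta(microseconds=timestamp // 10),
        "reasons": [label for bit, label in REASONS.items() if reason & bit],
        "file_attributes": attributes,
    }


def make_usn_v2(name: str, record_no: int, parent: int, reason: int) -> bytes:
    """Build a synthetic record for demonstration only."""
    raw_name = name.encode("utf-16-le")
    length = (60 + len(raw_name) + 7) & ~7  # records are 8-byte aligned
    fixed = struct.pack(FIXED_FORMAT, length, 2, 0, record_no, parent, 4096,
                        116444736000000000, reason, 0, 0, 0x20,
                        len(raw_name), 60)
    return (fixed + raw_name).ljust(length, b"\x00")


sample = make_usn_v2("a.rar", 9001, 812, 0x00000200 | 0x80000000)
event = parse_usn_v2(sample)
print(event["name"], event["reasons"], event["timestamp"].isoformat())
```

A delete-and-close event for an archive in a staging directory, as in this synthetic example, is the kind of entry that can confirm an attacker cleaned up after exfiltration even when the MFT entry has already been reused.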

Volume Shadow Copies

The Volume Shadow Copy (VSC) service provides a mechanism for maintaining point-in-time copies, also known as “snapshots” or “restore points,” of files on an entire volume. Windows Server 2003 and Windows XP introduced VSCs in a limited capacity to support the NT Backup Service. Windows Vista, Windows 7, and Server 2008 provide a more comprehensive VSC implementation in place of Windows XP’s legacy System Restore Point feature. Among many improvements, it supports the useful “Restore previous versions” feature in Explorer, which allows users to roll back a file or directory to its state from a previous snapshot.

Some of the terminology can get confusing here. A “restore point” in the context of a VSC is a snapshot of a point-in-time state of a system. This is not the same as the legacy “System Restore Point” solution available in Windows XP. The XP implementation did not rely upon VSC, but instead used a process by which certain registry keys, and files with certain extensions, would automatically be copied to a protected path on disk. Due to the rapidly diminishing number of XP systems “in the wild,” this chapter will solely focus on the VSC implementation in Windows Vista, 7, and Server 2008.

Examining shadow copies can be a useful way to recover files, registry keys, and other data that an attacker may have deleted or tampered with on a compromised system. Rather than get into the details of how shadow copies work, we’ll focus on a few basic characteristics that you should know before analyzing this evidence. It’s important to understand that VSC tracks changes to blocks of data on disk rather than logical files in their entirety. A mounted shadow copy will appear to be a mirror of the complete contents of a monitored volume, but the service only stores blocks that have changed between snapshot intervals. By default, Windows limits the maximum disk space consumed by VSC snapshots to 5 percent of the volume size on Windows 7 and 15 percent on Windows Vista.

Aside from this space limitation, the availability of data in shadow copies is also limited by the frequency of snapshots. Service pack installation, Windows updates, and driver installation can automatically trigger the creation of a snapshot, and users/applications can also manually request a snapshot. Finally, the system restore service can automatically create snapshots on a daily basis via scheduled tasks.

As you might realize, VSCs could help you recover data that might otherwise be deleted or is no longer present on a system’s current “live” file system. For example, given a known date and time of attacker activity, you might want to check the contents of the VSC taken in closest proximity to this period. This might yield files, data in registry hives, and even event log entries that would otherwise no longer be available.

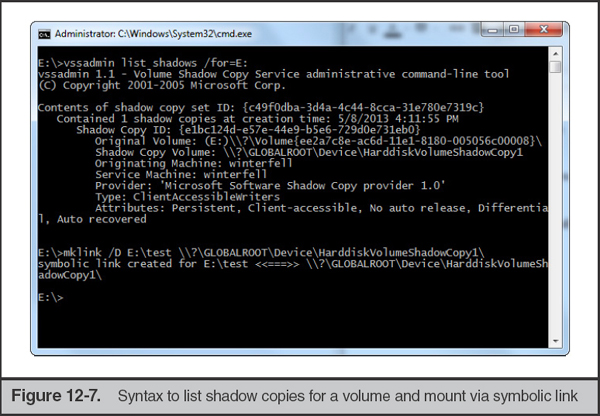

How do you recover data from a shadow copy? You’ll first need to mount your evidence—be it a disk image or a drive—on a Windows Vista or 7 system. This will allow you to use the Volume Shadow Service and related tools to interact with the shadow copy “mirrors” of the target file system. Then, from an Administrator command prompt, type the command

This will list each shadow copy on the volume and provide a shadow copy ID, originating volume ID, shadow copy volume name, originating host name, and other attributes. You can then create a symbolic link via mklink to mount the shadow copy to a directory of your choice:

Note that the target directory for your link cannot previously exist on disk. You also must ensure that you include a trailing slash at the end of the Shadow Copy Volume path. Figure 12-7 shows the output of these commands.
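As a rough illustration of the two steps just described, the following Python sketch only builds the vssadmin and mklink command lines; it does not run them. The drive letter, link directory, and shadow copy volume name used in the usage note are hypothetical placeholders, and the helper names are ours. The second helper enforces the trailing-backslash requirement mentioned above.

```python
def vssadmin_list_command(volume: str) -> list[str]:
    """Build the command that lists shadow copies for one volume, e.g. 'E:'."""
    return ["vssadmin", "list", "shadows", f"/for={volume}"]

def mklink_command(link_dir: str, shadow_volume: str) -> list[str]:
    """Build the command that links a new directory to a shadow copy volume.

    mklink is a cmd.exe built-in, so it is invoked through 'cmd /c'.
    The shadow copy volume path must end with a backslash.
    """
    if not shadow_volume.endswith("\\"):
        shadow_volume += "\\"
    return ["cmd", "/c", "mklink", "/d", link_dir, shadow_volume]
```

For example, mklink_command(r"C:\vsc1", r"\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy1") yields a command whose final argument ends in a backslash. You could pass either list to subprocess.run from an Administrator session on the analysis system, taking the real volume name from the vssadmin output.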

The shadow copy—a mirror of the volume’s entire file system at the time of the snapshot—will now be available within the linked directory.

Although vssadmin and mklink are all that’s needed to explore the contents of VSCs on a Windows system, several free tools are available that provide additional analysis capabilities or facilitate review on other operating systems. We recommend the following:

GO GET IT ON THE WEB

libvshadow code.google.com/p/libvshadow

Multiplatform library and tools for interacting with volume shadow snapshot data.

Shadow Explorer www.shadowexplorer.com

An easy-to-use user interface for exploring the contents of shadow copy snapshots.

VSC Toolset dfstream.blogspot.com/p/vsc-toolset.html

A user interface through which you can mount shadow copies, browse their contents, and execute batch scripts against them.

Windows provides a compatibility subsystem, Windows 32-bit on Windows 64-bit (WoW64), that helps ensure 32-bit applications run properly on 64-bit operating systems. Not only must WoW64 ensure that 32-bit applications are able to access the right versions of DLLs and other dependencies, it also must prevent them from overwriting or modifying system resources designed for 64-bit applications. One mechanism that does so is the “file system redirector,” and it can have a significant impact on your investigative process.

The 64-bit versions of Windows maintain separate directories for files and dependencies used by 32-bit applications. If a 32-bit application attempts to load a file from %SYSTEMROOT%\system32, then WoW64 will transparently redirect it to the directory %SYSTEMROOT%\SysWOW64, which contains the proper 32-bit DLLs. The application remains unaware of this redirection and cannot “see” the actual %SYSTEMROOT%\system32 directory, which is reserved for 64-bit applications. Further detail is available on the Microsoft Developer Network at the following link:

GO GET IT ON THE WEB

Similarly, Windows maintains a separate Program Files directory, called Program Files (x86), for 32-bit applications, whereas 64-bit applications typically use the normal Program Files path.

We’ll occasionally see attackers inadvertently use 32-bit malware droppers/installers on 64-bit systems and subsequently “lose” files that were dropped into paths such as C:\Windows\SysWOW64, rather than the expected C:\Windows\system32.

Hopefully you’re already realizing how this might impact your investigative process and tools on a 64-bit Windows system. If you use a 32-bit tool that relies on the Windows API to enumerate files and directories, it will effectively be “blind” to paths such as C:\Windows\system32 that are only accessible to 64-bit applications. Of course, if you acquire the Master File Table to reconstruct the file system, you wouldn’t be impacted by redirection, but you should still ensure your scope of analysis includes all sets of redirected paths. WoW64 also redirects access to several widely used paths in the Windows registry, but we’ll provide further detail on that topic in “The Windows Registry” section of this chapter.
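The redirection described above can be modeled with a few lines of Python. This is a deliberately simplified sketch: the function name is ours, the default system root of C:\Windows is an assumption, and it ignores the subdirectories that Windows exempts from redirection. It only shows which on-disk location a 32-bit process would actually reach when it asks for a system32 path.

```python
def wow64_view(path: str, system_root: str = r"C:\Windows") -> str:
    """Return the on-disk path a 32-bit process would reach for 'path'.

    Simplified model: anything under <system_root>\\system32 is mapped to
    <system_root>\\SysWOW64; every other path is returned unchanged.
    """
    root = system_root.rstrip("\\")
    native = root + "\\system32"
    lowered = path.lower()
    # Windows paths are case-insensitive, so compare in lowercase.
    if lowered == native.lower() or lowered.startswith(native.lower() + "\\"):
        return root + "\\SysWOW64" + path[len(native):]
    return path
```

When you review output from a 32-bit collection tool, a mapping like this can remind you that a reported system32 path may really describe a file in SysWOW64.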

PREFETCH

Prefetch is a performance optimization mechanism that Microsoft introduced in Windows XP to reduce boot and application loading times. To provide some context on why prefetch exists and its value as a source of evidence, we’ll need to briefly delve into the details of Windows memory management.

The Windows Cache Manager is a component of the memory management system that monitors the data and code that running processes load from files on disk. Specifically, it tracks the first two minutes of boot processes and the first 10 seconds of all other applications’ startup. The Cache Manager then works with the Task Scheduler to write the results of these traces to prefetch files. The next time the system boots or a “prefetched” application executes, the Cache Manager can use these prefetch files like a “cheat sheet” to speed up the loading process.

The Evidence

Prefetch files are stored in %SYSTEMROOT%\Prefetch. In this directory, you will find the following files:

• NTOSBOOT-B00DFAAD.pf The system boot prefetch. This always has the same name. On Windows Servers, this is the only prefetch file that will exist by default.

• Layout.ini Contains data used by the disk defragmenter.

• AppName-########.pf Up to 128 application-specific prefetch files, each with the extension .pf, and each representing an executable file that ran at least once. These files are named by concatenating the executable name, a dash, and a 32-bit hexadecimal hash derived from the file’s path. That means that the same executable, if run from two different paths, will have two different prefetch files.

Figure 12-8 displays the partial contents of the C:\Windows\Prefetch directory on a drive image loaded in FTK Imager.
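The naming convention described in the list above lends itself to a quick triage script. The following Python sketch, with helper names of our own choosing, splits a prefetch file name into the executable name and its path hash, then groups a directory listing so that executables with more than one hash (and therefore more than one launch path) stand out. It does not compute the hash itself.

```python
from collections import defaultdict

def split_prefetch_name(file_name: str) -> tuple[str, int]:
    """Split 'NAME.EXE-XXXXXXXX.pf' into ('NAME.EXE', hash as an integer)."""
    stem, ext = file_name.rsplit(".", 1)
    if ext.lower() != "pf":
        raise ValueError(f"not a prefetch file: {file_name}")
    # Split on the last dash, since executable names may contain dashes.
    exe, _, hash_hex = stem.rpartition("-")
    if not exe or len(hash_hex) != 8:
        raise ValueError(f"unexpected prefetch name: {file_name}")
    return exe, int(hash_hex, 16)

def group_by_executable(file_names) -> dict:
    """Map each executable name to the set of path hashes seen for it."""
    groups = defaultdict(set)
    for name in file_names:
        try:
            exe, path_hash = split_prefetch_name(name)
        except ValueError:
            continue  # skip Layout.ini and anything else that is not a .pf
        groups[exe.upper()].add(path_hash)
    return dict(groups)
```

An executable such as SVCHOST.EXE that shows up with an unexpected extra hash is a reasonable candidate for closer review.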

If you are analyzing a Windows Vista or Windows 7 system, you may see some additional files following the naming convention Ag*.db, such as AgAppLaunch.db. These files are generated by Superfetch, another performance optimization mechanism that can run concurrently with Prefetch. The format of these files is undocumented, but researchers have begun to analyze their structure and contents (reference: blog.rewolf.pl/blog/?p=214).

Sometimes we come across systems that have no prefetch files in the Prefetch directory. In those cases, it may be helpful to check the registry key containing most of the prefetch settings: HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management\PrefetchParameters. Two of the values within this key are particularly important:

• EnablePrefetcher Controls the Prefetcher operation. Settings are as follows: 0 – Disable Prefetch; 1 – Prefetch Application Launch Files; 2 – Prefetch Boot Files; 3 – Prefetch All (both application launch and boot files). Prefetch is often disabled on servers and systems with solid state drives.

• HostingAppList Specifies special Windows applications that load other executable modules, such as DLLHOST.EXE, MMC.EXE, and RUNDLL32.EXE. The Prefetcher incorporates the loaded module into the Prefetch hash value for these applications.

Note that even when EnablePrefetcher is set to 3, Windows will not create an infinite number of prefetch files—the maximum number is 128.

Analysis

Now that we’ve discussed how Windows creates and uses prefetch files, you’ve hopefully come to realize how they can be a valuable source of forensic evidence. Prefetch files serve as a record of programs that have executed on a system, regardless of whether the original executable files are still on disk. As we’ll discuss in this section, not only does the existence of a prefetch file prove that an application ran—but you can also determine when it ran, how many times, and from which path.

The simplest way to analyze prefetch files is to look at their Standard Information timestamps. The File Created date indicates when the corresponding application first ran. The Last Modified date indicates when it most recently executed. Of course, we’re limited by the fact that the prefetch file name does not provide us with path information. A prefetch file named EXPLORER.EXE-A840CB32.pf could have been created for the legitimate %SYSTEMROOT%\Explorer.exe or for C:\path\to\evil\Explorer.exe. You can obtain this important evidence, along with other useful data, by analyzing the contents of the prefetch files themselves.

The excellent Hexacorn forensics blog published a useful article on how prefetch path calculation works, a Perl script that can generate the hashes within prefetch file names, and a list of precomputed hashes for Windows native executables in their default paths: www.hexacorn.com/blog/2012/06/13/prefetch-hash-calculator-a-hash-lookup-table-xpvistaw7w2k3w2k8/.

So what additional evidence is available within prefetch files? The most useful is the list of full paths, including volume, to files loaded by the application within the first 10 seconds after it executed. This includes the executable itself, thus allowing you to determine exactly where it resided on disk. This can be important: C:\Users\JohnDoe\svchost.exe in prefetch is much more suspicious than C:\WINDOWS\system32\svchost.exe. The list of accessed files will also include application dependencies (such as loaded DLLs) and files that may have been used for input or output.

The NTOSBOOT prefetch file is the only exception to this 10-second “rule.” It monitors accessed files through the first two minutes of the boot process.

Figure 12-9 shows a prefetch file for the pwdump2x.exe password dumping utility displayed in NirSoft’s WinPrefetchView utility. Note that its parsed contents include references to the file to which the utility’s output was redirected, pwdump_out.txt.

Another scenario where this data could be useful is when analyzing the prefetch files for a “hosting” application. For example, an attacker might use rundll32.exe to install or launch code in a malicious DLL. Because rundll32.exe is one of the executables listed in the HostingAppList registry value, you’ll likely find multiple prefetch files for it. Parsing the accessed file list in each prefetch file could help segregate legitimate usage from malicious.

We’ll often bulk-acquire and parse prefetch files across multiple systems in an investigation, and subsequently sort and filter on the path. This can be a great way to find evidence of previously unknown binaries executing within paths never used by legitimate programs (such as System Volume Information and Recycler).
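The sort-and-filter approach described above might look like the following Python sketch. It assumes you have already parsed the prefetch files with one of the available tools and loaded the results as dictionaries; the keys "host", "path", and "run_count" are hypothetical names for this example, not the output format of any particular parser.

```python
# Directory names that legitimate programs should never execute from.
# Extend this tuple with paths relevant to your own investigation.
SUSPICIOUS_DIRS = ("\\system volume information\\", "\\recycler\\")

def flag_unusual_paths(records):
    """Return records whose executable path falls under a suspicious directory.

    Each record is a dict with hypothetical keys 'host', 'path', 'run_count'.
    Results are sorted by path, then host, so repeats across systems cluster.
    """
    hits = [
        r for r in records
        if any(d in r["path"].lower() for d in SUSPICIOUS_DIRS)
    ]
    return sorted(hits, key=lambda r: (r["path"].lower(), r["host"]))
```

Sorting by path first makes it easy to spot the same unusual binary appearing on several hosts.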

Each prefetch file also contains a counter that is incremented whenever its corresponding application has executed. This can also come in handy—in one recent investigation, we observed that a prefetch file for the attacker’s copy of Rar.exe indicated that it had been launched several dozen times within a two-month timespan. When combined with other sources of evidence, this helped support our findings that the intruder conducted a significant amount of data staging and theft on the system.

What happens if you run an executable file out of an alternate data stream (only supported in Windows XP and prior)? A file named identically to the primary file stream is created in the Prefetch directory. It will contain an ADS that is the actual prefetch data and follows the normal naming convention. For instance, running C:\Windows:evil.exe (an ADS to the “windows” directory) could result in the creation of Prefetch file C:\Prefetch\Windows:evil.exe-A02B49FF.

Finally, prefetch files contain a Last Run Time date and time. Why might this differ from the Last Modified time for the prefetch file itself? You may often find that the Last Run Time is approximately 10 seconds “earlier” than the Last Modified Time. This is likely caused by the delay between a program executing, the cache manager monitoring its first seconds of loading activity, and the prefetch file finally being written/updated to disk.

Prefetch Analysis Tools

Prefetch files contain binary data in a proprietary format. Known details are documented on the Forensics Wiki at the following link:

GO GET IT ON THE WEB

Although you can use file metadata such as the Created and Last Modified times to determine basic information such as the first and most-recent run times of the corresponding executable, you’ll need to parse the .pf files with a special-purpose tool in order to obtain encoded data such as the run counter, executable path, and accessed file listing. We’ve found the following three tools useful in performing prefetch analysis:

GO GET IT ON THE WEB

WinPrefetchView www.nirsoft.net/utils/win_prefetch_view.html

TZWorks Prefetch Parser www.tzworks.net/prototype_page.php?proto_id=1

RedWolf Forensics Prefetch-Parser redwolfcomputerforensics.com/index.php?option=com_content&task=view&id=42&Itemid=55

EVENT LOGS

Event logs are generated by the system-wide auditing and monitoring mechanisms that are built in to the Windows operating system. By reviewing these logs, you may be able to perform the following tasks:

• Identify successful and failed logon attempts and determine their origin

• Track the creation, start, and stop of system services

• Track usage of specific applications

• Track alterations to the audit policy

• Track changes to user permissions

• Monitor events generated by installed applications (such as antivirus, database, and web server services)

This section provides you with the information needed to locate and acquire the event logs on a Windows system. We’ll then spend the remainder of the section focused on analysis, prioritizing the types of log entries that we typically find to be most useful in an incident response investigation. Along the way, we’ll demonstrate how event logs can help you investigate common patterns of lateral movement, malware execution, and other patterns of activity common in compromised Windows environments.

The Evidence

All versions of Windows maintain three “core” event logs: Application, System, and Security. Activities related to user programs and commercial off-the-shelf applications populate the Application log. Application events that are audited by Windows include any errors or information that an application wants to report. Host-based security tools such as antivirus and intrusion prevention systems often record events to this log.

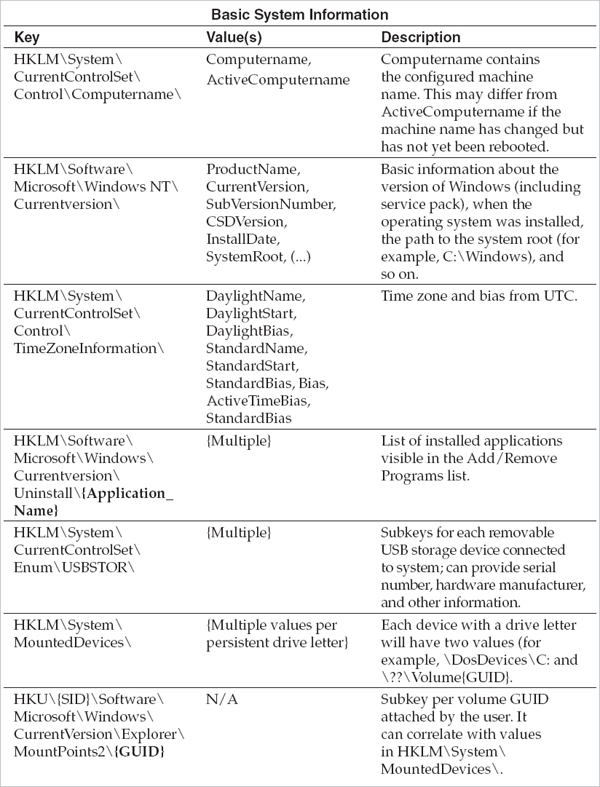

Windows authentication and security processes record events in the Security log. This log can include user logon and logoff attempts, account creation, changes to user privileges or credentials, changes to the audit policy, process execution, and file and directory access. Local or Group Policy settings can configure exactly which security events are captured and logged.

The System event log records events reported by a variety of core operating system components. Its contents can include Windows service events, changes to the system time, driver loads and unloads, and network configuration issues.

Acquiring the Windows event logs is a straightforward file acquisition task. Each log is stored in a separate file in paths specified within registry key HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog. On Windows XP, Windows Server 2003, and prior operating systems, the default event log paths are

• Application %SYSTEMROOT%\System32\Config\AppEvent.Evt

• System %SYSTEMROOT%\System32\Config\SysEvent.Evt

• Security %SYSTEMROOT%\System32\Config\SecEvent.Evt

Microsoft made significant changes to the event logging system in modern versions of Windows, beginning with Vista and Server 2008. EVT files were scrapped for a new XML-based format using the extension .evtx. The default paths were also slightly changed to the following:

• Application %SYSTEMROOT%\System32\Winevt\Logs\Application.evtx

• System %SYSTEMROOT%\System32\Winevt\Logs\System.evtx

• Security %SYSTEMROOT%\System32\Winevt\Logs\Security.evtx

Microsoft also added a second category of logs, Applications and Services, that are used by individual installed applications or system components. These logs are also stored in EVTX files located within the directory %SYSTEMROOT%\System32\Winevt\Logs. The Task Scheduler, Windows Firewall, AppLocker, Terminal Services, and User Account Control are a few examples of Windows features that can maintain their own logs (if enabled) under this category. Although we focus on the main Windows logs, we’ll mention how some of these other logs can be useful later in this chapter.

Analysis

Much like the registry, an entire book could be written on the myriad types of events generated by each version of Windows and their meaning. For the scope of this chapter, we focus on some of the events that are the most useful when investigating an incident.

Know Your Event IDs (EIDs)

Every type of event tracked in Windows event logs has an associated ID value. These IDs are often more useful than the event message itself when you are trying to research, filter, or cross-reference log entries. Covering the hundreds of EIDs that may hold forensic relevance is beyond the scope of this chapter. Fortunately, Microsoft provides a useful search engine, called Events and Errors Message Center, that you can query.

GO GET IT ON THE WEB

Several third-party websites maintain databases of Windows event IDs, including www.myeventlog.com and www.eventid.net. Although these sites often lack coverage of event IDs from the Applications and Services logs and are not officially supported by Microsoft, they can be a useful starting point.

The search engine will not only let you look up information about EIDs you are unfamiliar with, but it will also allow you to search for events that contain certain text or are generated by certain sources.

Be aware that Microsoft changed the EIDs for some (but not all) types of events between NT Kernel 5 (2000, XP, 2003) and 6 (Vista, 7, 2008). The newer EIDs often (but not always) equal the old value plus 4096. For example, the successful logon event changed from 528 to 4624, which follows the rule; the successful network logon event 540 was also folded into 4624, which does not; and service start/stop events remained as EID 7036. Hooray for inconsistency!
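The “plus 4096” rule of thumb is simple enough to express in code, along with the exceptions that break it. In this Python sketch, the override table holds only the exceptions named in this chapter (network logon 540 maps to 4624 even though 540 + 4096 is 4636, and service event 7036 is unchanged); the function name and table are our own, and the result should be treated as a first guess to verify, not an authoritative mapping.

```python
LEGACY_OFFSET = 4096

# Exceptions to the offset rule that are mentioned in this chapter.
# This table is illustrative and far from complete.
KNOWN_OVERRIDES = {
    540: 4624,    # network logon was folded into the general logon event
    7036: 7036,   # service start/stop kept its original ID
}

def candidate_modern_eid(legacy_eid: int) -> int:
    """Guess the Vista-and-later EID for a legacy (2000/XP/2003) EID."""
    return KNOWN_OVERRIDES.get(legacy_eid, legacy_eid + LEGACY_OFFSET)
```

For instance, the legacy service-creation event 601 maps to 601 + 4096 = 4697, which matches the newer ID for that event.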

Understanding Logon Events

Nearly any Windows investigation you perform will include tracking and analyzing logon events. You may need to prove how a legitimate user accessed their own system, or how a malicious attacker gained remote access to it. You may need to track failed logon attempts indicative of password brute forcing, or you may need to determine how a compromised account was reused throughout an environment. Fortunately, the Security event log can cover all of these needs.

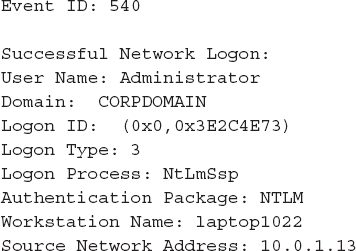

Let’s look at an example of a logon event and discuss what each of the key fields means. This particular example is taken from a Windows XP workstation, but you’d find the same information in later versions of Windows as well:

That’s plenty of cryptic terminology for just a simple logon event. Here’s a definition for each of these fields:

• User Name The account used to log on.

• Domain The domain associated with the user name. If the user name is a local account, this field will contain the system’s host name.

• Logon ID A unique session identifier. You can use this value as a search term or filter to find all event log entries associated with this specific logon session.

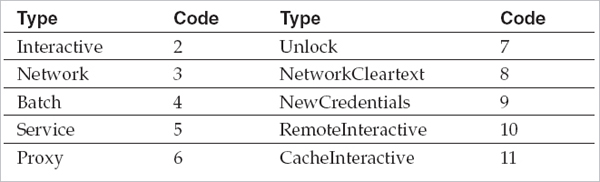

• Logon Type A code referencing the type of logon initiated by the user. The following table provides further detail on the Logon Type field and its possible values:

• Interactive (type 2) The user logged on from the console (for example, from the host machine’s keyboard), via the RunAs command, or from a hardware-based remote access solution (such as KVM).

• Network (type 3) The user logged on over the network. Mounting a share through the “net use” command or logging on to a web server via IIS integrated authentication are both examples of activity that would generate a Network logon.

• Batch (type 4) The logon session generated by a scheduled task.

• Service (type 5) The Windows service logged on using its configured credentials.

• Proxy (type 6) Microsoft defines this as “a proxy-type logon.” We have yet to see this type of event in the wild, or any documentation explaining how it may be generated.

• Unlock (type 7) The user unlocked the system (for example, after resuming from the screensaver).

• NetworkCleartext (type 8) Typically generated by basic authentication logins on web servers.

• NewCredentials (type 9) The user assumed a different set of credentials for a remote connection (such as through the use of the RunAs command).

• RemoteInteractive (type 10) The user logged on via Terminal Services, Remote Assistance, or Remote Desktop.

• CachedInteractive (type 11) The user logged on with domain credentials that were validated using locally cached data rather than contacting the domain controller.

Further details are available at the following link:

GO GET IT ON THE WEB

• Logon Process The process that initiated the logon event. Common options include NtLmSsp, Kerberos, User32, and Advapi.

• Authentication Package The security and authentication protocol used to process the logon event. Common options include NTLM, Kerberos, and Negotiate.

• Workstation Name The source system from which the logon originated. This is not always captured in the event log entry.

• Source Network Address The source IP address from which the logon originated. This is not always captured in the event log entry.

The Logon Type field can be a useful data point when searching or filtering the security event log. If you know an attacker accesses the system using stolen credentials via the “net use” command, you may be able to disregard Unlock and Interactive (console) logons as noise from legitimate system usage. If an application service account is never supposed to log on interactively, you may want to focus on RemoteInteractive logon events with its credentials.
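As a small illustration of filtering on this field, the Python sketch below maps the numeric Logon Type codes recorded in the Security log to their names and keeps only the events of the types you care about. The event dictionaries and their keys ("account", "logon_type", "source") are hypothetical; real input would come from whatever tool you use to export the log.

```python
# Numeric Logon Type codes as recorded in logon events.
LOGON_TYPES = {
    2: "Interactive",
    3: "Network",
    4: "Batch",
    5: "Service",
    6: "Proxy",
    7: "Unlock",
    8: "NetworkCleartext",
    9: "NewCredentials",
    10: "RemoteInteractive",
    11: "CachedInteractive",
}

def filter_logons(events, wanted_types):
    """Keep events whose 'logon_type' code is in wanted_types.

    Each event is a dict with hypothetical keys 'account', 'logon_type',
    and 'source'. A readable 'logon_type_name' is added to each result.
    """
    kept = []
    for event in events:
        if event["logon_type"] in wanted_types:
            name = LOGON_TYPES.get(event["logon_type"], "Unknown")
            kept.append({**event, "logon_type_name": name})
    return kept
```

To hunt for “net use” activity you might call filter_logons(events, {3}); to check a service account that should never log on interactively, {2, 10} is a sensible starting set.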

Does your environment use VNC or other screen-sharing remote access programs? If so, be aware that these utilities effectively emulate local access to a system. Logon events during their usage are typically of type Interactive or Unlock, not RemoteInteractive.

Investigating Lateral Movement

In a compromised Windows environment, attackers typically leverage stolen, valid credentials (either local or domain) to move from system to system—a process called “lateral movement.” Many environments use common local administrator passwords for all systems, or subsets (for example, all workstations within a region), of their environment. If an attacker compromises a single system and obtains such a credential, they can move freely from host to host. Worse yet, if an attacker gains access to a domain administrator or equivalent account, they may be able to access any system within the domain (absent any network-layer restrictions) at will.

One of the challenges with investigating such lateral movement is that it can blend in with normal Windows activity. This is particularly the case for environments where application service accounts or privileged domain accounts are permitted to establish network and interactive logons, as typically used by “humans.” You may find yourself attempting to tease out evidence of attacker logins via such credentials amid a pile of legitimate “noise” from day-to-day operations.

Let’s use what you’ve learned about logon types to illustrate common methods of lateral movement and the evidence resulting from each approach:

• Our attacker, Bob, has interactive access to a Windows 7 workstation, alpha, through a persistent backdoor.

• Alpha is joined to a corporate domain, ACME.

• The backdoor runs under the context of the domain user who owns alpha, ACME\Eve.

• Through password dumping and other intrusion activities, the attacker has obtained credentials for two accounts:

• A local administrator, localAdmin, that is configured with an identical password on each workstation in the ACME domain

• A domain administrator, ACME\domainAdmin, who has full access to all workstations and servers in the environment

Bob uses the backdoor to invoke a command shell under the context of ACME\Eve. He then uses various commands, in combination with the credentials for accounts localAdmin and ACME\domainAdmin, to access four systems, each in a different manner:

1. He mounts the C$ share for workstation beta, from source system alpha, to transfer malware and tools, using the following command:

2. He uses the SysInternals PsExec utility to remotely execute a command on workstation gamma, once again from source system alpha, using the following command:

3. He establishes a remote desktop connection to server zeta, once again from source system alpha, using the Windows built-in RDP client (username ACME\domainAdmin, password worsePassword).

4. He browses to an IIS intranet web server, delta, that requires NTLM authentication. Bob uses ACME\domainAdmin credentials.

Each of these actions will result in a login event—but what types, and where would they be logged?

• Action 1 will generate a logon type 3 (network) recorded on beta because a local account was used.

• Action 2 will generate a logon type 3 recorded on gamma, as well as on the ACME domain controller, because a domain account was used. In addition, a “Logon attempt using explicit credentials” event (EID 4648) will be recorded on alpha and reference both the attacker’s use of the credentials ACME\domainAdmin and the target system gamma. This event is generated due to the use of PsExec under a different set of domain credentials than the attacker’s current session (ACME\Eve).

• Action 3 will generate a logon type 10 (RemoteInteractive) recorded on zeta as well as on the ACME domain controller.

• Action 4 will generate a logon type 3 (due to using IIS authentication) recorded on delta as well as on the ACME domain controller.

These examples illustrate the impact of different login mechanisms and the usage of local versus domain accounts on both the types of events generated, as well as the systems on which you can find this evidence. This can have significant ramifications when you are conducting investigations in large environments—particularly if the attacker has heavily leveraged local accounts—because tracking lateral movement may entail examining logs on every system.

Kerberos authentication events for lateral movement typically will not include the source system or IP address. However, network logons using Kerberos are often immediately preceded by a login from the source system’s machine account. Keep an eye out for these events to help identify the origin of a Kerberos authentication attempt.

It can be difficult to ascertain legitimate versus suspect activity in an event log, especially at the early stages of an investigation when you may have minimal leads. When examining logs over a specific period of time and identifying events of interest, be sure to check against the entire log history to determine just how common or uncommon they are. For example, a service event that conveniently falls within the time frame of attacker activity may happen to occur on a frequent basis—context that is often missed when focusing too exclusively on a single window of time. It is also often helpful to aggregate and search logon events to determine the source systems and accounts most frequently used during normal activity. This can help make anomalous logons using valid credentials more obvious amid all the noise on a busy system.
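The aggregation idea in the preceding paragraph can be sketched with Python’s Counter. The sketch assumes logon events have already been exported into dictionaries with the hypothetical keys "account" and "source"; the threshold of one occurrence is an arbitrary starting point that you would tune to the size of the log.

```python
from collections import Counter

def rare_logon_pairs(events, max_count=1):
    """Return (account, source) pairs seen at most max_count times.

    Each event is a dict with hypothetical keys 'account' and 'source'.
    Pairs that dominate the log form the baseline of normal activity;
    the rare ones returned here are candidates for closer review.
    """
    counts = Counter((e["account"], e["source"]) for e in events)
    return sorted(pair for pair, n in counts.items() if n <= max_count)
```

A rare pairing is not proof of compromise, only a lead; a newly provisioned workstation or an administrator working from an unusual location will surface the same way.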

Auditing Changes to Accounts and Security Settings

Several types of events within the Security log record changes to user accounts and security policies. Reviewing these events can help you determine whether an attacker has tampered with a system’s security settings:

• Account management events indicate whether a user account has been created, deleted, enabled, or disabled, as well as similar changes to account groups.

• Policy change events capture changes to system security settings, including the audit policies that specify what is recorded in event logs.

• An event noting “The audit log was cleared” is recorded whenever a user clears the event logs, irrespective of audit settings. This message includes the username responsible for the change.

Process tracking, also known as detailed tracking or process auditing, generates an event in the Security event log every time a process is executed or terminated. These events include the full path to the executable on disk, the process ID, the parent process ID, and the associated logon ID under which it executed. This evidence can provide a detailed account of everything that a user executed within a specified period of time. By default, this audit setting is not enabled—you’ll have to turn it on in either local audit policy or through Group Policy Objects.

Sometimes we wish process tracking logged a few more details. Notably, the process arguments and the parent process name are not included as part of the process tracking event. There are many cases where those two pieces of information would be invaluable, especially when attackers use command-line tools. For example, if an attacker used a command-line tool to compress data prior to theft, the arguments would show you the source and destination directories and perhaps even a password, if one was used.

Figure 12-10 displays an example of a Process Created event in which account winterfell\ryankaz executed C:\Windows\system32\mmc.exe.

Note that audit process tracking will result in a significantly higher volume of logged events. If you are enabling this feature, make sure you increase the Maximum Security Event Log setting to ensure that the log does not reach capacity and roll over too frequently. In some cases, process tracking may have a severe performance impact, so be sure to test this setting prior to enabling it on any critical systems.

Analyzing Service Events

Windows services are frequently utilized as persistence mechanisms for both commodity and targeted malware. In addition, nonpersistent malware will often install itself as a “temporary” service to run under a privileged context. Fortunately, every time a service is started or stopped, the Service Control Manager (SCM) creates an entry in the System event log.

When a service is explicitly started (such as upon its initial installation), SCM logs an event indicating “The [servicename] service was successfully sent a start control.” This event usually includes the user account responsible for initiating the service. Once the service begins running, SCM logs “The [servicename] service entered the running state.” The log entries for service stoppages follow a similar pattern. As an example, the SysInternals PsExec utility installs itself as a Windows service on the remote system receiving a connection. When the PsExec session ends, the service is terminated and subsequently uninstalled. The following table illustrates how the System event log would capture this sequence of events.

When conducting investigations, we’ll often use the username recorded along with EID 7035 events as an important data point or indicator of compromise. For example, in one case we were able to distinguish between legitimate and unauthorized usage of the PsExec utility based on the user account that started the service, as recorded in these System event log entries.

There are hundreds of Windows services on an average system, and many change, start, and stop frequently during normal system operations. In our experience, it’s fairly difficult to find a “suspicious” service by simply reviewing every event in an entire log. However, if you have a known period of attacker activity, perhaps near a series of logon events, you may find that there are far fewer events to parse and review. It is definitely worthwhile to follow up on any new services that start for the first time during such periods of activity.

If Process Tracking is enabled, the Security event log will record whenever a new service is created through the SCM (EID 601 or 4697).

You also may be able to search or filter the System event log for “known-bad” service names previously identified during analysis of other systems. Finally, you may find that an attacker, or sometimes antivirus, deleted a malicious service binary at some point after it was installed—but did not remove the corresponding service configuration keys in the registry. This will result in the SCM logging failure events each time it unsuccessfully attempts to load the service.

From our earlier example, if PsExec was not legitimately used in our sample environment, this service event could be a useful indicator of compromise when analyzing other systems or searching aggregated log data. The same principle goes for any service name used for malware persistence by an attacker: if it’s unique, use it as an indicator when reviewing event logs. For example, some versions of the popular password dumping and hash-replay toolkit Windows Credential Editor create a service named WCE Service at run time, which can serve as a simple and effective IOC.
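The two review strategies described in this section, narrowing to a known period of attacker activity and matching “known-bad” service names, are easy to combine in a script. The following Python sketch shows one way to do so, assuming the System event log has already been exported into records. The field names (`time`, `event_id`, `service`, `user`), the account name, and the sample values are hypothetical. EID 7036 is the SCM event recording that a service entered the running or stopped state.

```python
from datetime import datetime

SCM_EVENT_IDS = {7035, 7036}  # control sent / service state changed

def service_events_in_window(records, start, end, known_bad=()):
    """Return SCM events between start and end (inclusive), oldest first.

    `records` are dicts with hypothetical keys: time, event_id, service, user.
    Each returned record gains a `known_bad` flag based on its service name.
    """
    bad = {name.lower() for name in known_bad}
    hits = []
    for rec in records:
        if rec["event_id"] not in SCM_EVENT_IDS:
            continue
        if not (start <= rec["time"] <= end):
            continue
        hits.append({**rec, "known_bad": rec["service"].lower() in bad})
    return sorted(hits, key=lambda r: r["time"])

# Illustrative records only; these are not taken from a real log.
sample_records = [
    {"time": datetime(2013, 1, 1, 9, 0, 0), "event_id": 7036,
     "service": "Windows Update", "user": None},
    {"time": datetime(2013, 1, 1, 10, 15, 0), "event_id": 7035,
     "service": "WCE Service", "user": r"EXAMPLE\jdoe"},
    {"time": datetime(2013, 1, 1, 10, 15, 1), "event_id": 7036,
     "service": "WCE Service", "user": None},
    {"time": datetime(2013, 1, 1, 10, 20, 0), "event_id": 6005,
     "service": "", "user": None},
]

window_hits = service_events_in_window(
    sample_records,
    start=datetime(2013, 1, 1, 10, 0, 0),
    end=datetime(2013, 1, 1, 11, 0, 0),
    known_bad=["WCE Service"],
)
for hit in window_hits:
    print(hit["time"], hit["event_id"], hit["service"], hit["user"], hit["known_bad"])
```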

Other Log Analysis Tips

Here are some additional tips concerning log analysis:

• If you’re tracking down suspicious but yet-to-be-categorized activity on a system, be sure to check the Application event log to see if any antivirus alerts were generated during your period of interest. This may help point you in the direction of suspicious files, and even help you determine whether your investigative leads were due to a common, nontargeted malware infection or something warranting further review.

• This section has highlighted some of the forensic value of evidence in the event logs. However, if the maximum log sizes are so small that they roll frequently, you may find that you have insufficient information available for analysis. Disk space is inexpensive—most users won’t notice a missing 500MB or 1GB of data—so consider reviewing and increasing your log size limits to improve retention on all Windows endpoints.

• If you acquire event logs from a Windows XP or Server 2003 system, you may find that Event Viewer or other analysis tools report that the EVT files are corrupted. This is a widely known issue that can easily be repaired by the free FixEVT utility available at the following website:

GO GET IT ON THE WEB

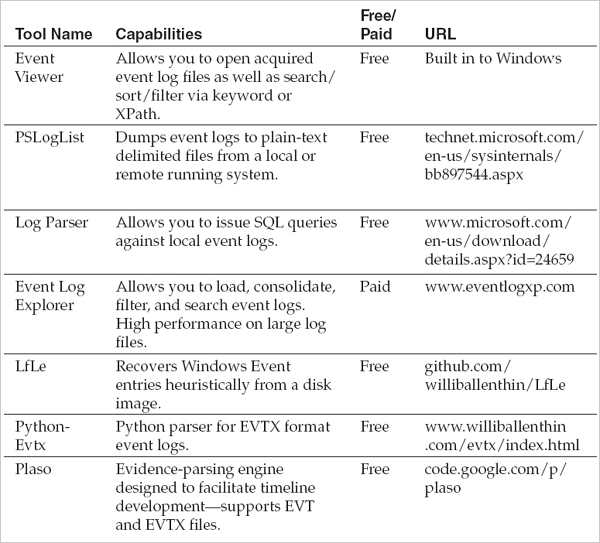

Event Log Analysis Tools

Event log analysis tools provide the ability to acquire, view, search, sort, and filter EVT and EVTX files since they are not intrinsically “human readable.” We’ve summarized some of our favorite tools in the following table.

Note that some of these tools, such as PSLogList, can only acquire and parse event logs from a running system. Others, such as Event Viewer, can load raw log files that were copied from a forensic acquisition.

It’s worthwhile to note that Microsoft’s own built-in Event Viewer was significantly enhanced in Windows Vista and later versions of the operating system. Although it still struggles to display and search large log files, it has excellent search and filtering capabilities—especially compared to the old version—and does a fine job in a pinch.

Time to get confused! Event log entries are stored in UTC. However, different event log analysis tools convert these timestamps in different ways, which can lead to trouble if you’re trying to line up various sources of evidence. For example, the Windows Event Viewer displays events in your local system time, regardless of the log’s original source. To avoid headaches and errors, always double-check how your tools deal with timestamps, and set the time zone of your forensic analysis system to UTC to make things easier.
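To see why this matters, consider how a single stored UTC timestamp appears when rendered in different time zones. The following Python sketch uses fixed UTC offsets, which ignore daylight saving time, and an illustrative timestamp; the helper name `to_local` is our own.

```python
from datetime import datetime, timedelta, timezone

def to_local(utc_naive, offset_hours):
    """Interpret a naive timestamp as UTC and render it at a fixed UTC offset."""
    aware = utc_naive.replace(tzinfo=timezone.utc)
    return aware.astimezone(timezone(timedelta(hours=offset_hours)))

event_utc = datetime(2009, 9, 25, 6, 26, 0)  # illustrative value only
for label, offset in (("UTC", 0), ("UTC-4", -4), ("UTC+9", 9)):
    print(label, to_local(event_utc, offset).strftime("%Y-%m-%d %H:%M:%S"))
```

The same event appears to occur at 06:26, 02:26, or 15:26 depending on the viewer’s settings, which is exactly the kind of discrepancy that derails a timeline built from multiple tools.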

SCHEDULED TASKS

The Windows Task Scheduler provides the ability to automatically execute programs at a specific date and time or on a recurring basis. It is functionally similar to the cron utility built in to most Unix-based operating systems. Applications can programmatically create scheduled tasks through the Windows API; in fact, many do so to enable features such as periodic checks for software updates. Users can create scheduled tasks through the console via the “at” command or the “schtasks” command. Windows Vista and later versions also provide an updated implementation of the Task Scheduler that includes a Management Console snap-in for creating, editing, and deleting tasks.

Attackers often use scheduled tasks to execute malware on a remote compromised system without the need for “helper” utilities such as PsExec, whose use may increase the likelihood of detection. This technique is especially common when the attacker’s access is limited to a command shell.

Creating Tasks with the “at” Command

Using the “at” command is the simplest way to create a scheduled task. Let’s look at a few simple examples to illustrate its usage. Note that use of the “at” command requires a minimum of local administrator privileges on the local host (for locally created tasks) or the target host (for remotely created tasks):

• Run “evil.exe” once at the next time the clock is 16:25.

• Run “beacon.exe” at 10:25 on Monday, Tuesday, and Wednesday on a recurring basis.

• Run “C:\RECYCLER\passdump.bat” on “alpha” the next time its local system time is 08:00.
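The command lines that accompany these three descriptions are not reproduced here. As a rough guide, the following Python sketch assembles command lines that match each description, following the general form `at [\\computername] time [/every:date[,...]] command` from Microsoft’s documentation. The helper name `build_at_command` is hypothetical, and the code only builds strings; it does not execute anything.

```python
def build_at_command(time, command, host=None, every=None):
    r"""Assemble an "at" command line as a string (nothing is executed).

    host:  optional remote computer name, emitted as \\host
    every: optional list of day abbreviations for a recurring job
    """
    parts = ["at"]
    if host:
        parts.append("\\\\" + host)
    parts.append(time)
    if every:
        parts.append("/every:" + ",".join(every))
    parts.append(command)
    return " ".join(parts)

print(build_at_command("16:25", "evil.exe"))
print(build_at_command("10:25", "beacon.exe", every=["M", "T", "W"]))
print(build_at_command("08:00", r"C:\RECYCLER\passdump.bat", host="alpha"))
```

Commands that contain spaces would need to be quoted, which this sketch does not attempt.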

Notice anything interesting about the third example? It creates a task on a remote system (alpha) rather than running it locally. Yes, you can do that. Note that C:\RECYCLER\passdump.bat must be present on alpha, not the source system, for this to work. Also be aware that creating a remote scheduled task requires Administrator privileges on the remote system; if you haven’t already authenticated with the appropriate credentials, the “at” command will return a Permission Denied error.

In the preceding examples, you may have wondered what time zone the “at” command uses. The times specified are the local time on the system the “at” job is scheduled on. In some cases, you may not even know what the time zone of the system is, especially for remote systems that are part of a global infrastructure. Attackers often run the command net time \\targetHost prior to scheduling a remote task, to check the system’s local time. That’s an effective way to go about scheduling tasks, because there is no need to determine or know what the time zone is on any system.

After you’ve created some scheduled tasks, you can list existing tasks on a local or remote system by running the “at” command without any additional parameters (for example, “at” or “at \\targetSystem”).

Scheduled tasks created via the “at” command—either local or remote—run under the context of the SYSTEM built-in account. Attackers often exploit this to execute malware, such as certain password dumpers, that may require greater privileges than a normal Administrator user would have on Vista and later versions of Windows.

Finally, you’ll notice that scheduled tasks created by “at” are each assigned an ID, starting with “1” for the first active scheduled task and incrementing for each task created afterward. You can list the details of an existing job by running “at #”, where # is the ID of interest. You can also delete a job by running “at # /delete”.

What’s in a name? Scheduled tasks created using the “at” utility are always unnamed. Such jobs are instead referenced based on their ID (At1, At2, and so on). In contrast, tasks created through the Windows API or “schtasks” can have descriptive names. It goes without saying that unnamed tasks are not always malicious; system administrators often use the “at” command for a variety of maintenance and automation purposes.

For further reference on the syntax for the “at” command, refer to Microsoft’s TechNet page:

GO GET IT ON THE WEB

Creating Tasks with the schtasks Command

The “schtasks” command is a more robust command-line utility for managing scheduled tasks that Microsoft added to Windows XP Professional, Windows Server 2003, and all subsequent versions of the operating system. It supports the ability to create tasks with descriptive names, configure the account context under which they execute, enable complex schedules, and many more features.

Attackers use “schtasks” less frequently than “at”—it’s a more complicated command, and most of its advantages only apply to legitimate usage. Refer to Microsoft’s TechNet page on “schtasks” for further details on its usage:

GO GET IT ON THE WEB

Be aware of an important distinction between the “schtasks” command and the “at” command. Running “schtasks” without any parameters lists all scheduled tasks created by both “schtasks” and “at”. However, running “at” without any parameters only lists scheduled tasks created by “at”.

The Evidence

Evidence of currently scheduled tasks, as well as records of those that previously executed, is readily available from a running Windows system or a “dead” drive image. The files and logs you’ll need are detailed in this section.

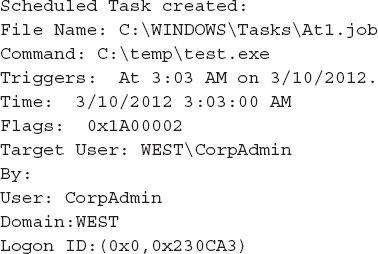

.job Files

Configuration data for scheduled tasks is stored in .job files (one per task) within the %SYSTEMROOT%\Tasks directory. These files are encoded in a proprietary file format documented in the following TechNet article:

GO GET IT ON THE WEB

In older versions of Windows (prior to Vista), the Task Scheduler service deleted .job files after a task successfully completed, thus significantly reducing the likelihood of recovering these artifacts from previously executed tasks. On Vista and later versions, .job files can remain until the Task Scheduler service stops or restarts, typically upon shutdown or reboot.

Task Scheduler Logs

The Windows Task Scheduler service logs the start time and completion of previously executed tasks to a plain-text file named SchedLgU.txt. This file grows to a maximum of 32KB before rolling.

• %SYSTEMROOT%\SchedLgU.txt: Windows 2000, Server 2003, and XP

• %SYSTEMROOT%\Tasks\SchedLgU.txt: Windows Vista, 2008, and later

Windows Vista, 7, and Server 2008 added a number of specialized event logs—including one that is responsible for tracking the Task Scheduler service activity: Microsoft-Windows-TaskScheduler%4Operational.evtx. As we’ll discuss in the next section, this log contains far more evidence than SchedLgU.txt, including the full path to processes executed by a scheduled task as well as the user account that registered or created the task.

You can easily enable the Task Scheduler log via the wevtutil utility: wevtutil sl Microsoft-Windows-TaskScheduler/Operational /e:True.

Finally, be sure to acquire the system security event log (Security.evt or Security.evtx). It can be another useful source of evidence for scheduled tasks if Process Tracking has been enabled.

Analysis

Now that you know how to acquire all the scheduled task-related artifacts from a system, we can focus on how to parse and interpret their contents. In this section, we discuss analysis of .job files and entries within both kinds of scheduled task logs.

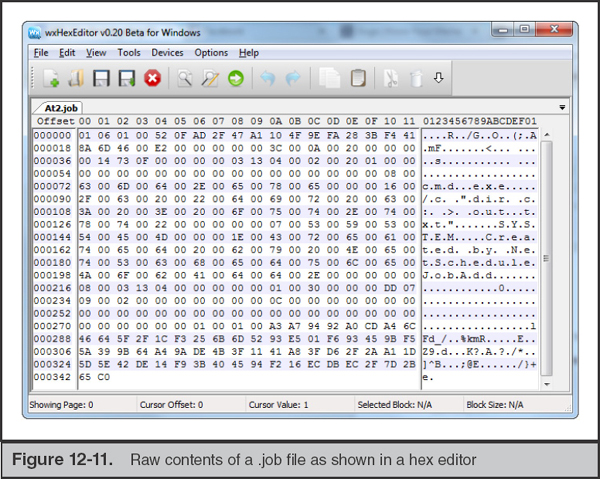

Analyzing .job Files

As mentioned earlier, you’re most likely to recover .job files from scheduled tasks that are set to run in the future or on a recurring basis. So given a task of interest, how do you analyze its corresponding .job file?

In a pinch, you can always use a hex editor or the “strings” command to view the contents of a .job file (see Figure 12-11). Key items such as user information and the full path to whatever is being launched by the task are normally “human readable” and will stand out.
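If the “strings” command is not at hand, the same quick look takes only a few lines of Python. The following sketch extracts runs of printable ASCII characters stored as UTF-16LE, which is an assumption about how the readable values in a .job file are encoded. The sample bytes are synthetic and do not represent the real file layout, and the function names are our own.

```python
import re

def utf16le_strings(data, min_chars=4):
    """Yield printable ASCII strings stored as UTF-16LE in a bytes object."""
    # Each character is one printable ASCII byte followed by a zero byte.
    pattern = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_chars)
    for match in pattern.finditer(data):
        yield match.group().decode("utf-16-le")

def strings_from_file(path, min_chars=4):
    """Read a file in binary mode and return its UTF-16LE strings as a list."""
    with open(path, "rb") as handle:
        return list(utf16le_strings(handle.read(), min_chars))

# Synthetic bytes for demonstration; not a real .job file.
sample = (
    b"\x01\x05\x00\x00"
    + r"C:\RECYCLER\passdump.bat".encode("utf-16-le")
    + b"\x00\x00\x07\x00"
    + "SYSTEM".encode("utf-16-le")
    + b"\x00\x00"
)
for text in utf16le_strings(sample):
    print(text)
```

This approach tells you what strings are present but not which field each one belongs to, which is why a format-aware parser is preferable whenever one is available.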

However, we recommend that you use a tool that properly parses the .job file format and reconstructs the configuration data therein. Jamie Levy’s jobparser.py, recently released as of this writing, does an excellent job of analyzing .job files across all major versions of Windows (see Figure 12-12).

GO GET IT ON THE WEB

Looking for other evidence that a scheduled task executed on a system of interest? The Last Modified time for the %SYSTEMROOT%\Tasks directory often corresponds to the most recent creation or completion of a task (resulting from the addition or deletion of the .job file). You may also find that registry keys for the Task Scheduler service are last modified during the most recent task execution.

Analyzing SchedLgU.txt

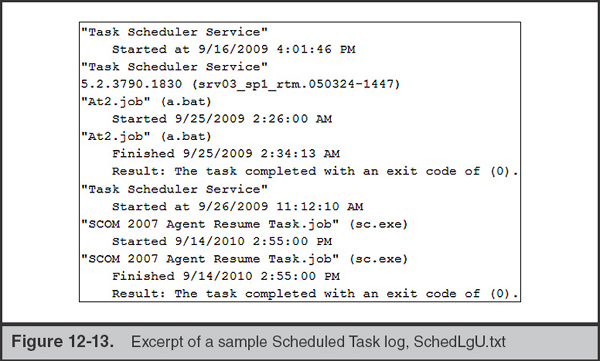

The SchedLgU.txt log can be a useful source of evidence for previously executed tasks, and is in fact the only such resource at your disposal when analyzing Windows 2000, Windows XP, and Server 2003 systems. Fortunately, analyzing this log is straightforward and requires no special tools (although you should be aware that its contents are encoded in Unicode). Figure 12-13 is an excerpt of SchedLgU.txt taken from a Windows XP system.
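When you need to review many of these logs, a short script can turn the text into records. The following Python sketch decodes the file as UTF-16 and pulls out start and finish entries. The entry layout it expects (a quoted task name, the command in parentheses, then an indented “Started” or “Finished” line with a timestamp) is an assumption that you should verify against your own logs. The sample text is a reconstruction based on the At2.job entry discussed in this section, not a verbatim excerpt.

```python
import re
from datetime import datetime

# Assumed entry layout; adjust the pattern if your log differs.
ENTRY = re.compile(
    r'^"(?P<task>[^"]+)" \((?P<command>[^)]*)\)\r?\n'
    r'\s+(?P<action>Started|Finished) (?P<when>[\d/]+ [\d:]+ [AP]M)',
    re.MULTILINE,
)

def parse_schedlgu(raw_bytes):
    """Decode SchedLgU.txt content and return a list of start/finish records."""
    text = raw_bytes.decode("utf-16")  # honors a byte-order mark if present
    entries = []
    for match in ENTRY.finditer(text):
        entries.append({
            "task": match.group("task"),
            "command": match.group("command"),
            "action": match.group("action"),
            "when": datetime.strptime(match.group("when"), "%m/%d/%Y %I:%M:%S %p"),
        })
    return entries

# Reconstructed sample under the assumed layout.
sample_log = (
    '"At2.job" (a.bat)\r\n'
    '\tStarted 9/25/2009 2:26:00 AM\r\n'
    '"At2.job" (a.bat)\r\n'
    '\tFinished 9/25/2009 2:34:13 AM\r\n'
).encode("utf-16")

for entry in parse_schedlgu(sample_log):
    print(entry["task"], entry["command"], entry["action"], entry["when"])
```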

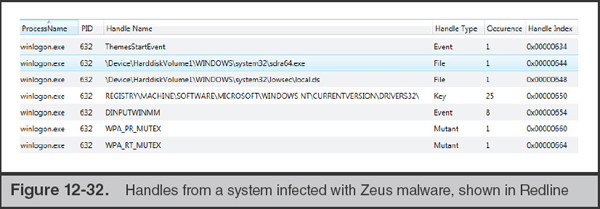

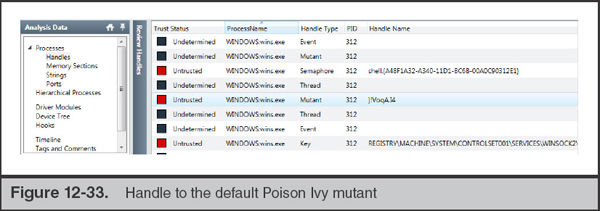

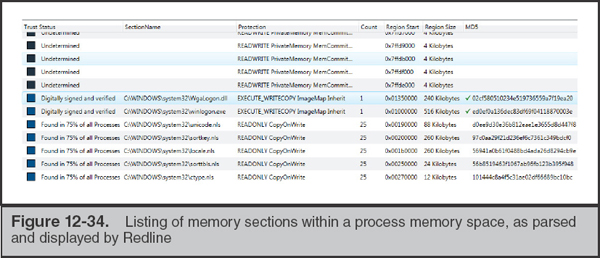

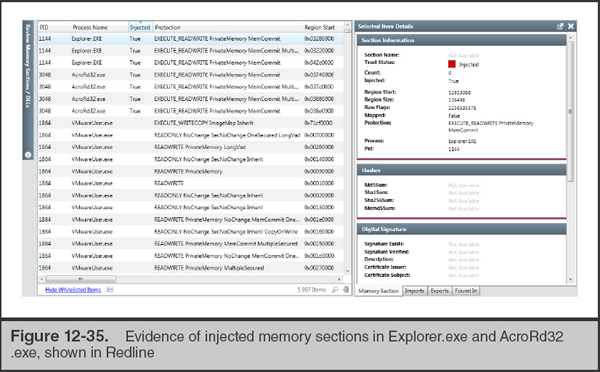

• An unnamed scheduled task, At2.job, executed a.bat on September 25, 2009 at 2:26:00 A.M. and ran until completing at 2:34:13 A.M.