8

Locating Potential Fraud

Fraud is deception that is perpetrated with either financial or personal gain in mind. Many people think about identity theft or credit card theft when thinking about fraud, but that’s only a very small part of the picture. A person claiming or being credited with another’s accomplishments or qualities is another form of fraud. The key word when thinking about fraud is deception, which takes many forms – for example, disinformation and hypocrisy. Consequently, it’s important to come up with a solid definition of what fraud is when working with ML applications and data, which is the goal of the first part of this chapter.

Determining fraud sources is essential with ML because fraud sources generate data – deceptive data. Yes, the data looks just fine, but when you study it in depth, it contains one or more of the five mistruths of data described in the Defining the human element section of Chapter 1, Defining Machine Learning Security. Using this data will cause hard-to-locate issues within your ML models and make it difficult to ensure a secure environment.

In the ML realm, fraud either occurs in the background or in real time. Background fraud is the type that a hacker or another individual hopes will go unnoticed while the perpetrator makes small changes a little at a time. This is the kind of fraud that eventually improves sales for one company, while starving another, because the data used for comparisons is just a little off or resources are being siphoned off. Real-time fraud (the kind that most people think about when the term fraud is mentioned) occurs quickly with the intent of obtaining a fast gain, such as identity theft. With these issues in mind, this chapter discusses these topics:

- Understanding the types of fraud

- Defining fraud sources

- Considering fraud that occurs in the background

- Considering fraud that occurs in real time

- Building a fraud detection example

Technical requirements

This chapter requires that you have access to either Google Colab or Jupyter Notebook to work with the example code. The Requirements to use this book section of Chapter 1, Defining Machine Learning Security, provides additional details on how to set up and configure your programming environment. When testing the code, use a test site, test data, and test APIs to avoid damaging production setups and to improve the reliability of the testing process. Testing over a non-production network is highly recommended, but not necessary. Using the downloadable source is always highly recommended. You can find the downloadable source on the Packt GitHub site at https://github.com/PacktPublishing/Machine-Learning-Security-Principles or my website at http://www.johnmuellerbooks.com/source-code/.

Understanding the types of fraud

Fraud entails deception. The kind of deception depends on the fraud being perpetrated. For example, a compelling product message could result in identity theft, stolen credentials, or other resource gains for the perpetrator. Creating a condition in which one entity receives the blame for another entity’s actions is another form of fraud. When considering fraud, it pays to have a Machiavellian mindset because the deception can become quite complex. However, the majority of fraud is quite simple: someone is deceived into giving someone else a resource the other wants for no apparent return. It amounts to a kind of theft.

There are many types of fraud. Some are watched by professionals, while others aren’t, possibly because no one thinks to monitor them. With this in mind, here are a few common types of fraud that professionals do monitor:

- Bank account takeover: The common way to perpetrate this fraud when using ML techniques is to install a key logger on a system that someone uses to interact with their bank account over a computer. However, it’s also possible to gain access to the information through the mail system, using a social engineering attack, or by hacking an unsecured Wi-Fi connection. The goal is to drain the person’s bank account or to perpetrate other forms of fraud using the person’s credentials. Constant monitoring can help with this kind of fraud and, interestingly enough, you could build an application to provide automated alerts.

- Charity and disaster: This particular kind of fraud often affects businesses with a benevolent urge directly. The disaster or charity is real, but the organization that is collecting funds or goods does the least that it can, so that little (if anything) of what is donated ends up going to the charity or disaster. An application can help in locating information about the organization doing the collecting. However, this is one case where human intervention is needed to keep the fraudsters at bay.

- Debit and credit card: There are many ways to perpetrate this fraud, including the use of card skimmers and persons of dubious character at restaurants and other locations making copies of your card. A man-in-the-middle attack works well for computer-based fraud. ML techniques can also be used to break into business databases to steal the information there. Having a chipped card does reduce the potential for fraud, but can’t eliminate it. Some sites recommend using a third-party purchasing agent such as PayPal, which does provide a measure of protection, but only if PayPal isn’t broken into by a hacker.

- Driver’s license: A driver’s license provides proof of identity for many situations, such as voting or boarding a plane. Often, you must provide a driver’s license when checking into a hotel or engaging in an activity. Recent advances in driver’s license construction have made copying a driver’s license harder, but not impossible. The Real ID (https://www.dhs.gov/real-id) initiative also helps. However, the technology doesn’t protect the actual number when used for online transactions, so there is still significant risk.

- Elderly people: Some fraud specifically targets the elderly because they’re considered easy prey, especially when it comes to navigating computer systems. Because elderly people leave breadcrumbs that ML algorithms can use to create a pattern for fraud, perpetrators have more than the usual amount of information available for a social engineering attack. One way around this problem would be to use ML methodologies to give everyone a greater level of protection from prying eyes.

- Healthcare: Someone can use someone else’s medical identification to obtain their care. ML could help thwart this type of attack by looking for inconsistent usage patterns. Two (or possibly more) people using the same ID will leave different usage trails, something that a human auditor might miss, but an ML application could be trained to see.

- Internet: Most of this book has explored the many ways hackers gain some advantage illegally through the internet. Many forms of fraud involve stealing user credentials and then using those credentials to make purchases. The products are then sold for cash. Sometimes, a hacker doesn’t even take that many steps – the stolen credentials are used to empty bank accounts or cause other problems for the user.

- Mail: It’s easy to use ML techniques to create a profile about people using data sources such as Facebook. The profile is then used to create a targeted letter that is sent through the mail system to fraudulently obtain money or other goods from the recipient. Because people don’t quite get the connection between paper mail and the internet, this particular kind of fraud can be quite successful. Another form of this kind of fraud is to create a profile of somebody who receives money in the mail and then steal the check. However, this approach is becoming far less common with the use of techniques such as a direct deposit.

- Phishing: The internet provides many methods for a smart fraudster to gain access to an entity’s personal or financial information through the use of social engineering attacks. It may be easy to think that this is an individual’s problem until it affects an organization in a big way when the individual loses security clearance, is sent to jail, blamed for another’s action in a way that reflects badly on the business, and so on. Unfortunately, phishing also affects organizations as a whole, so this isn’t just something that individuals need to worry about. Training does help with this sort of fraud, but keeping the sources of phishing out of the organization in the first place is better. The ML techniques mentioned throughout this book can help you look for patterns that indicate someone is employing phishing that you can avoid.

- Insurance: Insurance fraud is one in which someone makes a false claim. Damage may have occurred, but the claim is exaggerated or perhaps an accident is staged and hasn’t happened for real. In some cases, a third party will create a situation where some damage occurs, but the damage is self-inflicted, such as someone jumping in front of a car to make an insurance claim.

Email is fraud’s friend

This chapter could become a book if it were to cover all of the creative ways in which fraud occurs through email. One of the most famous forms of fraud is Nigerian Fraud. There are whole websites devoted to just this one form of fraud (see https://consumer.georgia.gov/consumer-topics/nigerian-fraud-scams as an example). There are also debt elimination, advanced fee, cashier check, high-yield investment, and a great many other email-based fraud attacks. They all have one thing in common: they use email to perform their tasks to take money or other resources from people. As such, monitoring the email stream to your organization using a combination of off-the-shelf and customized ML applications can reduce the problem by reducing these emails. Of course, there still has to be a mechanism in place for dealing with false positives, but targeting your defenses is a good way to reduce false positives as well. Oddly enough, one of the suggested methods for dealing with this problem that seems effective is to mark all emails that come from outside the organization with the word [EXTERNAL] in the subject line.

- Stolen tax refund: This is one of those weird, never would have thought of it, types of fraud. Someone steals credentials from someone else, fills out their taxes as soon as possible, and then claims the refund for themselves. When the real filer sends in their tax information, the IRS rejects it because the person has already filed. Automation can help prevent this problem by allowing a person to file their taxes early (cutting off any attempt at a fraudulent return).

- Voters: This is a kind of fraud that many people don’t fully understand. It’s more than simply getting the wrong person into office or spending money on bonds that no one wants. Voter fraud can and does shape all sorts of issues that affect everyone, such as new taxes or drawing new voting district lines. ML techniques could at least help in locating votes by people who have passed away or are currently too young to vote.

This list doesn’t include some types of common fraud that are unlikely to affect a business or its applications directly, such as adoption fraud, holiday scams, or money mules. However, because these kinds of fraud do affect your employees and make them less efficient because they’re thinking about something other than work, you also need to be aware of them and provide the required support. There are many other kinds of fraud that can take place and it’s important to remember that the people who perpetrate fraud can be quite creative. The simple rule to follow is that if something seems too simple, too good to be true, or simply too convenient, then it’s likely a fraud of some sort. A fraudster uses human weaknesses to their advantage.

Now that you have a better understanding of fraud types, it’s time to look at the source of fraud. Often, the source of fraud is the entity that is most trusted by the target. It’s easier to perpetrate fraud when there is a trust relationship. However, it’s important to understand that organizations have trust relationships with other organizations, organizations trust a particular application or service, and people trust other people. To understand the scope of fraud sources, it’s important to stretch the concept of trust to fit all sorts of scenarios.

Defining fraud sources

A fraud source is an entity that is generating deception. People are most often considered fraud sources, but organizations and even non-entities (things that someone would normally not consider an entity, such as a smart device) can become fraud sources as well. An application that is fed misinformation can become a fraud source by outputting the incorrect analysis of data input. A user is deceived into believing one thing is true when another thing is actually true. The deception isn’t intentional, but fraud occurs just the same. However, if the application contains a bug, then it’s broken and needs to be fixed; it isn’t a fraud source in this case. Organizational fraud usually involves a group of people working together to develop this deception. For example, an organization could try to attract investors for a product that doesn’t exist yet and will never exist (vaporware is specific to the computer industry because it involves non-existent hardware or software).

Distinguishing between types of fraud and fraud sources

Most articles online on this topic cover the different kinds of fraud. For example, identity theft is a type of fraud because it focuses on what an entity is doing. Unfortunately, without some knowledge of the potential sources for identity theft, the task of locating the culprit and ending the activity is made much harder. A fraudster is the primary perpetrator of identity theft, followed by hackers. You already have detailed knowledge of other organizations, company insiders, and customers (or fraudsters posing as customers; you don’t know until you ferret out their intentions), so the potential for identity theft from these sources is much less (or possibly non-existent) when compared to other sources. So, it’s important to know the type of fraud, but also important to know the potential fraud source so that you don’t spend a lot of time looking for a particular type of fraud from a source that’s unlikely to commit it.

Considering fraudsters

A fraudster is someone who perpetrates fraud solely for some type of gain, usually immediate gain. Fraud is just business to them. They aren’t out for revenge, to gain access to your organization, or any of the other motivations that can and do affect others who commit fraud. A fraudster uses some technique to gain a person’s or organization’s trust and then robs them of everything possible. However, some fraudsters take years to set up their fraud or they engage in it continually. There is a form of fraud called long-term fraud where a fraudster places small orders with a business and promptly pays for the goods to win the business’s trust. The fraudster then places one or more large orders and absconds with the goods without paying. ML techniques can look for patterns in the fraudster’s buying habits. For example, would any one organization need the eclectic assortment of goods that the fraudster is buying? Anomalies (as discussed in Chapter 6, Detecting and Analyzing Anomalies) can help detect these sorts of frauds because the fraudster isn’t buying for a business, but rather to create a reputation.

Of the kinds of input that these schemes produce, the Ponzi scheme (https://www.investor.gov/protect-your-investments/fraud/types-fraud/ponzi-scheme) is the most common for businesses because it affects people managing the businesses’ assets directly. The use of virtual currencies has made such Ponzi schemes incredibly easy to perpetrate and it may take a long time for anyone to see that there is any sort of problem. ML can help avoid this particular type of fraud by automating a paperwork review. A Ponzi scheme often provides payments that are too regular and hides details in copious paperwork that might be hard to analyze without a computer. Given that a human would take too long to spot the necessary patterns, ML is the only solution to augment human capability when dealing with lots of high-speed transactions using virtual currency.

Considering hackers

Hackers have a different set of goals from fraudsters when it comes to fraud. As discussed previously in this book, a hacker is usually trying to gain entry to your organization to perform some task, such as installing ransomware or stealing credentials. The hacker’s motivations can include personal gain or it may simply be business on the part of someone else who wants to access your business. Unlike fraudsters, you won’t have any actual contact with a hacker in most cases. The organization may receive an email, but that email won’t be personalized for the most part unless the hacker is perpetrating some type of personal attack. The goal is to blanket an organization in the hopes that someone will take the bait and leave your organization wide open. While a fraudster usually makes targeted attacks based on social engineering, a hacker can use any of the techniques described in Chapter 5, Keeping Your Network Clean, through Chapter 7, Dealing with Malware, to commit fraud based on automation, hitting as many targets as possible in the shortest time possible. These differences between fraudsters are clues that you can use when trying to detect a fraud source, whether you’re dealing with a fraudster or a hacker.

Considering other organizations

When dealing with fraud from other organizations, the prime motivators are espionage, sabotage, or stealing trade secrets (sometimes, there is a bit of revenge involved as well). The organization is using fraud to obtain something that your organization has that gives you some sort of advantage or that can be sold to someone else for a profit. Fraud between organizations tends to be more personalized than that provided by fraudsters because the members of each organization know each other. With this fact in mind, the kind of fraud is usually very personal based on what one party knows about the other.

Considering only electronic interactions (and setting aside personal interactions), it’s possible to use ML to look for patterns in data exchanges with the other organization. In this case, the main means of detection is fake data. The other organization will have enough information to avoid potential issues with anomalies or making randomized purchases. However, it’s still possible to use ML to detect fake data that the other organization generates to skew the target organization’s perception of it. This is one of the situations where using unsupervised learning techniques may be necessary, as described in Unsupervised Deep Learning for Fake Content Detection in Social Media at https://scholarspace.manoa.hawaii.edu/items/6d7560aa-2aff-4439-a884-35994e242c06. The same techniques work when reviewing other sorts of textual data exchanged between organizations.

Considering company insiders

Oddly enough, company insiders are often the most eager perpetrators of fraud. A company insider generally has good access to company assets and is familiar enough with company policies and procedures to avoid detection. However, the kinds of fraud that the company insiders perform are different because the focus of the fraud is different. For example, a company insider is unlikely to try to use someone else’s credit card to make a purchase and may not make any purchases at all (depending on what the company sells). Consequently, consider these kinds of fraud when looking at company insiders as a potential source:

- Asset misappropriation: Any time that someone steals something from the organization, it amounts to asset misappropriation. The most commonly stolen asset is money. For example, someone who is allowed to create and sign checks may create and sign a check for themselves. Likewise, an employee on a trip could misrepresent the costs for items purchased at the company’s expense while on the trip. ML can significantly automate any checks made to verify the actual amounts that employees are paid or spend, reducing the workload on auditors and ensuring accuracy. Difficulties can arise when third parties become involved in asset misappropriation because the third party (such as another organization) is better able to hide details that an ML application would need to detect. However, it’s still possible to locate potential sources of asset misappropriation through behavior monitoring and reviewing the change in individual or organizational patterns.

- Corruption schemes: This kind of fraud normally involves bribery, conflicts of interest, and kickbacks that somehow involve your organization, especially your organization’s reputation or monetary state. The problem with this sort of fraud is that it relies on personal relationships that don’t leave a paper trail. So, even behavior analysis may not reveal the information you need (at least, not completely). In this particular case, ML may provide some facts, but you need a human investigator to complete the task.

- Financial or other statement issues: This particular kind of fraud is a deliberate misrepresentation of an organization’s financial strength or abilities. While the previous kinds of fraud are commonly performed by employees, this kind of fraud is common to managers or owners. The methodology for the financial form of this kind of fraud includes manipulating revenue figures, delaying the recognition of expenses, or overstating inventory (among a great many other ways). Other types of statement fraud can include stating that an organization has a certain number of employees with a particular certification or has access to specific kinds of equipment. In general, someone outside the organization will expose this type of fraud. ML can help research and correlate facts, but this form of fraud requires a human investigator. One approach to ferreting out the required information is to perform comparisons between the target organization and its competitors to look for odd differences in performance or ability.

As you can see, these are still kinds of fraud, but they’re different because they would be harder for someone outside the organization to perpetrate due to a lack of knowledge and/or access. Make sure that you also consider the role third parties can play in company insider fraud. In addition, employees can be blackmailed into committing fraud by a third party, which complicates the situation even further. Given modern ML methods, it’s entirely possible that the target of a fraud investigation isn’t even involved, but is being blamed for the fraud activity by someone else (so, look for things such as misuse of credentials).

Considering customers (or fraudsters posing as customers)

Customers are the lifeblood of any business, so learning how to avoid fraud when dealing with customers is essential. A customer that wants to perpetrate fraud against your business will usually do so in the form of a scam. The idea is to get whatever is desired as quickly as possible and then disappear without a trace. With this in mind, here are typical scammer tactics you need to be aware of:

Customer or fraudster?

Some fraudsters start as customers and only perpetrate fraud when they see weakness on the part of the business, so there is a great amount of ambiguity here: you don’t always know when someone goes from being a customer to being a fraudster; a well-prepared business will avoid certain kinds of fraud through a show of strength. The addition of a sign saying “You’re on candid camera!” immediately below a surveillance camera has been shown to reduce theft. That camera could be attached to an ML application with facial recognition capabilities to make it easier for you to detect repeat offenders. With this ambiguity in mind, the following section uses the term customer because what you see is a customer until you find out otherwise.

- Trust environment: The customer will try to create some level of trust as quickly as possible so that you lower your defenses.

- Urgency: The customer will often create a situation in which you need to make a decision quickly. ML applications can help with this issue by reducing the time to perform in-depth research about the customer from multiple sources and also to provide some type of reading on the probability of a particular situation occurring.

- Intimidation and fear: The customer will make it seem as if something bad will happen if you don’t take advantage of an offer. For example, the customer may intimate that they’ll provide whatever it is to your competition instead. An expert system can provide a sanity check by providing a worst-case scenario thought process that will help reduce the potential for fear taking the place of reason.

- Untraceable payment methods: Once a deal is struck, the customer may want to use alternative payment methods such as wire transfers, reloadable cards, or gift cards to make payments. The right application can help you trace the payment method and help you determine whether it’s legitimate. Otherwise, this is probably one situation where you can just let a potential opportunity pass you by.

There are potential ways to use ML to help reduce the potential for customer fraud in your organization. One of the most important methods is to create and maintain a database of customer contacts with detailed information. A customer who wants to perpetrate fraud will often try multiple employees, looking for one that is more open to creating a trust environment. You can also use this database to search for odd customer contact patterns that don’t match other customers that you deal with. ML applications can also validate customers in several ways:

- Ensuring that the person on the phone is actually who they say they are because their caller ID can easily be faked.

- Verifying that email addresses and websites are legitimate. Typos in addresses are a common method of misdirecting searches and checks. Avoid opening such sites because they often download malware to the caller’s system.

- Securing every aspect of your network, APIs, and other computer resources using ML techniques found in previous chapters and then monitoring these resources constantly using other ML applications.

- Researching the customer by checking online reviews, rating systems, and so on. Calling colleagues who may have dealt with the customer is always a good addition to automated checks.

- Checking whether the service, goods, or other items of interest are free. It’s the old idea of someone trying to sell someone else the Brooklyn Bridge.

- Performing onboarding, which relies on a series of both human and automated controls to verify and validate customers.

The actual techniques that customers use to defraud your business vary, but they tend to fall into one of several areas. The following list provides ideas of what you should look for:

- Fake invoices: The customer provides an invoice for a service or product that your organization uses, except that you didn’t receive the invoiced product or service. A good database design wouldn’t allow payment for products or services without a corresponding request. However, you can also rely on ML to analyze the database logs to look for scam patterns where a customer employs more than one person to commit the scam.

- Things you didn’t order: The customer calls and asks whether you want a free item such as a catalog. You accept the offer and receive it, along with the merchandise you didn’t order, in the mail. The customer then tries to high-pressure you into paying for the goods. A good database application will reveal that you didn’t create a purchase order for the merchandise. However, given the numerous methods that businesses now employ to make purchases, you may need an ML application to search all of the potential places in which an order could have been made.

- Directory listings and advertising: The customer tries to interest you in a directory listing or advert that does not actually exist. Banner ads are especially prone to this scam because it’s hard to verify that they’re being displayed. Screen scraping techniques and an ML application designed to analyze the related advertising can ensure that you are getting the amount of advertising or the directory listings that you’re paying for.

- A utility company, government agent, or other professional contact imposters: Customers will often pose as someone that they’re not to create a trust relationship. Any method that you can use to verify the person’s identity will help reduce the potential for fraud.

- Tech support: There are several ways to perpetrate this particular fraud, but it comes down to convincing someone in your organization that they need some type of technical support to fix a problem that doesn’t exist. The scam itself may consist of a one-time payment to fix this problem, or possibly a maintenance plan to keep it from happening again. Often, the goal is not the payment or the maintenance plan, but rather to gain access to sensitive information on your network, which is worth a lot more.

- Coaching and training scams: The customer promises to provide you with coaching or training that will greatly improve your organization’s income. They use false testimonials and other methods to convince you that the techniques work, except that they don’t. This is an especially prevalent scam for new businesses. Finding a service, usually ML-based, to help you separate the wheat from the chaff is the only way to get past this particular scam if you do feel that coaching or training will be helpful.

- Payment processing or acceptance scams: This category includes things such as credit card processing and equipment scams and the use of fake checks. Most of these scams focus on getting customers to pay what they owe or obtaining payment for lower processing costs. Possibly the best advice here is the idea that There Is No Such Thing As A Free Lunch (TINSTAAFL) (see https://www.investopedia.com/terms/t/tanstaafl.asp for details). Whether it’s creating a model for your ML application, developing a new product, selling to customers, or getting paid, there is a cost involved and no one gets by for free.

As you can see, customers use several highly successful approaches to defrauding businesses. This list doesn’t even include the social engineering, phishing, and ransomware attacks discussed in other chapters. Many of these scams can be tracked down with the assistance of an ML application. In addition, using expert systems to create smart scripts so that employees know what process to follow when working with customers can make a huge difference in ensuring that things go smoothly and don’t necessarily require a lot of management time to solve.

Obtaining fraud datasets

To create effective ML models for your organization, you need to train the model using real data or something that approximates real data. The best choice for a dataset is one that your organization gathers, but this is a time-consuming process and you may not generate enough data to produce a good dataset. With this in mind, you can use any of several fraud datasets available online. One of the best places to get this sort of dataset if you need several different types is the FDB: Fraud Dataset Benchmark at https://github.com/amazon-research/fraud-dataset-benchmark. This one download provides you with access to nine different fraud datasets as listed on the website. You can read the goals of creating this dataset at https://www.linkedin.com/posts/groverpr_fdb-fraud-dataset-benchmark-activity-6970921322067427328-fNCo.

It’s tough, perhaps impossible, to create a single grouping of datasets that answers all fraud detection needs. The Building a fraud detection example section of this chapter uses an entirely different dataset to demonstrate actual credit card fraud based on sanitized data from a real-world dataset. Any public dataset you get will likely be sanitized already (and you need to exercise care in downloading or using any dataset that isn’t already sanitized). Here are some other dataset sites you should visit:

- Amazon Fraud Detector (fake data that looks real): https://docs.aws.amazon.com/frauddetector/latest/ug/step-1-get-s3-data.html

- data.world: https://data.world/datasets/fraud

- Machine Learning Repository (Australian Credit Approval): https://archive.ics.uci.edu/ml/datasets/Statlog+%28Australian+Credit+Approval%29

- Machine Learning Repository (German Credit Data): https://archive.ics.uci.edu/ml/datasets/statlog+(german+credit+data)

- OpenML (CreditCardFraudDetection): https://www.openml.org/search?type=data&sort=runs&id=42175&status=active

- Papers with Code (Amazon-Fraud): https://paperswithcode.com/dataset/amazon-fraud

- Papers with Code (Yelp-Fraud): https://paperswithcode.com/dataset/yelpchi

- Synthesized (Fraud Detection Project): https://www.synthesized.io/data-template-pages/fraud-detection

- US Treasury Financial Crimes Enforcement Unit: https://www.fincen.gov/fincen-mortgage-fraud-sar-datasets

Note that older datasets are often removed without much comment from the provider. Two examples that are often cited by researchers and data scientists are the Kaggle dataset at https://www.kaggle.com/dalpozz/creditcardfraud and the one at http://weka.8497.n7.nabble.com/file/n23121/credit_fruad.arff. Both of these datasets are gone and it isn’t worth pursuing them because there are others. The reason this is important is that the previous list may become outdated at some point, but because fraud is such a common and consistent problem, there will be others to take their place.

Now that you’ve considered all of the kinds of fraud sources and perhaps reviewed a few fraud datasets for use in your research, it’s time to look at the slow type of fraud that occurs in the background. A background fraud scenario is the most dangerous kind of fraud because it happens slowly over a certain period. In some cases, this sort of fraud is never found out except accidentally as part of an audit or other research that has nothing to do with the fraud in question. Of course, this kind of fraud also requires patience on the part of the deceiver, so it can also be the hardest kind of fraud to perpetrate successfully.

Considering fraud that occurs in the background

Niccolo Machiavelli, the person from whose name the term Machiavellian is derived, is one of those individuals who observed human nature with great patience and in great depth in many cases. Unlike the perception that many have of him being a scoundrel of the worst sort, he was a philosopher who saw human nature as it was at the time when it came to politics. Background fraud is often Machiavellian in nature. It has an “ends justifies the means” view of the world and can be utterly immoral in its approach to obtaining some goal through the use of deception. In other words, yelling that something isn’t fair is unlikely to garner any sort of response in this situation. The following sections define the kinds of background fraud and how to detect it.

Detecting fraud that occurs when you’re not looking

There are many different definitions for the difference between background, or long-term, fraud and real-time, or short-term, fraud. When viewing long-term fraud strictly from a financial perspective, many experts view it as a scenario where a fraudster makes lots of small purchases and pays for them to build trust and then makes one or two large purchases, but doesn’t pay for them. By the time anyone thinks to look for the fraudster, the products have been sold for cash, and the fraudster is gone.

Because the term long-term fraud has a specific meaning in many financial circles, this book uses the term background fraud to indicate fraud that happens over weeks or months in a manner that the fraudster hopes no one will notice until it’s too late to do anything about it. The key difference between any sort of fraud and an attack is that fraud relies on deception – it appears to be one thing when it’s another. For example, the Using supervised learning example section of Chapter 5, Keeping Your Network Clean, represents a kind of attack because no deception is employed during the probing process. To change that example into a kind of fraud, the hacker would need to make completely legitimate calls exclusively during the learning process from what appears to be a legitimate caller, and then commit an act that would derive some sort of tangible benefit once the learning process is done by using API calls to manipulate the database in some manner (as an example). Perhaps the hacker would obtain company secrets from the database to sell to a competitor. Consequently, the technique shown in Chapter 5, Keeping Your Network Clean, would have a slim chance of working because the model wouldn’t necessarily have time to recognize a change in pattern.

Detecting background fraud often involves more detailed detective work that relies on some sort of targeted knowledge. Looking again at the example from Chapter 5, Keeping Your Network Clean, a form of fraud might be detected by reviewing the IP addresses making calls to the API and comparing them to a list of IP addresses that could make legitimate requests. As an alternative, it might be possible to trace the IP addresses to a specific caller. Reviewing the history and background of the caller might reveal some anomalies that would point to fraud. Yes, automation plays a very big role in helping a human track the caller down and check into the caller’s information, but eventually, a human has to decide on the viability of the caller because that’s something automation can’t do.

Building a background fraud detection application

There are some types of fraud that can be tracked using pattern recognition models such as the one described in Chapter 5, Keeping Your Network Clean. For example, if a fraudster wanted to slowly manipulate the price of a product to obtain financial gain, the act of manipulating the price would create a pattern of some sort that an ML application could detect, given a model that is trained using enough examples (there are a lot of caveats here, so make sure you pursue this approach with care).

It’s also important to think small in some cases when it comes to fraud. For example, loyalty rewards may seem like a very small sort of fraud to commit, but according to Kount (https://kount.com/blog/how-to-prevent-loyalty-fraud/), the value of just the unredeemed loyalty rewards in a given year may amount to $160 billion. Fraudsters perform an Account Takeover (ATO) attack to gain access to royalty rewards using stolen credentials in many cases. The reason that this is such perfect fraud is that many people never check their royalty rewards information; they just assume that the business will automatically apply a loyalty reward at the cash register when one is available. Consequently, the fraudster has a significantly reduced chance of being caught when perpetrating this form of fraud. An ML application could help in this case by flagging customer accounts that usually have no access, but are currently experiencing a surge in access. In addition, the ML application could monitor the customer’s loyalty rewards for large withdrawals of benefits in a short time.

Now that you have a better idea of how background fraud works, it’s time to look at something with a faster turnaround: real-time fraud. Real-time fraud is marked by impatience; the quick use of deception to make a fast gain, even if that gain isn’t substantial. The idea is that even a small gain is worthwhile if there are enough small gains.

Considering fraud that occurs in real time

Real-time fraud is marked by a certain level of impatience and often requires quick thinking to pull off. Real-time fraud usually infers a kind of interaction that is performed by an entity that can perpetrate the fraud and then become inaccessible (usually by changing venue). In addition, real-time fraud normally relies on social engineering, a lack of knowledge, or some type of artifice. The following sections provide insights into real-time fraud and its detection.

Considering the types of real-time fraud

Real-time fraud, a term this book uses to indicate a kind of fraud that occurs within hours or possibly days (or sometimes even seconds), targets quick gains with little effort on the part of the fraudster. Here are a few real-time fraud types to consider:

- Email phishing: The fraudster commonly sends what appears to be a legitimate email to a user with some method of invoking fraud that antivirus or antispam software can’t detect. One such approach is the use of a malformed URL to take the user to the fraudster’s site where the user enters confidential information, such as credentials. The best way to avoid this kind of fraud is to ignore the message, but users will often click on the link in an email without thinking about it first. Consequently, the best way to avoid this fraud is to keep the user from seeing the email in the first place, which normally involves two levels of protection:

- Authentication checks: Where the identity of the sender is verified. The Developing a simple authentication example section of Chapter 4, Considering the Threat Environment, provides some ideas on how to implement this solution.

- Network-level checks: The use of filters to keep the message out of the site. The most common approaches are the use of whitelists, blacklists, and pattern matching. The example in the Developing a simple spam filter example section of Chapter 4, Considering the Threat Environment, provides you with some ideas on how to implement this approach.

- Payment fraud: The fraudster somehow obtains a payment card or its information. In many cases, this type of fraud requires the use of stolen or counterfeit cards. The use of chipped cards has helped reduce this form of fraud, but no method of protection is absolute or foolproof. Pattern matching and logical checks can also detect this sort of fraud. For example, it’s physically impossible for someone to use a card in one location, and then use it in another location 500 miles away just a few minutes later. Humans monitoring the system can’t pick up clues such as this; you need an ML application and a database with specific triggers set on it to look for illogical entries and then look for a pattern that indicates the fraud source.

- ID document forgery: It’s hard to detect fraud when the ID used to verify a person’s identity appears legitimate because a fraudster has expertly copied the original. There are techniques in place to detect this kind of fraud based on the ID itself, but fraudsters are continually improving their skill at creating fake IDs. One possible way around this kind of fraud would be to have cameras at the site where the ID is used to capture the person’s image and then compare it to the legitimate image on file. Of course, fraudsters are already looking at ways around facial recognition, as we will see in Chapter 10, Considering the Ramifications of Deepfakes. It’s also possible to use pattern recognition to look for behavioral anomalies. The problem is that trying to get some of these techniques to work in real time is going to be quite hard. Customers balk over the smallest delays in any transaction, so making the check promptly is important. What you need to consider is your business model and the kinds of checks that it can support. For example, a bank could more easily accommodate a facial recognition check than a grocery store, which would likely rely on some type of pattern matching and possibly historical data.

- Identity theft: Identity theft differs from ID document forgery in that the fraudster focuses on the user’s accounts in some way. Yes, credentials are stolen, but the goal is to gain access to some second-tier level of access, such as a bank account. There are three common types of identity theft: real name theft (where the fraudster acts in the victim’s stead), ATO (where the fraudster actually takes over the user’s account and usually seals the user out), and synthetic (where the fraudster combines real information with fake information to create a new identity).

There are many other kinds of real-time fraud. Fraudsters are extremely skilled at coming up with the next confidence trick (con) to play on unwitting victims. So, the people that are protecting others from the fraudster have to be equally skilled and quite fast. The next section provides some ideas on the tools that someone can use to aid in detection.

Detecting real-time fraud

The act of detecting real-time fraud as it occurs is really hard because everything happens so quickly. Humans have developed intuitive approaches to knowing when fraud is occurring based on clues that ML has yet to pick up on (and may never pick up on). For example, there is some evidence to suggest that odors can indicate that a person is lying. According to the article, Artificial networks learn to smell like the brain (https://news.mit.edu/2021/artificial-networks-learn-smell-like-the-brain-1018), this approach is still a work in progress. Gamblers often rely on a person’s tell (behavior change) to know what appears in the person’s hand. What this amounts to is a kind of facial recognition over time. Chapter 10 will tell you that ML is getting closer to good facial recognition, but this too is a work in progress. So, what can ML do for you today to help mitigate real-time fraud?

- Physical inspection: Humans can look at an ID and not see any problem with it. A scanned image provided to an ML application can locate small (possibly imperceptible) problems and flag the ID as fake. The same technique also holds for any other kind of document, including money.

- Smart scripting based on the situation: Rule-based systems are inflexible because they require hardcoded rules to determine a course of action. However, they can provide a basis for smart scripting where a rattled employee can remember the recommended process for dealing with fraud based on the current situation.

- Constantly upgraded models using unsupervised learning: Normally, you want to use labeled data when building a model because of the chances for an increase in accurate analysis. However, unsupervised models are better with constantly changing situations because they learn without using labels (which means the learning is more automatic). The downside is that an unsupervised model will present more false positives.

The set of features commonly used for real-time fraud detection is reduced compared to those used for background fraud detection, partly because less data is available and partly because time is of the essence. Here are the four features most commonly used to detect real-time fraud:

- Identity: The main method for avoiding real-time fraud is to ensure that the entity is who they say they are. Most of the time, you look for an ID, but online transactions require confirmation of the credentials provided. Something as small as a purchase from a new device or the use of a new address can provide a clue that the credentials need further investigation, which will be provided by your ML application.

The effect of using multiple devices

The use of different devices by a single individual today commonly triggers an email to the authorized person, an indicator that tracking the device does work.

- Amount: Purchases generally follow a routine of sorts. Trips to the grocery store usually follow within a few dollars of each other unless there is some sort of special event (and even then, the total won’t be outrageously different). Time at the coffee shop or a night at a restaurant also tends to follow patterns. It’s when the amount of a purchase is much higher or much lower than normal that you need to check further. An amount can also mean the number of items or the type of items purchased. Consider the amount as being a quantifier, rather than a specific value.

- Location: Even online purchases are normally performed from the same location. Any time that the buyer starts changing locations radically, it could be an indicator of fraud (or that they simply moved to a new house). Some credit card companies will contact a purchaser about a change in location to verify that the purchase was made by them from a different location. Of course, since someone can’t be in more than one location at a time, purchases from more than one location in a given timeframe are almost certainly indicative of fraud.

- Frequency: Habits are often considered bad, but they can also protect a potential victim. A fraudster can grab credentials and try to use them successfully, but a change in credential usage frequency can often signal potential fraud.

As you can see from this list, it’s not a perfect setup for detecting absolutely every kind of fraud because there is a potential for fraudsters to slip through the cracks. A highly motivated fraudster could verify that the identity used is perfect, make purchases only within the range that the target would make, spoof the location of the purchase, and ensure that they followed the person’s habits within a reasonable range. Of course, that’s a lot of ifs to consider, but it could happen. The point is that observing these four characteristics as part of an ML application will greatly reduce the potential for fraud.

Now that you have some basis for understanding the nature of fraud detection, the next section looks at a specific fraud detection example. In this case, you will see how to detect fraud in credit card purchases.

Building a fraud detection example

This section will show you how to build a simple fraud detection example using real sanitized credit card data available on Kaggle. The transactions occurred in September 2013 and there are 492 frauds out of 284,807 transactions, which is unbalanced because the number of frauds is a little low for training a model. The data has been transformed by Principal Component Analysis (PCA) using the techniques demonstrated in the Relying on Principle Component Analysis section of Chapter 6, Detecting and Analyzing Anomalies. Only the Amount column has the original value in it. The Class column has been added to label the data. You can also find the source code for this example in the MLSec; 08; Perform Fraud Detection.ipynb file of the downloadable source.

Getting the data

The dataset used in this example appears at https://www.kaggle.com/datasets/mlg-ulb/creditcardfraud?resource=download. The data is in a 69 MB .zip file. Download the file manually and unzip it into the source code directory. Note that you must obtain a Kaggle subscription if you don’t already have one to download this dataset. Getting a subscription is easy and free; check out https://www.kaggle.com/subscribe.

Setting the example up

This example begins with importing the data, which requires a little work in this case. The following steps show you how to do so:

- Import the required packages:

import pandas as pdfrom sklearn.preprocessing import StandardScaler

- Import the creditcard.csv data file. Note that this process may require a minute or two, depending on the speed of your system. This is because the unzipped file is 150.83 MB:

cardData=pd.read_csv("creditcard.csv") - Check the dataset’s statistics:

total_transactions = len(cardData)normal = len(cardData[cardData.Class == 0]) fraudulent = len(cardData[cardData.Class == 1]) fraud_percentage = fraudulent/normal print(f'Total Number Transactions: {total_transactions}') print(f'Normal Transactions: {normal}') print(f'Fraudulent Transactions: {fraudulent}') print(f'Fraudulent Transactions Percent: ' f'{fraud_percentage:.2%}')

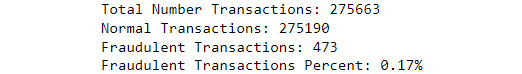

The number of actual fraudulent transactions is smaller, accounting for only 0.17% of the transactions, as shown in Figure 8.1:

Figure 8.1 – Output showing the transaction statistics

- Look for any potential null values. Having null values in the data will skew the model and cause other problems:

cardData.info()

Figure 8.2 shows that there are no null values in the dataset. If there had been null values, then you would need to clean the data by replacing the null values with a specific value, such as the mean of the other entries in the column. The same thing applies to missingness, which is missing data in a dataset and sometimes indicates fraud. You need to replace the missing value with some useful alternative:

Figure 8.2 – Dataset output showing a lack of missing or null values

- Determine the range of the Amount column. The Amount column is the only unchanged column from the original dataset:

print(f'Minimum Value: {min(cardData.Amount)}')print(f'Mean Value: ' f'{sum(cardData.Amount)/total_transactions}') print(f'Maximum Value: {max(cardData.Amount)}') print(cardData['Amount'])

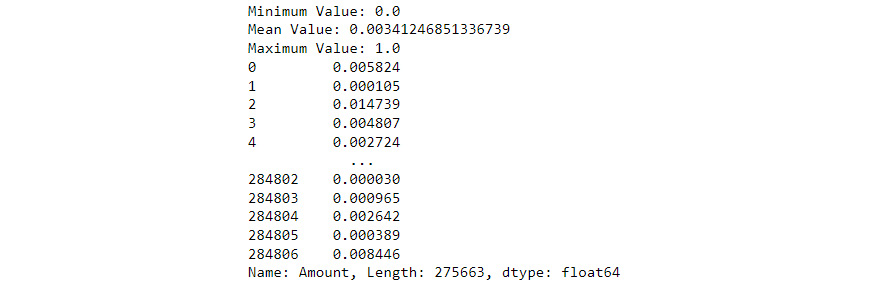

If the Amount column has too great a range, as shown in Figure 8.3, then the model will become skewed. In this case, the standard practice is to scale the data to obtain better results:

Figure 8.3 – Output showing variance in the Amount column

- Remove the Time column. For this analysis, time isn’t a factor because the model isn’t concerned with when credit card fraud occurs; the concern is detecting it when it does occur. The output of this code, (284807, 30), shows that the Time column is gone:

cardData.drop(['Time'], axis=1, inplace=True)print(cardData.shape)

- Scale the dataset to avoid negative values in any of the columns. Some classifiers work well with negative values, while others don’t. For example, if you want to use a DecisionTreeClassifier, then negative values aren’t a problem. On the other hand, if you want to use a MultinomialNB, as was done in the Developing a simple spam filter example section of Chapter 4, Considering the Threat Environment, then you need positive values. Selecting a scaler is also important. This example uses a MinMaxScaler to allow the minimum and maximum range of values to be set:

scaler = MinMaxScaler(feature_range=(0,1))col = cardData.columns cardData = pd.DataFrame( scaler.fit_transform(cardData), columns = col) print(cardData)

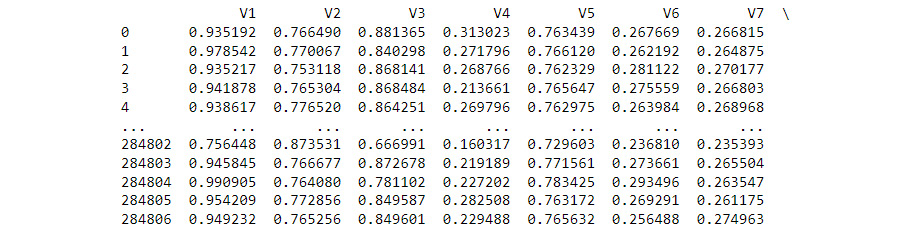

Figure 8.4 shows the partial results of the scaling operation:

Figure 8.4 – Presentation of data values after scaling

- Remove any duplicates. Removing the duplicates ensures that any entries in the dataset that replicate another entry don’t remain in place and cause the model to overfit and it won’t generalize well. This means that it won’t catch as many fraudulent transactions from data it hasn’t seen. The output of (275663, 30) shows that there were 9,144 duplicated records:

cardData.drop_duplicates(inplace=True)print(cardData.shape)

- Determine the final Amount column’s characteristics:

print(f'Minimum Value: {min(cardData.Amount)}')print(f'Mean Value: ' f'{sum(cardData.Amount)/total_transactions}') print(f'Maximum Value: {max(cardData.Amount)}') print(cardData['Amount'])

Now that the dataset has been massaged, it’s time to see the result, as shown in Figure 8.5:

Figure 8.5 – Output of the Amount column after massaging

A lot of ML comes down to ensuring that the data you use is prepared properly to create a good model. Now that the data has been prepared, you can split it into training and testing sets. This process ensures that you have enough data to train the model, and then test it using data the model hasn’t seen before so that you can ascertain the goodness of the model (something you will see later in this process).

Splitting the data into train and test sets

Splitting the data into training and testing sets makes it possible to train the model on one set of data and test it using another set of data that the model hasn’t seen. This approach ensures that you can validate the model concerning its goodness in locating credit card fraud (or anything else for that matter). The following steps show how to split the data in this case:

- Import the required packages:

from sklearn.model_selection import train_test_split

- Split the dataset into data (X, which is a matrix, so it appears in uppercase) and labels (y, which is a vector, so it appears in lowercase):

X = cardData.drop('Class', axis=1).valuesy = cardData['Class'].values print(X) print(y)

When you print the result, you will see that X is indeed a matrix and y is indeed a vector, as shown in Figure 8.6:

Figure 8.6 – Contents of the training and testing datasets

- Divide X and y into training and testing sets. There are many schools of thought as to what makes for a good split. What it comes down to is creating a split that provides the least amount of variance during training, but then tests the model fully. In this case, there are plenty of samples, so using the 80:20 split that many people use will work fine:

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1) print(f"X training data size: {X_train.shape}") print(f"X testing data size: {X_test.shape}") print(f"y training data size: {y_train.shape}") print(f"y testing data size: {y_test.shape}")

Figure 8.7 shows that the data is split according to the 80:20 ratio and that the training and testing variables have the same number of entries:

Figure 8.7 – The output shows that the data is split according to an 80:20 ratio

Now that the data is in the correct form, you can finally build a model. Of course, that means selecting a model and configuring it. For this example, you will use DecisionTreeClassifier. However, there are a wealth of other models that could give you an edge when working with various kinds of data.

Considering the importance of testing model goodness

The whole issue of data splitting, selecting the correct model, configuring the model correctly, and so on, comes down to getting a model with very high accuracy. This is especially important when doing things such as looking for malware or detecting fraud. Unfortunately, no method or rule of thumb provides a high level of accuracy in every case. The only real way to tweak your model is to change one item at a time, rebuild the model, and then test it.

Building the model

As you’ve seen in other chapters, building the model involves fitting it to the data. The following steps show how to build the model using a minimal number of configuration changes:

- Import the required packages:

from sklearn.tree import DecisionTreeClassifier

- Fit the model to the data. Notice that the one configuration change here is to limit the max_depth setting to 5. Otherwise, the classifier will keep churning away until all of the leaves are pure or they meet the min_samples_split configuration setting requirements:

dtc = DecisionTreeClassifier(max_depth = 5)dtc.fit(X_train, y_train)

You’ll know that the process is complete when you see the output shown in Figure 8.8:

Figure 8.8 – Description of the DecisionTreeClassifier model

You now have a model to use to detect credit card fraud. Of course, you have no idea of how good that model is at its job. Perhaps it’s not very generalized and overfitted to the data. Then again, it might be underfitted. The next section shows how to verify the goodness of the model in this case.

Performing the analysis

Having a model to use means that you can start detecting fraud. Of course, you don’t know how well you can detect fraud until you test it using the following steps:

- Import the required packages:

from sklearn.metrics import accuracy_scorefrom sklearn.metrics import precision_recall_fscore_support from sklearn.metrics import confusion_matrix from sklearn.metrics import plot_confusion_matrix import matplotlib.pyplot as plt

- Perform a prediction using the X_test data that wasn’t used for training purposes. Use this prediction to verify the accuracy by comparing the actual labels in y_test (showing whether a record is fraudulent or not) to the predictions found in yHat (ŷ):

dtc_yHat = dtc.predict(X_test)print(f"Accuracy score: ' f'{accuracy_score(y_test, dtc_yHat)}")

Figure 8.9 shows that the accuracy is very high:

Figure 8.9 – Output of the DecisionTreeClassifier model accuracy

- Determine the precision (ratio of correctly predicted positive observations to the total of the predictive positive observations), recall (ratio of correctly predicted positive observations to all observations in the class), F-beta (the weighted harmonic mean of precision and recall), and support scores (the number of occurrences of each label in y_test). These metrics are important in fraud work because they tell you more about the model given that the dataset has a few positives and a lot of negatives, making the dataset unbalanced. Consequently, accuracy is important, but these measures are also quite helpful for fraud work:

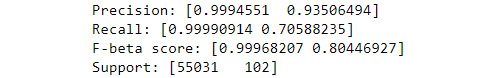

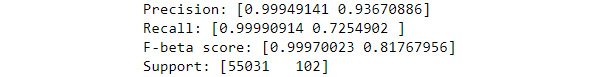

precision, recall, fbeta_score, support = precision_recall_fscore_support(y_test, dtc_yHat) print(f"Precision: {precision}") print(f"Recall: {recall}") print(f"F-beta score: {fbeta_score}") print(f"Support: {support}") - Figure 8.10 shows the output from this step. Ideally, you want a precision as close as possible to 1.0. The output shows that the precision is 0.9994551 for predicting when a transaction isn’t fraudulent and a value of 0.93506494 when a transaction is fraudulent. For recall, there are two classes: not fraud and fraud. So, the ratio of correctly predicting fraud against all of the actual fraud cases is only 0.70588235. The F-beta score is about weighting. The example uses a value of 1.0, which means that recall and precision have equal weight in determining the goodness of a model. If you want to give more weight to precision, then you use a number less than 1.0, such as 0.5. Likewise, if you want to give more weight to recall, then you use a number above 1.0, such as 2.0. Finally, the support output simply tells you how many non-fraud and fraud entries there are in the dataset:

Figure 8.10 – Output of the precision, recall, F-beta, and support statistics

- Print a confusion matrix to show how the prediction translates into the number of entries in the dataset:

print(confusion_matrix(y_test, dtc_yHat, labels=[0, 1]))

Figure 8.11 shows the output. The number of true positives appears in the upper-left corner, which has a value of 55025. Only 29 of the records generated a false positive. There were 73 true negatives and six false negatives in the dataset. So, the chances of finding credit card fraud are excellent, but not perfect:

Figure 8.11 – The confusion matrix output for the DecisionTreeClassifier model prediction

- Create a graphic version of the confusion matrix to make the output easier to understand. Using graphic output does make a difference, especially when discussing the model with someone who isn’t a data scientist:

matrix = plot_confusion_matrix(dtc, X=X_test, y_true=y_test, cmap=plt.cm.Blues) plt.title('Confusion Matrix for Fraud Detection') plt.show(matrix) plt.show()

Figure 8.12 shows the output in this case:

Figure 8.12 – A view of the graphic version of the confusion matrix

This section has shown you one complete model building and testing cycle. However, you don’t know that this is the best model to use. Testing other models is important, as described in the next section.

Checking another model

The decision tree classifier does an adequate job of separating fraudulent credit purchases from those that aren’t, but it could do better. A random forest classifier is a group of decision tree classifiers. In other words, you put multiple algorithms to work on the same problem. When the classification process is complete, the trees vote and the classification with the most votes wins.

In the previous example, you used the max_depth argument to determine how far the tree should go to reach a classification. Now that you have a whole forest, rather than an individual tree, at your disposal, you also need to define the n_estimators argument to define how many trees to use. There are a lot of other arguments that you can use to tune your model in this case, as described in the documentation at https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html. The following steps help you create a random forest classifier model so that you can compare it to the decision tree classifier used earlier:

- Import the required packages:

from sklearn.ensemble import RandomForestClassifier

- Fit the model to the data and display its accuracy. Note that this step will take a while to complete because you’re using a whole bunch of trees to complete it. If your system is taking too long, you can always reduce either or both of the max_depth and n_estimators arguments. Changing either of them will affect the accuracy of your model. Of the two, max_depth is the most important for this particular example, but in the real world, you’d need to experiment to find the right combination. The purpose of the n_jobs=-1 argument is to reduce the amount of time to build the model by using all of the processors on the system:

rfc = RandomForestClassifier(max_depth=9, n_estimators=100, n_jobs=-1) rfc.fit(X_train, y_train) rfc_yHat = rfc.predict(X_test) print(f"Accuracy score: " f"{accuracy_score(y_test, rfc_yHat)}")

Figure 8.13 shows that even though it took longer to build this model (using all of the processors no less), it performs only slightly better than the decision tree classifier. In this case, the contributing factors are the small dataset and the fact that the number of fraud cases is small. However, even a small difference is better than no difference at all when it comes to fraud and you need to consider that a real-world scenario will be dealing with far more entries in the dataset:

Figure 8.13 – The accuracy of the random forest classifier

- Calculate the precision, recall, fbeta_score, and support values for the model:

precision, recall, fbeta_score, support = precision_recall_fscore_support(y_test, rfc_yHat) print(f"Precision: {precision}") print(f"Recall: {recall}") print(f"F-beta score: {fbeta_score}") print(f"Support: {support}")

As with accuracy, the differences (as shown in Figure 8.14) between the two models are very small, but important when dealing with fraud. After all, you don’t want to claim a customer has committed fraud unless it’s true:

Figure 8.14 – The precision, recall, F-beta, and support scores for the random forest classifier

- Plot the confusion matrix for this model:

matrix = plot_confusion_matrix(rfc, X=X_test, y_true=y_test, cmap=plt.cm.Blues) plt.title('Confusion Matrix for RFC Fraud Detection') plt.show(matrix) plt.show()

Figure 8.15 shows what it all comes down to in the end. The random forest classifier has the same prediction rate for transactions that aren’t fraudulent as the decision tree classifier in this case. However, it also finds one more case of fraud, which is important:

Figure 8.15 – The confusion matrix for the random forest classifier

This example has looked at credit card fraud, but the same techniques work on other sorts of fraud as well. The main things to consider when looking for fraud are to obtain a large enough amount of data, train the model using your best assumptions about the data, and then test the model for accuracy. Tweak the model as needed to obtain the required level of accuracy. Of course, no model is going to be completely accurate. While ML will greatly reduce the burden on human detectives looking for fraud, it can’t eliminate the need for a human to look at the data entirely.

Creating a ROC curve and calculating AUC

A Receiver Operating Characteristic (ROC) curve and Area Under the Curve (AUC) calculation help you determine where to set thresholds in your ML model. These are methods of looking at the performance of a model at all classification thresholds. The X-axis shows the false positive rate, while the Y-axis shows the true positive rate. As the true positive rate increases, so does the false positive rate. The goal is to determine where to place the threshold for determining whether a particular sample is fraudulent or not based on its score during analysis. A score indicates the model’s confidence as to whether a particular sample is fraud or not, but the model doesn’t determine where to place the line between fraud and legitimate; that line is the threshold. Therefore, a ROC curve helps a human user of a model determine where to set the threshold, and where to say that it’s best to detect fraud.

The True Positive Rate (TPR) defines the ratio between true positives (TP) (as shown in Figure 8.11, Figure 8.12, and Figure 8.15) and false negatives (FN): TPR = TP / TP + FN. The False Positive Rate (FPR) defines the ratio between the false positives (FP) and the true negatives (TN): FPR = FP / FP + TN. Essentially, what you’re trying to determine is how many true positives are acceptable for a given number of false positives when working with a model.

As part of plotting a ROC curve, you also calculate the AUC, which is essentially another good measure for the model. It’s a measure of overall performance against all classification thresholds; the higher the number, the better the model. The AUC is a probability measure that tells you how likely it is that the model will rank a random positive example higher than a random negative example. Consequently, the MultinomialNB classifier used in an earlier example would have an AUC of 0, which means it never produces a correct detection. With all of these things in mind, use the following steps to create two ROC curves comparing DecisionTreeClassifier to the RandomForestClassifier classifier used earlier:

- Import the required packages:

from sklearn.metrics import roc_curvefrom sklearn.metrics import auc from numpy import argmax from numpy import sqrt

- Perform the required DecisionTreeClassifier analysis. Notice that this is a three-step process of calculating the probabilities for each value in X_test, determining the FPR and TPR values, and then estimating the AUC value. dtc_thresholds will be used in a later location to determine the best-calculated place to put the threshold for this model:

dtc_y_scores = dtc.predict_proba(X_test)dtc_fpr, dtc_tpr, dtc_thresholds = roc_curve(y_test, dtc_y_scores[:, 1]) dtc_roc_auc = auc(dtc_fpr, dtc_tpr)

- Perform the required RandomForestClassifier analysis. Notice that this is the same process we followed previously:

rfc_y_scores = rfc.predict_proba(X_test)rfc_fpr, rfc_tpr, rfc_thresholds = roc_curve(y_test, rfc_y_scores[:, 1]) rfc_roc_auc = auc(rfc_fpr, rfc_tpr)

- Calculate the best threshold for each of the models and display the generic mean (G-mean) for each one. The best-calculated threshold represents a balance between sensitivity, which is the TPR, and specificity, which is 1 - FPR. To calculate this value, the code must perform the analysis for each threshold; then, a call to argmax() will report the best option based on the calculation:

dtc_gmeans = sqrt(dtc_tpr * (1-dtc_fpr))dtc_ix = argmax(dtc_gmeans) print('Best DTC Threshold=%f, G-Mean=%.3f' % (dtc_thresholds[dtc_ix], dtc_gmeans[dtc_ix])) rfc_gmeans = sqrt(rfc_tpr * (1-rfc_fpr)) rfc_ix = argmax(rfc_gmeans) print('Best RFC Threshold=%f, G-Mean=%.3f' % (rfc_thresholds[rfc_ix], rfc_gmeans[rfc_ix]))

Figure 8.16 shows the result of the calculation for each model:

Figure 8.16 – Calculated best threshold and G-mean values for each model

- Create the ROC curve plot and display the AUC values:

plt.title('Receiver Operating Characteristic')plt.plot(dtc_fpr, dtc_tpr, 'g', label = 'DTC AUC = %0.2f' % dtc_roc_auc) plt.plot(rfc_fpr, rfc_tpr, 'b', label = 'RFC AUC = %0.2f' % rfc_roc_auc) plt.plot([0, 1], [0, 1],'r--', label = 'No Skill') plt.scatter(dtc_fpr[dtc_ix], dtc_tpr[dtc_ix], marker='o', color='g', label='DTC Best') plt.scatter(rfc_fpr[rfc_ix], rfc_tpr[rfc_ix], marker='o', color='b', label='RFC Best') plt.legend(loc = 'lower right') plt.xlim([0, 1]) plt.ylim([0, 1]) plt.ylabel('True Positive Rate') plt.xlabel('False Positive Rate') plt.title('ROC Curve Comparison DTC to RFC') plt.show()

The output of this example appears in Figure 8.17. Notice that the RFC model outperforms the DTC in this particular case by a small margin. In addition, the plot shows where you’d place the threshold for each model. Given the data and other characteristics of this example, once the model has achieved a maximum value, there is little advantage in increasing the threshold further:

Figure 8.17 – The ROC curve and AUC calculation for each model

Of course, you won’t likely always see this result. The main takeaway from this example is that you need to compare models and settings to determine how best to configure your ML application to detect as much fraud as possible without creating an overabundance of false positives.

Summary

This chapter introduced you to the topic of fraud as it applies to ML. The key takeaway from this chapter is that fraud involves deception for some type of gain. Often, this deception is completely hidden and subtle; sometimes, the gain is even hard to decipher unless you know how the gain is used. Fraud affects ML security by introducing flawed data into the dataset, which produces unreliable or unpredictable results that are skewed to the perpetrator’s goals. In addition, because the data is unreliable, it also presents a security risk.

When reviewing the security needs of an organization, it’s important to consider both background and real-time fraud. Depending on your organization, one form of fraud or the other may take precedence. For example, a marketing company with no direct consumer interaction would need to consider background fraud more strongly. Likewise, an online seller would need to consider real-time fraud more strongly. Tailoring the type of fraud detection used is incredibly important to make detection both precise and efficient.

Chapter 9, Defending Against Hackers, moves on to defending against direct hacker attacks. Previous chapters have considered what might be termed hacker agents, such as network interference, the introduction of anomalies, the reliance on malware, and now the use of fraud in this chapter. In Chapter 9, the hacker will become actively engaged in what could be termed either sabotage or espionage. It’s the sort of attack seen in movies, but not in the way that movies depict them. You may be amazed at just how subtle a hacker’s machinations against your organization can become.

Further reading

The following bullets provide you with some additional reading that you may find useful in understanding the materials in this chapter:

- Discover how deep learning is already being used to extract information from ID cards: ID Card Digitization and Information Extraction using Deep Learning – A Review: https://nanonets.com/blog/id-card-digitization-deep-learning/

- Understand how organizations suffer phishing attacks: How to Prevent Phishing on an Organizational Level: https://www.tsp.me/blog/cyber-security/how-to-prevent-phishing-on-an-organizational-level/

- Uncover Ponzi schemes using ML techniques: Data-driven Smart Ponzi Scheme Detection at https://arxiv.org/pdf/2108.09305.pdf and Evaluating Machine-Learning Techniques for Detecting Smart Ponzi Schemes at https://ieeexplore.ieee.org/document/9474794

- Read about the man who did sell the Brooklyn Bridge and other monuments: Meet the Conman Who Sold the Brooklyn Bridge — Many Times Over: https://history.howstuffworks.com/historical-figures/conman-sold-brooklyn-bridge.htm

- Learn about the no free lunch theorem as it applies to ML: No Free Lunch Theorem for Machine Learning: https://machinelearningmastery.com/no-free-lunch-theorem-for-machine-learning/

- Learn more about the historical basis of Niccolo Machiavelli: Italian philosopher and writer Niccolo Machiavelli born at https://www.history.com/this-day-in-history/niccolo-machiavelli-born and Machiavelli at https://www.history.com/topics/renaissance/machiavelli

- Gain an understanding of how AI is used to detect government fraud: Using AI and machine learning to reduce government fraud: https://www.brookings.edu/research/using-ai-and-machine-learning-to-reduce-government-fraud/

- See how accuracy, precision, and recall relate: Accuracy, Precision, Recall & F1 Score: Interpretation of Performance Measures: https://blog.exsilio.com/all/accuracy-precision-recall-f1-score-interpretation-of-performance-measures/

- Gain a better understanding of the F-beta measure: A Gentle Introduction to the Fbeta-Measure for Machine Learning: https://machinelearningmastery.com/fbeta-measure-for-machine-learning/

- Consider how long-term fraud detection and protection improve business productivity and profit: How long-term digital fraud protection can increase profitability: https://kount.com/blog/long-term-fraud-protection-benefits/

- Pick up techniques to help determine when someone is lying: Become a Human Lie Detector: How to Sniff Out a Liar: https://www.artofmanliness.com/character/behavior/become-a-human-lie-detector-how-to-sniff-out-a-liar/

- Discover credit card statistics that demonstrate why fraud detection is so important: 42 credit card fraud statistics in 2022 + steps for reporting fraud: https://www.creditrepair.com/blog/security/credit-card-fraud-statistics/