CHAPTER 11

Enterprise Security and Data Visibility in Master Data Management Environments

As we stated in previous chapters, a core component of any Master Data Management solution is its data integration platform, which is designed to provide a complete and accurate view of one or several data domains, including the customer, product, account, and so on. MDM solutions for Customer Domain (sometimes referred to as CDI) and MDM solutions for Product Domain are perfect examples of such platforms.

By design, an MDM Data Hub contains a wealth of information about individuals, companies, or products, all in a convenient, integrated form, and therefore represents an attractive target for various forms of information security attacks. As a repository that maintains the authoritative system of record for the enterprise, an MDM Data Hub requires extra levels of protection for the information it manages.

The preceding chapters discussed various concerns and approaches to the protection of the information content.

This chapter will focus on protecting and controlling access to the Data Hub and the information stored there.

Access Control Basics

In general, when we talk about controlling access to a resource, be it a computer, a network device, a program, or an application, we intuitively accept that such control should be based on individual or group credentials and permissions that are defined according to the security policies.

As we showed in the previous chapters, credentials are acquired or assigned through a process called provisioning and are then verified through a process called authentication. We also discussed that authentication can be weak or strong, single-factor or multifactor. Whatever the approach, the end result of the authentication action is twofold: the user is identified using supplied credentials such as a user ID and password, a hardware token, or a biometric token; and the user’s identity is verified (authenticated) by comparing the supplied credentials with the ones stored in an authentication store such as an enterprise directory.

However, authentication alone does not solve the access control portion of the security equation unless authenticated users are given full access to all resources in the system for which they are authenticated.

In practice, most users should have access to some but not all resources, and we need a way to limit each user’s access rights based on authorization rules that take into account the authenticated credentials and the security policy in force at the time of the access request. To state it differently, each authenticated user should be able to access only those resources that he or she is authorized to use. The authorization decision is based on who the user is and on the security policy that defines the access.

This decision-making process would be easy to implement and enforce if an enterprise defined an individual policy for each user. Clearly, this approach is not realistic, since the number of authenticated users can easily reach thousands (for example, employees and contractors) or even millions in the case of customers of large enterprises or government agencies. Managing such a large number of policies is a labor-intensive and error-prone activity that can also create significant security vulnerabilities.

One way to “avoid” creating and managing this large policy set is to implement access control logic inside the applications. Many applications have traditionally been developed with these access control capabilities as a part of their application logic—an approach that historically has proved to be expensive, inflexible, and hard to maintain.

A better approach is to abstract the authorization functionality away from applications into a common set of authorization functions. This approach delivers a much more flexible and scalable solution. There are design patterns that implement common authorization functionality while reducing the number of policies that need to be managed. The goal of these designs is to provide sufficient flexibility, scalability, and fine-grained control over access to functions and data. Naturally, these techniques and design options are the domain of the security discipline called authorization. And as we stated in previous chapters, authentication, authorization, access control, and related concerns including entitlements and provisioning are all components of the information security discipline known as Identity and Access Management, or IAM.

Groups and Roles

One of the main principles in reducing the required number of policies is to introduce the notion of user groups and roles, and to make access decisions based on a user’s membership in groups and/or assignment of one or more roles. Since the number of groups and roles is typically much smaller than the overall number of users, this approach can be quite effective. Groups can help reduce errors in permissions and opportunities for a user to have unnecessary and potentially dangerous access.

Some of the group-based approaches to a common authorization facility are based on what is known as Access Control Lists (ACLs), where the typical ACL structure maintains a name-value pair, with the “name” being a user or group identifier and the “value” being the reference or address of the resource this user or group can access, together with the set of actions the user or group is allowed to perform. For example, an ACL may contain a list of functional groups such as Managers, HR Administrators, and Database Administrators, where each employee is assigned to one or more groups. Each group has one or more resources it is allowed to access, with the specific access authorization (Create, Read, Update, Delete, Execute [CRUDE]). Such ACL structures have been widely implemented in many production environments protected by legacy security systems, including Resource Access Control Facility (RACF), ACF2, and Top Secret. Popular operating systems such as UNIX and Windows (for example, the ACL-controlled Windows Registry) also use Access Control List–based authorization schemas.
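To make the name-value structure concrete, the following Python sketch attaches an ACL to a single protected resource and checks a request against the user’s own identifier and the user’s group memberships. The class and function names, and the payroll example, are hypothetical illustrations rather than the structure used by RACF, ACF2, or any particular operating system.

```python
from dataclasses import dataclass, field

# The five CRUDE actions: Create, Read, Update, Delete, Execute.
CRUDE = {"C", "R", "U", "D", "E"}

@dataclass
class AccessControlList:
    """ACL attached to one resource: principal (user or group) -> allowed actions."""
    entries: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, principal: str, actions: set[str]) -> None:
        if not actions <= CRUDE:
            raise ValueError(f"unknown actions: {actions - CRUDE}")
        self.entries.setdefault(principal, set()).update(actions)

def is_allowed(acl: AccessControlList, user: str, groups: set[str], action: str) -> bool:
    """Allow the action if the user, or any group the user belongs to, holds it."""
    principals = {user} | groups
    return any(action in acl.entries.get(p, set()) for p in principals)

# Hypothetical resource protected by group-level entries.
payroll_db = AccessControlList()
payroll_db.grant("HR Administrators", {"C", "R", "U"})
payroll_db.grant("Managers", {"R"})
```

With these entries, a member of Managers may read the payroll resource but not update it, while a member of HR Administrators may do both.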

ACLs work well in situations with well-defined and stable (persistent) objects, such as the IBM DB2 subsystem or the Windows System Registry. In these cases, the ACL can be mapped to the object, and access decisions can be made based on group membership and the CRUDE contents of the ACL. However, ACLs have some well-documented shortcomings. Among them are the following items:

• The ACL model does not work well in situations where the authorization decisions are often based not only on the group membership but also on business logic. For example, an authorization may depend on the value of a particular attribute (for instance, outstanding payment amount), where if a value exceeds a predefined threshold it can trigger a workflow or an authorization action.

• A common administrative task of reviewing and analyzing access given to a particular user or group can become difficult and time-consuming as the number of objects/resources grows, since the ACL model will require reviewing every object to determine if the user has access to the object. Generally speaking, the administration of large ACL sets can become a management nightmare.

• ACLs do not scale well with an increase in the number of users/groups and resources. Similarly, ACLs do not scale well as the granularity of the user authorization requests grows from a coarse to a fine level. In other words, to take full advantage of the group metaphor, the administrator would create a small number of groups and assign all users to some or all of the groups. However, if the organization has a relatively large number of individuals that are organized in a number of small teams with a very specialized set of access permissions, the ACL administrator would have to define many additional groups, thus creating the same scalability and manageability issues stated in the preceding list. An example of the last point can be a brokerage house that defines groups of traders specialized in a particular financial instrument (for example, a specific front-loaded mutual fund). The business rules and policies would dictate that these traders can only access databases that contain information about this particular mutual fund. To support these policies, the ACL administrator may have to define a group for each of the specialized instruments.
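The review and scalability problems described in the preceding list can be seen in a few lines of Python. In this sketch (resource and group names are hypothetical, modeled on the brokerage example), ACLs are stored per resource, so answering “what can this user do?” requires visiting every ACL, and each specialized trading team needs its own group.

```python
# Hypothetical ACLs keyed by resource: resource -> {principal: set of CRUDE actions}.
# Note the one-group-per-fund pattern that the brokerage example leads to.
acls: dict[str, dict[str, set[str]]] = {
    "fund_A_positions": {"Traders-FundA": {"R", "U"}},
    "fund_B_positions": {"Traders-FundB": {"R", "U"}},
    "fund_C_positions": {"Traders-FundC": {"R", "U"}},
    "hr_records": {"HR Administrators": {"C", "R", "U", "D"}},
}

def review_access(principals: set[str]) -> dict[str, set[str]]:
    """Report what the given principals may do by scanning every ACL.

    ACLs are attached to objects, so there is no per-user index: the
    cost of this review grows with the number of protected resources.
    """
    report: dict[str, set[str]] = {}
    for resource, entries in acls.items():
        actions: set[str] = set()
        for principal in principals:
            actions |= entries.get(principal, set())
        if actions:
            report[resource] = actions
    return report
```

Adding a fourth fund means adding both a new resource entry and a new group, which is the proliferation the brokerage example describes.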

Roles-Based Access Control (RBAC)

A more scalable and manageable approach to authorization is to use user roles as the basis for the authorization decisions. Roles tend to be defined and approved using a more rigorous and formalized process known as roles engineering, which can enable the enterprise to better align the roles with the security policies. The approach and technologies of providing access control based on user credentials and roles are known as Roles-Based Access Control (RBAC). Although some of the goals of Roles-Based Access Control can be accomplished via permission groups, we will focus on RBAC since it offers a framework to manage users’ access to the information resources across an enterprise in a controlled, effective, and efficient manner. The goal of RBAC is to allow administrators to define access based on a user’s job requirements or “role.” In this model an administrator follows a two-step process:

1. Define a role in terms of the access privileges for users assigned to a given role.

2. Assign individuals to a role.

As a result, the administrator controls access permissions to the information assets at the role level. Permissions can be queried and changed at the role level without touching the objects that have to be protected. Once the security administrator establishes role permissions, changes to these permissions will be rare compared to changes in the assignment of users to roles.
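The two-step RBAC process can be sketched in Python as two separate mappings: role to permissions (step 1) and user to roles (step 2). The role names and (resource, action) pairs below are hypothetical; the point is that an access check never consults a per-user policy.

```python
from collections import defaultdict

# Step 1: define each role in terms of its access privileges,
# expressed as (resource, action) pairs. Names are illustrative.
role_permissions: dict[str, set[tuple[str, str]]] = {
    "teller": {("customer_profile", "R"), ("deposit_txn", "C")},
    "branch_manager": {("customer_profile", "R"), ("customer_profile", "U"),
                       ("deposit_txn", "C"), ("deposit_txn", "R")},
}

# Step 2: assign individuals to roles.
user_roles: dict[str, set[str]] = defaultdict(set)
user_roles["alice"].add("teller")
user_roles["bob"].add("branch_manager")

def check(user: str, resource: str, action: str) -> bool:
    """A user is authorized if any of the user's roles grants (resource, action)."""
    return any((resource, action) in role_permissions.get(role, set())
               for role in user_roles.get(user, set()))
```

Changing what every teller may do is a single edit to `role_permissions`; hiring a new teller is a single edit to `user_roles`. This separation is what makes role permissions stable while user assignments change frequently.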

Clearly, for RBAC to be effective the roles of the users have to be clearly defined and their access permissions need to be closely aligned with the business rules and policies.

Roles-Engineering Approach

When we discuss user roles, we need to recognize that similarly named roles may have different meanings depending on the context in which the roles and permissions are used. For example, we may differentiate between roles in the context of the Organization, Segment, Team, and Channel:

• The Organization role defines the primary relationship between the user and the organization. Examples of Organization roles include Employee, Customer, Partner, and Contractor. Each user can have only one Organization role for a given set of credentials.

• The Segment role defines the user’s assignment to a business domain or segment. For example, in financial services a segment could be defined by the total value of the customer’s portfolio, such as high-priority customers, active traders, and so on. Each of these segments can offer its members different types of products and services. Segment roles can be set either statically or dynamically, and each user may be assigned to multiple Segment roles.

• The Team role defines the user’s assignment to a team, for example, a team of software developers working on a common project, or a team of analysts working on a particular M&A transaction. The Team role can be set either statically or dynamically. Each user may be assigned multiple Team roles.

• The Channel role defines user permissions specific to a channel (channel in this context means a specific platform, entry point, application, and so on). For example, a user may have a role of a plan administrator when having personal interactions with the sales personnel (for instance, a financial advisor). At the same time, in the self-service online banking channel the same user can be in the role of a credit card customer who can perform online transactions including funds transfers and payments. Each user may be assigned multiple Channel roles.
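One way to capture these four role contexts in code is a small per-credential record that enforces exactly one Organization role while allowing many Segment, Team, and Channel roles. The Python sketch below is an illustration under that assumption; the class name, the allowed Organization values, and the channel names are hypothetical.

```python
from dataclasses import dataclass, field

ORGANIZATION_ROLES = {"Employee", "Customer", "Partner", "Contractor"}

@dataclass
class ContextRoles:
    """Roles held under one set of credentials, grouped by context.

    Exactly one Organization role; any number of Segment, Team, and
    Channel roles. Channel roles are keyed by channel name.
    """
    organization: str
    segments: set[str] = field(default_factory=set)
    teams: set[str] = field(default_factory=set)
    channels: dict[str, set[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.organization not in ORGANIZATION_ROLES:
            raise ValueError(f"unknown Organization role: {self.organization}")

    def roles_in_channel(self, channel: str) -> set[str]:
        """The same user can hold different roles in different channels."""
        return self.channels.get(channel, set())

# The user from the Channel example: plan administrator with an advisor,
# credit card customer in online banking.
jane = ContextRoles(
    organization="Customer",
    segments={"active traders"},
    channels={"financial advisor": {"plan administrator"},
              "online banking": {"credit card customer"}},
)
```

Storing the Organization role as a single field, rather than a set, makes the “only one per set of credentials” rule structural instead of something each application has to check.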

The role classification shown in the preceding list is only one example of how to view and define the roles. More formally, we need a rigorous roles-engineering process that would help define the roles of the users in the context of the applications and information assets they need to access.

There are a number of methodological approaches and references1 on how to define roles and to engineer roles-based access controls. The details of these approaches are beyond the scope of this book. However, it would be helpful to further clarify these points by reviewing some key role-designing principles:

• The roles need to be defined in the context of the business and therefore should not be done by the IT organization alone.

• Like any other complex cross-functional activity, the roles-engineering process requires sound planning and time commitment from both business and IT organizations. Planning should start with a thorough understanding of security requirements, business goals, and all the components essential to the implementation of the RBAC.

• Some core roles-engineering components include:

• Classes of users

• Data sources

• Applications

• Roles

• Business rules

• Policies

• Entitlements

The last three components are discussed in greater detail later in this chapter.

Sample Roles-Engineering Process Steps

The roles-engineering process consists of several separate steps necessary to build the access management policies. The following steps illustrate how the process can be defined:

• Analyze the set of applications that would require role-based access controls. This analysis should result in a clear inventory of application features and functions, each of which can be mapped to a specific permission set.

• Identify a target user base and its context dimensions (organizations, segment, team, and so on). Use a generally accepted enterprise-wide authoritative source of user credentials to perform this task, for example, a human resources database for employees.

• Create role definitions by associating target functional requirements with a target user base.

Once the role definitions are created, we recommend identifying and mapping business rules that should be used for user-role assignment. For example, a rule may state that a user is assigned the role of a teller if the HR-supplied credentials are verified and if the user operates from the authorized channel (for example, a branch). Another example can be a business rule that states that an employee does not get assigned a Segment role.
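The two sample assignment rules can be expressed as a small function over user attributes. In this Python sketch, the `UserContext` fields, the set of authorized teller channels, and the `segment:` prefix are hypothetical; the logic follows the two rules stated above.

```python
from dataclasses import dataclass

@dataclass
class UserContext:
    # Hypothetical attributes drawn from the authoritative source (HR)
    # and from the current session.
    user_id: str
    hr_verified: bool
    organization_role: str   # e.g. "Employee", "Customer"
    channel: str             # e.g. "branch", "online"

AUTHORIZED_TELLER_CHANNELS = {"branch"}

def assign_roles(ctx: UserContext, requested_segments: set[str]) -> set[str]:
    """Apply the two sample user-role assignment rules."""
    roles: set[str] = set()
    # Rule 1: teller requires HR-verified credentials and an authorized channel.
    if ctx.hr_verified and ctx.channel in AUTHORIZED_TELLER_CHANNELS:
        roles.add("teller")
    # Rule 2: employees are never assigned a Segment role.
    if ctx.organization_role != "Employee":
        roles |= {f"segment:{s}" for s in requested_segments}
    return roles
```

Keeping assignment rules in one place like this, instead of inside each application, lets the business review and change them as policy rather than as code scattered across systems.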

RBAC Shortcomings

While roles-based access control can be very effective in many situations and represents a good alternative to Access Control Lists, it does have a number of shortcomings. Some of these shortcomings are described in the following list:

• Roles-Based Access Control does not scale well as the granularity of protected resources grows. In other words, if you need to define access controls to sensitive data at the record or even attribute level, you would have to define a set of roles that maps to each possible data record and/or attribute, resulting in a very large and difficult-to-manage set of roles.

• Roles can be difficult to define in a uniform, enterprise-wide fashion, especially if the enterprise does not have a formal process that guides the creation and differentiation of roles. For example, an enterprise may define an analyst role in its marketing department and a similarly named analyst role in its IT department, even though these roles represent different capabilities, responsibilities, compensation, and so on.

• Roles alone do not easily support access constraints where different users with equal roles have access only to a portion of the same data object. This particular requirement is often called “data visibility” and is discussed in more detail later in this chapter.

Policies and Entitlements

The limitations of Roles-Based Access Control that we discussed in the preceding section make it difficult to implement many complex business-driven access patterns. These access patterns are particularly important when you consider all the potential uses of a Master Data Management environment. MDM designers should consider these use cases and usage patterns in conjunction with the need to protect information from unauthorized access. At the same time, MDM solutions should streamline, rationalize, and enable new, more effective and efficient business processes. For example, consider an MDM Data Hub implementation that is positioned to replace a number of legacy applications and systems that support customer management processes. In this case, in order to take full advantage of the MDM Data Hub as the system of record for customer information, an enterprise should change several core processes (for example, the account-opening process). So, instead of accessing legacy data stores and applications, a modified account-opening process should use the MDM Data Hub’s data, services, and RBAC-style access controls as a centralized trusted facility. But in a typical enterprise deployment scenario, existing legacy applications contain embedded, business-rules-driven access control logic that may go beyond RBAC. Thus, the Data Hub cannot replace many legacy systems without first analyzing that embedded access control logic and replicating it in the Data Hub. This means that all access decisions against the Data Hub need to be evaluated based on the user attributes, the content of the data to be accessed, and the business rules that take all these conditions into consideration before returning a decision.

Analysis of typical legacy applications shows that access permissions logic uses a combination of user roles and the business rules, where the business rules are aligned with or derived from the relevant security and business policies in place.

Providing flexible access control solutions that overcome the known RBAC limitations is not simply a matter of replacing Roles-Based Access Control with Rules-Based Access Control (by analogy with RBAC, we abbreviate Rules-Based Access Control as RuBAC). Indeed, RuBAC can implement and enforce arbitrarily complex access rules and thus offers a viable approach to providing fine-grained access control to data. However, RuBAC does not scale well as the rule set grows. Managing large rule sets is at least as complex a problem as managing the large role sets we described in the RBAC discussion.

Therefore, a hybrid roles-and-rules-based access control (RRBAC) may be a better approach to solving access control problems. This hybrid approach to defining access controls uses more complex and flexible policies and processes that are based on user identities, roles, resource entitlements, and business rules. The semantics and grammar of these policies can be quite complex, and are the subject of a number of emerging standards. Examples of these standards are the OASIS WS-Security2 standard and W3C WS-Policy.3 The latter is a Web Services standard allowing users to create an XML document that unambiguously expresses the authorization rules.
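The hybrid idea can be sketched in Python as two layers: a small role table that grants coarse permissions, and business rules attached to specific (action, resource) pairs that must also hold. The role names, the loan example, and the 50,000 threshold are hypothetical, and this is an illustration of the combination rather than the WS-Policy encoding mentioned above.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Request:
    user: str
    roles: set[str]
    action: str
    resource: str
    attributes: dict  # request/resource attributes consulted by rules

Rule = Callable[[Request], bool]

# Role layer: coarse, relatively static permissions.
ROLE_PERMS: dict[str, set[tuple[str, str]]] = {
    "loan_officer": {("approve", "loan")},
    "teller": {("read", "loan")},
}

# Rule layer: business conditions attached to specific (action, resource) pairs.
RULES: dict[tuple[str, str], list[Rule]] = {
    ("approve", "loan"): [lambda r: r.attributes.get("amount", 0) <= 50_000],
}

def decide(req: Request) -> bool:
    """Permit only if some role grants the action AND every attached rule holds."""
    key = (req.action, req.resource)
    role_ok = any(key in ROLE_PERMS.get(role, set()) for role in req.roles)
    return role_ok and all(rule(req) for rule in RULES.get(key, []))
```

Roles keep the number of rules small (a rule only needs to exist where the role grant is not sufficient on its own), while rules supply the conditions that pure RBAC cannot express.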

Entitlements Taxonomy

As we just stated, the key concepts of the hybrid approach are policies, identity-based entitlements, roles, and rules. We already discussed roles in the preceding sections of this chapter. However, the discussion on the hybrid approach to access controls would not be complete if we don’t take a closer look at policies and entitlements.

Whenever a user requests access to a resource, the appropriate policy acts as a “rule book” that maps the request to that user’s entitlements.

For example, you may create an entitlement for your college-age daughter stating that she has permission to withdraw money from your checking account as long as the withdrawal amount does not exceed $500. In this example, we established the party (daughter), object (checking account), nature of the permission or the action (withdrawal), and the condition of the permission ($500 limit).

It is easy to see that entitlements contain both static and dynamic components, where static components tend to stay unchanged for prolonged periods of time. The value of the dynamic component (for example, a $500 limit), on the other hand, may change relatively frequently and will most likely be enforced by applying business rules at run time.
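The college-age daughter example can be modeled by separating the static part of an entitlement (party, object, action) from its dynamic limit. In this Python sketch, the class names are hypothetical; the point is that the limit can be changed without touching the underlying grant and is checked only at run time.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class Entitlement:
    # Static components: these tend to stay unchanged for long periods.
    party: str
    obj: str
    action: str

class EntitlementStore:
    """Keeps static grants separate from dynamic limits evaluated at run time."""

    def __init__(self) -> None:
        self._grants: set[Entitlement] = set()
        self._limits: dict[Entitlement, Decimal] = {}

    def grant(self, ent: Entitlement, limit: Decimal) -> None:
        self._grants.add(ent)
        self._limits[ent] = limit

    def set_limit(self, ent: Entitlement, limit: Decimal) -> None:
        # Dynamic component: may change often without re-granting.
        self._limits[ent] = limit

    def is_permitted(self, party: str, obj: str, action: str, amount: Decimal) -> bool:
        ent = Entitlement(party, obj, action)
        return ent in self._grants and amount <= self._limits[ent]

store = EntitlementStore()
withdrawal = Entitlement("daughter", "checking account", "withdraw")
store.grant(withdrawal, Decimal("500"))
```

A withdrawal of $300 is permitted and one of $650 is not; raising the limit with `set_limit` changes that outcome while the static grant stays the same.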

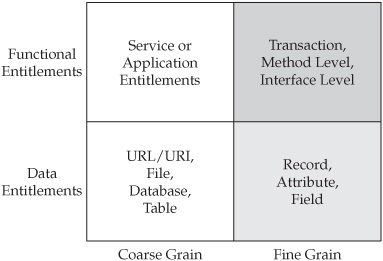

Another interesting observation about entitlements is that they can be categorized, on one hand, as functional or data entitlements and, on the other hand, as coarse- or fine-grained entitlements (see Figure 11-1).

This entitlements taxonomy has interesting implications for the complexity of the business rules and the applicability of the already-discussed ACL and RBAC approaches.

Indeed, at a coarse level of granularity, there are many situations where ACL or RBAC solutions provide an adequate level of access control. But given their shortcomings in terms of scalability and manageability, a different approach had to be found for fine-grained entitlements. This approach is based on a relatively recent set of technologies known as policy authorization servers, or policy servers for short. As the name implies, policy servers evaluate all access control requests against the appropriate set of policies and business rules in the context of the identities, roles, and entitlements of the authenticated users. To state it differently, policy servers help ensure proper alignment of access control enforcement with the security policies of the enterprise. Policy servers avoid the scalability and manageability issues of ACL and RBAC by combining the roles-based and rules-based approaches to authorization. Just as important, policy servers abstract the authorization decisions away from the application. Specifically, network administrators or security managers define users, authentication methods (such as passwords, token-based authentication, or X.509 certificates), and access controls. The applications no longer handle access control directly. Instead, they interact with the policy server whenever a user issues a request to use a resource.

Typically, a policy server answers the question “Can user U perform action A on object O?” A number of commercial products offer robust policy server functionality. Some products use a group-based policy, where a user’s group might be determined by the user’s role (for example, cashiers) or by the user’s placement in an enterprise authentication directory (for example, an LDAP tree). Other products support more complex policies, where user privileges may be based on certain attributes (for example, a policy may define authorization levels from 1 to 5, where 1 is the highest level of access). Role- or attribute-based policies place users into groups based on user attributes. For example, greater access may be granted to the holder of an American Express Platinum card, or an airline upgrade entitlement may be determined by the frequent flyer’s status and level.
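The policy server question can be reduced to a single decision function that applications call instead of embedding their own checks. This Python sketch combines a group requirement with the 1-to-5 authorization level from the example; the policy table, group names, and object names are hypothetical, and decisions default to deny when no policy matches.

```python
from dataclasses import dataclass

@dataclass
class Subject:
    user_id: str
    groups: set[str]
    auth_level: int  # 1 = highest level of access, 5 = lowest

# Hypothetical policy table: (action, object) -> requirements.
POLICIES: dict[tuple[str, str], dict] = {
    ("view", "cash_drawer_report"): {"groups": {"cashiers"}, "max_level": 5},
    ("approve", "wire_transfer"): {"groups": {"treasury"}, "max_level": 2},
}

def can(subject: Subject, action: str, obj: str) -> bool:
    """Answer 'Can user U perform action A on object O?' (deny by default)."""
    policy = POLICIES.get((action, obj))
    if policy is None:
        return False
    in_group = bool(subject.groups & policy["groups"])
    # A numerically lower level means more privilege.
    return in_group and subject.auth_level <= policy["max_level"]
```

Because the application only calls `can`, an administrator can tighten the wire-transfer policy to level 1 by editing the table, without redeploying the application.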

FIGURE 11-1 Entitlements taxonomy

Still more advanced approaches use complex policies that extend the policy paradigm to drive access control decisions based on events. For example, if a user is not registered for a particular service, the policy can redirect the user to the enrollment/registration page. Similarly, entering an invalid password more than a predetermined number of times can redirect the user to a customer service page. All of these decisions can be further enhanced by using user attributes as factors in the policy evaluation.
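An event-based policy returns an outcome richer than permit or deny. The Python sketch below maps the two events from the text to redirects; the outcome names, redirect targets, and the threshold of three failed logins are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum

class Outcome(Enum):
    PERMIT = "permit"
    RETRY_LOGIN = "retry"
    REDIRECT_ENROLL = "redirect:/enroll"
    REDIRECT_SERVICE = "redirect:/customer-service"

MAX_FAILED_LOGINS = 3  # hypothetical predetermined number of attempts

@dataclass
class Session:
    user_id: str
    enrolled_services: set[str]
    failed_logins: int = 0

def on_event(session: Session, event: str, service: str = "") -> Outcome:
    """Map an access event to a policy outcome rather than a bare yes/no."""
    if event == "login_failed":
        session.failed_logins += 1
        if session.failed_logins > MAX_FAILED_LOGINS:
            return Outcome.REDIRECT_SERVICE
        return Outcome.RETRY_LOGIN
    if event == "service_request" and service not in session.enrolled_services:
        return Outcome.REDIRECT_ENROLL
    return Outcome.PERMIT
```

User attributes (for example, a premium segment) could be added as further conditions in `on_event`, which is the kind of enhancement the paragraph mentions.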

Transactional Entitlements

Event-based access controls can be used to support coarse-grained and fine-grained entitlements as well as transactional entitlements—an interesting approach to policy-based authorization designed to solve complex access control problems in real time.

Transactional entitlements allow authenticated users to check their permissions against specific parameters by evaluating business policy rules in real time on an individual basis. For example, a transactional entitlement can control a user’s ability to execute a requested transaction such as perform a funds transfer, update a log, or search a particular database. Transactional entitlements can control various business-defined actions, including updates to a personnel file, execution of a particular transaction only on a certain day and/or at a certain time, in a given currency, and within a specified spending/trading limit. In our college student example earlier, checking for a $500 withdrawal limit is a transactional component of the entitlement structure.

In transactional entitlements, logical expressions can be arbitrarily complex to address real-world requirements. And ideally, the expressions are strongly typed and reusable, written once to support similar transactions, not just one transaction at a time.
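A transactional entitlement can be written once as a typed, reusable condition and evaluated against each transaction at run time. This Python sketch covers the day, time window, currency, and limit constraints listed above; the field names and the specific parameter values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, time
from decimal import Decimal

@dataclass(frozen=True)
class Transaction:
    user_id: str
    kind: str          # e.g. "funds_transfer"
    amount: Decimal
    currency: str
    at: datetime

@dataclass(frozen=True)
class TransactionalEntitlement:
    """A reusable, typed condition evaluated per transaction at run time."""
    kind: str
    allowed_weekdays: frozenset[int]   # Monday=0 ... Sunday=6
    window_start: time
    window_end: time
    currency: str
    limit: Decimal

    def permits(self, tx: Transaction) -> bool:
        return (
            tx.kind == self.kind
            and tx.at.weekday() in self.allowed_weekdays
            and self.window_start <= tx.at.time() <= self.window_end
            and tx.currency == self.currency
            and tx.amount <= self.limit
        )

# One definition serves every weekday USD funds transfer.
weekday_usd = TransactionalEntitlement(
    "funds_transfer", frozenset(range(5)), time(8, 0), time(18, 0),
    "USD", Decimal("10000"))
```

Because the expression is parameterized rather than tied to one transaction, a second entitlement (say, for EUR with a lower limit) is just another instance.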

The key differences between transactional and non-transactional entitlements are twofold:

• Transactional entitlements are evaluated (and can be updated) in real time.

• Transactional entitlements can support the transactional semantics of atomicity, consistency, isolation, and durability (ACID properties) by performing their actions within transactional brackets.
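Performing the entitlement check inside the same transaction as the action matters when the condition depends on data the action changes. The Python sketch below, using the standard `sqlite3` module, assumes a hypothetical daily cumulative withdrawal limit (a variation on the $500 example) and wraps the check and the insert in one transaction, so two concurrent withdrawals cannot both pass a stale check.

```python
import sqlite3

def open_db() -> sqlite3.Connection:
    # isolation_level=None: transactions are controlled explicitly below.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE withdrawals (party TEXT, day TEXT, cents INTEGER)")
    return conn

def withdraw(conn: sqlite3.Connection, party: str, day: str,
             cents: int, daily_limit_cents: int) -> bool:
    """Evaluate the entitlement and record the withdrawal atomically."""
    conn.execute("BEGIN IMMEDIATE")  # take the write lock before reading
    try:
        (spent,) = conn.execute(
            "SELECT COALESCE(SUM(cents), 0) FROM withdrawals "
            "WHERE party = ? AND day = ?", (party, day)).fetchone()
        if spent + cents > daily_limit_cents:
            conn.execute("ROLLBACK")  # entitlement denied: nothing is written
            return False
        conn.execute("INSERT INTO withdrawals VALUES (?, ?, ?)", (party, day, cents))
        conn.execute("COMMIT")
        return True
    except Exception:
        conn.execute("ROLLBACK")
        raise
```

Amounts are stored as integer cents to avoid floating-point rounding in the database.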

Transactional entitlements are vital to providing just-in-time authorization decisions for real-time applications such as online banking, trading, purchasing, and similar activities. Transactional entitlements are especially useful when business applications rely on dynamic policy-driven access controls to a Master Data Management environment and its information, which represents the authoritative system of record.

Entitlements and Visibility

The definition of entitlements makes it clear that the entitlements, being a resource-dependent expression, have to be enforced locally, close to the target resources. This enforcement should be done in the context of the access request, user credentials, and resource attributes. Moreover, the resource access request should not be decided and enforced by a central, resource-agnostic authority because of performance, scalability, and security concerns. As mentioned in previous sections, technology solutions that provide entitlement enforcement are known as policy servers. These servers are designed to be resource-aware and thus can enforce entitlement rules in the context of the specific access requests.

Policy servers can support fine-grained access controls, but are especially well-suited to support functional-level authorization at the method and interface level, since the policy semantics are easily adaptable to include the access rules.

However, fine-grained access control for data access and fine-grained data entitlements present an interesting challenge. The area of fine-grained data access, shown in the lower-right corner of Figure 11-1, is known as the data visibility challenge. It is the primary data access control concern for Master Data Management platforms such as Data Hub systems. This challenge is a direct result of the complexity of the business rules that define who can access which portion of the data, what kind of access (for example, Create, Read, Update, or Delete) can be granted, and under what conditions. Solving the data visibility challenge requires a new policy evaluation and policy enforcement approach that complements the functional entitlement enforcement provided by the current generation of policy servers. The following sections look more closely at the data visibility challenge and at a potential architecture for a visibility solution. This chapter also discusses approaches that allow for effective integration of functional and data entitlements in the context of the overarching enterprise security architecture framework.

Customer Data Integration Visibility Scenario

Let us illustrate the data visibility challenges with an example of a Data Hub solution for a hypothetical retail brokerage. As we stated previously, one of the key goals of an MDM solution is to enable transformation of the enterprise from an account-centric to an entity-centric (in our example, customer-centric) business model.

To that end, a retail brokerage company has embarked on an initiative to build an MDM platform for the Customer Domain (the Customer Data Hub) that would eventually become the new system of record for all customer information. The data model designed for the new Data Hub has to satisfy the specific business need to support two types of entities: customers with their information profiles, and the brokers who provide advisory and trading services to the customers. In order for this scheme to work, the project architects decided to build a reference facility that associates a broker with his or her customers.

To improve customer experience and to enforce broker-customer relationships, the company has defined a set of business policies that state the following access restrictions:

• A customer may have several accounts with the company, and have relationships with several brokers to handle the accounts separately.

• A broker can see and change information about his or her customer but only for the accounts that the broker manages. The broker cannot see any customer account data owned by another broker.

• A broker’s assistant can see and change most but not all customer information for the customers that have relationships with this broker.

• A broker’s assistant can support more than one broker and can see the customer data of all of these brokers’ customers, but cannot share this information across brokers, nor can the assistant access the data of more than one broker at the same time.

• A customer service center representative can see some information about all customers but explicitly is not allowed to see the customers’ Social Security numbers (SSNs) or federal tax identification numbers (TINs).

• A specially appointed manager in the company’s headquarters can see and change all data for all customers.

It is easy to see that this list of restrictions can be quite extensive. It is also easy to see that implementing these restrictions in the Data Hub environment where all information about the customers is aggregated into customer-level data objects is not a trivial task. Specifically, the main requirements of data visibility in Data Hub environments are twofold:

• Create customer and employee/broker entitlements that would be closely aligned with the authorization policies and clearly express the access restrictions defined by the business (for example, the restrictions described in the preceding list).

• Implement a high-performing, scalable, and manageable enforcement mechanism that would operate transparently to the users, be auditable to trace back all actions taken on customer data, and ensure minimal performance and process impact on the applications and the process workflow used by the business.

The first requirement calls for the creation of an entitlements grammar and syntax that would allow security and system administrators to express the business rules of visibility in a terse, unambiguous, and complete manner. This new grammar has to contain a set of primitives that can clearly describe data attributes, conditions of use, access permissions, and user credentials. The totality of these descriptions creates a visibility context that changes as the rules, variables, and conditions change. This context allows designers to avoid the inflexible approach of hard-coded expressions, whose maintenance is time-consuming, error-prone, and hard to administer. The same considerations apply to the functional permissions for the data services (for example, a permission to invoke a “Find Customer by name” service), as well as to usage permissions for certain attributes based on the user role (for example, SSN and TIN restrictions for customer service representatives). Another consideration is that the role alone is not sufficient; for example, a broker’s assistant has different permissions on data depending on which broker that assistant supports in the context of a given transaction.
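To show how the brokerage visibility policies might be expressed as data rather than hard-coded logic, the following Python sketch keeps a declarative rule per role (row scope, hidden attributes, allowed actions) and a generic function that applies it. The role keys, the choice to hide the SSN from assistants (the text says only “most but not all” information), and read-only access for service representatives are assumptions made for illustration; the assistant’s `acting_for` field carries the per-transaction broker context.

```python
from dataclasses import dataclass, field
from typing import Optional

# Declarative visibility rules (hypothetical encoding of the business policies).
RULES: dict[str, dict] = {
    "broker":     {"rows": "own_accounts", "hidden": set(),          "actions": {"read", "update"}},
    "assistant":  {"rows": "acting_for",   "hidden": {"ssn"},        "actions": {"read", "update"}},
    "csr":        {"rows": "all",          "hidden": {"ssn", "tin"}, "actions": {"read"}},
    "hq_manager": {"rows": "all",          "hidden": set(),          "actions": {"read", "update"}},
}

@dataclass
class User:
    user_id: str
    role: str
    supports: set[str] = field(default_factory=set)  # brokers an assistant works for
    acting_for: Optional[str] = None                 # broker context of this request

@dataclass
class Customer:
    name: str
    ssn: str
    tin: str
    accounts: dict[str, str]  # account number -> owning broker id

def scope_broker(user: User) -> Optional[str]:
    """Return the broker whose accounts bound this request, or None for all."""
    scope = RULES[user.role]["rows"]
    if scope == "own_accounts":
        return user.user_id
    if scope == "acting_for":
        # One broker at a time: no mixing of data across brokers.
        if user.acting_for not in user.supports:
            raise PermissionError("assistant must act for one supported broker")
        return user.acting_for
    return None

def visible_view(user: User, cust: Customer, action: str = "read") -> Optional[dict]:
    """Apply row-level and attribute-level visibility; None means no access."""
    rule = RULES.get(user.role)
    if rule is None or action not in rule["actions"]:
        return None
    broker = scope_broker(user)
    accounts = {n: b for n, b in cust.accounts.items() if broker is None or b == broker}
    if broker is not None and not accounts:
        return None  # no relationship with this broker: customer is invisible
    view = {"name": cust.name, "ssn": cust.ssn, "tin": cust.tin,
            "accounts": sorted(accounts)}
    for attr in rule["hidden"]:
        view.pop(attr)
    return view
```

Changing a policy, such as also hiding the TIN from assistants, is an edit to the `RULES` table rather than to `visible_view`, which is the property the grammar requirement is after.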

The second requirement is particularly important in any online business, and especially in financial services, where system performance can be a critical factor in a company’s ability to conduct trading and other business activities in volatile markets. Thus, the visibility rules have to be enforced in the most effective and efficient manner, one that ensures data integrity while introducing as little latency as technically feasible.

Policies, Entitlements, and Standards

One way to define the policy and entitlements language and grammar is to leverage the work of standards bodies such as the Organization for the Advancement of Structured Information Standards (OASIS) and the World Wide Web Consortium (W3C). Among the most relevant standards for implementing policy-enforced visibility are the eXtensible Access Control Markup Language (XACML),4 the eXtensible Resource Identifier (XRI),5 and WS-Policy,6 a component of the broader WS-Security framework of standards for Web Services security.

XACML

XACML is designed to work in a federated environment consisting of disparate security systems and security policies. In that respect, XACML can be very effective in combination with the Security Assertion Markup Language (SAML)7 standard for implementing federated Role-Based Access Control. We briefly discussed the SAML standard in Chapter 9.

These standards can be used to encode policies and entitlements, and therefore to design policy evaluation systems that provide clear and unambiguous access control decisions. For example, an XACML policy can define a condition that allows users to log in to a system only at or after 8 A.M. A fragment of such a policy, which uses the standard XML Schema reference for the “time” data type, may look like this:

```xml
<Condition FunctionId="urn:oasis:names:tc:xacml:1.0:function:and">
  <Apply FunctionId="urn:oasis:names:tc:xacml:1.0:function:time-greater-than-or-equal">
    …..
        AttributeId="urn:oasis:names:tc:xacml:1.0:environment:current-time"/>
    </Apply>
    <AttributeValue
        DataType="http://www.w3.org/2001/XMLSchema#time">08:00:00</AttributeValue>
  </Apply>
</Condition>
```
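A PDP evaluates a condition like this by mapping each FunctionId URN to an implementation and applying it to attribute values from the request context. The sketch below assumes that the elided portion of the fragment supplies the environment’s current-time attribute as a single time value; the `FUNCTIONS` dispatch table and the `evaluate_after_8am` name are hypothetical and cover only the two functions the fragment uses.

```python
from datetime import time

XACML_FN = "urn:oasis:names:tc:xacml:1.0:function:"

# Map the function identifiers used in the fragment to Python implementations.
FUNCTIONS = {
    XACML_FN + "and": lambda *args: all(args),
    XACML_FN + "time-greater-than-or-equal": lambda a, b: a >= b,
}

def evaluate_after_8am(current_time: time) -> bool:
    """Evaluate and(time-greater-than-or-equal(current-time, 08:00:00))."""
    threshold = time.fromisoformat("08:00:00")  # the xs:time AttributeValue
    inner = FUNCTIONS[XACML_FN + "time-greater-than-or-equal"](current_time, threshold)
    return FUNCTIONS[XACML_FN + "and"](inner)
```

A real engine would build this call tree by parsing the policy XML rather than hard-coding it, but the dispatch-by-URN idea is the same.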

XACML offers a vehicle for clearly expressing access policies and permissions, whereas XRI allows users to create structured, self-describing resource identifiers. For example, XRI can describe the specific content of a library of research documents in which the documents and books are organized by author, title, and ISBN, and can even identify the location of the library, all in one comprehensive expression. To illustrate the power of XRI, consider a book search application that allows users to find a book on a given subject that is available from one or more bookstores and library branches. Using XRI, we can define the following resources:

xri://barnesandnoble.store.com/(urn:isbn:0-123-4567-8)/(+new)

xri://borders.store.com/(urn:isbn:0-123-4567-8)/(+used)

xri://NY.Public.Library.org/(urn:isbn:0-123-4567-8)/(+used)

In this example, XRI identifies the same book title (by its ISBN), which is available from three different sources: two bookstores, Barnes and Noble and Borders, and the New York Public Library. Furthermore, XRI supports fine-grained resource attributes, such as whether a copy is new or used.
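The structure of these identifiers can be illustrated with a deliberately simplified parser. This minimal sketch handles only the shape of the three example identifiers above (an authority, a cross-referenced ISBN URN, and a `+new` or `+used` tag); it is not an implementation of the full XRI syntax, and the `parse_book_xri` and `sources_for` names are hypothetical.

```python
import re

# Simplified pattern for the example XRIs only:
#   xri://<authority>/(urn:isbn:<isbn>)/(+<condition>)
_BOOK_XRI = re.compile(
    r"^xri://(?P<authority>[^/]+)/\((?P<isbn>urn:isbn:[0-9Xx-]+)\)/\(\+(?P<condition>\w+)\)$"
)

def parse_book_xri(xri: str) -> dict:
    """Split an example book XRI into its source, ISBN, and condition parts."""
    match = _BOOK_XRI.match(xri)
    if match is None:
        raise ValueError(f"not a book XRI in the simplified example form: {xri}")
    return match.groupdict()

def sources_for(isbn: str, xris: list) -> list:
    """Return the authorities (stores or libraries) that offer the given ISBN."""
    return [p["authority"] for p in map(parse_book_xri, xris) if p["isbn"] == isbn]
```

Because the ISBN is a self-describing segment, the same title can be matched across sources without any source-specific lookup logic.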

Additional Considerations for XACML and Policies

The OASIS XACML Technical Committee (TC) has defined the XACML language, policies, and rules in detail, including how policies are to be evaluated and how decisions are to be enforced. While these details are beyond the scope of this book, we briefly look at some key XACML constructs, components, and features that can help MDM architects, designers, and implementers protect access to the information stored in MDM Data Hub systems.

Defining a Policy as the encoding of Rules specific to a business domain, its data content, and the application systems designed to operate on that data, we can review several basic XACML components:

• XACML specifies that each Policy contains one or more Rules, and that each Policy and Rule has a Target.

• An XACML Target is a simple predicate that specifies which Subjects, Resources, Actions, and Environments the Policy or Rule applies to.

• XACML is designed to support policy expressions across a broad range of subject and functional domains through specializations known as Profiles.

Profiles and Privacy Protection

The notion of XACML profiles is very useful and provides a high degree of reuse and manageability. For example, the XACML-defined profiles include a Profile for Access Control and a Profile for Privacy Protection. The latter should be of particular interest to MDM designers, since it demonstrates the power and extensibility of XACML as a policy language even for privacy policies. Unlike most traditional access control policies, privacy policies often require verifying that the purpose for which information is accessed matches the purpose for which the information was collected.

In addition to defining privacy attributes, the XACML Privacy Profile describes how to use them to enforce privacy protection. This is a strong argument in favor of using XACML to define and enforce policies in MDM environments for the Customer domain, where customer privacy preferences have to be captured, maintained, and enforced according to the explicit and implicit requests of the customers.
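The purpose-matching idea behind the Privacy Profile can be sketched in a few lines. This minimal illustration assumes that each customer record carries the set of purposes for which its data was collected (or for which the customer consented) and that each request declares its purpose; the `collection_purposes` key and the `privacy_decision` function are hypothetical and are not the profile’s normative attribute identifiers.

```python
from enum import Enum

class Decision(Enum):
    PERMIT = "Permit"
    DENY = "Deny"
    NOT_APPLICABLE = "NotApplicable"

def privacy_decision(record: dict, request_purpose: str) -> Decision:
    """Permit access only if the declared purpose matches a purpose of collection."""
    collected_for = record.get("collection_purposes")
    if collected_for is None:
        # No privacy metadata on this record: this rule does not apply.
        return Decision.NOT_APPLICABLE
    return Decision.PERMIT if request_purpose in collected_for else Decision.DENY
```

A customer who opts out of marketing simply lacks that purpose in the record’s metadata; the check itself does not change.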

The XACML standard offers a flexible and powerful language that allows organizations to create, manage, and enforce security and privacy policies systematically. What follows is a brief discussion of how XACML can help MDM architects, designers, and implementers address complex issues of information security and data visibility in MDM environments.

Integrating MDM Solutions with Enterprise Information Security

The challenge of implementing data visibility and security in Master Data Management environments is twofold:

• First, it is a challenge of enforcing the access restriction rules in a new system of record.

• Second, it is a challenge of integrating the new visibility controls with the existing enterprise security infrastructure using a comprehensive, overarching security architecture.

Many commercial MDM solutions available today support these requirements to varying degrees by adding data visibility functionality to their core products. Leveraging existing Policy servers can be problematic, because not all Policy server products used in the enterprise can enforce visibility rules of the required complexity and granularity in high-performance, low-latency environments.

However, experience shows that the visibility problem can be solved by architecting the solution (for example, a Data Hub) with a component-based, service-oriented approach and by extending the principle of “separation of concerns” to include a concept central to information security: separation of duties, or SoD.

We discussed the basics of the “separation of concerns” principle in Part II of this book. The companion principle, separation of duties, applies directly to information security: it states that no single actor should be able to perform a set of actions that would result in a breach of security.
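One common way to enforce separation of duties is to declare pairs of conflicting duties and refuse any assignment that would give one person both. The following is a minimal sketch; the duty names in `CONFLICTING_DUTIES` are hypothetical, chosen to mirror the policy administration and audit responsibilities discussed in this chapter.

```python
# Hypothetical pairs of duties that no single person may hold together.
CONFLICTING_DUTIES = {
    frozenset({"policy_author", "policy_approver"}),
    frozenset({"policy_admin", "audit_reviewer"}),
}

def sod_violations(duties: set) -> list:
    """Return the conflicting duty pairs present in one user's set of duties."""
    return sorted(sorted(pair) for pair in CONFLICTING_DUTIES if pair <= duties)

def can_grant(current: set, new_duty: str) -> bool:
    """Allow a new duty only if it does not create a separation-of-duties conflict."""
    return not sod_violations(current | {new_duty})
```

Running `sod_violations` over existing assignments also supports periodic certification, since it reports conflicts that predate the check.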

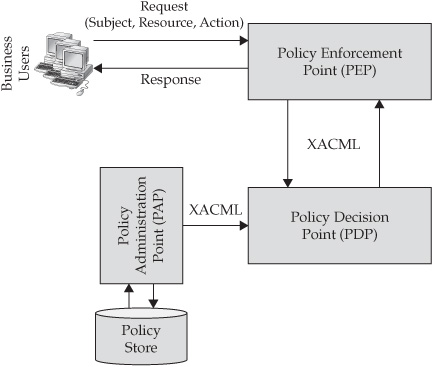

Looking at the conceptual data visibility architecture based on XACML and the separation of duties principle, we can identify three major components (for simplicity, we omit a detailed discussion of the remaining components, such as the Policy Information Point, or PIP). The decision maker in this context is called a Policy Decision Point (PDP), and decisions are enforced by one or more Policy Enforcement Points (PEPs). The third component is a policy administration facility known as a Policy Administration Point (PAP), sometimes referred to as a Policy Management Authority (PMA). As the name implies, the role of a PAP is primarily to perform out-of-band policy creation, administration, editing, and reporting, functionality that is clearly separate and distinct from run-time decision making and enforcement.

Separating the responsibilities (duties) of policy administration, access decision making, and access decision enforcement into distinct components and services has several benefits: a stronger security posture against compromise by a single user, better management of the complexity of fine-grained access control enforcement, and the ability to change policies, add resources, or introduce new entitlements without changing the consuming applications that issue data access requests.

Figure 11-2 shows the conceptual distributed security architecture and the roles of the PDP, PEP, and PAP components.

FIGURE 11-2 Conceptual OASIS distributed security architecture

The roles and responsibilities of the PDP, PEP, and PAP are defined in detail by the OASIS XACML specification, which is available in the OASIS document library at www.oasis-open.org.

Overview of Key Architecture Components for Policy Decision and Enforcement

The OASIS standard defines a Policy Enforcement Point (PEP) that is responsible for intercepting all attempts to access a resource (see Figure 11-2). A PEP is envisioned as a component of a request-processing server, such as a Web server, that can intercept all requests coming into the enterprise through a network gateway.

Since resource access is application-specific, the PEP is also application- or resource-specific. The PEP takes its orders from the Policy Decision Point (PDP), a component that processes resource access requests and makes authorization decisions.

The PDP is divided into two logical components. The Context Handler is implementation-specific: it knows how to retrieve the policy attributes used in a particular enterprise systems environment. The PDP Core is implementation-independent and represents a common, shared facility. It evaluates policies against the information in the access request, using the Context Handler to obtain additional attributes if needed, and returns a decision of Permit, Deny, Not Applicable, or Indeterminate. The last two values are defined as follows:

• Not Applicable means that none of the policies used by this PDP applies to the access request.

• Indeterminate means that an error occurred that prevents the PDP from determining the correct response.

Policies can contain Obligations, which represent actions that must be performed as part of handling an access request, such as “Permit access to this resource, but only when an audit record has been created and successfully written to the audit log.”
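The audit obligation in this example has a practical consequence for the PEP: the XACML specification expects a PEP to grant access on a Permit only if it understands, and can discharge, the accompanying obligations. The sketch below illustrates that behavior for a deny-biased PEP; the `write-audit-record` obligation identifier, the `AuditLog` class, and the `enforce` function are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Response:
    """Simplified PDP response: a decision plus obligation identifiers."""
    decision: str                                    # "Permit", "Deny", "NotApplicable", "Indeterminate"
    obligations: list = field(default_factory=list)

class AuditLog:
    """Stand-in for an audit store; writable=False simulates a write failure."""
    def __init__(self, writable: bool = True):
        self.writable = writable
        self.records = []

    def write(self, entry: str) -> bool:
        if not self.writable:
            return False
        self.records.append(entry)
        return True

SUPPORTED_OBLIGATIONS = {"write-audit-record"}  # hypothetical obligation identifier

def enforce(response: Response, audit: AuditLog, user: str, resource: str) -> bool:
    """Deny-biased PEP: grant access only for a Permit whose obligations are all discharged."""
    if response.decision != "Permit":
        return False  # Deny, NotApplicable, and Indeterminate all block access
    if any(ob not in SUPPORTED_OBLIGATIONS for ob in response.obligations):
        return False  # an obligation this PEP does not understand: act as if denied
    for ob in response.obligations:
        if ob == "write-audit-record" and not audit.write(f"{user} accessed {resource}"):
            return False  # the audit record could not be written: act as if denied
    return True
```

Checking that every obligation is understood before discharging any of them avoids writing an audit record for an access that is then refused.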

The PDP exchanges information with the PEP via XACML-formatted messages. The PDP evaluates a decision request in the following sequence:

• Evaluate the Policy’s Target first.

• If the Target is FALSE, the Policy evaluation result is Not Applicable, and neither that Policy nor any of its descendants is evaluated further.

• If the Target is TRUE, then the PDP continues to evaluate Policies and Rules that are applicable to the request.

• Once the Targets at all levels of Policies evaluate to TRUE, the PDP evaluates the Rule’s Condition (a Boolean combination of predicates and XACML-supplied functions).

• Each Rule has an Effect, which is either Permit or Deny. If the Condition is TRUE, the Rule returns its Effect; if the Condition is FALSE, the Rule returns Not Applicable.

Each Policy specifies a combining algorithm that determines how to combine the results of evaluating its Rules (and, for a Policy Set, its Policies). An OASIS-defined example of such an algorithm is Deny Overrides: if any detail-level Policy or Rule evaluates to Deny, then the entire higher-level Policy or Policy Set that contains it evaluates to Deny. Other combining algorithms are also possible and should be chosen based on the business requirements and business-level security policies.
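The evaluation sequence and the deny-overrides combining algorithm can be sketched as follows. This is a simplified model, not a conformant XACML engine: Targets and Conditions are plain Python predicates over a request dictionary, only rule-level combining is shown, and a missing attribute (a `KeyError`) stands in for the errors that produce Indeterminate. The combining logic is modeled on the deny-overrides rule-combining algorithm in the XACML 2.0 specification; all class, function, and attribute names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

PERMIT, DENY = "Permit", "Deny"
NOT_APPLICABLE, INDETERMINATE = "NotApplicable", "Indeterminate"
Predicate = Callable[[dict], bool]

@dataclass
class Rule:
    effect: str           # PERMIT or DENY
    target: Predicate     # which requests the Rule applies to
    condition: Predicate  # Boolean combination of predicates

@dataclass
class Policy:
    target: Predicate
    rules: List[Rule]

def evaluate_rule(rule: Rule, request: dict) -> str:
    try:
        if not rule.target(request) or not rule.condition(request):
            return NOT_APPLICABLE
        return rule.effect
    except KeyError:
        return INDETERMINATE  # a required attribute is missing from the request

def deny_overrides(rules: List[Rule], request: dict) -> str:
    permit = error = potential_deny = False
    for rule in rules:
        decision = evaluate_rule(rule, request)
        if decision == DENY:
            return DENY  # any Deny wins immediately
        if decision == PERMIT:
            permit = True
        elif decision == INDETERMINATE:
            error = True
            potential_deny = potential_deny or rule.effect == DENY
    if potential_deny:
        return INDETERMINATE  # a Deny rule might have applied
    if permit:
        return PERMIT
    return INDETERMINATE if error else NOT_APPLICABLE

def evaluate_policy(policy: Policy, request: dict) -> str:
    try:
        if not policy.target(request):
            return NOT_APPLICABLE  # the Policy's rules are not evaluated at all
    except KeyError:
        return INDETERMINATE
    return deny_overrides(policy.rules, request)

# Hypothetical example: CSRs and brokers may read customer records,
# but never outside their own region.
customer_policy = Policy(
    target=lambda r: r["resource_type"] == "customer",
    rules=[
        Rule(PERMIT, target=lambda r: r["action"] == "read",
             condition=lambda r: r["role"] in {"csr", "broker"}),
        Rule(DENY, target=lambda r: True,
             condition=lambda r: r["region"] != r["user_region"]),
    ],
)
```

With this policy, a CSR reading a customer in another region is denied even though the Permit rule also matches, which is exactly the precedence deny-overrides is meant to give.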

Note Several implementations of this XACML-based data visibility architecture are available today. These include vendor products such as Oracle Entitlements Server (OES) and custom implementations built with Java or Microsoft .NET frameworks, such as the Spring Framework.

Integrated Conceptual Security and Visibility Architecture

The foregoing brief discussion offers insight into how PDP and PEP components operate and communicate with each other. We also discussed how policies, rules, and obligations have to be expressed to provide a clear set of directives for access control decisions. Together, these components are the building blocks of the Data Hub Visibility and Security Services. Let’s apply the principles of separation of duties, separation of concerns, and service-oriented architecture to these services to define a comprehensive, end-to-end security and visibility architecture that enables policy-based, fine-grained access control to the information stored in a Data Hub system.

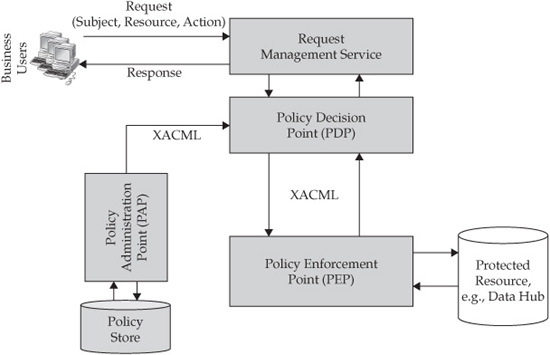

First, let’s adjust the architecture shown in Figure 11-2 to accommodate the following enterprise requirements:

• To meet performance and transaction latency requirements, policy enforcement has to be performed as “close” to the protected resource as possible. For example, when a Data Hub is built on a relational database platform, a Policy Enforcement Point may be implemented as a stored procedure or another appropriate SQL-based technique.

• Most enterprise environments contain a large number of heterogeneous resources, each of which may implement a different, resource-specific PEP. We’ll reflect this concern by introducing additional resource and PEP components as shown in Figure 11-3.

• Given a potentially large number of heterogeneous resources that require PEP-based protection, we can optimize the information and control flows so that a resource-independent PDP receives a data request, makes a Permit or Deny decision based on the user entitlements and the security policy, and sends the result to the appropriate PEP component as part of the XACML obligations.

• Finally, since a global enterprise data environment typically contains a large variety of data stores, it is reasonable to assume that a business application may request data not just from the Data Hub but from multiple, often heterogeneous data stores and applications. Such complex requests have to be managed and orchestrated to support distributed transactions, including compensating transactions in case of a partial or complete failure. Therefore, let’s introduce a conceptual Request Management Service component that receives data requests from consuming applications, communicates with the Policy Decision Point, and, in general, manages and orchestrates request execution in an appropriate, policy-defined order. Note that we define Request Management as a service in accordance with the principles of service-oriented architecture. Thus, as the enterprise adds resources and develops new workflows to handle data requests, the business applications remain isolated from these changes, since all authorization decisions are made by the PDP.
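The orchestration role of the Request Management Service can be sketched as a simple saga: obtain one decision from the PDP, dispatch each step through its resource-specific PEP, and run compensating actions in reverse order if a later step fails. This is a minimal sketch under stated assumptions; `RequestManager`, `Step`, and the PDP and PEP callables are hypothetical, and a production service would add persistence, timeouts, and retries.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Step:
    resource: str                   # e.g. "data_hub", "crm"
    action: Callable[[], str]       # work performed through that resource's PEP
    compensate: Callable[[], None]  # undoes the action if a later step fails

class RequestManager:
    def __init__(self, pdp: Callable[[str, List[str]], bool]):
        self.pdp = pdp  # resource-independent decision point

    def execute(self, user: str, steps: List[Step]) -> Dict[str, str]:
        # One authorization decision covering every resource the request touches.
        if not self.pdp(user, [s.resource for s in steps]):
            raise PermissionError(f"{user} is not authorized for this request")
        done: List[Step] = []
        results: Dict[str, str] = {}
        try:
            for step in steps:
                results[step.resource] = step.action()
                done.append(step)
        except Exception:
            # Undo the completed steps in reverse order, then surface the failure.
            for step in reversed(done):
                step.compensate()
            raise
        return results
```

Consuming applications only ever call `execute`; adding a resource means adding a `Step` type and its PEP, not changing the callers.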

FIGURE 11-3 Policy enforcement architecture adjusted to enterprise requirements

The resulting architecture view is shown in Figure 11-3.

Let’s define specific steps aimed at implementing this architecture of a distributed, enterprise-scale data visibility solution:

• Define business visibility requirements and rules.

• Design a Data Hub data model that can enable effective visibility-compliant navigation (for example, by introducing data tags that can be used for highly selective data retrieval).

• Decompose data visibility rules into decision logic and decision enforcement components.

• Develop an entitlements grammar (or extend the existing policy grammar) to express visibility rules.

• Map visibility rules to user credentials and roles.

• Design, or install and configure, a Policy Decision Point (PDP) engine: the component of the architecture that analyzes every data request to the Data Hub and evaluates it against the policies and entitlements of the requester (user). The PDP can be implemented with a specialized proprietary rules engine or with a commercial one.

• Design and develop a Policy Enforcement Point (PEP) as a component that is aware of data structure and data content and performs the requested data access operations in the most technically efficient manner. For example, in a Data Hub implemented on a Relational Database Management System (RDBMS), the PEP can be a library of stored procedures, a set of database triggers, built-in functions, or an extension to the Data Hub data model that includes a “security table.” In the security table approach, a purpose-built data object is populated (provisioned) from the content of the policies and entitlements; the PEP joins this security table with Data Hub tables to produce either the required result set or an empty set, depending on the values of the security attributes in the security table.

• Ensure that all policies are created, stored, and managed by a Policy Administration Point (PAP). This component stores policies and policy sets in a searchable policy store. The PAP is an administration facility that allows an authorized administrator to create or change user entitlements and business rules. Ideally, if the enterprise has already implemented a policy database used by a Policy server, the visibility policy store should be an extension of that existing policy store.
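The security-table approach described in the PEP step above can be illustrated with an in-memory SQLite database. This is a minimal sketch that assumes hypothetical `customer` and `security_entitlement` tables, with a region tag as the data attribute used for selective retrieval; in a real Data Hub, the PEP would more likely be a stored procedure or view in the production RDBMS, and the security table would be provisioned from the PAP’s policy store.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customer (
            customer_id INTEGER PRIMARY KEY,
            name        TEXT,
            region      TEXT  -- data tag used for selective retrieval
        );
        -- Provisioned from policies and entitlements: who may see which region.
        CREATE TABLE security_entitlement (
            user_id TEXT,
            region  TEXT
        );
        INSERT INTO customer VALUES (1, 'Acme Corp', 'EU'), (2, 'Globex', 'US'), (3, 'Initech', 'EU');
        INSERT INTO security_entitlement VALUES ('u_eu', 'EU'), ('u_all', 'EU'), ('u_all', 'US');
    """)
    return conn

def find_customers(conn: sqlite3.Connection, user_id: str) -> list:
    """PEP-style query: the join returns only the rows the user is entitled to see."""
    rows = conn.execute(
        """
        SELECT c.customer_id, c.name
        FROM customer AS c
        JOIN security_entitlement AS s ON s.region = c.region
        WHERE s.user_id = ?
        ORDER BY c.customer_id
        """,
        (user_id,),
    )
    return rows.fetchall()
```

A user with no provisioned rows in the security table gets an empty result set rather than an error, which matches the behavior described for this approach.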

However, data visibility is only one part of the overall data security and access control puzzle. Therefore, while these steps can help develop a policy-based Visibility Architecture, we need to extend both the process and the architecture to integrate their features and functions with the overall enterprise security architecture. It is reasonable to assume that most enterprises have no choice but to implement a multilayered “defense-in-depth” security architecture that includes perimeter defense, network security, enterprise-class authentication, role-based or policy-based authorization, some form of single sign-on (SSO) facility, and automated provisioning, deprovisioning, and certification of user entitlements (see Chapter 9 for additional discussion of these topics).

Let’s add the following steps to the architecture definition and design process outlined in the preceding list. These steps are designed to ensure that the resulting architecture can interoperate and be integrated with the existing enterprise security architecture:

• Develop or select a solution that can delegate authentication and coarse-grained authorization decisions to the existing security infrastructure including Policy Servers.

• Ensure that user credentials are captured at the point of user entry into the system and carried through the PDP and PEP components to the Data Hub, thus supporting the creation and management of an auditable log of individual user actions.

• Make sure that the PDP, PEP, and the Enterprise Audit and Compliance system can interface with each other and interoperate in supporting business transactions so that all data access actions can be captured, recorded, and reported for follow-on audit analysis.

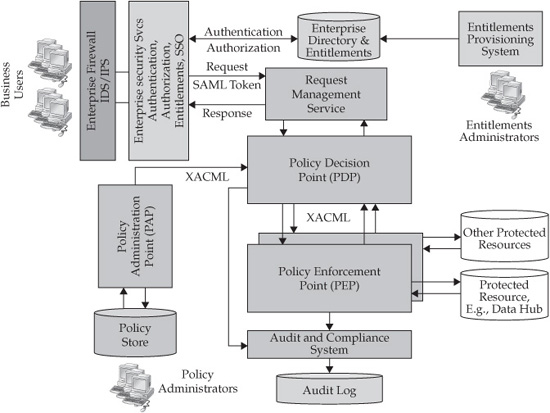

Applying these additional steps to the architecture shown in Figure 11-3 may result in the high-level conceptual integrated enterprise security and visibility architecture shown in Figure 11-4.

FIGURE 11-4 Integrated enterprise visibility and security conceptual architecture

This architecture shows how major components of the visibility and security services can be integrated and configured to provide seamless and transparent access control to the data stored in the MDM platform (that is, a Data Hub). As we stated earlier, the core components in this architecture include:

• Policy Administration Point (PAP)

• Policy Store that contains visibility and entitlements policies and rules

• Policy Decision Point (PDP)

• Policy Enforcement Point (PEP)

These components are integrated with the enterprise security architecture, which includes, but is not limited to, the following:

• Perimeter defense and firewalls

• Enterprise directory

• Entitlements and access control policy store that supports a policy authorization server

• Enterprise authentication and authorization service (possibly including an enterprise-class single sign-on solution)

• Enterprise provisioning and certification system

• Regulatory and compliance auditing and reporting system

Visibility and Security Architecture Requirements Summary

The foregoing discussion of the visibility and security components and services illustrates the complexity of the integrated visibility design. This complexity is one of the drivers of the “buy vs. build” decision for the PDP, PEP, PAP, and other components and services. This decision must be made very carefully to protect the entire MDM initiative from the risk of embarking on a complex, unproven, error-prone, and extensive implementation. To help make this decision, we offer the following list of functional requirements that MDM and security architects should consider when evaluating the available products and solutions. At a high level, the PDP and PEP components of the architecture should support:

• XACML properties

• Domain independence

• Protection of XML documents

• Distributed policies

• Optimized indexing of policies

• Rich set of standard functions and data types

• Profiles

• Policy administration and delegation

These functional requirements are listed here as a template and a reference. Specific implementation requirements may differ and should be evaluated in a formal, objective, and verifiable fashion like any other enterprise software purchase decision.

Visibility and Security Information Flows

The integrated security and visibility architecture shown in Figure 11-4 is designed to support user requests to access data stored in the MDM platform by implementing a number of runtime interaction flows. These flows have been adapted from the OASIS-defined PDP and PEP interaction patterns briefly mentioned in the previous section. We discuss these flows in this section to illustrate the key processing points:

• The user gains access to the MDM environment by supplying his or her credentials to the network security layer.

• After passing through the firewall, the user interacts with the enterprise authentication and authorization service, where the user credentials are checked and the user is authenticated and authorized to use the MDM platform (this is a functional authorization to use MDM services that is enforced by a Policy Server).

• The authenticated user issues a request for data access, typically by invoking a high-level business service (Find Party, Edit Product Reference, and so on).

• The authorization system reviews the request and makes an authorization decision for the user to permit or deny invocation of a requested service and/or method (in this case, a service method that implements Find Party or Edit Product Reference functionality).

• Once an affirmative decision is made, the user credentials and the request are passed to the Policy Decision Point, where the request is evaluated against the user’s entitlements in the context of the data being requested. If the PDP decides that the user is allowed to access or change the requested set of data attributes, it assembles an XACML-formatted payload using one of the standard message formats (possibly a SAML message). This message includes the user credentials, the authorization decision, and obligations containing enforcement parameters and the name of the enforcement service or interface (for example, the name of the stored procedure and the value for the predicate in the SQL WHERE clause). The authorization decision and corresponding parameters constitute policy obligations that can be expressed in XACML and XRI.

• If the decision evaluates to Permit, the PDP sends the message with the XACML payload to the appropriate Policy Enforcement Point (PEP).

• The PEP parses the payload, retrieves the values of the obligation constraints that form the data access predicates (for example, a list of values in the SQL WHERE clause), and invokes the appropriate PEP service or function.

• Once the PEP-enforced data access operation completes successfully, the query result set is returned to the requesting application or a user.

• The PEP retrieves the user credentials and uses them to create a time-stamped entry in the audit log for future analysis and recordkeeping.

• If necessary, the PEP will encrypt and digitally sign the audit log record to provide nonrepudiation of the actions taken by the user on the data stored in the MDM/Data Hub.
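The PEP side of this flow, turning obligation constraints into a data access predicate and writing a tamper-evident audit entry, can be sketched as follows. This is a minimal illustration under several assumptions: the obligation arrives as an already-parsed dictionary rather than an XACML or SAML message, the `allowed_regions` parameter and `customer` table are hypothetical, and a SHA-256 hash chain stands in for the digital signature mentioned above. A hash chain makes tampering detectable but does not by itself provide nonrepudiation, which requires signing with a private key.

```python
import hashlib
import json
from datetime import datetime, timezone

def build_query(obligation: dict):
    """Turn an obligation's value list into a parameterized SQL WHERE ... IN clause."""
    values = obligation["allowed_regions"]  # hypothetical obligation parameter
    if not values:
        # No permitted values: a query that matches nothing.
        return "SELECT customer_id, name FROM customer WHERE 1 = 0", []
    placeholders = ", ".join("?" for _ in values)  # never interpolate values into SQL text
    sql = f"SELECT customer_id, name FROM customer WHERE region IN ({placeholders})"
    return sql, list(values)

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class AuditTrail:
    """Append-only log in which each time-stamped entry hashes its predecessor."""
    def __init__(self):
        self.entries = []

    def record(self, user: str, action: str, params: list) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user, "action": action, "params": list(params), "prev": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

Binding the obligation values as query parameters keeps the PDP’s output from ever becoming executable SQL, even if a policy value is malformed.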

For these steps to execute effectively at run time, several configuration activities need to take place. These configuration flows may include the following:

• Use the Enterprise Provisioning System to create and deliver user credentials and entitlements from the authoritative systems of user records (for example, an HR database for employees) to all consuming target applications including the Enterprise Directory.

• If necessary, extend, modify, or create new entries in the Visibility and Rules Repository.

• Use an Enterprise Access Certification system to configure and execute audit reports and to obtain regular snapshots of the active users, their entitlements, and a summary of their actions, so that system administrators can detect and correct conflicting or improper entitlements and tune the overall system to avoid performance bottlenecks. At the same time, security administrators can feed the activity logs into the enterprise intrusion detection and prevention systems.
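A core check behind these provisioning and certification activities is reconciling the entitlements actually granted in target systems against the authoritative source of user records. The following is a minimal sketch, assuming hypothetical in-memory snapshots of an HR feed, a directory export, and the entitlements each user’s approved roles allow; commercial provisioning and certification products perform this at scale with approval workflows.

```python
def reconcile(hr_active: set, directory: dict, role_catalog: dict) -> dict:
    """
    hr_active:    user IDs that are active in the authoritative HR system
    directory:    user ID -> set of entitlements granted in the enterprise directory
    role_catalog: user ID -> set of entitlements the user's approved roles allow
    """
    # Accounts that exist in the directory but have no active HR record.
    orphans = sorted(u for u in directory if u not in hr_active)
    # Active users holding entitlements beyond what their roles allow.
    excess = {
        u: sorted(granted - role_catalog.get(u, set()))
        for u, granted in directory.items()
        if u in hr_active and granted - role_catalog.get(u, set())
    }
    # Active users who were never provisioned.
    missing = sorted(u for u in hr_active if u not in directory)
    return {"orphaned_accounts": orphans, "excess_entitlements": excess, "not_provisioned": missing}
```

Each category maps to a different corrective action: deprovisioning orphans, revoking excess entitlements, or completing provisioning for new users.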

While these steps may appear simple, architecting and integrating data visibility and user entitlements into the overall enterprise security framework is a complex, error-prone, and time-consuming task that requires a disciplined, phased approach and should be managed like any other major enterprise integration initiative.

We conclude this part of the book with the following observation: Master Data Management environments are designed to provide their consumers with an accurate, integrated, authoritative source of information. Often, Master Data Management environments deliver radically new ways to look at the information. For example, in the case of a Data Hub for Customer domain, the MDM platform can deliver an authoritative and accurate customer-centric operational data view. However, an MDM Data Hub can deliver much more. Indeed, it may also make integrated data available to users and applications in ways that extend and sometimes “break” traditional processes and rules of the business that define who can see which part of what data. Therefore, the majority of MDM implementations need to extend the existing policy grammar and policy servers or develop and deliver new capabilities that support fine-grained functional and data access entitlements while at the same time maintaining data availability, presentation flexibility, and alignment with the legacy and new improved business processes. To sum up, the entitlements and data visibility concerns of Master Data Management represent an important challenge. To solve this challenge, the demands of data security and visibility have to be addressed in an integrated fashion as a key part of the MDM architecture and in conjunction with the enterprise security architecture.

References

1. Coyne, Edward J. and Davis, John M. Role Engineering for Enterprise Security Management (Information Security and Privacy). Artech House (2008).

2. http://www.oasis-open.org/committees/download.php/16790/wss-v1.1-spec-os-SOAPMessageSecurity.pdf.

3. http://www.w3.org/TR/2006/WD-ws-policy-primer-20061018/.

4. http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=xacml.

5. http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=xri.

6. http://www.w3.org/TR/2006/WD-ws-policy-primer-20061018/.

7. http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=security.

8. Johnson, Rod, Höller, Jürgen, Arendsen, Alef, Risberg, Thomas, and Sampaleanu, Colin. Professional Java Development with the Spring Framework (First Edition). Wrox Press (2005).