CHAPTER 9

Introduction to Information Security and Identity Management

In medieval times, commerce was conducted in city-states that were well protected by city walls, weapons, and an army of guards and soldiers. In modern times, as commerce rapidly moved to a global marketplace, the goal of keeping potential participants out was replaced by the desire to invite and keep potential customers in.

In today’s business environment, we see a similar transformation—instead of keeping everything hidden behind proprietary, secure networks protected by firewalls, commerce is done on the public Internet, and every business plans to take advantage of the potentially huge population of prospective customers. Denying access to corporate information is no longer a viable option—inviting new customers and enticing them to do business is the new imperative.

Traditional and Emerging Concerns of Information Security

The new imperative of Internet-driven secure inclusion brings with it a new set of security challenges—challenges that are reinforced by numerous pieces of legislation that promote various forms of e-commerce and even e-government and require new approaches to security that can protect both the customer and corporate information assets. We discussed a number of these regulations in Chapter 8.

What Do We Need to Secure?

The Internet has become a de facto standard environment where corporations and individuals conduct business, “meet” people, perform financial transactions, and seek answers to questions about anything and everything. In fact, all users and all organizations that have some form of Internet access appear to be close (and equidistant) to each other.

The Internet has moved the boundaries of an enterprise so far away from the corporate data center that this shift has created its own set of problems. Indeed, together with the enterprise boundaries, the traditional security mechanisms have also been moved outward, creating a new “playing field” for customers, partners, and unwanted intruders and hackers alike. As a result, enterprise security requirements have become much more complex.

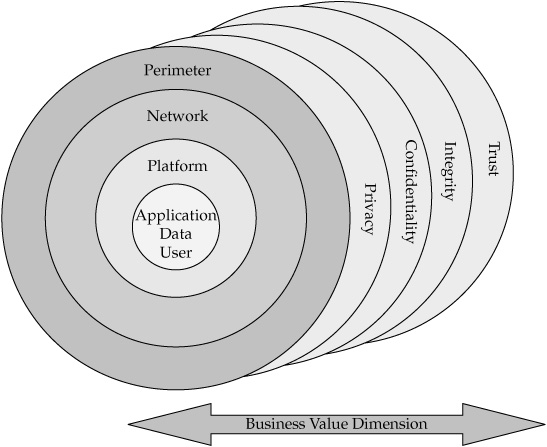

One way to discuss these requirements is to look at what areas of the business environment need to be secured, and against what kinds of danger. Figure 9-1 illustrates the areas of security concern and the corresponding security disciplines, which are defined in the following sections.

The model of a layered security framework describes security “zones” that need to be protected regardless of whether the threat is originating from outside or from within the organization.

FIGURE 9-1 Layered security model

Technologies that enable the implementation of a layered security framework may offer overlapping functionality and can span several security domains. For example, the security disciplines of authentication, authorization, and administration (3A) play equally important roles in securing the network resources, the enterprise perimeter, the computing platform, and the applications, data, and users.

Perimeter Security

Perimeter security deals with the security threats that arrive at the enterprise boundary via a network. By definition, perimeter security has to handle user authentication, authorization, and access control to the resources that reside inside the perimeter. The primary technology employed to achieve perimeter security is the firewall.

A firewall is placed at the network node where a secure network (for example, an internal enterprise network) and an insecure network (for example, the Internet) meet. As a general rule, all network traffic, inbound and outbound, flows through the firewall, which screens this traffic and blocks whatever does not meet the restrictions of the organization’s security policy.

In its simplest form, the role of the firewall is to restrict incoming traffic from the Internet into an organization’s internal network according to certain parameters. Once configured, a firewall filters network traffic, examines packet headers, and determines which packets should be forwarded or allowed to enter and which should be rejected.
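The header-screening step can be pictured as an ordered rule table evaluated from top to bottom: the first matching rule decides, and anything left unmatched is denied. The Python sketch below is a minimal illustration under that assumption. The `Packet` and `Rule` types, their fields, and the sample policy are hypothetical and do not correspond to any particular firewall product. Real firewalls also typically track connection state instead of judging each packet in isolation.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src: str          # source IP address taken from the packet header
    dst_port: int     # destination port
    protocol: str     # "tcp" or "udp"


@dataclass(frozen=True)
class Rule:
    action: str                     # "allow" or "deny"
    src_net: str                    # CIDR block the source address must fall in
    protocol: str
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, pkt: Packet) -> bool:
        return (
            ip_address(pkt.src) in ip_network(self.src_net)
            and pkt.protocol == self.protocol
            and (self.dst_port is None or pkt.dst_port == self.dst_port)
        )


def filter_packet(rules: list[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; deny by default."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "deny"


# Hypothetical policy: order matters, since the first match wins.
POLICY = [
    Rule("deny", "203.0.113.0/24", "tcp"),   # block a known-hostile network
    Rule("allow", "0.0.0.0/0", "tcp", 443),  # public HTTPS from anywhere
    Rule("allow", "10.0.0.0/8", "tcp", 22),  # SSH from internal hosts only
]
```

Under this policy, HTTPS is allowed from any outside address and SSH only from the internal 10.0.0.0/8 range. Traffic from the listed hostile network is dropped before the broader allow rules are consulted, which is why rule order matters.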

Network Security

Network security deals with authenticating network users, authorizing access to network resources, and protecting the information that flows over the network.

Network security involves authentication, authorization, and encryption, and it often uses technologies like Public Key Infrastructure (PKI) and Virtual Private Networks (VPNs). These technologies are frequently used together to achieve the desired degree of security protection. Indeed, no security tool, be it authentication, encryption, VPN, firewall, or antivirus software, should be used alone for network security protection. A combination of several products is needed to truly protect the enterprise’s sensitive data and other information assets.

Network and Perimeter Security Concerns

A common approach to network security is to surround an enterprise network with a defensive perimeter that controls access to the network. However, once an intruder has passed through the perimeter defenses, he, she, or it may be unconstrained and may cause intentional or accidental damage. A perimeter defense is valuable as a part of an overall defense. However, it is ineffective if a hostile party gains access to a system inside the perimeter or compromises a single authorized user.

An alternative to the defensive perimeter approach is a network security model of mutual suspicion, in which every system within a critical network regards every other system as a potential source of threat.
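Under mutual suspicion, a service does not accept a request just because it arrived from inside the network. It verifies every message. The sketch below shows one minimal way to do this, assuming each pair of services shares a secret key: a message authentication code computed with Python's standard `hmac` module. The service names and the key table are hypothetical. A production design would more likely use mutual TLS or signed tokens and would also defend against replayed messages.

```python
import hashlib
import hmac


def sign_request(key: bytes, sender: str, body: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the sender name and the message body."""
    msg = sender.encode() + b"\n" + body
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def accept_request(keys: dict[str, bytes], sender: str, body: bytes, tag: str) -> bool:
    """Verify every request, even from 'internal' peers; unknown senders are rejected."""
    key = keys.get(sender)
    if key is None:
        return False
    expected = sign_request(key, sender, body)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, tag)
```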

Platform (Host) Security

Platform or host security deals with security threats that affect the actual device and make it vulnerable to external or internal attacks. The platform security concerns include the already-familiar authentication, authorization, and access control disciplines, and the security of the operating system, file system, application server, and other computing platform resources that can be broken into, or taken over, by an intruder.

Platform security solutions include security measures that protect physical access to a given device. For example, platform security includes placing a server in a protected cage; using sophisticated authentication and authorization tokens that may include biometrics; using “traditional” physical guards to restrict access to the site to authorized personnel only; developing and installing “hardened” versions of the operating system; and using secure application development frameworks like the Java Authentication and Authorization Service, or JAAS. (JAAS defines a pluggable, stacked authentication scheme: different authentication schemes can be plugged in without modifying or recompiling existing applications.)
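In JAAS, stacking is declared in a login configuration where each LoginModule carries a control flag: Required, Requisite, Sufficient, or Optional. The Python sketch below mimics those flag semantics in simplified form to show how a stack of independent modules produces a single decision. It is not the JAAS API. The three module functions are hypothetical stand-ins: a real module would check a salted password hash or a one-time-password service rather than literal values.

```python
from typing import Callable

Module = Callable[[dict], bool]


def stacked_login(stack: list[tuple[str, Module]], creds: dict) -> bool:
    """Evaluate login modules in order using simplified JAAS-style control flags."""
    required_failed = False
    any_success = False
    has_required = any(flag in ("REQUIRED", "REQUISITE") for flag, _ in stack)
    for flag, module in stack:
        ok = module(creds)
        any_success = any_success or ok
        if flag == "REQUISITE" and not ok:
            return False              # fail immediately; skip remaining modules
        if flag == "REQUIRED" and not ok:
            required_failed = True    # remember the failure but keep going
        if flag == "SUFFICIENT" and ok and not required_failed:
            return True               # success; no further modules consulted
        # OPTIONAL: its outcome only matters if nothing is REQUIRED/REQUISITE
    if has_required:
        return not required_failed
    return any_success


# Hypothetical modules; real ones would verify hashed secrets, devices, etc.
def password_module(creds: dict) -> bool:
    return creds.get("password") == "correct horse"


def biometric_module(creds: dict) -> bool:
    return creds.get("fingerprint") == "match"


def otp_module(creds: dict) -> bool:
    return creds.get("otp") == "492031"


# The password is mandatory, a fingerprint match short-circuits,
# and otherwise a one-time password is also required.
STACK = [
    ("REQUIRED", password_module),
    ("SUFFICIENT", biometric_module),
    ("REQUIRED", otp_module),
]
```

Callers invoke only `stacked_login`. Replacing `biometric_module` with, say, a smart-card check therefore changes the policy without modifying the calling code, which is the kind of pluggability the JAAS design aims for.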

Application, Data, and User Security

Application, data, and user security concerns are at the heart of the overall security framework. Indeed, the main goal of any malicious intent is to get hold of the protected resource and use it, whether it is information about a company’s financial state or an individual’s private activities, the functionality of electronic funds transfer, or, as the case may be, the identity of a person the intruder wants to impersonate for personal, political, or commercial gain.

The security disciplines involved here are already familiar: the 3As (authentication, authorization, administration), encryption, digital signatures, confidentiality, data integrity, privacy, accountability, and virus protection.

End-to-End Security Framework

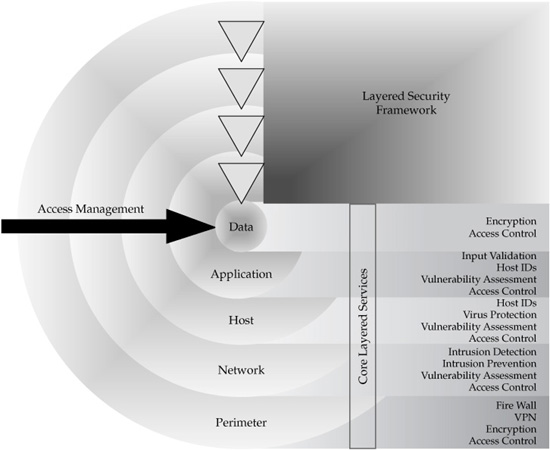

To sum up the discussions in the previous sections, when we talk about security, we may want to look at the entire security space from “outside in,” using the diagram in Figure 9-1. An important point that needs to be emphasized here is that neither of the disciplines taken separately—network, perimeter, platform, application, data and user security—could offer a complete security assurance.

The events of recent history and the heightened awareness of the real dangers that can be exploited by various terrorist organizations and unscrupulous opportunists have taught us that in order to be and feel secure, we need to achieve “end-to-end security”—an environment that does not intentionally or by omission expose security holes and can provide the business benefits of security—privacy, confidentiality, integrity, and trust (see Figure 9-2).

Only a strong understanding of potential security vulnerabilities and an effective combination of various security technologies and disciplines can ensure that this goal can be achieved.

Traditional Security Requirements

Today’s business environment has different security requirements than traditional “brick and mortar” commerce. Enterprise networks are no longer defined by the physical boundaries of a single company location but often encompass remote sites and include mobile and remote users all over the world. Also, organizations often use many contractors who are not employees and thus do not undergo employee-level screening and vetting, but may have similar or even greater access to enterprise systems and applications than many employees.

Traditional security requirements include:

• Authentication The ability to verify that an individual or a party is who they claim they are; authentication is a verification component of the process known as identification.

FIGURE 9-2 Security and business value dimensions

• Authorization A business process of determining what data and computing resources the authenticated party is allowed to access; authorization processes and technologies enforce the permissions expressed in the user authorization schema known as entitlements. An authorization mechanism automatically enforces entitlements that are based on a security policy dealing with the use of the resource; in general, the policy could be role-based, rule-based, or a combination of the two. Clearly, authorization is driven by and depends on reliable authentication (see the discussion of various authorization concerns later in this and the following chapters).

• Confidentiality A business requirement that defines the rules and processes that can protect certain information from unauthorized use. A more formal definition of confidentiality has been offered by the International Organization for Standardization (ISO) in its ISO/IEC 17799 standard1 as “ensuring that information is accessible only to those authorized to have access.”

Note Confidentiality is necessary but not sufficient for maintaining the privacy of the personal information stored in the computing system. This is based on the definition of privacy as the proper handling and use of personal information (PI) throughout its life-cycle, consistent with the preferences of the individual and with data protection principles of accountability, collection limitations, disclosure, participation, relevance, security, use limitations, and verification.

• Integrity A business requirement that data in a file or a database, or a message traversing the network remain unchanged unless the change is properly authorized; data integrity means that any received data matches exactly what was sent; data integrity deals with the prevention of accidental or malicious changes to data.

• Verification and Nonrepudiation This requirement deals with the business and legal concepts that allow a systematic verification of the fact that an action in question was undertaken by a party in question, and that the party in question cannot legally dispute or deny the fact of the action (nonrepudiation); this requirement is especially important today when many B2C and B2B transactions are conducted over the network.

• Traditional paper-based forms are now available over the network and are allowed to be signed electronically.

• Legislation such as eSign has made such signatures acceptable in a court of law (see the section on eSign law later in the chapter).

• Auditing and Accountability The requirement that defines the process of data collection and analysis that allows administrators and other specially designated users, such as IT auditors, to verify that authentication and authorization rules are producing the intended results as defined in the company’s business and security policies. Individual accountability for attempts to violate the intended policy depends on monitoring relevant security events, which should be stored securely and time-stamped using a trusted time source in a reliable log of events (also known as an audit trail or a chain of evidence archive); this audit log can be analyzed to detect attempted or successful security violations. The monitoring process can be implemented as a continuous automatic function, as a periodic check, or as an occasional verification that proper procedures are being followed. The audit trail may be used by security administrators, internal audit personnel, external auditors, government regulatory officials, and in legal proceedings.

• Availability This requirement provides an assurance that a computer system and the information it manages are accessible by authorized users whenever needed.

• Security management This requirement includes user administration and key management:

• In the context of security management, user administration is often referred to as user provisioning. It is the process of defining, creating, maintaining, and deleting user authorizations, resources, and the authorized privilege relationships between users and resources. Administration translates business policy decisions into an internal format that can be used to enforce policy definitions at the point of entry, at a client device, in network devices such as routers, and on servers and hosts. Security administration is an ongoing effort because business organizations, application systems, and users are constantly changing.

• Key management deals with a very complex process of establishing, generating, saving, recovering, and distributing private and public keys for security solutions based on PKI (see more on this topic later in the chapter).
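To illustrate the authorization requirement above, the following sketch assumes a purely role-based policy. Entitlements are attached to roles, users are assigned roles, and a request succeeds only when an authenticated user holds a role that grants the requested (resource, action) pair. The roles, users, and resources are hypothetical. A rules-based policy would layer extra conditions, such as time of day or transaction limits, on top of this lookup.

```python
# Hypothetical entitlements: role -> set of (resource, action) pairs it grants.
ROLE_ENTITLEMENTS: dict[str, set[tuple[str, str]]] = {
    "teller": {("accounts", "read"), ("deposits", "create")},
    "auditor": {("accounts", "read"), ("audit_log", "read")},
    "manager": {("accounts", "read"), ("accounts", "update"), ("deposits", "create")},
}

# Hypothetical role assignments produced by user administration.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"teller"},
    "bob": {"auditor", "teller"},
}


def is_authorized(user: str, resource: str, action: str, authenticated: bool) -> bool:
    """Allow only if an authenticated user holds some role granting the entitlement."""
    if not authenticated:  # authorization depends on prior, reliable authentication
        return False
    roles = USER_ROLES.get(user, set())
    return any((resource, action) in ROLE_ENTITLEMENTS.get(r, set()) for r in roles)
```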

These traditional security concerns apply to any software system or application that has to protect access to and use of information resources regardless of whether the system is Internet based, internal intranet based, or is a more traditional client-server design. However, as businesses and government organizations continue to expand their Internet channels, new security requirements have emerged that introduce additional complexity into an already complex set of security concerns.
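The auditing and accountability requirement calls for a reliable, time-stamped log of security events. One common technique for making such a log tamper-evident is to chain the entries by hash, so that each record commits to the one before it. This technique is an assumption used here for illustration, not something the text prescribes. The sketch uses the local UTC clock where a production system would use a trusted time source, and it only detects tampering; it does not prevent it.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_event(log: list[dict], actor: str, action: str, outcome: str) -> dict:
    """Append an event whose hash covers its own content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),  # stand-in for a trusted clock
        "actor": actor,
        "action": action,
        "outcome": outcome,
        "prev": prev_hash,
    }
    payload = json.dumps(entry, sort_keys=True).encode()
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash. Editing or reordering entries, or deleting one from the
    middle, breaks the chain; detecting truncation of the tail would additionally
    require storing the latest hash somewhere outside the log."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev_hash:
            return False
        payload = json.dumps(body, sort_keys=True).encode()
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```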

Emerging Security Requirements

Let’s briefly discuss several security concerns and requirements that have emerged in recent years. These requirements include identity management, user provisioning and access certification, intrusion detection and prevention, antivirus and antispyware capabilities, and concerns about privacy, confidentiality, and trust.

Identity Management

This security discipline is concerned with some key aspects of doing business on the Internet. These aspects include:

• The need to develop and use a common and persistent identity that can help avoid endless checkpoints that users need to go through as they conduct business on different websites

• The need to prevent the theft and unauthorized use of user identities

The first requirement is not just a user convenience. Without common identity management, the same user can exist as multiple instances that are treated differently by different departments of the same organization. For example, a services company may have a Mike Johnson in its sales database, an M. W. Johnson in its services database, and Dr. Michael Johnson and family in its marketing database. Such an organization would have a difficult time reconciling this individual’s sales and services activity, and it may end up bombarding him and his household with marketing offers for products he already has. The result is a negative customer experience and a poor customer relationship!

The second requirement is also very important. As we stated in Chapter 8, identity theft continues to be on the rise, and the size of the problem is becoming quite significant. For example, according to a 2009 Gartner report, about 7.5 percent of U.S. adults lost money to some sort of financial fraud in 2008, and data losses cost companies an average of $6.6 million per breach. The direct effect of identity theft is that, for the same time period, customer attrition across industry sectors due to a data breach almost doubled from the regular rate of 3.6 percent to 6.5 percent, and in the financial services sector in particular the turnover rate reached 5.5 percent.2 Identity theft incidents are expected to keep growing at an alarmingly high rate, and many analysts agree that identity theft has become the fastest-growing white-collar crime in the U.S. and probably around the world.

Identity management is also a key requirement for the success of Web Services. For Web Services to become a predominant web-based e-business model, companies need to be assured that web-based applications have been developed with stringent security and authentication controls. Not having strong identity management solutions could prevent Web Services from evolving into mature web-based solutions.

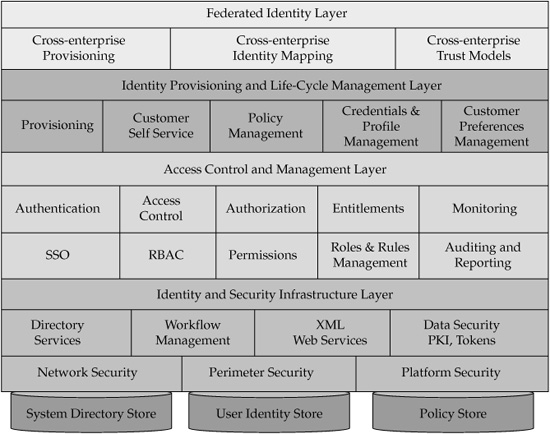

Identity management consists of many components, services, and complex interrelated processes. In order to better visualize the complexity and multitude of identity management, we use a notion of the conceptual reference architecture. Such an identity management reference architecture is shown in Figure 9-3.

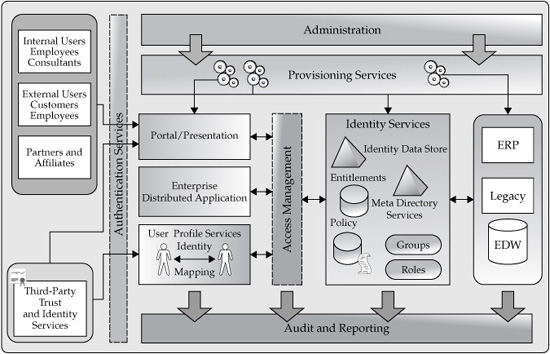

There are a number of standards-based and industry-driven initiatives that attempt to address various aspects of identity management, including Liberty Alliance and the recently announced Kantara Initiative3. However, the topic of identity, anonymity, and privacy involves the sociology of personal information and of information more generally. Therefore, identity management should be considered a cross-disciplinary, multifaceted area of knowledge and technology. The diagram in Figure 9-4 shows a typical architecture of identity management.

FIGURE 9-3 Identity management reference architecture

User Provisioning and Access Certification

This requirement has emerged to address identity life-cycle management and its user administration aspects, which deal with the creation, maintenance, and termination of digital identities. User provisioning deals with automating the process of granting users access rights (entitlements) to computing and network resources; therefore, it is also referred to as entitlements provisioning. By automating time-consuming and costly manual procedures, user provisioning can sharply reduce the costs of provisioning new employees, customers, partners, and suppliers with the necessary credentials, tools, and access privileges. Conversely, the same process can be used to deprovision ex-employees, customers, partners, suppliers, and expired accounts. User provisioning improves corporate efficiency and lowers administrative costs by facilitating account creation and tightly controlling access privileges, and it enhances security by tracking, managing, and controlling access. It is also important to note that, because they automate the creation and removal of user accounts and their entitlements, user provisioning and deprovisioning are widely viewed as key capabilities for compliance with various regulations, including GLBA, SOX, and the Know Your Customer (KYC) provisions of the USA Patriot Act.
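At its core, automated provisioning reconciles an authoritative source of who should have what (often an HR system) against the accounts that actually exist in a target system. The sketch below computes the create, grant, revoke, and deprovision actions needed to close that gap. The data shapes and action names are hypothetical. Commercial provisioning products add approval workflows and connectors that apply the changes to each target system.

```python
def plan_provisioning(
    authoritative: dict[str, set[str]],  # user -> entitlements they should have
    actual: dict[str, set[str]],         # user -> entitlements in the target system
) -> list[tuple[str, str, str]]:
    """Return (action, user, entitlement) steps that bring `actual` in line."""
    actions = []
    for user, wanted in sorted(authoritative.items()):
        have = actual.get(user, set())
        if user not in actual:
            actions.append(("create_account", user, ""))
        for ent in sorted(wanted - have):
            actions.append(("grant", user, ent))
        for ent in sorted(have - wanted):
            actions.append(("revoke", user, ent))
    # Accounts with no authoritative record: ex-employees, expired accounts, etc.
    for user in sorted(set(actual) - set(authoritative)):
        actions.append(("deprovision", user, ""))
    return actions
```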

FIGURE 9-4 Identity management logical architecture

Provisioning and deprovisioning users and assigning their access privileges in the form of entitlements represent only one side of the issue. On-boarding new users, modifying existing entitlements, and removing users and entitlements can become a very complex process, especially when the number of security rules, the number of users and their roles, and the number of protected resources grow with business maturity in a globally competitive marketplace. The new challenge of verifying the outcome of user provisioning gave rise to a companion discipline: access certification. This discipline is often considered together with user provisioning and is frequently implemented in the same product bundle. Products and solutions implementing access certification support extensive capabilities for user role modeling, provide an accurate view of the access privileges provisioned to users, verify that these privileges are assigned according to the security policies of the enterprise, and detect conflicts between assigned privileges and those policies. They also detect changes in user access that can increase a user’s risk profile.

Access certification helps organizations to quickly determine who has access to what resource, why this access was granted, the impact of adding a new set of roles and entitlements to already established user groups, and in general helps manage identity governance and supports compliance reporting and access control implementation requirements of the enterprise.
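A common example of detecting conflicts in assigned privileges is a separation-of-duties (SoD) check. Under such a check, certain pairs of entitlements must never be held by the same person, such as creating a vendor and approving payments to that vendor. The sketch below assumes the policy is expressed as a set of forbidden pairs. The entitlement names and rules are hypothetical.

```python
from itertools import combinations

# Hypothetical SoD policy: each frozenset is a pair that no single user may hold.
SOD_RULES: set[frozenset[str]] = {
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"submit_expense", "approve_expense"}),
}


def find_sod_violations(assignments: dict[str, set[str]]) -> list[tuple[str, tuple[str, str]]]:
    """Report each user who holds both entitlements of any forbidden pair."""
    violations = []
    for user, ents in sorted(assignments.items()):
        for a, b in combinations(sorted(ents), 2):
            if frozenset({a, b}) in SOD_RULES:
                violations.append((user, (a, b)))
    return violations
```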

Intrusion Detection and Prevention

This is a traditional requirement that has been revitalized in recent years by increased incidents of break-ins and similar security violations, largely due to the networked and interconnected nature of today’s business. According to a 2009 survey conducted by the Computer Security Institute (CSI),4 organizations detected large increases in incidents of password sniffing, financial fraud, and malware infection, and 33 percent of the surveyed organizations were fraudulently represented as the sender of a phishing message. These figures have been growing year after year. Considering enterprises’ typical reluctance to admit to incidents, or their inability to detect them, the true figures are likely to be higher than those reported.

Intrusion detection is the process of monitoring the events occurring in a computer system or network and analyzing them for signs of intrusion. Intrusion detection is a necessary business, technical, and even legal requirement that cannot be addressed by simply relying on firewalls and Virtual Private Networks. Indeed, as the attackers are getting smarter and their intrusion techniques and tools are getting more effective, reliable intrusion detection solutions have to offer a degree of sophistication and adaptability that requires unprecedented levels of industry collaboration and innovative “out-of-the-box” approaches to designing intrusion detection systems.
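The simplest form of this monitoring-and-analysis loop is a threshold rule over an event stream. The sketch below flags a source address that produces too many failed logins within a sliding time window, a typical sign of password guessing. The event format, the threshold of five failures, and the 60-second window are illustrative assumptions. Real intrusion detection systems combine many such signatures with anomaly detection.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, threshold=5, window=60):
    """Flag sources with `threshold` or more failed logins within `window` seconds.

    `events` is an iterable of (timestamp_seconds, source_ip, outcome) tuples,
    assumed to arrive in time order. Returns (timestamp, source_ip) alerts.
    """
    recent = defaultdict(deque)  # source -> timestamps of its recent failures
    alerts = []
    for ts, src, outcome in events:
        if outcome != "fail":
            continue
        q = recent[src]
        q.append(ts)
        while q and ts - q[0] > window:  # drop failures that fell out of the window
            q.popleft()
        if len(q) >= threshold:
            alerts.append((ts, src))
    return alerts
```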

While intrusion detection systems were designed to detect unauthorized access to or misuse of computing resources, a new breed of security systems, called Intrusion Prevention Systems (IPS), has emerged to shift the focus from detecting an attack to preventing it before actual damage has occurred. Contemporary IPS solutions are designed to protect against such common threats as worms, viruses, and Trojan horses; installation and activation of back doors; modifications to system files; changes in user entitlements and privilege levels; buffer overflow attacks; various spyware applications; and many others.

Information and Software Integrity and Tamper-Resistance

Protection of software and data against illegitimate use and modifications is a pressing security issue for software developers, software publishers, and intellectual property distributors (for example, music, digital video, DVD) alike. Many existing software-based mechanisms are too weak (for example, having a single point of failure) or too expensive to apply (for example, heavy runtime performance penalty). Software tamper-resistance deals with a serious threat, where a malicious user obtains a copy of the software and modifies its protection mechanism so that the modified software can change its original behavior to satisfy the attacker’s intent. For example, a program can be modified to create an unauthorized copy of the software that can be illegally distributed to the public, to a business competitor, to a criminal organization, etc.
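A basic integrity check compares a cryptographic digest of the distributed file with a known-good value. The sketch below shows the idea using SHA-256 from Python's `hashlib`. The function names are hypothetical. The sketch also exposes the weakness mentioned above: if the expected digest and the check itself live inside the software being protected, an attacker can patch both. Practical schemes therefore keep the reference value out of the attacker's reach, for example in a digitally signed release manifest, or spread many interdependent checks through the code.

```python
import hashlib
import hmac
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 65536) -> str:
    """SHA-256 of a file, read in chunks so large binaries need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_untampered(path: Path, expected_hex: str) -> bool:
    """True only if the file's digest matches the reference value exactly."""
    return hmac.compare_digest(file_sha256(path), expected_hex)
```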

Privacy, Confidentiality, and Trust

These requirements became critical as more organizations and users started to use the Internet for many aspects of business and personal life. We briefly described the confidentiality and privacy requirements in the preceding section. The issue of trust adds additional requirements of keeping confidential information confidential, respecting and maintaining user privacy according to his or her privacy preferences, and providing a systematic means of verifying that the privacy and confidentiality aspects of the trusted relationship are not violated. In general, different forms of trust exist to address different types of problems and mitigate risk in certain conditions. Various forms of trust have to be managed and reinforced differently based on the nature of the business and its corporate policies. When we look at the traditional network security solution, we can identify two principal forms of trust: direct trust and third-party trust.

• Direct trust refers to a situation in which two entities (for example, individual users) have established a trusted relationship in order to exchange information. When direct trust is applied to secure communications, it is the responsibility of each entity to ensure that they are comfortable with their level of personal trust in one another.

• Third-party trust refers to a situation in which two entities implicitly trust each other even though they have not previously established a personal relationship. In this situation, two entities implicitly trust each other because they each share a relationship with a common third party, and that third party vouches for the trustworthiness of the two entities in question. As an example, when classical security users named Alice and Bob want to exchange trustworthy credentials, they can use passports and/or driver’s licenses since they implicitly trust the government agencies that issued those credentials. Third-party trust is a fundamental requirement for any large-scale implementation of a network security solution based on public key cryptography, since it is impractical and unrealistic to expect each user to have previously established relationships with all other users.

Trust and Business Semantics

The nature of trust as it relates to security varies with the business processes involved, which require different degrees of trust and different procedures to establish it. A Russian proverb, adopted by former U.S. President Ronald Reagan, says, “Trust, but verify.” This is especially true for the financial services industry, and more specifically, for securities trading. The notion of trust is paramount there and is key to enabling securities trading. However, the business trust in securities trading is different from the trust relationship between two individuals. Business trust implies the ability to rely on others to assume certain risks, the assurance that business commitments will be honored, and the certainty that there is an effective avenue of recourse. To establish and maintain these trusted relationships, a security system should support the notion that acting as if you don’t trust the counterparty forces you to find ways to trust the transaction. This concept of trust drives new developments in security standards, systems, and solutions.

Overview of Security Technologies

This section provides a cursory overview of several major security technologies. This overview is by necessity brief—a complete and comprehensive discussion on this topic is well beyond the scope of this book.

Confidentiality and Integrity

Let’s start the overview of security technologies with a high-level discussion of two key security requirements—information confidentiality and integrity—and the techniques that support these requirements.

Cryptography, Cryptology, and Cryptanalysis

Cryptography is the process of converting data into an unreadable form via an encryption algorithm. Cryptography enables information to be sent across communication networks that are assumed to be insecure, without losing confidentiality or the integrity of the information being sent. Cryptography can also be used for user authentication by enabling the recipient to verify the sender’s identity.

Cryptanalysis is the study of mathematical techniques designed to defeat cryptographic algorithms. The branch of science that encompasses both cryptography and cryptanalysis is called cryptology.

An encryption algorithm transforms plain text into a coded equivalent, known as the cipher text, for transmission or storage. The coded text is subsequently decoded (decrypted) at the receiving end and restored to plain text. The encryption algorithm uses a key, which typically is a large binary number. The length of the key depends on the encryption algorithm and is one of the factors that determines the strength of the encryption.

The data that needs to be protected is “locked” for sending by using the bits in the key to transform the data bits using some mathematical operation. At the receiving end, the same or a different but related key is used to unscramble the data, restoring it to its original binary form. The effort required to decode the unusable scrambled bits into meaningful data without knowledge of the key (known as “breaking” or “cracking” the encryption) typically is a function of the complexity of the algorithm and the length of the keys. For a well-designed algorithm, each additional key bit doubles the number of possible keys, so the longer the key, the harder it is to decode the encrypted message.
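The effect of key length on brute-force effort can be expressed numerically. Assume a well-designed cipher whose best attack is exhaustive search. An n-bit key then gives 2^n possible keys, and an attacker expects to try half of them before finding the right one. The rate of one trillion keys per second below is an arbitrary assumption chosen only to make the growth visible.

```python
def brute_force_years(key_bits: int, keys_per_second: float = 1e12) -> float:
    """Expected years to find a key by exhaustive search (half the keyspace on average)."""
    seconds_per_year = 365.25 * 24 * 3600
    return (2 ** (key_bits - 1)) / keys_per_second / seconds_per_year


for bits in (40, 56, 128):
    print(f"{bits}-bit key: {brute_force_years(bits):.3g} years")
```

Under that assumption, a 56-bit key falls in about ten hours, while a 128-bit key would take on the order of 10^18 years. Each added bit doubles the expected time.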

Two types of algorithms are in use today: shared key (also known as “secret key” or symmetric key) and public key (or asymmetric key).

The obvious problems with symmetric ciphers include:

• The key distribution problem

• The need to exchange the secret key between all intended recipients of the sender

• Communication difficulties between unknown parties (that is, the challenge is for the sender to distribute a secret key to a party unknown to the sender)

• The scalability issue; for example, in a group of 100 participants, a sender may have to maintain 99 secret keys
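The scalability problem can be quantified with simple counting. If every pair among n participants needs its own secret key, each participant must protect n - 1 keys, and the group as a whole needs n(n - 1)/2 distinct keys. A public key scheme, by contrast, needs one key pair per participant. The function names below are simply labels for these formulas.

```python
def symmetric_keys_per_party(n: int) -> int:
    """Secret keys each participant must hold: one per other participant."""
    return n - 1


def symmetric_keys_total(n: int) -> int:
    """Distinct pairwise secret keys so that every pair of n parties can talk privately."""
    return n * (n - 1) // 2


def public_key_pairs_total(n: int) -> int:
    """With public key cryptography, each participant needs just one key pair."""
    return n
```

For the group of 100 in the example, that is 99 keys per participant and 4,950 keys overall, compared with 100 key pairs.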

These problems of symmetric ciphers were addressed by a discovery made by Whitfield Diffie and Martin Hellman in the mid-1970s. Their work defined a process in which the encryption and decryption keys were mathematically related but sufficiently different that it would be possible to publish one key with very little probability that anyone would be able to derive the other. This notion of publishing one key of the related key pair gave birth to public key cryptography, and the infrastructure later built to manage and distribute published keys became known as Public Key Infrastructure (PKI). The core premise of PKI is that while deriving the other key is theoretically possible, it is computationally and economically infeasible.
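Diffie and Hellman's 1976 paper also introduced a concrete key-agreement protocol, now known as Diffie-Hellman key exchange. The protocol shows the published/secret split at work. Each party publishes G^x mod P while keeping x private, yet both compute the same shared secret. An eavesdropper who sees only the public values faces the discrete logarithm problem. The modulus and base below are a deliberately small toy choice made for illustration; this is not a secure implementation.

```python
import secrets

# Toy public parameters, for illustration only: the Mersenne prime 2**127 - 1
# and base 3. Real deployments use standardized groups of 2048 bits or more,
# or elliptic curves.
P = 2**127 - 1
G = 3


def make_keypair() -> tuple[int, int]:
    """Return (private exponent, public value G**private mod P)."""
    private = secrets.randbelow(P - 3) + 2  # random exponent in [2, P - 2]
    return private, pow(G, private, P)


def shared_secret(my_private: int, their_public: int) -> int:
    """(G**b)**a == (G**a)**b mod P, so both sides obtain the same value."""
    return pow(their_public, my_private, P)
```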

While symmetric encryption uses a shared secret key for both encryption and decryption, PKI cryptography is based on the use of public/private key pairs. A public key is typically distributed in the form of a certificate that may be available to all users wishing to encrypt information, to be viewed only by a designated recipient using his or her private key.

A private key is a distinct data structure that is always protected from unauthorized disclosure and is used by the owner of the private key to decrypt the information that was encrypted using the recipient’s public key. The elegance of the PKI is in its mathematics, which allows the encryption key to be made public, but it still would be computationally infeasible to derive a private decryption key from the public key.
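Textbook RSA with deliberately tiny numbers makes the public/private relationship concrete. Anyone holding the public key (e, n) can encrypt, but decryption needs the private exponent d, and computing d requires knowing the factors of n. RSA is used here only as a familiar example of public key encryption; the chapter does not prescribe it. With n = 3233 the factors are trivial to find, so this is strictly a teaching sketch.

```python
# Textbook RSA with tiny primes: illustrates the key-pair relationship only.
# Real RSA uses moduli of 2048 bits or more plus randomized padding (e.g. OAEP).
p, q = 61, 53
n = p * q                # public modulus: 3233
phi = (p - 1) * (q - 1)  # 3120; kept secret along with p and q
e = 17                   # public exponent, coprime with phi
d = pow(e, -1, phi)      # private exponent: modular inverse of e (Python 3.8+)

public_key = (e, n)
private_key = (d, n)


def encrypt(m: int, key: tuple[int, int]) -> int:
    exp, mod = key
    return pow(m, exp, mod)


def decrypt(c: int, key: tuple[int, int]) -> int:
    exp, mod = key
    return pow(c, exp, mod)
```

With these values, `encrypt(65, public_key)` yields 2790, and `decrypt(2790, private_key)` recovers 65.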

By allowing every party to publish its public key, PKI solves the major key distribution and scalability problems of symmetric encryption that we mentioned earlier.

The mathematical principles, algorithms, and standards used to implement public key cryptography are relatively complex, and their detailed description is well beyond the scope of this book. Moreover, the creation and management of public-private key pairs involve a complex and elaborate set of processes, including key establishment, key life-cycle management, key recovery, key escrow, and many others, which we also cannot cover here. However, a number of mature PKI products are on the market today, and in many cases the technology has been made easy enough to use that it is practically transparent to the user.

PKI, Nonrepudiation, and Digital Signatures

Digital signatures are one of the major value-added services of the Public Key Infrastructure. Digital signatures allow the recipient of a digitally signed electronic message to authenticate the sender and verify the integrity of the message. Most importantly, digital signatures are difficult to counterfeit and easy to verify, making them superior even to handwritten signatures.
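Signing reverses the roles of the keys. The signer applies the private key to a hash of the message, and anyone can check the result with the public key. A successful check authenticates the signer, and any change to the message makes the check fail. The Python sketch below is textbook RSA over a SHA-256 digest. It deliberately uses a small key built from two Mersenne primes (2^61 - 1 and 2^89 - 1), so it is an illustration only. Production signatures use 2,048-bit or larger keys with a padding scheme such as RSA-PSS.

```python
import hashlib
import math

# Toy RSA signing key from two Mersenne primes (illustrative, NOT secure).
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                 # public modulus
PHI = (P - 1) * (Q - 1)   # secret
E = 65537                 # public verification exponent
assert math.gcd(E, PHI) == 1
D = pow(E, -1, PHI)       # private signing exponent (Python 3.8+)


def _digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Signer applies the private key: s = H(m)^D mod N."""
    return pow(_digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public key (N, E) checks that s^E mod N == H(m)."""
    return pow(signature, E, N) == _digest(message)
```

For example, a signature produced by sign(b"Pay 100 USD to Alice") verifies against that message. verify(b"Pay 900 USD to Alice", sig) returns False, because the altered message hashes to a different value.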

Most analysts and legal scholars agree that digital signatures will become increasingly important in establishing the authenticity of a record for its admissibility as evidence. The enactment of the Electronic Signatures in Global and National Commerce Act (eSign) by the U.S. Congress in 2000 is one confirmation of the importance of digital signatures. However, the status of explicit digital signature legislation varies from country to country. For example, the European Union has set different levels of legal recognition for different forms of electronic signatures that meet different legal and technology requirements, ranging from limited acceptance to broad applicability.

Digital signatures received serious legal backing from organizations such as the American Bar Association (ABA), which states that to achieve the basic purpose of a signature, it must have the following attributes:

• Signer authentication A signature should indicate who signed a document, message, or record, and should be difficult for another person to produce without authorization.

• Document authentication A signature should identify what is signed, making it impracticable to falsify or alter either the signed matter or the signature without detection.

The ABA clarifies that these attributes are mandatory tools used to exclude impersonators and forgers and are essential ingredients of a “nonrepudiation service.”

Network and Perimeter Security Technologies

This group of security technologies is relatively mature and widely deployed throughout corporate and public networks today.

Firewalls

Network firewalls enforce an enterprise’s security policy by controlling the flow of traffic between two or more networks. A firewall system provides both a perimeter defense and a control point for monitoring access to and from specific networks. Firewalls often are placed between the corporate network and an external network such as the Internet or a partnering company’s network. However, firewalls are also used to segment parts of corporate networks.

Firewalls can control access at the network level, the application level, or both. At the network level, a firewall can restrict packet flow based on such protocol attributes as the packet’s source address, destination address, source TCP/UDP port, destination port, and protocol type. At the application level, a firewall may base its control decisions on the details of the conversation between the applications (for example, rejecting all conversations that discuss a particular topic or use a restricted keyword) and on other available information, such as previous connectivity and user identification.
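The network-level checks described above amount to matching each packet against an ordered list of rules. The Python sketch below is a hypothetical stateless packet filter that uses only the standard ipaddress module. The Packet and Rule types and the first-match, default-deny policy are assumptions for illustration, not any product's configuration language.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    protocol: str  # "tcp" or "udp"
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                     # "allow" or "deny"
    src_net: str = "0.0.0.0/0"      # source must fall inside this CIDR block
    dst_net: str = "0.0.0.0/0"      # destination must fall inside this block
    protocol: Optional[str] = None  # None matches any protocol
    src_port: Optional[int] = None  # None matches any source port
    dst_port: Optional[int] = None  # None matches any destination port

    def matches(self, pkt: Packet) -> bool:
        return (
            ip_address(pkt.src) in ip_network(self.src_net)
            and ip_address(pkt.dst) in ip_network(self.dst_net)
            and self.protocol in (None, pkt.protocol)
            and self.src_port in (None, pkt.src_port)
            and self.dst_port in (None, pkt.dst_port)
        )


def filter_packet(rules: List[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; deny anything unmatched."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "deny"


# Example policy for traffic arriving from the Internet (documentation addresses).
RULES = [
    # Internal addresses should never arrive from outside: drop likely spoofed packets.
    Rule("deny", src_net="10.0.0.0/8"),
    # Allow HTTPS to the public web server only.
    Rule("allow", dst_net="192.0.2.10/32", protocol="tcp", dst_port=443),
]
```

Rule order matters here. Because the anti-spoofing rule comes first, a packet that claims an internal source address is dropped even though it targets the allowed HTTPS service.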

Firewalls may be packaged as system software, hardware and software bundles, and, more recently, dedicated hardware appliances (embedded in routers, for example). Known as firewall “appliances,” they are easy-to-configure integrated hardware and software packages that run on dedicated platforms. Firewalls can defend against a variety of attacks, including:

• Unauthorized access

• IP address “spoofing” (a technique where hackers disguise their traffic as coming from a trusted address to gain access to the protected network or resources)

• Session hijacking

• Spyware, viruses, and Trojans

• Malicious or rogue applets

• Traffic rerouting

• Denial of Service (DoS)

With the emergence of spyware and malware as some of the fastest-growing security threats, advanced firewalls and other perimeter defense solutions are extending their features to support the capability to recognize and eradicate spyware modules that reside on end-user computers and corporate servers.

Many popular firewalls include VPN technology. The firewalls establish an encrypted connection, or secure “tunnel,” with each other across the external network, so that users at the remote end can access the internal, protected network transparently.

Virtual Private Networks

Virtual Private Network (VPN) solutions use encryption and authentication to provide confidentiality and data integrity for communications over open and/or public networks such as the Internet. In other words, VPN products establish an encrypted tunnel for users and devices to exchange information. This secure tunnel can only be as strong as the method used to identify the users or devices at each end of the communication, and the method used to protect data that is transmitted over the tunnel.

Typically, each VPN node uses a secret session key and an agreed-upon encryption algorithm to encode and decode data, exchanging session keys at the start of each connection using public key encryption. Both end points of a VPN link check data integrity, usually with a standards-compliant cryptographic hash algorithm (for example, SHA-1 or MD5). Both of these algorithms are now considered weak, and newer deployments prefer SHA-256 or stronger.
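The integrity check performed at each end of a VPN link can be sketched with a keyed hash (HMAC) computed over each message with the shared session key. The Python sketch below covers integrity only and omits encryption. It uses HMAC-SHA256 rather than the older SHA-1 or MD5. The function names and the framing (tag appended to the payload) are assumptions. Real VPN protocols such as IPsec ESP also encrypt the payload and add sequence numbers to resist replay.

```python
import hashlib
import hmac

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag


def protect(session_key: bytes, payload: bytes) -> bytes:
    """Sender: append an HMAC-SHA256 tag computed with the shared session key."""
    tag = hmac.new(session_key, payload, hashlib.sha256).digest()
    return payload + tag


def check(session_key: bytes, packet: bytes) -> bytes:
    """Receiver: recompute the tag and reject the packet if it differs."""
    payload, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(session_key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("integrity check failed")
    return payload
```

If a single bit of the payload changes in transit, check raises ValueError. An attacker without the session key cannot compute a matching tag for the altered data.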

Secure HTTP Protocols/SSL/TLS/WTLS

These communication protocols address issues of secure communication between clients and servers on wired (and, in the case of WTLS, wireless) networks. For completeness, we discuss these protocols only briefly in this section.

Secure Hypertext Transfer Protocols These protocols include the popular HTTP Secure (HTTPS) and its less widely used alternative, Secure HTTP (S-HTTP). Both are based on the primary protocol used between web clients and servers, the Hypertext Transfer Protocol (HTTP). HTTP Secure, or HTTPS, is a combination of HTTP and the SSL/TLS protocols (described in the following paragraphs). HTTPS provides encryption and secure identification of the server. It uses a dedicated port (by default, port 443) and is commonly used to secure sensitive communications such as payment transactions, identity establishment and verification, and so on. Secure HTTP (S-HTTP) takes a different approach: it extends the basic HTTP protocol itself to allow both client-to-server and server-to-client encryption. S-HTTP provides three basic security functions: digital signature, authentication, and encryption. Any message may use any combination of these (as well as no protection). S-HTTP supports multiple key management mechanisms, including password-style manually distributed shared secret keys, public key exchange, and Kerberos ticket distribution. In particular, provisions have been made for prearranged symmetric session keys to send confidential messages to those who have no established public-private key pair.

Secure Sockets Layer (SSL) SSL is the most widely used security technology on the web. SSL provides end-to-end security between browsers and servers, always authenticating servers and optionally authenticating clients. SSL is application-independent because it operates between the transport layer and the application layer rather than inside any particular application. It secures connections at the point where the application communicates with the TCP/IP protocol stack, so it can encrypt, authenticate, and validate any application protocol that runs over it, such as HTTP, FTP, Telnet, e-mail, and so on. In providing communications channel security, SSL ensures that the channel is private and reliable and that encryption is used for all messages after a simple “handshake” is used to define a session-specific secret key.

Transport Layer Security Protocol (TLS) The Internet Engineering Task Force renamed SSL as the Transport Layer Security protocol in 1999. TLS is based on SSL 3.0 and offers enhancements such as improved certificate management, stronger authentication, and new error-detection capabilities. Its three levels of server security include server verification via digital certificate, encrypted data transmission, and verification that the message content has not been altered. All versions of SSL have since been deprecated, and current deployments use TLS.
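On the client side, the server verification and protocol version requirements described above map to a few settings of Python's standard ssl module. The sketch below builds a client context that verifies the server's certificate chain and host name and refuses anything older than TLS 1.2. The make_client_context helper is hypothetical.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS client context that authenticates the server."""
    # create_default_context() loads the system's trusted CA certificates and
    # enables certificate verification and host name checking.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 even if the server offers them.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


if __name__ == "__main__":
    ctx = make_client_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

To open a connection, the context would wrap a TCP socket, for example ctx.wrap_socket(socket.create_connection((host, 443)), server_hostname=host). The handshake then fails if the server's certificate does not chain to a trusted authority or does not match host.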

Wireless Transport Layer Security The Wireless Transport Layer Security (WTLS) protocol is the security layer of the Wireless Application Protocol (WAP). The WAP WTLS protocol was designed to provide privacy, data integrity, and authentication for wireless devices. Even though the WTLS protocol is closely modeled after the well-studied TLS protocol, there are a number of potential security problems in it, and it has been found to be vulnerable to several attacks, including a chosen plaintext data recovery attack, a datagram truncation attack, a message forgery attack, and a key-search shortcut for some exportable keys.

Security experts are continuously working on addressing these concerns, and new wireless security solutions are rapidly becoming available, not only for corporate local area networks but for all wireless devices, including personal computers, PDAs, and even mobile phones. Therefore, information stored in an MDM Data Hub can be accessed securely as long as the wireless access point or router and the wireless users exercise appropriate precautions and employ wireless security protocols of enterprise-defined strength. These protocols include Wired Equivalent Privacy (WEP), which is now considered insecure, Wi-Fi Protected Access (WPA) and its successors WPA2 and WPA3, the Extensible Authentication Protocol (EAP), and others.

Application, Data, and User Security

In this section, we’ll discuss application, data, and user security technologies and their applicability to the business requirements of authentication, integrity, and confidentiality.

Introduction to Authentication Mechanisms

Authentication mechanisms include passwords and PINs, one-time passwords, digital certificates, security tokens, biometrics, Kerberos authentication, and RADIUS.

Passwords and PINs Authentication most commonly relies on passwords or personal identification numbers (PINs). Passwords are typically used while logging into networks and systems. To ensure mutual authentication, passwords can be exchanged in both directions.
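A system that checks passwords should not store them in readable form. A common practice is to store a random salt and a slow, salted hash produced with PBKDF2, and to compare hashes in constant time at login. The Python sketch below illustrates this approach. The helper names and the iteration count are our assumptions; current guidance, such as OWASP's, should be consulted when choosing the work factor.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

ITERATIONS = 600_000  # assumed work factor: slower for attackers (and for logins)


def hash_password(password: str, iterations: int = ITERATIONS) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key); store these instead of the password."""
    salt = secrets.token_bytes(16)  # unique per user, defeats precomputed tables
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes,
                    iterations: int = ITERATIONS) -> bool:
    """Recompute the derived key from the submitted password and compare."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, stored_key)  # constant-time comparison
```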

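Challenge-Response Handshakes These techniques offer stronger authentication than ordinary passwords. The party requesting access starts the exchange, and the verifier presents it with an unpredictable challenge value. The requester then calculates an appropriate response from the challenge and a secretly shared value and sends it back. The verifier performs the same calculation and compares the results. The shared secret itself never crosses the network, and each challenge is different, so an intercepted response cannot be reused. This procedure therefore defeats the unauthorized use of captured passwords.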
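The challenge-response exchange fits in a few lines of Python. In this sketch, the response function is HMAC-SHA256 over the challenge, keyed with the shared secret. That choice and the helper names are assumptions; deployed protocols differ in the exact function they use.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Verifier: generate an unpredictable, single-use challenge."""
    return secrets.token_bytes(16)


def respond(shared_secret: bytes, challenge: bytes) -> bytes:
    """Claimant: prove knowledge of the secret without transmitting it."""
    return hmac.new(shared_secret, challenge, hashlib.sha256).digest()


def check_response(shared_secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Verifier: recompute the expected response and compare in constant time."""
    return hmac.compare_digest(respond(shared_secret, challenge), response)
```

A response captured by an eavesdropper is useless later. The verifier issues a fresh challenge each time, and the old response does not match it.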

One-Time Password One-time passwords are designed to remove the security risks presented by traditional, static passwords and PINs. The same password is never reused, so intercepted passwords cannot be used for authentication. Implementations of this approach vary; many derive the current password from the current time. For example, RSA Security’s SecurID solution generates a code that changes every 60 seconds. The code can be displayed on a small SecurID hardware token or presented to the user on the screen of a device that runs a SecurID “soft token” application (this RSA application can be installed on a PDA or a smartphone). This value, plus an optional PIN, is submitted to an authentication server, where it is compared to the value computed for that user’s SecurID at that particular time. When the token is combined with a PIN, this form of authentication is referred to as “two-factor authentication,” because it requires both something the user has (the token) and something the user knows (the PIN).
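SecurID's algorithm is proprietary and is not reproduced here. The same time-based idea is standardized in the open HOTP (RFC 4226) and TOTP (RFC 6238) algorithms, which the Python sketch below implements. A counter, derived here from the current time, is combined with a secret shared by the token and the authentication server. The 30-second step is the TOTP default; a 60-second interval like the one described for SecurID would correspond to step=60. The verify_totp helper and its one-step clock-drift window are our assumptions.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter value."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): the counter is time // step."""
    if unix_time is None:
        unix_time = time.time()
    return hotp(secret, int(unix_time // step), digits)


def verify_totp(secret: bytes, submitted: str, unix_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Server side: also accept codes from adjacent steps to tolerate clock drift."""
    if unix_time is None:
        unix_time = time.time()
    counter = int(unix_time // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + delta, digits), submitted)
        for delta in range(-window, window + 1)
        if counter + delta >= 0
    )
```

With the RFC test secret b"12345678901234567890", totp(secret, 59, digits=8) returns "94287082", which matches the first SHA-1 test vector in RFC 6238.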

Digital Certificates Digital certificates work like their real-life counterparts (for example, passports and driver’s licenses), which a trusted authority issues to contain and present the user’s credentials. Digital certificates contain the public keys used to verify digital signatures. Certificates are often based on PKI technology and mathematically bind a public encryption key to the identity (or other attribute) of a principal. The principal can be an individual, an application, or another entity such as a web server. A trusted certificate authority creates the certificate and vouches for its authenticity by signing it with the authority’s own private key. There are several commercial certificate issuers, such as RSA Security (now a part of EMC) and VeriSign. An organization can issue certificates for its own applications by using an internally managed certificate authority. A certificate-issuing server can also be installed as a part of a web server suite (for example, IBM, Lotus, and Microsoft integrate a certificate server with their web server software). PKI security vendors such as VeriSign and RSA Security/EMC offer a variety of mature certificate-enabled products for businesses.

Other authentication techniques include Kerberos and the Remote Authentication Dial-In User Service (RADIUS).

Multifactor Authentication Technologies

In principle, any authentication process deals with one or more questions that help define the user’s identity. These questions include:

• Something you have (for example, a smart card or a hardware token)

• Something you know (for example, a password or a PIN)

• Something you are (for example, an intrinsic attribute of your body including fingerprint, iris scan, face geometry)

• Something you do (for example, typing characteristics, handwriting style)

Multifactor authentication combines credentials from more than one of these categories. Multifactor authentication systems can be more difficult for users to get used to. However, their security benefits are significant. An impersonator must compromise more than one independent factor, for example by both stealing a hardware token and learning its PIN. As a result, multifactor authentication can successfully withstand a number of impersonation attacks, and over time these benefits can outweigh many of its perceived drawbacks.
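The key point of the list above is that the factors must come from different categories: a password plus a PIN is still a single factor. The hypothetical Python sketch below maps credential types to categories and counts the distinct categories a user presents. The credential names and the mapping are illustrative assumptions.

```python
from enum import Enum
from typing import Iterable, Set


class Factor(Enum):
    HAVE = "something you have"
    KNOW = "something you know"
    ARE = "something you are"
    DO = "something you do"


# Hypothetical mapping of credential types to factor categories.
CREDENTIAL_FACTORS = {
    "password": Factor.KNOW,
    "pin": Factor.KNOW,
    "hardware_token": Factor.HAVE,
    "smart_card": Factor.HAVE,
    "fingerprint": Factor.ARE,
    "iris_scan": Factor.ARE,
    "keystroke_dynamics": Factor.DO,
}


def distinct_factors(credentials: Iterable[str]) -> Set[Factor]:
    """Categories covered by the presented credentials."""
    return {CREDENTIAL_FACTORS[c] for c in credentials}


def is_multifactor(credentials: Iterable[str]) -> bool:
    """True only if the credentials span at least two different categories."""
    return len(distinct_factors(credentials)) >= 2
```

Under this mapping, ["password", "pin"] is not multifactor, while ["pin", "hardware_token"] is.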

Biometrics The goal of biometric identification is to provide strong authentication and access control with a level of security surpassing password and token systems.5 This goal is achievable because access is allowed only to the specific individual, rather than to anyone in possession of the access card.

Biometric techniques usually involve an automated process to verify the identity of an individual based on physical or behavioral characteristics. The first step in using biometrics is often called enrollment: biometric templates, such as a voiceprint, fingerprint, or iris scan, are collected for each individual and stored in the enrollment database. The template data is then used during a verification process for comparison with the characteristics of the person requesting access. Depending on the computer and network technologies used, verification can take only seconds. Biometric techniques fall into two categories: physiological and behavioral.

• Physiological biometrics Face, eye, fingerprint, palmprint, hand geometry, and thermal images

• Behavioral biometrics Voiceprints, handwritten signatures, and keystroke/signature dynamics

Biometric measures are used most frequently to provide hard-to-compromise security against impersonation attacks, but they also are useful for avoiding the inconveniences of needing a token or of users forgetting their passwords.

When considering biometric techniques, care must be taken to avoid a high rate of false positives (erroneous acceptance of an impostor presenting someone else’s identity) and false negatives (erroneous rejection of a valid identity). For example, a fingerprint scan can produce a false negative because the individual’s finger was dirty, covered with grease, and so on. Among the biometric techniques available to date, the iris scan produces the highest degree of confidence6. Indeed, an iris scan is unique for each eye of each individual for as long as the individual is alive, and the number of all possible iris scan codes is on the order of 10^72, a very large number that exceeds the number of observable stars in the universe!
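The matching threshold governs the tradeoff between the two error types. In the hypothetical Python sketch below, each comparison yields a similarity score between 0 and 1 and is accepted when the score reaches the threshold. The false acceptance (false positive) and false rejection (false negative) rates are measured on sample scores that are made up for illustration. One genuine score is deliberately low, of the kind a dirty finger might produce.

```python
from typing import Sequence, Tuple


def error_rates(genuine: Sequence[float], impostor: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false_accept_rate, false_reject_rate) at the given threshold.

    genuine  -- scores from comparing people with their own enrolled templates
    impostor -- scores from comparing people with someone else's template
    """
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


# Made-up sample scores; 0.60 stands for a genuine user with a dirty finger.
GENUINE = [0.91, 0.85, 0.78, 0.95, 0.60]
IMPOSTOR = [0.10, 0.35, 0.62, 0.20, 0.48]

if __name__ == "__main__":
    for t in (0.5, 0.7):
        far, frr = error_rates(GENUINE, IMPOSTOR, t)
        print(f"threshold {t}: FAR {far:.0%}, FRR {frr:.0%}")
```

In this sample, raising the threshold from 0.5 to 0.7 eliminates the false acceptance but rejects the genuine user with the low score. A deployment therefore tunes the threshold to the relative cost of each kind of error.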

Smart Cards

Smart cards represent another class of multifactor authentication solutions that offer a number of defenses against password-based vulnerabilities.

Smart cards (plastic cards about the same size as a credit card, but with an embedded computer chip) are increasingly used in a wide variety of applications, from merchant loyalty schemes to credit/debit cards, to student IDs, to GSM phones. According to several reports by the Gartner Group and other research organizations, smart cards are the highest-volume semiconductor-based product manufactured today, with GSM phones and financial-service applications leading this boom. The GSM subscriber identity module (SIM) remains the single largest smart card application.

Authentication, Personalization, and Privacy

Personalization has long been recognized as one of the key elements of an improved customer experience and enhanced customer relationships with the enterprise. However, organizations need to strike the right balance between the customers’ desire for personalized services and the need for privacy protection. In other words, we can recognize the tension between privacy, with its intrinsic property of not disclosing personal information unless and to the extent absolutely necessary, and personalization, which requires access to personal information, transactional behavior, and even knowledge of the party-to-enterprise relationships. To put it slightly differently, enabling personalization requires a certain amount of privacy disclosure.

But personalization is not the only threat to privacy. We have stated repeatedly that effective, strong authentication is a prerequisite to protecting an individual’s privacy. However, it can also threaten privacy since, depending on the situation, the risk profile of the users, and their transactions, the stronger the authentication, the more personal, sensitive, identifying information may be needed before access to an information resource or permission to execute a transaction can be granted. Solving these tensions is one of the drivers for an integrated MDM solution that can support the privacy preferences of the users as a part of the MDM data model and the extension of the data governance rules and policies.

Integrating Authentication and Authorization

While PKI addresses the issues of authentication, integrity, confidentiality, and nonrepudiation, we need to define an overarching conceptual framework that addresses a set of issues related to the authorization of users and applications to perform certain functions, to access protected resources, to create and enforce access control policies, and to “provision” users to automatically map appropriate policies, permissions, and entitlements at the time of their enrollment into a security domain.

As we mentioned earlier, successful authorization relies on the ability to perform reliable authentication. In fact, these two disciplines should go hand in hand in order to create a robust, fully functional, and auditable security framework. To help discuss these topics, let’s take a brief look at access control mechanisms and at Single Sign-On (SSO) technologies as a contemporary access control solution for the web and beyond.

Access control simplifies the task of maintaining the security of an enterprise network by cutting down on the number of paths and modes through which attackers might penetrate network defenses. A more detailed discussion on access control can be found in Chapter 11.

SSO Technologies

Single Sign-On (SSO) is a technology that enables users to access multiple computer systems or networks after logging in once with a single set of authentication credentials. This setup eliminates the situation where separate passwords and user IDs are required for each application. SSO offers three major advantages: user convenience, administrative convenience, and improved security. Indeed, having only one sign-on per user makes administration easier. It also reduces the temptation for users to keep their many passwords in an easily accessible form (for example, on paper) rather than try to remember them all, a practice that compromises security. Finally, SSO enhances productivity by reducing the amount of time users spend gaining system access.

SSO is particularly valuable in computing environments where users access applications residing on multiple operating systems and middleware platforms and, correspondingly, where its implementation is most challenging.

One disadvantage of the SSO approach is that if a user’s single sign-on credentials are compromised, the perpetrator gains access to all the resources that the user can reach through SSO.
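The core SSO idea can be sketched as follows. After a single login, an authentication service issues a signed, time-limited token, and each participating application validates that token instead of asking for a password. The sketch also makes the drawback concrete: anyone holding a stolen token can use it at every application until it expires. The token format, the shared HMAC key, and the one-hour lifetime are assumptions for illustration. Production SSO systems (for example, those based on Kerberos tickets or SAML assertions) differ considerably.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical key shared by the SSO service and the participating applications.
SSO_KEY = b"replace-with-a-long-random-key"


def issue_token(user: str, now: float, ttl: int = 3600, key: bytes = SSO_KEY) -> str:
    """SSO service: called once, after the user has authenticated."""
    claims = json.dumps({"sub": user, "exp": int(now) + ttl}, sort_keys=True).encode()
    sig = hmac.new(key, claims, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(claims).decode() + "."
            + base64.urlsafe_b64encode(sig).decode())


def validate_token(token: str, now: float, key: bytes = SSO_KEY) -> Optional[str]:
    """Any participating application: return the user name, or None if invalid."""
    try:
        claims_b64, sig_b64 = token.split(".")
        claims = base64.urlsafe_b64decode(claims_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong shape or bad Base64 (binascii.Error is a ValueError)
        return None
    expected = hmac.new(key, claims, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return None  # forged or altered token
    data = json.loads(claims)
    if now >= data["exp"]:
        return None  # expired: the user must sign on again
    return data["sub"]
```

Every application that trusts the key accepts the same token. That is exactly the convenience of SSO and also its risk, which is why short token lifetimes are common.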

Federated SSO and SAML

Enabling effective and seamless access to computing environments where users access applications residing on multiple operating systems and middleware platforms is the challenge addressed by Federated SSO—a set of authentication technologies that support and manage identity federation by enabling the portability of identity information across otherwise autonomous security domains. Identity federation offers a number of business benefits to the organization, including a reduction in the administration costs of identity provisioning and management, increased security and improved user experience by reducing the number of identification and authentication actions (ideally to a single action), and improved privacy compliance by allowing the user to control or limit what information is shared.

From a technology point of view, identity federation and seamless cross-domain authentication (federated SSO) are enabled through the use of open industry standards such as the Security Assertion Markup Language (SAML).7

SAML is an XML-based standard for exchanging authentication and authorization data between security domains. SAML has been developed by the Organization for the Advancement of Structured Information Standards (OASIS). It uses security tokens containing assertions to pass authentication and authorization information about a principal (usually an end user) between an identity provider and a service provider, such as a web application or a Web Service. There are essentially three fundamental components of the SAML specification:

• SAML assertions

• SAML protocol

• SAML bindings

The SAML specification includes an XML schema that defines SAML assertions and protocol messages. The specification also describes methods for binding these assertions to other existing protocols (HTTP, SOAP) in order to enable additional security functionality. A detailed discussion of SAML is beyond the scope of this book.
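To give a flavor of what a SAML assertion looks like, the Python sketch below builds a minimal, unsigned SAML 2.0 assertion with the standard xml.etree.ElementTree module. The element names, attribute names, and namespace come from the SAML 2.0 assertion schema. The issuer, subject, and audience values, the five-minute validity window, and the build_assertion helper are hypothetical. A real identity provider would also sign the assertion with XML Signature and deliver it through a binding such as HTTP POST.

```python
import uuid
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone

SAML_NS = "urn:oasis:names:tc:SAML:2.0:assertion"
ET.register_namespace("saml", SAML_NS)  # serialize elements with the saml: prefix


def _q(tag: str) -> str:
    """Qualified ElementTree tag name in the SAML assertion namespace."""
    return f"{{{SAML_NS}}}{tag}"


def _ts(t: datetime) -> str:
    """SAML timestamps are expressed in UTC."""
    return t.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def build_assertion(issuer: str, subject: str, audience: str,
                    now: datetime, valid_minutes: int = 5) -> str:
    assertion = ET.Element(_q("Assertion"), {
        "ID": "_" + uuid.uuid4().hex,  # xs:ID values must not start with a digit
        "Version": "2.0",
        "IssueInstant": _ts(now),
    })
    ET.SubElement(assertion, _q("Issuer")).text = issuer
    subj = ET.SubElement(assertion, _q("Subject"))
    ET.SubElement(subj, _q("NameID")).text = subject
    conditions = ET.SubElement(assertion, _q("Conditions"), {
        "NotBefore": _ts(now),
        "NotOnOrAfter": _ts(now + timedelta(minutes=valid_minutes)),
    })
    restriction = ET.SubElement(conditions, _q("AudienceRestriction"))
    ET.SubElement(restriction, _q("Audience")).text = audience
    statement = ET.SubElement(assertion, _q("AuthnStatement"), {"AuthnInstant": _ts(now)})
    context = ET.SubElement(statement, _q("AuthnContext"))
    ET.SubElement(context, _q("AuthnContextClassRef")).text = (
        "urn:oasis:names:tc:SAML:2.0:ac:classes:PasswordProtectedTransport")
    return ET.tostring(assertion, encoding="unicode")
```

Before trusting the NameID in such an assertion, a service provider would verify the signature, check that the current time falls between NotBefore and NotOnOrAfter, and confirm that its own identifier appears in the Audience element.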

SAML is not the only standard addressing identity federation. There are a number of other standards and technologies, many of which are available as standalone products or as part of other environments. Most popular solutions include:

• Windows CardSpace8 A Windows application available in the latest versions of Microsoft Windows (starting with the Vista operating system). The Windows CardSpace application allows users to provide their digital identity to online services in a simple and secure way by creating a secure online virtual information card that is used to prove a user’s identity and is difficult for the intruder to acquire or change.

• OpenID 9 A lightweight identity system designed around the concept of Internet identifier (URI-based) identity. OpenID was initially designed to address a very simple use case—enabling blog commenting in a controlled manner to protect against blog abuse, spam, and invalid/misstated attribution. OpenID’s primary benefits are the simplicity of its trust model and ease of integration.

• ID-WSF (the Liberty Alliance’s Identity Web Services Framework) 10 A platform for the discovery and invocation of identity services implemented as Web Services associated with a given identity. ID-WSF’s security model is very flexible and supports use cases with distributed identity services.

Web Services Security Concerns

We discussed Web Services in Part II, when we looked at service-oriented architecture (SOA). While Web Services offer a number of truly significant benefits, they bring with them interesting and challenging security concerns that need to be addressed in order to design, develop, and deploy a secure Web Services system.

Authentication

Since Web Services, like any other services and interfaces, should allow only authorized users to access service methods, authenticating those users is the first order of business. This is similar to the username and password authentication that ordinary websites may require of their users. The main difference is that in the context of Web Services, the users are often other computers (that is, client applications) that want to use the Web Service.

Data Integrity and Confidentiality

If an organization decides to expose an internal application as a Web Service, it may have to also expose supporting data stores (databases, registries, directories). Clearly, special care is necessary to protect that data, either by encryption (which may come with a performance impact) or by guarding its accessibility.

Similarly, data may be in danger of interception as it is being processed. For example, as a Web Service method gets invoked on user request, the temporary data that the Web Service uses locally may be exposed to an attacker if unauthorized users gain access to the system.

Eavesdropping in a Web Services context implies acquiring the information that users get back from a Web Service. If the Web Services output can be intercepted on its way to the user, the attacker may be in a position to violate the confidentiality and integrity of this data. One preventive measure is to use data-in-transit encryption (for example, SSL) for returned information.

Attacks

In the Web Services model, special care needs to be taken with regard to input parameter validation. In a poorly designed service, an attacker can submit invalid input parameters that bring the service, or even the whole system, down. One solution to this problem is to define acceptable types and values for all input parameters in the service’s published interface (for example, as XML Schema types in the WSDL description of a service invoked through the Simple Object Access Protocol, SOAP) and to reject any request that does not conform.
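Whatever interface description language is used, the service itself should enforce the declared contract. The Python sketch below shows whitelist-style validation that rejects anything not explicitly allowed. The lookupCustomer contract, its rules, and validate_params are hypothetical.

```python
import re
from typing import Any, Dict

# Hypothetical interface contract for a "lookupCustomer" operation.
LOOKUP_CUSTOMER_SCHEMA: Dict[str, Dict[str, Any]] = {
    "customer_id": {"type": str, "pattern": r"[A-Z]{2}\d{8}"},
    "max_results": {"type": int, "min": 1, "max": 100},
}


def validate_params(params: Dict[str, Any],
                    schema: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Reject unknown, missing, mistyped, malformed, or out-of-range parameters
    before any business logic runs; return the parameters unchanged if valid."""
    unknown = set(params) - set(schema)
    if unknown:
        raise ValueError(f"unexpected parameters: {sorted(unknown)}")
    for name, rule in schema.items():
        if name not in params:
            raise ValueError(f"missing parameter: {name}")
        value = params[name]
        if type(value) is not rule["type"]:  # exact match, so True is not an int
            raise ValueError(f"{name}: expected {rule['type'].__name__}")
        if "pattern" in rule and not re.fullmatch(rule["pattern"], value):
            raise ValueError(f"{name}: invalid format")
        if "min" in rule and value < rule["min"]:
            raise ValueError(f"{name}: below minimum {rule['min']}")
        if "max" in rule and value > rule["max"]:
            raise ValueError(f"{name}: above maximum {rule['max']}")
    return params
```

Rejecting malformed input at the service boundary means that an oversized max_results value or an injection-style customer_id never reaches the code that queries the data store.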

Denial-of-service (DoS) attacks, especially those in which an attacker overloads the service with requests, prevent legitimate users from using the service and thus disrupt the business. Furthermore, a flood of requests on one component or service can propagate to other components, affecting them all in a cascading, “domino” effect. For example, suppose component A serves requests from both components B and C. An attack that floods A through B also disrupts A’s service to C, and thus to the users of C.
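One common mitigation for request floods is to throttle each caller, so that a single misbehaving source cannot exhaust a shared component and start the domino effect. The Python sketch below implements a per-client token-bucket rate limiter. The class names, capacities, and rates are assumptions. Throttling of this kind blunts floods from individual callers but does not by itself stop a distributed attack.

```python
from typing import Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float, now: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keeps an independent bucket for each calling client or service."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: float) -> bool:
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, now)
            self._buckets[client_id] = bucket
        return bucket.allow(now)
```

With a capacity of 3 and a rate of 1 request per second, caller B is cut off after its burst of three requests, while caller C is still served.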

The loosely coupled nature of Web Services also leads to other security issues. The chain of components always has a component that is most vulnerable to attack (the weakest link). If attackers can compromise such a weak-link component, they can exploit this opportunity in a variety of ways:

• Intercept any data that flows to that particular component from either direction.

• Acquire sensitive, personal, or valuable information.

• Manipulate the streams of data in various ways, including data alteration, data redirection, and using “innocent” servers to mount denial-of-service attacks from the inside.

• Shut down the component, denying its functionality to the other components that depend upon it; this will effectively disrupt many users’ activities from many different access points.

WS-Security Standard

As we discussed in the preceding section, the security of Web Services includes concern about authenticity, integrity, and confidentiality of messages, and understanding of and protection from various penetration and denial-of-service attacks. A dedicated OASIS standard—WS-Security—has been developed to provide options for Web Services protection through message integrity, message confidentiality, and single-message authentication. These mechanisms can be used to accommodate a wide variety of security models and encryption technologies.

WS-Security also provides a general-purpose mechanism for associating security tokens with messages. No specific type of security token is required by WS-Security. It is designed to be extensible (for example, to support multiple security token formats). For example, a user might provide proof of identity and proof of a particular business certification. Additional information about WS-Security can be found on the OASIS Web Services Security Technical Committee website.11
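One of the token formats defined for WS-Security is the UsernameToken. Its OASIS profile specifies a password digest computed as Base64(SHA-1(nonce + created + password)), where the nonce is a random value and created is a timestamp. The Python sketch below computes and checks only those field values, not the full SOAP security header; the helper names and the in-memory replay cache are assumptions. SHA-1 is used because the profile specifies it. The digest scheme also requires the server to know the password, so in practice such tokens are sent over TLS or replaced by stronger token types.

```python
import base64
import hashlib
import hmac
import secrets
from datetime import datetime, timezone
from typing import Dict, Optional, Set


def password_digest(nonce: bytes, created: str, password: str) -> str:
    """Base64(SHA-1(nonce + created + password)), with the nonce as raw bytes."""
    data = nonce + created.encode("utf-8") + password.encode("utf-8")
    return base64.b64encode(hashlib.sha1(data).digest()).decode("ascii")


def make_token_fields(password: str, now: Optional[datetime] = None) -> Dict[str, str]:
    """Client side: values for the Nonce, Created, and Password elements."""
    now = now or datetime.now(timezone.utc)
    nonce = secrets.token_bytes(16)
    created = now.strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "Nonce": base64.b64encode(nonce).decode("ascii"),
        "Created": created,
        "PasswordDigest": password_digest(nonce, created, password),
    }


def verify_token_fields(fields: Dict[str, str], password: str,
                        seen_nonces: Set[str]) -> bool:
    """Server side: recompute the digest and reject replayed nonces.
    (A real service would also reject stale Created timestamps.)"""
    if fields["Nonce"] in seen_nonces:
        return False
    nonce = base64.b64decode(fields["Nonce"])
    expected = password_digest(nonce, fields["Created"], password)
    if not hmac.compare_digest(expected, fields["PasswordDigest"]):
        return False
    seen_nonces.add(fields["Nonce"])
    return True
```

The nonce and timestamp make each digest different even when the password is unchanged. A replayed token is rejected because its nonce has already been seen.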

Putting It All Together

Given the multitude and complexity of security technologies and the size constraints of the book, we were able only to “scratch the surface” of security concerns. However, general security practices and common sense dictate that enterprises should develop a comprehensive end-to-end security framework and reference architecture in order to protect data, users, and application platforms to a degree that makes good business, economic, and even legal sense. The key concerns of information security that are driven by risk management imperatives as well as regulatory and compliance requirements can and should be addressed by carefully designing and implementing a holistic, integrated, and manageable information security architecture and infrastructure. That architecture should be based on established and emerging standards as well as industry- and enterprise-specific policies. Experience shows that as long as you design your security solution holistically, rather than concentrating on only one aspect of security, be it authentication, firewalls, or encryption, the current state of the art in security technologies can enable you to create robust and secure Master Data Management solutions. This ground-up, end-to-end approach to security design is especially important for protecting the MDM system and the master data it manages. Modern MDM implementations are typically architected as SOA instances, so the security concerns of Web Services apply directly to the design of an MDM Data Hub. From the architecture framework point of view, the layered security framework shown in Figure 9-1 should be considered an integral part of the MDM solution. In fact, the security framework shows the outer layers of security “wrapped” around the data core, which naturally represents a Data Hub.

The last two chapters in this part of the book focus on two key aspects of information security that are particularly important to Master Data Management solutions: protecting MDM information and functionality from a security breach and unintended, unauthorized access and use.

To that end, we’ll show how to design an MDM solution to enable appropriate levels of information security and security-related compliance. We’ll also show how to integrate MDM information management architecture, which was discussed in Part II of the book, with the security and compliance concerns discussed in this and the previous chapters.

References

1. http://www.iso.org/iso/catalogue_detail?csnumber=39612.

2. Gartner, Inc. “Data breach and financial crimes scare consumers away.” (February 2009).

3. http://kantarainitiative.org/confluence/display/GI/Mission.

4. http://gocsi.com/survey.

5. http://ctl.ncsc.dni.us/biomet%20web/BMIndex.html.

6. http://ctl.ncsc.dni.us/biomet%20web/BMIris.html.

7. http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=security.

8. http://www.microsoft.com/windows/products/winfamily/cardspace/default.mspx.

9. http://openid.net/get-an-openid/what-is-openid/.

10. http://www.projectliberty.org/specs/.

11. http://www.oasis-open.org/committees/wss/.