15

Optimum Receivers

Geoffrey C. Orsak

15.1 Introduction

Every engineer strives for optimality in design. This is particularly true for communications engineers, since in many cases implementing suboptimal receivers and sources can result in dramatic losses in performance. As such, this chapter focuses on design principles leading to the implementation of optimum receivers for the most common communication environments.

The main objective in digital communications is to transmit a sequence of bits to a remote location with the highest degree of accuracy. This is accomplished by first representing bits (or, more generally, short bit sequences) by distinct waveforms of finite time duration. These time-limited waveforms are then transmitted (broadcast) to the remote sites in accordance with the data sequence.

Unfortunately, because of the nature of the communication channel, the remote location receives a corrupted version of the concatenated signal waveforms. The most widely accepted model for the communication channel is the so-called additive white Gaussian noise* channel (AWGN channel). Mathematical arguments based upon the central limit theorem [7], together with supporting empirical evidence, demonstrate that many common communication channels are accurately modeled by this abstraction. Moreover, from the design perspective, this is quite fortuitous since design and analysis with respect to this channel model are relatively straightforward.

*For those unfamiliar with AWGN, a random process (waveform) is formally said to be white Gaussian noise if all collections of instantaneous observations of the process are jointly Gaussian and mutually independent. An important consequence of this property is that the power spectral density of the process is a constant with respect to frequency variation (spectrally flat). For more on AWGN, see Papoulis [4].

15.2 Preliminaries

To better describe the digital communications process, we shall first elaborate on so-called binary communications. In this case, when the source wishes to transmit a bit value of 0, the transmitter broadcasts a specified waveform s0(t) over the bit interval t ∈ [0, T]. Conversely, if the source seeks to transmit the bit value of 1, the transmitter instead broadcasts the signal s1(t) over the same bit interval. The received waveform R(t) corresponding to the first bit is then appropriately described by the following hypothesis testing problem:

$$
\begin{aligned}
H_0 &: R(t) = s_0(t) + \eta(t), \\
H_1 &: R(t) = s_1(t) + \eta(t),
\end{aligned}
\qquad 0 \le t \le T, \tag{15.1}
$$

where, as stated previously, η(t) corresponds to AWGN with spectral height nominally given by N0/2. It is the objective of the receiver to determine the bit value, that is, the most accurate hypothesis, from the received waveform R(t).

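The optimality criterion of choice in digital communication applications is the total probability of error, normally denoted as Pe. This scalar quantity is expressed as

$$
P_e = \Pr(\text{declare 1} \mid \text{0 transmitted})\,\Pr(\text{0 transmitted}) + \Pr(\text{declare 0} \mid \text{1 transmitted})\,\Pr(\text{1 transmitted}).
$$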

The problem of determining the optimal binary receiver with respect to the probability of error is solved by applying stochastic representation theory [10] to detection theory [5,9]. The specific waveform representation of relevance in this application is the Karhunen–Loève (KL) expansion.

15.3 Karhunen–Loève Expansion

The Karhunen–Loève expansion is a generalization of the Fourier series designed to represent a random process in terms of deterministic basis functions and uncorrelated random variables derived from the process. Whereas the Fourier series allows one to model or represent deterministic time-limited energy signals in terms of linear combinations of complex exponential waveforms, the Karhunen–Loève expansion allows us to represent a second-order random process in terms of a set of orthonormal basis functions scaled by a sequence of random variables. The objective in this representation is to choose the basis of time functions so that the coefficients in the expansion are mutually uncorrelated random variables.

To be more precise, if R(t) is a zero-mean, second-order random process defined over [0, T] with covariance function KR(t, s), then so long as the deterministic basis functions satisfy certain integral constraints [9], one may write R(t) as

$$
R(t) = \sum_{i=1}^{\infty} R_i\,\phi_i(t), \qquad 0 \le t \le T,
$$

where

$$
R_i = \int_0^T R(t)\,\phi_i(t)\,dt. \tag{15.3}
$$

In this case the Ri will be mutually uncorrelated random variables with the φi being deterministic basis functions that are complete in the space of square-integrable time functions over [0, T]. Importantly, in this case, equality is to be interpreted as mean-square equivalence, that is,

$$
\lim_{N \to \infty} E\left[\left(R(t) - \sum_{i=1}^{N} R_i\,\phi_i(t)\right)^{2}\right] = 0
$$

for all 0 ≤ t ≤ T.

FACT 15.1: If R(t) is AWGN, then any orthonormal basis of the vector space of square-integrable signals over [0, T] results in uncorrelated, and therefore independent, Gaussian random variables.

The use of Fact 15.1 allows for a conversion of a continuous-time detection problem into a finite-dimensional detection problem. To derive the optimal binary receiver, we first construct our set of basis functions as the set of functions defined over t ∈ [0, T] beginning with the signals of interest s0(t) and s1(t). That is,

$$
\{\, s_0(t),\ s_1(t),\ \text{plus a countable number of functions that complete the basis} \,\}.
$$

In order to ensure that the basis is orthonormal, we must apply the Gram–Schmidt procedure* [6] to the full set of functions beginning with s0(t) and s1(t) to arrive at our final choice of basis {φi(t)}.

FACT 15.2: Let {φi(t)} be the resultant set of basis functions. Then for all i > 2, the φi(t) are orthogonal to s0(t) and s1(t). That is,

$$
\int_0^T \phi_i(t)\,s_j(t)\,dt = 0
$$

for all i > 2 and j = 0, 1.

Using this fact in conjunction with Equation 15.3, one may recognize that only the coefficients R1 and R2 are functions of our signals of interest. Moreover, since the Ri are mutually independent, the optimal receiver will, therefore, only be a function of these two values.

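Thus, through the application of the KL expansion, we arrive at an equivalent hypothesis testing problem to that given in Equation 15.1,

$$
\begin{aligned}
H_0 &: \mathbf{R} = \begin{bmatrix} \int_0^T \phi_1(t)\,s_0(t)\,dt \\ \int_0^T \phi_2(t)\,s_0(t)\,dt \end{bmatrix} + \begin{bmatrix} \eta_1 \\ \eta_2 \end{bmatrix}, \\
H_1 &: \mathbf{R} = \begin{bmatrix} \int_0^T \phi_1(t)\,s_1(t)\,dt \\ \int_0^T \phi_2(t)\,s_1(t)\,dt \end{bmatrix} + \begin{bmatrix} \eta_1 \\ \eta_2 \end{bmatrix},
\end{aligned} \tag{15.4}
$$

where R is the vector of the coefficients R1 and R2, where it is easily shown that η1 and η2 are mutually independent, zero-mean, Gaussian random variables with variance given by N0/2, and where φ1 and φ2 are the first two functions from our orthonormal set of basis functions. Thus, the design of the optimal binary receiver reduces to a simple two-dimensional detection problem that is readily solved through the application of detection theory.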

*The Gram–Schmidt procedure is a deterministic algorithm that simply converts an arbitrary set of basis functions (vectors) into an equivalent set of orthonormal basis functions (vectors).
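The orthonormalization step can be illustrated numerically. The following is a minimal sketch, assuming uniformly sampled waveforms and a Riemann-sum approximation of the inner product over [0, T]. The helper names (`inner`, `gram_schmidt`) and the two example signals (a rectangular pulse and a ramp) are hypothetical choices made for illustration only.

```python
import math

def inner(x, y, dt):
    """Riemann-sum approximation of the inner product of x(t) and y(t) on [0, T]."""
    return sum(a * b for a, b in zip(x, y)) * dt

def gram_schmidt(signals, dt, tol=1e-9):
    """Return orthonormal sampled basis functions spanning the given signals."""
    basis = []
    for s in signals:
        residual = list(s)
        for phi in basis:
            c = inner(residual, phi, dt)  # component along an existing basis function
            residual = [r - c * p for r, p in zip(residual, phi)]
        norm = math.sqrt(inner(residual, residual, dt))
        if norm > tol:  # skip signals that already lie in the span
            basis.append([r / norm for r in residual])
    return basis

# Hypothetical example signals on [0, T], sampled at interval midpoints.
T, n = 1.0, 1000
dt = T / n
t = [(k + 0.5) * dt for k in range(n)]
s0 = [1.0 for _ in t]   # rectangular pulse
s1 = [tk for tk in t]   # ramp

phi = gram_schmidt([s0, s1], dt)

# Signal-space coordinates of each signal with respect to phi_1 and phi_2.
s0_vec = [inner(s0, p, dt) for p in phi]
s1_vec = [inner(s1, p, dt) for p in phi]
```

Because the first two basis functions are built from s0(t) and s1(t), each signal is fully described by two coordinates, which is the reduction to a two-dimensional problem described in this section.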

15.4 Detection Theory

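It is well known from detection theory [5] that under the minimum Pe criterion, the optimal detector is given by the maximum a posteriori (MAP) rule,

$$
\max_{i \in \{0, 1\}} \Pr(H_i \mid \mathbf{R} = \mathbf{r}),
$$

that is, determine the hypothesis that is most likely, given that our observation vector is r. By a simple application of Bayes' theorem [4], we immediately arrive at the central result in detection theory: the optimal binary detector is given by the likelihood ratio test (LRT),

$$
L(\mathbf{R}) = \frac{p_{\mathbf{R} \mid H_1}(\mathbf{R})}{p_{\mathbf{R} \mid H_0}(\mathbf{R})} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{\pi_0}{\pi_1},
$$

where the πi are the a priori probabilities of the hypotheses Hi being true. Since in this case we have assumed that the noise is white and Gaussian, the LRT can be written as

$$
L(\mathbf{R}) = \frac{\exp\left(-\|\mathbf{R} - \mathbf{s}_1\|^2 / N_0\right)}{\exp\left(-\|\mathbf{R} - \mathbf{s}_0\|^2 / N_0\right)} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{\pi_0}{\pi_1},
$$

where

$$
\mathbf{s}_j = \begin{bmatrix} \int_0^T \phi_1(t)\,s_j(t)\,dt \\ \int_0^T \phi_2(t)\,s_j(t)\,dt \end{bmatrix}, \qquad j = 0, 1.
$$

By taking the logarithm and cancelling common terms, it is easily shown that the optimum binary receiver can be written as

$$
\langle \mathbf{R}, \mathbf{s}_1 - \mathbf{s}_0 \rangle \underset{H_0}{\overset{H_1}{\gtrless}} \frac{N_0}{2}\ln\frac{\pi_0}{\pi_1} + \frac{1}{2}\left(\|\mathbf{s}_1\|^2 - \|\mathbf{s}_0\|^2\right). \tag{15.8}
$$

This finite-dimensional version of the optimal receiver can be converted back into a continuous-time receiver by the direct application of Parseval's theorem [4], where it is easily shown that

$$
\langle \mathbf{R}, \mathbf{s}_j \rangle = \int_0^T R(t)\,s_j(t)\,dt, \qquad \|\mathbf{s}_j\|^2 = \int_0^T s_j^2(t)\,dt. \tag{15.9}
$$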

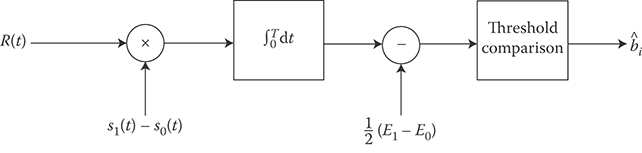

FIGURE 15.1 Optimal correlation receiver structure for binary communications.

FIGURE 15.2 Optimal matched filter receiver structure for binary communications. In this case h(t) = s1(T − t) − s0(T − t).

By applying Equation 15.9 to Equation 15.8, the final receiver structure is then given by

$$
\int_0^T R(t)\,[s_1(t) - s_0(t)]\,dt \underset{H_0}{\overset{H_1}{\gtrless}} \frac{N_0}{2}\ln\frac{\pi_0}{\pi_1} + \frac{1}{2}(E_1 - E_0), \tag{15.10}
$$

where E1 and E0 are the energies of signals s1(t) and s0(t), respectively. (See Figure 15.1 for a block diagram.) Importantly, if the signals are equally likely (π0 = π1), the optimal receiver is independent of the typically unknown spectral height of the background noise.

One can readily observe that the optimal binary communication receiver correlates the received waveform with the difference signal s1(t) – s0(t) and then compares the statistic to a threshold. This operation can be interpreted as identifying the signal waveform si(t) that best correlates with the received signal R(t). Based on this interpretation, the receiver is often referred to as the correlation receiver.

As an alternate means of implementing the correlation receiver, we may reformulate the computation of the left-hand side of Equation 15.10 in terms of standard concepts in filtering. Let h(t) be the impulse response of a linear, time-invariant (LTI) system. By letting h(t) = s1(T − t) − s0(T − t), it is easily verified that passing R(t) through an LTI system with impulse response h(t) and sampling the output at time t = T gives the desired result. (See Figure 15.2 for a block diagram.) Since the impulse response is matched to the signal waveforms, this implementation is often referred to as the matched filter receiver.
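The equivalence of the two implementations can be checked on sampled data. The sketch below is illustrative only: it assumes uniformly sampled waveforms, antipodal rectangular pulses as hypothetical example signals, a noise level chosen arbitrarily, and equally likely, equal-energy signals so that the decision threshold is zero. The function names are not taken from the text.

```python
import random

def correlate(r, s0, s1, dt):
    """Correlation statistic: integral of R(t) [s1(t) - s0(t)] over [0, T]."""
    return sum(rk * (a - b) for rk, a, b in zip(r, s1, s0)) * dt

def matched_filter_output(r, s0, s1, dt):
    """Filter R(t) with h(t) = s1(T - t) - s0(T - t) and sample the output at t = T."""
    n = len(r)
    # Time-reversed difference signal.
    h = [s1[n - 1 - k] - s0[n - 1 - k] for k in range(n)]
    # Discrete convolution evaluated at the final sample index (t = T).
    return sum(r[k] * h[n - 1 - k] for k in range(n)) * dt

rng = random.Random(0)           # fixed seed so the example is repeatable
n, T = 100, 1.0
dt = T / n
s0 = [-1.0] * n                  # hypothetical antipodal rectangular pulses
s1 = [1.0] * n
r = [s + rng.gauss(0.0, 0.5) for s in s1]   # bit value 1 sent, noise added

stat_corr = correlate(r, s0, s1, dt)
stat_mf = matched_filter_output(r, s0, s1, dt)

threshold = 0.0                  # equally likely, equal-energy signals
decision = 1 if stat_corr > threshold else 0
```

Both functions form the same sum of products, so the two statistics agree; the matched filter form is convenient when the receiver is built from filtering hardware or routines.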

15.5 Performance

On account of the nature of the statistics of the channel and the relative simplicity of the receiver, performance analysis of the optimal binary receiver in AWGN is a straightforward task. Since the conditional statistics of the log-likelihood ratio are Gaussian random variables, the probability of error for equally likely signals can be computed directly in terms of the Gaussian Q function* as

$$
P_e = Q\left(\frac{\|\mathbf{s}_0 - \mathbf{s}_1\|}{\sqrt{2N_0}}\right),
$$

where the si are the two-dimensional signal vectors obtained from Equation 15.4, and where ‖x‖ denotes the Euclidean length of the vector x. Thus, ‖s0 − s1‖ is best interpreted as the distance between the respective signal representations. Since the Q function is monotonically decreasing with an increasing argument, one may recognize that the probability of error for the optimal receiver decreases with an increasing separation between the signal representations, that is, the more dissimilar the signals, the lower the Pe.
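As a small numerical illustration, the error probability can be evaluated from the signal-space distance. This sketch assumes equally likely signals and the standard result Pe = Q(‖s0 − s1‖/√(2N0)) for AWGN of spectral height N0/2; the function names are hypothetical, and Q is computed from the complementary error function in the Python standard library.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x) = P(Z > x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def binary_pe(s0_vec, s1_vec, n0):
    """Pe of the optimal binary receiver for equally likely signals in AWGN."""
    distance = math.dist(s0_vec, s1_vec)   # Euclidean separation in signal space
    return q_function(distance / math.sqrt(2.0 * n0))

# Hypothetical signal vectors: the wider the separation, the smaller the Pe.
pe_close = binary_pe([0.0, 0.0], [1.0, 0.0], n0=1.0)
pe_far = binary_pe([-1.0, 0.0], [1.0, 0.0], n0=1.0)
```

Doubling the separation in this example lowers the error probability, in line with the monotonic behavior of the Q function noted above.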

15.6 Signal Space

The concept of a signal space allows one to view the signal classification problem (receiver design) within a geometrical framework. This offers two primary benefits: first it supplies an often more intuitive perspective on the receiver characteristics (e.g., performance) and second it allows for a straightforward generalization to standard M-ary signaling schemes.

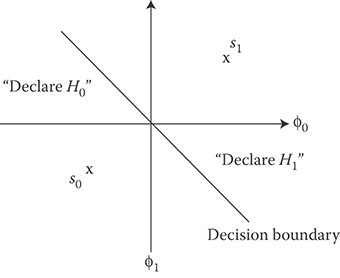

To demonstrate this, in Figure 15.3, we have plotted an arbitrary signal space for the binary signal classification problem. The axes are given in terms of the basis functions φ1(t) and φ2(t). Thus, every point in the signal space is a time function constructed as a linear combination of the two basis functions. By Fact 15.2, we recall that both signals s0(t) and s1(t) can be constructed as a linear combination of φ1(t) and φ2(t) and as such we may identify these two signals in this figure as two points.

Since the decision statistic given in Equation 15.8 is a linear function of the observed vector R which is also located in the signal space, it is easily shown that the set of vectors under which the receiver declares hypothesis Hi is bounded by a line in the signal space. This so-called decision boundary is obtained by solving the equation ln[L(R)] = 0. (Here again we have assumed equally likely hypotheses.) In the case under current discussion, this decision boundary is simply the hyperplane separating the two signals in signal space. Because of the generality of this formulation, many problems in communication system design are best cast in terms of the signal space, that is, signal locations and decision boundaries.

FIGURE 15.3 Signal space and decision boundary for optimal binary receiver.

*The Q function is the probability that a standard normal random variable exceeds a specified constant, that is,

$$
Q(x) = \int_x^{\infty} \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{z^2}{2}\right) dz.
$$

15.7 Standard Binary Signaling Schemes

The framework just described allows us to readily analyze the most popular signaling schemes in binary communications: amplitude-shift keying (ASK), frequency-shift keying (FSK), and phase-shift keying (PSK). Each of these examples simply constitutes a different selection for signals s0(t) and s1(t).

In the case of ASK, s0(t) = 0, while $s_1(t) = \sqrt{2E/T}\,\sin(2\pi f_c t)$, where E denotes the energy of the waveform and fc denotes the frequency of the carrier wave with fcT being an integer. Because s0(t) is the null signal, the signal space is a one-dimensional vector space with $\phi_1(t) = \sqrt{2/T}\,\sin(2\pi f_c t)$. This, in turn, implies that $\|\mathbf{s}_0 - \mathbf{s}_1\| = \sqrt{E}$. Thus, the corresponding probability of error for ASK is

$$
P_e(\text{ASK}) = Q\left(\sqrt{\frac{E}{2N_0}}\right).
$$

For FSK, the signals are given by equal-amplitude sinusoids with distinct center frequencies, that is, $s_i(t) = \sqrt{2E/T}\,\sin(2\pi f_i t)$ with fiT being two distinct integers. In this case, it is easily verified that the signal space is a two-dimensional vector space with $\phi_1(t) = \sqrt{2/T}\,\sin(2\pi f_0 t)$ and $\phi_2(t) = \sqrt{2/T}\,\sin(2\pi f_1 t)$, resulting in $\|\mathbf{s}_0 - \mathbf{s}_1\| = \sqrt{2E}$. The corresponding error rate is given by

$$
P_e(\text{FSK}) = Q\left(\sqrt{\frac{E}{N_0}}\right).
$$

Finally, with regard to PSK signaling, the most frequently utilized binary PSK signal set is an example of an antipodal signal set. Specifically, the antipodal signal set results in the greatest separation between the signals in the signal space subject to an energy constraint on both signals. This, in turn, translates into the energy-constrained signal set with the minimum Pe. In this case, the si(t) are typically given by $s_i(t) = \sqrt{2E/T}\,\sin[2\pi f_c t + \theta(i)]$, where θ(0) = 0 and θ(1) = π. As in the ASK case, this results in a one-dimensional signal space; however, in this case $\|\mathbf{s}_0 - \mathbf{s}_1\| = 2\sqrt{E}$, resulting in a probability of error given by

$$
P_e(\text{PSK}) = Q\left(\sqrt{\frac{2E}{N_0}}\right).
$$

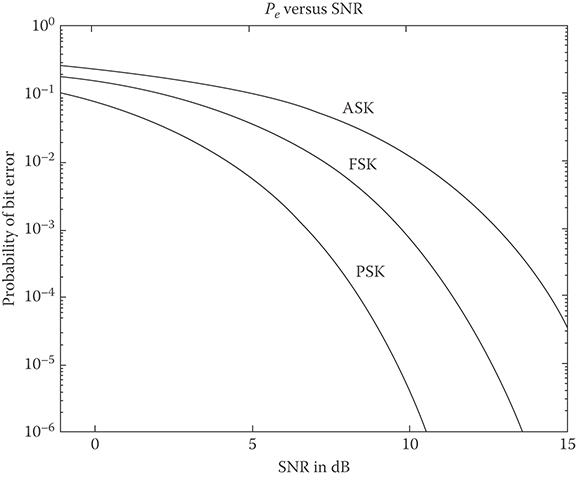

In all three of the described cases, one can readily observe that the resulting performance is a function of only the signal-to-noise ratio (SNR) E/N0. In the more general case, the performance will be a function of the intersignal energy-to-noise ratio. To gauge the relative difference in performance of the three signaling schemes, in Figure 15.4, we have plotted the Pe as a function of the SNR. Please note the large variation in performance between the three schemes for even moderate values of SNR.

FIGURE 15.4 Pe versus the signal to noise ratio in decibels [dB = 10 log(E/N0)] for amplitude-shift keying, frequency-shift keying, and phase-shift keying; note that there is a 3 dB difference in performance from ASK to FSK to PSK.
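The 3 dB spacing between the schemes can be reproduced with a few lines of code. This is a sketch under stated assumptions: equally likely signals, the standard distances ‖s0 − s1‖² = E, 2E, and 4E for coherent ASK, FSK, and PSK, and Pe = Q(‖s0 − s1‖/√(2N0)). The function name `pe_binary` is hypothetical.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def pe_binary(scheme, snr_db):
    """Pe versus E/N0 (in dB) from the signal-space distance of each scheme."""
    snr = 10.0 ** (snr_db / 10.0)   # E/N0 as a linear ratio
    # Squared distance between the two signals, in units of E.
    d2_over_e = {"ASK": 1.0, "FSK": 2.0, "PSK": 4.0}[scheme]
    return q_function(math.sqrt(d2_over_e * snr / 2.0))

# Tabulate the three error rates at a few SNR values.
for snr_db in (0, 5, 10):
    print(snr_db, [pe_binary(s, snr_db) for s in ("ASK", "FSK", "PSK")])
```

Since each step from ASK to FSK to PSK doubles the squared distance, a given Pe is reached with half the SNR, that is, about 3 dB less.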

15.8 M-ary Optimal Receivers

In binary signaling schemes, one seeks to transmit a single bit over the bit interval [0, T]. This is to be contrasted with M-ary signaling schemes where one transmits multiple bits simultaneously over the so-called symbol interval [0, T]. For example, using a signal set with 16 separate waveforms will allow one to transmit a length four-bit sequence per symbol (waveform). Examples of M-ary waveforms are quadrature phase-shift keying (QPSK) and quadrature amplitude modulation (QAM).

The derivation of the optimum receiver structure for M-ary signaling requires the straightforward application of fundamental results in detection theory. As with binary signaling, the Karhunen–Loève expansion is the mechanism utilized to convert a hypothesis testing problem based on continuous waveforms into a vector classification problem. Depending on the complexity of the M waveforms, the signal space can be as large as an M-dimensional vector space.

By extending results from the binary signaling case, it is easily shown that the optimum M-ary receiver computes

$$
\xi_i = \frac{N_0}{2}\ln \pi_i + \int_0^T R(t)\,s_i(t)\,dt - \frac{E_i}{2}, \qquad i = 1, \ldots, M,
$$

where, as before, the si(t) constitute the signal set with energies Ei, and the πi are the corresponding a priori probabilities. After computing M separate values of ξi, the minimum probability of error receiver simply chooses the largest amongst this set. Thus, the M-ary receiver is implemented with a bank of correlation or matched filters followed by choose-largest decision logic.

In many cases of practical importance, the signal sets are selected so that the resulting signal space is a two-dimensional vector space irrespective of the number of signals. This simplifies the receiver structure in that the sufficient statistics are obtained by implementing only two matched filters. Both QPSK and QAM signal sets fit into this category. As an example, in Figure 15.5, we have depicted the signal locations for standard 16-QAM signaling with the associated decision boundaries. In this case we have assumed an equally likely signal set. As can be seen, the optimal decision rule selects the signal representation that is closest to the received signal representation in this two-dimensional signal space.

FIGURE 15.5 Signal space representation of 16-QAM signal set. Optimal decision regions for equally likely signals are also noted.
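The choose-largest rule and its nearest-neighbor interpretation can be sketched in signal space. The example assumes the statistic (N0/2) ln πi + ⟨R, si⟩ − Ei/2 evaluated on two-dimensional signal vectors, and a hypothetical 16-QAM constellation with coordinates drawn from {−3, −1, 1, 3}; the scaling, the received point, and the function name are illustrative assumptions.

```python
import math
from itertools import product

def mary_decide(r, signals, priors, n0):
    """Return the index i that maximizes (N0/2) ln(pi_i) + <r, s_i> - E_i / 2."""
    def xi(i):
        s = signals[i]
        corr = sum(a * b for a, b in zip(r, s))   # correlation <r, s_i>
        energy = sum(a * a for a in s)            # signal energy E_i
        return 0.5 * n0 * math.log(priors[i]) + corr - 0.5 * energy
    return max(range(len(signals)), key=xi)

# Hypothetical 16-QAM constellation with equally likely signals.
levels = (-3.0, -1.0, 1.0, 3.0)
qam16 = [(i, q) for i, q in product(levels, levels)]
priors = [1.0 / 16] * 16

r = (0.8, -2.6)   # hypothetical received point in signal space
decided = qam16[mary_decide(r, qam16, priors, n0=1.0)]

# For equally likely signals the rule reduces to picking the closest point.
nearest = min(qam16, key=lambda s: math.dist(r, s))
```

With equal priors the logarithmic term is common to all candidates, so the decision coincides with the minimum-distance choice that defines the decision regions in Figure 15.5.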

15.9 More Realistic Channels

As is unfortunately often the case, many channels of practical interest are not accurately modeled as a simple AWGN channel. Often these channels impose nonlinear effects on the transmitted signals. The best example of this is a channel that imposes a random phase and a random amplitude onto the signal. This typically occurs in applications such as mobile communications, where one often experiences rapidly changing path lengths from source to receiver.

Fortunately, by the judicious choice of signal waveforms, it can be shown that the selection of the φi in the Karhunen–Loève transformation is often independent of these unwanted parameters. In these situations, the random amplitude serves only to scale the signals in signal space, whereas the random phase simply imposes a rotation on the signals in signal space.

Since the Karhunen–Loève basis functions typically do not depend on the unknown parameters, we may again convert the continuous-time classification problem to a vector channel problem where the received vector R is computed as in Equation 15.3. Since this vector is a function of both the unknown parameters (i.e., in this case amplitude A and phase ν), to obtain a likelihood ratio test independent of A and ν, we simply apply Bayes' theorem to obtain the following form for the LRT:

$$
L(\mathbf{R}) = \frac{E\left[p_{\mathbf{R} \mid H_1, A, \nu}(\mathbf{R} \mid H_1, A, \nu)\right]}{E\left[p_{\mathbf{R} \mid H_0, A, \nu}(\mathbf{R} \mid H_0, A, \nu)\right]} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{\pi_0}{\pi_1},
$$

where the expectations are taken with respect to A and ν, and where pR|Hi, A, ν are the conditional probability density functions of the signal representations. Assuming that the background noise is AWGN, it can be shown that the LRT simplifies to choosing the largest amongst

$$
\xi_i(\mathbf{R}) = \pi_i \int\!\!\int \exp\left\{\frac{2}{N_0}\int_0^T R(t)\,s_i(t \mid A, \nu)\,dt - \frac{E_i(A, \nu)}{N_0}\right\} p_{A,\nu}(A, \nu)\,dA\,d\nu, \qquad i = 1, \ldots, M. \tag{15.11}
$$

It should be noted that in Equation 15.11 we have explicitly shown the dependence of the transmitted signals si on the parameters A and ν. The final receiver structures, together with their corresponding performance, are thus a function of both the choice of signal sets and the probability density functions of the random amplitude and random phase.

15.9.1 Random Phase Channels

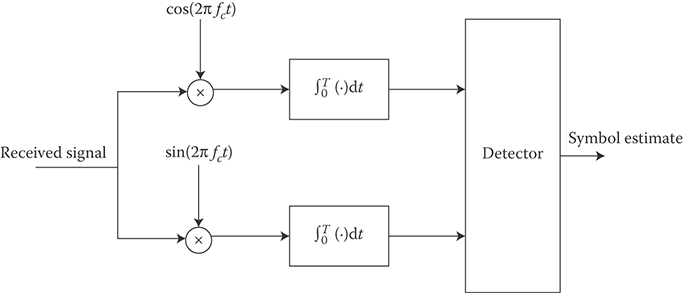

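If we consider first the special case where the channel simply imposes a uniform random phase on the signal, then it can be easily shown that the so-called in-phase and quadrature statistics obtained from the received signal R(t) (denoted by RI and RQ, respectively) are sufficient statistics for the signal classification problem. These quantities are computed as

$$
R_I(i) = \int_0^T R(t)\cos(2\pi f_i t)\,dt
$$

and

$$
R_Q(i) = \int_0^T R(t)\sin(2\pi f_i t)\,dt,
$$

where in this case the index i corresponds to the center frequencies of hypotheses Hi (e.g., FSK signaling). As in Figure 15.6, the optimum binary receiver selects the largest from amongst

$$
\xi_i(\mathbf{R}) = \pi_i \exp\left(-\frac{E_i}{N_0}\right) I_0\left[\frac{2}{N_0}\sqrt{\frac{2E_i}{T}}\,\sqrt{R_I^2(i) + R_Q^2(i)}\right], \qquad i = 0, 1,
$$

where I0 is the zeroth-order modified Bessel function of the first kind and the signals are taken to be sinusoids of amplitude $\sqrt{2E_i/T}$. If the signals have equal energy and are equally likely (e.g., FSK signaling), then the optimum receiver is given by

$$
\sqrt{R_I^2(1) + R_Q^2(1)} \underset{H_0}{\overset{H_1}{\gtrless}} \sqrt{R_I^2(0) + R_Q^2(0)}.
$$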

FIGURE 15.6 Optimum receiver structure for noncoherent (random or unknown phase) ASK demodulation.

One may readily observe that the optimum receiver bases its decision on the values of the two envelopes of the received signal and, as a consequence, is often referred to as an envelope or square-law detector. Moreover, it should be observed that the computation of the envelope is independent of the underlying phase of the signal and is as such known as a noncoherent receiver.

The computation of the error rate for this detector is a relatively straightforward exercise resulting in

$$
P_e = \frac{1}{2}\exp\left(-\frac{E}{2N_0}\right).
$$

As before, note that the error rate for the noncoherent receiver is simply a function of the SNR.
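The phase independence of the envelope statistic can be checked numerically. The sketch below assumes sampled in-phase and quadrature correlations against cosine and sine references at each tone frequency, two hypothetical tones with an integer number of cycles per symbol, a noise-free received waveform, and the standard noncoherent orthogonal FSK error rate Pe = ½ exp(−E/2N0). All names and numerical values are illustrative.

```python
import math

def envelope(r, f, dt):
    """Envelope sqrt(R_I^2 + R_Q^2) of the sampled waveform r at frequency f."""
    r_i = sum(rk * math.cos(2 * math.pi * f * k * dt) for k, rk in enumerate(r)) * dt
    r_q = sum(rk * math.sin(2 * math.pi * f * k * dt) for k, rk in enumerate(r)) * dt
    return math.hypot(r_i, r_q)

def noncoherent_fsk_decide(r, f0, f1, dt):
    """Choose the hypothesis whose tone produces the larger envelope."""
    return 1 if envelope(r, f1, dt) > envelope(r, f0, dt) else 0

def noncoherent_fsk_pe(e_over_n0):
    """Error rate of the envelope detector as a function of the linear ratio E/N0."""
    return 0.5 * math.exp(-e_over_n0 / 2.0)

T, n = 1.0, 1000
dt = T / n
f0, f1 = 5.0, 8.0   # hypothetical tones; f0*T and f1*T are distinct integers

def tone(f, phase):
    """Unit-amplitude sinusoid at frequency f with an unknown phase offset."""
    return [math.cos(2 * math.pi * f * k * dt + phase) for k in range(n)]

r = tone(f1, 1.234)   # bit value 1 sent with an arbitrary phase, no noise
decision = noncoherent_fsk_decide(r, f0, f1, dt)
```

The envelope at the transmitted tone equals T/2 for any phase offset, while the envelope at the other tone vanishes, which is why no phase estimate is needed.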

15.9.2 Rayleigh Channel

As an important generalization of the described random phase channel, many communication systems are designed under the assumption that the channel introduces both a random amplitude and a random phase on the signal. Specifically, if the original signal sets are of the form si(t) = mi(t) cos(2πfct), where mi(t) is the baseband version of the message (i.e., what distinguishes one signal from another), then the so-called Rayleigh channel introduces random distortion in the received signal of the following form:

$$
R(t) = A\,m_i(t)\cos(2\pi f_c t + \nu) + \eta(t),
$$

where the amplitude A is a Rayleigh random variable* and where the random phase ν is uniformly distributed between zero and 2π.

*The density of a Rayleigh random variable is given by $p_A(a) = (a/\sigma^2)\exp(-a^2/2\sigma^2)$ for a ≥ 0.

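To determine the optimal receiver under this distortion, we must first construct an alternate statistical model for si(t). To begin, it can be shown from the theory of random variables [4] that if XI and XQ are statistically independent, zero-mean, Gaussian random variables with variance given by σ², then

$$
A\,m_i(t)\cos(2\pi f_c t + \nu) = m_i(t)\,X_I\cos(2\pi f_c t) + m_i(t)\,X_Q\sin(2\pi f_c t).
$$

Equality here is to be interpreted as implying that both A and ν will be the appropriate random variables. From this, we deduce that the combined uncertainty in the amplitude and phase of the signal is incorporated into the Gaussian random variables XI and XQ. The in-phase and quadrature components of the signal si(t) are given by $s_I^i(t) = m_i(t)\cos(2\pi f_c t)$ and $s_Q^i(t) = m_i(t)\sin(2\pi f_c t)$, respectively. By appealing to Equation 15.11, it can be shown that the optimum receiver selects the largest from

$$
\xi_i(R) = \frac{\pi_i}{1 + 2E_i\sigma^2/N_0}\,\exp\left\{\frac{2\sigma^2\left[\langle R(t), s_I^i(t)\rangle^2 + \langle R(t), s_Q^i(t)\rangle^2\right]}{N_0\,(N_0 + 2E_i\sigma^2)}\right\},
$$

where Ei is the energy of each of the two components (taken to be orthogonal with equal energy), and where the inner product

$$
\langle R(t), s_i(t)\rangle = \int_0^T R(t)\,s_i(t)\,dt.
$$

Further, if we impose the conditions that the signals be equally likely with equal energy over the symbol interval, then the optimum receiver selects the largest amongst

$$
\xi_i(R) = \langle R(t), s_I^i(t)\rangle^2 + \langle R(t), s_Q^i(t)\rangle^2.
$$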

Thus, much like for the random phase channel, the optimum receiver for the Rayleigh channel computes the projection of the received waveform onto the in-phase and quadrature components of the hypothetical signals. From a signal space perspective, this is akin to computing the length of the received vector in the subspace spanned by the hypothetical signal. The optimum receiver then chooses the largest amongst these lengths.

As with the random phase channel, computing the performance is a straightforward task resulting in (for the equally likely, equal energy case)

$$
P_e = \frac{1}{2}\left(1 + \frac{E\sigma^2}{N_0}\right)^{-1}.
$$

Interestingly, in this case the performance depends not only on the SNR, but also on the variance (spread) of the Rayleigh amplitude A. Thus, if the amplitude spread is large, we expect to often experience what is known as deep fades in the amplitude of the received waveform and as such expect a commensurate loss in performance.
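One way to see the effect of fading is to average the noncoherent error rate over the Rayleigh amplitude. The sketch below is an illustration under stated assumptions: orthogonal, equally likely, equal-energy signals, a conditional error rate of ½ exp(−a²E/2N0) for a fixed amplitude a, and the standard closed form Pe = ½ (1 + Eσ²/N0)⁻¹. The integration limit, step count, and function names are arbitrary choices.

```python
import math

def noncoherent_pe(e_over_n0):
    """Noncoherent error rate for a fixed received energy-to-noise ratio."""
    return 0.5 * math.exp(-e_over_n0 / 2.0)

def rayleigh_pdf(a, sigma2):
    """Rayleigh density (a / sigma^2) exp(-a^2 / (2 sigma^2)) for a >= 0."""
    return (a / sigma2) * math.exp(-a * a / (2.0 * sigma2))

def rayleigh_pe_closed_form(e_over_n0, sigma2):
    """Closed-form error rate on the Rayleigh channel."""
    return 0.5 / (1.0 + e_over_n0 * sigma2)

def rayleigh_pe_numeric(e_over_n0, sigma2, steps=20000):
    """Average the conditional error rate over the fade amplitude (midpoint rule)."""
    a_max = 12.0 * math.sqrt(sigma2)   # the density is negligible beyond this point
    da = a_max / steps
    total = 0.0
    for k in range(steps):
        a = (k + 0.5) * da
        # A fade of amplitude a scales the received energy by a^2.
        total += noncoherent_pe(a * a * e_over_n0) * rayleigh_pdf(a, sigma2) * da
    return total

closed = rayleigh_pe_closed_form(10.0, 0.5)
numeric = rayleigh_pe_numeric(10.0, 0.5)
no_fading = noncoherent_pe(10.0)   # same average received energy, fixed amplitude
```

The fade-averaged error rate decays only inversely with the SNR, whereas the unfaded error rate decays exponentially, which reflects the loss caused by deep fades.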

15.10 Dispersive Channels

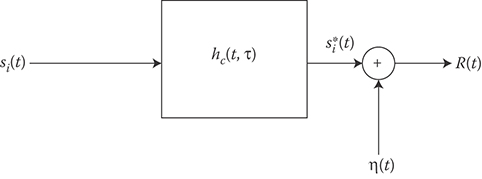

The dispersive channel model assumes that the channel not only introduces AWGN but also distorts the signal through a filtering process. This model incorporates physical realities such as multipath effects and frequency selective fading. In particular, the standard model adopted is depicted in the block diagram given in Figure 15.7. As can be seen, the receiver observes a filtered version of the signal plus AWGN. If the impulse response of the channel is known, then we arrive at the optimum receiver design by applying the previously presented theory. Unfortunately, the duration of the filtered signal can be a complicating factor. More often than not, the channel will increase the duration of the transmitted signals, hence, leading to the description, dispersive channel.

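However, if the designers take this into account by shortening the duration of si(t) so that the duration of the filtered signal $\tilde{s}_i(t)$, that is, the response of the channel to si(t), is less than T, then the optimum receiver chooses the largest amongst

$$
\xi_i(R) = \frac{N_0}{2}\ln \pi_i + \int_0^T R(t)\,\tilde{s}_i(t)\,dt - \frac{\tilde{E}_i}{2},
$$

where $\tilde{E}_i$ is the energy of $\tilde{s}_i(t)$. If we limit our consideration to equally likely binary signal sets, then the minimum Pe receiver matches the received waveform to the filtered versions of the signal waveforms. The resulting error rate is given by

$$
P_e = Q\left(\frac{\|\tilde{\mathbf{s}}_0 - \tilde{\mathbf{s}}_1\|}{\sqrt{2N_0}}\right).
$$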

Thus, in this case the minimum Pe is a function of the separation of the filtered version of the signals in the signal space.
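The dependence on the filtered signals can be illustrated with a sampled channel model. This sketch assumes a known, time-invariant channel impulse response, equally likely signals, and Pe = Q(d/√(2N0)) with d the distance between the filtered signals. The two-path channel, the pulse shapes, and the function names are hypothetical, and a unit impulse is approximated by a single sample of height 1/dt.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def convolve(x, h, dt):
    """Sampled approximation of the convolution of x(t) with h(t)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, x_i in enumerate(x):
        for j, h_j in enumerate(h):
            y[i + j] += x_i * h_j * dt
    return y

def dispersive_pe(s0, s1, h, dt, n0):
    """Pe for equally likely binary signals observed through a known channel h."""
    f0 = convolve(s0, h, dt)
    f1 = convolve(s1, h, dt)
    d2 = sum((a - b) ** 2 for a, b in zip(f0, f1)) * dt   # squared distance
    return q_function(math.sqrt(d2 / (2.0 * n0)))

dt = 0.01
s0 = [-1.0] * 50            # antipodal pulses of duration 0.5 within T = 1
s1 = [1.0] * 50

ideal = [1.0 / dt]          # distortionless channel: a unit impulse
two_path = [0.0] * 21       # direct path plus an echo delayed by 0.2
two_path[0] = 1.0 / dt
two_path[20] = 0.5 / dt

pe_ideal = dispersive_pe(s0, s1, ideal, dt, n0=1.0)
pe_two_path = dispersive_pe(s0, s1, two_path, dt, n0=1.0)
```

In this example the filtered pulses last 0.7, which is still shorter than the symbol interval, so no intersymbol interference arises and the error rate is governed by the separation of the filtered signals alone.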

The problem becomes substantially more complex if we cannot ensure that the filtered signal durations are less than the symbol lengths. In this case we experience what is known as intersymbol interference (ISI). That is, observations over one symbol interval contain not only the symbol information of interest but also information from previous symbols. In this case we must appeal to optimum sequence estimation [5] to take full advantage of the information in the waveform. The basis for this procedure is the maximization of the joint likelihood function conditioned on the sequence of symbols. This procedure not only defines the structure of the optimum receiver under ISI but also is critical in the decoding of convolutional codes and coded modulation. Alternate adaptive techniques to solve this problem involve the use of channel equalization.

FIGURE 15.7 Standard model for dispersive channel. The time-varying impulse response of the channel is denoted by hc(t, τ).

References

1. Gibson, J. D., Principles of Digital and Analog Communications, 2nd ed. New York: Macmillan. 1993.

2. Haykin, S., Communication Systems, 3rd ed. New York: John Wiley & Sons. 1994.

3. Lee, E. A. and Messerschmitt, D. G., Digital Communication. Norwell, MA: Kluwer Academic Publishers. 1988.

4. Papoulis, A., Probability, Random Variables, and Stochastic Processes, 3rd ed. New York: McGraw-Hill. 1991.

5. Poor, H. V., An Introduction to Signal Detection and Estimation. New York: Springer-Verlag. 1988.

6. Proakis, J. G., Digital Communications, 2nd ed. New York: McGraw-Hill. 1989.

7. Shiryayev, A. N., Probability. New York: Springer-Verlag. 1984.

8. Sklar, B., Digital Communications, Fundamentals and Applications. Englewood Cliffs, NJ: Prentice Hall. 1988.

9. Van Trees, H. L., Detection, Estimation, and Modulation Theory, Part I. New York: John Wiley & Sons. 1968.

10. Wong, E. and Hajek, B., Stochastic Processes in Engineering Systems. New York: Springer-Verlag. 1985.

11. Wozencraft, J. M. and Jacobs, I., Principles of Communication Engineering (Reissue), Prospect Heights, Illinois: Waveland Press. 1990.

12. Ziemer, R. E. and Peterson, R. L., Introduction to Digital Communication, New York: Macmillan. 1992.

Further Reading

The fundamentals of receiver design were put in place by Wozencraft and Jacobs in their seminal book. Since that time, there have been many outstanding textbooks in this area. For a sampling see [1,2,3,8,12]. For a complete treatment on the use and application of detection theory in communications see [5,9]. For deeper insights into the Karhunen–Loève expansion and its use in communications and signal processing see [10].