Chapter 5

Embedded Processor Architecture

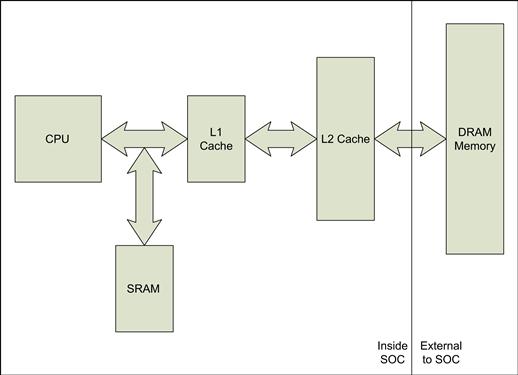

How do we go about classifying an embedded processor? The traditional embedded CPU market is generally divided into microprocessors, microcontrollers, and digital signal processors. Microcontrollers are typically 8-/16-bit processors with a number of input/output (I/O) peripherals, and they are usually self-sufficient in terms of ROM and RAM. Microprocessors, on the other hand, traditionally comprise a 32-bit processor with a number of onboard peripherals. As the transistor continues its reduction in size every year, it has become more and more cost-effective to develop large-scale System-On-Chip (SOC) devices. The majority of SOCs today contain a 32-bit microprocessor integrated with a broad range of additional capabilities alongside the processor. The ability to expand the capabilities of an SOC by integrating industry standard interfaces is an important attribute of developing embedded systems.

The embedded industry currently uses 8-, 16-, and 32-bit processors, continually evolving the performance and applications supported. As applications have steadily evolved well beyond simple control functions, there is a significant migration to 32-bit microprocessor SOCs, as a result we will focus its attention on 32-bit microprocessor based systems. The capabilities of the processors used in the embedded market have typically lagged behind those found in the mainstream processors’ environments. As embedded applications have become more and more complex, requiring higher performance and using larger data sets, this lag is quickly diminishing.

A number of embedded applications incorporate digital signal processing (DSP) algorithms. Consequently, a number of embedded microprocessors incorporate capabilities that are optimized for DSP algorithms. In this book, we discuss processor extensions that are designed to support DSP operations (Chapter 11), but coverage of discrete digital signal processor devices is beyond the scope of this work.

This chapter covers a range of concepts associated with the processor within the SOC, with a focus on topics that are of particular interest when developing embedded systems. Where examples are required we make reference to the Intel® Atom™ processor, and where appropriate make comparisons to other embedded processor architectures.

Basic Execution Environment

All processors provide a register set for use by the programmer. We consider a subset of the registers in a processor to be architecturally defined. Architecturally defined registers are persistent across many specific implementations and generations of the architecture.

All processors provide a general-purpose register file—these registers hold the data to be operated on by the processor. Processors provide a rich set of instructors to operate on these registers; the most basic functions that can be performed are loading to and from memory and logical and arithmetic operations.

The register file on some processors such as ARM™ and PowerPC™ is completely generalized, and most registers can be used as source or destination operators in any of the instructions, whereas other processors impose limitations on the registers that can be used in a subset of the instructions.

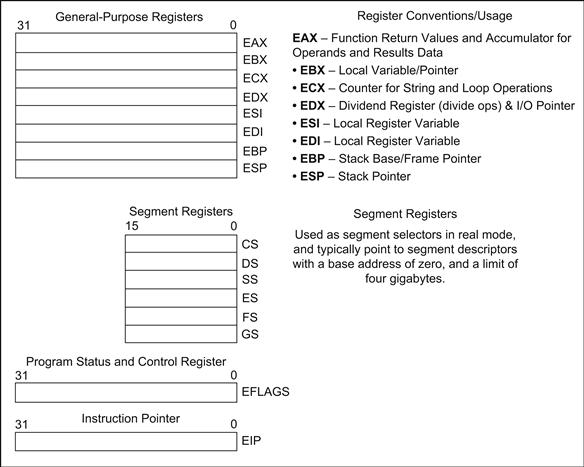

The Intel processors provide eight general-purpose registers and six segment registers. The general-purpose registers are known as EAX, EBX, ECX, EDX, ESI, EDI, EBP, and ESP. Although all registers are considered general purpose, some instructions are limited to act on a subset of the registers.

The general-purpose registers are either 32 bits or 64 bits wide, 32 bits when the CPU is configured in IA32 mode and 64 bits in EM64T mode. We will concentrate on 32-bit operation in this chapter, as at the time of writing it is the most widely deployed configuration for Atom-based processors. On Intel processors, naming conventions are used to access the registers in 16- and 8-bit modes. The naming conventions used by mnemonics to access portions of the 32-bit register are shown in Figure 5.1.

FIGURE 5.1 Intel Architecture Register Naming in 32-Bit Mode.

Each processor architecture and programming language has conventions that assign specific uses for particular registers. The general-purpose registers can attain a specific meaning for many reasons, perhaps because the operation is particularly fast with the designated register or more commonly through conventions established by high-level languages. Conventions established by high-level languages are known as application binary interfaces (ABIs). The ABI is a specification (sometimes de facto) that describes which registers are used to pass function variables, manage the software stack, and return values. The ABIs are always architecture specific and often also operating system dependent. As an embedded systems programmer, it’s quite beneficial to know these conventions for your target architecture/operating system/language combination. We describe ABIs in more detail in the section “Application Binary Interface” later in this chapter.

The segment registers on Intel architecture processors are worth a brief discussion. Both the Intel architecture and the operating systems that run on it have evolved over many years. This evolution has allowed the Intel architecture to keep pace with advances in software architectures and best in class operating system design, moving from 16-bit single-thread real-memory models under MS-DOS to multi-threaded protected mode 32- and 64-bit applications under a variety of modern operating system kernels, such as Linux. Features such as segmented memory solved the need of 16-bit applications to access more memory than a single 16-bit register could address. Segment registers were first introduced to allow the generation of a linear address greater than 16 bits. The segment register was shifted and added to a 16-bit register to generate a logical address greater than 16 bits. Intel processors provide three primary modes of operation, namely, Flat, Segmented, and Real 8086 modes. However, today the predominant configuration mode of the processor is the Flat Memory mode. In this mode a selection of bits within the segment registers provide indexes into a table, which selects a segment descriptor. The segment descriptor then provides a base address for the segment. The base address is added to the contents of the register to create the linear address for the memory reference. The segment base addresses are configured by the operating system; they are set to zero for Linux User Space, Linux™ kernel space, and VxWorks™. Some operating systems (such as Linux) use the FS and GS segment registers to access application thread-specific data or OS-specific data in the kernel. The GCC compiler may use GS to implement a runtime stack protector or as a base pointer for Thread Local Storage (TLS).

The key point is that for most Intel environments today you don’t have to worry about the segmentation model to any great extent, as most environments have migrated to a linear 32-bit flat memory model.

In addition to the general-purpose register file, the processor maintains an instruction pointer, flags, stack, and frame pointers:

• Instruction pointer. All processors provide a register that contains the current instruction pointer. The instruction counter is mostly maintained by the processor and updated automatically as the processor executes instructions. The program flow may be altered from its normally increasing (words) sequence through call, branch, conditional instructions, exceptions, or interrupts. On some architecture such as ARM, instructions are provided to directly alter the instruction pointer itself.

• The instruction pointer on Intel architecture is called the Extended Instruction Pointer (EIP). The EIP is a 32-bit register unless the processor is in one of its extended 64-bit modes. There are no instructions that can be used to directly read or write to the EIP. The EIP can only be modified using instructions such as JMP (jump to an address), JMPcc (conditionally jump to an address), CALL (call a procedure), RET (return from a procedure), and IRET (return from an interrupt or exception).

• Flags. All processors provide a register that contain a set of flags. The bits within a flag register on a processor can usually be broken down into two groups. The first are status flags. Status flags are updated as a result of an instruction that operates on one of the general-purpose registers. Examples of instructions that update the status flags are ADD, SUB, and MUL. Status flags can be used by subsequent instructions to change the flow of the program, for example, to branch to a new program address if the zero flag bit is set. Most processors offer a similar set of status flags, sometimes known as condition codes. Intel processors provide the following status flags: Carry, Parity, BCD Carry/Borrow, Zero, Sign, and Overflow.

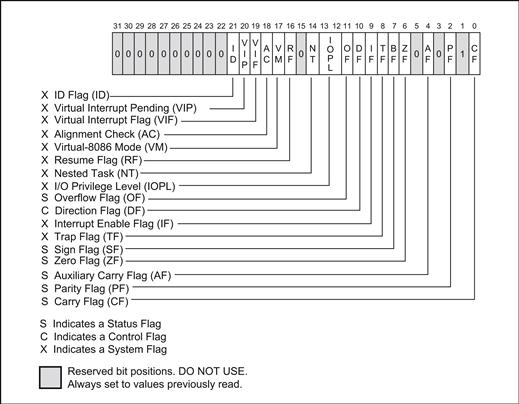

• Along with the status flags, processors usually have a number of additional bits providing information to the programmer and operating system; these bits are usually specific to the processor architecture. They typically provide information on the privilege level of the processor, a global mask for interrupts, and other bits used to control the behavior of the processor for debugging, legacy support, and so on. The full list of flags in the EFLAGS register is shown in Figure 5.2.

• Stack pointer. In higher-level languages such as C, The program stacks are created in system memory. The stack can used to store local variables, allocate storage, and pass function arguments. It is typical for processors to provide dedicated registers to hold the stack pointer and specific instructions to manipulate the stack. The Intel processor provides PUSH and POP instructions, which operate on the ESP register. Depending on the processor architecture, stacks grow up or grow down as entries are added; which way they grow is a largely arbitrary and up to the architecture creators. In Intel processors, the stack frame grows down.

• Base pointer. In conjunction with the stack pointer, higher-level languages create what’s often known as a stack frame. The base pointer allows the program to manage the function calling hierarchy on the stack. Using a memory dump of the program stack, the base pointer, and stack pointer, you can identify the calling sequence of function calls that occurred before the current function. Some processors provide a dedicated base register, and others just define a specific register by ABI convention. The Intel processor ABIs designate the EBP register as the base pointer. The base pointer is also referred to as a frame pointer in other architectures.

FIGURE 5.2 Intel Architecture EFLAGS Register.

Figure 5.3 brings together the basic view described above and adds some conventions for registers use cases.

FIGURE 5.3 Basic 32-Bit Execution Environment.

Privilege Levels

All modern embedded processors provide a mechanism to allow portions of the software to operate with differing levels of privilege. The current privilege level is used by the system to control access to resources and execution of certain instructions. The number and specific use of privilege levels are architecture specific, but most architectures support a minimum of two privilege levels. The highest privilege level is usually reserved for the operating system. User programs and applications typically run with a lower privilege level. The use of privilege levels increases the robustness of the system by reducing the ability of an application to interfere with system-wide resources. For example, the ability to disable interrupts is a privileged instruction and ideally not accessible directly by an application.

Intel processors provide four privilege levels, although in practice level zero and level three are predominantly used. The current privilege level (CPL) of the processor is stored in the lowest 2 bits of the code segment selector (CS). The highest privilege level is number zero. This level is commonly known as Kernel Mode for Linux and Ring 0 for Windows-based operating systems. A CPL of three is used for user space programs in both Linux and Windows.

Many processors simply grant privileges to execute system level instructions or access system level resources by being at a privileged supervisor level. However, on Intel architecture processors some further details are required to understand how an Intel processor decides whether an operation is allowed. Whether an instruction has sufficient privilege to perform a specific operation is established by comparing the CPL to the active I/O Privilege Level (IOPL) The IOPL is stored in bits 12 and 13 of the FLAGS register and its value is controlled by the operating system. If the CPL is less than the current IOPL, then the privileged operation is allowed; if greater than or equal to IOPL, then a privileged operation will fail. On an Intel processor the CPL value is stored in the low 2 bits of the Code Segment register. Most operating systems set the IOPL value to three, thus having a CPL value of three corresponds to the lowest privilege level allowed in the system, and a CPL value of zero is the highest privilege level. As an embedded systems programmer, you might often require direct access to a hardware resource from your application. Most operating systems provide a mechanism to alter the privilege level, and a root privileged application, for example, can call the IOPL() function on Linux to altar the IOPL flags. To increase the security of your application you should minimize the time where the code executes with higher privilege level, and you should not run the entire application with increased privileges.

Floating-Point Units

Floating-point mathematical operations are required in a wide range of embedded applications, such as control systems and digital signal analysis. Where an application does require floating point, the performance targets often dictate the need for a hardware-based floating-point unit. Most embedded processor SOCs offer a version that provides hardware-based floating point. A key attribute of a floating point acceleration function associated with the processor is whether the floating-point unit is compliant with the IEEE Standard 754 for Binary Floating-Point Arithmetic. The precision of the floating-point unit (single/double) is also an important attribute in developing the floating-point algorithms.

Intel processors have two floating-point units. The first and probably best known is the x87 Floating-Point Unit (FPU). The x87 FPU instructions operate on floating-point, integer, and binary-coded decimal (BCD) operands. It supports 80-bit precision, double extended floating-point. The FPU operates as a coprocessor to the instruction unit. The FPU instructions are submitted to the FPU and the scalar (main processor) pipeline continues to run in parallel. To maximize overall application performance it is important to ensure that the processor’s main execution flow can perform useful work while the FPU performs the floating-point operations. To that end it is usually best to use a compiler to generate the target code to ensure efficient instruction scheduling.

Not all floating-point operations can be completed; for example, dividing a number by zero results in a floating-point fault. The following floating-point operations result in faults: all invalid operations, for example, square root of a negative number, overflow, underflow, and inexact result. The operating system provides an exception handler to handle the floating-point fault exceptions. In the case of Linux, the kernel catches the fault and sends a user space signal (SIGFPE, signal floating-point exception). The application will be terminated unless the application has chosen to handle the exceptions. The C language specification as defined by the ISO C99 (ISO/IEC 9899:1999) standardizes a number of functions to control the behavior of floating-point rounding and exception handling. One such function is fegetexceptflag().

Intel processors also have a Single Instruction Multiple Data (SIMD) execution engine. The Intel Atom processor supports the Supplemental Streaming SIMD Extensions 3 (SSSE3) version of the SIMD instructions, which support integer, single, and double precession floating-point units.

The Intel Atom processor has a rich set of floating-point coprocessor capabilities. As a result, a particular algorithm could be implemented in a number of ways. The trade-offs for the use of each floating-point unit for a particular operation are described in Chapter 11, “Digital Signal Processing.”

The floating-point units are resources that contain a number of registers and some current state information. When different software threads wish to use the resources, the kernel software may have to save and restore the registers and all state information during operating system context switches. The Intel processor provides FXSAVE and FXRSTOR to save and restore the required state and register information of FP and SSE units. These operations can be costly if performed on every task transition, so Intel processors provide a mechanism to help the kernel identify whether an FPU was actually used by a particular process. The TS flag in the control register zero (CS0.TS) provides an indication that the floating point unit has been used. The kernel can clear the value when it performs a context switch, and check if the bit has been set during the execution of the process (indicating the process used the FP unit). The operating system can be configured to save the registers and state on transition from a thread that used the resource or alternatively raise an exception when a new thread attempts to use the resource after a previous thread has used it. If the real-time behavior of your FP/SSE code is important, you should look into the detailed operating system behavior. You may have to take special steps if you want to use floating-point or SSE units from within the kernel. For example, in Linux you have to call kernel_fpu_begin() and kernel_fpu_end() around the code that uses the FP/SSE units. This will save and restore the required state.

Processor Specifics

Practically all embedded processors have been in existence for a number of generations, though none of the existing embedded processors quite match the longevity of the Intel architecture. As each product generation evolves, new capabilities are introduced, and as an embedded systems developer you will need to establish exactly which features are supported on the particular version you are working with. Having mechanisms to identity product capabilities at runtime facilitates the development of code that will run on a number of different generations of the part without modification. The information is typically provided by special registers that are accessed via dedicated instructions. For example, on ARM platforms, there are a number of co-processor registers one of which is the System Control co-Processor. The system control coprocessor is known as CP15 and provides information and controls the processor behavior. On Intel platforms, a number of control/information registers, CR0, CR1, CR2, CR3, and CR4, and a special instruction (CPUID) are available. The CPUID instruction and registers describe capabilities of the processor and also provide some current processor state information. The CPUID instruction provides detailed information on the executing processor. The output from the CPUID instruction is dependent upon the contents of the EAX register; by placing different values in the EAX register and then executing the CPUID instruction, the CPUID instruction performs the function defined by the EAX value. The CPUID instruction returns the requested values in the EAX/EBX/EDX and ECX registers. The code segment below uses GCC compiler inline assembly code to dump all the CPUID values available on an Intel Atom platform.

long maxCpuId=0 , long cpuid;

long eax = 0, ebx = 0, ecx=0, edx=0;

eax = 0; /∗ Get the maximum CPUID range ∗/

/∗

∗ Input:Loads eax input register with eax variable value

∗ Call CPUID instrucion

∗ Output:Ensure EAX/EBX/EXC and EDX registers are mapped

∗ the variables with the corresponding name.

∗/

__asm__ __volatile__

("CPUID"

:"=a"(eax), "=b"(ebx), "=c"(ecx), "=d"(edx)

:"a"(eax)

);

maxCpuId=eax; /∗ Get the maximum value for EAX∗/

for ( cpuid=0; cpuid <= maxCpuId ; ++cpuid)

{

eax = cpuid;

__asm__ __volatile__("CPUID"

:"=a"(eax), "=b"(ebx), "=c"(ecx), "=d"(edx)

:"a"(eax)

);

printf("CPUID [%2X] = %08X, %08X, %08X, %08X ",cpuid, eax,ebx,ecx,edx);

}

The code above displays the various CPUID values, which can be decoded to provide detailed information about the processor. A good example of the type of information provided is the processor feature list. This is provided by calling CPUID with an EAX value of 01h. The processor features capabilities listed on an Atom processor returned the following values: EDX[31:0] = 0xBFE9FBFF and ECX[31:0] = 0x0040C3BD. Decoding the bits set in EDX/ECX indicates the following feature set on the Intel Atom processor: Floating-Point Unit, Time Stamp Counter, Physical Address Extension, Local Interrupt Controller (APIC), Cache Line Flush, Model Specific Registers, FXSAVE/FXSTOR, MMX, SSE, SSE2, SSE3, SSSE3, Thermal Management, Multi-Threading – (Hyper thread). The support for 64-bit operation (EM64T) is also indicated through the CPUID feature.

It is often useful to have an awareness of the cache structures on your platform, especially when it comes to performance tuning or at least understanding the behavior of your application and the correlation between application data set size and overall cache sizes. Chapter 18, “Platform Tuning,” provides more specific details on tuning your system. The CPUID provides cache structure details of the processor. The decoded values returned from the current Intel Atom processor are as follows:

• L1 data cache: 24 kB, six-way set associative.

The Linux command cat/proc/cpuinfo provides similar details relating to the CPU. A portion of the details presented is obtained by issuing using the CPUID instruction.

The provision of free running counters and hardware timers is required by most operating systems to provide the operating system “Tick,” timed delay loops, code performance measurements, and the like. Intel Atom platforms provide a wealth of timers and counters on the platform. A very useful free running time stamp counter is provided on Intel platforms. It is simply accessed by using the RDTSC instruction. The instruction returns the number of ticks at maximum processor frequency (resolved at boot up) since reset. The tick represents the length of time for one duty cycle of the CPU clock, so for a processor running at 1.6 GHz, a tick would represent 0.625 ns. The value is very reliable, but you should be aware that in some system interactions may skew some measurements, such as when the processor’s actual speed is slowed due to power-managed controls, although this is not the case for Atom processors. The code segment below shows how the time stamp counter can be read from a C program. The embedded assembly is written to be compiled by the GCC compiler.

long long timeStamp;

__asm__ __volatile__

("RDTSC" ; Mnemonic to read counter

:"=A"(timeStamp) ; Map 64bit timeStamp to EDX/EAX

:

);

In addition to the free running counter, embedded platforms will make use of hardware-based timers. The timers on the Intel Atom platform are described in Chapter 4, “Embedded Platform Architecture.”

Application Binary Interface

As an embedded software developer, you will develop most of your software in higher-level languages. It is less likely that you have to develop large portions of your code in assembly language. However, it is very important that you are conversant with assembly language and how assembly and high-level languages interact, particularly when it comes to debugging the platform. For embedded systems it is not unusual to have to “root cause” a system crash with information saved at the time of the system crash. A common example of this is debugging Linux Kernel Oops (http://www.kerneloops.org). The crash logs provide register values, stack frames, and software versions at the time of the crash. Understanding the register usages and calling convention of the high-level language is critical to debugging such logs. There is little formal standardization of the calling conventions but in general two de facto standard conventions predominate. The open source Gnu Compiler (GCC) conventions are used on Unix-like operating systems and most real-time operating systems, whereas the Microsoft Compiler calling conventions are used by compilers and tool chains on Windows-based operating systems. The best source of information on Linux/Unix type ABIs is located at http://refspecsfreestandards.org; consult http://msdn.microsoft.com for Windows-based platforms. As an indication of the fragmentation that arises, the Intel C++ Compiler provides an -fabi-version option, which allows you to select between most recent ABI, g++ 3.2 and 3.3 compatible, or compatible with gcc 3.4 and higher.

The calling conventions define the following aspects of the code generated by compilation:

The width (number of bits to represent) of data types is largely standard across all compilers and in general is intuitive. The alignment and packing behavior, on the other hand, can vary significantly and cause difficulty when debugging the stack trace associated with a crash. In general, data types are aligned to the width of the data type. A 4-byte integer is aligned to a 32-bit address; this is also known as double word aligned. The number of bits in a word, double word, and quad word is itself a convention specific to the processor architecture. On IA-32 processors, a 16-bit value is a word, a 32-bit value is a double word, and a 64-bit value is a quad-word. ARM and PowerPC architectures were defined later and word size changed to 32 bits, therefore making 16-bit values half words and 64-bit values double words.

The compilers also provide compiler options and pragmas that can be used to change the alignment of basic types and structure members. The most common option to change the way elements in a structure are aligned is the __PACKED__ pragma or equivalent command line compiler option. In this case all members of a structure are packed together, leaving no unused memory space. The alignment of members may not be on their natural boundary. This mechanism is often used in embedded systems where we want to share information between different systems or overlay a structure over the register map of a device. When sharing information across processors within a system or between systems, this approach may suffice, but it is not very rigorous. When sharing data across embedded systems, it is important to format the data in a formally agreed-upon standard for transmission; this removes all packing and endian issues that may arise. Common packing standards are eXternal Data Representation (XDR) and, more recently, XML.

The stack alignment is typically double word (4-byte) aligned for 32-bit operating systems and 16-byte aligned for 64-bit operating systems. The compiler will often have an option to set the alignment. An example of such an option is -mpreferred-stack-boundary=value , which is available when using a GCC compiler. The alignment of the stack is useful to know when debugging stack traces.

The processor’s register usage by high-level languages can be divided into a number of categories:

• Caller saved registers. A number of registers may be altered (such as scratch registers) when a function is called; if the caller needs to retain this value, the caller must save the contents of these registers on the stack to facilitate restoration after the function returns.

• Callee saved registers. This is the list of registers the called function must restore to their previous value when the function returns.

• Function argument passing registers. Arguments are passed to a function either in registers or on the stack, depending on the number and type of arguments. For 32-bit IA-32 ABIs, all function parameters are passed on the stack; for 64-bit ABIs, a combination of registers and stack is used.

• Function return value registers. Function that returns a value does so in a register.

The IA-32 registers for the GNU compiler Linux 32-bit ABI are shown in Table 5.1. At the time of writing, a new draft ABI for use on Intel EM64T-capable processors is known as X32-ABI (https://sites.google.com/site/x32abi/). It maximizes the use of the registers available while enabled in EM64T mode, yet retains pointer, long, and integer sizes as if it were a 32-bit machine. This is a best-case hybrid for 32-bit applications.

Table 5.1. ABI Register Calling Conventions—32-Bit Linux/Windows

| IA-32 Register | 32-Bit Linux GNU/Windows |

| EAX | Scratch and return value |

| EBX | Callee save |

| ECX | Scratch |

| EDX | Scratch and return value |

| ST0-ST7 | Scratch/ST0 return float |

| ESI | Callee save |

| EDI | Callee save |

| EBP | Callee save |

| XMM0-XMM7 | Scratch registers |

| YMM0-YMM7 | Scratch registers—256 bit on AVX-capable processors only |

A key aspect of the calling conventions is the creation of a stack. The stack is built up from a number of stack frames. Each stack frame consists of memory that is allocated from the program stack. The stack frame consists of parameters to the function being called, automatic stack variables, saved scratch registers, and callee and caller saved register values. A number of conventions specify where the stack cleanup operations must take place. These are known as cdecl, stdcall, and fastcall. The cdecl is the default calling convention and requires the calling function to perform the stack cleanup. The cdecl calling convention supports functions with a variable number of arguments such as printf. The stdcall calling convention supports a fixed number of arguments for a function and the stack cleanup is performed by the called function. The majority of embedded systems use the C default cdecl convention. Windows libraries primarily use the stdcall convention. The fastcall convention is an additional convention supported by Linux and Windows to increase the performance of the function call. It is critical that the caller and callee use the same convention—if not, the stack program will undoubtedly crash.

The following sequence of actions is performed as part of a function call:

• Save scratch registers in the caller.

• Push the function arguments onto the stack from right to left.

• Call the function, and the call instruction pushes the Instruction Pointer (EIP) onto the stack and transfers control to the function. The EIP value contains the address of the next instruction, and therefore the stack will contain the address of the instruction after the call.

• Save the base pointer (pushed onto the stack) and update the base pointer with the current stack pointer. The base pointer can now be used to reference the calling arguments. The first argument of the function is referred to by 8(%EBP); the second argument is accessed by 12(%EBP).

• Save scratch registers (caller save) used by this function.

• Grow the stack for local variables, simply by decrementing the ESP by the amount of space needed. Local variables are typically accessed by relative reference from the EBP.

• Free the local stack storage, by adding the appropriate value to the ESP.

• Restore the scratch registers.

• Restore the base pointer, using POP.

• Return from the function, using RET. This moves the previously pushed address off the stack and transfers control to the instruction after the call.

• As this is the cdecl convention, the caller now frees the stack of the calling arguments, typically discarded by adding the required value to the ESP, or if the variables are reused, the values are moved into registers with POP for reuse.

The following section shows a simple C function call and the corresponding assembly.

main()

{

int a = 1; x = 100; y = 150;

int z;

z = foo1(a,x,y);

printf("Z foo1:%d ", z);

}

int foo1(int a, int x, int y)

{

int z;

z = a + x + y;

return(z);

}

This produces the following assembly code using GNU disassembly format. The code is produced by calling gcc with the –S option. The GNU tools command objdump (called with argument –s) is very useful command in studying study the assembly code of an object or executable.

1 main:pushl %ebp ; Save the base pointer

2 movl %esp, %ebp ; Set the stack = base

3 andl $-16, %esp ; Allocate stack -16

4 subl $32, %esp ; Allocate stack -32

5 movl $1, 16(%esp) ; Put 01h into variable a

6 movl $100, 20(%esp) ; Put 100h into variable x

7 movl $150, 24(%esp) ; Put 100h into variable y

8 movl 24(%esp), %eax ; Get y into eax

9 movl %eax, 8(%esp) ; Put y onto stack

10 movl 20(%esp), %eax ; Put x into eax

11 movl %eax, 4(%esp) ; Put x onto stack

12 movl 16(%esp), %eax ; Put a into eax

13 movl %eax, (%esp) ; Push a onto stack

14 call foo1 ; Call the function

15 movl %eax, 28(%esp) ; Put return z onto local stack

16 movl 28(%esp), %eax ; Move local variable into eax

17 movl %eax, 4(%esp) ; move return z into call stack

18 movl $.LC0, (%esp) ; move pointer to printf str

20 leave ; Set ESP to EBP, POP EBP

21 ret ; return from function.

22 …

23 foo1:

24 pushl %ebp ; Save the base pointer

25 movl %esp, %ebp ; Set the stack = base

26 subl $16, %esp ; Grow stack by 16 bytes

27 movl 12(%ebp), %eax ; Get x from stack into eax

28 movl 8(%ebp), %edx ; Get a from stack into edx

29 leal (%edx,%eax), %eax ; Add edx+eax into eax

30 addl 16(%ebp), %eax ; add y to eax

31 movl %eax, -4(%ebp) ; put Z local regstack

32 movl -4(%ebp), %eax ; put local Z into return reg

33 leave ; Sets ESP to EBP and POPs EBP

34 ret ; Return from function

35

36 .section .rodata

37 .LC0:

38 .string "Z foo1:%d " ; String for printf

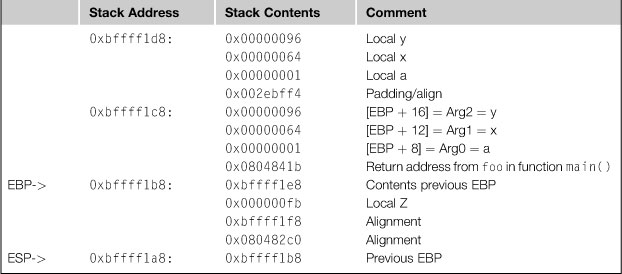

The stack dump below in Table 5.2 is a snapshot of the stack when the processor is executing from line 32 above. The table shows the different stack values, parameters, local variables, and return addresses.

Table 5.2. Detailed Example Stack Snapshot

The code generated for this simple function is far from optimized, and was generated with the default compiler options. The instruction count for the function foo() above is 11 instructions, with the average optimization (GCC option –O2), the code generated is reduced to 7 instructions. The optimized code will make more efficient usage of the stack as well.

Processor Instruction Classes

In this section, we outline the general classes of instructions available in most processors. This is by no means a complete list of instructions; the goal is to provide a flavor of the instruction classes available. As an embedded programmer, this is often the level of detail you will require; you should be familiar enough to read assembly code, but not necessarily proficient at writing highly optimized large scale assembly.

First, a brief segway to discuss the symbolic representation of instructions. The actual representation is defined by the assembler used, but not all assemblers use the same presentation for instructions. In the context of this book, the following representation is used:

label: mnemonic argument1, argument2, argument3

• Label is an identifier that is followed by a colon.

• A mnemonic is a reserved name for a class of instruction op-codes that have the same function.

• The operands argument1, argument2, and argument3 are optional. There may be zero to three operands, depending on the op-code. When present, they take the form of either literals or identifiers for data items. Operand identifiers either are reserved names of registers or are assumed to be assigned to data items declared in another part of the program.

When two operands are present in an arithmetic or logical instruction, the right operand is the source and the left operand is the destination (same order as the mathematical assignment operator (=)). For example:

LOADREG: MOV EAX, SUBTOTAL

In this example, LOADREG is a label, MOV is the mnemonic identifier of an op-code, EAX is the destination operand, and SUBTOTAL is the source operand. The direction of the assignment is just a convention set out by the assembler. We’ll call the convention above the Intel convention; the opposite assignment is also used on Intel platforms and it’s known as the AT&T convention. The LOADREG instruction would be shown as follows:

LOADREG: MOV SUBTOTAL, EAX

The Intel Compiler and Microsoft compilers use the Intel convention. The GNU tool chain (including debuggers) uses the AT&T convention by default. However, we use the Intel convention throughout this book, unless it’s an example segment to be used by a GNU tool chain.

The types and number of operand supported by a processor instruction set are architecture specific; all architectures allow operands that represent an immediate value, a register, or memory location. Intel architecture machine instructions operate on zero or more operands. Operands are specified explicitly and others are implicit. The data for a source operand can be found in one of the following:

• The instruction itself (an immediate operand)—The number of bits available in the op-code for an immediate value depends on the processor architecture; on Intel processors an immediate value has a range of 232. RISC processors such as ARM have a fixed size op-code and as a result support immediate values with a reduced range of 216. As a result, RISC processors generally use literal pools to load 32-bit values into a register.

• An I/O port—The Intel architecture supports 64K 8-bit I/O ports that can be written to and read from using the OUT/IN instructions. These ports are now mostly used to provide simple debug output on Intel reference platforms; most will provide a two-character eight-segment LED display to show the values written to I/O PORT 80h and 84h.

When an instruction returns data to a destination operand, it can be returned to:

• Memory location—In limited cases the Intel processor supports direct memory to memory moves, this is typically not supported on RISC processors.

The size of the instruction is worth a brief mention. Most RISC processors such as ARM and PowerPC use fixed size instructions, typically 32-bit instruction word. The Intel processors use variable sized op-codes ranging from a single byte op-code to a theoretical maximum of 17 bytes. When using a debugger to display the raw assembly of a program, the address provided to the decode command must be aligned to an instruction boundary. This can take some practice, although it is pretty obvious when you get it wrong, as the command will display quite a few invalid op-codes.

Immediate Operands

Some instructions use data encoded in the instruction itself as a source operand. The operands are called immediate operands. For example, the following instruction loads the EAX register with zero.

MOV EAX, 00

The maximum value of an immediate operand varies among instructions, but it can never be greater than 232. The maximum size of an immediate on RISC architecture is much lower; for example, on the ARM architecture the maximum size of an immediate is 12 bits as the instruction size is fixed at 32 bits. The concept of a literal pool is commonly used on RISC processors to get around this limitation. In this case the 32-bit value to be stored into a register is a data value held as part of the code section (in an area set aside for literals, often at the end of the object file). The RISC instruction loads the register with a load program counter relative operation to read the 32-bit data value into the register.

Register Operands

Source and destination operands can be any of the follow registers depending on the instruction being executed:

• 32-bit general purpose registers (EAX, EBC, ECX, EDX, ESI, EDI, ESP, or EBP)

• 16-bit general purpose registers (AX, BX, CX, DX, SI, SP, BP)

• 8-bit general-purpose registers (AH, BH, CH, DH, AL, BL, CL, DL)

• System Table registers (such as the Interrupt Descriptor Table register)

On RISC embedded processors, there are generally fewer limitations in the registers that can be used by instructions. IA-32 often reduces the registers that can be used as operands for certain instructions.

Memory Operands

Source and destination operands in memory are referenced by means of a segment selector and an offset. On embedded operating systems, the segment selector often results in a base address of zero, particularly if virtual memory is used, so the memory address specified by the operand degenerates to being the offset value. The segment selector is automatically chosen by the processor, but can be overridden if needed. The following instruction moves the value in EAX to the address pointed by EBX, assuming the data segment selector contains zero. It is the simplest memory operand form.

MOV [EBX], EAX

The memory operand can also specify offsets to the base address specified in the memory operand. The offset is added to the base address (the general-purpose register) and can be made up from one or more of the following components:

• Displacement—An 8-, 16-, or 32-bit immediate value.

• Index—A value in a general-purpose register.

• Scale factor—A value of 2, 4, or 8 that is multiplied by the index value.

So we have a memory operand that can consist of

![]()

Since the segment selector usually returns zero, the memory operand effective address becomes the following:

![]()

The compiler will make best use of these modes to de-reference data structures in memory or on the stack. The components of the offsets can be either positive or negative (two’s complement values), providing excellent flexibility in the memory operand address generation.

Data Transfer Instructions

All processes provide instructions to move data between registers, memory, and registers, and in some architectures between memory locations. Table 5.3 shows some instruction combinations.

Table 5.3. Data Transfer Instructions

| Instruction Mnemonic | Example | Description |

| MOV | MOV EAX,EBX | Move contents between registers. Note that register may be ALU register, segment register, or control registers such as CR0 |

| MOV | MOVEAX,0abcd00h

MOV EAX,[ EBX -4] |

Load a register from memory. Effect address defined by the addressing modes discussed above |

| MOV | MOV [EBX],EAX | Write register contents to memory |

| MOV | MOV EAX,12345678h | Load an immediate value into a register |

| MOV | MOV EAX,[4∗ESI][EBX+256] | Load memory at 4∗ESI + BX + 256 to register ax |

| MOV | MOVS EDI,ESI | String move memory to memory |

| PUSH | PUSH EBP | Push ECX value onto stack. Update EBP |

| POP | POP ECX | Pop ECX, update EBP |

| XCHG | XCHG EBX, ECX | Swap register values |

| XCHG | XCHG [EAX],EBX | Swap contents at memory location with register value in atomic fashion |

| CMOVcc | CMOVE EAX,[EBX] | Move if Flags show equal (ZF = 1) |

There is also a set of instructions that provides hints to the underlying hardware to help manage the cache more efficiently. The MOVNTI (store double word using non-temporal hint) instruction is designed to minimize cache pollution; by writing a double word to memory without writing to the cache hierarchy, it also prevents allocation in the cache line. There are also PREFETCH instructions that perform memory reads and bring the result data closer to the processor core. The instruction includes a temporal hint to specify how close the data should be brought to it. These instructions are used when you are aggressively tuning your software and require some skill to use effectively. More details are provided in Chapter 18, “Platform Tuning.” These hints are optional; the processor may ignore them.

Arithmetic Instructions

The arithmetic instructions define the set of operations performed by the processor Arithmetic Logic Unit (ALU). The arithmetic instructions are further classified into binary, decimal, logical, shift/rotate, and bit/byte manipulation instructions.

Binary Operations

The binary arithmetic instructions perform basic binary integer computations on byte, word, and double word integers located in memory and/or the general-purpose registers, as described in Table 5.4.

Table 5.4. Binary Arithmetic Operation Instructions

| Instruction Mnemonic |

Example | Description |

| ADD | ADD EAX, EAX | Add the contents of EAX to EAX |

| ADC | ADC EAX, EAX | Add with carry |

| SUB | SUB EAX, 0002h | Subtract the 2 from the register |

| SBB | SBB EBX, 0002h | Subtract with borrow |

| MUL | MUL EBX | Unsigned multiply EAX by EBX; results in EDX:EAX |

| DIV | DIV EBX | Unsigned divide |

| INC | INC [EAX] | Increment value at memory eax by one |

| DEC | DEC EAX | Decrement EAX by one |

| NEG | NEG EAX | Two’s complement negation |

Decimal Operations

The decimal arithmetic instructions perform decimal arithmetic on binary coded decimal (BCD) data, as described in Table 5.5. BCD is not used as much as it has been in the past, but it still remains relevant for some financial and industrial applications.

Table 5.5. Decimal Operation Instructions (Subset)

| Instruction Mnemonic |

Example | Description |

| DAA | ADD EAX, EAX | Decimal adjust after addition |

| DAS | DAS | Decimal adjust AL after subtraction. Adjusts the result of the subtraction of two packed BCD values to create a packed BCD result |

| AAA | AAA | ASCII adjust after addition. Adjusts the sum of two unpacked BCD values to create an unpacked BCD result |

| AAS | AAS | ASCII adjust after subtraction. Adjusts the result of the subtraction of two unpacked BCD values to create a unpacked BCD result |

Logical Operations

The logical instructions perform basic AND, OR, XOR, and NOT logical operations on byte, word, and double word values, as described in Table 5.6.

Table 5.6. Logical Operation Instructions

| Instruction Mnemonic | Example | Description |

| AND | AND EAX, 0ffffh | Performs bitwise logical AND |

| OR | OR EAX, 0fffffff0h | Performs bitwise logical OR |

| XOR | EBX, 0fffffff0h | Performs bitwise logical XOR |

| NOT | NOT [EAX] | Performs bitwise logical NOT |

Shift Rotate Operations

The shift and rotate instructions shift and rotate the bits in word and double word operands. Table 5.7 shows some examples.

Table 5.7. Shift and Rotate Instructions

| Instruction Mnemonic |

Example | Description |

| SAR | SAR EAX, 4h | Shifts arithmetic right |

| SHR | SAL EAX,1 | Shifts logical right |

| SAL/SHL | SAL EAX,1 | Shifts arithmetic left/Shifts logical left |

| SHRD | SHRD EAX, EBX, 4 | Shifts right double |

| SHLD | SHRD EAX, EBX, 4 | Shifts left double |

| ROR | ROR EAX, 4h | Rotates right |

| ROL | ROL EAX, 4h | Rotates left |

| RCR | RCR EAX, 4h | Rotates through carry right |

| RCL | RCL EAX, 4h | Rotates through carry left |

The arithmetic shift operations are often used in power of two arithmetic operations (such a multiply by two), as the instructions are much faster than the equivalent multiply or divide operation.

Bit/Byte Operations

Bit instructions test and modify individual bits in word and double word operands, as described in Table 5.8. Byte instructions set the value of a byte operand to indicate the status of flags in the EFLAGS register.

Table 5.8. Bit/Byte Operation Instructions

| Instruction Mnemonic |

Example | Description |

| BT | BT EAX, 4h | Bit test. Stores selected bit in Carry flag |

| BTS | BTS EAX, 4h | Bit test and set. Stores selected bit in Carry flag and sets the bit |

| BTR | BTS EAX, 4h | Bit test and reset. Stores selected bit in Carry flag and clears the bit |

| BTC | BTS EAX, 4h | Bit test and complement. Stores selected bit in Carry flag and complements the bit |

| BSF | BTS EBX, [EAX] | Bit scan forward. Searches the source operand (second operand) for the least significant set bit (1 bit) |

| BSR | BTR EBX, [EAX] | Bit scan reference. Searches the source operand (second operand) for the most significant set bit (1 bit) |

| SETE/SETZ | SET EAX | Conditional Set byte if equal/Set byte if zero |

| TEST | TEST EAX, 0ffffffffh | Logical compare. Computes the bit-wise logical AND of first operand (source 1 operand) and the second operand (source 2 operand) and sets the SF, ZF, and PF status flags according to the result |

Branch and Control Flow Instructions

We clearly need instructions to control the flow of a program’s execution. The branch and control flow instructions fall into two primary categories. The first is unconditional changes of program flow to a new program counter address. This occurs when a jump or call instruction is encountered. The second category of branch or control flow instructions are conditional branches or conditional execution of an instruction. The conditional execution of an instruction is dictated by the contents of bits within the EFLAGS register, or for some instructions the value in the ECX register.

Jump operations transfer control to a different point in the program stream without recording any return information. The destination operand specifies the address of the instruction we wish to execute next. The operand can be an immediate value, a register, or a memory location. Intel processors have several different jump modes that have evolved over time, but a number of modes are no longer used. The near jump is a jump within the current code segment. As we mentioned earlier, the current code segment often spans the entire linear memory range (such as zero to 4 GB). So all jumps are effective within the current code segment. The target operand specifies either an absolute offset (that is, an offset from the base of the code segment) or a relative offset (a signed displacement relative to the current value of the instruction pointer in the EIP register). A near jump to a relative offset of 8 bits is referred to as a short jump. The CS register is not changed on near and short jumps. An absolute offset is specified indirectly in a general-purpose register or a memory location. Absolute offsets are loaded directly into the EIP register. A relative offset is generally specified as a label in assembly code, but at the machine code level it is encoded as a signed 8-, 16-, or 32-bit immediate value. This value is added to the value in the EIP register. (Here, the EIP register contains the address of the instruction following the JMP instruction). Although this looks complicated, in practice the near jump is a simple branch with flexibility in specifying the target destination address. Intel processors also includes FAR jumps, which allow the program to jump to a different code segment, jump through a call gate with privilege checks, or a task switch (task in the IA-32 processor context). Table 5.9 shows the different instructions with examples.

Table 5.9. Program Flow—No Saved Return State

| Instruction Mnemonic |

Example | Description |

| Jmp | JMP target_label | Jumps unconditionally to the destination address operand |

| JZ | JZ target_label | Jumps conditionally to the destination operand if the EFLAG. Zero bit is set |

| JNZ | JZ target_label | Jumps conditionally to the destination operand if the EFLAG. Zero is not set |

| LOOP | MOV ECX,5

LoopStart: XXX YYY LOOP LoopStart |

Decrements the contents of the ECX register, then tests the register for the loop-termination condition. If the count in the ECX register is non-zero, program control is transferred to the instruction address specified by the destination operand |

Call gates are sometimes used to support calls to operating system services; for instance, this is a configuration available in VxWorks for real-time tasks when calling operating system services. However, operating system calls are more usually provided via the software interrupt call.

Calling subroutines, functions, or procedures require the return address to be saved before the control is transferred to the new address; otherwise, there is no way for the processor to get back from the call. The CALL (call procedure) and RET (return from procedure) instructions allow a jump from one procedure (or subroutine) to another and a subsequent jump back (return) to the calling procedure. The CALL instruction transfers program control from the current procedure (the calling procedure) to another procedure (the called procedure). To allow a subsequent return to the calling procedure, the CALL instruction saves the current contents of the EIP register on the stack before jumping to the called procedure. The EIP register (prior to transferring program control) contains the address of the instruction following the CALL instruction. When this address is pushed on the stack, it is referred to as the return instruction pointer or return address. The address of the called procedure (the address of the first instruction in the procedure being jumped to) is specified in a CALL instruction in the same way it is in a JMP instruction (described above).

Most processors provide an instruction to allow a program to explicitly raise a specified interrupt. The INT instruction can raise any of the processor’s interrupts or exceptions by encoding the vector number or the interrupt or exception in the instruction or exception, which in turn causes the handler routine for the interrupt or exception to be called. This is typically used by user space programs to call operating system services. Table 5.10 shows the instructions that affect the program flow.

Table 5.10. Program Flow with Saved Return State

| Instruction Mnemonic | Example | Description |

| CALL | CALL target_label | Saves the return address of the stack and jumps to subroutine |

| RET | RET | Returns to the instruction after the previous call |

| INT x | INT 13h | Calls software interrupt 13 |

| IRET | IRET | Returns from the interrupt handler |

On Intel platforms there is quite a lot of history associated with the INT calls. Legacy (non-EFI) BIOS supports a number of INT calls to provide support to operating systems. An example of a well-known INT call is the E820. This is an interrupt call that the operating system can use to get a report of the memory map. The data are obtained by calling INT 15h while setting the AX register to E820h. For embedded programmers, there is an ever-decreasing dependence on the INT service provided by the BIOS. The Linux kernel reports the memory map reported by the BIOS in the dmesg logs at startup.

The BIOS environment is transitioning from a traditional legacy BIOS, which was first developed a few decades ago, to a more modern codebase. The newer codebase is known as Unified Extensible Firmware Interface (UEFI). At the time of writing, many products are transitioning from this legacy BIOS to EFI. More information on this topic can be found in Chapter 6.

Structure/Procedure Instructions

Modern languages such as C/C++ define a frame structure on the stack to allocate local variables and define how parameters are passed on the stack. The IA-32 provides two instructions to support the creation and management of these stack frames, namely, ENTER and LEAVE. The stack frame is discussed as part of the Application Binary Interface discussed earlier in this chapter.

SIMD Instructions

Several classes of embedded applications are a mix of traditional general-purpose (scalar) workloads with an additional moderate digital signaling workload. Most embedded processors provide extensions to their core instruction set to support these additional workloads. These instructions are known as Single Instruction Multiple Data (SIMD). On Intel platforms the extensions are known as Intel Streaming SIMD Extensions (Intel SSE). Other processors have more basic extensions to provide single multiply-and-accumulate (MAC) operations. This operation is the basic building block for a large number of simple DSP algorithms. Over time the range of SSE instructions has grown significantly, and in fact they can bring substantial performance benefits to a wide range of workloads:

• Speech compression algorithms and filters

• Speech recognition algorithms

• Video display and capture routines

• Image and video processing algorithms

Generally, code that contains small-sized repetitive loops that operate on sequential arrays of integers of 8, 16, or 32 bits, single-precision 32-bit floating-point data, and double-precision 64-bit floating-point data are a good candidate for tuning using SSE instructions. The repetitiveness of these loops incurs costly application processing time. However, these routines have the potential for increased performance when you convert them to use one of the SIMD technologies.

On the Intel Atom processor, the SSSE3 SIMD instructions are supported and the instructions operate on packed byte, word, and double-word integers, as well as single-precision floating point. A key efficiency of the SIMD instructions is the fact that a single instruction operates on a number of data elements in parallel. If your data structures can be structured to make use of SIMD instructions, significant improvements in performance can be obtained. Figure 5.4 shows a typical SIMD operation.

FIGURE 5.4 Typical SIMD Operation.

The following code sequence is an example of how to get the data into the XMM registers and have the SIMD operation performed. It is written in the Intel Compiler format to incorporate assembly into C code.

1 void add(float ∗a, float ∗b, float ∗c)

2 {

3 __asm {

4 mov eax, a

5 mov edx, b

6 mov ecx, c

7 movaps xmm0, XMMWORD PTR [eax]

8 addps xmm0, XMMWORD PTR [edx]

9 movaps XMMWORD PTR [ecx], xmm0

10 }

11

The first three instructions get pointers for a, b, and c into EAX, EDX, and ECX registers, respectively. The MOVAPS instruction in line 7 above moves four quad-words containing four packed single-precision floating-point values from memory pointed to by EAX (128 bytes) into the XMM0 register. The ADDPS instruction performs a SIMD add of the four packed single-precision floating-point values in XMM0 with the four packed single-precision floating-point values pointed to by the EDX register. The final MOVAPS instruction saves the XMM0 (128-byte contents) to the memory pointed to by the ECX register. This is far more efficient than the traditional scalar equivalent.

Exceptions/Interrupts Model

Integral to all processors is the ability for the processor to handle events that are orthogonal to the execution flow of the program. All modern processors have a well-defined model to signal and prioritize these events. The processor can then change the flow of the executing instructions sequence to handle these events in a deterministic manner. The event is typically handled by transferring execution from the current running task to a special software routine. The software routines are called interrupt or exception handlers. The processor will save sufficient processor state information to allow the processor to return to the currently executing task when the interrupt or exception was raised. The resumption of the interrupts task happens with no loss in program continuity unless the exception is not recoverable or causes the running program to be terminated. To aid in handling exceptions and interrupts, each architecturally defined exception and each interrupt condition requiring special handling by the processor is assigned a unique identification number, called a vector.

Interrupts are divided into two types—hardware and software. Hardware interrupts are typically generated as a result of a peripheral (external to the processor core) that needs attention. Peripherals both within the SOC device and on the platform can raise an interrupt to the processor. The processor then transfers control to the appropriate interrupt handled for the specific device interrupt. The allocation and sharing of interrupt vectors is performed by the operating system. A simple example of a peripheral is a timer block, used to “kick” the operating system timer.

Software interrupts are typically triggered via a dedicated instruction such as INT #vector on Intel processors and SWI on ARM architectures. The execution of a software interrupt instruction causes a context switch to an interrupt handler in a fashion similar to an external hardware interrupt. Software interrupts are most often used as part of a system call. A system call is a call to an operating system kernel in order to execute a specific function that controls a device or executes a privileged instruction. The Linux operating system uses INT 0x80 for service calls.

Interrupts can be classed as maskable or non-maskable, though not all processors make provision for non-maskable interrupts. Non-maskable interrupts, as the name suggests, are interrupts that are always serviced. There is no ability to prevent or delay the recognition of a non-maskable interrupt. Non-maskable interrupts are themselves uninterruptible, with at most one non-maskable interrupt active at any time.

Exceptions are events detected by the processor. They are usually associated with the currently executing instruction. A common exception supported by all processors is “Divide by Zero,” which is generated as a result of a DIV instruction with a denominator of zero. Processors detect a variety of conditions including protection violations, page faults, and invalid instructions. The processor also monitors other processor conditions that may not be strictly correlated to the current instruction being executed. On the Intel platform these are known as machine check exceptions. Machine check exceptions include system bus errors, ECC errors, parity errors, cache errors, and translation look-aside buffer (TLB) errors. The machine check details are recorded in machine-specific registers.

Precise and Imprecise Exceptions

Exceptions can be categorized as precise or imprecise. Precise exceptions are those that indicate precisely the address of the instruction that caused the exception. Again, the divide-by-zero exception is an excellent example of a precise exception because the faulting instruction can be identified. Imprecise exceptions, on the other hand, cannot directly be associated with an instruction. The processor has continued execution of an indeterminate number of instructions between the time the exception was triggered and when the processor processed it; alternatively, the exception was generated by an event that was not due to an instruction execution. An example of an imprecise exception is the detection of an uncorrectable ECC error discovered in a cache. Imprecise exceptions are not generally recoverable; although the Linux machine check handler does all it can to avoid a kernel panic and the resulting reboot, imprecise exceptions are referred to as aborts on Intel architectures. Precise exceptions fall into two categories on Intel architectures, faults and traps:

Faults. A fault is an exception that can generally be corrected and that, once corrected, allows the program to be restarted with no loss of continuity. When a fault is reported, the processor restores the machine state to the state prior to the beginning of execution of the faulting instruction. The return address (saved contents of the CS and EIP registers) for the fault handler points to the faulting instruction, rather than to the instruction following the faulting instruction.

Traps. A trap is an exception that is reported immediately following the execution of the trapping instruction. Traps allow execution of a program or task to be continued without loss of program continuity. The return address for the trap handler points to the instruction to be executed after the trapping instruction. Traps are generated by INT 3 and INTO (overflow) instructions.

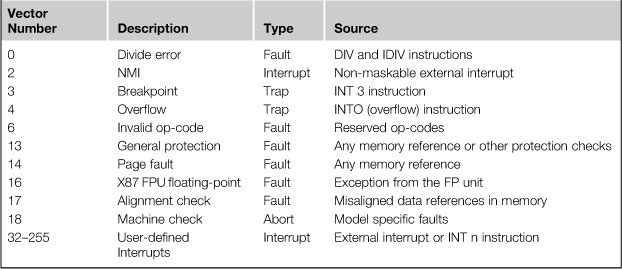

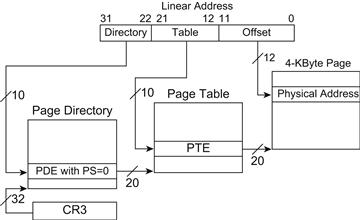

You may have noticed that the fault handler points to the faulting instruction for faults; this is because the handler is likely to rerun the faulting instruction once the underlying reason for the fault is resolved. For example, if a page fault is generated, the operating system will load the page from disk, set up the page table to map the page, and then rerun the instruction. Instructions that generate a trap, on the other hand, are not rerun. On other embedded platforms such as ARM, the fault address recorded on the exception is always that of the next instruction to run. However, when all instructions are the same size (32-bits) it’s a trivial matter to establish the faulting instruction; it’s not quite as straightforward with a variable size instruction set. The list of exceptions and interrupts that a processor supports is part of a processor’s architecture definition. All exceptions and interrupts are assigned a vector number. The processor uses the vector assigned to the exception or interrupt as an index into a vector table. On Intel architectures this vector table is called the Interrupt Descriptor Table (IDT). The table provides the entry point to the exception or interrupt handler. IA-32 defines an allowable vector range of 0 to 255. Vectors from 0 to 31 are reserved for architecture-defined exceptions and interrupts. Vectors 32 to 255 are designed as user-defined interrupts. The user-defined vectors are typically allocated to external (to the processor) hardware generated interrupts and software interrupts. Table 5.11 is a partial list of the IA-32 Protected mode exceptions and interrupts. See Chapter 5 of IA-32 Intel Architecture Systems Programming Guide.

Table 5.11. Abbreviated IA-32 Exceptions and Interrupts

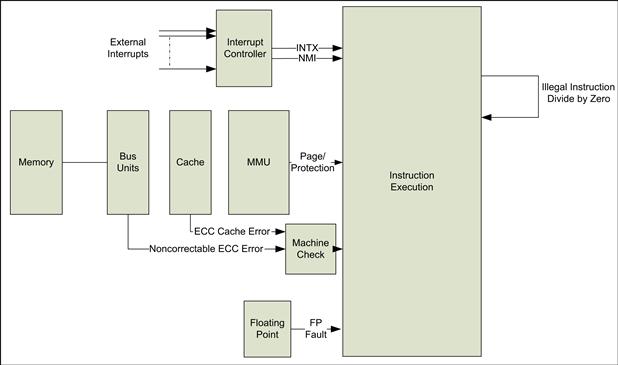

In summary, exceptions and interrupts can come from a wide range of sources from the processor core, caches, floating-point units, bus interfaces, and external peripherals. Figure 5.5 shows the wide range of sources.

FIGURE 5.5 Exception and Interrupt Sources.

Vector Table Structure

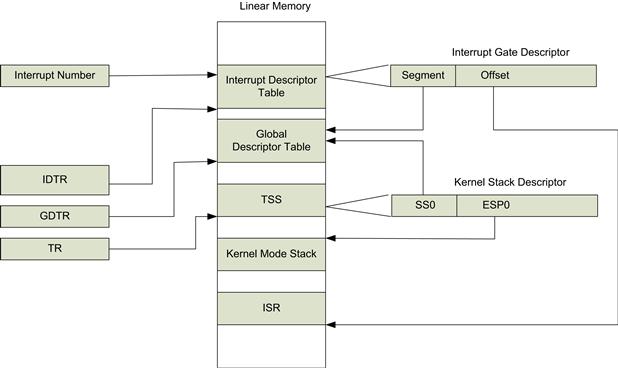

All processors provide mechanisms to translate an interrupt or exception into a handler for the interruption. Different processor architectures provide differing levels of hardware support in identification of the underlying hardware exception. As we mentioned above, external hardware interrupts are assigned a vector. PowerPC and some ARM architectures have a single IRQ line to the processor. The exception handler must then resolve the underlying cause of the interrupt, look up a software-based vector table, and transfer control to the interrupt handler. On Intel processors, the processor hardware itself identifies the underlying cause of the interrupt and transfers control to the exception handler without software intervention. An Intel processor takes a number of steps in the transition to the interrupt handler. Figure 5.6 shows the structures and registers that are used in the process.

FIGURE 5.6 Interrupt Descriptor Dereferencing.

The IA-32 processor automatically takes several steps in transfer of control to the exception function handler. The hardware interrupt controller sends an interrupt N message to the CPU. The interrupt controller is called the Local Advanced Peripheral Interrupt Controller (Local APIC) on Intel processors. The CPU reads the interrupt descriptor from the interrupt descriptor table (IDT). The interrupt descriptor table is located in system memory. The IDT stores a collection of gate descriptors that provide access to interrupt and exception handlers. The linear address for the base of the IDT is contained in the interrupt descriptor table register (IDTR). The IDT descriptor can contain one of three types of descriptor: task gate, interrupt gate, and trap gate. The IDT contains either an interrupt gate or trap gate descriptor for external interrupts. The difference between an interrupt gate and a trap gate is its effect on the IF flag: using an interrupt gate clears the IF flag, which prevents other interrupts from interfering with the current interrupt handler. The interrupt gate contains the following information:

• Segment selector. The segment selector selects a segment in the global or local descriptor table; this provides a base address for the IRQ handler table.

• Segment offset. This offset is added to the base address found in the referencing of the segment selector to produce the linear address of the ISR handler.

• Privilege level. This is usually set to zero (same privilege level as kernel mode code).

The address of the actual interrupt service routine is

ISR Linear Address =

GDT[(IDTR[Vector Number ].SegmentSelector)].BaseAddress +

IDTR[Vector Number].SegmentOffset

For Linux the values populated in the tables degenerate to the following (the processor still performs the lookups but the value returned is zero):

ISR Linear Address =

IDTR[Vector Number].SegmentOffset

Before the processor transfers control to the ISR, it must identify the appropriate stack to use to save registers. Two situations can occur. The first is when the interrupt privilege is the same level as the currently executing code. This occurs when an interrupt occurs while the processor is running kernel mode software. In this scenario the processor saves the EFLAGS, CS, and EIP registers on the current stack. The other situation occurs when the interrupt privilege level is lower than the currently executing code, for example, when the processor is interrupted while running user mode application code. In this case the segment selector and stack pointer for the stack to be used by the handler are obtained from the Task State Segment (TSS) for the currently executing task. On this new stack, the processor pushes the stack segment selector and stack pointer of the interrupted procedure. The processor then saves the current state of the EFLAGS, CS, and EIP registers on the new stack. The processor then transfers control to the interrupt service routine. The following sections describe the stack frame established by the processor and the resulting software handlers.

Exception Frame

A considerable amount of data must be saved when an exception or interrupt occurs. The state is saved on a stack, and the format of the saved data is known as an exception frame, as shown in Figure 5.7 . The exception frame can be split into two distinct portions: the first is the portion saved automatically by the processor, and the second is the set of additional registers that are saved by operating system interrupt service routine before it loads the software handler that deals with the actual device interrupt.

FIGURE 5.7 Stack Frames.

Some processor-generated exception frames include an error number on the stack. For example, a page fault will provide an error code on the stack frame. These error codes provide additional information such as whether the fault was due to a page not being present or a page-level protection violation.

The actual IRQ function call is usually written in assembly language. The function is then responsible for saving the registers that may be destroyed in the handling of the interrupt. For example, the MACRO SAVE_ALL defined in the Linux kernel file entry_32.s saves all the required registers as part of interrupt handler call. Once the interrupt has been processed, the system must return to normal execution. The IRET instruction will restore the processor to the same state prior to the interrupt (once the software saved state is also unrolled). In a simple embedded system this is the normal mechanism used to return to the pre-interrupt operation; however, in a multitasking operating system environment, the operating system may not necessarily return directly to the task that was interrupted. For example, if the operating system timer IRQ fires while a user process is running, the operating system will save the user space process registers into a process-specific storage area, execute the interrupt handler, and then identify what code should run next. It most likely will execute the kernel scheduler function. The kernel scheduler identifies the process to continue with, and transfers control to that process.

Masking Interrupts

As we discussed, interrupts fall into two classes, maskable and non-maskable interrupts. Processors provide a control mechanism to disable the servicing of interrupts received by the processor core. For Intel CPUs the Interrupt Enable (IF) flag in the EFLAGs register provides the control. If the flag is set, then the processor will service interrupts. If the flag is cleared, the processor will not service maskable interrupts. A number of mechanisms can be used to control the state of the IF flag. First, there is an instruction specifically assigned to allow you to set and clear the flag directly. The STI (set interrupt enable flag) instruction sets the flag, and the CLI (clear interrupt enable flag) clears the flag.

The use of STI/CLI (or its equivalent on other processors) has been quite prevalent in embedded systems to provide a low-cost method of mutual exclusion. For instance, if two threads were working with a linked list, it was quite common to disable interrupts while the pointer updates associated with link element insertion took place. Then the interrupts were re-enabled. This allowed the multiple pointer updates associated with a linked list insertion to be atomic. The mechanism has also been used between interrupt handlers and threads used for deferred processing.

However, two issues arise with this approach. The first issue relates to the introduction of multiple hardware threads in processors (multiple processor cores, or hardware threading on a single core). The STI/CLI instructions mask interrupts on the hardware thread that issued the instruction (the local CPU). Interrupts can be processed by other hardware threads. As a result, the guarantee of atomicity has been lost. In this case other synchronization mechanisms such as locks may be needed to ensure that there are no race conditions. The second issue arises because masking of interrupts can affect the real-time behavior of the platform. Masking interrupt introduces non-determinism associated with the overall interrupt latency performance. In embedded systems, having a deterministic latency time to service specific interrupts can be very important. For both these reasons and others, directly enabling and disabling of interrupts to provide mutual exclusion should be avoided. A case in point: the Linux kernel 2.4 had a significant number of sti/cli calls in the device drivers. The introduction of version 2.6 kernels was aligned with multi-core processors being much more prevalent, and correspondingly there are almost no driver calls left in the drivers for the 2.6.∗ Linux kernel. In fact, function wrappers for sti()/cli() have been removed from the kernel.

The interrupt flags can also be affected by the following operations: the PUSHF instruction saves the flags register onto the stack where it can be examined, and the POPF and IRET instructions load the flags register from the stack, and as a result can be used to modify the interrupt enable flag.

The interrupts are automatically masked when you enter an interrupt handler, and the driver or operating system will re-enable interrupts.

Acknowledging Interrupts

When a device indicates an interrupt request to the interrupt controller, the interrupt controller typically latches the request in an interrupt status pending register. The interrupt handling software must eventually clear the interrupt in the device and also indicate to the interrupt controller that the interrupt has been serviced. The device driver is typically responsible to consume all events that are associated with the interrupt and explicitly clear the source on the device. The interrupt controller (such as 8259/IOAPIC) must also be notified that an interrupt has been processed so the interrupt controller state can be updated.

The device interrupts on Intel systems may be presented to the interrupt controller as either edge- or level-triggered interrupts. For level-based interrupts, the device will de-assert the interrupt line once the underlying device event has been acknowledged. Level-triggered interrupts consist of a message sent to the interrupt controller that an interrupt has been raised. The actual interrupt line is now represented by a message being routed on a bus. There is no message signal to indicate that the interrupt has been cleared. The legacy (8259) interrupt controller has a register known as the End of Interrupt (EOI) register. The system interrupt handler may have to write to this register depending on the configuration.

Interrupt Latency

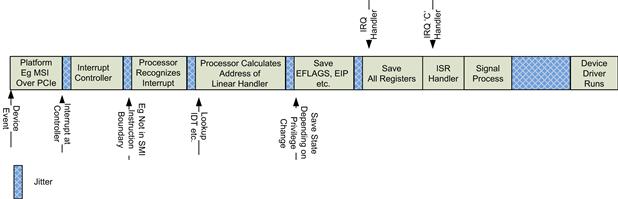

It is important to understand both the latency and the jitter associated with interrupt latency on embedded systems, as shown in Figure 5.8. The interrupt latency is defined as the time from when the hardware event occurred to the time when the software handler starts to execute. The contributions to the overall latency (and its variability) are:

• Hardware detection of the interrupt and its propagation to the processor core.

• Waiting for the interrupt to be at the appropriate level, for example, waiting for a current interrupt handler to complete.

• Recognition by the processor either by waiting for the current instruction to complete or by interrupting the current instruction at the next available cycle.

• The processor must identify the linear address of the interrupt handler. On Intel processors this requires reading the GDT and IDT tables, which could result in cache and translation look-aside buffer misses.

• The processor saves critical registers and transfers control to the interrupt handler.

• The interrupt handler then saves the remaining registers before the tradition C interrupt handler is called.