12

ARCHIVES, BACKUPS, AND LINEAR TAPE

Backing up data and archiving data are frequent activities in operations where information is stored on magnetic spinning disk media or solid state drives. At the enterprise level, these processes need to occur for protective reasons, for legal verification reasons, and for the long-term preservation of assets. Backing up and archiving are two separate functions, and the terms are not synonymous. Backing up data is a protective action: usually the process of making a temporary copy of a file, record, or data set so that it can be recovered if the data are corrupted or the media itself is lost or destroyed, or so that it can be used in a disaster recovery or data protection model.

Archiving involves the long-term preservation of data. An archive is a permanent copy of the file, kept to satisfy records management requirements or to serve future, long-term library functions such as the repurposing, repackaging, or reuse of the information at a later date.

Backing up and archiving are two distinct processes with clearly different purposes. Many people do not understand the differences, and even today the terms and practices are sometimes used interchangeably. Many enterprises use their backup copies for both disaster recovery (DR) and archive purposes, a practice that is risky at best and costly at worst.

A library is a physical or virtual representation of the assets that the enterprise has in its possession. The physical library may consist of the original material as captured (film, videotape, or files), and the virtual library may be the database that describes the material, with a pointer to where it is physically stored: in the digital data archive, in the vault, or at a remote secure site.

This chapter looks into the various physical and logical applications of archiving and backing up digital files to physical media such as tape, to optical media such as DVD or Blu-ray Disc, or to magnetic spinning disk, both active and inactive. It covers how the organization can address these options and what steps it should take to mitigate risk and reduce cost, while at the same time developing a file management strategy that is usable, reliable, and extensible.

KEY CHAPTER POINTS

•The requirements of the organization, how its users need to address protective storage, and how often content is accessed, all of which play a key part in determining how content is stored for the short term, for disaster recovery, or for long-term archive

•Differentiating a backup from an archive

•Determining when the archive is better served on tape or disk media

•Components of the archive, what a datamover is, and how data are moved between active storage and inactive storage

•Archive management

•Digital tape and tape libraries: previous versions and workflows, how they work today, and what the roadmap for the future looks like

•What to look for in choosing an archive or backup solution, and what is on the horizon for standards in archive interchange

Organizational Requirements for Data

In looking to establish an archive and backup process, the organization first needs to define its business activity, which then helps determine the user needs and accessibility requirements for its digital assets. The fundamental backup/archive activities for business data compliance use an approach that differs from activities that are strictly media-centric in nature. These approaches are determined by many factors, including the duration of retention, the accessibility and security of the information, the dynamics of the data, and who needs to use the data and when.

As noted earlier in this chapter, the organization must recognize that simply “backing up to a tape” does not make an archive. Data tape carries comparatively higher time and cost overheads; thus, using data tape for short-term business data compliance purposes is not as practical as employing spinning disks. An organization with insufficient active storage capacity on spinning disks may turn to tape as the alternative, but in routine daily use it can take an excessive amount of time for users to find, examine, and recover (or retrieve) needed information from tape. These activities are better suited to spinning disks used as near-line/near-term storage.

However, for a broadcaster with a large long-form program library that is accessed only occasionally, as in one episode a day from a library of hundreds of programs or thousands of clips or episodes, retaining that content on data tape makes better economic and protective sense than keeping it only on spinning disk.

Legal Requirements for Archive or Backup

Organizations that present material in the public interest, such as television news, have different policies pertaining to what is kept and for how long. Businesses that produce content for the Web, for their own internal training, or for the training of others most likely have a data retention policy for moving media applications and content.

IT departments have developed their own policies and practices for the retention (archive) and the protection (backup) of data files such as email, databases, and intercompany communications. However, even as multimedia becomes more a part of everyday operations, few corporate retention policies may address it, owing to the complexity of cataloging moving media, the sheer size of the files themselves, and unfamiliarity with the files, formats, and methods needed to preserve everything from the raw media files to proxies and the various release packages.

Broadcasters retain many of their key news and program pieces in physical libraries, on shelves, and in the master control operations area. The days of the company librarian are long gone, and electronic data records seem to be the trend, since their metadata can be linked to proxies, masters, and air checks. How the “legal-side” requirements for content retention work at these kinds of operations is a subject much broader than these few chapters can cover. Yet more products are coming of age that handle this automatically, with features such as speech-to-text conversion, scene change detection, and on-screen character and facial recognition (such as detecting “LIVE” or “LATE BREAKING” text that indicates an important feature), in addition to more traditional segment logging and closed caption detection.

Air checks or logging can require enormous amounts of space if they use full bandwidth recording, so many of these activities have moved to lower bit rate encoding techniques that produce a proxy that can be called up from a Web browser or archived as MPEG-1 or MPEG-4 at a fraction of the space the original material would consume.
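The space savings come down to simple arithmetic: storage consumed equals bit rate multiplied by duration. The short Python sketch below works that out per hour of recording. The two bit rates are hypothetical values chosen only for illustration, not figures for any particular logging product or codec.

```python
def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Storage consumed by one hour of recording at a constant bit rate.

    Megabits per second * 3600 seconds / 8 bits per byte gives megabytes;
    dividing by 1000 gives decimal gigabytes (GB), as storage vendors count.
    """
    return bitrate_mbps * 3600 / 8 / 1000

# Hypothetical bit rates, for illustration only.
FULL_BANDWIDTH_MBPS = 50.0   # e.g., a broadcast-quality recording
PROXY_MBPS = 1.5             # e.g., a low bit rate browse proxy

full = gigabytes_per_hour(FULL_BANDWIDTH_MBPS)    # 22.5 GB per hour
proxy = gigabytes_per_hour(PROXY_MBPS)            # 0.675 GB per hour
print(f"24 h air check: {24 * full:.0f} GB full vs {24 * proxy:.1f} GB proxy")
```

With these assumed rates, a full day of air checks drops from roughly 540 GB to about 16 GB, which is why proxies dominate logging and compliance recording.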

Repackaging and Repurposing

The archive libraries carry untold amounts of information that organizations and enterprises have elected to retain for their own future use or for that of others. In many cases, the uses for these libraries have yet to be determined; for others, there are very specific purposes for retaining the data. Over time, archives will become the principal repository for historical information in all forms, especially moving media. We will find the content linked into highly intelligent databases that become a resource catalog driven by enterprise-class search engines and strategically linked to third-party services such as Bing, Google, Amazon.com, and others we have not seen yet.

Repackaging is the process of taking existing content and making it available in a different venue or on a different platform. With the proliferation of mobile technologies, repackaging full-length features into shorter “episode”-like programs is an opportunity waiting for an audience. An archive that serves as the central repository for such content, upstream of the secondary post-production process, will deliver good value to businesses that have the magic and the insight to develop these potential new revenue-generating opportunities.

Repurposing is much like repackaging, except that it takes legacy content and re-cuts (re-edits) or shapes it to a different length, for a different audience or purpose. Businesses such as stock footage library resale, links into electronic publications referenced from mobile readers or Internet connections, and downloadable on-demand content can all make good use of archives that they own themselves or have contracted access to.

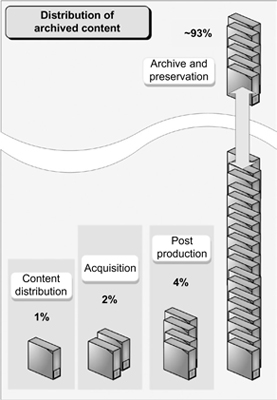

For the media and entertainment industry, ongoing directives to generate new revenue from existing content will be enabled by many of the archive platforms under their control. Multichannel video programming distributors (MVPDs) continually find new ways to use their owned content that would not have been cost effective in the past. Content management, like its close relative broadcasting, will become a growing venture as the population continues in the “any content, at any time, anywhere” direction.

Activities related to program production that will use media asset management applications in conjunction with archive systems include:

•Versioning (language related)—the adaptation of one set of video media by marrying it with different foreign languages and captioning (open and closed) services, in order to produce a new set of programs.

•Versioning (program length)—the creation of different programs aimed at differing delivery platforms, for example, short form for Web or mobile devices, long form for paid video-on-demand, etc.

•Versioning (audience ratings)—producing versions for children’s audiences, mature or adults-only audiences, or broadcast versus cable/satellite purposes.

•Automated assembly—using the asset library to reconstruct full length day part programs from short clips, such as is done on the Cartoon Network where segments (cartoons) are already prepared in the library (archive), and then called for assembly to a server for playback.

•Advertising directed—specific programs that accentuate a particular brand or manufacturer and are produced for that advertiser’s channel or program segment.

Preparing content for video-on-demand is a common activity for most MVPDs. Today, these processes are highly transcoder-centric, as different delivery service providers might require different encoding methods. The content, however, is kept in the archive in a high-resolution master format and then called out to a server cache, where it is prepared for the service by a massive transcode farm that can simultaneously make multiple (compressed) versions and deliver them over content delivery networks (CDNs) to end users and service providers.

Added Operational Requirements

An archive becomes both the protective copy and the master version when duplicate sets of the data are stored in different geographic locations. Protection copies may be seen as short-term backups and become part of an organization’s disaster recovery plan. If the master library is compromised, the protective copies become the new master, and the organization then repeats the process of creating another protective duplicate from the new master.
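The promote-and-renew cycle for geographically separated copies can be modeled in a few lines. The Python sketch below (Python 3.9 or later) is a hypothetical model, not any vendor’s replication product. The class names and site names are invented for illustration, and a real system would copy and verify data rather than just relabel records.

```python
from dataclasses import dataclass, field

@dataclass
class Copy:
    site: str
    healthy: bool = True

@dataclass
class ReplicatedArchive:
    """Hypothetical model: one master copy plus protective duplicates."""
    master: Copy
    protection: list[Copy] = field(default_factory=list)

    def fail_over(self, replacement_site: str) -> None:
        """Promote the first healthy protection copy, then renew protection."""
        if self.master.healthy:
            return  # master is intact; nothing to do
        candidates = [c for c in self.protection if c.healthy]
        if not candidates:
            raise RuntimeError("no healthy protection copy available")
        new_master = candidates[0]
        self.protection.remove(new_master)
        self.master = new_master
        # Renew protection by duplicating the new master to another site.
        self.protection.append(Copy(site=replacement_site))

archive = ReplicatedArchive(Copy("HQ"), [Copy("Vault-East")])
archive.master.healthy = False            # the master library is compromised
archive.fail_over(replacement_site="Vault-West")
print(archive.master.site, [c.site for c in archive.protection])
# Vault-East ['Vault-West']
```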

When integrated with an asset management plan that includes replicating all content and distributing it to other sites for protective purposes, the archive may be the best solution short of physically duplicating the media over a network to another near-line or online spinning disk storage center. Cloud storage is emerging as a modern means of archiving or protecting data because the data reside in “virtual” storage, which by its very nature can make its own “one-to-many” replications of the stored data.

Backup or Archive: There are Differences

The main differences between data backups and data archives were introduced earlier, but only at a high level. This next section delves into the topic as it applies to both the individual and the enterprise.

First and foremost, the purpose of a data backup is disaster recovery (DR), whereas the principal use of a data archive is preservation. The two activities differ in purpose, and that is what truly separates them.

Backups

A data backup is used to recover or restore lost or corrupted files. Its most common use is to recover an accidentally deleted file, or an entire drive of files after a laptop fails or is stolen, or after a double disk failure in a RAID 5 array requires a complete restoration of the file sets to their previous state.

Backups should be made when the equipment used to store those files is frequently moved or is routinely accessed by many users in an uncontrolled environment. Backups should be made immediately for content that is at risk of loss or whose value is so high (as with a “once in a lifetime” occurrence) that losing it would be more than just disastrous.

Backups are preventative in nature. For example, if something bad happened to a user’s files yesterday, a backup made a day, or even two or three days, earlier would at least let you restore the files to that point in time. This is, of course, better than never finding a trace of the file.

Backup software applications allow you to set up many different operating models, that is, what is backed up, how often, and under what circumstances. In media production, backups are usually made at the onset of a new version of a project. Material is transferred from the reusable in-field capture media (e.g., XDCAM, P2, or a transportable disc) to a near-line/near-term store as it is ingested and before it is used on the nonlinear editing platform. This is really a transfer and not a backup, as the original content on the original media serves as the backup until a successful copy or editing session is completed.

Backups will help to support the user in obtaining a version of a file from a few weeks or months prior, provided the backups are not purged or removed from access. Recordings or media should never be erased or destroyed until after a bona fide duplicate or backup is verified.
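One way to make “verified” concrete is to compare a cryptographic checksum of the source with one of the copy before the source media is released for reuse. The Python sketch below assumes SHA-256 as the checksum, which is a common choice but not one prescribed by the text. The file names are hypothetical stand-ins for a camera-card clip and its near-line copy.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large media files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copy_and_verify(src: Path, dst: Path) -> bool:
    """Copy src to dst, then confirm the copy is bit-for-bit identical.

    Only when this returns True should the source media be treated as
    safe to erase or reuse.
    """
    shutil.copy2(src, dst)
    return sha256_of(src) == sha256_of(dst)

with tempfile.TemporaryDirectory() as tmp:
    card = Path(tmp) / "clip0001.mxf"        # hypothetical camera-card file
    card.write_bytes(b"\x00" * 4096)          # stand-in for essence data
    nearline = Path(tmp) / "nearline_clip0001.mxf"
    ok = copy_and_verify(card, nearline)
    print("safe to erase card" if ok else "DO NOT erase: copy failed verification")
```

Hashing both files after the copy, rather than trusting the copy call’s success, is what catches silent corruption on the destination.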

Acting like Archives

Data archives generally show a history of files: where and when they existed, and potentially who accessed them, when, and who modified them in any way. Backup systems generally are not sufficient for these tasks, and frequently they will only “restore” the files to a previous location or to a local desktop application. Some backup applications can provide these features, but usually with much more difficulty or cost.
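The history an archive keeps is essentially an append-only log of events per file. The Python sketch below (Python 3.9 or later) is a hypothetical, minimal audit trail: the event fields and action names are assumptions chosen for illustration, not the schema of any particular archive product.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class AuditEvent:
    timestamp: datetime
    user: str
    action: str   # e.g., "create", "access", "modify", "move"
    path: str

class AuditTrail:
    """Append-only history of file events (hypothetical archive-side log)."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        self._events.append(event)   # events are never edited or removed

    def history(self, path: str) -> list[AuditEvent]:
        """Every event for one file, in chronological order."""
        return sorted((e for e in self._events if e.path == path),
                      key=lambda e: e.timestamp)

    def modified_by(self, path: str) -> set[str]:
        return {e.user for e in self._events
                if e.path == path and e.action == "modify"}

trail = AuditTrail()
trail.record(AuditEvent(datetime(2011, 3, 1, 9, 0), "editor1", "create", "/news/story.mxf"))
trail.record(AuditEvent(datetime(2011, 3, 2, 14, 30), "editor2", "modify", "/news/story.mxf"))
trail.record(AuditEvent(datetime(2011, 3, 1, 12, 0), "producer", "access", "/news/story.mxf"))
print([e.action for e in trail.history("/news/story.mxf")])
# ['create', 'access', 'modify']
```

A typical backup set records only file contents at a point in time, so questions such as “who modified this and when” cannot be answered from it.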

Some backup applications state they are able to create an archive version, but those claims should be thoroughly understood and tested before assuming the backup and the archive could be one and the same.

Data Backups—Not Archives

Backups are essentially short term and dynamic. They are often performed incrementally, appending only the data that have “changed” since a previous backup. Backups do not easily allow access to individual files; instead, they are often highly compressed and complex to restore if and when needed. Backup processes may be placed into the following general categories.

Full

A full backup duplicates every selected file or folder to another location on physical media such as a DVD, a Blu-ray Disc, a portable or external hard disk drive, or a data tape (e.g., Linear Tape-Open (LTO)).

Incremental

In an incremental backup, only those files that have changed since the last backup of any kind are copied. The process also resets the archive flag to indicate that each file has been saved. Incremental backups do not delete previous versions of the backed-up file(s); they simply add a copy of the newest version.
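The archive-flag mechanism can be simulated without touching a real file system. In the Python sketch below (Python 3.9 or later), the `SourceFile` class and the in-memory backup set are hypothetical stand-ins: the flag is set by hand to mimic a file system marking a changed file.

```python
from dataclasses import dataclass

@dataclass
class SourceFile:
    """A file on primary storage, with a simulated archive flag."""
    name: str
    content: bytes
    archive_flag: bool = True   # set whenever the file is created or changed

def incremental_backup(files: list[SourceFile],
                       backup_set: dict[str, list[bytes]]) -> list[str]:
    """Copy only files whose archive flag is set, then clear the flag.

    Earlier versions already in backup_set are kept; the newest version
    is appended alongside them rather than replacing them.
    """
    copied = []
    for f in files:
        if f.archive_flag:
            backup_set.setdefault(f.name, []).append(f.content)
            f.archive_flag = False   # mark the file as saved
            copied.append(f.name)
    return copied

files = [SourceFile("a.txt", b"v1"), SourceFile("b.txt", b"v1")]
backups: dict[str, list[bytes]] = {}
first = incremental_backup(files, backups)    # both files are new
files[0].content = b"v2"                       # the user edits a.txt...
files[0].archive_flag = True                   # ...and the file system sets its flag
second = incremental_backup(files, backups)
print(first, second, backups["a.txt"])
# ['a.txt', 'b.txt'] ['a.txt'] [b'v1', b'v2']
```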

Differential

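A differential backup conventionally copies all files that have changed since the last full backup. Because it does not reset the archive flag, each differential grows until the next full backup, and a restore requires only the last full backup plus the most recent differential.

Some backup applications go further in their differential mode, backing up all changed files while deleting from the backup set both the files that are missing from the source and the older versions of the files being backed up. In such applications, a comparison of each file’s attributes against the version already in the backup set determines which files are included in the differential backup process.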
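The conventional difference between differential and incremental selection is simply which reference time each one compares against. The Python sketch below (Python 3.9 or later) models that with modification timestamps; using timestamps rather than an archive flag is an assumption for illustration, and the sketch does not reproduce the deletion behavior that some applications add to their differential mode.

```python
from dataclasses import dataclass

@dataclass
class FileState:
    name: str
    mtime: float   # last-modified time, seconds since the epoch

def differential_selection(files: list[FileState], last_full_backup: float) -> list[str]:
    """Files changed since the last *full* backup.

    Nothing is reset, so the selection keeps growing until the next full
    backup; a restore needs only the full backup plus the latest differential.
    """
    return [f.name for f in files if f.mtime > last_full_backup]

def incremental_selection(files: list[FileState], last_backup_of_any_kind: float) -> list[str]:
    """For contrast: files changed since the most recent backup of any kind."""
    return [f.name for f in files if f.mtime > last_backup_of_any_kind]

# Hypothetical timeline: full backup at t=100, another backup at t=200.
files = [FileState("a", 150), FileState("b", 250), FileState("c", 50)]
print(differential_selection(files, last_full_backup=100))          # ['a', 'b']
print(incremental_selection(files, last_backup_of_any_kind=200))    # ['b']
```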

“Mirror”

A mirror keeps the set of backup folders continuously synchronized with the source set of folders. When a source file changes in any way, a copy of that change is placed into the backup. This process usually happens immediately, similar in function to a RAID mirror (RAID 1), except that the backup files are placed in a separate location on a separate set of media.
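A one-way mirror pass can be sketched with two dictionaries standing in for the source and backup folder trees. This Python sketch (Python 3.9 or later) is illustrative only; a real mirror would compare file attributes or checksums on disk and run continuously rather than on demand.

```python
def mirror(source: dict[str, bytes], backup: dict[str, bytes]) -> dict[str, list[str]]:
    """One-way mirror: make backup an exact replica of source.

    source and backup map relative paths to file contents (stand-ins for
    two folder trees on separate media). New or changed files are copied;
    files deleted from the source are deleted from the mirror as well.
    """
    report: dict[str, list[str]] = {"copied": [], "deleted": []}
    for path, data in source.items():
        if backup.get(path) != data:
            backup[path] = data
            report["copied"].append(path)
    for path in list(backup):        # copy the keys, since we delete while looping
        if path not in source:
            del backup[path]
            report["deleted"].append(path)
    return report

source = {"a.mov": b"1", "b.mov": b"2"}
backup = {"a.mov": b"1", "c.mov": b"old"}
print(mirror(source, backup))   # {'copied': ['b.mov'], 'deleted': ['c.mov']}
```

Note that a deletion or corruption at the source propagates to the mirror on the next pass, which is one reason a mirror protects against hardware loss but is no substitute for an archive.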

Snapshot

A snapshot is an instantaneous capture of the current files, like a full backup, but without marking the files as saved. High-performance intelligent storage systems can take recurring snapshots of the file systems to maintain as current an image of the overall system as possible. Most backup applications are not that sophisticated.

Internal Application-Specific Backups

Individual media software applications frequently have their own versions of backups that are used to return a set of functions to their previous state, usually through the “undo” function. In nonlinear editing (NLE) platforms, production bins containing raw material, in-production segments, and associated metadata are retained until the final composition is committed to rendering. Once the finished piece is rendered, some or all of the elements, plus the history of those operations, may be pushed off to an archive for permanent storage. The more sophisticated platforms may retain all the individual steps from the timeline so that segments of the work in process can be recovered for future use.

Some applications have plug-ins that link to third-party applications that manage the migration of these files, elements, and metadata to a tape- or disk (disc)-based archive. If all the elements are migrated to the archive, the entire production process can be recovered. However, once a file is “flattened” and the timeline information is purged, the historical information surrounding the editing process is most likely lost forever. The following section describes how one product line from Avid Technology has bridged the integration gaps with third-party archive solutions.

Archive and Transfer Management—Nonlinear Editing

The Avid TransferManager “Dynamically Extensible Transfer” (TM-DET) is part of Avid’s tool kit for migrating OMF-wrapped media files (JFIF, DV, I-frame MPEG) to third-party destinations such as a central archive. DET also enables a vendor to restore previously transferred media files back into Avid Unity (“DET Pull”), with Interplay Transfer or TransferManager performing an Interplay Engine or MediaManager check-in (essentially, logging media metadata into the centralized database).

DET is the appropriate API to use when the target file is already an Avid media file wrapped with MXF or OMF metadata. To allow native editing, the media files must be wrapped in MXF or OMF by an Avid editor, by the ingest platform or through the Avid TransferManager.

The Avid TransferManager “Data Handling Module” (TM-DHM) tool kit enables vendors to create a plug-in that works with Interplay Transfer and TransferManager to perform playback and ingest transfers. Playback and ingest transfers are distinct from standard file transfers in that they first convert a sequence to or from a stream of video frames and audio samples that are then transferred over an IP network.

During an ingest session, the Transfer application accepts a multiplexed stream of video and audio frames and then converts the stream into an Avid-compatible clip by wrapping it in MXF or OMF format and checking the associated metadata into the Interplay Engine or MediaManager system. When incorporated with third-party archive applications, these solutions provide an integrated approach to media production, protection, and extensibility as production requirements change and the need to reconstruct post-production processes arises.

Differentiation

Differentiating between how the archive stores data and what data the archive stores is fundamental to understanding backup and archive functionality. Looking deeper into archives, especially those oriented to moving media processes, one finds two subsets of archiving processes: those that occur in real time during capture or ingest, and those that happen at the conclusion of a production process or asset management routine.

In e-discovery (also “eDiscovery”), the data archive process watches the system and archives its data in real time as the data are created. The data backup routine, on the other hand, runs as a batch process at prescribed intervals, usually nightly.

Another difference between backup and archive processes is the level of detail the archive stores. Data archives store all the supplemental information associated with a file, both structural metadata about the file and descriptive metadata about the content. For example, when email is archived, the data archiving software stores information such as the subject line, the sender, and the receiver, and it may also look into the body and attachments for keywords. Google Desktop is an example of how deep an archive might go for what are purely “search” functions, and of what can be done with that data from an extensibility perspective.

Archives store this information in a linked database, along with the documents and email attachments themselves, much as a data backup system would. Archives then take this data, create metatags and other relevant statistics, and put them into a searchable database that is accessible to users and search engines. In contrast, a backup simply stores data on a tape or an external drive, without tagging or search integration; it is essentially a file system record without search capabilities.
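The searchable database an archive builds is, at its core, an inverted index from terms to records. The Python sketch below (Python 3.9 or later) is a toy index over archived email, assuming a hypothetical `from:`/`to:` prefix convention for structural fields and plain word indexing for descriptive content. Production archives use full search engines, but the principle is the same.

```python
import re
from collections import defaultdict

class ArchiveIndex:
    """Toy inverted index over archived email metadata and body text."""

    def __init__(self) -> None:
        self.records: dict[int, dict[str, str]] = {}
        self.terms: defaultdict[str, set[int]] = defaultdict(set)

    def add(self, record_id: int, sender: str, receiver: str,
            subject: str, body: str) -> None:
        self.records[record_id] = {"sender": sender, "receiver": receiver,
                                   "subject": subject}
        # Structural metadata is indexed as whole field values...
        self.terms[f"from:{sender.lower()}"].add(record_id)
        self.terms[f"to:{receiver.lower()}"].add(record_id)
        # ...while descriptive content is indexed word by word.
        for word in re.findall(r"\w+", f"{subject} {body}".lower()):
            self.terms[word].add(record_id)

    def search(self, *query: str) -> set[int]:
        """Return ids of records matching every query term (AND search)."""
        sets = [self.terms.get(q.lower(), set()) for q in query]
        return set.intersection(*sets) if sets else set()

idx = ArchiveIndex()
idx.add(1, "jane@example.com", "bob@example.com", "Rights renewal",
        "The syndication rights expire in June.")
idx.add(2, "bob@example.com", "jane@example.com", "Re: Rights renewal",
        "Agreed, renew the rights.")
print(idx.search("rights", "from:jane@example.com"))   # {1}
print(idx.search("renew"))                             # {2}
```

A backup of the same mailbox would hold identical bytes, but answering either query would mean restoring and scanning every message.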

Archiving for Discovery

A backup application would not be used for e-discovery, that is, for determining which references are to be used as discovery in civil litigation involving the exchange of information in electronic format. These data, also referred to as Electronically Stored Information (ESI), are usually (but not always) part of a digital forensics analysis performed to recover evidence. The people involved in these processes include forensic investigators, lawyers, and IT managers, and all these differences lead to confusion over terminology.

Data that attorneys identify as relevant are then placed on “legal hold,” which forces the archive (and sometimes temporary backups performed prior to archiving) into suspension, excluding the system from further use until the data are properly collected as evidence. Once secured, this evidence is extracted and analyzed using digital forensic procedures and is often converted into paper or equivalent electronic files (such as PDF or TIFF) for use in legal proceedings or court trials.
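In software terms, a legal hold is an override on normal retention: routine purging must skip anything under hold. The Python sketch below (Python 3.9 or later) shows that rule with hypothetical item and matter identifiers. Modeling holds as a set of matters is an assumption chosen so that an item stays protected until every matter releases it.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class ArchivedItem:
    item_id: str
    retain_until: date                             # end of normal retention
    holds: set[str] = field(default_factory=set)   # matters that placed a hold

def purge_expired(items: list[ArchivedItem], today: date) -> list[str]:
    """Remove items past retention unless any legal hold applies."""
    purged = []
    for item in list(items):          # iterate over a copy while removing
        if item.retain_until < today and not item.holds:
            items.remove(item)
            purged.append(item.item_id)
    return purged

archive = [
    ArchivedItem("email-001", date(2010, 1, 1)),
    ArchivedItem("email-002", date(2010, 1, 1), holds={"matter-42"}),
    ArchivedItem("email-003", date(2015, 1, 1)),
]
purged = purge_expired(archive, today=date(2011, 1, 1))
print(purged, [i.item_id for i in archive])
# ['email-001'] ['email-002', 'email-003']
```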

If data are put on hold because you are being sued or investigated by a legal entity, you will most likely not be asked to restore things to the way they looked in the past. Instead, a snapshot of the entire data system is made or the entire storage system is archived so that no loss or corruption of the data/information (e.g., all emails with specific keywords in them between one person and another, company to company, or intra-company files in a particular directory) can occur.

The time, the sequence, and the identity of whoever created or modified the content are the prime requirements to preserve in this kind of data recovery and collection.

Data Archiving

The process of moving data that are no longer actively used to a separate data storage device for long-term retention is called “data archiving.” The material in data archives consists of older data that are still important and necessary for future reference, as well as data that must be retained for regulatory compliance. Data archives are indexed and have search capabilities so that files and parts of files can be easily located and retrieved.

Data archives are often confused with data backups, which are copies of data used for recovery. Treating the two as interchangeable is unwise and, for the reasons discussed earlier, risky and not recommended. To review, data backups are used to restore data in case they are corrupted or destroyed. In contrast, data archives protect older information that is not needed for everyday operations but may occasionally need to be accessed.

Data may be archived to physical media and to virtual space such as “the cloud.” Physical media include magnetic tape, spinning magnetic disks, optical media, holographic media, and solid state memory platforms. Virtual space is where media are sent to and managed by another entity, one that has taken sufficient precautions to duplicate, back up, and store the data on other forms of physical media, all of which are probably unknown to the user who contracts for this virtual storage or archive space.

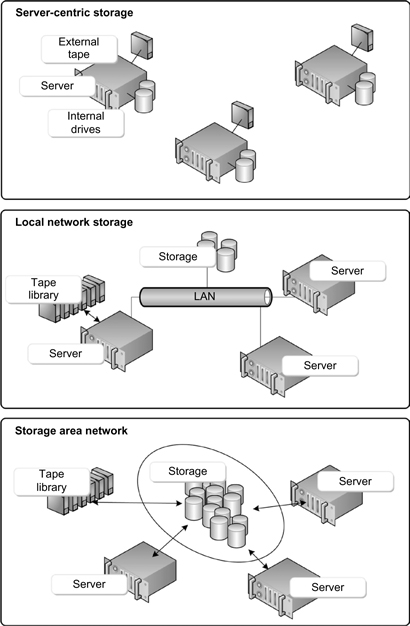

The evolution of tape storage management began with simple direct-attached storage (DAS) devices and has grown steadily into storage area network (SAN) domains. Tape, although regarded as more than just a way to meet the demand for increased storage capacity, has developed in lockstep with disk drive storage applications. Figure 12.1 shows three hierarchical variations of storage management: server-centric storage, local area network (LAN) storage, and storage area network (SAN) approaches.

The next sections of this chapter open the door to the two foremost methods of storing digital media in the archive: disk-based and tape-based physical media.

Figure 12.1 Storage management hierarchy.

Tape or Disk

Archiving moving media data is vital for several reasons. Archiving data, whether for the long term or the short term, allows the organization to retrieve that data for alternative uses while preserving the aesthetics of that time and place in history indefinitely. Government regulations are also driving data retention, requiring data to be kept for a specific number of years. With these perspectives in mind, companies will look for data archiving strategies that allow them to fulfill both government regulations and their own internal policies.

Three principal archiving storage methods are available today: the organization may select disk-based archiving, tape-based archiving, or a combination of both. Although there was interest in looking beyond these two, specifically to holographic solid state archiving, those hopes seemed to peak around 2005 and have slowly faded from the landscape in recent years.

Pros and Cons

Marketing has a wonderful way of making one product, technology, or implementation look a whole lot better than its counterpart. The end user is forever challenged by the ways in which the performance, reliability, and longevity of the two primary alternatives for archiving, tape and disk, are represented.

There are several advantages and disadvantages to disk archiving and tape archiving. Organizations often choose a hybrid mix, using disk, termed “near-line” or “near-term” storage, for short-term archives that require a high volume of data exchanges. Once the need for rapid access diminishes significantly, the data are transferred, i.e., “archived,” to data tape for long-term retention.

Access requirements vary depending upon your workflow and your mission. In the first few years of any retention period, keeping your active data highly accessible so that you can retrieve it quickly is critical. Content that you simply need to retain, but do not need to access, is the first candidate for moving to tape. Ultimately, at least at this point in technology, tape becomes the most logical choice for long-term archives, regardless of the capabilities you might have or desire in a disk platform.
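The hybrid disk-then-tape approach is usually expressed as a policy that a datamover applies periodically. The Python sketch below assumes a simple idle-time rule; the 180-day threshold, asset names, and tier labels are hypothetical, and a real datamover would copy and verify each asset to tape before freeing the disk space.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Asset:
    name: str
    tier: str              # "disk" (near-line) or "tape"
    last_access: datetime

def apply_tiering(assets: list[Asset], now: datetime,
                  idle_threshold: timedelta) -> list[str]:
    """Move assets idle longer than idle_threshold from near-line disk to tape."""
    moved = []
    for a in assets:
        if a.tier == "disk" and now - a.last_access > idle_threshold:
            a.tier = "tape"    # in practice: copy, verify, then release disk space
            moved.append(a.name)
    return moved

assets = [
    Asset("promo_spring", "disk", datetime(2011, 5, 20)),   # recently used
    Asset("ep101", "disk", datetime(2010, 9, 1)),           # idle for months
]
print(apply_tiering(assets, now=datetime(2011, 6, 1),
                    idle_threshold=timedelta(days=180)))    # ['ep101']
```

Access-count or age-since-creation rules are common alternatives; the idle-time rule is used here only because it maps directly to “the necessity for rapid access diminishes.”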

For short-term archives of databases, i.e., files not containing moving media, metadata, or search engine data sets, users could employ technologies such as Oracle’s Sun Storage Archive Manager (SAM) to move data to disk. This software provides data classification, centralized metadata management, policy-based data placement, protection, migration, long-term retention, and recovery to help enterprise-class organizations effectively manage and use data according to business requirements.

Self-Protecting Storage Systems

The Oracle SAM uses a self-protecting file system that offers continuous backup and fast recovery methods to aid in productivity and improve the utilization of data and system resources.

One patented method for monitoring attempts to access protected information, called a self-protecting storage system, allows access for authorized host systems and devices while preventing unauthorized access. In this process, authorization includes a forensic tracking means that inserts a watermark into access commands, such as I/O requests, sent to the storage device itself. These access commands are then verified before access is permitted.

Passwords are certainly the most frequently used method of protecting access to data; however, in one embodiment, the block addresses in I/O requests are encrypted at the host device and decrypted at the self-protecting storage device. The decrypted block addresses are compared to an expected referencing pattern, and if a sufficient match is found, access to the stored information is granted.
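The general idea (tag each request at the host, verify the tag and the access pattern at the device) can be illustrated with standard cryptographic building blocks. The Python sketch below is not the patented scheme. It swaps in an HMAC-SHA256 watermark over a request sequence number and block address in place of block-address encryption, and it models the “expected referencing pattern” as in-order requests within a permitted block range. The key, range, and class names are all hypothetical.

```python
import hashlib
import hmac
import struct

SHARED_KEY = b"hypothetical-provisioned-key"   # given only to authorized hosts

def tag_request(key: bytes, seq: int, block: int) -> bytes:
    """Host side: watermark an I/O request with an HMAC over (sequence, block)."""
    return hmac.new(key, struct.pack(">QQ", seq, block), hashlib.sha256).digest()

class SelfProtectingDevice:
    """Toy device that verifies each request's watermark and access pattern."""

    def __init__(self, key: bytes, allowed: range) -> None:
        self._key = key
        self._allowed = allowed   # hypothetical expected extent of block addresses
        self._next_seq = 0

    def read(self, seq: int, block: int, tag: bytes) -> bool:
        expected = tag_request(self._key, seq, block)
        if not hmac.compare_digest(tag, expected):
            return False          # watermark invalid: not an authorized host
        if seq != self._next_seq or block not in self._allowed:
            return False          # replayed/out of order, or outside the pattern
        self._next_seq += 1
        return True

dev = SelfProtectingDevice(SHARED_KEY, allowed=range(0, 1024))
ok = dev.read(0, 10, tag_request(SHARED_KEY, 0, 10))            # True
forged = dev.read(1, 11, tag_request(b"wrong-key", 1, 11))      # False
outside = dev.read(1, 5000, tag_request(SHARED_KEY, 1, 5000))   # False
print(ok, forged, outside)
```

`hmac.compare_digest` is used for the comparison because it runs in constant time, avoiding a timing side channel when checking tags.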

Self-protection can be provided to a range of storage devices, including SD flash memory, USB thumb drives, computer hard drives, and network storage devices. A variety of host devices, such as cell phones and digital cameras, can be used with self-protecting storage devices. This methodology could be added to tape archive systems such as LTO, which could embed metadata and track location identifiers into the physical properties of the cartridge itself. It could also be added to camcorders or solid state media storage subsystems at the time of image capture, allowing access control to be carried forward through the production and post-production stages and on through release and archive.

Disk Archiving Pluses

Disk archiving has several advantages. Disks provide a much faster means to archive and restore data than tape, and they are easier to index and search than tape archives. A user wanting to locate and recover a single file can do so in a short time on disk. However, storing large files as archives on disk consumes a great deal of storage, not to mention floor space and energy. For a large-scale, occasionally accessed archive, using disk storage exclusively is costly.

Tape Archiving Pluses

Many organizations archive huge amounts of data on tape for long-term storage. This method requires less floor space, less energy, and less cooling capacity when compared with comparable storage capacities on spinning disks.

Using tape for archiving purposes can provide better security, especially when the files are encrypted; leaving any system online and connected to a network is an opportunity waiting for a hacker. Although IT administrators hope never to have to search individual tapes for information (which is not particularly complex if an asset management system is directly coupled to the archive platform), they know the option is there should it be necessary.

The scale of the storage requirement is a prime reason for using a tape-based archive system. Density-wise, tape will most likely always have an edge over disk. If you are looking at tape because you have more than 100 TB of data, that is a good benchmark for today. However, if your needs are for less than 50 TB of data, you should carefully evaluate whether a tape library solution is required; a single-transport, application-specific tape system might serve the organization well.

Storage Retention Period

When the organization intends to store its data for 10–15 years, the total cost of ownership (TCO) for a tape-based solution will be less than that of a disk-based solution, even as disk prices continue to slide downward and capacities move upward. With tape, you do not have to go through migration; and if the media is not spinning, you are not paying for energy, maintenance, or the replacement of costly individual components.
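The 10 to 15 year comparison can be made concrete with a small cost model. Every figure and field name below is a hypothetical placeholder, and the model follows the text's assumption that tape needs no forced migration within the period, while disk is refreshed on a fixed cycle (five years here, which is also an assumption).

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    """Cost inputs for one archive platform (all figures hypothetical)."""
    name: str
    capex: float               # up-front hardware and software purchase
    annual_energy: float       # power and cooling per year
    annual_maintenance: float  # support contracts and component replacement per year
    migration_cost: float      # cost of one forced platform/media migration
    migration_interval: int    # years between forced migrations (0 = none)


def total_cost(opt: StorageOption, years: int) -> float:
    """Capital + recurring + migration costs over a retention period of `years`."""
    recurring = (opt.annual_energy + opt.annual_maintenance) * years
    migrations = 0
    if opt.migration_interval > 0:
        # Count migrations that fall strictly inside the period
        # (e.g. years 5 and 10 for a 15-year period on a 5-year refresh cycle).
        migrations = (years - 1) // opt.migration_interval
    return opt.capex + recurring + migrations * opt.migration_cost


if __name__ == "__main__":
    disk = StorageOption("disk", 200_000, 30_000, 20_000, 80_000, 5)
    tape = StorageOption("tape", 250_000, 3_000, 15_000, 0, 0)
    for years in (1, 5, 10, 15):
        print(years, total_cost(disk, years), total_cost(tape, years))
```

With these placeholder inputs, disk is cheaper in the first year while tape pulls well ahead by year 15; real quotes for power, support, and migration labor will move the crossover point.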

Disadvantages of Tape

Tape has significant disadvantages that many organizations struggle with. Those that use a mix of disk and tape to archive data say they still run into trouble when trying to restore from older tape media. Tape cartridges can become corrupted over time and be found unreadable, and both the transport devices and the physical media age. Older formats are most likely no longer supported, meaning users may not be able to recover data that have been sitting on older tapes when they need to. Tape formats are often proprietary, and the data can only be recovered on the same transports with the same software (especially if encrypted). This is one of the means vendors use to lock users into their format until that equipment is discarded or abandoned for another.

For legal activities (such as e-discovery), indexing and searching on tape systems without an external metadata or asset management system is time consuming and frustrating. When a tape library from nine years ago was created with different backup software or a different operating system than what is currently in place, users may be in for a long process of restoration and searching just to extract one or a few files from the library.

A secondary tracking system greatly reduces the considerable amount of time required to identify the right tapes, and the right place on those tapes, where the data are located. Without this kind of third-party application support, a user might end up literally restoring as many tapes as possible, hoping to find the needle in the haystack.
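A secondary tracking system can be as simple as an index, written at archive time, that maps each file to the cartridge and position holding it. The sketch below is hypothetical: the `TapeCatalog` class, the barcode field, and the use of a tape file number as the position are assumptions for illustration, not the schema of any asset management product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TapeLocation:
    barcode: str      # cartridge label as read by the library's scanner
    file_number: int  # position of the file on the cartridge (count of tape marks)
    size_bytes: int


class TapeCatalog:
    """Hypothetical index from archived file path to the tape copies holding it."""

    def __init__(self) -> None:
        self._index: dict[str, list[TapeLocation]] = {}

    def record(self, path: str, loc: TapeLocation) -> None:
        """Register a copy of `path` at the moment it is written to tape."""
        self._index.setdefault(path, []).append(loc)

    def locate(self, path: str) -> list[TapeLocation]:
        """Every known copy of `path` (empty list if it was never archived)."""
        return list(self._index.get(path, []))

    def search(self, term: str) -> list[str]:
        """Archived paths containing `term`, case-insensitive, sorted."""
        t = term.lower()
        return sorted(p for p in self._index if t in p.lower())

    def tapes_needed(self, paths: list[str]) -> set[str]:
        """Barcodes to mount to restore `paths`, using the first copy of each."""
        return {self._index[p][0].barcode for p in paths if p in self._index}
```

Because the catalog lives outside the tapes, a search touches no cartridge at all; only the barcodes returned by `tapes_needed` have to be mounted.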

Legacy Tape Archive Problems

For those organizations that feel tape is the best method for archiving, third-party solutions are available that aid in making search and indexing easier. Tape backup programs have useful lives built around the operating system that was the most prominent at the time the company wrote the application. As time marches on, those programs become obsolete, cease to be supported, or worse yet, the company that provided the software simply evaporates.

This makes the selection process for both the tape library and the software applications that support the backup or archive all the more complicated. There are programs available that are designed to search and extract files that are stored on tape archives without a secondary asset management routine or application. These “tape assessment programs” are able to search old tape libraries for a specific file, or set of files, and once found, can extract them without having to use the original backup software that was used to create those files.

Standards-Based Archive Approaches

For the moving media industries (film and television), there are new developments that help mitigate legacy format issues like those expressed previously. Part of the work that went into designing SMPTE's Material eXchange Format (MXF) has been added to the latest releases of LTO-5. This application-specific technology is discussed later in this chapter in the section on LTO. There is also work underway on an open archive protocol.

Common Backup Formats

Common backup formats for the data side of the enterprise include:

•CA Technologies’ “ARCserve”

•CommVault’s “Simpana”

•EMC’s “NetWorker”

•IBM’s “Tivoli Storage Manager” (TSM)

•Symantec’s “Backup Exec” and “NetBackup”

According to storage and archive reports (2009), 90% of the marketplace uses these platforms for backup protection.

As for physical tape, Digital Linear Tape (DLT) and LTO seem to be the remaining leading players. For the purposes of recovering legacy data on older formats or media, anything that can attach to a SCSI connection should be recoverable using alternative third-party resources or applications. It is a matter of how often, and under what time constraints, the organization wants to experience the pain of recovering data from a poorly kept or mismanaged archive.

Determining the Right Archiving Method

Today, companies set their overall data archiving strategy and choose their archive systems based upon storage requirements, business activities, and sometimes legal policies. When you need to store data only for a short period of time, will not be storing hundreds of terabytes, and have no long-term retention requirements, archiving to a disk-based platform is the better solution.

If your requirements are to store a large amount of data long term that the organization does not need to access (at least not in a relatively short time frame) but must keep around for litigation purposes, archiving to tape is the easier and less costly option. Nonetheless, many companies end up leaning toward using both tape and disk methods for archiving. At least for the present, this is often the better way to go.
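The guidance in the last two paragraphs, together with the 100 TB benchmark mentioned earlier, can be condensed into a rule-of-thumb function. The function name and the three-year cutoff for "a short period of time" are assumptions; the chapter gives no exact number, and real decisions weigh many more factors than these three inputs.

```python
LARGE_ARCHIVE_TB = 100     # benchmark cited in the text for considering tape
SHORT_RETENTION_YEARS = 3  # hypothetical cutoff for "a short period of time"


def choose_archive_target(capacity_tb: float, retention_years: float,
                          frequent_access: bool) -> str:
    """Return 'disk', 'tape', or 'disk+tape' following the chapter's rules of thumb."""
    short_term = retention_years < SHORT_RETENTION_YEARS
    large = capacity_tb >= LARGE_ARCHIVE_TB
    if short_term and not large:
        # Small, short-lived, no long-term retention requirement.
        return "disk"
    if large and not short_term and not frequent_access:
        # Big, long-lived, rarely touched (e.g. held for litigation purposes).
        return "tape"
    # Mixed requirements: active copies on disk, deep copies on tape.
    return "disk+tape"
```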

Cloud Data Protection

An up-and-coming alternative that pleases the financial side of operations is the “cloud,” the ubiquitous virtual place in the sky that you cannot touch, see, or feel. Enterprises that protect their data exclusively through on-premises data storage, backup, or archive systems face potential challenges associated with complexity of implementation, capital and operational costs of ownership, and capacity shortfalls.

The infrastructure necessary for sufficient protection of the enterprise’s digital assets represents a substantial capital investment and must factor in continual investment over time. Even after acquiring the hardware and software, the enterprise must deal with the networking subsystems and the headache of maintenance, including responsibility for a complex and burdensome disk and/or tape management process. The story does not end there.

The ongoing “lights-on” expenses and resource commitments place continued burdens that might prevent you from using the limited resources available on more strategic projects that are paramount to your actual business. Spending time monitoring the progress of backups and finding answers to troublesome problems is counterproductive to meeting the mandate of doing more with less.

Organizations, given the economic climate of the years surrounding 2010, are turning to cloud-based data protection as a solution to their unforeseen problems. Cloud solutions are growing, with a nearly 40% expansion in the use of third-party cloud storage for off-site copies expected by 2012 (reference: “Data Protection Trends,” ESG, April 2010).

Cloud Benefits

Data protection (not necessarily archiving) is an ideal application that can be moved to the cloud. For most companies, data protection is not a strategic part of their core operation; it is more a task that is necessary because of policies that are both legal and financial in structure.

It is relatively easy to buy, deploy, and manage cloud-based data protection. Cloud-based solutions avoid the upfront capital investments and the costly requirement of continued upgrades to networks, tape, disk, and server infrastructures. Relieving this burden enables the enterprise to remain agile and to provide a competitive edge without having to support, train, replace, manage, and handle the ongoing issues of data backup and/or archive. This storage solution also becomes an OpEx instead of a CapEx commitment.

Scaling

When capacity requirements are unknown, such as when a business acquisition requires a new structure in data retention, cloud-based storage capacity can scale effortlessly to meet the dynamics of the enterprise with regard to backup and archive.

Services Provided

When looking to the cloud for backup or archive support, look for features such as advanced encryption and other security technologies aimed at safeguarding your data during the transmission, storage, and recovery processes. A cloud-based service should provide built-in deduplication for capacity and bandwidth optimization. The service should employ underground data storage facilities that can satisfy the strictest security standards.
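Deduplication stores each unique piece of data once and replaces repeats with references, which saves both capacity and upload bandwidth. The sketch below assumes fixed-size chunks identified by SHA-256 digests; many commercial services use variable-size (content-defined) chunking and their own indexing, so `DedupStore` is purely illustrative.

```python
import hashlib


class DedupStore:
    """Hypothetical fixed-size-chunk deduplicating store."""

    def __init__(self, chunk_size: int = 4096) -> None:
        self.chunk_size = chunk_size
        self.chunks: dict[str, bytes] = {}  # digest -> unique chunk data

    def put(self, data: bytes) -> list[str]:
        """Store `data` and return its recipe: the ordered list of chunk digests."""
        recipe = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            # Only chunks not already held need to be transmitted and stored.
            self.chunks.setdefault(digest, chunk)
            recipe.append(digest)
        return recipe

    def get(self, recipe: list[str]) -> bytes:
        """Reassemble the original data from its recipe."""
        return b"".join(self.chunks[d] for d in recipe)

    def stored_bytes(self) -> int:
        """Bytes actually held after deduplication."""
        return sum(len(c) for c in self.chunks.values())
```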

Insist that all data are replicated to a second, geographically separate, fully redundant data center. Proactive administration and monitoring of the data should be provided as an automated, standard service. Continuous backups should cover open files and databases. For media assets, the system’s network access points must be able to handle jumbo frames and multi-gigabyte files.

For operational activities and for disaster recovery, the cloud should provide appliances that are capable of a fast recovery for large amounts of data, especially when deadlines loom or when collaborative post-production processes span continents.

The connections into a content delivery network suited to the business practices and requirements of your organization should also be considered. The gateway into the cloud-storage protective service should be capable of optimizing network traffic without necessarily depending upon a private network.

Holographic Storage for the Archive

Holographic storage is truly a form of solid state storage. Making use of the same principles as those in producing holograms, laser beams are employed to record and read back computer-generated data that are stored in three dimensions on a durable physical piece of plastic, glass, or polymer type media. The target for this technology is to store enormous amounts of data in a tiny amount of space.

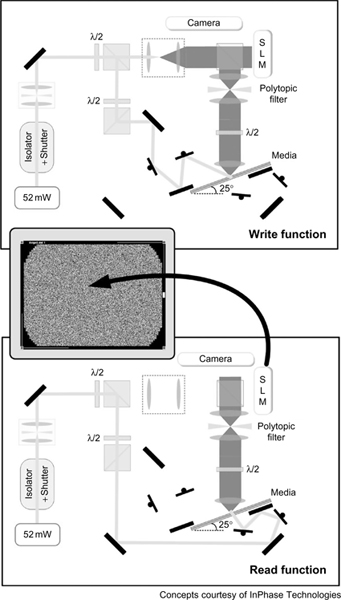

Although holographic storage has not yet been successfully mass-commercialized, many vendors have worked on it, including (in 2002) Polaroid spinoff Aprilis and Optware (Japan). The best known of these vendors is InPhase Technologies. Originally founded in December 2000 as a Lucent Technologies venture spun out of Bell Labs research, InPhase worked on a holographic storage product line capable of storing in excess of 200 gigabytes of data, written four times faster than current DVD drives. InPhase ceased doing business in February 2010 but was quickly reacquired in March 2010 by Signal Lake, a leading investor in next-generation technology and computer communications companies, which was also responsible for the original spinoff from Bell Labs in late 2000. Figure 12.2 shows the fundamental read and write processes employed in holographic storage.

Figure 12.2 System architecture for holographic data storage.

Holography breaks through the density limits of conventional storage by recording through the full depth of the storage medium. Unlike other technologies that record one data bit at a time, holography records and reads over a million bits of data with a single flash of laser light, producing transfer rates significantly higher than those of current optical storage devices. Holographic storage combines high storage densities and fast transfer rates with durable, low-cost media at a much lower price point than comparable physical storage materials.
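The throughput advantage of page-based recording is simple arithmetic: if each flash reads a whole page, throughput is bits per page times pages per second. The sketch uses the text's "over a million bits per flash" figure; the rate of 1,000 flashes per second is a hypothetical value chosen only to make the comparison concrete.

```python
def page_throughput_mb_s(bits_per_page: int, pages_per_second: float) -> float:
    """Throughput in Mbytes/second when each laser flash reads a whole page of bits."""
    bits_per_second = bits_per_page * pages_per_second
    return bits_per_second / 8 / 1_000_000  # bits -> bytes -> megabytes (decimal)


if __name__ == "__main__":
    flashes = 1_000  # hypothetical page (flash) rate per second
    print("page-parallel:", page_throughput_mb_s(1_000_000, flashes), "Mbytes/s")
    print("one bit per flash:", page_throughput_mb_s(1, flashes), "Mbytes/s")
```

At the same event rate, reading a million bits in parallel multiplies throughput a millionfold over a bit-serial channel, which is the essence of the claim above.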

The flexibility of the technology allows for the development of a wide variety of holographic storage products, ranging from consumer handheld devices to storage products for the enterprise. Holographic storage could offer 50 hours of high-definition video on a single disk, 50,000 songs on media the size of a postage stamp, or half a million medical X-rays on a credit card.

For permanent archiving, holographic storage is a technology with tremendous potential, since the life expectancy of the media and the data could be measured in centuries. The advantages of holography over tape storage then become increased density, higher durability, and less susceptibility to destruction by electromagnetic fields, water, or the environment in general.

The first demonstrations of products aimed at the broadcast industry were at the 2005 National Association of Broadcasters (NAB) convention, held annually in Las Vegas, where a prototype was shown at the Maxell Corporation of America booth. In April 2009, GE Global Research demonstrated its own holographic storage material, which employed discs that would use read mechanisms similar to those found in Blu-ray Disc players. The disc could reportedly store upwards of 500 Gbytes of data.

RAIT

Introduced in the late 1990s and early 2000s, Redundant Array of Inexpensive Tapes (RAIT) is a means of storing data on a collection of N+1 tapes that are aggregated to act as a single virtual tape. Typically, data files are written simultaneously in blocks to N tapes in stripes, with parity information, computed as a bitwise exclusive-OR of the data blocks, stored on one or more additional tapes. A RAIT system will have higher performance than systems that only store duplicate copies of information. The drawback is that because data are striped across multiple tapes, reading a particular set of data requires that all the tapes holding that data be mounted and then read simultaneously and synchronously in order to reconstruct any of the files stored on them.

Although the system uses parity information to reconstruct data lost because a tape has gone missing or holds unrecoverable data, when one or more tapes are found unreadable the system must still wait until all of the remaining tapes can be read before the parity information can be used to reconstruct the data set.
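The striping and parity behavior described above can be sketched in a few lines. Tapes are modeled as in-memory lists of blocks, and the function names are hypothetical; note that `rebuild` has to consume every surviving tape before the missing one can be regenerated, which is the mount-and-read-everything drawback the text describes.

```python
from functools import reduce
from typing import List, Optional

Tape = List[bytes]  # one tape modeled as a sequence of fixed-size blocks


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Bitwise exclusive-OR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def stripe(data: bytes, n: int, block: int) -> List[Tape]:
    """Write `data` across n data tapes plus one parity tape (the last tape)."""
    stripe_len = n * block
    padded = data + b"\x00" * (-len(data) % stripe_len)  # fill the final stripe
    tapes: List[Tape] = [[] for _ in range(n + 1)]
    for start in range(0, len(padded), stripe_len):
        blocks = [padded[start + i * block:start + (i + 1) * block] for i in range(n)]
        for i, blk in enumerate(blocks):
            tapes[i].append(blk)
        tapes[n].append(xor_blocks(blocks))  # parity block for this stripe
    return tapes


def rebuild(tapes: List[Optional[Tape]]) -> List[Tape]:
    """Recover at most one missing tape (None) by XOR-ing every surviving tape."""
    missing = [i for i, t in enumerate(tapes) if t is None]
    if len(missing) > 1:
        raise ValueError("single parity can recover only one missing tape")
    if not missing:
        return list(tapes)
    survivors = [t for t in tapes if t is not None]
    # All surviving tapes must be read, stripe by stripe, to regenerate the lost one.
    recovered = [xor_blocks(list(row)) for row in zip(*survivors)]
    out = list(tapes)
    out[missing[0]] = recovered
    return out


def read_back(tapes: List[Tape], n: int, length: int) -> bytes:
    """Reassemble the original byte stream from the n data tapes."""
    return b"".join(b"".join(row) for row in zip(*tapes[:n]))[:length]
```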

Since RAIT's introduction, manufacturers of tape-based storage systems have looked at means to protect tape-based information in archives and backup systems in a fashion similar to RAID protection for disks. The concepts included extending high data rate throughput, robustness, and virtualization. Through the use of striping, quality of service (QoS) would be improved by combining a number of data stripes (N) with a number of parity stripes (P). Defined as “scratch mount time,” N + P sets would define the QoS for a particular configuration.

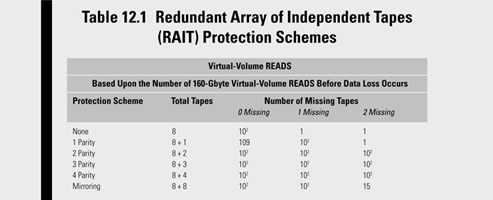

By implementing parity, much like that in a disk RAID set (e.g., RAID 5), the risk of data loss is mitigated. For example, in a RAIT configuration composed of eight striped data tapes plus one to four parity tapes, the number of virtual-volume reads that can be performed before data loss, when one or two tapes go missing from the set, is elevated according to Table 12.1.
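Table 12.1's figures are not reproduced here, but the trend can be illustrated with a simple binomial model. It assumes each tape in an 8 + P set is unreadable independently, with a hypothetical probability p on any given read, and that data are lost only when more than P tapes fail at once; real failure behavior is neither independent nor constant.

```python
from math import comb


def loss_probability(n_data: int, n_parity: int, p: float) -> float:
    """P(more than n_parity of the n_data + n_parity tapes are unreadable),
    assuming each tape fails independently with probability p."""
    total = n_data + n_parity
    return sum(
        comb(total, k) * p**k * (1 - p) ** (total - k)
        for k in range(n_parity + 1, total + 1)
    )


def expected_reads_before_loss(n_data: int, n_parity: int, p: float) -> float:
    """Mean number of virtual-volume reads until the first unrecoverable read
    (geometric distribution whose per-read probability is the loss probability)."""
    q = loss_probability(n_data, n_parity, p)
    return float("inf") if q == 0 else 1 / q


if __name__ == "__main__":
    p = 0.001  # hypothetical chance that any one tape is unreadable on a given read
    for parity in range(0, 5):
        print(f"8+{parity}: ~{expected_reads_before_loss(8, parity, p):,.0f} reads")
```

Each added parity tape multiplies the expected number of reads before loss by roughly a factor of 1/p in this model, which is why the tolerance climbs so steeply from one to four parity tapes.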

RAIT works essentially the same way as RAID, but with data tape instead of spinning media. RAIT is ranked by six increasing levels of protection (or security) ranging from RAIT Level 0 to RAIT Level 5.

Mirroring—Level 1 RAIT

Analogous to disk mirroring or disk duplexing, Level 1 RAIT writes all data to two separate drives simultaneously. Should a drive fail for any reason, the other drive continues operating unaffected.

Data Striping—Level 3 RAIT

Level 3 RAIT employs data striping, in which the data stream is divided into equal parts depending on how many drives there are in the array, and one drive is dedicated to maintaining parity. This parity drive can become a bottleneck because every write must also update it. In the event a drive should fail, the parity information is used to reconstruct the lost data.

Data Striping—Level 5 RAIT

In Level 5 RAIT, the parity information is divided equally among all drives. Level 5 RAIT provides maximum data integrity, though often at the expense of data throughput.
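The difference between a dedicated parity drive (Level 3) and distributed parity (Level 5) is easiest to see as a block map. The rotation used here is one simple scheme chosen for illustration; actual RAIT and RAID products may place parity differently, and the function names are hypothetical.

```python
from typing import List


def parity_drive(stripe: int, drives: int, rotate: bool = True) -> int:
    """Drive holding the parity block for `stripe`.

    rotate=False models Level 3 (parity always on the last drive);
    rotate=True models Level 5 with one simple rotation scheme.
    """
    if not rotate:
        return drives - 1
    return (drives - 1 - stripe) % drives


def layout(stripes: int, drives: int, rotate: bool = True) -> List[List[str]]:
    """Label each block position: Ds.k for data block k of stripe s, Ps for parity."""
    rows = []
    for s in range(stripes):
        p = parity_drive(s, drives, rotate)
        row, k = [], 0
        for drive in range(drives):
            if drive == p:
                row.append(f"P{s}")
            else:
                row.append(f"D{s}.{k}")
                k += 1
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in layout(4, 4):
        print("  ".join(f"{cell:5}" for cell in row))
```

With rotation, every drive holds parity for one stripe in four, so parity updates are spread across the array instead of queuing on a single drive.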

Not for Random Access

RAIT technologies are not a substitute for random access to data, and they have not been widely accepted in data archiving or backup circles. Although StorageTek stated a commitment to COTS RAIT in 2001, the development of virtual tape library technologies seems to have put the marketing side of RAIT on the back burner. As of this writing, there has been only a marginal commitment to RAIT at either the network or the architectural level.

Automated Tape Libraries (ATL)

In the era before file-based workflow was an everyday term, the broadcast media industry faced a challenge of how to manage both their expanding off-line and near-line digital storage requirements. Initially, the solution was the integration of a data cassette storage library coupled with their videoserver systems. That began in the mid-1990s with both dedicated single drives and with multiple drive robotic libraries using tape formats designed exclusively for data libraries, such as the Exabyte platform. This method was not widely accepted as a solution for extending the storage capacities for video media, and drove the implementation of other alternatives.

In those early years for broadcast media, those “near-line” and “long-term” archives consisted of a robotics-based automated tape library (ATL) built around a specific digital tape media format, and controlled by a data management software system generally coupled with the facility automation system. An ATL for data purposes is somewhat of a descendant of the videotape-based library cart systems from the Sony Betacart and Library Management System (LMS), the Panasonic MII-Marc, or the Ampex ACR-25 and ACR-225 eras of the 1990s. Yet the ATL is a different breed closely aligned with the computer-centric data tape management systems for transaction processing and database server systems employed by the financial records departments of banks and the medical records industry.

ATLs for broadcast and media delivery systems could not stand by themselves. They required a third-party interface placed between the tape-based (or disk-based) storage system and the server to effectively do their job on a routine operational basis. In this infant world of digital media data archiving, it was the tape format that had as much to do with the amount of data that could be stored as it did with how that data moved between the servers, the near-line, and the online systems. Automated tape libraries would not be purchased solely for the purpose of removing the burden from the videoserver. The ATL was expected to become the tool that would change the way the facility operates in total.

The selection of an ATL was based upon how that tool could create significant operational cost savings both initially and over the life of the system. Choosing an ATL was no easy matter, as the hardware and software together formed the basis for a new foundational model for broadcasting or content delivery. That model has evolved significantly since the introductory days of broadcast servers attached to automated tape libraries.

Stackers, Autoloaders, or Libraries

The distinction among tape automation systems is in the way they handle data backup and physical media access management. Stackers, sometimes referred to as autoloaders, were the first tape automation products for small and mid-size information systems operations. Typically, these systems had just one drive, with the tapes inserted and removed in sequential order by the system’s mechanical picker, sometimes called an elevator. Operationally, if a stacker was configured to perform a full database backup, the system would begin with tape “0” and continue inserting and removing tapes until the backup was completed or the supply of cartridges was exhausted.

An autoloader, still with only one drive, has the added capability of providing accessibility to any tape in its magazine upon request. This ability to randomly select tapes makes autoloaders appropriate for small scale network backup and near on-line storage applications.

Libraries offer the same type of functionality as autoloaders, but are generally equipped with multiple drives for handling large-scale backups, near on-line access, user-initiated file recovery, and the ability to serve multiple users and multiple hosts simultaneously. On those larger libraries, multiple robotic mechanisms may also be employed to improve data throughput and system response time.

Server and Archive Components

The dedicated videoserver archive system consists of three fundamental entities: first, the physical tape drive (or drives); second, the library mechanism (the robot) that moves the tapes to and from the drives; and third, the software that manages the library/tape interface between the server, the datamover systems, and the facility’s automation systems. Among other functions, the archive software contains the interface that links the historical media’s data library with the physical archive library databases.

This portion will focus on the first of three entities, the tape drive, which consists primarily of two different technologies: helical scan technology and linear tape technology. The latter, developed by Digital Equipment Corporation as a backup media, is commonly referred to as digital linear tape or DLT.

Throughout the evolution of tape-based storage for digital assets, we have seen various products come and go. Digital tape drives and formats have spanned a technology evolution that includes Exabyte Corporation (acquired by Tandberg Data on November 20, 2006) with its 8 mm; Quantum, which produces DLT (ranging from the TK50 in 1984 to SuperDLT in 2001); DAT/DDS and Travan-based technologies; Ampex with its DST products; and Sony’s AIT and DTF formats on its Petasite platform.

Evolution in Digital Tape Archives

Tape storage technology for broadcast-centric media archiving has its roots in the IT domain of computer science. The original concept of tape storage stems from a need to back up data from devices that were at one time fragile and prone to catastrophic failure, known all too well as hard disk drives. Today, with high-reliability, low-cost drives coupled with RAID technology, the need for protecting the archive takes on a different perspective. It is not the active, RAID-protected data that concern us; it is the inactive data that have consumed the majority of the storage capacity on the server or render farm, and that need migration to another platform so that new work may continue.

Modern videoservers are built with hardware elements that can be upgraded or retrofitted without undue restructuring or destruction of the media library. Unlike the disk drives in a videoserver, which can be replaced or expanded when new capacities are required, the tape system will most likely not be changed for quite some time. While it is simply not feasible to replace the entire library of tapes or the tape drive transports, manufacturers of tape systems have recognized that there are upgrade paths for the archive much like those for videoservers.

Those who have experienced the evolution of videotape technologies should heed this strong advice: the selection of a tape storage platform is an important one. The process should involve selecting a technology that permits a smooth transition to next-generation devices and will not lead the owner down a dead-end street at an unknown point several years down the road, a difficult challenge in today’s rapidly advancing technological revolution.

Information technology has provided a lot of good insight into videoserver technologies. With respect to data backup in IT, the information systems manager typically looks for short-runtime backup solutions that can be executed during fixed time periods and are designed to perform an incremental update of a constantly evolving database. These backups must have a high degree of data integrity, should run reliably, and should generally require minimal human intervention. For the data sets captured, error detection and correction is essential, with the name of the game being “write the data once” and “read it many times thereafter.”

In contrast, the video/media server model can operate in a variety of ways, depending upon the amount of online disk storage in service, the volume and type of material in the system, and the formats being stored on those systems. Variables such as the degree of media protection and the comfort level of the on-air facility overall make management of the archive a customizable solution with paths to the future. Thus, choosing a tape medium for archive or backup becomes a horse of a different color, even though industry consensus leans toward the LTO and DLT formats so prominent in data circles.

Tape Technology Basics

Historically, when tape was first used as a backup solution, helical scan technology was an acceptable, relatively inexpensive way to meet the goal of secondary storage for protection, backup, and expanded off-line storage. Linear tape technologies, with or without fixed position heads, were developed later, offering alternatives that over time have allowed a viable competition to develop between both physical tape methodologies.

Tape Metrics

Factors that users should be aware of, and that contribute to the selection of a tape technology (whether for broadcast or IT solutions), include the following.

•Average access time—the average time it takes to locate a file that is halfway into the tape; it assumes this is the first access requested from this specific tape, so the drive is starting to seek the file from the beginning of the tape.

•Transfer rate (effective)—the average number of units of data (expressed as bits, characters, blocks, or frames) transferred per unit time from a source. This is usually stated in terms of megabytes per second (Mbytes/second) of uncompressed data, or when applicable, compressed data at an understood, specified compression ratio (e.g., 2:1 compression).

•Data integrity (error correction)—the uncorrected bit error rate, sometimes referred to as the hard error rate, the number of erroneous bits that cannot be corrected by error correction algorithms.

•Data throughput (system or aggregate)—the sum of all the data rates delivered to all the terminals (devices) in a network or through a delivery channel. Usually expressed in terms of megabytes per second (Mbytes/second) of uncompressed data, or when applicable, compressed data at an understood, specified compression ratio (e.g., 2:1 compression).

•Durability (media)—measured in tape passes, i.e., how many times the tape can be run through the unit before it wears out.

•Load time—the time from when a cartridge is inserted into the drive, until the cartridge is ready to read or write the data.

•Seek time—the total time it takes from the time a command is issued to the time the data actually start to be recovered or delivered. This metric is on the order of milliseconds for a spinning disk, but is in seconds for linear tape cassettes.

•Reposition time—the time for a linear tape to move from its current position to the position that it is instructed to move to, usually the place where the start of the delivery of the requested data begins.

•Head life—the period in time (days, months, years) that a recording and reproducing head on a tape transport (or equivalent) can reliably produce meaningful information (data) 100% of the time.

•Error detection—the recognition of errors caused by disturbances, noise, or other impairments during the transmission from the source (transmitter) to the destination (receiver).

•Error correction—the recognition of errors and the subsequent reconstruction of the original data/information that is error-free.

•Peak transfer or burst—usually referring to the maximum transfer rate of the bus; it is faster than sustained transfer rates and is occasional (i.e., “bursty”).

•Tape life cycle—the total period of time from first use to retirement of a magnetic data recording material, specifically linear tape based.
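Several of these metrics combine into a rough estimate of how long a single-file restore takes. The `DriveSpec` values used with it are hypothetical, and the seek model (constant locate speed to the midpoint of the tape, no wrap changes) is a deliberate simplification of real serpentine positioning; the compression factor simply scales the native rate, as in the "2:1 compression" convention above.

```python
from dataclasses import dataclass


@dataclass
class DriveSpec:
    """Hypothetical drive figures, not taken from any real datasheet."""
    load_time_s: float       # cartridge inserted -> ready to read or write
    tape_length_m: float
    locate_speed_m_s: float  # high-speed search/locate speed
    native_rate_mb_s: float  # uncompressed effective transfer rate


def average_access_time_s(d: DriveSpec) -> float:
    """Load, then seek from the beginning of tape to its midpoint."""
    return d.load_time_s + (d.tape_length_m / 2) / d.locate_speed_m_s


def effective_rate_mb_s(d: DriveSpec, compression_ratio: float = 1.0) -> float:
    """Native rate scaled by the stated compression ratio (e.g. 2.0 for 2:1)."""
    return d.native_rate_mb_s * compression_ratio


def restore_time_s(d: DriveSpec, file_mb: float, compression_ratio: float = 1.0) -> float:
    """Average access time plus the time to stream the file itself."""
    return average_access_time_s(d) + file_mb / effective_rate_mb_s(d, compression_ratio)
```

For small files the access terms (load plus seek) dominate, which is why load and reposition times matter as much as the headline transfer rate when many individual files are recalled.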

Linear Serpentine

This recording technology uses a non-rotating (fixed) head assembly, with the media paths segmented into parallel horizontal tracks that can be read at much higher speeds during search, scan, or shuttle modes. The longitudinal principle allows for multiple heads aligned for read and write operations on each head grouping. The head will be positioned so that it can move perpendicularly to the direction of the tape path allowing it to be precisely aligned over the data tracks, which run longitudinally. The technology will simultaneously record and read multiple channels, or tracks, effectively increasing transfer rates for a given tape speed and recording density.

Note further that there are read and write heads in line with the tape blocks, which allows a “read immediately after write” for data integrity purposes. Digital linear tape is not required to “wrap” a rotating head drum assembly, as is the case with its counterpart helical scan recording.
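Because serpentine recording reverses direction at the end of each wrap, a file's logical position on the cartridge does not map linearly to its physical position along the tape. The sketch assumes a hypothetical cartridge with a small number of equal-capacity wraps and ignores the multiple tracks written in parallel within each wrap.

```python
from typing import Tuple


def physical_position(offset: float, capacity: float, wraps: int,
                      tape_length_m: float) -> Tuple[int, str, float]:
    """Map a logical data offset to (wrap, direction, distance from tape start in m).

    Assumes data fill one wrap end to end, then the head steps across to the
    next wrap and the tape direction reverses (serpentine recording).
    """
    per_wrap = capacity / wraps
    wrap = min(int(offset // per_wrap), wraps - 1)
    frac = (offset - wrap * per_wrap) / per_wrap  # progress along this wrap, 0..1
    forward = wrap % 2 == 0
    distance = frac * tape_length_m if forward else (1 - frac) * tape_length_m
    return wrap, "forward" if forward else "reverse", distance
```

In this model, with four wraps the logical midpoint of the data (`offset=50` of a capacity of 100) lands back at the beginning of the tape, because the second wrap ran in reverse.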

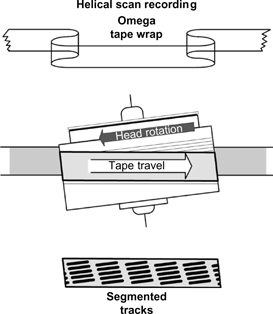

Helical Scan Recording

Helical scan recording places data in diagonal stripes across the tape, allowing for overlapping data, which results in higher data density. Helical recording uses a spinning drum assembly with tiny heads attached to the edge of the drum that contact the tape as it is wrapped around the drum and travels linearly. The wrapping process, in the shape of the Greek letter “omega,” allows for a nearly continuous contact between tape and heads as the tape moves past it.

Figure 12.3 Linear tape profile using a serpentine recording method.

Historical Development of Data Tape

Helical scan tape technology was first developed as an inexpensive way to store data, with roots in the mid-1980s. At that time, manufacturers of home videotape saw 8 mm tape, a helical scan technology, as a potential high-capacity method in which to store computer data for mid- and low-end systems. Over the years, 4 mm DAT (digital audio tape) and QIC (quarter-inch cartridge) linear tape had replaced most of the early 8 mm systems. While smaller systems still relied on these technologies, many felt that DLT was the right solution (at that time) for servers, data storage, or network backup systems because of its reliability and higher performance.

QIC and DAT

Introduced by the 3M Company in 1972, long before the personal computer, QIC was then used to store acquisition data and telecommunications data. QIC did not employ helical scan recording. It came in form factors including 5.25-inch data cartridges and a 3.5-inch mini-cartridge. Tape width is either a quarter inch or, in the Travan model, 0.315 inch. QIC uses two reels in an audio-cassette style physical cartridge construction. Pinch rollers hold the tape against a metal capstan rod, and opponents of this technology claimed that this capstan-based method produced the highest point of wear on the physical tape media.

QIC, like DLT, which would follow almost 10 years later, used serpentine recording principles, mainly in a single-channel read/write configuration. The industry eventually moved away from QIC, which was hindered by evolving standards and a steady movement toward the Travan TR-4 format.

Figure 12.4 Helical scan recording with segmented blocks and tape, which wraps a rotating head drum assembly.

Travan

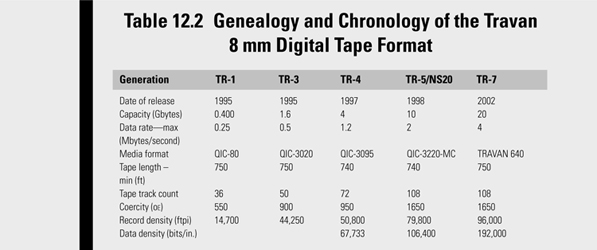

This medium is an 8 mm magnetic tape cartridge design developed by the 3M Company. The format was used for backup and mass data storage in early computer systems between 1995 and 2002. Over time, versions of Travan cartridges and drives were developed that provided greater data capacity while retaining the standard 8 mm width and 750-foot length. Travan is standardized under the QIC body, and it competed with the DDS, AIT, and VXA formats.

The Travan tape hierarchy is shown in Table 12.2. Note that an 800-Mbyte native capacity, 0.25 Mbytes/second version called “TR-2” was designed by 3M, but never marketed.

8 mm Recording Technologies

Popularly referred to as “Exabyte” (a name shared with the unit equal to 1000 petabytes), this nearly generic product line was named after the company that made it most popular, Exabyte Corporation. 8 mm tape drive technologies established much of what we know today about digital tape backup for computer data systems. The concepts presented in this section apply not only to 8 mm but also to its 4 mm counterpart and to other digital tape technologies.

The 8 mm technology for computer data was vastly different from previous-generation products, which were adapted from what became consumer product lines. Tape systems must be designed for the high duty cycle applications found in the ATL (automated tape library), with requirements that include reliability, transportability, and scalability. The transports incorporate a high-speed scanner that provides a cushion of air between the tape media and the head structure. The capstanless reel-to-reel models provide gentler tape handling, minimizing the possibility of tape damage.

8 mm tape systems obtained greater effective data density by employing data compression, which can be enabled or disabled in software. The average compression ratio is typically quoted as 2:1, but the actual ratio varies with the type of data being compressed. Whether to compress should be decided by the applications the system serves. Contiguous blocks of data, such as those found in media files, have usually already been spatially and/or temporally compressed by the media codecs native to the media server or the image capturing devices (camcorders, transcoders, or editing systems), so adding further compression is of little value.
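The point about already-compressed media can be checked with a quick experiment. The sketch below uses Python's `zlib` (DEFLATE) purely as a stand-in for a drive's hardware compression, which uses its own algorithm, and it uses random bytes as an assumed proxy for codec output, since well-compressed media essence has little redundancy left. The sample data and the `compression_ratio` helper are hypothetical.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return original size divided by compressed size (higher is better)."""
    compressed = zlib.compress(data, level)
    return len(data) / len(compressed)


# Repetitive, document-like data compresses well.
text_like = b"Quarterly inventory report: item, quantity, location\n" * 2000

# Random bytes stand in for media essence that a codec has already compressed.
already_compressed = os.urandom(len(text_like))

print(f"text-like data:      {compression_ratio(text_like):.1f}:1")
print(f"pre-compressed data: {compression_ratio(already_compressed):.2f}:1")
```

The second ratio lands at or slightly below 1:1, because the compressor's framing overhead outweighs any savings on data with no remaining redundancy.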

For data processing or document-related data, where continuous large blocks of data are uncommon, compression is nearly always a practical option. Some tape drives automatically switch between compressing and not compressing, depending on whether compression will actually decrease the size of the data block. Whether this automatic selection is used can be set either by the user or by other software-enabled parameters.
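The automatic switch can be sketched as a per-block decision: try compressing the block, keep the result only if it is smaller, and record a flag so that the reader knows how to decode the block. The names `TapeBlock`, `encode_block`, and `decode_block` are hypothetical, and `zlib` stands in for whatever algorithm a given drive implements in firmware.

```python
import zlib
from dataclasses import dataclass


@dataclass
class TapeBlock:
    compressed: bool  # flag stored with the block so a reader knows how to decode it
    payload: bytes


def encode_block(data: bytes) -> TapeBlock:
    """Compress a block only if doing so actually makes it smaller."""
    candidate = zlib.compress(data)
    if len(candidate) < len(data):
        return TapeBlock(compressed=True, payload=candidate)
    # Compression would not help (e.g., already-compressed media): store raw.
    return TapeBlock(compressed=False, payload=data)


def decode_block(block: TapeBlock) -> bytes:
    """Undo encode_block, honoring the per-block compression flag."""
    return zlib.decompress(block.payload) if block.compressed else block.payload
```

Document-like blocks come back flagged as compressed, while a block that has already been through a compressor is stored as-is, so it never grows on tape.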

Tape Error Detection

In the write-to-tape process, a data buffer holds a set of uncompressed data while a mirrored copy of the same data enters a compression circuit. A cyclic redundancy check (CRC) value, often a 16-bit number, is calculated on the original data while the compression circuit compresses the mirrored copy. Figure 12.5 shows schematically how the error checking process works.
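The text says only that the CRC is often a 16-bit value; which polynomial a particular drive uses is not stated. As an assumption for illustration, the sketch below implements a bitwise CRC-16 with the common CRC-CCITT polynomial 0x1021 (initial value 0) and cross-checks it against Python's `binascii.crc_hqx`, which uses the same polynomial. The function name `crc16_ccitt` is hypothetical.

```python
import binascii


def crc16_ccitt(data: bytes, crc: int = 0x0000) -> int:
    """Bitwise CRC-16, polynomial x^16 + x^12 + x^5 + 1 (0x1021), MSB first."""
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


# Cross-check against the standard library's CRC-CCITT routine (same polynomial, init 0).
block = b"logical block 0001"
assert crc16_ccitt(block) == binascii.crc_hqx(block, 0)

# A single flipped bit always changes this CRC, which is what exposes corruption.
corrupted = bytes([block[0] ^ 0x01]) + block[1:]
assert crc16_ccitt(corrupted) != crc16_ccitt(block)
```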

If the incoming data from the bus can be compressed, only the compressed data leave the buffer for writing to the tape. Before the data are written, the system decompresses them, recalculates the CRC, and compares it with the CRC calculated from the original data. If the two CRCs match, the data are written to the tape.

Immediately after the data are recorded on the tape, a read head performs a read-after-write operation, recalculates the CRC, and compares it with the CRC stored before the data were written. If the values differ (that is, if the XOR of the two CRC values is not zero), a “rewrite-necessary” command is issued and the process is repeated. Once this logical block passes all the tests, the buffer is flushed and the next group of data is processed.
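The write sequence described above (CRC on the buffered data, compression, a pre-write decompress-and-compare check, then read-after-write with a rewrite on mismatch) can be sketched end to end. This is a simplified software model, not drive firmware: `FlakyTape` and `write_block` are hypothetical names, `zlib` and `binascii.crc_hqx` stand in for the drive's compression circuit and CRC logic, and the sketch assumes every block is compressible.

```python
import binascii
import zlib


def crc16(data: bytes) -> int:
    """CRC-CCITT (polynomial 0x1021, initial value 0); the polynomial is an assumption."""
    return binascii.crc_hqx(data, 0)


class FlakyTape:
    """Hypothetical tape model that corrupts the first write of every block index."""

    def __init__(self) -> None:
        self.blocks: list[bytes] = []
        self._damaged: set[int] = set()

    def write(self, index: int, payload: bytes) -> None:
        if index not in self._damaged:
            self._damaged.add(index)
            payload = bytes([payload[0] ^ 0xFF]) + payload[1:]  # simulate a dropout
        if index < len(self.blocks):
            self.blocks[index] = payload  # rewrite the block in place
        else:
            self.blocks.append(payload)

    def read(self, index: int) -> bytes:
        return self.blocks[index]


def write_block(tape: FlakyTape, index: int, data: bytes, max_attempts: int = 3) -> int:
    """Write one logical block and return how many write attempts it took."""
    original_crc = crc16(data)  # CRC of the uncompressed data held in the buffer
    compressed = zlib.compress(data)

    # Pre-write check: decompress, recalculate, and compare with the original CRC.
    if crc16(zlib.decompress(compressed)) != original_crc:
        raise RuntimeError("compression check failed; block not written")

    for attempt in range(1, max_attempts + 1):
        tape.write(index, compressed)
        try:
            # Read-after-write: read the block back and recalculate its CRC.
            readback_crc = crc16(zlib.decompress(tape.read(index)))
        except zlib.error:
            readback_crc = None  # the block was too damaged even to decompress
        if readback_crc is not None and (readback_crc ^ original_crc) == 0:
            return attempt  # verified: the buffer can be flushed
        # Otherwise "rewrite necessary": loop and write the block again.
    raise RuntimeError(f"block {index} failed verification after {max_attempts} attempts")
```

Calling `write_block(FlakyTape(), 0, b"frame data " * 100)` returns 2: the first write is damaged, the read-after-write check catches it, and the second write verifies. The XOR test mirrors the comparison in the text; it is zero only when the two CRCs are identical.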

Figure 12.5 Error checking using CRC and READ-AFTER-WRITE processes.

Tape Media Formats and Fabrication Parameters

Parameters such as areal data density, coefficient of friction and stiction, output levels, and dropout characteristics affect the longevity and performance of one form of media versus another. Tape wear depends on the type of transport employed and the number of cycles the tape must go through over its life. Media bases and tape construction technologies also contribute to variations in life expectancy.

Metal Particle to Advanced Metal Evaporated

Newer technologies continue to emerge as the demand for storage increases. The move from metal particle (MP) media to advanced metal evaporated (AME) media is one approach used to increase capacity, especially at high bit densities. Often, as with the Exabyte helical scan Mammoth 2 technology, the media and the transport system are developed together, with the transport matched to the tape base and, in turn, to the system's storage capacity. These manufacturing and research practices continue today.

The AME process, relatively new in 1998, employs two magnetic layers, which are applied by evaporating the magnetic material onto the base layers in a vacuum chamber. The magnetic recording layer of AME media consists of pure evaporated cobalt sealed with a diamond-like carbon (DLC) coating and lubricant. Because its recording layer is 80–100% magnetic material, AME media delivers a 5-dB increase in short-wavelength read-back output compared with conventional metal evaporated tape. The average life of the tape allows for 30,000 end-to-end passes.
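To put the 5-dB figure in perspective, here is a worked conversion. It assumes the decibel value refers to read-back signal amplitude (voltage), which the text does not state explicitly. With that assumption, the standard decibel definitions give roughly 78% more amplitude, or about three times the signal power:

```latex
20\log_{10}\!\left(\frac{V_{\mathrm{AME}}}{V_{\mathrm{ME}}}\right) = 5\ \mathrm{dB}
\quad\Longrightarrow\quad
\frac{V_{\mathrm{AME}}}{V_{\mathrm{ME}}} = 10^{5/20} \approx 1.78,
\qquad
\frac{P_{\mathrm{AME}}}{P_{\mathrm{ME}}} = 10^{5/10} \approx 3.16
```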

The 100% cobalt magnetic material is evaporated using an electron beam, which creates a much thinner, pure magnetic layer free of binders or lubricants. The use of two magnetic layers improves total output and frequency response while also improving the signal-to-noise ratio of the media. At the time of its release to production, Exabyte stated that its AME media was expected to withstand 20,000 passes.

Only AME media can be used on AIT drives.

Advanced Intelligent Tape (AIT)

Developed by Sony, introduced in 1996, and subsequently licensed to Seagate, AIT was the next generation of 8 mm tape format. The new AIT drives, with increased capacity and throughput, were a significant improvement over other 8 mm products.

Sony solved the reliability problems with a redesigned tape-to-head interface. AIT uses a binderless, evaporated metal media that virtually eliminates the debris deposits from particle shedding that plagued older 8 mm drives, and it significantly reduces the drive failures so often experienced in 8 mm as a result of contaminants clogging the mechanisms.

One complaint about earlier tape storage systems concerned access to files and data directories. Legacy tape systems use an index structure at the beginning of the tape that must be rewritten each time data are appended to or deleted from the tape. This requires that the tape's index headers be read every time the tape is loaded and rewritten every time any data are modified.

When this header is located at the beginning (or sometimes at the end) of the media, the tape must be rewound to the header, advanced to read the header, then written to and reread for verification. This data-tracking mechanism also made changing tapes slower. Besides the additional time spent cycling the media, any error in this process could render the entire data tape useless, because the header was the principal metadata location, holding the data about the data stored on the tape.

Sony, possibly drawing on the company's experience with broadcast tape applications, took another approach to storing the bits about the bits. Instead of writing this information only in the on-tape header, Sony placed it in a location that can be read without the tape being mounted. Sony introduced its Memory-in-Cassette (MIC), a small 16-kbit chip (64 kbit on AIT-2) that stores the metadata on the physical cassette rather than on the tape media itself. This reduces file location time: searches can be completed in 27 seconds (for 25/50-Gbyte data tapes) and 37 seconds for the larger cassette format. Sony claimed a nearly 50% reduction in search time compared with DLT of the same generation.
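The difference between a header-at-BOT index and a cassette memory chip can be illustrated with a toy model that counts only tape motion, measured in blocks traversed, for a single read. Everything here is a hypothetical simplification: `Cartridge`, `motion_header_scheme`, and `motion_mic_scheme` are invented names, and the model ignores the header rewrite after modifications, acceleration, and the time to read the chip itself.

```python
from dataclasses import dataclass, field


@dataclass
class Cartridge:
    """Hypothetical cartridge model; positions are in blocks from beginning of tape (BOT)."""

    parked_at: int  # where the tape was left when it was last ejected
    index: dict[str, int] = field(default_factory=dict)  # file name -> block position


def motion_header_scheme(cart: Cartridge, name: str, header_blocks: int = 1) -> int:
    """Legacy scheme: rewind to BOT, read the on-tape index header, then seek to the file."""
    target = cart.index[name]  # in this scheme, known only once the header has been read
    return cart.parked_at + header_blocks + abs(target - header_blocks)


def motion_mic_scheme(cart: Cartridge, name: str) -> int:
    """MIC scheme: the index comes from the cassette chip, so the drive seeks directly."""
    return abs(cart.index[name] - cart.parked_at)
```

For a tape parked at block 6000 and a file at block 6500, the header scheme moves the tape 12,500 blocks (back to BOT and out again), while the MIC scheme moves it only 500.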

One consequent advantage of the MIC concept is the “mid-tape-load” principle, whereby the transport can park the tape at any of up to 256 on-tape partitions instead of rewinding to the beginning before ejecting. When the tape is next mounted, the heads are in theory, on average, 50% closer to any location on the tape than with a DLT that lacks mid-tape load, and the time spent reading directories in headers is eliminated. MIC improves media data access time, with AIT-2's built-in 64-kbit EEPROM storing the table of contents (TOC) and file location information.
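The "50% closer" figure follows from simple averaging, provided two assumptions hold: target locations are uniformly distributed along the tape, and the tape is parked at its midpoint. Under those assumptions, the expected seek from the beginning of tape is about half the tape length, while from the middle it is about a quarter. The sketch below checks this numerically; `mean_seek_distance` and the tape length are hypothetical.

```python
def mean_seek_distance(start: int, length: int) -> float:
    """Average distance from `start` to every position on a tape of `length` positions."""
    return sum(abs(p - start) for p in range(length)) / length


LENGTH = 1001  # hypothetical number of addressable positions on the tape
from_bot = mean_seek_distance(0, LENGTH)               # rewound to beginning of tape
from_middle = mean_seek_distance(LENGTH // 2, LENGTH)  # parked mid-tape

print(f"from BOT: {from_bot:.2f}, from middle: {from_middle:.2f}, "
      f"ratio: {from_middle / from_bot:.3f}")
```

Parking at a partition other than the midpoint gives a smaller saving, which is why the text calls the figure theoretical.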

This concept has since been extended to many other tape products and other forms of storage.

AIT-1 technology employs a 4800 RPM helical scan head, and the AIT-2 version spins at 6400 RPM. Data are written in high-density tracks at an angle relative to the tape's edge. A closed-loop, self-adjusting tape path provides highly accurate tape tracking. The AIT transport features an “auto-tracking/following” (ATF) system in which the servo adjusts for tape flutter, allowing data to be written with much closer spacing between tracks.
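The text gives only drum speeds, but a rough worked comparison is still possible. Assume both generations use the same drum diameter $d$, which is not stated here, and neglect the linear tape speed, which is small next to the head's rotational speed. The head-to-tape writing speed then scales with drum speed $n$ in RPM, so the move from AIT-1 to AIT-2 raises it by about one-third:

```latex
v_{\text{head}} \approx \frac{\pi d\, n}{60}
\qquad\Longrightarrow\qquad
\frac{v_{\text{AIT-2}}}{v_{\text{AIT-1}}} = \frac{6400}{4800} = \frac{4}{3} \approx 1.33
```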

A redesigned metal-in-gap (MIG) tape head puts average head life at 50,000 hours. Sony's tension control mechanism, which maintains tape tension at one-half that of other helical scan tape technologies, combined with Sony's head geometry, reduces head-to-media pressure even further. This servo system senses and controls tension fluctuations, which in turn helps reduce tape and head wear. In addition, Sony's cooling design and Active Head Cleaner result in a mean time between failures (MTBF) of 200,000 hours for the drive itself.
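An MTBF figure is a population statistic, not a promised lifetime. One common way to make it concrete is to assume a constant failure rate (an exponential model), which is an assumption rather than anything the manufacturer states, and convert MTBF into the probability of a failure during a year of continuous use. The helper name below is hypothetical.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8760 hours of continuous operation


def annual_failure_probability(mtbf_hours: float, duty_hours: float = HOURS_PER_YEAR) -> float:
    """Probability of at least one failure in `duty_hours`, assuming a constant failure rate."""
    return 1.0 - math.exp(-duty_hours / mtbf_hours)


drive = annual_failure_probability(200_000)  # the drive MTBF quoted above
print(f"MTBF 200,000 h = {200_000 / HOURS_PER_YEAR:.1f} years of continuous operation")
print(f"chance of a failure in one year of 24/7 use: {drive:.1%}")
```

Under this model, a library running many such drives around the clock should still expect roughly four failures per hundred drives per year, even though each drive's MTBF exceeds two decades.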

AIT-5 media has a 400-Gbyte native (1040-Gbyte compressed) capacity and a transfer rate of 24 Mbytes/second. The media is only compatible with AIT-5 platforms and is available in WORM format.
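A quick sanity check on capacity and throughput is the time needed to fill one cartridge at the sustained native rate. The sketch assumes decimal units (1 Gbyte = 1000 Mbytes) and a host that keeps the drive streaming the whole time. The helper `hours_to_fill` is hypothetical.

```python
def hours_to_fill(capacity_gbytes: float, rate_mbytes_per_s: float) -> float:
    """Time to stream a full cartridge at a sustained rate (1 Gbyte = 1000 Mbytes)."""
    return capacity_gbytes * 1000 / rate_mbytes_per_s / 3600


native = hours_to_fill(400, 24)  # AIT-5 native capacity and transfer rate
print(f"AIT-5, native: {native:.2f} h")
print(f"implied compression ratio: {1040 / 400:.1f}:1")
```

Filling an AIT-5 cartridge natively takes a little over four and a half hours. The same function gives a similar figure for the SAIT cartridge described below (500 Gbytes at 30 Mbytes/second).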

Super and Turbo AIT

Around the 2003–2004 time frame, Sony also produced an updated format, Super Advanced Intelligent Tape (SAIT). This half-inch format uses advanced features of helical scan recording technology and AME media to achieve a 500-Gbyte native (up to 1.3 TB compressed) storage capacity in a single-reel SAIT 1500 tape cartridge.

The SAIT format provides a sustained data transfer rate of 30 Mbytes/second native and 78 Mbytes/second compressed. The maximum burst transfer rate depends on the interface: 160 Mbytes/second for Ultra 160 SCSI LVD/SE and 2 Gbits/second for Fibre Channel. Sony's Remote-Sensing Memory-in-Cassette (R-MIC) continued to provide rapid data access, including high-speed file search. An automatic head cleaning system, AME media, Sony's “leader block” tape threading system, and a sealed deck design with a simplified tape load path together result in enterprise-class reliability of 500,000 power-on hours (POH) at a 100% duty cycle.

AIT Turbo (T-AIT) is another enhancement in both capacity and speed over earlier generations of the 8 mm AIT format and technology. The AIT Turbo series provides capacities from 20 to 40 Gbytes native (52 to 104 Gbytes compressed).

Despite all their technical advancements, in March 2010 Sony formally announced that it had discontinued sales of AIT drives and the AIT library and automation systems.

Digital Data Storage (DDS)

Digital Data Storage (DDS) is a data storage format derived from 4 mm DAT technology and developed by Sony and Hewlett-Packard. DDS products include DDS-1 through DDS-5, and DAT 160.

DDS-5 tape was launched in 2003, but it was marketed under the Digital Audio Tape (DAT) name, as DAT-72, rather than as DDS-5. Used as a backup solution, DDS-5 media provides a storage capacity of 36 Gbytes native (72 Gbytes compressed), nearly double the capacity of its predecessor, DDS-4. DDS-5 transfers data at more than 3 Mbytes/second (6.4 Mbytes/second for compressed data), and a DDS-5 tape is 170 m long.

DDS-5 drives were made by Hewlett-Packard (HP) in alliance with tape drive manufacturer Certance, which was acquired by Quantum in 2005. DDS-5 drives are backward compatible with DDS-3 and DDS-4 media. DDS-5 tape media uses ATTOM technology to create an extremely smooth tape surface.

Data Storage Technology (DST)

Another contender in the digital tape field was Ampex Data Systems, whose DST product was introduced in 1992. The format was a high-speed DDT 19 mm helical scan tape format supporting a drive search speed of 1600 Mbytes/second. Ampex drives were typically integrated with the company's DST series Automated Cartridge Library (ACL), a mass data storage system that holds hundreds of small to large cassettes per cabinet.

The Ampex Data Systems drive technology is based on the same concepts as, and a construction similar to, the company's professional broadcast digital videotape transports. The DST tape drive interfaces through the company's cartridge handling system (CHS) control over dual 16-bit fast differential SCSI-2 or single-ended 8-bit SCSI-2 (Ultra SCSI).

Error rates are specified at 1 × 10⁻¹⁷ (one error in 10¹⁷ bits), with transfer rates ranging from 15 Mbytes/second on the DST 15 series drives to 20 Mbytes/second on the DST 20 transports. Ampex also manufactured a line of instrumentation drives (DIS series) that support 120–160 Mbytes/second over a serial instrumentation and UltraSCSI interface.
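An error-rate specification like this is easier to grasp as data volume and time. The sketch assumes the figure means one error per 10¹⁷ bits transferred and that the drive streams continuously at its rated speed. The helper `years_between_errors` is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def years_between_errors(bits_per_error: float, rate_mbytes_per_s: float) -> float:
    """Mean time between errors for a drive streaming continuously at a fixed rate."""
    bytes_per_error = bits_per_error / 8
    seconds = bytes_per_error / (rate_mbytes_per_s * 1_000_000)
    return seconds / SECONDS_PER_YEAR


# One error per 10**17 bits, streaming at the DST 20's 20 Mbytes/second.
print(f"{1e17 / 8 / 1e15:.1f} petabytes between errors")
print(f"{years_between_errors(1e17, 20):.1f} years of continuous streaming")
```

That works out to about 12.5 petabytes, or roughly two decades of nonstop streaming, between expected errors.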

With its DST products, Ampex offered the highest transfer rate of any digital tape product then in production (1992). While its transports were physically the largest and used the widest tape (19 mm), at 20 Mbytes/second (160 Mbits/second), program transfers between videoservers for MPEG-2 content encoded at 10 Mbits/second could occur at up to 16 times real-time speed (provided other portions of the system and the interfaces were capable of sustaining those rates).
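The 16× figure is just the ratio of the link rate to the program's encoded bit rate. The sketch below also converts it into wall-clock time for a program. It makes the same proviso as the text, that the whole path sustains the link rate, and it ignores protocol overhead. Both function names are hypothetical.

```python
def realtime_factor(link_mbits_per_s: float, program_mbits_per_s: float) -> float:
    """How many times faster than real time a program can be moved."""
    return link_mbits_per_s / program_mbits_per_s


def transfer_seconds(program_minutes: float, program_mbits_per_s: float,
                     link_mbits_per_s: float) -> float:
    """Wall-clock seconds to move a program of the given length and bit rate."""
    total_mbits = program_minutes * 60 * program_mbits_per_s
    return total_mbits / link_mbits_per_s


print(realtime_factor(160, 10))       # 20 Mbytes/s link versus 10 Mbits/s MPEG-2
print(transfer_seconds(60, 10, 160))  # one-hour program
```

A one-hour program at 10 Mbits/second (36,000 Mbits) moves in under four minutes at 160 Mbits/second.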

In 1996, Ampex offered a double-density version of the DST tape with 50 Gbytes (small cassette), 150 Gbytes (medium cassette), and 330 Gbytes (large cassette). This capacity was doubled again, to quad density, in 2000.

Digital Tape Format (DTF)

Following the introduction of DTF-1, Sony introduced the second generation GY-8240 series, which supports the DTF-2 tape format, providing five times more storage than DTF-1, at 200-Gbytes native capacity (518 Gbytes with ALDC compression) on a single L-cassette. DTF is a 1/2-inch metal particle tape in two sizes: L (large), and a S (small) cassette with 60-Gbyte native capacity (155 Gbytes with 2.59:1 compression). Tape reliability is 20,000 passes and drive MTBF is 200,000 hours (roughly 22.8 sustained years).