CHAPTER

11

New Features for Developers, Report Writers, and Power Users

If you’re an Oracle developer, report writer, DBA, or call yourself a power user, Oracle Database 12c Release 2 has some new features for you. For developers in particular, many of these new features will make your job easier. First, I’ll show how most of the existing conversion functions (and a couple of new ones) give you the opportunity to recover from conversion issues more gracefully. As a report writer, you’ll be able to return results more quickly by using the existing approximation functions more automatically. As a DBA, you’ll be able to help report writers by changing just one or two initialization parameters, and you’ll also be able to document database objects more thoroughly by leveraging the new limits for identifier lengths.

New and Enhanced Functions

Oracle’s function library is the backbone of report queries, ETL processes, and PL/SQL code. This includes both single-row and multi-row functions ranging from simple transformations to complex analytical functions. Every release of Oracle Database contains new and enhanced functions so that you don’t have to write them.

The following sections review many of these new and revised functions, such as CAST, VALIDATE_CONVERSION, and LISTAGG. The main focus of these functions is enhanced error checking and recovery: in many cases, you can move your error handling out of the client and into the database—avoiding extra PL/SQL code as well!

CAST

The CAST function is an ANSI-standard SQL method to convert a built-in data type to another. It’s very powerful in two ways. First, you can specify any constant, column, or expression as the argument and convert it to almost any Oracle data type. Second, the argument can be a collection type; in other words, it can be the result of a query, which can then return that result set as a new collection whose elements are of a different data type. For example, you may want to convert string expressions to numeric, or vice versa. Other functions such as TO_CHAR and TO_NUMBER are shorthand versions of the CAST function.

The downside of using CAST, however, has always been that bad data ends up in the argument to the CAST function, such as non-numeric characters in a string expression that you want to convert to a number. Sometimes, you have to deal with raw data that the front-end application could not or cannot clean up, such as the following output, as you’ve no doubt seen hundreds of times:

To deal with these scenarios prior to Oracle Database 12c Release 2, you had a couple of options. You could make sure that the data entry system would validate the numeric and date fields before sending the contents of the web form to the database. That’s still a good idea, but you might not catch all possible conversion scenarios. You could also use a PL/SQL processing step that would catch errors like these with an EXCEPTION WHEN OTHERS block. But that logic can be complex and requires PL/SQL programming knowledge, and gets even more complicated when you have to deal with hundreds of data elements.

In Oracle Database 12c Release 2, you have another option to deal with bad data you encounter when converting the field in a SELECT statement. Figure 11-1 shows the expanded syntax of the CAST function.

FIGURE 11-1. Enhanced CAST function syntax

The addition to the syntax for the CAST function is the DEFAULT clause. In the DEFAULT clause, you specify a valid value for the function’s result if the conversion fails. Using this feature, the CAST function will never generate an error, although you may still want to do some post-processing on return values that had to be mapped to the default. Here is what the previous examples look like when we add the DEFAULT clause:

The element return_value in the DEFAULT clause must be a constant or bind variable, unfortunately; maybe in Oracle Database 12c Release 3 it will be an expression:

But wait, there’s more! Notice in Figure 11-1 that you can also use a format mask for the converted value regardless of whether a conversion error was triggered. In this example, I’m converting a string to a DATE data type:

Per the previous example, the current value for NLS_DATE_FORMAT is 'YYYY/MM/DD HH24:MI:SS', but the date string to be converted is in a different format, so we can specify that format as part of the function call.

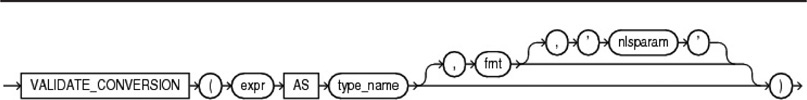

VALIDATE_CONVERSION

The VALIDATE_CONVERSION function is new to Oracle Database 12c Release 2 and can further simplify your code development for both SQL and PL/SQL. It attempts to convert a specified expression to the specified data type and returns 1 if the conversion will be successful and 0 otherwise. Figure 11-2 shows the syntax for VALIDATE_CONVERSION.

FIGURE 11-2. Syntax diagram for VALIDATE_CONVERSION

This function would be useful not only in a SQL SELECT statement, but also in a PL/SQL procedure where you don’t necessarily want to rely on an EXCEPTION block to handle conversion errors (save the EXCEPTION code for truly serious errors!). Here is how you might use VALIDATE_CONVERSION in a PL/SQL procedure:

You can use the fmt parameter with string expressions that you want to convert to DATE values, as in this example:

The string '2018/02/28' is a valid date string, but only if the NLS_DATE_FORMAT is 'YYYY/MM/DD'. Attempting to convert it using a date mask of 'DD-MON-YYYY' will fail.

LISTAGG

The LISTAGG function has been available for several Oracle Database releases and is very useful to group rows based on the ORDER BY clause, then take a specified column or expression and concatenate that column’s values into a single string. If you use the LISTAGG function in a SELECT statement with GROUP BY, you get one aggregate per grouping, and the items within the group are ordered.

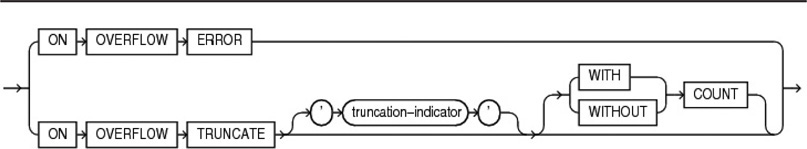

Until Oracle Database 12c Release 2, there was a major complication with using LISTAGG—the rolled-up string value returned might exceed the maximum length of a VARCHAR2 data type. The problem is that you don’t know ahead of time that you’ll have some really long string values, a fact that you discover after you run the SELECT statement, at which point the SELECT statement fails with an ORA-01489 and you get no results. Thus, prior to Oracle Database 12c Release 2, using LISTAGG was an “all or nothing” proposition, whereas most of the time you might be happy with almost all of the results you’re looking for instead of no results. Figures 11-3 and 11-4 present the syntax diagrams for the revised LISTAGG function.

FIGURE 11-3. LISTAGG syntax diagram

FIGURE 11-4. LISTAGG overflow clause

NOTE

The maximum length of a VARCHAR2 column is 4000 if MAX_STRING_SIZE=STANDARD and is 32767 if MAX_STRING_SIZE=EXTENDED. The default is STANDARD.

Figure 11-4 shows two options: ON OVERFLOW ERROR or ON OVERFLOW TRUNCATE. Using ON OVERFLOW ERROR means that you want to use the previous behavior: the SELECT statement fails with an ORA-01489 if any of the aggregated strings exceeds the maximum length for a VARCHAR2. For the ON OVERFLOW TRUNCATE clause, the string is truncated at the nearest complete measure, and you specify these options when the aggregated string is too long:

![]() Truncation-indicator is the string to append to the truncated results and defaults to “…”

Truncation-indicator is the string to append to the truncated results and defaults to “…”

![]() WITH COUNT (the default) appends the number of truncated measures to the end of the string.

WITH COUNT (the default) appends the number of truncated measures to the end of the string.

![]() WITHOUT COUNT does not append the count of truncated measures after the truncation-indicator.

WITHOUT COUNT does not append the count of truncated measures after the truncation-indicator.

A practical example will show you how LISTAGG works when string truncation occurs (without generating an ORA-01489 error!). Suppose I want a report for the DBA that shows the largest database segments by type and ordered by size within each type. I could easily use a standard SELECT statement with GROUP BY and ordering by segment type within all segment type groups, but I want a much more compact report that the DBA can review every week. The following version of the query I wrote in Oracle Database 12c Release 1 was not very useful because it failed most of the time:

Now that I have the ON OVERFLOW TRUNCATE option available, I’ll use that:

Here are a few things to point out in the preceding example:

![]() As part of the output, I’m including the number of blocks for each table in the list, but sorting by the number of bytes—as long as I have only one block size in my database, the lists will sort the same either way.

As part of the output, I’m including the number of blocks for each table in the list, but sorting by the number of bytes—as long as I have only one block size in my database, the lists will sort the same either way.

![]() I’m using the HAVING clause to limit my results to just tables, so only tables and table partitions appear in the output.

I’m using the HAVING clause to limit my results to just tables, so only tables and table partitions appear in the output.

![]() Because there are too many tables to fit into a VARCHAR2, the list is truncated at the last complete table, indicated by the “more …” label. After the label, I show the number of tables left off the list, and in this case there were 475 tables that didn’t make the list.

Because there are too many tables to fit into a VARCHAR2, the list is truncated at the last complete table, indicated by the “more …” label. After the label, I show the number of tables left off the list, and in this case there were 475 tables that didn’t make the list.

![]() There are not very many table partitions in this database, so they all fit within the limits for a VARCHAR2 and there is no need for a “more …” label or the count.

There are not very many table partitions in this database, so they all fit within the limits for a VARCHAR2 and there is no need for a “more …” label or the count.

Approximate Query Processing

Databases and computer technology in general are all about automation, precision, and accuracy. When we want to know how many orders went out yesterday, we typically want the exact number—and the accountants want to know for those orders exactly how many payments were made, what types of payments were made, and the total amount of money that was paid for goods and services. However, we when we are looking at trends over the last few months or even the last few weeks, accuracy to the closest thousand orders and sales to the nearest thousand dollars may be good enough.

Calculating statistics on every table every day may not be necessary for overall database performance and will require lots of CPU and I/O resources that may not always be available. In addition, an analyst may run many “what if” queries across decades of historical tables every day but only needs approximate answers, especially in the early stages of analysis. The approximate query processing feature can cut down processing time by orders of magnitude for typical “big data” analytics while providing results that are approximate but close enough for their intended purpose.

You may already be using the approximation-based function APPROX_COUNT_DISTINCT introduced in Oracle Database 12c Release 1, but what if you have thousands of PL/SQL applications that already have COUNT(DISTINCT …) in the SELECT statements and you’d like to use approximation on the fly? To address this scenario, Oracle Database 12c Release 2 adds features to approximate query processing to avoid code changes when you might need results more quickly.

The new built-in functions related to approximation include the following:

![]() APPROX_COUNT_DISTINCT_DETAIL

APPROX_COUNT_DISTINCT_DETAIL

![]() APPROX_COUNT_DISTINCT_AGG

APPROX_COUNT_DISTINCT_AGG

![]() TO_APPROX_COUNT_DISTINCT

TO_APPROX_COUNT_DISTINCT

![]() APPROX_MEDIAN

APPROX_MEDIAN

![]() APPROX_PERCENTILE

APPROX_PERCENTILE

![]() APPROX_PERCENTILE_DETAIL

APPROX_PERCENTILE_DETAIL

![]() APPROX_PERCENTILE_AGG

APPROX_PERCENTILE_AGG

![]() TO_APPROX_PERCENTILE

TO_APPROX_PERCENTILE

The algorithms, according to Oracle, will provide a 99 percent accurate result. They were introduced in Oracle Database 12c Release 1, but they have been enhanced in Oracle Database 12c Release 2 by adding a few new initialization parameters.

Configuring Approximate Query Processing

It’s great to have the APPROX_* functions available, but what if you won’t be able to change all of your code right away, or better yet, what if you want to automatically use the approximation functions on demand with no code changes? Three new parameters help you control this behavior:

![]() APPROX_FOR_COUNT_DISTINCT

APPROX_FOR_COUNT_DISTINCT

![]() APPROX_FOR_AGGREGATION

APPROX_FOR_AGGREGATION

![]() APPROX_FOR_PERCENTILE

APPROX_FOR_PERCENTILE

All three of those parameters can be set either at the system level or at the session level. However, you should avoid setting these at the system level so that queries with existing COUNT(DISTINCT …) clauses won’t suddenly return imprecise results when the report writer expected exact results!

Both APPROX_FOR_COUNT_DISTINCT and APPROX_FOR_AGGREGATION default to FALSE, and APPROX_FOR_PERCENTILE is not set. Setting any of these parameters is as easy as any ALTER SESSION command:

![]()

These can also be set with the OPT_PARAM query hint if including an ALTER SESSION command in your code is not feasible.

Using Approximate Query Processing

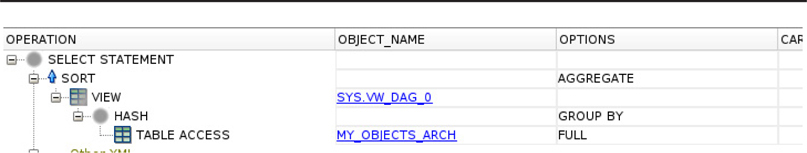

The value of approximate query processing is in the accuracy of the results and how fast you get the results back. How do you know if the approximation is kicking in? First, you’ll get the results back a lot faster. But to make absolutely sure that the approximation is happening, you can look at the execution plan. For example, when I run this SELECT statement after setting APPROX_FOR_COUNT_DISTINCT to FALSE:

the execution plan appears as shown in Figure 11-5, as expected.

FIGURE 11-5. Execution plan for non-approximate COUNT(DISTINCT)

NOTE

The default value for APPROX_FOR_COUNT_DISTINCT is FALSE, but when you’re not sure if the target database will have it set to either TRUE or FALSE, programming best practices dictate that you set it explicitly in your code.

Next, I change the APPROX_FOR_COUNT_DISTINCT parameter at the session level to TRUE and run the query again:

Figure 11-6 shows the new execution plan. Notice the different execution plan option AGGREGATE APPROX.

FIGURE 11-6. Execution plan for approximate COUNT(DISTINCT)

In this case, the execution time is considerably less and gives me a result that is within 1.1 percent of the actual count. That’s close enough for my CFO to figure out if our last quarter was profitable or not and whether a bonus is possible at the end of the year!

Data Dictionary and Data Type Enhancements

A few new data dictionary views in Oracle Database 12c Release 2 help both DBAs and developers alike. DBA_INDEX_USAGE gives the DBA a more fine-grained approach to index monitoring, and DBA_STATEMENTS helps PL/SQL developers cross reference SQL statements in their code.

For everyone who uses Oracle Database, the expansion of identifier lengths to 128 bytes is a welcome change! I’ll demonstrate this new feature in detail.

Identifier Length Changes

From a developer’s perspective, the 30-byte identifier length limitation has been an impediment to database documentation. The more you have to abbreviate each table name, column name, constraint, and so forth, the less readable your code will be and the more likely it is that errors will occur when automated code-generation tools push identifier lengths over the 30-byte limit: for example, materialized view logs prepend MLOG$_ to the name, which further restricts what you can name your table if it ever becomes the master table for a materialized view.

To address all of these concerns, most Oracle objects now can have a name with up to 128 bytes. There are still a few identifiers that have lower limits:

![]() Database names are limited to 8 bytes.

Database names are limited to 8 bytes.

![]() These database objects have a limit of 30 bytes:

These database objects have a limit of 30 bytes:

![]() ASM disk groups

ASM disk groups

![]() Pluggable databases (PDBs)

Pluggable databases (PDBs)

![]() Rollback segments

Rollback segments

![]() Tablespaces

Tablespaces

![]() Tablespace sets

Tablespace sets

To leverage the longer identifier length, the COMPATIBLE parameter must be set to 12.2 or higher.

NOTE

The identifier length limit is 128 bytes. Therefore, depending on the national character set for your database, the number of characters for an identifier may be less than 128.

These identifiers with lower length limits will likely not have an impact on your development, even if you have 4000 PDBs in your CDB! Think of the possibilities. Your schema names, table names, and column names can each be 128 bytes. So this works:

If you decide to use quoted identifiers (that is, use lowercase characters in your identifier names), you have to count the double quote character " in the length of the identifier. Using lowercase in a column name is generally not recommended from a development perspective, so stick with uppercase Oracle object names.

New Data Dictionary Views

For almost every new or enhanced feature in Oracle Database 12c Release 2, there is some kind of a change to an existing data dictionary view, and many of the new features are supported by a new data dictionary view. We’ll cover three of the most consequential new data dictionary views in this section: DBA_INDEX_USAGE, DBA_STATEMENTS, and DBA_IDENTIFIERS.

DBA_INDEX_USAGE

From Oracle Database 11g through Oracle Database 12c Release 1, you can mark an existing index with the MONITORING USAGE clause to see if that index is referenced in a SELECT statement over some arbitrary period of time. If the index doesn’t get used during that time, the dynamic performance view V$OBJECT_USAGE indicates this, thereby identifying that index as one you could potentially drop to save space and index maintenance time during ETL.

There are several deficiencies with that strategy. First, even if the index is flagged as having been referenced, you don’t know how many times it was referenced or how many blocks were retrieved from the index. In addition, the index might have been flagged as used when operations other than SELECT statements accessed the index; for example, an EXPLAIN PLAN run on the query may have indicated that the index was probably going to be used when the query is run, but the index may not have actually been used at run time.

To address these deficiencies, in Oracle Database 12c Release 2, the data dictionary view DBA_INDEX_USAGE has been added to provide a much more granular level of detail for all indexes—they don’t have to be marked to be monitored. Here is what the view looks like:

The data dictionary view DBA_INDEX_USAGE is covered in more detail in Chapter 7.

DBA_STATEMENTS and DBA_IDENTIFIERS

If you are a developer and you use PL/Scope in your PL/SQL development, the two new views DBA_STATEMENTS and DBA_IDENTIFIERS will help you automatically identify occurrences of static and native dynamic SQL statements in each PL/SQL unit. You might not need these views if your PL/SQL application is only a couple hundred lines, but if you have hundreds of procedures and thousands of lines, you want to know how often and where a particular SQL statement is referenced. Here is what DBA_STATEMENTS looks like:

When using PL/Scope, all the metadata from functions and procedures is stored in DBA_STATEMENTS. The column TYPE shows what kind of statement was referenced, such as a SELECT, DELETE, FETCH, or even EXECUTE IMMEDIATE. This view is a big time-saver when your development manager wants to know how many times you reference the ORDER_ENTRY table or open a cursor in your e-commerce application, for example.

Summary

Oracle Database 12c Release 2 brings many developer-related enhancements to the table (no pun intended). One of the biggest changes is the addition of more error checking to conversion-specific functions such as CAST, VALIDATE_CONVERSION, and LISTAGG. These new and enhanced functions enable you to move some of your validation logic to the database layer, where it makes sense and avoids many error conditions that would otherwise cause delays in report processing due to bad data making it through to the data warehouse tables.

For agility and improved response time of ad hoc analytic queries, you can more easily leverage the approximate query functions even when you’re not directly referencing functions such as APPROX_COUNT_DISTINCT. At the session level, you can change an initialization parameter to transparently substitute one of the APPROX_ functions for a query with a traditional SELECT DISTINCT clause.

Developers benefit as well: new data dictionary views such as DBA_STATEMENTS help a PL/SQL developer keep track of statement and column references in their PL/SQL applications.

The next and final chapter takes a look at Oracle Enterprise Manager Cloud Control 13c Release 2 and its new features related to the new features of Oracle Database 12c Release 2.