23. Behaviorism and Schedules of Reinforcement

The way [...] reinforcement is carried out is more important than the amount.

—B. F. SKINNER

What do dog trainers, casino owners, and some free-to-play game designers have in common? They all use schedules of reinforcement to get their users to engage and re-engage repeatedly with what they are trying to teach or sell. To understand what this means and why it is important for the future of games, you need to first learn about a Russian scientist who studied digestion.

Operant Conditioning

Ivan Pavlov (Figure 23.1) won the Nobel Prize in 1904 for his work on understanding the digestive system. His most popular contribution to science is the concept of the conditioned reflex. In simplified terms, Pavlov fed dogs and paired each feeding with a stimulus, such as a bell ringing or a buzzer sounding. The dogs quickly came to associate the sound with being fed. Dogs salivate when they anticipate food, and Pavlov was able to get his dogs to salivate as if they were receiving food when they heard only the bell and were given nothing to eat.

PUBLIC DOMAIN IMAGE. WELLCOME COLLECTION.

Figure 23.1 Ivan Pavlov, the Michael Jordan of measuring saliva.

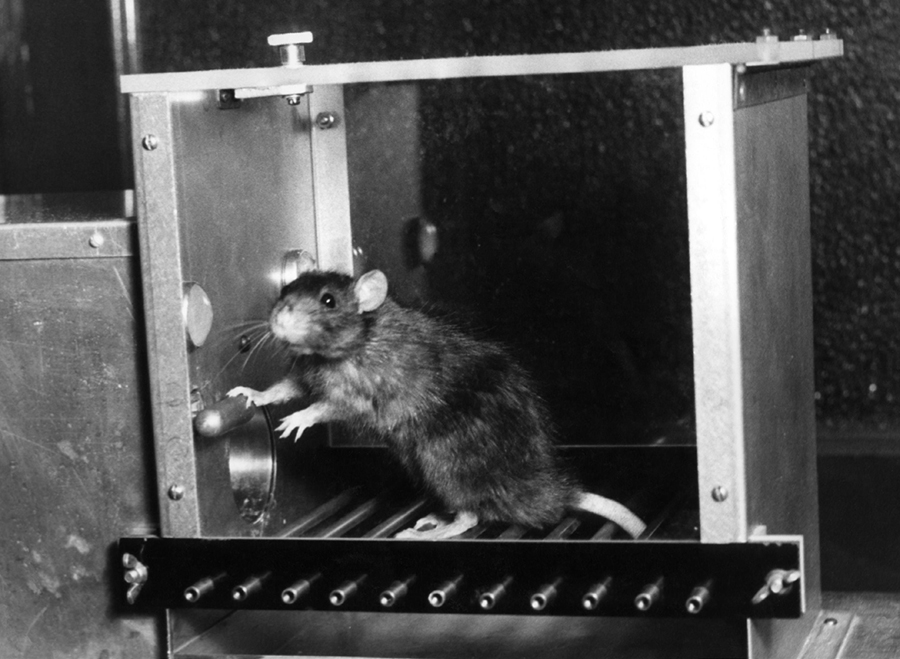

The use of this concept to modify behavior was named classical conditioning. Later scientists built on this work and developed operant conditioning: instead of pairing a neutral stimulus (ringing a bell) with a reward, they tied rewards to whether or not the subject performed a behavior successfully. B. F. Skinner is the psychologist most closely associated with operant conditioning and its larger school of psychology, known as behaviorism. The Skinner box bears his name (Figure 23.2).

SKINNER BOX, © SCIENCE HISTORY IMAGES/ALAMY STOCK PHOTO

Figure 23.2 An operant conditioning chamber, or Skinner box.

In a Skinner box, a test subject (the rat in Figure 23.2) is able to press a lever; that action creates some sort of response based on what the researcher is looking for. It may reward the rat with a food pellet, it may cause the floor to be electrified, it may cause an annoying siren to go off, or it may do nothing at all. For our purposes, the details of how and why operant conditioning experiments work are not relevant. What is relevant are the results that Skinner and later psychologists were able to discover about how humans and animals act for rewards and punishments. He and others found that certain “schedules of reinforcement,” or how the rewards were given out, affected how much the subject subsequently pulled the lever.

For instance, if the rat received a pellet of food every time it pulled the lever, what would you expect it to do? Naturally, it would pull the lever whenever it got hungry, and it would not pull the lever when it was not hungry. But what if it got a pellet every tenth time it pulled the lever? What if it received a pellet randomly, but on average after ten pulls? What if it received a pellet every time it pulled the lever but only after 10 seconds had passed since the last time it received food? What if it received a pellet only after a random amount of time, which averaged 10 seconds? How would these setups affect the rat’s behavior?

Schedules of Reinforcement

All of these setups have names:

• FIXED RATIO. This is when the reward is given after n responses. For instance, if a player in an RPG levels up after killing every tenth monster, that is reinforcement on a fixed-ratio schedule. Continuous reinforcement is a type of fixed-ratio schedule in which the reward is given after every response. Pull the lever, get the food. This is essentially a fixed ratio of 1:1.

• VARIABLE RATIO. This is when the reward is given after an average of n responses but is unpredictable. If in an RPG each monster had a 10-percent chance of dropping a “level up” gem, this is a variable-ratio schedule. The player may get the gem on the first monster or the twentieth monster. No one knows.

• FIXED INTERVAL. This is when the reward is given on the first response after t minutes have passed. In an RPG, if the player levels up upon killing the first monster after each full hour of play, that is a fixed-interval schedule.

• VARIABLE INTERVAL. This is when the reward is given on the first response after a random amount of time that averages t minutes. In an RPG, if the player levels up upon killing the first monster after a random wait that averages 10 minutes, that is a variable-interval schedule.

There are many other types of reward schedules, but these four (fixed ratio, variable ratio, fixed interval, and variable interval) are the most often cited. Which of these four schedules do you think gets the player to kill the most monsters? Why?
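The four definitions above can be made concrete with a minimal Python sketch. Everything in it is an illustrative assumption rather than anything from Skinner's experiments: the class names, the `respond` method, the choice to model the variable ratio as a flat 1-in-n chance per response (matching the 10-percent gem example), and the choice to draw variable-interval waits from an exponential distribution. Each class answers only one question: is this particular response rewarded?

```python
import random


class FixedRatio:
    """Reward every n-th response (n = 1 is continuous reinforcement)."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, now=0.0):
        self.count += 1
        if self.count >= self.n:
            self.count = 0  # start counting toward the next reward
            return True
        return False


class VariableRatio:
    """Reward each response with probability 1/n (assumed model),
    so a reward arrives after n responses on average."""

    def __init__(self, n, rng=None):
        self.p = 1.0 / n
        self.rng = rng if rng is not None else random.Random()

    def respond(self, now=0.0):
        return self.rng.random() < self.p


class FixedInterval:
    """Reward the first response made at least t time units
    after the previous reward (or after the start)."""

    def __init__(self, t):
        self.t = t
        self.ready_at = t

    def respond(self, now):
        if now >= self.ready_at:
            self.ready_at = now + self.t
            return True
        return False


class VariableInterval:
    """Like FixedInterval, but each wait is random with mean t."""

    def __init__(self, t, rng=None):
        self.t = t
        self.rng = rng if rng is not None else random.Random()
        self.ready_at = self._draw_wait()

    def _draw_wait(self):
        # Exponential waits with mean t: one convenient assumption.
        return self.rng.expovariate(1.0 / self.t)

    def respond(self, now):
        if now >= self.ready_at:
            self.ready_at = now + self._draw_wait()
            return True
        return False
```

Under these assumptions, `VariableRatio(10)` rewards each response with probability 0.1, so the chance of killing 20 monsters in a row without a gem is 0.9 to the 20th power, or roughly 12 percent. The sketch models only when rewards are handed out; it says nothing about how a rat or a player reacts to them.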

If you are a casino owner and you want to develop a new slot machine, how do you design it? Your goal is clearly to get people to spend as much money as possible by providing rewards that keep them inserting coins and pulling levers. Is this not extremely similar to the problem Skinner studied? You have a subject (customer) and you want to understand what it will take for that subject (customer) to want to pull the lever (play the slot machine) as much as possible. This form works for many games in which rewards are important. Which reward schedule should you use?

The results of using a fixed ratio are easiest to understand. In a fixed ratio, the subject slows its activity right after the reinforcement, but after a while, activity picks back up. Think of getting food when you are hungry. Generally, you wait until you are hungry to get food. Then after you eat, you do not think about food again until you are hungry.

The variable ratio is what most slot machine designers use. It causes the greatest amount of activity. When the rats do not know when the food pellet is coming, they push the lever furiously. Players who do not know when the next jackpot is coming anxiously insert coins into slot machines in hopes of a reward.

Note

eBay has changed to an actively updating page simply because too many users were refreshing pages repeatedly near auction ends.

The fixed interval causes a flurry of activity when the deadline approaches, but little activity at other times. Think about eBay auctions. When do you check the auction status? A day before the auction closes you peek in once to see if you are still winning. But as the deadline approaches, you repeatedly click that refresh button to make sure you are still winning.

The fixed interval also has a problem with a phenomenon known as extinction. Extinction is a melodramatic way of saying that the reward no longer produces the conditioned behavior. Another way of putting it is that the subject just stops pressing the lever. In fixed-interval schedules, once the interval passes, the subject has no reinforcement for pressing the lever for a while, so he quits and may not come back. In games, this is catastrophic! If someone puts your game away, it may be difficult to ever get that player back. Early reinforcement is great for getting someone to do something initially. However, if you do not switch to one of the other schedules, extinction happens quickly.

The variable interval is expected to generate steady activity: The subject is more engaged than with the fixed ratio, the activity is not as furious as it is with the variable ratio, and yet there is no rapid extinction as there is when using the fixed interval. This type still has a higher rate of extinction than the variable ratio (think of how hard it is to pull an engaged player from a slot machine!), and both variable intervals and variable ratios suffer less from extinction than do their fixed cousins (perhaps because it takes subjects longer to figure out that a reward is less likely).

You should use the variable interval when you want some steady activity to persist. For instance, say you are a teacher and you want your students to do their homework every night. However, you don’t have time to check every student’s work every day. But to you, homework is a steady activity that you want the students to do. You don’t want students to do a flurry of homework one night and then ignore the homework the next night, so the variable ratio seems inappropriate. How can you use a variable interval to get this steady activity? Perhaps you can say that three homework assignments each month will be checked, but you don’t let the students know which ones. The students cannot anticipate which will be checked, so they have to do them all as if they will be checked (that is, until they figure out when no more assignments will be graded).

Figure 23.3 shows a summary of the schedules. Variable interval is great for slow and steady engagement. Fixed interval is largely effective only for engagement around the interval points. Fixed ratio or continuous reinforcement is great for a burst of engagement. Variable ratio is great for lots of engagement.

Figure 23.3 Responses based on reward schedule.

Anticipation and Uncertainty

What were the dogs in Pavlov’s experiments doing when they salivated just because they heard a bell ring? They were not excited about the bell; they were excited about what the bell signified. They were anticipating food. Never underestimate the power of anticipation.

It’s a stereotype to think of scientists as people in lab coats holding clipboards and watching rats finish a maze, but that is exactly what Dr. Clark Hull did in the 1930s to discover what he named the goal-gradient effect.1 Designers use this effect quite a lot in games. It says that as subjects get closer to a goal, they accelerate their behavior. Hull found that his rats would run faster in his mazes as they neared the end.

1 Hull, C. L. (1934). “The Rat’s Speed-of-Locomotion Gradient in the Approach to Food.” Journal of Comparative Psychology, 17(3), 393.

Does this work with humans? Consider this: The Baltimore Marathon had to ask spectators not to yell at participants “almost there!” unless they are, in actuality, almost at the end of the marathon.2 It turns out that even highly trained distance runners who have intimate knowledge of how far along they are in a race can be subtly tricked into exerting more energy than they would otherwise choose to do just by being assured by strangers that the end of the race is near!

2 “Baltimore Marathon Specific about Suggested Cheers.” (2014, October 15). Retrieved November 18, 2014, from http://washington.cbslocal.com/2014/10/15/baltimore-marathon-specific-about-suggested-cheers.

This effect is not limited to races run by humans and rats. Have you ever received a frequent buyer card from a restaurant? On these cards you get a “punch” for each meal you buy, and when you fill up the card with these punches you get some sort of reward, like a free meal. Ran Kivetz did a study on this in which he gave one group of customers a 10-space punch card and another group a 12-space punch card with two spaces already filled in.3 Even though both cards required 10 more purchases to fill them, the group who received the two “free” punches filled up their cards faster. Why? The 12-space group was already one-sixth of the way there in their minds. “Almost there!”

3 Kivetz, R., Urminsky, O., & Zheng, Y. (2006). “The Goal-Gradient Hypothesis Resurrected: Purchase Acceleration, Illusionary Goal Progress, and Customer Retention.” Journal of Marketing Research, 43(1), 39–58.
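The arithmetic behind the two punch cards is simple enough to check in a few lines of Python. This is only a sketch of the numbers quoted above; the function names are hypothetical and are not measures taken from the study itself.

```python
def purchases_remaining(filled, total):
    """Punches the customer still has to earn by buying meals."""
    return total - filled


def apparent_progress(filled, total):
    """Fraction of the card that already looks complete."""
    return filled / total


plain_card = (0, 10)    # 10 spaces, none filled
endowed_card = (2, 12)  # 12 spaces, two "free" punches

for name, (filled, total) in [("plain", plain_card), ("endowed", endowed_card)]:
    print(
        name,
        "remaining:", purchases_remaining(filled, total),
        "apparent progress:", round(apparent_progress(filled, total), 3),
    )
```

Both cards demand the same 10 purchases, but only the second one starts with visible progress (two of twelve spaces, or one-sixth). The actual cost is identical, so the only thing that differs is how far along the customer appears to be.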

One of the great behavioral economists, George Loewenstein, showed that anticipation itself can hold value.4 Another group of psychologists found that a group who received a prize in a condition of uncertainty (such as, “At the end of this trial, you may receive chocolates or one of these other prizes”) were happier than those who received the same prize under a condition of certainty (such as, “At the end of this trial, you will receive chocolates”).5 In that study, participants even spent more time looking at the prize they won under the uncertain conditions than they did under the certain conditions.

4 Loewenstein, G. (1987). “Anticipation and the Valuation of Delayed Consumption.” The Economic Journal, 666–684.

5 Kurtz, J. L., Wilson, T. D., & Gilbert, D. T. (2007). “Quantity versus Uncertainty: When Winning One Prize Is Better Than Winning Two.” Journal of Experimental Social Psychology, 43(6), 979–985.

It is likely that all of us can cite a situation in our experiences in which we gained a lot of pleasure from anticipating something, only to be underwhelmed when we finally had it in our hands. This can be the case for a number of reasons. The most obvious is that the thing we desire is not any good in the first place. Another is that what we value is less the thing and more the concept of the thing. A third is that we may just be tired. Psychologists call this the post-reward resetting phenomenon.6 In a nutshell, after receiving a reward, we tend to care less about receiving subsequent similar rewards. This is a problem in games! After you give a player the Mega Sword, you want him to desire the next step up, the Ultra Sword.

6 Drèze, X., & Nunes, J. C. (2011). “Recurring Goals and Learning: The Impact of Successful Reward Attainment on Purchase Behavior.” Journal of Marketing Research, 48(2), 268–281.

Note

Psychologists Drèze and Nunes would likely suggest that you have to make the quests for the Mega and Ultra Swords challenging so that the player feels successful through her own self-efficacy upon attaining them.

In Games: Coin Pusher

Machines such as the one in Figure 23.4 are popular at redemption arcades. They are colloquially known as “coin pushers.” The player drops a coin in, and it lands on the upper shelf. The upper shelf moves back and forth so that, with a sufficient mass of coins, the back-and-forth motion pushes a coin, or several, from the upper shelf onto the bottom shelf. The same is true of the bottom shelf. Eventually, a mass of coins causes some coins to fall over the front edge. The player then wins a prize based on the number of coins pushed over.

COIN PUSHER, © SHUTTERSTOCKSTUDIO/SHUTTERSTOCK

Figure 23.4 A coin pusher.

These coin pushers are an example of a game that masterfully manipulates schedules of reinforcement. The player is reinforced on a variable ratio: he does not know how many coins he will have to drop in to knock coins off the top shelf and then off the bottom shelf. Coin pushers also have one advantage over standard slot machines. Slot machines rely on the gambler’s fallacy as a matter of faith: an engaged (or addicted, if you prefer) player may be unwilling to quit because he believes a win is “due.” In a coin pusher, that estimation is not based on faith alone. The player can actually see that a win is about due, yet because of the random (or at least extremely difficult to predict) nature of the game, all he can do is keep playing and anticipate the win.

Even better, after a win, a slot machine patron can use the same gambler’s fallacy logic to quit, saying that a win will not happen again soon. Yet a coin pusher player who just knocked some coins over the ledge can still see areas where coins are cantilevered over the edge. “If I just play one more coin, I bet I could knock that one over too! It is almost there!”

So what does a coin pusher do successfully to get players engaged?

• It offers a variable-ratio reward schedule that keeps players engaged.

• The view through the glass, the tendency of coins to stack upon themselves and cantilever, and the slow methodical action of the movement of the shelf provide anticipation to the player.

• The view through the glass always shows that the player is almost at a reward, which plays on the goal-gradient effect.

In Games: Destiny Drops

Bungie’s Destiny uses a particular reward technique that exemplifies what I have been describing. In Destiny, killing enemies has a random chance of dropping a piece of loot called an “engram.” These engrams are color-coded based on their rarity, so you immediately know when you find something rare.

However, the player cannot know what item the engram will give until she exchanges it later. Sometimes an engram gives her exactly what she wants. But often it gets her something worthless because she is not the correct player class to use the rewarded item. It’s all chance.

How is this successful in keeping players slaying bad guys?

• Drops themselves are based on a variable-ratio reward schedule.

• Cashing in the engram reveals another variable-ratio reward schedule.

• There is anticipation gained from the uncertainty of what the engram contains.

The engram system is basically like a slot machine on which the player wins tokens to play another slot machine.
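To see why chaining two chance-based rewards stretches out the chase, consider a small Python sketch. The probabilities used here (a 5-percent engram drop per kill and a 25-percent chance that an engram yields a usable item) are made-up values for illustration only; they are not Destiny's actual drop rates, and the function names are likewise hypothetical.

```python
import random


def expected_kills_per_useful_item(p_drop, p_useful):
    """Average kills per useful item when both stages are independent
    chances: an engram must drop AND it must decode into something useful."""
    return 1.0 / (p_drop * p_useful)


def simulate_kills_until_useful(p_drop, p_useful, rng):
    """Count kills until one yields an engram that is also useful."""
    kills = 0
    while True:
        kills += 1
        if rng.random() < p_drop and rng.random() < p_useful:
            return kills


# Hypothetical rates, chosen only to make the arithmetic easy to follow.
P_DROP = 0.05
P_USEFUL = 0.25

rng = random.Random(0)
runs = [simulate_kills_until_useful(P_DROP, P_USEFUL, rng) for _ in range(20000)]
print("formula:   ", expected_kills_per_useful_item(P_DROP, P_USEFUL))
print("simulation:", sum(runs) / len(runs))
```

With these assumed rates, the player sees an engram about every 20 kills but a useful item only about every 80 kills. The frequent first-stage reward keeps anticipation alive while the second stage keeps the truly desired reward rare.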

Ethical and Practical Concerns

In many instances, principles of behaviorism work well. It’s why you see echoes of it so often in games today. However, behaviorism fell out of vogue in psychology many decades ago because it has massive limitations. One of the most salient limitations is that it’s only concerned with inputs and outputs. What happens within the subject is known as a “black box” and is outside the domain of inquiry. This is problematic for game designers because concepts like “fun” and “value” live inside that black box.

When I discuss the concept of motivation in Chapter 25, I cover the differences between intrinsic and extrinsic motivations. This concept is key because it shows that not all behavior is equal. A game designer’s goal is not wholly contained within “get a player to kill a Destiny bad guy.” There are larger concerns, such as “Is the player having fun?” or “Will the player continue to have fun?” Operant conditioning predicts behavior well in the short term under controlled conditions. However, game designers often don’t have that experimental luxury. Many designers want players to be satisfied, not simply engaged in behavior.

Beyond the practical concerns are the ethical concerns. Mike Rose writes in Gamasutra about a player who was addicted to buying keys in Team Fortress 2 (a mechanic much like the engrams discussed earlier, except that a player spends real money on them) and who could not pay his medical bills because of his addiction to the key-crate system.7 The New York Times recounts the story of a Clash of Clans player who would take five iPads with him while he showered so that none of his accounts would become inactive.8 Similar stories regarding these kinds of game mechanics abound. The possibility of addiction alone does not an ethical concern make, but it serves as an illustrative example of what extremes of behavior can surround these game mechanics.

7 Rose, M. (2013, July 9). “Chasing the Whale: Examining the Ethics of Free-to-Play Games.” Retrieved June 26, 2019, from www.gamasutra.com/view/feature/195806/chasing_the_whale_examining_the_.php.

8 Bai, M. (2013, December 21). “Master of His Virtual Domain.” Retrieved June 26, 2019, from www.nytimes.com/2013/12/22/technology/master-of-his-virtual-domain.html?pagewanted=all.

Tip

A recommended read on the ethics of game design for gambling is Addiction by Design: Machine Gambling in Las Vegas, by Natasha Dow Schüll (2012, Princeton University Press).

It’s just as important for a game designer to examine what a game’s mechanics say about what the designer values as a designer. The slot machine designer’s goal is clear: to get you to pump as many coins into that slot machine as possible. But should a game designer worry about how repetitious a player’s behavior is as long as the player is having fun? That is a question for each individual designer. Some believe that it’s solely the player’s responsibility to regulate what they do with a game. Others believe that the designer has an explicit ethical responsibility to make all reasonable attempts to create systems that minimize possible harm to the player.

Summary

• Behaviorists use experiments to record the effects of stimuli on a subject’s observable behaviors.

• A variable-ratio schedule of reinforcement tends to generate the most response.

• Extinction occurs when a reward no longer generates the anticipated behavior.

• The goal-gradient effect suggests that the closer a player is to a goal, the greater the player’s effort will be to achieve that goal.

• What do your game mechanics say about what you value? If you respect players and want them to have fun, is that reflected in your mechanics? Or are your mechanics just a highly complicated Skinner box used to extract money from players? Neither is a priori bad, but be honest about what you value.