10

PROCEDURAL AUDIO

1. Introduction, Benefits and Drawbacks

With the advent of PCM playback systems throughout the 80s and 90s, video game soundtracks gained a great deal in terms of realism and fidelity when compared to the limited capabilities of early arcades and home entertainment systems. All of sudden, explosions sounded like actual explosions rather than crunchy pink noise, video games started to include actual dialog rather than type on the screen punctuated by cute chirp sounds and the music sounded like it was played by actual instruments. The improvements were so significant that almost no one seemed to have noticed or minded that they came at the expense of others: flexibility and adaptivity.

We sometimes forget that an audio recording is a snapshot of a sound at an instant, frozen in time, and while we can use a number of techniques to make a recording come to life, we can only take it so far before we simply need another sample. Still, it wasn’t too much of an issue until two technological advancements made the limitations of sample playback technology obvious. The first one was the advent of physics in games. Once objects started to fall, bounce, scrape and everything in between, the potential number of sounds they could generate became exponentially larger. Other technical limitations, however, remained. Ram budgets didn’t change, and audio hardware and software didn’t either. All of sudden, the limitations of our current technologies became quite apparent as sound designers and game developers had to develop new techniques to deal with these developments and to come up with enough sounds to cover all the potential situations that could arise. Even though a single object could now make dozens, if not hundreds of potential different sounds, we were still confronted with the same limitations in terms of RAM and storage. Even if these limitations weren’t there and we could store an unlimited number of audio assets for use in a game, spending hundreds of hours coming up with sounds for every possible permutation an object could make would be an entirely unproductive way to spend one’s time, not to mention a hopeless task.

The other major development that highlighted the issues associated with relying on playing back samples is virtual reality. Experiencing a game through a VR headset drastically changes the expectations of the user. Certainly, a higher level of interactivity with the objects in the game is expected, which creates a potentially massive numbers of new scenarios that must be addressed with sound. PCM playback and manipulation again showed its limitations, and a new solution was needed and had been for some time: procedural studio.

1. What Is Procedural Audio?

The term procedural audio has been used somewhat liberally, often to describe techniques relying on the manipulation and combination of audio samples to create the desired sound. For the sake of simplicity, we will stick to a much more stringent definition that excludes any significant reliance on audio recordings.

The term procedural assets, very specifically, refers to assets that are generated at runtime, based on models or algorithms whose parameters can be modified by data sent by the game engine in real time. Procedural asset generation is nothing new in gaming; for some time now textures, skies and even entire levels have been generated procedurally, yet audio applications to this technology have been very limited.

a. Procedural Audio, Pros and Cons

Let’s take a closer look at some of the pros and the cons of this technology before taking a look at how we can begin to implement some of these ideas, starting with the pros:

- Flexibility: a complete model of a metal barrel would theoretically be able to recreate all the sounds an actual barrel could make – including rolls and scrapes, bounces and hits – and do so in real time, driven by data from the game engine.

- Control: a good model will give the sound designer a lot of control over the sound, something harder to do when working with recordings.

- Storage: procedural techniques also represent a saving in terms of memory, since no stored audio data is required. Depending on how the sound is implemented, this could mean savings in the way of streaming or ram.

- Repetition avoidance: a good model will have an element of randomness to it, meaning that no two hits will sound exactly alike. In the case of a sword impact model, this can prove extremely useful if we’re working on a battle scene, saving us the need to locate, vary and alternate samples. This applies to linear post production as well.

- Workflow/productivity: not having to select, cut, and process variations of a sound can be a massive time saver, as well as a significant boost in productivity.

Of course, there are also drawbacks to working with procedural audio, which must also be considered:

- CPU costs: depending on the model, CPU resources needed to render the model in real time may be significant, in some cases making the model unusable in the context of an actual game.

- Realism: although new technologies are released often, each improving upon the work of previous ones, some sounds are still difficult to model and may not sound as realistic as an actual recording, yet. As research and development evolve, this will become less and less of an issue.

- A new paradigm: procedural audio represents a new way of working with sound and requires a different set of skills than traditional recording-based sound design. It represents a significant departure in terms of techniques and the knowledge required. Some digital signal processing skills will undoubtedly be helpful, as well as the ability to adapt a model to a situation based on physical modeling techniques or programming. Essentially, procedural audio requires a new way of relating to sound.

- Limited implementation: this is perhaps the main hurdle to the widespread use of this technology in games. As we shall see shortly, certain types of sounds are already great candidates for procedural audio techniques; however, implementation of tools that would allow us to use these technologies within a game engine is very limited still at the time of this writing and makes it difficult to apply some of these techniques, even if every other condition is there (realism, low CPU overhead etc.).

It seems inevitable that a lot of the technical issues now confronting this technology will be resolved in the near future, as models become more efficient and computationally cheaper, while at the same time increasing in realism. A lot of the current drawbacks will simply fade over time, giving us sound designers and game developers a whole new way of working with sound and an unprecedented level of flexibility.

Candidates for Procedural Audio

Not every sound in a game might be a good candidate for procedural audio, and careful consideration should be given when deciding which sound to use procedural audio techniques for. Certain sounds are natural candidates, however, either because they can be reproduced convincingly and at little computational cost, such as hums, room tones or HVAC sounds or because, although they might use a significant amount of resources, they provide us with significant ram savings or flexibility, such as impacts.

b. Approaches to Procedural Audio

When working on procedural audio models, while the approach may differ from traditional sound design techniques, it would be a mistake to consider it a complete departure from traditional, sampled-based techniques, but rather it should be considered an extension. The skills you have accumulated so far can easily be applied to improve and create new models.

Procedural audio models fall in two categories:

- Teleological Modeling: teleological modeling relies on the laws of physics to create a model of a sound by attempting to accurately model the behavior of the various components of an object and the way they interact with each other. This is also known as a bottom-up approach.

- Ontological modeling: Ontological modeling is the process of building a model based on the way the object sounds rather than the way it is built, a more empirical and typical philosophy in sound design. This is also known as a top-down approach.

Both methods for building a model are valid approaches. Traditional sound designers will likely be more comfortable with the ontological approach, yet a study of the basic law of physics and of physical modeling synthesis can be a great benefit.

Analysis and Research Stage

Once a model has been identified, the analysis stage is the next logical step. There are multiple ways to break down a model and to understand the mechanics and behavior of the model over a range of situations.

In his book Designing Sound (2006), Andy Farnell identifies five stages of the analysis and research portion:

- Waveform analysis.

- Spectral analysis.

- Physical analysis.

- Operational analysis.

- Model parametrization.

Waveform and Spectral Analysis

The spectral analysis of a sound can reveal important information regarding its spectral content over time and help identify resonances, amplitude envelopes and a great deal more. This portion isn’t that different from looking at a spectrogram for traditional sound design purposes.

Physical Analysis

A physical analysis is the process of determining the behavior of the physical components that make up the body of the object in order to model the ways in which they interact. It is usually broken down into an impulse and the ensuing interaction with each of the components of the object.

The impulse is typically a strike, a bow, a pluck, a blow etc. Operational analysis refers to the process of combining all the elements gathered so far into a coherent model, while the model parametrization process refers to deciding which parameters should be made available to the user and what they should be labelled as.

2. Practical Procedural Audio: A Wind Machine and a Sword Collision Model

The way to apply synthesis techniques to the area of procedural audio is limited mainly by our imagination. Often, more than one technique will generate convincing results. The choice of the synthesis methods we choose to implement and how we go about it should always be driven by care for resource management and concern for realism. Next we will look at how two different synthesis techniques can be used in the context of procedural audio.

1. A Wind Machine in MaxMSP With Subtractive Synthesis

Noise is an extremely useful ingredient for procedural techniques. It is both a wonderful source of raw material and computationally inexpensive. Carefully shaped noise can be a great starting point for sounds such as wind, waves, rain, whooshes, explosions and combustion sounds, to name but a few potential applications. Working with noise or any similarly rich audio source naturally lends itself to subtractive synthesis. Subtractive synthesis consists in carving away frequency material from a rich waveform using filters, modulators and envelopes until the desired tone is realized. Using an ontological approach we can use noise and a few carefully chosen filters and modulators to generate a convincing wind machine that can be both flexible in terms of the types of wind it can recreate as well as represent significant savings in terms of audio storage, as wind loops tend to be rather lengthy in order to avoid sounding too repetitive.

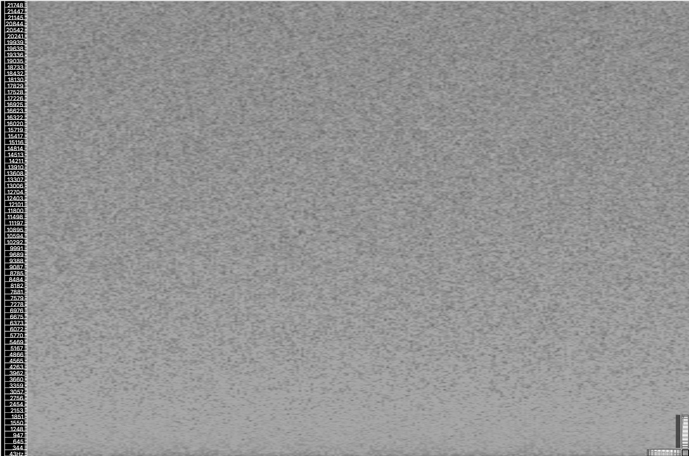

We can approximate the sound of wind using a noise source. Pink noise, with its lower high frequency content will be a good option to start from, although interesting results can also be achieved using white, or other noise colors.

Figure 10.1

Figure 10.2

White noise vs. pink noise. The uniform distribution of white noise is contrasted.

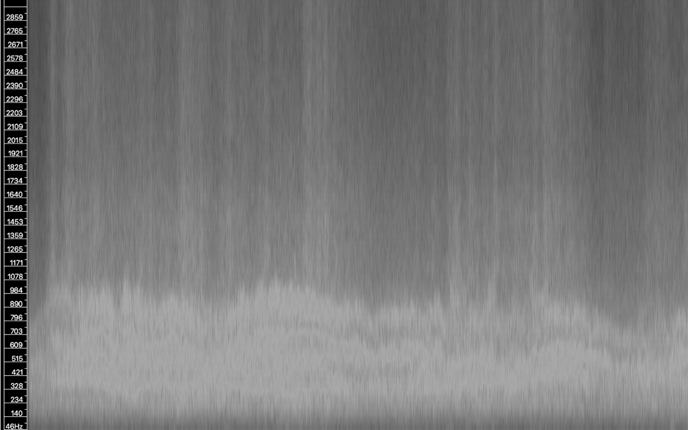

Figure 10.3

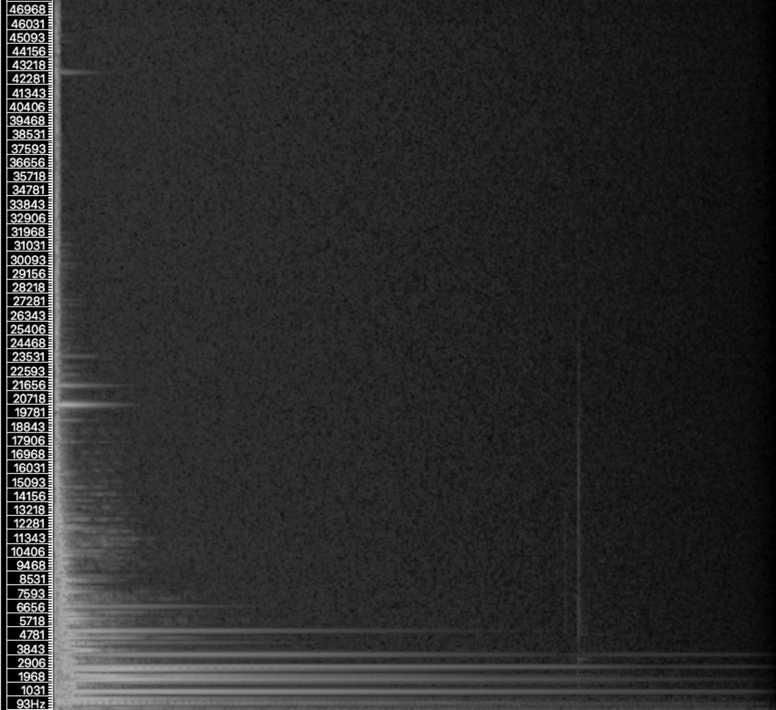

The spectrogram reveals how the frequency content of this particular wind sample evolves over time

Figure 10.4

Broadband noise will still not quite sound like wind yet, however. Wind tends to sound much more like bandpass filtered noise, and wind isn’t static, either in terms of amplitude or perceived pitch. Both evolve over time. Wind also tends to exhibit resonances more or less pronounced depending on the type of wind we are trying to emulate. A look at a few spectral analyses of wind recordings can be used to extract more precise data which can be used to tune the parameters of the model, such as the center frequency of our bandpass filter(s), the amount of variation over time of amplitude and pitch and many more.

From a starting point of pink noise, in order to make our wind more convincing, first we need to apply a resonant bandpass filter to our noise source. The center frequency of our bandpass filter will determine the pitch of the wind. One way to find the right center frequency for the bandpass filter is to take a look at a spectral analysis of a few wind samples in the same vein as the sound we are trying to emulate, use these as a starting point and adjust until your ears agree. Once we’ve achieved convincing settings for center frequency and bandwidth of the bandpass filter, we must animate our model so that the output is not static. For our model to be realistic we ‘re going to need to modulate both the overall amplitude of the output, as well as the center frequency of the bandpass filter. The frequency of the bandpass filter, which would be the perceived ‘pitch’ of the wind, needs our attention first. Using a classic modulation technique, such as an LFO with a periodic waveform, would sound too predictable and therefore sound artificial. Therefore, it makes more sense to use a random process. A truly random process, however, would cause the pitch of the wind to jump around, and the changes in the pitch of the wind would feel disconnected from one another, lacking the sense of overall purpose. In the real world the perceived pitch of wind doesn’t abruptly change from one value to another but rather ebbs and flows. The random process best suited to match this kind of behavior would be a random walk, a random process where the current value is computed from the previous value and constricted to a specific range to keep the values from jumping randomly from one pitch to another.

The center frequency for the overall starting pitch of the wind will be determined by the center frequency of the bandpass filter applied to the noise source, to which a random value will be added at semi-regular intervals. By increasing the range of possible random values at each cycle in the random walk process we can make our wind appear more or less erratic. The amount of time between changes should also not be regular but determined by a random range, which the sound designer can use to create a more or less rapidly changing texture.

A similar process can be applied to the amplitude of the output, so that we can add movement to the volume of the wind model as well. By randomizing the left and right amplitude output independently we can add stereo movement to our sound and increase the perceived width of the wind.

Making the Model Flexible

In order to make our model flexible and capable of quickly adapting to various situations that can arise in the context of a game, a few more additions would be welcome, such as the implementation of gusts, of an intense low rumble for particularly intense winds and the ability to add indoors vs. outdoors perspective.

Wind gusts are perceived as rapid modulation of amplitude and/or frequency; we can recreate gusts in our model by rapidly and abruptly modulating the center frequency, and/or the bandwidth of the filter.

In a scenario where the player is allowed to explore both indoors and outdoors spaces or if the camera viewpoint may change from inside to outside a vehicle, the ability to add occlusion to our engine would be very convenient indeed. By adding a flexible low pass filter at the output of our model, we can add occlusion by drastically reducing the high frequency content of the signal and lowering its output. In this setting, it will appear as if the wind is happening outside, and the player is indoors.

Rumble can be a convincing element to create a sense of intensity and power. We can add a rumble portion to our patch by using an additional noise source, such as pink noise, low pass filter its output and distort the output via saturation or distortion. This can act as a layer the sound designer may use to make our wind feel more like a storm and can be added at little additional computational cost.

The low rumble portion of the sound can itself become a model for certain types of sounds with surprisingly little additional work, such as a rocket ship, a jet engine and other combustion-based sounds. As you can, the wind-maker patch is but a starting point. We could make it more complex by adding more noise sources and modulating them independently. It would also be easy to turn it into a whoosh maker, room tone maker, ocean waves etc. The possibilities are limitless while the synthesis itself is relatively trivial computationally.

2. A Sword Maker in MaxMSP With Linear Modal Synthesis

Modal synthesis is often used in the context of physical modeling and is especially well suited to modeling resonant bodies such as membranes and 2D and 3D resonant objects. By identifying the individual modes or resonant frequencies of an object under various conditions, such as type of initial excitation, intensity and location of the excitation, we can understand which modes are activated under various conditions allowing us to model the sound the object would make, allowing us to build a model of it.

The term modes in acoustics is usually associated with the resonant characteristics of a room when a signal is played within it. Modes are usually used to describe the sum of all the potential resonant frequencies within a room or to identify individual frequencies. They are also sometimes referred to as standing waves, as the resonances created tend to stem from the signal bouncing back and forth against the walls, thus creating patterns of creative and destructive interference. A thorough study of resonance would require us to delve into differential equations and derivatives; however, for our purposes we can simplify the process by looking at the required elements for resonance to occur.

Resonance requires two elements:

- A driving force: an excitation, such as a strike.

- A driven vibrating system: often a 2D or 3D object.

When a physical object is struck, bowed or scrapped, the energy from the excitation source will travel throughout the body of the object, causing it to vibrate, thus making a sound. As the waves travel and reflect back onto themselves, complex patterns of interference are generated and energy is stored at certain places, building up into actual resonances. Modal synthesis is in fact a subset of physical modeling. Linear modal synthesis is also used in engineering applications to determine a system’s response to outside forces. The main characteristics that determine an object’s response to an outside force are:

- Object stiffness.

- Object mass.

- Object damping.

Other factors are to be considered as well, such as shape and location of the excitation source, and the curious reader is encouraged to find out more about this topic.

We distinguish two types of resonant bodies (Menzies):

- Non-diffuse resonant bodies: that exhibit clear modal responses, such as metal.

- Diffuse resonant bodies: exhibit many densely packed modes, typically wood or similar non-homogenous materials.

Modeling non-diffuse bodies is a bit simpler, as the resonances tend to happen in more predictable ways, as we shall see with the next example: a sword impact engine.

Modal synthesis is sometimes associated with Fourier synthesis, and while these techniques can be complementary, they are in fact distinct. The analysis stage is important to modal synthesis in order to identify relevant modes and their changes over time. In some cases, Fourier techniques may be used for the analysis but also to synthesize individual resonances. In this case, we can take a different approach; using a spectral analysis of a recording of a sword strike we can identify the most relevant modes and their changes over time. We will model the most important resonances (also referred to as strategic modeling) using highly resonant bandpass filters in MaxMSP. The extremely narrow bandwidth will make the filters ring and naturally decay over time, which will bypass the need for amplitude envelopes. The narrower the bandpass filter, the longer it will resonate. Alternatively, envelope sine waves can be used to model individual modes; sometimes both methods are used together.

Note: we are using filters past their recommended range in the MaxMSP manual; as always with highly resonant filters, do exercise caution as the potential for feedback and painful resonances that can incur hearing damage is possible. I recommend adding a brickwall limiter to the output of the filters or overall output of the model in order to limit the chances for potential accidents.

Spectral Analysis

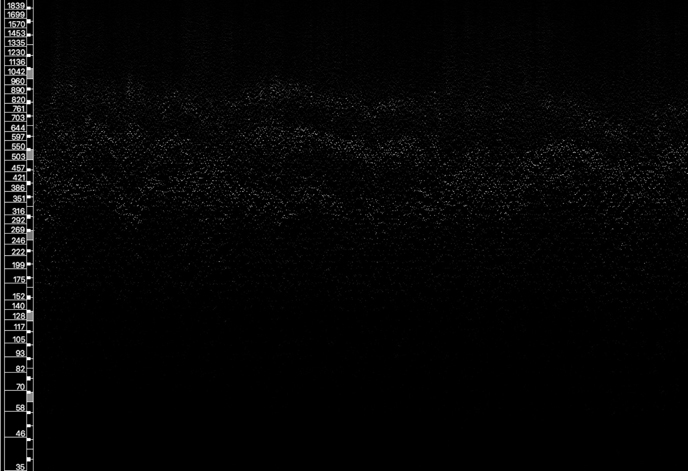

We’re going to start our modeling process by taking a look at a spectrogram of a sword strike. This will help us understand exactly what happens when a sword hit occurs:

Figure 10.5

Looking at this information can teach us quite a bit about the sound we are trying to model. The sound takes place over the course of 2.3 seconds, and this recording is at 96Khz, but we shall only concern ourselves with the frequencies up to 20Khz in our model. The sound starts with a very sharp, short noise burst lasting between 0.025 and 0.035 seconds. This is very similar to a broadband noise burst and is the result of the impact itself, at the point of excitation.

After the initial excitation, we enter the resonance or modal stage. A sword falling in the category of non-diffuse bodies exhibits clear resonances that are relatively easy to identify with a decent spectrogram. The main resonances fall at or near the following frequencies:

- 728Hz.

- 1,364Hz.

- 2,264Hz.

- 2,952Hz.

- 3,852Hz.

All these modes have a similar length and last 2.1 seconds into the sound, the first four being the strongest in terms of amplitude. Additionally, we can also identify secondary resonance at the following:

- 5,540Hz, lasting for approximately 1.4 second.

- 7,134Hz, lasting for approximately 0.6 second.

Further examination of this and other recordings of similar events can be used to extract yet more information, such as the bandwidth of each mode and additional relevant modes. To make our analysis stage more exhaustive it would be useful to analyze strikes at various velocities, as to identify the modes associated with high velocity impact and any changes in the overall sound that we might want to model.

We can identify two distinct stages in the sound:

- A very short burst of broadband noise, which occurs at the time of impact and lasts for a very short amount time (less than 0.035 seconds).

- A much longer resonant stage, made of a combination of individual modes, or resonances. We identified seven to eight resonances of interest with the spectrogram, five of which last for about 2.2 seconds, while the others decay from 1.4 seconds to 0.6 seconds approximately.

Next we will attempt to model the sound, using the information we extracted from the spectral analysis.

Modeling the Impulse

The initial strike will be modeled using enveloped noise and a click, a short sample burst. The combination of these two impulse sources makes it possible to model an impulse ranging from a mild burst to a long scrape and everything in between. Low-pass filtering the output of the impulse itself is a very common technique with physical modeling. A low pass-filtered impulse can be used to model impact velocity. A low-pass filtered impulse will result in fewer modes being excited and at lower amplitude, which is what you would expect in the case of a low velocity strike. By opening up the filter and letting all the frequencies of the impulse through, we excite more modes, at higher amplitude, giving us the sense of a high velocity strike.

Scrapes can be obtained by using a longer amplitude envelope on the noise source.

Modeling the Resonances

This model requires a bank of bandpass filters in order to recreate the modes that occur during the collision; however, we will group the filters into three banks, each summed to a separate mixing stage. We will split the filters according to the following: initial impact, main body resonances and upper harmonics, giving us control over each stage in the mix.

Making the Model Flexible

Once the individual resonances have been identified and successfully implemented, the model can be made flexible in a number of ways at low additional CPU overhead.

A lot can be done by giving the user control over the amplitude and length of the impulse. A short impulse will sound like an impact, whereas a sustained one will sound more like a scrape. Strike intensity may be modeled using a combination of volume control and low-pass filtering. A low-pass filter can be used to model the impact intensity by opening and closing for high velocity and low velocity impacts. Careful tuning of each parameter can be the difference between a successful and unusable model.

Similarly to the wind machine, this model is but a starting point. With little modification and research we can turn a sword into a hammer, a scrape generator or generic metallic collisions. Experiment and explore!

Conclusion

These two examples were meant only as an introduction to procedural audio and the possibilities it offers as a technology. Whether for linear media, where procedural audio offers the possibility to create endless variations at the push of a button or for interactive audio, for which it offers the prospect of flexible models able to adapt to endless potential scenarios, procedural audio offers an exciting new way to approach sound design. While procedural audio has brought to the foreground synthesis methods overlooked in the past such as modal synthesis, any and all synthesis methods can be applied toward procedural models, and the reader is encouraged to explore this topic further.

Note: full-color versions of the figures in this chapter can be found on the companion website for this book.