![]()

Triggers

Triggers define the code that runs in response to specific events. There are three types of triggers available in SQL Server:

- DML triggers fire when data modification occurs. You can use DML triggers in cases when you need to enforce specific business rules during data modifications, and the system does not have a dedicated data-access tier implemented. You can think about audit-trail functional, which captures who changed the data in the table as an example. When a system has multiple applications working with the database directly, audit-trail implementation based on triggers is the simplest one.

- DDL triggers fire in response to events that change database and server objects. You can use DDL triggers to prevent or audit those changes, for example, dropping tables, altering stored procedures, or creating new logins.

- Logon triggers fire during the user login process. You can use triggers for audit purposes as well as to prevent users from logging in to the system when needed.

DML triggers allow you to define the code that will be executed during data modification operations, such as insert, update, or delete. There are two types of DML triggers: INSTEAD OF and AFTER triggers. INSTEAD OF triggers run as a replacement of the actual data modification operation on a table or view. With these types of triggers, you can evaluate and/or implement business rules. You also need to issue the actual DML statement against a table if you want the data to be modified. AFTER triggers fire following a data modification operation, when the data in the table has been changed.

Let’s see what happens when we insert the data into a table that has triggers and constraints defined. First let’s create the table using the code shown in Listing 8-1.

Listing 8-1. Inserting data into the table: Table and two triggers creation

create table dbo.OrderLineItems

(

OrderId int not null,

OrderLineItemId int identity(1,1) not null,

ProductId int not null,

ProductName nvarchar(64) not null,

CreationDate smalldatetime not null,

constraint DEF_OrderLineItems_CreationDate

default GetUtcDate(),

Quantity decimal(9,3) not null,

Price smallmoney not null,

constraint PK_OrderLineItems

primary key clustered(OrderId, OrderLineItemId),

constraint CHK_OrderLineItems_PositiveQuantity

check (Quantity > 0),

constraint FK_OrderLineItems_Orders

foreign key(OrderId)

references dbo.Orders(OrderId),

constraint FK_OrderLineItems_Products

foreign key(ProductId)

references dbo.Products(ProductId)

)

go

create trigger trg_OrderLineItems_InsteadOfInsert on dbo.OrderLineItems

instead of insert

as

begin

if @@rowcount = 0

return

set nocount on

if not exists(select * from inserted)

return

insert into dbo.OrderLineItems(OrderId, ProductId, ProductName, Quantity, Price)

select i.OrderId, i.ProductId, p.ProductName, i.Quantity, i.Price

from inserted i join dbo.Products p on

i.ProductId = p.ProductId

end

go

create trigger trg_OrderLineItems_AfterInsert on dbo.OrderLineItems

after insert

as

begin

if @@rowcount = 0

return

set nocount on

if not exists(select * from inserted)

return

if exists

(

select *

from inserted i join dbo.Orders o on

i.OrderId = o.OrderId

where o.Status = 'CLOSED'

)

begin

raiserror('Cannot change the closed order',16,1)

rollback tran

return

end

end

go

The table has primary and foreign keys as well as default and check constraints. INSTEAD OF and AFTER triggers are also defined. Let’s take a look what happens when we run an insert statement against the table, as shown in Listing 8-2.

Listing 8-2. Inserting data to the table: Insert statement

insert into dbo.OrderLineItems(OrderId, ProductId, ProductName, Quantity, Price)

values(@OrderId, @ProductId, @ProductName, @Quantity, @Price)

In the first step, SQL Server creates and populates inserted and deleted virtual tables that contain the information about the new and old versions of the rows affected by the DML statement. Those tables will be accessible in the INSTEAD OF trigger. In our case, the inserted table would have one row with the values that we provided in the insert statement and the deleted table would be empty because there is no “old” version of the row when we insert it. We will talk about those tables later in this chapter, but for now let us remember one very important thing: DML triggers have the statement scope and would be fired just once regardless of how many rows are affected. The virtual tables could have more than one row, and the implementation needs to handle that correctly.

In the next step, SQL Server fires the trg_OrderLineItems_InsteadOfInsert INSTEAD OF trigger. In the trigger, we are implementing the business logic and executing an insert statement against the actual table. Our implementation of the trigger ignores the ProductName value provided by original insert statement and replaces it with the actual product name from Products table. Inner join also filters out the rows that do not have corresponding products in the system. Even if we enforce the same rule by foreign key constraint, such an implementation behaves differently. Violation of the foreign key constraint terminates the entire batch without inserting any rows, while a join in the trigger just filters out incorrect rows and inserts the correct ones.

![]() Tip Whether you should use such an approach, ignoring the incorrect rows rather than terminating the batch, depends on the business requirements. Although it can help in some cases, it complicates system troubleshooting. At a bare minimum, I suggest that you log information about the skipped rows somewhere in the system.

Tip Whether you should use such an approach, ignoring the incorrect rows rather than terminating the batch, depends on the business requirements. Although it can help in some cases, it complicates system troubleshooting. At a bare minimum, I suggest that you log information about the skipped rows somewhere in the system.

As a next step, when the INSTEAD OF trigger runs the insert statement, SQL Server performs the following tasks in the sequence:

- It assigns the default constraint value to CreationDate column.

- It validates the not null, primary key, unique, check constraints, and unique indexes in the table, and it terminates the statement in the case of constraint or uniqueness violations.

- It checks foreign key constraints, and terminates the statement in case of violations. Otherwise, it inserts the new rows into the table.

![]() Note AFTER triggers do not fire in the case of constraints or index uniqueness violations.

Note AFTER triggers do not fire in the case of constraints or index uniqueness violations.

Finally, we have the new inserted and deleted tables created and AFTER triggers fired. At this point, the new row has already been inserted into the table and, if we need to rollback the changes, SQL Server would undo the insert operation. In the example above, it would be more efficient to have the order status check implemented as part of INSTEAD OF rather than the AFTER trigger.

As I already mentioned, triggers are running on a per-statement rather than a per-row basis. Our implementation needs to work correctly when inserted and deleted tables have more than one row. For example, the implementation in Listing 8-3 would fail with the exception that the subquery used in the set operator returned more than one row if multiple rows were updated.

Listing 8-3. Triggers implementation: Incorrect implementation

create trigger Trg_OrderLineItems_AfterUpdate_Incorrect on dbo.OrderLineItems

after update

as

begin

-- Some code here

declare

@OrderId int

set @OrderId = (select OrderId from inserted)

-- Some code here

end

Error Message:

Msg 512, Level 16, State 1, Procedure Trg_OrderLineItems_AfterUpdate_Incorrect, Line 9

Subquery returned more than 1 value. This is not permitted when the subquery

follows =, !=, <, <= , >, >= or when the subquery is used as an expression.

Alternatively, triggers would fire even if the DML statement does not change (insert, update, or delete) any data. In that case, both inserted and deleted tables would be empty. In order to create an efficient implementation, you need to have a few checks in the beginning of the trigger to prevent unnecessary code from being executed. Let’s look at our implementation again, as shown in Listing 8-4.

Listing 8-4. Triggers implementation: Preventing unnecessary code from being executed

create trigger trg_OrderLineItems_InsteadOfInsert on dbo.OrderLineItems

instead of insert

as

begin

if @@rowcount = 0

return

set nocount on

if not exists(select * from inserted)

return

-- Some code here

end

The first statement in the trigger—if @@rowcount= 0—checks if our insert statement did, in fact, insert any rows. As an example, you can think about the insert/select pattern when the select query did not return any data. You would like to avoid having a trigger code from being executed in such cases.

The second statement—set nocount on—stops SQL Server from returning the message that displays the number of rows affected by the code in the trigger. Some client libraries do not handle multiple messages correctly.

The last statement—if not exists(select * from inserted) —is trickier. While @@rowcount can help you detect when there are no affected rows by INSERT, UPDATE, or DELETE statements, it would not work very well with MERGE. That operator, introduced in SQL Server 2008, allows you to combine all three INSERT, DELETE, and UPDATE actions into the single statement. Triggers would fire even if there were no corresponding actions. @@rowcount in the trigger represents the total number of the rows affected by MERGE statement. Let’s create a simple table with three triggers that display the value of @@rowcount and the number of the rows in inserted and deleted tables. You can see this code in Listing 8-5.

Listing 8-5. Triggers and MERGE statement: Table and three triggers creation

create table dbo.Data(Col int not null)

go

create trigger trg_Data_AI on dbo.Data

after insert

as

select

'After Insert' as [Trigger]

,@@RowCount as [RowCount]

,(select count(*) from inserted) as [Inserted Cnt]

,(select count(*) from deleted) as [Deleted Cnt]

go

create trigger trg_Data_AU on dbo.Data

after update

as

select

'After Update' as [Trigger]

,@@RowCount as [RowCount]

,(select count(*) from inserted) as [Inserted Cnt]

,(select count(*) from deleted) as [Deleted Cnt]

go

create trigger trg_Data_AD on dbo.Data

after delete

as

select

'After Delete' as [Trigger]

,@@RowCount as [RowCount]

,(select count(*) from inserted) as [Inserted Cnt]

,(select count(*) from deleted) as [Deleted Cnt]

go

Now let’s run the MERGE statement, as shown in Listing 8-6.

Listing 8-6. Triggers and MERGE statement:MERGE

merge into dbo.Data as Target

using (select 1 as [Value]) as Source

on Target.Col = Source.Value

when not matched by target then

insert(Col) values(Source.Value)

when not matched by source then

delete

when matched then

update set Col = Source.Value;

Because the dbo.Data table is empty, the merge statement would insert one row there. Let’s look at the output from the triggers, as shown in Figure 8-1.

Figure 8-1. @@rowcount, inserted and deleted tables with MERGE operator

As you see, all three triggers were fired. In each of them, @@rowcount represented the number of rows affected by the MERGE. However, with the AFTER UPDATE and AFTER DELETE triggers, the inserted and deleted tables were empty. You need to check the content of those tables to prevent the code in the trigger from being executed.

As you can guess, there is overhead associated with the triggers. At a bare minimum, SQL Server needs to create inserted and deleted virtual tables when triggers are present. SQL Server does not analyze if there is any logic that references those tables within the trigger and always creates them. While the overhead associated with INSTEAD OF triggers is not particularly large, this is not the case with AFTER triggers. AFTER triggers store the data from those tables in the special part of tempdb called version store, keeping it until the end of transaction.

![]() Note SQL Server uses version store to maintain multiple versions of the rows, and it supports several features, such as optimistic transaction isolation levels, online indexing, multiple active result sets (MARS), and triggers. We will talk about version store in greater detail in Chapter 21, “Optimistic Isolation Levels.”

Note SQL Server uses version store to maintain multiple versions of the rows, and it supports several features, such as optimistic transaction isolation levels, online indexing, multiple active result sets (MARS), and triggers. We will talk about version store in greater detail in Chapter 21, “Optimistic Isolation Levels.”

While version store usage introduces addition tempdb load, there is another important factor that you need to keep in mind. In order to maintain the links between the new and old versions of the rows, AFTER UPDATE and AFTER DELETE triggers add 14 bytes version store pointer to the rows they modified or deleted, which will stay until the index has been rebuilt. That could increase the row size and introduce the fragmentation similar to the insert/update pattern discussed in Chapter 5, “Index Fragmentation.” Let’s look at the example and create the table with some data, as shown in Listing 8-7.

Listing 8-7. Triggers and fragmentation: Table creation

create table dbo.Data

(

ID int not null identity(1,1),

Value int not null,

LobColumn varchar(max) null,

constraint PK_Data

primary key clustered(ID)

);

;with N1(C) as (select 0 union all select 0) -- 2 rows

,N2(C) as (select 0 from N1 as T1 cross join N1 as T2) -- 4 rows

,N3(C) as (select 0 from N2 as T1 cross join N2 as T2) -- 16 rows

,N4(C) as (select 0 from N3 as T1 cross join N3 as T2) -- 256 rows

,N5(C) as (select 0 from N4 as T1 cross join N4 as T2 ) -- 65,536 rows

,Numbers(Num) as (select row_number() over (order by (select null)) from N5)

insert into dbo.Data(Value)

select Num

from Numbers;

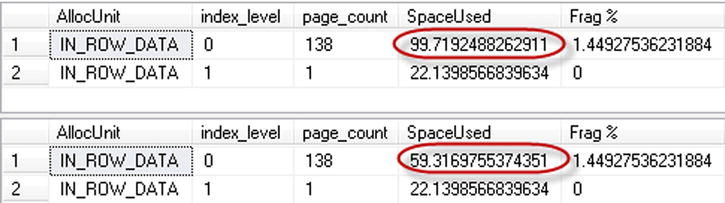

Now let’s delete every other row in the table, looking at the index’s physical statistics before and after the deletion. The code is found in Listing 8-8 and the results are shown in Figure 8-2.

Listing 8-8. Triggers and fragmentation: Physical index stats before and after deletion

select

alloc_unit_type_desc as [AllocUnit],

index_level,

page_count,

avg_page_space_used_in_percent as [SpaceUsed],

avg_fragmentation_in_percent as [Frag %]

from sys.dm_db_index_physical_stats(DB_ID(),OBJECT_ID(N'dbo.Data'),1,null,'DETAILED'),

delete from dbo.Data

where ID % 2 = 0;

select

alloc_unit_type_desc as [AllocUnit],

index_level,

page_count,

avg_page_space_used_in_percent as [SpaceUsed],

avg_fragmentation_in_percent as [Frag %]

from sys.dm_db_index_physical_stats(DB_ID(),OBJECT_ID(N'dbo.Data'),1,null,'DETAILED'),

Figure 8-2. Clustered index physical statistics after DELETE statement without AFTER DELETE trigger

As you remember, the DELETE operation does not physically remove the row from the page and just marks it as a ghost row. In our example, the only thing that was changed is amount of free space on the pages.

Now let’s truncate the table and populate it with the same data as before with code shown in Listing 8-9.

Listing 8-9. Triggers and fragmentation: Populating table with the data

truncate table dbo.Data;

;with N1(C) as (select 0 union all select 0) -- 2 rows

,N2(C) as (select 0 from N1 as T1 cross join N1 as T2) -- 4 rows

,N3(C) as (select 0 from N2 as T1 cross join N2 as T2) -- 16 rows

,N4(C) as (select 0 from N3 as T1 cross join N3 as T2) -- 256 rows

,N5(C) as (select 0 from N4 as T1 cross join N4 as T2 ) -- 65,536 rows

,Numbers(Num) as (select row_number() over (order by (select null)) from N5)

insert into dbo.Data(Value)

select Num

from Numbers;

Next, let’s create the empty AFTER DELETE trigger on the table, as shown in Listing 8-10.

Listing 8-10. Triggers and fragmentation: Trigger creation

create trigger trg_Data_AfterDelete

on dbo.data

after delete

as

return;

If you run the same deletion statement as you did previously, you would see the results shown in Figure 8-3.

Figure 8-3. Clustered index physical statistics after DELETE statement with AFTER DELETE trigger

Version store pointers increased the size of the rows and led to the massive page splits and fragmentation during DELETE operations. Moreover, in the end, we almost doubled the number of pages in the index.

![]() Note In some cases, when there is only IN_ROW allocation involved (for example, when a table does not have LOB columns nor variable width columns, which can potentially require it to store data in the ROW_OVERFLOW pages), SQL Server optimizes that behavior and does not add 14 bytes version store pointers to the rows.

Note In some cases, when there is only IN_ROW allocation involved (for example, when a table does not have LOB columns nor variable width columns, which can potentially require it to store data in the ROW_OVERFLOW pages), SQL Server optimizes that behavior and does not add 14 bytes version store pointers to the rows.

Triggers are always running in the same transaction as the statement that fired them. We need to make trigger execution time as short as possible to minimize the duration of locks being held.

![]() Note We will talk about different lock types, their behavior, and their lifetime in Part 3, “Locking, Blocking, and Concurrency.”

Note We will talk about different lock types, their behavior, and their lifetime in Part 3, “Locking, Blocking, and Concurrency.”

DDL Triggers

DDL triggers allow you to define the code that executes in response to various DDL events, such as creation, alteration, or deletion of database objects, changing permissions, and updating statistics. You can use these triggers for audit purposes as well as to restrict some operations on database schema. For example, the trigger shown in Listing 8-11 would prevent accidental altering or dropping of a table, and it could be used as the safety feature in production environment.

Listing 8-11. DDL Triggers: Preventing altering and dropping tables in production

create trigger trg_PreventAlterDropTable on database

for alter_table, drop_table

as

begin

print 'Table cannot be altered or dropped with trgPreventAlterDropTable trigger enabled'

rollback

end

While this approach helps in keeping tables and their schemas intact, it introduces one potential problem. DDL triggers fire after an operation is completed. As a result, using our example, if you have the session altering the table, SQL Server would perform the alteration before the trigger fires and then rollback all of the changes.

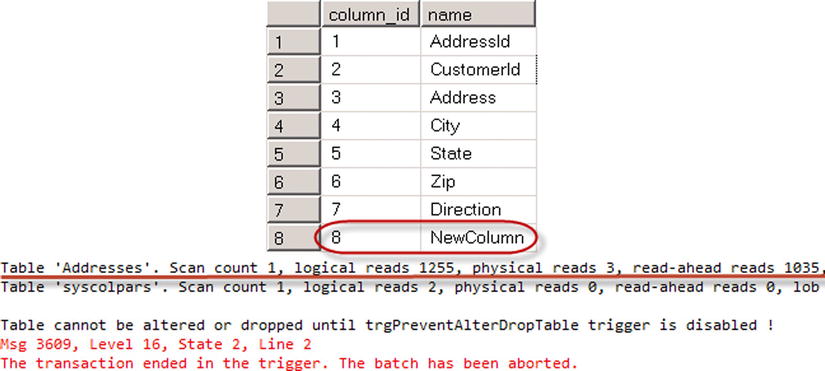

Let’s prove it now. As a first step, let’s alter the trigger to capture the information about table structure during execution. You can see the code that does this in Listing 8-12.

Listing 8-12. DDL Triggers: Trigger code

alter trigger trg_PreventAlterDropTable on database

for alter_table

as

begin

declare

@objName nvarchar(257) =

eventdata().value('/EVENT_INSTANCE[1]/SchemaName[1]','nvarchar(128)') +

'.' + eventdata().value('/EVENT_INSTANCE[1]/ObjectName[1]','nvarchar(128)')

select column_id, name

from sys.columns

where object_id = object_id(@objName)

print ' Table cannot be altered or dropped with trgPreventAlterDropTable trigger enabled'

rollback

end

This trigger returns the list of columns in the table at the moment the trigger fires.

Now let’s run the ALTER TABLE statement that adds a persistent computed column to the table, capturing I/O statistics during the execution. You can see the code for doing this in Listing 8-13.

Listing 8-13. DDL Triggers: ALTER TABLE statement

set statistics io on;

alter table Delivery.Addresses

add NewColumn as AddressId persisted;

This alteration adds another column to every data row in the table. We can see the results in Figure 8-4.

Figure 8-4. Table structure in DDL trigger with I/O statistics of the operation

As you see, when the trigger fires, the table has already been altered and a new column called NewColumn is already there. As a result, when the trigger rolls back the transaction, SQL Server needs to undo the table alteration. This process can be very inefficient, especially with the large tables.

As you already have seen, we were using the EVENTDATA function to get the information about the DDL event from within the trigger. This function returns an xml value that contains information about the type of event, session and DDL command, affected object, as well as other attributes. For instance, in our example, you would get the following XML code.

<EVENT_INSTANCE>

<EventType>ALTER_TABLE</EventType>

<PostTime>2013-05-12T12:26:44.453</PostTime>

<SPID>54</SPID>

<ServerName>SQL2012-STD1SQL2012</ServerName>

<LoginName>SQL2012-STD1Administrator</LoginName>

<UserName>dbo</UserName>

<DatabaseName>SqlServerInternals</DatabaseName>

<SchemaName>Delivery</SchemaName>

<ObjectName>Addresses</ObjectName>

<ObjectType>TABLE</ObjectType>

<AlterTableActionList>

<Create>

<Columns>

<Name>NewColumn</Name>

</Columns>

</Create>

</AlterTableActionList>

<TSQLCommand>

<SetOptions ANSI_NULLS="ON" ANSI_NULL_DEFAULT="ON" ANSI_PADDING="ON" QUOTED_IDENTIFIER="ON" ENCRYPTED="FALSE" />

<CommandText>alter table Delivery.Addresses

add NewColumn as AddressId persisted</CommandText>

</TSQLCommand>

</EVENT_INSTANCE>

DDL triggers can be created in either server or database scope. Some of the DDL events, CREATE_DATABASE for example, would require the trigger to have the server scope. Others events, ALTER_TABLE for example, could use either of them. When such a trigger is created on the server scope, it would fire in the instance of the corresponding event in any database on the server.

In SQL Server Management Studio, database level DDL triggers can be found under Programmability node in the database. Server level DDL triggers are displayed under Server Objects node. You can also use sys.triggers and sys.server_triggers catalog views to find them with T-SQL.

Logon triggers fire after a user successfully authenticates on the server, but before the session has been established. Some of the scenarios where you can use logon triggers are for preventing the same user from opening multiple database connections, or for restricting access to the system based on some custom criteria. The trigger in Listing 8-14 prevents HRLogin login from accessing the system outside of business hours.

Listing 8-14. Logon trigger

create trigger trg_Logon_BusinessHoursOnly

on all server

for logon

as

begin

declare

@currTime datetime = current_timestamp

if original_login() = 'HRLogin' and

( -- Check if today is weekend

((@@datefirst + datepart(dw, @currTime)) % 7 in (0,1)) or

(cast(@currTime as time) >= '18:00:00') or

(cast(@currTime as time) < '8:00:00')

)

rollback

end

Like DDL triggers, there is an EVENTDATA function that returns XML with additional information about a logon event. An example of this XML code follows.

<EVENT_INSTANCE>

<EventType>LOGON</EventType>

<PostTime>2013-05-12T17:55:40.090</PostTime>

<SPID>55</SPID>

<ServerName>SQL2012-STD1SQL2012</ServerName>

<LoginName>SQL2012-STD1Administrator</LoginName>

<LoginType>Windows (NT) Login</LoginType>

<SID>sid</SID>

<ClientHost><local machine></ClientHost>

<IsPooled>0</IsPooled>

</EVENT_INSTANCE>

You need to make sure that the logon trigger executes as fast as possible to prevent possible connection timeouts. You need to be very careful if the trigger is accessing external resources where response time is not guaranteed. Think about a CLR function that performs additional authentication against a corporate Active Directory as an example. That function needs to set a short timeout for AD queries and correctly handle the possible exceptions. Otherwise, nobody would be able to login to SQL Server.

UPDATE( ) and COLUMNS_UPDATED( ) functions

The UPDATE and COLUMNS_UPDATED functions allow you to check if specific columns were affected by insert or update operations.

The UPDATE function accepts a column name as the parameter and returns a Boolean value that shows if the column was affected by the statement that fires the trigger. For insert operations, it always returns TRUE. For update operations, it would return TRUE if an attempt was made or, more specifically, if a column was present in the list of columns that needed to be updated, regardless of whether it changed the value or not. For example, in the Listing 8-15, update statement does not change the value of column C in the row. Although, update(C) function in the trigger return TRUE because column C was included in the list of the columns in update statement.

Listing 8-15. UPDATE() function behavior

create trigger trg_T_AU

on dbo.T

after update

as

begin

-- Some code here

if update(C)

-- Some code here

end

go

declare @V int = null

update T set C = IsNull(@V, C) where ID = 1;

Listing 8-16 shows an example of the trigger that recalculates the order total when a line item price or quantity changes.

Listing 8-16. UPDATE() function implementation example

create trigger trg_OrderLineItems_AfterUpdate

on dbo.OrderLineItems

after update

as

begin

-- Some code here

if update(Quantity) or update(Price)

begin

-- recalculating order total

update o

set

o.Total =

(

select sum(li.Price * li.Quantity)

from dbo.OrderLineItems li

where li.OrderId = o.OrderId

)

from dbo.Orders o

where o.OrderId in (select OrderId from inserted)

end

-- Some code here

end

The COLUMNS_UPDATED function returns the varbinary value that represents the bitmask where each bit is set to 1 in case the column was affected by the statement. The order of the bits, from least significant to the most significant, corresponds to column_id value from the sys.columns catalog view.

Assuming that the column_id for the Quantity column is 4 and the column_id for the Price column is 5, we can replace the if operator above with the following bitmask comparison: if columns_updated() & 24 <> 0.

The integer value 24 represents the binary value 11000. The result of bitwise & (and) operator would be not equal to zero if either of the corresponding bits returned by the columns_updated function is set to one.

Both DDL and DML triggers are nested when their actions fire the triggers in the other tables. For example, you can have an AFTER UPDATE trigger on Table A that updates Table B, which has its own AFTER UPDATE trigger defined. When nested triggers are enabled, the trigger on Table B would be fired. You can control that behavior by setting the nested trigger server configuration option. The code in Listing 8-17 disables the nested trigger execution.

Listing 8-17. Disabling nested triggers

EXEC sp_configure 'show advanced options', 1;

GO

RECONFIGURE ;

GO

EXEC sp_configure 'nested triggers', 0 ;

GO

RECONFIGURE;

GO

By default, nested trigger execution is enabled. In the case of infinite loops, SQL Server terminates the execution and rolls back the transaction when the nesting level exceeds 32.

Another database option, recursive_triggers, controls if an AFTER trigger can fire itself. There are two types of recursion. With direct recursion, the trigger fires itself by performing the same action against the table where it has been defined. For example, when an AFTER UPDATE trigger updates the same table. By default, direct recursion is disabled. Indirect recursion, on the other hand, happens when Table A performs the action that fires the trigger in Table B and the trigger on Table B performs the action that fires the same trigger on Table A. To prevent indirect recursion from happening, we need to disable the nested triggers configuration option on the server level.

![]() Caution You need to be careful about changing the nested triggers or recursive_triggers options. Developers often rely on default trigger behavior, and you can break existing systems by changing those options.

Caution You need to be careful about changing the nested triggers or recursive_triggers options. Developers often rely on default trigger behavior, and you can break existing systems by changing those options.

First and Last Triggers

In a situation where a table has multiple AFTER triggers, you can specify what triggers are firing first and last by using the sp_settriggerorder system stored procedure. For example, the code in Listing 8-18 makes trg_Data_AUAudit the first in the execution.

Listing 8-18. Specifying triggers execution order

sp_settriggerorder @triggername = ' trg_Data_AUAudit', @order = 'first', @stmttype = 'UPDATE'

Each action—INSERT, UPDATE, and DELETE—can have its own first and last triggers specified. The value will be cleared when trigger is altered.

You cannot control the order in which triggers fire in any other way.

Every session has up to 128 bytes of binary data value associated with it. That value has the session scope and it can be used when you need to pass some parameters to or from triggers. You can set the value with the SET CONTEXT_INFO statement and retrieve it with the CONTEXT_INFO function.

As an example, let’s modify the DDL trigger trg_PreventAlterDropTable to allow table alteration when context information contains the string: ALLOW_TABLE_ALTERATION. The code for doing this is shown in Listing 8-19.

Listing 8-19. CONTEXT_INFO: Trigger code

create trigger trg_PreventAlterDropTable on database

for alter_table

as

begin

if isnull(convert(varchar(22),context_info()),'') <> 'ALLOW_TABLE_ALTERATION'

begin

print 'Table alteration is not allowed in such context'

rollback

end

end

To be able to alter the table, the session needs to set context_info, as shown in Listing 8-20.

Listing 8-20. CONTEXT_INFO: Setting CONTEXT_INFO value

declare

@CI varbinary(128) = convert(varbinary(22),'ALLOW_TABLE_ALTERATION')

set context_info @CI

alter table Delivery.Addresses add NewColumn int null

Context binary data also exposed through context_info column in sys.dm_exec_request, sys.dm_exec_sessions and sys.processes system views.

Summary

Triggers can help in certain scenarios. DDL triggers can validate and prevent unwanted metadata changes in the system. Login triggers can help implement custom authentication. DML triggers can help centralize some logic in the code, especially when there is no dedicated data access tier in the system. One example is the implementation of an audit trail function when you want to capture the information about users who change data. While there are other approaches to implement such tasks, trigger-based implementation can be the simplest.

Unfortunately, triggers come at a high cost. AFTER DML triggers introduce overhead related to the maintenance of inserted and deleted virtual tables. This leads to extra tempdb load and index fragmentation. INSTEAD OF triggers could lead to system supportability issues. It is easy to forget or overlook the logic implemented in such triggers.

DDL triggers run after schema changes are done. While you can rollback those changes from within the triggers, such operations can be very expensive in terms of I/O, CPU, and transaction log activity, especially with the large tables.

Finally, LOGON triggers can prevent users from logging into the system when incorrectly implemented due to bugs in the logic or connection timeouts introduced by long execution times, especially when those triggers access external resources.

Triggers always run in the context of a transaction. Any active locks; that is, data and schema, will be held while a trigger is running and until the transaction is completed. You need to make your triggers as quick and efficient as possible and avoid any actions that can potentially take a long time. For example, it is the bad idea to implement an audit trail function that uses an external (linked) server for the logging. If that server goes down, it will take a long time for a connection attempt to timeout. In addition, if you did not handle the exception properly, it would rollback the original transaction.

Keeping all of these implications in mind, you need to be very careful when dealing with the triggers. It is better to avoid them unless absolutely necessary.