Alerting is a contentious topic because no one wants to be alerted. An alert is a cue that requires you to work, so if an alert is implemented poorly, or without thought, it can quickly disrupt your life and sour your relationship with a project. It is such a common problem that almost every field, company, and person has an opinion on the topic. Doctors have policies that state how long they can work, along with when and how they can be paged. The Federal Aviation Administration (FAA) of the United States has requirements for pilots, and many countries keep a close eye on the health of their military units that work in high-stress situations.

Note

Being paged is an old but common term for someone trying to alert you. It comes from the days when the person on call carried a physical device, called a pager, that would make noise and display a phone number to call, so you knew you had to do something.

As we have become more connected online, some organizations have come to expect you to answer every email quickly, no matter the time of day. This can be overwhelming to you as an individual. When do you disconnect if the device you always carry can pull you back into work at any moment? Defining your relationship with work, making sure that your employer agrees with it, and making sure that it is at the right level for you, your family, your product, and your team is not trivial.

This all leads to the question of when to alert. What is the query that forces someone to get notified that something is wrong? We start with this question and not with who we alert, or how we alert, because of the question we asked in Chapter 2, Monitoring—is our service working?

If the answer to this question is no, then it should create the most serious of alerts. You want someone to know and react. Often, this means a customer is affected. I usually think of a serious incident as being something that a customer will see and that prompts them to get in touch with us because our product is broken. For a media website, example queries are:

- Can our viewers see our content and access our website?

- Can our content creators create content?

For an infrastructure project, an incident could be that jobs are not running, data is being lost in the database, or services are not able to talk to each other. You want to focus on symptoms (things that are actually broken) and not things that could be causing an outage. We call this category of failures "page-worthy," "wake someone up," or "critical" alerts. I will stick with the term "critical" for the rest of the chapter, but I tend to think of this category of alerts as the ones that I would want to be woken up for at three in the morning.

The second category of alerts is the "eventually," "this will probably hurt us soon," "oh, that is weird," or "non-critical" bucket. I will stick with "non-critical" for the rest of the chapter, but this category is everything else you might worry about that doesn't have to be dealt with right now. Certain organizations might separate alerts in more detail and specify reaction times based on the type of alert:

- Critical: Respond within 30 minutes

- Non-critical, today: Respond within 24 hours

- Non-critical, eventually: Put into the ticket work queue for someone to pick up in the next few weeks

This categorization is an example. Whatever your categorization is, it will probably come from your team's SLIs, SLOs, and SLAs, if you have them. For example, if you are breaking an SLO or SLA, that is probably worthy of a critical alert.
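The example levels above can be written down as a small routing table. This is only a sketch: the level names and response windows come from the list above, while the function name `route_alert` and the delivery channels (page, chat, ticket) are assumptions for illustration.

```bash
#!/bin/bash

# route_alert LEVEL
# Prints a (hypothetical) delivery channel and the expected response window.
route_alert() {
  local level="$1"
  case "$level" in
    critical)
      echo "page: respond within 30 minutes" ;;
    non-critical-today)
      echo "chat: respond within 24 hours" ;;
    non-critical-eventually)
      echo "ticket: pick up in the next few weeks" ;;
    *)
      # Refuse unknown levels so a typo never silently drops an alert.
      echo "unknown level: $level" >&2
      return 1
      ;;
  esac
}

route_alert critical
```

Keeping the mapping in one place makes it easier for the team to review and agree on the levels.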

The key to this bucketing is defining what type of reaction is necessary for the person who is reacting, and also making sure that the person responding agrees with the level. If you send an urgent alert to someone and there is nothing to do, or they do not believe it is urgent, then when something that truly is urgent comes up, the chance that they will ignore it will increase dramatically. See the story of the boy who cried wolf for an example: a shepherd lies to his village about there being a wolf attacking his flock. He repeatedly lies about the wolf. The villagers stop believing him. When there finally is a wolf, the wolf comes in and kills the shepherd and his flock. No one from the village comes to help him as he cries for help and is eaten alive. This is dramatic, but if a service keeps notifying you about things that you do not care about, you will ignore it. This is human nature.

One trick to solving this problem is to have a weekly email thread, or discussion, about all of the past week's alerts:

- Did we respond appropriately to all alerts? If we received an alert, did we care? If not, we should probably change the alert, or delete it, because we aren't reacting to it.

- Was each alert at the appropriate level? If we were woken up for something that we could not act on, was it worth waking up for? Should we have just waited until the morning to look at it? The inverse is true as well—did a subtler alert need to be more critical to gain more immediate attention?

- Should we keep this alert? Alerts take time away from the receiver. Alerts often cause adrenaline surges and interrupt everything. If this alert is not important, or a good use of our time, we should delete it for the sake of our sanity.

- Should we change this alert in any way? Maybe the text of the alert does not tell us what to look at or is not worded in a way that everyone understands what it is trying to tell us.

- Did things happen that we wish we were alerted about? Should we create new alerts for things that we should have seen but didn't?

These questions let us evaluate each alert that fired and decide as a group whether the alerts that fired were useful. This is where you can find out whether one engineer feels that an alert is too sensitive and he or she was never able to correlate the alert with a problem. You can also decide to adjust the thresholds for an alert that is valuable but doesn't work properly during off-hours because of low traffic.
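One way to adjust a threshold for low traffic is to require a minimum number of requests before the error ratio counts. The following is a minimal sketch under assumed numbers: the function name `should_alert`, the 5 percent threshold, and the 100-request minimum are all illustrative, not recommendations.

```bash
#!/bin/bash

# Both values are assumptions; tune them for your own service.
MAX_ERROR_PERCENT="${MAX_ERROR_PERCENT:-5}"
MIN_REQUESTS="${MIN_REQUESTS:-100}"

# should_alert ERRORS REQUESTS
# Succeeds (exit 0) when the error ratio in the window is at or above
# MAX_ERROR_PERCENT, but only if the window saw at least MIN_REQUESTS.
should_alert() {
  local errors="$1" requests="$2"
  # Too little traffic to judge: one failure would look like an outage.
  if (( requests < MIN_REQUESTS )); then
    return 1
  fi
  # Integer math: errors/requests >= MAX/100 is errors*100 >= requests*MAX.
  (( errors * 100 >= requests * MAX_ERROR_PERCENT ))
}

if should_alert 10 200; then
  echo "alert: error ratio too high"
fi
```

Note that this sketch stays silent when there is no traffic at all, which can itself be a symptom worth a separate alert.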

In essence, like monitoring, alerting is a constantly changing thing. Be wary of alerting too often, because you will lower the effectiveness for whoever receives the alert. Constant evaluation is needed to make sure that alerts are useful and reacted to correctly.
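A weekly review is easier with a tally of which alerts fired most. The sketch below assumes a hypothetical log with one line per fired alert, formatted as a timestamp, a tab, and the alert name; your alerting tool's export will likely look different.

```bash
#!/bin/bash

# count_alerts
# Reads "TIMESTAMP<TAB>ALERT NAME" lines on stdin and prints
# "COUNT<TAB>ALERT NAME", with the noisiest alert first.
count_alerts() {
  awk -F'\t' '
    { counts[$2]++ }
    END { for (name in counts) print counts[name] "\t" name }
  ' | sort -rn
}

# Usage (alerts.tsv is a hypothetical export of last week's alerts):
#   count_alerts < alerts.tsv
```

An alert at the top of this list that nobody acted on is a good candidate for the "should we keep this alert?" question.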

Once you have defined when to alert, it is time to decide where alerts will go and how they will get to people. You can send them in many ways. Some common ways to notify people include:

- A phone call

- An SMS message

- A dedicated app that makes loud noises

- A group chat message

- An email

- A loud siren in an office

Some delivery methods are better than others. Choosing a delivery method depends on the frequency and volume of your alerts, and also on how people are expected to respond to an alert. A loud siren would probably result in everyone quitting if you were at a small software start-up, but for software running a nuclear reactor, maybe a loud siren isn't a bad idea. An IRC channel is an excellent place for a constant stream of alerts but can also be a place where you might miss some things. Google used to have a channel that was a stream of every alert, for every service across the entire company. This was great because you could see when similar failures hit teams and know if there was some dependency issue hitting lots of teams. The downside of this channel was that there were always alerts firing, so you couldn't easily follow anything.

An email can be great because you can include graphs and longer descriptions. More modern group chat applications, like Slack, allow you to embed images with messages from bots, so you can push alerts with context attached. Phone calls can be great because you can configure most smartphones to always ring when calls from a certain number come in. Equally, mobile apps like PagerDuty and VictorOps can override system volume levels on a user's smartphone and make loud noises when things are critical. Sometimes, just a text message will do. You can use a service like Twilio to send a text message when there is an issue. One of the world's simplest monitoring and alerting systems is the following script on a one-minute cron:

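```bash
#! /bin/bash

# Domain to check.
DOMAIN="fake.example.com"

# Fetch the page and keep only the HTTP status code.
status_code=$(curl -sL -o /dev/null -w "%{http_code}" $DOMAIN)

if [[ $status_code -ne 200 ]]; then
  echo "$DOMAIN is down!"
  # Send an SMS through Twilio. The account SID, token, and phone number
  # are read from the environment.
  curl -s -XPOST "https://api.twilio.com/2010-04-01/Accounts/$TWILIO_ACCOUNT_SID/Messages.json" \
    -d "Body=$DOMAIN%20is%20down!" \
    -d "To=%2B$MY_NUMBER" \
    -d "From=%2B13372430910" \
    -u "$TWILIO_ACCOUNT_SID:$TWILIO_ACCOUNT_TOKEN"
fi
```

Tip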

It is not advisable to store passwords or tokens in code, because tools such as TruffleHog scan source code looking for things that look like passwords or account tokens. Instead, you can use external configuration tools or environment variables to pass sensitive tokens to your application.

A quick explanation of this Bash script: `#! /bin/bash` is the shebang, sha-bang, or hash-bang. When a text file starts with a hash (`#`), a bang (`!`), and a path to an executable (`/bin/bash`), that executable is used to interpret the file. This is only true in Unix-like operating systems. In this case, we are writing code for Bash, a common shell and scripting language:

- `DOMAIN="fake.example.com"` sets the variable `$DOMAIN` to the domain we want to check.

- `status_code=$(curl -sL -o /dev/null -w "%{http_code}" $DOMAIN)` sets the variable `$status_code` to the HTTP status code returned by a GET request to `$DOMAIN`. The `-sL` flags tell `curl` to be silent and to follow any redirects. `-o /dev/null` tells `curl` to send its output to `/dev/null`, a special Unix file that throws away all of the content it receives. `-w "%{http_code}"` tells `curl` that we only want to save the HTTP response code. The `status_code=$()` tells Bash to run the command inside the parentheses and assign its output to `$status_code`.

- `if [[ $status_code -ne 200 ]]; then` checks whether `$status_code` does not equal 200. If it does not, then we need to alert. The `fi` a few lines later closes this `if` block.

- The final large `curl` command sends a POST request to Twilio's API. `$TWILIO_ACCOUNT_SID`, `$TWILIO_ACCOUNT_TOKEN`, and `$MY_NUMBER` are expected to come from the environment this script runs in. Each `-d` flag tells `curl` to send that piece of data in the POST body. We URL-encode the data we send, which is why the `From` field is `%2B13372430910` instead of `+13372430910`, and why `Body` equals `$DOMAIN%20is%20down!` instead of `$DOMAIN is down!`. `-XPOST` tells `curl` to send a POST request instead of the default GET.
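The percent-encoding in the script above was done by hand, but it can also be produced by a small helper. The following is a minimal sketch that assumes ASCII input; `urlencode` is a hypothetical helper name, not something built into Bash or curl.

```bash
#!/bin/bash

# urlencode STRING
# Percent-encodes every character except the unreserved set
# (letters, digits, '-', '_', '.', '~'). Intended for ASCII input.
urlencode() {
  local LC_ALL=C   # compare and measure characters as single bytes
  local input="$1" output="" char i
  for (( i = 0; i < ${#input}; i++ )); do
    char="${input:i:1}"
    case "$char" in
      [a-zA-Z0-9.~_-])
        output+="$char" ;;
      *)
        # "'$char" makes printf use the character's numeric code.
        printf -v char '%%%02X' "'$char"
        output+="$char"
        ;;
    esac
  done
  printf '%s\n' "$output"
}

urlencode "+13372430910"               # prints %2B13372430910
urlencode "fake.example.com is down!"  # prints fake.example.com%20is%20down%21
```

This helper also encodes the `!` as `%21`, which the hand-encoded body left as-is. If you would rather not maintain a helper, curl's `--data-urlencode` option performs this encoding for you.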

Note

We talk about URL and HTTP encoding more in Chapter 10, Linux and Cloud Foundations. We also talk about curl in Chapter 9, Networking Foundations.

The key to all of these systems is to find a system that is affordable, reliable, and something that whoever is receiving the alerts will look at. If no one at your work reads their emails, then don't send them alert emails. Also, speaking of reliable, you can always send multiple copies of an alert. I like sending one to chat, one to SMS, and one to email. I will get it somehow!

As we mentioned for monitoring systems, alerting systems are software too and are not always reliable. How reliable they are is up to you and your team. How dangerous is it if an alert doesn't reach you? You can diversify your alerting tools to improve the chance of an alert being delivered. You could use Twilio to send a text message, Google Mail to send an email, and IRC to deliver a chat message. The message system could be set up so that if one fails, another can pick up the slack. It all depends on what you pick, but alerting systems often operate using "master election" or "leader election". This is a process, usually built on top of the Paxos or Raft algorithms, in which a set of identical servers decides which of them is going to be the leader and do the actual work.
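The idea of one channel picking up the slack when another fails can be sketched in a few lines. Everything here is illustrative: `notify_with_fallback` is a hypothetical name, and the three sender functions are stand-ins that only print, where real ones would call Twilio, a mail server, or a chat API.

```bash
#!/bin/bash

# Stand-in senders (assumptions). Real versions would make network calls
# and return non-zero when delivery fails.
send_sms()   { echo "sms: $1"; }
send_email() { echo "email: $1"; }
send_chat()  { echo "chat: $1"; }

# notify_with_fallback MESSAGE SENDER...
# Tries each sender in order and stops at the first one that succeeds.
notify_with_fallback() {
  local message="$1" sender
  shift
  for sender in "$@"; do
    if "$sender" "$message"; then
      return 0
    fi
    echo "warning: $sender failed, trying next channel" >&2
  done
  echo "error: no channel could deliver: $message" >&2
  return 1
}

notify_with_fallback "fake.example.com is down!" send_sms send_email send_chat
```

To send a copy to every channel instead, as suggested earlier, you would call each sender without returning after the first success.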

There are a lot of ways to send alerts. We have mainly been talking about the interface that developers have to send the alert. You also need to think about how the alerts will flow to the actual user. As with monitoring, there are a bunch of companies that you can pay to funnel your alerts from your monitoring system to a device of your choice:

- PagerDuty

- VictorOps

- OpsGenie

There are also open-source solutions and you can always write your own system. As I mentioned earlier in this section, there are services that deliver messages for you, so it all just depends where in the pipeline you want to stop being in control of things and when you want to delegate to others. I generally suggest companies do not handle alerting delivery if they can help it, because building a very reliable alert delivery system can be difficult and the consequences of not delivering an alert can be very severe.

Figure 8: A simplification of an alerting pipeline. The application streams metrics and events to the monitoring service. The alerting service queries the monitoring service for the status of things and if they are out of bounds, sends messages for alerting delivery. Alerting delivery then gets alerts to the developer (or whomever).

Let's look at an example alerting pipeline if you're building it yourself. If you use Amazon CloudWatch as your monitoring system, CloudWatch Alarms, its alerting service, is the recommended way to pipe that data to other places. CloudWatch Alarms works by sending all state changes for an alarm to Amazon's Simple Notification Service (SNS). Amazon SNS is a hosted Pub/Sub service. Amazon gives you free email delivery of all the notifications that come from an SNS topic.

You could also have a piece of software that turns SNS notifications into API calls to Twilio. Alternatively, you could run something like Plivo, which is an open-source piece of software that lets you make phone calls with code, along with a small piece of code that subscribes to your SNS alert topic and pipes alerts into phone calls.
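As a rough sketch of wiring this up with the AWS CLI, the two functions below subscribe an email address to an SNS topic and create an alarm that notifies that topic. The function names, the `AWS_CLI` variable (set it to `echo` for a dry run), and the statistic, period, and comparison values are assumptions; the topic ARN, metric, and namespace are left for you to supply.

```bash
#!/bin/bash

# Command used to reach AWS. Set AWS_CLI=echo to print the commands
# instead of running them.
AWS_CLI="${AWS_CLI:-aws}"

# subscribe_email TOPIC_ARN EMAIL
# Asks SNS to deliver every notification on the topic to EMAIL.
subscribe_email() {
  local topic_arn="$1" email="$2"
  "$AWS_CLI" sns subscribe \
    --topic-arn "$topic_arn" \
    --protocol email \
    --notification-endpoint "$email"
}

# create_alarm NAME NAMESPACE METRIC THRESHOLD TOPIC_ARN
# Creates a CloudWatch alarm that notifies the SNS topic when the
# one-minute sum of METRIC reaches THRESHOLD.
create_alarm() {
  local name="$1" namespace="$2" metric="$3" threshold="$4" topic_arn="$5"
  "$AWS_CLI" cloudwatch put-metric-alarm \
    --alarm-name "$name" \
    --namespace "$namespace" \
    --metric-name "$metric" \
    --statistic Sum \
    --period 60 \
    --evaluation-periods 1 \
    --threshold "$threshold" \
    --comparison-operator GreaterThanOrEqualToThreshold \
    --alarm-actions "$topic_arn"
}
```

SNS sends a confirmation email first, so the address only starts receiving alerts after someone confirms the subscription.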

Note

We talk about queues and Pub/Sub in Chapter 10, Linux and Cloud Foundations.

All of this is to say that you need to find the pipeline that is right for your company. For many smaller companies, finding a cheap pipeline that keeps your engineers notified is all you need. For very large companies, you may want to build and control the entire pipeline, so you can have specific delivery guarantees. Google, for example, has custom software for every layer of its stack, from monitoring software to how it delivers alerts. Google has a global system that can essentially page any engineer using email. Each engineer determines how they want their pages delivered and specifies that in a config file. So, you send an alert email to a service target, which then looks up the schedule for that service, then alerts the on-call engineer in the way that the engineer prefers. This works for Google, but that is three separate pieces of software that a team has to maintain. That might not be good for you and your constraints. At one job, we just used a piece of software to pipe alerts into Slack, and two of our employees had notifications set to "always" for that channel. It wasn't great, but it worked. The next step we were looking into was piping all of those alerts into a larger alert system, but that would have cost us around $200 a month, which is money that we just did not have. Find the balance that's right for you between cost, energy to maintain, and reliability.

So, what do we want to include in our alert? Once we know how to deliver it, we need to know what to say.

The first thing to think about is the initial string. This is often the subject of the email, the first message in a chat, or the alert header. It should be short and descriptive. Tim Pope, a prolific software developer in the Vim open-source community, has some formatting suggestions for commit messages in Git, a version control system, that I like for alerts as well (http://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html). Most of his advice is hyper-specific to Git, but there are some things that we can take away that are very useful:

- Subjects should be less than 50 characters. This provides a quick understanding of why the person is being alerted.

- Use the imperative. This is especially important because you will often want to give instructions on what to do if this error fires. For example, "Go to AWS console and terminate instance i-1234567890."

- Provide an extra explanation after the subject. If your alerting service allows it, include live details like graphs or evaluations of metrics. Also, include links to documentation that would be useful to the person responding to the alert.
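These suggestions can be enforced where the alert text is built. The following is a minimal sketch: `format_alert` is a hypothetical helper, the "Runbook:" line is an assumed convention for linking documentation, and the URL is a placeholder.

```bash
#!/bin/bash

# format_alert SUBJECT BODY RUNBOOK_URL
# Prints a short subject, a blank line, the longer explanation, and a
# documentation link. Fails if the subject is 50 characters or longer.
format_alert() {
  local subject="$1" body="$2" runbook="$3"
  if (( ${#subject} >= 50 )); then
    echo "subject is ${#subject} characters; keep it under 50" >&2
    return 1
  fi
  printf '%s\n\n%s\n\nRunbook: %s\n' "$subject" "$body" "$runbook"
}

format_alert \
  "Terminate instance i-1234567890" \
  "The instance has failed its health check for 10 minutes." \
  "https://wiki.example.com/runbooks/unhealthy-instance"
```

Rejecting an overlong subject when the alert is written is cheaper than discovering it during a page.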

If you're receiving a phone call, often the software will read just the subject line of the alert into the call. As the receiver of the call, you will just hear a computer voice describing the alert. In comparison, an email or text message may have the full description and images. Other services just send a link to the description.

Now that we are sending alerts that are easy to understand and can get to people, we have to decide who should receive our alerts. Remember how, earlier in the chapter, I said that alerting is a contentious issue? This is because people often want alerts to be sent, but they do not want to be the person who receives the alert.

By definition, an alert is interruptive. The need to do incident response is very rarely something that you know is coming, so you are forced to drop everything immediately. It is also often the case that incident response is not a primary part of a person's job, so they also have to push off deadlines and stop what they are working on.

Usually, you create a schedule for who is on call. Sometimes this is a schedule per service, whereas other times it's just a pool of all engineers. Two of the most well-known on-call schedules are "rotate once a week" and the "three-four split". With "rotate once a week", every seven days the current on-call person (often called the primary) is taken off call and the on-call backup (often called the secondary, on-call partner, or buddy) becomes the primary. Then, a new person, who has been off call the longest, becomes the secondary.

Figure 9: Example of a weekly on-call rotation schedule. This image shows two weeks of an on-call schedule. The first row shows a primary on-call schedule and every seven days the person on call changes. The second row is that person's secondary or on-call partner. Usually, the same people are in both the rotation for primary and secondary. The same person should never be both primary and secondary, so secondary follows a similar but different schedule.
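The weekly rotation can be expressed as simple modular arithmetic over a roster. This is a sketch under assumptions: `weekly_on_call` is a hypothetical helper, weeks are counted from zero, and the names are placeholders.

```bash
#!/bin/bash

# weekly_on_call WEEK PERSON...
# WEEK is a zero-based week counter; the roster order is the rotation
# order. This week's secondary becomes next week's primary.
weekly_on_call() {
  local week="$1"
  shift
  local roster=("$@")
  local count="${#roster[@]}"
  if (( count < 2 )); then
    echo "need at least two people so primary and secondary differ" >&2
    return 1
  fi
  local primary="${roster[week % count]}"
  local secondary="${roster[(week + 1) % count]}"
  echo "primary=$primary secondary=$secondary"
}

weekly_on_call 0 alice bob carol   # primary=alice secondary=bob
weekly_on_call 1 alice bob carol   # primary=bob secondary=carol
```

Because the secondary is always the next person in the roster, the same person is never both primary and secondary.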

In the three-four split, Monday through to Thursday, person A is primary and person B is secondary. On Friday, Saturday, and Sunday, person A is secondary and person B is primary. On Monday, both primary and secondary change to new people.

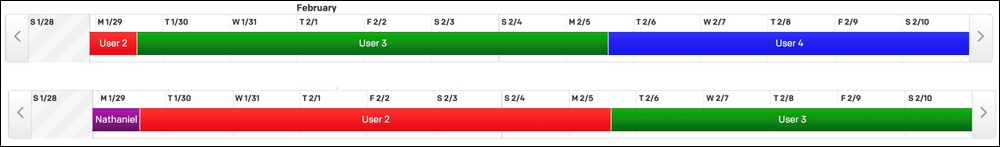

Figure 10: Example of a three-four split rotation schedule. Like the weekly schedule, the same people are in both the primary (first row) schedule and the secondary (second row) schedule, but the same person is never on call for both rotations. This image shows two weeks of on-call schedule. The shorter bars are the three-day weekend portion of the rotation, while the longer bars are the four-day weekday portion.
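The three-four split for a single week can be sketched the same way. `split_on_call` is a hypothetical helper; it takes the ISO weekday number (1 for Monday through 7 for Sunday, which is what `date +%u` prints) and the two people paired for that week.

```bash
#!/bin/bash

# split_on_call DAY PERSON_A PERSON_B
# Monday to Thursday: A is primary and B is secondary.
# Friday to Sunday: the two swap roles.
split_on_call() {
  local day="$1" person_a="$2" person_b="$3"
  if (( day < 1 || day > 7 )); then
    echo "day must be 1 (Monday) through 7 (Sunday)" >&2
    return 1
  fi
  if (( day <= 4 )); then
    echo "primary=$person_a secondary=$person_b"
  else
    echo "primary=$person_b secondary=$person_a"
  fi
}

split_on_call 4 alice bob   # Thursday: primary=alice secondary=bob
split_on_call 5 alice bob   # Friday:   primary=bob secondary=alice
```

Choosing which pair is on for a given week could reuse a roster rotation like the weekly schedule's.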

These are just two example schedules you could use. There are a million different schedules that you can use and the key is just figuring out a schedule that people are happy with. The options also change if you have multiple teams. If you have a team in London and a team in Seattle, then each office can do 12 hours and pass responsibilities back and forth. You can also have new people added only to the secondary schedule at first and then, after being secondary on-call once or twice, promote them to being in both schedules.
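A two-office handoff like the London and Seattle example comes down to checking the current hour. In this sketch the handoff time is an assumption (London covers 06:00 to 17:59 UTC and Seattle covers the rest), and `office_on_call` is a hypothetical helper name.

```bash
#!/bin/bash

# Hour (UTC) at which London takes over; an assumed value.
LONDON_START_UTC="${LONDON_START_UTC:-6}"

# office_on_call HOUR
# HOUR is the current UTC hour, 00 to 23. London covers the 12 hours
# starting at LONDON_START_UTC; Seattle covers the other 12.
office_on_call() {
  local hour=$(( 10#$1 ))   # force base 10 so "08" is not read as octal
  if (( hour > 23 )); then
    echo "hour must be between 00 and 23" >&2
    return 1
  fi
  if (( hour >= LONDON_START_UTC && hour < LONDON_START_UTC + 12 )); then
    echo "london"
  else
    echo "seattle"
  fi
}

office_on_call "$(date -u +%H)"
```

Working in UTC avoids the handoff shifting when the two offices change their clocks on different dates.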

Whatever schedule your team picks, make sure that it works for everyone on call and people understand when they need to be available. If you can help it, try to avoid getting a message saying, "Oh shoot! Am I on call? I just sat down to watch a play and I don't have my laptop, so can someone else get this?"