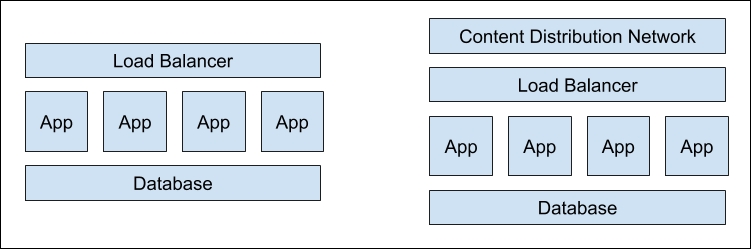

One of the most consistent causes of dramatic performance changes is a change in infrastructure. You can dive into the code to find bugs or do deep research on tuning, but more often than not, putting a cache in the right place or removing a dependency will change performance by orders of magnitude. A quick example is taking a simple web application and adding a Content Distribution Network (CDN) in front of it. This gives you a global cache of content and reduces the load on your actual applications, assuming the content can be cached.

Figure 6: An architecture of a simple web app, before (left) and after adding a CDN (right)
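To make the "orders of magnitude" claim concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes a simplified model in which every cache hit is served entirely by the CDN and every miss reaches the origin exactly once. The function name `origin_requests_per_second` and the traffic figures are hypothetical and chosen only for illustration.

```python
def origin_requests_per_second(total_rps: float, cache_hit_ratio: float) -> float:
    """Estimate the request rate that still reaches the origin application.

    Simplifying assumption: a cache hit never touches the origin, and a
    cache miss results in exactly one origin request.
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    return total_rps * (1.0 - cache_hit_ratio)


if __name__ == "__main__":
    total_rps = 1000.0  # hypothetical traffic level, not a measured value
    for ratio in (0.0, 0.5, 0.9, 0.99):
        remaining = origin_requests_per_second(total_rps, ratio)
        print(f"hit ratio {ratio:.0%}: about {remaining:.0f} requests/s reach the origin")
```

Under this model, a 90% hit ratio cuts origin traffic to a tenth and a 99% hit ratio cuts it to a hundredth, which is why a well-placed cache can matter more than code-level tuning. Content that cannot be cached corresponds to a hit ratio of zero, where the CDN offloads nothing.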

Because infrastructure changes can alter performance so significantly, architecture decisions can also have a dramatic effect on capacity planning. It is highly recommended that you try to get involved in the planning stages for architecture changes. The reasons for this are twofold:

- You have deep knowledge of how the infrastructure works and how code runs on it, so you can often bring a fresh perspective to the planning process.

- Decisions made could and probably will affect how many resources are available to other systems. Some architecture decisions could dramatically hurt other systems or increase the cost of your infrastructure beyond levels that you can afford.

An example of this is a rough approximation of three content management systems (CMSs) we had when I was working for Hillary for America:

- A statically generated website made from markdown files. It was generated by a script run on someone's desktop.

- A pipeline system where a dynamic website wrote content to a database and then a cron job read from the database and wrote out static files to S3.

- A dynamic system that generated pages every time there was a new request.

Figure 7: From left to right, systems #1, #2, and #3

System #3 was the fastest at getting new content to consumers, but system #1 had the highest performance and the lowest cost, and system #2 had the best uptime. System #2 could also have been easily modified, by adding a cache and an automated cache-clearing system, to perform better and publish faster than system #3. In the end, we scrapped systems #1 and #2 and created system #3, which increased our costs by a factor of 10. We solved the content creators' need to get content out faster. However, because we did not bring the SRE, developer, and product teams together early to work through the full implications of the design, we lost a lot of time building the new solution and lost money operating it.

Another classic example is adding Varnish (an HTTP cache system) in front of a web server and telling Varnish to Vary on the Accept-Language header without normalizing it. The Vary header tells the cache which request headers distinguish one version of a response from another, so Varnish stores a separate copy for every distinct value it sees. You want a small number of versions, so that as many requests to Varnish as possible are cache hits. However, because browsers send languages in many different ways, you can end up with many cache misses if you aren't normalizing the Accept-Language header before caching in Varnish. For example, if user A sends en-uk, en-us, user B sends en-us, en-uk, and user C sends en-uk, you might expect to send them all the same version of your website. However, because you are not normalizing the header, Varnish will store three separate copies of the content instead of just one, and so all three users will get uncached content.
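The effect of normalization on the number of cached versions can be sketched in a few lines of Python. This is only an illustration of the logic, not Varnish configuration: in a real deployment the normalization would happen inside the cache layer before the lookup. The function `normalize_accept_language`, the `supported` language list, and the `default` value are all assumptions made for this example.

```python
def normalize_accept_language(header, supported=("en", "es"), default="en"):
    """Collapse a raw Accept-Language value to one supported primary language.

    Hypothetical helper: `supported` and `default` are illustrative values,
    not settings taken from any real site.
    """
    candidates = []
    for position, item in enumerate((header or "").split(",")):
        tag, _, params = item.strip().partition(";")
        tag = tag.strip().lower()
        if not tag:
            continue
        quality = 1.0  # a tag without an explicit q-value has quality 1
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0  # treat a malformed q-value as "not acceptable"
        if quality <= 0.0:
            continue
        primary = tag.split("-")[0]  # "en-uk" and "en-us" both become "en"
        # Sort by highest quality first, then by position in the header.
        candidates.append((-quality, position, primary))
    for _, _, primary in sorted(candidates):
        if primary in supported:
            return primary
    return default


# The three headers from the example above.
raw_headers = ["en-uk, en-us", "en-us, en-uk", "en-uk"]

# Without normalization, each distinct header value is its own cache variant.
raw_variants = set(raw_headers)

# With normalization, all three users map to the same variant.
normalized_variants = {normalize_accept_language(h) for h in raw_headers}

print(len(raw_variants), "variants without normalization")
print(len(normalized_variants), "variant(s) with normalization")
```

Collapsing to the primary language is a deliberate simplification. A site that serves different content for regional variants would keep the region in the normalized value, at the cost of more cached versions.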