7.1. Memory configuration

As we saw in chapter 3, most versions of SQL Server 2008 can address the amount of memory supported by the underlying operating system. However, like previous versions, 32-bit editions of SQL Server 2008 are constrained to 2GB of RAM unless configured with special settings. Let's begin our coverage of memory configuration with a look at 32-bit memory management.

7.1.1. 32-bit memory management

All 32-bit systems can natively address a maximum of 4GB of memory (232 = 4,294,967,296 bytes). Until recent times, this limitation wasn't an issue; a quick scan of older documentation reveals terms such as very large when referring to memory beyond 4GB. In today's terms, systems with 8 or 16GB of RAM are considered normal, making correct memory configuration in 32-bit systems very important in order to derive the maximum performance benefit.

Apart from installing 64-bit versions of Windows and SQL Server, there are two ways of providing SQL Server with more than 2GB of memory; using the /3GB option or using Address Windowing Extensions (AWE) with the /PAE option.

/3GB

Of the 4GB of RAM that a 32-bit system can natively address, 2GB is reserved by Windows, leaving applications such as SQL Server with a maximum of 2GB. In Windows Server 2003, we used the /3GB option in the boot.ini file to limit Windows to 1GB of memory, enabling SQL Server to access up to 3GB. In Windows Server 2008, we use the BCDEdit command with the increaseuserva option with an optional parameter that determines the size of the available user space, such as 3072 for 3GB.

For 32-bit systems with 4GB of RAM, these options are a good way of squeezing more memory out of Windows for use by SQL Server, but limiting Windows to 1GB of RAM isn't always trouble free, particularly on systems with a large number of drivers and/or drivers that use a large amount of memory. Depending on the server configuration, these options may actually reduce performance and reliability, so use them with care.

For 32-bit systems with more than 4GB of RAM, we can use the /PAE option.

Pae and Awe

Intel first introduced 36-bit Physical Address Extensions (PAEs) in the Pentium Pro in the late 1990s. The extra 4 bits enable applications to acquire physical memory above 4GB (up to 64GB) as nonpaged memory dynamically mapped in the 32-bit address space.

Figure 7.1. The Memory page of a server's properties window contains the Use AWE to Allocate Memory option.

You enable the /PAE option in Windows Server 2003 in the boot.ini in the same way as the /3GB option. In Windows Server 2008, use the BCDEdit command with the /PAE option. After enabling PAE, you configure SQL Server with AWE to enable it to access the increased memory. You enable AWE either by using the sp_configure command or via the Server Properties window in SQL Server Management Studio (see figure 7.1).

Despite the increased memory that can be accessed with PAE/AWE, there are some limitations when used by SQL Server in 32-bit environments:

Memory above 4GB accessed using PAE/AWE can only be used by the SQL Server data cache. The procedure cache, used for query compilation plans, isn't able to take advantage of this memory. We'll cover the procedure cache in more detail in chapter 17, along with a number of related settings, such as forced parameterization.

Analysis Services and Integration Services components aren't able to utilize memory accessed using PAE/AWE.

Unlike a flat 64-bit environment, there's some overhead in mapping into the AWE memory space in 32-bit systems.

On 32-bit AWE-enabled systems, the service account running the SQL Server service must be given the Lock Pages in Memory right. As a consequence, AWE memory isn't paged out to disk by the operating system. As you can see in figure 7.2, you assign this right to an account by using the Windows Group Policy Editor.

So if the /PAE option allows us to address memory above 4GB and /3GB allows us to get an extra 1GB from Windows below 4GB, then to obtain the maximum amount of memory for SQL Server we should use both, right? Well, maybe not...

/3GB AND /PAE

When using PAE, Windows uses memory below 4GB to map to memory above 4GB. The more memory above 4GB to map to, the more memory below 4GB is required for the mapping. The magic number is 16GB. As shown in table 7.1, for systems with more than 16GB of memory, you must not use /3GB (or increaseuserva in Windows Server 2008) with /PAE. If you do, only 16GB will be addressable, and any additional memory beyond that is wasted.

Figure 7.2. The Group Policy Editor can be used to assign the Lock Pages in Memory right to the SQL Server service account.

| Startup option | Use if system RAM is... |

|---|---|

| Default settings | <4GB |

| /3GB (or increaseuserva) | 4GB |

| /3GB and /PAE | 5-16GB |

| /PAE | >16GB |

As I mentioned earlier, the /3GB option is known to cause stability issues in some circumstances, so even with systems containing between 5GB and 16GB of RAM, you must use this setting with caution.

One of the nice things about 64-bit systems is that all of the configuration issues we've just covered are no longer of concern.

7.1.2. 64-bit memory management

Unlike 32-bit systems, 64-bit systems don't require the memory configuration just described. The full complement of system RAM can be accessed by all SQL Server components without any additional configuration.

The one optional memory configuration for 64-bit systems is setting the Lock Pages in Memory right, as covered earlier. While this setting is optional for a 64-bit system, locking pages in memory is beneficial in order to prevent Windows from paging out SQL Server's memory. If you don't enable this setting, certain actions such as large file copies can lead to memory pressure with Windows paging, or trimming, SQL Server's memory. This sometimes leads to a sudden and dramatic reduction in SQL Server performance, usually accompanied by the "A significant part of sql server process memory has been paged out..." message. Setting the Lock Pages in Memory option prevents such incidents from occurring, and is therefore a recommended setting. Note that Windows Server 2008 handles memory trimming a lot better than 2003.

Regardless of the processor platform (32- or 64-bit), one of the important memory configuration tasks is to set the minimum and maximum server memory values.

7.1.3. Setting minimum and maximum memory values

When SQL Server starts, it acquires enough memory to initialize, beyond which it acquires and releases memory as required. The minimum and maximum memory values control the upper limit to which SQL Server will acquire memory (maximum), and the point at which it will stop releasing memory back to the operating system (minimum).

As you saw earlier in figure 7.1, the minimum and maximum memory values for an instance can be set in SQL Server Management Studio or by using the sp_configure command. By default, SQL Server's minimum and maximum memory values are 0 and 2,147,483,647, respectively. The default min/max settings essentially allow SQL Server to cooperate with Windows and other applications by acquiring and releasing memory in conjunction with the memory requirements of other applications.

On small systems with a single SQL Server instance, the default memory values will probably work fine in most cases, but on larger systems we need to give this a bit more thought. Let's consider a number of cases where setting min/max values is required, beginning with systems that lock pages in memory.

Lock Pages in Memory

As you know, when the Lock Pages in Memory setting is enabled, SQL Server's memory will not be paged out to disk. This is clearly good from a SQL Server performance point of view, but consider a case where the maximum memory value isn't set, and SQL Server is placed under significant load. The default memory settings don't enforce an upper limit to the memory usage, so SQL Server will continue to consume as much memory as it can get access to, all of which will be locked and therefore will potentially starve other applications (including Windows) of memory. In some cases, this memory starvation effect can lead to system stability issues.

In SQL Server 2005 and above, even with the Lock Pages in Memory option enabled, SQL Server will respond to memory pressure by releasing some of its memory back to the operating system. However, depending on the state of the server, it may not be able to release memory quickly enough, again leading to possible stability issues.

For the reasons just outlined, systems that use the Lock Pages in Memory option should also set a maximum memory value, leaving enough memory for Windows. We'll cover how much to leave shortly.

Multiple instances

A server containing multiple SQL Server instances needs special consideration when setting min/max memory values. Consider a server with three instances, each of which is configured with the default memory settings. If one of the instances starts up and begins receiving a heavy workload, it will potentially consume all of the available memory. When one of the other instances starts, it will find itself with very little physical memory, and performance will obviously suffer.

In such cases, I recommend setting the maximum memory value of each instance to an appropriate level (based on the load profile of each).

Shared servers

On servers in which SQL Server is sharing resources with other applications, setting the minimum memory value helps to prevent situations in which SQL Server struggles to receive adequate memory. Of course, the ideal configuration is one in which the server is dedicated to SQL Server, but this is not always the case, unfortunately.

A commonly misunderstood aspect of the minimum memory value is whether or not SQL Server reserves that amount of memory when the instance starts. It doesn't.

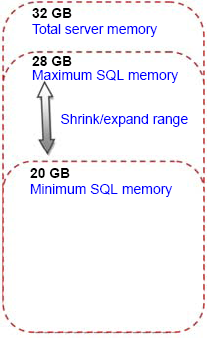

When started, an instance consumes memory dynamically up to the level specified in the maximum memory setting. Depending on the load, the consumed memory may never reach the minimum value. If it does, memory will be released back to the operating system if required, but will never drop below the value specified in the minimum setting. Figure 7.3 shows the relationship between a server's memory capacity and SQL Server's minimum and maximum memory values.

Figure 7.3. A SQL Server instance will consume memory up to the level specified by the maximum. Once past the minimum level, it will not release memory below the minimum level.

Clusters

As you learned in chapter 5, configuring memory maximums in a multi-instance cluster is important in ensuring stability during failover situations. You must ensure that the total maximum memory values across all instances in the cluster is less than the total available memory on any one cluster node that the instances may end up running on during node outage.

Setting the maximum memory values in such a manner is important to ensure adequate and consistent performance during failover scenarios.

Amount of memory to leave Windows

One of the important memory configuration considerations, particularly for 32-bit AWE systems and 64-bit systems that lock pages in memory, is the amount of memory to leave Windows. For example, in a dedicated SQL Server system with 32GB of memory, we'll obviously want to give SQL Server as much memory as possible, but how much can be safely allocated? Put another way, what should the maximum memory value be set to? Let's consider what other possible components require RAM:

Windows

Drivers for host bus adapter (HBA) cards, tape drives, and so forth

Antivirus software

Backup software

Microsoft Operations Manager (MOM) agents, or other monitoring software

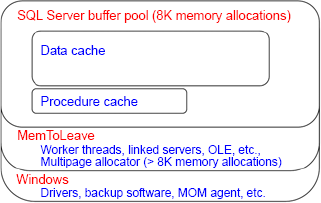

As shown in figure 7.4, in addition to the above non-SQL Server components, there are a number of SQL Server objects that use memory from outside of the buffer pool—that is, the memory area defined by the maximum memory setting. Memory for objects such as linked servers, extended stored procedures, and object linking and embedding (OLE) automation objects is allocated from an area commonly called the MemToLeave area.

Figure 7.4. The SQL Server buffer pool, as defined by the Max Server Memory setting, must share the server's physical memory with other memory consumers such as Windows and MemToLeave.

As you can see, even on a dedicated server, there's a potentially large number of components vying for memory access, all of which comes from outside the buffer pool, so leaving enough memory is crucial for a smooth-running server. The basic rule of thumb when allocating maximum memory is that the total of each instance's maximum memory values should be at least 2GB less than the total physical memory installed in the server; however, for some systems, leaving 2GB of memory may not be enough. For systems with 32GB of memory, a commonly used value for Max Server Memory (totaled across all installed instances) is 28GB, leaving 4GB for the operating system and other components that we discussed earlier.

Given the wide variety of possible system configuration and usage, it's not possible to come up a single best figure for the Max Server Memory value. Determining the best maximum value for an environment is another example of the importance of a load-testing environment configured identically to production, and an accurate test plan that loads the system as per the expected production load. Load testing in such an environment will satisfy expectations of likely production performance, and offers the opportunity to test various settings and observe the resulting performance.

One of the great things about SQL Server, particularly the recent releases, is its self-tuning nature. Its default settings, together with its ability to sense and adjust, make the job of a DBA somewhat easier. In the next section, we'll see how these attributes apply to CPU configuration.