5

Human Factors in UAV

5.1 Introduction

Several human factors in human–unmanned vehicle interaction are considered here through a synthesis of existing research evidence in the military domain. The human factor issues covered include the potential for the application of multimodal displays in the control and monitoring of unmanned vehicles (UVs) and the implementation of automation in UVs. Although unmanned aerial vehicles (UAVs) are the focus of this book, this chapter reviews research evidence involving the supervisory control of unmanned ground vehicles (UGVs), as the results are relevant to the control of UAVs. This chapter also aims to highlight how the effectiveness of support strategies and technologies on human–UV interaction and performance is mediated by the capabilities of the human operator.

Allowing operators to remotely control complex systems has a number of obvious benefits, particularly in terms of operator safety and mission effectiveness for UVs. For UVs, remote interaction is not simply a defining feature of the technology but also a critical aspect of operations. In a military setting, UAVs can provide ‘eyes-on’ capability to enhance commander situation awareness in environments which might be risky or uncertain. For example, in a ‘hasty search’ the commander might wish to deploy UAVs to provide an ‘over-the-hill’ view of the terrain prior to committing further resources to an activity [1]. In addition, advances in image-processing and data-communication capabilities allow the UAV to augment the operators’ view of the environment from multiple perspectives using a range of sensors. Thus, augmentation is not simply a matter of aiding one human activity, but can involve providing a means of enhancing multiple capabilities (for example, through the use of thermal imaging to reveal combatants in hiding).

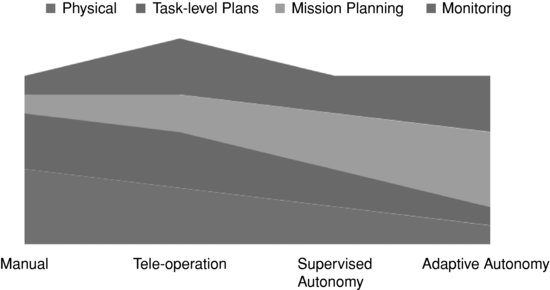

In parallel with improved imaging and sensing capabilities of UAVs comes the increasing autonomy of UAVs [2]. Thus, for example, UAVs can fly with little direct intervention; rather, the operator defines waypoints to which the vehicle routes itself. This leaves the operator free to concentrate on the control and analysis of the payload, i.e., onboard sensors. While UAVs are increasing in their ability to fly autonomously, it is likely that human-in-the-loop operation will remain significant. There are several reasons why there might need to be a human in the control loop, particularly for military UAVs. The first relates to accountability and responsibility for offensive action taken by the UAV; it remains imperative that any decisions to attack a target are made by a human operator. The second reason why there might need to be a human in the loop relates to the interpretation of imagery of potential targets (and the need to decide whether to reroute to take advantage of ‘opportunistic’ targets); even with excellent image-processing capabilities, the UAV might require assistance in dealing with ambiguity or changes in mission plan. The third reason relates to immediate change in UAV operation, either as the result of malfunction or of hostile intervention. These reasons imply a hierarchy of intervention, from high-level goals and accountability to low-level operation and monitoring. What is also apparent from this brief discussion is that the ‘control loop’ extends well beyond piloting the UAV. Schulte et al. [3] argue that there is a need to shift design from the conventional view of supervisory control (in which the human operator monitors and manages the behaviour of an autonomous system) to the development of cognitive and cooperative automation (in which the autonomous system works with its human operators to share situation awareness (SA), goals and plans). 
A common observation is that the tasks that people perform change as technology becomes more autonomous, i.e., from physical to cognitive tasks [4]. This shift in human activity, from physical to cognitive work, is illustrated in Figure 5.1.

Figure 5.1 Relative contribution of human activity to increasing levels of automation

This shift in human activity raises the question of what might constitute the role of the human operator in UAV operations. Alexander et al. [5] identify seven primary roles for humans in UAV operations (although they acknowledge that there may well be far more roles possible). These roles relate to the earlier discussion of the need to retain human-in-the-loop interaction with UAVs, and are:

- Providing the capability to intervene if operations become risky, i.e., a safety function.

- Performing those tasks that cannot yet be automated.

- Providing the ‘general intelligence’ for the system.

- Providing liaison between the UAV system and other systems.

- Acting as a peer or equal partner in the human–UAV partnership.

- Repairing and maintaining the UAV.

- Retrieving and rescuing damaged UAVs.

It is worth noting that these roles are often (but not always) distributed across different members of the UAV crew and can require different skill sets. Furthermore, the last role, in particular, introduces some interesting issues for human factors because it moves the human from remote operator into the risky area in which the UAV is operating. For example, Johnson [6] describes an incident in which a British officer was killed attempting to retrieve a downed UAV.

As the level of autonomy increases, so the role of the human operator becomes less one of direct control and more one of monitoring and supervision. In other words, the human operator can potentially become removed from the direct ‘control loop’ and given tasks of monitoring or a disjointed collection of tasks that cannot be automated. The issue of human-in-the-loop has been discussed above, but failure to consider the ramifications of designing to explicitly remove the operator has the potential to lead to a state of affairs that has been termed the ‘irony’ of automation [7]. This raises two issues. First, humans can be quite poor at monitoring, particularly if this involves detecting events with low probability of occurrence [8]. As discussed later in this chapter, this performance can be further impaired if the information displayed to the operator makes event detection difficult to perform. Second, the role of humans in automated systems is often intended to be the last line of defence, i.e., to intervene when the system malfunctions or to take over control when the system is unable to perform the task itself. If the user interface does not easily support this action, then human operators can either fail to recognize the need to intervene or can make mistakes when they do intervene; if the human has been removed from the control loop, and has not received sufficient information on system status, then it can be difficult to correctly determine system state in order to intervene appropriately. A report from the Defense Science Study Board [9] suggested that 17% of UAV accidents were due to human error, and most of these occur at take-off or landing. In order to reduce such ‘human errors’, some larger UAVs, such as Global Hawk, use automated take-off and landing systems. However, our discussions with subject matter experts suggest there continue to be cases of medium-sized UAVs (such as the Desert Hawk flown by UK forces) crashing on landing. 
One explanation of these accidents is that the configuration of cameras on these UAVs is such that they support the primary reconnaissance task but, because the cameras are mounted to support a view of the ground while in flight, they do not readily support the forward view required to effectively land the UAV. The situation could be remedied by the inclusion of additional cameras, but obviously this adds to the weight of the payload and the need to manage additional video-streams. Another remedy would be to increase the autonomy of these UAVs to take off and land themselves, but this has cost implications for what are supposed to be small and relatively cheap vehicles. This raises the question of how operators remotely control, or teleoperate, UAVs.

5.2 Teleoperation of UAVs

According to Wickens [10], there are three categories of automation. Each category has different implications for the role of the human operator.

i. Substitution. The first category concerns operations that the human cannot perform due to inherent limitations, either in terms of physical or cognitive ability. An example of this type of automation relating to teleoperation would be the control of a high-speed missile or a modern fighter aircraft, where the time delay in operator control could cause instability to the flight dynamics of the system. Thus, some teleoperated systems require automation in order to maintain stable and consistent performance, where human intervention could cause instability and inconsistency.

ii. Addition. The second category of automation concerns systems which perform functions that are demanding or intrusive for human performance. In other words, performance of these functions might cause undue increases in workload or might lead to disruption of primary task activity. An example of such automation is the ground proximity warning system (GPWS), which provides an auditory warning when a combination of measures indicates that the air vehicle is unacceptably close to the ground [11]. In this example, the automation continually monitors specific variables and presents an alert to the operator when the values exceed a defined threshold.

iii. Augmentation. The third category of automation can be considered as a form of augmentative technology. In other words, automation is used to complement and support human activity, particularly where the human is fallible or limited. One example of this is the use of automation to reduce clutter on a display, for example by merging tracks on a radar screen on the basis of intelligent combination of data from different sources. This could prove beneficial in teleoperation, particularly if several UVs are to be tracked and managed. However, this can lead to problems as the data fusion could reduce system transparency and remove the operator from the control loop [12].
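The monitoring pattern described under the second category (Addition) can be illustrated with a minimal sketch: the automation checks specific variables continually and raises an alert when a value leaves its permitted range. This is not how any real GPWS is implemented; the variable names and limit values below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Limit:
    """Permitted range for one monitored variable (names are hypothetical)."""
    variable: str
    low: float = float("-inf")
    high: float = float("inf")


def check_alerts(readings: Dict[str, float], limits: List[Limit]) -> List[str]:
    """Return the names of monitored variables whose reading is out of range."""
    alerts = []
    for limit in limits:
        value = readings.get(limit.variable)
        # A missing reading is skipped here; a real system would treat it as a fault.
        if value is not None and not (limit.low <= value <= limit.high):
            alerts.append(limit.variable)
    return alerts


# Illustrative limits only: minimum height above ground and maximum sink rate.
LIMITS = [
    Limit("height_above_ground_m", low=150.0),
    Limit("sink_rate_mps", high=8.0),
]

if __name__ == "__main__":
    sample = {"height_above_ground_m": 120.0, "sink_rate_mps": 3.0}
    print(check_alerts(sample, LIMITS))
```

The point of the sketch is the division of labour: the automation carries the continuous monitoring load, and the operator is interrupted only when a limit is breached.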

In his review of human–machine systems built between the mid-1940s and mid-1980s, Sheridan [13] proposed four trends. The first trend was the removal of the human operator either ‘up’ (super-) or ‘away’ (tele-) from direct operation of the system under control. This meant significant changes in the form of control the operator could exercise over the system. The most obvious consequence of these changes was the reduction in direct physical and local contact with the system. This suggests the issue of engagement between human and system as a central theme. The question of engagement with teleoperated systems raises specific human factor questions about the operation of the system and about the type of feedback that the human operator can receive. This in turn leads to questions about the design of the control loop and the operator's position in that control loop. For example, a teleoperated system could be capable of fully autonomous flight, leaving the operator with the task of monitoring performance. The notion of a fully autonomous system introduces Sheridan's concept of super-operated systems, where the operator is effectively a reporting-line superior to the UAV – a manager who requires achievement of a specific goal without dictating means of achievement.

The second trend identified by Sheridan was the need to maintain systems with multiple decision-makers, each with partial views of the problem to be solved and the environment in which they were performing. This raises questions about teamwork and collaborative working. Thus, one needs to consider the role of teleoperation within broader military systems, particularly in terms of communications [14]. From this approach, one ought to consider the role of the UAV within a larger system, for example, if the UAV is being used to gather intelligence, how will this intelligence be communicated from the operator to relevant commanders? This might require consideration not only of the workstation being used to operate/monitor the UAV, but also the means by which information can be compiled and relayed to other parties within the system.

From a different perspective, if the role of the operator is reduced to monitoring and checking (possibly leading to loss of appreciation of system dynamics), operational problems may be exacerbated by the operator's degradation of skill or knowledge. While it might be too early to consider possible socio-technical system design issues surrounding teleoperation systems, it is proposed that some consideration might be beneficial. A point to note here, however, is that allocation of function often assumes a sharing of workload. Dekker and Wright [15] make the important point that not only will tasks be allocated to change workload levels, but that the very sharing of tasks will significantly transform the work being performed (by both the machine and the human). In a review of ‘adaptive aiding’ systems, Andes [16] argues that specific human factor issues requiring attention include: the need to communicate the current aiding mode to the operator, the use of operator preference for aiding (as evidenced by the studies reviewed above), and human performance effects of adding or removing aiding.

The third trend identified by Sheridan relates to the shift in operator skill from direct (and observable) physical control to indirect (unobservable) cognitive activity, often related to monitoring and prediction of system status. This relates, in part, to the question of engagement raised above. However, this also relates to the issue of mental models held by operators and the provision of information from the system itself.

This chapter has already noted the shift from physical to cognitive interaction between human operators and UAVs, leading to a tendency towards supervisory control. Ruff et al. [17] compared two types of supervisory control of UAVs – management by consent (where the operator must confirm automation decisions before the system can act) and management by exception (where the system acts unless prevented by the human operator). While management by consent tended to result in superior performance on the experimental tasks, it also (not surprisingly) resulted in higher levels of workload, especially when the number of UAVs under control increased from 1 to 4. In a later study, Ruff et al. [18] found less of an effect of management by consent. Indeed, the latter study suggested that participants were reluctant to use any form of automated decision support.
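The difference between the two supervisory control schemes can be made concrete with a small sketch. It assumes a simplified interaction in which the operator either approves, vetoes or does not respond to a proposed automation action before a timeout; the names `Mode` and `should_execute` are hypothetical and are not taken from the studies cited.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    CONSENT = "consent"      # automation acts only after operator approval
    EXCEPTION = "exception"  # automation acts unless the operator vetoes


def should_execute(mode: Mode, operator_response: Optional[str]) -> bool:
    """Decide whether a proposed automation action goes ahead.

    operator_response is "approve", "veto" or None (no response before timeout).
    """
    if mode is Mode.CONSENT:
        # Silence blocks the action: the operator must confirm explicitly.
        return operator_response == "approve"
    # Management by exception: silence lets the action proceed.
    return operator_response != "veto"
```

The two modes differ only in how operator silence is treated. Under management by consent every action demands a response, which is consistent with the higher workload reported as the number of UAVs grows.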

5.3 Control of Multiple Unmanned Vehicles

In their analysis of operator performance, Cummings and Guerlain [19] showed that it was possible for an operator to effectively monitor the flight of up to 16 missiles, with larger numbers leading to an observed degradation in performance. They compared this with the limit of 17 aircraft for air traffic controllers to manage. In a modelling study, Miller [20] suggested a limit of 13 UAVs to monitor. However, it should be noted that monitoring the movement of vehicles in airspace is only part of a UAV operator's set of tasks. When the number of control tasks increases, or with the demands of monitoring multiple information sources, it is likely that the number of UAVs will be much smaller. Indeed, Galster et al. [21] showed that operators could manage 4, 6 or 8 UAVs equally well unless the number of targets to monitor also increased. Taylor [22] suggested that 4 UAVs per operator is a typical design aim for future systems. Cummings et al. [23] presented a meta-review of UAVs with different levels of automation, and suggested that studies converge on 4–5 vehicles when control and decision-making were primarily performed by the operator (but 8–12 when UAVs had greater autonomy). Their model of operator performance suggested that control of 1 UAV is superior to 2, 3 or 4 (which result in similar performance), with degradation in performance when controlling 5 or more UAVs. Liu et al. [24] found significant impairment in performance on secondary tasks (response to warnings or status indicators) when operators controlled 4 UAVs, in comparison with controlling 1 or 2 UAVs. Research therefore clearly demonstrates that the level of automation applied to unmanned vehicles needs to be considered when determining the number of autonomous vehicles an operator can control effectively, and there is growing consensus that operators struggle to monitor more than 4 UAVs.

5.4 Task-Switching

The concurrent management and control of multiple UAVs by human operators will lead to prolonged periods of divided attention, where the operator is required to switch attention and control between UAVs. This scenario is further complicated by additional secondary tasks that will be required, such as the operator communicating with other team members. A consequence of switching attention in a multitask setting is the difficulty experienced by an operator in returning their attentional focus to the primary task at hand, such as controlling unmanned assets, in time to allow appropriate identification, detection and response during critical situations [25]. Whether or not an individual can effectively conduct two or more tasks at once has been the subject of much basic psychological research. Basic research in dual-task interference has highlighted that people have difficulty in dual-task scenarios, despite a common misconception that they can perform tasks simultaneously. This is found to be the case, even with tasks that are relatively simple compared to the complex dynamic conditions within a military context (e.g. [26, 27]). Laboratory research on the consequences of task-switching highlights that the responses of operators can be much slower and more error-prone after they have switched attention to a different task (e.g. [28–30]).

Squire et al. [31] investigated the effects of the type of interface adopted (options available to the operator), task-switching and strategy-switching (offensive or defensive) on response time during the simulated game, RoboFlag. Response time was found to be slower by several seconds when the operator had to switch between tasks, in particular when automation was involved. Similarly, switching between offensive and defensive strategies was demonstrated to slow response time by several seconds. Therefore the task-switching effect is also seen when operators managing multiple UVs switch between different strategies. However, when operators used a flexible delegation interface, which allowed operators to choose between a fixed sequence of automated actions or selectable waypoint-to-waypoint movement, response time decreased even when task or strategy switching was required. This advantage was ascribed to operators recognizing conditions where the automation was weak and thus needed to be overridden by tasking the unmanned vehicles in a different way.

Chadwick [32] examined operator performance when participants were required to manage 1, 2 or 4 semi-autonomous UGVs concurrently. Performance was assessed on monitoring, attending to targets, responding to cued decision requests and detecting contextual errors. Contextual errors arose when the UGV was operating correctly but inappropriately given the context of the situation. One example is when the navigation system of a UGV fails and the operator is required to redirect the UGV to the correct route. The UGV was unable to recognize contextual errors and so could not alert the operator; it was left to the operator to recognize and respond to them. The tasks participants were asked to conduct included the detection and redirection of navigation errors as well as attending to targets. An attentional limitation was evident when operators were required to detect contextual errors, which proved very difficult when multiple UGVs were controlled. When 1 UGV was controlled, contextual errors were spotted rapidly (within 10 seconds), whereas when 4 UGVs were controlled the response to these errors slowed to nearly 2 minutes. Chadwick argued that having to scan the video streams from 4 UGVs prevented operators from focusing attention on each display long enough to understand what was going on in it.

Task-switching has been found to have an impact on situation awareness (SA). For example, when operators are required to switch attention from a primary task (for example, the supervisory control of UAVs) to an intermittent secondary task (for example, a communication task) SA is reduced when they switch their attention back to the primary task [33, 34]. There is also evidence to suggest that task-switching may result in change blindness, a perceptual phenomenon which refers to an individual's inability to detect a change in their environment. This perceptual effect in turn may have an effect on SA. The impact of this phenomenon within a supervisory control task was investigated by Parasuraman et al. [35]. The study involved operators monitoring a UAV and a UGV video feed in a reconnaissance tasking environment. The operators were asked to perform four tasks, of which target detection and route planning were the primary tasks. A change detection task and a verbal communication task were used as secondary tasks to evaluate SA. These latter two tasks interrupted the two former (primary) tasks. The routes for the UAV and UGV were programmed and so participants only had to control a UAV if it needed to navigate around an obstruction. For the change detection tasks, participants were asked to indicate every time a target icon that they had previously detected unexpectedly changed position on a map grid. Half of these changes took place when participants were attending to the UAV monitoring task and the other half occurred during a transient event, when the UGV stopped and its status bar flashed. The results demonstrated the low accuracy of participants at detecting changes in the position of target icons, in particular during the transient events. Parasuraman et al.'s results indicate that most instances of change blindness occurred in the presence of a distractor (a transient event).
However, change blindness was also observed when participants switched their attention from monitoring the UAV to monitoring the UGV.

In the wake of the current military need to streamline operations and reduce staff, research has been conducted on designing systems that will allow a shift in the control of a single UAV by multiple operators to a single operator. Similarly, there is a drive towards single operators controlling multiple vehicles, which applies to land, air and underwater vehicles [23]. This will require UAVs to become more autonomous and the single operator would be expected to attend to high-level supervisory control tasks such as monitoring mission timelines and responding to mission events and problems as they emerge [36, 37]. Each UV carries out its own set plan, and as a result a single operator may experience high workload when critical tasks for more than one UV require their attention concurrently. This situation can result in processing ‘bottlenecks’. The use of an automated agent to signal the likelihood of processing bottlenecks occurring has been shown to aid the operator by affording them the opportunity to prepare a course of action to lessen the effects of any bottleneck, if necessary [36]. For example, Cummings et al. [37] conducted a decision support experiment that examined the effectiveness of an intelligent schedule management support tool that provided different levels/types of automated decision support and alerted operators to potential scheduling conflicts for 4 UAVs. The effectiveness of a timeline display for each UAV which included an intelligent configurable display was examined. The configurable display, presenting potential scheduling conflicts, was called ‘Star Visualization’ (StarVis). A configurable display was a single geometrical form that mapped multiple variables onto it and changes in these individual variables resulted in form variation. 
The variables incorporated within the display were, for example, the type of schedule problem (late arrival or time-on-targets conflict) and the number of targets involved in a specific problem type and their relative priorities (low, medium or high). The configurable display featured a grey rectangle, which represented the ideal state. As problems were detected by the automation, grey triangles appeared on the display for one or more target problems.

The design of the configurable display was such that the emerging features (grey triangles) identified potential scheduling conflicts and these features influenced direct perception/action. This provided operators with the capability to use more effective perceptual processes as opposed to adopting cognitively demanding processes that require, for example, memory. This configuration also allowed operators to denote whether or not scheduling conflicts were present not only for one, but for all the UAVs at a glance.

The StarVis not only displayed current scheduling conflicts but also provided operators with the opportunity to view the effect of an accepted time-on-target delay request, for example, before they performed any action. This aided the decision-making process. Two different implementations of the configurable display were examined, the local StarVis and the global StarVis. In the local StarVis version, each UAV timeline only displayed problems and how time-on-target delay requests affected that specific UAV's schedule. Conversely, the global StarVis only displayed how a time-on-target delay request for a single UAV would impact on all UAVs. Cummings et al. [37] found that operators presented with the local StarVis version of the decision support aid performed better than those provided with the global StarVis version or with no visualization for support. Moreover, when future problems for all 4 UAVs were presented in the global context, the performance of operators fell to a level that was equivalent to performance found when no decision support was provided. The failure of the global display to aid performance was attributed to its presenting information that was not vital to the decision-making process. In addition, operators had to look at all UAV displays to see the consequences of a decision. The local StarVis presented ‘just enough’ information to the operator to allow for efficient decision-making, a characteristic that would be effective in a time-critical command and control environment. Cummings et al. [37] suggest that these findings highlight the difficulties surrounding the design of automated decision support tools, particularly with respect to how information is mapped and presented on a display.

Research has indicated ways in which UV systems can be designed so as to support an operator's performance on high-level supervisory control tasks for multiple UVs by enabling them to manage the workload across UV systems effectively. Work with delegation interfaces showed that providing an operator flexibility in their decision-making on how to maintain awareness of the state of a UV or how to task it reduced the negative effects of task-switching [31]. Cummings et al. [37] demonstrated how decision support tools can help operators perform high-level supervisory control tasks for multiple UVs by helping them manage the workload effectively. Moreover, they recommend that decision support displays be designed to leverage the ability of operators to not only notice and identify the nature of a problem but also to help operators solve problems. Thus, any decision support tool adopted must present useful solutions to emerging critical events as opposed to only displaying visualizations of potential critical events requiring attention.
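The processing bottlenecks discussed in this section arise when critical tasks for more than one UV demand the operator's attention at the same time. A minimal sketch of how an automated agent might flag such situations is given below. It is not the tool evaluated in the cited studies; it assumes, purely for illustration, that each critical task is described by a vehicle identifier and a time window, and the names `CriticalTask` and `find_bottlenecks` are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple


@dataclass(frozen=True)
class CriticalTask:
    """A task needing operator attention during [start, end) (minutes into mission)."""
    uav: str
    start: float
    end: float


def find_bottlenecks(tasks: List[CriticalTask]) -> List[Tuple[CriticalTask, CriticalTask]]:
    """Return pairs of tasks on different vehicles whose time windows overlap."""
    conflicts = []
    for a, b in combinations(tasks, 2):
        # Two half-open intervals overlap when each starts before the other ends.
        if a.uav != b.uav and a.start < b.end and b.start < a.end:
            conflicts.append((a, b))
    return conflicts


if __name__ == "__main__":
    schedule = [
        CriticalTask("UAV1", 0.0, 5.0),
        CriticalTask("UAV2", 4.0, 8.0),
        CriticalTask("UAV3", 10.0, 12.0),
    ]
    for first, second in find_bottlenecks(schedule):
        print(f"Potential bottleneck: {first.uav} and {second.uav}")
```

Flagging the overlap in advance is what gives the operator time to prepare a course of action, for example by requesting a delay for one of the conflicting tasks.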

5.5 Multimodal Interaction with Unmanned Vehicles

There have been several studies of the potential application of multimodal display in the control and monitoring of UAVs (as the following review illustrates) but less work to date on the potential for multimodal control of payload. In such systems, feedback is typically visual and audio, although there is growing interest in uses of haptic feedback. Multimodal display appears both to reduce UAV operator workload and to provide access to multiple streams of information [38–41]. Auditory presentation of some information can be combined with ongoing visual tasks [42], and these improvements can be particularly important when dealing with multiple UAVs, providing they do not interfere with other auditory warnings [43]. However, combining the control of a UV with other tasks can impair performance on target detection [38, 44] and reduce SA [45]. Chen [46] reported studies in which aided target recognition (AiTR) significantly enhanced operators’ ability to manage the concurrent performance of effector and vehicle control, in comparison with performing these tasks with no support.

Draper et al. [47] compared speech and manual data entry when participants had to manually control a UAV, and found speech yielded less interference with the manual control task than manual data entry. Chen et al. [48] showed that target detection was significantly impaired when participants had to combine search with control of the vehicle, in comparison with a condition in which the vehicle was semi-autonomous. Baber et al. [49] looked at the use of multimodal human–computer interaction for the combined tasks of managing the payload of an autonomous (simulated) UV and analysing the display from multiple UVs. Speech was the preferred mode of choice when issuing target categorization, whereas manual control was preferred when issuing payload commands. Speech combined with gamepad control of UVs led to greater performance on a secondary task. Performance on secondary tasks degraded when participants were required to control 5 UVs (supporting work cited earlier in this chapter).

The support for the benefits of multimodal interaction with UAVs (for both display of information and control of payload) not only speaks of the potential for new user interface platforms but also emphasizes the demands on operator attention. A common assumption, in studies of multimodal interfaces, is that introducing a separate modality helps the operator to divide attention between different task demands. The fact that the studies show benefits of the additional modality provides an indication of the nature of the demands on operator attention. Such demands are not simply ‘data limited’ (i.e., demands that could be resolved by modifying the quality of the data presented to the operator, in terms of display resolution or layout) but also ‘resource limited’ (i.e., imply that the operator could run out of attentional ‘resource’ and become overloaded by the task demands).

5.6 Adaptive Automation

The supervision of multiple UVs in the future is likely to increase the cognitive demands placed on the human operator and as such timely decision-making will need to be supported by automation. The introduction of decision aids into the system is likely to increase the time taken for tactical decisions to be made. These decision aids will be mandated owing to the high cognitive workload associated with managing several UVs [19]. It is important to consider the human–automation interaction in terms of how information gathering and decision support aids should be automated and at what level (from low (fully manual) to high (fully autonomous) automation). Consideration of the type of automation required is also paramount. The type and level of automation can be changed during the operation of a system, and these systems are referred to as adaptive or adaptable systems. Parasuraman et al. [50] argued that decision support aids should be set at a moderate level of automation, whereas information gathering and analysis functions can be at higher levels of automation, if required. However, the human operator is ultimately responsible for the actions of a UV system and thus even highly autonomous assets require some level of human supervision.

A level of human control is especially important when operating in high-risk environments, such as military tasks involving the management of lethal assets where, due to unexpected events that cannot be supported by automation, the operator would require the capability to override and take control. Parasuraman et al. therefore proposed moderate levels of automation for decision support functions, because highly reliable decision algorithms cannot be assured; such automation is termed ‘imperfect (less than 100%) automation’. In a study by Crocoll and Coury [51], participants were given an air-defence targeting task that required identification and engagement. The task incorporated imperfect automation where participants received either status information about a target or decision automation which provided recommendations regarding the identification of a target. Decision automation was found to have a greater negative impact on performance than information automation. Crocoll and Coury argued that when provided with only information concerning the status of a target, participants use this information to make their own decisions. This is because information automation is not biased towards any decision in particular, presenting the operator with the raw data from which they can generate alternative choices and thus lessen the effects of imperfect automation. Furthermore, the costs of imperfect decision support are observed for various degrees of automation [52]. Moderate levels allow the human operator to be involved in the decision-making process and ultimately the decision on an action is theirs. Moreover, automation in systems has not always been found to be successful, with costs to performance stemming from human interaction with automated systems, including unbalanced cognitive load, overreliance and mistrust [53]. Therefore, the analysis of an appropriate level of automation must consider the impact of imperfect automation, such as false alarms and incorrect information, on human–system interaction [50].

Context-sensitive adaptive automation has been shown to mitigate the issues of skill fade, reduced SA and operator overreliance arising from static (inflexible) automation introduced into systems [54–56]. In contrast to decision aids or alerts implemented in static automation, those presented using adaptive automation are not fixed at the design stage. Rather, their delivery is dictated by the context of the operational environment. The delivery of this adaptive automation by the system is based on operator performance, the physiological state of the operator or critical mission events [57].

The flexible nature of adaptive automation allows it to link with the tactics and doctrine used during mission planning. To illustrate, adaptive automation based on critical events in an aircraft air defence system would initiate automation only in the presence of specific tactical environmental events. In the absence of these critical events the automation is not initiated. To our knowledge, only a small number of empirical studies on adaptive automation to aid human management of UVs have been conducted (for example, [35,58,59]). Parasuraman et al. [35] presented adaptive support to participants who had to manage multiple UVs. This adaptive automated support was based on the real-time assessment of their change detection accuracy. Parasuraman et al. [35] compared the effects of manual performance, static (model-based) automation and adaptive automation on aspects of task performance including change detection, SA and workload in managing multiple UVs under two levels of communications load. Static (model-based) automation was invoked at specific points in time during the task based on the prediction of the model that human performance was likely to be poor at that stage. In contrast, adaptive automation was performance-based and only invoked if the performance of the operator was below a specified criterion level. The results demonstrated that static and adaptive automation resulted in an increase in change detection accuracy and SA and a reduction in workload compared to manual performance. Adaptive automation also resulted in a further increase in change detection accuracy and an associated reduction in workload in comparison to performance in the static condition. Participants also performed better on the communications task, providing further evidence that adaptive automation acts to reduce workload. Participants using static automation responded more accurately to communications than did those using manual performance. Parasuraman et al.'s findings demonstrate that adaptive automation leads to the availability of more attentional resources. Moreover, its context-sensitive nature supports human performance, in this case when the change detection performance of participants is reduced, indicating reduced perceptual awareness of the evolving mission events.
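The difference between the two invocation policies described above can be illustrated with a minimal Python sketch. It is not the implementation used by Parasuraman et al. [35]; the `Trial` structure, the block indices in `HARD_BLOCKS` and the value of `CRITERION` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Trial:
    """One block of the supervisory task (hypothetical structure)."""
    time_index: int
    change_detection_accuracy: float  # proportion correct, 0.0 to 1.0


def static_invocation(trial: Trial, predicted_hard_blocks: Sequence[int]) -> bool:
    """Model-based: automate at pre-planned points, whatever the operator is doing."""
    return trial.time_index in predicted_hard_blocks


def adaptive_invocation(trial: Trial, criterion: float) -> bool:
    """Performance-based: automate only when measured accuracy falls below criterion."""
    return trial.change_detection_accuracy < criterion


# Illustrative data only; none of these values come from the study.
trials = [Trial(0, 0.90), Trial(1, 0.40), Trial(2, 0.75), Trial(3, 0.30)]
HARD_BLOCKS = (1, 2)  # assumed model prediction of where performance will be poor
CRITERION = 0.50      # assumed performance criterion

static_plan = [static_invocation(t, HARD_BLOCKS) for t in trials]
adaptive_plan = [adaptive_invocation(t, CRITERION) for t in trials]

print(static_plan)    # [False, True, True, False]
print(adaptive_plan)  # [False, True, False, True]
```

In this toy run the static policy automates block 2 even though the operator is performing adequately and leaves block 3 unsupported although performance has dropped, whereas the performance-based policy follows the operator's measured accuracy.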

Parasuraman et al. [50] point out issues with adaptive automation. First, system unpredictability may impact on operator performance. Second, systems designed to reduce cognitive load may actually work to increase it. User acceptance is a further problem in systems that implement adaptive automation, where the decision to invoke automation or pass control back to the operator is made by the system. Operators who see themselves as having manual control expertise may not be willing to comply with the authority of a system. These potential limitations raise the question of the efficacy of adaptive automation when compared to adaptable systems, where the human operator decides whether and when to automate. However, providing the human operator with the capability to make decisions on automation may also act to increase workload. Therefore, Miller and Parasuraman [60] argue that there is a trade-off between increased workload, where automation is started by the human operator, and increased unpredictability, where it is started by the system.

5.7 Automation and Multitasking

Several studies have investigated the influence of different factors on the ability of individuals to concurrently manage or operate unmanned vehicles whilst performing other primary tasks. Mitchell [61] carried out a workload analysis, using the Improved Performance Research Integration Tool (IMPRINT), on crewmembers of the Mounted Combat System (MCS). The MCS is a next generation tank, which forms part of the US Army Future Combat System (FCS). The FCS vision for the MCS is that it will be operated by three soldiers (vehicle commander, gunner and driver), one of whom will be required to concurrently operate the UGV. As no operator is dedicated to the operation and control of the platoon's UGV, Mitchell modelled the workload of each crewmember in order to examine how the UGV could be most effectively used and who would be most appropriate to operate it. The gunner was observed to experience the fewest instances of work overload and was able to effectively assume control of the UGV and conduct the secondary tasks associated with its operation. However, scenarios that required the teleoperation of the UGV led to consistent instances of cognitive overload, rendering concurrent performance of the primary tasks of detecting targets more difficult. Mitchell's IMPRINT analysis also identified instances where the gunner ceased to perform their primary tasks of detecting and engaging targets in order to conduct UGV control tasks, which could have serious consequences during a military operation. This is supported by research demonstrating that target detection is lower when combined with teleoperation of a UGV in comparison to when the UGV is semi-autonomous [48]. UGV operation requires more attention, through more manual operation (teleoperation) and/or manipulation (for example, via an interface to label targets on a map), than simply monitoring information on a display. Moreover, situation awareness of UGV operators has been observed to be better when the UGV has a higher level of automation [45].

The level of automation and consideration of the cognitive capabilities of the human, in particular the demands placed on attention, is of critical importance. In general, lower levels of automation result in a higher workload whereas higher levels of automation produce a lower workload [62]. As discussed, research on adaptive systems indicates moderate levels of workload will produce optimal human performance. Unfortunately, automation has not always led to enhancement in system performance, which is mainly due to problems in using automated systems experienced by human operators. Examples of human–automation interaction problems stemming from a level of automation which is too low are: cognitive overload in time-critical tasks, fatigue and complacency and an increase in human interdependency and decision biases [63, 64]. In contrast, problems with human–automation interaction resulting from a level of automation which is too high are: an increase in the time taken to identify and diagnose failures and commence manual take-over when necessary, cognitive and/or manual skill degradation and a reduction in SA [63]. Thus, given the consequences of introducing an inappropriate level of automation into a system, automation should only be utilized when there is a requirement for its introduction.

Simulation experiments have been conducted in order to validate the results of Mitchell's [61] IMPRINT analysis and to assess the effect of AiTR for the combined job of gunner and UGV operator. Chen and Terrence [65] found that assisting the gunnery task using AiTR information significantly enhanced performance. Participants in this multitasking scenario not only had to detect and engage hostile targets but also neutral targets, which were not cued by the AiTR system. Significantly fewer neutral targets were detected when participants were required to teleoperate the UGV or when AiTR was employed to aid the gunnery tasks. This was taken to indicate that some visual attention had been removed from the primary gunnery task. A plausible explanation put forward by Chen and Terrence is that cueing information delivered by the AiTR augmented the participants’ ability to switch between the primary gunnery task and secondary task of controlling the UGV. Whilst enhancing task-switching capability, AiTR assistance had a negative impact on the detection of the neutral targets. In addition to the enhancement of gunnery (primary) task performance aided by AiTR, participants’ concurrent (secondary) task performance was observed to improve when the gunnery task was aided by AiTR. This was found for both UGV control and communication tasks. The work of Chen and Terrence demonstrated how reliable automation can improve performance on an automated primary task and a simultaneous secondary task.

Chen and Joyner [66] also conducted a simulation experiment to examine both performance and cognitive workload of an operator who performed gunner and UGV tasks simultaneously. They found that performance on the gunnery task degraded significantly when participants had to concurrently monitor, control or teleoperate an unmanned asset relative to their performance in the baseline control condition featuring only the gunnery task. Furthermore, the degradation in gunnery task performance was a function of the degree of control of the UV, such that the lowest level of performance was observed when the gunner had to teleoperate the unmanned asset concurrently. Looking at the concurrent task of operating the UV, performance was worst when the UGV that participants had to control was semi-autonomous. This finding was interpreted as indicative of an increased reliance on automation in a complex high-load multitasking scenario and of a failure to detect more targets that were not cued by automation. In contrast to Chen and Terrence [65], the semi-autonomous system in Chen and Joyner's simulation experiment was imperfectly reliable and thus seems more representative of real-world environments where AiTR systems are never perfectly reliable.

Research has also examined how unreliable AiTR systems can moderate task performance of operators. There are two types of error in unreliable AiTR systems: false alarms (signalling the presence of a target when no target is present) and misses (failing to alert an operator to the presence of a target). Research has demonstrated that the performance of participants on an automated primary task, such as monitoring system failures, is degraded when the false alarm rate is high [67]. This illustrates how high false alarm rates reduce operator compliance in false alarm prone (FAP) AiTR systems and as a consequence operators take fewer actions based on the alerts presented. High miss rates, however, impair performance on a concurrent task more than on an automated primary task as participants have to focus more visual attention to monitor the primary task. As a result, the reliance on automation is reduced. That is, operators become less willing to assume that the automated system is working properly and that no precautionary action is needed when no alerts are emitted [67, 68]. However, research has refuted the idea that FAP and miss prone (MP) AiTR systems have independent effects on primary and concurrent task performance and on how personnel use AiTR systems. A greater degradation in performance on automated tasks has been observed in FAP systems relative to that found in MP systems [69]. Nevertheless, performance on a concurrent robotics task was found to be affected equally negatively by both FAP and MP alerts. Both operator compliance and reliance were moderated by FAP systems, whereas automation producing high miss rates was found to only influence the reliance of the operator on the AiTR system [69].
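To make the distinction between false alarm prone and miss prone cueing concrete, the following Python sketch computes the two error rates from a log of alert outcomes. It is an illustration under stated assumptions rather than a method taken from the studies cited: the function names, the log format and the simple rule used to label a system as FAP or MP (whichever error rate is larger) are hypothetical, and the rates are defined in the usual signal detection sense.

```python
from typing import Iterable, Tuple


def alert_error_rates(events: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Return (false_alarm_rate, miss_rate) from (target_present, alert_given) pairs.

    false_alarm_rate = alerts given with no target / all no-target events
    miss_rate        = targets with no alert / all target events
    """
    false_alarms = misses = targets = non_targets = 0
    for target_present, alert_given in events:
        if target_present:
            targets += 1
            if not alert_given:
                misses += 1
        else:
            non_targets += 1
            if alert_given:
                false_alarms += 1
    fa_rate = false_alarms / non_targets if non_targets else 0.0
    miss_rate = misses / targets if targets else 0.0
    return fa_rate, miss_rate


def classify_bias(fa_rate: float, miss_rate: float) -> str:
    """Assumed labelling rule: the larger error rate names the system."""
    if fa_rate > miss_rate:
        return "FAP"
    if miss_rate > fa_rate:
        return "MP"
    return "balanced"


# Illustrative log: 10 target events (2 missed), 10 non-target events (6 false alarms).
log = ([(True, True)] * 8 + [(True, False)] * 2 +
       [(False, True)] * 6 + [(False, False)] * 4)
fa_rate, miss_rate = alert_error_rates(log)
print(fa_rate, miss_rate, classify_bias(fa_rate, miss_rate))  # 0.6 0.2 FAP
```

Under this framing, compliance concerns how the operator responds when an alert is given (and is therefore exposed to false alarms), whereas reliance concerns what the operator does when no alert is given (and is therefore exposed to misses).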

5.8 Individual Differences

5.8.1 Attentional Control and Automation

Basic research has demonstrated that performance in multitasking environments is mediated by individual differences such that some individuals are less susceptible to performance impairment during multitask scenarios. For example, Derryberry and Read [70] conducted an experiment which looked at anxiety-related attentional biases and how these are regulated by attentional control during a spatial orienting task. They demonstrated that individuals with better attentional control could allocate their attentional resources more effectively and efficiently and were found to be better at resisting interference in a spatial orienting task.

Chen and Joyner [66] examined whether operators with higher perceived attentional control (PAC) could perform a gunnery and UGV control task better relative to operators exhibiting lower attentional allocation skills when they also had to perform a concurrent intermittent communication task. This concurrent task simulated the communication that would go on between the gunner and other tank crew members. PAC was measured using the Attention Control Questionnaire [70], which consists of 21 items that measure attentional control in terms of attention focus and shifting. Derryberry and Read [70] reported that factor analysis has confirmed the scale measures the general capacity for attentional control and has revealed correlated sub-factors associated with attention focus and attention shifting. An example of an item measuring attention focus is, ‘My concentration is good even if there is music in the room around me’ [70, p. 226]. An example of an item measuring attention shifting is, ‘It is easy for me to read or write while I'm also talking on the phone’ [70, p. 226].

Chen and Joyner's [66] findings provided partial support for the notion that operators who have higher attentional control are better able to allocate their attention between tasks. Operators reporting higher PAC performed the concurrent (communication) task more effectively in comparison to lower PAC operators, in particular when the UGV control tasks required more manipulation and attention. However, there was no significant difference between low and high PAC individuals in terms of performance on the gunnery and UGV control tasks. Chen and Joyner argue that the operators channelled most of their attentional resources into the gunnery and UGV control tasks (even more so for the UGV teleoperation) and that only operators with higher PAC could conduct the communication task more effectively. During the single gunnery task (baseline) and the UGV monitoring task, the high PAC and low PAC individuals displayed an equivalent level of performance for the communication task. Thus it seems that monitoring the video feed from a UGV left operators with sufficient visual attentional resources to perform the communication task.

Chen and Terrence [71] investigated the effect of unreliable automated cues in AiTR systems on gunners’ concurrent performance of gunnery (hostile and neutral target detection), UGV operation (monitor, semi-autonomous, teleoperation) and communication tasks. Moreover, Chen and Terrence examined whether participants with different attentional control skills react differently to FAP and MP AiTR systems, in other words, whether the reaction of low PAC and high PAC participants to automated cues delivered by a gunnery station differed depending on whether the system was FAP or MP. Following the methodology employed by Chen and Joyner [66], Chen and Terrence simulated a generic tank crew station setting and incorporated tactile and visual alerts to provide directional cueing for target detection in the gunnery task. The directional cueing for target detection was based on a simulated AiTR capability. The detection of hostile targets for the gunnery task was better when operators had to monitor the video feed of a UGV in comparison to when they had to manage a semi-autonomous UGV or teleoperate a UGV. This result is consistent with Chen and Joyner's findings and further supports the notion that operators have a lower cognitive and visual workload when required to only monitor the UGV video feed, affording more cognitive resources to be allocated to the gunnery task. Further, Chen and Terrence observed a significant interaction between type of AiTR automation system and the PAC of participants for hostile target detection. Individuals found to have high PAC did not comply with FAP alerts and did not rely on automation, detecting more targets than were cued when presented with MP alerts. This is in line with the idea that operators' compliance with and reliance on AiTR systems are independent constructs and are affected differently by false alarms and misses (e.g. [67]). The picture was rather different for those with low PAC. In the FAP condition, low PAC operators demonstrated a strong compliance with the alerts and as a consequence this led to good target detection performance. In contrast, with MP automation, low PAC operators relied too much on the AiTR, which resulted in very poor performance. As workload became heavier (e.g. with more manual manipulation of the UGV), low PAC operators became increasingly reliant on automation, whereas operators with strong attention shifting skills retained a relatively stable level of reliance during the different experimental conditions.

Considering neutral target (not cued by the AiTR system) detection during the gunnery task, Chen and Terrence [71] found that when gunners had to teleoperate a UGV their detection was much poorer in comparison to when gunners had to manage a semi-autonomous UGV. This is consistent with Chen and Joyner's [66] finding that operators allocated far fewer attentional resources to the gunnery task when the concurrent UV task required manual manipulation. Operators with low attention allocation skills performed at an equivalent level, independent of the AiTR system they were exposed to. However, operators with higher PAC displayed greater target detection performance when the AiTR system was MP, indicating that individuals with high PAC allocated more attentional resources to the gunnery task because they relied less on the MP cues for target detection.

For the concurrent robotics task, the highest level of performance observed by Chen and Terrence [71] was when the operator had only to monitor a video feed from a UGV. The type of AiTR received had no effect on performance in this condition. As observed for hostile and neutral target detection during the gunnery task, a greater adverse effect of MP cueing was observed when the concurrent robotics task became more challenging. When teleoperation of a UGV was required, MP cueing produced a larger performance decrement than did FAP. A significant interaction between type of AiTR and PAC was also found. Low PAC individuals’ performance was worse when presented with an MP AiTR system; however, FAP alerts received for the gunnery task improved their concurrent task performance. In contrast, high PAC individuals were less reliant on MP cues and thus demonstrated better concurrent task performance. Also, high PAC operators complied less with FAP cues, although this did not result in improved performance. Performance for the tertiary communication tasks was also moderated by the complexity of the robotics task. Better communication performance was demonstrated when operators were required to monitor the video feed of a UGV than when a UGV was teleoperated, as observed by Chen and Joyner [66]. Chen and Terrence [71] suggest the information-encoding processes required for manipulating a UGV during teleoperation demand more attention and are more susceptible to the impact of competing requirements in multitask scenarios.

It seems that reliance on automation during multitask scenarios is influenced by PAC. Only operators with a low attention shifting ability seemed to rely on AiTR in a heavy workload multitasking environment [67,71]. MP alerts seemed to be more detrimental to the performance of low PAC operators than FAP alerts [71], whereas FAP cues impaired performance on both automated (gunnery) and concurrent tasks in those with high PAC more than MP alerts [69,71]. Low PAC operators seemed to trust automation more than high PAC operators and found performing multiple tasks simultaneously more difficult, leading to an overreliance on automation when available. High PAC individuals, however, displayed reduced reliance on MP automation and seemed to have greater self-confidence in their ability to multitask in a complex environment. These findings therefore suggest that PAC can mediate the link between self-confidence and degree of reliance.

5.8.2 Spatial Ability

There is a growing body of research that discusses the influence of spatial ability (SpA) in the context of unmanned system performance and operations. Spatial ability can be divided into two sub-components: spatial visualization and spatial orientation (e.g. [72, 73]). Ekstrom et al. [72] defined spatial visualization as the ‘ability to manipulate or transform the image of spatial patterns into other arrangements’, and spatial orientation as the ‘ability to perceive spatial patterns or to maintain orientation with respect to objects in space’. Previous research has shown that these two sub-components are distinct [74, 75].

Spatial ability has been found to be a significant factor in military mission effectiveness [76], visual display domains [77], virtual environment navigation [78], learning to use a medical teleoperation device [79], target search task [48,65,66,71] and robotics task performance [80].

Lathan and Tracey [81] found that people with higher SpA completed a teleoperation task through a maze faster and with fewer errors than people with lower SpA. They recommended that personnel with higher spatial ability should be selected to operate UVs. Baber et al. [49] have shown that participants with low spatial ability also exhibit greater deterioration in secondary task performance when monitoring five UVs; this could relate to the challenge of dividing attention across several UVs.

Chen et al. [48] found that people with higher SpA performed better on an SA task than people with lower SpA. Chen and Terrence [65] also found a significant correlation between SpA and performance when performing concurrent gunnery, robotics control and communication tasks; however, when aided target recognition was available for their gunnery task, the participants with low SpA performed as well as those with high SpA. The test used to measure the relationship between spatial ability and performance is also important. For example, Chen and Joyner [66] used two different tests to measure spatial ability: the Cube Comparison Test (CCT) [82] and the Spatial Orientation Test (SOT) based on Gugerty and Brooks’ [83] cardinal direction test. They found that the SOT was an accurate predictor of performance but that the CCT was not. One possibility is that these two tests measured the two sub-components of spatial ability and that the task to be completed by the participants correlated with one sub-component but not the other (see [74,84] for a discussion of tests measuring each of the sub-components).

Research has shown spatial ability to be a good predictor of performance on navigation tasks. Moreover, individuals with higher spatial ability have been shown to perform target detection tasks better than people with lower spatial ability [46]. Chen [85] also found that participants with higher SpA performed better in a navigation task and that these participants also reported a lower perceived workload compared to participants with lower SpA. Similarly, Neumann [86] showed that higher SpA was associated with a lower perceived workload in a teleoperation task.

Chen and Terrence [65,71] observed that the type of AiTR display preferred by operators is correlated with their spatial ability, such that individuals with low SpA prefer visual cueing over tactile cueing. However, in environments that draw heavily on visual processing, tactile displays would enhance performance and be more appropriate as operators would be able to allocate visual attention to the tasks required and not to the automated alerts.

5.8.3 Sense of Direction

Another individual difference that has been studied is sense of direction (SoD). Contrary to expectations, SoD and spatial ability are only moderately, if at all, correlated [87]. Self-reported SoD is measured with a questionnaire and is defined by Kozlowski and Bryant [88, p. 590] as ‘people's estimation of their own spatial orientation ability’. A study on route-learning strategies by Baldwin and Reagan [89] compared people with good and poor SoD. SoD was measured with a questionnaire developed by Takeuchi [90] and later refined by Kato and Takeuchi [91]. To form the two groups, they selected participants who scored at least one standard deviation above or below the mean of a larger sample of 234 respondents. This resulted in a sample of 42 participants, 20 with good SoD and 22 with poor SoD. The experiment involved a route-learning task and a concurrent interference task (verbal or spatial). Individuals classified as having good SoD traversed the routes faster and with fewer errors when learned under conditions of verbal interference relative to under conditions of visuospatial interference. Conversely, individuals with poor SoD were faster to go through the routes when they were learned under conditions of visuospatial interference relative to verbal interference. This interaction between SoD and the type of interference can be explained by the fact that good navigators tend to make greater use of survey strategies (cardinal directions, Euclidean distances and mental maps), which rely on visuospatial working memory, whilst poor navigators tend to use ego-centred references to landmarks, which rely on verbal working memory. When the interference task taps into the same type of working memory used to perform navigation, performance drops, whereas performance is less influenced by the other interference task. Also, individuals with better SoD learned the routes in fewer trials than individuals with poorer SoD. Finally, as with spatial ability, Baldwin and Reagan found that individuals with poor SoD reported a higher perceived workload than individuals with good SoD, and the two SoD groups did not differ in spatial ability as measured by the Mental Rotation Task [92].
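The extreme-groups selection described above (retaining only respondents who score at least one standard deviation above or below the sample mean) can be sketched in a few lines of Python. This is a minimal illustration, not the procedure reported by Baldwin and Reagan [89]: the participant identifiers and scores are invented, and the use of the sample (rather than population) standard deviation is an assumption, as the text does not say which was used.

```python
import statistics
from typing import Dict, List, Tuple


def extreme_groups(scores: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Split respondents into (good, poor) groups lying at least 1 SD from the mean.

    Respondents within one standard deviation of the mean are excluded.
    """
    values = list(scores.values())
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD; an assumption, see lead-in
    good = [pid for pid, s in scores.items() if s >= mean + sd]
    poor = [pid for pid, s in scores.items() if s <= mean - sd]
    return good, poor


# Invented questionnaire scores for five hypothetical respondents.
sod_scores = {"p1": 10, "p2": 20, "p3": 30, "p4": 40, "p5": 50}
good_sod, poor_sod = extreme_groups(sod_scores)
print(good_sod, poor_sod)  # ['p5'] ['p1']
```

A design of this kind sharpens the contrast between groups at the cost of discarding the middle of the distribution, which is why only 42 of the 234 respondents were retained in the study described above.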

Chen [85] also looked at SoD in a navigation task and found that it was correlated with map-marking scores, with participants having a higher SoD performing better. SoD was also negatively correlated with target search time, with participants having a better SoD taking less time than poorer participants. There was also an interaction between participants’ SoD and the lighting conditions. In the night condition, those with poor SoD took significantly more time than those with good SoD to find targets. However, in the day condition, no difference was found between participants with poor and good SoD. These results are in line with those reported in Baldwin and Reagan [89] showing that participants with poor SoD relied on landmarks and verbal working memory to perform a navigation task. As landmarks are a lot more difficult to use at night, the difference between participants using visuospatial and verbal working memory became apparent in this condition whereas during the day, the participants using verbal working memory (poor SoD) managed to perform as well as the participants using visuospatial working memory (good SoD).

5.8.4 Video Games Experience

Video games experience can have an effect on an operator's performance when remotely operating a UAV. A laboratory-based study by De Lisi and Cammarano [93] looked at the effect of playing video games on spatial ability. Participants who took part in this study were administered the Vandenberg Test of Mental Rotation (VTMR), a paper-and-pencil assessment of mental rotation, before and after two 30-minute experimental sessions. During these two sessions, half of the participants played the computer game Blockout (which requires mental rotation of geometric figures) whilst the other half played Solitaire (which is a game that does not require mental rotation). The interesting result was that the average score on the VTMR increased significantly in the space of a week and with only two sessions of 30 minutes when participants were playing Blockout. Moreover, the score on the pretest was positively correlated with the participant's reported computer usage. Similarly, Neumann [86] found that video games experience correlated with the number of targets detected and the number of collisions in a teleoperation task but did not correlate with the time to complete the task.

Finally, a study by Chen [85] found a significant difference in map-marking between men and women, with the men performing better than the women. When looking only at the men's performance, those who played video games more frequently performed better in map-marking accuracy than those who played video games less frequently. Moreover, 61% of the male participants and only 20% of the female participants played video games frequently, which could point to an effect of video games experience rather than a pure gender effect.

5.9 Conclusions

Many of the human factors challenges of interacting with UAVs have been comprehensively reviewed by Chen et al. [94]. These issues include the bandwidth, frame rates and motion artefacts of video imagery presented to the operator; time lags between control actions and system response; lack of proprioception and tactile feedback to operators controlling UVs; frame of reference and two-dimensional views for operators monitoring UVs; and switching attention between different displays. In this chapter, we offered additional considerations of the potential uses of multimodal interaction with UVs to support attention to several sources of information, the impact of managing multiple UAVs on operators’ task-switching abilities, and the role of spatial ability and sense of direction. Empirical evidence related to the design challenges associated with the level and type of automation for information-gathering and decision-making functions was also considered. The chapter closed with a discussion of the potential role of video games experience in future UAV operations.

As the range of applications of UVs increases in parallel with their sophistication and autonomy, the operator is likely to perform fewer tasks related to the direct control of the UAV but a far wider range of tasks that are consequent upon the activity of the UAV. That is, rather than directly manipulating the UAV, the operator will control the payload and respond to the data coming from the UAV (e.g. image interpretation and analysis). This is likely to increase the cognitive load on operators while reducing their physical tasks. As the number of tasks increases, particularly when many of them draw upon visual processing, the capability to divide attention between tasks or UVs becomes important. Research shows that automating every feature of a UV is not the best strategy, especially when the human operator has to interact with the automation. Rather, a more efficient strategy is to design a flexible system that responds to context, operator requirements and the demands of the situation. This flexibility is provided by adaptive or adaptable automation, which may help the human operator more than inflexible static automation. However, designers of automation for UAS need to balance how much the user, as opposed to the system, is involved in system modification. Unpredictability increases if operators have too little involvement in system modification, whereas workload increases if they are too heavily involved. Automation support, if used appropriately, has been shown to lead to better performance in multitasking environments involving the supervision and management of multiple UVs.

System designs need to take into account that individual differences in attentional control affect the effectiveness of automation. Operators with low attentional control find performing multiple tasks concurrently more difficult than those with higher attentional control, resulting in their overreliance on automation when available. The design of system displays also influences the ability of operators to divide attention between UVs. For example, tactile displays enhance performance in highly visual environments, allowing operators to allocate visual attention to the tasks rather than to the automated cues.

A central theme of this chapter has been the challenge relating to ‘human-in-the-loop’ and automation. This is a significant issue for human factors and will continue to play a key role in the advancement of UAV design. Given the range of interventions in UAV operations that will involve human operators, from imagery interpretation to maintenance and from mission planning to decisions on engaging with targets, the question is not whether the human can be removed from the ‘loop’, but rather: how will the operator(s) and UAV(s) cooperate as a team to complete the mission, given that some tasks can be automated? Appreciating some of the ways in which human performance can degrade, and the ways in which the adaptability, ingenuity and creativity of the human-in-the-loop can be best supported, will lead to a new generation of UAVs.

References

1. Cooper, J.L. and Goodrich, M.A., ‘Towards combining UAV and sensor operator roles in UAV-enabled visual search’, in Proceedings of ACM/IEEE International Conference on Human–Robot Interaction, ACM, New York, pp. 351–358, 2008.

2. Finn, A. and Scheding, S., Developments and Challenges for Autonomous Unmanned Vehicles: A Compendium, Springer-Verlag, Berlin, 2010.

3. Schulte, A., Meitinger, C. and Onken, R., ‘Human factors in the guidance of uninhabited vehicles: oxymoron or tautology? The potential of cognitive and co-operative automation’, Cognition, Technology and Work, 11, 71–86, 2009.

4. Zuboff, S., In the Age of the Smart Machine: The Future of Work and Power, Basic Books, New York, 1988.

5. Alexander, R.D., Herbert, N.J. and Kelly, T.P., ‘The role of the human in an autonomous system’, 4th IET International Conference on System Safety incorporating the SaRS Annual Conference (CP555), London, October 26–28, 2009.

6. Johnson, C.W., ‘Military risk assessment in counter insurgency operations: a case study in the retrieval of a UAV Nr Sangin, Helmand Province, Afghanistan, 11th June’, Third IET Systems Safety Conference, Birmingham, 2008.

7. Bainbridge, L., ‘Ironies of automation’, in J. Rasmussen, K. Duncan and J. Leplat (eds), New Technology and Human Error, John Wiley & Sons, New York, 1987.

8. Ballard, J.C., ‘Computerized assessment of sustained attention: a review of factors affecting vigilance’, Journal of Clinical and Experimental Neuropsychology, 18, 843–863, 1996.

9. Defense Science Study Board, Unmanned Aerial Vehicles and Uninhabited Combat Aerial Vehicles, Office of the Under Secretary of Defense for Acquisition, Technology and Logistics, Washington, DC, 20301-3140, 2004.

10. Wickens, C.D., Engineering Psychology and Human Performance, Harper-Collins, New York, 1992.

11. Wiener, E.L. and Curry, R.E., ‘Flight deck automation: promises and problems’, Ergonomics, 23, 995–1012, 1980.

12. Duggan, G.B., Banbury, S., Howes, A., Patrick, J. and Waldron, S.M., ‘Too much, too little or just right: designing data fusion for situation awareness’, in Proceedings of the 48th Annual Meeting of the Human Factors and Ergonomics Society, Santa Monica, CA, HFES, 528–532, 2004.

13. Sheridan, T.B., ‘Forty-five years of man–machine systems: history and trends’, in G. Mancini, G. Johannsen and L. Martensson (eds), Analysis, Design and Evaluation of Man–Machine Systems. Proceedings of the 2nd IFAC/IFIP/IFORS/IEA Conference, Pergamon Press, Oxford, pp. 1–9, 1985.

14. Baber, C., Grandt, M. and Houghton, R.J., ‘Human factors of mini unmanned aerial systems in network-enabled capability’, in P.D. Bust (ed.), Contemporary Ergonomics, Taylor and Francis, London, pp. 282–290, 2009.

15. Dekker, S. and Wright, P.C., ‘Function allocation: a question of task transformation not allocation’, in ALLFN’97: Revisiting the Allocation of Functions Issue, IEA Press, pp. 215–225, 1997.

16. Andes, R.C., ‘Adaptive aiding automation for system control: challenges to realization’, in Proceedings of the Topical Meeting on Advances in Human Factors Research on Man/Computer Interactions: Nuclear and Beyond, American Nuclear Society, LaGrange Park, IL, pp. 304–310, 1990.

17. Ruff, H.A., Narayanan, S. and Draper, M.H., ‘Human interaction with levels of automation and decision-aid fidelity in the supervisory control of multiple simulated unmanned air vehicles’, Presence, 11, 335–351, 2002.

18. Ruff, H.A., Calhoun, G.L., Draper, M.H., Fontejon, J.V. and Guilfoos, B.J., ‘Exploring automation issues in supervisory control of multiple UAVs’, paper presented at 2nd Human Performance, Situation Awareness, and Automation Conference (HPSAA II), Daytona Beach, FL, 2004.

19. Cummings, M.L. and Guerlain, S., ‘Developing operator capacity estimates for supervisory control of autonomous vehicles’, Human Factors, 49, 1–15, 2007.

20. Miller, C., ‘Modeling human workload limitations on multiple UAV control’, Proceedings of the Human Factors and Ergonomics Society 48th Annual Meeting, New Orleans, LA, pp. 526–527, September 20–24, 2004.

21. Galster, S.M., Knott, B.A. and Brown, R.D., ‘Managing multiple UAVs: are we asking the right questions?’, Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting, San Francisco, CA, pp. 545–549, October 16–20, 2006.

22. Taylor, R.M., ‘Human automation integration for supervisory control of UAVs’, Virtual Media for Military Applications, Meeting Proceedings RTO-MP-HFM-136, Paper 12, Neuilly-sur-Seine, France, 2006.

23. Cummings, M.L., Bruni, S., Mercier, S. and Mitchell, P.F., ‘Automation architectures for single operator, multiple UAV command and control’, The International C2 Journal, 1, 1–24, 2007.

24. Liu, D., Wasson, R. and Vincenzi, D.A., ‘Effects of system automation management strategies and multi-mission operator-to-vehicle ratio on operator performance in UAV systems’, Journal of Intelligent and Robotic Systems, 54, 795–810, 2009.

25. Mitchell, D.K. and Chen, J.Y.C., ‘Impacting system design with human performance modelling and experiment: another success story’, Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting, San Francisco, CA, pp. 2477–2481, 2006.

26. Pashler, H., ‘Attentional limitations in doing two tasks at the same time’, Current Directions in Psychological Science, 1, 44–48, 1992.

27. Pashler, H., Carrier, M. and Hoffman, J., ‘Saccadic eye-movements and dual-task interference’, Quarterly Journal of Experimental Psychology, 46A, 51–82, 1993.

28. Monsell, S., ‘Task switching’, Trends in Cognitive Sciences, 7, 134–140, 2003.

29. Rubinstein, J.S., Meyer, D.E. and Evans, J.E., ‘Executive control of cognitive processes in task switching’, Journal of Experimental Psychology: Human Perception and Performance, 27, 763–797, 2001.

30. Schumacher, E.H., Seymour, T.L., Glass, J.M., Fencsik, D.E., Lauber, E.J., Kieras, D.E. and Meyer, D.E., ‘Virtually perfect time sharing in dual-task performance: uncorking the central cognitive bottleneck’, Psychological Science, 12, 101–108, 2001.

31. Squire, P., Trafton, G. and Parasuraman, R., ‘Human control of multiple unmanned vehicles: effects of interface type on execution and task switching times’, Proceedings of ACM Conference on Human–Robot Interaction, Salt Lake City, UT, pp. 26–32, March 2–4, 2006.

32. Chadwick, R.A., ‘Operating multiple semi-autonomous robots: monitoring, responding, detecting’, Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting, San Francisco, CA, pp. 329–333, 2006.

33. Cummings, M.L., ‘The need for command and control instant message adaptive interfaces: lessons learned from tactical Tomahawk human-in-the-loop simulations’, CyberPsychology and Behavior, 7, 653–661, 2004.

34. Dorneich, M.C., Ververs, P.M., Whitlow, S.D., Mathan, S., Carciofini, J. and Reusser, T., ‘Neuro-physiologically-driven adaptive automation to improve decision making under stress’, Proceedings of the Human Factors and Ergonomics Society, 50th Annual Meeting, San Francisco, CA, pp. 410–414, October 16–20, 2006.

35. Parasuraman, R., Cosenzo, K.A. and De Visser, E., ‘Adaptive automation for human supervision of multiple uninhabited vehicles: effects on change detection, situation awareness, and mental workload’, Military Psychology, 21, 270–297, 2009.

36. Chen, J.Y.C., Barnes, M.J. and Harper-Sciarini, M., Supervisory Control of Unmanned Vehicles, Technical Report ARL-TR-5136, US Army Research Laboratory, Aberdeen Proving Ground, MD, 2010.

37. Cummings, M.L., Brzezinski, A.S. and Lee, J.D., ‘The impact of intelligent aiding for multiple unmanned aerial vehicle schedule management’, IEEE Intelligent Systems, 22, 52–59, 2007.

38. Dixon, S.R. and Wickens, C.D., ‘Control of multiple-UAVs: a workload analysis’, 12th International Symposium on Aviation Psychology, Dayton, OH, 2003.

39. Maza, I., Caballero, F., Molina, R., Pena, N. and Ollero, A., ‘Multimodal interface technologies for UAV ground control stations: a comparative analysis’, Journal of Intelligent and Robotic Systems, 57, 371–391, 2009.

40. Trouvain, B. and Schlick, C.M., ‘A comparative study of multimodal displays for multirobot supervisory control’, in D. Harris (ed.), Engineering Psychology and Cognitive Ergonomics, Springer-Verlag, Berlin, pp. 184–193, 2007.

41. Wickens, C.D., Dixon, S. and Chang, D., ‘Using interference models to predict performance in a multiple-task UAV environment’, Technical Report AHFD-03-9/MAAD-03-1, 2003.

42. Helleberg, J., Wickens, C.D. and Goh, J., ‘Traffic and data link displays: Auditory? Visual? Or Redundant? A visual scanning analysis’, 12th International Symposium on Aviation Psychology, Dayton, OH, 2003.

43. Donmez, B., Cummings, M.L. and Graham, H.D., ‘Auditory decision aiding in supervisory control of multiple unmanned aerial vehicles’, Human Factors, 51, 718–729, 2009.

44. Chen, J.Y.C., Drexler, J.M., Sciarini, L.W., Cosenzo, K.A., Barnes, M.J. and Nicholson, D., ‘Operator workload and heart-rate variability during a simulated reconnaissance mission with an unmanned ground vehicle’, Proceedings of the 2008 Army Science Conference, 2008.

45. Luck, J.P., McDermott, P.L., Allender, L. and Russell, D.C., ‘An investigation of real world control of robotic assets under communication latency’, Proceedings of 2006 ACM Conference on Human–Robot Interaction, pp. 202–209, 2006.

46. Chen, J.Y.C., ‘Concurrent performance of military and robotics tasks and effects of cueing in a simulated multi-tasking environment’, Presence, 18, 1–15, 2009.

47. Draper, M., Calhoun, G., Ruff, H., Williamson, D. and Barry, T., ‘Manual versus speech input for unmanned aerial vehicle control station operations’, Proceedings of the 47th Annual Meeting of the Human Factors and Ergonomics Society, Santa Monica, CA, pp. 109–113, 2003.

48. Chen, J.Y.C., Durlach, J.P., Sloan, J.A. and Bowens, L.D., ‘Human robot interaction in the context of simulated route reconnaissance missions’, Military Psychology, 20, 135–149, 2008.

49. Baber, C., Morin, C., Parekh, M., Cahillane, M. and Houghton, R.J., ‘Multimodal human–computer interaction in the control of payload on multiple simulated unmanned vehicles’, Ergonomics, 54, 792–805, 2011.

50. Parasuraman, R., Barnes, M. and Cosenzo, K., ‘Decision support for network-centric command and control’, The International C2 Journal, 1, 43–68, 2007.

51. Crocoll, W.M. and Coury, B.G., ‘Status or recommendation: selecting the type of information for decision aiding’, Proceedings of the Human Factors Society, 34th Annual Meeting, Santa Monica, CA, pp. 1524–1528, 1990.

52. Rovira, E., McGarry, K. and Parasuraman, R., ‘Effects of imperfect automation on decision making in a simulated command and control task’, Human Factors, 49, 76–87, 2007.

53. Parasuraman, R. and Riley, V.A., ‘Humans and automation: use, misuse, disuse, abuse’, Human Factors, 39, 230–253, 1997.

54. Parasuraman, R., ‘Designing automation for human use: empirical studies and quantitative models’, Ergonomics, 43, 931–951, 2000.

55. Scerbo, M., ‘Adaptive automation’, in W. Karwowski (ed.), International Encyclopedia of Ergonomics and Human Factors, Taylor and Francis, London, pp. 1077–1079, 2001.