10 Helping patients in performing online video search: evaluating the importance of medical terminology extracted from MeSH and ICD-10 in health video title and description

Abstract: Huge amounts of health-related videos are available on the Internet, and health consumers increasingly turn to web-based video sources for answers to their health problems and concerns. However, a critical factor in identifying relevant videos based on a textual query is how accurately the metadata reflect the video content. This chapter examines how reputable health video providers, such as hospitals and health organizations, describe diabetes-related video content and how often they use standard terminology found in medical vocabularies. In this study, we compared video titles and descriptions to medical terms extracted from the MeSH and ICD-10 vocabularies. We found that only a small number of videos were described using medical terms (4% of the videos included an exact ICD-10 term, and 7% an exact MeSH term). Furthermore, the videos that did use medical terms drew on a strikingly narrow range of diabetes-related terms: their titles and descriptions contained only 2.4% of the exact ICD-10 terms and 4.3% of the exact MeSH terms in our term sets. These figures raise the question of how many useful health videos escape patients’ online searches because appropriate terms are so rarely used in titles and descriptions. We argue that including medical terms in video titles and descriptions is useful to patients searching for relevant health information. Adopting good practices for titling and describing health-related videos may also help producers of YouTube videos to identify and address gaps in the informational resources that patients need to monitor their own health.
Sadly, as things stand, neither patients nor producers of health videos can explore the collection of online materials in the systematic manner in which medical professionals explore the medical domain using MEDLINE. Why can we not have the same rigorous, systematic curation of patient-oriented health videos as we have for other medical content on the web?

10.1 Introduction

A huge amount of health information is available on the Internet, which has become a major source of information on many aspects of health (AlGhamdi & Moussa 2012; Griffiths et al. 2012). People use the Internet to search for information about specific diseases or symptoms, read other people’s commentary on or experiences with health or medical issues, watch online health videos, consult online reviews of drugs or medical treatments, look for others with health concerns similar to their own and follow personal health experiences through blogs (de Boer, Versteegen & van Wijhe 2007; Powell et al. 2011; Fox 2011b).

Health information on the Internet comes from many different sources, including hospitals, health organizations, government, educational institutions, for-profit actors and private persons. However, the general problem of information overload makes it difficult to find relevant, good-quality health information on the Internet (Purcell, Wilson & Delamothe 2002; Mishoe 2008). Adding to this problem, many websites contain inaccurate, incomplete, obsolete, biased or misleading information, which often makes it difficult to distinguish reliable information from specious information on the Web (Steinberg et al. 2010; Briones et al. 2012; Singh, Singh & Singh 2012; Syed-Abdul et al. 2013).

An important factor for information trustworthiness is the credibility of the information source (Freeman & Spyridakis 2009). Users are, for example, much more likely to trust health information published or authored by physicians or major health institutions (Dutta-Bergman 2003; Moturu, Lui & Johnson 2008; Bermudez-Tamayo et al. 2013) than information posted by other patients in the blogosphere. Consequently, users show greater interest in health information from hospitals and health organizations (such as The American Diabetes Foundation and Diabetes UK), because these sources are considered more credible than the average health information that other patients put on the web.

In this chapter, we focus primarily on health information provided through videos, and look at ways to provide health consumers with relevant videos that satisfy their informational needs. One of the most important factors in identifying relevant videos based on a textual query is the metadata used to catalogue video material; in particular, both the video title and the video description must accurately reflect the content of the video. Given the importance of metadata for the proper classification and retrieval of health-related videos, we take a close look at how reputable health video providers, such as hospitals and health organizations, describe their video content using medical terminology. In this study, we compare video titles and descriptions to medical terms extracted from the MeSH and ICD-10 vocabularies.

For practicality, we chose to base our study on a narrowly defined clinical topic: it uses health videos obtained from YouTube through textual search queries on diabetes-related issues. YouTube is today the most important video-sharing website on the Internet (Cheng, Dale & Liu 2008), and it is increasingly used to share health information offered by hospitals, organizations, government, companies and private users (Bennett 2011). YouTube’s social media tools allow users to easily upload, view and share videos, and enable interaction by letting users rate videos and post comments. A persistent problem, however, is the difficulty of finding relevant health videos from credible sources such as hospitals and health organizations, because search ranking tends to favor content from popular sources (channels) over content from more official sites. This should not be surprising: unlike patient-generated YouTube videos, videos from hospitals and other healthcare organizations do not readily benefit from social media interaction through likes/dislikes and comments, and for this and other reasons they tend to appear lower in the ranked list of search results than video material produced by lay sources.

We conducted the study by first issuing a number of diabetes-related queries to YouTube and identifying videos from more official healthcare organization sources. The titles and descriptions of those videos were then checked for medical terms from the ICD-10 and MeSH vocabularies, and the prevalence of these terms was determined.

The study was intended to answer the following questions: To what extent do the titles and descriptions of diabetes health videos contain medical terms from the MeSH and ICD-10 vocabularies? Which medical terms are used in video titles and descriptions?

The chapter is organized as follows. Section 10.2 describes the MeSH and ICD-10 vocabularies, how the test videos were obtained and how the study was conducted. Section 10.3 presents the results of the study, while Section 10.4 discusses the findings and how medical terminology in video titles and descriptions can be useful. Section 10.5 concludes the chapter.

10.2 Data and methods

10.2.1 Obtaining video data

The videos used in this test were collected over a period of 44 days, from 28 February to 12 April 2013. We chose this duration because we considered a period of about six weeks long enough to draw useful conclusions. Each day, 19 queries focusing on different aspects of diabetes were issued to the YouTube web site, and the top 500 ranked videos for each query were examined to identify videos from credible sources.

Through our study of health videos on YouTube we identified credible YouTube channels, such as hospitals and health organizations, and organized them into white-lists86 of channels. The Health Care Social Media List started by Ed Bennett87 was used as an initial white-list for credible channels, which we expanded with more channels that were identified during our studies (Karlsen et al. 2013).

Since users also seek information from their peers, we also identified videos from users that we classified as active in publishing diabetes-related videos. The wide availability of peer-to-peer healthcare video material shows not only that patients and their caregivers have knowledge and experiences that they want to share, but also that patients themselves eagerly seek such information from their peers (Fox 2011a). To address this information need, and to investigate the use of medical terms in user-provided videos, we compiled a third white-list of channels belonging to active users who predominantly produced diabetes videos.

Our white-lists contained a total of 699 channels, where 651 were hospitals, 30 were organizations, and 18 were active users. The 19 queries used in the study all included the term “diabetes” and focused on different aspects concerning the disease. We used queries such as “diabetes a1c,” “diabetes glucose,” “diabetes hyperglycemia” and “diabetes lada.”

In the first, information-collection stage of the project, we implemented a system that automatically issued the 19 queries on each day of the study (using an anonymous, English-language profile). For each query, the system extracted information about the top 500 YouTube results and identified new videos from white-listed channels, which were added to our set of test videos. After the 44 days of the study, we had a total of 1380 unique diabetes-related videos from hospitals, health organizations and active users. The title and description of each video were extracted and compared against medical terms to detect videos whose metadata contained medical terminology.
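The daily collection step can be sketched as a filter over ranked search results. This is a minimal sketch under stated assumptions: the `SearchResult` record, the `collect_new_videos` function and the channel identifiers are hypothetical, and retrieving the ranked results from YouTube is deliberately left out, since the chapter does not describe how the system called YouTube.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchResult:
    """One ranked search hit (hypothetical record; fetching it from YouTube is not shown)."""
    video_id: str
    channel_id: str
    title: str
    description: str


def collect_new_videos(ranked_results, whitelist, collected, top_n=500):
    """Add videos from white-listed channels among the top_n hits to `collected`.

    `collected` maps video_id -> SearchResult and is kept across all queries and
    days, so a video returned several times is stored only once.
    Returns the videos that were new in this call.
    """
    new = []
    for hit in ranked_results[:top_n]:
        if hit.channel_id in whitelist and hit.video_id not in collected:
            collected[hit.video_id] = hit
            new.append(hit)
    return new
```

Keeping `collected` across all 19 queries and all 44 days is what makes the final set consist of unique videos: a video returned by several queries, or on several days, is counted once.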

10.2.2 Detecting medical terms in video title and/or description

The experiments were carried out using two different sets of medical terms: one from the ICD-10 database,88 and a second set from the MeSH database.89 The medical vocabulary used was the result of searching the online databases of ICD-10 and MeSH with the phrase “diabetes mellitus.” For ICD-10 we retrieved 167 medical terms related to diabetes, while MeSH returned 282 diabetes-related terms. A term is either a single word or a phrase consisting of two or more words.

We refer to the terms selected from the MeSH vocabulary as the MeSH term set, and to those selected from the ICD-10 vocabulary as the ICD-10 term set. We further distinguish between exact terms and partial terms. An exact term is a complete medical term as given in the ICD-10 or MeSH vocabulary. A partial term is a single word taken from an exact term (which may be a phrase). For example, “neuropathy” is a partial term of the exact term “diabetic autonomic neuropathy.”

To generate lists of partial terms from the ICD-10 and MeSH vocabularies, we first removed stop words, such as “of,” “the,” “in,” “with” and “and” (Baeza-Yates & Ribeiro-Neto 2011). In addition, since diabetes is the general topic of the selected videos and vocabulary terms, we chose to discard the most common terms (“diabetes” and “diabetic”) from our list of partial terms, in order to focus on more specific diabetes-related medical terms. We also revised the partial terms manually to discard terms that are not specifically related to diabetes. These included terms such as diet, coma, complications, obese, latent, chemical and onset, which occurred in phrases such as “diabetic coma” and “diabetic diet.” After processing the medical phrases, we had two lists of partial medical terms, one for the ICD-10 term set and the other for the MeSH term set.
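The derivation of partial terms can be sketched as follows. The sketch assumes simple lower-case word tokenization and a stop-word list containing only the examples named above; the function name `partial_terms` and the `manually_excluded` parameter (standing in for the manual revision step) are hypothetical. Note that the results below also treat phrases such as “type 1” as partial terms; the chapter does not describe how such phrases were kept together, and this sketch simply splits on single words.

```python
import re

# Stop words named in the text; the full list used in the study is not given.
STOP_WORDS = frozenset({"of", "the", "in", "with", "and"})
# Discarded because diabetes is the general topic of all videos and terms.
TOPIC_TERMS = frozenset({"diabetes", "diabetic"})


def partial_terms(exact_terms, manually_excluded=frozenset()):
    """Split exact terms into single-word partial terms, dropping unwanted words.

    `manually_excluded` stands in for the manual revision step (e.g. "diet", "coma").
    """
    discard = STOP_WORDS | TOPIC_TERMS | frozenset(manually_excluded)
    result = set()
    for term in exact_terms:
        # Hyphenated words such as "insulin-dependent" stay in one piece.
        for word in re.findall(r"[a-z0-9-]+", term.lower()):
            if word not in discard:
                result.add(word)
    return result
```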

We used two methods to identify medical terms in video titles and descriptions, exact term match and partial term match, and applied each of them to both the ICD-10 and the MeSH term set. The criteria for determining medical term prevalence in video titles and descriptions were as follows:

- Exact match: The system detects a positive match when the video title/description contains an exact term from a vocabulary term set.

- Partial match: The system detects a positive match when the video title/description contains a partial term from a vocabulary term set.
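A minimal sketch of the two match criteria, assuming case-insensitive whole-word matching (the chapter does not say whether a substring such as “insulin” inside “insulinoma” would count; here it does not). The function names `contains_term` and `matched_terms` are hypothetical.

```python
import re


def contains_term(text, term):
    """True if `term` occurs in `text` as a whole word or phrase, ignoring case."""
    # The lookarounds stop "insulin" from matching inside "insulinoma".
    pattern = r"(?<![a-z0-9])" + re.escape(term.lower()) + r"(?![a-z0-9])"
    return re.search(pattern, text.lower()) is not None


def matched_terms(title, description, term_set):
    """Return the terms from `term_set` found in the video title or description.

    The same test serves both criteria: pass the exact terms for an exact match,
    or the partial terms for a partial match.
    """
    text = f"{title} {description}"
    return {term for term in term_set if contains_term(text, term)}
```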

Fig. 10.1: Architecture for medical term detection.

Figure 10.1 shows how medical terms are detected in video titles and descriptions. The title and description of a video are given, together with a vocabulary term set, as input to a process that detects exact and partial term matches for the video. If medical terms are detected, information about the video and the detected terms is stored. The system then moves on to the next video and repeats the search.

We handle medical terms from ICD-10 and MeSH separately, meaning that the system only includes exact and partial terms from one vocabulary at any given time. Medical term detection is thus executed twice over the videos: once for detecting ICD-10 terms and, in a second run of the system, once for detecting MeSH terms. This was done to identify the impact that the choice of vocabulary had on medical term detection.
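The detection loop in Fig. 10.1, run once per vocabulary, might look like the sketch below. The category rule is our assumption: in the results (Tab. 10.1) the exact, partial and no-match counts for each vocabulary sum to 1380 (for ICD-10, 54 + 816 + 510), which suggests that each video was counted in exactly one category, with an exact match taking precedence. The video dictionaries and function names are hypothetical.

```python
import re
from collections import Counter


def contains_term(text, term):
    """Case-insensitive whole-word/phrase match (same assumption as the previous sketch)."""
    pattern = r"(?<![a-z0-9])" + re.escape(term.lower()) + r"(?![a-z0-9])"
    return re.search(pattern, text.lower()) is not None


def classify(video, exact_terms, partial_terms):
    """Assign one video to 'exact', 'partial' or 'none' (exact takes precedence)."""
    text = f"{video['title']} {video['description']}"
    found = {t for t in exact_terms if contains_term(text, t)}
    if found:
        return "exact", found
    found = {t for t in partial_terms if contains_term(text, t)}
    return ("partial", found) if found else ("none", found)


def run_detection(videos, exact_terms, partial_terms):
    """One pass over all videos with the term sets of ONE vocabulary."""
    counts = Counter()
    detected = {}  # video id -> detected terms, stored only for positive matches
    for video in videos:
        category, terms = classify(video, exact_terms, partial_terms)
        counts[category] += 1
        if terms:
            detected[video["id"]] = terms
    return counts, detected
```

Calling `run_detection` once with the ICD-10 term sets and once with the MeSH term sets mirrors the two separate executions described above.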

10.2.3 Medical vocabularies

The International Classification of Diseases, whose first version dates from 1893, is maintained by the World Health Organization (WHO) and is now in its tenth revision (ICD-10). The purpose of ICD-10 is to provide a common foundation for defining diseases and health conditions, allowing the world to compare and share health information using a common language (WHO 2013). The classification is used to report and identify global health trends, and its primary users are health workers. We used ICD-10 to derive the exact and partial terms matched against the video metadata.

Medical Subject Headings (MeSH) is the National Library of Medicine’s controlled vocabulary thesaurus used for indexing articles for PubMed. MeSH is continuously updated with terms appearing in medical publications (NLM 2013). Terms like “Diabetes Mellitus” and even “Twitter Messaging” are defined in a hierarchical structure that permits searching at various specificity levels.

Together, these vocabularies can be used by content creators to describe the health condition that a piece of information covers, while placing it in an information hierarchy that helps healthcare information seekers identify it.

10.3 Results

The test video collection contained 1380 distinct videos, including 270 hospital videos, 854 health organizations’ videos and 256 active users’ videos. The videos were uploaded from 73 hospital channels, 30 organization channels and 18 user channels. The videos’ title and description were compared against 167 ICD-10 terms and 282 MeSH terms.

Table 10.1 shows the results, in number of videos, from the two analytic methods: exact term match and partial term match. For both vocabularies a relatively high number of videos had a title and/or description including one or more terms that partially matched a term in the vocabulary. A low number of videos included exactly matching terms (3.9% for ICD-10 and 6.7% for MeSH), while around 40% of the videos did not include any medical terms at all.

Tab. 10.1: Final results. The number of videos with an exact match, partial match and no match to the ICD-10 and MeSH term sets (amount and percentage between parentheses).

10.3.1 ICD-10 results

When comparing ICD-10 terms to video titles and descriptions, we found that 54 of the 1380 videos (4%) had a title/description with an exact match to at least one ICD-10 term. For partial ICD-10 terms, we detected a positive match for 816 videos (59%), while 510 videos (37%) had no ICD-10 terms in their title and/or description at all (see Fig. 10.2a).

Fig. 10.2: Results of ICD-10 term matching, with total result over all videos (2a) and positive match distribution over hospital, health organization and active users videos (2b).

When analyzing how the videos with positive results were distributed over hospitals, health organizations and active users, we found that the majority (66%) of videos with medical terms came from health organizations (see Fig. 10.2b), while hospitals and active users each accounted for an equally small share (17%) of the videos with ICD-10 terms.

Figure 10.3 displays the total number of videos within each group (i.e., hospitals, health organizations and active users) that had a title/description with an exact match (3a) or a partial match (3b) to ICD-10 terms. As shown in Fig. 10.3, organizations accounted for the largest share of both exact-match and partial-match videos (54% and 67%, respectively) compared with hospitals and active users. Hospitals came second for exact matches, and their share of exact-match videos (39%) was more than twice their share of partial-match videos (15%). This is the reverse of the pattern for organizations, whose share of partial-match videos exceeded their share of exact-match videos.

Fig. 10.3: Exact and partial match distribution over hospital, health organization and active users’ videos.

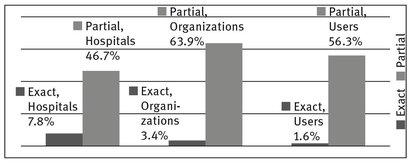

The relative proportion of videos from each group with exact and partial matches is displayed in Fig. 10.4, which shows that hospital videos had the largest relative proportion of exact match terms. Among the 270 hospital videos, 21 (7.8%) had an exact match with an ICD-10 term. The corresponding figures for organizations and active users were 3.4% (29 of 854 videos) and 1.6% (4 of 256 videos), respectively. The proportion of videos with a partial match to ICD-10 terms is much higher, with videos from health organizations coming out on top with 63.9%, followed by active users’ videos (56.3%) and hospital videos (46.7%).
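The two ways of reading these numbers, the share of all exact-match videos that a group accounts for (Fig. 10.3) and the proportion of a group’s own videos with an exact match (Fig. 10.4), can both be reproduced from the ICD-10 exact-match counts reported in this section. The names `EXACT_ICD10`, `percent` and `proportions` are hypothetical; the counts themselves come from the text.

```python
# ICD-10 exact matches per group: (videos with an exact match, videos in group).
EXACT_ICD10 = {
    "hospitals": (21, 270),
    "organizations": (29, 854),
    "active users": (4, 256),
}


def percent(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


def proportions(counts):
    """Per group: proportion of its own videos matched, and its share of all matches."""
    total_matches = sum(matched for matched, _ in counts.values())
    return {
        group: {
            "within_group": percent(matched, size),          # as in Fig. 10.4
            "share_of_matches": percent(matched, total_matches),  # as in Fig. 10.3
        }
        for group, (matched, size) in counts.items()
    }
```

For hospitals this gives 7.8% within the group but 38.9% of all exact-match videos, which is why hospitals rank first in Fig. 10.4 but second, after organizations (53.7%), in Fig. 10.3.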

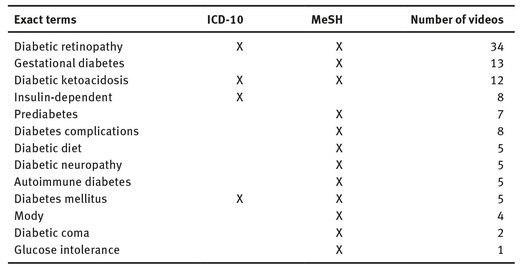

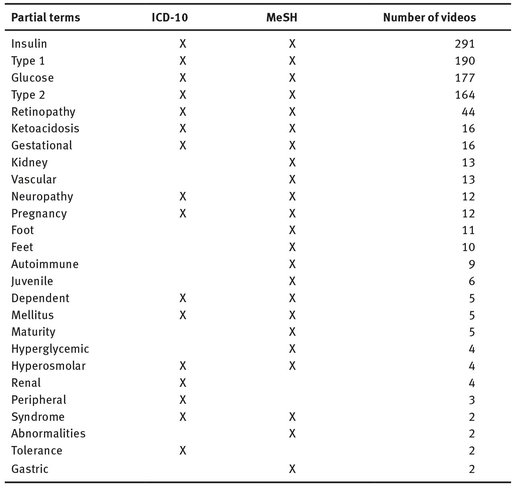

During the analysis we detected a total of 27 distinct ICD-10 terms. Figure 10.5 presents the most frequently used ICD-10 terms, including all terms used in more than five videos. Terms with five or fewer occurrences are grouped as “other terms”; these comprise 15 terms with a total of 39 occurrences. The exact terms in Fig. 10.5 are “diabetic retinopathy,” “diabetic ketoacidosis” and “insulin-dependent”; the remaining terms are partial ICD-10 terms. For complete lists of exact and partial terms, see Tabs. 10.2 and 10.3, respectively.

In Fig. 10.5, we include the total number of ICD-10 term occurrences. This means that if a title/description includes the terms “type 1” and “glucose,” both terms are counted and represented in the numbers given in Fig. 10.5. We observe that four terms, “type 1,” “type 2,” “insulin” and “glucose,” have a much higher number of occurrences than the other terms. These four terms represent 81% of the total amount of ICD-10 term occurrences.
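Counting occurrences in this way, and the share taken by the most frequent terms, can be sketched as below. The input format (a mapping from video identifiers to the set of terms detected in each video) and the function names are hypothetical, and the toy data in the usage check are illustrative only, not taken from the study.

```python
from collections import Counter


def occurrence_counts(detections):
    """Count term occurrences over videos.

    A video whose title/description contains both "type 1" and "glucose"
    adds one occurrence to each term; a term counts at most once per video.
    """
    counts = Counter()
    for terms in detections.values():
        counts.update(set(terms))
    return counts


def top_k_share(counts, k=4):
    """Percentage of all occurrences taken by the k most frequent terms."""
    total = sum(counts.values())
    top = sum(count for _, count in counts.most_common(k))
    return 100 * top / total
```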

Fig. 10.4: Relative proportion of videos with medical terms.

Fig. 10.5: The most frequently used ICD-10 terms in video title/descriptions.

10.3.2 MeSH Results

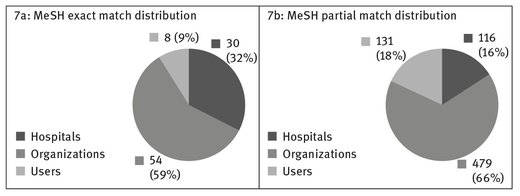

When comparing video titles/descriptions to MeSH terms, we found that 92 videos (7%) had an exact term match while 726 videos (52%) had a partial term match. Finally, 562 videos (41%) did not have any MeSH terms in their title/description (see Fig. 10.6a).

We used the same analysis method as for ICD-10 terms. Figure 10.6b presents the positive match distribution over hospitals’, organizations’ and active users’ videos, while Fig. 10.7 distinguishes between the exact match distribution and the partial match distribution. For both ICD-10 and MeSH terms, we observe that hospitals account for a larger share of the exact-match videos than of the partial-match videos.

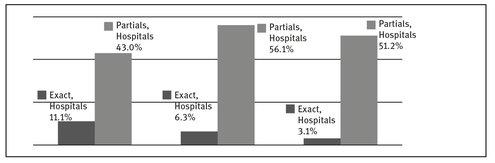

The relative proportion of videos with MeSH terms (see Fig. 10.8) follows the same pattern as for ICD-10 term matches (see Fig. 10.4): hospitals have the highest relative proportion of exact term matches, and organizations the highest relative proportion of partial term matches. One difference between the two vocabularies is that, for all groups, the proportion of exact terms is higher with MeSH than with ICD-10, whereas the proportion of partial terms is lower with MeSH for all groups. This shows why, in this kind of research, it is important to distinguish between the two medical vocabularies as well as between exact and partial terms.

Fig. 10.6: Results of MeSH term matching, with total result over all videos and positive match distribution.

Fig. 10.7: MeSH exact and partial match distribution over hospital, health organization and active users videos.

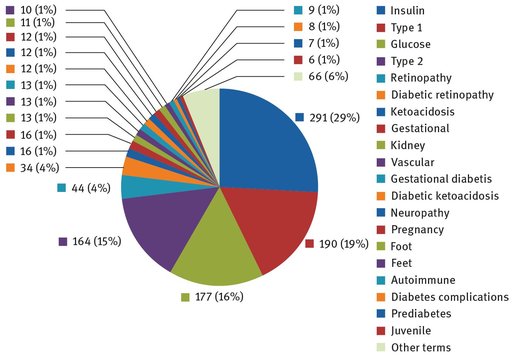

We detected a total of 45 distinct MeSH terms. Figure 10.9 presents the most frequently used MeSH terms, including all terms used in more than five videos. Terms with five or fewer occurrences are grouped as “other terms”; these comprise 25 terms with a total of 66 occurrences. The exact match terms in Fig. 10.9 are “diabetic retinopathy,” “gestational diabetes,” “diabetic ketoacidosis” and “prediabetes”; the remaining terms are partial MeSH terms. The complete lists of exact and partial MeSH terms used in the test videos are given in Tabs. 10.2 and 10.3, respectively.

As was the case for ICD-10 terms, the four terms type 1, type 2, insulin and glucose had a very high number of occurrences, while most terms had relatively few. With MeSH, the four most common terms account for 74% of all occurrences, while the remaining 41 terms account for the other 26%.

Fig. 10.8: Relative proportion of videos with MeSH terms.

Fig. 10.9: The most frequently used MeSH terms in video title/descriptions.

From the above analysis, we see that the MeSH results largely follow the same pattern as the ICD-10 results. The main differences are that more distinct terms, and more exact match occurrences, were detected with the MeSH vocabulary.

10.3.3 Terms used in video titles and descriptions

Compared with the total number of diabetes-related terms available in the ICD-10 and MeSH vocabularies, few medical terms were detected in video titles and descriptions. Of the 167 ICD-10 terms, only four exact terms were detected (2.4%), while 12 of the 282 MeSH terms were detected as exact terms (4.3%). As for partial terms, 23 were detected based on ICD-10 and 33 based on MeSH.

Below we present two tables listing the terms recognized in the exact term match analysis (Tab. 10.2) and the partial term match analysis (Tab. 10.3). Across the ICD-10 and MeSH vocabularies, only 13 distinct exact terms were detected in video titles and/or descriptions, while 36 distinct partial terms were found. Tables 10.2 and 10.3 list these terms, indicate the vocabulary to which each belongs, and give the number of videos using each term.

In addition to the partial terms listed in Tab. 10.3, we also detected 10 terms that appeared in title and/or description of one video only. These terms were: acidosis, intolerance, nephropathy, glycosylation, fetal, pituitary, resistant, products, central, and sudden.

We observe that more terms were detected with the MeSH vocabulary. This may be a consequence of the larger number of terms in the MeSH term set. Also, since MeSH was originally designed for indexing PubMed articles, it might be natural for someone who uses medical terms to choose a MeSH term when describing videos as well.

Tab. 10.2: All exact terms detected in video title/descriptions. Including vocabulary affiliation and number of videos where the terms were used.

Tab. 10.3: Partial terms detected in video title/descriptions. Including vocabulary affiliation and number of videos where the terms were used. Not including terms with only one occurrence.

Tables 10.2 and 10.3 show that the two vocabularies complement each other, since some of the detected terms are found only in ICD-10 and others only in MeSH. To check the overlap between the ICD-10 and MeSH term sets, we merged the two selected term sets. After removing duplicates and plural forms, the merged set contained 344 distinct terms, of which 105 were included in both ICD-10 and MeSH. Setting the overlapping terms aside, each vocabulary contributes terms that are not found in the other.
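The overlap check is a set computation. In this minimal sketch, the hypothetical `normalize` function only lower-cases terms and collapses whitespace; the chapter also folded plural forms but does not say how, so that step is omitted. The function names are hypothetical.

```python
def normalize(term):
    """Lower-case a term and collapse internal whitespace (plural folding omitted)."""
    return " ".join(term.lower().split())


def vocabulary_overlap(icd10_terms, mesh_terms):
    """Sizes of the merged set, the shared part and each vocabulary's own terms."""
    icd = {normalize(t) for t in icd10_terms}
    mesh = {normalize(t) for t in mesh_terms}
    return {
        "merged": len(icd | mesh),
        "shared": len(icd & mesh),
        "icd10_only": len(icd - mesh),
        "mesh_only": len(mesh - icd),
    }
```

With the chapter’s figures, inclusion-exclusion gives 167 + 282 − 105 = 344 merged terms, matching the reported total; assuming neither list contained internal duplicates, 62 terms would then be ICD-10-only and 177 MeSH-only.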

10.3.4 Occurrences of terms – when discarding the most common terms

As previously pointed out, the four partial terms type 1, type 2, insulin and glucose had a very large number of occurrences compared with the rest of the partial terms. To see how these four terms affect the overall result, we recalculated the MeSH term matches without recognizing these four terms. Comparing the new result (Fig. 10.10b) with the original result (Fig. 10.10a), we found that the share of “no match” videos increased dramatically, from 41% to 81%, when the four terms were disregarded.
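The recalculation amounts to re-running partial matching with the four terms excluded. A minimal sketch, assuming the same whole-word matching as before and treating each video as one combined title/description string; the names `COMMON_TERMS` and `no_match_rate` are hypothetical, and the example texts in the usage check are invented for illustration.

```python
import re

# The four very frequent partial terms discussed in the text.
COMMON_TERMS = frozenset({"type 1", "type 2", "insulin", "glucose"})


def contains_term(text, term):
    """Case-insensitive whole-word/phrase match."""
    pattern = r"(?<![a-z0-9])" + re.escape(term.lower()) + r"(?![a-z0-9])"
    return re.search(pattern, text.lower()) is not None


def no_match_rate(texts, partial_terms, excluded=frozenset()):
    """Percentage of texts containing none of the partial terms left after exclusion."""
    terms = [t for t in partial_terms if t not in excluded]
    misses = sum(1 for text in texts if not any(contains_term(text, t) for t in terms))
    return 100 * misses / len(texts)
```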

Figure 10.11 compares the relative proportion of partial MeSH term matches when all partial terms are included with the proportion obtained when the four terms (type 1, type 2, insulin, glucose) are disregarded. When the four most common terms are excluded, the proportion of videos with partial medical terms drops dramatically for videos from health organizations and active users, with relative decreases of 83% and 87%, respectively. For hospital videos, the proportion of partial MeSH term matches also drops, but to a lesser extent: here the decrease is 48%.

When disregarding the four most common terms, the distribution follows the same pattern as for exact term matches, where hospitals had the largest relative proportion of videos including medical terms. This indicates that hospitals more often, compared to health organizations and active users, describe videos using the more specific medical terms.

Fig. 10.10: The number of videos that have a partial match for MeSH terms in title and/or description. In 10a all terms are included, while in 10b the four most common terms (type 1, type 2, insulin and glucose) were disregarded.

Fig. 10.11: Relative proportion of videos with MeSH partial terms. Analysis including all partial terms compared to analysis excluding the four most common terms (type 1, type 2, insulin, glucose).

10.4 Discussion

10.4.1 Findings

This study presents an analysis of medical terminology used in title and description of YouTube diabetes health videos uploaded by hospitals, health organizations and active users. The results show a low prevalence of medical terminology and that few distinct terms are actually used for describing videos.

We found that only 4% of the video titles/descriptions included an exact ICD-10 term, while 7% included an exact MeSH term. A larger share of the videos had a partial match with ICD-10 and/or MeSH terms. However, many of these positive results came from one or more of the terms type 1, type 2, insulin and glucose. When these very common terms are disregarded, the share of videos that include a partial MeSH term is only 12%. The same applies to ICD-10 terms: the share of videos including a partial ICD-10 term drops to only 19% when the four common terms are disregarded.

When distinguishing between videos provided by hospitals, organizations, and active users, we found that relative to the number of videos from each group, hospital videos had the highest prevalence of medical terms, followed by health organizations’ videos and lastly by active users’ videos. This was the case for exact term match to both ICD-10 and MeSH, and also for partial term match when disregarding the most common terms (type 1, type 2, insulin, glucose).

Even though hospital videos had the highest relative prevalence of medical terms (both exact and partial), the numbers were still low. Among hospital videos, only 11.1% included an exact MeSH term and 7.8% an exact ICD-10 term. The relative prevalence of partial MeSH terms in hospital videos (excluding the four common terms listed above) was 22.2%.

In addition to low prevalence of medical terms found in video title and description, we also found a very low variety of diabetes-related medical terms in the title/description of such videos. Among the 167 ICD-10 terms, only four exact terms were detected (2.4%), while 12 (out of 282) exact MeSH terms were detected (4.3%). Concerning partial terms, 23 such terms were detected based on ICD-10 and 33 such terms were detected based on MeSH.

10.4.2 How ICD-10 and MeSH terms can be useful

Some hospitals and health institutions have long provided patients with information about their disease and/or the medical procedure performed to treat it, especially when they are discharged. This information normally contains very specific medical details that patients can show to health personnel to explain why they were in hospital, and that can also help them learn more about their health condition and how to prevent serious relapses.

In primary care, for example, there is an increasing trend towards providing patients with information about their condition. The medical terms in the documentation given by primary care providers, especially the diagnostic or procedure codes, offer potential links to specific information that is relevant to the patient. For medical practitioners, on the other hand, such diagnostic codes and MeSH terms can be used to identify literature directly relevant to the patient through MEDLINE.90 Patients should have the same opportunity to find relevant health information, including the wealth of medical information contained in health videos aimed at patients.

Providing both codes and the corresponding medical terms in video titles and/or descriptions would help patients find information targeted at their medical condition. Codes and corresponding medical terms would also reduce the uncertainty that patients experience when confronted with an often unfamiliar and very complex medical reality. Being confident of having found information relevant to their medical condition can therefore be of great value to patients.

Using medical terms and codes in the titles and descriptions of videos would also help producers of information resources, such as YouTube videos, to identify gaps in the resources that patients may need. At present, neither patients nor video producers can explore the collection in the same systematic manner as medical professionals explore the medical domain using MEDLINE.

10.4.3 Discriminating power of terms

The specificity of a term is a measure of its ability to distinguish between documents in a collection (Spärck Jones 1972; Salton & McGill 1983) or, in our case, between health videos. A term's specificity is commonly measured by its Inverse Document Frequency (IDF) (Spärck Jones 1972; Baeza-Yates & Ribeiro-Neto 2011). IDF is based on counting the number of documents in the collection being searched that contain the term in question. The intuition is that a term occurring in many documents is a poor discriminator and should be given less weight than a term occurring in few documents.
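As a minimal sketch of the IDF weighting just described, the Python below uses the standard formulation idf(t) = log(N / n_t), where N is the number of videos and n_t the number of videos whose text contains term t. It assumes that each video is represented by its title and description as a single string and that a term "occurs" when it appears as a case-insensitive substring; this matching rule is an illustrative assumption, not necessarily the one used in our experiments. The toy descriptions are invented and are not data from this study.

```python
import math

def document_frequency(term, documents):
    """Count the documents (video title + description strings) containing term.

    Assumption: a term 'occurs' if it appears as a case-insensitive substring.
    """
    needle = term.lower()
    return sum(1 for doc in documents if needle in doc.lower())

def idf(term, documents):
    """Inverse document frequency, idf(t) = log(N / n_t).

    Returns None when the term occurs in no document, since N / n_t is undefined.
    """
    n_t = document_frequency(term, documents)
    if n_t == 0:
        return None
    return math.log(len(documents) / n_t)

# Hypothetical video titles/descriptions, invented for illustration only.
videos = [
    "Living with type 1 diabetes: insulin and glucose basics",
    "Type 2 diabetes: checking your blood glucose",
    "Insulin injection technique for type 2 diabetes",
    "Diabetic retinopathy: what patients should know about eye checks",
]

for term in ["insulin", "glucose", "diabetic retinopathy"]:
    print(f"{term}: n_t={document_frequency(term, videos)}, idf={idf(term, videos):.3f}")
```

In this toy collection, "insulin" and "glucose" each occur in two of the four videos and get idf = log 2 ≈ 0.693. "Diabetic retinopathy" occurs in only one video and gets log 4 ≈ 1.386, so it discriminates better between the videos.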

The results of our experiments show that the four partial terms (type 1, type 2, insulin, and glucose) have very low specificity among the diabetes-related videos selected for this study. This reflects how commonly these terms are used. A video described using one or more of these common terms becomes one among a large number of similarly described videos. To stand out, and to be easy to identify and retrieve, a video needs a description that is (i) accurate with respect to the video content and (ii) expressed through terms with high specificity.

In this chapter we recommend that video descriptions include medical terms and codes that describe the content of the video as accurately as possible. Given the importance of specificity and the discriminating power of terms, we also recommend avoiding the most common terms and instead describing video content through more specific medical terms.
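One way to put this recommendation into practice is to rank candidate terms by their IDF against the existing collection and set aside those below a cut-off. The Python sketch below does this. The helper names, the threshold `min_idf` and the toy data are hypothetical choices for illustration, not values from our study. Terms that no existing video uses are treated as maximally specific (score `math.inf`), since such a term would distinguish a new video from every video already in the collection.

```python
import math

def idf_score(term, documents):
    """idf(t) = log(N / n_t), using case-insensitive substring matching (an assumption).

    A term used by no document gets math.inf: it would set a new video apart
    from every video already in the collection.
    """
    n_t = sum(1 for doc in documents if term.lower() in doc.lower())
    return math.log(len(documents) / n_t) if n_t else math.inf

def split_by_specificity(candidates, documents, min_idf):
    """Rank candidate terms from most to least specific and split them at min_idf.

    Returns (kept, dropped). min_idf is a hypothetical, collection-dependent cut-off.
    """
    ranked = sorted(candidates, key=lambda t: idf_score(t, documents), reverse=True)
    kept = [t for t in ranked if idf_score(t, documents) >= min_idf]
    dropped = [t for t in ranked if idf_score(t, documents) < min_idf]
    return kept, dropped

# Hypothetical existing video descriptions and candidate terms, for illustration only.
videos = [
    "Living with type 1 diabetes: insulin and glucose basics",
    "Type 2 diabetes: checking your blood glucose",
    "Insulin injection technique for type 2 diabetes",
    "Diabetic retinopathy: what patients should know about eye checks",
]
candidates = ["insulin", "glucose", "diabetic retinopathy", "diabetic ketoacidosis"]

kept, dropped = split_by_specificity(candidates, videos, min_idf=1.0)
print("prefer:", kept)
print("avoid:", dropped)
```

A fixed IDF cut-off is only one possible rule. Because IDF depends on the collection, the same term may be common in one set of videos and rare in another, so any threshold would have to be chosen for the collection at hand.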

10.4.4 The uniqueness of our study when compared to other work

A considerable amount of literature has been published on YouTube data analysis, for example studying the relations between video ratings and comments (Yee et al. 2009) or focusing on the social networking aspect of YouTube and its social features (Cheng, Dale & Liu 2008; Chelaru, Orellana-Rodriguez & Sengor Altingovde 2012). Until now, studies of YouTube performance have mainly focused on YouTube in general rather than on specific domains such as health. However, some studies have evaluated YouTube health videos with respect to the quality of their information for patient education and professional training (Gabarron et al. 2013; Topps, Helmer & Ellaway 2013). Such studies, covering different areas of medicine, include the work of Butler et al. (2012), Briones et al. (2012), Schreiber et al. (2013), Singh, Singh & Singh (2012), Steinberg et al. (2010), Murugiah et al. (2011), Fat et al. (2011), and Azer et al. (2013). In these studies, reviewers evaluated the quality of selected videos and assessed their usefulness as an information source within their respective area.

The study closest to ours illustrates how our work differs from that of our colleagues. Konstantinidis et al. (2013) identified the use of SNOMED terms among tags attached to YouTube health videos. The videos, which provided information about surgery, were collected from a preselected list of hospital channels. The study examined tags from 4307 YouTube videos and found that 22.5% of these tags were SNOMED terms. Our study, however, differs from the work of Konstantinidis et al. in a number of ways:

First, we detect the use of terminology from both the ICD-10 and MeSH vocabularies in video titles and descriptions. We consider these vocabularies particularly interesting because of their purpose and application area. MeSH terms were originally used to index articles for PubMed, but such terms may also be relevant when health video creators describe the content of their videos. ICD, the standard diagnostic tool for epidemiology, health management, and clinical purposes, is used to classify diseases and other health problems.91 As such, the ICD-10 vocabulary should also be relevant for describing health-related content in videos. Second, in contrast to Konstantinidis et al., we expanded the types of YouTube channels to include health organizations and users active in publishing videos about the disease, rather than looking only at hospital-produced videos. Third, we focused on a narrowly defined medical condition, examining a broad range of health videos specifically about diabetes, whereas Konstantinidis et al. examined health videos on the more general topic of surgery. For these reasons, individually and collectively, we believe that our study provides a richer and fuller understanding of how educational health videos measure up, in terms of how well their titles and descriptions conform to the medical terms pertaining to the health condition for which the user is seeking web-based video material.

10.5 Conclusion

We have investigated the prevalence of medical terminology in the titles and descriptions of diabetes videos obtained from YouTube. Our results show that hospitals and health organizations use medical terms only to a modest degree to describe the diabetes health videos they upload to YouTube.

When comparing video titles and descriptions to terminology from the ICD-10 and MeSH vocabularies, we found that only 4% of the videos were described using an exact ICD-10 term and 7% using an exact MeSH term. Moreover, when disregarding four very common terms (type 1, type 2, insulin, and glucose), the proportion of videos that included a partial MeSH term was only 12%. We further found that very few of the ICD-10 and MeSH terms (2.4% and 4.3%, respectively) were used to describe diabetes health videos. This amounts to a very low variety of diabetes-related medical terms in these videos.
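The figures above measure two different things. The first is the share of videos whose title or description contains at least one vocabulary term. The second is the share of vocabulary terms that appear in at least one video, that is, the variety of terms used. The following minimal Python sketch computes both ratios under the assumption that a term matches when the full term occurs as a case-insensitive substring. The vocabulary and video texts are invented for illustration and do not reproduce our ICD-10/MeSH term lists or our actual matching procedure.

```python
def term_usage(vocabulary, descriptions):
    """Return (share of videos with >= 1 term, share of terms used by >= 1 video).

    Assumption: a term matches a video if the full term occurs as a
    case-insensitive substring of the video's title + description.
    """
    terms = [t.lower() for t in vocabulary]
    docs = [d.lower() for d in descriptions]
    videos_with_term = sum(1 for d in docs if any(t in d for t in terms))
    terms_used = sum(1 for t in terms if any(t in d for d in docs))
    return videos_with_term / len(docs), terms_used / len(terms)

# Hypothetical vocabulary and video texts, for illustration only.
vocabulary = [
    "diabetes mellitus",
    "diabetic retinopathy",
    "diabetic ketoacidosis",
    "gestational diabetes",
    "hypoglycemia",
]
videos = [
    "Living with type 1 diabetes: insulin and glucose basics",
    "Type 2 diabetes: checking your blood glucose",
    "Insulin injection technique for type 2 diabetes",
    "Diabetic retinopathy: what patients should know about eye checks",
]

video_share, term_coverage = term_usage(vocabulary, videos)
print(f"videos with an exact term: {video_share:.0%}")  # 25%
print(f"vocabulary terms used: {term_coverage:.0%}")    # 20%
```

Only one of the five hypothetical terms appears, and it appears in only one of the four videos. A collection can therefore score low on both measures at once, which is the pattern reported above.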

We believe that including medical terms and codes when describing videos can improve the availability of the videos and make it easier for patients to find relevant information during an online search. Hospitals, health institutions and primary care facilities increasingly use medical terms and codes when providing patients with information about their conditions, for example in health-related pamphlets and newsletters and in email alerts sent to patients. If health-related video descriptions likewise included these medical terms and related codes, patients could find videos relevant to their condition simply by using the same terms and codes in their search queries.

When including medical terms in the title and/or description of a video, one should also consider the specificity of the terms and their ability to discriminate between videos. One should avoid the most common terms (in our tests: type 1, type 2, insulin, and glucose) and instead select more specific medical terms, so that this useful and informative video content becomes more accessible to patients.

Acknowledgments

The authors appreciate support from UiT The Arctic University of Tromsø through funding from Tromsø Research Foundation, and the important contributions of the ITACA-TSB Universitat Politècnica de València group.

References

AlGhamdi, K. M. & Moussa, N. A. (2012) 'Internet use by the public to search for health-related information', Int J Med Inform, 81(6):363–373, ISSN 1386-5056, doi: 10.1016/j.ijmedinf.2011.12.004.

Azer, S. A., AlGrain, H. A., AlKhelaif, R. A. & AlEshaiwi, S. M. (2013) 'Evaluation of the educational value of YouTube videos about physical examination of the cardiovascular and respiratory systems', J Med Internet Res, 15(11):e241.

Baeza-Yates, R. A. & Ribeiro-Neto, B. (2011) Modern Information Retrieval: The Concepts and Technology Behind Search, Second edition. Boston, MA: Addison-Wesley Longman Publishing Co., Inc.

Bennett, E. (2011) Social Media and Hospitals: from Trendy to Essential, Futurescan Newsletter, Healthcare Trends and Implications 2011–2016, The American Hospital Association, Health Administration Press.

Bermudez-Tamayo, C., Alba-Ruiz, R., Jiménez-Pernett, J., García-Gutiérrez, J. F., Traver-Salcedo, V. & Yubraham-Sánchez, D. (2013) 'Use of social media by Spanish hospitals: perceptions, difficulties, and success factors', Telemed J E Health, 19(2):137–145.

Briones, R., Nan, X., Madden, K. & Waks, L. (2012) ‘When vaccines go viral: an analysis of HPV vaccine coverage on YouTube’, Health Commun, 27(5):478–485.

Butler, D. P., Perry, F., Shah, Z. & Leon-Villapalos, J. (2012) ‘The quality of video information on burn first aid available on YouTube’, Burns, 2012 Dec 26:1.

Chelaru, S., Orellana-Rodriguez, C. & Sengor Altingovde, I. (2012) Can Social Features Help Learning to Rank YouTube Videos? In Proceedings of The 13th International Conference on Web Information Systems Engineering (WISE 2012).

Cheng, X., Dale, C. & Liu, J. (2008) Statistics and social networking of YouTube videos, In Proceedings of the IEEE International Workshop on Quality of Service.

de Boer, M. J., Versteegen, G. J. & van Wijhe, M. (2007) 'Patients' use of the Internet for pain-related medical information', Patient Educ Couns, 68(1):86–97.

Dutta-Bergman, M. (2003) ‘Trusted online sources of health information: differences in demographics, health beliefs, and health-information orientation’, J Med Internet Res, [Online]. Available: http://www.jmir.org/2003/3/e21/HTML.

Fat, M. J., Doja, A., Barrowman, N. & Sell, E. (2011) ‘YouTube videos as a teaching tool and patient resource for infantile spasms’, J Child Neurol, 26(7):804–809.

Fox, S. (2011a) Peer-to-peer healthcare. Report, PewInternet, California HealthCare Foundation; Feb.

Fox, S. (2011b) The social life of health information. Report, PewInternet, California HealthCare Foundation; May.

Freeman, K. S. & Spyridakis, J. H. (2009) Effect of contact information on the credibility of online health information, IEEE Transactions on Professional Communication, 52(2), June.

Gabarron, E., Fernandez-Luque, L., Armayones, M. & Lau, A. Y. (2013) ‘Identifying measures used for assessing quality of YouTube videos with patient health information: a review of current literature’, Interact J Med Res, 2(1):e6.

Griffiths, F., Cave, J., Boardman, F., Ren, J., Pawlikowska, T., Ball, R., Clarke, A. & Cohen, A. (2012) 'Social networks – the future for health care delivery', Soc Sci Med, 75(12), December, Available: http://dx.doi.org/10.1016/j.socscimed.2012.08.023.

Karlsen, R., Borras-Morell, J. E. B., Salcedo, V. T. & Luque, L. F. (2013) 'A domain-based approach for retrieving trustworthy health videos from YouTube', Stud Health Technol Inform, 192:1008.

Konstantinidis, S., Luque, L., Bamidis, P. & Karlsen, R. (2013) 'The role of taxonomies in social media and the semantic web for health education', Methods Inf Med, 52(2):168–179.

Mishoe, S. C. (2008) 'Consumer health care information on the Internet: does the public benefit?', Respir Care, 53(10):1285–1286.

Moturu, S. T., Liu, H. & Johnson, W. G. (2008) Trust evaluation in health information on the World Wide Web, Engineering in Medicine and Biology Society, EMBS 2008. 30th Annual International Conference of the IEEE.

Murugiah, K., Vallakati, A., Rajput, K., Sood, A. & Challa, N. R. (2011) ‘YouTube as a source of information on cardiopulmonary resuscitation’, Resuscitation, 82(3):332–334.

NLM (2013) Fact Sheet Medical Subject Headings (MeSH®) [cited 2013 Dec 12]. Available from: http://www.nlm.nih.gov/pubs/factsheets/mesh.html.

Powell, J., Inglis, N., Ronnie, J. & Large, S. (2011) The characteristics and motivations of online health information seekers: cross-sectional survey and qualitative interview study, J Med Internet Res, 13(1):e20. doi: 10.2196/jmir.1600. http://www.jmir.org/2011/1/e20/v13i1e20.

Purcell, G. P., Wilson, P. & Delamothe, T. (2002) ‘The quality of health information on the Internet’, Br Med J, 324:557–8.

Salton, G. & McGill, M. J. (1983) Introduction to Modern Information Retrieval, New York, NY: McGraw-Hill Inc.

Schreiber, J. J., Warren, R. F., Hotchkiss, R. N. & Daluiski, A. (2013) ‘An online video investigation into the mechanism of elbow dislocation’, J Hand Surg Am, 38(3):488–494.

Singh, A. G., Singh, S. & Singh, P. P. (2012) ‘YouTube for information on rheumatoid arthritis – a wakeup call?’ J Rheumatol, 39(5):899–903.

Spärck Jones, K. (1972) ‘A statistical interpretation of term specificity and its application in retrieval’, J Doc, 28.

Steinberg, P. L., Wason, S., Stern, J. M., Deters, L., Kowal, B. & Seigne, J. (2010) ‘YouTube as source of prostate cancer information’, Urology, 75(3):619–622.

Syed-Abdul, S., et al. (2013) 'Misleading health-related information promoted through video-based social media: anorexia on YouTube', J Med Internet Res, 15(2):e30.

Topps, D., Helmer, J. & Ellaway, R. (2013) ‘YouTube as a platform for publishing clinical skills training videos’, Acad Med, 88(2):192–197.

WHO (2013) International Classification of Diseases (ICD) Information Sheet, WHO. [cited 2013 Dec 12]. Available from: http://www.who.int/classifications/icd/factsheet/en/index.html.

Yee, W. G., Yates, A., Liu, S. & Frieder, O. (2009) Are Web User Comments Useful for Search? In: Proc. of the 7th Workshop on Large-Scale Distributed Systems for Information Retrieval (LSDS-IR09).