Chapter 22

UX Design Guidelines

To err is human; forgive by design.

– Anonymous

Objectives

After reading this chapter, you will:

1. Appreciate the difficulties in using and interpreting UX design guidelines

2. Understand the role of human memory limitations in guidelines

3. Understand and apply some of the basic UX design guidelines with respect to user actions for each stage of the Interaction Cycle

22.1 Introduction

22.1.1 Scope and Universality

There are, of course, many books and articles on design guidelines for graphical user interfaces (GUIs) and other user interfaces and their widgets—how to create and employ windows, buttons, pull-down menus, pop-up menus, cascading menus, icons, dialogue boxes, check boxes, radio buttons, options menus, forms, and so on. But we want you to think about interaction design and design guidelines much more broadly than that, even well beyond Web pages and mobile devices.

You will find that this chapter takes a broader approach, transcending design just for computer interfaces and particular platforms, media, or devices. There is a world of designs out there and, as Don Norman (1990) says, seeing the guidelines applied to the design of everyday things helps us understand the application of guidelines to interaction by humans with almost any kind of device or system.

Design (UX) Guidelines

A UX, or interaction, design guideline is a statement suggesting recommendations and considerations to inform the design of a specific aspect or component of interaction in a certain context. Some design guidelines come from study data, but most come from principles, maxims, and experience.

User Interfaces for Handheld Devices

Brad A. Myers

Carnegie Mellon University

The term “handheld devices” includes mobile phones (in particular, “smartphones,” which have more elaborate functions and user interfaces), as well as personal digital assistants, pagers, calculators, and specialized devices such as handheld scanners and data entry devices. Portable devices that are larger than a hand, such as tablets like the Apple iPad, are generally not considered to be handheld devices.

How do interfaces designed for handheld devices differ from conventional user interfaces? The first point to emphasize is that all of the processes and techniques described in this book, from contextual inquiries through iterative prototyping through user testing, apply to handheld user interfaces just as they do to any other user interface, so the process and techniques are not different. The key difference with handheld devices is the context of use. By definition, handheld devices are used while being held in one hand, which means that generally at most one other hand is available to perform input. Furthermore, handheld devices are mostly used on the go, which means that the user is busy doing other tasks (such as walking, talking on the phone, or taking an inventory at a store) at the same time as using the handheld. Another key difference is that handhelds have much smaller screens than conventional computers or tablets. Thus, information designs and even interaction techniques designed for conventional computers may not work on handhelds. For example, multicolumn Web pages are very difficult to read on handhelds, and a pop-up menu with more than 10 items cannot be used easily. Finally, because handheld devices are often controlled with a finger, as there typically is not a mouse pointer, target areas must be sufficiently large so that users can select what they want accurately. Some handhelds require the use of a stylus (a pen-like tool for pointing to or writing on the screen) instead of (or in addition to) a finger, which means that smaller items can be selected. However, stylus-based interfaces for handhelds are becoming less common.

The implications of these differences for the design of handheld user interfaces include the following:

Optimize interactions for immediate use. User interfaces on handheld devices must make the most common actions available immediately. Users expect to be able to pull the device out of their pocket and perform a task quickly with minimal interactions and minimal waiting. For example, because the most common task for a calendar while on the go is to look up the next appointment, the user interface should default to showing what is on the calendar for the current time. The user interface must allow the user to exit immediately as well, as users can be interrupted at any time, for example, by the phone ringing. Designers of the original Palm handheld made the important observation that the most important tasks should be performed with one click, even at the cost of consistency. So, on the Palm, creating a new event at a particular time just requires tapping on the calendar screen, whereas deleting an event (which is relatively rare) requires multiple taps and dialog boxes.

Minimize textual input. Even more than minimizing input in general, text entry continues to be difficult with small devices, so requiring more than a word or two to be typed is problematic, and applications should be resilient to typing errors.

Concise output. The information to be displayed must be optimized for the small displays of these devices. Designs for desktops will typically need to be redesigned to make more efficient use of the screen space. For example, avoid blank lines.

Conform to platform conventions. Because interfaces must look like other applications on that particular handheld, iPhone user interfaces must look like other iPhone applications. If a user interface must run on different handhelds, it will probably need to be redesigned substantially. For example, the Android user interface conventions are quite different from the iPhone’s. Even within a platform there might be variations. For example, an Android phone can have a variety of physical buttons and form factors, which a user interface must use correctly.

Allow disconnected and poorly connected use. Although networks continue to improve, an application should not assume it will always be well connected. Devices can go out of range, even in the middle of a transaction, and the user interface must respond appropriately. It is never acceptable for the handheld to refuse to respond to the user even if the network disappears. Users expect to be able to perform tasks even when the network is turned off, such as on an airplane.

Because handheld devices will be the dominant way that most people in the world access computation, designing effective user interfaces for these devices will likely be a significant part of a designer’s activities.

The principles and guidelines in this chapter are universal; you will see in this chapter that the same issues apply to ATMs, elevator controls, and even highway signage. We, too, have a strong flavor of The Design of Everyday Things (Norman, 1990) in our guidelines and examples. We agree with Jokela (2004) that usability and a quality user experience are also essential in everyday consumer products.

We hope you will forgive us for excluding guidelines about internationalization or accessibility (as we advised in the Preface section, What We Do Not Cover). The book, and especially this chapter, is already large and we cannot cover everything.

Usability Principles for New Frontiers in the Virtual Environment User Experience

Theresa (Teri) A. O’Connell

President, Humans & Computers, Inc.

As the Starship Enterprise pushed ever deeper into space, Star Trek’s Captain Picard encountered the challenge of making new laws for new cultures in new environments. Usability and human factors engineers face the same challenge today in defining usability principles for virtual environments (VEs). We can learn a lot from Captain Picard’s experience. Like him, we adapt the traditional to the new, sometimes even deriving novel, untried usability principles from the old.

Some VEs, for example, training environments and advanced visual analytic tools, have a single purpose. Game worlds can have serious or entertainment purposes. Other VEs are multifunctional. Second Life can be a home-away-from-home, classroom-away-from-campus, office-beyond-the-workplace, or exotic-vacation-sans-travel-time. Whatever their purpose, all VEs have one thing in common. The user experience when playing, working, learning, relaxing, or collaborating in a VE differs from that of traditional computing. So, designing a VE for a good user experience requires new ways of thinking about usability principles.

It turns out that many usability principles that apply when you are playing the role of a healer, hobnobbing with virtual buddies, or slaying monsters are the same as those that apply when you are surfing the Internet or writing a paper. We just have to apply them a bit differently in VEs. The resulting principles for design and testing are not mutually exclusive—they interact to create a successful and satisfying user experience. We can see how this works by taking a quick look at some usability principles from the perspective of the VE user experience for gamers and visual analysts.

Give users a sense of control over their experiences is a great grandparent of all usability principles. This prerequisite for user satisfaction is traditionally applied by providing obvious, consistently located, and easy-to-use controls. It applies directly to VE design in strategies, such as right clicking for immediate access to avatar controls, for example, teleportation in case of griefer attacks.

But, sometimes, collaboration requires ceding control, at least temporarily. This happens to players of massively multiplayer online role-playing games (MMORPGs) and analysts manipulating huge complex visual analytic data sets when their success depends on collaboration. Adapting the user control usability principle, we give users control over their own interactions, but cede control to serve VE goals, in this case, to allow collaboration. For example, online game dashboard adaptability gives gamers autonomy over what is theirs. But an inability to steal the microphone while another player talks prevents interruptions, serving the goal of courteous collaboration. During testing, we measure collaboration success by comparing game scores of teams that must take turns sending voice communications to collaborate and teams that do not.

Engage the user is the e-commerce mantra. Its sticky goal is to keep shoppers onsite and buying. In games, the usability principle is engagement trumps everything else. Engagement is a primary purpose of VE design for many reasons. Engagement increases enjoyment and enhances learning. It draws gamers into gameplay, for example, by providing an enjoyable simple quest for novices and then progressing them to higher challenge levels.

Engagement enriches the user experience. But engagement intersects with another classic usability principle, prevent visual overload, for example, by streamlining backgrounds or minimizing animations. VEs can be visually dense. We engage gamers and inform analysts with lots of interesting things to look at, but we risk distraction and visual overload.

In first-person shooter games such as Left 4 Dead, the element of surprise is integral to engagement. In adventure games, while distracting, a dense, engaging background harbors surprise. In such a case, distraction is okay. The new principle becomes control visual overload, making sure that visually rich displays engage the user but do not impede VE goals.

Instead of minimizing animation, we turn animation into a tool to attract attention and engage, for example, with surprise attacks by nonplaying characters. In visual analytic tools, we sometimes interrupt workflow with an eye-catching animation when important new information becomes available. During testing, we measure impact on satisfaction by comparing survey responses from players or analysts who experience interruption and those who do not. We survey players and analysts to learn how engaging they consider different aspects of the user experience, for example, building game scores or answering a question in an analytical VE.

Visual density also leads to a usability principle that requires the VE to assist analysis by helping analysts identify important data quickly, for example, suspicious entities. To test, we measure success by logging and counting interactions with this data, for example, the number of times analysts manipulate isolated data points into clusters or social networks to investigate entity connections. Testing against ground truth, we count the number of known connections analysts identified. We use eye tracking to create heat maps showing analysts’ gaze paths and fixations. If their eyes continuously scan the environment, but never rest on salient new data, we know it is likely that the background is too dense and impedes analysis.

When we design and test the VE for a high-quality user experience, just like Captain Picard, we are going to encounter unimagined challenges. One of our strategies will be to update traditional usability principles. This means that every design or testing team facing the challenges of producing a VE that leads to a high-quality user experience needs a person who has a strong background in fields such as the human factors behind usability principles.

22.1.2 Background

We cannot talk about interaction design guidelines without giving a profound acknowledgement to what is perhaps the mother (and father) of all guidelines publications, the book of 944 design guidelines for text-based user interfaces of bygone days that Smith and Mosier of Mitre Corporation developed for the U.S. Air Force (Mosier & Smith, 1986; Smith & Mosier, 1986).

We were already working in human–computer interaction (HCI) and read it with great interest when it came out. Almost a decade later, an electronic version became available (Iannella, 1995). Other early guidelines collections include Engel and Granda (1975), Brown (1988), and Boff and Lincoln (1988).

Interaction design guidelines appropriate to the technology of the day appeared throughout the history of HCI, including “the design of idiot-proof interactive programs” (Wasserman, 1973); ground rules for a “well-behaved” system (Kennedy, 1974); design guidelines for interactive systems (Pew & Rollins, 1975); usability maxims (Lund, 1997b); and eight golden rules of interface design (Shneiderman, 1998). Every practitioner has a favorite set of design guidelines or maxims.

Eventually, of course, the attention of design guidelines followed the transition to graphical user interfaces (Nielsen, 1990; Nielsen et al., 1992). As GUIs evolved, many of the guidelines became platform specific, such as style guides for Microsoft Windows and Apple. Each has its own set of detailed requirements for compliance with the respective product lines.

As an example from the 1990s, an interactive product from Apple called Making it Macintosh (Alben, Faris, & Saddler, 1994; Apple Computer Inc, 1993) used computer animations to highlight the Macintosh user interface design principles, primarily to preserve the Macintosh look and feel. Many of the early style guides, such as OSF Motif (Open Software Foundation, 1990) and IBM’s Common User Access (Berry, 1988), came built into software tools for enforcing that particular style.

The principles behind the guidelines came mainly from human psychology. Our friend Tom Hewitt (1999) was probably the most steadfast HCI voice for understanding psychology as a foundation for UX design principles and guidelines. These principles first evolved into design guidelines in human factors engineering.

Some UX design guidelines, especially those coming from human factors, are supported with empirical data. Most guidelines, however, have earned their authority from a strong grounding in the practice and shared experience of the UX community—experience in design and evaluation, experience in analyzing and solving UX problems.

Based on the National Cancer Institute’s Research-Based Web Design and Usability Guidelines project begun in March 2000, the U.S. Department of Health and Human Services has published a book containing an extensive set of interaction design guidelines and associated reference material (U.S. Department of Health and Human Services, 2006). Each guideline has undergone extensive internal and external review with respect to tracking down its sources, estimating its relative importance in application, and determining the “strength of evidence,” for example, strong research support vs. weak research support, supporting the guideline.

As is the case in most domains, design guidelines finally opened the way for standards (Abernethy, 1993; Billingsley, 1993; L. Brown, 1993; Quesenbery, 2005; Strijland, 1993).

22.1.3 Some of Our Examples Are Intentionally Old

We have been collecting examples of good and bad interaction and other kinds of design for decades. This means that some of these examples are old. Some of these systems no longer exist. Certainly some of the problems have been fixed over time, but they are still good examples and their age shows how as a community we have advanced and improved our designs. Many new users may think the interfaces to modern commercial software applications have always been as they are. Read on.

22.2 Using and interpreting design guidelines

Are most design guidelines not obvious? When we teach these design guidelines, we usually get nods of agreement upon our statement of each guideline. There is very little controversy about the interaction design guidelines stated absolutely, out of context. Each general guideline is obvious; it just makes sense. How else would you do it?

However, when it comes to applying those same guidelines in specific usability design and evaluation situations, there is bewilderment. People are often unsure about which guidelines apply or how to apply, tailor, or interpret them in a specific design situation (Potosnak, 1988). We do not even agree on the meaning of some guidelines. As Lynne Truss (2003) says in the context of English grammar, even among people who are rabidly in favor of using the rules of grammar, it is impossible to get them all to agree on the rules and their interpretation and to pull in the same direction.

Bastien and Scapin (1995, p. 106) quote a study by de Souza and Bevan (1990): de Souza and Bevan “found that designers made errors, that they had difficulties with 91% of the guidelines, and that integrating detailed design guidelines with their existing experience was difficult for them.”

There is something about UX design guidelines in almost every HCI book, often specific to user interface widgets and devices. You will not see guidelines here of the type: “Menus should not contain more than X items.” That is because such guidelines are meaningless without interpretation within a design and usage context. In the presence of sweeping statements about what is right or wrong in UX design, we can only think of our long-time friend Jim Foley, who said, “The only correct answer to any UX design question is: It depends.”

We believe much of the difficulty stems from the broad generality, vagueness, and even contradiction within most sets of design guidelines. One of the guidelines near the top of almost any list is “be consistent,” an all-time favorite UX platitude. But what does it mean? Consistency at what level; what kind of consistency? Consistency of layout or semantic descriptors such as labels or system support for workflow?

There are many different kinds of consistency with many different meanings in many different contexts. Although we use the same words in discussions about applying the consistency guideline, we are often arguing about different things. That guideline is just too broad and requires too much interpretation for the average practitioner to fit it easily to a particular instance.

Another such overly general maxim is “keep it simple,” certainly a shoo-in to the UX design guidelines hall of fame. But, again, what is simplicity? Minimize the things users can do? It depends on the kind of users, the complexity of their work domain, their skills and expertise.

To address this vagueness and difficulty in interpretation at high levels, we have organized the guidelines in a particular way. Rather than organize the guidelines by the obvious keywords such as consistency, simplicity, and the language of the user, we have tried to associate each guideline with a specific interaction design situation by using the structure of the Interaction Cycle and the User Action Framework (UAF) to organize the guidelines. This allows specific guidelines to be linked to user actions for planning, making physical actions, or assessing feedback and user actions for sensing objects and other interaction artifacts, understanding cognitive content, or physically manipulating those objects.

Finally, we warn you, as we have done often, to use your head and not follow guidelines blindly. While design guidelines and custom style guides are useful in supporting UX design, remember that there is no substitute for a competent and experienced practitioner. Beware of the headless chicken guy unencumbered by the thought process: “Do not worry, I have the style guide.” Then you should worry, especially if the guide turns out to be a programming guide for user interface widgets.

22.3 Human memory limitations

Because some of the guidelines and much of practical user performance depend on the concepts of human working memory, we interject a short discussion of the same here, before we get into the guidelines themselves. We treat human memory here because:

it applies to most of the Interaction Cycle parts, and

it is one of the few areas of psychology that has solid empirical data supporting knowledge that is directly usable in UX design.

Our discussion of human memory here is by no means complete or authoritative. Seek a good psychology book for that. We present a potpourri of concepts that should help your understanding in applying the design guidelines related to human memory limitations.

22.3.1 Sensory Memory

Sensory memory is of very brief duration. For example, the duration of visual memory ranges from a small fraction of a second to maybe 2 seconds and is strictly about the visual pattern observed, not anything about identifying what was seen or what it means. It is raw sensory data that allow direct comparisons with temporally nearby stimuli, such as might occur in detecting voice inflection. Sensory persistence is the phenomenon of storage of the stimulus in the sensory organ, not the brain.

For example, visual persistence allows us to integrate the fast-moving sequences of individual image frames in movies or television, making them appear as a smooth integrated motion picture. There are probably not many UX design issues involving sensory memory.

22.3.2 Short-Term or Working Memory

Short-term memory, which we usually call working memory, is the type we are primarily concerned with in HCI. It has a duration of about 30 seconds, which can be extended by repetition or rehearsal. Other intervening activities, a source of what is sometimes called “retroactive interference,” will cause the contents of working memory to fade even faster.

Working memory is a buffer storage that carries information of immediate use in performing tasks. Most of this information is called “throw-away data” because its usefulness is short term and it is undesirable to keep it longer. In his famous paper, George Miller (1956) showed experimentally that under certain conditions, the typical capacity of human short-term memory is about seven plus or minus two items; often it is less.

22.3.3 Chunking

The items in short-term memory are often encodings that Simon (1974) has labeled “chunks.” A chunk is a basic human memory unit containing one piece of data that is recognizable as a single gestalt. That means for spoken expressions, for example, a chunk is a word, not a phoneme, and in written expressions a chunk is a word or even a single sentence, not a letter.

Random strings of letters can be divided into groups, which are remembered more easily. If the group is pronounceable, it is even easier to remember, even if it has no meaning. Duration trades off with capacity; all else being equal, the more chunks involved, the less time they can be retained in short-term memory.

Not counting the area code, a phone number has seven digits; it is no coincidence that this matches the Miller estimate of working memory capacity. If you look up a number in the phone book, you are loading your working memory with seven chunks. You should be able to retain the number if you use it within the next 30 seconds or so.

With a little rehearsal and without any intervening interruption of your attention, you can stretch this duration out to 2 minutes or longer. A telephone number is a classic example of working memory usage in daily life. If you get distracted between memory loading and usage, you may have to look the number up again, a scenario we all have experienced. If the prefix (the first three digits) is familiar, it is treated as a single chunk, making the task easier.

Sometimes items can be grouped or recoded into patterns that reduce the number of chunks. When grouping and recoding is involved, storage can trade off with processing, just as it does in computers. For example, think about keeping this pattern in your working memory:

001010110111000

On the surface, this is a string of 15 digits, beyond the working memory capacity of most people. But a clever user might notice that this is a binary number and the digits can be grouped into threes:

001 010 110 111 000

and converted easily to octal digits: 12670. With a modicum of processing we have grouped and recoded the original 15 chunks into a more manageable 5.
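The grouping and recoding step above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not a model of human memory; the function name `recode_binary_to_octal` is our own invention for this sketch.

```python
def recode_binary_to_octal(bits: str) -> tuple[list[str], str]:
    """Split a binary string into 3-bit groups and recode each group as one octal digit."""
    if len(bits) % 3 != 0:
        raise ValueError("bit string length must be a multiple of 3")
    # Grouping: 15 separate digits become 5 three-digit groups.
    groups = [bits[i:i + 3] for i in range(0, len(bits), 3)]
    # Recoding: each 3-bit group maps to exactly one octal digit (0-7).
    octal = "".join(str(int(group, 2)) for group in groups)
    return groups, octal


groups, octal = recode_binary_to_octal("001010110111000")
print(groups)  # ['001', '010', '110', '111', '000']
print(octal)   # 12670
```

The trade-off mentioned in the text is visible here: the recoded form holds fewer items, but producing it (and expanding it back) costs a little processing.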

The following is a trick case, but it is illustrative of the principle in an extreme setting. Ostensibly this sequence of letters contains 18 items:

NTH EDO GSA WTH ECA TRU

Because there is no obvious way to group or encode them into chunks, the 18 items, as shown, represent 18 chunks. If each three-letter group spelled a word, there would be 6 chunks. If you know the trick to this example and imagine the initial “N” being moved to the right-hand end, you get not only six words, but a sentence, which amounts to one large chunk:

THE DOG SAW THE CAT RUN
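As a small sketch of the trick, assuming we simply treat the sequence as a string, the recoding amounts to rotating the letters one position to the left and regrouping them in threes. The helper names `rotate_left` and `group_letters` are hypothetical, introduced only for this illustration.

```python
def rotate_left(text: str, count: int = 1) -> str:
    """Move the first `count` characters to the right-hand end."""
    return text[count:] + text[:count]


def group_letters(text: str, size: int = 3) -> list[str]:
    """Split text into consecutive groups of `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


scrambled = "NTH EDO GSA WTH ECA TRU"
letters = scrambled.replace(" ", "")   # 18 individual letters
shifted = rotate_left(letters, 1)      # the initial "N" moves to the end
words = group_letters(shifted, 3)      # six three-letter words

print(len(letters))     # 18
print(" ".join(words))  # THE DOG SAW THE CAT RUN
```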

22.3.4 Stacking

One way task performance strains the limits of user working memory is by requiring task context stacking. This occurs when another situation arises in the middle of task performance. Before the user can continue with the original task, its context (a memory of where the user was in the task) must be put on a “stack” in the user’s memory.

This same thing happens to the context of execution of a software program when an interrupt must be processed before proceeding: the program execution context is stacked in a last-in-first-out (LIFO) data structure. Later, when the system returns to the original program, its context is “popped” from the stack and execution continues. It is pretty much the same for a human user whose primary task is interrupted. Only the stack is implemented in human working memory.

This means that user memory stacks are small in capacity and short in duration. People have leaky stacks; after enough time and interruptions, they forget what they were doing. When people get to “pop” a task context from their stacks, they get “closure,” a feeling of cognitive relief due to the lifting of the cognitive load of having to retain information in their working memories. One way to help users in this regard is to design large, complex tasks as a series of smaller operations rather than one large hierarchically structured task involving significant stacking. This lets them come up for air periodically.
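The stack analogy can be made concrete with a minimal sketch. The class below is hypothetical and deliberately simplistic: it assumes a fixed capacity (the value 2 is arbitrary, chosen only to force an overflow) and models a "leak" as silently losing the oldest context. Real human forgetting is not this tidy.

```python
from collections import deque


class LeakyContextStack:
    """A LIFO stack of task contexts that forgets the oldest one when over capacity."""

    def __init__(self, capacity: int = 3) -> None:
        # A deque with maxlen discards items from the opposite end when full,
        # so appending on the right drops the oldest context on the left.
        self._contexts: deque[str] = deque(maxlen=capacity)

    def push(self, context: str) -> None:
        """Interrupt: save where the user was in the current task."""
        self._contexts.append(context)

    def pop(self) -> str:
        """Resume: return the most recently saved context (last in, first out)."""
        return self._contexts.pop()

    def __len__(self) -> int:
        return len(self._contexts)


stack = LeakyContextStack(capacity=2)  # assumed capacity, for illustration only
stack.push("writing report: editing section 3")
stack.push("answering email: drafting reply")
stack.push("phone call: looking up a date")  # over capacity: report context is lost

resumed = [stack.pop(), stack.pop()]
print(resumed)     # ['phone call: looking up a date', 'answering email: drafting reply']
print(len(stack))  # 0
```

After the two pops the stack is empty, yet the user never gets back to the report; that context leaked away. Designing a task as a series of smaller operations keeps the stack shallow so that this overflow is less likely.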

22.3.5 Cognitive Load

Cognitive load is the load on working memory at a specific point in time (G. Cooper, 1998; Sweller, 1988, 1994). Cognitive load theory (Sweller, 1988, 1994) has been aimed primarily at improvement in teaching and learning through attention to the role and limitations of working memory but, of course, also applies directly to human–computer interaction. While working with the computer, users are often in danger of having their working memory overloaded. Users can get lost easily in cascading menus with lots of choices at each level or tasks that lead through large numbers of Web pages.

If you could chart the load on working memory as a function of time through the performance of a task, you would be looking at variations in the cognitive load across the task steps. Whenever memory load reaches zero, you have “task closure.” By organizing tasks into smaller operations instead of one large hierarchical structure you will reduce the average user cognitive load over time and achieve task closure more often.
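To illustrate the idea of charting load over time, here is a rough sketch that compares two ways of structuring the same nine-step task. The load values are invented for illustration (think of them as the number of items the user must hold in working memory at each step); they are not measurements, and the function names are our own.

```python
def average_load(loads: list[int]) -> float:
    """Mean working-memory load across the steps of a task."""
    return sum(loads) / len(loads)


def closure_count(loads: list[int]) -> int:
    """Number of steps at which the load drops to zero (task closure)."""
    return sum(1 for load in loads if load == 0)


# Hypothetical load per step; both task designs take nine steps.
hierarchical = [1, 2, 3, 4, 4, 3, 2, 1, 0]  # one large nested task
segmented = [1, 2, 0, 1, 2, 0, 1, 2, 0]     # three small operations in sequence

for name, loads in [("hierarchical", hierarchical), ("segmented", segmented)]:
    print(name, round(average_load(loads), 2), closure_count(loads))
```

Under these assumed numbers, the segmented design has both a lower average load and more frequent closure, which is the effect the text describes.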

22.3.6 Long-Term Memory

Information stored in short-term memory can be transferred to long-term memory by “learning,” which may involve the hard work of rehearsal and repetition. Transfer to long-term memory relies heavily on organization and structure of information already in the brain. Items transfer more easily if associations exist with items already in long-term memory.

The capacity of long-term memory is almost unlimited—a lifetime of experiences. The duration of long-term memory is also almost unlimited but retrieval is not always guaranteed. Learning, forgetting, and remembering are all associated with long-term memory. Sometimes items can be classified in more than one way. Maybe one item of a certain type goes in one place and another item of the same type goes elsewhere. As new items and new types of items come in, you revise the classification system to accommodate. Retrieval depends on being able to reconstruct structural encoding.

When we forget, items become inaccessible, but probably not lost. Sometimes forgotten or repressed information can be recalled. Electric brain stimulation can trigger reconstructions of visual and auditory memories of past events. Hypnosis can help recall vivid experiences of years ago. Some evidence indicates that hypnosis increases willingness to recall rather than ability to recall.

22.3.7 Memory Considerations and Shortcuts in Command versus GUI Selection Interaction Styles

Recognition vs. recall

Because we know that computers are better at memory and humans are better at pattern recognition, we design interaction to play to the strengths of each. One way to relieve human memory requirements in interaction design is by leveraging the human ability for recognition.

You hear people say, in many contexts, “I cannot remember exactly, but I will recognize it when I see it.” That is the basis for the guideline to use recognition over recall. In essence, it means letting the user choose from a list of possibilities rather than having to come up with the choice entirely from memory.

Recognition over recall does work better for initial or intermittent use where learning and remembering are the operational factors, but what happens to people who do learn? They migrate from novice to experienced userhood. In UAF terms, they begin to remember how to make translations of frequent intentions into actions. They focus less on cognitive actions to know what to do and more on the physical actions of doing it. The cognitive affordances to help new users make these translations can now begin to become barriers to performance of the physical actions.

Moving the cursor and clicking to select items from lists of possibilities become more effortful than just typing short memorized commands. When more experienced users do recall the commands they need by virtue of their frequent usage, they find command typing a (legal) performance enhancer over the less efficient and, eventually, more boring and irritating physical actions required by those once-helpful GUI affordances.

Even command users get some memory help through command completion mechanisms, the “hum a few bars of it” approach. The user has to remember only the first few characters and the system provides possibilities for the whole command.
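A minimal sketch of command completion might look like the following. The command list and the function name `complete` are made up for illustration; real shells and editors use more elaborate matching.

```python
def complete(prefix, commands):
    """Return the known commands that start with the typed prefix, sorted."""
    return sorted(c for c in commands if c.startswith(prefix))


# Hypothetical command vocabulary for the example.
COMMANDS = ["save", "saveas", "search", "select", "send"]

print(complete("sa", COMMANDS))
print(complete("se", COMMANDS))
```

The user supplies only the recalled prefix; the system turns the rest of the job into recognition by offering the matching candidates.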

Shortcuts

When expert users get stuck with a GUI designed for translation affordances, it is time for shortcuts to come to the rescue. In GUIs, these shortcuts are physical affordances, mainly “hot key” equivalents of menu, icon, and button command choices, such as Ctrl+S for the Save command.

The addition of an indication of the shortcut version to the pull-down menu choice, for example, Ctrl+S added to the Save choice in the File menu, is a simple and subtle but remarkably effective design feature to remind all users of the menu about the corresponding shortcuts. All users can migrate seamlessly between using the shortcuts on the menus to learn and remember the commands and bypassing the menus to use the shortcuts directly. This is true “as-needed” support of memory limitations in design.
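One way to keep the menu reminder and the actual key binding from drifting apart is to generate both from a single command table. The sketch below assumes a hypothetical `Command` record and helper names; it is not tied to any particular GUI toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    name: str      # text shown in the menu, e.g. "Save"
    shortcut: str  # hot key shown next to it, e.g. "Ctrl+S"


def menu_label(cmd, width=12):
    """Menu text with the shortcut appended as a reminder for all users."""
    return f"{cmd.name:<{width}}{cmd.shortcut}"


def build_keymap(commands):
    """Map each shortcut to its command name, for users who bypass the menu."""
    return {c.shortcut: c.name for c in commands}


# Hypothetical File menu entries.
FILE_MENU = [Command("Save", "Ctrl+S"), Command("Open", "Ctrl+O")]

for cmd in FILE_MENU:
    print(menu_label(cmd))
print(build_keymap(FILE_MENU))
```

Because the label and the keymap come from the same data, every shortcut that works is also the one advertised on the menu.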

22.3.8 Muscle Memory

Muscle memory is a little bit like sensory memory in that it is mostly stored locally, in the muscles in this case, and not the brain. Muscle memory is important for repetitive physical actions; it is about getting in a “rhythm.” Thus, muscle memory is an essential aspect of learned skills of athletes. In HCI, it is important in physical actions such as typing.

In this country at least, we use an arbitrary convention that moving an electrical switch up means “on” and down means “off.” Over a lifetime of usage, we develop muscle memory because of this convention and hit the switch in an upward direction as we enter the room without pausing.

However, if you have lights on a three-way switch, “on” and “off” cannot be assigned consistently to any given direction of the switch. It depends on the state of the whole set of switches. So, often you might find yourself hitting a light switch in an upward direction without thinking as you sweep past. If it is a three-way switch, sometimes the light fails to turn on because the switch was already up with the lights off. No amount of practice or trying to remember can overcome this conflict between muscle memory and this device.

22.4 Selected UX Design Guidelines and Examples

The selected UX design guidelines in this section are generally organized by the UAF structure. We illustrate many of the guidelines and principles with examples that we have gathered over the years, including many design examples from everyday things, such as hair dryers, automobiles, road signage, public doorways, and so on, which demonstrate the universality of the principles and concepts. Those examples that are directly about computer interaction are mostly platform independent except, of course, screen shots that are specific to a particular system.

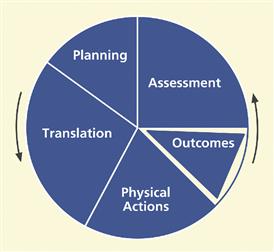

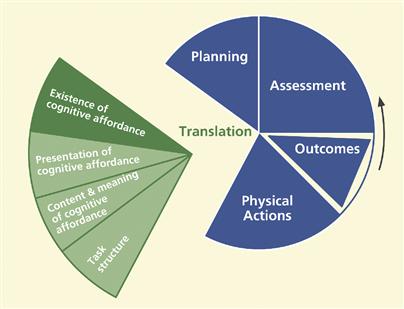

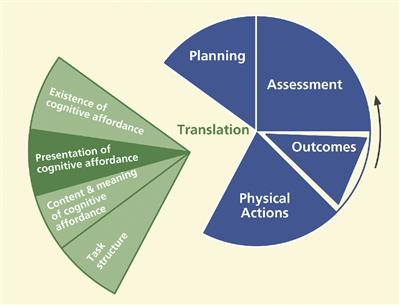

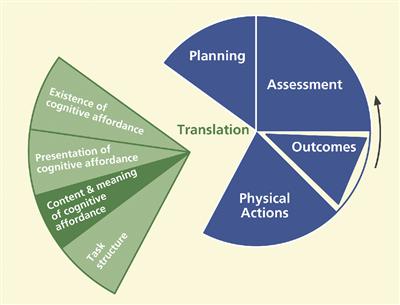

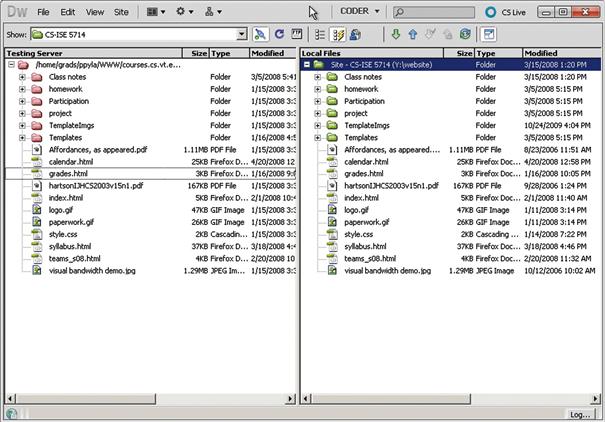

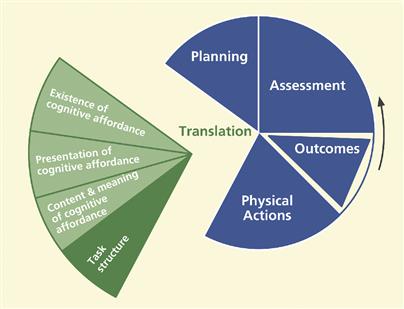

To review the structure of the Interaction Cycle from the previous chapter, we show the simplest view of this cycle in Figure 22-1.

Figure 22-1 Simplest view of the Interaction Cycle.

In sum, parts of the Interaction Cycle are:

- planning: how the interaction design supports users in determining what to do
- translation: how the interaction design supports users in determining how to do actions on objects
- physical actions: how the interaction design supports users in doing those actions
- outcomes: how the non-interaction functionality of the system helps users achieve their work goals
- assessment of outcomes: how the interaction design supports users in determining whether the interaction is turning out right

We will have sample UX design guidelines for each of these plus an overall category.

22.5 Planning

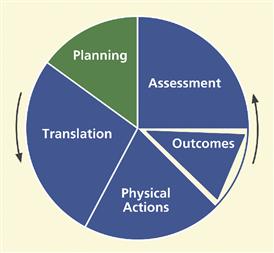

In Figure 22-2 we highlight the planning part of the Interaction Cycle. Support for user planning is often the missing color in the user interface rainbow.

Figure 22-2 The planning part of the Interaction Cycle.

Planning guidelines are to support users as they plan how they will use the system to accomplish work in the application domain, including cognitive user actions to determine what tasks or steps to do. It is also about helping users understand what tasks they can do with the system and how well it supports learning about the system for planning. If users cannot determine how to organize several related tasks in the work domain because the system does not help them understand exactly how it can help do these kinds of tasks, the design needs improvement in planning support.

22.5.1 Clear System Task Model for User

Support the user’s ability to acquire an overall understanding of the system at a high level, including the system model, design concept, and metaphors. (NB: the special green font used in the next line denotes such a guideline.)

Help users plan goals, tasks by providing a clear model of how users should view system in terms of tasks

Support users’ high-level understanding of the whole system with a clear conceptual design, not just how to use one feature.

Help users with system model, metaphors, work context

Support users’ overall understanding of the system, design concept, and any metaphors used with a clear conceptual design. Metaphors, such as the analogy of using a typewriter in a word processor design, are ways that existing user knowledge of previous designs and phenomena can be leveraged to ease learning and using of new designs.

Design to match user’s conception of high-level task organization

Support user task decomposition by matching the design to users’ concept of task decomposition and organization.

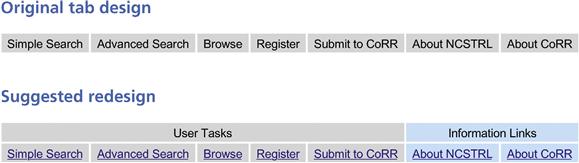

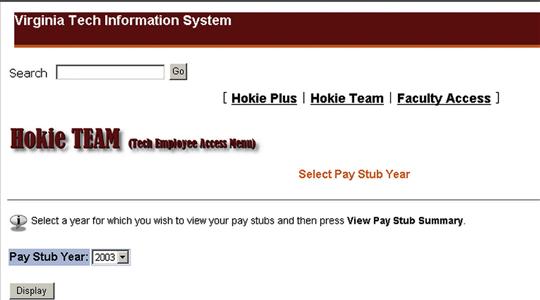

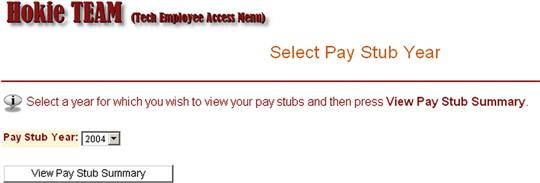

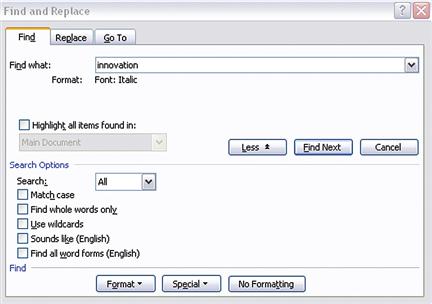

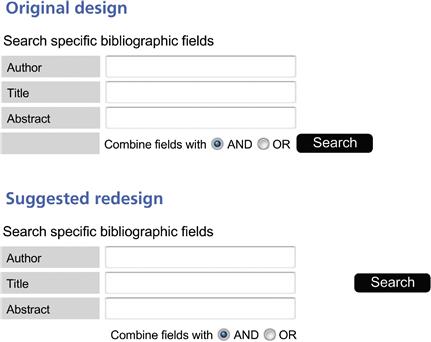

Tabs at the top of every page of a particular digital library Website are not well organized by task. They are ordered so that information-seeking tasks are mixed with other kinds of tasks, as shown in the top of Figure 22-3. Part of the new tab bar in our suggested new design is shown in the bottom of Figure 22-3.

Figure 22-3 Tab reorganization to match task structure.

Help users understand what system features exist and how they can be used in their work context

Support user awareness of specific system features and capabilities, and understanding of how users can apply those features to solve work domain problems in different work situations.

Consider the case of the Master Document feature in Microsoft Word™. For convenience and to keep file sizes manageable, users of Microsoft Word™ can maintain each part of a document in a separate file. At the end of the day they can combine those individual files to achieve the effect of a single document for global editing and printing.

However, this ability to treat several chapters in different files as a single document is almost impossible to figure out. The system does not help the user determine what can be done with it or how it might help with this task.

Help users decompose tasks logically

Support user task decomposition, logically breaking long, complex tasks into smaller, simpler pieces.

Make clear all possibilities for what users can do at every point

Help users understand how to get started and what to do next.

Keep users aware of system state for planning next task

Maintain and clearly display system state indicators when next actions are state dependent.

Keep the task context visible to minimize memory load

To help users compare outcomes with goals, maintain and clearly display user request along with results.

In the search mode within a library information system, users can find themselves deep down many levels and screens into the task where card catalog information is being displayed. By the time they dig into the information structure that deeply, there is a chance users may have forgotten their exact original search intentions. Somewhere on the screen, it would be helpful to have a reminder of the task context, such as “You are searching by author for: Stephen King.”

22.5.2 Planning for Efficient Task Paths

Help users plan the most efficient ways to complete their tasks

This is an example of good design, rather than a design problem, and it is from Borland’s 3-D Home Architect™. Using this house-design program, when a user tries to print a large house plan, it results in an informative message in a dialogue box that says: “Current printer settings require 9 pages at this scale. Switching to Landscape mode allows the plan to be drawn with 6 pages. Click on Cancel if you wish to abort printing.” This tip can be most helpful, saving the time and paper involved in printing it wrong the first time, making the change, and printing again.

Strictly as an aside here, this message still falls short of the mark. First, the term “abort” has unnecessarily violent overtones. Plus, the design could provide a button to change to landscape mode directly, without forcing the user to find out how to make that switch.
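The arithmetic behind such a tip is simple enough to sketch. The code below assumes the plan is tiled onto sheets in a grid and uses made-up plan and printable-area dimensions chosen so that the numbers match the message quoted above; it is not the program's actual logic, and `pages_needed` and `orientation_tip` are hypothetical names.

```python
import math


def pages_needed(plan_w, plan_h, page_w, page_h):
    """Sheets required to tile a plan_w x plan_h drawing onto page_w x page_h pages."""
    return math.ceil(plan_w / page_w) * math.ceil(plan_h / page_h)


def orientation_tip(plan_w, plan_h, page_short, page_long):
    """Return a tip if landscape needs fewer pages than portrait, else None."""
    portrait = pages_needed(plan_w, plan_h, page_short, page_long)
    landscape = pages_needed(plan_w, plan_h, page_long, page_short)
    if landscape < portrait:
        return (
            f"Current printer settings require {portrait} pages at this scale. "
            f"Switching to Landscape mode allows the plan to be drawn with "
            f"{landscape} pages."
        )
    return None


# Assumed sizes: a 20 x 24 plan and an 8 x 10.5 printable area per sheet.
print(orientation_tip(20, 24, 8, 10.5))
```

A design following the aside above would pair this message with a control that switches to landscape directly, rather than leaving the user to find the setting.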

22.5.3 Progress Indicators

Keep users aware of task progress, what has been done and what is left to do

Support user planning with task progress indicators to help users manage task sequencing and keep track of what parts of the task are done and what parts are left to do. During long tasks with multiple and possibly repetitive steps, users can lose track of where they are in the task. For these situations, task progress indicators or progress maps can be used to help users with planning based on knowing where they are in the task.
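As a rough sketch of a progress map, the function below renders a text checklist that shows which steps are done, which one is current, and which remain. The step names and the `progress_map` function are invented for illustration.

```python
def progress_map(steps, current):
    """Render one line per step: [x] done, [>] current, [ ] still to do."""
    if not 0 <= current < len(steps):
        raise IndexError("current step out of range")
    lines = []
    for i, step in enumerate(steps):
        if i < current:
            mark = "x"
        elif i == current:
            mark = ">"
        else:
            mark = " "
        lines.append(f"[{mark}] {step}")
    lines.append(f"Step {current + 1} of {len(steps)}")
    return "\n".join(lines)


# Hypothetical steps in a long, multi-step task.
STEPS = ["Personal info", "Income", "Deductions", "Review", "File"]
print(progress_map(STEPS, 2))
```

Showing both the completed and the remaining steps, not just a percentage, is what lets users plan the rest of the task.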

Filling out income tax forms is a good example of a lengthy multiple-step task. The designers of Turbo-Tax™ by Intuit, with a “wizard-like” step-at-a-time prompter, went to great lengths to help users understand where they are in the overall task, showing the user’s progress through the steps while summarizing the net effect of the user’s work at each point.

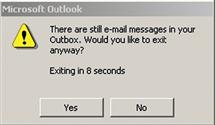

22.5.4 Avoiding Transaction Completion Slips

A transaction completion slip is a kind of error in which the user omits or forgets a final action, often a crucial action for consummating the task. Here we provide an associated guideline and some examples.

Provide cognitive affordances at the end of critical tasks to remind users to complete the transaction

A transaction completion slip can occur in the Ticket Kiosk System when the user gets a feeling of closure at the end of the interaction for the transaction and fails to take the tickets just purchased. In this case, special attention is needed to provide a good cognitive affordance in the interaction design to remind the user of the final step in the task plan and help prevent this kind of slip: “Please take your tickets” (or your bank card or your receipt).

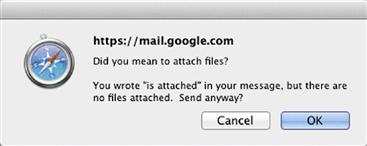

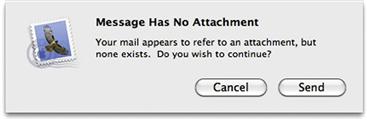

As another example, we cannot count the number of times we have sent or received email for which an attachment was intended but forgotten. Recent versions of Google’s Gmail have a simple solution. If any variation of the word “attach” appears in an email but it is sent without an attachment, the system asks if the sender intended to attach something, as you can see in Figure 22-4. Similarly, if the email author says something such as “I am copying …”, and there is no address in the Copy field, the system could ask about that, too.

Figure 22-4 Gmail reminder to attach a file.

An example of the same thing, this time using a plugin for the Mac Mail program, is shown in Figure 22-5.

Figure 22-5 Mac reminder to attach a file.
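The check behind such a reminder can be sketched in a few lines. This is only an illustration of the idea, assuming a simple word match on variations of “attach”; it is not how Gmail or the Mac Mail plugin actually implements the feature, and the names are hypothetical.

```python
import re

# Matches "attach", "attached", "attachment", "attaching", and so on.
ATTACH_PATTERN = re.compile(r"\battach\w*", re.IGNORECASE)


def needs_attachment_reminder(body, attachments):
    """True if the message mentions attaching something but carries no files."""
    return bool(ATTACH_PATTERN.search(body)) and not attachments


print(needs_attachment_reminder("See the attached report.", []))
print(needs_attachment_reminder("See the attached report.", ["report.pdf"]))
```

The important design point is when the check runs: at the moment of sending, just before the user would otherwise feel closure and move on.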

On one banking site, when users transfer money from their savings accounts to their checking accounts, they often think the transaction is complete when it is actually not. This is because, at the bottom right-hand corner of the last page in this transaction workflow, just below the “fold” on the screen, there is a small button labeled Confirm that is often completely missed.

Users close the window and go about their business of paying bills, unaware that they are possibly heading toward an overdraft. At the very least, they should have gotten a pop-up message reminding them to click the Confirm button before being allowed to log out of the Website.

Later, when one of the users called the bank to complain, they politely declined his suggestion that they should pay the overdraft fee because of their liability due to poor usability. We suspect they must have gotten other such complaints, however, because the flaw was fixed in the next version.

As a final example of avoiding transaction completion slips, we cite a microwave oven. Because it takes time to defrost or cook the food, users often start it and do something else while waiting. Then, depending on the level of hunger, it is possible to forget to take out the food when it is done.

So microwave designers usually include a reminder. At completion, the microwave usually beeps to signal the end of its part of the task. However, a user who has left the room or is otherwise occupied when it beeps may still not be mindful of the food waiting in the microwave. As a result, some oven designs have an additional aspect to the feature for avoiding this classic slip. The beep repeats periodically until the door is opened to retrieve the food.

The design for one particular microwave, however, took this too far. It did not wait long enough for the follow-up beep. Sometimes a user would be on the way to remove the food and it would beep. Some users found this so irritating that they would hurry to rescue the food before that “reminder” beep. To them, this machine seemed to be “impatient” and “bossy” to the point that it had been controlling the users by making them hurry.
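The repeated-reminder behavior, including the delay that this particular oven got wrong, can be sketched as a schedule of beep times. The interval and the cap on reminders below are assumed values for illustration, not figures from any real oven.

```python
def beep_times(door_opened_after, interval=60, max_reminders=5):
    """Seconds after completion at which the oven beeps.

    The completion beep at 0 always sounds. Reminder beeps follow every
    `interval` seconds, stopping once the door has been opened or after
    `max_reminders` reminders.
    """
    times = [0]
    for k in range(1, max_reminders + 1):
        t = k * interval
        if t >= door_opened_after:
            break
        times.append(t)
    return times


print(beep_times(door_opened_after=150))
print(beep_times(door_opened_after=20))
```

A longer `interval` gives a user who is already on the way time to reach the oven before the first reminder; too short an interval produces the “impatient” behavior described above.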

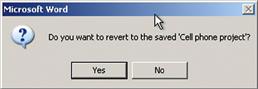

22.6 Translation

Translation guidelines are to support users in sensory and cognitive actions needed to determine how to do a task step in terms of what actions to make on which objects and how. Translation, along with assessment, is one of the places in the Interaction Cycle where cognitive affordances play the major role.

Many of the principles and guidelines apply to more than one part of the Interaction Cycle and, therefore, to more than one section of this chapter. For example, “Use consistent wording” is a guideline that applies to several parts of the Interaction Cycle: planning, translation, and assessment. Rather than repeat such guidelines, we will put each in the most pertinent location and hope that our readers recognize the broader applicability.

Translation issues include:

- existence (of cognitive affordance)
- presentation (of cognitive affordance)

22.6.1 Existence of Cognitive Affordance

Figure 22-6 highlights the “existence of cognitive affordance” part within the breakdown of the translation part of the Interaction Cycle.

Figure 22-6 Existence of a cognitive affordance within translation.

If interaction designers do not provide needed cognitive affordances, such as labels and other cues, users will lack the support they need for learning and knowing what actions to make on what objects in order to carry out their task intentions. The existence of cognitive affordances is necessary to:

- show which user interface object to manipulate
- show how to manipulate an object
- help users get started in a task
- guide data entry in formatted fields
- indicate active defaults to suggest choices and values
- indicate system states, modes, and parameters
- remind about steps the user might forget

Provide effective cognitive affordances that help users get access to system functionality

Support users’ cognitive needs to determine how to do something by ensuring the existence of an appropriate cognitive affordance. Not giving feed-forward cognitive affordances, cues such as labels, data field formats, and icons, is what Cooper (2004, p. 140) calls “uninformed consent”; the user must proceed without understanding the consequences.

Help users know/learn what actions are needed to carry out intentions

It is possible to build in effective cognitive affordances that help novice users and do not get in the way of experienced users.

Help users know how to do something at action/object level

Users get their operational knowledge from experience, training, and cognitive affordances in the design. It is our job to provide this latter source of user knowledge.

Help users predict outcome of actions

Users need feed-forward in cognitive affordances that explains the consequences of physical actions, such as clicking on a button.

Help users determine what to do to get started

Users need support in understanding what actions to take for the first step of a particular task, the “getting started” step, often the most difficult part of a task.

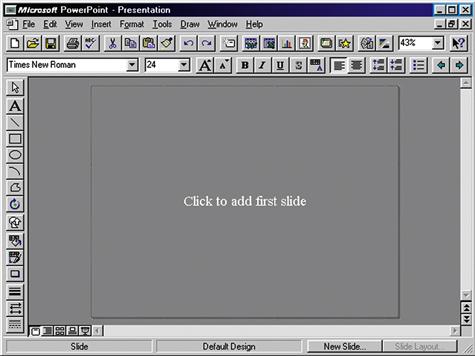

In Figure 22-7 there is a start-up screen of an early version of Microsoft PowerPoint. In applications where there is a wide variety of things a user can do, it is difficult to know what to do to get started when faced with a blank screen. The addition of one simple cognitive and physical affordance combination, Click to add first slide, provides an easy way for an uncertain user to get started in creating a presentation.

Figure 22-7 Help in getting started in PowerPoint (screen image courtesy of Tobias Frans-Jan Theebe).

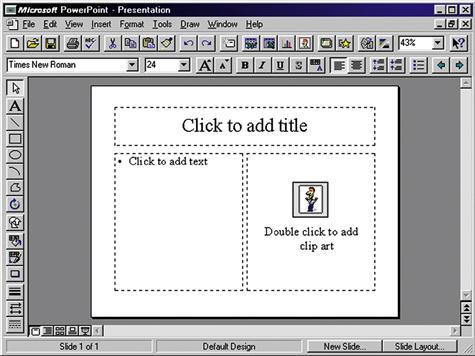

Similarly, in Figure 22-8 we show other such helpful cues to continue, once a new slide is begun.

Figure 22-8 More help in getting started (screen image courtesy of Tobias Frans-Jan Theebe).

Provide a cognitive affordance for a step the user might forget

Support user needs with cognitive affordances as prompts, reminders, cues, or warnings for a particular needed action that might get forgotten.

22.6.2 Presentation of Cognitive Affordance

In Figure 22-9, we highlight the “presentation of cognitive affordance” portion of the translation part of the Interaction Cycle.

Figure 22-9 Presentation of cognitive affordances within translation.

Presentation of cognitive affordances is about how cognitive affordances appear to users, not how they convey meaning. Users must be able to sense, for example, see or hear, a cognitive affordance before it can be useful to them.

Support user with effective sensory affordances in presentation of cognitive affordances

Support user sensory needs in seeing and hearing cognitive affordances by effective presentation or appearance. This category is about issues such as legibility, noticeability, timing of presentation, layout, spatial grouping, complexity, consistency, and presentation medium, for example, audio, when needed. Sensory affordance issues also include text legibility and content contained in the appearance of a graphical feature, such as an icon, but only about whether the icon can be seen or discerned easily. For an audio medium, the volume and sound quality are presentation characteristics.

Cognitive affordance visibility

Obviously a cognitive affordance cannot be an effective cue if it cannot be seen or heard when it is needed. Our first guideline in this category is conveyed by the sign in Figure 22-10, if only we could be sure what it means.

Make cognitive affordances visible

Figure 22-10 Good advice anytime.

If a cognitive affordance is invisible, it could be because it is not (yet) displayed or because it is occluded by another object. A user aware of the existence of the cognitive affordance can often take some actions to summon an invisible cognitive affordance into view. It is the designer’s job to be sure each cognitive affordance is visible, or easily made visible, when it is needed in the interaction.

This example is about a user (shopper) who we think would rate himself at least a little above the novice level in shopping at his local grocery store. But recently, on a trip to get some deodorant, he was forced to reconsider his rating when his quick-in-and-quick-out plan was totally foiled. First, the store had been completely remodeled so he could not rely on his memory of past organization. However, because he was looking for only one item, he was optimistic.

He made a fast pass down the center aisle, looking at the overhead signs in each side aisle for anything related to deodorant, but nothing matched his search goal. There were also some sub-aisles in a different configuration along the front of the store. He scanned those aisles unsuccessfully. Although the rubber on his shopping cart tires was burning, he felt his fast-shopping plan slipping away, so he did what no guy wants to do: he asked for directions.

The clerk said, “Oh, it is right over there,” pointing to one of the upfront aisles that he had just scanned. “But I do not see any sign for deodorant,” he whined, silently blaming himself, the user, for the inability to see a sign that must have been somewhere right there in front of him. “Oh, yeah, there is a sign,” she replied (condescendingly, he thought), “you just have to get up real close and look right behind that panel on the top of the end shelf.” Figure 22-11 shows what that panel looked like to someone scanning these upfront aisles.

Figure 22-11 Aesthetic panel blocks visibility of sign as cognitive affordance.

In Figure 22-12 you can see the “deodorant” sign revealed if you “just get up real close and look right behind that panel on the top of the end shelf.”

Figure 22-12 The sign is visible if you look carefully.

The nice aesthetic end panels added much to the beauty of the shopping experience, but were put in a location that exactly blocked the “deodorant” sign and others, rendering that important cognitive affordance invisible from most perspectives in the store.

When our now-humbled shopper reminded the store clerk that this design violated the guideline for visibility for presentation of cognitive affordances, he was encouraged that he had reached her interaction design sensibilities when he overheard her hushed retort, “Whatever!” He left thinking, “That went well.”

Cognitive affordance noticeability

Make cognitive affordances noticeable

When a needed cognitive affordance exists and is visible, the next consideration is its noticeability or likelihood of being noticed or sensed. Just putting a cognitive affordance on the screen is not enough, especially if the user does not necessarily know it exists or is not necessarily looking for it. These design issues are largely about supporting awareness. Relevant cognitive affordances should come to users’ attention without users seeking it. The primary design factor in this regard is location, putting the cognitive affordance within the users’ focus of attention. It is also about contrast, size, and layout complexity and their effect on separation of the cognitive affordance from the background and from the clutter of other user interface objects.

Message lines, status lines, and title lines at the top or bottom of the screen are notoriously unnoticeable. Each user typically has a narrow focus of attention, usually near where the cursor is located. A pop-up message next to the cursor will be far more noticeable than a message in a line at the bottom of the screen.

For some reason, many Websites have very small and inconspicuous log-in boxes, often mixed in with many objects most users do not even notice in the far top border of the page. Users have to waste time in searching visually over the whole page to find the way to log in.

Cognitive affordance legibility

Make text legible, readable

Text legibility is about being discernible, not about the words being understandable. Text presentation issues include the way the text of a button label is presented so it can be read or sensed, including such appearance or sensory characteristics as font type, font size, font and background color, bolding, or italics of the text, but it is not about the content or meaning of the words in the text. The meaning is the same regardless of the font or color.

Cognitive affordance presentation complexity

Control cognitive affordance presentation complexity with effective layout, organization, and grouping

Support user needs to locate and be aware of cognitive affordances by controlling layout complexity of user interface objects. Screen clutter can obscure needed cognitive affordances such as icons, prompt messages, state indicators, dialogue box components, or menus and make it difficult for users to find them.

Cognitive affordance presentation timing

Support user needs to notice cognitive affordance with appropriate timing of appearance or display of cognitive affordances. Do not present a cognitive affordance too early or too late or with inadequate persistence; that is, avoid “flashing.”

Present cognitive affordance in time for it to help the user before the associated action

Sometimes getting cognitive affordance presentation timing right means presenting at exactly the point in a task and under exactly the conditions when the cognitive affordance is needed.

Figures 22-13 and 22-14 are photographs of a paper towel dispenser in a public bathroom. They illustrate an example of a good design that involves just-in-time visibility of presentation of a cognitive affordance.

Figure 22-13 The primary cognitive affordance for taking a paper towel.

Figure 22-14 The backup cognitive affordance to help start a new paper towel.

In Figure 22-13, the next available towel is visible and the cognitive affordance in the sketch on the cover of the dispenser clearly says “Pull the towel down with both hands.”

In Figure 22-14 you can see how designers covered the case where the next towel failed to drop down so users cannot grab it. Now a different user action is needed to get a towel, so a different cognitive affordance is required.

Designers provided this new cognitive affordance, telling the user to Push the lever to get the next towel started down into position. When a towel is already in place, this second cognitive affordance is not needed and is not visible, being obscured by the towel, but it becomes visible just when it is needed.

When a user wishes to paste something from one Word document to another, there can be a question about formatting. Will the item retain its formatting, such as text or paragraph style, from the original document or will it adopt the formatting from the place of insertion in the new document? And how can the choice be controlled by the user? When you want more control of a paste operation, you might choose Paste Special … from the Edit menu.

But the choices in the Paste Special dialogue box say nothing about controlling formatting. Rather, the choices can seem too technical or system centered, for example, Microsoft Office Word Document Object or Unformatted Unicode Text, without an explanation of the resulting effect in the document. While these choices might be precise about the action and its results to some users, they are cryptic even to most regular users.

In recent versions of Word, a small cognitive affordance, a tiny clipboard icon with a pop-up label Paste Options, appears, but it appears after the paste operation. Many users do not notice this little object, mainly because by the time it appears, they have experienced closure on the paste operation and have already moved on mentally to the next task. If they do not like the resulting formatting, then changing it manually becomes their next task.

Even if users do notice the little object, it is possible they might confuse it with something to do with undoing the action or something similar because Word uses that same object for in-context undo. However, if a user does notice this icon and does take the time to click on it, that user will be rewarded with a pull-down menu of useful options, such as Keep Source Formatting, Match Destination Formatting, Keep Text Only, plus a choice to see a full selection of other formatting styles.

Just what users need! But it is made available too late; the chance to see this menu comes after the user action to which it applied. If choices on this after-the-fact menu were available on the Paste Special menu, it would be perfect for users.

Cognitive affordance presentation consistency

When a cognitive affordance is located within a user interface object that is also manipulated by physical actions, such as a label within a button, maintaining a consistent location of that object on the screen helps users find it quickly and helps them use muscle memory for fast clicking. Hansen (1971) used the term “display inertia” in reference to one of his top-level principles, optimize operations, to describe this business of minimizing display changes in response to user inputs, including displaying a given user interface object in the same place each time it is shown.

Give similar cognitive affordances consistent appearance in presentation

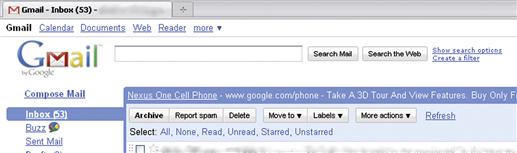

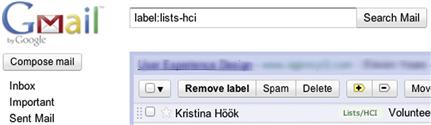

When users of an older version of Gmail were viewing the list of messages in the Inbox, the Archive button was at the far left at the top of the message pane, set off by the blue border, as shown in Figure 22-15.

Figure 22-15 The Archive button in the Inbox view of an older version of Gmail.

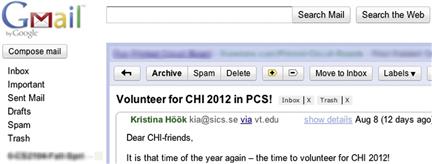

But on the screen for reading a message, Gmail had the Archive button as the second object from the left at the top. In the place where the Archive button was earlier, there was now a Back to Inbox link, as seen in Figure 22-16. Using a link instead of a button in this position is a slight inconsistency, probably without much effect on users. But users feel a larger effect from the inconsistent placement of the Archive button.

Figure 22-16 The Archive button in a different place in the message reading view.

Selected messages can be archived from either view of the email by clicking on the Archive button. Further, when archiving messages from the Inbox list view, the user sometimes goes to the message-reading view to be sure. So a user doing an archiving task could be going back and forth between the Inbox listing of Figure 22-15 and message viewing of Figure 22-16.

For this activity, the location of the Archive button is never certain. The user loses momentum and performance speed by having to look for the Archive button each time before clicking on it. Even though it moves only a short distance between the two views, it is enough to slow down users significantly because they cannot run the cursor up to the same spot every time to do multiple archive actions quickly. The lack of display inertia works against an efficient sensory action of finding the button and it works against muscle memory in making the physical action of moving the cursor up to click.

It seems that Google's designers fixed this problem in subsequent versions, as attested to by the same kinds of screens in Figures 22-17 and 22-18.

Figure 22-17 The Archive button in the Inbox view of a later version of Gmail.

Figure 22-18 The Archive button in the same place in the new message reading view.
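A check for this kind of placement inconsistency can be sketched in a few lines. The following is a minimal illustration, not a description of how Gmail or any real toolkit works: it assumes each view can be described as a left-to-right list of control names, and the function name and data layout are hypothetical.

```python
from typing import Dict, List


def inconsistent_controls(layouts: Dict[str, List[str]]) -> Dict[str, Dict[str, int]]:
    """Return controls whose left-to-right position differs between views.

    `layouts` maps a view name to its ordered list of control names
    (a hypothetical, simplified description of a toolbar).
    """
    positions: Dict[str, Dict[str, int]] = {}
    for view, controls in layouts.items():
        for index, control in enumerate(controls):
            positions.setdefault(control, {})[view] = index
    # A control is flagged only if it appears at more than one distinct position.
    return {
        control: by_view
        for control, by_view in positions.items()
        if len(set(by_view.values())) > 1
    }


# Only the controls mentioned in the text are modeled here.
older_layout = {
    "inbox": ["Archive"],
    "message": ["Back to Inbox", "Archive"],
}
print(inconsistent_controls(older_layout))
```

For the older layout described above, the check flags Archive because it sits at the first position in one view and the second in the other. A control that appears in only one view, such as Back to Inbox, is not flagged.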

22.6.3 Content and Meaning of Cognitive Affordance

Just what part of quantum theory do you not understand?

– Anonymous

Figure 22-19 highlights the “content and meaning of cognitive affordance” portion of the translation part of the Interaction Cycle.

Figure 22-19 Content/meaning within translation.

The content and meaning of a cognitive affordance are the knowledge it must convey to users to help them think, learn, and know what they need in order to make correct actions. The cognitive affordance design concepts that support understanding of content and meaning include clarity, distinguishability from other cognitive affordances, consistency, layout and grouping to control complexity, usage centeredness, and techniques for avoiding errors.

Help user determine actions with effective content/meaning in cognitive affordances

Support user ability to determine what action(s) to make and on what object(s) for a task step through understanding and comprehension of cognitive affordance content and meaning: what it says, verbally or graphically.

Clarity of cognitive affordances

Design cognitive affordances for clarity

Use precise wording, carefully chosen vocabulary, or meaningful graphics to create correct, complete, and sufficient expressions of content and meaning of cognitive affordances.

Precise wording

Support user understanding of cognitive affordance content by precise expression of meaning through precise word choices. Clarity is especially important for short, command-like text, such as is found in button labels, menu choices, and verbal prompts. For example, the button label to dismiss a dialogue box could say Return to …, where appropriate, instead of just OK.

Use precise wording in labels, menu titles, menu choices, icons, data fields

The imperative for clear and precise wording of button labels, menu choices, messages, and other text may seem obvious, at least in the abstract. However, experienced practitioners know that designers often do not take the time to choose their words carefully.

In our own evaluation experience, this guideline is among the most violated in real-world practice. Others have shared this experience, including Johnson (2000). Because of the overwhelming importance of precise wording in interaction designs and the apparent unmindful approach to wording by many designers in practice, we consider this to be one of the most important guidelines in the whole book.

Part of the problem in the field is that wording is often considered a relatively unimportant part of interaction design and is assigned to developers and software people not trained to construct precise wording and not even trained to think much about it.

This is one of our favorite examples of precise wording, probably overdone: “Wet Paint. This is a warning, not an instruction.”

This guideline represents a part of interaction design where a great improvement can be accrued for only a small investment of extra time and effort. Even a few minutes devoted to getting just the right wording for a button label used frequently has an enormous potential payoff. Here are some related and helpful sub-guidelines:

Use a verb and noun and even an adjective in labels where appropriate

Avoid vague, ambiguous terms

Be as specific to the interaction situation as possible; avoid one-size-fits-all messages

Clearly represent work domain concepts

As an example of matching the message to the reality of the work domain, signs such as “Keep this door closed at all times” probably should read something more like “Close this door immediately after use.”

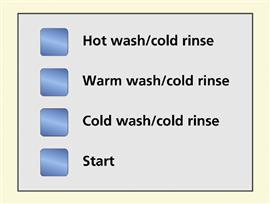

Use dynamically changing labels when toggling

When using the same control object, such as a Play/Pause button on an mp3 music player, to control the toggling of a system state, change the object label to show that it is consistently a control to get to the next state. Otherwise the current system state can be unclear and there can be confusion over whether the label represents an action the user can make or feedback about the current system state.

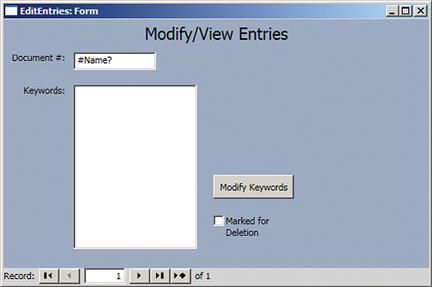

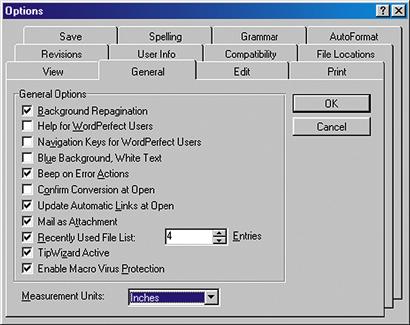

In Figure 22-20 we show an early prototype of a personal document retrieval system. The underlying model for deleting a document involves two steps: marking the document for deletion and later deleting all marked documents permanently. The small check box at the lower right is labeled: Marked for Deletion.

Figure 22-20 The Marked for Deletion check box in a document retrieval screen

(screen image courtesy of Raphael Summers).

The designer’s idea was that users would check that box to signify the intention to delete the record. Thereafter, until a permanent purge of marked records, seeing a check in this box signifies that this record is, indeed, marked for deletion. The problem comes before the user checks the box.

The user wants to delete the record (or at least mark it for deletion), but this label seems to be a statement of system state rather than a cognitive affordance for an action, implying that it is already marked for deletion. However, because the check box is not checked, it is not entirely clear. Our suggestion was to re-label the box in the unchecked state to read: Check to mark for deletion, making it a true cognitive affordance for action in this state. After checking, Marked for Deletion works fine.
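The relabeling suggested here amounts to choosing the label from the current state. The sketch below is only an illustration under that assumption; the function names are hypothetical, and the Play/Pause wording follows the usual convention of naming the action that leads to the next state.

```python
def deletion_checkbox_label(marked: bool) -> str:
    """Label for the check box in each state.

    Unchecked: a cognitive affordance for the action the user can take.
    Checked: a statement of the current system state.
    """
    return "Marked for Deletion" if marked else "Check to mark for deletion"


def play_pause_label(playing: bool) -> str:
    """Label a toggle button with the action that leads to the next state."""
    return "Pause" if playing else "Play"


print(deletion_checkbox_label(False))  # label shown before the user checks the box
print(deletion_checkbox_label(True))   # label shown after checking
```

Keeping the label logic in one small function makes it harder for the label and the underlying state to drift apart.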

Data value formats

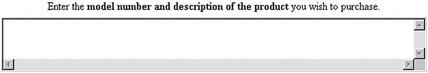

Support user needs to know how to enter data, such as in a form field, with a cognitive affordance or cue to help with format and kinds of values that are acceptable.

Provide cognitive affordances to indicate formatting within data fields

Data entry is a user work activity where the formatting of data values is an issue. Entry in the “wrong” format, meaning a format the user thinks is right but the system designers did not anticipate, can lead to errors that the user must spend time to resolve or, worse yet, to data errors that go undetected. It is relatively easy for designers to indicate expected data formats, with cognitive affordances associated with the field labels, with sample data values, or both.
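As a minimal sketch of this idea, the code below attaches a format cue and a sample value to a field label and repeats the same cue in the error message. The format MM/DD/YYYY, the sample value, and all names are assumptions chosen for illustration, not taken from any particular application.

```python
from datetime import date, datetime

# Assumed format for this illustration; a real form would pick one
# appropriate to its users and locale.
DATE_HINT = "MM/DD/YYYY"
DATE_SAMPLE = "03/15/2025"
_STRPTIME_PATTERN = "%m/%d/%Y"


def field_label(name: str) -> str:
    """Build a field label that shows the expected format and a sample value."""
    return f"{name} ({DATE_HINT}, e.g., {DATE_SAMPLE})"


def parse_date_field(text: str) -> date:
    """Parse the field, repeating the format cue if the entry is rejected."""
    try:
        return datetime.strptime(text.strip(), _STRPTIME_PATTERN).date()
    except ValueError:
        raise ValueError(
            f"Enter the date as {DATE_HINT}, for example {DATE_SAMPLE}."
        ) from None


print(field_label("Effective Date"))
print(parse_date_field("03/15/2025"))
```

Deriving both the label and the error message from the same constants keeps the cue and the validation rule from contradicting each other.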

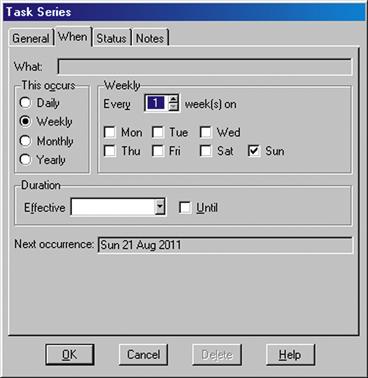

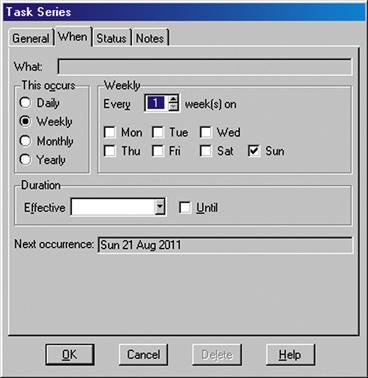

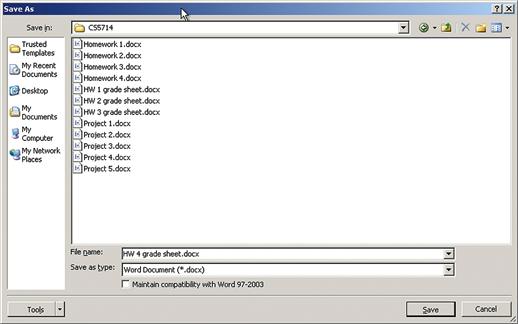

In Figure 22-21 we show a dialogue box that appears in an application that is, despite the cryptic title Task Series, for scheduling events. In the Duration section, the Effective Date field does not indicate the expected format for data values. Although many systems are capable of accepting date values in almost any format, new or intermittent users may not know if this application is that smart. It would have been easy for the designer to save users from hesitation and uncertainty by suggesting a format here.

Figure 22-21 Missing cognitive affordance about Effective Date data field format

(screen image courtesy of Tobias Frans-Jan Theebe).

Constrain the formats of data values to avoid data entry errors

Sometimes rather than just show the format, it is more effective to constrain values so that they are acceptable as inputs.

An easy way to constrain the formatting of a date value, for example, is to use drop-down lists, specialized to hold values appropriate for the month, day, and year parts of the date field. Another approach that many users like is a “date picker,” a calendar that pops up when the user clicks on the date field. A date can be entered into the field only by way of selection from this calendar.

A calendar with one month of dates at a time is perhaps the most practical. Side arrows allow the user to navigate to earlier or later months or years. Clicking on a date within the month on the calendar causes that date to be picked for the value to be used. By using a date picker, you are constraining both the data entry method and the format the user must employ, effectively eliminating errors due to either inappropriate values or formatting ambiguity.
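The drop-down approach can be sketched as follows. This is an illustrative outline only, with hypothetical function names: it generates the day choices that are valid for the selected month and year, so that an impossible date such as February 30 never appears as an option.

```python
import calendar
from datetime import date
from typing import List


def month_choices() -> List[int]:
    """Month numbers to offer in the month drop-down list."""
    return list(range(1, 13))


def day_choices(year: int, month: int) -> List[int]:
    """Day numbers valid for the selected month and year."""
    _, days_in_month = calendar.monthrange(year, month)
    return list(range(1, days_in_month + 1))


def selected_date(year: int, month: int, day: int) -> date:
    """Combine the three selections; reject a day that was never offered."""
    if day not in day_choices(year, month):
        raise ValueError("Day is not among the choices for this month.")
    return date(year, month, day)


print(day_choices(2024, 2)[-1])  # last day offered for February 2024
```

Because the user can only select from generated choices, there is no free-form text to misformat, which is the same constraint a pop-up date picker provides.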

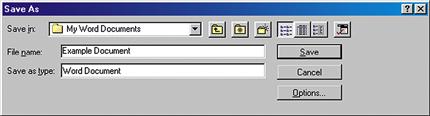

Provide clearly marked exits

Support user ability to exit dialogue sequences confidently by using clearly labeled exits. Include destination information to help user predict where the action will go upon leaving the current dialogue sequence. For example, in a dialogue box you might use Return to XYZ after saving instead of OK and Return to XYZ without saving instead of Cancel.

To qualify this example, we have to say that the terms OK and Cancel are so well accepted and so thoroughly part of our current shared conventions that, even though the example shows potentially better wordings, the conventions now carry the same meaning at least to experienced users.

Provide clear “do it” mechanism

Some kinds of choice-making objects, such as drop-down or pop-up menus, commit to the choice as soon as the user indicates the choice; others require a separate “commit to this choice” action. This inconsistency can be unsettling for some users who are unsure about whether their choices have “taken.” Becker (2004) argues for a consistent use of a “Go” action, such as a click, to commit to choices, for example, choices made in a dialogue box or drop-down menu. We also caution designers to make its usage clear, to avoid task completion slips in which users think they have finished making a menu choice, for example, and move on without committing to it with the Go button.

Be predictable; help users predict the outcome of actions with feed-forward information in cognitive affordances. Predictability helps both learning and error avoidance.

Distinguishability of choices in cognitive affordances

Make choices distinguishable

Support user ability to differentiate two or more possible choices or actions by distinguishable expressions of meaning in their cognitive affordances. If two similar cognitive affordances lead to different outcomes, careful design is needed so users can avoid errors by distinguishing the cases.

Often distinguishability is the key to correct user choices by the process of elimination; if you provide enough information to rule out the cases not wanted, users will be able to make the correct choice. Focus on differences of meaning in the wording of names and labels. Make larger differences graphically in similar icons.
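One rough way to screen a set of labels for near-duplicates is a string similarity check. The sketch below uses Python's standard `difflib.SequenceMatcher`; the threshold of 0.8 and the sample labels are arbitrary assumptions. Textual similarity is only a crude proxy for what users will actually confuse, so a check like this supplements evaluation with users and does not replace it.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import List, Tuple


def confusable_pairs(labels: List[str], threshold: float = 0.8) -> List[Tuple[str, str]]:
    """Return pairs of labels whose wording is at least `threshold` similar.

    The threshold is an arbitrary starting point, not an established value.
    """
    pairs = []
    for first, second in combinations(labels, 2):
        similarity = SequenceMatcher(None, first.lower(), second.lower()).ratio()
        if similarity >= threshold:
            pairs.append((first, second))
    return pairs


# Hypothetical labels for illustration.
labels = ["Delete draft", "Delete drafts", "Archive"]
print(confusable_pairs(labels))
```

Pairs flagged this way are candidates for rewording so that the difference in meaning shows up as a visible difference in the labels.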

This unfortunate but true story shows that human lives can be lost because of simple confusion over the labeling of controls. The case is the tragic October 31, 1999 crash of EgyptAir Flight 990 (Acohido, 1999), possibly a result of poor usability in design. According to the news account, the pilot may have been confused by two sets of switches that were similar in appearance, labeled very similarly as Cut out and Cut off, and located relatively close to each other in the Boeing 767 cockpit design.

Exacerbating the situation, both switches are used infrequently, only under unusual flight conditions. This latter point is important because it means that the pilots would not have been experienced in using either one. Knowing pilots receive extensive training, designers assumed their users are experts. But because these particular controls are rarely used, most pilots are novices in their use, implying the need for more effective cognitive affordances than usual.

One conjecture is that one of the flight crew attempted to pull the plane out of an unexpected dive by setting the Cut out switches connected to the stabilizer trim but instead accidentally set the Cut off switches, shutting off fuel to both engines. The black box flight recorder confirmed that the plane went into a sudden dive and that a pilot flipped the fuel system cutoff switches soon thereafter.

There seem to be two critical design issues, the first of which is the distinguishability of the labeling, especially under conditions of stress and infrequent use. To us, not knowledgeable in piloting large planes, the two labels seem so similar as to be virtually synonymous.