Chapter 21

The Interaction Cycle and the User Action Framework

Objectives

After reading this chapter, you will:

1. Understand Norman’s stages-of-action model of interaction

2. Understand the gulf of execution and the gulf of evaluation and their importance in interaction design

3. Understand the basic concepts of the Interaction Cycle and the User Action Framework (UAF)

4. Know the stages of user actions within the Interaction Cycle

21.1 Introduction

21.1.1 Interaction Cycle and User Action Framework (UAF)

The Interaction Cycle is our adaptation of Norman’s “stages-of-action” model (Norman, 1986) that characterizes sequences of user actions typically occurring in interaction between a human user and almost any kind of machine. The User Action Framework (Andre et al., 2001) is a structured knowledge base containing information about UX design, concepts, and issues.

Within each part of the UAF, the knowledge base is organized by immediate user intentions involving sensory, cognitive, or physical actions. Below that level the organization follows principles and guidelines and becomes more detailed and more particularized to specific design situations as one goes deeper into the structure.

To clarify the distinction, the Interaction Cycle is a representation of user interaction sequences and the User Action Framework is a knowledge base of interaction design concepts, the top level of which is organized as the stages of the Interaction Cycle.

21.1.2 Need for a Theory-Based Conceptual Framework

As Gray and Salzman (1998, p. 241) have noted, “To the naïve observer it might seem obvious that the field of HCI would have a set of common categories with which to discuss one of its most basic concepts: Usability. We do not. Instead we have a hodgepodge collection of do-it-yourself categories and various collections of rules-of-thumb.”

As Gray and Salzman (1998) continue, “Developing a common categorization scheme, preferably one grounded in theory, would allow us to compare types of usability problems across different types of software and interfaces.” We believe that the Interaction Cycle and User Action Framework help meet this need. They are an attempt to provide UX practitioners with a way to frame design issues and UX problem data within the structure of how designs support user actions and intentions.

As Lohse et al. (1994) state, “Classification lies at the heart of every scientific field. Classifications structure domains of systematic inquiry and provide concepts for developing theories to identify anomalies and to predict future research needs.” The UAF is such a classification structure for UX design concepts, issues, and principles, designed to:

- Give structure to the large number of interaction design principles, issues, and concepts
- Offer a more standardized vocabulary for UX practitioners in discussing interaction design situations and UX problems
- Provide the basis for more thorough and accurate UX problem analysis and diagnosis
- Foster precision and completeness of UX problem reports based on essential distinguishing characteristics

Although we include a few examples of design and UX problem issues in this chapter to illustrate aspects and categories of the UAF, most such examples appear with the design guidelines (Chapter 22), which are organized on the UAF structure.

21.2 The interaction cycle

21.2.1 Norman’s Stages-of-Action Model of Interaction

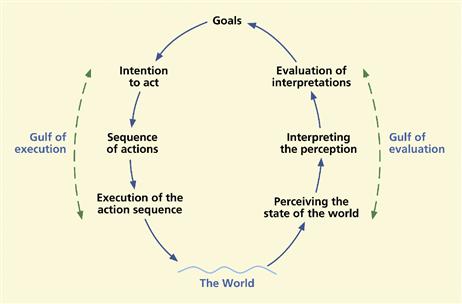

Norman’s stages-of-action model, illustrated in Figure 21-1, shows a generic view of a typical sequence of user actions as a user interacts with almost any kind of machine.

Figure 21-1 Norman’s (1990) stages-of-action model, adapted with permission.

The stages of action naturally divide into three major kinds of user activity. On the execution (Figure 21-1, left) side, the user typically begins at the top of the figure by establishing a goal, decomposing goals into tasks and intentions, and mapping intentions to action sequence specifications. The user manipulates system controls by executing the physical actions (Figure 21-1, bottom left), which cause internal system state changes (outcomes) in the world (the system) at the bottom of the figure.

On the evaluation (Figure 21-1, right) side, users sense feedback from the system (state changes in “the world” or the system) to perceive the system state, and then interpret and evaluate the outcomes with respect to goals and intentions. Interaction success is evaluated by comparing outcomes with the original goals. The interaction is successful if the actions in the cycle so far have brought the user closer to the goals.

Norman’s model, along with the structure of the analytic evaluation method called the cognitive walkthrough (Lewis et al., 1990), had an essential influence on our Interaction Cycle. Both ask questions about whether the user can determine what to do with the system to achieve a goal in the work domain, how to do it in terms of user actions, how easily the user can perform the required physical actions, and (to a lesser extent in the cognitive walkthrough method) how well the user can tell whether the actions were successful in moving toward task completion.

21.2.2 Gulfs between User and System

Originally conceived by Hutchins, Hollan, and Norman (1986), the gulfs of execution and evaluation were described further by Norman (1986). The two gulfs represent places where interaction can be most difficult for users and where designers need to pay special attention to helping users. In the gulf of execution, users need help in knowing which actions to take on which objects. In the gulf of evaluation, users need help in knowing whether their actions had the expected outcomes.

The gulf of execution

The gulf of execution, on the left-hand side of the stages-of-action model in Figure 21-1, is a kind of language gap, from user to system. The user thinks of goals in the language of the work domain. In order to act upon the system to pursue these goals, the user’s intentions in the work domain language must be translated into the language of physical actions and the physical system.

As a simple example, consider a user composing a letter with a word processor. The letter is a work domain element, and the word processor is part of the system. The work domain goal of “creating a permanent record of the letter” translates to the system domain intention of “saving the file,” which translates to the action sequence of “clicking on the Save icon.” A mapping or translation between the two domains is needed to bridge the gulf.

Let us revisit the example of a thermostat on a furnace from Chapter 8. Suppose that a user is feeling chilly while sitting at home. The user formulates a simple goal, expressed in the language of the work domain (in this case the daily living domain), “to feel warmer.” To meet this goal, something must happen in the physical system domain. The ignition function and air blower, for example, of the furnace must be activated. To achieve this outcome in the physical domain, the user must translate the work domain goal into an action sequence in the physical (system) domain, namely to set the thermostat to the desired temperature.

The gulf of execution lies between the user knowing the effect that is desired and what to do to the system to make it happen. In this example, there is a cognitive disconnect for the user in that the physical variables to be controlled (burning fuel and blowing air) are not the ones the user cares about (being warm). The gulf of execution can be bridged from either direction—from the user and/or from the system. Bridging from the user’s side means teaching the user about what has to happen in the system to achieve goals in the work domain. Bridging from the system’s side means building in help to support the user by hiding the need for translation, keeping the problem couched in work domain language. The thermostat does a little of each—its operation depends on a shared knowledge of how thermostats work but it also shows a way to set the temperature visually.

To avoid having to train all users, the interaction designer can take responsibility for bridging the gulf of execution from the system’s side through an effective conceptual design that helps the user form a correct mental model. Failure of the interaction design to bridge the gulf of execution will be evidenced in observations of hesitation or task blockage before a user action because the user does not know what action to take or cannot predict the consequences of taking an action.

In the thermostat example the gap is not very large, but this next example is a definitive illustration of the language differences between the work domain and the physical system. This example is about a toaster that we observed at a hotel brunch buffet. Users put bread on the input side of a conveyor belt going into the toaster system. Inside were overhead heating coils and the bread came out the other end as toast.

The machine had a single control, a knob labeled Speed with additional labels for Faster (clockwise rotation of the knob) and Slower (counterclockwise rotation). A slower moving belt makes darker toast because the bread is under the heating coils longer; faster movement means lighter toast. Even though this concept is simple, there was a distinct language gulf and it led to a bit of confusion and discussion on the part of users we observed. The concept of speed somehow just did not match their mental model of toast making. We even heard one person ask “Why do we have a knob to control toaster speed? Why would anyone want to wait to make toast slowly when they could get it faster?” The knob had been labeled with the language of the system’s physical control domain.

Indeed, the knob did make the belt move faster or slower. But the user does not really care about the physics of the system domain; the user is living in the work domain of making toast. In that domain, the system control terms translate to “lighter” and “darker.” These terms would have made a much more effective design for knob labels by helping bridge the gulf of execution from the system toward the user.
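As a minimal sketch of bridging the gulf of execution from the system side, the following Python fragment accepts a setting in work domain terms (how dark the toast should be) and does the translation to belt speed internally. The function name, the five-level darkness scale, and the speed values are hypothetical and are not taken from the toaster we observed.

```python
# Hypothetical values for illustration only.
MIN_SPEED_CM_PER_S = 1.0   # slowest belt: bread stays under the coils longest
MAX_SPEED_CM_PER_S = 5.0   # fastest belt: bread passes the coils quickly
DARKNESS_LEVELS = 5        # 1 = lightest toast, 5 = darkest toast


def belt_speed_for_darkness(darkness: int) -> float:
    """Translate a work domain setting (darkness) into a system domain value (belt speed)."""
    if not 1 <= darkness <= DARKNESS_LEVELS:
        raise ValueError(f"darkness must be between 1 and {DARKNESS_LEVELS}")
    # Darker toast needs a slower belt, so speed falls as darkness rises.
    fraction = (darkness - 1) / (DARKNESS_LEVELS - 1)
    return MAX_SPEED_CM_PER_S - fraction * (MAX_SPEED_CM_PER_S - MIN_SPEED_CM_PER_S)
```

With this arrangement the knob can be labeled “lighter” and “darker,” and the user never has to reason about belt speed at all.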

The gulf of evaluation

The gulf of evaluation, on the right side of the stages-of-action model in Figure 21-1, is the same kind of language gap, only in the other direction. The ability of users to assess outcomes of their actions depends on how well the interaction design supports their comprehension of the system state through their understanding of system feedback.

System state is a function of internal system variables. It is the job of the interaction designer, who creates the display of system feedback, to bridge the gulf by translating a description of system state into the language of the user’s work domain so that outcomes can be compared with the goals and intentions to assess the success of the interaction.

Failure of the interaction design to bridge the gulf of evaluation will be evidenced in observations of hesitation or task blockage after a user action because the user does not understand feedback and does not fully know what happened as a result of the action.

21.2.3 From Norman’s Model to Our Interaction Cycle

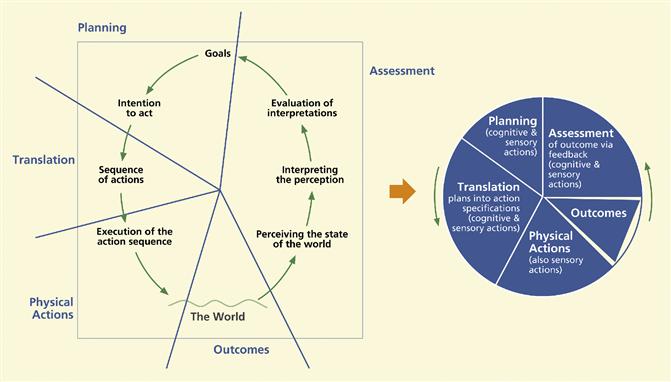

We adapted and extended Norman’s theory of action model of Figure 21-1 into what we call the Interaction Cycle, which is also a model of user actions that occur in typical sequences of interaction between a human user and a machine.

Partitioning the model

Because the early part of Norman’s execution side was about planning of goals and intentions, we call it the planning part of the cycle. Planning includes formulating goal and task hierarchies, as well as decomposition and identification of specific intentions.

Planning is followed by formulation of the specific actions (on the system) to carry out each intention, a cognitive action we call translation. Because of the special importance to the interaction designer of describing action and the special importance to the user of knowing these actions, we made translation a separate part of the Interaction Cycle.

Norman’s “execution of the action sequence” component maps directly into what we call the physical actions part of the Interaction Cycle. Because Norman’s evaluation side is where users assess the outcome of each physical action based on system feedback, we call it the assessment part.

Adding outcomes and system response

Finally, we added the concepts of outcomes and a system response, resulting in the mapping to the Interaction Cycle as shown in Figure 21-2. Outcomes is represented as a “floating” sector between physical actions and assessment in the Interaction Cycle because the Interaction Cycle is about user interaction and what happens in outcomes is entirely internal to the system and not part of what the user sees or does. The system response, which includes all system feedback and which occurs at the beginning of and as an input to the assessment part, tells users about the outcomes.

Figure 21-2 Transition from Norman’s model to our Interaction Cycle.

The resulting Interaction Cycle

We abstracted Norman’s stages into the basic kinds of user activities within our Interaction Cycle, as shown in Figure 21-2.

The importance of translation to the Interaction Cycle and its significance in design for a high-quality user experience is, in fact, so great that we made the relative sizes of the “wedges” of the Interaction Cycle parts represent the weight of this importance visually.

Example: Creating a Business Report as a Task within the Interaction Cycle

Let us say that the task of creating a quarterly report to management on the financial status of a company breaks down into these basic steps:

- Calculate monthly profits for last quarter
- Write summary, including graphs, to show company performance

In this kind of task decomposition it is common that some steps are more or less granular than others, meaning that some steps will decompose into more sub-steps and more details than others. As an example, Step 1 might decompose into these sub-steps:

- Call accounting department and ask for numbers for each month
- Create column headers in spreadsheet for expenses and revenues in each product category

The first step here, to open a spreadsheet program, might correspond to a simple pass through the Interaction Cycle. The second step is a non-system task that interrupts the workflow temporarily. The third and fourth steps could take several passes through the Interaction Cycle.

Let us look at the “Print report” task step within the Interaction Cycle. The first intention for this task is the “getting started intention”; the user intends to invoke the print function, taking the task from the work domain into the computer domain. In this particular case, the user does not do further planning at this point, expecting the feedback from acting on this intention to lead to the next natural intention.

To translate this first intention into an action specification in the language of the actions and objects within the computer interface, the user draws on experiential knowledge and/or the cognitive affordances (Chapter 20) provided by display of the Print choice in the File menu to create the action specification to select “Print…”. A more experienced user might translate the intention subconsciously or automatically to the short-cut actions of typing “Ctrl-P” or clicking on the Print icon.

The user then carries out this action specification by doing the corresponding physical action, the actual clicking on the Print menu choice. The system accepts the menu choice, changes state internally (the outcomes of the action), and displays the Print dialogue box as feedback. The user sees the feedback and uses it for assessment of the outcome so far. Because the dialogue box makes sense to the user at this point in the interaction, the outcome is considered to be favorable, that is, leading to accomplishment of the user’s intention and indicating successful planning and action so far.
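The pass through the Interaction Cycle just described can be written out as a short trace. This is only an illustrative sketch: the `Stage` enumeration, the `PRINT_PASS` list, and the `trace` function are names we assume here for the example, and the step descriptions paraphrase the text above.

```python
from enum import Enum


class Stage(Enum):
    PLANNING = "planning"
    TRANSLATION = "translation"
    PHYSICAL_ACTIONS = "physical actions"
    OUTCOMES = "outcomes"      # internal to the system; the user does not see this directly
    ASSESSMENT = "assessment"


# What happens at each stage of the "Print report" pass, paraphrased from the example.
PRINT_PASS = [
    (Stage.PLANNING, "user", "form the intention to invoke the print function"),
    (Stage.TRANSLATION, "user", "specify the action: select Print... in the File menu"),
    (Stage.PHYSICAL_ACTIONS, "user", "click the Print menu choice"),
    (Stage.OUTCOMES, "system", "accept the menu choice and change state internally"),
    (Stage.ASSESSMENT, "user", "see the Print dialogue box and judge the outcome favorable"),
]


def trace(steps):
    """Format each step of a pass as 'stage (actor): description'."""
    return [f"{stage.value} ({actor}): {text}" for stage, actor, text in steps]


for line in trace(PRINT_PASS):
    print(line)
```

Note that only the outcomes step belongs to the system; every other step is a cognitive, physical, or sensory action by the user.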

21.2.4 Cooperative User-System Task Performance within the Interaction Cycle

Primary tasks

Primary tasks are tasks that have direct work-related goals. The task in the previous example of creating a business report is a primary task. Primary tasks can be user initiated or initiated by the environment, the system, or other users. Primary tasks usually represent simple, linear paths through the Interaction Cycle.

User-initiated tasks. The usual linear path of user-system turn taking going counterclockwise around the Interaction Cycle represents a user-initiated task because it starts with user planning and translation.

Tasks initiated by environment, system, or other users. When a user task is initiated by events that occur outside that user’s Interaction Cycle, the user actions become reactive. The user’s cycle of interaction begins at the outcomes part, followed by the user sensing the subsequent system response in a feedback output display and thereafter reacting to it.

Path variations in the Interaction Cycle

For all but the simplest tasks, interaction can take alternative, possibly nonlinear, paths. Although user-initiated tasks usually begin with some kind of planning, Norman (1986) emphasizes that interaction does not necessarily follow a simple cycle of actions. Some activities will appear out of order or be omitted or repeated. In addition, the user’s interaction process can flow around the Interaction Cycle at almost any level of task/action granularity.

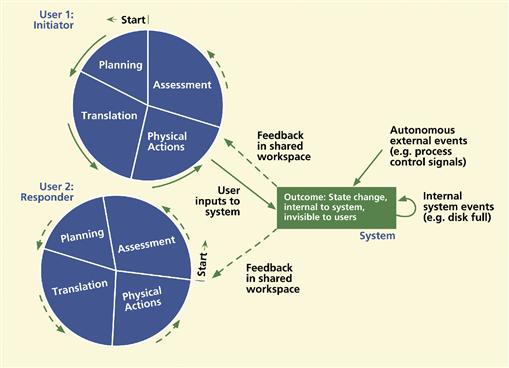

Multiuser tasks. When the Interaction Cycles of two or more users interleave in a cooperative work environment, one user will enter inputs through physical actions (Figure 21-3) and another user will assess (sense, interpret, and evaluate) the system response, as viewed in a shared workspace, revealing system outcomes due to the first user’s actions. For the user who initiated the exchange, the cycle begins with planning, but for the other users, interaction begins by sensing the system response and goes through assessment of what happened before it becomes that second user’s turn for planning for the next round of interaction.

Figure 21-3 Multiuser interaction, system events, and asynchronous external events within multiple Interaction Cycles.

Secondary tasks, intention shifts, and stacking

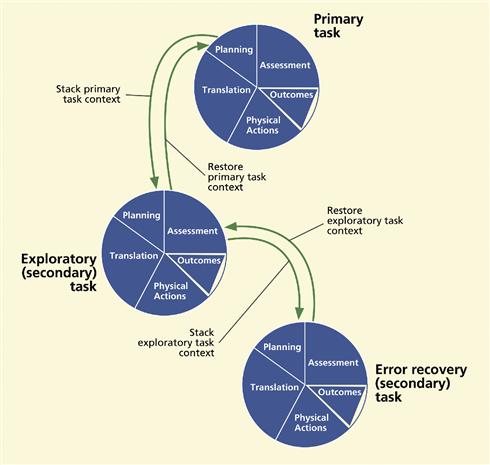

Secondary tasks and intention shifts. Kaur, Maiden, and Sutcliffe (1999), who based an inspection method primarily for virtual environment applications on Norman’s stages of action, recognized the need to interrupt primary work-oriented tasks with secondary tasks to accommodate intention shifts, exploration, information seeking, and error handling. Kaur, Maiden, and Sutcliffe (1999) created different and separate kinds of Interaction Cycles for these cases, but from our perspective, these cases are just variations in flow through the same Interaction Cycle.

Secondary tasks are “overhead” tasks in that the goals are less directly related to the work domain and usually oriented more toward dealing with the computer as an artifact, such as error recovery or learning about the interface. Secondary tasks often stem from changes of plans or intention shifts that arise during task performance; something happens that reminds the user of other things that need to be done or that arise out of the need for error recovery.

For example, the need to explore can arise in response to a specific information need, when users cannot translate an intention to an action specification because they cannot see an object that is appropriate for such an action. Then they have to search for such an object or cognitive affordance, such as a label on a button or a menu choice that matches the desired action (Chapter 20).

Stacking and restoring task context. Stacking and restoring of work context during the execution of a program is an established software concept. Humans must do the same during the execution of their tasks due to spontaneous intention shifts. Interaction Cycles required to support primary and secondary tasks are just variations of the basic Interaction Cycle. However, the storing and restoring of task contexts in the transition between such tasks impose a human memory load on the user, which could require explicit support in the interaction design. Secondary tasks also often require considerable judgment on the part of the user when it comes to assessment. For example, an exploration task might be considered “successful” when users are satisfied that they have had enough or get tired and give it up.

Example of stacking due to intention shift. To use the previous example of creating a business report to illustrate stacking due to a spontaneous intention shift, suppose the user has finished the task step “Print the report” and is ready to move on. However, upon looking at the printed report, the user does not like the way it turned out and decides to reformat the report. The user has to take some time to go learn more about how to format it better. The printing task is stacked temporarily while the user takes up the information-seeking task.

Such a change of plan causes an interruption in the task flow and normal task planning, requiring the user mentally to “stack” the current goal, task, and/or intention while tending to the interrupting task, as shown in Figure 21-4. As the primary task is suspended in mid-cycle, the user starts a new Interaction Cycle at planning for the secondary task. Eventually the user can unstack each goal and task successively and return to the main task.

Figure 21-4 Stacking and returning to Interaction Cycle task context instances.
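Because stacking is borrowed from software, the idea is easy to sketch in code. The following Python fragment models the report example with a last-in, first-out stack of task contexts. The class and method names are hypothetical, and recording the suspended printing task at the assessment stage is our assumption for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TaskContext:
    task: str
    stage: str  # the Interaction Cycle part where the task is active or was suspended


@dataclass
class UserTaskStack:
    """A last-in, first-out record of suspended task contexts."""
    suspended: list = field(default_factory=list)

    def interrupt(self, current: TaskContext, new_task: str) -> TaskContext:
        """Stack the current context and start the interrupting task at planning."""
        self.suspended.append(current)
        return TaskContext(task=new_task, stage="planning")

    def resume(self) -> TaskContext:
        """Unstack the most recently suspended context."""
        return self.suspended.pop()


stack = UserTaskStack()
primary = TaskContext(task="Print the report", stage="assessment")
secondary = stack.interrupt(primary, "Learn how to format the report")
restored = stack.resume()  # the user returns to the printing task
```

In software the stack costs nothing to maintain; for a human user, every entry in `suspended` is something that must be remembered, which is why the design may need to support it explicitly.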

21.3 The user action framework—adding a structured knowledge base to the interaction cycle

21.3.1 From the Interaction Cycle to the User Action Framework

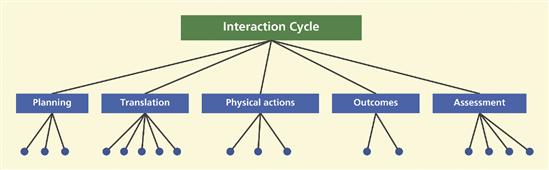

As shown in Figure 21-5, we use stages of the Interaction Cycle as the high-level organizing scheme of the UAF. The UAF is a hierarchically structured knowledge base of UX principles, issues, and concepts that is independent of interaction style and platform. The UAF is focused on interaction designs and how they support and affect the user during interaction for task performance as the user makes cognitive, physical, or sensory actions in each part of the Interaction Cycle.

Figure 21-5 Basic kinds of user actions in the Interaction Cycle as the top-level UAF structure.

21.3.2 Interaction Style and Device Independent

The Interaction Cycle was an ideal starting point for the UAF because it is a model of sequences of sensory, cognitive, and physical actions users make when interacting with any kind of machine, and it is general enough to include potentially all interaction styles, platforms, and devices. As a result, the UAF is applicable or extensible to virtually any interaction style, such as GUIs, the Web, virtual environments, 3D interaction, collaborative applications, PDA and cellphone applications, refrigerators, ATMs, cars, elevators, embedded computing, situated interaction, and interaction styles on platforms not yet invented.

21.3.3 Common Design Concepts Are Distributed

The stage of action in the Interaction Cycle is at the top level of the User Action Framework. Consider the translation stage, for example. At the next level, design issues under translation will appear under various attributes such as sensory affordances and cognitive affordances. Under sensory actions, you will see various issues about presentation of cognitive affordances for translation, such as visibility, noticeability, and legibility. Under cognitive actions you will see issues about cognitive affordances, such as clarity of meaning and precise wording.

Eventually, at lower levels, you will see that common UX design issues such as consistency, simplicity, the user’s language, and concise and clear use of language are distributed with lots of overlap and sharing throughout the structure where they are applied to each of the various kinds of interaction situations. For example, an issue such as clarity of expression (precise use of language) can show up in its own way in planning, translation, and assessment. This allows the designer, or evaluator, to focus on how the concepts apply specifically in helping the user at each stage of the Interaction Cycle, for example, how precise use of wording applies to either the label of a button used to exit a dialogue box or the wording of an error message.

21.3.4 Completeness

We have tried to make the UAF as complete as possible. As more and more real-world UX data were fitted into the UAF, the structure grew with new categories and subcategories, representing an increasingly broad scope of UX and interaction design concepts. The UAF converged and stabilized over its several years of development so that, as we continue to analyze new UX problems and their causes, additions of new concepts to the UAF are becoming more and more rare.

However, absolute completeness is neither feasible nor necessary. We expect there will always be occasional additions, and the UAF is designed explicitly for long-term extensibility and maintainability. In fact, the UAF structure is “self extensible” in the sense that, if a new category is necessary, a search of the UAF structure for that category will identify where it should be located.

21.4 Interaction cycle and user action framework content categories

Here we give just an overview of top-level UAF categories; the content for each is stated abstractly in terms of UX design concepts and issues. In practice, these concepts can be used in several ways, including as a learning tool (students learn the concept), a design guideline (an imperative statement), in analytic evaluation (as a question about whether a particular part of a design supports the concept), and as a diagnostic tool (as a question about whether a UX problem in question is a violation of a concept).

Each concept at the top decomposes further into a hierarchy of successively detailed descriptions of interaction design concepts and issues in the more than 300 nodes of the full UAF. As an example of the hierarchical structure, consider this breakdown of some translation topics:

- Translation
  - Presentation of a cognitive affordance to support translation
    - Legibility, visibility, noticeability of the cognitive affordance
      - Fonts, color, layout
        - Font color, color contrast with background
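The breakdown above can be read as one branch of a tree, assuming that each line is a child of the line before it. The following Python sketch builds that branch and prints it with indentation; the `Node` class and the `chain` and `paths` helpers are hypothetical names for illustration and do not describe how the UAF is actually stored.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    children: list["Node"] = field(default_factory=list)


def chain(*names: str) -> Node:
    """Build a single-branch tree in which each name is the parent of the next."""
    root = Node(names[0])
    current = root
    for name in names[1:]:
        child = Node(name)
        current.children.append(child)
        current = child
    return root


def paths(node: Node, prefix: tuple = ()):
    """Yield the path from the root to every node, most general category first."""
    here = prefix + (node.name,)
    yield here
    for child in node.children:
        yield from paths(child, here)


translation = chain(
    "Translation",
    "Presentation of a cognitive affordance to support translation",
    "Legibility, visibility, noticeability of the cognitive affordance",
    "Fonts, color, layout",
    "Font color, color contrast with background",
)

for path in paths(translation):
    print("  " * (len(path) - 1) + path[-1])
```

The path to a node, read from the root down, gives the successively more detailed description of a design issue that the full UAF is meant to provide.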

21.4.1 Planning (Design Helping User Know What to Do)

Planning is the part of the Interaction Cycle containing all cognitive actions by users to determine “what to do?” or “what can I do?” using the system to achieve work domain goals. Although many variations occur, a typical sequence of planning activities involves these steps, not always this clear cut, to establish a hierarchy of plan entities:

- Identify work needs in the subject matter domain (e.g., communicate with someone in writing)
- Establish goals in the work domain to meet these work needs (e.g., produce a business letter)
- Divide goals into tasks performed on the computer to achieve the goals (e.g., type content, format the page)
- Spawn intentions to perform the steps of each task (e.g., set the left margin)

After each traversal of the Interaction Cycle, the user typically returns to planning and establishes a new goal, intention, and/or task.

Planning concepts

Interaction design support for user planning is concerned with how well the design helps users understand the overall computer application relative to the perspective of work context, the work domain, and environmental requirements and constraints in order to determine in general how to use the system to solve problems and get work done. Planning support has to do with the system model, conceptual design, and metaphors, the user’s awareness of system features and capabilities (what the user can do with the system), and the user’s knowledge of possible system modalities. Planning is about user strategies for approaching the system to get work done.

Planning content in the UAF

UAF content under planning is about how an interaction design helps users plan goals and tasks and understand how to use the system. This part of the UAF contains interaction design issues about supporting users in planning the use of the system to accomplish work in the application domain. These concepts are addressed in specific child nodes about the following topics:

User model and high-level understanding of system. Pertains to issues about supporting user acquisition of a high-level understanding of the system concept as manifest in the interaction design. Elucidates issues about how well the interaction design matches users’ conception of system and user beliefs and expectations, bringing different parts of the system together as a cohesive whole.

Goal decomposition. Pertains to issues about supporting user decomposition of tasks to accomplish higher-level work domain goals. This category includes issues about accommodating human memory limitations in interaction designs to help users deal with complex goals and tasks, avoid stacking and interruption, and achieve task and subtask closure as soon as possible. This category also includes issues about supporting user’s conception of task organization, accounting for goal, task, and intention shifts, and providing user ability to represent high-level goals effectively.

Task/step structuring and sequencing, workflow. Pertains to issues about supporting user’s ability to structure tasks within planning by establishing sequences of tasks and/or steps to accomplish goals, including getting started on tasks. This category includes issues about appropriateness of task flow and workflow in the design for the target work domain.

User work context, environment. Pertains to issues about supporting user’s knowledge (for planning) of problem domain context, and constraints in work environment, including environmental factors, such as noise, lighting, interference, and distraction. This category addresses issues about how non-system tasks such as interacting with other people or systems relate to planning task performance in the design.

User knowledge of system state, modalities, and especially active modes. Pertains to supporting user knowledge of system state, modalities, and active modes.

Supporting learning at the planning level through use and exploration. Pertains to supporting user’s learning about system conceptual design through exploratory and regular use. This category addresses issues about learnability of conceptual design rationale through consistency and explanations in design, and about what the system can help the user do in the work domain.

21.4.2 Translation (Design Helping User Know How to Do Something)

Translation concerns the lowest level of task preparation. Translation includes anything that has to do with deciding how you can or should make an action on an object, including the user thinking about which action to take, on what object to take it, or what might be the best action to take next within a task.

Translation is the part of the Interaction Cycle that contains all cognitive actions by users to determine how to carry out the intentions that arise in planning. Translation results in what Norman (1986) calls an “action specification,” a description of a physical action on an interface object, such as “click and drag the document file icon,” using the computer to carry out an intention.

Translation concepts

Planning and translation can both seem like kinds of planning because both occur before physical actions, but they differ in level. Planning is higher level goal formation, and translation is a lower level decision about which action to make on which object.

Here is a simple example about driving a car. Planning for a trip in the car involves thinking about traveling to get somewhere, involving questions such as “where are you going,” “by what route,” and “do we need to stop and get gas and/or groceries first?” So planning is in the work domain, which is travel, not operating the car.

In contrast, translation takes the user into the system, machine, or physical world domain and is about formulating actions to operate the gas pedal, brake, and steering wheel to accomplish the tasks that will help you reach your planning, or travel, goals. Because steps such as “turn on headlight switch,” “push horn button,” and “push brake pedal” are actions on objects, they are the stuff of translation.

Across most of the interaction that occurs in the real world, translation is arguably the single most important step because it is about how you do things. Translation is perhaps the most challenging part of the cycle for both users and designers. From our own experience over the years, we estimate that the bulk of UX problems observed in UX testing, 75% or more, falls into this category.

Cuomo and Bowen (1992) report a similar experience: they classified UX problems per Norman’s theory of action and found the majority of problems in the action specification stage (translation). Clearly, translation deserves special attention in a UX and interaction design context.

Translation content in the UAF

UAF content under translation is about how an interaction design helps users know what actions to take to carry out a given intention. This part of the UAF contains interaction design issues about the effectiveness of sensory and cognitive affordances, such as labels, used to support user needs to determine (know) how to do a task step in terms of what actions to make on which UI objects and how. These concepts are addressed in specific child nodes about the following topics:

Existence of a cognitive affordance to show how to do something. Pertains to issues about ensuring existence of needed cognitive affordances in support of user cognitive actions to determine how to do something (e.g., task step) in terms of what action to make on what UI object and how. This category includes cases of necessary but missing cognitive affordances, but also cases of unnecessary or undesirable but existing cognitive affordances.

Presentation (of a cognitive affordance). Pertains to issues about effective presentation or appearance in support of user sensing of cognitive affordances. This category includes issues involving legibility, noticeability, timing of presentation, layout and spatial grouping, complexity, and consistency of presentation, as well as presentation medium (e.g., audio, when needed) and graphical aspects of presentation. An example issue might be about presenting the text of a button label in the correct font size and color so that it can be read/sensed.

Content, meaning (of a cognitive affordance). Pertains to issues about user understanding and comprehension of cognitive affordance content and meaning in support of user ability to determine what action(s) to make and on what object(s) for a task step. This category includes issues involving clarity, precision, predictability of the effects of a user action, error avoidance, and effectiveness of content expression. As an example, precise wording in labels helps users predict consequences of selection. Clarity of meaning helps users avoid errors.

Task structure, interaction control, preferences and efficiency. Pertains to issues about logical structure and flow of task and task steps in support of user needs within task performance. This category includes issues about alternative ways to do tasks, shortcuts, direct interaction, and task thread continuity (supporting the most likely next step). This category also includes issues involving task structure simplicity, efficiency, locus of user control, human memory limitations, and accommodating different user classes.

Support of user learning about what actions to make on which UI objects and how through regular and exploratory use. Pertains to issues about supporting user learning at the action-object level through consistency and explanations in the design.

21.4.3 Physical Actions (Design Helping User Do the Actions)

After users decide which actions to take, the physical actions part of the Interaction Cycle is where users do the actions. This part includes all user inputs acting on devices and user interface objects to manipulate the system, including typing, clicking, dragging, touching, gestures, and navigational actions, such as walking in virtual environments. The physical actions part of the Interaction Cycle includes no cognitive actions; thus, this part is not about thinking about the actions or determining which actions to do.

Physical actions—concepts

This part of the Interaction Cycle has two components: (1) sensing the objects in order to manipulate them and (2) manipulation. In order to manipulate an object in the user interface, the user must be able to sense, for example, see, hear, or feel, the object, which can depend on such usual sensory affordance issues as object size, color, contrast, location, and timing of its display.

Physical actions are especially important for analysis of performance by expert users who have, to some extent, “automated” planning and translation associated with a task and for whom physical actions have become the limiting factor in task performance.

Physical affordance design factors include design of input/output devices (e.g., touchscreen design or keyboard layout), haptic devices, interaction styles and techniques, direct manipulation issues, gestural body movements, physical fatigue, and such physical human factors issues as manual dexterity, hand–eye coordination, layout, interaction using two hands and feet, and physical disabilities.

Fitts’ law. The practical implications of Fitts’ law (Fitts, 1954; Fitts & Peterson, 1964) are important in the physical actions part of the Interaction Cycle. Users vary considerably in their natural manual dexterity, but all users are governed by Fitts’ law with respect to certain kinds of physical movement during interaction, especially cursor movement for object selection and dragging and dropping objects.

Fitts’ law is an empirically based mathematical formula governing movement from an initial position to a target at a terminal position. The time to make the movement increases with the log2 of the distance and decreases with the log2 of the width or cross-section of the target normal to the direction of the motion; in other words, it grows linearly with the log2 of the ratio of distance to target width.
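To make the relationship concrete, here is a minimal sketch in Python using the widely used Shannon formulation of the law, MT = a + b log2(D/W + 1). The function name and the values of the constants a and b are illustrative assumptions only; in practice, the constants are fitted empirically for a particular device and user population.

```python
import math


def fitts_movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time in seconds (Shannon formulation).

    a and b are hypothetical placeholder constants; real values must be
    fitted from measurements for a specific device and user group.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)  # in bits
    return a + b * index_of_difficulty


# Same distance, wider target: lower predicted time.
near_big = fitts_movement_time(distance=200, width=40)
# Same distance, narrower target: higher predicted time.
near_small = fitts_movement_time(distance=200, width=20)
# Same narrow target, twice as far away: higher still.
far_small = fitts_movement_time(distance=400, width=20)
```

Because the dependence is logarithmic, doubling the distance to a target adds only a fixed increment to the predicted time rather than doubling it.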

Fitts’ law was first applied in HCI by Card, English, and Burr (1978) to the then-new concept of a mouse cursor control device, and it has since been the topic of many HCI studies and publications. Among the most well known of these developments are those of MacKenzie (1992).

Fitts’ law has been modified and adapted to many other interaction situations, including, among many others, two-dimensional tasks (MacKenzie & Buxton, 1992), pointing and dragging (Gillan et al., 1990), trajectory-based tasks (Accot & Zhai, 1997), and moving interaction from visual to auditory and tactile designs (Friedlander, Schlueter, & Mantei, 1998).

When Fitts’ law is applied in a bounded work area, such as a computer screen, boundary conditions impose exceptions. If the edge of the screen is the “target,” for example, movement can be extremely fast because it is constrained to stop when you get to the edge without any overshoot.

Other software modifications of cursor and object behavior to exceed the user performance predicted by this law include cursor movement accelerators and the “snap to default” cursor feature. Such features put the cursor where the user is most likely to need it, for example, the default button within a dialogue box. Another attempt to beat Fitts’ law predictions is based on selection using area cursors (Kabbash & Buxton, 1995), a technique in which the active pointing part of the cursor is two dimensional, making selection more like hitting with a “fly swatter” than with a precise pointer.

One interesting software innovation for outdoing Fitts’ limitations is called “snap-dragging” (Bier, 1990; Bier & Stone, 1986), which, in addition to many other enhanced graphics-drawing capabilities, imbues potential target objects with a kind of magnetic power to draw in an approaching cursor, “snapping” the cursor home into the target.

Another attempt to beat Fitts’ law is to ease the object selection task with user interface objects that expand in size as the cursor comes into proximity (McGuffin & Balakrishnan, 2005). This approach allows conservation of screen space with small initial object sizes, but increased user performance with larger object sizes for selection.

Another “invention” that addresses the Fitts’ law trade-off of speed versus accuracy of cursor movement for menu selection is the pie menu (Callahan et al., 1988). Menu choices are represented by slices or wedges in a pie configuration. The cursor movement from one selection to another can be tailored continuously by users to match their own sense of their personal manual dexterity. Near the outer portions of the pie, at large radii, movement to the next choice is slower but more accurate because the area of each choice is larger. Movement near the center of the pie is much faster between choices but, because they are much closer together, selection errors are more likely.
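The geometry behind this trade-off can be sketched in a few lines of Python. We assume an idealized pie menu with equal wedges; the function name and the eight-choice menu are illustrative assumptions, not details taken from Callahan et al. Under that assumption, the arc width available to each choice grows in direct proportion to the radius at which the cursor crosses it.

```python
import math


def wedge_arc_width(radius, n_choices):
    """Arc length spanned by one wedge at a given radius.

    Assumes an idealized pie menu whose n_choices wedges are all equal.
    The result is in the same units as radius (e.g., pixels).
    """
    if radius < 0 or n_choices < 1:
        raise ValueError("radius must be >= 0 and n_choices must be >= 1")
    return 2 * math.pi * radius / n_choices


# Hypothetical eight-choice menu sampled at three radii.
widths = {radius: wedge_arc_width(radius, 8) for radius in (10, 40, 80)}
```

Near the center each choice is only a narrow sliver, so a short movement switches choices but a small slip lands on a neighbor; farther out, the same wedge offers a much wider target.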

Several design guidelines in Chapter 22 relate Fitts’ law to manual dexterity in interaction design.

Haptics and physicality. Haptics is about the sense of touch and the physical contact between user and machine through interaction devices. In addition to the usual mouse as cursor control, there are many other devices, each with its own haptic issues about how best to support natural interaction, including joysticks, touchscreens, touchpads, eye tracking, voice, trackballs, data gloves, body suits, head-mounted displays, and gestural interaction.

Physicality is about real physical interaction with real devices such as physical knobs and levers. It is about issues such as using real physical tuning and volume control knobs on a radio as opposed to electronic push buttons for the same control functions.

Norman (2007b) called that feeling of grabbing and turning a real knob “physicality.” He claims, and we agree, that with real physical knobs, you get more of a feeling of direct control of physical outcomes.

Several design guidelines in Chapter 22 relate haptics to physicality in interaction design.

Physical actions content in the UAF

UAF content under physical actions is about how an interaction design helps users actually make actions on objects (e.g., typing, clicking, and dragging in a GUI, scrolling on a Web page, speaking with a voice interface, walking in a virtual environment, hand movements in gestural interaction, gazing with eyes). This part of the UAF contains design issues pertaining to the support of users in doing physical actions. These concepts are addressed in specific child nodes about the following topics:

Existence of necessary physical affordances in user interface. Pertains to issues about providing physical affordances (e.g., UI objects to act upon) in support of users doing physical actions to access all features and functionality provided by the system.

Sensing UI objects for and during manipulation. This category includes the support of user sensory (visual, auditory, tactile, etc.) needs in regard to sensing UI objects for and during manipulation (e.g., user ability to notice and locate physical affordance UI objects to manipulate).

Manipulating UI objects, making physical actions. A primary concern in this UAF category is the support of user physical needs at the time of actually making physical actions, especially making physical actions efficient for expert users. This category includes how each UI object is manipulated and how manipulable UI objects operate.

Making UI object manipulation physically easy involves controls, UI object layout, interaction complexity, input/output devices, and interaction styles and techniques. Finally, this is the category in which you consider Fitts’ law issues having to do with the proximity of objects involved in task sequence actions.

21.4.4 Outcomes (Internal, Invisible Effect/Result within System)

Physical user actions are seen by the system as inputs and usually trigger a system function that can lead to system state changes that we call outcomes of the interaction. The outcomes part of the Interaction Cycle represents the system’s turn to do something that usually involves computation by the non-user-interface software or, as it is sometimes called, core functionality. One possible outcome is a failure to achieve an expected or desired state change, as in the case of a user error.

Outcomes—concepts

A user action is not always required to produce a system response. The system can also autonomously produce an outcome, possibly in response to an internal event such as a disk getting full; an event in the environment sensed by the system such as a process control alarm; or the physical actions of other users in a shared work environment.

The system functions that produce outcomes are purely internal to the system and do not involve the user. Consequently, outcomes are technically not part of the user’s Interaction Cycle, and the only UX issues associated with outcomes might be about usefulness or functional affordance of the non-user-interface system functionality.

Because internal system state changes are not directly visible to the user, outcomes must be revealed to the user via system feedback or a display of results, to be evaluated by the user in the assessment part of the Interaction Cycle.

Outcomes content in the UAF

UAF content under outcomes is about the effectiveness of the internal (non-user-interface) system functionality behind the user interface. None of the issues in this UAF category are directly related to the interaction design. However, the lack of necessary functionality can have a negative effect on the user experience because of insufficient usefulness. This part of the UAF contains issues about existence, completeness, correctness, and suitability of needed backend functional affordances. These concepts are addressed in specific child nodes about the following topics:

Existence of needed functionality or feature (functional affordance). Pertains to issues about supporting users through existence of needed non-user-interface functionality. This category includes cases of missing, but needed, features and usefulness of the system to users.

Existence of needed or unwanted automation. Pertains to supporting user needs by providing needed automation, but not including unwanted automation that can cause loss of user control.

Computational error. This is about software bugs within non-user-interface functionality of the application system.

Results unexpected. This category is about avoiding surprises to users, for example through unexpected automation or other results that fail to match user expectations.

Quality of functionality. Though a necessary function or feature may exist, there may still be issues about how well it works to satisfy user needs.

21.4.5 Assessment (Design Helping User Know if Interaction Was Successful)

The assessment part of the Interaction Cycle corresponds to Norman’s (1986) evaluation side of interaction. A user in assessment performs sensory and cognitive actions needed to sense and understand system feedback and displays of results as a means to comprehend internal system changes or outcomes due to a previous physical action.

The user’s objective in assessment is to determine whether the outcomes of all that previous planning, translation, and physical actions were favorable, meaning desirable or effective to the user. In particular, an outcome is favorable if it helps the user approach or achieve the current intention, task, and/or goal; that is, if the plan and action “worked.”

Assessment concepts

The assessment part parallels much of the translation part, only focusing on system feedback. Assessment has to do with the existence of feedback, presentation of feedback, and content or meaning of feedback. Assessment is about whether users can know when an error occurred, and whether a user can sense a feedback message and understand its content.

Assessment content in the UAF

This part of the UAF contains issues about how well feedback in system responses and displays of results within an interaction design help users know if the interaction is working so far. Being successful in the previous planning, translation, and physical actions means that the course of interaction has moved the user toward the interaction goals. These concepts are addressed in specific child nodes about the following topics:

Existence of feedback or indication of state or mode. Pertains to issues about supporting user ability to assess action outcomes by ensuring existence of feedback (or mode or state indicator) when it is necessary or desired and nonexistence of feedback when it is unwanted.

Presentation (of feedback). Pertains to issues about effective presentation or appearance, including sensory issues and timing of presentation, in support of user sensing of feedback.

Content, meaning (of feedback). Pertains to issues about understanding and comprehension of feedback (e.g., error message) content and meaning in support of user ability to assess action outcomes. This category of the UAF includes issues about clarity, precision, and predictability. The effectiveness of feedback content expression is affected by precise use of language, word choice, clarity due to layout and spatial grouping, user centeredness, and consistency.

21.5 Role of Affordances within the UAF

Each kind of affordance has a close relationship to parts of the Interaction Cycle. During interaction, users perform sensory, cognitive, and physical actions and require affordances to help with each kind of action, as shown abstractly in Figure 21-6. For example, in planning, if the user is looking at buttons and menus while trying to determine what can be done with the system, then the sensory and cognitive affordances of those user interface objects are used in support of planning.

Figure 21-6 Affordances connect users with design.

Similarly, in translation, if a user needs to understand the purpose of a user interface object and the consequences of user actions on the object, then the sensory and cognitive affordances of that object support that translation. Perhaps the most obvious is the support physical affordances give to physical actions but, of course, sensory affordances also help; you cannot click on a button if you cannot see it.

Finally, the sensory and cognitive affordances of feedback objects (e.g., a feedback message) support users in assessment of system outcomes occurring in response to previous physical actions as inputs.

In Figure 21-7, we have added indications of the relevant affordances to each part of the Interaction Cycle.

Figure 21-7 Interaction cycle of the UAF indicating affordance-related user actions.

21.6 Practical Value of UAF

21.6.1 Advantage of Vocabulary to Think About and Communicate Design Issues

One of the most important advantages to labeling and structuring interaction design issues within the UAF is that it codifies a vocabulary for consistency across UX problem descriptions. Over the years this structured vocabulary has helped us and our students in being precise about how we talk about UX problems, especially in including descriptions of specific causes of the problems in those discussions.

As an analogy, the field of mechanical engineering has been around for much longer than our discipline. Mechanical engineers have a rich and precise working vocabulary that fosters mutual understanding of issues, for example, crankshaft rotation. In contrast, because of the variations and ambiguities in UX terminology, UX practitioners often end up talking about two different things or even talking at cross purposes. A structured and “standardized” vocabulary, such as can be found within the UAF, can help reduce confusion and promote agreement in discussions of the issues.

21.6.2 Advantage of Organized and Structured Usability Data

As early as 1994, a workshop at the annual CHI conference addressed the topic of what to do after usability data are collected (Nayak, Mrazek, & Smith, 1995). Researchers and practitioners took up questions about how usability data should be gathered, analyzed, and reported. Qualitative usability data are observational, requiring interpretation. Without practical tools to structure the problems, issues, and concepts, however, researchers and practitioners were concerned about the reliability and adequacy of this analysis and interpretation. They wanted effective ways to get from raw observational data to accurate and meaningful conclusions about specific flaws in interaction design features. The workshop participants were also seeking ways to organize and structure usability data in ways to visualize patterns and relationships.

We believe that the UAF is one effective approach to getting from raw observational data to accurate and meaningful conclusions about specific flaws in interaction design features. The UAF offers ways to organize and structure usability data in ways to visualize patterns and relationships.

21.6.3 Advantage of Richness in Usability Problem Analysis Schemes

Gray and Salzman (1998) cited the need for a theory-based usability problem categorization scheme for comparison of usability evaluation method performance, but they also warn of classification systems that have too few categories. As an example, the Nielsen and Molich (1990) heuristics were derived by projecting a larger set of usability guidelines down to a small set.

Although Nielsen developed heuristics to guide usability inspection, not classification, inspectors finding problems corresponding to a given heuristic find it convenient to classify them by that heuristic. However, as John and Mashyna (1997) point out in a discussion of usability tokens and types (usability problem instances and usability problem types), doing so is tantamount to projecting a large number of usability problem categories down to a small number of equivalence classes.

The result is that information distinguishing the cases is lost and measures for usability evaluation method classification agreement are inflated artificially because problems that would have been in different categories are now in the same super-category. The UAF overcomes this drawback by breaking down classifications into a very large number of detailed categories, not lumping together usability problems that have only passing similarities.
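A small sketch in Python illustrates how this projection inflates agreement. Everything here is an assumption made for illustration: the two evaluators and their four classifications are invented, the category paths only loosely borrow names from this chapter, and agreement is computed as a simple matching fraction rather than any chance-corrected statistic.

```python
def agreement(labels_a, labels_b):
    """Fraction of problems two evaluators placed in the same category."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two non-empty label lists of equal length")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


def project(labels, depth=1):
    """Collapse detailed category paths down to their first `depth` levels."""
    return [path[:depth] for path in labels]


# Hypothetical classifications of the same four problems by two evaluators.
evaluator_1 = [
    ("translation", "presentation", "legibility"),
    ("translation", "content", "clarity"),
    ("assessment", "existence"),
    ("planning", "goal decomposition"),
]
evaluator_2 = [
    ("translation", "content", "precision"),
    ("translation", "content", "clarity"),
    ("assessment", "presentation"),
    ("planning", "goal decomposition"),
]

detailed = agreement(evaluator_1, evaluator_2)
coarse = agreement(project(evaluator_1), project(evaluator_2))
```

With these made-up data, the evaluators agree on only half of the problems at the detailed level, yet appear to agree on all of them once every problem is collapsed into its top-level category.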

21.6.4 Advantage of Usability Data Reuse

Koenemann-Belliveau et al. (1994) made a case for leveraging usability evaluation effort beyond fixing individual problems in one user interface to building a knowledge base about usability problems, their causes, and their solutions. They state: “We should also investigate the potential for more efficiently leveraging the work we do in empirical formative evaluation—ways to ‘save’ something from our efforts for application in subsequent evaluation work” (Koenemann-Belliveau et al., 1994, p. 250).

Further, they make a case for getting more from the rich results of formative evaluation, both to improve the UX lifecycle process and to extend the science of HCI. Such a knowledge base of reusable usability problem data requires a storage and retrieval structure that will allow association of usability problem situations that are the “same” in some essential underlying way. The UAF, through the basic structure of the Interaction Cycle, provides just this kind of organization.
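As a rough sketch of what such a storage and retrieval structure might look like, the following Python code indexes problem records by a classification path. The class names, method names, and sample problems are all hypothetical and are not taken from any actual UAF tool; the only idea borrowed from the text is that problems sharing a path through the framework are treated as the “same” kind of problem.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemRecord:
    """One usability problem plus its (hypothetical) UAF classification path."""
    description: str
    uaf_path: tuple


class ProblemKnowledgeBase:
    """Stores problem records indexed by classification path."""

    def __init__(self):
        self._by_path = defaultdict(list)

    def add(self, record):
        self._by_path[record.uaf_path].append(record)

    def similar_to(self, record):
        """Other stored records that share the full classification path."""
        return [r for r in self._by_path.get(record.uaf_path, []) if r != record]

    def under(self, prefix):
        """All records whose path starts with the given prefix."""
        prefix = tuple(prefix)
        return [
            record
            for path, records in self._by_path.items()
            if path[:len(prefix)] == prefix
            for record in records
        ]


# Made-up problem reports from two different evaluations.
kb = ProblemKnowledgeBase()
p1 = ProblemRecord("No confirmation after saving a form",
                   ("assessment", "existence of feedback"))
p2 = ProblemRecord("Upload finishes silently",
                   ("assessment", "existence of feedback"))
p3 = ProblemRecord("Button label too small to read",
                   ("translation", "presentation"))
for problem in (p1, p2, p3):
    kb.add(problem)

related = kb.similar_to(p1)
```

Here the two feedback problems, although observed in different designs, are retrieved together because they share a classification path, which is the kind of association needed for reuse.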

We will make use of the UAF structure to organize interaction design guidelines in Chapter 22.