Thermal Management Strategies for Three-Dimensional ICs

Abstract

Thermal management methodologies for three-dimensional (3-D) systems are presented in this chapter. These techniques either lower the overall power of the 3-D stack or carefully distribute the power densities across the tiers of a 3-D system to satisfy local temperature limitations. Hybrid methodologies are also discussed. Both physical and architectural level methods are surveyed. Physical design techniques include thermal driven floorplanning and placement. Architectural level techniques are primarily based on dynamic thermal management for multi-core systems and systems comprised of several tiers of memory. Moreover, design techniques that utilize additional interconnect resources to increase thermal conductivity within a multi-tier system are discussed. These techniques include inserting thermal through silicon vias to enhance vertical heat transfer and horizontal wires to facilitate heat spreading across each tier.

Keywords

Thermal TSVs; dynamic thermal management; thermal driven floorplanning; thermal driven placement; thermal wires

In the previous chapter, a number of thermal models within three-dimensional (3-D) systems are reviewed. These models, employed in the thermal analysis process, provide a temperature map of a circuit. Based on this information, thermal management techniques can, in turn, be applied to mitigate excessive temperatures (i.e., “hot spots”) or thermal gradients within and across tiers. These temperature related phenomena are expected to become more pronounced due to the increasing power densities in 3-D systems. This situation is also exacerbated by the greater distance of the heat sources from the heat sinks. The reduced volume of 3-D systems also allocates smaller area for the heat sink, reducing the heat transferred to the ambient [495].

Thermal management methodologies can be roughly divided into two broad categories: (1) approaches which control power densities within the volume of the 3-D systems, and (2) techniques that target an increase in the thermal conductivity of the 3-D stack. Methods that consider both objectives have also recently appeared and are discussed in this chapter. Note that this categorization of techniques is not based on the methods for achieving the target objective or the stage of the design flow. Rather, the thermal management methods discussed in this chapter are driven by the design objective, ensuring that the resulting 3-D circuits exhibit a low thermal risk. The subsections within each section are, alternatively, presented based on the means utilized to achieve the target objective. Consequently, those methodologies that manage the power density throughout the volume of the 3-D stack are discussed in Section 13.1. Strategies that accelerate the transfer of the generated heat within a 3-D circuit to the ambient are described in Section 13.2. Hybrid approaches, such as active cooling, where both objectives are simultaneously addressed, are discussed in Section 13.3. These concepts are summarized in Section 13.4.

13.1 Thermal Management Through Power Density Reduction

Careful control of the peak power density within a 3-D integrated system is a primary means to lower the peak temperature of a 3-D stack while reducing thermal gradients across each physical tier as well as among tiers. Methods in this category can be divided into two types. Those techniques applied during the design process that prudently distribute the power within a multi-tier system [351,496], and online (or real-time) techniques that adapt the spatial distribution of temperature within the stack over time by controlling the computational tasks executed by the system [497,498]. Both types of techniques are discussed in the following subsections.

The elimination of hot spots and reduction in thermal gradients within 3-D circuits requires the extension of physical design techniques to include temperature as a design objective. Several floorplanning, placement, and routing techniques for 3-D ICs and systems-on-package (SoP) have been developed that consider the high temperatures and thermal gradients throughout the tiers of these systems in addition to traditional objectives such as area and wirelength minimization. Emphasizing temperature can result in significant penalties in area—by placing for example, high power blocks far from each other—and performance due to a potentially significant increase in wirelength. Consequently, most techniques balance the various design objectives, producing systems that provide significant performance while satisfying temperature constraints. These techniques are applied to several steps of the design flow. In Section 13.1.1, thermal driven floorplanning techniques are discussed, while thermal driven placement techniques are reviewed in Section 13.1.2. Thermal management methodologies during circuit operation are discussed in Section 13.1.3.

13.1.1 Thermal Driven Floorplanning

Traditional floorplanning techniques for two-dimensional (2-D) circuits typically optimize an objective function that includes the total area of the circuit and the total wirelength of the interconnections among the circuit blocks. Linear functions that combine these objectives are often used as cost functions where, for 3-D circuits, an additional floorplanning requirement may be minimizing the number of intertier vias to decrease the fabrication cost and silicon area, as discussed in Chapter 9, Physical Design Techniques for Three-Dimensional ICs.

Different issues with thermal aware floorplanning can also lead to a number of tradeoffs. The techniques discussed in this section highlight the advantages and disadvantages of the different choices to produce highly compact and thermally safe floorplans.

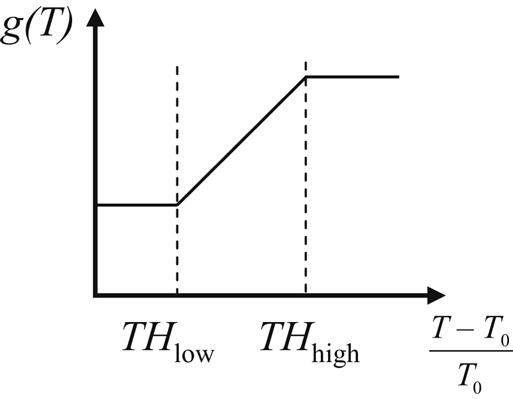

A thermal driven floorplanning technique for 3-D ICs includes the thermal objective,

(13.1)

where c1, c2, c3, and c4, are weight factors and wl, area, and iv are, respectively, the normalized wirelength, area, and number of intertier vias [351]. The last term is a cost function to represent the temperature. An example of this function is a ramp function of the temperature, as shown in Fig. 13.1. Note that the cost function does not intersect the abscissa but, rather, the plateaus. Consequently, this objective function does not minimize the temperature of the circuit but, rather, constrains the temperature within a specified level. Indeed, minimizing the circuit temperature may not be an effective objective, leading to prohibitively long computational times or failure to satisfy other design objectives.

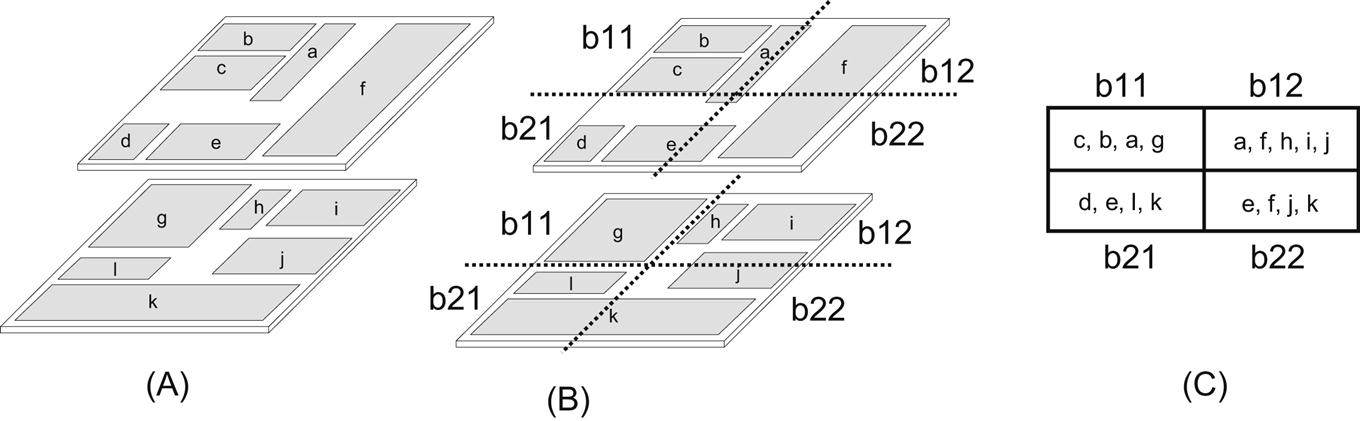

As with thermal unaware floorplanning techniques, the choice of floorplan representation also affects the computational time. Sequence pair and corner block list representations have been used for 3-D floorplanning, as discussed in Chapter 9, Physical Design Techniques for Three-Dimensional ICs. In addition to these approaches, a low overhead scheme is realized by representing the blocks within a 3-D system with a combination of 2-D matrices that correspond to the tiers of the system and a bucket structure that contains the connectivity information for the blocks located on different tiers (a combined bucket and 2-D array (CBA)) [351]. A transitive closure graph describes the intratier connections of the circuit blocks. The bucket structure can be envisioned as a group of buckets imposed on a 3-D stack. The indices of those blocks that intersect a bucket are included, irrespective of the tier on which a block is located. A 2×2 bucket structure applied to a two tier 3-D IC is shown in Fig. 13.2, where the index of the bucket is also depicted. To explain the bucket index notation, consider the lower left tile of the bucket structure shown in Fig. 13.2C (i.e., b21). The index of the blocks that intersects with this tile on the second tier is d and e, and the index of the blocks from the first tier is l and k. Consequently, b21 includes d, e, l, and k.

Simulated annealing (SA) is employed to optimize an objective function, as in (13.1) for thermal floorplanning of 3-D circuits. The SA scheme converges to the desired freezing temperature through several solution perturbations. These perturbations include one of the following operations, some of which are unique to 3-D ICs:

3. intratier reversal of the position of two blocks;

4. move of a block within a tier;

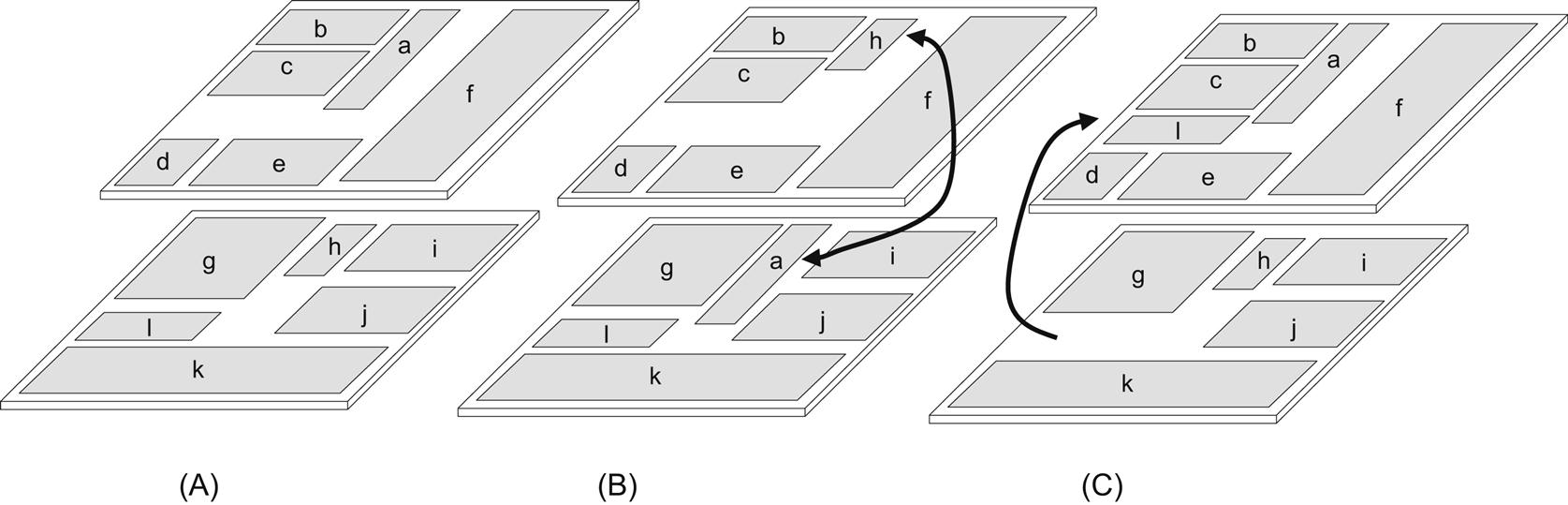

The last three operations are unique to 3-D ICs, while the z-neighbor swap can be treated as a special case of intertier swapping of two blocks. Therefore, two blocks located on adjacent tiers are swapped only if the relative horizontal distance between these two blocks is small. In addition, the z-neighbor move considers the move of a block to another tier of the 3-D system without significantly altering the x-y coordinates. Examples of these two operations are illustrated in Fig. 13.3.

Moreover, every time a solution perturbation occurs, the cost function is reevaluated to gauge the quality of the new candidate solution. Computationally expensive tasks, such as wirelength and temperature calculations, are therefore invoked. To avoid this exhaustive approach, incremental changes in wirelength for only the related blocks and interconnections are evaluated, as applied to the techniques described in Chapter 9, Physical Design Techniques for Three-Dimensional ICs. Note that the thermal profile of the heat diffusion can change the temperature across an area that extends beyond the recently moved blocks.

Consequently, each block perturbation requires the thermal profile of a 3-D circuit to be determined. This strict requirement, however, can increase the computational time, becoming a bottleneck for temperature related physical design techniques. A thermal profile, therefore, is invoked only after a specific operation or after a specified number of iterations. The reason behind this practice is that not all operations significantly affect the temperature of an entire system; rather, only a portion of the system. For example, intratier moves of two small area blocks or the rotation of a block are unlikely to significantly affect the temperature of a system, whereas other operations, such as a z-neighbor swap or a z-neighbor move, can significantly affect the temperature of the tiers and, more broadly, the entire system.

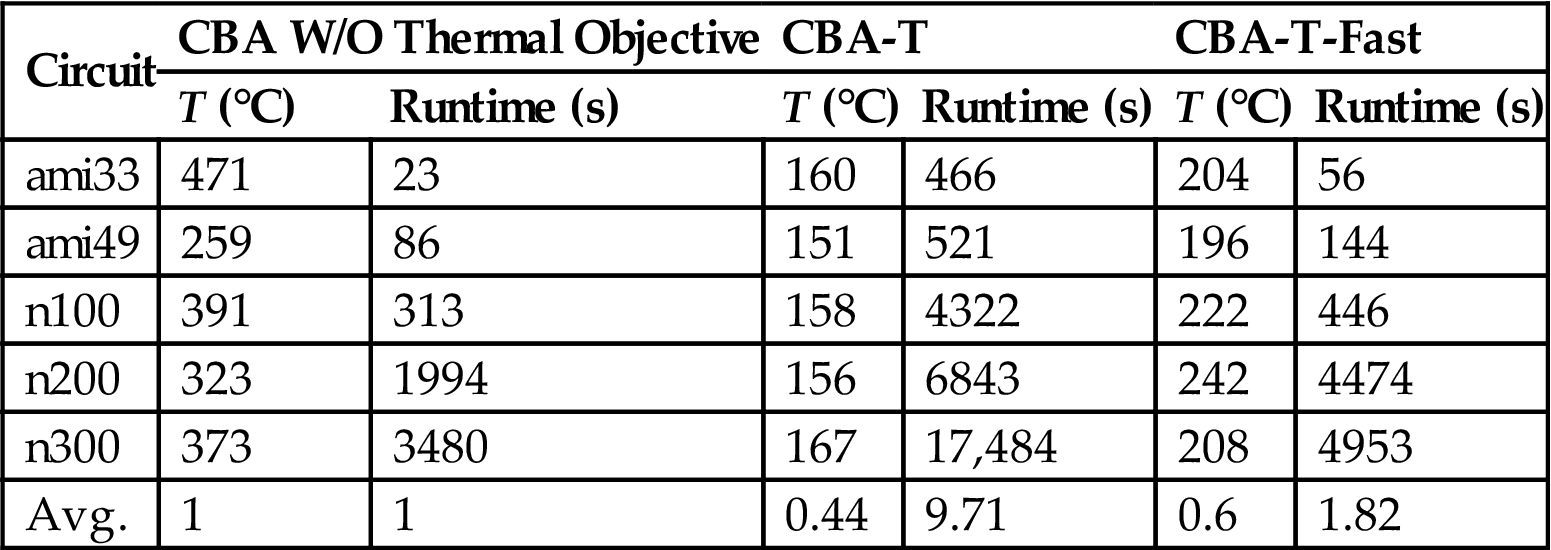

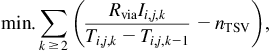

Thermal analysis techniques to determine the temperature of a 3-D circuit, each with different levels of precision and efficacy, can be applied, as discussed in Chapter 12, Thermal Modeling and Analysis. To ascertain the effects of different thermal analysis approaches on the total time of the thermal floorplanning process, thermal models with different accuracy and computational time have been applied to MCNC benchmarks in conjunction with this floorplanning technique. These results are reported in Table 13.1, where a compact thermal modeling approach is considered. A significant tradeoff between the computational runtime and the decrease in temperature exists between these thermal models. With thermal driven floorplanning, a grid of resistances is utilized to thermally model a 3-D circuit, exhibiting a 56% reduction in temperature [351]. The computational time, however, is increased by approximately an order of magnitude as compared to conventional floorplanning algorithms. Alternatively, if a closed-form expression is used for the thermal model of a 3-D circuit, the decrease in temperature is only 40%. The computational time is, however, approximately doubled in this case. Other design characteristics, such as area and wirelength, do not significantly change between the two models.

Table 13.1

Decrease in Temperature Through Thermal Driven Floorplanning [351]

| Circuit | CBA W/O Thermal Objective | CBA-T | CBA-T-Fast | |||

| T (°C) | Runtime (s) | T (°C) | Runtime (s) | T (°C) | Runtime (s) | |

| ami33 | 471 | 23 | 160 | 466 | 204 | 56 |

| ami49 | 259 | 86 | 151 | 521 | 196 | 144 |

| n100 | 391 | 313 | 158 | 4322 | 222 | 446 |

| n200 | 323 | 1994 | 156 | 6843 | 242 | 4474 |

| n300 | 373 | 3480 | 167 | 17,484 | 208 | 4953 |

| Avg. | 1 | 1 | 0.44 | 9.71 | 0.6 | 1.82 |

As the block operations allow intertier moves, exploring the solution space becomes a challenging task [352]. To decrease the computational time, floorplanning can be performed in two separate phases. In the first step, the circuit blocks are assigned to the tiers of a 3-D system to minimize area and wirelength, effectively ignoring the thermal behavior of the circuit during this first stage. This phase, however, can result in highly unbalanced power densities among the tiers. A second step that limits these unbalances is therefore necessary. An objective function to accomplish this balancing process is [496]

(13.2)

where c5, c6, c7, c8, and c9 notate weighting factors. Beyond the first two terms that include the area and wirelength of the circuit, the remaining terms consider other possible design objectives for 3-D circuits. The third term minimizes the imbalance that can exist among the dimensions of the tiers within the stack, based on the deviation dimension approach described in [350]. Tiers with particularly different areas or greatly uneven dimensions can result in a significant portion of unoccupied silicon area on each tier.

The last two terms in (13.2) consider the overall power density within a 3-D stack. The fourth term considers the power density of the blocks within the tier as in a 2-D circuit. Note that the temperature is not directly included in the cost function but is implicitly captured through management of the power density of the floorplanned blocks. The cost function characterizing the power density is based on a similarly shaped function as the temperature cost function depicted in Fig. 13.1. Thermal coupling among the blocks on different tiers is considered by the last term and is

(13.3)

(13.3)

(13.3)

where Pi is the power density of block i, and Pij is the power density due to overlapping block i with block j from a different tier. The summation operand adds the contribution from the blocks located on all of the other tiers other than the tier containing block j. If a simplified thermal model is adopted, an analytic expression as in (13.3) captures the thermal coupling among the blocks, thereby compensating for some loss of accuracy originating from a crude thermal model.

This two step floorplanning technique has been applied to several Alpha microprocessors [499]. Results indicate a 6% average improvement in the maximum temperature as compared to 3-D floorplanning without a thermal objective [496]. In addition, comparing a 2-D floorplan with a 3-D floorplan, an improvement in area and wirelength of, respectively, 32% and 50% is achieved [496]. The peak temperature, however, increases by 18%, demonstrating the importance of thermal issues in 3-D ICs.

The reduction in temperature is smaller than the one step floorplanning approach. Alternatively, for a two step approach, the solution space is significantly smaller, resulting in decreased computational time. The interdependence, however, of the intratier and intertier allocation of the circuit blocks is not captured, which can yield inferior solutions as compared to one step floorplanning techniques.

SA methods have also been employed for floorplanning modules in SoP (see Section 2.2), which is another variant of 3-D integration with coarse granularity. A cost function similar to (13.2) includes the decoupling capacitance and temperature in addition to area and wirelength. The modules in each tier of the SOP are represented by a sequence pair to capture the topographical characteristics of the SOP. To avoid the computational overhead caused by thermal analysis of the SOP during SA iterations, an approximation of the thermal profile of the circuit is used.

The temperature for an initial floorplan of the SoP is produced using the method of finite differences. The finite difference approximation given by (12.3) can be written as RP=T, where R is the thermal resistance matrix. The elements of the thermal matrix contain the thermal resistance (or conductance) between two nodes in a 3-D mesh, while the temperature T and power vector P contain, respectively, the temperature and power dissipation at each node. Any modification to the placement of the cells causes all of these matrices to change. To determine the resulting change in temperature, the thermal resistance is updated and multiplied with the power density vector. This approach, however, leads to long computational times. Consequently, assuming the modules in the SoP exhibit similar thermal conductivities dominated by the volume of silicon, the thermal resistance matrix is not updated, although any module move results in some change in the matrix.

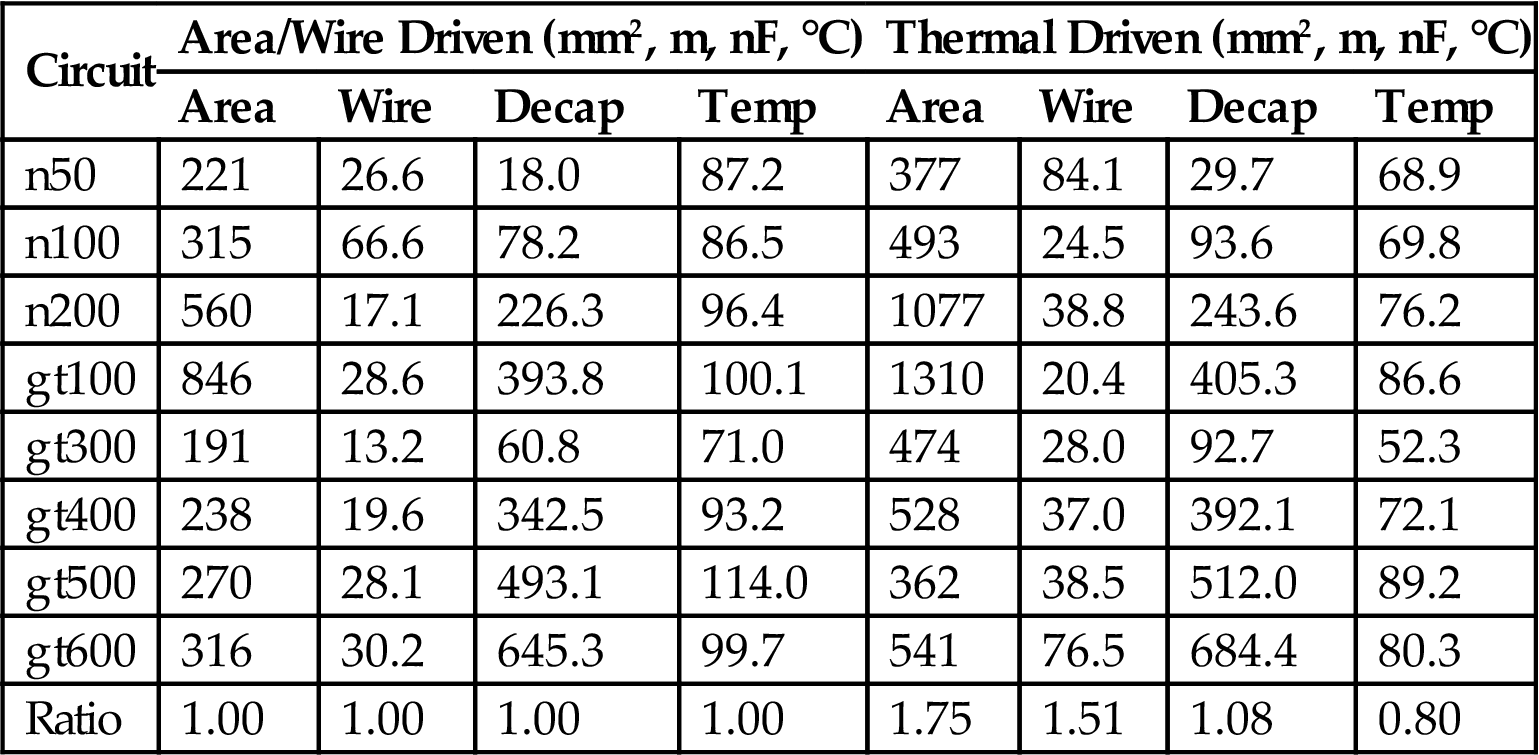

Only local changes in the power densities due to a move of a module are considered. Consequently, for each modification of the block placement, the change in the power vector ΔP is scaled by R, and the change in the temperature vector is evaluated. A new temperature vector is obtained after the latest move of the blocks within a 3-D system. Results from applying this thermally aware SoP floorplanning technique are listed in Table 13.2, where the results from a placement based on traditional area and wirelength objectives are also provided for comparison [397].

Table 13.2

Thermal Driven Floorplanning for Four Tier 3-D ICs [397]

| Circuit | Area/Wire Driven (mm2, m, nF, °C) | Thermal Driven (mm2, m, nF, °C) | ||||||

| Area | Wire | Decap | Temp | Area | Wire | Decap | Temp | |

| n50 | 221 | 26.6 | 18.0 | 87.2 | 377 | 84.1 | 29.7 | 68.9 |

| n100 | 315 | 66.6 | 78.2 | 86.5 | 493 | 24.5 | 93.6 | 69.8 |

| n200 | 560 | 17.1 | 226.3 | 96.4 | 1077 | 38.8 | 243.6 | 76.2 |

| gt100 | 846 | 28.6 | 393.8 | 100.1 | 1310 | 20.4 | 405.3 | 86.6 |

| gt300 | 191 | 13.2 | 60.8 | 71.0 | 474 | 28.0 | 92.7 | 52.3 |

| gt400 | 238 | 19.6 | 342.5 | 93.2 | 528 | 37.0 | 392.1 | 72.1 |

| gt500 | 270 | 28.1 | 493.1 | 114.0 | 362 | 38.5 | 512.0 | 89.2 |

| gt600 | 316 | 30.2 | 645.3 | 99.7 | 541 | 76.5 | 684.4 | 80.3 |

| Ratio | 1.00 | 1.00 | 1.00 | 1.00 | 1.75 | 1.51 | 1.08 | 0.80 |

Although SA is the dominant optimization scheme used in most floorplanning and placement techniques for 3-D ICs [351,397,409], thermal aware floorplanners based on the force directed method have also been investigated. The motivation for employing this method stems from the lack of scalability of the SA approach. The analytic nature of force directed floorplanners, however, formulates the floorplanning problem into a continuous 3-D space. A transition is required between placement in the continuous volume and assignment without overlaps (i.e., legalization) within the discrete tiers of a 3-D system, potentially leading to a nonoptimal floorplan. In addition, as block level floorplanning includes components of dissimilar sizes, a different solution process is required as compared to floorplanning at the standard cell level [500].

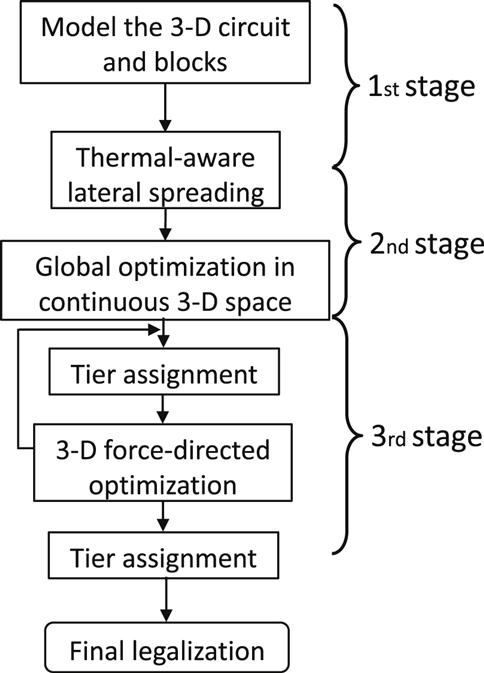

This process includes more stages as compared to a traditional force directed method, as discussed in Chapter 9, Physical Design Techniques for Three-Dimensional ICs, due to legalization issues that can result during tier assignment. The different stages of the method are illustrated in Fig. 13.4, which are distinguished as (1) temperature aware lateral spreading, (2) continuous global optimization, and (3) optimization and tier assignment among the tiers within the 3-D stack. Assuming that the floorplan is a set of blocks {m1, m2, …, mn}, the method minimizes (1) the peak temperature Tmax of the circuit, (2) the wirelength, and (3) the circuit area, the product of the maximum width and height of the tiers within the 3-D stack. Each block mi is associated with dimensions Wi and Hi, area Ai=Wi × Hi, aspect ratio Hi/Wi, and power density Pmi. The height of the blocks is a multiple of the thickness of the tiers, which is assumed to be D for all of the physical tiers and L tiers are assumed to comprise the 3-D stack. A valid floorplan is an assignment of non-overlapping blocks within a 3-D stack, where the position of each block is described by (xi, yi, li), denoting the horizontal coordinate of the lower left corner of the block and tier li.

The continuous 3-D space within which the blocks are allowed to move and rotate consists of homogeneous cubic bins. The height of these bins is set to D/2, and the other two dimensions are half the size of the minimum block size. To determine the length of the connections between blocks, the half perimeter wirelength (HPWL) model is utilized. Based on this structure, two different forces are exerted on the blocks, where the aim of a filling force Ff is to remove overlaps, while a thermal force Fth reduces the resulting peak temperature. A filling force is formed for each bin by considering the density of the blocks within this bin. This density is determined for each bin based on the sum of the blocks covering a bin. Having determined all of the forces for each bin, the filling force applied to each block is equal to the summation of the forces related to all of the bins occupied by this block. Alternatively, the thermal force is based on the thermal gradient within the 3-D space. Obtaining the thermal gradients requires thermal analysis of the system, which in [500] is achieved through a spatially adaptive thermal analysis package [501].

The combination of these forces is the total force exerted on each block in each physical direction. Similar to (9.14), a system of equations is solved, for which the total force applied to the blocks in the x-direction is

(13.4)

where ax and βx are weighting parameters. Parameter ax controls the significance of the wirelength, area, and thermal objectives. Equivalently, βx characterizes the relative importance between the two forces in each direction. All of these parameters are empirically determined.

Having determined the forces on each block, the floorplanning process begins by spreading the blocks laterally within an xy-plane rather than the entire 3-D space. This spreading is at odds with traditional force directed methods where the blocks collapse at the center of the floorplan with high overlaps (see Chapter 9, Physical Design Techniques for Three-Dimensional ICs). As this situation results in strong filling forces, allowing the blocks to scatter throughout the 3-D space causes some large blocks to move to the boundary of the 3-D space, for example, close to the tier adjacent to the heat sink. A consequence of this practice can be a poor initial floorplan subjected to hot spots. Alternatively, spreading the blocks only in the xy-plane initially avoids this situation while simultaneously reducing the strong filling forces. Furthermore, this initial lateral spreading more evenly distributes the thermal densities, offering an initial distribution of the interconnect.

The second stage follows with global placement of the blocks within the volume of the system based on the filling and thermal forces. An issue that arises during this step is determining the thermal forces, which requires a thermal analysis of the circuit. As the thermal tool to perform this task is based on a tiered structure [501], a continuous floorplan is temporarily mapped into a discrete space. The temperature map for each tier is produced, and the thermal forces ensure that the global placement in continuous space can proceed. The global placement iterates until the overlap between the blocks is reduced to 5 to 10%.

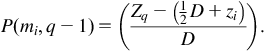

An approach to this interim tier mapping can be implemented stochastically, where the goal is to allocate the power density of the blocks to a specific tier to thermally analyze the system [500]. Considering that the center of block mi with coordinates (xi, yi, zi) is located between tiers q and q−1 during an iteration of the global placement, this block is placed within tier q or q−1 with a probability of, respectively,

(13.5)

(13.5)

(13.5)

(13.6)

(13.6)

(13.6)

Accordingly, the probability for a power density of block mi to be allocated to tier q or q−1 is, respectively,

(13.7)

(13.8)

Having produced a floorplan in a continuous 3-D space, tier assignment is realized. If this task takes place as a postprocessing step, however, inferior results can be produced. Instead, a third stage is introduced, as shown in Fig. 13.4, where tier assignment is integrated with floorplanning in a 2.5-D domain. As shown in Fig. 13.5 illustrating a continuous floorplan, a tier assignment of block 2 in either the first or second tier results in a different level of overlap in blocks 1 and 3. This overlap guides the force directed method with the tier assignment to ensure that the global placement produced by the previous stage is not significantly degraded.

This approach reduces the significant mismatches that can occur during tier assignment. Although these mismatches are often resolved as a postprocessing step, the heterogeneity of the shapes and sizes of the blocks can lead to significant degradation from the optimum placement produced during the second stage. Consequently, by integrating the tier assignment with the force directed method, these disruptive changes can be avoided.

The final step of the technique removes any remaining minor overlaps between blocks, where rotating the blocks has been demonstrated to improve the results as compared to moving the blocks within each tier. The topographical relationship among the blocks is captured in addition to the orientation of the blocks [502]. Although this technique is not based on a fixed outline, a boundary is assumed during legalization to detect an increase in the area of a tier, which can, in turn, cause an undesirable area imbalance. These violations are treated as additional overlaps. Additional moves and rotations are performed to remove these overlaps.

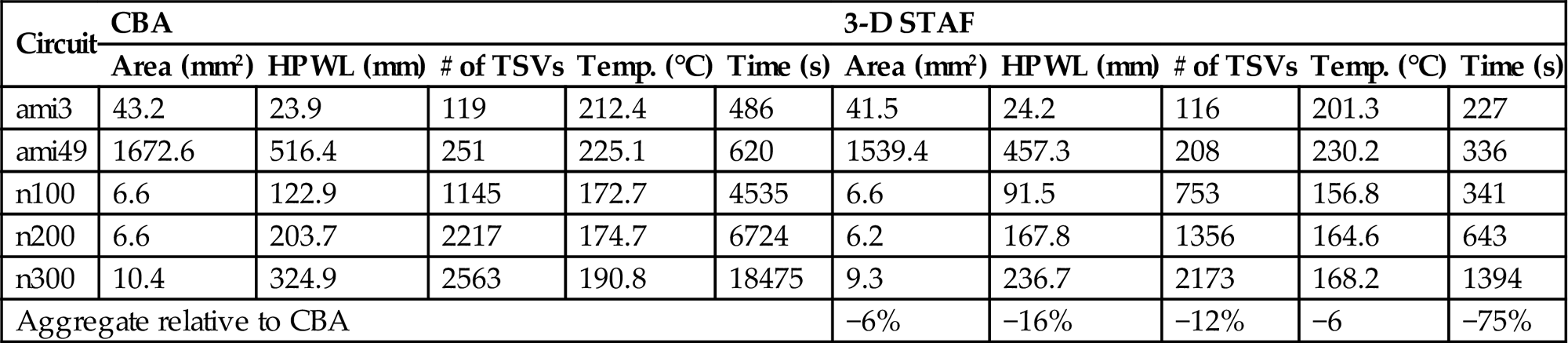

This force directed method has been compared to the SA based approach where CBA is employed. Some results are listed in Tables 13.3 and 13.4. In Table 13.3, the two methods are compared without considering thermal issues. The results indicate that the force directed method produces comparable results with CBA in area and number of through silicon vias (TSVs) but exhibits a decrease in wirelength. More importantly, the computational time is reduced by 31% [500]. If the thermal objective is added to the floorplanning process, the force directed method performs better in all of the objectives with a greater reduction in computational time than reported in Table 13.4. Note, however, that if the dependence between power and temperature is included in the thermal analysis process, the savings in time is significantly lower.

Table 13.3

Comparison for Area and Wirelength Optimization [500]

| Circuit | CBA | 3-D Scalable Temperature Aware Floorplanning (STAF) (No Temperature) | ||||||

| Area (mm2) | HPWL (mm) | # of TSVs | Time (s) | Area (mm2) | HPWL (mm) | # of TSVs | Time (s) | |

| ami3 | 35.30 | 22.5 | 93 | 23 | 37.9 | 22.0 | 122 | 52 |

| ami49 | 1490.00 | 446.8 | 179 | 86 | 1349.1 | 437.5 | 227 | 57 |

| n100 | 5.29 | 100.5 | 955 | 313 | 5.9 | 91.3 | 828 | 68 |

| n200 | 5.77 | 210.3 | 2093 | 1994 | 5.9 | 168.6 | 1729 | 397 |

| n300 | 8.90 | 315.0 | 2326 | 3480 | 9.7 | 237.9 | 1554 | 392 |

| Aggregate relative to CBA | +4% | −12% | −1% | −31% | ||||

Table 13.4

Comparison for Temperature Optimization [500]

| Circuit | CBA | 3-D STAF | ||||||||

| Area (mm2) | HPWL (mm) | # of TSVs | Temp. (°C) | Time (s) | Area (mm2) | HPWL (mm) | # of TSVs | Temp. (°C) | Time (s) | |

| ami3 | 43.2 | 23.9 | 119 | 212.4 | 486 | 41.5 | 24.2 | 116 | 201.3 | 227 |

| ami49 | 1672.6 | 516.4 | 251 | 225.1 | 620 | 1539.4 | 457.3 | 208 | 230.2 | 336 |

| n100 | 6.6 | 122.9 | 1145 | 172.7 | 4535 | 6.6 | 91.5 | 753 | 156.8 | 341 |

| n200 | 6.6 | 203.7 | 2217 | 174.7 | 6724 | 6.2 | 167.8 | 1356 | 164.6 | 643 |

| n300 | 10.4 | 324.9 | 2563 | 190.8 | 18475 | 9.3 | 236.7 | 2173 | 168.2 | 1394 |

| Aggregate relative to CBA | −6% | −16% | −12% | −6 | −75% | |||||

In addition to analytic techniques, other less conventional approaches to floorplan 3-D circuits have been developed. These approaches include genetic algorithms where, as an example, the thermal aware mapping of 3-D systems that incorporate a network-on-chip (NoC) architecture [503]. Merging 3-D integration with NoC is expected to further enhance the performance of interconnect limited ICs (the opportunities that emerge from combining these two design paradigms are discussed in Chapter 20, 3-D Circuit Architectures).

Consider the 3-D NoC shown in Fig. 13.6. The goal is to assign the tasks of a specific application to the processing elements (PEs) of each tier to ensure that the temperature of the system and/or communication volume among the PEs is minimized. The function that combines this objective characterizes the fitness of the candidate chromosomes (i.e., candidate mappings), described by

(13.9)

As with traditional genetic algorithms, an initial population is generated [504]. Crossover and mutation operations generate chromosomes, which survive to the next generation according to the relative chromosomal fitness. The floorplan with the highest fitness is selected after a number of iterations or if the fitness cannot be further improved.

13.1.2 Thermal Driven Placement

Placement techniques can also be enhanced by the addition of a thermal objective. The force directed method used in cell placement [371] and discussed in Section 9.3.1 has been extended to incorporate the thermal objective during the placement process [505] of standard cells in 3-D systems. In this approach, repulsive forces are applied to those cells that exhibit high temperatures (i.e., “hot blocks”) to ensure that the high temperature cells are placed at a greater distance from each other. The applied forces comprise both thermal forces and overlap forces. Since the objective is to reduce the temperature, the thermal forces are set equal to the negative of the thermal gradients. This assignment places the blocks far from the high temperature regions.

Determining these gradients, as discussed in the previous section, is a critical step of any temperature aware technique. Another method to obtain the temperature of a 3-D circuit is the finite element method [505]. The 3-D stack is discretized into a mesh consisting of unit cells, as discussed in Section 12.3. The thermal gradient of a point or node of an element is determined by differentiating the temperature vector along the different directions,

(13.10)

(13.10)

(13.10)

Exploiting the thermal electric duality, the modified nodal technique in circuit analysis [473], where each resistor contributes to the admittance matrix (i.e., matrix stamps), is utilized to construct element stiffness matrices for each element. These elemental matrices are combined into a global stiffness matrix for the entire system, notated as Kglobal. This matrix is included in a system of equations to determine the temperature of the nodes that characterizes the entire 3-D circuit. The resulting expression is

(13.11)

where P is the power consumption vector of the grid nodes. To determine this vector, the power dissipated by each element of the grid is distributed to the closest nodes. From solving (13.11), the temperature of each node is determined during each iteration of the force directed algorithm. The thermal forces can be determined from the thermal gradient between grid nodes, requiring the following expression to be solved,

(13.12)

where fi is the force vectors in the x, y, and z directions. Matrix C describes the cost of a connection between two nodes, as defined in [371].

After the stiffness matrices are constructed, an initial random placement of the circuit blocks is generated. Based on this placement, the initial forces are computed, permitting the placement of the blocks to be iteratively determined. This recursive procedure progresses as long as an improvement above some threshold value is exhibited. The procedure includes the following steps [505]:

1. the power vector resulting from the new placement is determined;

2. the temperature profile of the 3-D stack is calculated;

3. the new value of the thermal and overlap forces is evaluated;

After the algorithm converges to a final placement, a postprocess step follows. During this step, the circuit blocks are positioned without any overlap within the tiers of the system. If one tier is packed, the remaining cells, initially destined for this tier, are positioned onto an adjacent tier. A similar process takes place in the y-direction to ensure that the circuit blocks are aligned into rows. A divide and conquer method is applied to avoid any overlap within each row. A final sorting step in the x-direction includes a postprocessing procedure, after which no overlap among cells should exist.

The efficiency of this force directed placement technique has been evaluated on MCNC [396] and IBM-PLACE benchmarks [506], demonstrating a 1.3% decrease in average temperature, a 12% reduction in maximum temperature, and a 17% reduction in average thermal gradient. The total wirelength, however, increases by 5.5%. This technique achieves a uniform temperature distribution across each tier, resulting in a significant decrease in thermal gradients as well as maximum temperature. The average temperature throughout a 3-D IC, however, is only slightly decreased. This technique, consequently, focuses on mitigating hot spots across a multi-tier system.

In all of the techniques presented in this section, the heat is transferred from the upper tiers to the bottom tier primarily through the power and signal lines and the thin silicon substrates of the upper tiers. No additional means other than redistributing the major heat sources throughout the 3-D stack lessens any significant thermal gradients. Furthermore, these techniques typically assume the power density associated with each module, block, or cell is temporally fixed. However, thermal management is also applicable in real-time, where power densities are monitored and adjusted to prevent the appearance of hot spots that affect circuit performance and contribute to aging, degrading the reliability of the system. These methods are described in the following section.

13.1.3 Dynamic Thermal Management Techniques

Thermal management techniques during circuit operation—typically used in processors—have received significant attention since around 2000 due to increasing power densities. The advent of multi-core architectures has further fueled interest in these techniques as each core within a processor is managed separately, offering several ways to thermally manage these complex computing systems. Although techniques for dynamic power management exist [507], simply reducing power is not sufficient due to the thermal coupling of circuits and spatially varying thermal conductivities across a system. Decreasing power can lower the peak or average temperature of an integrated system, yet the thermal gradients may be higher if appropriate thermal management techniques are not applied. The appearance of hot spot(s) in some region(s) of a circuit can be attributed to the inability of power reduction techniques to eliminate these thermal gradients despite the average temperature of the circuit being maintained within thermal limits.

Dynamic thermal management (DTM) methods can be applied to both software and hardware, where a combination of techniques is often used. In hardware, dynamic frequency voltage scaling (DVFS) and clock gating (or throttling) are commonplace techniques, while in software, workload (or thread) migration is usually employed to cool down cores [508]. The granularity at which thermal policies are applied can also vary, in particular, for multi-core processors. For example, a single voltage/frequency pair can be chosen for an entire processor (a global policy) or each core may have a separate voltage/frequency depending upon the temperature of the core (a distributed policy).

A number of tradeoffs exist among these choices, leading to a relatively broad design space for dynamic thermal management. Software methods (driven by the operating system) are usually coarse grained methods and are less effective than hardware techniques since the latter can respond faster to alleviate steep transient thermal loads. Software methods can, however, be implemented relatively easily by scheduling tasks among the different parts of a system. In a multi-core system, threads are swapped among processors or assigned to different processors depending upon the temperature of each core, where these changes typically take place at tens of milliseconds [509,510]. Several techniques use a 10 ms interval for job scheduling as this interval is also used in the Linux kernel for timer interrupts [509]. DVFS mechanisms are more complex to implement. This complexity increases if a distributed (per core) DVFS scheme is employed. Despite the greater complexity, however, these techniques are widely used in modern processors to maintain the temperature within specified limits [511,512].

Determining the temperature of a circuit during operation is another important aspect of dynamic thermal management as slow responses can render these techniques inefficient or excessively strict. Different means are used to determine the temperature of a circuit including thermal sensors [512] and performance counters that characterize a core, such as accesses to register files, cycle counts, and number of executed instructions [508]. Information from thermal sensors is used directly, where the primary issue for sensors is the response time to changes in temperature, recognizing that thermal constants within integrated systems are typically on the order of milliseconds. Thermal sensors constitute a reliable means for measuring circuit temperature to guide a thermal management policy. Alternatively, the information from performance counters is loosely connected to temperature and, although widely explored in the literature, outputs from these components represent a proxy and should be used with caution [509].

Less complex dynamic thermal policies are also available, where the online workload schedule is based on the thermal profile of the target system obtained offline for the expected combination of workloads [513]. This strategy reduces the overhead of thermal management; the efficiency, however, may be lower than fully online methods. These methods are particularly effective for those scenarios where the workload combinations differ from those workloads employed during offline energy profiling of the system.

Several efforts employing dynamic thermal management approaches, both in software and hardware, have been published [497,508, 510–512]. Similarly, several works pay attention to the design and allocation of thermal sensors within 2-D circuits [514]. Although these techniques can also be applied to 3-D circuits, the resulting efficiency may not be similar. This drop in efficiency can be attributed to the strong thermal coupling between adjacent circuits. This coupling is more pronounced in the vertical direction due to the significantly smaller physical distance between circuits and that heat primarily flows in the vertical direction. Other reasons for developing novel thermal policies for 3-D systems include the case where memory (e.g., DRAM) tiers are stacked on top of a processor tier [515]. Although the processor tier is located next to the heat sink, care must be placed to ensure processor operation does not cause the temperature to rise beyond the strict thermal limits of DRAM [516]. Higher overall power can occur due to more frequent refreshing of the stored data. The remainder of this section reviews the evolution of thermal management techniques for 3-D systems, emphasizing either multi-tier processor architectures or a combination of a processor tier vertically integrated with tiers of memory.

13.1.3.1 Dynamic thermal management of three-dimensional chip multi-processors with a single memory tier

Although automatic control theory has been used to guide workload scheduling for thermal management [508], most techniques are based on heuristics due to low complexity characteristics. A heuristic OS-level scheduling algorithm targeting 3-D processors has been proposed in [498]. The key concept of this technique is to consider the strong thermal coupling along the vertical direction in the scheduling process to balance temperatures across the stack, thereby reducing thermal gradients and decreasing the frequency in those cores with hot spots. The occurrence of hot spots requires aggressive thermal measures which degrade system performance. Consider a 3-D multi-core system. The assumption is that irrespective of the floorplan, which can also be thermally driven, leading to the stacking of energy demanding cores with low power caches, stacking of cores may be unavoidable. In this case, careful scheduling lowers the temperature of the system, where the scheduling process considers the entire stack rather than individual tiers. Assigning tasks to those cores situated in the tier next to the heat sink should, therefore, not be based on the temperature of a specific tier (which is lower than in other tiers) but, rather, the temperature of those cores in tiers farther away.

Due to the vertical thermal coupling, rather than assigning a single task to one core, a stack of cores (for example, an entire 3-D system split into several pillars of cores) is considered, where a task is assigned to every processor within this stack. The terms, “super-core” and “super-tasks,” are used to describe the main features of the heuristic. Assuming a 3-D system with n tiers and m cores per tier, the tasks on n×m cores require scheduling. With this notation, a super-core consists of n cores, where a task is assigned to each core to distribute a balanced temperature. The assignment process forms super-tasks that require comparable power. These super-tasks are assigned to each super-core.

To balance the power across the super-cores, the power of all of the (n×m) tasks is sorted. Each task and associated power are assigned to the available m bins (of super-tasks). The assignment proceeds iteratively by inserting power values, in descending order, to that bin with the smallest total power during this iteration. The process continues until every task has been assigned to a bin. An example assignment is shown in Fig. 13.7, where sorting of power within tasks and the resulting task assignment to four bins are depicted for a twotier 3-D system containing four cores per tier. As the main operation of this heuristic is sorting, the time complexity is O(mnlog(mn)) [498].

In addition to task scheduling, DVFS within each super-core is assumed, although DVFS for a core is not necessarily straightforward. Thus, if a core exceeds a thermal threshold, DVFS scales the voltage supply of the core with the highest power (which may or may not be the overheated core within a super-core). The objective is to penalize the task that consumes the highest power, which may cause overheating in a vertically adjacent core. DVFS is applied to only one core within a super-core, which limits the benefits of this technique.

Job scheduling is applied to the benchmark suite of applications, SPEC2000 [517], where several workloads are combined to form benchmark sequences with diverse power profiles and thermal loads. For example, thermal analysis of the crafty and mcf workloads yields a HC (hot-cold) scenario. These workloads are applied to a 3-D multi-core architecture with two tiers and four cores per tier, where a P4 Northwood architecture operating at 3 GHz is assumed for each core.

To evaluate the effectiveness of the heuristic, other task assignments have also been considered. The baseline for comparison is a random assignment of tasks to cores based on the Linux 2.6 scheduler [509]. The scheduler works similarly to Linux but with a slightly smaller scheduling interval of 8 ms. A round robin scheduler is also utilized for comparison. Finally, another scheduling approach, where the objective is to balance temperature by assigning a high power task to a cool core but without considering the temperature of the vertically adjacent cores, is also evaluated for the purposes of comparison.

A comparison in terms of peak temperature among these task scheduling methods for the target workload scenarios demonstrate that the heuristic assignment reduces the peak temperature by up to 24°C as compared to a random assignment. Other techniques similarly decrease the peak temperature. Thermal coupling in task scheduling supports the removal of a large number of thermal problems when DVFS is employed. DVFS, however, often counteracts the benefits of job scheduling as DVFS requires a greater performance overhead.

In contrast to the method described in [498], where frequent use of DVFS is avoided, other techniques consider combining both software and hardware techniques. The objective of these methodologies is to balance the effectiveness of each technique against the overhead without favoring one technique over the other. Thus, a thermal management framework has been developed for 3-D chip multi-processors (CMP), where clock gating, DVFS, and workload scheduling are all applied to satisfy thermal limits without degrading performance [497]. This goal is achieved by applying these techniques both in a distributed (or local) and global (or centralized) manner. A distributed approach provides greater versatility when applying a thermal policy as allowing DVFS only for a core within each super-core is a restricted approach. The structure and operation of this framework are discussed in the following subsection.

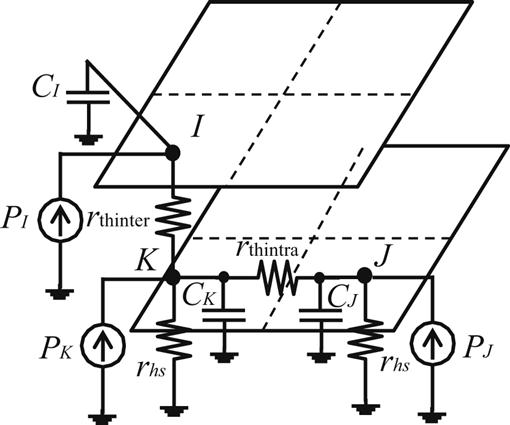

13.1.3.2 Software/hardware (SW/HW) thermal management framework for three-dimensional chip multi-processor

The development of this framework requires a set of tools for architectural, power, and thermal modeling. These tools include the M5 architectural simulator [518], a Wattch-based EV6 model [519], CACTI [520], the approach described in [521] for power modeling of the cores, caches, and leakage power, and a tool based on [522] for thermally analyzing these systems. Irrespective of the specific tools, architectural simulators, power models and/or simulators, and thermal analysis tools are all required to design and evaluate a dynamic thermal management policy for CMP or multi-processor system-on-chip (MPSoC) systems. The framework is based on specific guidelines, which are based on a first order thermal analysis of a two tier CMP, as illustrated in Fig. 13.8. In this example, the electrical-thermal duality is used to analyze the thermal behavior of a CMP, where each core is represented by a single node in an equivalent thermal model. Only a simple path for the heat to flow is assumed to exist within the stack. At first glance, the thermal conductivity of the cores in the upper tier (e.g., core I) is lower than the thermal conductivity of those cores in the lower tier, which implies that the temperature of core I is higher than in cores J and K. Additionally, thermal coupling between cores in the same tier (cores J and K) is considerably lower, implying comparable cooling efficiencies. These observations suggest that workload scheduling should consider the different cooling efficiencies of the cores and the effect that a workload can have on other cores within a system.

To extend this analysis to a CMP with m cores, specific data that affects the thermal behavior of the cores need to be determined. Consequently, the cooling efficiency of each core determines the workload schedule. The schedule is extracted from the steady-state heat conduction expression, T=PRth−1, where matrix Rth includes the thermal resistance connecting the nodes (i.e., cores) within the thermal model. Notating the temperature of core i as Ti, the temperature is

(13.13)

(13.13)

(13.13)

where rthi,j is the thermal resistance between cores i and j, and pj is the power consumed by core j. The row i,j of matrix Rth describes the effect of the cores on the temperature at core i. Furthermore, considering the quadratic relationship between dynamic power consumption and voltage, and that the operating frequency exhibits a roughly linear dependence on voltage, power dissipation can be described as pj=si,j fj3 where fj is the operating frequency of core j. The term si,j is the product of the switching activity of the core multiplied by the switched capacitance, which is linearly proportional to the number of instructions per cycle (IPC) of the job being executed on core j. The figure of merit, thermal impact per performance (TIP), is introduced to formulate guidelines for the thermal control of 3-D CMPs,

(13.14)

(13.15)

which denotes the effect of core j on the temperature of core i, where the frequency fi (and voltage) through DVFS and the workload IPCj are assigned to core j.

Robust thermal management of a CMP is intended to improve performance while satisfying specific thermal constraints, which can vary among cores. A metric to describe the performance of a CMP is the total number of instructions per second executed by a CMP,

(13.16)

(13.16)

(13.16)

The thermal constraints that apply to a CMP are determined by the thermal limit of each core, which is assumed to be equal for all cores. Consequently, the requirement is that ![]() . Satisfying this constraint requires equating the thermal impact per performance of all cores. This decision leads to assigning different frequencies among those cores with different cooling efficiencies, and executing jobs with different IPCs. This method results in two approximate design guidelines. For intertier processors, the frequencies and IPCs should be assigned based on the cooling efficiency of the cores, given by (13.13) to (13.16), where both the frequency and IPC are, in general, different. This guideline is in accordance with the heuristic described in [498], where the assignment of a workload to a core considers both the temperature as well as the location of a core within the stack. The capability to transfer heat to the ambient is affected by the power dissipated during an assigned workload. Alternatively, among cores situated within the same tier, where the cooling efficiency is roughly the same based on the thermal model of Fig. 13.8, the same frequency and workloads with similar IPC are assigned.

. Satisfying this constraint requires equating the thermal impact per performance of all cores. This decision leads to assigning different frequencies among those cores with different cooling efficiencies, and executing jobs with different IPCs. This method results in two approximate design guidelines. For intertier processors, the frequencies and IPCs should be assigned based on the cooling efficiency of the cores, given by (13.13) to (13.16), where both the frequency and IPC are, in general, different. This guideline is in accordance with the heuristic described in [498], where the assignment of a workload to a core considers both the temperature as well as the location of a core within the stack. The capability to transfer heat to the ambient is affected by the power dissipated during an assigned workload. Alternatively, among cores situated within the same tier, where the cooling efficiency is roughly the same based on the thermal model of Fig. 13.8, the same frequency and workloads with similar IPC are assigned.

These guidelines underpin the thermal management policy developed at the operating system level [497], where the temperature is obtained from the thermal sensors. Performance counters gather information for workload monitoring and to estimate the IPC. With this information and the aforementioned guidelines, this framework applies distributed workload migration and real-time thermal control, globally adapting the power and thermal attributes across CMPs.

At the global CMP (global) level, the power and thermal budgets are determined for each core using a hybrid online/offline technique. For each workload the optimal voltage/frequency pair is determined. The temperature of each workload is computed, and the power is updated to consider the dependence on temperature. The voltage/frequency (V/F) pair is chosen to satisfy the thermal limit. After several iterations, the temperature and temperature dependent power converge. The resulting V/F pair is stored in a look-up table. This table is integrated with the OS and is periodically invoked.

Thermal balancing is also leveraged by a distributed policy where the IPC of each core is monitored and, if required, adjusted to guarantee thermal safety. This adjustment is primarily between vertically adjacent cores, since greater thermal heterogeneity is noted. The workload migration swaps jobs to assign those workloads with high IPCs to those cores with higher cooling efficiencies. This migration of workloads takes place every 20 ms. If thermal transients, however, occur at faster rates, other thermal control measures are considered, such as DVFS and clock gating. These techniques are applied locally, thereby providing better control of thermal gradients across the stack as compared to a centralized approach. Moreover, due to the considerable impact on performance of clock gating, DVFS is the primary method. Clock gating is only used for thermal emergencies.

This framework is applied to a 3-D CMP consisting of three physical tiers. Two of these tiers host eight Alpha21264 cores (four in each tier) assuming a 90 nm CMOS node, and the third tier contains the L2 cache. A comparison of the framework with a strictly distributed thermal policy [508] for this 3-D CMP, where the simulated workloads are based on applications from SPEC2000 [517] and Media benchmark suites, exhibits an improvement of, on average, 30%. This situation is due to the strong thermal coupling of the vertically adjacent cores. Local control cannot capture this coupling since the workload and V/F pair are chosen for this core. Employing power and thermal budgeting at the global level can, however, address this limitation, improving the overall throughput of the CMP.

In addition to the cooling efficiency, an analysis of the characteristics of the workloads can yield greater performance of the CMP, assuming the same thermal limits [523]. To assess these improvements, the workloads are classified as compute bound or memory bound, depending upon the memory requirements of the workloads. Analyzing memory transfers between the core and in-stack memory provides useful information that can be included within the thermal policies. Considering that instructions are executed at a clock rate fCPU and the off-chip memory transfers due to L2 cache misses are performed at a clock rate foff-chip, the time to execute a task is [523],

(13.17)

where won-chip is the number of clock cycles to execute CPU instructions without a cache miss, and woff-chip is the number of clock cycles for external transfers if the core stalls. This simple expression describes whether a workload is compute bound (e.g., high won-chip) or memory bound (e.g., high woff-chip). Both of these quantities depend upon the type of application and the time needed for a core to execute an application. The off-chip clock rate is also considered constant, while the clock cycles for off-chip memory transfer are modeled as a function of the number of cache misses. woff-chip=(aNmiss+b)·fCPU is the number of L2 misses denoted by Nmiss [524]. The coefficients a and b are fixed and depend on the target architecture, while the number of misses is determined by performance monitors, such as PAPI [525].

Collection of this information can quantify the speed up required to execute a workload due to an increase in the core clock frequency (which affects the temperature). This speed up for each core and workload at two different frequencies is

(13.18)

This simple metric computes the gains in IPS for a workload with different core clock frequencies. These speed ups, however, cannot be exploited unless the temperature of the core is lower than the maximum temperature at the target frequency. Application of these higher frequencies is performed effectively if the instantaneous temperature rather than the steady state temperature (SST) of a core is employed. The use of the instantaneous temperature tracks the closeness of each core to the maximum allowed temperature and core frequency. This approach is not considered in [497] where the SST of a core determines the thermal effect on the cores and drives the workload allocation policy. Note that the term instantaneous temperature refers to the temperature of a core over several milliseconds, since thermal constants are orders of magnitude greater than the clock frequency.

A considerably lower instantaneous temperature, notated as Tinst from the maximum allowed temperature Tmax, provides a temperature slack [523] which can be exploited by operating the core at a higher frequency. Performance improvements can, therefore, be determined by SU for each workload. Thus, not only the cooling efficiency of each core but also the SU can improve the IPS of a 3-D CMP [523], as described by (13.16). These frequency adjustments of finer granularity should, however, be carefully performed due to the strong thermal coupling in the vertical direction. This coupling has a detrimental effect on the temperature of the other cores within the 3-D CMP, particularly those cores located in other tiers at the same 2-D position.

The optimization problem of maximizing (13.16) is solved subject to the constraint that the temperature of those cores farthest from the heat sink is equal to Tmax. Solving this optimization problem with the use of Laplace multipliers leads to the following expression applied to each core i [523],

(13.19)

where M is a constant. Determining the precise frequency for each core that satisfies (13.19), however, is not feasible since the clock frequency changes discretely. Only a small set of clock frequencies is supported by a CMP. Consequently, an approximate solution is offered to ensure that the frequency assigned to each core deviates the least from (13.19).

Solving (13.19) requires the thermal resistance Rthi, power Pi, and IPCi of each core i. The thermal resistance of each core in the CMP is illustrated in Fig. 13.8, while the power is determined by the IPC of the core. Allocation of the IPC to the cores for each workload proceeds according to the heuristic of Section 13.1.3.1, where an example is depicted in Fig. 13.7. Rather than explicit power values, the IPC of each workload matches the super-tasks (i.e., a set of tasks) to the super-cores of the CMP. This procedure is repeated and threads are migrated at regular intervals (of 100 ms) [523].

Several scenarios based on the benchmark applications SPEC2000/2006 [517] and ALPbench [526] are used to evaluate the efficiency of thread migration based on the instantaneous temperature of each core. These scenarios include workloads with high, low, and mixed IPCs denoted, respectively, as HIPC, LIPC, and MIPC. The SU of these benchmarks is also classified as high, low, and mixed, where the frequency of each core switches between 1 and 2 GHz. The resulting IPS for a 3-D CMP comprising two core tiers and one memory tier are compared for two different thread management approaches. The first approach follows from [497]. The HIPCs are assigned to those cores closer to the heat sink (3-DI) and to cores with a lower SST, setting the clock frequency of each core to ensure that the SST does not exceed Tmax (3-DI-SST). Alternatively, the thread allocation assigns core frequencies based on the temperature slack of each core (Ti(t)≤Tmax) (IT), and assigns the super-task with the highest sum of IPCs to the coolest super-core. The thread with the highest SU (3-DIS) is assigned to the core of each super-core located closest to the heat sink (3-DIS-IT). A comparison between these two thread allocation policies results in an average IPS improvement of 18.5% for those scenarios listed in Table 13.5. The temperature slack for 3-DIS-IT is much lower, demonstrating that most cores operate close to Tmax due to the higher frequency, yielding both a higher total IPS and power for each scenario.

Table 13.5

Average Power Dissipation (Pavg) and Average Temperature Slacks (Tslack) of 3-DIS-IT and 3-DI-SST [523]

| Benchmark Combination | 3-DI-SST | 3-DIS-IT | ||

| Pavg (W) | Tslack (°C) | Pavg (W) | Tslack (°C) | |

| hipc-hm | 91.63 | 7.29 | 123.40 | 2.46 |

| hipc-mm | 113.86 | 5.08 | 118.97 | 4.29 |

| hipc-lm | 99.00 | 6.95 | 104.63 | 6.02 |

| mipc-hm | 104.30 | 7.32 | 150.53 | 2.30 |

| mipc-mm | 105.94 | 5.17 | 115.32 | 4.25 |

| mipc-lm | 84.56 | 7.53 | 87.77 | 6.99 |

| lipc-hm | 69.98 | 10.44 | 70.14 | 10.40 |

| lipc-mm | 85.03 | 7.37 | 85.70 | 6.94 |

| lipc-lm | 119.34 | 5.74 | 124.64 | 4.85 |

13.1.3.2.1 Dynamic thermal management of three-dimensional chip multi-processors with multiple memory tiers

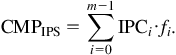

These techniques relate to CMPs when a single memory tier is considered. Adding more memory tiers improves performance as fewer cache misses occur; however, thermal management becomes a more acute issue as the distance of those tiers from the heat sink increases. The use of nonconventional memories, such as magnetic RAM [527], can alleviate this situation since a nonvolatile memory tier does not leak current, reducing the overall power of the stack. The introduction of these memory technologies requires different approaches for dynamic thermal management of 3-D CMPs since these memory technologies exhibit substantially different characteristics as compared to SRAM-based cache. For example, nonvolatile memories are slower and require higher energy to write, while the endurance is lower than SRAM. The main traits of different memory technologies are reported in Table 13.6.

Table 13.6

Parameters of Different Memory Technologies Fabricated in 65 nm Technology [529]

| SRAM | MRAM | PCRAM | |

| Cache size | 128 kb | 512 kb | 2 MB |

| Area (mm2) | 3.62 | 3.30 | 3.85 |

| Read latency (ns) | 2.252 | 2.318 | 4.636 |

| Write latency (ns) | 2.264 | 11.024 | 23.180 |

| Read energy (nJ) | 0.895 | 0.858 | 1.732 |

| Write energy (nJ) | 0.797 | 4.997 | 3.475 |

| Static power at 80°C (W) | 1.131 | 0.016 | 0.031 |

| Write endurance | 1016 | 4×1012 | 109 |

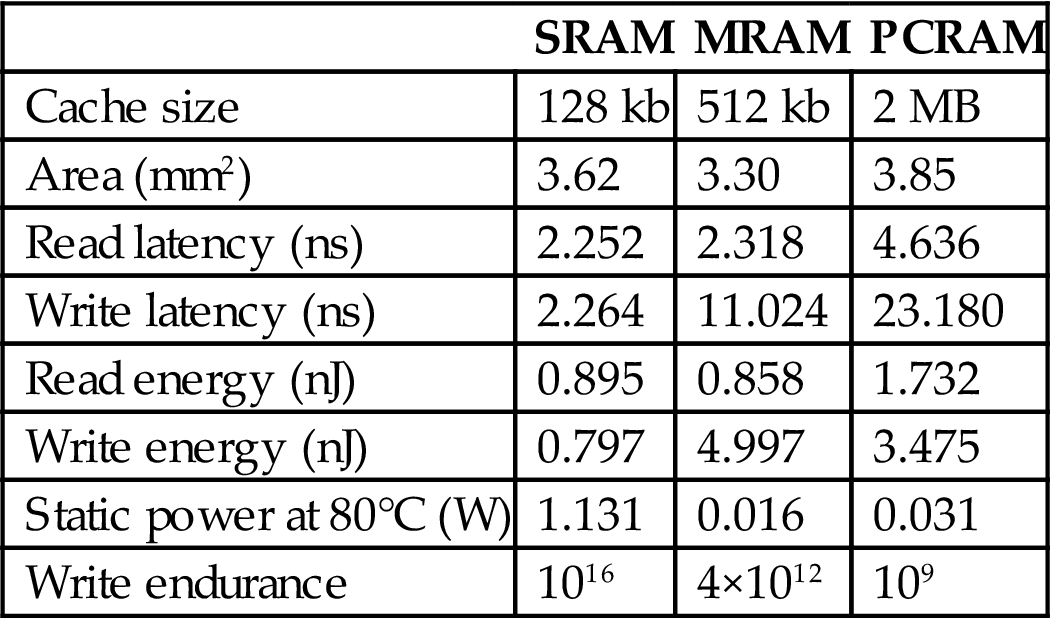

As magnetic memory is slower than SRAM, these memory tiers should be used to store less immediate data, while frequently accessed data or frequent write accesses should be placed within the SRAM tier. Considering a 3-D CMP that includes a mixture of memory tiers, an objective to improve the IPS of the CMP while satisfying thermal limits can be satisfied by power gating a cache at the “way-level” and applying DVFS for the cores of the CMP. An example of an architecture combining a mixture of memory technologies is shown in Fig. 13.9, where one processing tier with four cores is stacked with three tiers of SRAM and one tier of MRAM for L2 cache. L1 cache is integrated within the processing tier. The memory organization in each tier is also shown in the figure along with the vertical bus connecting the cache to the cores. The cores are connected through a crossbar switch (a large number of cores would require a NoC topology [528]). Allocation of the cache ways1 to each core lowers the temperature [527]. This allocation occurs dynamically both for the core and cache tiers where the leakage power of the memory tiers is also considered within the power budget. Key to this allocation strategy remains the notion of heterogeneous thermal coupling in the vertical direction throughout the entire stack, including the memory tiers.

To illustratively explain the concept behind the cache way and clock frequency allocation process, consider the example shown in Fig. 13.10. Five different schemes are applied to maximize IPS. In Fig. 13.10A, a low frequency clock (1 GHz) is chosen for both cores and cache ways allocated to each core from all of the SRAM tiers. As core 1 executes a memory demanding benchmark (Art), additional cache ways are provided to this core. The frequency cannot be substantially increased due to the greater power required by the L2 cache. In Fig. 13.10B, the clock frequency of both cores rises to 3 GHz but the cache available for each core is decreased to avoid excessive temperatures. Data would be lost and should therefore be transferred from main memory. In Fig. 13.10C, the clock of each core is adjusted through a DVFS mechanism, supporting a tradeoff between the capacity of the available L2 cache and the clock frequency. Replacing a tier of SRAM with MRAM (see Fig. 13.10D) and turning off some SRAM tiers supports a clock frequency of 3 GHz for both cores. Some data can be stored in the MRAM tiers, avoiding slow off-chip memory transfers, thereby achieving a better IPS than the system shown in Fig. 13.10B. Depending upon the workload, this hybrid-DVFS approach also employs DVFS to tradeoff the number of activated cache ways with clock frequency to ensure the resulting IPS of the 3-D CMP is maximized.

In the schemes shown in Figs. 13.10D and E, data stored in the MRAM tier are transferred into the faster SRAM tiers to limit the on-chip energy expended to transfer data from/to the memory. Data migration takes place with counters for each core that collect information on the least recently used and most recently used data blocks. This policy is implemented within the L2 memory controller [527]. Thus, a cache miss in the SRAM tier is compensated if a cache hit for this data occurs in the MRAM tier.

Maximizing IPS requires a set of parameters for both the cores and memory, including the clock frequency of each core, the number of SRAM and MRAM ways allocated to each core, and the number of activated SRAM and MRAM ways physically allocated on top of each core. This allocation occurs dynamically, where a configuration interval of 50 ms is employed [527]. Partition of the power gated memory blocks is also demonstrated in [530] but not to increase CMP throughput.

The IPS for the target 3-D architecture is analytically described in [527]. Two additional concepts are introduced to determine the number of cache ways allocated to each processor as well as which ways are activated. Consequently, the performance improvement (PI) of IPS in terms of the SRAM cache ways ![]() assigned to core i is

assigned to core i is

(13.20)

A similar expression applies to the cache ways of the MRAM, ![]() . Similar to PI, the performance loss (PL) incurred by the activation of one more cache way within a super-core

. Similar to PI, the performance loss (PL) incurred by the activation of one more cache way within a super-core ![]() , entails a decrease in clock frequency fi to maintain the temperature within specified limits,

, entails a decrease in clock frequency fi to maintain the temperature within specified limits,

(13.21)

(13.21)

(13.21)

A similar expression applies for the cache ways of the active MRAM, ![]() . With PI, PL, and the Lagrange multipliers, the IPS reaches a maximum for a specific temperature if the SRAM and MRAM cache ways for each core are selected to ensure that PI and PL are equal among all cores. Greedy heuristic algorithms and the bisection method are typically utilized to determine the SRAM and MRAM cache ways allocated to each core as well as the number of active ways within a configuration interval. The number of active ways depends upon the clock frequency of the core and the maximum tolerated temperature.

. With PI, PL, and the Lagrange multipliers, the IPS reaches a maximum for a specific temperature if the SRAM and MRAM cache ways for each core are selected to ensure that PI and PL are equal among all cores. Greedy heuristic algorithms and the bisection method are typically utilized to determine the SRAM and MRAM cache ways allocated to each core as well as the number of active ways within a configuration interval. The number of active ways depends upon the clock frequency of the core and the maximum tolerated temperature.

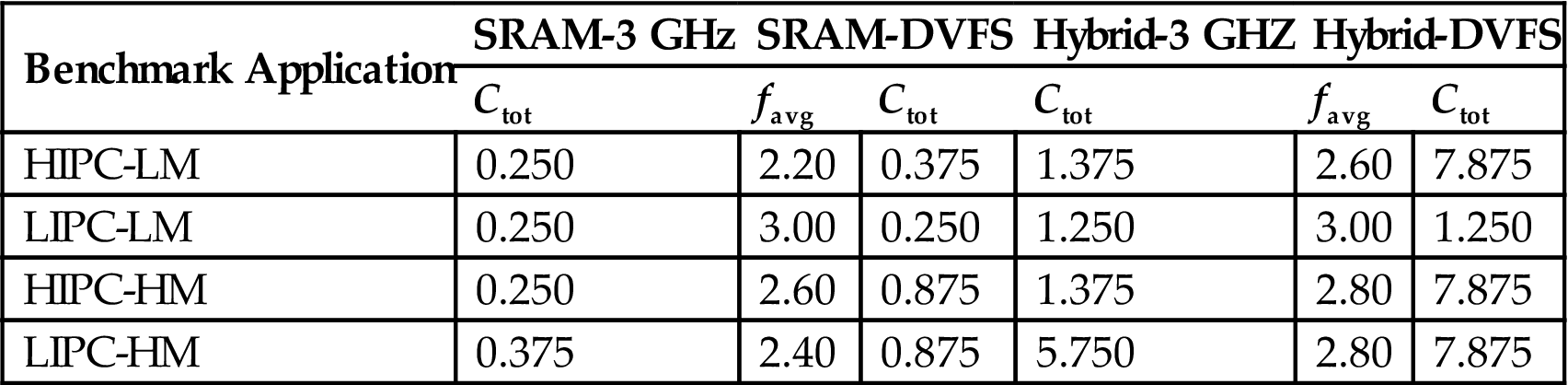

To demonstrate the efficiency of hybrid-memory and the performance benefits of the cache way allocation, a set of benchmarks is evaluated on the target architecture. The features of the memory tiers are listed in Table 13.6. The processor tier is based on the Intel Core i5 technology operating at 3 GHz. The benchmark applications are based on the SPEC2000/2006 and include combinations of workloads with HIPCs and low IPCs (LIPCs) as well as high memory (HM) and low memory (LM) demands (see Table 13.7). The schemes depicted in Figs. 13.10C–E are compared to the scheme where the cores operate at 3 GHz, SRAM is only used, and most of the cache ways are power gated to satisfy temperature requirements (Fig. 13.10B). The results of this comparison are reported in Table 13.8, where SRAM-DVFS (Fig. 13.10C) improves IPS by 26.7% and hybrid-DVFS exhibits an increase in IPS of, on average, 55.3%. Moreover, the energy-delay product (EDP) is also improved by 78.2% for SRAM-DVFS. This improvement is achieved by activating additional cache ways and using a lower clock frequency. The fewer cache misses allow the execution to finish earlier than SRAM-3 GHz, yielding improved EDP. Similarly, the hybrid-DVFS yields a lower EDP as compared to the hybrid-3 GHz of, on average, 32.1%.

Table 13.7

Combinations of Benchmark Applications to Compare the Performance of Different Thermal Management Schemes Based on SRAM/MRAM L2 Cache [527]

| Scenarios of Benchmark Applications | Benchmark Applications for Each Scenario |

| HIPC-LM | equake, parser |

| LIPC-LM | lbm, mcf06, sjeng, ammp |

| HIPC-HM | gcc, bzip |

| LIPC-HM | gcc, art |

Table 13.8

Average Clock Frequency favg (GHz) and Allocated L2 Cache Capacity Ctot (MB) for Each Thermal Management Scheme [527]

| Benchmark Application | SRAM-3 GHz | SRAM-DVFS | Hybrid-3 GHZ | Hybrid-DVFS | ||

| Ctot | favg | Ctot | Ctot | favg | Ctot | |

| HIPC-LM | 0.250 | 2.20 | 0.375 | 1.375 | 2.60 | 7.875 |

| LIPC-LM | 0.250 | 3.00 | 0.250 | 1.250 | 3.00 | 1.250 |

| HIPC-HM | 0.250 | 2.60 | 0.875 | 1.375 | 2.80 | 7.875 |

| LIPC-HM | 0.375 | 2.40 | 0.875 | 5.750 | 2.80 | 7.875 |

In all of these dynamic thermal management (DTM) techniques, thermal control is achieved by recognizing the thermal heterogeneity (e.g., cooling efficiency) among cores in a 3-D processor and carefully moving the heat generated within each region of the system. The thermal conductivity of these regions is fixed, similar to the physical design techniques discussed in Sections 13.1.1 and 13.1.2. However, as discussed in Section 12.3.1.1, the intertier interconnects can carry significant heat toward the heat sink, reducing the temperature and the thermal gradients within a 3-D IC. Consequently, these structures enhance the flow of heat to the ambient in addition to connecting circuits located on different physical tiers within the stack. Efficiently placing the available vertical connections or adding more TSVs to increase the thermal conductivity of the 3-D circuits facilitates the flow of heat towards the ambient, providing another method to control the thermal behavior of these circuits. These techniques are discussed in the following section.

13.2 Thermal Management Through Enhanced Thermal Conductivity

Methods that facilitate the removal of heat from a 3-D stack are discussed in this section. A 3-D system is typically designed to ensure that the thermal conductivity within each tier is increased and the thermal resistance towards the heat sink is as low as possible. As integrated systems consist primarily of layers of dielectric, metal, and silicon, emphasis is placed on increasing or redistributing the volume of metal within the 3-D stack to increase the thermal conductivity of specific regions within each tier. Furthermore, as the primary direction of heat flow is vertical, the density of the (metallic) TSVs plays a significant role in lowering the thermal resistance along this path. Alternatively, liquid cooling techniques for 3-D ICs can be employed as these techniques are more efficient in mitigating thermal issues. The fluid flows between adjacent tiers, enabling faster removal of heat through each tier, avoiding highly thermal resistive paths to the heat sink.

Several techniques exist to insert thermal vias to decrease the temperature in those tiers located farthest from the heat sink. The insertion of thermal intertier vias entails an area and/or wiring overhead, which depends upon both design and technological parameters. Furthermore, techniques exist that determine the number of these thermal vias applied to diverse stages of the design flow, resulting in different efficiencies. If thermal via insertion cannot satisfy the temperature constraints, auxiliary horizontal wires are used to facilitate the lateral spreading of heat towards the TSVs, another means to lower the temperature of a 3-D stack.

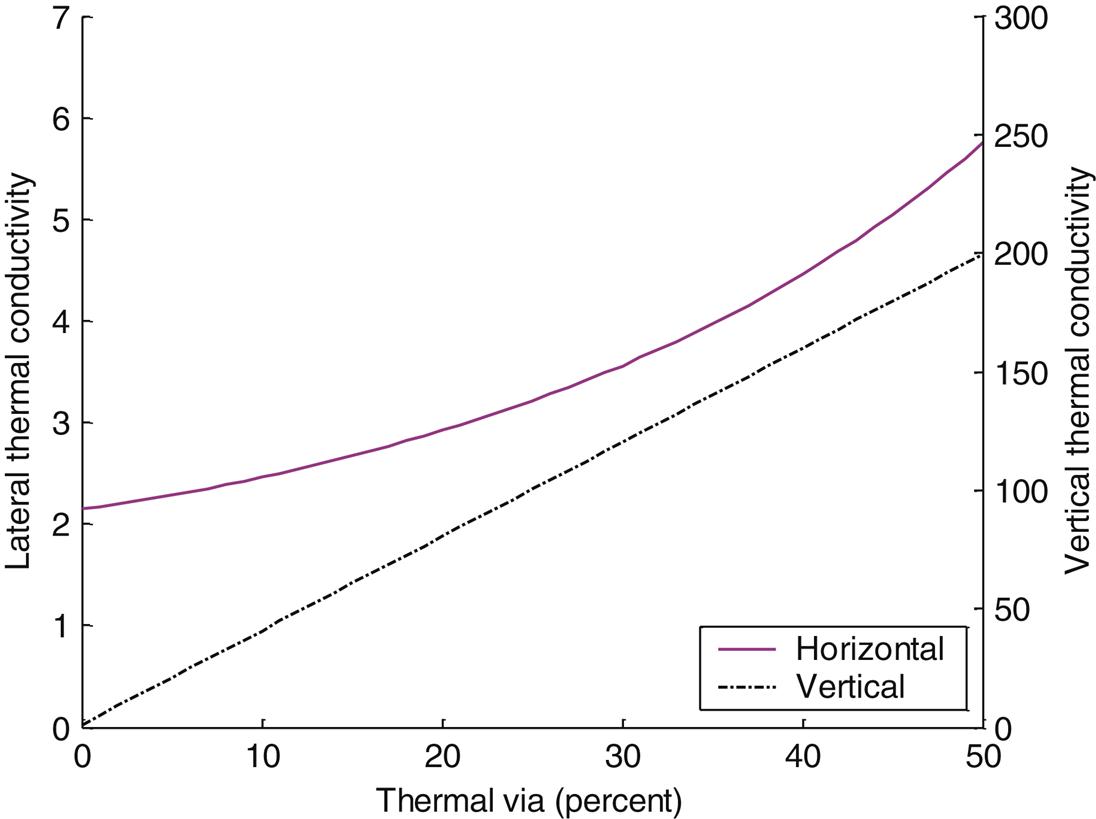

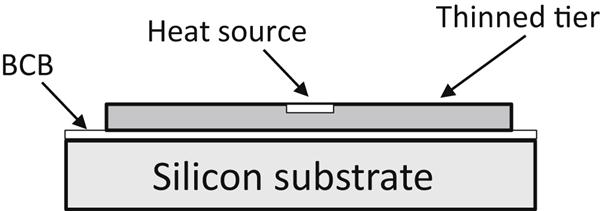

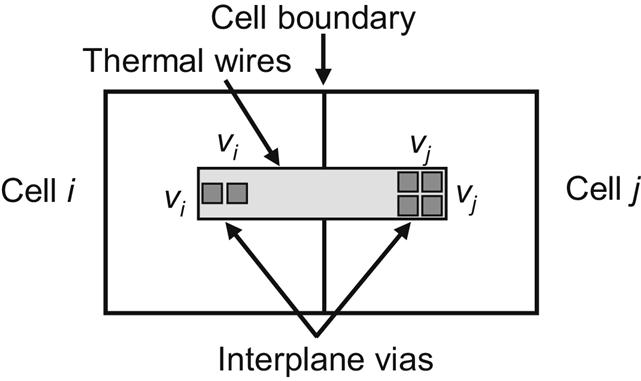

The use of both vertical and horizontal interconnections to move heat within a stacked system has been considered in systems-in-package (SiP) technologies [531]. Communication among tiers in SiP passes through vertical off-chip wires connected with wide metal stripes to the I/O pad area of each tier. An example of this technology is illustrated in Fig. 13.11. Prototype structures of this ultra-thin tier technology demonstrate several issues and tradeoffs related to thermal management of vertically integrated systems. Benzocyclobutene (BCB), for example, can be used as an adhesive layer with a thermal conductivity of 0.18 W/m-K. This material hinders the flow of heat in the vertical direction. Vertical flow of heat can be averted if the silicon substrate for each tier other than the first tier is thinned to reduce the length of the thermal path to the heat sink. This practice is beneficial; however, extreme substrate thinning in the range of 10 μm degrades thermal flow since the volume of the silicon is too small, preventing the heat from spreading laterally within the tiers, leading to hot spots [531]. Simulations have demonstrated that for the structure shown in Fig. 13.12, decreasing the BCB layer from 3 to 2 μm lowers the vertical thermal resistance of a two tier system by 22% (where the heat source area is 2×105 μm2). For the same sized heat source, the thermal resistance increases by 17% when the silicon substrate is thinned from 15 to 10 μm [531].

To further facilitate the flow of heat, an intermediate layer with embedded metal structures, such as a grid, is utilized. These structures allow the heat to spread laterally into the highly conductive vertical vias, lessening the rise in temperature. These structures, however, are only efficient for a certain physical distance from the vias, termed the effective transverse thermal transfer length,

(13.22)

where kCu and kBCB are, respectively, the thermal conductivity of copper and BCB, and tCu and tBCB are, respectively, the thickness of the copper grid/plate and BCB layer. As described in this expression, the effective thermal length does not change linearly with the transverse thermal resistance of the copper grid/plate [531].

Early investigation of thermal issues [531] demonstrated the potential and limitations of enabling faster flow of heat within 3-D systems. As fabrication processes for TSVs have evolved, the use of thermal TSVs (TTSVs) as heat conduits has gained popularity and TTSVs have been included in several physical design techniques. Alternatively, TSVs are employed as a means to shield a circuit from both rises in temperature and electrical noise generated by adjacent circuit blocks within the same tier [532]. The objective is not to facilitate the flow of heat but rather to block the rise in temperature of a circuit block from adjacent blocks dissipating significant power. The efficiency of this approach, where a metal guard ring is replaced by a ring of uniformly spaced TSVs, improves as the TSV diameter grows, as illustrated in Fig. 13.13 [532].

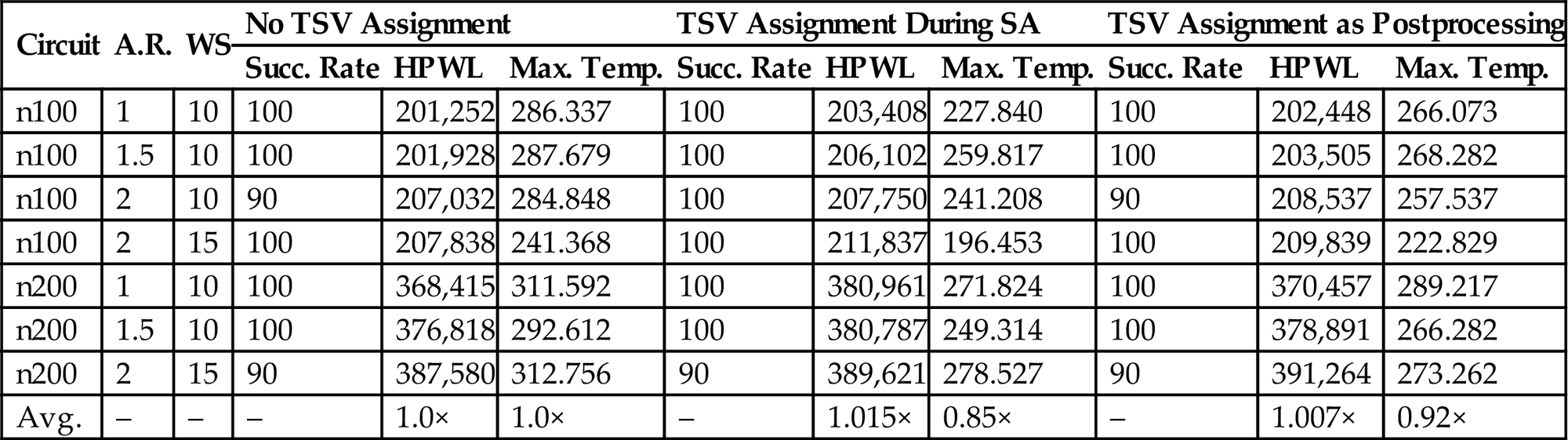

Although this practice is beneficial, hot spots developed within the block cannot be completely removed. This situation is more pronounced if heat is generated from the adjacent blocks in the vertical direction or if the effective thermal length limits efficient heat transfer to the periphery of the blocks. Consequently, integrating the TSV insertion process into the physical design process of a 3-D system can lead to more thermally robust and reliable solutions. Issues related to including TSVs within the physical design process include allocation or planning of the TTSVs, the system granularity to insert TSVs (for example, standard cell or block) whether TTSVs (and more broadly, temperature) is an objective or constraint, and the overhead of the TTSV on other design objectives such as performance, wirelength, and area. Several TSV planning techniques are discussed in the following subsection.

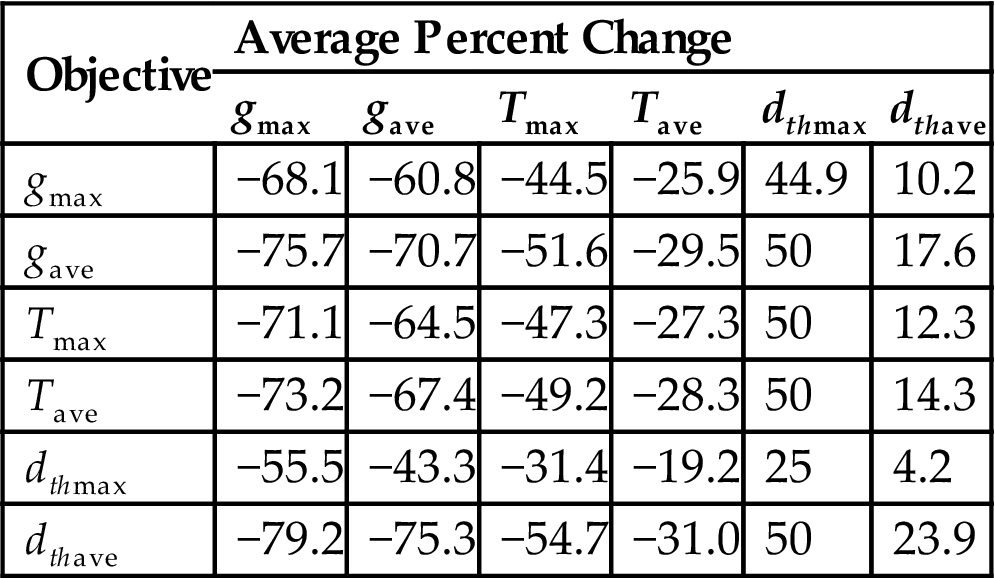

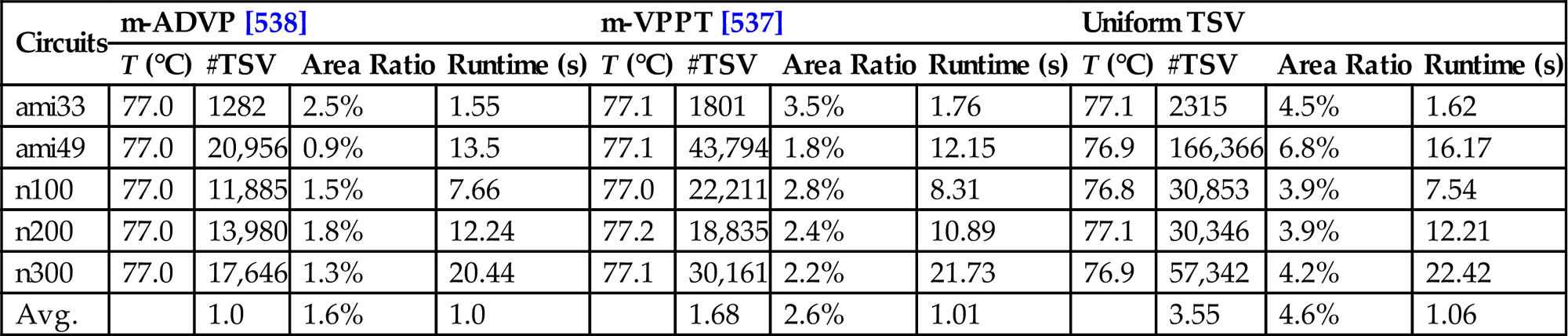

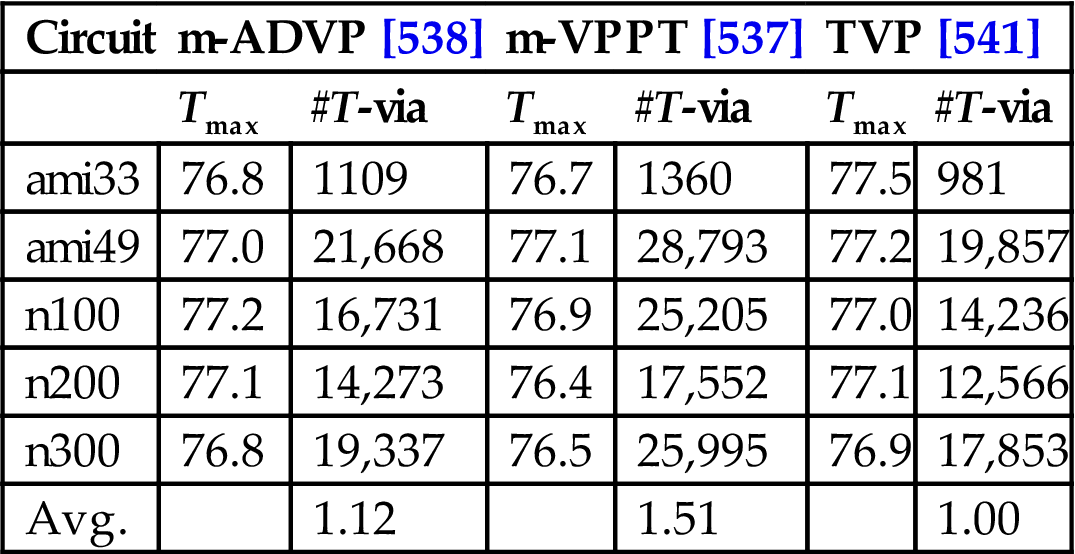

13.2.1 Thermal Via Planning Under Temperature Objectives

In Chapter 9, Physical Design Techniques for Three-Dimensional ICs, the available space among the cells or circuit blocks in each tier of a 3-D system is employed to allocate signal TSVs to least affect the placement of the components and the length of the interconnections. Similarly, the available space can also mitigate thermal issues by placing thermal vias within the whitespace. As thermal gradients differ across and among tiers, two different types of TTSV densities are typically computed. Vertical TTSV densities among tiers and a horizontal TTSV density for each tier are determined. Thermally driven methods for floorplanning, placement, and routing have been developed to determine these densities to achieve a broad repertoire of objectives.

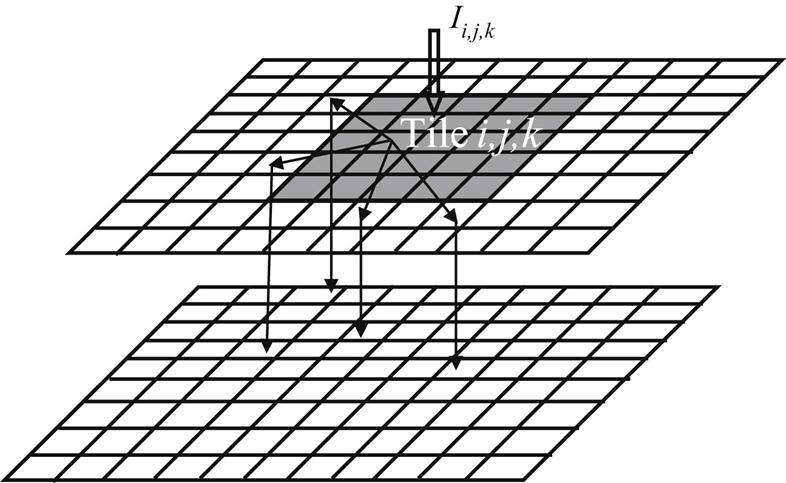

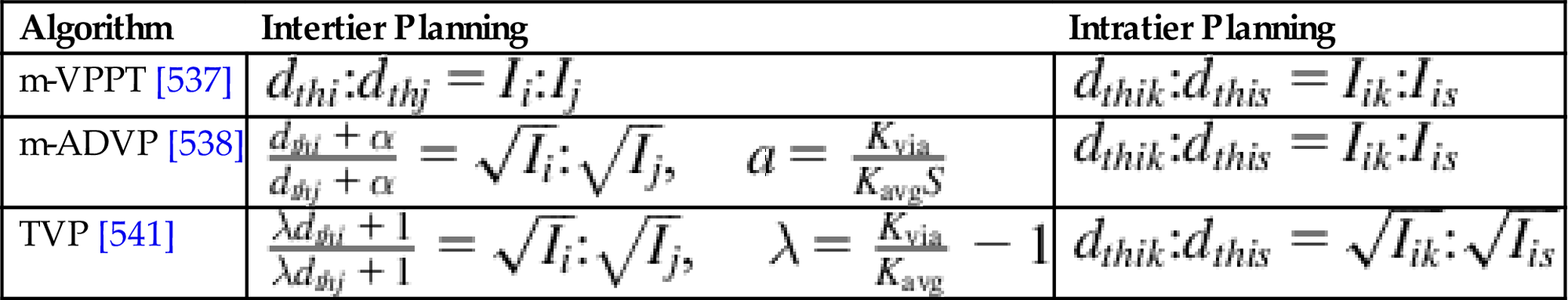

The temperature or thermal gradients within a 3-D stack is interestingly treated as an objective and the TTSV density as a design constraint and vice versa, leading to different problem formulations. Another issue that arises is whether TTSV planning should be integrated with the floorplanning or placement steps or be applied as a postprocessing step. Integrating TTSV planning within a physical design process rather than as a postprocessing step can lead to better results, but the need for thermal analysis during each iteration can adversely affect computational time.