Chapter 2. Why Change Current Networks? A Need for Change

The campus network itself has not changed dramatically over the past decade. Although technologies like Virtual Switching System (VSS) and virtual PortChannel (vPC) have been introduced to eliminate the need for Spanning Tree Protocol (STP), many campus networks still implement STP, just to be safe. A similar statement can be made about the design itself; it has not changed dramatically. Some might even state that the campus network is quite static and not very dynamic. Then why would today’s campus network need to transform to Intent-Based Networking? There are some drivers that force the campus network to change. This chapter describes the major drivers, each in its own section: wireless and mobility trends, connected devices (IoT and non-user-operated devices), complexity, manageability, cloud (edge), (Net)DevOps, and digitalization.

Wireless/Mobility Trends

Wireless networks were introduced in the early 2000s. (The first IEEE 802.11 standard was published in 1997; the wireless network standard that really evolved wireless networks was IEEE 802.11b, published in 1999.) Although you needed to have special adapters, the concept of having a wireless Ethernet network with connection to the emerging Internet and corporate infrastructure provided a lot of new concepts and possibilities. New use cases included providing “high speed” access for those users who were relying on the much slower cellular networks (megabits per second instead of 9,600 bps) as well as an optimization in logistics, where a wireless infrastructure would send the data to the order picker.

Note

The order picker is the person in the warehouse who “picks” the items of an individual order from the stock in the warehouse and prepares them for shipment.

In the early stages, wireless networks were primarily deployed and researched for these two use cases. A third use case was, of course, wireless connectivity for executives, and with it the first enterprise campus wireless networks were implemented.

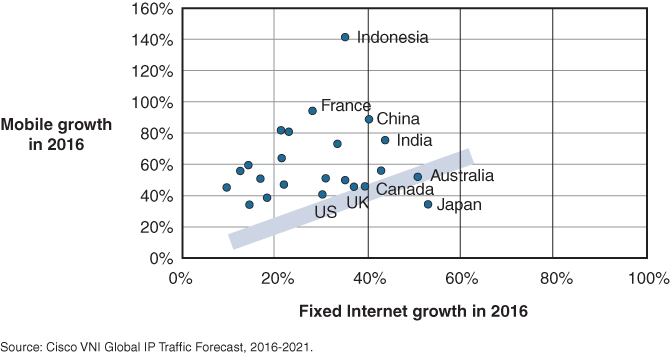

Once wireless adapters were built into laptops by default, more use cases emerged, such as hotel hospitality services and enterprise wireless campus networks. The introduction of mobile handheld devices such as smartphones and tablets helped in the exponential growth of connected wireless devices (see Figure 2-1 and Figure 2-2) to an average of 2.5 wireless devices per capita in 2016 compared to 0.2 wireless devices per employee in the past.

Figure 2-1 Global Devices and Connections Growth (Source: Cisco Systems, VNI 2017)

Figure 2-2 Fixed and Mobile Internet Traffic Growth Rates 2016 (Source: Cisco Systems, VNI 2017)

Traditional enterprise campus networks primarily focused on (static) wired access located at fixed workplaces, while wireless access to the network was a nice-to-have but not necessary feature. But that focus has been shifting over the past five years.

Use Case: FinTech Ltd.

At first, FinTech Ltd. wanted to introduce a wireless network for its visitors as well as for employee-owned devices. Because of media attention about another organization where journalists were able to access the internal network via a wireless access point, the executive board decided that the wireless guest network must be completely separated (both logically and physically) from the corporate network. A pilot project was started with a small controller with five access points at strategic locations (conference rooms, executive wing, and so on) to demonstrate the purpose and effect of the wireless network. The pilot project was successful, and over time the wireless guest network was expanded to the complete office environment, so that wireless guest access could be provided within the whole company with coverage over speed as a requirement, as the service was for guests and simply nice to have.

Recently, FinTech Ltd. executives also decided that they wanted to test using laptops instead of fixed workstations to improve collaboration and use the office space more efficiently. (FinTech Ltd. was growing in employees, and building space was becoming limited.)

Extra access points were placed to provide for more capacity (capacity over coverage), and a site survey was executed to place the access points to accommodate the new laptops being used for Citrix. Of course, the wireless network was still separated from the corporate network, and Citrix was used for connecting the laptop to the desktop.

Access points were properly installed, the pilot was successful, and a large rollout was initiated, using different kinds of laptops than originally piloted.

Shortly after rollout, some of the laptops began having connectivity issues. Troubleshooting was difficult because changes to the wireless network to support troubleshooting were not allowed, as they would affect the ability of other users to work. Overnight, the wireless network had gone from “nice-to-have” to business critical without a proper predesign, without a redundant controller, and without awareness at the different management levels of the consequences.

Eventually, the wireless issues were solved by updating software on the wireless network as well as the laptops (which had client-driver issues compared to the tested pilot laptops), and the wireless network was back to operating as designed.

An example like FinTech Ltd. can be found across almost every enterprise. The wireless network has become the primary connection method for enterprise campus networks. Although perhaps less reliable than wired, the flexibility and ease of use by far outweigh this performance and reliability issue. Results are obvious if you look at an average office building 15 years ago versus a modern enterprise office environment (see Figure 2-3).

Figure 2-3 Office Area 15 Years Ago and a Modern Office Area

The strong success of wireless networks is also their weak spot. All wireless networks operate in a free-to-use frequency band (also known as unlicensed spectrum), and as more devices connect within the same location, the wireless network needs to be redesigned to handle the increase in both density and capacity. Users will complain if the Wi-Fi service inside the office is not fast and reliable. After all, the selection of hotels and other venues based on the quality of the Wi-Fi service provided is fairly commonplace these days.

In summary, the number of wireless devices is increasing, and thus high-density wireless networks (used to allow many wireless devices in a relatively small area) are not exclusively targeted for conference venues and large stadiums anymore. They have become a must-have requirement for the enterprise.

Connected Devices (IoT/Non-User Operated Devices)

The network infrastructure (Internet) has become a commodity infrastructure just like running water, gas, and electricity. Research by Iconic Displays states that 75% of the people interviewed reported that going without Internet access would leave them grumpier than going one week without coffee. The number of connected devices has already grown exponentially in the past decade due to the introduction of smartphones, tablets, and other IP-enabled devices. The network is clearly evolving, and this growth will not stop.

In the past, every employee used only a single device to connect to the network. During the introduction of Bring Your Own Device (BYOD), the sizing multiplier was 2.5. So every employee would on average use 2.5 devices. This number has only increased over time and will continue to do so. See Table 2-1 for the expected average number of devices per capita.

Table 2-1 Average Number of Devices and Connections per Capita (Source: Cisco VNI 2017)

| Region | 2016 | 2021 | CAGR* |
| --- | --- | --- | --- |
| Asia Pacific | 1.9 | 2.9 | 8.3% |
| Central and Eastern Europe | 2.5 | 3.8 | 9.1% |
| Latin America | 2.1 | 2.9 | 7.0% |
| Middle East and Africa | 1.1 | 1.4 | 5.4% |
| North America | 7.7 | 12.9 | 11.0% |
| Western Europe | 5.3 | 8.9 | 10.9% |
| Global | 2.3 | 3.5 | 8.5% |

*Compound Annual Growth Rate

This table is sourced from Cisco’s Visual Networking Index (VNI). Although the numbers might already seem high, they are based on adding up all the different types of connected devices seen at shops, grocery stores, homes, businesses, and so on. The numbers add up. Smartboards such as a Webex Board or a videoconferencing system are also connected to the network and are increasingly being used to collaborate efficiently over distance.
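The CAGR column of Table 2-1 can be approximated from the 2016 and 2021 columns. The following is a minimal sketch, assuming the standard compound-growth formula over five years; the function name `cagr` and the dictionary are illustrative and not part of the source data. Because the table publishes rounded per-capita values, the computed rates land within a few tenths of a percentage point of the published column rather than matching it exactly.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.085 means 8.5%)."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Devices per capita taken from Table 2-1: (2016 value, 2021 value).
TABLE_2_1 = {
    "North America": (7.7, 12.9),
    "Western Europe": (5.3, 8.9),
    "Global": (2.3, 3.5),
}

for region, (y2016, y2021) in TABLE_2_1.items():
    # 2016 to 2021 spans five yearly growth periods.
    print(f"{region}: {cagr(y2016, y2021, 5):.1%}")
```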

The same is applicable for traditionally physical systems as well. More and more security systems, such as those that monitor physical access (badge readers, for example) or cameras, are connected to the network and have their own complexity requirements.

Another big factor in the growth of devices on the campus network is the Internet of Things (IoT). IoT is absolutely not about just industrial solutions anymore. An increasing number of organizations set up smart lighting and provide an IP-telephone in the elevator for emergencies, and even sensors in escalators provide live status feedback to the company servicing them. In general, IoT is coming in all different sorts and sizes to the campus network within an enterprise, more often than not driven by business departments that procure services from others.

Finally, a major reason for the explosive growth of devices is the consumerization of technology in personal devices such as smartwatches, health sensors, and other kinds of devices. Some designers are already working on smart clothing. All these devices will be used by employees and thus need a connection to the commodity service called the Internet.

Based on these developments, it is safe to state that the number of connected devices will increase dramatically in the coming years. And as a consequence, the number of network devices will increase. These network devices, of course, need to be managed by adequate staff.

Complexity

The campus network used to be simple. If required, a network port for a specific VLAN would be configured, and the device would be connected to that port. Every change in the network would require a change in configuration, but within an enterprise network not many changes would occur besides the occasional department move that would result in moving the HR VLAN to a different port. Some engineers or architects still regard today’s campus networks as simple; they are statically configured, and changes are predictable and managed by executing commands on-box.

This has not been the case for some time now. Several factors have already increased the complexity of the campus enterprise network. To name a few examples, IP telephony, instant messaging, and videoconferencing (now collectively known as collaboration tools), as well as video surveillance equipment, have all been added to the campus network infrastructure. Each of these types of network devices has its own requirements and dynamics, and all applications running on those devices require their services to work as expected. This has led to the introduction of quality of service in the campus network as a requirement. And the campus network needs to manage that expectation and behavior. The dynamics of the campus network are only increasing as more and more types of devices are connected to and use the campus network. As a result, complexity is increasing.

Use Case: QoS

The default design for the larger branch offices within SharedService Group is based on 1 Gigabit switches in the access layer with two 10 Gigabit uplinks to the distribution switch. As the employees primarily use Citrix, there was an assumption that quality of service (QoS) was not necessary because sufficient bandwidth is available for every user. Although that assumption is not entirely correct, the ICA protocol used by Citrix would handle the occasional microburst or delay in the traffic. Those could just happen sometimes.

In the past, IP phones were introduced in the network. During both the pilot project and the rollout of IP phones, no problems with audio quality or packet drops were found, so IP telephony was treated like any other new application introduced to the network.

Recently SharedService Group replaced the access layer made up of Catalyst 3750 switches with Catalyst 3650 switches as part of the lifecycle management. At that time problems started to occur with voice, and in the meantime video was introduced to the network as well. The protocols used for voice are predictable in bandwidth in contrast to video. Video uses compression techniques to reduce the used bandwidth. Because compression results can vary, the used bandwidth varies too, resulting in bursty traffic. Users complained about calls being dropped, having “silent” moments in the call, and other voice-related issues.

Once troubleshooting was started, it became clear that there were output queue drops on some of the access switches facing the end users. To validate whether this was normal behavior, similar troubleshooting was executed on the older Catalyst 3750 switches. Although less frequent, output queue drops would sometimes occur on those switches as well.

Eventually the reason for the packet drops was found in the default behavior of the Catalyst 3650 switches compared to the older switches. In the older switches the QoS bits would be reset, unless you configured mls qos trust, and even if no QoS policies were configured, the bits were used in the egress queue by default.

However, the behavior of QoS on the Catalyst 3650 is very different. The bits are preserved in transiting the switches. But there is no queue-select on the egress port unless a selection policy for QoS has been set on the ingress port. In other words, if you do not “internally mark” the ingress traffic, all traffic is placed in the same default egress queue. And the default configuration for a Catalyst 3650 switch is to only use two of the eight egress queues.
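The queue-selection behavior described above can be illustrated with a toy model. This is a sketch under stated assumptions, not the switch's actual implementation: the queue indices, the `INGRESS_POLICY` mapping, and the function names are hypothetical, and the only real-world values used are the common DSCP code points 46 (EF, typically voice) and 34 (AF41, typically video).

```python
from collections import Counter

DEFAULT_QUEUE = 0    # hypothetical index of the default egress queue
PRIORITY_QUEUE = 1   # hypothetical index of a queue reserved for voice

# Hypothetical ingress classification: DSCP value -> egress queue.
INGRESS_POLICY = {46: PRIORITY_QUEUE}


def select_egress_queue(dscp, ingress_policy=None):
    """Return the egress queue for one packet.

    Without an ingress policy the DSCP value is preserved but not used
    for queue selection, so every packet lands in the default queue.
    """
    if ingress_policy is None:
        return DEFAULT_QUEUE
    return ingress_policy.get(dscp, DEFAULT_QUEUE)


def queue_load(packets, ingress_policy=None):
    """Count packets per egress queue for a list of DSCP values."""
    return Counter(select_egress_queue(d, ingress_policy) for d in packets)


# A burst of video packets (DSCP 34) together with two voice packets (DSCP 46).
traffic = [34] * 8 + [46] * 2

print(queue_load(traffic))                  # voice shares the default queue with video
print(queue_load(traffic, INGRESS_POLICY))  # voice is separated from the video burst
```

In the first call all ten packets compete for the same queue, which is the situation in which a video burst can crowd out voice; in the second call the two voice packets are placed in their own queue.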

The conclusion for SharedService Group is that in any enterprise campus network, some form of QoS must be implemented to make more queues and buffer space available in the switch, whether in the access or the distribution layer.

Besides the dynamics of adding more diverse devices to the network, security is becoming more important as well, putting even higher requirements on the campus network. The security industry is in a true rat race between offense (cybercriminals) and defense (security staff within the enterprise). The ransomware attacks of the past years emphasize the importance of security. The nPetya attack via the Ukrainian M.E.Doc software in June 2017 even surpassed those attacks in impact. Before the attack was launched, the malware was already distributed via the software update mechanism within M.E.Doc. Once the attack was launched, the malware became active, and in some environments had an infection rate of more than 10,000 per second. After cracking the local administrator password, the malware moved laterally through the network, infecting and destroying any computer found in its path. It didn’t matter if the software was up-to-date or not. This malware was written by professionals who knew networking.

Increased security requirements (which are necessary) to protect the organization result in an increase in the complexity of the campus enterprise network.

In summary, the campus network is slowly but surely increasing in complexity of configuration, operation, and management. And as this process occurs slowly over time, the configuration of the campus network is becoming much like a plate of spaghetti. Different patches, interconnections, special policies, access lists for specific applications, and other configurations also result in reduced visibility of the network, thus increasing the chance of a security incident as well as increasing the chance of a major failure that could have been prevented.

Manageability

Based on the trends of the previous sections, it is clear that the operations team that manages the campus network is faced with two major challenges: the exponential growth of the number of connected devices and the increased complexity of the network. Furthermore, the exponential growth of the number of connected devices will have a multiplying effect on the increase in complexity.

Often in today’s networks, the required changes, installations, and management are done on a per-device basis. In other words, creating a new VLAN requires the network engineer to log in to the device and create the new VLAN via the CLI. Similar steps are executed when troubleshooting a possible network-related problem, by logging in to the device and tracing the steps where the problem might occur. Unfortunately, most IT-related incidents are initially blamed on the network, so the network engineer also has to “prove” that it is not the network. (Common laments are “the network is not performing,” or “I can’t connect to the network.”)
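To make the per-device method concrete, the following minimal sketch prints the commands an engineer would repeat on every switch to create one VLAN. The hostnames, the VLAN ID 120, and the helper `vlan_commands` are hypothetical; the command lines follow the common Cisco IOS style for creating and naming a VLAN. The point is only that the work grows linearly with the number of devices.

```python
def vlan_commands(vlan_id: int, name: str) -> list[str]:
    """Return the CLI lines an engineer would type on one switch."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    return ["configure terminal", f"vlan {vlan_id}", f" name {name}", "end"]


# Hypothetical inventory; in the per-device method each entry is a separate login.
switches = ["access-sw-01", "access-sw-02", "dist-sw-01"]

for hostname in switches:
    print(f"--- {hostname} ---")
    print("\n".join(vlan_commands(120, "HR")))
```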

Current statistics (source: Cisco Systems, Network Intuitive launch) show that on average every network engineer can maintain an overview of roughly 200 concurrent devices. This overview includes having a mental picture of where devices are connected, which switches are touched on the network for a specific flow, and so on. In other words, the current network operations index is approximately 200 devices per full-time equivalent (FTE). Of course, this index value is dependent on the complexity of the network combined with the size of the organization and the applicable rules and regulations.

So an IT operations staff of 30 can manage 6,000 concurrent devices, which matches up to approximately 2,500 employees with the current 2.5 devices per employee. But the number of devices is also growing exponentially in the enterprise. The average number of devices per employee is moving toward 3.5 on average, but in certain areas can go up to 12.5. (This number includes all the IoT devices that connect to the same enterprise campus network, such as IP cameras, IP phones, Webex smart boards, light switches, window controls, location sensors, badge readers, and so on.) That means that for the existing 2,500 employees, a staggering 20,000 concurrent devices would be connected (taking a factor of 8). Add the number of smart sensors and smart devices into the count as well, and it is easy to have that same enterprise network handling 50,000 concurrent endpoints.

That would mean that the network operations staff should be increased to roughly 300 employees (250 for the devices plus some extra for the added complexity). And that is simply not possible, not only because of cost but also because of the difficulty of finding the resources. In summary, the manageability of the existing campus enterprise networks is in danger and needs to change.
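The staffing arithmetic in this section can be checked with a short calculation. This is a minimal sketch that assumes the operations index of 200 devices per FTE quoted above holds constant as the network grows; the function name `required_staff` is illustrative.

```python
import math

DEVICES_PER_FTE = 200  # operations index quoted in the text


def required_staff(endpoints: int, devices_per_fte: int = DEVICES_PER_FTE) -> int:
    """Engineers needed to keep an overview of the given number of endpoints."""
    return math.ceil(endpoints / devices_per_fte)


# Endpoint counts used in the text for an enterprise of about 2,500 employees.
scenarios = [
    ("today, 2.5 devices per employee", 6_000),
    ("factor of 8 devices per employee", 20_000),
    ("including smart sensors and devices", 50_000),
]

for label, endpoints in scenarios:
    print(f"{label}: {endpoints} endpoints -> {required_staff(endpoints)} FTE")
```

The three scenarios come out at 30, 100, and 250 FTE respectively, before any allowance for added complexity.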

Cloud (Edge)

The principle of using cloud infrastructures (in whichever form) for some or all applications in the enterprise has become part of the strategy for most enterprises. There are, of course, some benefits to the business for this strategy, such as ease of use, application agility, availability, and ease of scalability. However, it also implies some challenges to today’s enterprise networks.

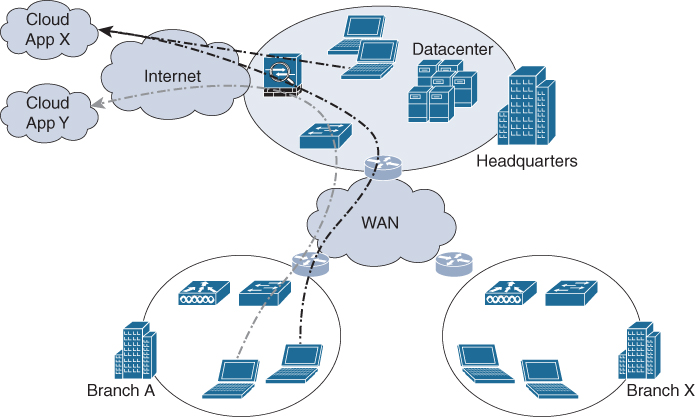

Most traditional enterprise networks are designed hierarchically with a central office (HQ) and an internal WAN that connects the several branch offices to the internal network. The Internet access for employees is usually managed via one (large and shared) central Internet connection. At this central Internet connection, the security policies are also managed and enforced. Figure 2-4 illustrates this global design.

Figure 2-4 Traditional Central Breakout

Traditionally, most applications are hosted inside the enterprise datacenter, which is located in the HQ. With the transition of these applications to the cloud, the burden of these applications on the Internet connection is increasing. In essence, the enterprise’s datacenter is, from a data perspective, extended from its own location toward the different clouds that are being used. And the number of cloud services is increasing as an increasing number of companies provide software as a service (SaaS) and label this service as a cloud service. For example, take an enterprise with 3,000 employees with an average mailbox size of 2 Gigabytes moving its email service to Microsoft Office 365. The initial mailbox migration alone would already require 6 Terabytes of data to be transferred. Even with a dedicated 1 Gigabit Internet line and a sustained average of 100 Megabytes per second, this transfer alone would saturate the line for roughly 17 hours.
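The duration of such a bulk migration is simple arithmetic and worth checking explicitly. The sketch below assumes decimal units (1 Gigabyte = 10^9 bytes) and a constant sustained rate with no protocol overhead or provider throttling; the second rate of 1 Megabyte per second is a hypothetical, heavily constrained scenario added only for comparison.

```python
def transfer_seconds(total_bytes: float, bytes_per_second: float) -> float:
    """Time to move total_bytes at a constant sustained rate."""
    if bytes_per_second <= 0:
        raise ValueError("rate must be positive")
    return total_bytes / bytes_per_second


GB = 10**9
MB = 10**6

mailboxes = 3_000
total = mailboxes * 2 * GB                 # 6 Terabytes in decimal units

at_100 = transfer_seconds(total, 100 * MB)
at_1 = transfer_seconds(total, 1 * MB)     # hypothetical throttled rate

print(f"100 MB/s: {at_100 / 3600:.1f} hours")
print(f"  1 MB/s: {at_1 / 86400:.1f} days")
```

At 100 Megabytes per second the 6 Terabytes take 60,000 seconds, or about 16.7 hours; at an effective 1 Megabyte per second the same transfer would take about 69.4 days.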

Even after the initial migration, every time a user logs in to a new workstation, the user’s mailbox is going to be downloaded to that workstation as cache. So it is logical that with a move to the cloud, a significant burden is placed on the central Internet connection and its security policies.

In parallel, most enterprises have adopted WAN over Internet alongside the traditional dedicated WAN circuits with providers, using technologies such as Cisco Intelligent WAN (IWAN) and more recently the Cisco Software-Defined WAN (SD-WAN) based on Viptela. Some enterprises have completely replaced their dedicated WAN circuits with these technologies. As a result, not only is the internal WAN capacity increased, but a local Internet connection also becomes available to provide wireless access to guests of the enterprise.

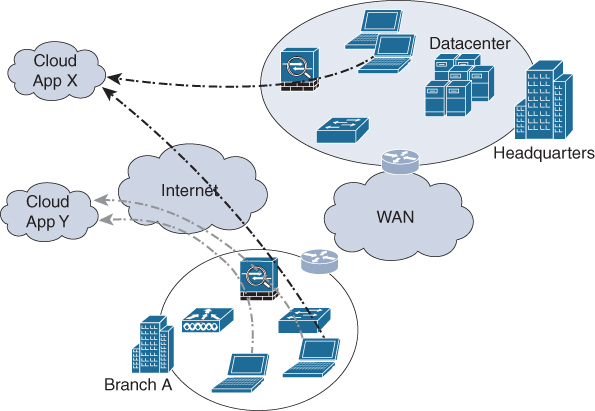

If you combine these two trends—local Internet access at the branch office and an increased use of cloud applications by the enterprise—it is only common sense that a preference starts to emerge for offloading cloud applications access to the local Internet breakout at the branch. Figure 2-5 illustrates this design.

Figure 2-5 Connecting Cloud Applications via Cloud Edge

This preference is also known as cloud edge and will impose some challenges on enforcing and monitoring security policies, but it will happen. And as a result, the campus network at that branch office will receive extra network functions such as firewalling, anti-malware detection, smart routing solutions, and the correct tooling and technologies to centrally manage and facilitate this trend.

(Net)DevOps

DevOps is a methodology that has emerged from within software engineering. DevOps is fulfilling the increased demand to release new versions and new features within software more rapidly. The DevOps methodology itself was being pushed because of the Agile software development methodology.

Classic software engineering methodologies usually follow a distinct five-step process for software development:

Step 1. Design

Step 2. Development

Step 3. Test

Step 4. Acceptance

Step 5. Release in production

Each of these steps was commonly executed by a dedicated team that had the step as its specialty. For example, development would start only after the design was completed by the design team. The development team usually had limited to no input into the design process. As a result, the majority of software development projects resulted in products that were delayed, over-specified, over budget, and usually lacking the features the customer really wanted, either because the design team misunderstood the customer’s requirements or because those requirements evolved over time.

The Agile software engineering methodology takes a different approach. Instead of having distinct teams of specialists executing the software engineering processes sequentially, multidisciplinary teams were created. In these teams, the design, development and testing engineers work together in smaller iterations to quickly meet the customer’s requirements and produce production-ready code that can be demonstrated to the customer. These smaller iterations are called sprints and contain mini-processes of design-build-test. The team closely cooperates with the customer on requirements and feature requests, where the primary aspects for prioritization are business value or business outcome in conjunction with development effort required.

This Agile software engineering methodology provides a number of benefits. First, due to the fast iterations, the software is released many times (with increased features over time) instead of a single final release. These releases are also discussed and shared with the customer, so the customer can provide feedback to the product, and as a result the product better matches the request of the customer, thus providing a better product.

Second, by having a team made responsible for the features, a shared team effort and interaction is created between designers, software engineers, and test engineers. That aspect results in better code quality because of the shared responsibility.

Within Agile software engineering teams, it is also common to use so-called automated build pipelines that integrate the different steps in building and deploying software in an automated method. Committed source code versions of the software are merged, automatically compiled, and unit tested for bugs. With that automation taking place, a new software version can be released much faster.

However, Agile itself is primarily focused on the development of software—the “dev” side, so to speak. Taking the released code from the pipeline into operations usually still took quite some time, as that required change requests, new services, servers, and other aspects. Consequently, the deployment of new software versions within the IT systems of an enterprise took much more time and effort compared to deploying that same version into cloud-enabled services.

This is where DevOps emerged, where development (Dev) and operations (Ops) work closely together in single teams. The automation pipeline for the software engineering is extended with automation within the application-server side, so that the release of software is inside the same automated process. As a result, if an Agile team decides to release and publish software code, the deployment of that code is done automatically. This completed DevOps pipeline is also known as Continuous Integration/Continuous Delivery (CI/CD). Large technology organizations use this mechanism all the time to deploy new features or bug fixes in their cloud services.

Toolchains

Automation pipelines used within CI/CD (or CICD) are also referred to as toolchains. Instead of relying on a single tool that can do all, the team (and thus the pipeline) relies on a set of best tools of the trade chained together in a single automated method. This toolchain, depending on the way the team is organized, usually consists of the following tools:

Code: Code development and review tools, including source code version management

Build: Automated build tools that report whether the code is compilable and free of language errors

Test: Automated test tools that verify, based on unit tests, automatically if the code functions as expected

Package: Once accepted, the different sources are packaged and managed in repositories in a logical manner

Release: Release management code, with approvals, where the code from the different packages is bundled in a single release

Configure: Infrastructure configuration and management tooling, the process to pull the (latest) release from the repository and deploy it automatically on servers (with automatic configuration)

Monitor: Application performance monitoring tools to test and measure user experience and generic performance of the solution

There are also other similar toolchain sequences available, such as Plan, Create, Verify, and Package. This is usually dependent on the way the Agile methodology and toolchains are integrated within the team.

With DevOps, the release frequency of new features and bug fixes is increased, and the customer can get access to the new features even faster. But still if a new server needs to be deployed, or a complete new application, well, you do need to create VLANs, load balancer configurations, IP allocations, and firewalls. And all those operations are executed by the network operations team. Because of the potential impact (the network connects everything, usually also without a proper test environment), these changes are prepared carefully with as much detail as possible to minimize impact. Change procedures commonly require approval from a change advisory board, which takes some time. As a result, deploying new applications and servers still takes much more time than strictly necessary.

The term NetDevOps represents the integration of Network operations into the existing DevOps teams to reduce that burden. This integration is possible because of the increased automation and standardization capabilities inside the datacenter with technologies like Cisco Application Centric Infrastructure (ACI).

Although the NetDevOps trend is focused primarily on the datacenter, a parallel can be made into the campus environment. Because of the increased complexity and diversity of devices in the campus, more functional networks need to be created, deleted, and operated. End users within enterprises have difficulty understanding why creating a new network in the campus takes so much time without understanding that adding a new VLAN takes proper preparation to minimize impact to other services. Consequently, NetDevOps as a methodology is moving toward the campus environment as well.

Digitalization

If you ask the CTO of an enterprise what their top priorities are, the chances are that digital transformation (or digitalization) is part of the answer. What is digitalization, and what is its impact on the campus enterprise network?

IT is becoming an integral part of any organization. Without IT, the business comes to a standstill. Applications have become business critical in almost every aspect of a business, regardless of the specific industry. Traditionally, the business specified the requirements and features that an application would need to have to support itself. The business would then use that application and chug along, changing a bit here and there.

With more devices getting connected and entering the digital era, more data (and thus information) is becoming available for the enterprise to use. This data can be collected in data lakes so that smart algorithms (machine intelligence) and smart queries can be used to find patterns or flows that can be optimized in the business process.

This process of digitizing data and using it to optimize business processes is part of the digital transformation. In parallel, Moore’s law [1] is causing technology velocity to accelerate continuously, enabling technology to solve more complex problems in ever-decreasing timeframes. As a result, technology can suggest improvements to business processes, or even reorganize them automatically, by finding the proper patterns in the data of the enterprise and finding ways to improve on those patterns. This process is called digitalization. The key for successful digitalization is that IT and business must be aligned with each other. They must understand each other and see that they jointly can bring the organization further. Thus, digitalization requires that the way the campus enterprise network is operated be changed and be more aligned with the business. A more detailed explanation of digitization and its stages is provided in Chapter 12, “Enabling the Digital Business.”

[1] Moore’s law is the observation that the number of transistors in a dense integrated circuit doubles about every two years.

Summary

The design and operation of a campus network have not changed much over time. However, the way the campus network is used has changed dramatically. Part of that change is that the campus network (whether it is a small consultancy firm or a worldwide enterprise with several production plants) has become critical for every enterprise. If the campus network is down, the business experiences a standstill of operations. That is a fact that not many organizations comprehend and support. The digital transformation (also known as digitalization) is emphasizing that IT and thus the network are becoming more important and that organizations not adapting will experience a fallout.

The complexity of the campus network has slowly but surely increased over time as more applications use the network and with each application having its own requirements. This added complexity results in reduced visibility with an increase in security risks and higher troubleshooting times. The digitization of environments means that more endpoints will be connected to the enterprise networks. These endpoints are often not operated and managed by employees, but they are managed and operated via a gateway; in other words, the campus network will also connect endpoints that are not owned or managed by employees. Both factors result in a complex and unmanageable campus enterprise network.

Faster adoption of new features is the way technology is now being used by organizations all over the world. As a result, IT departments are increasingly organized in multidisciplinary teams using efficient automation and standardization processes to deploy new technologies and features faster and become more aligned with the business processes that have that requirement. This adoption of automation and standardization needs to apply to campus networks as well; otherwise the campus network will not be able to keep up with the changes.

All these aspects combined make it necessary to change the way a campus network is designed, implemented, and operated; otherwise, the campus network will in time become an unmanageable, chaotic, and invisible network environment with frequent downtimes and disruptions as a result. And these disruptions can put any organization at risk for bankruptcy due to the loss of revenue and trust.

The Cisco Digital Network Architecture and Intent-Based Networking Paradigm are the way to cope with these challenges, transform the campus network, and prepare it for the new digital network in the future.