CHAPTER 9 Emerging Paradigms for User Interaction

It can be surprisingly easy to simulate intelligence in a computer. In the 1960s Joseph Weizenbaum developed the famous ELIZA program, a conversational system that uses simple language rules to simulate a Rogerian psychotherapist (Weizenbaum 1976). It responds in particular ways to mentions of keywords or phrases (e.g., “mother,” “I think”), rephrases stated concerns in a questioning fashion designed to draw people out, makes mild remarks about assertions, and so on. Weizenbaum made no claims of intelligence in ELIZA, but interactions with its simple teletype interface were sometimes quite convincing. Appearing below is an excerpt from a continuing dialog between ELIZA and a patient (available at www.word.com/newstaff/tclark/three/index.html):

Once you know how ELIZA works, it’s easy to trick it into saying silly things, or using language that is awkward or unlikely. Indeed, part of the fun of talking to ELIZA or its modern “chat-bot” descendants is seeing how your words are misconstrued. Weizenbaum’s personal goal was to highlight his growing concern about overattribution of intelligence to machines. His message was one of alarm at how engaged people were by this poor imitation of a human:

I was startled to see how very deeply people conversing with [the program] became emotionally involved with the computer and how unequivocally they anthropomorphised it…. Once my secretary, who had watched me work on the program for many months and therefore surely knew it to be merely a computer program, started conversing with it. After only a few interchanges, she asked me to leave the room. (Weizenbaum 1976, 76)
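The keyword-and-rephrase mechanism described above can be made concrete with a very small sketch. The rules, canned replies, and function names below are hypothetical illustrations, not Weizenbaum's original script. They show only the general idea of matching a keyword or phrase and echoing the user's words back as a question.

```python
import re

# Hypothetical rules for illustration; not Weizenbaum's original script.
RULES = [
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
    (re.compile(r"\bI think (.+)", re.IGNORECASE), "Why do you think {0}?"),
]
DEFAULT_REPLY = "Please go on."

# Swap first- and second-person words so the echoed phrase reads naturally.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}


def reflect(fragment: str) -> str:
    """Drop trailing punctuation and swap pronouns word by word."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in words)


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            captured = [reflect(group) for group in match.groups()]
            return template.format(*captured)
    return DEFAULT_REPLY


print(respond("I think my boss hates me"))
```

Because the rules are tried in order and know nothing about meaning, an input such as "I think I am my mother" triggers the family reply, which is exactly the kind of misconstrual that makes such programs easy to trick.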

The last decade has been one of tremendous growth in information technology. Computer chips have become microscopically small while at the same time providing phenomenal increases in processing speed. Output displays exist for devices as small as a watch or as large as a wall. A robust network backbone interconnects diverse resources and enormous information stores. Complex models of people’s behavior can be built to aid task performance or to predict preferences and decisions. These developments enable a wide variety of interaction paradigms beyond the familiar WIMP user interface style. As user interface designers, it is easy to be swept up in the excitement of new interaction technology; as usability engineers, it is crucial to examine the implications of new technology for usefulness, ease of learning and use, and satisfaction (see “Updating the WIMP User Interface” sidebar).

Figure 9.1 revisits Norman’s (1986) framework in light of emerging paradigms for human-computer interaction. For example, the figure suggests some of the ways that new technologies will influence the process of planning and making sense of computer-based activities. A system with artificial intelligence may actively suggest likely goals. At the same time, the pervasive connectivity provided by networks adds group and organizational elements to goal selection and sense making. For instance, the figure shows an accountant planning for a budget meeting. But if he is collaborating with others over a network, the accountant may be expected to coordinate his actions with those of other people in his team or company, or to compare his results with work produced by others.

New interaction paradigms are also beginning to change the lower-level processes of action planning, execution, perception, and interpretation. Intelligent systems often support more natural human-computer interaction, such as speech or gesture recognition and, in general, the more complex multimodal behavior that we take for granted in the world (i.e., simultaneously processing and generating information through our senses). As the computer-based world becomes more nearly a simulation of the real world, the need to learn new ways of communicating with the system is significantly decreased. Of course, as we discussed in Chapter 3, this can be a double-edged sword: if we seek to simulate reality too closely, we will impose unwanted boundaries or restrictions on users’ expectations and behavior.

Updating the WIMP User Interface

Several years ago, Don Gentner and Jakob Nielsen wrote a brief paper designed to provoke debate about the status quo in user interface design (Gentner & Nielsen 1996). The article critiques the pervasive WIMP user interface style. The authors use the direct-manipulation interface popularized by the Apple Macintosh in the mid-1980s as an operational definition of this style, analyzing the relevance of the Macintosh user interface guidelines (Apple Computer 1992) for modern computing applications.

The general argument that Gentner and Nielsen make is simple: End user populations, their tasks, and computing technology have matured and gained sufficient sophistication that WIMP interfaces are no longer necessary. They argue that WIMP interfaces have been optimized for ease of learning and use, that is, for people just learning to use personal computers. Today’s users want more from their computing platforms and are willing to do extra work to enjoy extra functionality.

The following table lists the original Mac principles alongside the authors’ proposed revisions. Many of the Mac guidelines name usability issues discussed in earlier chapters. The alternatives they suggest have occasionally been raised as tradeoffs or discussion points, but will be illustrated more elaborately in this chapter on emerging paradigms. Indeed, many of the revised principles can be summarized as “build smarter systems.” Such systems are more likely to know what people really want, which means that people might be more willing to share control, let the system handle more of the details, and so on. At the same time, the principles imply much greater power and diversity in the computing platform, as illustrated in the sections on multimodal interfaces and virtual reality.

A new look for user interfaces (adapted from Gentner & Nielsen 1996, table 1, 72).

| Macintosh GUI | The New Look |
| --- | --- |
| Metaphors | Reality |
| Direct manipulation | Delegation |
| See and point | Describe and command |
| Consistency | Diversity |
| WYSIWYG | Represent meaning |
| User control | Shared control |
| Feedback and dialog | System handles details |
| Forgiveness | Model user actions |
| Aesthetic integrity | Graphic variety |
| Modelessness | Richer cues |

The increasingly broad concept of what counts as a “computer” (from mainframes and desktop workstations to tiny portable computers to the processing capacity that has been embedded in everyday objects) also has important implications for human-computer interaction. A typical handheld computer has a very small display with poor resolution, which severely restricts the range of interaction and display options possible. But its size and portability increase the flexibility of when and where computing will happen.

The opportunities raised by the emerging interaction paradigms are exciting and have created a flurry of technology-centered research projects and scientific communities. In a brief chapter such as this, it is impossible to summarize this work. In fact, attempting such a summary would be pointless, because the technology and context are evolving so quickly that the discussion would soon be obsolete. Thus, we restrict our discussion to four themes that are already having significant impact on the design of new activities and user interaction techniques—collaborative systems, ubiquitous computing, intelligent systems, and virtual reality.

9.1 Collaborative Systems

Group interaction takes place in many different settings, each with its own needs for collaboration support. Two general distinctions are the location of the group (whether the members are co-located or remote) and the timing of the work. Collaboration that takes place in real time is synchronous, while collaboration that takes place through interactions at different times is asynchronous (Ellis, Gibbs, & Rein 1991). To some extent these distinctions are artificial: a co-located group is not always in the same room, and conversations that start out in real time may be pursued through email or some other asynchronous channel. However, the distinctions help to organize the wide range of technologies that support collaborative work (see Figure 9.2).

Figure 9.2 Collaborative activities can be classified according to whether they take place in the same or different locations, and at the same or different points in time.
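The two distinctions in Figure 9.2 amount to a small two-by-two classification. The sketch below expresses it directly. The type and function names are our own illustrative choices and are not drawn from any particular groupware toolkit.

```python
from enum import Enum


class Place(Enum):
    CO_LOCATED = "co-located"
    REMOTE = "remote"


class Timing(Enum):
    SYNCHRONOUS = "synchronous"
    ASYNCHRONOUS = "asynchronous"


def classify(same_location: bool, same_time: bool) -> tuple:
    """Map the two yes/no distinctions onto one cell of the grid."""
    place = Place.CO_LOCATED if same_location else Place.REMOTE
    timing = Timing.SYNCHRONOUS if same_time else Timing.ASYNCHRONOUS
    return place, timing


def describe(same_location: bool, same_time: bool) -> str:
    """Human-readable label for the cell, e.g. 'remote asynchronous'."""
    place, timing = classify(same_location, same_time)
    return f"{place.value} {timing.value}"


print(describe(same_location=True, same_time=True))
```

As the text notes, real groups move between cells, so a classification like this describes a single activity at a single moment rather than a group as a whole.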

A typical example of co-located synchronous collaboration is electronic brainstorming. A group gathers in a room and each member is given a networked workstation; ideas are collected in parallel and issues are prioritized, as members vote or provide feedback by other means (Nunamaker, et al. 1991). An important issue for these synchronous sessions is floor control—the collaboration software must provide some mechanism for turn-taking if people are making changes to shared data. Of course, the same group may collaborate asynchronously as well, reviewing and contributing ideas at different points in time, but such an interaction will be experienced more as a considered discussion than as a rapid and free-flowing brainstorming session.
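One simple way to provide floor control is to let only one participant hold the "floor" (the right to change shared data) at a time, and to queue everyone else. The sketch below assumes this first-come, first-served policy. The class and method names are hypothetical, and real collaboration software may use quite different turn-taking schemes.

```python
from collections import deque
from typing import Optional


class FloorControl:
    """Grants the floor (edit rights) to one participant at a time."""

    def __init__(self) -> None:
        self.holder: Optional[str] = None
        self.waiting = deque()  # participants queued in request order

    def request(self, user: str) -> bool:
        """Return True if the user now holds the floor; otherwise queue them."""
        if self.holder is None:
            self.holder = user
        elif user != self.holder and user not in self.waiting:
            self.waiting.append(user)
        return self.holder == user

    def release(self, user: str) -> Optional[str]:
        """Give up the floor and pass it to the next waiting user, if any."""
        if user != self.holder:
            return self.holder  # only the current holder can release
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder


floor = FloorControl()
floor.request("ann")   # ann takes the floor
floor.request("bob")   # bob is queued
floor.release("ann")   # the floor passes to bob
```

A strict queue is easy to understand but can feel slow in a free-flowing session, which is one reason the choice of turn-taking policy is a usability decision as well as a technical one.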

For asynchronous interactions, the distinction between co-located and remote groups becomes somewhat blurred. It refers to people who are able to engage in informal face-to-face communication versus those who can “get together” only by virtue of technology. At the other extreme from a co-located meeting is collaborative activity associated with Web-based virtual communities (Preece 2000). MUDs (multiuser domains) and MOOs are popular among online communities (Rheingold 1993; Turkle 1995). A MUD is a text-based virtual world that people visit for real-time chat and other activities. But because MUDs and MOOs also contain persistent objects and characters, they enable the long-term interaction needed to form and maintain a community.

Regardless of geographic or temporal characteristics, all groups must coordinate their activities. This is often referred to as articulation work, which includes writing down and sharing plans, accommodating schedules or other personal constraints, following up on missing information, and so on. The key point is that articulation work is not concerned with the task goal (e.g., preparing a budget report), but rather with tracking and integrating the individual efforts comprising the shared task. For co-located groups, articulation work often takes place informally—for example, through visits to a co-worker’s office or catching people as they walk down the hall (Fish, et al. 1993; Whittaker 1996). But for groups collaborating over a network, it can be quite difficult to maintain an ongoing awareness of what group members are doing, have done, or plan to do.

In face-to-face interaction, people rely on a variety of nonverbal communication cues—hand or arm gestures, eye gaze, body posture, facial expression, and so on—to maintain awareness of what communication partners are doing, and whether they understand what has been said or done. This information is often absent in remote collaboration. The ClearBoard system (Figure 9.3) is an experimental system in which collaborators can see each other’s faces and hand gestures, via a video stream layered behind the work surface. The experience is like working with someone who is on the other side of a window. ClearBoard users are able to follow their partners’ gaze and hand movements and use this information in managing a shared task (Ishii, Kobayashi, & Arita 1994).

Figure 9.3 ClearBoard, a system that addresses awareness issues by merging video of a collaborator’s face with a shared drawing surface (Ishii, Kobayashi, & Arita 1994).

ClearBoard is an interesting approach, but it depends on special hardware and is restricted to pairs of collaborators. Other efforts to enhance collaboration awareness have focused on simpler information, such as the objects or work area currently in view by a collaborator. A radar view is an overview of a large shared workspace that includes rectangles outlining the part of the workspace in view by each collaborator (Figure 9.4). By glancing at an overview of this sort, group members can keep track of what others are working on, particularly if this visual information is supplemented by a communication channel such as text or audio chat (Gutwin & Greenberg 1998).

Figure 9.4 Supporting awareness of collaborators during remote synchronous interactions. The upper-left corner shows a radar view—an overview of the shared workspace that also shows partners’ locations (GroupLab team, University of Calgary, available at http://www.cpsc.ucalgary.ca/Research/grouplab/project_snapshot/radar_groupware/radar_groupware.html).
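The geometry behind a radar view is straightforward: each collaborator's viewport is scaled from workspace coordinates into the much smaller overview. The sketch below is a minimal illustration under our own assumptions (axis-aligned rectangles and a uniform linear scaling). It is not taken from the GroupLab implementation, and the names Rect, to_radar, and overlap_area are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    width: float
    height: float


def to_radar(viewport: Rect, workspace: Rect, radar: Rect) -> Rect:
    """Scale a viewport from workspace coordinates into radar coordinates."""
    sx = radar.width / workspace.width
    sy = radar.height / workspace.height
    return Rect(
        x=radar.x + (viewport.x - workspace.x) * sx,
        y=radar.y + (viewport.y - workspace.y) * sy,
        width=viewport.width * sx,
        height=viewport.height * sy,
    )


def overlap_area(a: Rect, b: Rect) -> float:
    """Area shared by two rectangles; 0.0 when they do not overlap."""
    w = min(a.x + a.width, b.x + b.width) - max(a.x, b.x)
    h = min(a.y + a.height, b.y + b.height) - max(a.y, b.y)
    return max(w, 0.0) * max(h, 0.0)


workspace = Rect(0.0, 0.0, 2000.0, 1000.0)
radar = Rect(0.0, 0.0, 250.0, 125.0)
print(to_radar(Rect(800.0, 400.0, 400.0, 200.0), workspace, radar))
```

A measure such as overlap_area gives one rough way to estimate how crowded the overview becomes as more collaborators' rectangles are drawn on top of one another.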

From a usability point of view, adding awareness information to a display raises a familiar tradeoff. The added information helps in coordinating work, but it becomes one more thing in the user interface that people must perceive, interpret, and make sense of (Tradeoff 9.1). When a collaborative activity becomes complex—for example, when the group is large or its members come and go often—it can be very difficult to present enough information to convey what is happening without cluttering a display. Suppose that the radar view in the upper left of Figure 9.4 contained five or six overlapping rectangles. Would it still be useful?

TRADEOFF 9.1

Awareness of collaborators’ plans and actions facilitates task coordination, BUT extensive or perceptually salient awareness information may be distracting.

A related issue concerns awareness of collaborators’ contributions over time. In Microsoft Word, coauthors use different colors to identify individual input to a shared document. These visual cues may work well for a small group, but as the number of colors increases, their effectiveness as an organizing scheme decreases.

In the Virtual School project summarized in Chapter 1, students collaborate remotely on science projects over an extended period of time, sometimes in real time, but more often through asynchronous work in a shared notebook (the Virtual School was pictured in Figure 1.3). The environment promotes awareness of synchronous activity through buddy lists, video links, and indicators of who has a “lock” on a page. It supports asynchronous awareness through a notice board that logs Virtual School interactions over time, text input that is colored to indicate authorship, and information about when and by whom an annotation was made. A good case can be made for any one of these mechanisms, but they combine to create a considerable amount of coordination information. It is still an open question which pieces of awareness information are worth the cost of screen space and complexity (Isenhour, et al. 2000).

Networks and computer-mediated communication allow distributed groups to work together. These systems increase the flexibility of work: Resources can be shared more effectively, and individual group members can enjoy a customized work setting (e.g., a teleworker who participates from home). But whenever technology is introduced as an intermediary in human communication, one side effect is that some degree of virtuality or anonymity in the communication is also introduced. Even in a telephone conversation, participants must recognize one another’s voices to confirm identity. Communicating through email or text chat increases the interpersonal distance even more.

An increase in interpersonal distance can lead individuals to exhibit personality characteristics or attitudes that they would not display in person (Sproull & Kiesler 1991). A worker who might never confront a manager about a raise or a personnel issue might feel much more comfortable doing so through email, where nonverbal feedback cues are avoided (Markus 1994). Members of Internet newsgroups or forums may be quite willing to disclose details of personal problems or challenges (Rosson 1999a). Unfortunately, the same loss of inhibition that makes it easier to discuss difficult topics also makes it easier to behave in rude or inappropriate ways (Tradeoff 9.2). Sometimes this simply means it will be more difficult to reach a consensus in a heated debate. But studies of virtual communities also report cases in which members act out fantasies or hostilities that are disconcerting or even psychologically damaging to the rest of the community (Cherny 1995; Rheingold 1993; Turkle 1995).

TRADEOFF 9.2

Reduced interpersonal communication cues aid discussion of awkward or risky concerns, BUT may encourage inappropriate or abusive ideas and activities.

Deciding whether and how much anonymity to allow in a collaborative setting is a critical design issue. If a system is designed to promote creativity or expression of diverse or controversial opinions, anonymous participation may be desirable, even if this increases the chance of socially inappropriate behavior. More generally, however, groups expect that each member is accountable for his or her own behavior, and individuals expect credit or blame for the contributions they make. These expectations demand some level of identification, commonly through a personal log-in and authentication procedure, or through the association of individuals to one or more roles within a group. Once a participant is recognized as a system-defined presence, other coordination mechanisms can be activated, such as managing access to shared resources, organizing the tasks expected of him or her, and so on.

One side effect of supporting online collaboration is that recording shared information becomes trivial. Email exchanges can be stored, frequently asked questions (FAQs) tabulated, project versions archived, and procedures of all sorts documented. The process of identifying, recording, and managing the business procedures and products of an organization is often called knowledge management. Although it is hard to put a finger on what exactly constitutes the “knowledge” held by an organization, there is a growing sense that a great deal of valuable information is embedded in people’s everyday work tasks and artifacts.

Having a record of a shared task can be helpful in tracing an activity’s history—determining when, how, and why decisions were made—or when reusing the information or procedures created by the group. Historical information is particularly useful when new members join a group. But simply recording information is usually not enough; a large and unorganized folder of email or series of project versions is so tedious to investigate that it may well not be worth the effort. Group archives are much more useful if participants filter and organize them as they go. The problem is that such archives are often most useful to people other than those who create and organize them—for example, new project members or people working on similar tasks in the future (Tradeoff 9.3).

TRADEOFF 9.3

Archiving shared work helps to manage group decisions and rationales, BUT archiving costs must often be borne by people who will not receive direct benefits.

What can be done to ensure that the people creating the archives enjoy some benefit? To some extent, this is a cultural issue. If everyone in an organization contributes equally to a historical database, then chances are higher that everyone will benefit at some point. On a smaller scale, the problem might be addressed by rotating members through a history-recording role, or by giving special recognition to individuals who step forward to take on the role of developing and maintaining the shared records.

9.2 Ubiquitous Computing

A decade ago Mark Weiser (1991) coined the term ubiquitous computing to describe a vision of the future in which computers are integrated into the world around us, supporting our everyday tasks. In Weiser’s vision, people do not interact with “boxes” on a desk. Instead, we carry small digital devices (e.g., a smart card or a personal digital assistant) just as we now carry drivers’ licenses or credit cards. When we enter a new setting, these devices interact with other devices placed within the environment to register our interests, access useful information, and initiate processes in support of task goals.

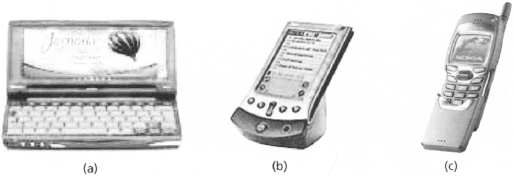

Current technology has moved us surprisingly close to Weiser’s vision. A range of small devices, such as pocket PCs, personal digital assistants (PDAs), and mobile phones (see Figure 9.5), are already in common use. Such devices enable mobile computing, computer-based activities that take place while a person is away from his or her computer workstation. Laptop and pocket PCs offer services similar to those of a desktop system, but with hardware emphasizing portability and wireless network connections. PDAs such as the Palm series use simple character recognition or software buttons and a low-resolution display. They provide much less functionality than a desktop PC, but are convenient and easy to learn. PDAs are often used as mobile input devices, such as when keeping records of notes or appointments that are subsequently transferred to a full-featured desktop computer.

Figure 9.5 Several portable computing devices: (a) The Jornada 680 pocket PC made by Hewlett Packard; (b) the Palm V from 3Com; and (c) a Nokia mobile phone.

Some of the most important advances in ubiquitous computing are occurring in the telecommunications industry. People are comfortable with telephones; most already use phones to access information services (e.g., reservations and account status), so adding email or Web browsing is not a large conceptual leap. Mobile phones have become extremely popular, particularly in northern Europe—in Finland, 90% of the population owns a mobile phone! People’s general comfort level with telephones, combined with emerging protocols for wireless communication, makes them very attractive as a pervasive mobile computing device.

But these small mobile devices raise major challenges for usability engineers. People are used to interacting with computers in a workstation environment—a dedicated client machine with an Ethernet connection, a large color bitmap display, a mouse or other fine-grained input device, and a full-size keyboard. They take for granted that they will enjoy rich information displays and flexible options for user input. Many common tasks, such as checking email, finding stored documents, or accessing Web pages, have been practiced and even automated in such environments. A small display and simple input mechanisms are a constant frustration to these well-learned expectations and interaction strategies (Tradeoff 9.4).

TRADEOFF 9.4

Small mobile interaction devices increase portability and flexibility, BUT these devices do not support the rich interaction bandwidth needed for complex tasks.

One solution to limitations in display and communication bandwidth is to cut back on the services provided—for example, PDAs are often used for personal databases of calendars, contact information, or brief notes, and these tasks have relatively modest bandwidth requirements. The problem is that as people use PDAs more and more, they want to do more and more things with them. Thus, user interface researchers are exploring techniques for scaling complex information models (such as Web sites) to a form appropriate for smaller devices.

One approach is to employ a proxy server (an intermediate processor) to fetch and summarize multiple Web pages, presenting them on the PDA in a reduced form specially designed for easy browsing. For example, in the WEST system (WEb browser for Small Terminals; Björk, et al. 1999), Web pages are chunked into components, keywords are extracted, and a focus+context visualization is used to provide an overview suitable for a small display. Specialized interaction techniques are provided for navigating among and expanding the chunks of information.
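To give a feel for the kind of processing such a proxy might perform, the sketch below splits page text into chunks and picks a few frequent words from each chunk as keywords. This is a deliberately naive illustration under our own assumptions (chunks separated by blank lines, keywords chosen by word frequency, a tiny hypothetical stop list). It does not reproduce the actual WEST algorithms.

```python
import re
from collections import Counter

# Hypothetical stop list for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "for", "on"}


def chunk_page(text: str) -> list:
    """Split page text into chunks at blank lines."""
    return [part.strip() for part in re.split(r"\n\s*\n", text) if part.strip()]


def keywords(chunk: str, limit: int = 3) -> list:
    """Return the most frequent non-stop words in a chunk."""
    words = [w for w in re.findall(r"[a-z]+", chunk.lower()) if w not in STOP_WORDS]
    return [word for word, _ in Counter(words).most_common(limit)]


def summarize(text: str, limit: int = 3) -> list:
    """Reduce a page to one keyword list per chunk, as a proxy might."""
    return [keywords(chunk, limit) for chunk in chunk_page(text)]


page = "Weather weather today.\n\nTraffic on the bridge, traffic downtown."
print(summarize(page, limit=1))
```

The reduced form is what would be sent to the small device; the user could then expand a chunk of interest to retrieve its full text.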

When mobile phones are used to access and manipulate information, designers are faced with the special task of designing an auditory user interface that uses spoken menus and voice or telephone keypad input. Of course, such systems have been in use for many years in the telecommunications industry, but the increasing use of mobile phones to access a variety of information services has made the usability problems with spoken interfaces much more salient.

As discussed in Chapter 5, people have a limited working memory, which means that we can keep in mind only a small number of menu options at any one time. When the overall set of options is large, the menu system will be a hierarchy with many levels of options and selections. Because an auditory menu is presented in a strictly linear fashion (i.e., it is read aloud), users may navigate quite deeply into a set of embedded menus before realizing that they are in the wrong portion of the tree. Usually, there is no way to undo a menu selection, so the user is forced to start all over, but now with the additional task of remembering what not to select. Because of the lack of control in these interfaces, the menu hierarchy should be very carefully designed to satisfy users’ most frequent needs and expectations.
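The relationship between the number of options per spoken menu and the depth of the hierarchy can be worked out with simple arithmetic. The sketch below assumes a balanced hierarchy in which every menu offers the same number of choices. The function names and the figures used in the example (64 services, 4 options per menu) are our own illustrative assumptions, not values from the text.

```python
def menu_depth(total_options: int, options_per_menu: int) -> int:
    """Smallest number of levels whose leaves can hold every option."""
    if total_options < 1 or options_per_menu < 2:
        raise ValueError("need at least 1 option and 2 choices per menu")
    depth, capacity = 1, options_per_menu
    while capacity < total_options:
        capacity *= options_per_menu
        depth += 1
    return depth


def prompts_heard(depth: int, options_per_menu: int) -> int:
    """Worst case: the caller listens to every option at every level."""
    return depth * options_per_menu


levels = menu_depth(total_options=64, options_per_menu=4)
print(levels, prompts_heard(levels, 4))
```

Under these assumptions, keeping each menu short enough for working memory pushes the hierarchy deeper, and a wrong turn discovered at the bottom means hearing the same prompts again from the top.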

Access to small or portable computers is an important element of the ubiquitous computing vision. But another piece of the vision is that the support is distributed throughout the environment, or incorporated into objects and tools not normally considered to be computing devices. This is often termed augmented reality; the concept here is to take a physical object in the real world—a classroom whiteboard, a kitchen appliance, an office desk, or a car—and extend it with computational characteristics.

Much current work on augmented reality is directed toward novel input or output mechanisms. Researchers at MIT’s Media Lab have demonstrated ambient displays that convey status information (e.g., traffic flow and weather) as background “pictures” composed of visual or auditory information (Ishii & Ullmer 1997; see “Tangible Bits” sidebar). A new category of interactive services known as roomware provides enhancements to chairs, walls, tables, and so on; the intention is that the people in a room can access useful information and services at just the right moment, and in just the right place (Streitz, et al. 1999). Many researchers are investigating the possibilities for devices and services that are context aware, such that features of a person’s current setting, personal preferences, or usage history can be obtained and processed to provide situation-specific functions and interaction techniques (Abowd & Mynatt 2000).

An important practical problem for augmented reality is the highly distributed infrastructure of sensors or information displays that are needed to capture and process people’s actions in the world. For example, in the NaviCam system, a handheld device recognizes real-world objects from special tags placed on them, and then uses this information to create a context-specific visual overlay (Rekimoto & Nagao 1995). But this application assumes that the relevant objects have somehow been tagged. Bar codes might be used as one source of such information, but are normally available only for consumer retail items. Researchers are now exploring the more general concept of glyphs, coded graphical elements that can be integrated into a wide variety of displayed or printed documents (Moran, et al. 1999).

Hiroshi Ishii and colleagues at the MIT Media Lab are researching ways to bridge the gap between cyberspace and the physical environment (Underkoffler & Ishii 1999; Ishii & Ullmer 1997; Yarin & Ishii 1999). They are working with three key concepts: interactive surfaces, where the ordinary surfaces of architectural space (walls, doors, and windows) serve as an active interface between the physical and virtual worlds; coupling of bits and atoms, emphasizing the integration of everyday objects that can be grasped (cards and containers) with relevant digital information; and ambient models, the use of background media such as sound, light, or water movement to present information in the periphery of human perception. The following table summarizes several projects that illustrate these concepts.

Examples of projects that are exploring the concept of tangible bits.

| Project Name | Brief Description |
| --- | --- |
| metaDesk | A nearly horizontal back-projected graphical surface with embedded sensors that enables interaction with digital objects displayed on the surface, in concert with physical objects (including “phicons” or physical icons) that are grasped and placed on the desk |
| transBoard | A networked, digitally enhanced whiteboard that supports distributed access to physical whiteboard activity, including attachment of special-purpose phicons |
| ambientRoom | A 6’ x 8’ room augmented with digital controls; auditory and visual background displays render ongoing activity such as Web-page hits or changes in the weather |
| URP | Urban planning environment that creates light and wind flow to simulate time-specific shadow, reflection, and pedestrian traffic for a physical model on a table |
| TouchCounters | Physical sensors and local displays integrated into physical storage containers and shelves, for recording and presenting usage history, including network-based access |

The integration of computational devices with everyday objects raises many exciting opportunities for situation- and task-specific information and activities. But even if the infrastructure problem can be resolved, the vision also raises important usability issues (Tradeoff 9.5). Few people will expect to find computational functionality in the objects that surround them. In the case of passive displays such as the experiments summarized in the “Tangible Bits” sidebar, this may not be too problematic. People may not understand what the display is communicating, but at worst they will be annoyed by the extraneous input. But for interactive elements such as a chair, wall, or kitchen appliance, the problems are likely to be more complex. Indeed, when a problem arises, people may be unable to determine which device is responsible, much less debug the problem!

TRADEOFF 9.5

Distributing interaction technology throughout the environment ties computing power to task-specific resources, BUT people may not expect or appreciate such services.

Another concern hiding beneath the excitement over ubiquitous computing is the extent to which people really want to bring computers into their personal lives. Many companies are exploring flexible working arrangements that allow employees to work partially or entirely from home offices. This necessarily blurs the dividing line between work and home, and the advances in mobile computing and ubiquitous devices do so even more. As homes and other locations are wired for data and communication exchange, individuals and families will have less and less privacy and fewer options for escaping the pressures and interruptions of their working lives. The general public may not recognize such concerns until it is too late, so it is important for usability professionals to investigate these future scenarios now, before the supporting technology has been developed and broadly deployed.

9.3 Intelligent User Interfaces

What is an intelligent system? In the classic Turing test, a computer system is deemed intelligent if its user is fooled into thinking he or she is talking to another human. However, most designers of intelligent human-computer interfaces strive for much less. The goal is to collect and organize enough information about a user, task, or situation, to enable an accurate prediction about what the users want to see or do. As with any user interaction technology, the hope is that providing such intelligence in the computer will make users’ tasks easier and more satisfying.

9.3.1 Natural Language and Multimodal Interaction

Science fiction offers a persuasive vision of computers in the future: For instance, in Star Trek, Captain Kirk poses a question, and the computer immediately responds in a cooperative and respectful fashion. At the same time, there has always been a dark side to the vision, as illustrated by HAL’s efforts to overthrow the humans in 2001: A Space Odyssey. People want computers to be helpful and easy to talk to, but with a clearly defined boundary between the machine and its human controller.

From an HCI perspective, an interactive system that successfully understands and produces natural language short-circuits the Gulfs of Execution and Evaluation. People only have to say what is on their minds—there is no need to map task goals to system goals, or to retrieve or construct action plans. When the computer responds, there is no need to perceive and interpret novel graphical shapes or terminology. The problem, of course, is that there are not yet systems with enough intelligence to reliably interpret or produce natural language. Thus, a crucial design question becomes just how much intelligence to incorporate into (and imply as part of) an interactive system.

The challenges of understanding natural language are many. For example, when people talk to one another, they quickly establish and rely on common ground. Common ground refers to the expectations that communication partners have about each other, the history of their conversation so far, and anything else that helps to predict and understand what the other person says (Clark & Brennan 1991; Monk in press). For example, a brief introductory statement such as “Right, we met at last year’s CHI conference,” brings with it a wealth of shared experiences and memories that enrich the common ground. One partner in such an exchange can now expect the other to understand a comment such as “That design panel was great, wasn’t it?” The speaker assumes that the listener knows that CHI conferences have panel discussions, and that this particular meeting had a memorable panel on the topic of design.

Related problems stem from the inherent ambiguity of natural language. Suppose that the comment about a design panel was uttered in a conversation between two graphic artists or electrical engineers; rather different interpretations of the words “design” and “panel” would be intended in the two cases. Listeners use the current situational context and their knowledge of the real world to disambiguate words and phrases in a fluent and unconscious fashion.

Even if an intelligent system could accurately interpret natural language requests, interaction with natural language can be more tedious than with artificial languages (Tradeoff 9.6). As discussed in Chapter 5, the naturalness of human-computer communication very often trades off with power. Imagine describing to a computer your request that a particular document should be printed on a particular printer in your building—and then compare this to dragging a document icon onto a desktop printer icon.

TRADEOFF 9.6

Natural language interaction simplifies planning and interpretation, BUT it may be less precise and take more time than artificial language interaction.

One approach to the problems of understanding natural language is to enable multiple communication modes. In a multimodal user interface, a user might make a spoken natural language request, but supplement it with one or more gestures. For example, the user might say “Put that there,” while also pointing with the hand or pressing a button (Bolt 1980). Pointing or scoping gestures can be very useful for inherently spatial requests such as group specification (e.g., removing the need for requests such as “Select the six blue boxes next to each other in the top left corner of the screen but skip the first one”). Interpreting and merging simultaneous input channels is a complex process, but multimodal user interface architectures and technology have become robust enough to enable a variety of demanding real-world applications (Oviatt, et al., 2002; see “Multimodal User Interfaces” sidebar).
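The merging step for a request such as “Put that there” can be sketched as pairing each deictic word with the pointing gesture that occurred closest to it in time. The sketch below is only an illustration under simplifying assumptions: the `Word` and `Pointing` records, the `fuse` function, and the one-second matching window are hypothetical and are not taken from Bolt's system or from any of the architectures cited above.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    time: float  # seconds since the utterance began


@dataclass
class Pointing:
    target: str  # label for whatever the pointer was over (hypothetical)
    time: float


DEICTIC = {"this", "that", "here", "there"}


def fuse(words, gestures, max_gap=1.0):
    """Replace each deictic word with the nearest unused pointing gesture in time."""
    unused = list(gestures)
    resolved = []
    for word in words:
        if word.text.lower() in DEICTIC and unused:
            nearest = min(unused, key=lambda g: abs(g.time - word.time))
            if abs(nearest.time - word.time) <= max_gap:
                unused.remove(nearest)
                resolved.append(nearest.target)
                continue
        # No gesture close enough: keep the spoken word as it was.
        resolved.append(word.text)
    return resolved


words = [Word("Put", 0.0), Word("that", 0.4), Word("there", 1.1)]
gestures = [Pointing("blue box", 0.5), Pointing("top-left corner", 1.2)]
command = fuse(words, gestures)
print(command)  # ['Put', 'blue box', 'top-left corner']
```

Even this toy version hints at why real fusion is hard: it assumes that both channels are recognized without error and that timestamps alone are enough to decide which gesture belongs to which word.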

Usability engineers often worry about the degree to which a computer system appears human or friendly to its users. A system that has even a little bit of information about a user can adjust its style to create a more personal interaction. For example, many Web applications collect and use personal information in this way. An online shopping system may keep customer databases that can be very helpful in pre-filling the tedious forms required for shipping address, billing address, and so on. However, the same information is also often used to construct a personalized dialog (“Welcome back, Mary Beth!”), with a sales pitch customized for the kinds of products previously purchased.

Current guidelines for user interface design stress that overly personal dialogs should be avoided (Shneiderman 1998). Novice users may not mind an overfriendly dialog, but they may conclude that the system is more intelligent than it really is and trust it too much. The ELIZA system (see starting vignette) is a classic example of how little intelligence is required to pass Turing’s test. This simple simulation of a psychiatrist asks questions and appears to follow up on people’s responses. But it uses a very simple language model (e.g., a set of key vocabulary words and the repetition of phrases) to create this perception.
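To make the point concrete, the following sketch shows how far a handful of keyword rules and phrase repetition can go. It is not Weizenbaum's program: the rules, the `REFLECT` table, and the function names are hypothetical and were chosen only to illustrate the technique.

```python
import re

# Hypothetical rules: (keyword pattern, response template).
RULES = [
    (re.compile(r"\bmother\b", re.I), "Tell me more about your family."),
    (re.compile(r"\bI think (.+)", re.I), "Why do you think {0}?"),
    (re.compile(r"\bI am (.+)", re.I), "How long have you been {0}?"),
]

# Swap first- and second-person words so that echoed phrases read naturally.
REFLECT = {"i": "you", "my": "your", "me": "you", "am": "are",
           "you": "I", "your": "my"}


def reflect(phrase):
    """Rewrite a phrase from the speaker's point of view to the listener's."""
    return " ".join(REFLECT.get(word.lower(), word) for word in phrase.split())


def respond(statement):
    """Answer with the first rule whose keyword appears; otherwise prompt for more."""
    for pattern, template in RULES:
        match = pattern.search(statement)
        if match:
            captured = [reflect(group.rstrip(".!?")) for group in match.groups()]
            return template.format(*captured)
    return "Please go on."


print(respond("I think my boss hates me."))  # Why do you think your boss hates you?
print(respond("I think you are wrong"))      # Why do you think I are wrong?
```

The second reply shows how quickly the illusion breaks down: the program has no grammar, only word substitution, so a slightly unexpected input produces awkward language.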

Multimodal User Interfaces

Multimodal user interaction is motivated by tasks that are complex or variable, and by the desire to provide people with the freedom to choose an input modality (modality refers to types of sensations, such as vision, sound, smell, and pressure) that is most suitable for the current task or environment. For example, speech input is quick, convenient, and keeps the hands free for other actions. Pens can be used for drawing, as well as pointing, signing one’s name, and so on. The combination of pens with speech can lead to extremely effective performance in tasks with significant spatial components such as geographical information systems (Oviatt 1997). Pens and speech are particularly useful in mobile computing, where size and flexibility are at a premium.

In addition to increasing interaction flexibility, multimodal interaction can reduce errors by increasing redundancy. Someone who names an object but also points to it is more likely to enjoy accurate responses; a warning that flashes on the screen but also sounds a beep is more likely to be received by a person engaged in complex activities. The option to switch among modalities also accommodates individual differences such as disabilities, and can minimize fatigue in lengthy interactions.

Recent advances in spoken language technology, natural language processing, and in pen-based handwriting and gesture recognition have enabled the emergence of realistic multimodal applications that combine speech and pen input (Oviatt, et al., 2002). The table below lists several such projects underway at the Oregon Graduate Institute (OGI); IBM; Boeing; NCR; and Bolt, Beranek, & Newman (BBN).

Examples of systems using multimodal input and output interaction techniques.

| System Name | Use of Multimodal Input and Output |
| --- | --- |
| QuickSet (OGI) | Speech recognition, pen-based gestures, and direct manipulation are combined to initialize the objects and parameters of a spatial (map-based) simulation. |
| Human-Centric Word Processor (IBM) | Continuous, real-time speech recognition and natural language understanding, combined with pen-based pointing and selection, for medical dictation and editing. |
| Virtual Reality Aircraft Maintenance Training (Boeing) | Speech understanding and generation are combined with a data glove for detecting hand gestures. Controls a 3D simulated aircraft in which maintenance procedures can be demonstrated and practiced. |
| Field Medic Information System (NCR) | Mobile computer worn around the waist of medical personnel, using speech recognition and audio feedback to access and manipulate patient records. Used in alternation with a handheld tablet supporting pen and speech input. |
| Portable Voice Assistant (BBN) | Handheld device supporting speech and pen recognition, using a wireless network to browse and interact with Web forms and repositories. |

Users today are more sophisticated than the people Weizenbaum (1976) observed using ELIZA. Most computer users are unlikely to believe that computers have human-like intelligence, and they often find simulated “friendliness” annoying or misleading (Tradeoff 9.7). A well-known failure of this sort was Microsoft Bob, a highly graphical operating system that included the pervasive use of human-like language (Microsoft 1992). Although young or inexperienced home users seemed to like the interface, the general public reacted poorly and Microsoft Bob was quietly withdrawn.

TRADEOFF 9.7

Personal and “friendly” language can make an interaction more engaging or fun, BUT human-like qualities may mislead novices and irritate sophisticated users.

At the same time, it is wrong to reject this style of user interface entirely. Particularly as entertainment, commerce, and education applications of computing become more pervasive, issues of affective or social computing are a serious topic of research and development (see “Social Computing” sidebar). The concept of “fun” is also gaining focus in HCI, as the scope of computing increases (Hassenzahl, et al. 2000). If a primary requirement for a user interface is to persuade a highly discretionary user to pause long enough to investigate a new service, or to take a break from a busy workday, the pleasure or humor evoked by the user interface clearly becomes much more important.

9.3.2 Software Agents

One very salient consequence of the expansion of the World Wide Web is that computing services have become much more complex. People now can browse or search huge databases of information distributed in locations all over the world. Some of this information will be relevant to a person’s current goals, but most of it will not. Some of the information obtained will be trustworthy, and some will not. Some of the useful information will be usable as is, and some will need further processing to obtain summaries or other intermediate representations. Finding the right information and services and organizing the found materials to be as useful as possible are significant chores, and many researchers and businesses are exploring the use of software agents that can do all or part of the work.

As the ELIZA system showed, humans are quite willing to think of a computer in social terms, or as an intelligent and helpful conversation partner. Many user interface designers have been alarmed by this, and try to minimize the social character of systems as much as possible (Shneiderman 1998). But recent work by Clifford Nass and colleagues on social responses to communication technologies has documented a number of ways in which people respond to computers in the same way that they respond to other people. For example, users react to politeness, flattery, and simulated personality traits (Nass & Gong 2000). These researchers argue that people will respond this way regardless of designers’ intentions, so we should focus our efforts on understanding when and how such reactions are formed, so as to improve rather than interfere with their usage experience.

In one recent set of experiments, these researchers studied people’s responses to humor in a computer user interface, as compared with the same humor injected into interactions with another person (Morkes, Kernal, & Nass 1999). In the experiment, people were told that they were connected to another person remotely and would be able to see this person’s comments and contributions to a task (ranking items for relevance to desert survival). In fact, all of the information they saw was generated by a computer; half of the participants were shown jokes interspersed with the other comments and ratings. Other people were told that they were connected to a computer, and that the computer would make suggestions. Again, half of the participants received input that included jokes.

The reactions to the “funny” versus “not funny” computer were similar in many respects to those of people who thought they were communicating with another person. For example, people working with the joking computer claimed to like it more, made more jokes themselves, and made more comments of a purely sociable nature. These reactions took place without any measurable cost in terms of task time or accuracy. Although these findings were obtained under rather artificial conditions, the researchers suggest that they have implications for HCI, namely, that designers should consider building more fun and humor into user interactions, even for systems designed for “serious” work.

Software agents come in many varieties and forms (Maes [1997] provides a good overview). For example, agents vary in the target of their assistance—an email filter is user specific, but a Web crawler provides a general service to benefit everyone. Agents also vary in the source of their intelligence; they may be developed by end users, built by professional knowledge engineers, or collect data and learn on their own. Agents vary in location; some reside entirely in a user’s environment, others are hosted by servers, and others are mobile, moving around as needed. Finally, there is great variation in the roles played by agents. Example roles include a personal assistant, a guide to complex information, a memory aid for past experiences, a filter or critic, a notification or referral service, and even a buyer or seller of resources (Table 9.1).

Table 9.1 Software agents developed at the MIT Media Lab, illustrating a number of variations in target of assistance, source of intelligence, location, and task role.

| Software Agent | Brief Description |
| --- | --- |
| Cartalk | Cars equipped with PCs, GPS, and radio communicate with each other and stationary network nodes to form a constantly reconfigured network |
| Trafficopter | Uses Cartalk to collect traffic-related information for route recommendations; no external (e.g., road) infrastructure required |
| Shopping on the run | Uses Cartalk network to broadcast the interests of car occupants, and then receive specific driving directions to spots predicted to be of interest |
| Yenta | Finds and introduces people with shared interests, based on careful collection/analysis of personal data (email, interest lists) |
| Kasbah | User-created agents are given directions for what to look for in an agent marketplace; each seeks out sellers or buyers and negotiates to try to make the “best deal” based on user-specified constraints |
| BUZZwatch | Distills and tracks trends and topics in text collections over time, e.g., in Internet discussions or print media archives |
| Footprints | Observes and stores navigation paths of people to help other people find their way around |

A usability issue for all intelligent systems is how the assistance will impact users’ own task expectations and performance. As intelligent systems technology has matured, engineers are able to build systems that monitor and integrate data to assist in complex tasks—for example, air traffic control or engineering and construction tasks. Such systems are clearly desirable, but usability professionals worry that people may come to rely on them so much that they cede control or become less vigilant in their own areas of responsibility (Tradeoff 9.8). This can be especially problematic in safety-critical situations such as power plants or aircraft control (Casey 1993). Dialogs that are abrupt and “machine-like,” or that include regular alerts or reminders, may help to combat such problems.

TRADEOFF 9.8

Building user models that can automate or assist tasks can simplify use, BUT may reduce feelings of control, or raise concerns about storage of personal data.

An overeager digital assistant that offers help too often, or in unwanted ways, is more irritating than dangerous. For example, the popular press has made fun of “Clippy,” the Microsoft Office help agent that seems to interrupt users more than help them (Noteboom 1998). Auto-correction in document or presentation creation can be particularly frustrating if it sometimes works, but at other times introduces unwelcome changes that take time and attention to correct. Reasoning through scenarios that seem most likely or important can be extremely valuable in finding the right level of intelligent support to provide by default in a software system.

A more specific issue arises when agents learn by observing people’s behavior. For example, it is now common to record online shopping behavior and use it as the basis for future recommendations. This, of course, is nothing new: marketing firms also collect data about buying patterns and target their campaigns accordingly. The usability concerns are simply that there is so much online data that is now easily collected, and that much of the data collection happens invisibly. These concerns might be addressed by making personal data collection more visible and enabling access to the models that are created.
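One way to picture “enabling access to the models that are created” is a recommender that can report what it has recorded about a person. The sketch below is a deliberately simple illustration: the `VisibleRecommender` class, its co-purchase counting scheme, and the sample shoppers are all hypothetical and do not describe any particular shopping system.

```python
from collections import Counter, defaultdict
from itertools import permutations


class VisibleRecommender:
    """Learns from observed purchases and can show users what it has stored."""

    def __init__(self):
        self.history = defaultdict(list)         # user -> items bought
        self.bought_with = defaultdict(Counter)  # item -> co-purchase counts

    def observe(self, user, basket):
        """Record one purchase and update the co-purchase counts."""
        items = sorted(set(basket))
        self.history[user].extend(items)
        for a, b in permutations(items, 2):
            self.bought_with[a][b] += 1

    def recommend(self, user, n=3):
        """Suggest items often bought together with what the user already has."""
        owned = set(self.history[user])
        scores = Counter()
        for item in owned:
            for other, count in self.bought_with[item].items():
                if other not in owned:
                    scores[other] += count
        return [item for item, _ in scores.most_common(n)]

    def explain(self, user):
        """Return the data held about this user, so collection is not invisible."""
        return {"purchases_recorded": list(self.history[user])}


shop = VisibleRecommender()
shop.observe("ann", ["tent", "stove"])
shop.observe("bob", ["tent", "lantern"])
shop.observe("cy", ["tent"])
print(shop.recommend("cy"))
print(shop.explain("cy"))
```

The `explain` method is the part that speaks to the usability concern: it costs little to build, yet it lets a person see, and potentially challenge, the basis of the recommendations they receive.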

Finally, software agents are often a focus for the continuing debates about anthropomorphic user interfaces, because it is common to render digital assistants as conversational agents. Thus, many researchers are exploring language characteristics, facial expressions, or other nonverbal behavior that can be applied to cartoon-like characters to make them seem friendly and helpful (Lieberman 1997). A particularly well-developed example is Rea, an agent that helps real estate clients learn about available properties. Rea uses information about the buyer to make recommendations. However, the recommendations are presented in a simulated natural language dialog that adds a variety of nonverbal communication cues to her output (e.g., glancing away, hand gestures, and pausing slightly; see Figure 9.6), to make the interaction seem more realistic (Cassell 2000).

Figure 9.6 A brief conversation with an embodied conversational agent that is programmed to assist in real estate tasks (Cassell 2000, 73).

The earlier point about the emergence of entertainment-oriented computing is relevant to the discussion here as well. For example, one Web-based “chat-bot” provided for visitors’ enjoyment is the John Lennon AI project, a modern variant of the ELIZA program (available at http://www.triumphpc.com/john-lennon). People can talk to this agent to see if it reacts as they would expect Lennon to react; they can critique or discuss its capabilities, but there is little personal consequence in its failures or successes. In this sense, the entertainment domain may be an excellent test bed for new agent technologies and interaction styles—the cost of an inaccurate model or an offensive interaction style is simply that people will not buy (or use for free) the proffered service.

9.4 Simulation and Virtual Reality

Like the natural language computer on Captain Kirk’s bridge, Star Trek offers an engaging vision of simulated reality—crew members can simply select an alternate reality, and then enter the “holodeck” for a fully immersive interaction within the simulated world. Once they enter the holodeck, the participants experience all the physical stimulation and sensory reactions that they would encounter in a real-world version of the computer-generated world. A darker vision is conveyed by The Matrix film, where all human perception and experience is specified and simulated by all-powerful computers.

Virtual reality (VR) environments are still very much a research topic, but the simulation techniques have become refined enough to support real-world applications. Examples of successful application domains are skill training (e.g., airplane or automobile operation and surgical techniques), behavioral therapy (e.g., phobia desensitization), and design (e.g., architectural or engineering walkthroughs). None of these attempt to provide a truly realistic experience (i.e., including all the details of sensory perception), but they simulate important features well enough to carry out training, analysis, or exploration activities.

Figure 9.7 shows two examples of VR applications: the Web-based desktop simulation on the left uses VRML (Virtual Reality Modeling Language) to simulate a space capsule in the middle of an ocean. Viewers can “fly around,” go in and out of the capsule, and so on (available at http://www.cosmo.com). On the right is an example from the Virginia Tech CAVE (Cave Automatic Virtual Environment). This is a room equipped with special projection facilities to provide a visual model that viewers can walk around in and explore, in this case a DNA molecule (available at http://www.cave.vt.edu).

Figure 9.7 Sample virtual reality applications: (a) VRML model of a space capsule floating on water, and (b) a molecular structure inside a CAVE.

A constant tension in virtual environments is designing and implementing an appropriate degree of veridicality, or correspondence to the real world. Because our interactions with the world are so rich in sensory information, it is impossible to simulate everything. And because the simulated world is artificial, the experiences can be as removed from reality as desired (e.g., acceleration or gravity simulations that could never be achieved in real life). Indeed, a popular VR application is games where players interact with exciting but impossible worlds.

From a usability perspective, maintaining a close correspondence between the simulated and real worlds has obvious advantages. Star Trek’s holodeck illustrates how a comprehensive real-world simulation is the ultimate in direct manipulation: Every object and service provided by the computer looks and behaves exactly as it would if encountered in the real world. No learning is required beyond that needed for interacting with any new artifact encountered in the world; there are no user interface objects or symbols intruding between people and the resources they need to pursue their goals.

But as many VR designers point out, a key advantage in simulating reality is the ability to deliberately introduce mismatches with the real world (Tradeoff 9.9). Examples of useful mismatches (also called “magic”; Smith 1987) include allowing people to grow or shrink, have long arms or fingers to point or grab at things, an ability to fly, and so on (Bowman in press). The objects or physical characteristics of a simulated world can also be deliberately manipulated to produce impossible or boundary conditions of real-world phenomena (e.g., turning gravity “off” as part of a physics learning environment).

TRADEOFF 9.9

Interaction techniques that simulate the real world may aid learning and naturalness, BUT “magic” realities may be more engaging and effective for some tasks.
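The physics example can be sketched in a few lines: in a simulated world, gravity is just a parameter, so a learning environment can set it to any value, including zero. The function below is a minimal sketch using a simple fixed-step integration; its name, the step size, and the time limit are assumptions made for illustration.

```python
def simulate_fall(height, gravity=9.81, dt=0.01, max_time=10.0):
    """Return seconds until a dropped object lands, or None if it never does within max_time."""
    position, velocity, elapsed = height, 0.0, 0.0
    while elapsed < max_time:
        velocity += gravity * dt   # gravity speeds the object up
        position -= velocity * dt  # the object moves toward the ground
        elapsed += dt
        if position <= 0:
            return round(elapsed, 2)
    return None


earth = simulate_fall(5.0)                # realistic: lands after roughly one second
moon = simulate_fall(5.0, gravity=1.62)   # lunar gravity: a noticeably slower fall
floating = simulate_fall(5.0, gravity=0)  # "magic": gravity switched off
print(earth, moon, floating)
```

With gravity turned off the object never lands, a boundary condition that a learner could not observe in an ordinary classroom.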

Reasoning from scenarios that emphasize people’s task goals helps to determine the level of realism to include in a VR model. In a skill-training simulation, learning transfer to real-world situations is the most important goal. This suggests that the simulation should be as realistic as possible given processing and device constraints. In contrast, if the task is to explore a DNA molecule, realism and response time are much less critical; instead, the goal is to visualize and improve access to interesting patterns in the environment. In an entertainment setting, a mixed approach in which some features are very realistic but others are mismatched may lead to feelings of curiosity or humor. In all cases, careful analysis of the knowledge and expectations that people will bring to the task is required to understand when and how to add “magic” into the world.

Many people equate VR with immersive environments, where the simulated reality is experienced as “all around” the user. Immersive VR requires multimodal input and output. For example, a head-mounted display provides surround vision, including peripheral details; data gloves or body suits process physical movements as well as providing haptic (pressure) feedback; speech recognition and generation allow convenient and direct requests for services; and an eye-gaze or facial gesture analysis tool can respond automatically to nonverbal communication. All of these devices work in parallel to produce the sensation of looking, moving, and interacting within the simulated physical setting. The degree to which participants feel they are actually “in” the artificial setting is often referred to as presence.

The overhead in developing and maintaining immersive VR systems is enormous, and usage can be intimidating, awkward, or fatiguing (Tradeoff 9.10). Stumbling over equipment, losing one’s balance, and walking into walls are common in these environments. If a simulation introduces visual motion or movement cues that are inconsistent, or that differ significantly from reality, participants can become disoriented or even get physically sick.

TRADEOFF 9.10

Immersive multimodal input and displays may enhance presence and situation awareness, BUT can lead to crowded work areas, fatigue, or motion sickness.

Fortunately, many VR applications work quite well in desktop environments. The space capsule on the left of Figure 9.7 was built in VRML, a markup language developed for VR applications on the World Wide Web. Large displays and input devices that move in three dimensions (e.g., a joystick rather than a mouse; see Chapter 5) can increase the sense of presence in desktop simulations. Again, scenarios of use are important in choosing among options. When input or output to hands or other body parts is a crucial element of a task, desktop simulations will not suffice. Desktop VR will never elicit the degree of presence produced by immersive systems, so it is less effective in tasks demanding a high level of physical engagement. But even in these situations, desktop simulations can be very useful in prototyping and iterating the underlying simulation models.

9.5 Science Fair Case Study: Emerging Interaction Paradigms

The four interaction paradigms discussed in the first half of the chapter can be seen as a combination of interaction metaphors and technology. Table 9.2 summarizes this view and serves as a launching point for exploring how these ideas might extend the activities of the science fair. For example, the intelligent system paradigm can be viewed as combining a “personal-assistant” metaphor with technology that recognizes natural language and builds models of users’ behavior. For simplicity, we have restricted our design exploration to just one design scenario for each interaction paradigm.

Table 9.2 Viewing the emerging paradigms as a combination of metaphor and technology.

| Interaction Paradigm | Associated Metaphors | Associated Technology |
| --- | --- | --- |
| Coordinating work | Management, interpersonal nonverbal communication | Network services, multimedia displays, database management |
| Ubiquitous computing | Just-in-time information and services | Wireless networks, handheld devices, microchip controllers |
| Intelligent systems | Personal assistant | Speech, gesture, handwriting recognition, artificial intelligence models and inference engines, data mining of historical records |
| Simulation and virtual reality | Real-world objects and interactions | High-resolution or immersive displays, haptic devices |

9.5.1 Collaboration in the Science Fair

Coordinating work in the science fair raises questions of multiparty interaction—what do people involved in the fair want to do together, in groupings of two or more, that could benefit from greater awareness and coordination of one another’s activities? Of course, to a great extent we have been considering these questions all along, because the science fair has been a group- and community-oriented concept from the start.

For instance, we have many examples of coordination features in the design: a mechanism that “welcomes” new arrivals, information about where other people are, an archive of people’s comments, and so on. However, in reviewing the design proposed thus far, we recognized that the judging scenario could benefit from further analysis of coordination mechanisms:

- Ralph decides to make changes on the judging form. The judging scenario describes Ralph’s desire to make this change and indicates that he is allowed to do it, as long as he includes a rationale. We did not yet consider the larger picture implied by this scenario, specifically, that a group of other judges or administrators might be involved in reviewing Ralph’s request for a change.

This coordination concern also provides a good activity context from which to explore the tradeoffs discussed earlier—awareness of shared plans and activities (Tradeoff 9.1), consequences of computer-mediated communication (Tradeoff 9.2), and managing group history (Tradeoff 9.3).

Figure 9.8 presents the judging scenario with most of the surrounding context abbreviated, and just the form editing activity elaborated. Table 9.3 presents the corresponding collaboration claims that were analyzed in the course of revising the scenario. Note that the elaboration of this subtask could be extracted and considered as a scenario on its own, but with a connection to the originating scenario that provided Ralph’s goals and concerns as motivation.

Table 9.3 Collaboration-oriented claims analyzed during scenario generation.

| Design Feature | Possible Pros (+) or Cons (-) of the Feature |
| --- | --- |
| Automatic requests for change review | + emphasizes that changes are a shared concern |
| | + simplifies notification of and access to changes |
| | - but the change maker may feel that his or her work is being audited |
| | - but recipients may feel that immediate review is expected |
| Group annotation of an editing form | + promotes consensus and confidence about individual proposals |
| | - but annotation sets that are long or complex may make the form more difficult to read and process |

This elaboration is relatively modest and builds on the services already designed. For example, Ralph is able to check for other judges because the room metaphor of the science fair has been customized for the judging activity. He had easily noticed other judges on arrival and is quickly able to check to see if they are still there. When he decides to make the change on his own, the automatic notification simplifies the process for him—he does not need to remember to tell the other judges about it. At the same time, he may feel a bit concerned that now others will be checking on his work. His colleagues in turn may feel compelled to review the change and respond. This possible disadvantage echoes a general tradeoff discussed earlier, namely, that being “made aware” of a collaborator’s plans or activities may be felt as an unwanted demand for time and attention (Tradeoff 9.1).

The review process itself is supported by annotations on the judging form, which are essentially comments on Ralph’s explanation. This is nice because the result is a group-created piece of rationale. But because the annotations appear directly on the form, there is the risk that they compromise the form’s main job, which is to document one judge’s evaluation of a project.
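The two design features analyzed in Table 9.3 can be sketched as a small data structure in which proposing a change automatically queues a review request for every other judge, and reviewers attach annotations to the change itself. This is a hypothetical illustration only: the `JudgingForm` class, its fields, and the sample judges and comments are assumptions, not part of the science fair design.

```python
from dataclasses import dataclass, field


@dataclass
class JudgingForm:
    judges: list
    changes: list = field(default_factory=list)
    inbox: dict = field(default_factory=dict)  # judge -> pending review requests

    def propose_change(self, author, description, rationale):
        """Record a change and automatically ask every other judge to review it."""
        if not rationale.strip():
            raise ValueError("a change must include a rationale")
        change = {"author": author, "description": description,
                  "rationale": rationale, "annotations": []}
        self.changes.append(change)
        for judge in self.judges:
            if judge != author:
                self.inbox.setdefault(judge, []).append(change)
        return change

    def annotate(self, change, judge, comment):
        """Attach a reviewer's comment to the change, building shared rationale."""
        change["annotations"].append((judge, comment))


form = JudgingForm(judges=["Ralph", "Judge B", "Judge C"])
change = form.propose_change("Ralph", "Add a criterion",
                             "The current form overlooks part of the project")
form.annotate(change, "Judge B", "Agreed, but keep it optional")
print(sorted(form.inbox))  # ['Judge B', 'Judge C']
```

The sketch also makes the downsides visible: Ralph has no way to make a quiet change, and each annotation lengthens the record that later readers of the form must process.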

9.5.2 Ubiquitous Computing in the Science Fair

According to the vision of ubiquitous computing, humans will be able to access and enjoy computer-based functionality whenever, wherever, and however it is relevant. For the science fair, this caused us to think about how we might push on the “containment” boundaries of the fair event, distributing parts of the fair into the world, or bringing parts of the world into the fair.

Again, we considered these options in light of the scenarios and claims already developed. Do constraints exist that are imposed by the virtual fair concept in general, or are there problems with specific features? One interesting issue that we have ignored is the implicit constraint that fair participants will have access to a computer for their activities. All of the scenarios assume this, even though they take place in school labs, home environments, office environments, and so on. But remember that a central goal for the project was to make access and participation more convenient and flexible. Does requiring access to a conventional computer really accomplish this goal?

This line of thought caused us to think about situations in which people might want to “visit” the fair without actually sitting down at a computer. Are there occasions when a participant might want to check in briefly, look at a specific piece of information, and then move on? If so, the simple act of moving to a computer and turning it on might not be worth the effort. We explored this general notion in the scenario where Mr. King coaches Sally, envisioning how he might use a mobile phone to check to see if Sally was at the fair before logging on to work with her. The resulting scenario segment appears in Figure 9.9, and the associated claims in Table 9.4.

Table 9.4 Claims analyzed while generating the ubiquitous computing scenario.

| Design Feature | Possible Pros (+) or Cons (-) of the Feature |
| --- | --- |
| Supporting a search with a telephone keypad | + builds on people’s experience with other phone-based applications |
| | + provides an important preview function when away from the full system |
| | - but it will be tedious when entering long or arbitrary search strings |
| Text-to-speech output | + makes it clear that a computer rather than a human is providing the service |
| | + reinforces that any arbitrary object or person can be checked and reported |
| | - but listening to long utterances may be difficult or unpleasant |

This scenario highlights the “just-in-time” concept associated with ubiquitous computing. The information Mr. King needs is available when and where he realizes he needs it: in the car, on his way to do something else. It also suggests that the phone has become familiar as an alternate input device; Mr. King already knows the convention of “closing off” an open-ended entry with a pound sign. However, the claims in Table 9.4 also document negative aspects of the proposed phone interface. The telephone buttons do not map well to typing out long names. And although a speech synthesizer enables the system to speak the names of arbitrary objects and people, the pronunciation and intonation of synthetic speech can be difficult or tedious to understand.
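To make the keypad tradeoff concrete, the following is a minimal Python sketch of how a phone-based name search might work. It is an illustration under stated assumptions, not the science fair's actual design: the function names (`to_digits`, `read_entry`, `find_matches`) are hypothetical, and we assume one key press per letter, with the entry closed off by a pound sign.

```python
# Hypothetical sketch of a keypad name search; all names here are illustrative.

# Standard letter groups printed on telephone keys.
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {ch: digit for digit, letters in KEYPAD.items() for ch in letters}


def to_digits(name: str) -> str:
    """Translate a name into the digits a caller would press, one per letter."""
    return "".join(LETTER_TO_DIGIT[ch] for ch in name.lower() if ch in LETTER_TO_DIGIT)


def read_entry(keypresses: str) -> str:
    """Return the digits entered before the closing pound sign."""
    entry, pound, _ = keypresses.partition("#")
    if not pound:
        raise ValueError("entry was not closed with '#'")
    return entry


def find_matches(keypresses: str, names: list[str]) -> list[str]:
    """Return every name whose digit code starts with the entered digits."""
    digits = read_entry(keypresses)
    return [name for name in names if to_digits(name).startswith(digits)]


participants = ["Sally", "Ralph", "Rachel", "Alicia", "Delia"]
print(find_matches("725#", participants))   # three presses are still ambiguous
print(find_matches("7255#", participants))  # a fourth press narrows the search
```

Because each key stands for three or four letters, a short entry can match several people (here, "725" fits both Sally and Ralph), so the caller must keep pressing keys to disambiguate. This is one way to see why long or arbitrary search strings become tedious on a keypad.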

9.5.3 Intelligence in the Science Fair

The most general metaphor of the intelligent system paradigm is assistance. So, in thinking about what this interaction paradigm might contribute to the science fair, the obvious candidates are tasks or subtasks that have special knowledge or complexities associated with them, particularly if these tasks have been affected by the introduction of online activities or information. Scanning the earlier scenarios and claims, we found the following issue:

- The selection of material for the highlights summary. It was easy for Rachel to identify the winning exhibits, because they had colored ribbons attached to them. But she then had to open each exhibit and explore it to find interesting examples of material.

We considered what sorts of models or guidance might be provided to reduce the tedium of browsing all the material in the winning exhibits. It seemed unlikely that the science fair could have an intelligent model of what is “interesting.” However, it seemed quite possible that the fair could record viewers’ interactions with the exhibits and use this information to make recommendations about what material was more or less popular. This could be a useful heuristic for Rachel (we are assuming that the people who visited the exhibits have good taste!).

The resulting scenario and claims appear in Figure 9.10 and Table 9.5. The concerns they raise are related to one of the general tradeoffs for intelligent systems: When an automated system collects and presents information about people’s activities, it becomes possible to base one’s own behavior on “data” rather than personal judgment. The possible cost is ignoring the values normally used to make such judgments, or feeling less in control and less involved (Tradeoff 9.8). There is also the more general downside that some people (e.g., the exhibitors in this case) may not want these “objective” data collected and shared.

Table 9.5 Claims analyzed while generating the intelligent system scenario.

| Scenario Feature | Possible Upsides (+) or Downsides (-) of the Feature |
| --- | --- |
| Using browsing and interaction history as an “interest” indicator | + emphasizes that perceived value of activities is a community issue |
| | + simplifies access to, and influence of, the behavior of a community |
| | - but may interfere with more traditional exhibit evaluation criteria |
| | - but may reduce people’s feeling of judgment and control |
| | - but students with exhibits judged less interesting may be demoralized |
| Multiple options for ranking activities by interest level | + reveals something about how interest rankings may be calculated |
| | + stimulates comparison and reasoning about the implied criteria |
| | - but may be confusing if widely disparate orderings are produced |

The related problem of evoking too much trust in a software agent is addressed to some extent by designing an interaction that offers different sorting options. Each sorting button reveals a piece of the model that calculates “interest” rankings. Playing with these options helps to demystify the process, because Rachel is able to make some analysis of how the advice is generated. The agent is also not introduced as an “assistant”; there is no effort to anthropomorphize the service, but rather it is treated simply as a normal science fair service.
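The idea of ranking material by recorded interactions, with several sorting options, can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the `Visit` record, the three ranking functions, and the sample log are all hypothetical, and the actual service might compute “interest” quite differently.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    """One recorded interaction with an exhibit component (hypothetical record)."""
    visitor: str
    item: str
    seconds: int


def _ranked(scores: dict) -> list[str]:
    """Order items from highest to lowest score, breaking ties by name."""
    return sorted(scores, key=lambda item: (-scores[item], item))


def rank_by_visits(log: list[Visit]) -> list[str]:
    """Sorting option 1: how many times each item was opened."""
    return _ranked(Counter(v.item for v in log))


def rank_by_unique_visitors(log: list[Visit]) -> list[str]:
    """Sorting option 2: how many different people opened each item."""
    visitors = defaultdict(set)
    for v in log:
        visitors[v.item].add(v.visitor)
    return _ranked({item: len(people) for item, people in visitors.items()})


def rank_by_time(log: list[Visit]) -> list[str]:
    """Sorting option 3: total time spent on each item."""
    totals = Counter()
    for v in log:
        totals[v.item] += v.seconds
    return _ranked(totals)


# Invented sample data, chosen so that the three options disagree.
log = [
    Visit("ann", "abstract", 30), Visit("ann", "abstract", 20),
    Visit("ann", "abstract", 10), Visit("ann", "abstract", 5),
    Visit("bob", "simulation", 400), Visit("cat", "simulation", 200),
    Visit("ann", "bibliography", 5), Visit("bob", "bibliography", 5),
    Visit("cat", "bibliography", 5),
]
for rank in (rank_by_visits, rank_by_unique_visitors, rank_by_time):
    print(rank.__name__, rank(log))
```

With this sample log, each option puts a different item first: one visitor who reopens the abstract repeatedly dominates the visit count, whereas total time favors the simulation. Exposing the options separately lets a user like Rachel see what each one measures, but it also shows how disparate orderings can arise from the same data.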

9.5.4 Simulating Reality in the Science Fair

The virtual reality paradigm has an obvious relationship to the current VSF design: It can be seen as an end point of the physical science fair metaphor. Our design uses a two-dimensional information model; maps and exhibit spaces evoke the physical world, but do not simulate it. We could have envisioned a three-dimensional information model in which visitors and judges walk around to get to the individual exhibits, with the exhibit components themselves arrayed in three dimensions.

We chose not to pursue the 3D model because it wasn’t clear what the simulated reality would add to the experience. We decided instead to expand one piece of Sally’s black hole exhibit. In lieu of the Authorware simulation, we considered what it would be like if she had created a demonstration in VRML. In this case, there seemed to be a legitimate application of the extra realism: By providing visitors with a vivid three-dimensional experience of being “sucked into” a black hole, the overall exhibit would have much more impact.

We explored this elaboration in the visit scenario of Alicia and Delia, focusing on just their encounter with this new black hole simulation. The resulting scenario and associated claims appear in Figure 9.11 and Table 9.6.

Table 9.6 Claims analyzed for the virtual reality interaction.

| Scenario Feature | Possible Upsides (+) or Downsides (-) of the Feature |
| --- | --- |
| Using keyboard arrow for 3D controls | + makes it possible for all viewers to explore the visual model |
| | + leverages familiarity with arrow keys in other spatial tasks |
| | - but provides a poor mapping to the third (depth) dimension |
| Three-dimensional movement through a simulated space | + reinforces the connection of the scientific phenomenon to the real world |
| | - but some viewers may not know how to move in space |
| | - but it may be tedious to identify and navigate to the “interesting” spots |

For this particular application, we did not want to consider highly immersive virtual reality, because most visitors will be coming from home and will not have any special input or output devices. Thus, we proposed a simple mapping of the three dimensions to the arrows found on all computer keyboards. This ensures that anyone with a normal keyboard can navigate in Sally’s virtual model.
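One way such a mapping could look is sketched below in Python. The scenario does not spell out the exact key assignments, so everything here is an assumption made for illustration: the `Position` class, the `move` function, the step size, and especially the use of the Shift key to reach the depth dimension.

```python
from dataclasses import dataclass


@dataclass
class Position:
    """A viewer's location in the simulated space (hypothetical model)."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


STEP = 1.0  # assumed distance moved per key press

# Assumed mapping: four arrow keys cover left/right and up/down directly,
# and holding Shift reuses the up/down arrows for depth (forward/back).
MOVES = {
    ("left", False): (-STEP, 0.0, 0.0),
    ("right", False): (STEP, 0.0, 0.0),
    ("up", False): (0.0, STEP, 0.0),
    ("down", False): (0.0, -STEP, 0.0),
    ("up", True): (0.0, 0.0, STEP),
    ("down", True): (0.0, 0.0, -STEP),
}


def move(pos: Position, key: str, shift: bool = False) -> Position:
    """Return the new position after one key press; unmapped keys do nothing."""
    dx, dy, dz = MOVES.get((key, shift), (0.0, 0.0, 0.0))
    return Position(pos.x + dx, pos.y + dy, pos.z + dz)


here = Position()
here = move(here, "up")              # move up
here = move(here, "up", shift=True)  # move forward, into the scene
print(here)
```

The sketch makes the weakness of the mapping visible: four keys must cover six directions, so the third dimension can be reached only through an extra convention (here, a modifier key) that viewers have to discover or be told about.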

But we made this decision with the understanding that it was not a terrific match to the task, and that some individuals might have considerable trouble figuring out how to carry out these navigation movements.

In the miniscenario described here, Delia actually enjoys her exploration of the three-dimensional space, and at some point simply finds herself “sucked into” the black hole. But during claims analysis we also imagined someone who spent considerable time in the space but never encountered the black hole. Would this person have been as satisfied as Delia? Probably not. In fact, this is a common issue in virtual reality systems. People get around these spaces by walking or flying. If a task consists of searching a large open space for a few key elements, the simple task of navigating the space can become quite tedious.

9.5.5 Refining the Interaction Design

The emphasis in this chapter has been on emerging interaction paradigms. By definition, these interaction techniques are not yet standard practice; much of the underlying technology is still being developed. As a result, many of the design ideas they inspire may not be feasible for immediate refinement and implementation. They are more likely to be useful in discussions that take a longer view, or in thinking about how a system may evolve in future releases.

The science fair elaborations described in this chapter are not extreme, but they vary considerably in how much extra work is implied. For example, the mobile access via cell phone implies an entirely new user interface customized for that device. We have glossed over the extensive design process that would be necessary to define the features such an extension to the normal science fair should have. In contrast, the “What’s interesting” service would be relatively straightforward to design or implement, because information about people and their activities is already being stored as part of the overall MOOsburg infrastructure. Similarly, the proposed VRML extension to the black hole exhibit requires only that the science fair be prepared to support documents of this type, and there are already browsers available that do this.

Summary and Review

This chapter has explored interaction paradigms that go beyond the conventional WIMP style of user interface. The case study was elaborated to demonstrate how the metaphors and technology of computer-supported cooperative work, ubiquitous computing, intelligent systems, and virtual reality might be used to extend the science fair design. Central points to remember include:

- Emerging interaction paradigms influence all aspects of action planning and evaluation.

- Facilitating awareness of collaborators’ activities, both current and past, is a key challenge in the design of collaborative systems.

- As computers become smaller and more distributed, user interfaces are becoming more specialized, and the services provided less predictable to their users.

- People often treat computer systems as if they had human characteristics or intelligence, particularly when the system uses natural language or personalized user interfaces.

- The automatic storage and analysis of personal data are becoming a social issue as software agents are being developed to assist in many different service areas.

- An important challenge for virtual reality environments is determining when to go beyond a simple simulation of reality.

Exercises

- Analyze the collaborative project work you have been doing in this course. List the tasks that you carry out on a regular basis, and the roles and responsibilities of the group members in each task. Then describe the techniques that you use to coordinate your work, to stay in touch, share progress or status, distribute updates or documents, and so on. What are the biggest problems in coordinating your team’s work?

- Develop a proposal for a “smart kitchen.” Draw (by hand or using a graphics editor) your hypothetical kitchen, using arrows and labels to identify devices. Provide a key to the drawing that names each device and briefly describes its function.

- Try out the ELIZA program (or a more modern chat-bot). Experiment with it enough to make and test some hypotheses about how it is processing your input. Write up your hypotheses and how you tested them.

- Surf the Web to find two examples of VRML in use, including one that you judge to be a useful application, and a second where the VR is gratuitous or even contrary to task goals. Describe the two cases and provide a rationale for your comparative judgment. (Be careful not to fall into the trap of thinking that if it “looks good,” then it must be useful.)

Project Ideas

Elaborate your online shopping scenarios to explore the four interaction paradigms discussed in this chapter. Try to create at least one example each of coordinating work, ubiquitous computing, intelligent systems, and virtual reality in the context of your current design. Be careful not to force the new interaction techniques; use claims analysis to reason about the situations where the alternative interaction styles can be of most benefit. Illustrate your proposals with sketches or storyboards if relevant.