Video production has always been a field that offers both excitement and opportunities for creativity, leadership, and personal growth. The field attracts all kinds of people—artists, technicians, organizers, and problem solvers, to name just a few. The process of putting audio and video elements together into something that tells a coherent story is more difficult than many people realize, and it is often more rewarding than they expect. It sounds like a cliché to say that video production offers something for nearly everyone, but in fact it is true.

As a field, video production has always been subject to rapid change. Since it is so dependent on equipment, it is always evolving in new and transformative ways as technology makes the tools smaller, faster, lighter, more efficient, less expensive, and more capable. However, the changes that have taken place over the past decade have been remarkable even by the standards of an industry that is used to rapid innovation. These changes—which involve the development and use of digital-based equipment and the availability of fast electronic connections between video producers and consumers—have affected both the way video production is done and the way people are viewing video programming.

Digital technology is described in greater technical detail later in this chapter and in various places throughout the book. For now, let’s just say that it allows video and audio equipment to function more like—and be able to interact more effectively with—computers. Digital technology has also been the driving force in making various production equipment more capable and less expensive. This is perhaps nowhere better seen than in the area of smartphones, which today offer not only the ability to access the Internet, countless apps, and messaging capabilities, but also in many cases function as very capable video cameras. In fact, some of today’s smartphones can record video rivaling the quality of that shot by equipment that would have cost thousands of dollars several years ago. This kind of development has been mirrored throughout the industry, as low-cost, medium-cost, and high-cost video and audio equipment has gotten better, more accessible, and easier to use. In a modern video production facility, everything is digital—from the time the light images go into the camera until they are shown on a monitor, and from the time sound waves are picked up by a microphone until they are broadcast over a speaker. As you will see throughout the book, this all-digital workflow affects nearly every aspect of video production.

The increased availability of fast connections between video producers and consumers has created changes that are perhaps even more profound. These connections—available via physical wire links or wirelessly through the air—are fundamentally changing the way people access and consume video content. You now no longer need to have an actual television to watch video—you can do it on your computer, tablet, or smartphone. Increasingly, you needn’t even watch your video according to a television station or network’s schedule—you can watch your favorite program when you want, you can watch it over and over, and you can even “binge watch” an entire season of a show in one afternoon if you like. An increasing number of consumers are “cutting the cable”—cancelling their cable television subscriptions and instead using the Internet and streaming services such as Amazon and Netflix for their video viewing. The rise of these new content producers has created increased demand for new programming and, hence, increased demand for video production.

In addition, digital technology and fast connections have made the range of types of video greater than ever before. This refers not only to subject matter (drama, documentary, comedy, etc.) but also to the source of the video content and the level of production quality. Digital technology has in many ways democratized video production, providing average people with access to near-professional level tools. That means that more people are creating and sharing video online through sites like YouTube and Facebook and that you can access videos from a wide variety of different sources—not just television networks or movie studios. This video content may vary widely in production quality, but today’s consumer for the most part has learned to accept video that is perhaps somewhat poorly lit or that has some shaky camera shots as long as it is conveying something interesting. In fact, someone may spend two hours watching a multimillion-dollar movie on his or her laptop, then go directly to YouTube and watch a series of cat videos that cost almost nothing to create. As long as people find the content compelling, they will often overlook lapses in quality.

That is not to say, however, that you shouldn’t strive for quality no matter what level of video production you’re doing. There are basic concepts and aesthetic considerations common to virtually all levels of video production, such as how to focus or how to properly frame a shot. This book is designed to introduce you to those concepts and considerations, providing a foundation for video production at any level. It concentrates on traditional, studio-based video production but also acknowledges more informal production techniques. Video production is an exciting field. Whether you are putting together a video of your college graduation ceremony to upload to YouTube or directing a hit show for network TV, you will find that the art of combining audio and visual elements into a meaningful whole is a creative process that is both stimulating and rewarding. In video production, you must interact with people and equipment. The purpose of this book is to give you the skills to do both.

Types of Productions

Imagine yourself in a cable network TV studio directing a show where famous actors discuss their careers and films. Imagine yourself interviewing dignitaries in China to report on the growth of the Chinese economy. Imagine yourself on the sidelines at the Super Bowl, hand-holding a camera to capture comments from the winning coach. Imagine yourself editing shots of an emotional medical drama, building the tension in the story as you work. Imagine yourself producing a web-based show that goes viral and becomes a national sensation. Imagine yourself in Antarctica experimenting with various microphones to pick up the noises made by penguins.

The above examples are just a sampling of the many ways to produce and classify video material. Video production can be divided by genre (drama, documentary, news, or reality, for example) or by method of distribution (broadcast network TV, cable TV, satellite TV, or Internet). However, for the purposes of learning basic production techniques and disciplines, it is most useful to divide it into studio production, field production, and remote production.

Studio production uses either a purpose-built studio or other controlled, indoor environment to produce video content. The studio will also be accompanied by a control room, where video and audio shot in the studio are channeled and mixed. News programs, game shows, talk shows, morning information programs, situation comedies, and soap operas are a few of the program types that are generally shot in a studio. Field production takes place outside of a controlled studio environment, venturing into the “real world” to gather footage. While field production offers less control over the shooting environment, it allows a broader range of scenes to be captured. Documentaries and dramas are shot primarily using field production, and many studio productions may also contain material shot through field production: interviews with witnesses of a robbery that are included in a newscast, the scene at an airport filmed for a soap opera, or footage of vegetables being grown on a farm used as part of a cooking show. Remote production is a combination of studio and field production. The control room is housed in a truck or set up temporarily in a designated area, and the “studio” is in the “field”; it might be a football stadium, a parade route, or an opera house.

Studio Production

Studio production is the main focus of this book, but many of the principles related to the studio apply equally well to field and remote production. The main difference is that field and remote production must take into account the technical problems created by the “real world,” such as rain, traffic noise, and unwanted shadows. The studio is a controlled environment specially designed for television production.

The Studio

This section describes an ideal studio; the studio you use may not have all of these features, or it may have additional features. To begin with, a television studio is typically housed in a large room at least 20 feet by 30 feet without any posts obstructing its space. (See Figure 1.1.) Usually, a set occupies one end of the studio, and the cameras move around in the remaining space; if a studio is large enough, there may be several sets, with the cameras moving among them. The floor should be level, usually made of concrete covered with linoleum or another surface so camera movement is smooth. The ceiling should be 12 to 14 feet high to accommodate a pipe grid from which lights are hung.

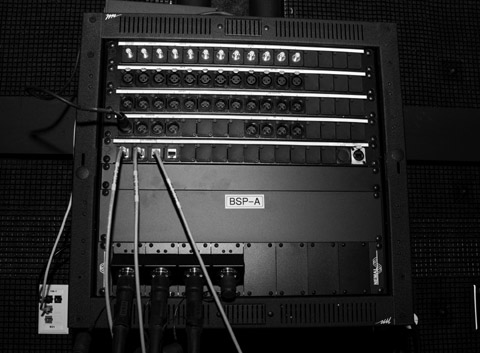

A studio is best located on the ground floor with large doors that open to the outside so set pieces and other objects can be brought in easily. Walls and ceilings should be soundproofed. The air-conditioning and ventilation systems need to be quiet so that the sound is not picked up by the microphones. Because lighting and other equipment require a lot of power, studios need to have access to much more electricity than the average room. Wall outlets must be provided not only for power but also to connect cameras, microphones, and other equipment to the control room, as shown in Figure 1.2.

Performers—who are often called talent—work in the television studio while one or more cameras pick up their images and microphones pick up the sound. Dedicated lights are usually necessary so that the cameras can pick up good images. Each camera’s shot is different so that the director, the person in charge of a studio production, can choose which shot to use.

A studio usually has TV sets called monitors that allow crew members and talent to see what is being shot. There is also a studio address loudspeaker system that personnel in the control room (see below) can use to talk to everyone in the studio, both talent and crew. This, of course, cannot be used during production because the microphones would pick up the sound as part of the program audio. That is why there is also an intercom system that allows various crew members to communicate with one another over headsets while a program is being recorded or aired. Many studios also have an IFB (interruptible foldback) system that enables control room personnel to talk to the talent, who wear small earpieces.

The Control Room

The control room, which is the operation center for the director and other crew members, is usually located near the studio. (See Figure 1.3.) It usually has a raised floor so the cables from the studio equipment can be connected to the appropriate pieces of equipment in the control room. Often, a window between the studio and control room allows those working in the control room to see what is happening in the studio. This window consists of two panes of glass, each of which is at a slightly sloped angle so that sounds do not reflect directly off the glass and create echo. The window should be well-sealed so sounds from the control room do not leak into the studio. Some control rooms, especially those used in teaching situations, have an area set aside for observers.

The control room contains a great deal of equipment, which will be discussed in detail in the following chapters. Basically, the images from the cameras go to a switcher that is used to select the pictures that can be sent to the recording equipment (which in school settings is often located in the control room). The sound picked up by the microphones goes to an audio console, where the volume can be adjusted and sounds can be mixed together. A graphics generator is used to prepare titles and other material that need letters, numbers, and figures. The graphics, too, go through the switcher (or are sometimes incorporated in the switcher) and can be combined with images from the cameras. Other inputs that might go to the switcher and audio board include a satellite feed and previously produced material that is fed into a show from a computer-based video server.

As noted, the control room will have a series of monitors, generally one for each input to the switcher and several to show what has been selected to be recorded or go out over the air. There are also speakers for audio and headsets so that the equipment operators can be on the intercom. In addition, numerous timing devices are used to ensure that the program and its individual segments will be the right length. The dimmer board that controls the studio lights may be in the control room or in the studio. The same is true for the controls for the prompter, the device that displays the script in front of the camera lens so that talent can read from it. There may also be camera control units (CCUs) that adjust settings on the studio cameras, such as the amount of light coming into the lens.

The trend in studios is to incorporate more and more computer controls that combine and automate tasks. This means that the people operating equipment need to understand various functions—for example, zooming the camera, adjusting the audio levels, composing graphics, dimming lights, and operating the switcher. A person who is a specialist in one field, such as audio, will sometimes be less valuable in the studio situation than a generalist who understands the techniques and disciplines needed to operate all the equipment.

Other Studio-Related Spaces

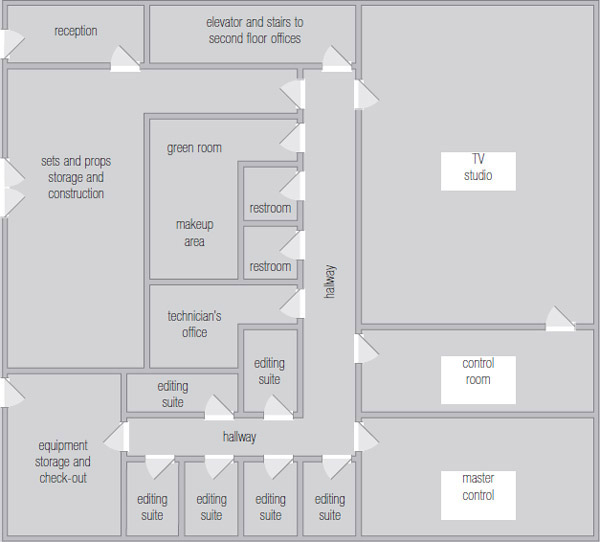

Most studios—whether they are housed in a dedicated production facility or are part of a television station or other entity—also have accompanying spaces where other production-related work is performed. The floor plan of a typical production facility is shown in Figure 1.4.

Studios that are part of TV stations or other entities that send a signal into the airwaves or to some other outside destination have an area called master control. The end product from the control room goes to master control, where it is processed in various ways to be sent on its journey. Sometimes programs and commercials are stored on large computer servers and are sent out from master control. Master control is also where satellite feeds or feeds from remote locations are received.

Most studio complexes have editing suites where material shot either in the studio or in the field can be edited. They may also have an area for storing portable and infrequently used equipment and an area where sets and props can be built and stored. Because constructing sets is noisy, this area is often some distance from the studio, but storage is placed as close as possible.

A green room is a waiting area for people about to go on a program, such as talk-show guests or game-show contestants. It is called a green room because the walls are often painted green in line with the theory that the color will relax performers. A makeup room may also be found near the studio. As a student, you probably can’t change how your school’s studio facility is designed, but you can decide what features you like best and remember them in case you have a chance during your career to give input regarding the building or remodeling of a facility.

Makeshift Studios

In some production situations, a fully functioning studio and its ancillary spaces are not needed. For example, a political blogger might just need an area where she can interview politicians or other newsmakers and then post the video to her site. The finished video may just need to be a simple head and shoulders shot of the interviewee, edited to perhaps a minute or two. In this case, all that is really needed is a space, a camera, a microphone, and some relatively simple lighting. This space could be set up in the corner of a small office or perhaps even in an area in the blogger’s apartment; the specific area might be chosen based on natural lighting or sound quality. A makeshift studio might not be suitable for producing a network drama, but it can be perfectly fine for certain kinds of lower-budget video production.

Field Production

Field production often involves shooting and recording with a single camcorder. (See Figure 1.5.) The image that comes through the lens is recorded directly, bypassing the need for a switcher. Sound is recorded with a microphone, but it, too, is usually recorded directly.1 Later, the desired images and sounds are edited together in the proper order.

Because the “real world” is both the studio and the control room, there may be no need for a set, and the natural lighting from the sun or the lights in the room may suffice. This is usually the case for news and documentaries where you want to show the actual natural environment. But for other programs, especially dramas and comedies, you may find that natural lighting and sets are inadequate and that you need to spend a good deal of time working to combine available natural light with additional lighting you set up. Similarly, getting clean sound can be a challenge when doing field production, as unwanted extraneous noises may interfere with what you want to record.

In general, field production uses a smaller crew than studio production because there is less equipment. In fact, some news crews consist of one person who sets up the camera, attaches the microphone, and then steps in front of the camera to report the story. Various types of field production will be discussed in more detail in Chapter 12.

Remote Production

Remote production, as noted previously, is a combination of studio and field production, although it resembles studio production in that the outputs of the cameras are fed into a switcher and then either go out live or are recorded. However, remotes, such as the coverage of a football game, can be more complicated than studio productions and require a larger crew. In fact, sports productions (or parades or awards ceremonies) usually require many more camera operators than a studio production because of the size of the venue and all the angles that must be covered. As with field production, the location’s sound and lighting may create challenges that will need to be overcome.

The control room will either be in a truck or set up in a designated area convenient to the production. (See Figure 1.6.) These control rooms often resemble studio control rooms, albeit a little more cramped. There may be more recording and playback devices because of the need for instant replays and feature inserts. Editing equipment may also be used during production, for example, to put together game highlights to be shown during halftime. The control room may have the capability to send footage back to master control or to uplink it to a satellite.

Sometimes studio, field, and remote production are all used during the course of a program. For example, a newscast coming from a studio may include an edited story of a fire that was shot in the field earlier in the day as well as a live feed from a remote unit about to start televising a baseball game.

The Production Path

Another way to look at production is to consider a model that consists of five basic control functions related to audio and video—transducing, channeling, selecting/altering, monitoring, and recording/playback. (See Figure 1.7.) This isn’t the only model of production, but it serves as an appropriate overview of the video process.

Transducing

Transducing involves converting what we hear or see into electrical energy, or vice versa. For example, a microphone transduces spoken sound into electrical waveforms that can travel through wires and be recorded. A diaphragm within the microphone vibrates in response to sound waves and creates a current within electronic elements of the mic (see Chapter 7). It vibrates differently depending on whether the sounds are loud or soft, high-pitched or low-pitched. In essence, the microphone arranges electrons in a type of “code” that represents each particular sound. These electrons then travel through equipment as electrical energy until they come to a speaker. The speaker does the opposite of what a microphone does; it transduces the electrical waveforms back into sound waves that correspond to those originally picked up by the microphone.

A camera operates in the same way. It transduces light waves into electrical waveforms that can be stored or moved from place to place. When this electrical energy reaches a video monitor, it is transduced into a moving picture that the eye can see. Whether the production is studio, field, or remote, microphones and cameras act as transducers.

Channeling

Channeling refers to moving video and sound from one place to another. In television studios this is typically accomplished over wires called cables. Outlets in the studio allow the cables for the cameras and mics to run through the walls to the control room rather than being strewn all over the studio floor (see Figure 1.2). The most common way for the electrical signal to leave or enter a piece of equipment is through a connector. For example, one end of a camera cable will plug into a connector on the camera, and the other end will plug into a connector in the wall.

The channeling in studios and remote trucks is fairly similar. The walls of the truck and studio have places to plug in cameras and microphones, and these signals will then be transported through connectors and wires to audio consoles, switchers, recorders, monitors, and other equipment.

As you will see throughout this book, modern video production equipment usually uses digital signals. This means that audio and video information can travel over computer network connections. One advantage of these connections is that information can travel not just within the confines of a single room or building but also across town or to the other side of the world. Increasingly, channeling can be accomplished without wires at all, by using the airwaves in the same manner as a home wireless (Wi-Fi) network.

In the field, channeling is generally much simpler. Because the camera and recorder are one piece of equipment, external wires for video are often unnecessary. Although some cameras have built-in mics, an external microphone needs channeling to the camcorder, usually through a wire and connector.

Selecting and Altering

Signals that are transduced by microphones and cameras are usually channeled to an audio console or a switcher, where they can be selected. The switcher has buttons, each of which represents one of the video signals—camera 1, camera 2, server 2, graphics generator, satellite feed, and so forth. The person operating the switcher pushes a button to select the appropriate signal. Most switchers also allow for altering pictures to some degree. For example, you can take the picture from camera 1 and squeeze it so it only appears in the upper left-hand corner of the frame. (This will be explained in more detail in Chapter 9.)

Similarly, the audio board can be used to select one sound to go over the air, or it can mix together several sounds, such as music under a spoken announcement. Most audio consoles can also alter sounds, such as making a person’s voice sound thin and tinny, perhaps to represent a character from another planet.

Once again, these functions are similar for studio and remote production. In field production, sounds and pictures are also selected and altered, but usually this is done during the editing process, after the material has been shot. Most editing software can select, mix, and alter, so that the end result looks or sounds just like what would come from a switcher or an audio console.
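The selecting and altering functions described above can be sketched in a few lines of Python. This is only an illustration, not how any particular switcher or audio console works: pictures are represented as small grids of numbers, sounds as short lists of sample values, and the function names (`select`, `squeeze_upper_left`, `mix`) and the default music gain are assumptions made up for this example.

```python
def select(sources, button):
    """Selecting: return the picture from the source whose button was pressed."""
    return sources[button]


def squeeze_upper_left(frame, blank=0):
    """Altering: shrink a picture to half size and place it in the
    upper left-hand corner of an otherwise blank frame of the same size."""
    height, width = len(frame), len(frame[0])
    out = [[blank] * width for _ in range(height)]
    # Keep every other pixel in each direction (a crude way to shrink).
    for y in range(0, height, 2):
        for x in range(0, width, 2):
            out[y // 2][x // 2] = frame[y][x]
    return out


def mix(voice, music, music_gain=0.25):
    """Mixing: add music at reduced volume under a spoken announcement."""
    return [v + music_gain * m for v, m in zip(voice, music)]


# Hypothetical inputs: two tiny 4 x 4 "camera" pictures.
camera_1 = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
camera_2 = [[0] * 4 for _ in range(4)]
sources = {"camera 1": camera_1, "camera 2": camera_2}

program = squeeze_upper_left(select(sources, "camera 1"))
```

In field production, editing software would apply the same kinds of operations after the material has been shot rather than while it is being shot.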

Monitoring

Monitoring allows you to hear and see the material you are working with at various stages along the way. In addition to a monitor for each signal, a control room normally has a preview monitor, which is used to set up effects before they go out on the air, and a program monitor to show what is currently being sent out from the switcher.

Speakers are used for audio monitoring; some are used to hear the current program audio, while others are used to monitor the sound from a particular piece of equipment. Sometimes, the sound is fed not into a speaker but only into the audio operator’s headset. In that way the sound does not interfere with what crew members are saying to each other.

Pictures and sound can also be monitored with specialized electronic equipment. A VU (volume unit) meter, for example, shows how loud audio is, and a waveform monitor shows the brightness levels of a camera image. These pieces of equipment show things you cannot necessarily see or hear by using a video monitor or speakers (see Chapters 5 and 7).

Studios and trucks both have many monitoring devices, while monitoring in field production is often limited to seeing the picture you are taking through the display or viewfinder of the camera and listening to the audio you are recording through headphones plugged into the camera.

Recording and Playback

Recording equipment retains sound and picture in a permanent electronic form for later playback (see Chapter 10). For many years the only material available for recording and playing back audio and video was tape. However, today tape is seldom used, as other forms of recording media—such as hard drives, optical discs, and solid state media—have taken its place.

Some of the equipment discussed in this section performs more than one function. For example, some recording devices allow for several channels of audio and enable you to select which sound you want. Also, monitors act as transducers. There is no need to get hung up on categorizing each piece of equipment specifically according to its basic control function, but it is necessary to acknowledge that both picture and sound go through a process. When you are sending someone’s voice into a microphone, for example, you should take into account the way the sound might be channeled, selected, altered, monitored, recorded, and played back, so that you record it in a manner that will allow it to reach the final stage properly. The same is true for an image created by a camera.

Convergence and the Digital Age

During your professional career in video production, one thing will be certain—change. As noted earlier, one of the most important changes currently taking place is the convergence of media forms made possible by digital technology.

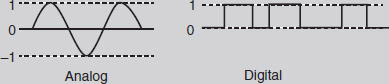

Differences between Analog and Digital

Both sound and light travel through the air naturally in the form of waves. For many years, the transducing process always resulted in electronic signals for sounds and video that were in the form of waves as well. These signals were called analog because the electronic waveform that resulted from the transducing process was analogous to the original sound or light wave. (See Figure 1.8.) In other words, it contained representations of the same essential elements—such as loudness, brightness, pitch, and color—that characterized the original sound or light wave. These representations varied continuously over a range of values; for example, a hypothetical analog signal might, at one moment, have a value of 8.64 and then, a second later, have a value of 10.37. This analog signal could travel through other equipment, such as switchers, audio consoles, and video recorders, eventually reaching a speaker and television screen.

Digital video technology, on the other hand, is based on the binary numbering system, which means there are only two possible values: 0 (off) or 1 (on). (See Figure 1.8.) Using digital technology, the original analog waveform (in the form of light and sound waves) is measured at very fast intervals, and the results are converted to a series of on-off pulses in a process called sampling. The individual on-off pulses are called bits, which are combined into groups of eight that are called bytes. A thousand bytes is a kilobyte, a thousand kilobytes is a megabyte, a thousand megabytes is a gigabyte, and a thousand gigabytes is a terabyte. A digital signal is often referred to as a bitstream, and it can be sent from one piece of digital equipment to another until it reaches the speaker or video screen. Here it must be converted back into analog because the human ear cannot hear and the human eye cannot see digital bits.
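The sampling process can be illustrated with a short Python sketch. The numbers here are assumptions chosen to keep the example small: a simple one-cycle-per-second wave stands in for the analog signal, it is measured only 8 times per second, and each measurement is stored as one byte (8 bits). Real equipment measures the signal far more often than this.

```python
import math


def sample_and_quantize(signal, duration_s, sample_rate_hz, bits=8):
    """Measure the signal at regular intervals and convert each
    measurement to a whole-number code that fits in `bits` bits."""
    levels = 2 ** bits
    codes = []
    for n in range(int(duration_s * sample_rate_hz)):
        t = n / sample_rate_hz
        value = signal(t)  # assumed to lie between -1.0 and 1.0
        codes.append(round((value + 1.0) / 2.0 * (levels - 1)))
    return codes


def to_bitstream(codes, bits=8):
    """Write each code as a fixed-length group of 0s and 1s."""
    return "".join(format(code, f"0{bits}b") for code in codes)


def tone(t):
    """A hypothetical analog wave that completes one cycle per second."""
    return math.sin(2 * math.pi * 1.0 * t)


codes = sample_and_quantize(tone, duration_s=1.0, sample_rate_hz=8)
bitstream = to_bitstream(codes)
size_in_bytes = len(bitstream) // 8  # eight bits per byte
```

Each of the eight measurements becomes one byte, so one second of this toy signal occupies eight bytes. Raising the sampling rate or the number of bits per sample captures the original wave more faithfully but produces a larger bitstream.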

Why go to all the trouble to convert from analog to digital when digital must, in the end, be converted back to analog? One reason is the ability to duplicate digital signals with a consistent quality. An analog signal, as it moves from one place to another, deteriorates slightly. The waves representing the characteristics waver and slide, so that the end product is not exactly like the original wave. If you have seen an analog tape containing material that has been copied several times, you have seen the result of this deterioration—the colors run together, and the picture is washed out. This does not happen with digital technology. The discrete 0–1 pulses representing a byte do not change. They are the same at each stage of the production process. The difference is akin to trying to redraw the waves of an analog signal by hand—you are bound to draw them a little differently each time—as opposed to copying down a series of ones and zeroes in the correct order. In addition to duplication superiority, digital technologies create sharper images and crisper sound than analog. Finally, unlike analog signals, digital signals can be stored, transmitted, and manipulated by computer equipment.
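The difference in duplication quality can be shown with a toy model in Python. It assumes, purely for illustration, that every analog copy adds a small random error to each value of the wave, while a digital copy reproduces each bit exactly; the noise amount, the number of copies, and the function names are invented for this sketch, and real analog deterioration is more complicated.

```python
import random


def analog_copy(wave, rng, noise=0.02):
    """Copy an analog wave: each value picks up a small random error."""
    return [value + rng.uniform(-noise, noise) for value in wave]


def digital_copy(bits):
    """Copy a digital signal: each 0 or 1 is reproduced exactly."""
    return list(bits)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
original_wave = [0.0, 0.5, 1.0, 0.5, 0.0]
original_bits = [0, 1, 1, 0, 1, 0, 0, 1]

wave, bits = original_wave, original_bits
for _ in range(10):  # make a copy of a copy, ten times over
    wave = analog_copy(wave, rng)
    bits = digital_copy(bits)

# How far the tenth-generation analog copy has drifted from the original.
drift = max(abs(a - b) for a, b in zip(original_wave, wave))
bits_unchanged = bits == original_bits
```

After ten generations the analog values no longer match the original, while the digital bits are identical to those in the first version.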

High-Definition Television

Digital technology has been instrumental in the development of high-definition television (HDTV or HD). HDTV’s superior picture quality allowed it to rapidly replace its predecessor, an analog format called NTSC, or standard-definition television, that was first developed in the 1930s. There are actually a number of different HDTV formats, which are also referred to as ATSC. These are discussed in more detail in Chapter 5.

Some ATSC formats have much higher resolution than NTSC, and most use a wider aspect ratio (the ratio of screen width to screen height) than NTSC. Because ATSC formats have become dominant in nearly all areas of professional television production, this book concentrates on equipment and techniques that relate to ATSC. However, given that some universities may not yet have fully converted from NTSC systems, those systems are also mentioned.
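As a brief worked example, an aspect ratio can be computed by reducing a frame's width and height to their simplest whole-number proportion. The frame sizes below are assumptions chosen for illustration and are not taken from this chapter: 1920 by 1080 is a common high-definition frame size, and 640 by 480 is used here as a stand-in for a standard-definition picture.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce width and height to their simplest whole-number ratio."""
    divisor = gcd(width, height)
    return (width // divisor, height // divisor)


hd_ratio = aspect_ratio(1920, 1080)  # (16, 9)
sd_ratio = aspect_ratio(640, 480)    # (4, 3)
```

A 16:9 picture is about 1.78 times as wide as it is tall, compared with about 1.33 times for a 4:3 picture, which is why the high-definition frame appears noticeably wider.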

Convergence

Digital technologies also allow for convergence, or combining, of multiple media forms. This is most prominent on the Internet, where video, audio, text, pictures, and animations can be combined seamlessly. As noted, much of today’s video production is viewed by its audience not on a traditional television in the family living room, as it was for most of the twentieth century, but on a computer of some kind. Desktop computers, laptops, tablets, and smartphones are among the many devices that allow video productions to reach their audiences.

Not surprisingly, many companies involved in television production are also involved in broader communications interests—telephones, radio, broadcast television, cable television, satellite broadcasting, and the Internet. When companies own a variety of distribution means, they try to engage in synergy and use the advantages of convergence to help all their operations. For example, a company that owns a book publishing house and a TV network may make shows from the books it publishes, hoping to boost the sales related to both. A company that owns several entities that distribute news may use the same reporters for stories for various outlets—a newspaper, a broadcast channel, a cable network, a podcast, and a website. In addition, media owned by the same parent company may also be used to create a website. We also use the term convergence to refer to the formation of these multimedia companies.

Sometimes convergence leads to layoffs as companies consolidate positions and duties. But convergence can also create new employment opportunities, especially for people who know how to do many different things. Given the choice between eliminating a production member who does only one job and eliminating one who can do many different jobs, most organizations will lay off the person who can do only one job. This is why, as you begin your video production career, you should learn as many different roles as possible and always be willing to learn new things.

A Short History of Video Production

The challenges posed by convergence are not new to the history of video production. Throughout its relatively short life, the field has seen technological processes come and go; various pieces of equipment shrink, combine, or disappear altogether; and the methods for bringing programming to the audience change significantly.

Early Television

Experiments with television date back to the 1880s and involved many different inventors working in the United States, Europe, and elsewhere. The first televisions used a small motor to turn a wheel on which different transparent pictures were mounted. The wheel spun, and the images passed in front of a lighting device, giving the illusion of movement. These motor-based systems, usually referred to as mechanical systems, eventually gave way to electronic systems in which the motor and wheel were replaced by a vacuum tube that displayed pictures without motors or moving parts.

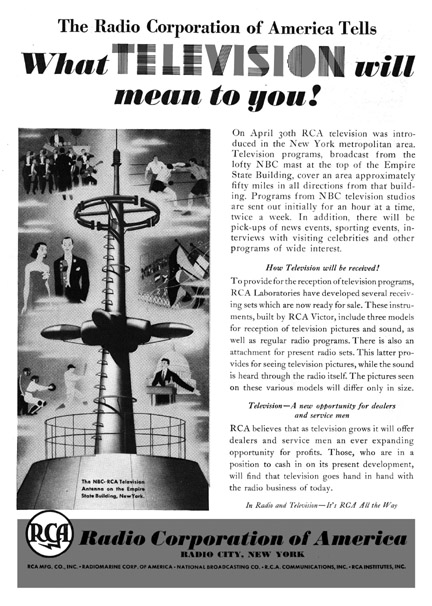

During the early part of the 1930s, the Federal Communications Commission (FCC)2 issued several licenses for experimental television broadcast stations, and engineers continued to work on developing systems that would meet public expectations for visual and sound quality. The Radio Corporation of America (RCA) was an early leader in pushing for mass-market television, and the company spearheaded an elaborate demonstration of the technology at the 1939 New York World’s Fair. RCA’s president, David Sarnoff, called television “an art which shines like a torch of hope in a troubled world.”3 The NBC network—owned by RCA—also inaugurated regularly scheduled television programming in conjunction with the demonstration. As Sarnoff had hoped, the World’s Fair publicity whetted the public’s appetite for television, but the United States’ entry into World War II in 1941 delayed the technology’s mass adoption.

By 1948, however, television was back in business, still in its black-and-white form. Many of the first broadcasts were live remotes. Bulky cameras attached to enormous trucks showed boxing and wrestling matches, baseball games, and dance bands from local ballrooms.

Studio production also became popular. Milton Berle’s Texaco Star Theater, a studio-produced comedy-variety show, became a phenomenal hit and was the reason many people bought their first TV set. Children’s programs were also popular during the late 1940s and 1950s, often featuring a clown or some other character who interacted with children in the studio audience and introduced cartoons. Commercials were usually performed live from the studio by freelance announcers with a few slides and a hand prop such as a vegetable slicer or a knife set.

TV sets were still relatively expensive, however, and in the early days very few people had televisions at home. A lot of early television viewing, in fact, took place in bars. There was also a geographic divide between the “haves” and “have nots” in television when it came to being able to pick up a television signal. These were times that preceded developments like cable, satellite broadcasting, tapes, and video discs, and so the only way to view television was to pick up an over-the-air broadcast signal. The FCC initially approved only twelve frequencies for television broadcasting, though, and those frequencies were quickly filled by stations in large cities, especially on the East and West Coasts and in the Midwest. Viewers in rural areas, especially in the South and West, had no television stations to watch. It was only in the 1950s, when the FCC expanded the frequencies for television broadcasting, that these viewers finally had a chance to join the television revolution.

Figure 1.9 A 1939 RCA advertisement touts the benefits of the new technology of television.

Uses of Film and Live Camera

Early television reporters had no portable video cameras for capturing news, so they used film cameras instead. Because film was expensive, however, these cameras were used sparingly. To illustrate stories that had not been captured on film, local newscasts would show still photos that came over the syndicated news wires.

Although some programming was technically and creatively primitive during TV’s early years, a number of excellent musical and dramatic programs were produced in New York using multiple video-camera techniques. These productions ran straight through from start to finish with short breaks for commercials, and the actors worked “without a net”—if a line of dialogue was delivered wrong or forgotten, there was no way to edit it out. Timing was also critical because everything was live. Smart producers would come up with tricks to deal with unforeseen timing issues, such as inserting a scene near the end of a program where one of the characters would look for something. If the program was running long, the actor would find the object right away; if it was too short, he or she would hunt for a while.

The only way to record a live program in these early years was through the use of the kinescope film process. With a few refinements, this was basically a matter of pointing a film camera at the screen of a TV set. However, the resulting recordings suffered from a noticeable loss of picture and audio quality. (See Figure 1.10.) Even so, in the late 1940s, many people in the western states could see prime-time network studio productions only by this means because the infrastructure for transporting signals across the country from production centers in New York had not yet been built.

In the early years, film was not used to make copies of entire programs because of its high cost. Aside from re-showing the program on the West Coast, no one could envision a reason for having a copy of a show; reruns did not cross people’s minds in these early years. But when I Love Lucy debuted in 1951, its creators decided, in what was then a maverick move, to film the programs. Later, the filming more than paid for itself because the programs could be sold overseas to a burgeoning international TV market. As filming began to pay off, more programs, especially Westerns, were filmed and shown on TV in the 1950s.

The Impact of Recorded and Edited Video

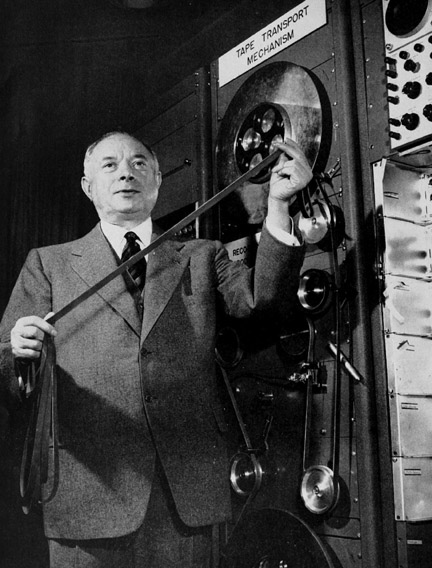

Eventually, the technology to record video electronically was developed. Introduced by Ampex in 1956, the videotape recorder (VTR) became the standard for studio production during the 1960s. The first VTRs were several feet tall and used tape that was two inches wide. (See Figure 1.11.)

VTRs changed television production procedures, at first simply because programs could be taped at a different time than they were aired. This was a big help for covering different time zones and for cast and crew members who could work during normal business hours rather than the wee hours of the night.

Soon, methods to edit videotape were developed. These early editing methods used razor blades and plastic adhesive tape—literally cutting the tape and splicing it together like film. Eventually, this process was replaced by electronic equipment that allowed taped segments to be edited without physical cutting and splicing.

Another important technological change of the 1960s was the gradual conversion to color TV, and with it a new array of aesthetic production possibilities.

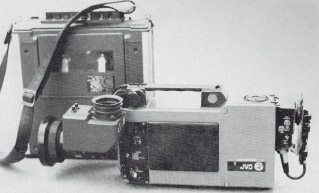

Portable Video Equipment

Videotape technology continued to develop, and by the 1970s Sony was championing its U-matic system, which used a three-quarter-inch tape packaged in a cassette. U-matic gave rise to legitimately portable systems, although the cameras and tape decks combined weighed upwards of 50 pounds. (See Figure 1.12.) At first the portable camera was separate from the portable recorder. Eventually, the recorders became even smaller, using half-inch and narrower tape. This gave rise to very portable camcorders that changed the way field production was undertaken.

News production crews no longer had to wait for film to be developed before editing. TV production, as a whole, became less studio-bound and better able to cover events in closer time proximity. A large portion of a program might come from a studio, but sections of it could incorporate the “real world,” either through live reports from the field or through something that had been prerecorded in the field and then edited.

Computer-Based Technologies

During the 1980s and 1990s, television production benefited greatly from the digital technologies originally developed for computers. One of the first changes was in the area of graphics. Prior to this period, opening and closing credits and any other words, numbers, or charts had to be drawn or printed. Stacks of graphic cards were placed on easels in the studio, and, as they were needed, one of the camera operators would focus a camera on them. In the 1980s, character generators were developed with which letters could be typed onto a computer screen and incorporated in a program as needed.

The next step was to use computers to control videotape equipment to transfer material from one recorder to another in a linear editing fashion. As computer storage increased, audio and video could be stored on hard drives. This, in turn, made nonlinear editing possible (see Chapter 11).

Computer-based digital technologies also led to digital cameras (see Chapter 5) that are superior in quality to the older analog ones. They also facilitated all of the visual effects we now see in movies and television programs. We are now firmly in the digital age, but, as with previous times, change is still the watchword. More changes are definitely a part of the future of video production.

Aspects of Employment in the Video Industry

To the outsider, and probably to some entry-level workers, video production might seem to be an endlessly fascinating combination of glamour and excitement. While it is true that status and income levels are often impressive and that the intensity found in many production situations can make the pulse race, other aspects of a video production career can also make it attractive.

For example, most video professionals feel a sense of pride in functioning as a part of a team that creates a product that is greatly valued—and sometimes seemingly worshipped—by our society. Whether you work on a TV news program or develop corporate training materials, the process of working together and creating a worthwhile end product provides a strong sense of satisfaction as well as a good deal of mutual respect among colleagues.

Work Patterns

While many network and cable shows are produced in large, fully equipped video studios, some programs are recorded by smaller production houses. These organizations employ many recent graduates, often at entry-level wages. The reality is that all too often during the early years of a media career, you must scrape by on a modest (at best) salary as you build experience.

But many of those who survive the difficult early years will find themselves in responsible positions with a fairly secure future. You can take heart in the knowledge that the early career problems facing today’s students are not very different from those that entry-level people have faced over the past decades.

Another challenge is that your work may not be steady. Often, people in the video business are employed on a daily hire basis. These workers are not on staff, and, even though they may work three or four days a week, they are not eligible for health care or other benefits. One result of this freelance employment is that many people now make their living working simultaneously in many different forms of electronic media production. Crew members are often called upon to perform a wide range of tasks such as audio, lighting, camera, and graphics. Those wishing to direct and produce are usually selected from the crew positions.

Types of Jobs

Many different types of positions are available for people who are trained in video production, including jobs in television entertainment and news. Another area is corporate video; large and small companies produce a variety of materials, such as orientation videos for new employees and video newsletters for websites that keep employees up-to-date. Sometimes, these companies have staff who use company equipment to produce the material in-house; other times, they hire freelancers or people who have small production houses. In both cases, jobs are available for people with video production skills.

One of the largest employers of media people is the government. Local, state, and federal agencies are involved in a myriad of telecommunications projects. Military media organizations, including the American Forces Radio and Television Service, operate worldwide.

The medical field, too, needs video production people. Most hospitals use television and related media for patient education, in-service training, and public and community relations. Another growing field is religious production. The production level seen on many broadcasts of evangelical groups rivals that of major network programs, and many church bodies operate their own networks.

You can also work for yourself. Any number of individuals have set up small companies to do event video: weddings, birthdays, school yearbooks, and other significant activities.

Interactive video—made possible by smartphones, videodiscs, video games, and the Internet—is having an important impact on the variety of new jobs related to designing web pages, producing “behind the scenes” bonus materials, and developing interactive products for clients.

Employment Preparation

Although a beginning video production course may seem a little early to be thinking about jobs, consider that you could be writing a short résumé for an internship or some other production-related position sooner than you think. Many schools offer or require internships, and holding down a part-time job in your area of interest is an excellent way to gain valuable experience.

In addition, it is fairly easy to experiment with various aspects of video production. Cameras and editing software are now inexpensive enough that most people can afford them, and many free open-source programs are available. You can distribute your work by uploading videos to video-sharing sites such as YouTube or burning your own videodiscs. The more you produce and show your work to others for critique, the better your skills will become. If you can handle simple projects well, the people interviewing you for jobs will notice. Also keep in mind that many of your classmates, especially those with whom you have worked on a team project, may help you get a job or be the ones you hire someday—so the work you do in school may be more important than you think.

Disciplines and Techniques

When you enter the professional world, you will need discipline to make yourself a valued member of a production team and experience in the techniques that will enable you to do your job, especially as it relates to equipment operation. Your college years will provide you with the opportunity to develop these traits. Disciplines relate to personal attitudes, such as acceptance of responsibility, dependability, and a careful manner of performance. Techniques are practical knowledge of such diverse activities as equipment operation, scriptwriting, and directing.

For example, one camera operator might use off-air time to double-check all aspects of focus for upcoming shots, while a second operator does only a cursory check and makes last-second adjustments if changes occur. Both operators may know the specific techniques needed to operate their cameras equally well, but only the first one has the discipline needed to be prepared for surprise occurrences. As another example, consider a camera operator who, by agreement with the director, has the discipline to take advantage of interesting but unplanned shots during a rock concert. In one nice shot she uses the camera lens and a light source to create a flare effect around a performer. Here we have an example of creativity relating to both discipline and technique.

An important reason to develop both discipline and technique is that producing a television program requires teamwork. In most cases, you cannot produce a program (especially a studio-based production) all by yourself. You can’t be on camera at the same time you are focusing the camera, adjusting the sound, and selecting the image to be recorded. Productions require the cooperation and skill of those you work with, and, conversely, those you work with depend on your discipline and techniques. The positive side of this teamwork is the personal bond that develops during production and often lasts a lifetime. The downside is that the productions are only as good as the team’s weakest link. In a team effort, everyone must help everyone else.

When you are the director, you are the leader of the production team. Most other times you will be a follower, but one who contributes ideas and is in charge of specific tasks. Learn to handle both roles equally well. As a leader, listen to ideas of others (see Chapter 4), and as a crew member, learn to make suggestions to the director in a constructive manner.

An interesting process takes place as groups of four or five people begin working on a project without a clearly defined leader. Such teams quickly find that it is difficult to function properly when everyone tries to have an equal say on all matters. One designated person must be responsible for final decisions. Those decisions are best made after the leader makes sure there has been an open exchange of ideas and opinions on every aspect of the production. A successful leader maintains this position not only by consistently presenting a good plan of action but also by acknowledging and adopting other team members’ ideas when they are appropriate. Teams usually function best when everyone has a chance to contribute creative thinking as well as production-related skills. The ability to balance the competitive and cooperative aspects of human nature successfully within a group is one of the essentials of good leadership. In the long run, the individual with a strong ego and drive, balanced with ability and a willingness for honest self-evaluation, will be sought after not only for school projects but also throughout a professional career.

One of the most revealing tests of your production capabilities, in the classroom and in the working world, will be to answer this simple question: Do other people really want to work with you on their production team? We have subtitled this book Disciplines and Techniques because we have interwoven information about both qualities throughout the text. We hope this will help you develop the skills and attitudes needed to be successful in the significant world of video.

Focus Points

After reading this chapter, you should know …

- How digital technology and access to fast online connections are affecting video production.

- The differences and similarities in studio, field, and remote productions.

- The physical characteristics of studios and control rooms and the equipment found in them.

- Some real-world considerations of field production.

- The basic control functions of transducing, channeling, selecting/altering, monitoring, and recording/playback.

- Differences between analog and digital.

- A brief historical background of video production.

- Aspects of employment in the video industry.

- The importance of developing disciplines and techniques.

Review

- Discuss circumstances when it would be advantageous to use studio production, when it would be best to use field production, and when it would be appropriate to use remote production.

- What do you think video production will be like ten years from now?

- In your estimation, what have been the most important technical developments in video production over the years?

On Set

- Prepare a résumé that lists your various skills that relate in any way to video production. Consider any training in music, graphic arts, and writing you may have had, especially in combination with computer skills. Also, describe the qualities of leadership and discipline as demonstrated in your academic record, extracurricular activities, and employment history. You may even make a video résumé for yourself. Then, as you complete this or other courses, critique and re-shoot the video using what you have learned.

- Tour your school’s studio complex. After the tour, compare it to the one described in the “Studio Production” section of this chapter. Consider its size, ceiling height, layout, access for large set pieces and electrical needs, and amount and type of equipment. What improvements would you recommend for your school’s facilities?

Notes

1. Sometimes, sound from the microphone goes through a portable mixer, where it is combined with other sounds. At other times, sound is sent to a separate recorder. These two approaches are often referred to as single-system sound and double-system sound. For single-system sound, the audio is recorded along with the video. For double-system sound, the picture is recorded in one place and the sound in another, such as a digital audio tape (DAT) recorder. Double-system sound is used, particularly in movie production, so that the sound can be more carefully controlled during recording and more easily manipulated during the editing process.

2. Actually, the FCC did not exist until 1934, when it replaced the Federal Radio Commission (FRC). Both the FRC and FCC issued experimental television licenses.

3. “New York Display Dedicated by RCA,” Broadcasting, May 1, 1939, 21.