Chapter 11. Rule-Based AI

In this chapter we’re going to study rule-based AI systems. Rule-based AI systems are probably the most widely used AI systems for both real-world and game AI applications. In their simplest form, rule-based systems consist of a set of if-then style rules that are used to make inferences or action decisions. Technically, we’ve already looked at one form of rule-based system in Chapter 9 on finite state machines. There we used rules to handle state transitions. We also looked at another type of rule-based system in the previous chapter on fuzzy logic, Chapter 10.

In this chapter, we’re going to look specifically at rule-based systems that commonly are used in so-called expert systems. Examples of real-world, rule-based expert systems include medical diagnosis, fraud protection, and engineering fault analysis. One advantage of rule-based systems is that they mimic the way people tend to think and reason given a set of known facts and their knowledge about the particular problem domain. Another advantage of this sort of rule-based system is that it is fairly easy to program and manage because the knowledge encoded in the rules is modular and rules can be coded in any order. This gives some flexibility both when coding the system and modifying the system at a later time. These advantages hopefully will become clearer to you as we move through the material in this chapter. Before we get into the details, though, let’s discuss a couple of game examples that can use rule-based systems.

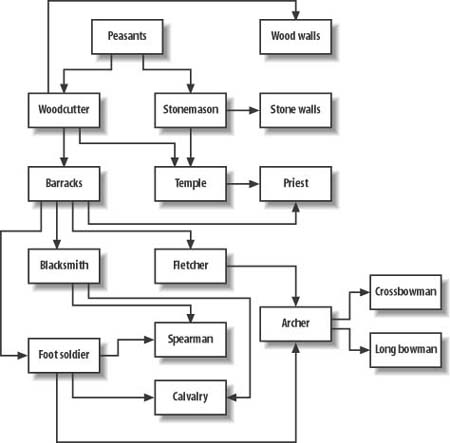

Imagine you’re writing a real-time strategy simulation game involving the typical technology tree, whereby players must train peasants, build facilities, and harvest resources. An illustrative technology tree is shown in Figure 11-1.

What we aim to do here is enable the computer opponent to keep track of the player’s current state of technology so that the computer opponent can plan and deploy offensive and defensive resources accordingly. Now, you could cheat and give the computer opponent perfect knowledge of the player’s state of technology. However, it would be fair and more realistic to have the computer gain knowledge and make inferences on the state of the player’s technology in much the same way that the player will have to so that she can assess the computer opponent’s state of technology. Both player and computer will have to send out scouts to collect information and then make inferences given the information as it is received. We can achieve this using a fairly simple rule-based system, as you’ll see in this chapter.

Let’s consider another example. Say you’re writing a martial arts fighting game and you want to give the computer the ability to anticipate the player’s next strike so that the computer opponent can make the appropriate countermove, such as a counter strike, a dodge, or a parry. The trick here is to somehow keep track of the player’s strike patterns—combinations of kicks and punches—and to learn which strike most likely will follow an observed set of previous strikes. For example, if during the fight the player throws a punch, punch combination, what will the player most likely throw next: a punch, a low kick, or a high kick? We can use a rule-based system to achieve such anticipation. We’ll come back to this example in much more detail later in this chapter.

Rule-Based System Basics

Rule-based systems have two main components: the working memory and the rules memory. The working memory stores known facts and assertions made by the rules. The rules memory, or rules, for short, contains if-then style rules that operate over the facts stored in working memory. As rules are triggered, or fired in rule-based system lingo, they can trigger some action or state change, such as in a finite state machine, or they can modify the contents of the working memory by adding new information called assertions.

Example 11-1 shows how the working memory for a real-time, strategy-game technology tree might look.

enum TMemoryValue{Yes, No, Maybe, Unknown};

TMemoryValue Peasants;

TMemoryValue Woodcutter;

TMemoryValue Stonemason;

TMemoryValue Blacksmith;

TMemoryValue Barracks;

TMemoryValue Fletcher;

TMemoryValue WoodWalls;

TMemoryValue StoneWalls;

TMemoryValue Cavalry;

TMemoryValue FootSoldier;

TMemoryValue Spearman;

TMemoryValue Archer;

TMemoryValue Temple;

TMemoryValue Priest;

TMemoryValue Crossbowman;

TMemoryValue Longbowman;For this example, we made each element in working memory a TMemoryValue type that can take on any one of four values: Yes, No, Maybe, or Unknown. The idea here is to keep track of the computer opponent’s current perception of the state of technology of the player opponent. A value of Yes implies that the player has the particular technology, whereas a value of No implies that she does not. If the player meets all the criteria to gain or posses a certain technology, but the state has yet to be confirmed by a scout, the value of Maybe is used. If the computer knows nothing about a particular technology capability for the player, Unknown is used.

The computer can gather facts on the player’s current state of technology by sending out scouts and making observations. For example, if the computer sends out a scout and sees that the player has built a temple, Temple would be set to Yes. Using a set of if-then style rules, the player can infer the state of technology for the player, before a scout confirms it, given some known facts. For example, referring to Figure 11-1, if the player has woodcutters and stonemasons, she is capable of having a temple. In this case, Temple is set to Maybe. A rule for this scenario might look like that shown in Example 11-2.

if(Woodcutter == Yes && Stonemason == Yes &&

Temple == Unknown)

Temple = Maybe;Inference can work the other way as well. For example, if the player has been observed to have a priest, the computer can infer that the player also must have a temple, and therefore also must have a barracks, a woodcutter, and a stonemason. A rule for this scenario might look like that shown in Example 11-3.

if(Priest == Yes)

{

Temple = Yes;

Barracks = Yes;

Woodcutter= Yes;

Stonemason= Yes;

}You can have many more rules for this type of technology tree. Example 11-4 shows a handful of other rules you can write.

if(Peasants == Yes && Woodcutter == Unknown)

Woodcutter = Maybe;

if(Peasants == Yes && Stonemason == Unknown)

Stonemason = Maybe;

if(Woodcutter == Yes && Barracks == Unknown)

Barracks = Maybe;

if(Woodcutter == Yes && Stonemason == Yes &&

Temple == Unknown)

Temple = Maybe;

if(Barracks == Yes && Blacksmith == Unknown)

Blacksmith = Maybe;

if(Fletcher == Yes && FootSoldier == Yes &&

Archer == Unknown)

Archer = Maybe;

if(Woodcutter == Yes && WoodWalls == Unknown)

WoodWalls = Maybe;

if(Stonemason == Yes && StoneWalls == Unknown)

StoneWalls = Maybe;

if(Archer == Yes && Crossbowman == Unknown)

Crossbowman = Maybe;

if(Archer == Maybe && Longbowman == Unknown)

Longbowman = Maybe;

if(Longbowman == Yes)

{

Archer = Yes;

Fletcher = Yes;

FootSoldier = Yes;

Barracks = Yes;

Woodcutter = Yes;

}

if(Cavalry == Yes)

{

Blacksmith = Yes;

FootSoldier = Yes;

Barracks = Yes;

Woodcutter = Yes;

}

if(StoneWalls == Yes)

Stonemason = Yes;As we stated already, these aren’t the only rules you can write for this example. You can develop several more, covering all possible technologies shown in Figure 11-1. The idea here is that you can write such rules and execute them continuously during the game—that is, each iteration through the game loop—to maintain an up-to-date picture of the computer opponent’s view of the player’s technology capabilities. In this example, the computer can use this knowledge in other AI subsystems to decide how to deploy its attack forces and defenses.

This example should give you a basic idea of how a rule-based system works. It really comes down to a set of if-then style rules and a set of facts and assertions. Note, however, that very often developers do not build rule-based systems using actual if-statements such as those shown in this section. We discuss alternatives a little later, but basically hardcoding the if-statements makes a certain type of inference hard to achieve. Further, developers often use scripting languages or shells so that they can create and modify rules without having to change source code and recompile.

Inference in Rule-Based Systems

In the previous section we took a look at the main components of rule-based systems and showed how you can use such a system to make inferences in a real-time strategy game. In this section we’re going to take a slightly more formal look at making inferences using rule-based systems. Our aim here is to distinguish between the two basic algorithms for making inferences and to introduce some standard rule-based system lingo in case you decide to dig further in the technical literature for more information on rule-based systems. (We give some references at the end of this chapter.)

Forward Chaining

The most common inference algorithm for rule-based systems is called forward chaining. This algorithm consists of three basic phases. The first phase involves matching rules to facts stored in working memory. You do this by checking the if-parts of each rule to see if they match the given set of facts or assertions in working memory. For example, in our technology tree example, if the working memory indicates that Peasants = Yes and Woodcutter = Unknown, we know the first rule shown in Example 11-4 matches and potentially can be fired. When a rule is fired, its then-part is executed. Potentially, more than one rule can match a given set of facts in working memory. In this case, we have to figure out which rule to fire. This leads to the so-called conflict resolution phase.

In the conflict resolution phase we have to examine all the matching rules and figure out which one we want to fire. We can make this decision in many ways. A common approach is to fire the first matching rule. Sometimes you can pick one at random. In other cases, the rules are weighted and the one with the highest weight is selected. We’re going to take this latter approach in our fighting example.

After the conflict resolution phase is executed and a rule is selected, we fire the rule. Firing a rule simply means executing its then-part. The rule might assert some new facts in working memory, such as in the rules in Example 11-4. It might trigger some event or call some other function that does some sort of processing.

After these three phases are executed, the whole process is repeated until no more rules can be fired. When this happens the working memory should contain everything the rule-based system can infer given the starting facts. Don’t worry if this is a bit nebulous at this point. Things will become clearer when we get to the fighting example.

Backward Chaining

Backward chaining is sort of the opposite of forward chaining. We still have working memory and rules memory, but instead of trying to match if-parts of rules to working memory, we try to match the then-parts. In other words, in backward chaining we start with some outcome, or goal, and we try to figure out which rules must be fired to arrive at that outcome or goal. Consider the technology tree example one more time. Let’s say the outcome is that the player has cavalry units—that is, Cavalry = Yes. To figure out how the player arrived at acquiring cavalry we can backward-chain to see which rules must be fired to set Cavalry to Yes.

Looking at Figure 11-1, we see that to have cavalry, the player must have had a blacksmith. A rule for this situation might look like the code shown in Example 11-5.

Continuing, if the player has a blacksmith, she must have had barracks. If the player had barracks, she must have had a woodcutter, and so on. We can continue this sort of logic backward up the technology tree from the goal, Cavalry = Yes, through all the rules and facts that are required to arrive at that goal. This is backward chaining.

In practice, backward chaining is recursive and more difficult to implement than forward chaining. Further, hardcoding if-statements such as those in our illustrative examples makes it difficult to match the then-parts of rules to facts stored in working memory during backward chaining. In the fighting example, we’ll look at how to implement rule-based systems without actually hardcoding if-then style rules.

Fighting Game Strike Prediction

In this example, we aim to predict a human opponent’s next strike in a martial arts fighting game. The basic assumption is that the player will try to use combinations of strikes to find the most effective combination. These combinations can be something such as low kick, low kick, high kick; or punch, punch, power kick; and so on. We want the computer opponent to somehow learn to anticipate which strike the player will throw next given the most recently thrown strikes and some history of the player’s strike patterns. If the computer can anticipate the next strike, it can throw an appropriate counter strike, or block, or take evasive action such as side-stepping or back-stepping. This will add a higher level of realism to the combat simulation and present new challenges for the player.

To achieve this, we’re going to implement a rule-based system with a learning capability. We will achieve this learning by weighting each rule to reinforce some while suppressing others. In Chapter 13 we’ll look at an alternative approach to this problem whereby instead of rules, we’ll use conditional probabilities to help predict the next strike.

To keep this example manageable for discussion purposes, we’re going to simplify things a bit. We’ll assume that the player’s strikes can be classified as punch, low kick, or high kick. And we’re going to track three-strike combinations. Even with these simplifications we still end up with 27 rules to capture all possible three-strike combinations of punch, low kick, and high kick. We’ll look at the rules in a moment, but first let’s take a look at the structures and classes we need to implement the working memory and rules memory.

Working Memory

Example 11-6 shows how the working memory is implemented.

enum TStrikes {Punch, LowKick, HighKick, Unknown};

struct TWorkingMemory {

TStrikes strikeA; // previous, previous strike (data)

TStrikes strikeB; // previous strike (data)

TStrikes strikeC; // next, predicted, strike (assertion)

// note: can add additional elements here for things such as which counter

to throw, etc....

};

TWorkingMemory WorkingMemory; // global working memory variableTStrikes is just an enumerated type for the possible strikes. Note that we include Unknown for the case when the computer does not know what strike will be thrown.

TWorkingMemory is the structure defining the working memory. Here we have three elements: strikeA, strikeB, and strikeC. strikeC will store the predicted next strike to be thrown. This will be asserted by forward chaining through the rules given the observed facts, strikeA and strikeB. strikeB represents the most recently thrown strike while strikeA represents the strike thrown before strikeB. The three-strike combinations are strikeA, then strikeB, then strikeC, in that order, where strikeC is predicted by the rule system.

We can add more facts or assertions to the working memory if desired. For example, we can include a counter strike element that can be asserted given the predicted next strike. If the predicted next strike is, say, low kick, we can have rules that assert an appropriate counter such as back step, and so on. Given the way we’re implementing the working memory and rules in this example, you easily can add new elements in the working memory as well as new rules.

Rules

Example 11-7 shows the rules class for this example. Note that we are not going to hardcode if-then rules. Instead, we’ll keep an array of TRule objects to represent the rules memory. We easily could have used if-then constructs; however, the approach we’re taking here makes it easier to add or delete rules and facilitates backward chaining, which we’re going to use to a limited extent. We’ll come back to this subject a little later.

class TRule {

public:

TRule();

void SetRule(TStrikes A, TStrikes B, TStrikes C);

TStrikes antecedentA;

TStrikes antecedentB;

TStrikes consequentC;

bool matched;

int weight;

};The TRule object contains five members. The first two are antecedentA and antecedentB. These members correspond to the previous two strikes thrown by the player. The next member, consequentC, corresponds to the predicted next strike—the strike that we’ll assert using the rules. If we were using standard if-statements for the rules, we’d have rules that look something like this:

In an if-then style rule such as if X then Y, the “if X” part is the antecedent, or the premise. The “then Y” part is the consequent, or conclusion. In our example, we’re assuming that our rules consist of the conjunction (logical AND) of two parameters: antecedentA and antecedentB. The then-part in our rules, consequentC, is the expected strike given the two previous strikes.

The next member in TRule is matched. This flag is set to true if the antecedents in the rule match the facts stored in working memory. More specifically, for a given rule, if antecedentA equals WorkingMemory.strikeA and antecedentB equals WorkingMemory.strikeB, the rule is matched. It’s possible that more than one rule will match a given set of facts. This matched member helps us keep track of those that do match so that we can pick one to fire during the conflict resolution phase.

The final member in TRule is weight. This is a weighting factor that we can adjust to reinforce or inhibit rules. In a sense it represents the strength of each rule. Looking at it from a different angle, the weight represents the computer’s belief that a given rule is more or less applicable relative to other potentially matching rules. During the conflict resolution phase where more than one rule matches, we’ll fire the one rule with the highest weight to make a strike prediction. If after the next strike is thrown, we see that we fired the wrong rule—that is, we made a wrong prediction—we’ll decrement the fired rule’s weight to suppress it. Further, we’ll figure out which rule should have been fired and increment its weight to reinforce it.

TRule contains only two methods, SetRule and the constructor. The constructor simply initializes matched to false and weight to 0. We use SetRule to set the other members—antecedentA, antecedentB, and consequentC—therefore defining a rule. SetRule is illustrated in Example 11-8.

void TRule::SetRule(TStrikes A, TStrikes B, TStrikes C)

{

antecedentA = A;

antecedentB = B;

consequentC = C;

}We need a few global variables for this example. The first is WorkingMemory, as we showed in Example 11-6. Example 11-9 shows the others.

TRule Rules[NUM_RULES]; int PreviousRuleFired; TStrikes Prediction; TStrikes RandomPrediction; int N; int NSuccess; int NRandomSuccess;

Here, Rules is an array of TRule objects. The size of the Rules array is set to NUM_RULES, which is defined as 27 for this example. PreviousRuleFired is an integer type that we’ll use to store the index to the rule fired during the previous game cycle. Prediction keeps track of the strike prediction the rule system makes. Technically we don’t need this because the prediction also is stored in working memory.

We’re going to use RandomPrediction to store a randomly generated prediction to compare with our rule-based prediction. What we’ll really compare is the success rate for our rule-based predictions versus the success rate for random guesses. The global variable N will store the number of predictions made. NSuccess will store the number of successful predictions made by our rule-based systems, while NRandomSuccess will store the number of successes for the random guesses. We calculate the success rates by dividing the number of successes by the total number of predictions.

Initialization

At the start of this simulation, or at the start of the game, we need to initialize all the rules and working memory. The Initialize function shown in Example 11-10 takes care of this for us.

void TForm1::Initialize(void)

{

Rules[0].SetRule(Punch, Punch, Punch);

Rules[1].SetRule(Punch, Punch, LowKick);

Rules[2].SetRule(Punch, Punch, HighKick);

Rules[3].SetRule(Punch, LowKick, Punch);

Rules[4].SetRule(Punch, LowKick, LowKick);

Rules[5].SetRule(Punch, LowKick, HighKick);

Rules[6].SetRule(Punch, HighKick, Punch);

Rules[7].SetRule(Punch, HighKick, LowKick);

Rules[8].SetRule(Punch, HighKick, HighKick);

Rules[9].SetRule(LowKick, Punch, Punch);

Rules[10].SetRule(LowKick, Punch, LowKick);

Rules[11].SetRule(LowKick, Punch, HighKick);

Rules[12].SetRule(LowKick, LowKick, Punch);

Rules[13].SetRule(LowKick, LowKick, LowKick);

Rules[14].SetRule(LowKick, LowKick, HighKick);

Rules[15].SetRule(LowKick, HighKick, Punch);

Rules[16].SetRule(LowKick, HighKick, LowKick);

Rules[17].SetRule(LowKick, HighKick, HighKick);

Rules[18].SetRule(HighKick, Punch, Punch);

Rules[19].SetRule(HighKick, Punch, LowKick);

Rules[20].SetRule(HighKick, Punch, HighKick);

Rules[21].SetRule(HighKick, LowKick, Punch);

Rules[22].SetRule(HighKick, LowKick, LowKick);

Rules[23].SetRule(HighKick, LowKick, HighKick);

Rules[24].SetRule(HighKick, HighKick, Punch);

Rules[25].SetRule(HighKick, HighKick, LowKick);

Rules[26].SetRule(HighKick, HighKick, HighKick);

WorkingMemory.strikeA = sUnknown;

WorkingMemory.strikeB = sUnknown;

WorkingMemory.strikeC = sUnknown;

PreviousRuleFired = -1;

N = 0;

NSuccess = 0;

NRandomSuccess = 0;

UpdateForm();

}Here we have 27 rules corresponding to all possible three-strike combinations of punch, low kick, and high kick. For example, the first rule, Rules[0], can be read as follows:

Examining these rules, it’s clear that more than one can match the facts stored in working memory at any given time. For example, if strikes A and B are punch, punch, respectively, the first three rules will match and the prediction could be punch, or low kick, or high kick. This is where the weight factor comes into play to help select which matching rule to fire. We simply select the rule with the highest weight. We pick the first rule encountered in the event that two or more rules have the same weight.

After all the rules are set, the working memory is initialized. Basically, everything in working memory is initialized to Unknown.

Strike Prediction

While the game is running we need to make a strike prediction after every strike the player throws. This will allow the computer opponent to anticipate the next strike the player will throw, as we’ve already discussed. In our example, we have one function, ProcessMove, to process each strike the player throws and to predict the next strike. Example 11-11 shows the ProcessMove function.

TStrikes TForm1::ProcessMove(TStrikes move)

{

int i;

int RuleToFire = -1;

// Part 1:

if(WorkingMemory.strikeA == sUnknown)

{

WorkingMemory.strikeA = move;

return sUnknown;

}

if(WorkingMemory.strikeB == sUnknown)

{

WorkingMemory.strikeB = move;

return sUnknown;

}

// Part 2:

// Process previous prediction first

// Tally and adjust weights

N++;

if(move == Prediction)

{

NSuccess++;

if(PreviousRuleFired != -1)

Rules[PreviousRuleFired].weight++;

} else {

if(PreviousRuleFired != -1)

Rules[PreviousRuleFired].weight--;

// Backward chain to increment the rule that

// should have been fired:

for(i=0; i<NUM_RULES; i++)

{

if(Rules[i].matched && (Rules[i].consequentC == move))

{

Rules[i].weight++;

break;

}

}

}

if(move == RandomPrediction)

NRandomSuccess++;

// Roll back

WorkingMemory.strikeA = WorkingMemory.strikeB;

WorkingMemory.strikeB = move;

// Part 3:

// Now make new prediction

for(i=0; i<NUM_RULES; i++)

{

if(Rules[i].antecedentA == WorkingMemory.strikeA &&

Rules[i].antecedentB == WorkingMemory.strikeB)

Rules[i].matched = true;

else

Rules[i].matched = false;

}

// Pick the matched rule with the highest weight...

RuleToFire = -1;

for(i=0; i<NUM_RULES; i++)

{

if(Rules[i].matched)

{

if(RuleToFire == -1)

RuleToFire = i;

else if(Rules[i].weight > Rules[RuleToFire].weight)

RuleToFire = i;

}

}

// Fire the rule

if(RuleToFire != -1) {

WorkingMemory.strikeC = Rules[RuleToFire].consequentC;

PreviousRuleFired = RuleToFire;

} else {

WorkingMemory.strikeC = sUnknown;

PreviousRuleFired = -1;

}

return WorkingMemory.strikeC;

}You can break this function into three distinctive parts, as indicated by the comments // Part 1, // Part 2, and // Part 3. Let’s consider each part in turn.

Part 1

The first part populates the working memory. At the start of the game, after working memory is initialized and before any strikes are thrown, the working memory contains only Unknown values. This is insufficient to make a prediction, so we want to collect some data from the player as he begins to throw strikes. The first strike thrown is stored in WorkingMemory.strikeA and ProcessMoves simply returns Unknown without attempting a prediction. After the second strike is thrown, ProcessMoves is called again and this time the second strike is stored in WorkingMemory.strikeB. ProcessMoves returns Unknown one more time.

Part 2

The second part in ProcessMoves takes care of processing the previous prediction—that is, the prediction returned the previous time ProcessMoves was called. The first task in part 2 is to determine whether the previous prediction was accurate. ProcessMoves takes move as a parameter. move is the strike the player threw most recently. Therefore, if move equals the previous prediction stored in Prediction, we have a success. In this case, we increment NSuccess so that we can update our success rate. Then we reinforce the previously fired rule because it was the correct one to fire given the strike history stored in working memory. To reinforce a rule we simply increment the rule’s weight.

If the previous prediction was wrong—that is, if move does not equal Prediction—we need to inhibit the previously fired rule. To do this we simply decrement the previously fired rule’s weight. At the same time we want to reinforce the rule that should have been fired. To do this we have to figure out which rule should have been fired the last time ProcessMoves was called. To this end, we need to backward-chain a bit. Essentially, we know the move; therefore, we know what consequent should have been returned for the previous prediction. So, all we have to do is cycle through the last set of matched rules and pick the one who’s consequentC equals move. Once we find the rule, we increment its weight and we’re done.

The remaining tasks in part 2 of ProcessMoves are relatively simple. The next task is to see if the previous random prediction was correct and, if so, to increment the number of successful random predictions, NRandomSuccess.

Finally, we need to update the strikes in working memory in preparation for making a new prediction. To this end, we simply shift the strikes in working memory and add the most recent move. Specifically, WorkingMemory.strikeB becomes WorkingMemory.strikeA and move becomes WorkingMemory.strikeB. Now we’re ready to make a new prediction for the new series of strikes stored in working memory.

Part 3

Referring to // Part 3 in Example 11-11, the first task in the prediction process is to find the rules that match the facts stored in working memory. We take care of this in the first for loop under the // Part 3 comment. Note that this is the so-called match phase of the forward chaining algorithm. Matching occurs when a rule’s antecedentA and antecedentB equal WorkingMemory.strikeA and WorkingMemory.strikeB, respectively.

After the match phase, we need to pick one rule to fire from those that were matched during the matching phase. This is the conflict resolution phase. Basically, all we do is cycle through the matched rules and pick the one with the highest weight. We take care of this in the second for loop after the // Part 3 comment in Example 11-11. After this loop does its thing, the index to the selected rule is stored in RuleToFire. To actually fire the rule we simply copy consequentC of Rules[RuleToFire] to WorkingMemory.strikeC.

ProcessMoves stores the index to the fired rule, RuleToFire, in PreviousRuleFired, which will be used in part 2 the next time ProcessMoves is called. Finally, ProcessMoves returns the predicted strike.

That’s pretty much all there is to this example. Upon running the example and simulating thrown strikes, by pressing buttons corresponding to punch, low kick, and high kick, we see that the rule-based system is pretty good at predicting the next strike. Our experiments saw success rates from 65% up to 80%. Comparing this to the roughly 30% success rate we achieved by guessing randomly, it’s clear that such a rule-based system works very well.

Further Information

We’ve only scratched the surface of rule-based systems in this chapter. Although we covered all the fundamental concepts and showed how effective rule-based systems are, other aspects to rule-based systems are worthwhile investigating if you plan to implement them for large-scale systems.

Optimization is one area that deserves attention. For small rule sets, forward chaining does not take much processing time; however, for larger sets of rules where many rules can match a given set of facts, it’s wise to optimize the conflict resolution phase. The most common algorithm for this is the so-called Rete algorithm. (Check out the article “Rete: A Fast Algorithm for the Many Pattern/Many Object Pattern Match Problem,” by C. L. Forgy, Artificial Intelligence, 1982.) Most textbooks on rule-based, or expert, systems cover the Rete algorithm.

As you saw with our fighting example, you don’t have to use if-then statements in a rule-based system. You don’t even have to use the sort of enumerated types or other types, such as integers, Booleans, and so on. You can use strings to represent facts in working memory and string matching routines to determine if the antecedents of a rule (also, strings) match facts stored in working memory. This approach opens the door to scripting rules outside of the compiled program, which paves the way for designers to script AI rules. Indeed, developers have been using scripting languages, such as the well-known Prolog, Lisp, and CLIPS languages, for scripting rule-based systems for decades now. (There’s even a relatively new Java-based language called JESS.) Another advantage to using a scripting language to implement rule-based systems is that it’s easy to change, delete, or expand upon the rules without having to modify the compiled game code.

Instead of using a third-party scripting language, you can write your own; however, caution is in order here. Writing a scripted rule system to handle facts that can take on a range of values, along with rules with compound antecedents and consequents that might even trigger other events, is far more complicated than writing a rule system with only Boolean facts and simple rule structures. If you’d like to see how you might go about such a task, check out Chapter 8 of AI Application Programming by M. Tim Jones (Charles River Media). Note that the author’s example is not a general-purpose scripting language such as Prolog and the others mentioned earlier, but it does show how to implement a simple rules-scripting algorithm from scratch. Recall that we covered basic scripting in Chapter 8 of this book. You can apply those same techniques to writing rule-based systems, as we discussed in this chapter.

As for other sources of information, the Internet is replete with web pages on rule-based systems and scripting shells. If you conduct an Internet search on rule-based systems, often abbreviated RBS, you’re sure to find tons of links to pages that discuss rule-based systems in some context or another. Here are some Web sites that we find helpful for beginners: