Chapter 14. Create Test Cases and Documentation

Use cases and test cases work well together in two ways: If the use cases for a system are complete, accurate, and clear, the process of deriving the test cases is straightforward. And if the use cases are not in good shape, the attempt to derive test cases will help debug the use cases.

—Ross Collard [Collard 1999]

What’s in this chapter?

This chapter presents an integral part of the software development process: testing and documenting the system. Use cases play a fundamental role in driving these activities. When use cases are combined with test cases and documentation, a round-trip vehicle is created to verify and explain the system functionality.

Documentation and test cases are the flip side of use cases. Use cases describe what the system will be like, while test cases ensure that the system is all that was promised. Documentation describes how systems actually behave. As a system is realized in the software development process, it moves from specification via use cases into testing, and finally into documentation. As you can see, there is a direct relationship between these three areas.

Use cases provide the vehicle for test cases and documentation. They are created early in development, so test and documentation plans can be put in place to coincide with the development schedule. When an incremental approach is used to construct a software system, testing and documentation of the system can be incremental as well. Performing test cases and writing documentation can be distributed over the course of software development instead of being left until the end.

Creating a Test Strategy

Many things can go wrong with a system. Perhaps the easiest to test is the functionality of the system as defined by a use case model. However, if we stopped testing and shipped our product having tested nothing else, we would be doing our user community and our company a great disservice. There are many other things that can go wrong.

Many types of problems occur based upon requirements not found in any use case. Some of these problems stem from the execution platform or interaction with other products, which are beyond the use case model entirely. But many problems represent implicit assumptions in the use case model. These assumptions can be used to test the use case model, as well as the product, for accuracy and consistency.

One problem frequently encountered is the interaction between the use cases. The use case model may have an implied order such as “Submit loan request,” “Evaluate loan request,” “Generate loan agreement,” and “Generate approval letter.” This is the natural order of the actions of loan processing. But what if I attempt to generate a loan agreement on a submitted (vs. approved) loan request? How about generating a loan agreement on a denied loan request?

Range checking and error handling of entered values represent another class of problems that the system must be able to handle. What is the maximum dollar amount that can be requested? Does the system handle sufficiently large dollar amounts? How about negative dollar amounts? How large is too large? (Remember that the year 1999 was always considered large enough to handle any system dates that might be encountered.) Ranges must allow for the system to “grow” over time.

A complete test strategy includes functional testing, interaction testing, testing range/error handling, and many more forms of testing beyond the scope of this book. A complete test strategy requires a plan of attack on the areas that present a risk to the project. Many of these areas are domain specific. Is the project mission critical, graphical user interface-centric, scalable, and security conscious? Answers to these questions will determine the areas that need to be tested to achieve the level of quality desired. We restrict this chapter to the three forms (functional, interaction, and range/error checking) that may be derived implicitly or explicitly from the use case model.

Creating a Test Plan

Once the initial use case model is complete, it is time to begin creating a test plan. The first decision that has to be made is the level of the plan. How many test plans should we build? If the answer is one, it must encompass the entire product and contain test information for each increment. This approach has the following disadvantages [Black 1999]:

• Communication. The test plan serves as a communication vehicle for developers, analysts, management, and testers. All these people should review the plan to ensure proper functional coverage and that all of the risks are accounted for. Monolithic documents can be intimidating to start with. A monolithic plan may make it difficult to find the information relevant to the area in which the stakeholder has concern.

• Timeliness. If an incremental approach is used to develop the system, timeliness may be an issue. High-priority use cases may be defined in the early increments while detailed descriptions of lower-priority use cases may be delayed until later increments. The result may be a test plan that is waiting for information when the first increments are ready for testing.

• Goals. Early test cases need to concentrate on ensuring that the architecture based upon the high-priority use cases will hold together. Prioritizing use cases to reduce risk in the product was discussed in Chapter 4. Test cases may help expose new risks or areas where changes in the architecture must be made to build a more stable product. Test cases in the middle increments focus on functionality. Later test cases focus more on integration and regression as more of the functionality is added. Each of these objectives may deserve its own test plan.

One advantage of the all-in-one test plan is the ability to keep the information consistent, because it all resides in a single document. Less cutting and pasting is needed as well. Even with these advantages, this type of test plan is better suited to a project using the single-iteration or waterfall approach.

Another approach to test plans is to create one test plan for each use case [McGregor 1997]. Many project plans schedule increments around use cases or scenarios. This approach allows maximum flexibility should use cases be moved from one increment to another as plans change. It also allows traceability back to the requirements model should the requirements change [McGregor 1997]—and one thing you can depend on is requirements and plans changing.

Finally, test plans may be written for each increment of software development. Test plans are kept in sync with the software development project plans, and as the development plans change, so do the test plans. The test plan is partitioned so that each use case has its own section. Changes result in the section being moved from one plan to another, and both plans are updated accordingly.

Each of these approaches has merits. The characteristics of the project and the personal preferences of the managers involved ultimately determine which approach is used.

Elements of a Test Plan

There are many forms of a test plan template ([Black 1999], [Bazman 1998]), including several IEEE standards [IEEE 1998d]. These templates are generally divided into the following sections.

• Introduction. The introduction describes the system being tested, purpose of the plan, and objectives of the effort. Elements that differentiate this test plan from other test plans for the same project are discussed.

• Scope. This section describes what is to be tested as part of the plan and what is not. An inclusions/exclusions table or section clarifies the range of activities involved. Criteria necessary to enter and exit the test can be included.

• Schedule. The schedule determines when the testing effort should start and be completed. As the test relies on development to fix the bugs “blocking” test cases, a certain amount of turnaround time is included. The schedule is naturally contingent on receiving the deliverable from development on time.

• Resources. This section describes who will be testing and managing the test effort. Test equipment and other hardware or testing apparatus necessary for the test effort is included in this section.

• Risks. The risks section explains events that might keep the test plan from being carried out. Contingency plans, if available, are described in this section.

Use cases make their first impact on the testing process with the test plan. Use case names can be included in the scope section to describe the system uses that will be tested under the plan. When only certain scenarios of a use case are available, the test plan can include one specific scenario while excluding others.

The scheduling section can be directly tied to the use case model. As test cases are directly tied to the scenarios of a use case, test cases can be scheduled by their parent use case. This provides traceability back to the development schedule where the use case was used to schedule the development of certain functionality. Before this section of the test plan can be created, the test cases must be developed to understand how much work is involved.

Creating Test Cases

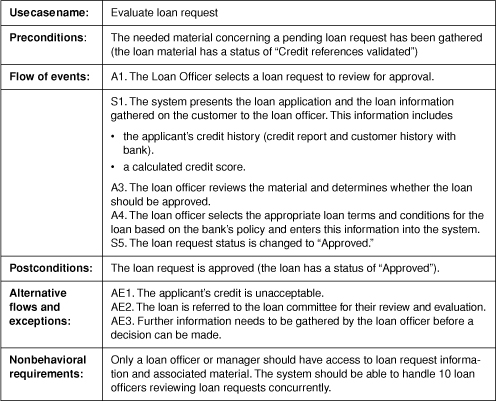

Although use cases play a small role in the development of test plans, they directly drive the development of the functional test case. In fact, we can create a suite of test cases or test suite from a single use case. Each test case represents a scenario in the use case. To see how functional test cases are created from use cases, let’s walk through a sample use case, “Evaluate loan request” (Figure 14-1).

Figure 14-1. The “Evaluate loan request” use case

Certain steps in the use case represent decision points where the system needs more information from an actor. Following [Collard 1999], we have numbered the steps in the use case to provide a conceptual view of how use cases can be made into test cases. The “A” next to a step indicates the step requires feedback from an actor to be performed; an “S” indicates that the system is performing that action. An “E” indicates that the step is an exception condition and not part of the normal course.

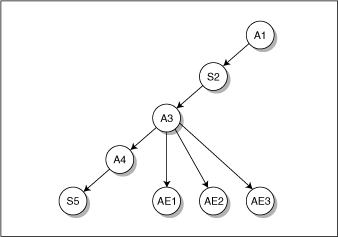

If you do not use the step approach to describing the use case flow of events, you can easily convert your prose description by circling the steps in the description and numbering them in the margin. Or, you can rewrite your prose or state-driven use cases in a step format. Once the steps are numbered, you can create a tree depicting the paths through the use case (Figure 14-2).

Figure 14-2. Graph of the paths through the “Evaluate loan request” use case

The “Evaluate loan request” use case indicates four paths through the system:

A1–S2–A3–A4–S5

A1–S2–A3–AE1

A1–S2–A3–AE2

A1–S2–A3–AE3

Of those paths, the first one leads to a successful outcome while the other three lead to an unsuccessful outcome. Right off the bat, we can create four test cases, one to test each path. We have one positive test case, a test case that leads to a successful result, and three negative test cases. These test cases are the absolute minimum necessary to test the base functionality of the system.
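The path enumeration can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the tree below is encoded from the four paths listed above, and the names `TREE`, `enumerate_paths`, and `is_negative` are assumptions introduced for this example.

```python
# Path tree encoded from the four paths listed in the text.
# Each key maps a step to the steps that may follow it.
TREE = {
    "A1": ["S2"],
    "S2": ["A3"],
    "A3": ["A4", "AE1", "AE2", "AE3"],
    "A4": ["S5"],
}


def enumerate_paths(tree, root):
    """Return every root-to-leaf path as a list of step labels."""
    children = tree.get(root, [])
    if not children:
        return [[root]]
    paths = []
    for child in children:
        for tail in enumerate_paths(tree, child):
            paths.append([root] + tail)
    return paths


def is_negative(path):
    """Treat a path that ends in an exception step (an 'E' label) as negative."""
    return "E" in path[-1]


paths = enumerate_paths(TREE, "A1")
for path in paths:
    kind = "negative" if is_negative(path) else "positive"
    print("-".join(path), kind)
```

Each enumerated path becomes one candidate test case, so the length of `paths` is the minimum number of functional test cases for the use case.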

To create test cases from use cases, we reverse the process by which scenarios become use cases. We replace the abstract entities of the use case with specific instances [McGregor 1999a]. This replacement occurs in the preconditions, postconditions, flow of events, and exceptions. The preconditions map to the setup section of the test case and the postconditions to the tear-down section (Figure 14-3).
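The replacement of abstract entities with specific instances might look like the following sketch. The classes, the placeholder syntax, and the wording of the use case steps are all hypothetical; they are not taken from the figures and only illustrate the mapping of preconditions to setup and postconditions to tear-down.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    preconditions: list
    flow: list
    postconditions: list


@dataclass
class FunctionalTestCase:
    name: str
    setup: list      # derived from the use case preconditions
    steps: list      # derived from the flow of events
    teardown: list   # derived from the use case postconditions


def instantiate(use_case, bindings, name):
    """Replace abstract placeholders such as {applicant} with specific values."""
    def fill(lines):
        return [line.format(**bindings) for line in lines]

    return FunctionalTestCase(
        name=name,
        setup=fill(use_case.preconditions),
        steps=fill(use_case.flow),
        teardown=fill(use_case.postconditions),
    )


# Hypothetical wording; the real steps come from the use case itself.
evaluate = UseCase(
    name="Evaluate loan request",
    preconditions=["A loan request from {applicant} for {amount} has been submitted"],
    flow=[
        "{officer} selects the loan request from {applicant}",
        "The system displays the request for {amount}",
    ],
    postconditions=["The loan request from {applicant} is marked as evaluated"],
)

test_case = instantiate(
    evaluate,
    {"applicant": "Pat Lee", "amount": "$25,000", "officer": "Loan officer Kim"},
    name="Evaluate loan request, successful path",
)
```

Running `instantiate` again with different bindings yields another concrete test case for the same path.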

Figure 14-3. “Approve loan request” test case

Each step in the test case must have a status of passed, warning, or failed after the test case is completed. When a step fails and the next step cannot be run until the problem is fixed, the step that cannot be run has a status of blocked. The overall status of the test case is determined by combining the statuses of its steps. Policies about failed, warning, blocked, and passed steps and their impact on the overall test case should be described in the test plan.
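As an illustration only, one possible policy is that the most severe step status becomes the status of the whole test case, and that every step after a failed step is blocked. Both rules are assumptions made for this sketch; the actual policy belongs in the test plan.

```python
from enum import IntEnum


class Status(IntEnum):
    # Ordered by assumed severity, least severe first.
    PASSED = 0
    WARNING = 1
    BLOCKED = 2
    FAILED = 3


def run_steps(steps):
    """Run step callables in order; after a failure, mark the rest as blocked.

    Assumes every later step depends on the failed one.
    """
    results = []
    failed = False
    for step in steps:
        if failed:
            results.append(Status.BLOCKED)
            continue
        status = step()
        results.append(status)
        failed = status == Status.FAILED
    return results


def overall_status(step_statuses):
    """Assumed policy: the most severe step status is the test case status."""
    if not step_statuses:
        raise ValueError("a test case needs at least one step")
    return max(step_statuses)


results = run_steps([
    lambda: Status.PASSED,
    lambda: Status.FAILED,
    lambda: Status.PASSED,  # never executed; reported as blocked
])
print([s.name for s in results], overall_status(results).name)
```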

Testing Range/Error Handling

We have examined the various functional paths that software might traverse and created test cases. However, we have not determined that the system will proceed under adverse conditions. We also have done little to test a use case model. To provide better test coverage, we need to look at the inputs to the system, specifically, the circumstances in which the inputs will have an adverse effect on the system.

To understand where the system no longer has control of its information, we again look at the steps where the actors provide information to the system. We need to test the steps to determine if logic is accurately checking appropriate boundary conditions. We are also looking for places where implicit assumptions are made in the use case model.

Implicit assumptions are common to any area where communication takes place. Use cases are no exception. To understand implicit assumptions, we look at those places where decisions are made. Is the system limited in its ability to receive information beyond what is called for in the use case? If so, what is the impact of that information on the system?

A common indicator of these types of problems may be found in the use case path tree. Consider step A1 in the “Evaluate loan request” use case tree (Figure 14-4). What other potential responses could there be? What happens if no loan request applications exist? Obviously, a loan officer cannot select one, so what happens when a loan officer attempts to do so? There must be a missing precondition or system response.

Figure 14-4. Missing exception in the “Evaluate loan request” use case

The best way to track implicit assumptions is to look at the actor decision points (nodes marked with an “A”). Are all the possible inputs covered? It is unusual to find a decision point without any potential for errors. That is, there are almost always two or more children representing system responses to each actor response. One is usually the path to success; the others are the failure paths. Failure paths need not remain paths to failure. Corrections may be made that bring the use case back to the path of success.

Range checking is another area where the decision points can be exploited. Fundamental business rules come into play at these decision points to ensure that the information given is correct. For example, can a loan officer make a loan at 0% interest? How about a negative interest rate? Range checking involves giving the system potentially damaging data at these decision points to check its response.

The system may prevent some of these types of data from being entered. For example, the entry field for the interest rate may not allow a minus to be entered (it might ignore the minus) as it is invalid information. This must be stated in the use case (as negative interest rates are not allowed) or the system designers will not create the functionality for implementation. If invalid data is allowed to be entered into the system, the appropriate system response must be added to the use case model and the step tree.

Test cases can be written to test the completeness of the validation logic of the system. Boundaries of the data ranges should be tested to ensure that the conditions of the use case are met. These test cases follow the same format used in test cases to test system functionality.
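A boundary test for the interest rate could be sketched as follows. The limits of 0.01% and 25.00% are hypothetical business rules chosen for illustration, and `accepts_rate` is a stand-in for whatever validation the real system performs.

```python
from decimal import Decimal

# Hypothetical business rule: rates from 0.01% to 25.00% are allowed.
MIN_RATE = Decimal("0.01")
MAX_RATE = Decimal("25.00")
STEP = Decimal("0.01")  # smallest increment the entry field accepts (assumed)


def boundary_cases(low, high, step):
    """Pair each boundary value with whether the system should accept it."""
    return [
        (low - step, False),   # just below the range (0% here)
        (low, True),           # lower boundary
        (low + step, True),    # just inside the lower boundary
        (high - step, True),   # just inside the upper boundary
        (high, True),          # upper boundary
        (high + step, False),  # just above the range
    ]


def accepts_rate(rate):
    """Stand-in for the validation logic of the system under test."""
    return MIN_RATE <= rate <= MAX_RATE


cases = boundary_cases(MIN_RATE, MAX_RATE, STEP)
cases.append((Decimal("-1.00"), False))  # negative rates must be rejected

for value, expected in cases:
    outcome = "passed" if accepts_rate(value) == expected else "failed"
    print(value, outcome)
```

Writing the expected results as literals, rather than computing them from the same rule the system uses, keeps the test from simply repeating the implementation.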

Testing Interactions

Interaction between use cases is one of the most difficult areas to test because of the sheer number of potential combinations formed by even a moderate-size use case model. As a result, exhaustive testing of use case interactions is next to impossible. However, we can use certain techniques to look for areas where interaction between use cases is likely to cause problems.

The easiest area is the preconditions of a use case. If a use case has a certain precondition that must be met, is there another use case that leaves the system in a state where that precondition is violated? There are two ways this might happen. The first is when an intuitive step in the process is left out. For example, what if we perform “Submit loan request” followed by “Evaluate loan request,” leaving out the necessary credit information provided by “Enter validated credit references”?

The second scenario where the system might enter an awkward state is if an exception condition is met in a use case. What if a loan request is submitted, leaving out the principal? Matching up the use cases that present these types of problems is a simple way to test interactions (Figure 14-5). Once the use cases are matched up, select the test suites corresponding to the use cases. In the test suites, the appropriate functional test cases can be found and combined to form interaction test cases.
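The precondition check can be sketched by recording what each use case requires and what it establishes, then walking a sequence of use cases. The condition names below are hypothetical; only the use case names come from the loan example.

```python
# Hypothetical preconditions ("requires") and results ("establishes").
USE_CASES = {
    "Submit loan request": {
        "requires": set(),
        "establishes": {"request submitted"},
    },
    "Enter validated credit references": {
        "requires": {"request submitted"},
        "establishes": {"credit references entered"},
    },
    "Evaluate loan request": {
        "requires": {"request submitted", "credit references entered"},
        "establishes": {"request evaluated"},
    },
}


def violated_preconditions(sequence, use_cases):
    """Return (use case, missing conditions) for each unmet precondition."""
    state = set()
    problems = []
    for name in sequence:
        use_case = use_cases[name]
        missing = use_case["requires"] - state
        if missing:
            problems.append((name, missing))
        state |= use_case["establishes"]
    return problems


skipped = violated_preconditions(
    ["Submit loan request", "Evaluate loan request"], USE_CASES
)
print(skipped)
```

Each reported pair is a candidate interaction test case: run the functional test cases for the sequence in order and check how the system responds at the use case whose precondition is missing.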

Figure 14-5. Combining test cases from different test suites to achieve an interaction test case

Preconditions are one form of interaction that needs to be tested; there are other forms of interaction problems. One way of narrowing down interactions is to look at objects shared between use cases. In Chapter 12, we created CRUD matrices between objects and use cases. If we combine these matrices, we can see how use cases relate to each other via the objects they touch.

Specifically, we are looking for situations where an object is read, updated, or deleted in one use case and created in another. What happens when the object does not exist? We call this condition “read before creation” and denote it “RC.” Another condition we are looking for is when one use case deletes an object that another use case is reading or updating. We call this condition “read after delete” or “RD.”

Many interactions are caught using the precondition approach. However, missing preconditions can be found by creating an interaction matrix (Figure 14-6). The appropriate interaction test case is created by combining the functional test cases that involve the “RCs” and “RDs.”
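A small sketch shows how the “RC” and “RD” entries could be derived from combined CRUD data. The CRUD entries and the “Withdraw loan request” use case are hypothetical, added only so that a delete operation appears in the example.

```python
# Hypothetical CRUD data: use case -> object -> operations performed.
CRUD = {
    "Submit loan request": {"LoanRequest": "C"},
    "Evaluate loan request": {"LoanRequest": "RU"},
    "Withdraw loan request": {"LoanRequest": "D"},  # hypothetical use case
}


def interaction_matrix(crud):
    """Map (use case, other use case) pairs to the set of RC/RD flags."""
    matrix = {}
    for first, first_ops in crud.items():
        for second, second_ops in crud.items():
            if first == second:
                continue
            flags = set()
            for obj, ops in first_ops.items():
                other = second_ops.get(obj, "")
                # RC: first reads, updates, or deletes what second creates.
                if set(ops) & set("RUD") and "C" in other:
                    flags.add("RC")
                # RD: first reads or updates what second deletes.
                if set(ops) & set("RU") and "D" in other:
                    flags.add("RD")
            if flags:
                matrix[(first, second)] = flags
    return matrix


matrix = interaction_matrix(CRUD)
for (first, second), flags in matrix.items():
    print(first, "vs.", second, sorted(flags))
```

An “RC” entry suggests running the first use case before the second has created the object; an “RD” entry suggests running the first use case after the second has deleted it.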

Figure 14-6. Use case interaction matrix

There are many other forms of interactions, including those that occur at the subsystem and class level. For an explanation of testing that may be performed at these levels, see McGregor [1999b].

Creating User Documentation

We have seen how easy it is to create test cases given a use case model. Documentation is another area where the use case model can help to structure and scope the work involved. Like test cases, documentation involves much more than use cases. While use cases and test cases can assume some of the details, documentation cannot afford that luxury. This is because users may not be able to ask subject matter experts the kinds of questions that developers and testers can ask during the project. Documentation must be complete, and it must be easy to read.

Traditionally, documentation was structured by product function. Modern approaches to documentation are task-oriented [Hargis 1998]: they describe how to use the system to do a specific task rather than walking through the product function by function.

The first step to writing good documentation is to understand your audience. The audience for the documentation is the people who will use the system. Of course, users are characterized in the use case model by actors. But writing documentation requires a little more than understanding the needs of users. It involves putting yourself in their shoes, understanding their needs, and then communicating how the system meets their needs.

Once an understanding of the audience is achieved, the next step is to examine the tasks they want to perform with the system. This is exactly what the use case model offers. The use case model describes the ideal system. As a result, many details that have been provided through implementation (such as user interfaces) are not part of the use case model. Hence, the use case model provides a task-based outline for the documentation. The technical writer can use the outline to express the value of the system in terms the user can understand.

Software system use cases usually map directly to topics or tasks in the documentation. These tasks, when logically ordered, form a user’s guide. Tasks should be placed in the user’s guide in the order in which the user will perform them. “Submit loan request,” for example, should appear in the user’s guide prior to “Evaluate loan request.”

Documenting the system can also be a form of system verification. Starting with the use case model as a guide to how the system should work and then performing the task with the actual system often leads to suggestions for usability improvements. Documentation people often contribute a valuable outside opinion that can lead to a polished system.

Conclusion

Many forms of testing must occur to verify a system. Some tests can be based directly on information in use cases; other tests, such as tests of platforms, interoperability with other products, scalability, and so on, cannot. In this chapter, we discussed test cases based on information in use cases (and some based on the absence of information in use cases). For a full discussion of testing, we refer the reader to the excellent work of John McGregor [2000, 2001].

Documentation, like all communication, is very much an art form. Those skilled in this craft can make technically challenging systems approachable. It is rare to find someone who is capable of understanding a very complex software system and can effectively communicate that understanding to every level of user from novice to power user. For more about task-based documentation, the reader is referred to Hargis [1998].