Chapter 19. Ensuring a Successful Use Case Modeling Effort

What’s in this chapter?

This chapter discusses the quality attributes of a good use case model when it is used to represent requirements. The various roles that use case modeling can play within a system analysis effort are discussed as well. Iterative and incremental development with use cases is also briefly outlined.

How do you know you’re developing a use case model “right”? As we said in Chapter 5, use case modeling can be applied in many ways. Some developers like to do it very formally and comprehensively, while at the other extreme, others utilize use cases as an informal way to elicit requirements from users. In the formal approach, the use case model is large, comprehensive, and detailed; in the informal approach, the use case model is high level and omits much of the detail. Some developers like to develop a comprehensive use case model early in project development; others create and implement the use case model iteratively. Which is the correct method? Is there a middle ground? We have seen projects successfully implement systems using each of these approaches, and we have also seen projects fail with each of them.

What separated the successful from the unsuccessful projects was planning. The developers of successful projects understood the role that use cases would play in a project. They also understood the strengths and weaknesses of each approach and planned accordingly. For example, if a large project plans to use the use cases as the requirements and then creates a high-level, informal use case model, requirements will be missing, incomplete, and ambiguous, leading to a failed development effort. If, on the other hand, a project plans to use the use cases to help communicate the system requirements to the customers and then creates a very detailed, comprehensive model with many use cases, many include and extend relationships, and a great deal of logic and business rules in the flow of events, validation can bog down in the details, and the project is likely to be unsuccessful.

Appropriate Level of Detail and Organization in the Use Case Model

No matter which of the different techniques and approaches for elaborating the use case model are used in a use case modeling effort, the base behaviors of the system must be captured. And, depending on the level of use case modeling ceremony and formality, it is important not to disregard the additional details, alternative flows, and common behaviors associated with a complex information system. It is important that the completed use case model be as comprehensive and thorough as possible to ensure that the user’s needs are understood and that the right system is being built. As we’ve said, the primary cause of system development failures is the lack of a good understanding of system requirements. At the same time, it is important to choose the right level of detail to which to expand the model. The right amount of detail depends on the complexity of the system being modeled.

Like other analysis techniques, a key to use case modeling is knowing when to stop. Remember that the goal is a good enough understanding of the system’s functionality so that the requirements can be captured and validated with the users. At the same time, it is important to avoid overanalysis and unnecessary detail.

How many use cases do you need? What is the proper level of detail? How should the use cases be organized? For large information systems, there may be dozens of base use cases. As extends, includes, and elaborated use case descriptions are created, the number of use cases may grow into the hundreds.

There is much discussion in the use case modeling community about the appropriate level of formality of a use case model. The level of formality of a use case model directly affects the questions of when to stop and what a “good” use case is. Use case modeling efforts can be anywhere on a continuum of formality (Figure 19-1). The key to understanding the role that use case modeling will play on a project is to know the implications of the continuum.

Figure 19-1. Use case modeling formality continuum

Based on where on the formality continuum a developer decides to model a project’s use cases, there will be different development expectations for how the use case model will represent the requirements. The key goal of the project team should be to capture a quality set of requirements that can be successfully specified, validated, and implemented.

Knowing when to stop use case modeling is not a question that can be answered without first knowing the place use cases have in the requirements analysis. No matter what approach a developer selects, careful organization of the use cases as well as maintenance of an appropriate and consistent level of detail are critical to the success of a modeling effort. The following are some criteria for judging whether a system is modeled at a sufficient level of detail.

• Can analysis objects and their behaviors be realized relatively smoothly from the use cases, or are some behaviors difficult to model with objects due to functionality that the use cases failed to model? In these situations, it might be helpful to continue use case modeling until the ambiguity is cleared up.

• Is the high-priority functionality (i.e., the functionality expected to be developed in the first increments or releases) modeled to the point where solid, validatable requirements can be derived? If not, more analysis is needed.

• Could system or acceptance test scripts be generated easily from the use cases? If the answer is yes, the use cases are probably detailed enough.

• Can a complete set of verifiable and unambiguous traditional requirements be derived from the use cases?

We prefer to use use cases to capture a broad understanding of the requirements and to keep the very detailed business logic and interface specification out of the use case model. The more detailed requirements, however, are defined and documented in the appropriate artifacts so that they can be traced back to the use cases. Requirements that are in forms difficult to represent in the use cases can also be associated this way. These representations might include spreadsheets, diagrams, report layouts, GUI screens, and so on.

Attributes of a Good Use Case Model When Specifying Requirements

Before we can discuss what a good use case is, we need to understand what a good requirement is. The IEEE [IEEE 1998b] defines a well-formed requirement as

a statement of system functionality (a capability) that can be validated, that must be met or possessed by a system to solve a customer problem or to achieve a customer objective and that is qualified by measurable condition and bounded by constraints.

If use cases have a place in modeling and representing requirements, it is only proper that we hold them to, and evaluate them based on, the standards to which we hold traditional requirements. A number of quality requirement frameworks are used to evaluate requirements and can be applied and customized for use cases. We choose the quality attributes outlined in the IEEE recommended practices for software requirements [IEEE 1998a] and Alan Davis’s book, Software Requirements: Objects, Functions, and States [Davis 1993]. These include:

• Correct

• Unambiguous

• Complete

• Verifiable

• Consistent

• Understandable by the customers and users

• Extensible and modifiable

• Traceable

• Prioritized

Requirement quality attributes are also discussed in numerous other references including Davis [1997], Robertson [1999], and Leffingwell [2000]. A good use case model may not have all the attributes, depending on the role of the use case model. However, the quality attributes can be customized for specific use case modeling efforts. They can then be used to help organize use case walk-throughs and review sessions. If the use case model does not have these attributes, it is up to the developer to figure out alternative and/or supplemental means to represent requirements that do.

Correct

A use case model is correct if every use case contained in the model represents a piece of the system to be built; in other words, no use case should contain a behavior or nonbehavioral requirement that is not needed by the system. In addition, simply because a user initially states that the system should do something does not necessarily make that behavior right. It may be that given the resources, time constraints, or other development constraints, the behavior cannot be implemented. It may also be true that one user’s needs conflict with other stakeholders’ needs. To help ensure correctness, make sure that all the use cases are reviewed and agreed on by all the stakeholders. Users may need the assistance of the use case modelers to “understand the real needs.”

A common mistake made in this regard is when use cases end up modeling the “as is,” and not the “to be.” It is very easy for users to provide information for the use cases that is based on their current perception of their needs, not what the system will need to do in the future. In one example, a set of users defined a use case for reporting that stated that large amounts of paper reports would need to be generated. When we asked the users why, they responded that the information needed to be mailed to a number of outside organizations. We helped the users understand that their real need was not to print a lot of paper but to distribute information to outside groups, and that an electronic means was the best way to do so.

Use cases also age; that is, a use case that represents a correct understanding of what the system should do now may not be correct a year and a half from now, when the system is implemented. Changing business conditions, new customers, and new users all affect correctness.

To address issues of correctness, ask the following questions.

• To what extent have the users/customers been involved in the use case process?

• Is each use case an accurate reflection of the users’/customers’ need? Why or why not?

• Have the users/customers given careful consideration to each use case?

• Are the use cases traced back to their source? Does each use case in the model trace to a business use case, BPR model, interview, or requirements workshop?

• Have domain experts who are independent from the users/customers reviewed the use cases?

• Has project management determined that the use cases in the model are feasible and implementable?

• Have applicable industry standards, government regulations, and laws affecting the system’s functionality been considered?

Unambiguous

A use case model is unambiguous if every use case in it has only one interpretation; in other words, if 10 stakeholders looked at a use case, they would all agree on the system functionality that the use case represents. An unambiguous use case reduces the chances that someone later in development will say, “That is not what I thought the use case did.” Ambiguity in requirements is one of the major causes of disappointing system efforts. Many times people read into a use case what they would like the system to do. Although it might appear that the customers and developers agree on what the system should do, in reality they are expecting different functionality. The result is usually unintentional and innocent, but unmet expectations can cause hard feelings and lack of trust to develop between the customers and the development team.

For example, in the use case “Withdraw funds,” an activity in the flow of events is written as follows:

• The system shall take appropriate action if the customer attempts to overdraw the account.

The activity would be less ambiguous if it were written as follows:

• If an attempt is made to overdraw an account, the system will

1. cancel the withdrawal.

2. respond with a message explaining the cancellation.

3. log the withdrawal attempt.
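Written this way, each numbered step is something a test can check. As an illustration only, the following Python sketch expresses the rewritten activity as code; the `Account` class, the `withdraw` function, the message wording, and the in-memory attempt log are hypothetical and stand in for whatever a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    """Hypothetical account used only to illustrate the "Withdraw funds" activity."""
    account_id: str
    balance: int  # in cents, to avoid floating-point rounding
    attempt_log: list = field(default_factory=list)


def withdraw(account: Account, amount: int) -> tuple:
    """Withdraw funds; returns (succeeded, message shown to the customer)."""
    if amount > account.balance:
        # Step 1: cancel the withdrawal by leaving the balance unchanged.
        # Step 2: respond with a message explaining the cancellation.
        message = (
            f"Withdrawal of {amount} cancelled: "
            f"it exceeds the available balance of {account.balance}."
        )
        # Step 3: log the withdrawal attempt.
        account.attempt_log.append(("overdraw_attempt", account.account_id, amount))
        return False, message
    account.balance -= amount
    return True, f"Withdrew {amount}. Remaining balance: {account.balance}."
```

A test for this activity can assert each step separately: the balance is unchanged, a message is returned, and exactly one attempt is logged. No comparable test can be written for “take appropriate action.”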

To address issues of ambiguity, ask the following questions.

• Is there a system glossary that is used in the use case model?

• Are all terms with multiple/unknown meanings defined (e.g., in the glossary)?

• Are use case activities quantifiable and verifiable?

• Can acceptance measures or testing criteria be assigned to each use case?

Complete unambiguity is a big challenge for a use case model. Use cases that are fully unambiguous are likely to be very detailed, and they can be very long or have a very deep nesting of includes or subordinate use cases. This can significantly reduce readability and therefore understandability. We find that an iterative approach to developing, elaborating, and implementing the use case model is a good way to deal with the situation. We also use supplemental and traditional requirements specifications to document and baseline detailed requirements. When developing a use case model for a large system, it can be a challenge to fit all the requirements into an unambiguous use case model.

Complete

A use case model is a complete representation of the requirements [Davis 1993] if it includes

• all significant requirements relating to behaviors, performance, design constraints, and external interfaces,

• for each use case, the definition of the system outputs to all actor inputs for both valid and invalid flows,

• full labeling and references on all diagrams, figures, tables, and other documentation, and

• a definition of all terms and units of measure used.

A use case model should always aim for broad completeness: Does it cover the broad range of behaviors that will be supported by the system? Completeness in terms of depth (i.e., does the use case model capture the detailed requirements?) is more of a challenge.

To address issues of completeness in a use case model, ask the following questions.

• Are any behaviors missing from the use cases? Business processes?

• Do the system use cases map to the business use cases or business processes?

• Do the system use cases map to the domain and analysis object models? Are CRUD or other mapping approaches used?

• Are all the actors participating in the use cases clearly defined?

• Has an interface analysis been performed? Is each interface between the system and the actors clearly defined (including information passed)?

• Are the expected input and output values defined for each actor interacting with the system?

• Are the exceptions and alternative flows documented?

• Are there any TBD references?

• Are there any undefined terms or references?

• Are all sections of the use case model complete?
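Some of these questions, such as the search for TBD references and empty template sections, are mechanical enough to automate. The sketch below assumes, hypothetically, that each use case is stored as a Python dictionary keyed by template section; the section names in `REQUIRED_SECTIONS` are placeholders for whatever template a project actually uses. It answers only the mechanical questions; the judgment-based ones still need a review.

```python
import re

# Hypothetical template sections; replace them with your project's template.
REQUIRED_SECTIONS = (
    "name",
    "actors",
    "preconditions",
    "flow_of_events",
    "alternative_flows",
    "postconditions",
)

TBD_PATTERN = re.compile(r"\bTBD\b", re.IGNORECASE)


def completeness_issues(use_case: dict) -> list:
    """Report empty template sections and TBD references in one use case."""
    issues = []
    for section in REQUIRED_SECTIONS:
        if not use_case.get(section):
            issues.append(f"missing or empty section: {section}")
    for section, value in use_case.items():
        # A section may hold a single string or a list of strings (e.g. steps).
        texts = value if isinstance(value, list) else [value]
        if any(isinstance(t, str) and TBD_PATTERN.search(t) for t in texts):
            issues.append(f"TBD reference in section: {section}")
    return issues
```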

To check that nonbehavioral attributes are completely specified, ask the following questions.

• Have performance considerations been addressed?

• Have security considerations been addressed?

• Have reliability considerations been addressed?

• Have capacity considerations been addressed?

Completeness in a use case model is not something that typically occurs in a waterfall approach. If an iterative development approach is taken, the use case model will be in various states of completeness. As the iterative development proceeds, completeness needs to be judged within this context.

Verifiable

A use case model is verifiable if every use case stated in it is verifiable. A use case is verifiable if a person or machine can check in a cost-effective way that the implemented system meets the behaviors contained in the use case.

To address issues of verifiability in a use case model, ask the following questions.

• Are there nonverifiable words or phrases in the use cases, such as “works well,” “fast,” “good performance,” and “usually happen”?

• Are use case activities stated in concrete terms and measurable quantities?

• Are the preconditions and postconditions stated in a manner that can be tested?

For example, a behavior in a use case such as “The system takes appropriate action if the customer attempts to overdraw an account” is not verifiable, since what is “appropriate action” is not defined.
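A simple keyword scan can flag candidate wording of this kind before a review. The following sketch is an illustration under assumptions, not a verifiability test: the phrase list is taken from the questions and example above and would need to be extended for a real project, and a clean scan does not prove that a step is verifiable.

```python
import re

# Phrases from the verifiability questions and the overdraw example above;
# a real project would maintain its own, longer list.
NONVERIFIABLE_PHRASES = (
    "works well",
    "fast",
    "good performance",
    "usually happen",
    "appropriate action",
)


def nonverifiable_phrases(step: str) -> list:
    """Return the listed phrases that occur as whole words in one use case step."""
    lowered = step.lower()
    return [
        phrase
        for phrase in NONVERIFIABLE_PHRASES
        if re.search(rf"\b{re.escape(phrase)}\b", lowered)
    ]
```

Applied to the overdraw sentence above, the scan returns `['appropriate action']`; applied to the rewritten three-step activity, it returns nothing.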

If you are unsure whether a use case is verifiable, attempt to create acceptance test cases for it. If you cannot, then the use case is ambiguous and nonverifiable. This does not necessarily mean that the use case is flawed. If informal use case modeling is being performed intentionally, it just means that other techniques will have to be used to represent the detailed requirements.

Consistent

A use case model is consistent if no two individual use cases described in it have conflicting behaviors. Types of conflicts include the following.

• A common behavior specified in two different use cases has conflicting descriptions.

• The characteristics of a behavior or thing conflict. For example, a precondition of use case X implies that behaviors in use case Y must have occurred, and use case Y’s precondition implies that some behavior in use case X must have occurred.

• There is a time-related conflict between two specified behaviors. For example, one use case states that behavior A always has to occur before behavior B, but another use case states that behavior B always has to occur before behavior A.

• Two or more use cases describe the same behavior or object but use different terms.
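The second and third conflict types above can be detected mechanically if the model records, for each use case or behavior, what must already have occurred before it (from its precondition or a stated ordering rule). A precondition loop or a contradictory ordering then appears as a cycle in that “must occur before” graph. The Python sketch below assumes that this dependency information is captured by hand as a dictionary; the representation and the `find_cycle` function are hypothetical.

```python
from __future__ import annotations


def find_cycle(requires: dict[str, set[str]]) -> list[str] | None:
    """Return one cycle in a "must occur before" graph, or None if there is none.

    requires[x] holds the use cases (or behaviors) that must have occurred
    before x, as implied by x's precondition or by a stated ordering rule.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for dep in sorted(requires.get(node, ())):
            state = color.get(dep, WHITE)
            if state == GRAY:
                # dep is already on the current path, so we have looped back.
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for start in sorted(requires):
        if color.get(start, WHITE) == WHITE:
            cycle = visit(start)
            if cycle:
                return cycle
    return None
```

A returned cycle such as `['X', 'Y', 'X']` points reviewers at the use cases whose preconditions or ordering rules need to be reconciled; conflicting descriptions and inconsistent terms still have to be found by review.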

To address issues of consistency, ask the following questions.

• Is the use case model organized in a manner that encourages consistency? Have include relationships been used to factor out common behaviors? Have business function packages and dependency streams been used to organize the model?

• Do the preconditions and postconditions of individual use cases match up?

• Do any behaviors conflict? Do statements about what a behavior does contradict each other? If two behaviors conflict, are they actually two different behaviors that need to be specified?

• Do any terms have conflicting definitions?

• Are there any temporal inconsistencies?

• Are all use cases modeled at a consistent level of detail with respect to importance?

We have found that inconsistencies are typically introduced in a use case model due to a number of possible situations, including:

• Multiple teams working on the use case model

• Updates to the model that create unseen conflicts

• Updating the model and not taking or having the time to review it for consistency

• Not formally defining terms

It is very important not to skip good review, integration, and validation practices in the use case modeling effort.

Understandable by Customers and Users

Use cases should be self-explanatory. That is, the notations, models, and format of use cases should allow the stakeholders (specifically customers and users) to review, understand, and validate the use cases with a minimum amount of effort. This is obviously where use cases shine. However, to ensure clarity, the use cases need to be written in the customer’s and user’s “language” using terms that are used in the business domain. The use cases should be grouped, as discussed in Chapter 15, in a way that helps the customer and user see the big picture. If the use cases are too long or have too many extend or include relationships, understandability can be adversely affected. The attribute of understandability can conflict with the attributes of unambiguity and verifiability. The more informal the use case model is, the more it tends to be “understandable” to the customer, but the more it tends to use ambiguous wording and represent system functionality in higher-level, nonverifiable terms (Figure 19-2). Because informal models are ambiguous, they can be hard to validate accurately. Formal techniques can be simplified and made more understandable using techniques such as use case organization (Chapter 15), use case views (Chapter 15), and levels of use case descriptions (Chapters 7 and 8).

Figure 19-2. Understandable versus unambiguous and verifiable

Extensible and Modifiable

A use case model is modifiable if changes to it can be made easily, completely, and consistently while retaining the structure and style. The use case model should be robust enough to handle changes or new functionality with relative ease.

To address issues of modifiability, ask the following questions.

• Is there a table of contents, a glossary, and an index?

• Are common use cases or behaviors, such as the same behavior appearing in multiple places in the document, factored into include relationships and cross-referenced?

• Is a CASE tool used to assist in maintaining the use cases?

Traceable

A use case model is traceable if the origin of each use case is clear and if it facilitates the referencing of each use case in future development or in enhancement documentation.

There are two forms of traceability:

• Backward traceability (traced). Each use case explicitly references its source (e.g., memo, law, meeting notes, business use cases, BPR results, and so on).

• Forward traceability (traceable). Each use case has a unique name or reference number so that design components, implementation modules, and so on can reference it.

Traceability is facilitated by uniquely identifying each use case, including each extending and included use case. In some situations, each activity within a use case will need to be traced; in this case, the unique ID would be a combination of the use case’s ID and the activity step number in the flow of events.
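The following Python sketch illustrates both forms of traceability under assumed conventions: the `UseCase` record, the `UC-n` identifier format, and the dot-separated step IDs (so step 2 of `UC-1` becomes `UC-1.2`) are hypothetical choices, not a standard. It flags use cases with no recorded source (backward traceability) and identifiers used more than once (which would break forward traceability).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UseCase:
    uc_id: str  # unique identifier; the "UC-n" format is a hypothetical convention
    name: str
    sources: list[str] = field(default_factory=list)  # backward: where it came from
    steps: list[str] = field(default_factory=list)    # flow of events


def step_id(use_case: UseCase, step_number: int) -> str:
    """Combine the use case ID with a 1-based step number, e.g. "UC-1.2"."""
    if not 1 <= step_number <= len(use_case.steps):
        raise ValueError(f"{use_case.uc_id} has no step {step_number}")
    return f"{use_case.uc_id}.{step_number}"


def untraced(model: list[UseCase]) -> list[str]:
    """IDs of use cases that reference no source (not backward traceable)."""
    return [uc.uc_id for uc in model if not uc.sources]


def duplicate_ids(model: list[UseCase]) -> set[str]:
    """IDs used by more than one use case (these break forward traceability)."""
    seen: set[str] = set()
    duplicates: set[str] = set()
    for uc in model:
        if uc.uc_id in seen:
            duplicates.add(uc.uc_id)
        seen.add(uc.uc_id)
    return duplicates
```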

Prioritized

A use case model is prioritized if the relative importance of each use case is understood. A use case is prioritized if it has an identifier to indicate either its importance or its stability. Not every system use case is equally important: some use cases may be essential, while others may be desirable.

Example prioritized use cases for an ATM use case model might include

• Withdraw funds, deposit funds (essential)

• Pay bills (conditional)

• Buy certificate of deposit (CD) (optional)
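Capturing priority as an ordered value, rather than as free text, makes it usable for scheduling and cost decisions. The sketch below encodes the ATM example in Python; the `Priority` enumeration and its numeric ordering (essential before conditional before optional) are assumptions made for illustration.

```python
from __future__ import annotations

from enum import IntEnum


class Priority(IntEnum):
    """Priority categories from the ATM example; a lower value is more important."""
    ESSENTIAL = 1
    CONDITIONAL = 2
    OPTIONAL = 3


# The ATM example above, expressed as data.
ATM_USE_CASES = {
    "Withdraw funds": Priority.ESSENTIAL,
    "Deposit funds": Priority.ESSENTIAL,
    "Pay bills": Priority.CONDITIONAL,
    "Buy certificate of deposit (CD)": Priority.OPTIONAL,
}


def by_priority(use_cases: dict[str, Priority]) -> list[str]:
    """Order use case names from most to least important.

    sorted() is stable, so use cases with equal priority keep their listed order.
    """
    return sorted(use_cases, key=lambda name: use_cases[name])
```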

To address issues of priority, ask the following questions.

• Is each use case identified and its priority noted?

• Are the criteria used to prioritize clearly defined?

• How are the use case priorities specified (e.g., essential, conditional, optional)?

• Are the priorities being used to drive schedule and cost?

Incremental and Iterative Development with Use Cases

As we mentioned in Chapter 5, it is neither likely nor desirable for a project to implement its entire set of functionality in one “big bang” approach. Rather, what typically happens is that requirements (e.g., use cases) are defined at a conceptual or base level. Based on project priorities, a subset of the use cases is expanded and developed, and feedback goes into the next increment, where the next set of selected use cases is expanded and realized in design and implementation. Hence, the use case model is in varying states of completeness at different times during iterative development. When discussing this form of development, we like the concepts used by Cockburn [1998] to distinguish between incremental and iterative development.

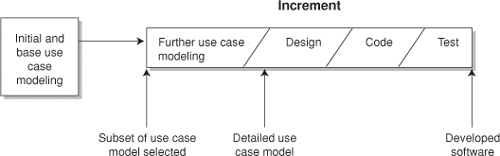

Incremental development is defined as the development of a system in a series of versions, or increments. A subset of functionality is selected, designed, developed, and then implemented. Additional increments build on this functionality until the system is completely developed. Iterative development is planned rework of existing functionality. It is common practice in the industry to use the term iterative development to represent both concepts.

There are many possible process models for system development, with no one approach that works best in all situations. We like to develop a complete set of initial use case descriptions up front, validate them with the stakeholders, and, depending on the size and complexity of the system, expand on the initial descriptions to create base use cases as well as major extend and include relationships. Then, based on development priorities, a subset of the base use cases, extensions, and includes is chosen and used to drive the incremental development. These use cases are a scoping mechanism for an increment; the acceptance criteria for an increment are based on the use cases selected to be developed. The use cases that are selected are further elaborated with detail early during the increment. A single increment is represented in Figure 19-3.

Figure 19-3. Single increment of incremental and iterative cycle

In early increments, select use cases that are very important to the users/customers so that you can get feedback early in the development cycle. Architecturally significant use cases, such as ones that represent infrastructure that supports or enables functionality in other use cases, should also be developed in early increments. Throughout this process, plan on different parts of the use case model being in different states of detail and completeness at different times. Also, since use case instances tend to be more specific than the use cases they depend on, they can be used to plan an incremental/iterative development effort more finely.

Estimating and incremental definition take place at multiple points in the incremental/iterative development process. First, during or after initial and/or base use case modeling, a high-level master plan laying out the number of increments, their general scope, and the overall project length should be made. Then, when each increment is planned, another more detailed planning and estimating process should occur for that increment. Throughout the development process, the master plan will need to be revisited and revised based on the actual results of the increments.

The advantage of an iterative and incremental approach is that it is easier to plan, estimate, and predict several four- to twelve-week increment development efforts than a two-year waterfall project. For increment scheduling, good acceptance criteria (criteria that define which test cases or other evaluative measures will be applied to the completed system to ensure that it has met its requirements, based on the use cases and other requirements specifications) are critical before beginning an increment. Without good verifiable acceptance criteria, functionality that should be developed in an early increment tends to be shifted to later increments. The result is that the project subtly falls behind, with later increments becoming burdened with impossible amounts of functionality to develop. A common problem we have seen is that projects don’t have good increment exit criteria, so when the time is up for an increment, it is easy to move functionality that was not completed in that increment to a later increment, resulting in the same insidious “scope overload” in later increments. Once stakeholders agree on acceptance criteria early in the increment, the use cases and other requirements for that increment should be baselined. If the users or customers wish to add functionality after this point, the new requirements should be assigned to a later increment. If the customer demands that new functionality be added to this increment, then the developers must explain that the increment will need to be “broken,” replanned, and rescheduled based on the added functionality. A diagram representing a simplified increment cycle is presented in Figure 19-4.
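The baselining rule described above can be sketched as a small planning model. In this hypothetical Python sketch, an increment can be baselined only when every selected use case has an agreed acceptance criterion, and a requirement added to a baselined increment is routed to the next increment that is still open, or to a new one. The `Increment` class and the function names are illustrative assumptions, and the sketch does not model the case where the customer insists on breaking and replanning the current increment.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Increment:
    number: int
    use_cases: list[str] = field(default_factory=list)
    acceptance_criteria: dict[str, str] = field(default_factory=dict)  # use case -> criterion
    baselined: bool = False


def baseline(increment: Increment) -> None:
    """Freeze the increment's scope once every selected use case has an acceptance criterion."""
    missing = [uc for uc in increment.use_cases if uc not in increment.acceptance_criteria]
    if missing:
        raise ValueError(f"no acceptance criteria for: {', '.join(missing)}")
    increment.baselined = True


def add_requirement(plan: list[Increment], index: int, use_case: str) -> int:
    """Add a use case to plan[index]; if it is baselined, route it to the next open increment.

    Returns the number of the increment that received the use case.
    """
    for inc in plan[index:]:
        if not inc.baselined:
            inc.use_cases.append(use_case)
            return inc.number
    # Every remaining increment is frozen: plan a new one rather than break a baseline.
    new = Increment(number=plan[-1].number + 1, use_cases=[use_case])
    plan.append(new)
    return new.number
```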

Figure 19-4. Incremental development cycle with use cases

Early increments are typically more focused on requirements definition and testing the feasibility of architectural decisions, and later increments are more focused on code and testing. The amount of time you spend in each step will vary depending on which increment you are in. Additionally, it is dangerous to do too much coding before the majority of the requirements are known.

At the end of each increment, do a postmortem on what worked well and what did not work with the use cases (as well as the other development practices) [Armour 1996b]. Reviews help facilitate process improvement and the learning process. Modify your use case practices according to the lessons learned in the increment. Use case practices should be modified based on input from developers and stakeholders. This is an easy step to skip because you will feel pressure to start right in on the next increment. But if you do skip this step, you will pay for it later. The developers, not just management, should be actively involved in the review not only for valuable insight but also for ownership of the changes.

A number of factors affect incremental and iterative development, including architectural decisions, time, functional priority, and resources. We have addressed these issues only as they relate to use cases. For a further discussion of use cases and incremental development, see Rowlett [1998]. For a discussion of iterative development during OO development, see Chapter 4 of Kruchten [1999].

Know When Not to Use Use Cases

Use cases are excellent for capturing behavioral or functional aspects of a system. However, they are not normally as effective in modeling the nonbehavioral aspects, such as data requirements, performance, and security. Additional techniques are required to define these nonbehavioral aspects. For example, object and data modeling can be used in conjunction with use case modeling to capture information needs. Because of this, use cases should be integrated with other models. Maintaining consistency and traceability between the models is important and necessary.

Use cases or specific activities within use cases can be selected for prototyping. Prototypes complement use cases, particularly for behaviors that are difficult to model with use cases alone. For example, prototyping is an effective approach for modeling ad hoc queries, report generation, what-if analysis, and similar activities. Testing the feasibility of a use case can also be performed with prototyping.

Questions to Ask When Use Case Modeling

Following are some questions to ask when planning for a system use case modeling effort.

Goals of the use case model

• What is the goal for the use case model? What is the project attempting to model?

• Based on the goal for use case modeling, what type of use cases are going to be created (e.g., business system use cases, use cases to model the GUI, etc.)?

Use case model approach

• What stopping criteria will be used? How will you know when to stop use case modeling?

• What can’t be modeled well with use cases? What other techniques will be used to capture this information?

• How will the nonbehavioral requirements be modeled?

• What will the guidelines be for the length of the individual use cases?

• Are batch processes to be represented with use cases?

• Will iterative and conditional logic be used in the use cases?

• Will the use cases be mapped to traditional requirements? Will both techniques be used on the project?

• How will incremental and iterative development be performed with use cases?

• How are use case dependencies to be modeled?

• Will the use cases be used for non-OO development? If so, what other models will be used? How will they relate to the use cases?

• Will functionality not visible to the user be placed in the use cases?

Use case quality guidelines

• Have walkthrough/review tasks and procedures been formally defined and included in the task plan?

• Are configuration management procedures and tools in place to control the evolution of the use case model? (Yes, it will evolve.)

• What templates are being used to specify the use cases? Are the templates based on industry practice?

• What level of use case modeling is to be performed?

• Are there written standards/style guides for the use case model (e.g., a use case writer’s handbook)?

• Are the use cases traced back to their source?

• Is there an approach to deal with incompleteness (e.g., iteration, traditional SRS)?

• Are both behavioral and nonbehavioral requirements being specified?

Conclusion

At the beginning of a use case effort, it is critical that the project team determine first what the goals of the use case modeling are and how use case modeling fits into the overall system development goals. The use case modeling can vary from the very informal to the very formal. In any case, if the use cases represent requirements, quality requirements attributes should be applied to them. Iterative and incremental development can be used to expand and develop the use case model. No matter what development approach a project selects, it is critical to organize the use cases carefully and maintain an appropriate level of detail.

The goal of use case modeling is to understand system functionality sufficiently to be able to validate your understanding with users and to motivate the design and implementation, resulting in a system that meets the customers’ and the users’ expectations.