CHAPTER 10

NETWORK SECURITY1

THIS CHAPTER describes why networks need security and how to provide it. The first step in any security plan is risk assessment: understanding the key assets that need protection and assessing the risks to each. A variety of steps can be taken to prevent, detect, and correct security problems due to disruptions, destruction, disaster, and unauthorized access.

OBJECTIVES

- Be familiar with the major threats to network security

- Be familiar with how to conduct a risk assessment

- Understand how to ensure business continuity

- Understand how to prevent intrusion

CHAPTER OUTLINE

10.1.1 Why Networks Need Security

10.1.2 Types of Security Threats

10.2.1 Develop a Control Spreadsheet

10.2.2 Identify and Document the Controls

10.2.3 Evaluate the Network's Security

10.3 ENSURING BUSINESS CONTINUITY

10.3.2 Denial of Service Protection

10.3.4 Device Failure Protection

10.4.2 Perimeter Security and Firewalls

10.4.3 Server and Client Protection

10.4.6 Preventing Social Engineering

10.4.7 Intrusion Prevention Systems

10.5 BEST PRACTICE RECOMMENDATIONS

10.6 IMPLICATIONS FOR MANAGEMENT

10.1 INTRODUCTION

Business and government have always been concerned with physical and information security. They have protected physical assets with locks, barriers, guards, and the military since organized societies began. They have also guarded their plans and information with coding systems for at least 3,500 years. What has changed in the last 50 years is the introduction of computers and the Internet.

The rise of the Internet has completely redefined the nature of information security. Now companies face global threats to their networks, and, more importantly, to their data. Viruses and worms have long been a problem, but credit card theft and identity theft, two of the fastest-growing crimes, pose immense liability to firms that fail to protect their customers’ data. Laws have been slow to catch up, despite the fact that breaking into a computer in the United States (even without causing damage) is now a federal crime punishable by a fine and/or imprisonment. Nonetheless, we have a new kind of transborder cyber crime against which laws may apply but will be very difficult to enforce. The United States and Canada may extradite and allow prosecution of digital criminals operating within their borders, but investigating, enforcing, and prosecuting transnational cyber crime across different borders is much more challenging. And even when attackers are caught, they face lighter sentences than bank robbers.

Computer security has become increasingly important over the last 10 years with the passage of the Sarbanes-Oxley Act (SOX) and the Health Insurance Portability and Accountability Act (HIPAA). The number of Internet security incidents reported to the Computer Emergency Response Team (CERT) doubled every year up until 2003, when CERT stopped keeping records because there were so many incidents that it was no longer meaningful to keep track.2 CERT was established by the U.S. Department of Defense at Carnegie Mellon University with a mission to work with the Internet community to respond to computer security problems, raise awareness of computer security issues, and prevent security breaches.

Approximately 70 percent of organizations experienced security breaches in the last 12 months.3 The median number of security incidents was four, but the top 5 percent of organizations were attacked more than 100 times a year. About 60 percent reported that they suffered a measurable financial loss due to a security problem, with the average loss being about $350,000, which is significantly higher than in previous years. The median loss was much smaller, under $50,000. Experts estimate that worldwide annual losses due to security problems exceed $2 trillion. Some of the most notable security breaches of 2010 were the AT&T Web site hack that exposed the email addresses of 114,000 iPad 3G owners and the Aurora Attack that targeted Google and affected dozens of other organizations that collaborate with Google.

Part of the reason for the increase in computer security problems is the increasing availability of sophisticated tools for breaking into networks. Ten years ago, someone wanting to break into a network needed to have some expertise. Today, even inexperienced attackers can download tools from a Web site and immediately begin trying to break into networks.

Two other factors are also at work increasing security problems. First, organized crime has recognized the value of computer attacks. Criminal organizations have launched spam campaigns with fraudulent products and claims, created viruses to steal information, and have even engaged in extortion by threatening to disable a small company's network unless it pays them a fee. Computer crime is less risky than traditional crime and also pays a lot better.

Second, there is considerable evidence that the Chinese military and security services have engaged in a major, ongoing cyberwarfare campaign against military and government targets in the western world.4 To date these attacks have focused on espionage and disabling military networks. Most large Chinese companies are owned by the Chinese military or security services or by leaders recently departed from these organizations. There is some evidence that China's cyberwarfare campaign has expanded to include industrial espionage against western companies in support of Chinese companies.

As a result, the cost of network security has increased. Firms spent an average of about 5 percent of their total IT budget on network security. The average expenditure was about $1,250 per employee per year (counting all employees in the organization, not just IT employees), so an organization with 100 employees spends an average of $125,000 per year on IT security. About 30 percent of organizations had purchased insurance for security risks.

10.1.1 Why Networks Need Security

In recent years, organizations have become increasingly dependent on data communication networks for their daily business communications, database information retrieval, distributed data processing, and the internetworking of LANs. The rise of the Internet with opportunities to connect computers anywhere in the world has significantly increased the potential vulnerability of the organization's assets. Emphasis on network security also has increased as a result of well-publicized security break-ins and as government regulatory agencies have issued security-related pronouncements.

The losses associated with the security failures can be huge. An average annual loss of about $350,000 sounds large enough, but this is just the tip of the iceberg. The potential loss of consumer confidence from a well-publicized security break-in can cost much more in lost business. More important than these, however, are the potential losses from the disruption of application systems that run on computer networks. As organizations have come to depend on computer systems, computer networks have become “mission-critical.” Bank of America, one of the largest banks in the United States, estimates that it would cost the bank $50 million if its computer networks were unavailable for 24 hours. Other large organizations have produced similar estimates.

Protecting customer privacy and reducing the risk of identity theft also drive the need for increased network security. In 1998, the European Union passed strong data privacy laws that fined companies for disclosing information about their customers. In the United States, organizations have begun complying with the data protection requirements of HIPAA and with a California law that provides fines of up to $250,000 for each unauthorized disclosure of customer information (e.g., if someone were to steal 100 customer records, the fine could be $25 million).

As you might suspect, the value of the data stored on most organizations’ networks and the value provided by the application systems in use far exceeds the cost of the networks themselves. For this reason, the primary goal of network security is to protect organizations’ data and application software, not the networks themselves.

10.1.2 Types of Security Threats

For many people, security means preventing intrusion, such as preventing an attacker from breaking into your computer. Security is much more than that, however. There are three primary goals in providing security: confidentiality, integrity, and availability (also known as CIA). Confidentiality refers to the protection of organizational data, including customer and proprietary data, from unauthorized disclosure. Integrity is the assurance that data have not been altered or destroyed. Availability means providing continuous operation of the organization's hardware and software so that staff, customers, and suppliers can be assured of no interruptions in service.

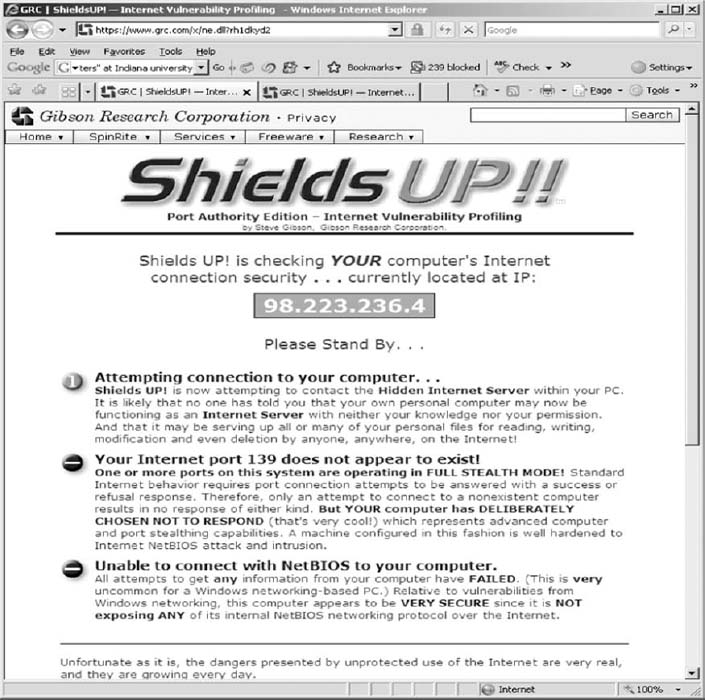

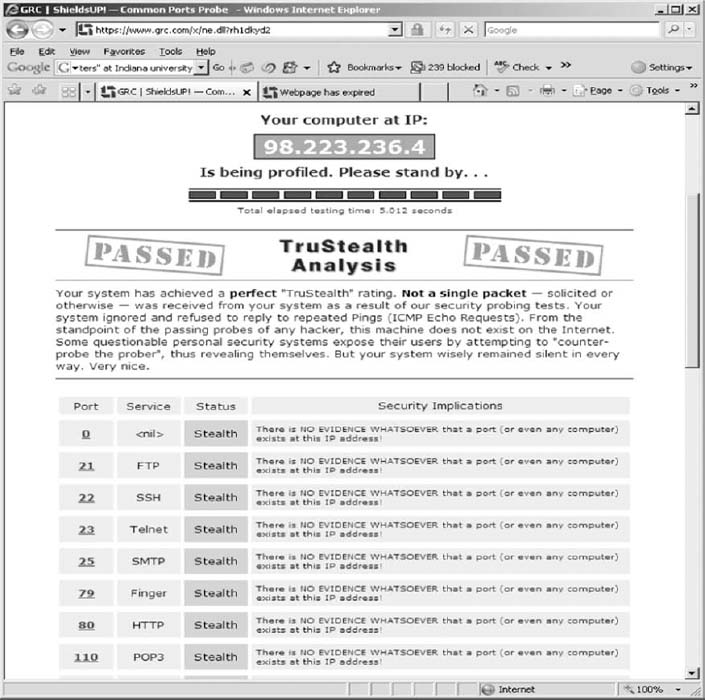

There are many potential threats to confidentiality, integrity, and availability. Figure 10.1 shows some threats to a computer center, the data communication circuits, and the attached computers. In general, security threats can be classified into two broad categories: ensuring business continuity and preventing unauthorized access.

Ensuring business continuity refers primarily to ensuring availability, with some aspects of data integrity. There are three main threats to business continuity. Disruptions are the loss of or reduction in network service. Disruptions may be minor and temporary. For example, a network switch might fail or a circuit may be cut, causing part of the network to cease functioning until the failed component can be replaced. Some users may be affected, but others can continue to use the network. Some disruptions may also be caused by or result in the destruction of data. For example, a virus may destroy files, or the “crash” of a hard disk may cause files to be destroyed. Other disruptions may be catastrophic. Natural (or human-made) disasters may occur that destroy host computers or large sections of the network. For example, hurricanes, fires, floods, earthquakes, mudslides, tornadoes, or terrorist attacks can destroy large parts of the buildings and networks in their path.

Intrusion refers primarily to confidentiality, but also to integrity, as an intruder may change important data. Intrusion is often viewed as external attackers gaining access to organizational data files and resources from across the Internet. However, almost half of all intrusion incidents involve employees. Intrusion may have only minor effects. A curious intruder may simply explore the system, gaining knowledge that has little value. A more serious intruder may be a competitor bent on industrial espionage who could attempt to gain access to information on products under development, or the details and price of a bid on a large contract, or a thief trying to steal customer credit card numbers or information to carry out identity theft. Worse still, the intruder could change files to commit fraud or theft or could destroy information to injure the organization.

10.1.3 Network Controls

Developing a secure network means developing controls. Controls are software, hardware, rules, or procedures that reduce or eliminate the threats to network security. Controls prevent, detect, and/or correct whatever might happen to the organization because of threats facing its computer-based systems.

FIGURE 10.1 Some threats to a computer center, data communication circuits, and client computers

Preventive controls mitigate or stop a person from acting or an event from occurring. For example, a password can prevent illegal entry into the system, or a second set of circuits can prevent the network from crashing. Preventive controls also act as a deterrent by discouraging or restraining someone from acting or proceeding because of fear or doubt. For example, a guard or a security lock on a door may deter an attempt to gain illegal entry.

Detective controls reveal or discover unwanted events. For example, software that looks for illegal network entry can detect these problems. They also document an event, a situation, or an intrusion, providing evidence for subsequent action against the individuals or organizations involved or enabling corrective action to be taken. For example, the same software that detects the problem must report it immediately so that someone or some automated process can take corrective action.

MANAGEMENT FOCUS

Even information technology giants, such as Google, are not safe when it comes to cyber security. The Aurora Attack began in mid-2009 and ended in December 2009 when it was discovered by Google. The name Aurora is believed to be an internal name that the attackers gave to this operation. This attack is believed to have been ordered by the Chinese government, and its goal was to gain access to and potentially modify source code repositories of high-tech, security, and defense contractors.

This wasn't a simple attack done by script kiddies, young adults who download scripts written by somebody else to exploit known vulnerabilities. Security experts were amazed by the sophistication of this attack and some claim it changed the threat model. Nearly a dozen pieces of malware and several levels of encryption were used to exploit a zero-day vulnerability in Internet Explorer and to break deeply into the corporate network while avoiding common detection methods.

This attack also hit 33 other companies in the United States, including Adobe, Yahoo, Symantec, and Dow Chemical. In response to this attack, the governments of other countries publicly issued warnings to users of Internet Explorer. The Aurora Attack reminds us that cyber security is a global problem and that everybody who uses the Internet can, and probably will, come under attack. Therefore, learning about security and investing in it are necessary to survive and thrive in the Internet era.

__________

SOURCES: http://www.wired.com/threatlevel/2010/01/operation-aurora/

http://en.wikipedia.org/wiki/OperationAurora

http://www.wired.com/threatlevel/2010/01/google-hack-attack/

Corrective controls remedy an unwanted event or an intrusion. Either computer programs or humans verify and check data to correct errors or fix a security breach so it will not recur in the future. They also can recover from network errors or disasters. For example, software can recover and restart the communication circuits automatically when there is a data communication failure.
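The three control types described in this section can be captured in a small data model. The following is a minimal sketch in Python rather than a standard taxonomy; the names `ControlType`, `Control`, and `controls_by_type` are hypothetical, and the sample entries are taken from the examples given in the text.

```python
from dataclasses import dataclass
from enum import Enum


class ControlType(Enum):
    PREVENTIVE = "prevent"   # stops or deters an event before it happens
    DETECTIVE = "detect"     # reveals and documents an unwanted event
    CORRECTIVE = "correct"   # remedies the event and recovers from it


@dataclass(frozen=True)
class Control:
    name: str
    kind: ControlType


# Sample controls drawn from the examples in the text.
CONTROLS = [
    Control("Password", ControlType.PREVENTIVE),
    Control("Guard or security lock on a door", ControlType.PREVENTIVE),
    Control("Software that looks for illegal network entry", ControlType.DETECTIVE),
    Control("Automatic restart of failed communication circuits", ControlType.CORRECTIVE),
]


def controls_by_type(controls, kind):
    """Return the names of all controls of the given type."""
    return [c.name for c in controls if c.kind is kind]


for kind in ControlType:
    print(kind.value, "->", controls_by_type(CONTROLS, kind))
```

Grouping controls this way makes it easy to see whether a network relies on only one type, for example many preventive controls but nothing that detects a breach.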

The remainder of this chapter discusses the various controls that can be used to prevent, detect, and correct threats. We also present a control spreadsheet and risk analysis methodology for identifying the threats and their associated controls. The control spreadsheet provides a network manager with a good view of the current threats and any controls that are in place to mitigate the occurrence of threats.

Nonetheless, it is important to remember that it is not enough just to establish a series of controls; someone or some department must be accountable for the control and security of the network. This includes being responsible for developing controls, monitoring their operation, and determining when they need to be updated or replaced.

Controls must be reviewed periodically to be sure that they are still useful and must be verified and tested. Verifying ensures that the control is present, and testing determines whether the control is working as originally specified.

It is also important to recognize that there may be occasions in which a person must temporarily override a control, for instance when the network or one of its software or hardware subsystems is not operating properly. Such overrides should be tightly controlled, and there should be a formal procedure to document this occurrence should it happen.

10.2 RISK ASSESSMENT

One key step in developing a secure network is to conduct a risk assessment. This assigns levels of risk to various threats to network security by comparing the nature of the threats to the controls designed to reduce them. It is done by developing a control spreadsheet and then rating the importance of each risk. This section provides a brief summary of the risk assessment process.5

10.2.1 Develop a Control Spreadsheet

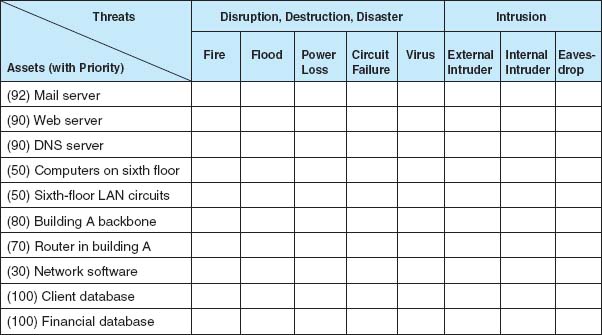

To be sure that the data communication network and microcomputer workstations have the necessary controls and that these controls offer adequate protection, it is best to build a control spreadsheet (Figure 10.2). Threats to the network are listed across the top, organized by business continuity (disruption, destruction, disaster) and intrusion, and the network assets down the side. The center of the spreadsheet incorporates all the controls that currently are in the network. This will become the benchmark on which to base future security reviews.

FIGURE 10.2 Sample control spreadsheet with some assets and threats. DNS = Domain Name Service; LAN = local area network

10.1 BASIC CONTROL PRINCIPLES OF A SECURE NETWORK

TECHNICAL FOCUS

- The less complex a control, the better.

- A control's cost should be equivalent to the identified risk. It often is not possible to ascertain the expected loss, so this is a subjective judgment in many cases.

- Preventing a security incident is always preferable to detecting and correcting it after it occurs.

- An adequate system of internal controls is one that provides “just enough” security to protect the network, taking into account both the risks and costs of the controls.

- Automated controls (computer-driven) always are more reliable than manual controls that depend on human interaction.

- Controls should apply to everyone, not just a few select individuals.

- When a control has an override mechanism, make sure that it is documented and that the override procedure has its own controls to avoid misuse.

- Institute the various security levels in an organization on the basis of “need to know.” If you do not need to know, you do not need to access the network or the data.

- The control documentation should be confidential.

- Names, uses, and locations of network components should not be publicly available.

- Controls must be sufficient to ensure that the network can be audited, which usually means keeping historical transaction records.

- When designing controls, assume that you are operating in a hostile environment.

- Always convey an image of high security by providing education and training.

- Make sure the controls provide the proper separation of duties. This applies especially to those who design and install the controls and those who are responsible for everyday use and monitoring.

- It is desirable to implement entrapment controls in networks to identify attackers who gain illegal access.

- When a control fails, the network should default to a condition in which everyone is denied access. A period of failure is when the network is most vulnerable.

- Controls should still work even when only one part of a network fails. For example, if a backbone network fails, all local area networks connected to it should still be operational, with their own independent controls providing protection.

- Don't forget the LAN. Security and disaster recovery planning has traditionally focused on host mainframe computers and WANs. However, LANs now play an increasingly important role in most organizations but are often overlooked by central site network managers.

- Always assume your opponent is smarter than you.

- Always have insurance as the last resort should all controls fail.
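The principle that a failed control should deny access to everyone is often called failing closed. A minimal sketch in Python, assuming a hypothetical permission table `PERMISSIONS` and a hypothetical lookup `lookup_permission` that raises an error when the control itself is broken:

```python
# Hypothetical permission table; names are for illustration only.
PERMISSIONS = {"alice": {"payroll"}, "bob": {"inventory"}}


def lookup_permission(user: str, resource: str, store=PERMISSIONS) -> bool:
    """Look up whether a user may access a resource.

    Raises an error if the permission store is unavailable (store is None),
    which stands in for a failed control.
    """
    if store is None:
        raise RuntimeError("permission store unavailable")
    return resource in store.get(user, set())


def is_allowed(user: str, resource: str, store=PERMISSIONS) -> bool:
    """Fail closed: any error in the control results in access being denied."""
    try:
        return lookup_permission(user, resource, store)
    except Exception:
        # The control failed, so deny everyone rather than risk granting access.
        return False


print(is_allowed("alice", "payroll"))              # True
print(is_allowed("alice", "inventory"))            # False
print(is_allowed("alice", "payroll", store=None))  # False
```

The last call shows the point of the principle: a user who normally has access is still refused while the control is down.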

Assets The first step is to identify the assets on the network. An asset is something of value and can be hardware, software, data, or applications. Probably the most important asset on a network is the organization's data. For example, suppose someone destroyed a mainframe worth $10 million. The mainframe could be replaced simply by buying a new one. It would be expensive, but the problem would be solved in a few weeks. Now suppose someone destroyed all the student records at your university so that no one knows what courses anyone had taken or their grades. The cost would far exceed the cost of replacing a $10 million computer. The lawsuits alone would easily exceed $10 million, and the cost of staff to find and reenter paper records would be enormous and certainly would take more than a few weeks. Figure 10.3 summarizes some typical assets.

FIGURE 10.3 Types of assets. DNS = Domain Name Service; DHCP = Dynamic Host Configuration Protocol; LAN = local area network; WAN = wide area network

An important type of asset is the mission-critical application, which is an information system that is critical to the survival of the organization. It is an application that cannot be permitted to fail, and if it does fail, the network staff drops everything else to fix it. For example, for an Internet bank that has no brick-and-mortar branches, the Web site is a mission-critical application. If the Web site crashes, the bank cannot conduct business with its customers. Mission-critical applications are usually clearly identified, so their importance is not overlooked.

Once you have a list of assets, they should be evaluated based on their importance. There will rarely be enough time and money to protect all assets completely, so it is important to focus the organization's attention on the most important ones. Prioritizing asset importance is a business decision, not a technology decision, so it is critical that senior business managers be involved in this process.

Threats A threat to the data communication network is any potential adverse occurrence that can do harm, interrupt the systems using the network, or cause a monetary loss to the organization. Although threats may be listed in generic terms (e.g., theft of data, destruction of data), it is better to be specific and use actual data from the organization being assessed (e.g., theft of customer credit card numbers, destruction of the inventory database).

Once the threats are identified they can be ranked according to their probability of occurrence and the likely cost if the threat occurs. Figure 10.4 summarizes the most common threats and their likelihood of occurring, plus a typical cost estimate, based on several surveys. The actual probability of a threat to your organization and its costs depend on your business. An Internet bank, for example, is more likely to be a target of fraud and to suffer a higher cost if it occurs than a restaurant with a simple Web site. Nonetheless, Figure 10.4 provides some general guidance.

FIGURE 10.4 Likelihood and costs of common risks

From Figure 10.4 you can see that the most likely event is a virus infection, suffered by more than 60 percent of organizations each year. The average cost to clean up a virus that slips through the security system and infects an average number of computers is about $33,000 per virus. Depending on your background, this was probably not the first security threat that came to mind; most people first think about unknown attackers breaking into a network across the Internet. This does happen, too; unauthorized access by an external hacker is experienced by about 30 percent of all organizations each year, with some experiencing an act of sabotage or vandalism. The average cost to recover after these attacks is $100,000.

Interestingly, companies suffer intrusion by their own employees about as often as by outsiders, although the dollar loss is usually less—unless fraud or theft of information is involved. While few organizations experience fraud or theft of information from internal or external attackers, the cost to recover afterward can be very high, both in dollar cost and bad publicity. Several major companies have had their networks broken into and have had proprietary information such as customer credit card numbers stolen. Winning back customers whose credit card information was stolen can be an even greater challenge than fixing the security breach.

You will also see that device failure and computer equipment theft are common problems but usually result in low dollar losses compared to other security violations. Natural disasters (e.g., fire, flood) are also common, and result in high dollar losses.

Denial of service attacks, in which someone external to your organization blocks access to your networks, are also common (35 percent) and somewhat costly ($25,000 per event). Even temporary disruptions in service that cause no data loss can have significant costs. Estimating the cost of denial of service is very organization-specific; the cost of disruptions to a company that does a lot of e-commerce through a Web site is often measured in the millions.

Amazon.com, for example, has revenues of more than $10 million per hour, so if its Web site were unavailable for an hour or even part of an hour it would cost millions of dollars in lost revenue. Companies that do no e-commerce over the Web would have lower costs, but recent surveys suggest losses of $100,000–200,000 per hour are not uncommon for major disruptions of service. Even the disruption of a single LAN has cost implications; surveys suggest that most businesses estimate the cost of lost work at $1,000–5,000 per hour.
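The cost of an outage lasting part of an hour can be estimated with simple arithmetic. A rough sketch in Python, assuming losses accrue evenly over time; the function name `downtime_cost` and the outage durations are hypothetical, while the hourly figures are those quoted in the text.

```python
def downtime_cost(loss_per_hour: float, minutes: float) -> float:
    """Estimated loss for an outage, assuming losses accrue evenly over time."""
    return loss_per_hour * minutes / 60


# A 30-minute outage at $10 million in revenue per hour.
print(downtime_cost(10_000_000, 30))  # 5000000.0

# A 4-hour LAN outage at the low end of the $1,000-5,000 per hour range.
print(downtime_cost(1_000, 240))      # 4000.0
```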

There are two “big picture” messages from Figure 10.4. First, the most common threat that has a noticeable cost is viruses. In fact, if we look at the relative probabilities of the different threats, we can see that the threats to business continuity (e.g., virus, theft of equipment, or denial of service) have a greater chance of occurring than intrusion. Nonetheless, given the cost of fraud and theft of information, even a single event can have significant impact.6
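One common way to combine likelihood and cost into a single ranking is to multiply them into an expected annual loss. This is an assumption made for illustration; the text says only that threats are ranked by probability and likely cost, not how the two are combined. The sketch below uses the approximate likelihood and cost figures quoted in the text for three threats, and the function name `expected_loss` is hypothetical.

```python
# (threat, percent of organizations affected per year, average cost per event in dollars)
# Figures are the approximate values quoted in the text.
THREATS = [
    ("Virus infection", 60, 33_000),
    ("External intrusion", 30, 100_000),
    ("Denial of service", 35, 25_000),
]


def expected_loss(likelihood_pct: int, cost: int) -> float:
    """Expected annual loss, assuming at most one event per year."""
    return likelihood_pct * cost / 100


# Rank threats from highest to lowest expected annual loss.
ranked = sorted(THREATS, key=lambda t: expected_loss(t[1], t[2]), reverse=True)
for name, pct, cost in ranked:
    print(f"{name}: ${expected_loss(pct, cost):,.0f}")
```

Under these assumptions external intrusion ranks above virus infection even though viruses are more frequent, which fits the observation that a costly event can outweigh a common one.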

The second important message is that the threat of intrusion from the outside intruder coming at you over the Internet has increased. For the past 30 years, more organizations reported encountering security breaches caused by employees than by outsiders. This has been true ever since the early 1980s when the FBI first began keeping computer crime statistics and security firms began conducting surveys of computer crime. However, in recent years, the number of external attacks has increased at a much greater rate while the number of internal attacks has stayed relatively constant. Even though some of this may be due to better internal security and better communications with employees to prevent security problems, much of it is simply due to an increase in activity by external attackers and the global reach of the Internet. Today, external attackers pose almost as great a risk as internal employees.

10.2.2 Identify and Document the Controls

Once the specific assets and threats have been identified, you can begin working on the network controls, which mitigate or stop a threat, or protect an asset. During this step, you identify the existing controls and list them in the cell for each asset and threat.

Begin by considering the asset and the specific threat, and then describe each control that prevents, detects, or corrects that threat. The description of the control (and its role) is placed in a numerical list, and the control's number is placed in the cell. For example, assume 24 controls have been identified as being in use. Each one is described, named, and numbered consecutively. The numbered list of controls has no ranking attached to it: the first control is number 1 just because it is the first control identified.

Figure 10.5 shows a partially completed spreadsheet. The assets and their priority are listed as rows, with threats as columns. Each cell lists one or more controls that protect one asset against one threat. For example, in the first row, the mail server is currently protected from a fire threat by a Halon fire suppression system, and there is a disaster recovery plan in place. The placement of the mail server above ground level protects against flood, and the disaster recovery plan helps here too.

FIGURE 10.5 Sample control spreadsheet with some assets, threats, and controls. DNS = Domain Name Service; LAN = local area network
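A control spreadsheet of this kind maps each asset and threat pair to the numbered controls that cover it. A minimal sketch in Python, using only the mail server example from the text; the names `CONTROLS`, `SPREADSHEET`, and `controls_for` are hypothetical, and the control numbers are arbitrary, as the text notes.

```python
# Numbered list of controls; the numbers carry no ranking.
CONTROLS = {
    1: "Disaster recovery plan",
    2: "Halon fire suppression system",
    3: "Server located above ground level",
}

# Each cell maps (asset, threat) to the control numbers that protect it.
SPREADSHEET = {
    ("Mail server", "Fire"): [1, 2],
    ("Mail server", "Flood"): [1, 3],
}


def controls_for(asset: str, threat: str) -> list[str]:
    """Return the descriptions of the controls in one spreadsheet cell."""
    return [CONTROLS[n] for n in SPREADSHEET.get((asset, threat), [])]


print(controls_for("Mail server", "Fire"))
print(controls_for("Mail server", "Intrusion"))  # [] means no control is recorded
```

Storing control numbers in the cells, with descriptions kept in one separate list, mirrors the layout described above and lets one control such as the disaster recovery plan appear in several cells.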

10.2.3 Evaluate the Network's Security

The last step in using a control spreadsheet is to evaluate the adequacy of the existing controls and the resulting degree of risk associated with each threat. Based on this assessment, priorities can be established to determine which threats must be addressed immediately. Assessment is done by reviewing each set of controls as it relates to each threat and network component. The objective of this step is to answer the specific question: Are the controls adequate to effectively prevent, detect, and correct this specific threat?

The assessment can be done by the network manager, but it is better done by a team of experts chosen for their in-depth knowledge about the network and environment being reviewed. This team, known as the Delphi team, is composed of three to nine key people. Key managers should be team members because they deal with both the long-term and day-to-day operational aspects of the network. More important, their participation means the final results can be implemented quickly, without further justification, because they make the final decisions affecting the network.
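Before a team judges whether the controls are adequate, a useful first pass is to list the cells that have no controls at all. A minimal sketch in Python; the asset and threat lists, the cell contents, and the function `uncovered_cells` are assumptions made for illustration.

```python
from itertools import product

ASSETS = ["Mail server", "Web server"]     # hypothetical asset list
THREATS = ["Fire", "Flood", "Intrusion"]   # hypothetical threat list

# Control numbers recorded in each (asset, threat) cell.
SPREADSHEET = {
    ("Mail server", "Fire"): [1, 2],
    ("Mail server", "Flood"): [1, 3],
    ("Web server", "Fire"): [1],
}


def uncovered_cells(assets, threats, spreadsheet):
    """Return every (asset, threat) pair with no control listed."""
    return [
        (asset, threat)
        for asset, threat in product(assets, threats)
        if not spreadsheet.get((asset, threat))
    ]


for asset, threat in uncovered_cells(ASSETS, THREATS, SPREADSHEET):
    print(f"No control recorded: {asset} / {threat}")
```

An empty cell shows a gap, but a filled cell does not prove adequacy; that judgment still rests with the reviewers.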

10.3 ENSURING BUSINESS CONTINUITY

Business continuity means that the organization's data and applications will continue to operate even in the face of disruption, destruction, or disaster. A business continuity plan has two major parts: the development of controls that will prevent these events from having a major impact on the organization, and a disaster recovery plan that will enable the organization to recover if a disaster occurs. In this section, we discuss controls designed to prevent, detect, and correct these threats.7 We focus on the major threats to business continuity: viruses, theft, denial of service attacks, device failure, and disasters. Business continuity planning is sometimes overlooked because intrusion is more often the subject of news reports.

10.3.1 Virus Protection

Special attention must be paid to preventing computer viruses. Some are harmless and just cause nuisance messages, but others are serious and can destroy data. In most cases, disruptions or the destruction of data are local and affect only a small number of computers. Such disruptions are usually fairly easy to deal with; the virus is removed and the network continues to operate. Some viruses cause widespread infection, although this has not occurred in recent years.

10.2 ATTACK OF THE AUDITORS

MANAGEMENT FOCUS

Security has become a major issue over the past few years. With the passage of HIPAA and the Sarbanes-Oxley Act, more and more regulations are addressing security. It takes years for most organizations to become compliant, because the rules are vague and there are many ways to meet the requirements.

“If you've implemented commonsense security, you're probably already in compliance from an IT standpoint,” says Kim Keanini, Chief Technology Officer of nCircle, a security software firm. “Compliance from an auditing standpoint, however, is something else.” Auditors require documentation. It is no longer sufficient to put key network controls in place; now you have to provide documented proof that a control is working, which usually requires event logs of transactions and thwarted attacks.

When it comes to security, Bill Randal, MIS Director of Red Robin Restaurants, can't stress the importance of documentation enough. “It's what the auditors are really looking for,” he says. “They're not IT folks, so they're looking for documented processes they can track. At the start of our [security] compliance project, we literally stopped all other projects for another three weeks while we documented every security and auditing process we had in place.”

Software vendors are scrambling to ensure that their security software not only performs the functions it is designed to do but also provides better documentation for auditors.

__________

SOURCE: Oliver Rist, “Attack of the Auditors,” InfoWorld, March 21, 2005, pp. 34–40.

Most viruses attach themselves to other programs or to special parts on disks. As those files execute or are accessed, the virus spreads. Macro viruses, viruses that are contained in documents, emails, or spreadsheet files, can spread when an infected file is simply opened. Some viruses change their appearances as they spread, making detection more difficult.

A worm is a special type of virus that spreads itself without human intervention. Many viruses attach themselves to a file and require a person to copy the file, but a worm copies itself from computer to computer. Worms spread when they install themselves on a computer and then send copies of themselves to other computers, sometimes by emails, sometimes via security holes in software. (Security holes are described later in this chapter.)

The best way to prevent the spread of viruses is to install antivirus software such as that by Symantec. Most organizations automatically install antivirus software on their computers, but many people fail to install it on their home computers. Antivirus software is only as good as its last update, so it is critical that the software be updated regularly. Be sure to set your software to update automatically or do it manually on a regular basis.

Viruses are often spread by downloading files from the Internet, so do not copy or download files of unknown origin (e.g., music, videos, screen savers), or at least check every file you do download. Always check all files for viruses before using them (even those from friends!). Researchers estimate that 10 new viruses are developed every day, so it is important to frequently update the virus information files that are provided by the antivirus software.

10.3.2 Denial of Service Protection

With a denial-of-service (DoS) attack, an attacker attempts to disrupt the network by flooding it with messages so that the network cannot process messages from normal users. The simplest approach is to flood a Web server, mail server, and so on with incoming messages. The server attempts to respond to these, but there are so many messages that it cannot.

One might expect that it would be possible to filter messages from one source IP so that if one user floods the network, the messages from this person can be filtered out before they reach the Web server being targeted. This could work, but most attackers use tools that enable them to put false source IP addresses on the incoming messages so that it is difficult to recognize a message as a real message or a DoS message.

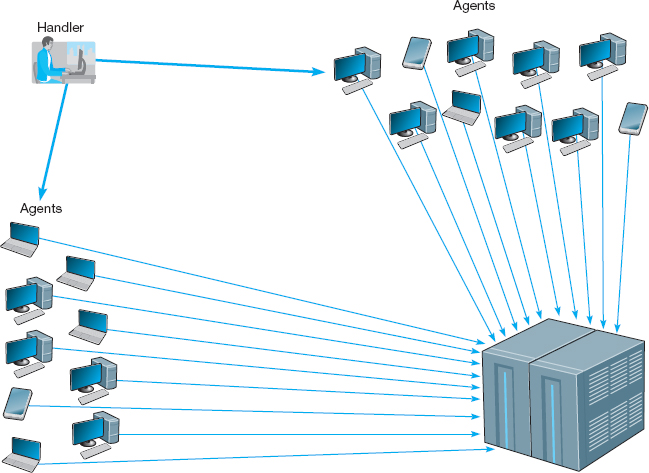

A distributed denial-of-service (DDoS) attack is even more disruptive. With a DDoS attack, the attacker breaks into and takes control of many computers on the Internet (often several hundred to several thousand) and plants software on them called a DDoS agent (or sometimes a zombie or a bot). The attacker then uses software called a DDoS handler (sometimes called a botnet) to control the agents. The handler issues instructions to the computers under the attacker's control, which simultaneously begin sending messages to the target site. In this way, the target is deluged with messages from many different sources, making it harder to identify the DoS messages and greatly increasing the number of messages hitting the target (see Figure 10.6). Some DDoS attacks have sent more than one million packets per second at the target.

There are several approaches to preventing DoS and DDoS attacks from affecting the network. The first is to configure the main router that connects your network to the Internet (or the firewall, which will be discussed later in this chapter) to verify that the source address of all incoming messages is in a valid address range for that connection (called traffic filtering). For example, if an incoming message has a source address from inside your network, then it is obviously a false address. This ensures that only messages with valid addresses are permitted into the network, although it requires more processing in the router and thus slows incoming traffic.
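The traffic filtering rule described above can be sketched in a few lines of Python. This is only an illustration of the check, not how any particular router implements it. The internal address block (`192.0.2.0/24`, a range reserved for documentation) and the function name are assumptions made for the example.

```python
import ipaddress

# Hypothetical address plan: the block used inside the organization.
INTERNAL_NETWORK = ipaddress.ip_network("192.0.2.0/24")


def is_valid_inbound_source(source_ip: str) -> bool:
    """Return True if a packet arriving from the Internet has a plausible source."""
    addr = ipaddress.ip_address(source_ip)
    # A packet arriving from outside cannot legitimately claim an inside address.
    if addr in INTERNAL_NETWORK:
        return False
    # Loopback, unspecified, and multicast addresses are never valid unicast sources.
    if addr.is_loopback or addr.is_unspecified or addr.is_multicast:
        return False
    return True


print(is_valid_inbound_source("198.51.100.7"))  # outside address: accepted
print(is_valid_inbound_source("192.0.2.15"))    # claims to be internal: rejected
```

Every incoming packet pays for this lookup, which is the extra router processing the text mentions.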

A second approach is to configure the main router (or firewall) to limit the number of incoming packets that could be DoS/DDoS attack packets that it allows to enter the network, regardless of their source (called traffic limiting). Technical Focus box 10.2 describes some of the types of DoS/DDoS attacks and the packets used. Such packets have the same content as legitimate packets that should be permitted into the network. It is a flood of such packets that indicates a DoS/DDoS attack, so by discarding packets over a certain number that arrive each second, one can reduce the impact of the attack. The disadvantage is that during an attack, some valid packets from regular customers will be discarded so they will be unable to reach your network. Thus the network will continue to operate, but some customer packets (e.g., Web requests, emails) will be lost.
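As a rough sketch of traffic limiting, the following Python class admits a fixed number of packets in each one-second window and discards the rest, regardless of source. The class name and the limit of three packets per second are assumptions chosen to keep the example small; real devices use far higher limits and more refined schemes.

```python
class PacketRateLimiter:
    """Admit at most max_per_second packets in each one-second window."""

    def __init__(self, max_per_second: int) -> None:
        self.max_per_second = max_per_second
        self.current_second = None  # the window currently being counted
        self.count = 0              # packets admitted in that window

    def allow(self, arrival_time: float) -> bool:
        second = int(arrival_time)
        if second != self.current_second:
            # A new one-second window has started, so reset the counter.
            self.current_second = second
            self.count = 0
        if self.count >= self.max_per_second:
            # Over the limit: discard, even if the packet is legitimate.
            return False
        self.count += 1
        return True


limiter = PacketRateLimiter(max_per_second=3)
print([limiter.allow(t) for t in (0.1, 0.2, 0.3, 0.4, 1.0)])
# [True, True, True, False, True]
```

The fourth packet is dropped even though nothing distinguishes it from the first three, which is exactly the disadvantage noted above: valid customer packets are lost during an attack.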

FIGURE 10.6 A distributed denial-of-service attack

10.3 CAN DDOS ATTACKS BREAK THE INTERNET?

MANAGEMENT FOCUS

Although the idea of DDoS is not new at all, it causes headaches to more and more companies with online presence. According to Arbor Networks Security Report, DDoS attacks have increased by 1000 percent since 2005. Attackers are now able to bombard a target at 100 Gbps, which is twice the size of the largest attack in 2009. The concern is that DDoS attacks may break the Internet in the near future.

There are three reasons for this. First, after the Wikileaks website was taken down for several hours in 2010 by a DDoS, we now realize that DDoS can be seen as a mass demonstration or protest that happens online. Second, faster household Internet connections make it easier to launch successful attacks because each bot now can generate more traffic. Third, some speculate that the fast-growing network of mobile devices that run on fast mobile networks can be used in DDoS. The concern that DDoS can be the threat of the next decade is even more tangible these days because of an announcement made by a computer science graduate, Max Schuchard. He claims that he discovered a way to launch a DDoS on Border Gateway Protocol (BGP) that runs on all major Internet routers, and thus can crash the Internet. The question, then, is no longer whether one can break a website but rather whether one can break the Internet.

__________

SOURCES: http://www.pcworld.com/businesscenter/article/218533/will_ddos_attacks_take_over_the_internet.html

http://www.zdnet.com/blog/networking/how-to-crash-the-internet/680

A third and more sophisticated approach is to use a special-purpose security device, called a traffic anomaly detector, that is installed in front of the main router (or firewall) to perform traffic analysis. This device monitors normal traffic patterns and learns what normal traffic looks like. Most DoS/DDoS attacks target a specific server or device so when the anomaly detector recognizes a sudden burst of abnormally high traffic destined for a specific server or device, it quarantines those incoming packets but allows normal traffic to flow through into the network. This results in minimal impact to the network as a whole. The anomaly detector re-routes the quarantined packets to a traffic anomaly analyzer (see Figure 10.7). The anomaly analyzer examines the quarantined traffic, attempts to recognize valid source addresses and “normal” traffic, and selects which of the quarantined packets to release into the network. The detector can also inform the router owned by the ISP that is sending the traffic into the organization's network to reroute the suspect traffic to the anomaly analyzer, thus avoiding the main circuit leading into the organization. This process is never perfect, but is significantly better than the other approaches.
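The learn-then-quarantine behavior of an anomaly detector can be illustrated with a short Python sketch. It assumes a very simple model that is not taken from any real product: the detector keeps a smoothed average of packets per interval for each destination and quarantines any interval that exceeds that average by a chosen factor. The class name, the factor of 5, and the smoothing value are all hypothetical.

```python
class TrafficAnomalyDetector:
    """Learn a per-destination baseline and flag sudden bursts against it."""

    def __init__(self, burst_factor: float = 5.0, smoothing: float = 0.1) -> None:
        self.burst_factor = burst_factor  # how far above normal counts as a burst
        self.smoothing = smoothing        # weight given to the newest observation
        self.baseline = {}                # destination -> learned packets per interval

    def observe(self, destination: str, packets: int) -> str:
        """Classify one interval of traffic as 'forward' or 'quarantine'."""
        normal = self.baseline.get(destination)
        if normal is None:
            # First observation for this destination: start learning from it.
            self.baseline[destination] = float(packets)
            return "forward"
        if packets > self.burst_factor * normal:
            # Suspected attack: quarantine and do not learn from this interval.
            return "quarantine"
        # Normal traffic: fold it into the running baseline.
        self.baseline[destination] = (
            (1 - self.smoothing) * normal + self.smoothing * packets
        )
        return "forward"


detector = TrafficAnomalyDetector()
for count in (100, 110, 90):
    detector.observe("web-server", count)
print(detector.observe("web-server", 5000))  # quarantine
print(detector.observe("mail-server", 50))   # forward
```

Because the baseline is kept per destination, a burst aimed at one server is quarantined while traffic to other servers continues to flow, matching the behavior described above.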

FIGURE 10.7 Traffic analysis reduces the impact of denial of service attacks

TECHNICAL FOCUS

A DoS attack typically involves the misuse of standard TCP/IP protocols or connection processes so that the target for the DoS attack responds in a way designed to create maximum trouble. Six common types of attacks include:

- ICMP Attacks:

The network is flooded with ICMP echo requests (i.e., pings) that have a broadcast destination address and a faked source address of the intended target. Because it is a broadcast message, every computer on the network responds to the faked source address so that the target is overwhelmed by responses. Because there are often dozens of computers in the same broadcast domain, each message generates dozens of messages at the target.

- UDP Attacks:

This attack is similar to an ICMP attack except that it uses UDP echo requests instead of ICMP echo requests.

- TCP SYN Floods:

The target is swamped with repeated SYN requests to establish a TCP connection, but when the target responds (usually to a faked source address) there is no response. The target continues to allocate TCP control blocks, expects each of the requests to be completed, and gradually runs out of memory.

- UNIX Process Table Attacks:

This is similar to a TCP SYN flood, but instead of TCP SYN packets, the target is swamped by UNIX open connection requests that are never completed. The target allocates open connections and gradually runs out of memory.

- Finger of Death Attacks:

This is similar to the TCP SYN flood, but instead the target is swamped by finger requests that are never disconnected.

- DNS Recursion Attacks:

The attacker sends DNS requests to DNS servers (often within the target's network), but spoofs the from address so the requests appear to come from the target computer, which is overwhelmed by DNS responses. DNS responses are larger packets than ICMP, UDP, or SYN responses, so the effects can be stronger.

__________

SOURCE: “Web Site Security and Denial of Service Protection,” www.nwfusion.com.
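To see why a TCP SYN flood exhausts a server, it can help to model the table of half-open connections described in the box above. The Python sketch below is a toy model under stated assumptions: the table holds a fixed number of entries, and entries are dropped after a timeout. The class name, the capacity of 3, and the 30-second timeout are hypothetical, and the timeout itself is an addition for illustration rather than something described in the box.

```python
class HalfOpenTable:
    """Toy model of the table a server keeps for half-open TCP connections."""

    def __init__(self, capacity: int, timeout: float) -> None:
        self.capacity = capacity
        self.timeout = timeout
        self.pending = {}  # (source address, source port) -> time the SYN arrived

    def expire(self, now: float) -> None:
        # Drop entries whose handshake was never completed in time.
        self.pending = {
            key: arrived
            for key, arrived in self.pending.items()
            if now - arrived < self.timeout
        }

    def on_syn(self, source, now: float) -> bool:
        """Record a SYN; return False if the table is full and the request is refused."""
        self.expire(now)
        if len(self.pending) >= self.capacity:
            return False
        self.pending[source] = now
        return True

    def on_ack(self, source) -> None:
        # Handshake completed: the entry leaves the half-open table.
        self.pending.pop(source, None)


table = HalfOpenTable(capacity=3, timeout=30.0)
# Three SYNs with faked source addresses that will never be acknowledged.
for i in range(3):
    table.on_syn((f"203.0.113.{i}", 4000), now=float(i))
# A legitimate client is refused because the table is full.
print(table.on_syn(("198.51.100.9", 5000), now=3.0))   # False
# Once stale entries time out, the same client can connect.
print(table.on_syn(("198.51.100.9", 5000), now=31.0))  # True
```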

Another possibility under discussion by the Internet community as a whole is to require Internet Service Providers (ISPs) to verify that all incoming messages they receive from their customers have valid source IP addresses. This would prevent the use of faked IP addresses and enable users to easily filter out DoS messages from a given address. It would make it virtually impossible for a DoS attack to succeed, and much harder for a DDoS attack to succeed. Because small- to medium-sized businesses often have poor security and become the unwilling accomplices in DDoS attacks, many ISPs are beginning to impose security restrictions on them, such as requiring firewalls to prevent unauthorized access (firewalls are discussed later in this chapter).

10.3.3 Theft Protection

One often overlooked security risk is theft. Computers and network equipment are commonplace items that have a good resale value. Several industry sources estimate that over $1 billion is lost to computer theft each year, with many of the stolen items ending up on Internet auction sites (e.g., eBay).

Physical security is a key component of theft protection. Most organizations require anyone entering their offices to go through some level of physical security. For example, most offices have security guards and require all visitors to be authorized by an organization employee. Universities are one of the few organizations that permit anyone to enter their facilities without verification. Therefore, you'll see most computer equipment and network devices protected by locked doors or security cables so that someone cannot easily steal them.

One of the most common targets for theft is laptop computers. More laptop computers are stolen from employees' homes, cars, and hotel rooms than any other device. Airports are another common place for laptop thefts. It is hard to provide physical security for traveling employees, but most organizations provide regular reminders to their employees to take special care when traveling with laptops. Nonetheless, they are still the most commonly stolen device.

10.3.4 Device Failure Protection

Eventually, every computer network device, cable, or leased circuit will fail. It's just a matter of time. Some computers, devices, cables, and circuits are more reliable than others, but every network manager has to be prepared for a failure.

The best way to prevent a failure from impacting business continuity is to build redundancy into the network. For any network component that would have a major impact on business continuity, the network designer provides a second, redundant component. For example, if the Internet connection is important to the organization, the network designer ensures that there are at least two connections into the Internet—each provided by a different common carrier, so that if one common carrier's network goes down, the organization can still reach the Internet via the other common carrier's network. This means, of course, that the organization now requires two routers to connect to the Internet, because there is little use in having two Internet connections if they both run through the same router; if that one router goes down, having a second Internet connection provides no value.
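The argument for two routers as well as two connections can be checked with a little arithmetic. The Python sketch below assumes that components fail independently and uses made-up availability figures (99 percent for a carrier circuit, 99.5 percent for a router); neither the figures nor the function names come from the text.

```python
def series(*availabilities: float) -> float:
    """All components must work, so multiply their availabilities."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


def parallel(*availabilities: float) -> float:
    """At least one component must work: 1 minus the chance that all fail."""
    all_fail = 1.0
    for a in availabilities:
        all_fail *= (1.0 - a)
    return 1.0 - all_fail


LINK = 0.99     # assumed availability of one carrier's circuit
ROUTER = 0.995  # assumed availability of one router

single_path = series(LINK, ROUTER)
two_links_one_router = series(parallel(LINK, LINK), ROUTER)
two_links_two_routers = parallel(series(LINK, ROUTER), series(LINK, ROUTER))

print(f"one link, one router:   {single_path:.4%}")
print(f"two links, one router:  {two_links_one_router:.4%}")
print(f"two links, two routers: {two_links_two_routers:.4%}")
```

Under these assumptions, two links through a single router can never be more available than the router itself, while two fully separate paths do much better.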

This same design principle applies to the organization's internal networks. If the core backbone is important (and it usually is), then the organization must have two core backbones, each served by different devices. Each distribution backbone that connects to the core backbone (e.g., a building backbone that connects to a campus backbone) must also have two connections (and two routers) into the core backbone.

The next logical step is to ensure that each access layer LAN also has two connections into the distribution backbone. Redundancy can be expensive, so at some point, most organizations decide that not all parts of the network need to be protected. Most organizations build redundancy into their core backbone and their Internet connections, but are very careful in choosing which distribution backbones (i.e., building backbones) and access layer LANs will have redundancy. Only those building backbones and access LANs that are truly important will have redundancy. This is why a risk assessment with a control spreadsheet is important, because it is too expensive to protect the entire network. Most organizations only provide redundancy in mission critical backbones and LANs (e.g., those that lead to servers).

Redundancy also applies to servers. Most organizations use a server farm, rather than a single server, so that if one server fails, the other servers in the server farm continue to operate and there is little impact. Some organizations use fault-tolerant servers that contain many redundant components so that if one of its components fails, it will continue to operate.

Redundant array of independent disks (RAID) is a storage technology that, as the name suggests, is made of many separate disk drives. When a file is written to a RAID device, it is written across several separate, redundant disks.

There are several types of RAID. RAID 0 uses multiple disk drives and therefore is faster than traditional storage, because the data can be written or read in parallel across several disks, rather than sequentially on the same disk. RAID 1 writes duplicate copies of all data on at least two different disks; this means that if one disk in the RAID array fails, there is no data loss because there is a second copy of the data stored on a different disk. This is sometimes called disk mirroring, because the data on one disk is copied (or mirrored) onto another. RAID 2 provides error checking to ensure no errors have occurred during the reading or writing process. RAID 3 provides a better and faster error checking process than RAID 2. RAID 4 provides slightly faster read access than RAID 3 because of the way it allocates the data to different disk drives. RAID 5 provides slightly faster read and write access because of the way it allocates the error checking data to different disk drives. RAID 6 can survive the failure of two drives with no data loss.
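The error-checking data in parity-based RAID levels such as RAID 5 is commonly computed with the XOR operation, which lets the array rebuild any one missing block from the remaining blocks. The Python sketch below shows only that idea; it does not model how a real controller rotates the parity across drives, and the block contents and function name are invented for the example.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


# Three data blocks, each imagined to live on a different disk.
data_blocks = [b"NETW", b"ORKS", b"SAFE"]
# The parity block would be stored on a fourth disk.
parity = xor_blocks(data_blocks)

# Simulate losing the second disk and rebuild it from the survivors plus parity.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'ORKS'
```

The same calculation fails if two blocks are missing at once, which is why a level that survives two drive failures, such as RAID 6, needs additional error-checking data.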

Power outages are one of the most common causes of network failures. An uninterruptible power supply (UPS) is a device that detects power failures and permits the devices attached to it to operate as long as its battery lasts. UPS for home use are inexpensive and often provide power for up to 15 minutes, long enough for you to save your work and shut down your computer. UPS for large organizations often have batteries that last for an hour and permit mission critical servers, switches, and routers to operate until the organization's backup generator can be activated.

10.3.5 Disaster Protection

A disaster is an event that destroys a large part of the network and computing infrastructure in one part of the organization. Disasters are usually caused by natural forces (e.g., hurricanes, floods, earthquakes, fires), but some can be human-made (e.g., arson, bombs, terrorism).

Avoiding Disaster

Ideally, you want to avoid a disaster, which can be difficult. For example, how do you avoid an earthquake? There are, however, some commonsense steps you can take to keep the full impact of a disaster from reaching your network. The most fundamental is again redundancy; store critical data in at least two very different places, so if a disaster hits one place, your data are still safe.

Other steps depend on the disaster to be avoided. For example, to avoid the impact of a flood, key network components and data should never be located near rivers or in the basement of a building. To avoid the impact of a tornado, key network components and data should be located underground. To reduce the impact of fire, a fire suppression system should be installed in all key data centers. To reduce the impact of terrorist activities, the location of key network components and data should be kept a secret and should be protected by security guards.

MANAGEMENT FOCUS

As Hurricane Katrina swept over New Orleans, Ochsner Hospital lost two of its three backup power generators, knocking out air conditioning in the 95-degree heat. Fans were brought out to cool patients, but temperatures inside critical computer and networking equipment reached 150 degrees. Kurt Induni, the hospital's network manager, shut down part of the network and the mainframe with its critical patient records system to ensure they survived the storm. The hospital returned to paper-based record keeping, but Induni managed to keep email alive, which became critical when the telephone system failed and a main fiber line was cut. Email through the hospital's T-3 line into Baton Rouge became the only reliable means of communication. After the storm, the mainframe was turned back on and the patient records were updated.

While Ochsner Hospital remained open, Kindred Hospital was forced to evacuate patients (under military protection from looters and snipers). The patients’ files, all electronic, were simply transferred over the network to other hospitals with no worry about lost records, X-rays, CT scans, and such.

In contrast, the Louisiana court system learned a hard lesson. The court system is administered by each individual parish (i.e., county) and not every parish had a disaster recovery plan or even backups of key documents; many parishes still use old paper files that were destroyed by the storm. “We've got people in jails all over the state right now that have no paperwork and we have no way to offer them any kind of means for adjudication,” says Freddie Manit, CIO for the Louisiana Ninth Judicial District Court. No paperwork means no prosecution, even for felons with long records, so many prisoners will simply be released. Sometimes losing data is not the worst thing that can happen.

__________

SOURCES: Phil Hochmuth, “Weathering Katrina,” NetworkWorld, September 19, 2005, pp. 1, 20; and M. K. McGee, “Storm Shows Benefits, Failures of Technology,” Informationweek, September 15, 2005, p. 34.

Disaster Recovery

A critical element in correcting problems from a disaster is the disaster recovery plan, which should address various levels of response to a number of possible disasters and should provide for partial or complete recovery of all data, application software, network components, and physical facilities. A complete disaster recovery plan covering all these areas is beyond the scope of this text. Figure 10.8 provides a summary of many key issues. A good example of a disaster recovery plan is MIT's business continuity plan at web.mit.edu/security/www/pubplan.htm. Some firms prefer the term business continuity plan.

The most important elements of the disaster recovery plan are backup and recovery controls that enable the organization to recover its data and restart its application software should some portion of the network fail. The simplest approach is to make backup copies of all organizational data and software routinely and to store these backup copies off-site. Most organizations make daily backups of all critical information, with less important information (e.g., email files) backed up weekly. Backups used to be done on tapes that were physically shipped to an off-site location, but more and more, companies are using their WAN connections to transfer data to remote locations (it's faster and cheaper than moving tapes). Backups should always be encrypted (encryption is discussed later in the chapter) to ensure that no unauthorized users can access them.

FIGURE 10.8 Elements of a disaster recovery plan

Continuous data protection (CDP) is another option that firms are using in addition to or instead of regular backups. With CDP, copies of all data and transactions on selected servers are written to CDP servers as the transaction occurs. CDP is more flexible than traditional backups, which take snapshots of data at specific times, or disk mirroring, which duplicates the contents of a disk from second to second. CDP enables data to be stored miles from the originating server and time-stamps all transactions to enable organizations to restore data to any specific point in time. For example, suppose a virus brings down a server at 2:45 P.M. The network manager can restore the server to the state it was in at 2:30 P.M. and simply resume operations as though the virus had not hit.
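The point-in-time restore that CDP makes possible can be illustrated with a time-stamped journal. The Python sketch below is a minimal model under simple assumptions, not a description of any CDP product: every change is appended to a journal in time order, and a restore replays the journal up to the chosen moment. The class name, record keys, and dates are hypothetical.

```python
from datetime import datetime


class ChangeJournal:
    """Append-only log of time-stamped changes, replayable to any moment."""

    def __init__(self) -> None:
        self.entries = []  # (timestamp, key, value), appended in time order

    def record(self, timestamp: datetime, key: str, value: str) -> None:
        self.entries.append((timestamp, key, value))

    def restore(self, point_in_time: datetime) -> dict:
        """Rebuild the data as it stood at point_in_time."""
        state = {}
        for timestamp, key, value in self.entries:
            if timestamp > point_in_time:
                break  # everything after this moment is ignored
            state[key] = value
        return state


journal = ChangeJournal()
journal.record(datetime(2024, 1, 1, 14, 10), "order-17", "paid")
journal.record(datetime(2024, 1, 1, 14, 25), "order-18", "shipped")
# The change written at 2:45 P.M. stands in for damage done by the virus.
journal.record(datetime(2024, 1, 1, 14, 45), "order-17", "CORRUPTED")

print(journal.restore(datetime(2024, 1, 1, 14, 30)))
# {'order-17': 'paid', 'order-18': 'shipped'}
```

A nightly snapshot could only return to the previous night's state, whereas the journal can stop at 2:30 P.M. or at any other moment before the damage.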

Backups and CDP ensure that important data are safe, but they do not guarantee the data can be used. The disaster recovery plan should include a documented and tested approach to recovery. The recovery plan should have specific goals for different types of disasters. For example, if the main database server was destroyed, how long should it take the organization to have the software and data back in operation by using the backups? Conversely, if the main data center was completely destroyed, how long should it take? The answers to these questions have very different implications for costs. Having a spare network server or a server with extra capacity that can be used in the event of the loss of the primary server is one thing. Having a spare data center ready to operate within 12 hours (for example) is an entirely different proposition.

Many organizations have a disaster recovery plan, but only a few test their plans. A disaster recovery drill is much like a fire drill in that it tests the disaster recovery plan and provides staff the opportunity to practice little-used skills to see what works and what doesn't work before a disaster happens and the staff must use the plan for real. Without regular disaster recovery drills, the only time a plan is tested is when it must be used. For example, when an island-wide blackout shut down all power in Bermuda, the backup generator in the British Caymanian Insurance office automatically took over and kept the company operating. However, the key-card security system, which was not on the generator, shut down, locking out all employees and forcing them to spend the day at the beach. No one had thought about the security system and the plan had not been tested.

Organizations are usually much better at backing up important data than are individual users. When did you last back up the data on your computer? What would you do if your computer was stolen or destroyed? There is an inexpensive alternative to CDP for home users. Online backup services such as mozy.com enable you to back up the data on your computer to their server on the Internet. You download and install client software that enables you to select what folders to back up. After you back up the data for the first time, which takes a while, the software will run every few hours and automatically back up all changes to the server, so you never have to think about backups again. If you need to recover some or all of your data, you can go to their Web site and download it.

Disaster Recovery Outsourcing

Most large organizations have a two-level disaster recovery plan. When they build networks they build enough capacity and have enough spare equipment to recover from a minor disaster such as loss of a major server or portion of the network (if any such disaster can truly be called minor). This is the first level. Building a network that has sufficient capacity to quickly recover from a major disaster such as the loss of an entire data center is beyond the resources of most firms. Therefore, most large organizations rely on professional disaster recovery firms to provide this second-level support for major disasters.

Many large firms outsource their disaster recovery efforts by hiring disaster recovery firms that provide a wide range of services. At the simplest, disaster recovery firms provide secure storage for backups. Full services include a complete networked data center that clients can use when they experience a disaster. Once a company declares a disaster, the disaster recovery firm immediately begins recovery operations using the backups stored onsite and can have the organization's entire data network back in operation on the disaster recovery firm's computer systems within hours. Full services are not cheap, but compared to the potential millions of dollars that can be lost per day from the inability to access critical data and application systems, these systems quickly pay for themselves in time of disaster.

10.5 DISASTER RECOVERY HITS HOME

MANAGEMENT FOCUS

“The building is on fire” were the first words she said as I answered the phone. It was just before noon and one of my students had called me from her office on the top floor of the business school at the University of Georgia. The roofing contractor had just started what would turn out to be the worst fire in the region in more than 20 years although we didn't know it then. I had enough time to gather up the really important things from my office on the ground floor (memorabilia, awards, and pictures from 10 years in academia) when the fire alarm went off. I didn't bother with the computer; all the files were backed up off-site.

Ten hours, 100 firefighters, and 1.5 million gallons of water later, the fire was out. Then our work began. The fire had completely destroyed the top floor of the building, including my 20-computer networking lab. Water had severely damaged the rest of the building, including my office, which, I learned later, had been flooded by almost 2 feet of water at the height of the fire. My computer, and virtually all the computers in the building, were damaged by the water and unusable.

My personal files were unaffected by the loss of the computer in my office; I simply used the backups and continued working—after making new backups and giving them to a friend to store at his house. The Web server I managed had been backed up to another server on the opposite side of campus 2 days before (on its usual weekly backup cycle), so we had lost only 2 days’ worth of changes. In less than 24 hours, our Web site was operational; I had our server's files mounted on the university library's Web server and redirected the university's DNS server to route traffic from our old server address to our new temporary home.

Unfortunately, the rest of our network did not fare as well. Our primary Web server had been backed up to tape the night before and while the tapes were stored off-site, the tape drive was not; the tape drive was destroyed and no one else on campus had one that could read our tapes; it took 5 days to get a replacement and reestablish the Web site. Within 30 days we were operating from temporary offices with a new network, and 90 percent of the office computers and their data had been successfully recovered.

Living through a fire changes a person. I'm more careful now about backing up my files, and I move ever so much more quickly when a fire alarm sounds. But I still can't get used to the rust that is slowly growing on my “recovered” computer.

__________

SOURCE: Alan Dennis

10.4 INTRUSION PREVENTION

Intrusion is the second main type of security problem and the one that tends to receive the most attention. No one wants an intruder breaking into their network.

There are four types of intruders who attempt to gain unauthorized access to computer networks. The first are casual intruders who have only a limited knowledge of computer security. They simply cruise along the Internet trying to access any computer they come across. Their unsophisticated techniques are the equivalent of trying doorknobs, and, until recently, only those networks that left their front doors unlocked were at risk. Unfortunately, there are now a variety of hacking tools available on the Internet that enable even novices to launch sophisticated intrusion attempts. Novice attackers who use such tools are sometimes called script kiddies.

The second type of intruders are experts in security, but their motivation is the thrill of the hunt. They break into computer networks because they enjoy the challenge and enjoy showing off for friends or embarrassing the network owners. These intruders are called hackers and often have a strong philosophy against ownership of data and software. Most cause little damage and make little attempt to profit from their exploits, but those that do can cause major problems. Hackers that cause damage are often called crackers.

The third type of intruder is the most dangerous. They are professional hackers who break into corporate or government computers for specific purposes, such as espionage, fraud, or intentional destruction. The U.S. Department of Defense (DoD), which routinely monitors attacks against U.S. military targets, has until recently concluded that most attacks come from individuals or small groups of hackers in the first two categories. While some of their attacks have been embarrassing (e.g., defacement of some military and intelligence Web sites), there have been no serious security risks. However, in the late 1990s the DoD noticed a small but growing set of intentional attacks that they classify as exercises, exploratory attacks designed to test the effectiveness of certain software attack weapons. Therefore, they established an information warfare program and a new organization responsible for coordinating the defense of military networks under the U.S. Space Command.

The fourth type of intruder is also very dangerous. These are organization employees who have legitimate access to the network, but who gain access to information they are not authorized to use. This information could be used for their own personal gain, sold to competitors, or fraudulently changed to give the employee extra income. Many security break-ins are caused by this type of intruder.

The key principle in preventing intrusion is to be proactive. This means routinely testing your security systems before an intruder does. Many steps can be taken to prevent intrusion and unauthorized access to organizational data and networks, but no network is completely safe. The best rule for high security is to do what the military does: Do not keep extremely sensitive data online. Data that need special security are stored in computers isolated from other networks. In the following sections, we discuss the most important security controls for preventing intrusion and for recovering from intrusion when it occurs.

10.4.1 Security Policy

In the same way that a disaster recovery plan is critical to controlling risks due to disruption, destruction, and disaster, a security policy is critical to controlling risk due to intrusion. The security policy should clearly define the important assets to be safeguarded and the important controls needed to do that. It should have a section devoted to what employees should and should not do. Also, it should contain a clear plan for routinely training employees—particularly end-users with little computer expertise—on key security rules and a clear plan for routinely testing and improving the security controls in place (Figure 10.9). A good set of examples and templates is available at www.sans.org/resources/policies.

10.4.2 Perimeter Security and Firewalls

Ideally, you want to stop external intruders at the perimeter of your network, so that they cannot reach the servers inside. There are three basic access points into most networks: the Internet, LANs, and WLANs. Recent surveys suggest that the most common access point for intrusion is the Internet connection (70 percent of organizations experienced an attack from the Internet), followed by LANs and WLANs (30 percent). External intruders are most likely to use the Internet connection, whereas internal intruders are most likely to use the LAN or WLAN. Because the Internet is the most common source of intrusions, the focus of perimeter security is usually on the Internet connection, although physical security is also important.

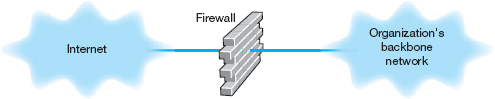

FIGURE 10.9 Elements of a security policy

A firewall is commonly used to secure an organization's Internet connection. A firewall is a router or special-purpose device that examines packets flowing into and out of a network and restricts access to the organization's network. The network is designed so that a firewall is placed on every network connection between the organization and the Internet (Figure 10.10). No access is permitted except through the firewall. Some firewalls have the ability to detect and prevent denial-of-service attacks, as well as unauthorized access attempts. Three commonly used types of firewalls are packet-level firewalls, application-level firewalls, and NAT firewalls.

FIGURE 10.10 Using a firewall to protect networks

Packet-Level Firewalls A packet-level firewall examines the source and destination address of every network packet that passes through it. It only allows packets into or out of the organization's networks that have acceptable source and destination addresses. In general, the addresses are examined only at the transport layer (TCP port id) and network layer (IP address). Each packet is examined individually, so the firewall has no knowledge of what packets came before. It simply chooses to permit entry or exit based on the contents of the packet itself. This type of firewall is the simplest and least secure because it does not monitor the contents of the packets or why they are being transmitted, and typically does not log the packets for later analysis.

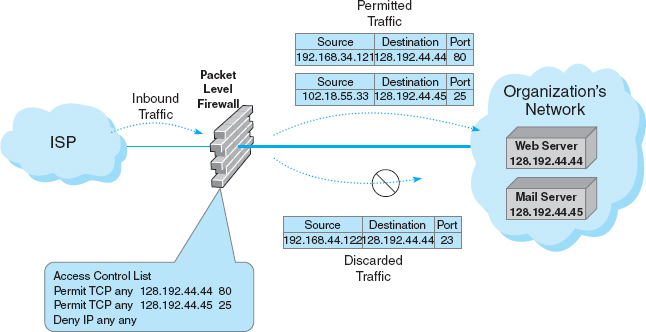

The network manager writes a set of rules (called an access control list [ACL]) for the packet-level firewall so it knows what packets to permit into the network and what packets to deny entry. Remember that the IP packet contains the source and destination IP addresses and that the TCP segment has the destination port number that identifies the application layer software to which the packet is going. Most application layer software on servers uses standard TCP port numbers. The Web (HTTP) uses port 80, whereas email (SMTP) uses port 25.

Suppose that the organization had a public Web server with an IP address of 128.192.44.44 and an email server with an address of 128.192.44.45 (see Figure 10.11). The network manager wants to make sure that no one outside of the organization can change the contents of the Web server (e.g., by using telnet or FTP). The ACL could be written to include a rule that permits the Web server to receive HTTP packets from the Internet (but other types of packets would be discarded). For example, the rule would say if the source address is anything, the destination IP address is 128.192.44.44 and the destination TCP port is 80, then permit the packet into the network; see the ACL on the firewall in Figure 10.11. Likewise, we could add a rule to the ACL that would permit SMTP packets to reach the email server: If the source address is anything, the destination is 128.192.44.45 and the destination TCP port is 25, then permit the packet through (see Figure 10.11). The last line in the ACL is usually a rule that says to deny entry to all other packets that have not been specifically permitted (some firewalls come automatically configured to deny all packets other than those explicitly permitted, so this command would not be needed). With this ACL, if an external intruder attempted to use telnet (port 23) to reach the Web server, the firewall would deny entry to the packet and simply discard it.
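The ACL just described can be sketched in a few lines of Python. This is only an illustration of first-match rule evaluation, not the configuration syntax of any real firewall; the names `Packet`, `Rule`, `ACL`, and `filter_packet` are hypothetical, and the rules test only the destination address and port, as in the example above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                 # "permit" or "deny"
    dst_ip: Optional[str]       # None means "any destination address"
    dst_port: Optional[int]     # None means "any destination port"

    def matches(self, pkt: Packet) -> bool:
        ip_ok = self.dst_ip is None or self.dst_ip == pkt.dst_ip
        port_ok = self.dst_port is None or self.dst_port == pkt.dst_port
        return ip_ok and port_ok


# The source address is "anything" in every rule, so it is not tested.
ACL: List[Rule] = [
    Rule("permit", "128.192.44.44", 80),   # HTTP to the Web server
    Rule("permit", "128.192.44.45", 25),   # SMTP to the email server
    Rule("deny", None, None),              # deny everything else
]


def filter_packet(pkt: Packet, acl: List[Rule] = ACL) -> str:
    """Return the action of the first rule that matches the packet."""
    for rule in acl:
        if rule.matches(pkt):
            return rule.action
    return "deny"  # default for firewalls that deny unless told otherwise
```

Each packet is judged on its own contents, with no memory of earlier packets. A telnet packet addressed to port 23 on 128.192.44.44 matches neither permit rule, falls through to the final rule, and is denied.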

FIGURE 10.11 How packet-level firewalls work

Although source IP addresses can be used in the ACL, they often are not used. Most hackers have software that can change the source IP address on the packets they send (called IP spoofing) so using the source IP address in security rules is not usually worth the effort. Some network managers do routinely include a rule in the ACL that denies entry to all packets coming from the Internet that have a source IP address of a subnet inside the organization, because any such packets must have a spoofed address and therefore obviously are an intrusion attempt.
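The anti-spoofing rule can be illustrated with Python's standard `ipaddress` module. The sketch assumes, purely for illustration, that the organization's internal subnet is 128.192.44.0/24; the subnet mask and the function name `is_spoofed_inbound` are not taken from the example above.

```python
import ipaddress

# Assumed internal subnet (hypothetical mask chosen for illustration).
INTERNAL_SUBNET = ipaddress.ip_network("128.192.44.0/24")


def is_spoofed_inbound(src_ip: str) -> bool:
    """True if a packet arriving from the Internet claims an internal source."""
    return ipaddress.ip_address(src_ip) in INTERNAL_SUBNET
```

A firewall applying this check to packets arriving on its Internet-facing interface would discard any packet for which the function returns true, because a genuine internal computer would never send traffic in from the outside.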

Application-Level Firewalls An application-level firewall is more expensive and more complicated to install and manage than a packet-level firewall, because it examines the contents of the application layer packet and searches for known attacks (see Security Holes later in this chapter). Application-level firewalls have rules for each application they can process. For example, most application-level firewalls can check Web packets (HTTP), email packets (SMTP), and other common protocols. In some cases, special rules must be written by the organization to permit the use of application software it has developed.

Remember from Chapter 5 that TCP uses connection-oriented messaging in which a client first establishes a connection with a server before beginning to exchange data. Application-level firewalls can use stateful inspection, which means that they monitor and record the status of each connection and can use this information in making decisions about what packets to discard as security threats.
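A heavily simplified sketch of stateful inspection follows. It assumes that the firewall records a connection when an internal client opens it and then admits inbound packets only if they belong to a recorded connection. Real firewalls track much more (for example, the TCP handshake and timeouts); the class `ConnectionTable` and its method names are hypothetical.

```python
from typing import Set, Tuple

# (internal ip, internal port, external ip, external port)
Conn = Tuple[str, int, str, int]


class ConnectionTable:
    """Remembers which connections are currently open."""

    def __init__(self) -> None:
        self._open: Set[Conn] = set()

    def record_outbound(self, int_ip: str, int_port: int,
                        ext_ip: str, ext_port: int) -> None:
        # An internal client has opened a connection to an external server.
        self._open.add((int_ip, int_port, ext_ip, ext_port))

    def record_close(self, int_ip: str, int_port: int,
                     ext_ip: str, ext_port: int) -> None:
        # The connection has ended; later packets no longer belong to it.
        self._open.discard((int_ip, int_port, ext_ip, ext_port))

    def permit_inbound(self, ext_ip: str, ext_port: int,
                       int_ip: str, int_port: int) -> bool:
        # An inbound packet is a reply only if its endpoints, reversed,
        # match a connection that is still open.
        return (int_ip, int_port, ext_ip, ext_port) in self._open
```

Unlike the packet-level approach, the decision here depends on what came before: the same inbound packet is permitted while its connection is open and discarded once the connection has closed.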

Many application-level firewalls prohibit external users from uploading executable files. In this way, intruders (or authorized users) cannot modify any software unless they have physical access to the firewall. Some refuse changes to their software unless it is done by the vendor. Others also actively monitor their own software and automatically disable outside connections if they detect any changes.

Network Address Translation Firewalls Network address translation (NAT) is the process of converting between one set of public IP addresses that are viewable from the Internet and a second set of private IP addresses that are hidden from people outside of the organization. NAT is transparent, in that no computer knows it is happening. While NAT can be done for several reasons, the most common reason is security. If external intruders on the Internet can't see the private IP addresses inside your organization, they can't attack your computers. Most routers and firewalls today have NAT built into them, even inexpensive routers designed for home use.

The NAT firewall uses an address table to translate the private IP addresses used inside the organization into proxy IP addresses used on the Internet. When a computer inside the organization accesses a computer on the Internet, the firewall changes the source IP address in the outgoing IP packet to its own address. It also sets the source port number in the TCP segment to a unique number that it uses as an index into its address table to find the IP address of the actual sending computer in the organization's internal network. When the external computer responds to the request, it addresses the message to the firewall's IP address. The firewall receives the incoming message, and after ensuring the packet should be permitted inside, changes the destination IP address to the private IP address of the internal computer and changes the TCP port number to the correct port number before transmitting it on the internal network.
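The address table can be sketched as a pair of dictionaries. This is a minimal illustration under stated assumptions, not a description of any particular product: the class `NatFirewall`, its method names, and the starting index port 5000 are hypothetical, and the step of checking whether an inbound packet should be permitted at all is reduced to a table lookup.

```python
import itertools
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, TCP port)


class NatFirewall:
    def __init__(self, public_ip: str, first_port: int = 5000) -> None:
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)   # next unused index port
        self._by_port: Dict[int, Endpoint] = {}     # index port -> internal endpoint
        self._by_client: Dict[Endpoint, int] = {}   # internal endpoint -> index port

    def outbound(self, src: Endpoint, dst: Endpoint) -> Tuple[Endpoint, Endpoint]:
        """Rewrite the source of an outgoing packet; the destination is unchanged."""
        port = self._by_client.get(src)
        if port is None:
            port = next(self._ports)
            self._by_client[src] = port
            self._by_port[port] = src
        return (self.public_ip, port), dst

    def inbound(self, src: Endpoint,
                dst: Endpoint) -> Optional[Tuple[Endpoint, Endpoint]]:
        """Rewrite the destination of a reply, or return None to discard it."""
        dst_ip, dst_port = dst
        client = self._by_port.get(dst_port)
        if dst_ip != self.public_ip or client is None:
            return None
        return src, client
```

For example, a packet from an internal client at 10.3.3.55 leaves the firewall carrying the firewall's own address and an index port, and the reply addressed to that index port is mapped back to the client. A reply addressed to a port with no table entry is simply dropped.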

This way systems outside the organization never see the actual internal IP addresses, and thus they think there is only one computer on the internal network. Most organizations also increase security by using illegal internal addresses. For example, if the organization has been assigned the Internet 128.192.55.X address domain, the NAT firewall would be assigned an address such as 128.192.55.1. Internal computers, however, would not be assigned addresses in the 128.192.55.X subnet. Instead, they would be assigned unauthorized Internet addresses such as 10.3.3.55 (addresses in the 10.X.X.X domain are not assigned to organizations but instead are reserved for use by private intranets). Because these internal addresses are never used on the Internet but are always converted by the firewall, this poses no problems for the users. Even if attackers discover the actual internal IP address, it would be impossible for them to reach the internal address from the Internet because the addresses could not be used to reach the organization's computers.8
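Whether an address falls in a range reserved for private use can be checked with Python's standard `ipaddress` module. The sketch below lists the 10.X.X.X range mentioned above together with the two other private ranges defined in RFC 1918 (172.16.0.0/12 and 192.168.0.0/16), which the text does not mention; the function name `is_private_address` is hypothetical.

```python
import ipaddress

# Private-use IPv4 ranges from RFC 1918; only the first appears in the text.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_address(addr: str) -> bool:
    """True if addr lies in one of the reserved private ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in PRIVATE_RANGES)
```

Applied to the example, the internal client address 10.3.3.55 is private, while the firewall's address 128.192.55.1 is not.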

Firewall Architecture Many organizations use layers of NAT, packet-level, and application-level firewalls (Figure 10.12). Packet-level firewalls are used as an initial screen from the Internet into a network devoted solely to servers intended to provide public access (e.g., Web servers, public DNS servers). This network is sometimes called the DMZ (demilitarized zone) because it contains the organization's servers but does not provide complete security for them. This packet-level firewall will permit Web requests and similar access to the DMZ network servers but will deny FTP access to these servers from the Internet because no one except internal users should have the right to modify the servers. Each major portion of the organization's internal networks has its own NAT firewall to grant (or deny) access based on rules established by that part of the organization.

This figure also shows how a packet sent by a client computer inside one of the internal networks protected by a NAT firewall would flow through the network. The packet created by the client has the client's false source address and the source port number of the process on the client that generated the packet (an HTTP packet going to a Web server, as you can tell from the destination port address of 80). When the packet reaches the firewall, the firewall changes the source address on the IP packet to its own address and changes the source port number to an index it will use to identify the client computer's address and port number. The destination address and port number are unchanged. The firewall then sends the packet on its way to the destination. When the destination Web server responds to this packet, it will respond using the firewall's address and port number. When the firewall receives the incoming packets it will use the destination port number to identify what IP address and port number to use inside the internal network, change the inbound packet's destination and port number, and send it into the internal network so it reaches the client computer.

FIGURE 10.12 A typical network design using firewalls

Physical Security One important element to prevent unauthorized users from accessing an internal LAN is physical security: preventing outsiders from gaining access into the organization's offices, server room, or network equipment facilities. Both main and remote physical facilities should be secured adequately and have the proper controls. Good security requires implementing the proper access controls so that only authorized personnel can enter closed areas where servers and network equipment are located or access the network. The network components themselves also have a level of physical security. Computers can have locks on their power switches or passwords that disable the screen and keyboard.

In the previous section we discussed the importance of locating backups and servers at separate (off-site) locations. Some companies have also argued that by having many servers in different locations you can reduce your risk and improve business continuity. Does having many servers disperse risk, or does it increase the points of vulnerability? A clear disaster recovery plan with an off-site backup and server facility can disperse risk, just as distributed server systems can. However, distributed servers offer many more physical vulnerabilities to an attacker: more machines to guard, upgrade, patch, and defend. Often these dispersed machines are all part of the same logical domain, which means that breaking into one of them can give the attacker access to the resources of the others. It is our feeling that a well backed-up, centralized data center can be made inherently more secure than a proliferated base of servers.

Proper security education, background checks, and the implementation of error and fraud controls are also very important. In many cases, the simplest means to gain access is to become employed as a janitor and access the network at night. In some ways this is easier than the previous methods because the intruder only has to insert a listening device or computer into the organization's network to record messages. Three areas are vulnerable to this type of unauthorized access: wireless LANs, network cabling, and network devices.

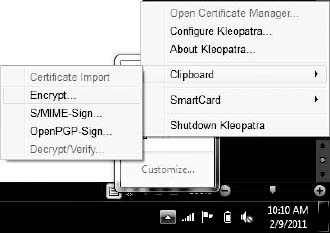

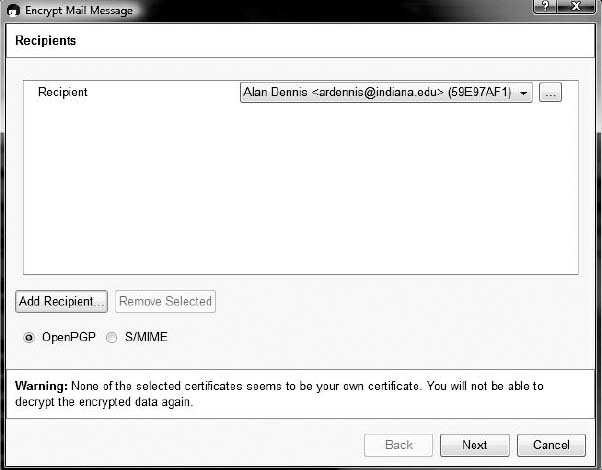

Wireless LANs are the easiest target for eavesdropping because they often reach beyond the physical walls of the organization. Chapter 6 discussed the techniques of WLAN security, so we do not repeat them here.