CHAPTER 1

INTRODUCTION TO DATA COMMUNICATIONS

THIS CHAPTER introduces the basic concepts of data communications and shows how we have progressed from paper-based systems to modern computer networks. It begins by describing why it is important to study data communications and how the invention of the telephone, the computer, and the Internet has transformed the way we communicate. Next, the basic types and components of a data communication network are discussed. The importance of a network model based on layers and the importance of network standards are examined. The chapter concludes with an overview of three key trends in the future of networking.

OBJECTIVES

- Be aware of the history of communications, information systems, and the Internet

- Be aware of the applications of data communication networks

- Be familiar with the major components of and types of networks

- Understand the role of network layers

- Be familiar with the role of network standards

- Be aware of three key trends in communications and networking

CHAPTER OUTLINE

1.1.1 A Brief History of Communications in North America

1.1.2 A Brief History of Information Systems

1.1.3 A Brief History of the Internet

1.2 DATA COMMUNICATIONS NETWORKS

1.3.1 Open Systems Interconnection Reference Model

1.3.3 Message Transmission Using Layers

1.4.1 The Importance of Standards

1.4.2 The Standards-Making Process

1.5.2 The Integration of Voice, Video, and Data

1.5.3 New Information Services

1.6 IMPLICATIONS FOR MANAGEMENT

1.1 INTRODUCTION

What Internet connection should you use? Cable modem or DSL (formally called Digital Subscriber Line)? Cable modems are supposedly six times faster than DSL, providing data speeds of 10 Mbps (million bits per second) to DSL's 1–5 Mbps. One cable company used a tortoise to represent DSL in advertisements. So which is faster? We'll give you a hint. Which won the race in the fable, the tortoise or the hare? By the time you finish this book, you'll understand which is faster and why, as well as why choosing the right company as your Internet service provider (ISP) is probably more important than choosing the right technology.

Over the past decade or so, it has become clear that the world has changed forever. We continue to forge our way through the Information Age—the second Industrial Revolution, according to John Chambers, CEO (chief executive officer) of Cisco Systems, Inc., one of the world's leading networking technology companies. The first Industrial Revolution revolutionized the way people worked by introducing machines and new organizational forms. New companies and industries emerged and old ones died off.

The second Industrial Revolution is revolutionizing the way people work through networking and data communications. The value of a high-speed data communication network is that it brings people together in a way never before possible. In the 1800s, it took several weeks for a message to reach North America by ship from England. By the 1900s, it could be transmitted within the hour. Today, it can be transmitted in seconds. Collapsing the information lag to Internet speeds means that people can communicate and access information anywhere in the world regardless of their physical location. In fact, today's problem is that we cannot handle the quantities of information we receive.

Data communications and networking is a truly global area of study, both because the technology enables global communication and because new technologies and applications often emerge from a variety of countries and spread rapidly around the world. The World Wide Web, for example, was born in a Swiss research lab, was nurtured through its first years primarily by European universities, and exploded into mainstream popular culture because of a development at an American research lab.

One of the problems in studying a global phenomenon lies in explaining the different political and regulatory issues that have evolved and currently exist in different parts of the world. Rather than attempt to explain the different paths taken by different countries, we have chosen simplicity instead. Historically, the majority of readers of previous editions of this book have come from North America. Therefore, although we retain a global focus on technology and its business implications, we focus exclusively on North America in describing the political and regulatory issues surrounding communications and networking. We do, however, take care to discuss technological or business issues where fundamental differences exist between North America and the rest of the world (e.g., ISDN [integrated services digital network]) (see Chapter 8).

One of the challenges in studying data communications and networking is that there are many perspectives that can be used. We begin by examining the fundamental concepts of data communications and networking. These concepts explain how data is moved from one computer to another over a network, and represent the fundamental “theory” of how networks operate. The second perspective is from the viewpoint of the technologies in use today—how these theories are put into practice in specific products. From this perspective, we examine how these different technologies work, and when to use which type of technology. The third perspective examines the management of networking technologies, including security, network design, and managing the network on a day-to-day and long-term basis.

In our experience, many people would rather skip over the fundamental concepts, and jump immediately into the network technologies. After all, an understanding of today's technologies is perhaps the most practical aspect of this book. However, network technologies change, and an understanding of the fundamental concepts enables you to better understand new technologies, even though you have not studied them directly.

1.1.1 A Brief History of Communications in North America

Today we take data communications for granted, but it was pioneers like Samuel Morse, Alexander Graham Bell, and Thomas Edison who developed the basic electrical and electronic systems that ultimately evolved into voice and data communication networks.

In 1837, Samuel Morse exhibited a working telegraph system; today we might consider it the first electronic data communication system. In 1841, a Scot named Alexander Bain used electromagnets to synchronize school clocks. Two years later, he patented a printing telegraph—the predecessor of today's fax machines. In 1874, Alexander Graham Bell developed the concept for the telephone at his father's home in Brantford, Ontario, Canada, but it would take him and his assistant, Tom Watson, another two years of work in Boston to develop the first telephone capable of transmitting understandable conversation in 1876. Later that year, Bell made the first long-distance call (about 10 miles) from Paris, Ontario, to his father in Brantford.

1.1 CAREER OPPORTUNITIES

MANAGEMENT FOCUS

It's a great time to be in information technology even after the technology bust. The technology-fueled new economy has dramatically increased the demand for skilled information technology (IT) professionals. The U.S. Bureau of Labor Statistics estimates that the number of IT-related jobs will increase 15%–20% by 2018. IT employers have responded: Salaries have risen rapidly. Annual starting salaries for undergraduates at Indiana University range from $50,000 to $65,000. Although all areas of IT have shown rapid growth, the fastest salary growth has been for those with skills in Internet development, networking, and telecommunications. People with a few years of experience in these areas can make $65,000 to $90,000, not counting bonuses.

The demand for networking expertise is growing for two reasons. First, Internet and communication deregulation has significantly changed how businesses operate and has spawned thousands of small start-up companies. Second, a host of new hardware and software innovations have significantly changed the way networking is done.

These trends and the shortage of qualified network experts have also led to the rise in certification. Most large vendors of network technologies, such as Microsoft Corporation and Cisco Systems, Inc., provide certification processes (usually a series of courses and formal exams) so that individuals can document their knowledge. Certified network professionals often earn $10,000 to $15,000 more than similarly skilled uncertified professionals—provided they continue to learn and maintain their certification as new technologies emerge.

__________

SOURCES: Payscale.com, Bureau of Labor Statistics (2011)

When the telephone arrived, it was greeted by both skepticism and adoration, but within five years, it was clear to all that the world had changed. To meet the demand, Bell started a company in the United States, and his father started a company in Canada. In 1879, the first private manual telephone switchboard (private branch exchange, or PBX) was installed. By 1880, the first pay telephone was in use. The telephone became a way of life, because anyone could call from public telephones. The certificate of incorporation for the American Telephone and Telegraph Company (AT&T) was registered in 1885. By 1889, AT&T had a recognized logo in the shape of the Liberty Bell with the words Long-Distance Telephone written on it.

In 1892, the Canadian government began regulating telephone rates. By 1910, the Interstate Commerce Commission (ICC) had the authority to regulate interstate telephone businesses in the United States. In 1934, this was transferred to the Federal Communications Commission (FCC).

The first transcontinental telephone service and the first transatlantic voice connections were both established in 1915. The telephone system grew so rapidly that by the early 1920s, there were serious concerns that even with the introduction of dial telephones (which eliminated the need for operators to make simple calls) there would not be enough trained operators to work the manual switchboards. Experts predicted that by 1980, every single woman in North America would have to work as a telephone operator if growth in telephone usage continued at the current rate. (At the time, all telephone operators were women.)

The first commercial microwave link for telephone transmission was established in Canada in 1948. In 1951, the first direct long-distance dialing without an operator began. The first international satellite telephone call was sent over the Telstar I satellite in 1962. By 1965, there was widespread use of commercial international telephone service via satellite. Fax services were introduced in 1962. Touch-tone telephones were first marketed in 1963. Picturephone service, which allowed users to see as well as talk with one another, began operating in 1969. The first commercial packet-switched network for computer data was introduced in 1976.

Until 1968, Bell Telephone/AT&T controlled the U.S. telephone system. No telephones or computer equipment other than those made by Bell Telephone could be connected to the phone system and only AT&T could provide telephone services. In 1968, after a series of lawsuits, the Carterfone court decision allowed non-Bell equipment to be connected to the Bell System network. This important milestone permitted independent telephone and modem manufacturers to connect their equipment to U.S. telephone networks for the first time.

Another key decision in 1970 permitted MCI to provide limited long-distance service in the United States in competition with AT&T. Throughout the 1970s, there were many arguments and court cases over the monopolistic position that AT&T held over U.S. communication services. On January 1, 1984, AT&T was divided into two parts under a consent decree devised by a federal judge. The first part, AT&T, provided long-distance telephone services in competition with other interexchange carriers (IXCs) such as MCI and Sprint. The second part, a series of seven regional Bell operating companies (RBOCs) or local exchange carriers (LECs), provided local telephone services to homes and businesses. AT&T was prohibited from providing local telephone services, and the RBOCs were prohibited from providing long-distance services. Intense competition began in the long-distance market as MCI, Sprint, and a host of other companies began to offer services and dramatically cut prices under the watchful eye of the FCC. Competition was prohibited in the local telephone market, so the RBOCs remained a regulated monopoly under the control of a multitude of state laws. The Canadian long-distance market was opened to competition in 1992.

During 1983 and 1984, traditional radio telephone calls were supplanted by the newer cellular telephone networks. In the 1990s, cellular telephones became commonplace and shrank to pocket size. Demand grew so much that in some cities (e.g., New York and Atlanta), it became difficult to get a dial tone at certain times of the day.

In February 1996, the U.S. Congress enacted the Telecommunications Competition and Deregulation Act of 1996. The act replaced all current laws, FCC regulations, and the 1984 consent decree and subsequent court rulings under which AT&T was broken up. It also overruled all existing state laws and prohibited states from introducing new laws. Practically overnight, the local telephone industry in the United States went from a highly regulated and legally restricted monopoly to multiple companies engaged in open competition.

Today, local and long-distance service in the United States is open for competition. The common carriers (RBOCs, IXCs, cable TV companies, and other LECs) are permitted to build their own local telephone facilities and offer services to customers. To increase competition, the RBOCs must sell their telephone services at wholesale prices to their competitors, who can then resell them to consumers at retail prices. Most analysts expected the big IXCs (e.g., AT&T) to quickly charge into the local telephone market, but they were slow to move. Meanwhile, the RBOCs have been aggressively fighting court battles to keep competitors out of their local telephone markets and merging with each other and with the IXCs.

Virtually all RBOCs, LECs, and IXCs have aggressively entered the Internet market. Today, there are thousands of ISPs who provide broadband access to the Internet to millions of small business and home users. Most of these are small companies that lease telecommunications circuits from the RBOCs, LECs, and IXCs and use them to provide Internet access to their customers. As the RBOCs, LECs, and IXCs continue to move into the Internet market and provide the same services directly to consumers, the smaller ISPs are facing heavy competition.

International competition has also been heightened by an international agreement signed in 1997 by 68 countries to deregulate (or at least lessen regulation in) their telecommunications markets. The countries agreed to permit foreign firms to compete in their internal telephone markets. Major U.S. firms (e.g., AT&T, BellSouth Corporation) now offer telephone service in many of the industrialized and emerging countries in North America, South America, Europe, and Asia. Likewise, overseas telecommunications giants (e.g., British Telecom) are beginning to enter the U.S. market. This should increase competition in the United States, but the greatest effect is likely to be felt in emerging countries. For example, it costs more to use a telephone in India than it does in the United States.

1.1.2 A Brief History of Information Systems

The natural evolution of information systems in business, government, and home use has forced the widespread use of data communication networks to interconnect various computer systems. However, data communications has not always been considered important.

In the 1950s, computer systems used batch processing, and users carried their punched cards to the computer for processing. By the 1960s, data communication across telephone lines became more common. Users could type their own batches of data for processing using online terminals. Data communications involved the transmission of messages from these terminals to a large central mainframe computer and back to the user.

During the 1970s, online real-time systems were developed that moved the users from batch processing to single transaction-oriented processing. Database management systems replaced the older file systems, and integrated systems were developed in which the entry of an online transaction in one business system (e.g., order entry) might automatically trigger transactions in other business systems (e.g., accounting, purchasing). Computers entered the mainstream of business, and data communications networks became a necessity.

The 1980s witnessed the personal computer revolution. At first, personal computers were isolated from the major information systems applications, serving the needs of individual users (e.g., spreadsheets). As more people began to rely on personal computers for essential applications, the need for networks to exchange data among personal computers and between personal computers and large mainframe computers became clear. By the early 1990s, more than 60 percent of all personal computers in U.S. corporations were networked—connected to other computers.

Today, the personal computer has evolved from a small, low-power computer into a very powerful, easy-to-use system with a large amount of low-cost software. Today's personal computers have more raw computing power than a mainframe of the 1990s. Perhaps more surprisingly, corporations today have far more total computing power sitting on desktops in the form of personal computers than they have in their large central mainframe computers.

The most important aspect of computers is networking. The Internet is everywhere, and virtually all computers are networked. Most corporations have built distributed systems in which information system applications are divided among a network of computers. This form of computing, called client-server computing, dramatically changes the way information systems professionals and users interact with computers. The office that interconnects personal computers, mainframe computers, fax machines, copiers, videoconferencing equipment, and other equipment has put tremendous demands on data communications networks.

These networks already have had a dramatic impact on the way business is conducted. Networking played a key role—among many other factors—in the growth of Wal-Mart Stores, Inc., into one of the largest forces in the North American retail industry. That process has transformed the retailing industry. Wal-Mart has dozens of mainframes and thousands of network file servers, personal computers, handheld inventory computers, and networked cash registers. (As an aside, it is interesting to note that every single personal computer built by IBM in the United States during the third quarter of 1997 was purchased by Wal-Mart.) At the other end of the spectrum, the lack of a sophisticated data communications network was one of the key factors in the bankruptcy of Macy's in the 1990s.

In retail sales, a network is critical for managing inventory. Macy's had a traditional 1970s inventory system. At the start of the season, buyers would order products in large lots to get volume discounts. Some products would be very popular and sell out quickly. When the sales clerks did a weekly inventory and noticed the shortage, they would order more. If the items were not available in the warehouse (and very popular products were often not available), it would take six to eight weeks to restock them. Customers would buy from other stores, and Macy's would lose the sales. Other products, also bought in large quantities, would be unpopular and have to be sold at deep discounts.

In contrast, Wal-Mart negotiates volume discounts with suppliers on the basis of total purchases but does not specify particular products. Buyers place initial orders in small quantities. Each time a product is sold, the sale is recorded. Every day or two, the complete list of purchases is transferred over the network (often via a satellite) to the head office, a distribution center, or the supplier. Replacements for the products sold are shipped almost immediately and typically arrive within days. The result is that Wal-Mart seldom has a major problem with overstocking an unwanted product or running out of a popular product (unless, of course, the supplier is unable to produce it fast enough).

1.1.3 A Brief History of the Internet

The Internet is one of the most important developments in the history of both information systems and communication systems because it is both an information system and a communication system. The Internet was started by the U.S. Department of Defense in 1969 as a network of four computers called ARPANET. Its goal was to link a set of computers operated by several universities doing military research. The original network grew as more computers and more computer networks were linked to it. By 1974, there were 62 computers attached. In 1983, the Internet split into two parts, one dedicated solely to military installations (called Milnet) and one dedicated to university research centers (called the Internet) that had just under 1,000 servers.

In 1985, the Canadian government completed its leg of BITNET to link all Canadian universities from coast to coast and provided connections into the American Internet. (BITNET is a competing network to the Internet developed by the City University of New York and Yale University that uses a different approach.) In 1986, the National Science Foundation in the United States created NSFNET to connect leading U.S. universities. By the end of 1987, there were 10,000 servers on the Internet and 1,000 on BITNET.

Performance began to slow down due to increased network traffic, so in 1987, the National Science Foundation decided to improve performance by building a new high-speed backbone network for NSFNET. It leased high-speed circuits from several IXCs and in 1988 connected 13 regional Internet networks containing 170 LANs (local area networks) and 56,000 servers. The National Research Council of Canada followed in 1989 and replaced BITNET with a high-speed network called CA*net that used the same communication language as the Internet. By the end of 1989, there were almost 200,000 servers on the combined U.S. and Canadian Internet.

Similar initiatives were undertaken by most other countries around the world, so that by the early 1990s, most of the individual country networks were linked together into one worldwide network of networks. Each of these individual country networks was distinct (each had its own name, access rules, and fee structures), but all networks used the same standards as the U.S. Internet network so they could easily exchange messages with one another. Gradually, the distinctions among the networks in each of the countries began to disappear, and the U.S. name, the Internet, began to be used to mean the entire worldwide network of networks connected to the U.S. Internet. By the end of 1992, there were more than 1 million servers on the Internet.

1.2 NETWORKS IN THE FIRST GULF WAR

MANAGEMENT FOCUS

The lack of a good network can cost more than money. During Operation Desert Shield/Desert Storm, the U.S. Army, Navy, and Air Force lacked one integrated logistics communications network. Each service had its own series of networks, making communication and cooperation difficult. But communication among the systems was essential. Each day a navy aircraft would fly into Saudi Arabia to exchange diskettes full of logistics information with the army—an expensive form of “wireless” networking.

This lack of an integrated network also created problems transmitting information from the United States into the Persian Gulf. More than 60 percent of the containers of supplies arrived without documentation. They had to be unloaded for someone to see what was in them and then reloaded for shipment to combat units.

The logistics information systems and communication networks experienced such problems that some Air Force units were unable to quickly order and receive critical spare parts needed to keep planes flying. Officers telephoned the U.S.-based suppliers of these parts and instructed them to send the parts via FedEx.

Fortunately, the war did not start until the United States and its allies were prepared. Had Iraq attacked, things might have turned out differently.

Originally, commercial traffic was forbidden on the Internet (and on the other individual country networks) because the key portions of these networks were funded by the various national governments and research organizations. In the early 1990s, commercial networks began connecting into NSFNET, CA*net, and the other government-run networks in each country. New commercial online services began offering access to anyone willing to pay, and a connection into the worldwide Internet became an important marketing issue. The growth in the commercial portion of the Internet was so rapid that it quickly overshadowed university and research use. In 1994, with more than 4 million servers on the Internet (most of which were commercial), the U.S. and Canadian governments stopped funding their few remaining circuits and turned them over to commercial firms. Most other national governments soon followed. The Internet had become commercial.

The Internet has continued to grow at a dramatic pace. No one knows exactly how large the Internet is, but estimates suggest there are more than 800 million servers on the Internet, which is still growing rapidly (see www.isc.org). In the mid-1990s, most Internet users were young (under 35 years old) and male, but as the Internet matures, its typical user becomes closer to the underlying average in the population as a whole (i.e., older and more evenly split between men and women). In fact, the fastest growing segment of Internet users is retirees.

One issue now facing the Internet is net neutrality. Net neutrality means that for a given type of content (e.g., email, web, video, music), all content providers are treated the same. For example, with net neutrality, all videos on the Internet would be transmitted at the same speed, regardless of whether they came from YouTube, Hulu, or CNN. Without net neutrality, Internet service providers can give priority to some content providers and slow down others. As we write this, some ISPs have admitted to taking payments from large media companies in return for speeding up video from their sites and slowing down—or even blocking—video from their competitors. Needless to say, many ISPs are strongly opposed to net neutrality—or a “government takeover of the Internet” as they put it—because with net neutrality they can no longer demand payments from content providers. Net neutrality will become more important as wireless Internet access increases and more people use their mobile phones to access the Internet.

1.2 DATA COMMUNICATIONS NETWORKS

Data communications is the movement of computer information from one point to another by means of electrical or optical transmission systems. Such systems are often called data communications networks. This is in contrast to the broader term telecommunications, which includes the transmission of voice and video (images and graphics) as well as data and usually implies longer distances. In general, data communications networks collect data from personal computers and other devices and transmit that data to a central server that is a more powerful personal computer, minicomputer, or mainframe, or they perform the reverse process, or some combination of the two. Data communications networks facilitate more efficient use of computers and improve the day-to-day control of a business by providing faster information flow. They also provide message transfer services to allow computer users to talk to one another via email, chat, and video streaming.

1.1 INTERNET DOMAIN NAMES

TECHNICAL FOCUS

Internet address names are strictly controlled; otherwise, someone could add a computer to the Internet that had the same address as another computer. Each address name has two parts, the computer name and its domain. The general format of an Internet address is therefore computer.domain. Some computer names have several parts separated by periods, so some addresses have the format computer.computer.computer.domain. For example, the main university Web server at Indiana University (IU) is called www.indiana.edu, whereas the Web server for the Kelley School of Business at IU is www.kelley.indiana.edu.

Since the Internet began in the United States, the American address board was the first to assign domain names to indicate types of organization. Some common U.S. domain names are

| Domain | Used for |
| --- | --- |
| EDU | an educational institution, usually a university |
| COM | a commercial business |
| GOV | a government department or agency |
| MIL | a military unit |
| ORG | a nonprofit organization |

As networks in other countries were connected to the Internet, they were assigned their own domain names. Some international domain names are

| Domain | Country |
| --- | --- |
| CA | Canada |
| AU | Australia |
| UK | the United Kingdom |
| DE | Germany |

New top-level domains that focus on specific types of businesses continue to be introduced, such as

| Domain | Used for |
| --- | --- |
| AERO | aerospace companies |
| MUSEUM | museums |
| NAME | individuals |
| PRO | professionals, such as accountants and lawyers |
| BIZ | businesses |

Many international domains structure their addresses in much the same way as the United States does. For example, Australia uses EDU to indicate academic institutions, so an address such as xyz.edu.au would indicate an Australian university.

For a full list of domain names, see www.iana.org/root/db.
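The computer.domain format described in this box can be illustrated with a short Python sketch. It is only an illustration: the lookup tables contain just the domains listed above, and the function name `describe_address` and the shape of its result are assumptions made for this example, not part of any Internet standard or library.

```python
# Lookup tables built only from the domains listed in this box (not a full list).
ORGANIZATION_DOMAINS = {
    "edu": "educational institution",
    "com": "commercial business",
    "gov": "government department or agency",
    "mil": "military unit",
    "org": "nonprofit organization",
}
COUNTRY_DOMAINS = {
    "ca": "Canada",
    "au": "Australia",
    "uk": "United Kingdom",
    "de": "Germany",
}


def describe_address(address):
    """Split an address into period-separated parts and describe its domain."""
    labels = address.lower().split(".")
    country = COUNTRY_DOMAINS.get(labels[-1])
    if country is not None and len(labels) >= 2:
        # Country domain such as xyz.edu.au: the organization type,
        # if present, sits just before the country code.
        org_type = ORGANIZATION_DOMAINS.get(labels[-2])
        computer = labels[:-2] if org_type else labels[:-1]
    else:
        # U.S.-style address: the last part is the organization domain.
        org_type = ORGANIZATION_DOMAINS.get(labels[-1])
        computer = labels[:-1]
    return {"computer": computer, "organization": org_type, "country": country}


print(describe_address("www.kelley.indiana.edu"))
print(describe_address("xyz.edu.au"))
```

For www.kelley.indiana.edu the sketch reports three computer-name parts and an educational institution; for xyz.edu.au it reports an educational institution in Australia. Real address handling involves many more domains than this box lists.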

1.2.1 Components of a Network

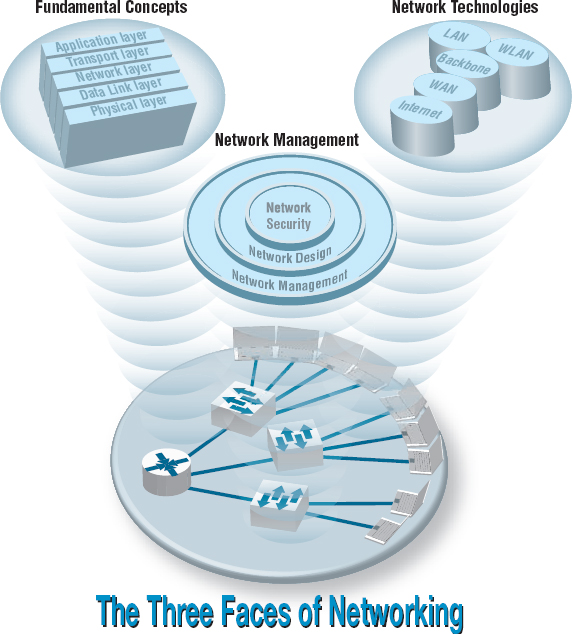

There are three basic hardware components for a data communications network: a server (e.g., personal computer, mainframe), a client (e.g., personal computer, terminal), and a circuit (e.g., cable, modem) over which messages flow. Both the server and client also need special-purpose network software that enables them to communicate.

The server stores data or software that can be accessed by the clients. In client-server computing, several servers may work together over the network with a client computer to support the business application.

The client is the input-output hardware device at the user's end of a communication circuit. It typically provides users with access to the network and the data and software on the server.

The circuit is the pathway through which the messages travel. It is typically a copper wire, although fiber-optic cable and wireless transmission are becoming common. Many devices in the circuit, such as switches and routers, perform special functions.
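The three components can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular network product works: the file name and contents in `SERVER_FILES` are made up, the function names are hypothetical, and a connected socket pair on one machine stands in for the circuit.

```python
import socket
import threading

# Hypothetical data stored on the server; the name and contents are made up.
SERVER_FILES = {"inventory.txt": "widgets: 42"}


def serve_one_request(conn):
    """Server: read one file name from the circuit and send back its contents."""
    with conn:
        # Short messages are assumed to arrive in a single recv() call.
        name = conn.recv(1024).decode("utf-8")
        reply = SERVER_FILES.get(name, "ERROR: no such file")
        conn.sendall(reply.encode("utf-8"))


def request_file(conn, name):
    """Client: send a file name over the circuit and return the server's reply."""
    with conn:
        conn.sendall(name.encode("utf-8"))
        return conn.recv(1024).decode("utf-8")


def run_demo(name):
    # socketpair() returns two connected endpoints, standing in for the circuit.
    client_end, server_end = socket.socketpair()
    server = threading.Thread(target=serve_one_request, args=(server_end,))
    server.start()
    reply = request_file(client_end, name)
    server.join()
    return reply


print(run_demo("inventory.txt"))
```

The client knows nothing about how the server stores its data; it only sends a request over the circuit and reads the reply, which is the division of labor described above.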

Strictly speaking, a network does not need a server. Some networks are designed to connect a set of similar computers that share their data and software with each other. Such networks are called peer-to-peer networks because the computers function as equals, rather than relying on a central server to store the needed data and software.

Figure 1.1 shows a small network that has four personal computers (clients) connected by a switch and cables (circuit). In this network, messages move through the switch to and from the computers. All computers share the same circuit and must take turns sending messages. The router is a special device that connects two or more networks. The router enables computers on this network to communicate with computers on other networks (e.g., the Internet).

The network in Figure 1.1 has three servers. Although one server can perform many functions, networks are often designed so that a separate computer is used to provide different services. The file server stores data and software that can be used by computers on the network. The print server, which is connected to a printer, manages all printing requests from the clients on the network. The Web server stores documents and graphics that can be accessed from any Web browser, such as Internet Explorer. The Web server can respond to requests from computers on this network or any computer on the Internet. Servers are usually personal computers (often more powerful than the other personal computers on the network) but may be minicomputers or mainframes.

1.2.2 Types of Networks

There are many different ways to categorize networks. One of the most common ways is to look at the geographic scope of the network. Figure 1.2 illustrates four types of networks: local area networks (LANs), backbone networks (BNs), metropolitan area networks (MANs), and wide area networks (WANs). The distinctions among these are becoming blurry. Some network technologies now used in LANs were originally developed for WANs, whereas some LAN technologies have influenced the development of MAN products. Any rigid classification of technologies is certain to have exceptions.

A local area network (LAN) is a group of computers located in the same general area. A LAN covers a clearly defined small area, such as one floor or work area, a single building, or a group of buildings. LANs often use shared circuits, where all computers must take turns using the same circuit. The upper left diagram in Figure 1.2 shows a small LAN located in the records building at the former McClellan Air Force Base in Sacramento. LANs support high-speed data transmission compared with standard telephone circuits, commonly operating at 100 million bits per second (100 Mbps). LANs and wireless LANs are discussed in detail in Chapter 6.

FIGURE 1.1 Example of a local area network (LAN)

Most LANs are connected to a backbone network (BN), a larger, central network connecting several LANs, other BNs, MANs, and WANs. BNs typically span from hundreds of feet to several miles and provide very high speed data transmission, commonly 100 to 1,000 Mbps. The second diagram in Figure 1.2 shows a BN that connects the LANs located in several buildings at McClellan Air Force Base. BNs are discussed in detail in Chapter 7.

A metropolitan area network (MAN) connects LANs and BNs located in different areas to each other and to WANs. MANs typically span between 3 and 30 miles. The third diagram in Figure 1.2 shows a MAN connecting the BNs at several military and government complexes in Sacramento. Some organizations develop their own MANs using technologies similar to those of BNs. These networks provide moderately fast transmission rates but can prove costly to install and operate over long distances. Unless an organization has a continuing need to transfer large amounts of data, this type of MAN is usually too expensive. More commonly, organizations use public data networks provided by common carriers (e.g., the telephone company) as their MANs. With these MANs, data transmission rates typically range from 64,000 bits per second (64 Kbps) to 100 Mbps, although newer technologies provide data rates of 10 billion bits per second (10 gigabits per second, 10 Gbps). MANs are discussed in detail in Chapter 8.

FIGURE 1.2 The hierarchical relationship of a local area network (LAN) to a backbone network (BN) to a metropolitan area network (MAN) to a wide area network (WAN)

Wide area networks (WANs) connect BNs and MANs (see Figure 1.2). Most organizations do not build their own WANs by laying cable, building microwave towers, or sending up satellites (unless they have unusually heavy data transmission needs or highly specialized requirements, such as those of the Department of Defense). Instead, most organizations lease circuits from IXCs (e.g., AT&T, MCI, Sprint) and use those to transmit their data. WAN circuits provided by IXCs come in all types and sizes but typically span hundreds or thousands of miles and provide data transmission rates from 64 Kbps to 10 Gbps. WANs are also discussed in detail in Chapter 8.
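To make the data rates quoted for LANs, BNs, MANs, and WANs more concrete, the following minimal Python sketch estimates how long a file would take to send at each rate. The 10-megabyte file size and the function name `transfer_seconds` are assumptions chosen for illustration, and the calculation ignores protocol overhead, shared circuits, and delays, so real transfers would be slower.

```python
# Nominal data rates quoted in this section, in bits per second.
RATES_BPS = {
    "64 Kbps (low-end MAN/WAN circuit)": 64_000,
    "100 Mbps (typical LAN)": 100_000_000,
    "1,000 Mbps (fast BN)": 1_000_000_000,
    "10 Gbps (high-end MAN/WAN circuit)": 10_000_000_000,
}


def transfer_seconds(size_bytes: int, rate_bps: int) -> float:
    """Ideal time to send size_bytes at rate_bps (8 bits per byte, no overhead)."""
    return size_bytes * 8 / rate_bps


if __name__ == "__main__":
    file_size = 10_000_000  # hypothetical 10-megabyte file
    for name, rate in RATES_BPS.items():
        print(f"{name}: {transfer_seconds(file_size, rate):.3f} seconds")
```

The same file that needs more than 20 minutes on a 64 Kbps circuit takes less than a second at 100 Mbps, which is one reason the choice of circuit matters so much in network design.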

Two other common terms are intranets and extranets. An intranet is a LAN that uses the same technologies as the Internet (e.g., Web servers, Java, HTML [Hypertext Markup Language]) but is open to only those inside the organization. For example, although some pages on a Web server may be open to the public and accessible by anyone on the Internet, some pages may be on an intranet and therefore hidden from those who connect to the Web server from the Internet at large. Sometimes an intranet is provided by a completely separate Web server hidden from the Internet. The intranet for the Information Systems Department at Indiana University, for example, provides information on faculty expense budgets, class scheduling for future semesters (e.g., room, instructor), and discussion forums.

An extranet is similar to an intranet in that it, too, uses the same technologies as the Internet but instead is provided to invited users outside the organization who access it over the Internet. It can provide access to information services, inventories, and other internal organizational databases that are provided only to customers, suppliers, or those who have paid for access. Typically, users are given passwords to gain access, but more sophisticated technologies such as smart cards or special software may also be required. Many universities provide extranets for Web-based courses so that only those students enrolled in the course can access course materials and discussions.

1.3 NETWORK MODELS

There are many ways to describe and analyze data communications networks. All networks provide the same basic functions to transfer a message from sender to receiver, but each network can use different network hardware and software to provide these functions. All of these hardware and software products have to work together to successfully transfer a message.

One way to accomplish this is to break the entire set of communications functions into a series of layers, each of which can be defined separately. In this way, vendors can develop software and hardware to provide the functions of each layer separately. The software or hardware can work in any manner and can be easily updated and improved, as long as the interface between that layer and the ones around it remains unchanged. Each piece of hardware and software can then work together in the overall network.

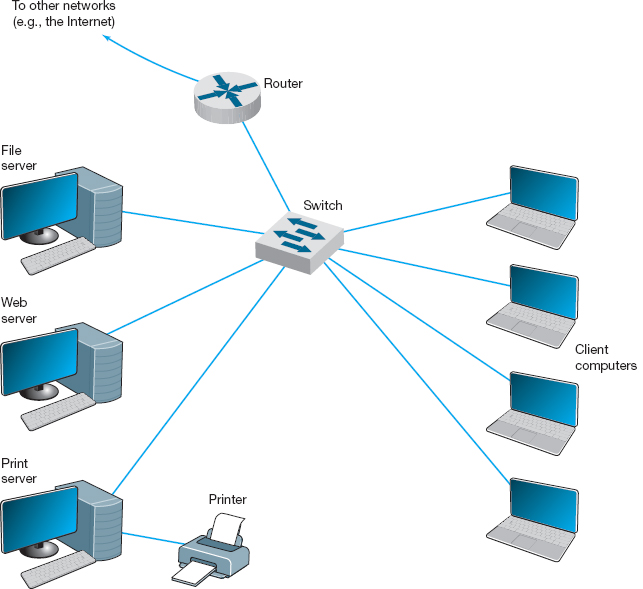

There are many different ways in which the network layers can be designed. The two most important network models are the Open Systems Interconnection Reference (OSI) model and the Internet model. The Internet model is the more commonly used of the two; few people use the OSI model, although understanding it is commonly required for network certification exams.

1.3.1 Open Systems Interconnection Reference Model

The Open Systems Interconnection Reference model (usually called the OSI model for short) helped change the face of network computing. Before the OSI model, most commercial networks used by businesses were built using nonstandardized technologies developed by one vendor (remember that the Internet was in use at the time but was not widespread and certainly was not commercial). During the late 1970s, the International Organization for Standardization (ISO) created the Open System Interconnection Subcommittee, whose task was to develop a framework of standards for computer-to-computer communications. In 1984, this effort produced the OSI model.

The OSI model is the most talked about and most referred to network model. If you choose a career in networking, questions about the OSI model will be on the network certification exams offered by Microsoft, Cisco, and other vendors of network hardware and software. However, you will probably never use a network based on the OSI model. Simply put, the OSI model never caught on commercially in North America, although some European networks use it, and some network components developed for use in the United States arguably use parts of it. Most networks today use the Internet model, which is discussed in the next section. However, because there are many similarities between the OSI model and the Internet model, and because most people in networking are expected to know the OSI model, we discuss it here. The OSI model has seven layers (see Figure 1.3).

Layer 1: Physical Layer The physical layer is concerned primarily with transmitting data bits (zeros or ones) over a communication circuit. This layer defines the rules by which ones and zeros are transmitted, such as voltages of electricity, number of bits sent per second, and the physical format of the cables and connectors used.

Layer 2: Data Link Layer The data link layer manages the physical transmission circuit in layer 1 and transforms it into a circuit that is free of transmission errors as far as layers above are concerned. Because layer 1 accepts and transmits only a raw stream of bits without understanding their meaning or structure, the data link layer must create and recognize message boundaries; that is, it must mark where a message starts and where it ends. Another major task of layer 2 is to solve the problems caused by damaged, lost, or duplicate messages so the succeeding layers are shielded from transmission errors. Thus, layer 2 performs error detection and correction. It also decides when a device can transmit so that two computers do not try to transmit at the same time.
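The two data link tasks described above, marking message boundaries and detecting damaged messages, can be illustrated with a minimal Python sketch. The one-byte start and stop markers and the function names are assumptions made for this example and do not match any particular data link protocol; the error check uses the CRC-32 routine from Python's standard `zlib` module. A real protocol would also need a way to handle marker bytes that appear inside the message, which this sketch omits.

```python
import zlib

START, END = b"\x02", b"\x03"  # hypothetical start and stop markers


def make_frame(payload: bytes) -> bytes:
    """Wrap a message in start/stop markers and append a 4-byte CRC-32."""
    crc = zlib.crc32(payload).to_bytes(4, "big")
    return START + payload + crc + END


def read_frame(frame: bytes) -> bytes:
    """Return the message, or raise ValueError if the frame looks damaged."""
    if not (frame.startswith(START) and frame.endswith(END)):
        raise ValueError("missing start or stop marker")
    body = frame[1:-1]
    if len(body) < 4:
        raise ValueError("frame too short to hold a checksum")
    payload, crc = body[:-4], body[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != crc:
        raise ValueError("checksum mismatch: message damaged in transit")
    return payload
```

The receiver recomputes the checksum over the message it received and compares it with the one the sender attached; a mismatch signals that the message should be discarded or resent.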

FIGURE 1.3 Network models. OSI = Open Systems Interconnection Reference

Layer 3: Network Layer The network layer performs routing. It determines the next computer the message should be sent to so it can follow the best route through the network and finds the full address for that computer if needed.

Layer 4: Transport Layer The transport layer deals with end-to-end issues, such as procedures for entering and departing from the network. It establishes, maintains, and terminates logical connections for the transfer of data between the original sender and the final destination of the message. It is responsible for breaking a large data transmission into smaller packets (if needed), ensuring that all the packets have been received, eliminating duplicate packets, and performing flow control to ensure that no computer is overwhelmed by the number of messages it receives. Although error control is performed by the data link layer, the transport layer can also perform error checking.

Layer 5: Session Layer The session layer is responsible for managing and structuring all sessions. Session initiation must arrange for all the desired and required services between session participants, such as logging onto circuit equipment, transferring files, and performing security checks. Session termination provides an orderly way to end the session, as well as a means to abort a session prematurely. It may have some redundancy built in to recover from a broken transport (layer 4) connection in case of failure. The session layer also handles session accounting so the correct party receives the bill.

Layer 6: Presentation Layer The presentation layer formats the data for presentation to the user. Its job is to accommodate different interfaces on different computers so the application program need not worry about them. It is concerned with displaying, formatting, and editing user inputs and outputs. For example, layer 6 might perform data compression, translation between different data formats, and screen formatting. Any function (except those in layers 1 through 5) that is requested sufficiently often to warrant finding a general solution is placed in the presentation layer, although some of these functions can be performed by separate hardware and software (e.g., encryption).

Layer 7: Application Layer The application layer is the end user's access to the network. The primary purpose is to provide a set of utilities for application programs. Each user program determines the set of messages and any action it might take on receipt of a message. Other network-specific applications at this layer include network monitoring and network management.

1.3.2 Internet Model

The network model that dominates current hardware and software is a simpler five-layer Internet model. Unlike the OSI model that was developed by formal committees, the Internet model evolved from the work of thousands of people who developed pieces of the Internet. The OSI model is a formal standard that is documented in one standard, but the Internet model has never been formally defined; it has to be interpreted from a number of standards.1 The two models have much in common (see Figure 1.3); simply put, the Internet model collapses the top three OSI layers into one layer. Because it is clear that the Internet has won the “war,” we use the five-layer Internet model for the rest of this book.

Layer 1: The Physical Layer The physical layer in the Internet model, as in the OSI model, is the physical connection between the sender and receiver. Its role is to transfer a series of electrical, radio, or light signals through the circuit. The physical layer includes all the hardware devices (e.g., computers, modems, and switches) and physical media (e.g., cables and satellites). The physical layer specifies the type of connection and the electrical signals, radio waves, or light pulses that pass through it. Chapter 3 discusses the physical layer in detail.

Layer 2: The Data Link Layer The data link layer is responsible for moving a message from one computer to the next computer in the network path from the sender to the receiver. The data link layer in the Internet model performs the same three functions as the data link layer in the OSI model. First, it controls the physical layer by deciding when to transmit messages over the media. Second, it formats the messages by indicating where they start and end. Third, it detects and may correct any errors that have occurred during transmission. Chapter 4 discusses the data link layer in detail.

Layer 3: The Network Layer The network layer in the Internet model performs the same functions as the network layer in the OSI model. First, it performs routing, in that it selects the next computer to which the message should be sent. Second, it can find the address of that computer if it doesn't already know it. Chapter 5 discusses the network layer in detail.
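Routing, the first network layer function above, can be sketched as a table lookup. The Python example below is only an illustration: the table entries and addresses are invented, and the rule used to choose among matching entries (prefer the most specific network, often called longest-prefix matching) is one common approach rather than something this chapter prescribes. It relies on Python's standard `ipaddress` module and handles IPv4 addresses only.

```python
import ipaddress

# Hypothetical routing table: (destination network, where to send the message next).
ROUTES = [
    (ipaddress.ip_network("192.168.1.0/24"), "deliver directly"),
    (ipaddress.ip_network("10.0.0.0/8"), "192.168.1.254"),
    (ipaddress.ip_network("0.0.0.0/0"), "192.168.1.1"),  # default route
]


def next_hop(destination: str) -> str:
    """Pick the next computer for a message by matching the most specific network."""
    address = ipaddress.ip_address(destination)
    matches = [(network, hop) for network, hop in ROUTES if address in network]
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop
```

With this table, a message for 10.2.3.4 would be passed to 192.168.1.254, whereas a message for an address that matches no specific entry would go to the default router at 192.168.1.1.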

Layer 4: The Transport Layer The transport layer in the Internet model is very similar to the transport layer in the OSI model. It performs two functions. First, it is responsible for linking the application layer software to the network and establishing end-to-end connections between the sender and receiver when such connections are needed. Second, it is responsible for breaking long messages into several smaller messages to make them easier to transmit and then recombining the smaller messages back into the original larger message at the receiving end. The transport layer can also detect lost messages and request that they be resent. Chapter 5 discusses the transport layer in detail.
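The second transport layer function, breaking a long message into smaller pieces and recombining them, can be sketched in a few lines of Python. The function names, the use of simple sequence numbers, and the way a missing piece is reported are all assumptions made for illustration; they are not how TCP itself is implemented.

```python
def segment(message: bytes, max_size: int) -> list[tuple[int, bytes]]:
    """Break a message into numbered pieces of at most max_size bytes."""
    starts = range(0, len(message), max_size)
    return [(seq, message[i:i + max_size]) for seq, i in enumerate(starts)]


def reassemble(segments: list[tuple[int, bytes]], expected_count: int) -> bytes:
    """Rebuild the original message from pieces that may arrive out of order."""
    received: dict[int, bytes] = {}
    for seq, data in segments:
        received.setdefault(seq, data)  # keep the first copy, ignore duplicates
    missing = [seq for seq in range(expected_count) if seq not in received]
    if missing:
        # A real transport layer would ask the sender to resend these pieces.
        raise LookupError(f"pieces missing, request resend: {missing}")
    return b"".join(received[seq] for seq in range(expected_count))
```

Because each piece carries a sequence number, the receiver can put the pieces back in order, discard duplicates, and notice when one never arrived.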

Layer 5: Application Layer The application layer is the application software used by the network user and includes much of what the OSI model contains in the application, presentation, and session layers. It is the user's access to the network. By using the application software, the user defines what messages are sent over the network. Because it is the layer that most people understand best and because starting at the top sometimes helps people understand better, the next chapter, Chapter 2, begins with the application layer. It discusses the architecture of network applications and several types of network application software and the types of messages they generate.

Groups of Layers The layers in the Internet model are often so closely coupled that decisions in one layer impose certain requirements on other layers. The data link layer and the physical layer are closely tied together because the data link layer controls the physical layer in terms of when the physical layer can transmit. Because these two layers are so closely tied together, decisions about the data link layer often drive the decisions about the physical layer. For this reason, some people group the physical and data link layers together and call them the hardware layers. Likewise, the transport and network layers are so closely coupled that sometimes these layers are called the internetwork layers. See Figure 1.3. When you design a network, you often think about the network design in terms of three groups of layers: the hardware layers (physical and data link), the internetwork layers (network and transport), and the application layer.
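The relationships among the OSI layers, the Internet model layers, and the three groups of layers can be written down as simple lookup tables. The Python sketch below restates only what the text above describes; the variable and function names are chosen for illustration.

```python
# Each OSI layer mapped to the Internet model layer that covers its functions.
OSI_TO_INTERNET = {
    "application": "application",
    "presentation": "application",
    "session": "application",
    "transport": "transport",
    "network": "network",
    "data link": "data link",
    "physical": "physical",
}

# The three groups of Internet model layers used when designing a network.
LAYER_GROUPS = {
    "application": ["application"],
    "internetwork": ["transport", "network"],
    "hardware": ["data link", "physical"],
}


def group_of(internet_layer: str) -> str:
    """Return the design group that an Internet model layer belongs to."""
    for group, layers in LAYER_GROUPS.items():
        if internet_layer in layers:
            return group
    raise KeyError(f"unknown layer: {internet_layer}")
```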

1.3.3 Message Transmission Using Layers

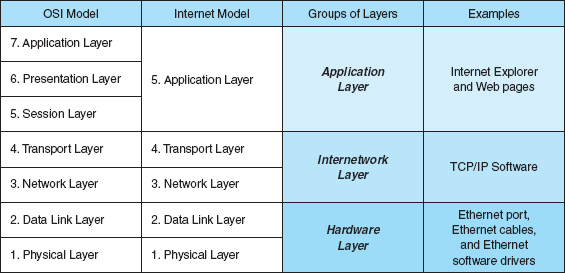

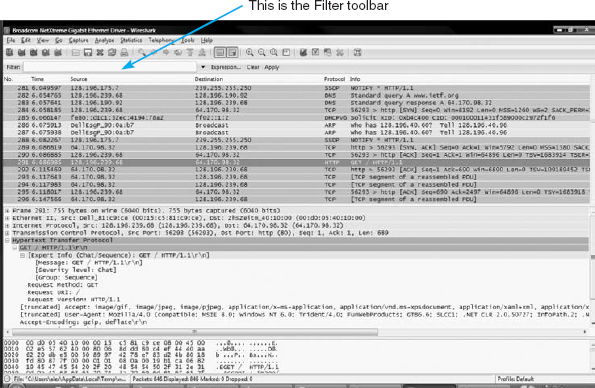

Each computer in the network has software that operates at each of the layers and performs the functions required by those layers (the physical layer is hardware, not software). Each layer in the network uses a formal language, or protocol, that is simply a set of rules that define what the layer will do and that provides a clearly defined set of messages that software at the layer needs to understand. For example, the protocol used for Web applications is HTTP (Hypertext Transfer Protocol, which is described in more detail in Chapter 2). In general, all messages sent in a network pass through all layers. All layers except the physical layer add a protocol data unit (PDU) to the message as it passes through them. The PDU contains information that is needed to transmit the message through the network. Some experts use the word packet to mean a PDU. Figure 1.4 shows how a message requesting a Web page would be sent on the Internet.

Application Layer First, the user creates a message at the application layer using a Web browser by clicking on a link (e.g., get the home page at www.somebody.com). The browser translates the user's message (the click on the Web link) into HTTP. The rules of HTTP define a specific PDU—called an HTTP packet—that all Web browsers must use when they request a Web page. For now, you can think of the HTTP packet as an envelope into which the user's message (get the Web page) is placed. In the same way that an envelope placed in the mail needs certain information written in certain places (e.g., return address, destination address), so too does the HTTP packet. The Web browser fills in the necessary information in the HTTP packet, drops the user's request inside the packet, then passes the HTTP packet (containing the Web page request) to the transport layer.
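As a rough illustration of the "envelope" a browser fills in, the Python sketch below builds a simple HTTP request for the home page at www.somebody.com. The function name is an assumption, and a real browser would include many more header lines than the two shown here; Chapter 2 describes the actual format in detail.

```python
def build_http_request(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request as the bytes a browser might send."""
    lines = [
        f"GET {path} HTTP/1.1",  # request line: what the user asked for
        f"Host: {host}",         # which Web server the request is for
        "Connection: close",     # ask the server to close the connection afterward
        "",                      # blank line marks the end of the header
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    print(build_http_request("www.somebody.com").decode("ascii"))
```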

FIGURE 1.4 Message transmission using layers. IP = Internet Protocol; HTTP = Hypertext Transfer Protocol; TCP = Transmission Control Protocol

Transport Layer The transport layer on the Internet uses a protocol called TCP (Transmission Control Protocol), and it, too, has its own rules and its own PDUs. TCP is responsible for breaking large files into smaller packets and for opening a connection to the server for the transfer of a large set of packets. The transport layer places the HTTP packet inside a TCP PDU (which is called a TCP segment), fills in the information needed by the TCP segment, and passes the TCP segment (which contains the HTTP packet, which, in turn, contains the message) to the network layer.

Network Layer The network layer on the Internet uses a protocol called IP (Internet Protocol), which has its rules and PDUs. IP selects the next stop on the message's route through the network. It places the TCP segment inside an IP PDU, which is called an IP packet, and passes the IP packet, which contains the TCP segment, which, in turn, contains the HTTP packet, which, in turn, contains the message, to the data link layer.

Data Link Layer If you are connecting to the Internet using a LAN, your data link layer may use a protocol called Ethernet, which also has its own rules and PDUs. The data link layer formats the message with start and stop markers, adds error checking information, places the IP packet inside an Ethernet PDU, which is called an Ethernet frame, and instructs the physical hardware to transmit the Ethernet frame, which contains the IP packet, which contains the TCP segment, which contains the HTTP packet, which contains the message.

Physical Layer The physical layer in this case is network cable connecting your computer to the rest of the network. The computer will take the Ethernet frame (complete with the IP packet, the TCP segment, the HTTP packet, and the message) and send it as a series of electrical pulses through your cable to the server.

When the server gets the message, this process is performed in reverse. The physical hardware translates the electrical pulses into computer data and passes the message to the data link layer. The data link layer uses the start and stop markers in the Ethernet frame to identify the message. The data link layer checks for errors and, if it discovers one, requests that the message be resent. If a message is received without error, the data link layer will strip off the Ethernet frame and pass the IP packet (which contains the TCP segment, the HTTP packet, and the message) to the network layer. The network layer checks the IP address and, if it is destined for this computer, strips off the IP packet and passes the TCP segment, which contains the HTTP packet and the message, to the transport layer. The transport layer processes the message, strips off the TCP segment, and passes the HTTP packet to the application layer for processing. The application layer (i.e., the Web server) reads the HTTP packet and the message it contains (the request for the Web page) and processes it by generating an HTTP packet containing the Web page you requested. Then the process starts again as the page is sent back to you.
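The trip down through the layers on the sender and back up through the layers on the receiver can be sketched in Python. In this simplified illustration, each layer's PDU is represented by nothing more than a text label placed in front of whatever it received from the layer above; real HTTP, TCP, IP, and Ethernet PDUs contain structured fields such as addresses and error-checking information, and the function names here are assumptions.

```python
# Layers from top to bottom; the labels stand in for real protocol headers.
LAYERS = ["HTTP", "TCP", "IP", "Ethernet"]


def encapsulate(message: bytes) -> bytes:
    """Sender side: each layer places the PDU from above inside its own PDU."""
    pdu = message
    for name in LAYERS:
        pdu = name.encode("ascii") + b"|" + pdu
    return pdu


def decapsulate(frame: bytes) -> bytes:
    """Receiver side: each layer strips off its own PDU, from the bottom up."""
    pdu = frame
    for name in reversed(LAYERS):
        header = name.encode("ascii") + b"|"
        if not pdu.startswith(header):
            raise ValueError(f"expected a PDU from the {name} layer")
        pdu = pdu[len(header):]
    return pdu


if __name__ == "__main__":
    frame = encapsulate(b"get the Web page")
    print(frame)               # b'Ethernet|IP|TCP|HTTP|get the Web page'
    print(decapsulate(frame))  # b'get the Web page'
```

The outermost label belongs to the lowest layer, which mirrors the description above: the Ethernet frame contains the IP packet, which contains the TCP segment, which contains the HTTP packet, which contains the message.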

The Pros and Cons of Using Layers There are three important points in this example. First, there are many different software packages and many different PDUs that operate at different layers to successfully transfer a message. Networking is in some ways similar to the Russian Matryoshka, nested dolls that fit neatly inside each other. This is called encapsulation, because the PDU at a higher level is placed inside the PDU at a lower level so that the lower level PDU encapsulates the higher-level one. The major advantage of using different software and protocols is that it is easy to develop new software, because all one has to do is write software for one level at a time. The developers of Web applications, for example, do not need to write software to perform error checking or routing, because those are performed by the data link and network layers. Developers can simply assume those functions are performed and just focus on the application layer. Likewise, it is simple to change the software at any level (or add new application protocols), as long as the interface between that layer and the ones around it remains unchanged.

Second, it is important to note that for communication to be successful, each layer in one computer must be able to communicate with its matching layer in the other computer. For example, the physical layer connecting the client and server must use the same type of electrical signals to enable each to understand the other (or there must be a device to translate between them). Ensuring that the software used at the different layers is the same is accomplished by using standards. A standard defines a set of rules, called protocols, that explain exactly how hardware and software that conform to the standard are required to operate. Any hardware and software that conform to a standard can communicate with any other hardware and software that conform to the same standard. Without standards, it would be virtually impossible for computers to communicate.

Third, the major disadvantage of using a layered network model is that it is somewhat inefficient. Because there are several layers, each with its own software and PDUs, sending a message involves many software programs (one for each protocol) and many PDUs. The PDUs add to the total amount of data that must be sent (thus increasing the time it takes to transmit), and the different software packages increase the processing power needed in computers. Because the protocols are used at different layers and are stacked on top of one another (take another look at Figure 1.4), the set of software used to understand the different protocols is often called a protocol stack.
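The cost of the extra PDUs can be estimated with simple arithmetic. The Python sketch below assumes minimum header sizes of 20 bytes for TCP, 20 bytes for IP (version 4), and 18 bytes for Ethernet (a 14-byte header plus a 4-byte error-checking trailer). These figures are assumptions added for this example rather than values taken from this chapter, and the sketch leaves out the HTTP header, whose size varies from request to request.

```python
# Assumed minimum overhead per layer, in bytes (not taken from this chapter).
OVERHEAD_BYTES = {"TCP": 20, "IP": 20, "Ethernet": 18}


def efficiency(payload_bytes: int) -> float:
    """Fraction of the transmitted bytes that is application data."""
    overhead = sum(OVERHEAD_BYTES.values())
    return payload_bytes / (payload_bytes + overhead)


if __name__ == "__main__":
    for size in (10, 100, 1000):
        print(f"{size}-byte payload: {efficiency(size):.1%} of the bytes sent are data")
```

Under these assumptions, the overhead is a small share of a large message but most of a very small one, which is why the inefficiency of layering is felt most when many short messages are sent.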

1.4 NETWORK STANDARDS

1.4.1 The Importance of Standards

Standards are necessary in almost every business and public service entity. For example, before 1904, fire hose couplings in the United States were not standard, which meant a fire department in one community could not help in another community. The transmission of electric current was not standardized until the end of the nineteenth century, so customers had to choose between Thomas Edison's direct current (DC) and George Westinghouse's alternating current (AC).

The primary reason for standards is to ensure that hardware and software produced by different vendors can work together. Without networking standards, it would be difficult—if not impossible—to develop networks that easily share information. Standards also mean that customers are not locked into one vendor. They can buy hardware and software from any vendor whose equipment meets the standard. In this way, standards help to promote more competition and hold down prices.

The use of standards makes it much easier to develop software and hardware that link different networks because software and hardware can be developed one layer at a time.

1.4.2 The Standards-Making Process

There are two types of standards: de jure and de facto. A de jure standard is developed by an official industry or government body and is often called a formal standard. For example, there are de jure standards for applications such as Web browsers (e.g., HTTP, HTML), for network layer software (e.g., IP), for data link layer software (e.g., Ethernet IEEE 802.3), and for physical hardware (e.g., V.90 modems). De jure standards typically take several years to develop, during which time technology changes, making them less useful.

De facto standards are those that emerge in the marketplace and are supported by several vendors but have no official standing. For example, Microsoft Windows is a product of one company and has not been formally recognized by any standards organization, yet it is a de facto standard. In the communications industry, de facto standards often become de jure standards once they have been widely accepted.

The de jure standardization process has three stages: specification, identification of choices, and acceptance. The specification stage consists of developing a nomenclature and identifying the problems to be addressed. In the identification of choices stage, those working on the standard identify the various solutions and choose the optimum solution from among the alternatives. Acceptance, which is the most difficult stage, consists of defining the solution and getting recognized industry leaders to agree on a single, uniform solution. As with many other organizational processes that have the potential to influence the sales of hardware and software, standards-making processes are not immune to corporate politics and the influence of national governments.

International Organization for Standardization One of the most important standards-making bodies is the International Organization for Standardization (ISO),2 which makes technical recommendations about data communication interfaces (see www.iso.org). ISO is based in Geneva, Switzerland. The membership is composed of the national standards organizations of each ISO member country.

International Telecommunications Union—Telecommunications Group The Telecommunications Group (ITU-T) is the technical standards-setting organization of the United Nations International Telecommunications Union, which is also based in Geneva (see www.itu.int). ITU is composed of representatives from about 200 member countries. Membership was originally focused on just the public telephone companies in each country, but a major reorganization in 1993 changed this, and ITU now seeks members among public- and private-sector organizations who operate computer or communications networks (e.g., RBOCs) or build software and equipment for them (e.g., AT&T).

American National Standards Institute The American National Standards Institute (ANSI) is the coordinating organization for the U.S. national system of standards for both technology and nontechnology (see www.ansi.org). ANSI has about 1,000 members from both public and private organizations in the United States. ANSI is a standardization organization, not a standards-making body, in that it accepts standards developed by other organizations and publishes them as American standards. Its role is to coordinate the development of voluntary national standards and to interact with ISO to develop national standards that comply with ISO's international recommendations. ANSI is a voting participant in the ISO.

Institute of Electrical and Electronics Engineers The Institute of Electrical and Electronics Engineers (IEEE) is a professional society in the United States whose Standards Association (IEEE-SA) develops standards (see www.standards.ieee.org). The IEEE-SA is probably best known for its standards for LANs. Other countries have similar groups; for example, the British counterpart of IEEE is the Institution of Electrical Engineers (IEE).

MANAGEMENT FOCUS 1.3 HOW NETWORK PROTOCOLS BECOME STANDARDS

There are many standards organizations around the world, but perhaps the best known is the Internet Engineering Task Force (IETF). IETF sets the standards that govern how much of the Internet operates.

The IETF, like all standards organizations, tries to seek consensus among those involved before issuing a standard. Usually, a standard begins as a protocol (i.e., a language or set of rules for operating) developed by a vendor (e.g., HTML [Hypertext Markup Language]). When a protocol is proposed for standardization, the IETF forms a working group of technical experts to study it. The working group examines the protocol to identify potential problems and possible extensions and improvements, then issues a report to the IETF.

If the report is favorable, the IETF issues a Request for Comments (RFC) that describes the proposed standard and solicits comments from the entire world. Most large software companies likely to be affected by the proposed standard prepare detailed responses. Many “regular” Internet users also send their comments to the IETF.

The IETF reviews the comments and possibly issues a new and improved RFC, which again is posted for more comments. Once no additional changes have been identified, it becomes a proposed standard.

Usually, several vendors adopt the proposed standard and develop products based on it. Once at least two vendors have developed hardware or software based on it and it has proven successful in operation, the proposed standard is changed to a draft standard. This is usually the final specification, although some protocols have been elevated to Internet standards, which usually signifies mature standards not likely to change.

The process does not focus solely on technical issues; almost 90 percent of the IETF's participants work for manufacturers and vendors, so market forces and politics often complicate matters. One former IETF chairperson who worked for a hardware manufacturer has been accused of trying to delay the standards process until his company had a product ready, although he and other IETF members deny this. Likewise, former IETF directors have complained that members try to standardize every product their firms produce, leading to a proliferation of standards, only a few of which are truly useful.

__________

SOURCES: “How Networking Protocols Become Standards,” PC Week, March 17, 1997; “Growing Pains,” Network World, April 14, 1997.

Internet Engineering Task Force The IETF sets the standards that govern how much of the Internet will operate (see www.ietf.org). The IETF is unique in that it doesn't really have official memberships. Quite literally anyone is welcome to join its mailing lists, attend its meetings, and comment on developing standards. The role of the IETF and other Internet organizations is discussed in more detail in Chapter 8; also, see the box entitled “How Network Protocols Become Standards.”

1.4.3 Common Standards

There are many different standards used in networking today. Each standard usually covers one layer in a network. Some of the most commonly used standards are shown in Figure 1.5. At this point, these standards are probably just a maze of strange names and acronyms to you, but by the end of the book, you will have a good understanding of each of them. Figure 1.5 provides a brief road map for some of the important communication technologies we discuss in this book.

1.4 KEEPING UP WITH TECHNOLOGY

MANAGEMENT FOCUS

The data communications and networking arena changes rapidly. Significant new technologies are introduced and new concepts are developed almost every year. It is therefore important for network managers to keep up with these changes.

There are at least three useful ways to keep up with change. First and foremost for users of this book is the Web site for this book, which contains updates to the book, additional sections, teaching materials, and links to useful Web sites.

Second, there are literally hundreds of thousands of Web sites with data communications and networking information. Search engines can help you find them. A good initial starting point is the telecom glossary at www.atis.org. Two other useful sites are networkcomputing.com and zdnet.com.

Third, there are many useful magazines that discuss computer technology in general and networking technology in particular, including Network Computing, Data Communications, Info World, Info Week, and CIO Magazine.

For now, there is one important message you should understand from Figure 1.5: For a network to operate, many different standards must be used simultaneously. The sender of a message must use one standard at the application layer, another one at the transport layer, another one at the network layer, another one at the data link layer, and another one at the physical layer. Each layer and each standard is different, but all must work together to send and receive messages.

Either the sender and receiver of a message must use the same standards or, more likely, there are devices between the two that translate from one standard into another. Because different networks often use software and hardware designed for different standards, there is often a lot of translation between different standards.
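The idea that every layer uses its own standard, and that both ends must agree on each one, can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the bracketed text headers are invented for this example (real standards define binary headers), Ethernet is assumed as the data link standard, and the physical layer is left out because it carries the bits as signals rather than adding a header.

```python
# Illustrative only: one standard per layer. The "[NAME]" header format is
# invented for this sketch; it is not how any real protocol marks its data.
SENDER_STACK = [
    ("application", "HTTP"),
    ("transport", "TCP"),
    ("network", "IP"),
    ("data link", "Ethernet"),  # assumed data link standard
]

def send(message, stack):
    """Wrap the message once per layer, starting at the application layer."""
    for _layer, standard in stack:
        message = f"[{standard}]{message}"
    return message

def receive(frame, stack):
    """Unwrap from the lowest layer up; fail if any standard does not match."""
    for layer, standard in reversed(stack):
        header = f"[{standard}]"
        if not frame.startswith(header):
            raise ValueError(f"{layer} layer expected {standard}")
        frame = frame[len(header):]
    return frame

frame = send("GET /index.html", SENDER_STACK)
print(frame)                         # the message wrapped by all four layers
print(receive(frame, SENDER_STACK))  # the original message again
```

A receiver whose stack lists IPX instead of IP at the network layer would raise an error in this sketch, which is the situation in which a real network would need a device that translates between the two standards.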

FIGURE 1.5 Some common data communications standards. HTML = Hypertext Markup Language; HTTP = Hypertext Transfer Protocol; IMAP = Internet Message Access Protocol; IP = Internet Protocol; IPX = Internetwork Packet Exchange; LAN = local area network; MPEG = Moving Picture Experts Group; POP = Post Office Protocol; SPX = Sequenced Packet Exchange; TCP = Transmission Control Protocol

1.5 FUTURE TRENDS

The field of data communications has grown faster and become more important than computer processing itself. Both go hand in hand, but we have moved from the computer era to the communication era. There are three major trends driving the future of communications and networking. All are interrelated, so it is difficult to consider one without the others.

1.5.1 Pervasive Networking

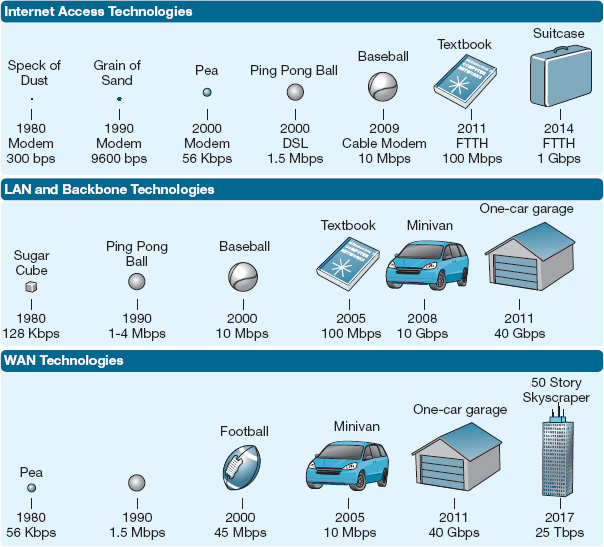

Pervasive networking means that communication networks will one day be everywhere; virtually any device will be able to communicate with any other device in the world. This is true in many ways today, but what is important is the staggering rate at which we will eventually be able to transmit data. Figure 1.6 illustrates the dramatic changes over the years in the amount of data we can transfer. For example, in 1980, the capacity of a traditional telephone-based network (i.e., one that would allow you to dial up another computer from your home) was about 300 bits per second (bps). In relative terms, you could picture this as a pipe that would enable you to transfer one speck of dust every second. By 1999, modems had increased to 56 Kbps (or a pea), and in 2000, DSL (Digital Subscriber Line) was introduced, which enabled about a ping pong ball to be transmitted every second. In 2009, cable modem technology moved to 10 Mbps and by 2011, FTTH (fiber to the home) was introduced. FTTH speeds are expected to increase to 1 Gbps in the near future.
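To make these rates concrete, the short Python sketch below estimates how long one file would take to transfer at each speed mentioned above. The 1-megabyte file size (taken as 8,000,000 bits) is an assumption chosen for illustration, and the calculation ignores protocol overhead, so real transfers would be somewhat slower.

```python
# Data rates quoted in the text, in bits per second.
RATES_BPS = {
    "1980 dial-up (300 bps)": 300,
    "1999 modem (56 Kbps)": 56_000,
    "2009 cable modem (10 Mbps)": 10_000_000,
    "FTTH (1 Gbps, projected)": 1_000_000_000,
}

FILE_BITS = 8_000_000  # assumption: a 1-megabyte file, 8 bits per byte

def transfer_seconds(size_bits, rate_bps):
    """Ideal transfer time: size divided by rate, with no overhead."""
    return size_bits / rate_bps

for label, rate in RATES_BPS.items():
    print(f"{label}: {transfer_seconds(FILE_BITS, rate):,.3f} seconds")
```

Under these assumptions the same file that needs more than seven hours at 300 bps moves in under a second at 10 Mbps.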

FIGURE 1.6 Relative capacities of telephone, local area network (LAN), backbone network (BN), wide area network (WAN), and Internet circuits. DSL = Digital Subscriber Line; FTTH = Fiber to the Home

Between 1980 and 2005, LAN and backbone technologies increased capacity from about 128 Kbps (a sugar cube per second) to 100 Mbps (see Figure 1.6). Today, backbones can provide 40 Gbps, or the relative equivalent of a one-car garage per second.

The changes in WAN and Internet circuits have been even more dramatic (see Figure 1.6). A typical circuit grew from 56 Kbps in 1980 to 45 Mbps in 2000, and most experts now predict that a high-speed WAN or Internet circuit will be able to carry 25 Tbps (25 terabits, or 25 trillion bits, per second) in a few years, the relative equivalent of a skyscraper 50 stories tall and 50 stories wide. Our sources at IBM Research suggest that future WAN circuits may reach a capacity of 1 Pbps (1 petabit, or 1,000 terabits, per second). To put this in perspective, in 2011 the total amount of digital content stored on every computer in the world was estimated to be 10 million petabits.
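The scale of these figures is easier to grasp with a little arithmetic. The Python sketch below, a rough calculation that ignores all overhead, asks how long a single circuit would need to carry the 10 million petabits quoted above at 25 Tbps and at 1 Pbps. The variable names are chosen for this example only.

```python
TBPS = 10**12  # one terabit per second, in bits per second
PBPS = 10**15  # one petabit per second (1,000 terabits per second)

# The 2011 estimate quoted in the text: 10 million petabits.
STORED_BITS = 10_000_000 * 10**15

SECONDS_PER_DAY = 86_400

def days_to_send(bits, rate_bps):
    """Ideal time, in days, to send `bits` over one circuit at `rate_bps`."""
    return bits / rate_bps / SECONDS_PER_DAY

days_at_25_tbps = days_to_send(STORED_BITS, 25 * TBPS)
days_at_1_pbps = days_to_send(STORED_BITS, 1 * PBPS)

print(f"At 25 Tbps: about {days_at_25_tbps:,.0f} days")
print(f"At 1 Pbps:  about {days_at_1_pbps:,.0f} days")
```

At 1 Pbps the transfer takes 10 million seconds, or roughly 116 days; at 25 Tbps it takes 40 times longer, more than 12 years.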

The term broadband communication has often been used to refer to these new high-speed communication circuits. Broadband is a technical term that refers to a specific type of data transmission that is used by one of these circuits (e.g., DSL). However, its true technical meaning has become overwhelmed by its use in the popular press to refer to high-speed circuits in general. Therefore, we too will use it to refer to circuits with data speeds of 1 Mbps or higher.

The initial costs of the technologies used for these very high speed circuits will be high, but competition will gradually drive down the cost. The challenge for businesses will be how to use them. When we have the capacity to transmit virtually all the data anywhere we want over a high-speed, low-cost circuit, how will we change the way businesses operate? Economists have long talked about the globalization of world economies. Data communications has made it a reality.

1.5.2 The Integration of Voice, Video, and Data

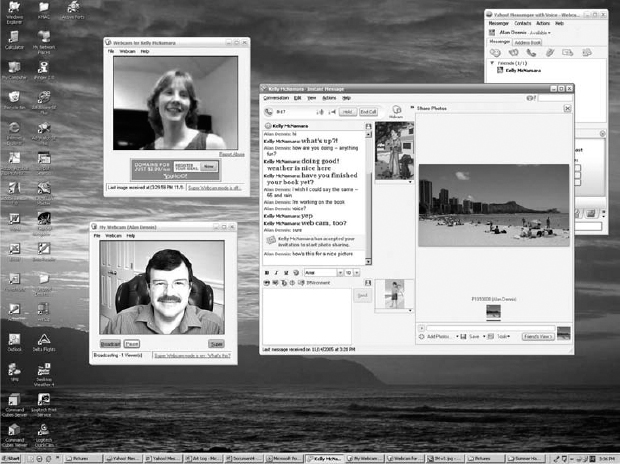

A second key trend is the integration of voice, video, and data communication, sometimes called convergence. In the past, the telecommunications systems used to transmit video signals (e.g., cable TV), voice signals (e.g., telephone calls), and data (e.g., computer data, email) were completely separate. One network was used for data, one for voice, and one for cable TV.

This is rapidly changing. The integration of voice and data is largely complete in WANs. The IXCs, such as AT&T, provide telecommunication services that support data and voice transmission over the same circuits, even intermixing voice and data on the same physical cable. Vonage (www.vonage.com) and Skype (www.skype.com), for example, permit you to use your network connection to make and receive telephone calls using Voice over Internet Protocol (VoIP).

The integration of voice and data has been much slower in LANs and local telephone services. Some companies have successfully integrated both on the same network, but some still lay two separate cable networks into offices, one for voice and one for computer access.

MANAGEMENT FOCUS

The Columbia Association employs 450 full-time and 1,500 part-time employees to operate the recreational facilities for the city of Columbia, Maryland. When Nagaraj Reddi took over as IT director, the Association had a 20-year-old central mainframe, no data networks connecting its facilities, and an outdated legacy telephone network. There was no data sharing; city residents had to call each facility separately to register for activities and provide their full contact information each time. There were long wait times and frequent busy signals.

Reddi wanted a converged network that would combine voice and data to minimize operating costs and improve service to his customers. The Association installed a converged network switch at each facility, which supports computer networks and new digital IP-based phones. The switch also can use traditional analog phones, whose signals it converts into the digital IP-based protocols needed for computer networks. A single digital IP network connects each facility into the Association's WAN, so that voice and data traffic can easily move among the facilities or to and from the Internet.

By using converged services, the Association has improved customer service and also has reduced the cost to install and operate separate voice and data networks.

_________

SOURCE: Cisco Customer Success Story: Columbia Association, www.cisco.com, 2007.

The integration of video into computer networks has been much slower, partly because of past legal restrictions and partly because of the immense communications needs of video. However, this integration is now moving quickly, owing to inexpensive video technologies. Many IXCs are now offering a “triple play” of phone, Internet, and TV video bundled together as one service.

1.5.3 New Information Services

A third key trend is the provision of new information services on these rapidly expanding networks. In the same way that the construction of the American interstate highway system spawned new businesses, so will the construction of worldwide integrated communications networks. The Web has changed the nature of computing so that now, anyone with a computer can be a publisher. You can find information on virtually anything on the Web. The problem becomes one of assessing the accuracy and value of information. In the future, we can expect information services to appear that help ensure the quality of the information they contain. Never before in the history of the human race has so much knowledge and information been available to ordinary citizens. The challenge we face as individuals and organizations is assimilating this information and using it effectively.

Today, many companies are beginning to use application service providers (ASPs) rather than developing their own computer systems. An ASP develops a specific system (e.g., an airline reservation system, a payroll system), and companies purchase the service, without ever installing the system on their own computers. They simply use the service, the same way you might use a Web hosting service to publish your own Web pages rather than attempting to purchase and operate your own Web server. Some experts are predicting that by 2010, ASPs will have evolved into information utilities. An information utility is a company that provides a wide range of standardized information services, the same way that electric utilities today provide electricity or telephone utilities provide telephone service. Companies would simply purchase most of their information services (e.g., email, Web, accounting, payroll, logistics) from these information utilities rather than attempting to develop their systems and operate their own servers.

1.6 IMPLICATIONS FOR MANAGEMENT

At the end of each chapter, we provide key implications for management that arise from the topics discussed in the chapter. The implications we draw focus on improving the management of networks and information systems, as well as implications for the management of the organization as a whole.

There are three key implications for management from this chapter. First, networks and the Internet change almost everything. The ability to quickly and easily move information from distant locations and to enable individuals inside and outside the firm to access information and products from around the world changes the way organizations operate, the way businesses buy and sell products, and the way we as individuals work, live, play, and learn. Companies and individuals that embrace change and actively seek to apply networks and the Internet to improve what they do will thrive; companies and individuals that do not will gradually find themselves falling behind.

FIGURE 1.7 One server farm with more than 1000 servers

Source: © zentilia/iStockphoto

Second, today's networking environment is driven by standards. The use of standard technology means an organization can easily mix and match equipment from different vendors. The use of standard technology also means that it is easier to migrate from older technology to a newer technology, because most vendors design their products to work with many different standards. The use of a few standard technologies rather than a wide range of vendor-specific proprietary technologies also lowers the cost of networking because network managers have fewer technologies they need to learn about and support. If your company is not using a narrow set of industry-standard networking technologies (whether those are de facto standards such as Windows, open standards such as Linux, or de jure standards such as 802.11n wireless LANs), then it is probably spending too much money on its networks.

Third, as the demand for network services and network capacity increases, so too will the need for storage and server space. Finding efficient ways to store all the information we generate will open new market opportunities. Today, Google has almost half a million Web servers (see Figure 1.7).

SUMMARY

Introduction The information society, where information and intelligence are the key drivers of personal, business, and national success, has arrived. Data communications is the principal enabler of the rapid information exchange and will become more important than the use of computers themselves in the future. Successful users of data communications, such as Wal-Mart, can gain significant competitive advantage in the marketplace.

Network Definitions A local area network (LAN) is a group of computers located in the same general area. A backbone network (BN) is a large central network that connects almost everything on a single company site. A metropolitan area network (MAN) encompasses a city or county area. A wide area network (WAN) spans city, state, or national boundaries.