Chapter 7. Security Architecture and Design

This chapter covers the following topics:

• Security model concepts: Discusses the three main goals of security and a layered approach to achieving them

• System architecture: Describes a process for designing a systems architecture

• System security architecture: Focuses on the security aspects of the system architecture

• Security architecture frameworks: Explores frameworks that have been developed to aid in secure architecture design

• Security architecture documentation: Covers the documentation process and describes best practices and guidelines by the ISO

• Security model types and security models: Surveys some of the most common security models

• Security modes: Examines various modes in which an operating system can function and the security effects of each

• System evaluation: Discusses formal evaluation systems used to assess the security of a system

• Certification and accreditation: Compares and contrasts these two processes

• Security architecture maintenance: Covers tasks required to maintain system security on an ongoing basis

• Security architecture threats: Examines common threats a security architecture must address

Although much progress has been made in protecting the edge or perimeter of networks, the software we create and purchase inside the network still can contain vulnerabilities that can be exploited. In a rush to satisfy the increasing demands placed on software, developers sometimes sacrifice security goals to meet other goals. In this chapter we take a closer look at some of the security issues that can be created during development, some guidelines for secure practices, and some of the common attacks on software that need to be mitigated.

Foundation Topics

Security Model Concepts

Security measures must have a defined goal if we want to ensure that the measure is successful. All measures are designed to provide one of a core set of protections. This section discusses the three fundamental principles of security, along with an approach to delivering these goals.

Confidentiality

Confidentiality is provided if the data cannot be read by unauthorized parties, whether through access controls and encryption while the data resides on a hard drive or through encryption while the data is in transit. With respect to information security, confidentiality is the opposite of disclosure. The essential security principles of confidentiality, integrity, and availability are referred to as the CIA triad.

Integrity

Integrity is provided if you can be assured that the data has not changed in any way. This is typically provided with a hashing algorithm or a checksum of some kind. Both methods create a number that is sent along with the data. When the data gets to the destination, this number can be used to determine whether even a single bit has changed in the data by calculating the hash value from the data that was received. This helps to protect data against undetected corruption.
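The hash comparison described above can be sketched in a few lines of Python. This is a minimal illustration, assuming SHA-256 from the standard `hashlib` module as the hashing algorithm; the function names and the sample message are hypothetical and chosen only for the example.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_digest: str) -> bool:
    """Recompute the digest of the received data and compare it with the one sent."""
    return digest(data) == expected_digest


# The sender computes the digest and sends it along with the data.
message = b"transfer 100 to account 42"
sent_digest = digest(message)

# Simulate corruption in transit by flipping a single bit of the first byte.
tampered = bytes([message[0] ^ 0x01]) + message[1:]

print(verify(message, sent_digest))   # intact copy
print(verify(tampered, sent_digest))  # copy with one bit changed
```

A plain hash of this kind detects accidental corruption. If an attacker could replace both the data and the number sent with it, a keyed construction such as an HMAC would be needed instead.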

Some additional integrity goals are to

• Prevent unauthorized users from making modifications

• Maintain internal and external consistency

• Prevent authorized users from making improper modifications

Availability

Availability describes what percentage of the time the resource or the data is available. This is usually measured as a percentage of “up” time, with 99.9% uptime representing more availability than 99% uptime. Making sure that the data is accessible when and where it is needed is a prime goal of security.
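To make the difference between the two percentages concrete, the following sketch converts an uptime target into the downtime it permits per year. It assumes a 365-day year; the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years (an assumption)


def allowed_downtime_hours(availability_percent: float) -> float:
    """Return the hours per year a resource may be down at the given uptime target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9):
    hours = allowed_downtime_hours(target)
    print(f"{target}% uptime allows about {hours:.2f} hours of downtime per year")
```

Under these assumptions, 99% uptime permits roughly 87.6 hours of downtime per year, whereas 99.9% permits roughly 8.76 hours.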

Defense in Depth

Communications security management and techniques are designed to prevent, detect, and correct errors so that the integrity, availability, and confidentiality of transactions over networks might be maintained. Most computer attacks result in a violation of one of the three security properties: confidentiality, integrity, or availability. A defense-in-depth approach refers to deploying layers of protection. For example, even when deploying firewalls, access control lists should still be applied to resources to help prevent access to sensitive data in case the firewall is breached.
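The firewall-plus-ACL example can be modeled as two independent checks. The sketch below is illustrative only: the port set, the ACL contents, the user names, and the `firewall_breached` flag are all hypothetical and do not represent any real product's configuration.

```python
# Hypothetical rule sets, for illustration only.
FIREWALL_ALLOWED_PORTS = {443}
RESOURCE_ACL = {"payroll.db": {"alice"}}


def firewall_permits(port: int) -> bool:
    """Layer 1: the perimeter firewall only passes traffic on allowed ports."""
    return port in FIREWALL_ALLOWED_PORTS


def acl_permits(user: str, resource: str) -> bool:
    """Layer 2: the resource's own access control list."""
    return user in RESOURCE_ACL.get(resource, set())


def request_allowed(user: str, port: int, resource: str,
                    firewall_breached: bool = False) -> bool:
    """A request must pass every layer that is still standing."""
    if not firewall_breached and not firewall_permits(port):
        return False
    # The ACL is checked even when the firewall has been bypassed.
    return acl_permits(user, resource)


print(request_allowed("alice", 443, "payroll.db"))
print(request_allowed("mallory", 23, "payroll.db", firewall_breached=True))
```

The second call shows the point of layering: with the firewall out of the picture, the ACL on the resource still denies the request.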

System Architecture

Developing a systems architecture refers to the process of describing and representing the components that make up the planned system and the interrelationships between the components. It also attempts to answer questions such as:

• What is the purpose of the system?

• Who will use it?

• What environment will it operate in?

Developing the architecture of a planned system should go through logical steps to ensure that all considerations have been made. This section discusses these basic steps and covers the design principles, definitions, and guidelines offered by the ISO/IEC 42010:2011 standard.

System Architecture Steps

Various models and frameworks discussed in this chapter might differ in the exact steps toward developing systems architecture but do follow a basic pattern. The main steps include:

1. System design phase: In this phase system requirements are gathered, and the manner in which the requirements will be met is mapped out using modeling techniques that usually graphically depict the components that satisfy each requirement and the interrelationships of these components. At this phase many of the frameworks and security models discussed later in this chapter are used to help meet the architectural goals.

2. Development phase: In this phase hardware and software components are assigned to individual teams for development. At this phase the work done in the first phase can help to ensure these independent teams are working toward components that will fit together to satisfy requirements.

3. Maintenance phase: In this phase the system and security architecture are evaluated to ensure that the system operates properly and that the security of the system is maintained. The system and security should be periodically reviewed and tested.

ISO/IEC 42010:2011

The ISO/IEC 42010:2011 standard uses specific terminology when discussing architectural frameworks. The following is a review of some of the more important terms.

Architecture: Describes the organization of the system, including its components and their interrelationships along with the principles that guide its design and evolution.

Architectural description (AD): Comprises the set of documents that convey the architecture in a formal manner.

Stakeholder: Individuals, teams, and departments, including groups outside the organization with interests or concerns to consider.

View: The representation of the system from the perspective of a stakeholder or a set of stakeholders.

Viewpoint: A template used to develop individual views that establish the audience, techniques, and assumptions made.

Computing Platforms

A computing platform comprises the hardware and software components that allow software to run. This typically includes the physical components, the operating systems, and the programming languages used. From a physical and logical perspective, a number of possible frameworks or platforms are in use. This section discusses some of the most common.

Mainframe/Thin Clients

When a mainframe/thin client platform is used, a client/server architecture exists. The server holds the application and performs all the processing. The client software runs on the user machines and simply sends requests for operations and displays the results. When a true thin client is used very little exists on the user machine other than the software that connects to the server and renders the result.

Distributed Systems

The distributed platform also uses a client/server architecture, but the division of labor between the server portion and the client portion of the solution might not be quite as one-sided as you would find in a mainframe/thin client scenario. In many cases multiple locations or systems in the network might be part of the solution. Also, sensitive data is more likely to be located on the user’s machine, and therefore the users play a bigger role in protecting it with best practices.

Another characteristic of a distributed environment is multiple processing locations that can provide alternatives for computing in the event a site becomes unavailable.

Data is stored at multiple, geographically separate locations. Users can access the data stored at any location with the users’ distance from those resources transparent to the user.

Distributed systems can introduce security weaknesses into the network that must be considered. Among these are

• Desktop systems can contain sensitive information that might be at risk of being exposed.

• Users might generally lack security awareness.

• Modems present a vulnerability to dial-in attacks.

• Lack of proper backup might exist.

Middleware

In a distributed environment, middleware is software that ties the client and server software together. It is neither a part of the operating system nor a part of the server software. It is the code that lies between the operating system and applications on each side of a distributed computing system in a network. It might be generic enough to operate between several client/server systems of a particular type.

Embedded Systems

An embedded system is a piece of software built into a larger piece of software that is in charge of performing some specific function on behalf of the larger system. The embedded part of the solution might address specific hardware communications and might require drivers to talk between the larger system and some specific hardware.

Mobile Computing

Mobile code is instructions passed across the network and executed on a remote system. An example of mobile code is Java and ActiveX code downloaded into a web browser from the World Wide Web. Any introduction of code from one system to another is a security concern but is required in some situations. An active content module that attempts to monopolize and exploit system resources is called a hostile applet. The main objective of the Java Security Model (JSM) is to protect the user from hostile, network mobile code. As you might recall from Chapter 5, “Software Development Security,” it does this by placing the code in a sandbox, which restricts its operations.

Virtual Computing

Virtual environments are increasingly being used as the computing platform for solutions. In Chapter 3 you learned that most of the same security issues that must be mitigated in the physical environment must also be addressed in the virtual network.

In a virtual environment, instances of an operating system are called virtual machines. A host system can contain many virtual machines. Software called a hypervisor manages the distribution of resources (CPU, memory, and disk) to the virtual machines. Figure 7-1 shows the relationship between the host machine, its physical resources, the resident VMs, and the virtual resources assigned to them.

Security Services

The process of creating system architecture also includes design of the security that will be provided. These services can be classified into several categories depending on the protections they are designed to provide. This section briefly examines and compares types of security services.

Boundary Control Services

These services are responsible for placing various components in security zones and maintaining boundary control between them. Generally, this is accomplished by indicating components and services as trusted or not trusted. As an example, memory space insulated from other running processes in a multiprocessing system is part of a protection boundary.

Access Control Services

In Chapter 2, “Access Control,” you learned about various methods of access control and how they can be deployed. An appropriate method should be deployed to control access to sensitive material and to give users the access they need to do their job.

Integrity Services

As you might recall, integrity implies that data has not been changed. When integrity services are present, they ensure that data moving through the operating system or application can be verified to not have been damaged or corrupted in the transfer.

Cryptography Services

If the system is capable of scrambling or encrypting information in transit, it is said to provide cryptography services. In some cases this service is not natively provided by a system and if desired must be provided in some other fashion, but if the capability is present it is valuable, especially in instances where systems are distributed and talk across the network.

Auditing and Monitoring Services

If the system has a method of tracking the activities of the users and of the operations of the system processes, it is said to provide auditing and monitoring services. Although our focus here is on security, the value of this service goes beyond security because it also allows for monitoring what the system itself is actually doing.

System Components

When discussing the way security is provided in an architecture, having a basic grasp of the components in computing equipment is helpful. This section discusses those components and some of the functions they provide.

CPU and Multiprocessing

The central processing unit (CPU) is the hardware in the system that executes all the instructions in the code. It has its own set of instructions for its internal operation, and those instructions define its architecture. The software that runs on the system must be compatible with this architecture, which really means the CPU and the software can communicate.

When more than one processor is present and available the system becomes capable of multiprocessing. This allows the computer to execute multiple instructions in parallel. It can be done with separate physical processors or with a single processor with multiple cores. Each core operates as a separate CPU.

CPUs have their own memory, and the CPU is able to access this memory faster than any other memory location. It also typically has cache memory where the most recently executed instructions are kept in case they are needed again. When a CPU gets an instruction from memory, the process is called fetching.

An arithmetic logic unit (ALU) in the CPU performs the actual execution of the instructions. The control unit acts as the system manager while instructions from applications and operating systems are executed. CPU registers contain the instruction set information and data to be executed and include general registers, special registers, and a program counter register.

CPUs can work in user mode or privileged mode, which is also referred to as kernel or supervisor mode. When applications are communicating with the CPU, it is in user mode. If an instruction that is sent to the CPU is marked to be performed in privileged mode, it must be a trusted operating system process and is given functionality not available in user mode.

The CPU is connected to an address bus. Memory and I/O devices recognize this address bus. These devices can then communicate with the CPU, read requested data, and send it to the data bus.

When microcomputers were first developed, the instruction fetch time was much longer than the instruction execution time because of the relatively slow speed of memory access. This situation led to the design of the Complex Instruction Set Computer (CISC) CPU. In this arrangement the number of instructions that had to be fetched was reduced (while each instruction was made more complex) to help mitigate the relatively slow memory access.

After memory access was improved to the point where not much difference existed in memory access times and processor execution times, the Reduced Instruction Set Computer (RISC) architecture was introduced. The objective of the RISC architecture was to reduce the number of cycles required to execute an instruction, which was accomplished by making the instructions less complex.

Memory and Storage

A computing system needs somewhere to store information, on both a long-term and a short-term basis. There are two types of storage locations: memory, for temporary storage needs, and long-term storage media. Information can be accessed much faster from memory than from long-term storage. For this reason the most recently used instructions or information are typically kept in cache memory for a short period of time, which ensures that the second and subsequent accesses are faster than returning to long-term storage.
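The effect of keeping recently used information close at hand can be simulated with a small cache in front of a slower store. This is a sketch only: the class name, the capacity of two entries, and the least-recently-used eviction rule are assumptions made for the example, not details given in this chapter.

```python
from collections import OrderedDict


class SmallCache:
    """A tiny cache that keeps the most recently used items from a slower store."""

    def __init__(self, backing_store, capacity=2):
        self.backing_store = backing_store   # stands in for slow, long-term storage
        self.capacity = capacity
        self.entries = OrderedDict()         # oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self.entries:
            self.hits += 1                   # fast path: served from the cache
            self.entries.move_to_end(key)    # mark as most recently used
            return self.entries[key]
        self.misses += 1                     # slow path: go back to the store
        value = self.backing_store[key]
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value


storage = {"a": 1, "b": 2, "c": 3}
cache = SmallCache(storage, capacity=2)
for key in ["a", "a", "b", "a", "c", "b"]:
    cache.read(key)
print(cache.hits, cache.misses)
```

In this access pattern the repeated reads of `"a"` are served from the cache, while the first read of each key, and the read of `"b"` after it has been evicted, must return to the slower store.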

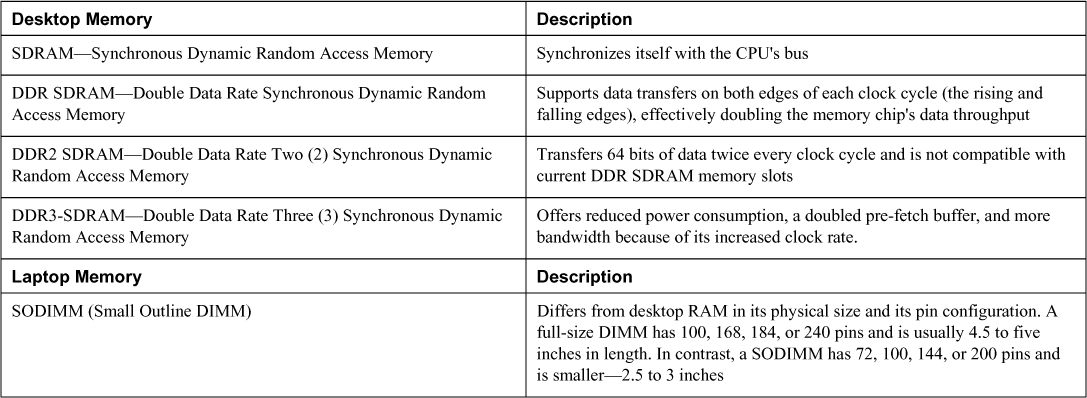

Computers can have both Random Access Memory (RAM) and Read Only Memory (ROM). RAM is volatile, meaning the information must continually be refreshed and will be lost if the system shuts down. Table 7-1 contains some types of RAM used in laptops and desktops.

ROM, on the other hand, is not volatile and also cannot be overwritten without executing a series of operations that depend on the type of ROM. It usually contains low-level instructions of some sort that make the device on which it is installed operational. Some examples of ROM are

• Flash memory: A type of electrically programmable ROM

• Programmable Logic Device (PLD): An integrated circuit with connections or internal logic gates that can be changed through a programming process

• Field Programmable Gate Array (FPGA): A type of PLD that is programmed by blowing fuse connections on the chip or using an antifuse that makes a connection when a high voltage is applied to the junction

• Firmware: A type of ROM where a program or low-level instructions are installed

Memory directly addressable by the CPU, which stores the instructions and data associated with the program being executed, is called primary memory. Regardless of the type of memory in which the information is located, in most cases the CPU must get involved in fetching the information on behalf of other components. The ability of a component to access memory directly without the help of the CPU is called Direct Memory Access (DMA).

Some additional terms you should be familiar with regarding memory include the following:

• Associative memory: Searches for a specific data value in memory rather than using a specific memory address

• Implied addressing: Refers to registers usually contained inside the CPU

• Absolute addressing: Addresses the entire primary memory space. The CPU uses the physical memory addresses that are called absolute addresses.

• Cache: A relatively small amount (when compared to primary memory) of very high speed RAM that holds the instructions and data from primary memory and that has a high probability of being accessed during the currently executing portion of a program

• Indirect addressing: The type of memory addressing where the address location that is specified in the program instruction contains the address of the final desired location

• Logical address: The address at which a memory cell or storage element appears to reside from the perspective of an executing application program

• Relative address: Specifies its location by indicating its distance from another address

• Virtual memory: A location on the hard drive used temporarily for storage when memory space is low

• Memory leak: Occurs when a computer program incorrectly manages memory allocations, which can exhaust available system memory as an application runs
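Three of the addressing terms in the list above can be contrasted with a toy memory. In this sketch a Python list stands in for primary memory and the list index plays the role of an absolute address; the cell contents and function names are hypothetical.

```python
# A tiny "primary memory": the list index plays the role of an absolute address.
memory = [0] * 16
memory[5] = 12    # cell 5 holds the address of another cell
memory[12] = 99   # cell 12 holds the final value
memory[9] = 7


def absolute(address: int) -> int:
    """Absolute addressing: use the physical address directly."""
    return memory[address]


def indirect(address: int) -> int:
    """Indirect addressing: the addressed cell contains the address of the target."""
    return memory[memory[address]]


def relative(base: int, offset: int) -> int:
    """Relative addressing: the location is given as a distance from another address."""
    return memory[base + offset]


print(absolute(12), indirect(5), relative(5, 4))
```

Here `absolute(12)` and `indirect(5)` reach the same cell by different routes, while `relative(5, 4)` reads the cell four positions past address 5.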

Input/Output Devices

Input/output (I/O) devices are used to send information to and receive information from the system. Examples are the keyboard and mouse (input) and printers (output). The operating system controls the interaction between the I/O devices and the system. When an I/O device requires the CPU to perform some action, it signals the CPU with a message called an interrupt.

Operating Systems

The operating system is the software that enables a human to interact with the hardware that comprises the computer. Without the operating system the computer would be useless. Operating systems perform a number of noteworthy and interesting functions as a part of the interfacing between the human and the hardware. In this section we look at some of these activities.

A thread is an individual piece of work done for a specific process. A process is a set of threads that are part of the same larger piece of work done for a specific application. An application’s instructions are not considered processes until they have been loaded into memory where all instructions must first be copied to be processed by the CPU. A process can be in a running state, ready state, or blocked state. When a process is blocked, it is simply waiting for data to be transmitted to it, usually through user data entry. A group of processes that share access to the same resources is called a protection domain.
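The three process states can be modeled as a small state machine. The chapter names the states but not the transitions between them, so the transition table below is an assumption based on common operating system practice; the class and process names are hypothetical.

```python
from enum import Enum


class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"


# Assumed transition table: which states may follow each state.
ALLOWED = {
    State.READY: {State.RUNNING},                  # scheduled onto the CPU
    State.RUNNING: {State.READY, State.BLOCKED},   # time slice ends, or waits for data
    State.BLOCKED: {State.READY},                  # awaited data has arrived
}


class Process:
    def __init__(self, name: str):
        self.name = name
        self.state = State.READY

    def move_to(self, new_state: State) -> None:
        """Change state, rejecting transitions the table does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state


editor = Process("editor")
editor.move_to(State.RUNNING)
editor.move_to(State.BLOCKED)   # for example, waiting for user data entry
print(editor.state.value)
```

Under this table a blocked process cannot jump straight back to running; it must first return to the ready state and wait to be scheduled.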

CPUs can be categorized according to the way in which they handle processes. A superscalar computer architecture is characterized by a processor that enables concurrent execution of multiple instructions in the same pipeline stage. A processor in which a single instruction specifies more than one concurrent operation is called a Very Long Instruction Word (VLIW) processor. A pipelined processor overlaps the steps of different instructions, which increases throughput, whereas a scalar processor executes one instruction at a time.

From a security perspective, processes are placed in a ring structure according to the concept of least privilege, meaning they are only allowed to access resources and components required to perform the task. A common visualization of this structure is shown in Figure 7-2.

When a computer system processes input/output instructions, it is operating in supervisor mode. Fail soft refers to the termination of selected, non-critical processing when a hardware or software failure occurs and is detected. A system is in a fail safe state if it automatically leaves system processes and components in a secure state when a failure occurs or is detected.

Multitasking

Multitasking is the process of carrying out more than one task at a time. Multitasking can be done in two different ways. When the computer has a single processor, it is not really doing multiple tasks at once. It is dividing its CPU cycles between tasks at such a high rate of speed that it appears to be doing multiple tasks at once. However, when a computer has more than one processor or has a processor with multiple cores, then it is capable of actually performing two tasks at the same time. It can do this in two different ways:

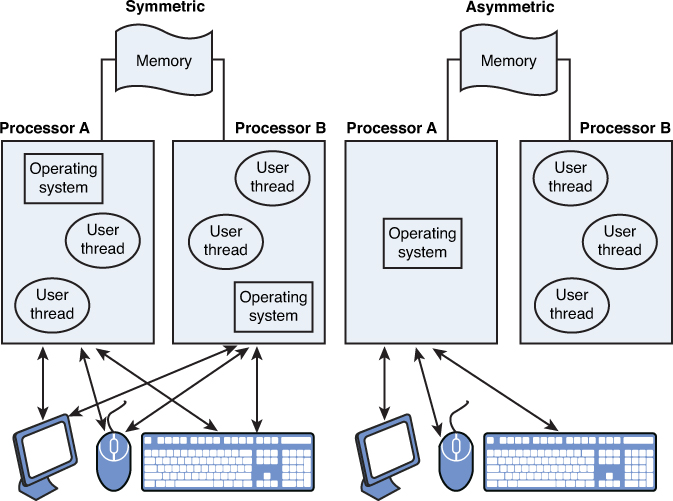

Symmetric mode: In this mode the processors or cores are handed work on a round-robin basis, thread by thread.

Asymmetric mode: In this mode a processor is dedicated to a specific process or application; when work needs to be done for that process, it is always done by the same processor. Figure 7-3 shows the relationship between these two modes.

Figure 7-3. Types of Multiprocessing
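The two modes can be contrasted by looking at which core each thread ends up on. In the sketch below, threads are represented as hypothetical `(process, thread number)` pairs, and the core names and the process-to-core mapping are invented for the example.

```python
from itertools import cycle


def symmetric_assign(threads, cores):
    """Symmetric mode: hand threads to the cores round-robin, thread by thread."""
    core_cycle = cycle(cores)
    return {thread: next(core_cycle) for thread in threads}


def asymmetric_assign(threads, dedicated):
    """Asymmetric mode: every thread of a process runs on that process's dedicated core."""
    return {thread: dedicated[thread[0]] for thread in threads}


# Each thread is a (process name, thread number) pair.
threads = [("db", 1), ("db", 2), ("web", 1), ("web", 2)]

print(symmetric_assign(threads, ["core0", "core1"]))
print(asymmetric_assign(threads, {"db": "core0", "web": "core1"}))
```

In the symmetric assignment the two `db` threads land on different cores, whereas in the asymmetric assignment all work for `db` stays on `core0`.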

Preemptive multitasking means that task switches can be initiated directly out of interrupt handlers. With cooperative (non-preemptive) multitasking, a task switch is only performed when a task calls the kernel and allows the kernel a chance to perform a task switch.
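Cooperative multitasking can be sketched with Python generators, where each `yield` is the point at which a task voluntarily gives the scheduler a chance to switch. This models only the cooperative case; preemption relies on interrupts, which this example does not attempt to simulate. The task names and the simple queue-based scheduler are hypothetical.

```python
from collections import deque


def task(name, steps, log):
    """A task that does one unit of work, then yields control back to the scheduler."""
    for step in range(steps):
        log.append(f"{name}:{step}")
        yield  # the only point at which a task switch can happen


def run_cooperative(tasks):
    """Run tasks in turn; a switch occurs only when the current task yields."""
    queue = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)          # run until the task yields
            queue.append(current)  # not finished: go to the back of the queue
        except StopIteration:
            pass                   # task finished: drop it


log = []
run_cooperative([task("A", 2, log), task("B", 3, log)])
print(log)
```

A task that never yields would keep the processor indefinitely in this scheme, which is the main weakness of the cooperative approach.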

Memory Management

Because all information goes to memory before it can be processed, secure management of memory is critical. Memory space insulated from other running processes in a multiprocessing system is part of a protection domain.

System Security Architecture

Beyond their functional roles, the components that comprise the system can also be viewed from a security perspective, which describes the system security architecture. In this section concepts used to maintain security within the system are discussed.

Security Policy

The security requirements of a system should be derived from the security policy of the organization. Although this policy makes statements regarding the general security posture and goals of the organization, a system-specific policy that speaks to the level of security required on the device, operating system, or application level must be much more detailed. This section covers some terms and concepts required to discuss security at this level.

Note

Security policies are covered in detail in Chapter 4, “Information Security Governance and Risk Management.”

Security Requirements

In 1972, the Computer Security Technology Planning Study was commissioned by the U.S. government to outline the basic and foundational security requirements of systems purchased by the government. This eventually led to the Trusted Computer System Evaluation Criteria (TCSEC), or Orange Book (discussed later in this chapter). This section defines some of its core tenets as follows:

Trusted Computing Base (TCB): The TCB comprises the components (hardware, firmware, and/or software) that are trusted to enforce the security policy of the system and that, if compromised, jeopardize the security properties of the entire system. The reference monitor is a primary component of the TCB. This term is derived from the Orange Book (discussed later in this chapter). All changes to the TCB should be audited and controlled, which is an example of a Configuration Management control.

Security Perimeter: This is the dividing line between the trusted parts of the system and those that are untrusted. According to security design best practices, components that lie within this boundary (which means they lie within the TCB) should never permit untrusted components to access critical resources in an insecure manner.

Reference Monitor: A reference monitor is a system component that enforces access controls on an object. It is an access control concept that refers to an abstract machine that mediates all accesses to objects by subjects. It was introduced to circumvent difficulties in classic approaches to computer security by limiting the damage that malicious programs can cause. The security risk created by a covert channel is that it bypasses the reference monitor functions. The reference monitor function should exhibit isolation, completeness, and verifiability, for the following reasons:

• Isolation: It must not be publicly accessible. The less access, the better.

• Completeness: It must mediate every access, covering the whole information and process cycle, so that it cannot be bypassed.

• Verifiability: It must be verifiable, so that it can support security, audit, and accounting functions.

Security Kernel: A security kernel comprises the hardware, firmware, and software elements of a trusted computing base that implement the reference monitor concept. The reference monitor itself is an access control concept rather than a physical component; the security kernel is its implementation. The security kernel should be as small as possible so that it is easier to verify formally. It implements the authorized access relationship between subjects and objects of a system as established by the reference monitor. While performing this role, it must mediate all accesses, be protected from modification, and be verifiable. In the ring protection system discussed earlier, the security kernel is usually located in the lowest-numbered ring, ring 0.
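The mediation role of the reference monitor can be illustrated with a small class through which every access request must pass. This is a conceptual sketch, not a description of any real kernel: the class name, the rights table, and the subjects and objects are all hypothetical.

```python
class ReferenceMonitor:
    """Mediates every access by a subject to an object and records the decision."""

    def __init__(self, authorized):
        # Maps (subject, object) pairs to the set of operations permitted.
        self._authorized = dict(authorized)
        self.audit_log = []

    def access(self, subject: str, obj: str, operation: str) -> bool:
        allowed = operation in self._authorized.get((subject, obj), set())
        # Every request is logged, whether it is granted or denied.
        self.audit_log.append((subject, obj, operation, allowed))
        return allowed


monitor = ReferenceMonitor({("alice", "report.txt"): {"read"}})
print(monitor.access("alice", "report.txt", "read"))
print(monitor.access("alice", "report.txt", "write"))
print(len(monitor.audit_log))
```

Keeping the class this small reflects the design goal stated above: the less code that sits on the access path, the easier it is to verify.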

Security Zones

In the context of this discussion a security zone or security perimeter is the dividing line between the trusted parts of the system and the untrusted parts. An example of this is the ring structure for CPUs discussed earlier. The most trusted components are allowed in ring 0 whereas less trusted components might be located in outer rings. Communication between components in different rings is tightly controlled to prevent a less trusted component from compromising one that has more trust and thus more access to the system.
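The ring rule can be expressed as a simple comparison of ring numbers. The sketch below assumes that ring 3 is the outermost ring and that a small set of approved entry points (gates) exists for calls into more trusted rings; the ring numbers other than 0, the gate names, and the function name are assumptions made for the example.

```python
KERNEL_RING = 0
USER_RING = 3  # assumed outermost ring for this example

# Hypothetical entry points that outer rings may use to call into inner rings.
APPROVED_GATES = {"read_file", "write_file"}


def request(caller_ring: int, target_ring: int, operation: str) -> str:
    """Decide how a component in one ring may reach a component in another."""
    if caller_ring <= target_ring:
        return "direct"      # equal or greater trust: no gate needed
    if operation in APPROVED_GATES:
        return "via gate"    # less trusted caller must use a controlled entry point
    return "denied"


print(request(KERNEL_RING, USER_RING, "inspect_process"))
print(request(USER_RING, KERNEL_RING, "read_file"))
print(request(USER_RING, KERNEL_RING, "patch_kernel"))
```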

Security Architecture Frameworks

Security architecture frameworks are used to help define the security goals during development of a system and to ensure those goals are achieved in the final product. This section covers some of the more widely known security architecture frameworks.

Zachman Framework

The Zachman Framework is a two-dimensional model that intersects communication interrogatives (what, why, where, and so on) with various viewpoints (planner, owner, designer, and so on). It is designed to help optimize communication between the various viewpoints during the creation of the security architecture. This framework is detailed in Chapter 4 in Table 4-1.

SABSA

A model similar to Zachman for guiding the creation and design of a security architecture is the Sherwood Applied Business Security Architecture (SABSA). It also attempts to enhance the communication process between stakeholders. It is both a framework and a methodology in that it prescribes the processes to follow to build and maintain the architecture. This framework is detailed in Chapter 4 in Table 4-2.

TOGAF

The Open Group Architecture Framework (TOGAF) has its origins in the U.S. Department of Defense: it was based on the Technical Architecture Framework for Information Management (TAFIM), which the department developed beginning in the late 1980s. It calls for an Architecture Development Method (ADM), an iterative process in which individual requirements are continuously monitored and updated as needed. It also enables the technology architect to understand the enterprise from four different views, allowing the team to ensure that the proper environment and components are present to meet business and security requirements. Figure 7-4 shows the TOGAF 8.1.1 version of the ADM.

Figure 7-4. TOGAF 8.1.1 Version of the ADM

ITIL

A discussion of architectural frameworks would not be complete without mentioning the Information Technology Infrastructure Library (ITIL). In Chapter 4 you learned about this set of best practices, which have become the de facto standard for IT service management, of which creating the framework is a subset. If you review the ITIL discussion in Chapter 4, you will see that service design is a major portion of the process.

Note

All of these security frameworks and several others are discussed in greater detail in Chapter 4, “Information Security Governance and Risk Management,” in the “Security Frameworks and Methodologies” section.

Security Architecture Documentation

Documentation is important in the design of any system, and with regard to the security architecture it is even more so. Documentation of the architecture provides valuable insight into how the architecture is supposed to operate and what the designers intended various components to contribute to the security of a system. For example, any test plans and results should be retained as part of the system’s permanent documentation.

This section discusses two influential documents providing guidance for best practices for security architecture documentation.

ISO/IEC 27000 Series

The ISO/IEC 27000 series comprises information security standards published jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). Although each individual standard in the series addresses a different issue, all standards in the series provide guidelines for documentation. The ISO/IEC 27000 series, including both 27001 and 27002, is covered in more detail in the section “Security Frameworks and Methodologies” in Chapter 4.

COBIT

The Information Systems Audit and Control Association (ISACA) and the IT Governance Institute (ITGI), two important organizations with regard to offering guidance in operating and maintaining secure systems, created a set of control objectives used as a framework for IT governance called the Control Objectives for Information and Related Technology (COBIT). It was derived from the COSO framework created by the Committee of Sponsoring Organizations of the Treadway Commission. Whereas the COSO framework deals with corporate governance, COBIT deals with IT governance. It is covered in more detail in Chapter 4.

Security Model Types and Security Models

A security model describes the theory of security that is designed into a system from the outset. Formal models have been developed to approach the design of the security operations of a system. In the real world the use of formal models is often skipped because it delays the design process somewhat (although the cost might be a lesser system). This section discusses some basic model types along with some formal models derived from the various approaches available.

Security Model Types

A security model maps the desires of the security policy makers to the rules that a computer system must follow. Different model types exhibit various approaches to achieving this goal. The specific models that are contained in the section “Security Models” incorporate various combinations of these model types.

State Machine Models

The state of a system is its posture at any specific point in time. Activities that occur as the system operates alter its state. If every possible state the system could be in is examined and the system maintains the proper security relationship between objects and subjects in each state, the system is said to be secure. The Bell-LaPadula model discussed in the later section “Security Models” is an example of a state machine model.

Multilevel Lattice Models

The lattice-based access control model was developed mainly to deal with confidentiality issues and focuses mainly on information flow. Each security subject is assigned a security label that defines the upper and lower bounds of the subject’s access to the system. Controls are then applied to all objects by organizing them into levels or lattices. Objects are containers of information in some format. Each pair of elements (object and subject) is assigned a least upper bound of values and a greatest lower bound of values that define what can be done by that subject with that object.

A subject’s label (remember that a subject can be a person, but it can also be a process) defines what level the subject can access and what actions can be performed at that level. With the lattice-based access control model, a security label is also called a security class. In summary, this model associates every resource and every user of a resource with one of an ordered set of classes. The lattice-based model aims at protecting against illegal information flow among the entities.
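The bounds described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the level names, the use of category sets, and the function names are all assumptions introduced for the example.

```python
from dataclasses import dataclass

# Hypothetical ordered levels; the names are illustrative only.
LEVELS = ["unclassified", "confidential", "secret", "top_secret"]


@dataclass(frozen=True)
class SecurityClass:
    level: str
    categories: frozenset = frozenset()  # assumed compartments, e.g. {"crypto"}

    def rank(self) -> int:
        return LEVELS.index(self.level)


def dominates(a: SecurityClass, b: SecurityClass) -> bool:
    """True if class a is at or above class b in the lattice."""
    return a.rank() >= b.rank() and a.categories >= b.categories


def least_upper_bound(a: SecurityClass, b: SecurityClass) -> SecurityClass:
    """Lowest class that dominates both a and b."""
    return SecurityClass(LEVELS[max(a.rank(), b.rank())], a.categories | b.categories)


def greatest_lower_bound(a: SecurityClass, b: SecurityClass) -> SecurityClass:
    """Highest class that both a and b dominate."""
    return SecurityClass(LEVELS[min(a.rank(), b.rank())], a.categories & b.categories)
```

Because two classes might not be directly comparable (neither dominates the other), the least upper bound and greatest lower bound are what make the set of classes a lattice rather than a simple ladder.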

Matrix-Based Models

A matrix-based model organizes tables of subjects and objects indicating what actions individual subjects can take upon individual objects. This concept is found in other model types as well, such as the lattice model discussed in the previous section. Access control to objects is often implemented as a control matrix. It is a straightforward approach that defines the access rights of subjects to objects. The two most common implementations of this concept are access control lists and capabilities. In the table structure, a row indicates the access one subject has to an array of objects. Therefore, a row can be seen as a capability list for a specific subject. An access control matrix consists of the following parts:

A list of objects

A list of subjects

A function that returns an object’s type

The matrix itself, with the objects making the columns and the subjects making the rows
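As a rough sketch of the structure just listed, the matrix can be held as a nested dictionary. The subject names, object names, and rights below are hypothetical, and the object-type function is omitted for brevity.

```python
# Rows are subjects, columns are objects; each cell holds a set of rights.
# All names and rights here are made up for illustration.
matrix = {
    "alice": {"payroll.db": {"read", "write"}, "report.txt": {"read"}},
    "backup_proc": {"payroll.db": {"read"}},
}


def is_allowed(subject: str, obj: str, right: str) -> bool:
    """Look up a single cell of the matrix."""
    return right in matrix.get(subject, {}).get(obj, set())


def capability_list(subject: str) -> dict:
    """A row of the matrix: everything one subject can do."""
    return dict(matrix.get(subject, {}))


def access_control_list(obj: str) -> dict:
    """A column of the matrix: every subject with rights on one object."""
    return {subj: row[obj] for subj, row in matrix.items() if obj in row}
```

Reading the matrix by row yields a capability list, and reading it by column yields an access control list, which is why these are the two common implementations of the same idea.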

Noninterference Models

In multilevel security models, the concept of noninterference prescribes that actions that take place at a higher security level do not affect or influence those that occur at a lower security level. Because this model is less concerned with the flow of information and more concerned with a subject’s knowledge of the state of the system at a point in time, it concentrates on preventing the actions that take place at one level from altering the state presented to another level.

One of the attack types that this conceptual model is meant to prevent is inference. This occurs when someone has access to information at one level that allows them to infer information about another level.

Information Flow Models

Any of the models discussed in the next section that attempts to prevent a flow of information from one entity to another that violates or negates the security policy is called an information flow model. In such a model, a flow is a type of dependency that relates two versions of the same object, and thus the transformation of one state of that object into another, at successive points in time. In a multilevel security (MLS) system, a one-way information flow device called a pump prevents the flow of information from a higher level of security classification or sensitivity to a lower level.

For example the Bell-LaPadula model (discussed in the section “Security Models”) concerns itself with the flow of information in the following three cases:

• When a subject alters an object.

• When a subject accesses an object.

• When a subject observes an object.

In summary, the aim of an information flow model is to prevent illegal information flow among the entities.

Security Models

A number of formal models incorporating the concepts discussed in the previous section have been developed and used to guide the security design of systems. This section discusses some of the more widely used or important security models including the following:

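• Bell-LaPadula model

• Biba model

• Clark-Wilson integrity model

• Lipner model

• Brewer-Nash (Chinese Wall) model

• Graham-Denning model

• Harrison-Ruzzo-Ullman model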

Bell-LaPadula Model

The Bell-LaPadula model was the first mathematical model of a multilevel system that used both the concepts of a state machine and those of controlling information flow. It formalizes the U.S. Department of Defense multilevel security policy. It is a state machine model capturing confidentiality aspects of access control. Any movement of information from a higher level to a lower level in the system must be performed by a trusted subject.

It incorporates three basic rules with respect to the flow of information in a system:

• The simple security rule: A subject cannot read data located at a higher security level than that possessed by the subject (also called no read up)

• The *-property rule: A subject cannot write to a lower level than that possessed by the subject (also called no write down or the confinement rule)

• The strong star property rule: A subject can perform both read and write functions only at the same level possessed by the subject

The *-property rule is depicted in Figure 7-5.

Figure 7-5. The *-Property Rule
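The three Bell-LaPadula rules can be expressed as simple comparisons on security levels. The following is a minimal sketch; the level names and function names are assumptions made for the example, and categories and trusted subjects are left out.

```python
# Hypothetical level ordering; a higher number means more sensitive.
LEVELS = {"unclassified": 0, "confidential": 1, "secret": 2, "top_secret": 3}


def blp_can_read(subject_level: str, object_level: str) -> bool:
    """Simple security rule: no read up."""
    return LEVELS[subject_level] >= LEVELS[object_level]


def blp_can_write(subject_level: str, object_level: str) -> bool:
    """*-property rule: no write down."""
    return LEVELS[subject_level] <= LEVELS[object_level]


def blp_can_read_write(subject_level: str, object_level: str) -> bool:
    """Strong star property rule: read and write only at the same level."""
    return LEVELS[subject_level] == LEVELS[object_level]
```

For instance, a subject cleared to secret can read a confidential object but cannot write to it, because writing down could leak secret information into a confidential container.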

The main concern of the Bell-LaPadula security model and its use of these rules is confidentiality. Although its basic model is a mandatory access control (MAC) system, another property rule called the Discretionary Security Property (ds-property) makes a mixture of mandatory and discretionary controls possible. This property allows a subject to pass along permissions at its own discretion. In the discretionary portion of the model, access permissions are defined through an Access Control matrix using a process called authorization, and security policies prevent information flowing downward from a high security level to a low security level.

The Bell-LaPadula security model does have limitations. Among those are

• It contains no provision or policy for changing data access control. Therefore, it works well only with access systems that are static in nature.

• It does not address what are called covert channels. A low-level subject can sometimes detect the existence of a high-level object when it is denied access. Sometimes it is not enough to hide the content of an object; its existence might also have to be hidden.

• It concentrates on confidentiality at the expense of other concepts.

This security policy model was the basis for the Orange Book (discussed in the later section “System Evaluation”).

Biba Model

The Biba model came after the Bell-LaPadula model and shares many characteristics with it. These two models are the best known of the models discussed in this section. The Biba model is also a state machine model that uses a series of lattices or security levels, but it concerns itself with the integrity of information rather than its confidentiality. It does this by relying on a data classification system to prevent unauthorized modification of data. Subjects are assigned classes according to their trustworthiness; objects are assigned integrity labels according to the harm that would be done if the data were modified improperly.

Like the Bell-LaPadula model, it applies a series of properties or axioms to guide the protection of integrity. Its effect is that data must not flow from a receptacle of given integrity to a receptacle of higher integrity. The axioms are as follows:

• * integrity axiom: A subject cannot write to a higher integrity level than that to which he has access (no write up).

• Simple integrity axiom: A subject cannot read from a lower integrity level than that to which the subject has access (no read down).

• Invocation property: A subject cannot invoke (request service from) a subject of higher integrity.
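The Biba axioms mirror the Bell-LaPadula rules with the comparisons reversed. A minimal sketch follows; the integrity level names and function names are assumptions chosen for the example.

```python
# Hypothetical integrity ordering; a higher number means more trustworthy.
INTEGRITY = {"low": 0, "medium": 1, "high": 2}


def biba_can_write(subject_level: str, object_level: str) -> bool:
    """* integrity axiom: no write up."""
    return INTEGRITY[subject_level] >= INTEGRITY[object_level]


def biba_can_read(subject_level: str, object_level: str) -> bool:
    """Simple integrity axiom: no read down."""
    return INTEGRITY[subject_level] <= INTEGRITY[object_level]


def biba_can_invoke(caller_level: str, callee_level: str) -> bool:
    """Invocation property: no requesting service from higher integrity."""
    return INTEGRITY[caller_level] >= INTEGRITY[callee_level]
```

Taken together, the checks keep less trustworthy data from contaminating more trustworthy data, which is the data-flow effect described before the list.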

Clark-Wilson Integrity Model

Developed after the Biba model, this model also concerns itself with data integrity. The model describes a series of elements that are used to control the integrity of data as listed here:

• User: An active agent

• Transformation procedure (TP): An abstract operation, such as read, write, and modify, implemented through programming

• Constrained data item (CDI): An item that can only be manipulated through a TP

• Unconstrained data item (UDI): An item that can be manipulated by a user via read and write operations

• Integrity verification procedure (IVP): A check of the consistency of data with the real world

This model enforces these elements by only allowing data to be altered through programs and not directly by users. Rather than employing a lattice structure, it uses a three-part relationship of subject/program/object known as a triple. It also sets as its goal the concepts of separation of duties and well-formed transactions.

• Separation of duties: This concept ensures that certain operations require additional verification.

• Well-formed transaction: This concept ensures that all values are checked before and after the transaction by carrying out particular operations to complete the change of data from one state to another.

To ensure that integrity is attained and preserved, the Clark-Wilson model asserts that both integrity-monitoring and integrity-preserving rules are needed. Integrity-monitoring rules are called certification rules, and integrity-preserving rules are called enforcement rules.
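The interaction of the access triple, transformation procedures, and integrity verification can be sketched as follows. This is only an illustration under simplifying assumptions: the user name, the `deposit` procedure, the single data item, and the "no negative balance" check are all hypothetical.

```python
# Hypothetical access triples: (user, transformation procedure, CDI).
ALLOWED_TRIPLES = {("teller", "deposit", "account_balance")}

# Constrained data items, changed only through run_tp below.
cdis = {"account_balance": 100}


def ivp(state: dict) -> bool:
    """Integrity verification procedure: no balance may be negative."""
    return all(value >= 0 for value in state.values())


def deposit(state: dict, cdi: str, amount: int) -> dict:
    """Transformation procedure: returns a new state, never edits in place."""
    new_state = dict(state)
    new_state[cdi] += amount
    return new_state


TPS = {"deposit": deposit}


def run_tp(user: str, tp_name: str, cdi: str, amount: int) -> int:
    # Enforcement: the (user, TP, CDI) triple must be authorized.
    if (user, tp_name, cdi) not in ALLOWED_TRIPLES:
        raise PermissionError(f"{user} may not run {tp_name} on {cdi}")
    candidate = TPS[tp_name](cdis, cdi, amount)
    # Well-formed transaction: the state must be valid before and after.
    if not (ivp(cdis) and ivp(candidate)):
        raise ValueError("transaction would leave data inconsistent")
    cdis.update(candidate)
    return cdis[cdi]
```

The user never touches `cdis` directly; every change goes through `run_tp`, which is the subject/program/object relationship the model calls a triple.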

Lipner Model

The Lipner model is an implementation that combines elements of the Bell-LaPadula model and the Biba model. The first way of implementing integrity with the Lipner model uses Bell-LaPadula and assigns subjects to one of two sensitivity levels (system manager and anyone else) and to one of four job categories. Objects are assigned specific levels and categories. Categories become the most significant integrity (such as access control) mechanism. The second implementation uses both Bell-LaPadula and Biba. This method prevents unauthorized users from modifying data and prevents authorized users from making improper data modifications. Both implementations also share a characteristic with the Clark-Wilson model in that they separate objects into data and programs.

Brewer-Nash (Chinese Wall) Model

This model introduced the concept of allowing access controls to change dynamically based on a user’s previous actions. One of its goals is to do this while protecting against conflicts of interest. This model is also based on an information flow model. Implementation involves grouping data sets into discrete classes, each class representing a different conflict of interest. Isolating data sets within a class makes it possible to keep one department’s data separate from another’s in an integrated database.
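The history-dependent nature of this model is easiest to see in code. The sketch below is a simplified illustration that tracks reads only; the data set names, conflict-of-interest classes, and class name are assumptions for the example.

```python
# Hypothetical mapping of data sets to conflict-of-interest classes.
CONFLICT_CLASSES = {
    "bank_a": "banking",
    "bank_b": "banking",
    "oil_x": "energy",
}


class ChineseWall:
    def __init__(self) -> None:
        # user -> {conflict class: the one data set already accessed in it}
        self.history: dict = {}

    def request(self, user: str, dataset: str) -> bool:
        """Grant access unless the user already touched a competitor's data."""
        conflict_class = CONFLICT_CLASSES[dataset]
        seen = self.history.setdefault(user, {})
        if seen.get(conflict_class, dataset) != dataset:
            return False  # a wall now stands between the user and this data set
        seen[conflict_class] = dataset
        return True
```

Initially a user can choose any data set, but the first access within a conflict class determines which data sets in that class remain available afterward.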

Graham-Denning Model

The Graham-Denning model attempts to address an issue ignored by the Bell-LaPadula (with the exception of the Discretionary Security Property) and Biba models: the delegation and transfer of rights. It focuses on issues such as the following:

• Securely creating and deleting objects and subjects

• Securely providing or transferring access rights

Harrison-Ruzzo-Ullman Model

This model deals with access rights as well. It restricts the set of operations that can be performed on an object to a finite set to ensure integrity. It is used by software engineers to prevent unforeseen vulnerabilities from being introduced by overly complex operations.

Security Modes

In MAC systems, the system operates in different security modes at various times based on variables such as the sensitivity of the data, the clearance level of the user, and the actions the user is authorized to take. This section describes these modes.

Dedicated Security Mode

A system is operating in dedicated security mode if it employs a single classification level. In this system all users can access all data, but they must sign an NDA and be formally approved for access on a need-to-know basis.

System High Security Mode

In a system operating in system high security mode, all users have the same security clearance (as in the dedicated security mode), but they do not all possess a need-to-know clearance for all the information in the system. Consequently, although a user might have clearance to access an object, he still might be restricted if he does not have need-to-know clearance pertaining to the object.

Compartmented Security Mode

In a system operating in compartmented security mode, all users must possess the highest security clearance (as in both dedicated and system high security), but they must also have valid need-to-know clearance, a signed NDA, and formal approval for all information to which they have access. The objective is to ensure that the minimum number of people possible have access to information at each level or compartment.

Multilevel Security Mode

When a system allows two or more classification levels of information to be processed at the same time, it is said to be operating in multilevel security mode. Users must have a signed NDA for all the information in the system and will have access to subsets based on their clearance level, need-to-know, and formal access approval. These systems involve the highest risk because information is processed at more than one level of security even when not all system users have appropriate clearances or a need-to-know for all information processed by the system. This is also sometimes called controlled security mode. Table 7-2 shows a comparison of the four security modes and their requirements.

Table 7-2. Security Mode Summary

Assurance

Whereas a trust level describes the protections that can be expected from a system, the assurance level refers to the level of confidence that the protections will operate as planned. Typically, higher levels of assurance are achieved by dedicating more scrutiny to security in the design process. The next section discusses various methods of rating systems for trust levels and assurance.

System Evaluation

In an attempt to bring order to the security chaos that surrounds both in-house and commercial software products (operating systems, applications, and so on), several evaluation methods have been created to assess and rate the security of these products. An assurance level examination attempts to examine the security-related components of a system and assign a level of confidence that the system can provide a particular level of security. The following sections discuss organizations that have created such evaluation systems.

TCSEC

The Trusted Computer System Evaluation Criteria (TCSEC) was developed by the National Computer Security Center (NCSC) for the U.S. Department of Defense to evaluate products. The NCSC issued a series of books focusing on both computer systems and the networks in which they operate. TCSEC addresses confidentiality but not integrity. In 2005, TCSEC was replaced by the Common Criteria, discussed later in the chapter. However, security professionals still need to understand TCSEC because of its effect on security practices today and because some of its terminology is still in use.

With TCSEC, functionality and assurance are bundled into a single rating, which forms a basis for assessing the effectiveness of security controls built into automatic data-processing system products. For example, the concept of least privilege is derived from TCSEC. This section discusses those books and the ratings they define.

Rainbow Series

The original TCSEC publication was the Orange Book (discussed in the next section), but as time went by other books were also created that focused on additional aspects of the security of computer systems. Collectively this set of more than 20 books is now referred to as the Rainbow Series, alluding to the fact that each book is a different color. For example, the Green Book focuses solely on password management. The balance of this section covers the most important books, the Red Book and the Orange Book.

Orange Book

The Orange Book is a collection of criteria based on the Bell-LaPadula model that is used to grade or rate the security offered by a computer system product. Covert channel analysis, trusted facility management, and trusted recoveries are concepts discussed in this book. As an example of specific guidelines, it recommends that diskettes be formatted seven times to prevent any possibility of data remaining.

The goals of this system can be divided into two categories, operational assurance requirements and life cycle assurance requirements, the details of which are defined next.

The operational assurance requirements specified in the Orange Book are as follows:

• System integrity

• Covert channel analysis

• Trusted facility management

• Trusted recovery

The life cycle assurance requirements specified in the Orange Book are as follows:

• Security testing

• Design specification and testing

• Configuration management

• Trusted distribution

TCSEC uses a classification system that assigns a letter and number to describe a system’s security effectiveness. The letter refers to a security assurance level or division, of which there are four, and the number refers to gradients within that security assurance level or class. Each division and class incorporates all the required elements of the ones below it. In order from least secure to most secure, the four divisions and their constituent classes and requirements are as follows.

D — Minimal protection

Reserved for those systems that have been evaluated but that fail to meet the requirements for a higher division

C — Discretionary protection

C1 — Discretionary Security Protection

• Requires identification and authentication.

• Requires separation of users and data.

• Uses Discretionary Access Control (DAC) capable of enforcing access limitations on an individual or group basis

• Requires system documentation and user manuals.

C2 — Controlled Access Protection

• Uses a more finely grained DAC.

• Provides individual accountability through login procedures.

• Requires protected audit trails.

• Invokes object reuse theory.

• Requires resource isolation.

B — Mandatory protection

B1 — Labeled Security Protection

• Uses an informal statement of the security policy.

• Requires data sensitivity or classification labels.

• Uses Mandatory Access Control (MAC) over selected subjects and objects.

• Is capable of label exportation.

• Requires removal or mitigation of discovered flaws.

• Uses design specifications and verification.

B2 — Structured Protection

• Requires a clearly defined and formally documented security policy.

• Uses DAC and MAC enforcement extended to all subjects and objects.

• Analyzes and prevents covert storage channels for occurrence and bandwidth.

• Structures elements into protection-critical and non–protection-critical categories.

• Enables more comprehensive testing and review through design and implementation.

• Strengthens authentication mechanisms.

• Provides trusted facility management with administrator and operator segregation.

• Imposes strict configuration management controls.

B3 — Security Domains

• Satisfies reference monitor requirements.

• Excludes code not essential to security policy enforcement.

• Minimizes complexity through significant system engineering.

• Defines the security administrator role.

• Requires an audit of security-relevant events.

• Automatically detects and responds to imminent intrusions, including notifying personnel.

• Requires trusted system recovery procedures.

• Analyzes and prevents covert timing channels for occurrence and bandwidth.

• An example of such a system is the XTS-300, a precursor to the XTS-400.

A — Verified protection

A1 — Verified Design

• Provides higher assurance than B3, but is functionally identical to B3.

• Uses formal design and verification techniques, including a formal top-level specification.

• Requires that formal techniques are used to prove the equivalence between the TCB specifications and the security policy model.

• Provides formal management and distribution procedures.

• An example of such a system is Honeywell’s Secure Communications Processor SCOMP, a precursor to the XTS-400.
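Because each division and class incorporates the requirements of those below it, the ratings form a simple ordered scale. The sketch below shows one way to compare a product’s rating against a requirement; the function names are assumptions made for the example.

```python
# TCSEC classes in order from least secure to most secure.
RATINGS = ["D", "C1", "C2", "B1", "B2", "B3", "A1"]


def division(rating: str) -> str:
    """The letter portion of a rating, for example 'B' for 'B2'."""
    return rating[0]


def meets(achieved: str, required: str) -> bool:
    """True if the achieved rating is at or above the required rating."""
    return RATINGS.index(achieved) >= RATINGS.index(required)
```

A B2 system, for example, satisfies a C2 requirement because the B2 criteria include everything required at C2.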

Red Book

The Trusted Network Interpretation (TNI), known as the Red Book, extends the evaluation classes of the Trusted Computer System Evaluation Criteria (DOD 5200.28-STD) to trusted network systems and components. So where the Orange Book focuses on security for a single system, the Red Book addresses network security.

ITSEC

TCSEC addresses confidentiality only and bundles functionality and assurance. In contrast to TCSEC, the Information Technology Security Evaluation Criteria (ITSEC) addresses integrity and availability as well as confidentiality. Another difference is that the ITSEC was mainly a set of guidelines used in Europe, whereas the TCSEC was relied on more in the United States.

ITSEC has a rating system in many ways similar to that of TCSEC. ITSEC has 10 classes, F1 to F10, to evaluate the functional requirements and 7 classes, E0 to E6, to evaluate the assurance requirements. The topics covered are listed next.

Security functional requirements include the following:

• F00: Identification and authentication

• F01: Audit

• F02: Resource utilization

• F03: Trusted paths/channels

• F04: User data protection

• F05: Security management

• F06: Product access

• F07: Communications

• F08: Privacy

• F09: Protection of the product’s security functions

• F10: Cryptographic support

Security assurance requirements include the following:

• E00: Guidance documents and manuals

• E01: Configuration management

• E02: Vulnerability assessment

• E03: Delivery and operation

• E04: Life-cycle support

• E05: Assurance maintenance

• E06: Development

• Testing

The two systems can be mapped to one another, but the ITSEC provides a number of ratings that have no corresponding concept in the TCSEC ratings. Table 7-3 shows a mapping of the two systems.

Table 7-3. Mapping of ITSEC and TCSEC

The ITSEC has been largely replaced by Common Criteria, discussed in the next section.

Common Criteria

In 1990 the ISO identified the need for a standardized rating system that could be used globally. The Common Criteria (CC) was the result of a cooperative effort to establish this system. This system uses Evaluation Assurance Levels (EALs) to rate systems, with each level representing a successively higher level of security testing and design in a system. The resulting rating represents the potential the system has to provide security. It assumes that the customer will properly configure all available security solutions, so the vendor is required to always provide proper documentation to allow the customer to fully achieve the rating. ISO/IEC 15408-1:2009 is the International Standards version of CC.

CC represents requirements for IT security of a product or system in two categories: functionality and assurance. This means that the rating should describe what the system does (functionality), and the degree of certainty the raters have that the functionality can be provided (assurance).

CC has seven assurance levels, which range from EAL1 (lowest), where functionality testing takes place, through EAL7 (highest), where thorough testing is performed and the system design is verified. The assurance designators used in the CC are as follows:

• EAL1: Functionally tested

• EAL2: Structurally tested

• EAL3: Methodically tested and checked

• EAL4: Methodically designed, tested, and reviewed

• EAL5: Semi-formally designed and tested

• EAL6: Semi-formally verified design and tested

• EAL7: Formally verified design and tested

CC uses a concept called a protection profile during the evaluation process. The protection profile describes a set of security requirements or goals along with functional assumptions about the environment. Therefore, if someone identified a security need not currently addressed by any products, he could write a protection profile that describes the need and the solution and all issues that could go wrong during the development of the system. This would be used to guide the development of a new product. A protection profile contains the following elements:

• Descriptive elements: The name of the profile and a description of the security problem that is to be solved.

• Rationale: Justification of the profile and a more detailed description of the real-world problem to be solved. The environment, usage assumptions, and threats are given along with security policy guidance that can be supported by products and systems that conform to this profile.

• Functional requirements: Establishment of a protection boundary, meaning the threats or compromises that are within this boundary to be countered. The product or system must enforce the boundary.

• Development assurance requirements: Identification of the specific requirements that the product or system must meet during the development phases, from design to implementation.

• Evaluation assurance requirements: Establishment of the type and intensity of the evaluation.

The result of following this process will be a security target. This is the vendor’s explanation of what the product brings to the table from a security standpoint. Intermediate groupings of security requirements developed along the way to a security target are called packages.

Certification and Accreditation

Although the terms are used as synonyms in casual conversation, accreditation and certification are two different but closely related concepts in the context of assurance levels and ratings. Certification evaluates the technical system components, whereas accreditation occurs when the adequacy of a system’s overall security is accepted by management.

The National Information Assurance Certification and Accreditation Process (NIACAP) provides a standard set of activities, general tasks, and a management structure to certify and accredit systems that will maintain the information assurance and security posture of a system or site. The accreditation process developed by NIACAP has four phases:

• Phase 1: Definition

• Phase 2: Verification

• Phase 3: Validation

• Phase 4: Post Accreditation

NIACAP defines the following three types of accreditation:

• Type accreditation evaluates an application or system that is distributed to a number of different locations.

• System accreditation evaluates an application or support system.

• Site accreditation evaluates the application or system at a specific self-contained location.

Security Architecture Maintenance

Unfortunately, after a product has been evaluated, certified, and accredited, the story is not over. The product typically evolves over time as updates and patches are developed to either address new security issues that arise or to add functionality or fix bugs. When these changes occur, as ongoing maintenance, the security architecture must be maintained.

Ideally, solutions should undergo additional evaluations, certification, and accreditation as these changes occur, but in many cases the pressures of the real world prevent this time-consuming step. This is unfortunate because as developers fix and patch things, they often drift further and further from the original security design as they attempt to put out time-sensitive fires.

This is where maturity modeling becomes important. Most maturity models are based on the Software Engineering Institute’s CMMI, which is discussed in Chapter 5, “Software Development Security.” It has five levels: initial, managed, defined, quantitatively managed, and optimizing.

The IT Governance Institute (ITGI) developed the Information Security Governance Maturity Model to rank organizations against industry best practices and international guidelines. It includes six rating levels, numbered from zero to five: nonexistent, initial, repeatable, defined, managed, and optimized. The nonexistent level does not correspond to any CMMI level, but all the other levels do.

Security Architecture Threats

Despite all efforts to design a secure architecture, attacks on the security system still occur and still succeed. In this section we examine some of the more common types of attacks. Some of these have already been mentioned in passing or in the process of explaining the need for a particular protection mechanism.

Maintenance Hooks

From the perspective of software development, a maintenance hook is a set of instructions built into the code that allows someone who knows about the so-called “back door” to connect and to view and edit the code without using the normal access controls. In many cases they are placed there to make it easier for the vendor to provide support to the customer. In other cases they are placed there to assist in testing and tracking the activities of the product and are never removed.

Note

A maintenance account is often confused with a maintenance hook. A maintenance account is a backdoor account created by programmers to give someone full permissions in a particular application or operating system. A maintenance account can usually be deleted or disabled easily, but a true maintenance hook is often a hidden part of the programming and much harder to disable. Both of these can cause security issues because many attackers try the documented maintenance hooks and maintenance accounts first. You would be surprised at the number of computers attacked on a daily basis because these two security issues are left unaddressed.

Regardless of how they got into the code, they can present a major security issue if they become known to hackers who can use them to access the system. Countermeasures on the part of the customer to mitigate the danger include the following:

• Use a host IDS to record any attempt to access the system using one of these hooks.

• Encrypt all sensitive information contained in the system.

• Implement auditing to supplement the IDS.

The best solution is for the vendor to remove all maintenance hooks before the product goes into production. Code reviews should be performed to identify and remove these hooks.

Time-of-Check/Time-of-Use Attacks

Time-of-check/time-of-use attacks attempt to take advantage of the sequence of events that occurs as the system completes common tasks. They rely on knowledge of the dependencies present when a specific series of events occurs in multiprocessing systems. By attempting to insert himself between events and introduce changes, the hacker can gain control of the result.

A term often used as a synonym for a time-of-check/time-of-use attack is a race condition, which is actually a different attack. In a race condition attack, the hacker inserts himself between instructions, introduces changes, and alters the order of execution of the instructions, thereby altering the outcome.

The countermeasure to these attacks is to make critical sets of instructions atomic. This means that they either execute in order and in their entirety or the changes they make are rolled back or prevented. It is also best for the system to lock access to certain items it will use or touch when carrying out these sets of instructions.
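As a minimal sketch of the locking countermeasure, the example below keeps the check (is the balance sufficient?) and the use (debit the balance) inside a single locked section so that no other thread can act between them. The `Account` class and the helper function are hypothetical and exist only to illustrate the idea.

```python
import threading


class Account:
    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # The check and the update happen under one lock, so they are
        # atomic with respect to other threads calling withdraw().
        with self._lock:
            if self.balance >= amount:   # time of check
                self.balance -= amount   # time of use
                return True
            return False


def run_withdrawals(account: Account, amount: int, attempts: int) -> list:
    """Fire several concurrent withdrawals and collect their outcomes."""
    results = []

    def worker() -> None:
        results.append(account.withdraw(amount))

    threads = [threading.Thread(target=worker) for _ in range(attempts)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Without the lock, two threads could both pass the balance check before either one debits the account, which is exactly the gap between check and use that the attack exploits.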

Web-based Attacks

Attacks on information security infrastructures have evolved steadily over time, and the latest attacks are largely sophisticated web application-based attacks. These attacks have proven more difficult to defend against with traditional approaches that rely on perimeter firewalls. All web application attacks operate by making at least one normal or modified request aimed at taking advantage of inadequate input validation or at spoofing parameters or instructions. In this section, two web markup languages are compared on their security merits, followed by a look at an organization that supports the informed use of security technology.

XML

Extensible Markup Language (XML) is a very widely used web language and has come under some criticism. The method currently used to sign data to verify its authenticity has been described as inadequate by some, and other criticisms have been directed at the architecture of XML security in general. In the next section an extension of this language that attempts to address some of these concerns is discussed.

SAML

Security Assertion Markup Language (SAML) is an XML-based open standard data format for exchanging authentication and authorization data between parties, in particular, between an identity provider and a service provider. The major issue on which it focuses is called the web browser single sign-on (SSO) problem.

As you might recall, SSO, which is discussed in Chapter 2, is the ability to authenticate once to access multiple sets of data. SSO at the intranet level is usually accomplished with cookies, but extending the concept beyond the intranet has resulted in many proprietary approaches that are not interoperable. SAML’s goal is to create a standard for this process.

OWASP

The Open Web Application Security Project (OWASP) is an open-source application security project. This group creates guidelines, testing procedures, and tools to assist with web security. It is also known for maintaining a top-ten list of web application security risks.

Server-Based Attacks

In many cases an attack focuses on the operations of the server operating system itself rather than the web applications running on top of it. In this section we look at the way in which these attacks are implemented, focusing mainly on the issue of data flow manipulation.

Data Flow Control

Software attacks often subvert the intended dataflow of a vulnerable program. For example, attackers exploit buffer overflows (discussed in Chapter 2 and Chapter 5) and format string vulnerabilities to write data to unintended locations. The ultimate aim is either to read data from prohibited locations or write data to memory locations for the purpose of executing commands, crashing the system or making malicious changes to the system. As you have already learned, the proper mitigation for these types of attacks is proper input validation and data flow controls that are built into the system.
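The input validation mentioned above can be sketched as follows. Python is memory-safe, so this is only an illustration of the principle rather than a real overflow scenario; `BUFFER_SIZE`, `store_input`, and `format_log_line` are hypothetical names introduced for the example. The first function refuses input that would not fit in a fixed-size destination, and the second treats user text strictly as data and never as the format string itself.

```python
BUFFER_SIZE = 16  # hypothetical fixed capacity of the destination buffer


def store_input(buffer: bytearray, user_data: bytes) -> None:
    """Copy user_data into buffer only after checking that it fits."""
    if len(user_data) > len(buffer):
        # Reject oversized input instead of writing past the intended area.
        raise ValueError("input longer than destination buffer")
    buffer[:len(user_data)] = user_data


def format_log_line(user_text: str) -> str:
    """Build a log line with user text passed as an argument.

    The format string is a constant chosen by the programmer, so format
    directives inside user_text are never interpreted.
    """
    return "user said: {}".format(user_text)
```

In a language such as C, the same length check would sit in front of the copy into a fixed-size array, and the format string passed to the output function would likewise be a constant.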

With respect to databases in particular, a dataflow architecture is one that delivers the instruction tokens to the execution units and returns the data tokens to the content addressable memory. (CAM is hardware memory, not the same as RAM.) In contrast to the conventional architecture, data tokens are not permanently stored in memory; rather they are transient messages that only exist when in transit to the instruction storage. This makes them less likely to be compromised.

Database Security

In many ways the database is the Holy Grail for the attacker. It is typically where the sensitive information resides. Some specific database attacks are reviewed briefly in this section.

Inference

Inference occurs when someone has access to information at one level that allows them to infer information about another level. The main mitigation technique for inference is polyinstantiation, which is the development of a detailed version of an object from another object using different values in the new object. It prevents low-level database users from inferring the existence of higher level data.
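Polyinstantiation can be pictured as a table that holds several versions of the same record, one per security level. The sketch below is a simplified illustration under assumed names (`LEVELS`, `PolyinstantiatedTable`, and the sample data are all hypothetical); real multilevel databases implement this inside the database engine.

```python
from typing import Dict, Optional, Tuple

# Hypothetical classification levels, ordered from low to high.
LEVELS = {"unclassified": 0, "secret": 1, "top_secret": 2}


class PolyinstantiatedTable:
    """Stores one version of a record per (key, classification level)."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, int], str] = {}

    def insert(self, key: str, level: str, value: str) -> None:
        # The level is part of the primary key, so the same key can exist
        # at several levels with different values.
        self._rows[(key, LEVELS[level])] = value

    def read(self, key: str, clearance: str) -> Optional[str]:
        """Return the highest-level version the reader is cleared to see."""
        limit = LEVELS[clearance]
        visible = [
            (lvl, val)
            for (k, lvl), val in self._rows.items()
            if k == key and lvl <= limit
        ]
        if not visible:
            return None
        return max(visible, key=lambda pair: pair[0])[1]


table = PolyinstantiatedTable()
table.insert("ship_42", "unclassified", "cargo: food")
table.insert("ship_42", "top_secret", "cargo: weapons")
```

A reader with `unclassified` clearance who calls `table.read("ship_42", "unclassified")` receives the low-level version and gets no error or gap hinting that a higher-level version exists.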

Aggregation

Aggregation is defined as assembling or compiling units of information at one sensitivity level and having the resultant totality of data being of a higher sensitivity level than the individual components. So you might think of aggregation as a different way of achieving the same goal as inference, which is to learn information about data on a level to which one does not have access.

Contamination

Contamination is the intermingling or mixing of data of one sensitivity or need-to-know level with that of another. Proper implementation of security levels is the best defense against these problems.

Data Mining Warehouse

A data warehouse is a repository of information from heterogeneous databases. It allows multiple sources of data not only to be stored in one place but also to be organized in such a way that redundancy of data is reduced (called data normalizing) and more sophisticated data mining tools can be used to manipulate the data to discover relationships that may not have been apparent before. Along with the benefits they provide, data warehouses also present more security challenges.

The following are control steps that should be performed in data warehousing applications:

• Monitor summary tables for regular use.

• Monitor the data purging plan.

• Reconcile data moved between the operations environment and data warehouse.
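The reconciliation step in the list above can be sketched as a comparison of row counts and content digests between the two environments. This is one possible approach under stated assumptions, not a prescribed method: the names `fingerprint` and `reconcile` are hypothetical, and rows are assumed to be tuples of strings already extracted from each system.

```python
import hashlib
from typing import Iterable, Tuple

Row = Tuple[str, ...]


def fingerprint(rows: Iterable[Row]) -> Tuple[int, str]:
    """Return (row count, order-independent digest) for a set of rows."""
    # Hash each row, using a separator that is unlikely to appear in the data.
    digests = sorted(
        hashlib.sha256("\x1f".join(row).encode("utf-8")).hexdigest()
        for row in rows
    )
    # Sorting the per-row digests makes the result independent of row order.
    combined = hashlib.sha256("".join(digests).encode("ascii")).hexdigest()
    return len(digests), combined


def reconcile(source_rows: Iterable[Row], warehouse_rows: Iterable[Row]) -> bool:
    """True when both sides hold the same rows, regardless of order."""
    return fingerprint(source_rows) == fingerprint(warehouse_rows)
```

A mismatch indicates only that something was lost, added, or altered in transit; finding which rows differ would require comparing the per-row digests.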

Distributed Systems Security

Some specific security issues need discussion when operating in certain distributed environments. This section covers three special cases in which additional security concerns might be warranted.

Cloud Computing

Cloud computing is the centralization of data in a web environment that can be accessed from anywhere anytime. An organization can create a cloud environment (private cloud) or it can pay a vendor to provide this service (public cloud). While this arrangement offers many benefits, using a public cloud introduces all sorts of security concerns. How do you know your data is kept separate from other customers? How do you know your data is safe? It makes many uncomfortable to outsource the security of their data.

Grid Computing

Grid computing is the process of harnessing the CPU power of multiple physical machines to perform a job. In some cases individual systems might be allowed to leave and rejoin the grid. Although the advantage of additional processing power is great, there has to be concern for the security of data that could be present on machines that are entering and leaving the grid. Therefore, grid computing is not a safe implementation when secrecy of the data is a key issue.

Peer-to-Peer Computing

Any client-server solution in which any platform may act as a client or server or both is called peer-to-peer computing. A widely used example of this is instant messaging. These implementations present security issues that do not present themselves in a standard client-server arrangement. In many cases these systems operate outside the normal control of the network administrators.

Problems this can present are:

• Viruses, worms, and Trojan horses can be sent through this entry point to the network.

• In many cases, lack of strong authentication allows for account spoofing.

• Buffer overflow attacks and attacks using malformed packets can sometimes be successful.

If these systems must be tolerated in the environment, the following guidelines should be followed:

• Security policies should address the proper use of these applications.

• All systems should have a firewall and antivirus products installed.

• Configure firewalls to block unwanted IM traffic.

• If possible only allow products that provide encryption.

Exam Preparation Tasks

Key Topics

Review the most important topics in this chapter, noted with the Key Topics icon in the outer margin of the page. Table 7-4 lists a reference of these key topics and the page numbers on which each is found.

Define Key Terms

Define the following key terms from this chapter and check your answers in the glossary:

confidentiality

integrity

availability

defense in depth

architectural description (AD)

view

middleware

embedded system

mobile code

flash memory

FPGA

firmware

Very Long Instruction Word processor

multitasking

Zachman framework

TOGAF

Sherwood Applied Business Security Architecture (SABSA)

ISO/IEC 27000

COBIT

state machine models

multilevel lattice models

noninterference models

information flow model

Bell-LaPadula model

Biba model

Clark-Wilson Integrity model

Lipner model

Brewer-Nash (Chinese Wall) model

Graham–Denning model

Harrison-Ruzzo-Ullman model

Trusted Computer System Evaluation Criteria (TCSEC)

Information Technology Security Evaluation Criteria (ITSEC)

Common Criteria

certification

time-of-check/time-of-use attacks

Extensible Markup Language (XML)

Security Assertion Markup Language (SAML)

Open Web Application Security Project (OWASP)

data warehouse

cloud computing

grid computing

Review Questions

1. Which of the following is provided if the data cannot be read?

a. integrity

b. confidentiality

c. availability

d. defense in depth

2. Which of the following ISO/IEC 42010:2011 terms is the set of documents that convey the architecture in a formal manner?

a. architecture

b. stakeholder

c. architectural description

d. view

3. In a distributed environment, which of the following is software that ties the client and server software together?

a. embedded systems

b. mobile code

c. virtual computing

d. middleware

4. Which of the following is a relatively small amount of very high speed RAM, which holds the instructions and data from primary memory?

a. cache

b. firmware

c. flash memory

d. FPGA

5. In which CPU mode are the processors or cores handed work on a round-robin basis, thread by thread?

a. Cache mode

b. Symmetric mode

c. Asymmetric mode

d. Overlap mode

6. Which of the following comprises the components (hardware, firmware, and/or software) that are trusted to enforce the security policy of the system?

a. security perimeter

b. reference monitor

c. trusted computing base

d. security kernel

7. Which of the following is the dividing line between the trusted parts of the system and those that are untrusted?

a. security perimeter

b. reference monitor

c. trusted computing base

d. security kernel

8. Which of the following is a system component that enforces access controls on an object?

a. security perimeter

b. reference monitor

c. trusted computing base

d. security kernel

9. Which of the following is the hardware, firmware, and software elements of a trusted computing base that implements the reference monitor concept?

a. security perimeter

b. reference monitor

c. trusted computing base

d. security kernel

10. Which of the following frameworks is a two-dimensional model that intersects communication interrogatives (what, why, where, and so on) with various viewpoints (planner, owner, designer, and so on)?

a. SABSA

b. Zachman framework

c. TOGAF

d. ITIL

Answers and Explanations

1. b. Confidentiality is provided if the data cannot be read. This can be provided either through access controls and encryption for data as it exists on a hard drive or through encryption as the data is in transit.

2. c. ISO/IEC 42010:2011 uses specific terminology when discussing architectural frameworks. Architectural description (AD) comprises the set of documents that convey the architecture in a formal manner.

3. d. In a distributed environment, middleware is software that ties the client and server software together. It is neither a part of the operating system nor a part of the server software. It is the code that lies between the operating system and applications on each side of a distributed computing system in a network.

4. a. Cache is a relatively small amount (when compared to primary memory) of very high speed RAM that holds the instructions and data from primary memory that have a high probability of being accessed during the currently executing portion of a program.

5. b. In symmetric mode the processors or cores are handed work on a round-robin basis, thread by thread.

6. c. The TCB comprises the components (hardware, firmware, and/or software) that are trusted to enforce the security policy of the system and that if compromised jeopardize the security properties of the entire system.

7. a. The security perimeter is the dividing line between the trusted parts of the system and those that are untrusted. According to security design best practices, components that lie within this boundary (which means they lie within the TCB) should never permit untrusted components to access critical resources in an insecure manner.

8. b. A reference monitor is a system component that enforces access controls on an object. It is an access control concept that refers to an abstract machine that mediates all accesses to objects by subjects.