In today's world of web-based and cloud services, it is also possible to persist both datasets and machine learning models to online cloud storage. This is a great solution when dealing with enormous amounts of data, since cloud solutions take care of both the storage and the processing of huge amounts of data.

BigML (http://bigml.com/) is a cloud provider of machine learning resources. Internally, BigML uses Classification and Regression Trees (CARTs) as its machine learning model. CARTs are a specialization of decision trees (for more information, refer to Top-down Induction of Decision Trees Classifiers - A Survey).

BigML provides developers with a simple REST API that can be used to work with the service from any language or platform that can send HTTP requests. The service supports several file formats, such as CSV (comma-separated values), Excel spreadsheets, and the Weka library's ARFF format, as well as a variety of data compression formats, such as TAR and GZIP. The service also takes a white-box approach, in the sense that models can be downloaded and used locally, in addition to being used for predictions through the online web interface.

There are bindings for BigML in several languages, and we will demonstrate a Clojure client library for BigML in this section. As with other cloud services, users and developers of BigML must first register for an account. They can then use this account and a provided API key to access BigML from a client library. A new BigML account provides a few example datasets to experiment with, including the Iris dataset that we've frequently encountered in this book.

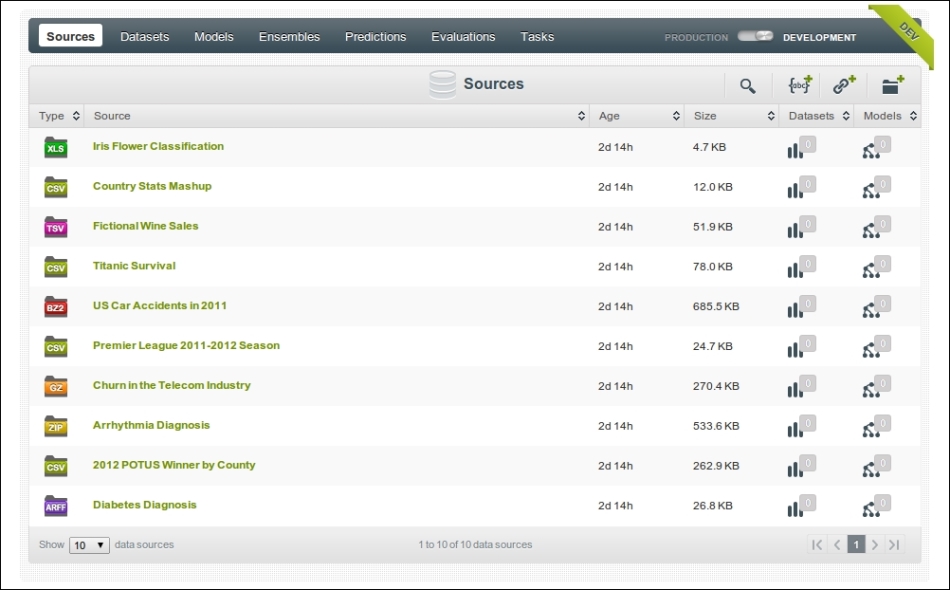

The dashboard of a BigML account provides a simple web-based user interface for all the resources available to the account.

BigML resources include sources, datasets, models, predictions, and evaluations. We will discuss each of these resources in the upcoming code example.

Note

The BigML Clojure library can be added to a Leiningen project by adding the following dependency to the project.clj file:

[bigml/clj-bigml "0.1.0"]

For the upcoming example, the namespace declaration should look similar to the following declaration:

(ns my-namespace
  (:require [bigml.api [core :as api]
                       [source :as source]
                       [dataset :as dataset]
                       [model :as model]
                       [prediction :as prediction]
                       [evaluation :as evaluation]]))

First, we have to provide authentication details for the BigML service. This is done using the make-connection function from the bigml.api.core namespace (aliased as api). We must pass make-connection a username, an API key, and a flag indicating whether to use BigML's development mode or production mode. Your username and API key are shown on your BigML account page. The connection is defined as shown in the following code:

(def default-connection
  (api/make-connection "my-username"                              ; username
                       "a3015d5fa2ee19604d8a69335a4ac66664b8b34b" ; API key
                       true))                                     ; development/production flag

To use the connection default-connection defined in the previous code, we must wrap our code in api/with-connection. We can avoid repeatedly writing with-connection together with default-connection by using a simple macro, as shown in the following code:

(defmacro with-default-connection [& body]
  `(api/with-connection default-connection
     ~@body))

In effect, using with-default-connection is equivalent to using with-connection with the default-connection binding, which helps us avoid repeating code.
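The sketch below shows the same macro pattern in a self-contained form that runs without a BigML account. The dynamic var *connection*, the with-connection macro, and the plain map standing in for a connection are hypothetical stand-ins written only for this illustration; they are not clj-bigml's implementation. What it demonstrates is that syntax quote (the backtick character) together with unquote-splicing (~@) rewrites (with-default-connection ...) into a with-connection call on default-connection before anything is evaluated.

```clojure
;; Hypothetical stand-ins: a dynamic var holding the "current" connection
;; and a with-connection macro that binds it. These are NOT clj-bigml code.
(def ^:dynamic *connection* nil)

(defmacro with-connection [conn & body]
  `(binding [*connection* ~conn]
     ~@body))

;; A plain map standing in for the value returned by api/make-connection.
(def default-connection {:username "my-username" :dev-mode true})

;; Same shape as the macro in the text: syntax quote plus unquote-splicing.
(defmacro with-default-connection [& body]
  `(with-connection default-connection
     ~@body))

;; Inside the macro, *connection* is bound to default-connection.
(println (with-default-connection (:username *connection*))) ; prints my-username

;; Outside it, the binding is gone again.
(println *connection*) ; prints nil

;; The macro only rewrites the form into a with-connection call.
(println (macroexpand-1 '(with-default-connection (:username *connection*))))
```

Because the stand-in uses binding, the connection is only visible for the dynamic extent of the body, which is why *connection* is nil again afterward.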

BigML uses the notion of sources to represent resources that can be converted to training data. BigML supports local files, remote files, and inline data as sources, and also supports multiple data types. To create a source, we can use the create function from the bigml.api.source namespace, as shown in the following code:

(def default-source
  (with-default-connection
    (source/create [["Make" "Model" "Year" "Weight" "MPG"]
                    ["AMC" "Gremlin" 1970 2648 21]
                    ["AMC" "Matador" 1973 3672 14]
                    ["AMC" "Gremlin" 1975 2914 20]
                    ["Honda" "Civic" 1974 2489 24]
                    ["Honda" "Civic" 1976 1795 33]])))

In the previous code, we define a source using some inline data. The data is a set of features of various car models, such as their year of manufacture and total weight. The last feature is the mileage, or MPG, of the car model. By convention, BigML sources treat the last column as the output, or objective, variable of the machine learning model.
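The last-column convention is easy to mirror locally, for instance to check which column will be treated as the objective before uploading data. The following sketch uses a hypothetical helper, split-objective, which is not part of clj-bigml; it simply separates the same inline rows into feature names, feature values, and the objective values.

```clojure
;; The same inline rows as the source above: a header row followed by
;; data rows whose last column is the objective (MPG).
(def car-rows
  [["Make" "Model" "Year" "Weight" "MPG"]
   ["AMC" "Gremlin" 1970 2648 21]
   ["AMC" "Matador" 1973 3672 14]
   ["AMC" "Gremlin" 1975 2914 20]
   ["Honda" "Civic" 1974 2489 24]
   ["Honda" "Civic" 1976 1795 33]])

(defn split-objective
  "Hypothetical helper: treats the last column of each row as the
  objective and the remaining columns as input features."
  [[header & rows]]
  {:objective-name (peek header)    ; last element of the header vector
   :feature-names  (pop header)     ; header without its last element
   :features       (mapv pop rows)
   :objective      (mapv peek rows)})

(println (:objective-name (split-objective car-rows))) ; prints MPG
(println (:objective (split-objective car-rows)))      ; prints [21 14 20 24 33]
```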

We must now convert the source to a BigML dataset, which is a structured and indexed representation of the raw data from a source. In a dataset, each feature of the data is assigned a unique ID. The dataset can then be used to train a CART model, which is simply termed a model in BigML jargon. We can create a dataset and a model using the dataset/create and model/create functions, respectively, as shown in the following code. We also have to use the api/get-final function to obtain the final, fully processed version of a resource that has been sent to the BigML cloud service for processing.

(def default-dataset

(with-default-connection

(api/get-final (dataset/create default-source))))

(def default-model

(with-default-connection

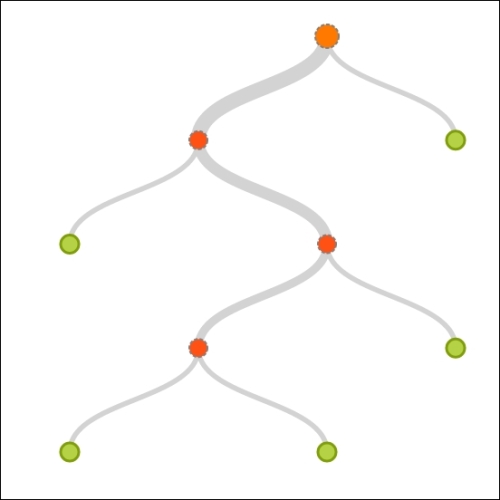

(api/get-final (model/create default-dataset))))BigML also provides an interactive visualization of a trained CART model. For our training data, the following visualization is produced:

We can now use the trained model to predict the value of the output variable. Each prediction is stored in the BigML cloud service and is shown in the Predictions tab of the dashboard. Predictions are created using the create function from the bigml.api.prediction namespace, as shown in the following code:

(def default-remote-prediction
  (with-default-connection
    (prediction/create default-model [1973 3672])))

In the previous code, we attempt to predict the MPG (miles per gallon, a measure of mileage) of a car model by passing values for its year of manufacture and weight to the prediction/create function. This function returns a map that contains, among other things, a :prediction key representing the predicted value of the output variable. The value of this key is another map, with column IDs as keys and their predicted values as values, as shown in the following code:

user> (:prediction default-remote-prediction)
{:000004 33}

The MPG column, which has the ID 000004, is predicted to have a value of 33 by the trained model, as shown in the previous code. The prediction/create function creates an online, or remote, prediction, and sends data to the BigML service whenever it is called. Alternatively, we can use the prediction/predictor function to download the model from the BigML service and obtain a function that performs predictions locally, as shown in the following code:

(def default-local-predictor
  (with-default-connection
    (prediction/predictor default-model)))

We can now use the downloaded function, default-local-predictor, to perform local predictions, as shown in the following REPL output:

user> (default-local-predictor [1983])
22.4
user> (default-local-predictor [1983] :details true)
{:prediction {:000004 22.4},
 :confidence 24.37119,
 :count 5, :id 0,
 :objective_summary
 {:counts [[14 1] [20 1] [21 1] [24 1] [33 1]]}}

As shown in the previous output, the local prediction function predicts the MPG of a car manufactured in 1983 as 22.4. We can also pass the :details keyword argument to the default-local-predictor function to obtain more information about the prediction.
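The numbers in the :details output are consistent with a simple reading: :id 0 and :count 5 suggest that the prediction came from the root node of the tree, which holds all five training rows, and the mean of the MPG values listed under :objective_summary is (14 + 20 + 21 + 24 + 33) / 5 = 112 / 5 = 22.4, which is exactly the predicted value. The following sketch checks this arithmetic on the REPL output, copied as plain data. It also assumes that column IDs are zero-padded, six-character hexadecimal strings, so that the fifth column (index 4) becomes :000004; that scheme is inferred from the output above, not taken from BigML's documentation.

```clojure
;; The :details map from the REPL session above, copied as plain data.
(def details
  {:prediction {:000004 22.4}
   :confidence 24.37119
   :count 5
   :id 0
   :objective_summary {:counts [[14 1] [20 1] [21 1] [24 1] [33 1]]}})

(defn objective-mean
  "Weighted mean of [value count] pairs, i.e. the average objective
  value of the training rows summarized in a node."
  [counts]
  (/ (reduce + (map (fn [[v n]] (* v n)) counts))
     (reduce + (map second counts))))

(defn column-id
  "Assumed ID scheme: a zero-padded six-character hexadecimal string,
  turned into a keyword to match the keys of the :prediction map."
  [index]
  (keyword (format "%06x" index)))

(def node-mean
  (double (objective-mean (get-in details [:objective_summary :counts]))))

(println node-mean)                                      ; prints 22.4
(println (get-in details [:prediction (column-id 4)]))   ; prints 22.4
```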

BigML also allows us to evaluate trained CART models. We will now train a model using the Iris dataset and then evaluate it. The evaluation/create function from the BigML library creates an evaluation from a trained model and some test data, and returns a map that contains all the evaluation information about the model.

In the previous code snippets, we used the api/get-final function at almost every stage of training a model. In the following code example, we will avoid repeating this function by using a macro. We first define a function that takes an arbitrary function and any number of arguments, applies the function to those arguments inside the with-default-connection macro, and finalizes the result using api/get-final.

(defn final-with-default-connection [f & xs]
  (with-default-connection
    (api/get-final (apply f xs))))

Using the final-with-default-connection function defined in the previous code, we can define a macro that wraps each function in a list of functions with it, as shown in the following code:

(defmacro get-final-> [head & body]
  (let [final-body (map list
                        (repeat 'final-with-default-connection)
                        body)]
    `(->> ~head
          ~@final-body)))

The get-final-> macro defined in the previous code uses the ->> threading macro to pass the value of the head argument through the functions in the body argument. The macro also wraps each function in the body argument with final-with-default-connection, so that the value returned at each step is finalized before it is passed on. We can now use the get-final-> macro to create a source, a dataset, and a model in a single expression, and then evaluate the model using the evaluation/create function, as shown in the following code:

(def iris-model
  (get-final-> "https://static.bigml.com/csv/iris.csv"
               source/create
               dataset/create
               model/create))

(def iris-evaluation
  (with-default-connection
    (api/get-final
      (evaluation/create iris-model (:dataset iris-model)))))

In the previous code snippet, we use a remote file containing the Iris sample data as a source, and pass it to the source/create, dataset/create, and model/create functions in sequence using the get-final-> macro we defined earlier.
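To see what get-final-> actually produces, the macro can be exercised offline. In the following self-contained sketch, source-create, dataset-create, and model-create are hypothetical stubs (not the clj-bigml functions) that simply wrap their input in a tagged map, and final-with-default-connection is redefined to call its function directly, with no connection and no call to api/get-final. The macro itself is the same as the one defined in the text.

```clojure
;; Hypothetical stubs standing in for source/create, dataset/create and
;; model/create: each wraps its input in a map tagged with a resource type.
(defn stub-create [kind] (fn [input] {:resource kind :from input}))
(def source-create  (stub-create :source))
(def dataset-create (stub-create :dataset))
(def model-create   (stub-create :model))

;; Offline stand-in for final-with-default-connection: it just applies f.
(defn final-with-default-connection [f & xs]
  (apply f xs))

;; The get-final-> macro as defined in the text.
(defmacro get-final-> [head & body]
  (let [final-body (map list
                        (repeat 'final-with-default-connection)
                        body)]
    `(->> ~head
          ~@final-body)))

;; Shows the ->> form with each step wrapped in final-with-default-connection.
(println (macroexpand-1 '(get-final-> "iris.csv" source-create dataset-create model-create)))

;; The input flows source -> dataset -> model.
(println (get-final-> "iris.csv" source-create dataset-create model-create))
```

Because ->> inserts the threaded value as the last argument, each step becomes a call such as (final-with-default-connection source-create value), which in turn applies the wrapped function to value.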

The model in iris-model is then evaluated using a composition of the api/get-final and evaluation/create functions, and the result is stored in the variable iris-evaluation. Note that we use the training data itself to evaluate the model, which doesn't achieve anything useful; in practice, a trained machine learning model should be evaluated on unseen data. Because we evaluate the model on its own training data, its accuracy is found to be 100 percent, or 1, as shown in the following code:

user> (-> iris-evaluation :result :model :accuracy)
1
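Why does evaluating on the training data tell us so little? Any model that can memorize its training rows will score perfectly on them. The following self-contained sketch makes this concrete with a deliberately naive lookup-table "model" and a hand-made toy dataset; both are hypothetical and unrelated to BigML or the Iris data. Accuracy on the training rows is 1, while on two unseen rows it drops to 1/2.

```clojure
;; A deliberately naive "model" that memorizes its training rows: it looks
;; up the exact feature vector and falls back to the most common label.
(defn train-lookup [rows]
  (let [table    (into {} (map (juxt :features :label) rows))
        fallback (key (apply max-key val (frequencies (map :label rows))))]
    (fn [features] (get table features fallback))))

(defn accuracy
  "Fraction of rows whose label the model predicts correctly."
  [model rows]
  (/ (count (filter #(= (:label %) (model (:features %))) rows))
     (count rows)))

;; Tiny hand-made toy data (hypothetical): feature vectors and labels.
(def train-rows [{:features [1 1] :label :a}
                 {:features [1 2] :label :a}
                 {:features [5 5] :label :b}])
(def test-rows  [{:features [5 6] :label :b}
                 {:features [1 3] :label :a}])

(def model (train-lookup train-rows))
(println (accuracy model train-rows)) ; prints 1 (every training row is memorized)
(println (accuracy model test-rows))  ; prints 1/2
```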

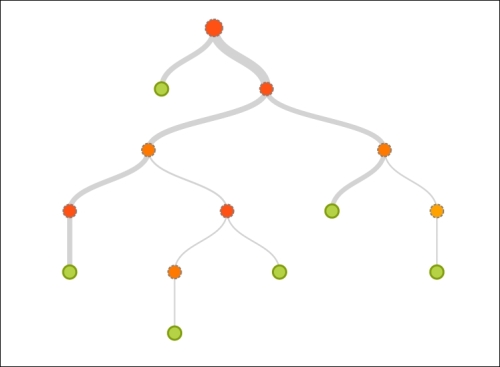

The BigML dashboard will also provide a visualization (as shown in the following diagram) of the model formulated from the data in the previous example. This illustration depicts the CART decision tree that was formulated from the Iris sample dataset.
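A CART such as the one in the visualization is a binary tree: each internal node compares one feature with a threshold, and prediction walks from the root to a leaf. The following sketch illustrates that traversal on a hand-built tree. The tree structure, feature names, thresholds, and labels are hypothetical and chosen only for illustration; they are not read from BigML's visualization and do not reflect BigML's model format.

```clojure
;; Hypothetical classification tree over Iris-style features. The features,
;; thresholds, and labels are illustrative only, NOT BigML's trained model.
(def toy-iris-tree
  {:feature :petal-length :threshold 2.5
   :left  {:leaf "Iris-setosa"}
   :right {:feature :petal-width :threshold 1.75
           :left  {:leaf "Iris-versicolor"}
           :right {:leaf "Iris-virginica"}}})

(defn predict
  "Walks from the root to a leaf: go left when the instance's value for the
  node's feature is <= the node's threshold, otherwise go right."
  [node instance]
  (if (contains? node :leaf)
    (:leaf node)
    (recur (if (<= (get instance (:feature node)) (:threshold node))
             (:left node)
             (:right node))
           instance)))

(println (predict toy-iris-tree {:petal-length 1.4 :petal-width 0.2})) ; prints Iris-setosa
(println (predict toy-iris-tree {:petal-length 5.1 :petal-width 2.3})) ; prints Iris-virginica
```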

To conclude, the BigML cloud service provides us with several flexible options to estimate CARTs from large datasets in a scalable and platform-independent manner. BigML is just one of the many machine learning services available online, and the reader is encouraged to explore other cloud service providers of machine learning.