12

Human–Machine Interaction and Visual Data Mining

Upasana Sinha1*, Akanksha Gupta2†, Samera Khan3‡, Shilpa Rani4§ and Swati Jain5

1J.K. Institute of Engineering, Bilaspur, India

2IT Department, GGV Central University, Bilaspur, India

3Amity University Chhattisgarh, Raipur, India

4CSE Department, NGIT, Hyderabad, India

5Govt. J.Y. Chhattisgarh College, Raipur, India

Abstract

Human–Computer Interaction (HCI) generates enormous amounts of data that carry information about a human user’s intentions, goals, and desires. Knowing what users want and need is key to intelligent system assistance. The theory-of-mind concept, known from studies of animal behavior, is adopted and adapted for expressive user modeling. Theories of mind are hypothetical user models that represent, to some extent, a human user’s thoughts. A theory of mind may even reveal tacit knowledge. In this way, user modeling becomes knowledge discovery that goes beyond the human’s own knowledge and covers domain-specific insights. Theories of mind are induced by mining HCI data, so data mining turns out to be a form of inductive modeling. Intelligent assistant systems that inductively model a human user’s intentions and goals, as well as domain knowledge, are essentially learning systems. To cope with the risk of getting things wrong, learning systems are equipped with the skill of reflection.

Here we propose gesture recognition, an emerging topic in current technology. Its main focus is to recognize human gestures using mathematical algorithms for human–computer interaction. Only a few modes of human–computer interaction exist today, such as the keyboard, the mouse, and touch screens.

Each of these devices has its limitations when it comes to adapting more versatile hardware to computers. Gesture recognition is one of the essential techniques for building user-friendly interfaces. Gestures can originate from any bodily motion or state, but they commonly originate from the face or the hand. Gesture recognition enables users to interact with devices without physically touching them. This chapter describes how hand gestures are trained to perform specific actions such as switching pages and scrolling up or down on a page.

Keywords: Human–Computer Interaction, HCI, visual data mining, data mining, data visualization

12.1 Introduction

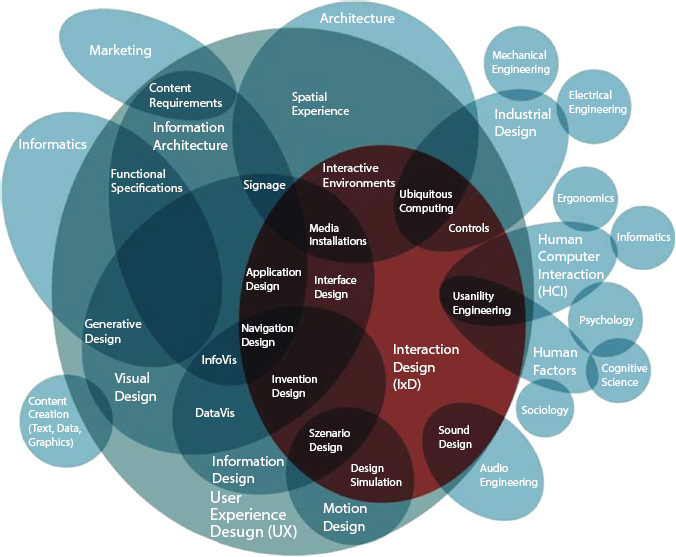

Human–Computer Interaction (HCI) lies at the crossroads of many research areas, including artificial intelligence, computer vision, face recognition, and motion tracking.

In recent years there has been growing interest in improving all aspects of the interaction between humans and computers. It is argued that to truly achieve effective human–computer intelligent interaction (HCII), the computer must be able to interact naturally with the user, similar to the way human–human interaction takes place.

Humans interact with each other mainly through speech, but also through body gestures, to emphasize a particular part of the speech and to display emotions. As a consequence, new interface technologies are steadily moving toward accommodating information exchange through the natural sensory modes of sight, sound, and touch. In face-to-face exchange, people use these communication paths simultaneously and in combination, using one to complement and enhance another. The exchanged information is largely encapsulated in this natural, multimodal format. Typically, conversational interaction bears the central burden in human communication, with vision, gaze, expression, and manual gesture often contributing critically and frequently embellishing attributes such as emotion, mood, attitude, and attentiveness. However, the roles of the multiple modalities and their interplay remain to be quantified and scientifically understood. What is needed is a science of human–computer communication that establishes a framework for multimodal “language” and “dialog”, much like the framework we have evolved for spoken exchange.

Another important aspect is the development of human-centered information systems. The most important issue here is how to achieve synergy between human and machine. The term “Human-Centered” is used to emphasize that, although all existing information systems were designed with human users in mind, many of them are far from user friendly. What can the scientific and engineering community do to effect a change for the better?

Information systems are ubiquitous in all human endeavors, including scientific, medical, military, transportation, and consumer applications. Individual users use them for learning, searching for information (including data mining), doing research (including visual computing), and authoring. Multiple users (groups of users, and groups of groups of users) use them for communication and collaboration. Both single and multiple users use them for entertainment. An information system consists of two components: the computer (data/knowledge base and information processing engine) and humans. It is the intelligent interaction between the two that we address. We aim to identify the important research issues and to determine potentially fruitful future research directions. Furthermore, we discuss how an environment can be created that is conducive to carrying out such research.

In many important HCI applications, such as computer-aided tutoring and learning, it is highly desirable (even mandatory) that the response of the computer take into account the emotional or cognitive state of the human user. Emotions are displayed by visual, vocal, and other physiological means. There is a growing body of evidence showing that emotional skills are part of what is called “intelligence” [1, 2]. Computers today can recognize much of what is said and, to some extent, who said it. However, they are almost completely in the dark when it comes to how things are said, the affective channel of information. This is true not only in speech but also in visual communication, despite the fact that facial expressions, posture, and gesture communicate some of the most critical information: how people feel. Affective communication explicitly considers how emotions can be recognized and expressed during human–computer interaction. Figure 12.1 represents the CRISP and KDD process flowchart.

In most cases today, if you take a human–human interaction and replace one of the humans with a computer, the affective communication vanishes. This is not because people stop communicating affect; certainly we have all seen a person expressing anger at a machine. The problem arises because the computer cannot recognize whether the human is pleased, annoyed, interested, or bored. Note that if a human ignored this information and continued talking long after we had yawned, we would not consider that person very intelligent. Recognition of emotion is a key component of intelligence. Computers are presently affect-impaired.

Figure 12.1 CRISP and KDD process flowchart.

Furthermore, if you insert a computer (as a channel of communication) between two or more humans, the affective bandwidth may be greatly reduced. Email may be the most frequently used means of electronic communication, but typically all of the emotional information is lost when our thoughts are converted to digital media.

Research is therefore needed on new ways to communicate affect through computer-mediated environments. Computer-mediated communication today almost always has less affective bandwidth than “being there, face-to-face”. The advent of affective wearable computers, which could help amplify affective information as perceived from a person’s physical state, is but one possibility for changing the nature of communication.

12.2 Related Research

The papers reviewed here cover specific aspects of the technologies that support human–computer interaction. Most of the authors are computer vision researchers whose work relates to human–computer interaction.

The paper by Warwick and Gasson [3] discusses the efficacy of a direct connection between the human nervous system and a computer network. The authors give an overview of the present state of neural implants and discuss the possible implications of such implant technology as a general-purpose human–computer interface for the future.

Human–robot interaction (HRI) has recently drawn increased attention. Autonomous mobile robots can recognize and track a user, understand verbal commands, and take actions to serve the user. A major factor that distinguishes HRI from traditional HCI is that robots can not only passively receive information from their environment but also make decisions and actively change that environment. An interesting approach in this direction is presented by Huang and Weng [4]. Their paper presents a motivational system for HRI that integrates novelty and reinforcement learning. The robot develops its motivational system through its interactions with the world and with its trainers.

A vision-based gestural guidance interface for mobile robotic platforms is presented by Paquin and Cohen [5]. The interface controls the motion of the robot using a set of predefined static and dynamic hand gestures inspired by the marshaling code. Images captured by an onboard camera are processed to track the operator’s hand and head. A similar approach is proposed by Nickel and Stiefelhagen [6]. Given the images provided by a calibrated stereo camera, color and disparity information are integrated into a multi-hypothesis tracking framework to find the 3D positions of the respective body parts. Based on the motion of the hands, an HMM-based approach is applied to recognize pointing gestures.

Mixed Reality (MR) opens a new direction for human–computer interaction. Combined with computer vision techniques, it makes advanced input devices possible. Such a device is presented by Tosas and Li [7]. They describe a virtual QWERTY keyboard application that illustrates the idea of a virtual touch-screen interface. Visual tracking and interpretation of the user’s hand and wrist motion allows the detection of keystrokes on the virtual touch screen. An interface tailored to create a design-oriented, realistic MR workspace is presented in [8]. An augmented reality human–computer interface for object localization is presented in [9]. A 3D pointing interface that can recognize the pointing direction of an arm in 3D is proposed in [10]. A hand gesture recognition system is also proposed by Licsar and Sziranyi [11].

A hand pose estimation approach is discussed in [12]. The authors analyze the design of classifiers for use in a more general hierarchical object recognition approach. The ongoing downsizing of computers and sensory devices allows people to wear such devices like clothes. One major direction of wearable computing research is to smartly assist people in daily life. The authors of [13] propose a body-attached system that captures audio and visual information corresponding to the user’s experience. This material can be used efficiently for recording and analyzing human activities, in a wide range of applications such as digital diaries or interaction analysis. Another wearable system is presented in [14].

3D head tracking in a video sequence has been recognized as an essential prerequisite for robust facial expression and emotion analysis, face recognition, and model-based coding. The paper by Dornaika and Ahlberg [15] presents a system for real-time tracking of head and facial motion using deformable 3D models. A similar system is presented in [16], whose authors aim to use their real-time tracking system to recognize authentic facial expressions. A pose-invariant face recognition approach is proposed by Lee and Kim [17]. A 3D head pose estimation approach is proposed in [18]; it introduces a new method for computing the head pose using the projective invariance of the vanishing point. Du and Lin present multi-view face image synthesis using a factorization model [19]. The proposed method can be applied to several HCI areas, such as view-independent face recognition or face animation in a virtual environment.

The emergence of ambient intelligence is another trend in human–computer interaction. An ambient intelligence environment is sensitive to the presence of people and responsive to their needs. The environment will be able to greet us when we get home, judge our mood, and adjust our surroundings to reflect it. Such an environment is still a vision, but it is one that strikes a chord in the minds of researchers around the world and is the focus of several major industry initiatives. One such initiative is presented in [20]. The authors use speech recognition and computer vision to model a new generation of interfaces in the residential environment. An important part of such a system is the localization module. A possible implementation of this module is proposed by Okatani and Takuichi [21]. Another important part of an ambient intelligent system is the extraction of typical actions performed by the user. Ma and Lin address this problem [22].

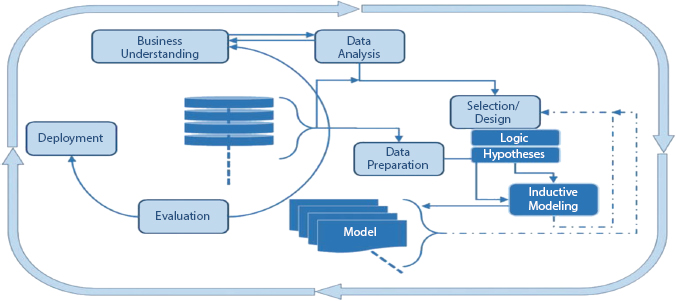

Figure 12.2 Data mining process.

12.2.1 Data Mining

Data mining is the process of working with data to make it useful: information is extracted from the data in order to reach sound, robust conclusions using the various techniques described below. Data for the system can be taken from the Swedish disease register and used for the pilot study. The data mining process is shown in Figure 12.2.

12.2.2 Data Visualization

Data visualizations make it easier for a person to understand what the analysis of the data has inferred. In the field of cognition, the term visualization refers to the construction of a visual image in the mind, aimed at forming a mental model of the data being analyzed. Basic visualizations include pixel-based, graph-based, and geometry-based visualizations. In this system, apart from these temporal visualizations, volumetric image visualization is another kind of data visualization that is used. For the visualization of temporal data there are numerous techniques, for example wall charts, tabular representation, star representation, spiral representation, the concentric circles technique, and the lifeline technique. Temporal representations are produced at each stage of the data mining process so that they are available for future reference. For volumetric image viewing there are a few standard techniques, for example multiplanar reconstruction (MPR), surface rendering (SR), and volume rendering (VR).
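Of the three volumetric techniques, MPR is the simplest to illustrate, since in its basic form it only re-slices the volume along orthogonal planes. The following is a minimal sketch under stated assumptions: the volume is a nested list indexed as `volume[z][y][x]`, and the function names `axial`, `coronal`, and `sagittal` are illustrative choices, not part of the system described in this chapter.

```python
# Hypothetical layout: volume[z][y][x] holds one scalar value per voxel.
Volume = list[list[list[float]]]


def axial(volume: Volume, z: int) -> list[list[float]]:
    """Slice perpendicular to the z axis (rows run along y, columns along x)."""
    return [row[:] for row in volume[z]]


def coronal(volume: Volume, y: int) -> list[list[float]]:
    """Slice perpendicular to the y axis (rows run along z, columns along x)."""
    return [plane[y][:] for plane in volume]


def sagittal(volume: Volume, x: int) -> list[list[float]]:
    """Slice perpendicular to the x axis (rows run along z, columns along y)."""
    return [[row[x] for row in plane] for plane in volume]


# Tiny 2x2x2 volume whose values encode their own position as 100*z + 10*y + x.
demo = [[[100 * z + 10 * y + x for x in range(2)] for y in range(2)] for z in range(2)]
```

Real MPR implementations also interpolate along oblique planes and account for voxel spacing; the sketch covers only the three axis-aligned cases.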

12.2.3 Visual Learning

In this section, we describe several aspects of the visual framework that allow us to monitor and steer the machine learning (ML) algorithm. Figure 12.3 represents the visual analytics flowchart.

- a) Confirmation bias: Cognitive science shows that there is a tendency to search for new information in a way that confirms the current hypothesis and to irrationally avoid information and interpretations that contradict prior beliefs [23].

- b) No free lunch: From theory, we know that there is no universally good search or learning configuration (English et al., 2000). Changing one parameter may improve the performance for some problems, but it usually reduces it for others. Human knowledge is required to specify good parameters and to steer the machine learning algorithm in the right direction. For example, a choice can be locally optimal, yet the user understands that disabling parts of the hypothesis will pay off in the end nevertheless.

- c) Strengths and weaknesses: The most important strength of the human user is the ability to understand the problem at hand. The user can therefore often decide when to perform a directed search (optimization) and how to limit the search space. Furthermore, given the visualization, the user is often able to extract information from noisy or incomplete data and can give meaning to the features detected in the data. The machine, on the other hand, has many capabilities the user lacks: its work is inexpensive, fast, and tireless. It can perform large searches, and we know that heuristic search algorithms can perform quite well in large and unstructured search spaces [24].

- d) No labels: Flow features can be fuzzy for various reasons. Often, their boundaries are not sharp. Larger features (for example, vortices) are composed of smaller structures that can be features themselves. When analyzing simulation data, it is not predetermined which data ranges are important. Only after the engineer has gained an understanding of the situation do we obtain labels for the data (e.g., ‘too hot’).

- e) User requirements: The three central requirements are control, robustness, and understandability. Control enables the engineer to define what is considered interesting and to steer the machine learning process in the right direction. Robustness is the insensitivity to small errors or deviations from assumptions. Understandability means a straightforward visualization of results and their textual representation to facilitate reporting.

Figure 12.3 Visual analytics flowchart.

12.3 Visual Genes

We call the smallest unit that carries information a gene. In the present context, we consider a single one-dimensional fuzzy selection a gene. That is a tuple of the form (fuzzymin, fullmin, fullmax, fuzzymax, ai), where the first four values define a fuzzy selection and ai is the relevant attribute. Detailed information about selections is visible in small scatterplot icons. These icons act as toggle buttons that allow all selections in a single attribute view to be deactivated. For more specific interactions, the user can enlarge these scatterplots to modify, move, add, or remove selections. In the first steps of data exploration, one of the most important tasks is to find the data attributes that are relevant to the current problem. Moreover, when a very large data set is available, learning algorithms can converge more easily if only relevant attributes are considered. Therefore, we extend the user interface with a simple color-based cue indicating which attributes are common in the gene pool. For each attribute, we compute a frequency value and integrate this information as the background color into the display of hypotheses. For instance, the important attributes a0 and a1 are frequent in the gene pool and get a darker color than the others.
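To make the gene tuple concrete, the sketch below evaluates such a one-dimensional fuzzy selection and computes how often each attribute occurs in a gene pool. It rests on assumptions that the text does not state: the degree of selection is taken to rise and fall linearly between the fuzzy and full boundaries (a trapezoid), and the names `Gene`, `membership`, and `attribute_frequency` are illustrative.

```python
from collections import Counter
from typing import NamedTuple


class Gene(NamedTuple):
    """One-dimensional fuzzy selection on a single attribute."""
    fuzzy_min: float  # at or below this value the degree of selection is 0
    full_min: float   # from this value on the degree of selection is 1
    full_max: float   # up to this value the degree of selection is 1
    fuzzy_max: float  # at or above this value the degree of selection is 0
    attribute: str    # name of the attribute a_i the selection refers to


def membership(gene: Gene, value: float) -> float:
    """Degree in [0, 1] to which `value` is selected (assumed linear ramps)."""
    if gene.full_min <= value <= gene.full_max:
        return 1.0
    if value <= gene.fuzzy_min or value >= gene.fuzzy_max:
        return 0.0
    if value < gene.full_min:  # rising edge between fuzzy_min and full_min
        return (value - gene.fuzzy_min) / (gene.full_min - gene.fuzzy_min)
    # falling edge between full_max and fuzzy_max
    return (gene.fuzzy_max - value) / (gene.fuzzy_max - gene.full_max)


def attribute_frequency(gene_pool: list[Gene]) -> dict[str, float]:
    """Relative frequency of each attribute among the genes in the pool."""
    counts = Counter(gene.attribute for gene in gene_pool)
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


pool = [Gene(0.0, 1.0, 2.0, 4.0, "a0"), Gene(0.5, 0.5, 0.9, 0.9, "a0"),
        Gene(1.0, 2.0, 3.0, 4.0, "a1")]
```

A frequency computed this way could then be mapped to a background shade, with higher values giving darker colors; the mapping itself is left open here.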

12.4 Visual Hypotheses

A hypothesis is a fuzzy logic combination of selections. Every hypothesis is visualized as a horizontal frame of interactive attribute views. Disjunctively combined views (clauses) are placed side by side without separating space. The clauses themselves are placed in separate frames. A conjunction of clauses forms a hypothesis. The user hypothesis is indicated by a small icon on the left and further highlighted by a different background color. In this way, the hypotheses in a population can be viewed and compared. To define new genes, selections from linked views can be moved to the user hypothesis. To examine the spatial context of a hypothesis, the user can display the corresponding fuzzy selection in the linked 3D rendering, where fuzzy membership values can be integrated into the transfer function, e.g. by mapping the degree of selection to opacity or color.
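The structure described here, selections joined disjunctively into clauses and clauses joined into a hypothesis, can be sketched as follows. The sketch assumes the common fuzzy operators (maximum for OR, minimum for AND) and assumes that clauses are combined conjunctively; the text does not name the operators actually used, and `interval` and `evaluate` are hypothetical helper names.

```python
from typing import Callable, Mapping, Sequence

# A selection maps one data point (attribute name -> value) to a degree in [0, 1].
Selection = Callable[[Mapping[str, float]], float]
Clause = Sequence[Selection]    # selections joined by OR
Hypothesis = Sequence[Clause]   # clauses joined by AND


def interval(attribute: str, low: float, high: float) -> Selection:
    """Crisp selection used for brevity; a fuzzy one would return intermediate degrees."""
    return lambda point: 1.0 if low <= point[attribute] <= high else 0.0


def evaluate(hypothesis: Hypothesis, point: Mapping[str, float]) -> float:
    """Degree to which `point` satisfies the hypothesis (max = OR, min = AND)."""
    return min(
        (max((selection(point) for selection in clause), default=0.0)
         for clause in hypothesis),
        default=1.0,
    )


# (a0 in [0, 1] OR a1 in [5, 6]) AND (a2 in [2, 3])
example = [[interval("a0", 0, 1), interval("a1", 5, 6)], [interval("a2", 2, 3)]]
```

The resulting degree per voxel is the kind of value that could be fed into a transfer function as opacity or color.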

12.5 Visual Fitness

The goal is to find hypotheses that lead to features which are similar to the user-defined feature and robust against changes in the selection boundaries. These hypotheses should be as simple as possible. Therefore, we need measures of similarity, complexity, and distinction to define the fitness of a hypothesis. Combining the values of these components gives an indication of what to look for in the optimization phase. Higher fitness values can result from an improvement in one or more of the three components: similarity, complexity, and uncertainty. Since this is relevant information, the three components appear as different shades of green in the fitness bar to the left of each hypothesis. For example, the improvement in fitness between hypotheses (2) and (3) is due to lower complexity (shortest light green bar), while the difference between hypotheses (3) and (4) is due to a better match between the features [26].

12.6 Visual Optimization

A major weakness of optimization algorithms that lack support from background knowledge is that they often cannot discriminate between local and global optima. Consequently, they are prone to getting stuck at local optima, where the fitness of a hypothesis is relatively high yet far from a global optimum. The question of whether a local optimum is relevant in the current context cannot be answered by the machine. On the other hand, an extensive search often cannot be performed by the human user, even if it involves only the local neighborhood. Thus, we add an optimization button to each clause so that the user can decide when and where to search. We have implemented a simple hill-climbing operator that ascends towards the local optimum: the operator steps through each boundary of each selection and computes the fitness for all variations. Of all possible changes, the one with the highest improvement is accepted. This is repeated with decreasing step sizes until the largest improvement drops below a user-defined threshold. Consider, for example, a one-dimensional, non-fuzzy selection S = (smin, smax) contained in a hypothesis. For a given step δs, the fitness of the hypothesis changes when S is replaced by S1 = (smin − δs, smax), S2 = (smin + δs, smax), S3 = (smin, smax − δs) or S4 = (smin, smax + δs).
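The operator can be sketched for the single-selection case as a greedy search over the four variants S1 to S4. This is an illustration under assumptions rather than the implementation referred to in the text: the fitness function is supplied by the caller, the step is halved whenever no variant improves the fitness by more than the threshold, and the search stops once the step falls below a hypothetical `min_step` parameter.

```python
from typing import Callable, Tuple

Interval = Tuple[float, float]  # (s_min, s_max) of a crisp 1D selection


def hill_climb(
    selection: Interval,
    fitness: Callable[[Interval], float],
    step: float,
    threshold: float,
    min_step: float,
) -> Interval:
    """Greedy local search over the two boundaries of one selection."""
    if step <= 0 or min_step <= 0:
        raise ValueError("step and min_step must be positive")
    current, current_fit = selection, fitness(selection)
    while step >= min_step:
        s_min, s_max = current
        candidates = [
            (s_min - step, s_max),  # S1: move lower boundary down
            (s_min + step, s_max),  # S2: move lower boundary up
            (s_min, s_max - step),  # S3: move upper boundary down
            (s_min, s_max + step),  # S4: move upper boundary up
        ]
        # Discard variants whose boundaries have crossed.
        candidates = [c for c in candidates if c[0] <= c[1]]
        best = max(candidates, key=fitness)
        best_fit = fitness(best)
        if best_fit - current_fit > threshold:
            current, current_fit = best, best_fit  # accept the best change
        else:
            step /= 2  # no sufficient improvement: refine the step size
    return current


def toy_fitness(interval: Interval) -> float:
    """Toy fitness for demonstration: highest when the interval equals (2, 5)."""
    return -(abs(interval[0] - 2.0) + abs(interval[1] - 5.0))


result = hill_climb((0.0, 1.0), toy_fitness, step=1.0, threshold=1e-9, min_step=1 / 16)
```

Because only one boundary moves per iteration, this is a coordinate-wise ascent; like the operator in the text, it finds the nearest local optimum and leaves the decision of where to start to the user.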

12.7 The Vis09 Example

This example is based on a synthetic data set containing a clearly distinguishable feature (‘Vis09’) that is related to different attributes in the data. Over a 3D domain, we define ten scalar attributes. Voxels outside the feature have random attribute values in the [25, 27] interval. The data values inside the feature are chosen such that the feature is describable by two different hypotheses. The first hypothesis involves a selection on attributes a0 and a1. The second hypothesis is defined on attributes a2, a3, a4, a5, a6 and a7, which must be combined in a specific way. The ‘Vis’ part of the feature can be selected by brushing the a2 vs. a3 scatterplot in conjunction with a6 vs. a7. The ‘09’ part can be selected by brushing the a4 vs. a5 scatterplot in conjunction with a6 vs. a7. Neither a2 vs. a3 nor a6 vs. a7 alone results in a useful hypothesis. Thus, the ‘Vis09’ feature is of the form ((a2, a3) ∧ (a6, a7)) ∨ ((a4, a5) ∧ (a6, a7)), where each pair denotes a brush on the corresponding scatterplot. Such a feature is practically impossible for the human user to find, even though it is still rather simple from the perspective of the machine. The synthetic data set contains a third feature, defined on attributes a8 and a9, which is easily found and selected interactively. It has been arranged to contain the region of the ‘Vis09’ feature. The user has figured out that attributes a8 and a9 might describe a feature of interest. This is typically the case when one attribute roughly describes what an engineer is looking for (for example, low pressure is generally related to vortices). The resulting feature is not very sharp, however.

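The logical structure of the ‘Vis09’ feature can be checked with a small truth table. The sketch reads "in conjunction with" as logical AND and treats the whole feature as the union (OR) of its ‘Vis’ and ‘09’ parts; both readings are assumptions drawn from the description above. The boolean arguments stand for whether a voxel falls inside the respective scatterplot brush, and all function names are illustrative.

```python
from itertools import product


def vis_part(s23: bool, s67: bool) -> bool:
    """'Vis': brush on a2 vs. a3 combined with brush on a6 vs. a7."""
    return s23 and s67


def o9_part(s45: bool, s67: bool) -> bool:
    """'09': brush on a4 vs. a5 combined with brush on a6 vs. a7."""
    return s45 and s67


def vis09(s23: bool, s45: bool, s67: bool) -> bool:
    """Whole feature as the union of its two parts."""
    return vis_part(s23, s67) or o9_part(s45, s67)


def vis09_factored(s23: bool, s45: bool, s67: bool) -> bool:
    """Equivalent form: one OR-clause joined with the shared a6 vs. a7 brush."""
    return (s23 or s45) and s67


# Exhaustively compare both forms over all eight combinations of brush results.
equivalent = all(
    vis09(*bits) == vis09_factored(*bits)
    for bits in product([False, True], repeat=3)
)
```

The factored form shows why no single scatterplot is sufficient: the a6 vs. a7 brush is required in every case, but it must be paired with one of the other two brushes.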

12.8 Visual Analytics and Human–Computer Interaction

A key premise of the visual analytics approach is the recognition that technological solutions alone are insufficient to deal with the complexity of information and the need for novel solutions. Human analysts must use information technology to achieve insight and creative solutions to new and evolving problems characterized by massive and complex data. In defining visual analytics as a science of analytical reasoning facilitated by interactive visualization technologies, the founders of visual analytics placed equal emphasis on advancing our understanding of human cognitive processes and on the design, implementation, and evaluation of models and algorithms for processing (e.g., machine learning), displaying, and interacting with information.

From this perspective, visual analytics and HCI share a great deal of common ground. Both take the human–computer system as an important unit of analysis as they seek to understand the complexity and diversity of users’ capabilities and activities in technological settings. In the case of visual analytics, the focus is more attuned to human perceptual, cognitive, and collaborative abilities as they relate to dynamic, interactive visual representations of information in the context of analytical methodologies and methods in the target domain. Visual analytics places particular emphasis on cognitive tasks, and specifically on tasks that are ill-defined, e.g., “to detect the expected and discover the unexpected” [28]. The emphasis on complex cognition, hypothesis formation, and evaluation in visually rich settings requires visual analytics researchers to coordinate their approach and methods with evolving theories of cognitive expertise, creativity, pattern recognition, and visual reasoning from cognitive science.

Figure 12.4 Visual analytics and human–computer interaction.

In making the transition to visual analytics work, traditional HCI methods, for example protocol analysis, cognitive task analysis, and activity theory, must be adapted to shed light on the use of complex visual displays in analytical processes. The science of visual analytics is unusual in that it was created in dialogue with a particular approach to solving complex problems: the use of interactive and sometimes mixed-initiative human–computer visualization environments. This dialogue between science and application creates constraints as well as opportunities for both. For understanding and advancing human interaction with visualization systems, the challenges to HCI can be listed as follows [29] (Figure 12.4):

- a) Ecological validity. Researchers must address complex perceptual conditions and tasks. This requirement rules out the vast majority of existing laboratory studies in perception and cognition.

- b) Systems thinking. Research teams must extend the social science model of describing human abilities in natural situations to understanding how human/technology systems will perform their tasks.

- c) Situational constraints. Researchers must extend their understanding of human performance in novel situations, under time pressure, and so forth, to enable “satisficing” performance of the human operator under those conditions.

- d) Individual differences. Research must address performance differences within a population of users, e.g., cultural differences and levels and types of expertise (particularly the unique characteristics of highly skilled individuals, “thinking styles”, and so on).

- e) Strong prediction. A science of interaction must move beyond interface-design guidelines to give specific predictions of performance. Although evaluative conceptual analysis has its place, mathematical and computational models, whose free parameters can be adjusted and tested for the user and the usage environment, provide more analytical power and should be emphasized.

Empirically validated evaluation metrics could help determine what effects individual differences (both innate and acquired through experience with interactive visualization) in perceptual and cognitive abilities may have on the ability to gain insight through interaction with the various visual representations of information. In the search for such predictive models, our research on the Personal Equation of Interaction (PEI) takes a closer look at potential measures of cognitive performance, including Trevarthen’s Two Visual Systems Hypothesis from cognitive neuroscience; Pylyshyn’s FINST theory of spatial attention; and well-known psychometric measures, such as Rotter’s Locus of Control.

A parallel line of research examines the social and organizational use of visual analytics systems. This approach draws on the HCI tradition of qualitative research, applying social-psychological concepts and methods to the study of human–technology systems. We typically use a “pair analytics” method, in which an expert user of visual analytics tools (a so-called “VA Expert” or VAE) works with a subject matter expert to carry out a realistic analysis using real data. Our pair analytics work looks at aircraft safety analysis with the Boeing Company. Working with these analysts, we identified several analytical problems with which to test the effectiveness of visual analytics tools. Our method relied on our visual analytics experts, who collaborated closely with Boeing safety analysts to solve a real-world problem. This close collaboration avoided one of the barriers to technology adoption, namely the cost in time and effort for an expert safety analyst to learn a new software application and a new approach to visualizing and interacting with safety data. Video of the analytic collaboration could then be analyzed using Grounded Theory and Joint Activity Theory approaches.

Of equivalent significance for visual examination are the social and authoritative parts of innovation recognition. Our work with genuine perception applications exhibits scientific fitness is regularly conveyed across hierarchical, social, and social settings. Mechanical help for these cycles must be adjusted to their disseminated nature. The focal point of consideration here extends from the human–mechanical frameworks accentuation of pair investigation to socio-specialized frameworks that incorporate a further examination of social and social collaborations. A few difficulties, some of them notable to HCI professionals, are to be tended to here; among them: protection from mechanical advancements, psychological inclinations strengthened by social structures, and the impact of social contrasts on an understanding of visual portrayals.

Technology transfer strategies for bringing visual analytics into organizations and reducing resistance must be improved further. What seems to work best is first to understand the organizational culture and goals, then to build user engagement in the design and testing of prototypes, followed by the focused application of existing and prototype visual analytics tools and methods to relevant analytical problems and realistic datasets. Identifying lead innovators in the organization whose analytical questions are not currently addressed by available technologies can produce research collaborators and advocates for organizational adoption. This has been the strategy of application-oriented visual analytics developers such as those at the Charlotte Visualization Center at the University of North Carolina. Its WireVis application focuses on helping expert wire-fraud analysts identify patterns in wire-transfer data that may indicate fraud. In developing these interfaces, visual analytics researchers work closely with both expert analysts and technology developers to address relevant problems with actual users and real data.

As we have seen, visual analytics has ambitious goals: to support complex individual analytic cognition and socially and culturally distributed cognition with one-to-one and one-to-many HCI, and to support these complex cognitive processes under the pressure of time, overwhelming data, and limited resources. In pursuit of these goals, cognitive science researchers study individual cognitive and perceptual processes and the interactive visual representations that support them, while interaction and visualization designers create collaborative interfaces that allow easier sharing and analysis of information among contributing groups. Computational visual analytics researchers develop mathematical and computational tools for mixed-initiative systems, in which the computer can initiate certain tasks or processes in pursuit of a goal or generate hypotheses. Underpinning the whole process are ongoing user tests and user involvement in development cycles.

If successful, the technology of visual analytics will test and validate the scientific findings. If the systems it creates can demonstrate a qualitative improvement in cognitive processing that can be brought to bear on real-world problems, we can confirm the value of the science. Studying the use of VA systems will generate new scientific questions about the nature of cognitive processing in technological environments that can be addressed through laboratory experimentation. Through this multidisciplinary, translational research approach [30], visual analytics seeks to build more predictive cognitive models, science-aware interaction and visualization design methods, and focused interdisciplinary software development processes [31].

The last challenge that visual analytics faces is perhaps the hardest to overcome. Returning to our failure scenario, we can see that addressing the complexity of such a situation requires information and technology designers and developers to understand not only the requirements of the task and the circumstances of the user but also the effect of new visualization and interaction techniques on cognitive, emotional, and analytical processes. We believe that this capability could best be achieved through a diverse consortium of HCI experts and condition monitoring practitioners. A range of events and seminars is hosted at IEEE VisWeek, the International Conference on Systems Sciences in Hawaii, and the annual VAC Consortium. Active participation by HCI experts and practitioners in these and other events would be fundamental to this essential means of guiding the design of information and communication technologies to address the problems of society and its institutions [32].

12.9 Mining HCI Information Using Inductive Deduction Viewpoint

In contrast to earlier, widespread approaches (see Figure 12.1, in which the “model” node is highlighted in the CRISP-like model on the right because it is missing from the original figure [33]), the authors stress the aspects indicated by the four groups of darker boxes. First, data is not seen as a monolithic object within the process concept but as an emerging sequence. Second, whereas in the Fayyad process [34] the pattern concept appears out of nowhere, the terminology for forming hypotheses is seen as a central issue: the choice of a logic and the design of suitable spaces of hypotheses, both potentially subject to revision over time. Third, the inductive modeling approach discussed in more detail throughout this section is identification by enumeration. The HCI data mining approach, with an emphasis on aspects of inductive modeling, is shown in Figure 12.5 [35].

The logical reasoning involved may easily become confusing, less to a computer or a logic program [36] than to a human being. In the digital game case study [37], the generation of a single indexed family of logical formulas was sufficient. Identification by enumeration works well for identifying even somewhat deceptive human player intentions. Business applications, as in [38], are more complex and may require unforeseeable revisions of the terminology in use, i.e., the dynamic generation of spaces of hypotheses on demand [39].

In Figure 12.6, the darker boxes with white lettering denote conventional concepts of recursion-theoretic inductive inference. The other boxes reflect formalizations of this section’s core approaches to HCI data mining by means of identification by enumeration. The concepts derived from this section’s practical investigations form a previously unknown infinite hierarchy between the previously known concepts NUM and TOTAL.

Figure 12.5 HCI data mining approach with an emphasis on aspects of inductive modeling.

Figure 12.6 Abstractions of fundamental inductive learning concepts compared and related; ascending lines denote the proper set inclusion of the lower learning concept in the upper one. Throughout the remainder of this chapter, the authors confine themselves to elementary concepts only.

Learning logical theories is much like learning recursive functions. Both have finite descriptions yet determine a usually infinite number of facts: the theorems of a theory and the values of a function, respectively. In both cases, the sets of facts are recursively enumerable but usually undecidable. The deep interplay of logic and recursion theory has been well understood for almost a century and provides a firm basis of seminal results [40]. Inductively learning a recursive function means, in some sense, mining the function’s graph, which is presented in growing chunks over time, a process very similar to mining HCI data.
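
Identification by enumeration can be illustrated with a minimal sketch. It assumes a finite, fixed list of candidate functions (the hypothesis space `SPACE` below is purely hypothetical) and a stream of observed pairs from the target function's graph; the learner always keeps the first candidate in the list that agrees with everything seen so far.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Hypothesis = Callable[[int], int]


def identify_by_enumeration(
    hypotheses: List[Hypothesis],
    observations: Iterable[Tuple[int, int]],
) -> List[Optional[int]]:
    """Return the index of the current guess after each observation.

    The learner keeps the first hypothesis in the enumeration that is
    consistent with every (x, f(x)) pair seen so far.
    """
    seen: List[Tuple[int, int]] = []
    guess = 0
    guesses: List[Optional[int]] = []
    for pair in observations:
        seen.append(pair)
        # Advance past every hypothesis refuted by the data seen so far.
        while guess < len(hypotheses) and any(
            hypotheses[guess](x) != y for x, y in seen
        ):
            guess += 1
        # None signals that the hypothesis space has been exhausted.
        guesses.append(guess if guess < len(hypotheses) else None)
    return guesses


# Hypothetical hypothesis space, listed in a fixed order.
SPACE: List[Hypothesis] = [
    lambda x: 0,
    lambda x: x,
    lambda x: 2 * x,
    lambda x: x * x,
]

# Graph of the target function f(x) = x * x, presented piece by piece.
stream = [(0, 0), (1, 1), (2, 4), (3, 9)]
print(identify_by_enumeration(SPACE, stream))  # [0, 1, 3, 3]
```

The sequence of guesses changes only when new data refutes the current guess, and it stabilizes once a correct hypothesis is reached. A `None` entry would correspond to the situation described above in which the space of hypotheses itself has to be revised.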

12.10 Visual Data Mining Methodology

The data analyst typically first specifies some parameters to restrict the search space; data mining is then performed automatically by an algorithm, and finally the patterns found by the automatic data mining algorithm are presented to the data analyst on the screen. For data mining to be effective, it is important to include the human in the data exploration process and to combine the flexibility, creativity, and general knowledge of the human with the enormous storage capacity and computational power of today’s computers. Because an automatic data mining algorithm generates a huge number of patterns in textual form, it is almost impossible for the human to interpret and evaluate the patterns in detail and to extract interesting knowledge and general characteristics. Visual data mining aims at integrating the human in the data mining process and applying human perceptual abilities to the analysis of large datasets available in today’s computer systems. Presenting data in an interactive, graphical form also promotes new insights, encouraging the formation and validation of new hypotheses to the end of better problem solving and gaining deeper knowledge of the domain [41].

Visual data exploration usually follows a three-step process: overview first, zoom and filter, and then details on demand, which has been called the Information Seeking Mantra [42]. First, the data analyst needs to get an overview of the data. In the overview, the data analyst identifies interesting patterns or groups in the data and focuses on one or more of them. To analyze the patterns, the data analyst needs to drill down and access the details of the data. Visualization technology may be used for all three steps of the data exploration process. Visualization techniques are useful for showing an overview of the data, allowing the data analyst to identify interesting subsets. In this step, it is important to keep the overview visualization while focusing on the subset using another visualization technique. An alternative is to distort the overview visualization in order to focus on the interesting subsets. This can be done by dedicating a larger percentage of the display to interesting subsets while decreasing screen use for uninteresting data. To explore the interesting subsets further, the data analyst needs a drill-down capability in order to inspect the details of the data. Note that visualization technology not only provides the base visualization techniques for all three steps but also bridges the gaps between the steps. Visual data mining can be seen as a hypothesis generation process; visualizations of the data allow the data analyst to gain insight into the data and come up with new hypotheses. The verification of the hypotheses can also be done via data visualization, but it may also be accomplished by automatic techniques from statistics, pattern recognition, or machine learning.
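
The three steps of the Information Seeking Mantra can be mirrored on the data side with a small sketch. It assumes a toy in-memory dataset (the records and field names are hypothetical) and leaves out the rendering itself; each function returns the data that a corresponding view would display.

```python
from collections import Counter
from typing import Any, Dict, List

Record = Dict[str, Any]

# Hypothetical toy dataset; field names are illustrative only.
RECORDS: List[Record] = [
    {"id": 1, "region": "north", "amount": 120},
    {"id": 2, "region": "north", "amount": 950},
    {"id": 3, "region": "south", "amount": 80},
    {"id": 4, "region": "south", "amount": 60},
    {"id": 5, "region": "north", "amount": 990},
]


def overview(records: List[Record]) -> Counter:
    """Step 1: summarize the whole dataset, here as record counts per region."""
    return Counter(r["region"] for r in records)


def zoom_and_filter(records: List[Record], region: str, min_amount: int) -> List[Record]:
    """Step 2: narrow the view to a subset the analyst finds interesting."""
    return [r for r in records if r["region"] == region and r["amount"] >= min_amount]


def details_on_demand(records: List[Record], record_id: int) -> Record:
    """Step 3: return the full record the analyst drilled down to."""
    for r in records:
        if r["id"] == record_id:
            return r
    raise KeyError(record_id)


summary = overview(RECORDS)
subset = zoom_and_filter(RECORDS, region="north", min_amount=900)
detail = details_on_demand(subset, record_id=5)
print(summary, [r["id"] for r in subset], detail)
```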

As a result, visual data mining usually allows faster data exploration and often provides better results, especially in cases where automatic data mining algorithms fail. In addition, visual data exploration techniques provide a much higher degree of user satisfaction and confidence in the findings of the exploration. This fact leads to a high demand for visual exploration techniques and makes them indispensable in conjunction with automatic exploration techniques. Visual data mining is based on an automatic part, the data mining algorithm, and an interactive part, the visualization technique. There are three common approaches to integrating the human in the data exploration process to realize different kinds of visual data mining:

- a) Preceding Visualization (PV): Data is visualized in some visual form before running a data mining algorithm. By interacting with the raw data, the data analyst has full control over the analysis in the search space. Interesting patterns are discovered by exploring the data.

- b) Subsequent Visualization (SV): An automatic data mining algorithm performs the data mining task by extracting patterns from a given dataset. These patterns are visualized to make them interpretable for the data analyst. Subsequent visualizations enable the data analyst to specify feedback. Based on the visualization, the data analyst may want to return to the data mining algorithm and use different input parameters to obtain better results.

- c) Tightly Integrated Visualization (TIV): An automatic data mining algorithm performs an analysis of the data but does not produce the final results. A visualization technique is used to present the intermediate results of the data exploration process. The combination of some automatic data mining algorithms and visualization techniques enables specified user feedback for the next data mining run. The data analyst then identifies the interesting patterns in the visualization of the intermediate results based on his or her domain knowledge. The motivation for this approach is to make the data mining algorithms independent of the application. A given automatic data mining algorithm can be very useful in one domain but may have drawbacks in another. Since no automatic data mining algorithm (with a single parameter setting) is suitable for all application domains, tightly integrated visualization leads to a better understanding of the data and the extracted patterns.
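
The feedback loop of Subsequent Visualization in (b) can be sketched as follows. This is only an illustration under stated assumptions: the mining step is a simple frequent-pair count chosen for brevity, the basket data is hypothetical, and the `review` callback stands in for the analyst who looks at the visualized patterns and decides whether to rerun the algorithm with a different parameter.

```python
from collections import Counter
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List, Optional, Set, Tuple

Patterns = Dict[FrozenSet[str], int]
Review = Callable[[Patterns, int], Optional[int]]


def frequent_pairs(transactions: List[Set[str]], min_support: int) -> Patterns:
    """Automatic step: keep item pairs found in at least min_support transactions."""
    counts: Counter = Counter()
    for items in transactions:
        for pair in combinations(sorted(items), 2):
            counts[frozenset(pair)] += 1
    return {pair: n for pair, n in counts.items() if n >= min_support}


def subsequent_visualization(
    transactions: List[Set[str]],
    min_support: int,
    review: Review,
    max_rounds: int = 5,
) -> Tuple[int, Patterns]:
    """Run the algorithm, show the patterns, and let the analyst adjust the parameter.

    `review` receives the patterns and the current parameter and returns a
    new min_support, or None to accept the current result.
    """
    patterns = frequent_pairs(transactions, min_support)
    for _ in range(max_rounds):
        new_support = review(patterns, min_support)
        if new_support is None:
            break
        min_support = new_support
        patterns = frequent_pairs(transactions, min_support)
    return min_support, patterns


# Hypothetical market-basket data.
BASKETS = [
    {"bread", "milk"},
    {"bread", "milk", "eggs"},
    {"milk", "eggs"},
    {"bread", "eggs"},
]


def analyst(patterns: Patterns, min_support: int) -> Optional[int]:
    """Stand-in for the human: lower the threshold while nothing is shown."""
    return min_support - 1 if not patterns and min_support > 1 else None


support, found = subsequent_visualization(BASKETS, min_support=3, review=analyst)
print(support, len(found))  # 2 3
```

In an interactive tool the callback would be replaced by the analyst's input after inspecting a plot of the patterns. A tightly integrated variant would expose intermediate results inside the algorithm itself instead of only between complete runs.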

In addition to the direct involvement of the human, the main advantages of visual data exploration over automatic data mining techniques are the following:

- Visual data exploration can easily deal with highly nonhomogeneous and noisy data.

- Visual data exploration is intuitive and requires no understanding of complex mathematical or statistical algorithms or parameters.

- Visualization can provide a qualitative overview of the data, allowing data phenomena to be isolated for further quantitative analysis.

Visual data mining techniques have proven to be of high value in exploratory data analysis and have a high potential for exploring large databases [43].

Visual data exploration is especially useful when little is known about the data and the exploration goals are vague, as illustrated in Figure 12.7. Since the data analyst is directly involved in the exploration process, shifting and adjusting the exploration goals is done automatically if necessary. In the following sections, we show that integrating the human in the data mining process and applying human perceptual abilities to the analysis of large datasets can help to provide more effective results in important data mining application areas, such as the mining of association rules, clustering, classification, and text retrieval.

Figure 12.7 Shows human involvement in different data mining approaches.

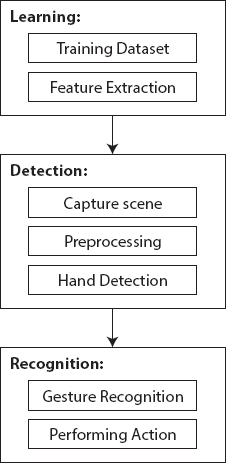

12.11 Machine Learning Algorithms for Hand Gesture Recognition

Gesture recognition is a technique used to recognize and interpret human nonverbal behavior and to interact with the user accordingly. It helps build a bridge between the computer and the user so that they can communicate with each other. Gesture recognition is useful for conveying information that cannot be conveyed through speech or writing, since gestures are a natural way to express something meaningful. This chapter describes a system built around a vision-based hand gesture recognition application with a high correct detection rate and high performance, which can work in a real-time Human–Computer Interaction setting without imposing any restrictions (gloves, uniform background, and so on) on the user environment. The system can be described by a flowchart with three main steps: learning, detection, and recognition [44].

12.12 Learning

It involves two aspects:

- Training dataset: The dataset consists of different types of hand gestures that are used to train the system; the system performs its actions based on this training.

- Feature extraction: This involves determining the centroid, which divides the image into two halves at its geometric center.
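
The centroid computation in the feature extraction step can be sketched as follows. The sketch assumes the hand has already been segmented into a binary mask (the 5x5 `MASK` is hypothetical) and computes the centroid from the zeroth and first image moments; with OpenCV, the same moments would typically be obtained from `cv2.moments`.

```python
from typing import List, Tuple


def centroid(mask: List[List[int]]) -> Tuple[float, float]:
    """Return (cx, cy) of the nonzero pixels in a binary mask."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1  # zeroth moment: area of the foreground
                m10 += x  # first moment along x
                m01 += y  # first moment along y
    if m00 == 0:
        raise ValueError("mask contains no foreground pixels")
    return m10 / m00, m01 / m00


# Hypothetical 5x5 mask with a 3x3 foreground blob.
MASK = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
cx, cy = centroid(MASK)
print(cx, cy)  # 3.0 3.0
```

A vertical line through `cx` (or a horizontal line through `cy`) then splits the hand region into the two halves mentioned above.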

12.13 Detection

This step involves:

- Capture scene: Images are captured through a web camera and used as input to the system.

- Pre-processing: Images captured through the webcam are compared with the dataset to recognize the valid hand movements needed to perform the required actions.

- Hand detection: Hand detection requires the input image from the webcam. The images should be captured at a rate of 20 frames per second. A suitable distance should also be maintained between the hand and the camera, approximately 30 to 100 cm. After pre-processing, the video input is stored frame by frame in a matrix.

Figure 12.8 Flowchart of gesture recognition.

12.14 Recognition

This step involves:

- Gesture recognition: The number of fingers shown in the hand gesture is determined using the defect points present in the gesture. The resulting gesture is then fed sequentially through a three-dimensional convolutional neural network to recognize the current gesture, as illustrated in Figure 12.8.

- Performing action: The recognized gesture is used as input to perform the actions required by the user. These actions include zooming in, zooming out, and swiping the page left or right.
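
Counting fingers from defect points can be sketched with a common heuristic. The sketch assumes that the defect points are convexity defects of the hand contour, each given as a (start, end, far) triple such as could be derived from OpenCV's `cv2.convexityDefects`, and that a defect whose angle at the deepest point is below 90 degrees marks the gap between two raised fingers. The threshold and the sample coordinates are assumptions, not values taken from the chapter.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Defect = Tuple[Point, Point, Point]  # (start, end, far) points on the contour


def angle_at_far(start: Point, end: Point, far: Point) -> float:
    """Angle in degrees at the defect's deepest point, via the law of cosines."""
    a = math.dist(start, end)
    b = math.dist(start, far)
    c = math.dist(end, far)
    if b == 0 or c == 0:
        return 180.0  # degenerate defect, never counted as a gap
    cos_angle = (b * b + c * c - a * a) / (2 * b * c)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def count_fingers(defects: List[Defect], max_angle: float = 90.0) -> int:
    """Estimate raised fingers as the number of narrow gaps plus one.

    With no narrow gap the heuristic returns 0; it cannot tell a fist
    from a single raised finger without further cues.
    """
    gaps = sum(1 for s, e, f in defects if angle_at_far(s, e, f) < max_angle)
    return gaps + 1 if gaps else 0


# Hypothetical defects: two narrow gaps between fingers and one wide defect.
DEFECTS: List[Defect] = [
    ((0, 0), (2, 0), (1, -3)),
    ((2, 0), (4, 0), (3, -3)),
    ((4, 0), (10, 0), (7, -1)),
]
print(count_fingers(DEFECTS))  # 3
```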

12.15 Proposed Methodology for Hand Gesture Recognition

A hand gesture recognition system was developed to capture the hand gestures performed by the user and to control a computer system based on the incoming information. Many of the existing systems in the literature implement gesture recognition using only spatial modeling, i.e., recognition of a single gesture, and not temporal modeling, i.e., recognition of the motion of gestures. Moreover, the existing systems have not been implemented in real time; they use a pre-captured image as input for gesture recognition. To address these challenges, a new architecture has been developed that aims to provide a vision-based hand gesture recognition system with a high correct detection rate and high performance, which can work in a real-time HCI setting without imposing any of the restrictions mentioned above (gloves, uniform background, etc.) on the user’s environment, as shown in Figure 12.9.

Figure 12.9 Block diagram of Gesture Recognition framework.

The design consists of a human–computer interaction system that uses hand gestures as input for communication.

The system is divided into four subsystems:

- a) Identification of Hand: This module identifies the hand gesture by capturing an image through the web camera.

Figure 12.10 (a, b, c, d): Shows hand gestures.

- b) Fingertip Detection and Defect Calculation: A bounding box is drawn around the hand, within which the fingertips are detected, and the background around it is eliminated.

- c) Motion Recognition: The image is compared with the dataset in order to recognize it and perform the required actions.

- d) Human–Computer Interaction: PyAutoGUI is used to interact with the PDF and perform the required action, as shown in Figure 12.10.
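
The interaction step in (d) can be sketched as a lookup from recognized gestures to key presses. The gesture labels and key bindings below are hypothetical and assume a PDF viewer that zooms with Ctrl+plus and Ctrl+minus and turns pages with the arrow keys. The function that actually sends the keys is passed in, so that `pyautogui.hotkey` can be supplied when PyAutoGUI is installed while the sketch itself runs without a display.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical gesture labels mapped to key combinations (assumed bindings).
GESTURE_KEYS: Dict[str, Tuple[str, ...]] = {
    "zoom_in": ("ctrl", "+"),
    "zoom_out": ("ctrl", "-"),
    "swipe_right": ("right",),
    "swipe_left": ("left",),
}


def perform_action(gesture: str, send_keys: Callable[..., None]) -> bool:
    """Send the key combination for a recognized gesture.

    Returns False for unknown labels so that misrecognitions are ignored.
    """
    keys = GESTURE_KEYS.get(gesture)
    if keys is None:
        return False
    send_keys(*keys)
    return True


# A recording stub replaces the real key sender for this demonstration.
sent: List[Tuple[str, ...]] = []
perform_action("zoom_in", lambda *keys: sent.append(keys))
perform_action("wave", lambda *keys: sent.append(keys))
print(sent)  # [('ctrl', '+')]
```

With PyAutoGUI available, a call such as `perform_action("zoom_in", pyautogui.hotkey)` would press the keys in the focused window.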

Figure 12.11 Zoom-in gesture recognized.

Figure 12.12 Zoom-out gesture recognized.

Figure 12.13 Towards right movement gesture recognized.

Algorithm Used:

- a) An algorithm called Haar Cascade is used.

- b) Haar Cascade is a machine learning object detection algorithm.

- c) It is used to identify objects in an image or a video and draws a boundary around the detected object.
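
Haar cascade detectors evaluate many rectangular Haar-like features, and an integral image lets each rectangle sum be computed with four lookups. The sketch below shows only this building block on a hypothetical 4x4 patch; it is not the trained cascade itself, which in OpenCV would typically be loaded with `cv2.CascadeClassifier` and applied with `detectMultiScale`.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """ii[y][x] holds the sum of img over all rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle with top-left corner (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Right-half sum minus left-half sum; responds to vertical edges (w even)."""
    half = w // 2
    return rect_sum(ii, x + half, y, half, h) - rect_sum(ii, x, y, half, h)


# Hypothetical 4x4 patch: dark left half, bright right half.
PATCH = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
II = integral_image(PATCH)
print(two_rect_feature(II, 0, 0, 4, 4))  # 1520
```

A cascade combines thresholds on many such feature values in successive stages, so that most image windows that do not contain the object are rejected early.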

12.16 Result

The following figures show the output, in which the gestures captured from the web camera are recognized as zoom-in, zoom-out, and moving towards the right. The efficiency of the model is around 80%, since the model needs time to be trained on the dataset; the results are shown in Figures 12.11, 12.12 and 12.13.

12.17 Conclusion

In this chapter, we examined human–computer interaction, a particularly broad field that draws on elements from diverse areas such as psychology, ergonomics, engineering, artificial intelligence, databases, and so on. The chapter presents a snapshot of the state of the art in human–computer interaction, with an emphasis on intelligent interaction through computer vision, artificial intelligence, and pattern recognition methods. We hope that, in the not too distant future, the research community will have made significant strides in the study of human–computer interaction and that new standards will emerge that lead to natural interaction between humans, machines, and the environment.

We also discussed the importance of gesture recognition in building an efficient human–machine interaction. The chapter describes how the system is implemented based on captured images and how these are interpreted as gestures by the computer to perform actions such as switching pages and scrolling up or down a page. The system is built using OpenCV and the TensorFlow object detector.

References

1. Salovey, P. and Mayer, J., Emotional intelligence. Imagin. Cogn. Pers., 9, 185–211, 1990.

2. Rawat, N. and Raja, R., Moving Vehicle Detection and Tracking using Modified Mean Shift Method and Kalman Filter and Research. Int. J. New Technol. Res. (IJNTR), 2, 5, 96–100, 2016.

3. Warwick, K. and Gasson, M., Practical interface experiments with implant technology, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 6–16, 2004.

4. Huang, X. and Weng, J., Motivational system for human-robot interaction, in: International Workshop on Human-Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 17–27, 2004.

5. Paquin, V. and Cohen, P., A vision-based gestural guidance interface for mobile robotic platforms, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 38–46, 2004.

6. Nickel, K. and Stiefelhagen, R., Real-time person tracking and pointing gesture recognition for human–robot interaction, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 28–37, 2004.

7. Tosas, M. and Li, B., Virtual touch screen for mixed reality, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 47–57, 2004.

8. Gheorghe, L., Ban, Y., Uehara, K., Exploring interactions specific to mixed reality 3D modeling systems, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 113–123, 200.

9. Siegl, H., Schweighofer, G., Pinz, A., An AR human–computer interface for object localization in a cognitive vision framework, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 167–177, 2004.

10. Hosoya, E., Sato, H., Kitabata, M., Harada, I., Nojima, H., Onozawa, A., Arm-pointer: 3D pointing interface for real-world interaction, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 70–80, 2004.

11. Kumar, S., Jain, A., Shukla, A.P., Singh, S., Rani, S., A Comparative Analysis of Machine Learning Algorithms for Detection of Organic and Non-Organic Cotton Diseases, in: Mathematical Problems in Engineering, Special Issue—Deep Transfer Learning Models for Complex Multimedia Applications.

12. Stenger, B., Thayananthan, A., Torr, P., Cipolla, R., Hand pose estimation using hierarchical detection, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 102–112, 2004.

13. Yamazoe, H., Utsumi, A., Tetsutani, N., Yachida, M., A novel wearable system for capturing user view images, in: International Workshop on Human-Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 156–166, 2004.

14. Tsukizawa, S., Sumi, K., Matsuyama, T., 3D digitization of a hand-held object with a wearable vision sensor, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 124–134, 2004.

15. Dornaika, F. and Ahlberg, J., Model-based head and facial motion tracking, in: International Workshop on Human-Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 211–221, 2004.

16. Sun, Y., Sebe, N., Lew, M., Gevers, T., Authentic emotion detection in real-time video, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 92–101, 2004.

17. Lee, H.S. and Kim, D., Pose invariant face recognition using linear pose transformation in feature space, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 200–210, 2004.

18. Wang, J.G., Sung, E., Venkateswarlu, R., EM enhancement of 3D head pose estimated by perspective invariance, in: International Workshop on Human-Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 178–188, 2004.

19. Du, Y. and Lin, X., Multi-view face image synthesis using factorization model, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 189–199, 2004.

20. Kleindienst, J., Macek, T., Seredi, L., Sedivy, J., Djinn: Interaction framework for home environment using speech and vision, in: International Workshop on Human–Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 145–155, 2004.

21. Okatani, I. and Takuichi, N., Location-based information support system using multiple cameras and LED light sources with the compact battery-less information terminal (CoBIT), in: International Workshop on Human-Computer Interaction, Lecture Notes in Computer Science, vol. 3058, Springer, pp. 135–144, 2004.

22. Kumar, K.R. and Raja, R., Broadcasting the Transaction System by Using Blockchain Technology. pp. 2115–21, Design Engineering, India, June 2021, http://thedesignengineering.com/index.php/DE/article/view/1912.

23. Jeng., A selected history of expectation bias in physics. Am. J. Phys., 74, 578–583, 2006.

24. Shukla, S. and Raja, R., Digital Image Fusion using Adaptive Neuro-Fuzzy Inference System. Int. J. New Technol. Res. (IJNTR), 2, 5, 101–104, 2016.

25. Ankerst, M., Visual Data Mining. Ph.D. thesis, Faculty of Mathematics and Computer Science, University of Munich, Germany, 2000.

26. Asimov, D., The grand tour: a tool for viewing multidimensional data. SIAM J. Sci. Stat. Comput., 6, 1, 128–143, 1985.

27. Wirth, R. and Hipp, J., CRISP-DM. Towards a standard process model for data mining, in: Proceedings of the 4th International Conference on the Practical Applications of Knowledge Discovery and Data Mining, pp. 29–39, 2000.

28. Fayyad, U., Piatetsky-Shapiro, G., Smyth, P., The KDD process for extracting useful knowledge from volumes of data. Commun. ACM, 39, 27–34, 1996.

29. Schmidt, B., Theory of Mind Player Modeling [Bachelor Thesis], University of Applied Sciences, Erfurt, Germany, 2014.

30. Jantke, K.P., Schmidt, B., Schnappauf, R., Next-generation learner modeling by the theory of mind model induction, in: Proceedings of the 8th International Conference on Computer Supported Education (CSEDU 2016), Rome, Italy, 21–23 April 2016, SCITEPRESS, Sétubal, pp. 499–506.

31. Raja, R., Kumar, S., Rashid, Md., Color Object Detection Based Image Retrieval using ROI Segmentation with Multi-Feature Method. Wirel. Pers. Commun. Springer J., 1–24, 2020, https://link.springer.com/article/10.1007/s11277-019-07021-6, https://doi.org/10.1007/s11277-019-07021-6.

32. Arnold, O., Drefahl, S., Fujima, J., Jantke, K.P., Vogler, C., Dynamic identification by enumeration for co-operative knowledge discovery. IADIS Int. J. Comput. Sci. Inf. Syst., 12, 65–85, 2017.

33. Jantke, K.P. and Beick, H.R., Combining postulates of naturalness in inductive inference, in: EIK, vol. 17, pp. 465–484, 1981.

34. Gödel, K., Über formal unentscheidbare Sätze der “Principia Mathematica” und verwandter Systeme. Mon. Math. Phys., 38, 173–198, 1931.

35. Tiwari, L., Raja, R., Awasthi, V., Miri, R., Sinha, G.R., Alkinani, M.H., Polat, K., Detection of lung nodule and cancer using novel Mask-3 FCM and TWEDLNN algorithms. Measurement, 172, 1–14, 2021, 108882, https://doi.org/10.1016/j.measurement.2020.108882.

36. Panwar, M. and Mehra, P.S., Hand Gesture Recognition for Human–Computer Interaction. International Conference on Image Information Processing, India, 2011.

37. Sahu, A.K., Sharma, S., Tanveer, M., Internet of Things attack detection using hybrid Deep Learning Model. Comput. Commun., 176, 146–154, 2021, https://doi.org/10.1016/j.comcom.2021.05.024.

38. Sarkar, A.R., Sanyal, G., Majumder, S., Hand Gesture Recognition Systems: A Survey. Int. J. Comput. Appl., 71, 15, 26–37, May 2013.

39. Manjunath, A.E., Vijaya Kumar, B.P., Rajesh, H., Comparative Study of Hand Gesture Recognition Algorithms. Int. J. Res. Comput. Commun. Technol., 3, 4, 25–37, April-2014.

40. Dnyanada, R., Jadhav, L., M.R., Lobo, J., Navigation of PowerPoint Using Hand Gestures. Int. J. Sci. Res. (IJSR), 4, 833–837, 2015.

41. Patra, R.K., Raja, R., Sinha, T.S., Mahmood, Md R., Image Registration and Rectification using Background Subtraction method for Information security to justify Cloning Mechanism using High-End Computing Techniques. 3rd International Conference on Computational Intelligence and Informatics (ICCII-2018), held during 28–29 Dec 2018.

42. Xu, P. and Department of Electrical and Computer Engineering, University of Minnesota, A Real-time Hand Gesture Recognition and Human-Computer Interaction System, Research Paper, Xie Pu, University of Minnesota, USA, April 2017.

43. Suganya, P., Sathya, R., Vijayalakshmi, K., Detection, and Recognition of Gestures To Control The System Applications by Neural Networks. Int. J. Pure Appl. Math., 118, 399–405, 2018.

44. Chandrakar, R., Raja, R., Miri, R., Tandan, S.R.K., Laxmi, R., Detection and Identification of Animals in Wild Life Sancturies using Convolutional Neural Network. Int. J. Recent Technol. Eng. (IJRTE), 8, 5, 181–185, January 2020.

- *Corresponding author: [email protected]

- †Corresponding author: [email protected]

- ‡Corresponding author: [email protected]

- §Corresponding author: [email protected]