CHAPTER

7

Signaling over AWGN Channels

7.1 Introduction

Chapter 6 on the conversion of analog waveforms into coded pulses represents the transition from analog communications to digital communications. This transition has been empowered by several factors:

1. Ever-increasing advancement of digital silicon chips, digital signal processing, and computers, which, in turn, has prompted further enhancement in digital silicon chips, thereby repeating the cycle of improvement.

2. Improved reliability, which is afforded by digital communications to a much greater extent than is possible with analog communications.

3. Broadened range of multiplexing of users, which is enabled by the use of digital modulation techniques.

4. Communication networks, for which, in one form or another, the use of digital communications is the preferred choice.

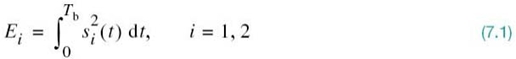

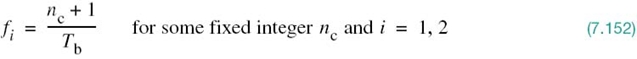

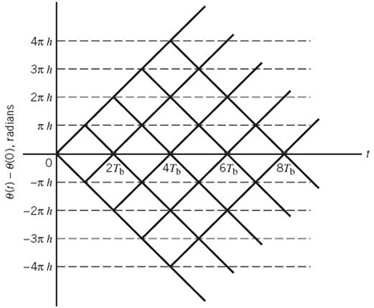

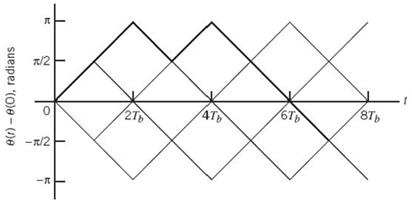

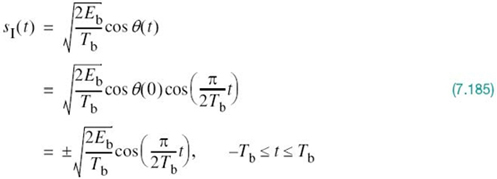

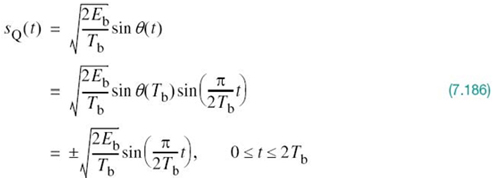

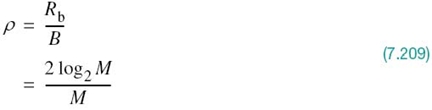

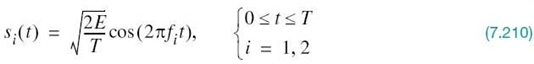

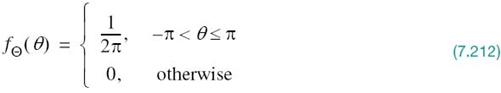

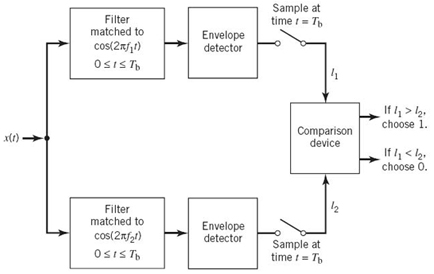

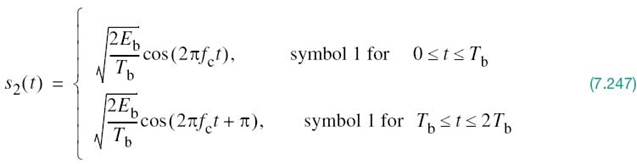

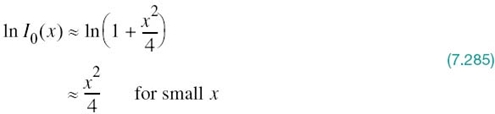

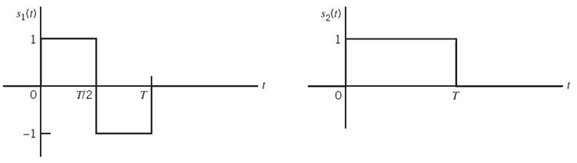

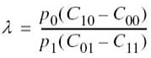

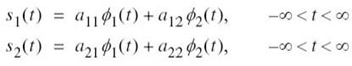

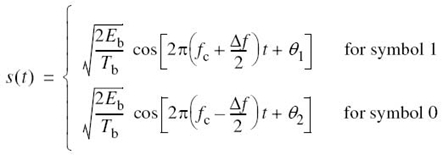

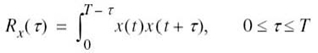

In light of these compelling factors, we may justifiably say that we live in a “digital communications world.” For an illustrative example, consider the remote connection of two digital computers, with one computer acting as the information source by calculating digital outputs based on observations and inputs fed into it; the other computer acts as the recipient of the information. The source output consists of a sequence of 1s and 0s, with each binary symbol being emitted every Tb seconds. The transmitting part of the digital communication system takes the 1s and 0s emitted by the source computer and encodes them into distinct signals denoted by s1(t) and s2(t), respectively, which are suitable for transmission over the analog channel. Both s1(t) and s2(t) are real-valued energy signals, as shown by

$$E_i = \int_0^{T_b} s_i^2(t)\,dt < \infty, \qquad i = 1, 2 \tag{7.1}$$

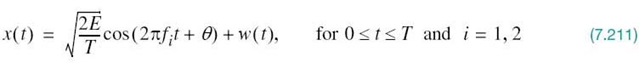

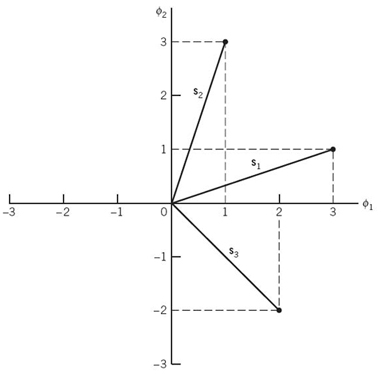

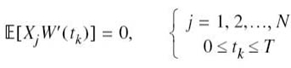

With the analog channel represented by an AWGN model, depicted in Figure 7.1, the received signal is defined by

$$x(t) = s_i(t) + w(t), \qquad 0 \le t \le T_b, \quad i = 1, 2 \tag{7.2}$$

where w(t) is the channel noise. The receiver has the task of observing the received signal x(t) for a duration of Tb seconds and then making an estimate of the transmitted signal si(t), or equivalently the ith symbol, i = 1, 2. However, owing to the presence of channel noise, the receiver will inevitably make occasional errors. The requirement, therefore, is to design the receiver so as to minimize the average probability of symbol error, defined as

$$P_e = \pi_1\,P(\hat{m} = 0 \mid 1\ \text{sent}) + \pi_2\,P(\hat{m} = 1 \mid 0\ \text{sent}) \tag{7.3}$$

where π1 and π2 are the prior probabilities of transmitting symbols 1 and 0, respectively, and m̂ is the estimate of the symbol 1 or 0 sent by the source, which is computed by the receiver. The terms P(m̂ = 0 | 1 sent) and P(m̂ = 1 | 0 sent) are conditional probabilities.

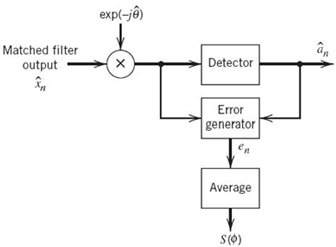

Figure 7.1 AWGN model of a channel.

In minimizing the average probability of symbol error between the receiver output and the symbol emitted by the source, the motivation is to make the digital communication system as reliable as possible. To achieve this important design objective in a generic setting that involves an M-ary alphabet whose symbols are denoted by m1, m2,…,mM, we have to understand two basic issues:

1. How to optimize the design of the receiver so as to minimize the average probability of symbol error.

2. How to choose the set of signals s1(t), s2(t), …, sM(t) for representing the symbols m1, m2,…,mM, respectively, since this choice affects the average probability of symbol error.

The key question is how to develop this understanding in a principled as well as insightful manner. The answer to this fundamental question is found in the geometric representation of signals.

7.2 Geometric Representation of Signals

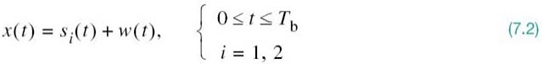

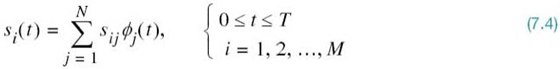

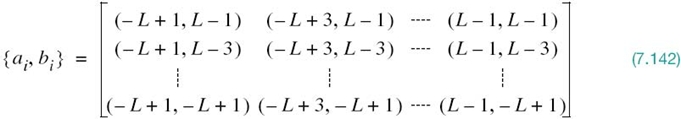

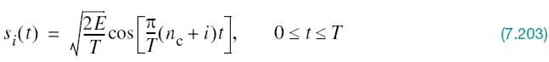

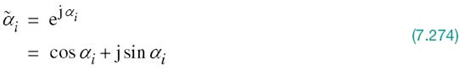

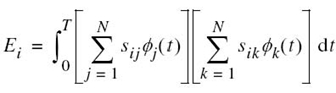

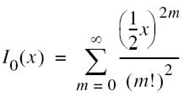

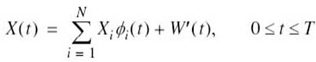

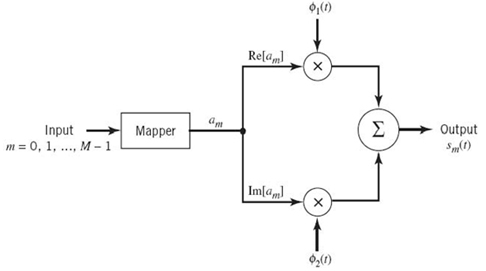

The essence of geometric representation of signals is to represent any set of M energy signals {si(t)} as linear combinations of N orthonormal basis functions, where N ≤ M. That is to say, given a set of real-valued energy signals, s1(t), s2(t),…,sM(t), each of duration T seconds, we write

$$s_i(t) = \sum_{j=1}^{N} s_{ij}\,\phi_j(t), \qquad 0 \le t \le T, \quad i = 1, 2, \ldots, M \tag{7.4}$$

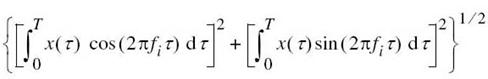

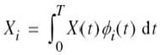

where the coefficients of the expansion are defined by

$$s_{ij} = \int_0^T s_i(t)\,\phi_j(t)\,dt, \qquad i = 1, 2, \ldots, M, \quad j = 1, 2, \ldots, N \tag{7.5}$$

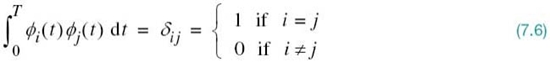

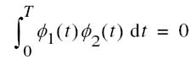

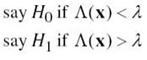

The real-valued basis functions ϕ1(t), ϕ2(t),…,ϕN(t) form an orthonormal set, by which we mean

$$\int_0^T \phi_i(t)\,\phi_j(t)\,dt = \delta_{ij} = \begin{cases} 1, & i = j \\ 0, & i \neq j \end{cases} \tag{7.6}$$

where δij is the Kronecker delta. The first condition of (7.6) states that each basis function is normalized to have unit energy. The second condition states that the basis functions ϕ1(t), ϕ2(t),…,ϕN(t) are orthogonal with respect to each other over the interval 0 ≤ t ≤ T.

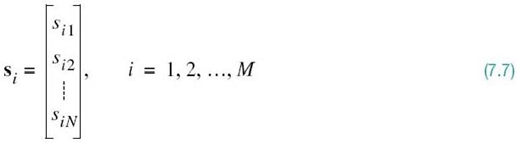

For prescribed i, the set of coefficients $\{s_{ij}\}_{j=1}^{N}$ may be viewed as an N-dimensional signal vector, denoted by si. The important point to note here is that the vector si bears a one-to-one relationship with the transmitted signal si(t):

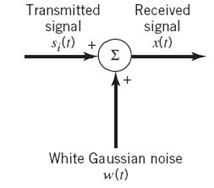

- Given the N elements of the vector si operating as input, we may use the scheme shown in Figure 7.2a to generate the signal si(t), which follows directly from (7.4). This figure consists of a bank of N multipliers with each multiplier having its own basis function followed by a summer. The scheme of Figure 7.2a may be viewed as a synthesizer.

- Conversely, given the signals si(t), i = 1,2,…,M, operating as input, we may use the scheme shown in Figure 7.2b to calculate the coefficients si1, si2, …, siN, which follows directly from (7.5). This second scheme consists of a bank of N product-integrators or correlators with a common input, and with each one of them supplied with its own basis function. The scheme of Figure 7.2b may be viewed as an analyzer.
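
The synthesizer and analyzer can be sketched numerically by replacing each integral with a sum over samples. The following is a minimal illustration rather than a definitive implementation: the duration `T`, the sample count `K`, the sinusoidal basis pair, and the vector `s_vec` are all assumptions chosen for the example, not values taken from the text.

```python
import math

T = 1.0    # signal duration in seconds (assumed value)
K = 1000   # number of samples approximating each integral (assumed value)
dt = T / K
t = [(n + 0.5) * dt for n in range(K)]  # midpoint sampling grid on [0, T]

# Two orthonormal basis functions on [0, T]; this sinusoidal pair is only
# an illustrative choice, the text does not prescribe a particular basis.
phi = [
    [math.sqrt(2.0 / T) * math.cos(2.0 * math.pi * tn / T) for tn in t],
    [math.sqrt(2.0 / T) * math.sin(2.0 * math.pi * tn / T) for tn in t],
]

def synthesize(vec):
    """Synthesizer: s_i(t) = sum over j of s_ij * phi_j(t)."""
    return [sum(c * p[n] for c, p in zip(vec, phi)) for n in range(K)]

def analyze(signal):
    """Analyzer: s_ij = integral over [0, T] of s_i(t) * phi_j(t) dt."""
    return [sum(s * pn for s, pn in zip(signal, p)) * dt for p in phi]

s_vec = [3.0, -1.0]                      # hypothetical signal vector, N = 2
s_t = synthesize(s_vec)                  # sampled waveform s_i(t)
recovered = analyze(s_t)                 # coefficients recovered by correlation
energy = sum(s * s for s in s_t) * dt    # approximates the signal energy
```

Feeding the synthesizer output into the analyzer returns the original coefficients to within rounding error, which is the one-to-one relationship between si and si(t) in sampled form. The computed `energy` also matches the sum of the squared coefficients.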

Figure 7.2 (a) Synthesizer for generating the signal si(t). (b) Analyzer for reconstructing the signal vector {si}.

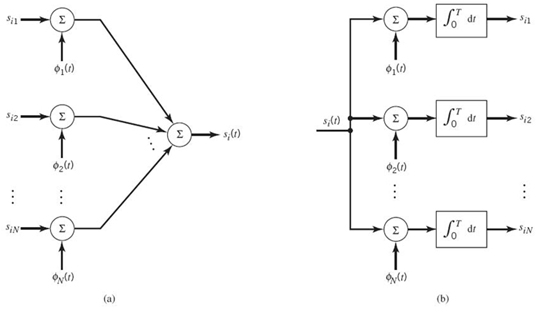

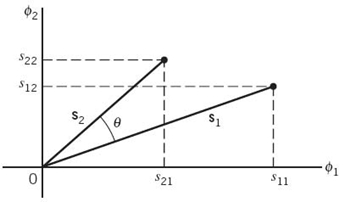

Figure 7.3 Illustrating the geometric representation of signals for the case when N = 2 and M = 3.

Accordingly, we may state that each signal in the set {si(t)} is completely determined by the signal vector

$$\mathbf{s}_i = \begin{bmatrix} s_{i1} \\ s_{i2} \\ \vdots \\ s_{iN} \end{bmatrix}, \qquad i = 1, 2, \ldots, M \tag{7.7}$$

Furthermore, if we conceptually extend our conventional notion of two- and three-dimensional Euclidean spaces to an N-dimensional Euclidean space, we may visualize the set of signal vectors {si|i = 1,2,…,M} as defining a corresponding set of M points in an N-dimensional Euclidean space, with N mutually perpendicular axes labeled ϕ1, ϕ2,…,ϕN. This N-dimensional Euclidean space is called the signal space.

The idea of visualizing a set of energy signals geometrically, as just described, is of profound theoretical and practical importance. It provides the mathematical basis for the geometric representation of energy signals in a conceptually satisfying manner. This form of representation is illustrated in Figure 7.3 for the case of a two-dimensional signal space with three signals; that is, N = 2 and M = 3.

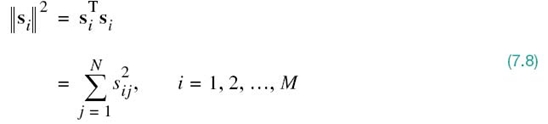

In an N-dimensional Euclidean space, we may define lengths of vectors and angles between vectors. It is customary to denote the length (also called the absolute value or norm) of a signal vector si by the symbol ||si||. The squared length of any signal vector si is defined to be the inner product or dot product of si with itself, as shown by

$$\|\mathbf{s}_i\|^2 = \mathbf{s}_i^{\mathsf T}\mathbf{s}_i = \sum_{j=1}^{N} s_{ij}^2, \qquad i = 1, 2, \ldots, M \tag{7.8}$$

where sij is the jth element of si and the superscript T denotes matrix transposition.

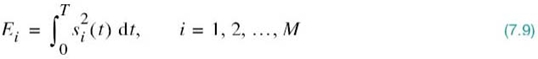

There is an interesting relationship between the energy content of a signal and its representation as a vector. By definition, the energy of a signal si(t) of duration T seconds is

$$E_i = \int_0^T s_i^2(t)\,dt, \qquad i = 1, 2, \ldots, M \tag{7.9}$$

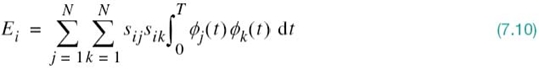

Therefore, substituting (7.4) into (7.9), we get

$$E_i = \int_0^T \left[\sum_{j=1}^{N} s_{ij}\,\phi_j(t)\right]\left[\sum_{k=1}^{N} s_{ik}\,\phi_k(t)\right] dt$$

Interchanging the order of summation and integration, which we can do because they are both linear operations, and then rearranging terms we get

$$E_i = \sum_{j=1}^{N}\sum_{k=1}^{N} s_{ij}\,s_{ik} \int_0^T \phi_j(t)\,\phi_k(t)\,dt \tag{7.10}$$

Since, by definition, the ϕj(t) form an orthonormal set in accordance with the two conditions of (7.6), we find that (7.10) reduces simply to

$$E_i = \sum_{j=1}^{N} s_{ij}^2 = \|\mathbf{s}_i\|^2 \tag{7.11}$$

Thus, (7.8) and (7.11) show that the energy of an energy signal si(t) is equal to the squared length of the corresponding signal vector si.

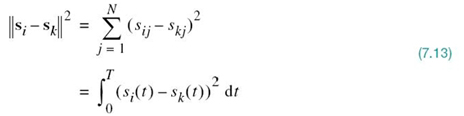

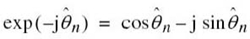

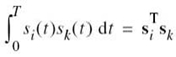

In the case of a pair of signals si(t) and sk(t) represented by the signal vectors si and sk, respectively, we may also show that

$$\int_0^T s_i(t)\,s_k(t)\,dt = \mathbf{s}_i^{\mathsf T}\mathbf{s}_k \tag{7.12}$$

Equation (7.12) states:

The inner product of the energy signals si(t) and sk(t) over the interval [0, T] is equal to the inner product of their respective vector representations si and sk.

Note that the inner product $\mathbf{s}_i^{\mathsf T}\mathbf{s}_k$ is invariant to the choice of basis functions $\{\phi_j(t)\}_{j=1}^{N}$, in that it only depends on the components of the signals si(t) and sk(t) projected onto each of the basis functions.

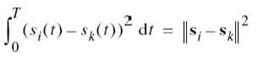

Yet another useful relation involving the vector representations of the energy signals si(t) and sk(t) is described by

$$\|\mathbf{s}_i - \mathbf{s}_k\|^2 = \sum_{j=1}^{N} (s_{ij} - s_{kj})^2 = \int_0^T \bigl(s_i(t) - s_k(t)\bigr)^2\,dt \tag{7.13}$$

where $\|\mathbf{s}_i - \mathbf{s}_k\|$ is the Euclidean distance dik between the points represented by the signal vectors si and sk.

To complete the geometric representation of energy signals, we need to have a representation for the angle θik subtended between two signal vectors si and sk. By definition, the cosine of the angle θik is equal to the inner product of these two vectors divided by the product of their individual norms, as shown by

$$\cos\theta_{ik} = \frac{\mathbf{s}_i^{\mathsf T}\mathbf{s}_k}{\|\mathbf{s}_i\|\,\|\mathbf{s}_k\|} \tag{7.14}$$

The two vectors si and sk are thus orthogonal or perpendicular to each other if their inner product $\mathbf{s}_i^{\mathsf T}\mathbf{s}_k$ is zero, in which case θik = 90°; this condition is intuitively satisfying.
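
Once signals are reduced to vectors, energy, distance, and angle become ordinary vector arithmetic. The sketch below illustrates this for three hypothetical signal vectors in a two-dimensional signal space; the vectors `s1`, `s2`, `s3` and the helper names are assumptions made for the example.

```python
import math

def inner(a, b):
    """Inner product of two signal vectors."""
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    """Length of a signal vector; its square is the signal energy."""
    return math.sqrt(inner(a, a))

def distance(a, b):
    """Euclidean distance between two signal points."""
    return norm([x - y for x, y in zip(a, b)])

def angle_deg(a, b):
    """Angle between two signal vectors, in degrees."""
    c = inner(a, b) / (norm(a) * norm(b))
    c = max(-1.0, min(1.0, c))   # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(c))

# Hypothetical signal vectors (N = 2, M = 3).
s1 = [1.0, 1.0]
s2 = [1.0, -1.0]
s3 = [2.0, 0.0]

energy_s1 = inner(s1, s1)        # squared length of s1
d12 = distance(s1, s2)           # distance between s1 and s2
theta12 = angle_deg(s1, s2)      # s1 and s2 have zero inner product
theta13 = angle_deg(s1, s3)
```

Here `s1` and `s2` have zero inner product, so the angle between them comes out as a right angle, in line with the orthogonality condition stated above.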

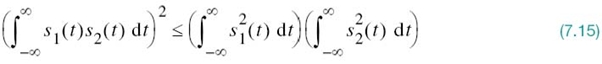

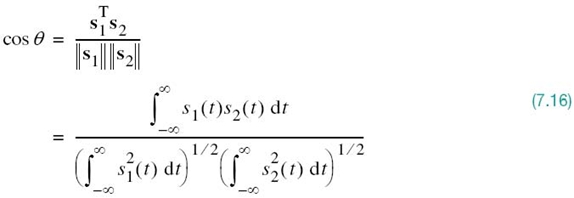

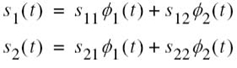

EXAMPLE 1 The Schwarz Inequality

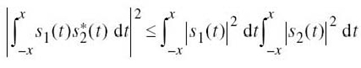

Consider any pair of energy signals s1(t) and s2(t). The Schwarz inequality states

$$\left(\int_{-\infty}^{\infty} s_1(t)\,s_2(t)\,dt\right)^2 \le \left(\int_{-\infty}^{\infty} s_1^2(t)\,dt\right)\left(\int_{-\infty}^{\infty} s_2^2(t)\,dt\right) \tag{7.15}$$

The equality holds if, and only if, s2(t) = cs1(t), where c is any constant.

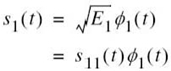

To prove this important inequality, let s1(t) and s2(t) be expressed in terms of the pair of orthonormal basis functions ϕ1(t) and ϕ2(t) as follows:

$$s_1(t) = s_{11}\,\phi_1(t) + s_{12}\,\phi_2(t)$$

$$s_2(t) = s_{21}\,\phi_1(t) + s_{22}\,\phi_2(t)$$

where ϕ1(t) and ϕ2(t) satisfy the orthonormality conditions over the time interval (–∞, ∞):

$$\int_{-\infty}^{\infty} \phi_i(t)\,\phi_j(t)\,dt = \delta_{ij} = \begin{cases} 1, & i = j \\ 0, & i \neq j \end{cases}$$

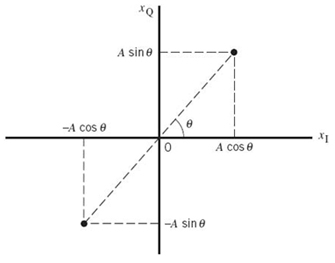

On this basis, we may represent the signals s1(t) and s2(t) by the following respective pair of vectors, as illustrated in Figure 7.4:

$$\mathbf{s}_1 = \begin{bmatrix} s_{11} \\ s_{12} \end{bmatrix}, \qquad \mathbf{s}_2 = \begin{bmatrix} s_{21} \\ s_{22} \end{bmatrix}$$

Figure 7.4 Vector representations of signals s1(t) and s2(t), providing the background picture for proving the Schwarz inequality.

From Figure 7.4 we readily see that the cosine of angle θ subtended between the vectors s1 and s2 is

$$\begin{aligned} \cos\theta &= \frac{\mathbf{s}_1^{\mathsf T}\mathbf{s}_2}{\|\mathbf{s}_1\|\,\|\mathbf{s}_2\|} \\ &= \frac{\displaystyle\int_{-\infty}^{\infty} s_1(t)\,s_2(t)\,dt}{\left(\displaystyle\int_{-\infty}^{\infty} s_1^2(t)\,dt\right)^{1/2}\left(\displaystyle\int_{-\infty}^{\infty} s_2^2(t)\,dt\right)^{1/2}} \end{aligned} \tag{7.16}$$

where we have made use of (7.14) and (7.12). Recognizing that |cosθ | ≤ 1, the Schwarz inequality of (7.15) immediately follows from (7.16). Moreover, from the first line of (7.16) we note that |cosθ | = 1 if, and only if, s2 = cs1; that is, s2(t) = cs1(t), where c is an arbitrary constant.

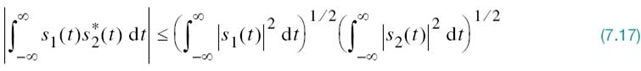

Proof of the Schwarz inequality, as presented here, applies to real-valued signals. It may be readily extended to complex-valued signals, in which case (7.15) is reformulated as

$$\left|\int_{-\infty}^{\infty} s_1(t)\,s_2^*(t)\,dt\right|^2 \le \left(\int_{-\infty}^{\infty} |s_1(t)|^2\,dt\right)\left(\int_{-\infty}^{\infty} |s_2(t)|^2\,dt\right) \tag{7.17}$$

where the asterisk denotes complex conjugation and the equality holds if, and only if, s2(t) = cs1(t), where c is a constant.

Gram–Schmidt Orthogonalization Procedure

Having demonstrated the elegance of the geometric representation of energy signals with an example, how do we justify it in mathematical terms? The answer to this question lies in the Gram–Schmidt orthogonalization procedure, for which we need a complete orthonormal set of basis functions. To proceed with the formulation of this procedure, suppose we have a set of M energy signals denoted by s1(t), s2(t),…,sM(t). Starting with s1(t) chosen from this set arbitrarily, the first basis function is defined by

$$\phi_1(t) = \frac{s_1(t)}{\sqrt{E_1}} \tag{7.18}$$

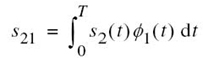

where E1 is the energy of the signal s1(t).

Then, clearly, we have

$$s_1(t) = \sqrt{E_1}\,\phi_1(t) = s_{11}\,\phi_1(t)$$

where the coefficient $s_{11} = \sqrt{E_1}$ and ϕ1(t) has unit energy as required.

Next, using the signal s2(t), we define the coefficient s21 as

$$s_{21} = \int_0^T s_2(t)\,\phi_1(t)\,dt$$

We may thus introduce a new intermediate function

$$g_2(t) = s_2(t) - s_{21}\,\phi_1(t) \tag{7.19}$$

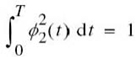

which is orthogonal to ϕ1(t) over the interval 0 ≤ t ≤ T by virtue of the definition of s21 and the fact that the basis function ϕ1(t) has unit energy. Now, we are ready to define the second basis function as

$$\phi_2(t) = \frac{g_2(t)}{\sqrt{\displaystyle\int_0^T g_2^2(t)\,dt}} \tag{7.20}$$

Substituting (7.19) into (7.20) and simplifying, we get the desired result

$$\phi_2(t) = \frac{s_2(t) - s_{21}\,\phi_1(t)}{\sqrt{E_2 - s_{21}^2}} \tag{7.21}$$

where E2 is the energy of the signal s2(t). From (7.20) we readily see that

$$\int_0^T \phi_2^2(t)\,dt = 1$$

in which case (7.21) yields

$$\int_0^T \phi_1(t)\,\phi_2(t)\,dt = 0$$

That is to say, ϕ1(t) and ϕ2(t) form an orthonormal pair as required.

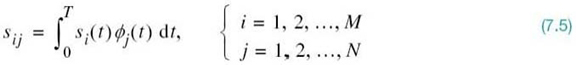

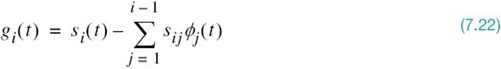

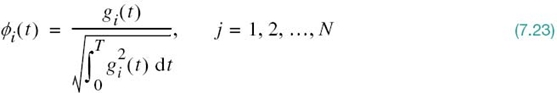

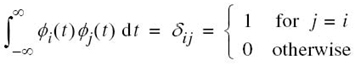

Continuing the procedure in this fashion, we may, in general, define

$$g_i(t) = s_i(t) - \sum_{j=1}^{i-1} s_{ij}\,\phi_j(t) \tag{7.22}$$

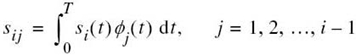

where the coefficients sij are themselves defined by

$$s_{ij} = \int_0^T s_i(t)\,\phi_j(t)\,dt, \qquad j = 1, 2, \ldots, i - 1$$

For i = 1, the function gi(t) reduces to si(t).

Given the gi(t), we may now define the set of basis functions

$$\phi_i(t) = \frac{g_i(t)}{\sqrt{\displaystyle\int_0^T g_i^2(t)\,dt}}, \qquad i = 1, 2, \ldots, N \tag{7.23}$$

which form an orthonormal set. The dimension N is less than or equal to the number of given signals, M, depending on one of two possibilities:

- The signals s1(t), s2(t),…,sM(t) form a linearly independent set, in which case N = M.

- The signals s1(t), s2(t),…,sM(t) are not linearly independent, in which case N < M and the intermediate function gi(t) is zero for i > N.

Note that the conventional Fourier series expansion of a periodic signal, discussed in Chapter 2, may be viewed as a special case of the Gram–Schmidt orthogonalization procedure. Moreover, the representation of a band-limited signal in terms of its samples taken at the Nyquist rate, discussed in Chapter 6, may be viewed as another special case. However, in saying what we have here, two important distinctions should be made:

1. The form of the basis functions ϕ1(t), ϕ2(t),…,ϕN(t) has not been specified. That is to say, unlike the Fourier series expansion of a periodic signal or the sampled representation of a band-limited signal, we have not restricted the Gram–Schmidt orthogonalization procedure to be in terms of sinusoidal functions (as in the Fourier series) or sinc functions of time (as in the sampling process).

2. The expansion of the signal si(t) in terms of a finite number of terms is not an approximation wherein only the first N terms are significant; rather, it is an exact expression, where N and only N terms are significant.
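
The Gram–Schmidt orthogonalization procedure described above can be sketched on sampled signals, with integrals replaced by sums. This is an illustration under stated assumptions rather than a reference implementation: the four rectangular pulses, the sample count `K`, and the tolerance `tol` used to decide when an intermediate function is effectively zero are all hypothetical choices. The fourth signal is deliberately the sum of the first and third, so the set is not linearly independent.

```python
import math

def inner(a, b, dt):
    """Approximate the integral of a(t) * b(t) over [0, T] by a sum."""
    return sum(x * y for x, y in zip(a, b)) * dt

def gram_schmidt(signals, dt, tol=1e-9):
    """Return orthonormal basis functions spanning the given sampled signals."""
    basis = []
    for s in signals:
        g = list(s)                              # intermediate function g_i(t)
        for phi in basis:
            s_ij = inner(s, phi, dt)             # projection of s_i onto phi_j
            g = [gn - s_ij * pn for gn, pn in zip(g, phi)]
        energy = inner(g, g, dt)
        if energy > tol:                         # skip signals already spanned
            scale = 1.0 / math.sqrt(energy)
            basis.append([scale * gn for gn in g])
    return basis

T, K = 1.0, 300        # assumed duration and number of samples
dt = T / K

def pulse(start, stop):
    """Unit-amplitude rectangular pulse over sample indices [start, stop)."""
    return [1.0 if start <= n < stop else 0.0 for n in range(K)]

# Hypothetical signal set with M = 4; the last signal equals s1 + s3.
signals = [pulse(0, 100), pulse(0, 200), pulse(100, 300), pulse(0, 300)]
basis = gram_schmidt(signals, dt)

# Gram matrix of the basis; it should be close to the identity matrix.
gram = [[inner(a, b, dt) for b in basis] for a in basis]
```

Because the fourth pulse is a linear combination of earlier signals, its intermediate function vanishes and the procedure returns N = 3 basis functions for M = 4 signals, matching the second of the two possibilities listed above.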

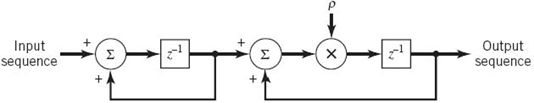

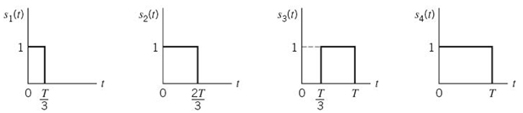

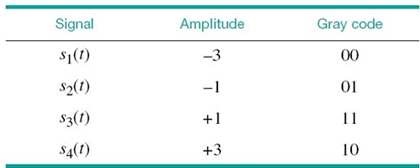

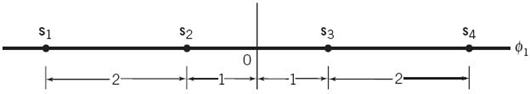

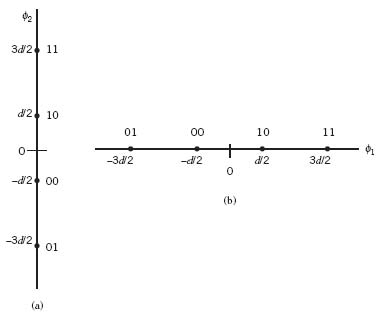

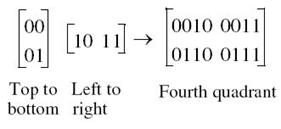

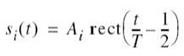

EXAMPLE 2 2B1Q Code

The 2B1Q code is the North American line code for a special class of modems called digital subscriber lines. This code represents a quaternary PAM signal as shown in the Gray-encoded alphabet of Table 7.1. The four possible signals s1(t), s2(t), s3(t), and s4(t) are amplitude-scaled versions of a Nyquist pulse. Each signal represents a dibit (i.e., pair of bits). The issue of interest is to find the vector representation of the 2B1Q code.

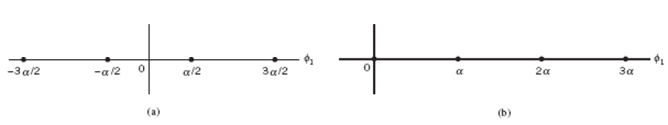

This example is simple enough for us to solve it by inspection. Let ϕ1(t) denote a pulse normalized to have unit energy. The ϕ1(t) so defined is the only basis function for the vector representation of the 2B1Q code. Accordingly, the signal-space representation of this code is as shown in Figure 7.5. It consists of four signal vectors s1, s2, s3, and s4, which are located on the ϕ1-axis in a symmetric manner about the origin. In this example, we have M = 4 and N = 1.

Table 7.1 Amplitude levels of the 2B1Q code

Figure 7.5 Signal-space representation of the 2B1Q code.

We may generalize the result depicted in Figure 7.5 for the 2B1Q code as follows: the signal-space diagram of an M-ary PAM signal, in general, is one-dimensional with M signal points uniformly positioned on the only axis of the diagram.
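
As a rough illustration of the one-dimensional signal space, the sketch below maps dibits to quaternary amplitudes and then to signal vectors along the single basis function ϕ1(t). The dibit-to-level mapping shown is the one commonly specified for 2B1Q and is an assumption here, since the entries of Table 7.1 are not reproduced in this text; the function names and the `pulse_energy` parameter are likewise hypothetical.

```python
import math

# Assumed 2B1Q mapping: the first bit sets the sign (1 is positive) and the
# second bit sets the magnitude (0 is the outer level 3, 1 is the inner level 1).
LEVELS = {"00": -3, "01": -1, "11": +1, "10": +3}

def encode_2b1q(bits):
    """Map an even-length bit string to a list of quaternary amplitudes."""
    if len(bits) % 2 != 0:
        raise ValueError("2B1Q encodes bits in pairs")
    return [LEVELS[bits[n:n + 2]] for n in range(0, len(bits), 2)]

def signal_vector(amplitude, pulse_energy=1.0):
    """Coefficient along phi_1 for an amplitude-scaled pulse of given energy."""
    return [amplitude * math.sqrt(pulse_energy)]

def is_gray(levels):
    """Check that dibits of neighbouring amplitude levels differ in one bit."""
    ordered = sorted(levels, key=levels.get)
    return all(
        sum(a != b for a, b in zip(ordered[n], ordered[n + 1])) == 1
        for n in range(len(ordered) - 1)
    )

amplitudes = encode_2b1q("00011110")
points = [signal_vector(a) for a in amplitudes]   # M = 4 points, N = 1
gray_ok = is_gray(LEVELS)
```

With a unit-energy pulse, the four signal points sit at the amplitude levels themselves, symmetric about the origin on the ϕ1-axis. The same construction extends to M-ary PAM by enlarging the level table.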

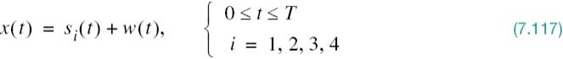

7.3 Conversion of the Continuous AWGN Channel into a Vector Channel

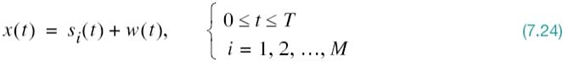

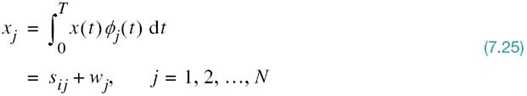

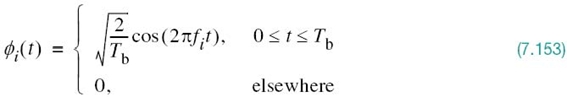

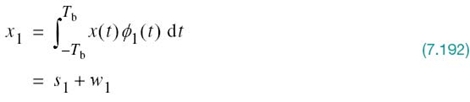

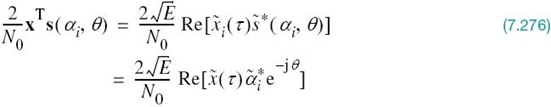

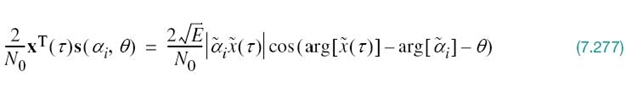

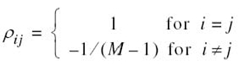

Suppose that the input to the bank of N product integrators or correlators in Figure 7.2b is not the transmitted signal si(t) but rather the received signal x(t) defined in accordance with the AWGN channel of Figure 7.1. That is to say,

$$x(t) = s_i(t) + w(t), \qquad 0 \le t \le T, \quad i = 1, 2, \ldots, M \tag{7.24}$$

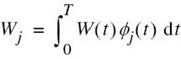

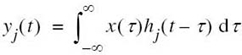

where w(t) is a sample function of the white Gaussian noise process W(t) of zero mean and power spectral density N0/2. Correspondingly, we find that the output of correlator j, say, is the sample value xj of a random variable Xj, defined by

$$x_j = \int_0^T x(t)\,\phi_j(t)\,dt = s_{ij} + w_j, \qquad j = 1, 2, \ldots, N \tag{7.25}$$

The first component, sij, is the deterministic component of xj due to the transmitted signal si(t), as shown by

$$s_{ij} = \int_0^T s_i(t)\,\phi_j(t)\,dt \tag{7.26}$$

The second component, wj, is the sample value of a random variable Wj due to the channel noise w(t), as shown by

$$w_j = \int_0^T w(t)\,\phi_j(t)\,dt \tag{7.27}$$

Consider next a new stochastic process X' (t) whose sample function x' (t) is related to the received signal x(t) as follows:

$$x'(t) = x(t) - \sum_{j=1}^{N} x_j\,\phi_j(t) \tag{7.28}$$

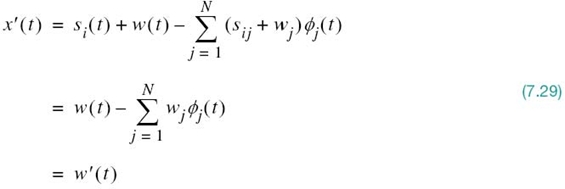

Substituting (7.24) and (7.25) into (7.28), and then using the expansion of (7.4), we get

$$\begin{aligned} x'(t) &= s_i(t) + w(t) - \sum_{j=1}^{N} (s_{ij} + w_j)\,\phi_j(t) \\ &= w(t) - \sum_{j=1}^{N} w_j\,\phi_j(t) \\ &= w'(t) \end{aligned} \tag{7.29}$$

The sample function x' (t), therefore, depends solely on the channel noise w(t). On the basis of (7.28) and (7.29), we may thus express the received signal as

$$x(t) = \sum_{j=1}^{N} x_j\,\phi_j(t) + x'(t) = \sum_{j=1}^{N} x_j\,\phi_j(t) + w'(t) \tag{7.30}$$

Accordingly, we may view w'(t) as a remainder term that must be included on the right-hand side of (7.30) to preserve equality. It is informative to contrast the expansion of the received signal x(t) given in (7.30) with the corresponding expansion of the transmitted signal si(t) given in (7.4): the expansion of (7.4), pertaining to the transmitter, is entirely deterministic; on the other hand, the expansion of (7.30) is random (stochastic) due to the channel noise at the receiver input.

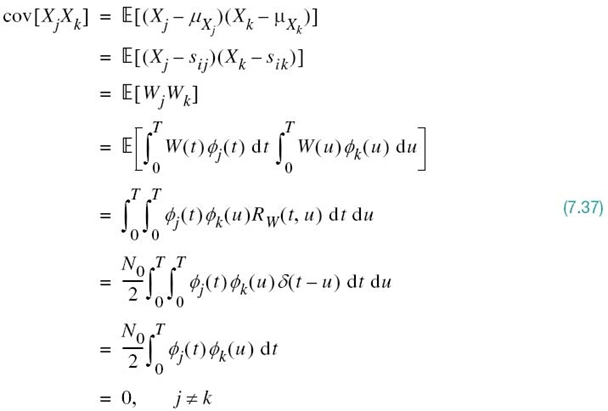

Statistical Characterization of the Correlator Outputs

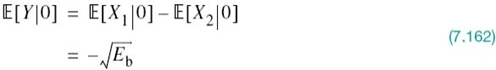

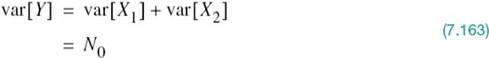

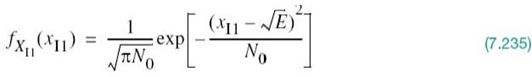

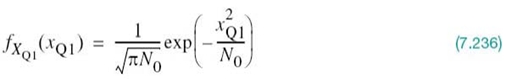

We now wish to develop a statistical characterization of the set of N correlator outputs. Let X(t) denote the stochastic process, a sample function of which is represented by the received signal x(t). Correspondingly, let Xj denote the random variable whose sample value is represented by the correlator output xj, j = 1,2,…,N. According to the AWGN model of Figure 7.1, the stochastic process X(t) is a Gaussian process. It follows, therefore, that Xj is a Gaussian random variable for all j in accordance with Property 1 of a Gaussian process (Chapter 4). Hence, Xj is characterized completely by its mean and variance, which are determined next.

Let Wj denote the random variable represented by the sample value wj produced by the jth correlator in response to the white Gaussian noise component w(t). The random variable Wj has zero mean because the channel noise process W(t) represented by w(t) in the AWGN model of Figure 7.1 has zero mean by definition. Consequently, the mean of Xj depends only on sij, as shown by

$$\mu_{X_j} = \mathbb{E}[X_j] = \mathbb{E}[s_{ij} + W_j] = s_{ij} + \mathbb{E}[W_j] = s_{ij} \tag{7.31}$$

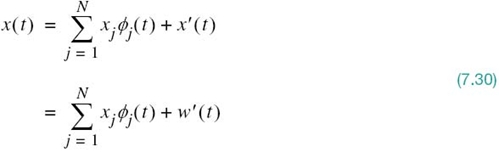

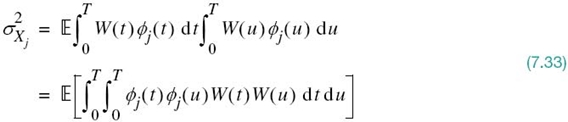

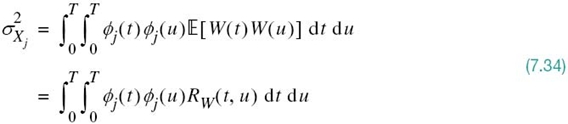

To find the variance of Xj, we start with the definition

$$\begin{aligned} \sigma_{X_j}^2 &= \operatorname{var}[X_j] \\ &= \mathbb{E}\bigl[(X_j - s_{ij})^2\bigr] \\ &= \mathbb{E}\bigl[W_j^2\bigr] \end{aligned} \tag{7.32}$$

where the last line follows from (7.25) with xj and wj replaced by Xj and Wj, respectively. According to (7.27), the random variable Wj is defined by

$$W_j = \int_0^T W(t)\,\phi_j(t)\,dt \tag{7.33}$$

We may therefore expand (7.32) as

$$\sigma_{X_j}^2 = \mathbb{E}\left[\int_0^T W(t)\,\phi_j(t)\,dt \int_0^T W(u)\,\phi_j(u)\,du\right] = \mathbb{E}\left[\int_0^T\!\!\int_0^T \phi_j(t)\,\phi_j(u)\,W(t)\,W(u)\,dt\,du\right]$$

Interchanging the order of integration and expectation, which we can do because they are both linear operations, we obtain

$$\sigma_{X_j}^2 = \int_0^T\!\!\int_0^T \phi_j(t)\,\phi_j(u)\,\mathbb{E}[W(t)\,W(u)]\,dt\,du = \int_0^T\!\!\int_0^T \phi_j(t)\,\phi_j(u)\,R_W(t, u)\,dt\,du \tag{7.34}$$

where RW(t, u) is the autocorrelation function of the noise process W(t). Since this noise is stationary, RW(t, u) depends only on the time difference t – u. Furthermore, since W(t) is white with a constant power spectral density N0/2, we may express RW(t, u) as

$$R_W(t, u) = \frac{N_0}{2}\,\delta(t - u) \tag{7.35}$$

Therefore, substituting (7.35) into (7.34) and then using the sifting property of the delta function δ(t), we get

$$\sigma_{X_j}^2 = \frac{N_0}{2} \int_0^T\!\!\int_0^T \phi_j(t)\,\phi_j(u)\,\delta(t - u)\,dt\,du = \frac{N_0}{2} \int_0^T \phi_j^2(t)\,dt$$

Since the ϕj(t) have unit energy, by definition, the expression for noise variance $\sigma_{X_j}^2$ reduces to

$$\sigma_{X_j}^2 = \frac{N_0}{2} \qquad \text{for all } j \tag{7.36}$$

This important result shows that all the correlator outputs, denoted by Xj with j = 1,2,…,N, have a variance equal to the power spectral density N0/2 of the noise process W(t).

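Moreover, since the basis functions ϕj(t) form an orthonormal set, Xj and Xk are mutually uncorrelated, as shown by

$$\begin{aligned} \operatorname{cov}[X_j X_k] &= \mathbb{E}\bigl[(X_j - s_{ij})(X_k - s_{ik})\bigr] \\ &= \mathbb{E}[W_j W_k] \\ &= \int_0^T\!\!\int_0^T \phi_j(t)\,\phi_k(u)\,R_W(t, u)\,dt\,du \\ &= \frac{N_0}{2} \int_0^T \phi_j(t)\,\phi_k(t)\,dt \\ &= 0, \qquad j \neq k \end{aligned} \tag{7.37}$$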

Since the Xj are Gaussian random variables, (7.37) implies that they are also statistically independent in accordance with Property 4 of a Gaussian process (Chapter 4).
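
The mean, variance, and uncorrelatedness of the correlator outputs can be checked with a small Monte Carlo experiment. The sketch below is only a discrete-time stand-in for the continuous model: white noise of power spectral density N0/2 is approximated by independent Gaussian samples of variance N0/(2·dt), and the values of `N0`, `K`, `s_vec`, the seed, and the sinusoidal basis are assumptions chosen for the example.

```python
import math
import random

random.seed(0)           # fixed seed so the experiment is repeatable

N0 = 2.0                 # assumed value, so the noise PSD is N0/2 = 1.0
T = 1.0                  # symbol duration (assumed)
K = 50                   # samples per symbol (assumed)
dt = T / K
t = [(n + 0.5) * dt for n in range(K)]

# Illustrative orthonormal basis pair on [0, T].
phi1 = [math.sqrt(2.0 / T) * math.cos(2.0 * math.pi * tn / T) for tn in t]
phi2 = [math.sqrt(2.0 / T) * math.sin(2.0 * math.pi * tn / T) for tn in t]

s_vec = (1.0, -2.0)      # hypothetical transmitted signal vector

# Per-sample standard deviation of the discretised white noise; with this
# scaling, sum(w * phi) * dt has variance N0/2.
sigma = math.sqrt(N0 / (2.0 * dt))

def correlator_outputs():
    """Generate one received waveform and return the pair (x1, x2)."""
    x = [s_vec[0] * phi1[n] + s_vec[1] * phi2[n] + random.gauss(0.0, sigma)
         for n in range(K)]
    x1 = sum(xn * p for xn, p in zip(x, phi1)) * dt
    x2 = sum(xn * p for xn, p in zip(x, phi2)) * dt
    return x1, x2

trials = 4000
outs = [correlator_outputs() for _ in range(trials)]

mean1 = sum(o[0] for o in outs) / trials
mean2 = sum(o[1] for o in outs) / trials
var1 = sum((o[0] - mean1) ** 2 for o in outs) / trials
var2 = sum((o[1] - mean2) ** 2 for o in outs) / trials
cov12 = sum((o[0] - mean1) * (o[1] - mean2) for o in outs) / trials
```

The sample means should land near the components of `s_vec`, both sample variances near N0/2, and the sample covariance near zero, up to the statistical fluctuation expected from a finite number of trials.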

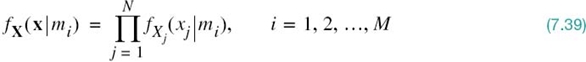

Define the vector of N random variables

$$\mathbf{X} = \begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_N \end{bmatrix} \tag{7.38}$$

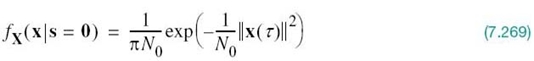

whose elements are independent Gaussian random variables with mean values equal to sij and variances equal to N0/2. Since the elements of the vector X are statistically independent, we may express the conditional probability density function of the vector X, given that the signal si(t) or the corresponding symbol mi was sent, as the product of the conditional probability density functions of its individual elements; that is,

$$f_{\mathbf{X}}(\mathbf{x} \mid m_i) = \prod_{j=1}^{N} f_{X_j}(x_j \mid m_i), \qquad i = 1, 2, \ldots, M \tag{7.39}$$

where the vector x and scalar xj are sample values of the random vector X and random variable Xj, respectively. The vector x is called the observation vector; correspondingly, xj is called an element of the observation vector. A channel that satisfies (7.39) is said to be a memoryless channel.

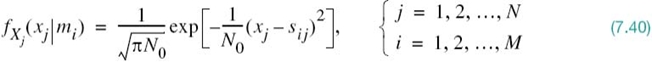

Since each Xj is a Gaussian random variable with mean sij and variance N0/2, we have

$$f_{X_j}(x_j \mid m_i) = \frac{1}{\sqrt{\pi N_0}} \exp\left[-\frac{1}{N_0}(x_j - s_{ij})^2\right], \qquad j = 1, 2, \ldots, N, \quad i = 1, 2, \ldots, M \tag{7.40}$$

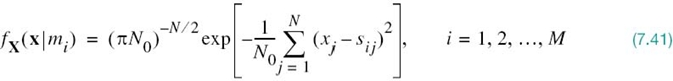

Therefore, substituting (7.40) into (7.39) yields

$$f_{\mathbf{X}}(\mathbf{x} \mid m_i) = (\pi N_0)^{-N/2} \exp\left[-\frac{1}{N_0} \sum_{j=1}^{N} (x_j - s_{ij})^2\right], \qquad i = 1, 2, \ldots, M \tag{7.41}$$

which completely characterizes the first term of (7.30).

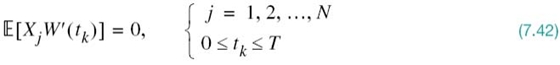

However, there remains the noise term w'(t) in (7.30) to be accounted for. Since the noise process W(t) represented by w(t) is Gaussian with zero mean, it follows that the noise process W'(t) represented by the sample function w'(t) is also a zero-mean Gaussian process. Finally, we note that any random variable W'(tk), say, derived from the noise process W'(t) by sampling it at time tk, is in fact statistically independent of the random variable Xj; that is to say:

$$\mathbb{E}[X_j\,W'(t_k)] = 0, \qquad j = 1, 2, \ldots, N, \quad 0 \le t_k \le T \tag{7.42}$$

Since any random variable based on the remainder noise process W'(t) is independent of the set of random variables {Xj} as well as the set of transmitted signals {si(t)}, (7.42) states that the random variable W'(tk) is irrelevant to the decision as to which particular signal was actually transmitted. In other words, the correlator outputs determined by the received signal x(t) are the only data that are useful for the decision-making process; therefore, they represent sufficient statistics for the problem at hand. By definition, sufficient statistics summarize the whole of the relevant information supplied by an observation vector.

We may now summarize the results presented in this section by formulating the theorem of irrelevance:

Insofar as signal detection in AWGN is concerned, only the projections of the noise onto the basis functions of the signal set $\{s_i(t)\}_{i=1}^{M}$ affect the sufficient statistics of the detection problem; the remainder of the noise is irrelevant.

Putting this theorem into a mathematical context, we may say that the AWGN channel model of Figure 7.1 is equivalent to an N-dimensional vector channel described by the equation

$$\mathbf{x} = \mathbf{s}_i + \mathbf{w}, \qquad i = 1, 2, \ldots, M \tag{7.43}$$

where the dimension N is the number of basis functions involved in formulating the signal vector si for all i. The individual components of the signal vector si and the additive Gaussian noise vector w are defined by (7.5) and (7.27), respectively. The theorem of irrelevance and its mathematical description given in (7.43) are indeed basic to the understanding of the signal-detection problem as described next. Just as importantly, (7.43) may be viewed as the baseband version of the time-dependent received signal of (7.24).

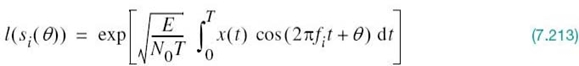

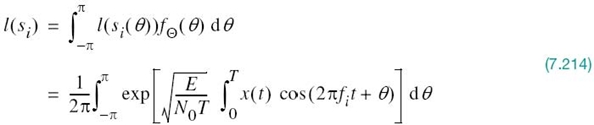

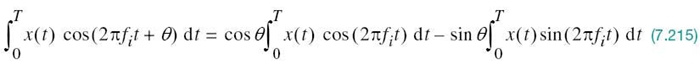

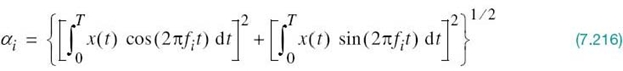

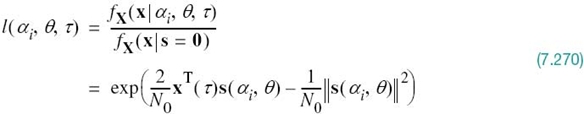

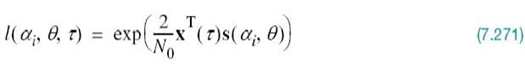

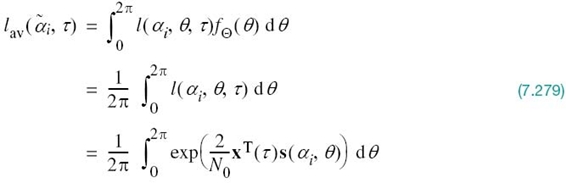

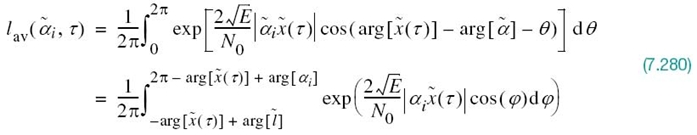

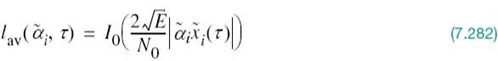

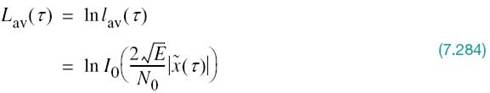

Likelihood Function

The conditional probability density functions fX(x|mi), i = 1,2,…,M, provide the very characterization of an AWGN channel. Their derivation leads to a functional dependence on the observation vector x given the transmitted message symbol mi. However, at the receiver we have the exact opposite situation: we are given the observation vector x and the requirement is to estimate the message symbol mi that is responsible for generating x. To emphasize this latter viewpoint, we follow Chapter 3 by introducing the idea of a likelihood function, denoted by l(mi) and defined by

$$l(m_i) = f_{\mathbf{X}}(\mathbf{x} \mid m_i), \qquad i = 1, 2, \ldots, M \tag{7.44}$$

It is important to recall from Chapter 3 that although l(mi) and fX(x|mi) have exactly the same mathematical form, their individual meanings are quite different.

In practice, we find it more convenient to work with the log-likelihood function, denoted by L(mi) and defined by

$$L(m_i) = \ln l(m_i), \qquad i = 1, 2, \ldots, M \tag{7.45}$$

where ln denotes the natural logarithm. The log-likelihood function bears a one-to-one relationship to the likelihood function for two reasons:

1. By definition, a probability density function is always nonnegative. It follows, therefore, that the likelihood function is likewise a nonnegative quantity.

2. The logarithmic function is a monotonically increasing function of its argument.

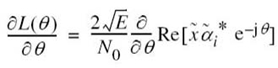

The use of (7.41) in (7.45) yields the log-likelihood function for an AWGN channel as

$$L(m_i) = -\frac{1}{N_0} \sum_{j=1}^{N} (x_j - s_{ij})^2, \qquad i = 1, 2, \ldots, M \tag{7.46}$$

where we have ignored the constant term –(N/2)ln(πN0) since it bears no relation whatsoever to the message symbol mi. Recall that the sij, j = 1,2,…,N, are the elements of the signal vector si representing the message symbol mi. With (7.46) at our disposal, we are now ready to address the basic receiver design problem.
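
To see why dropping the constant term is harmless, the sketch below evaluates the log-likelihood metric with and without it for a hypothetical four-point constellation. The function name, the constellation, the observation `x`, and the value of `N0` are assumptions made for illustration.

```python
import math

def log_likelihood(x, s, N0, keep_constant=False):
    """Log-likelihood of signal vector s given observation x in AWGN.

    With keep_constant=False the term -(N/2) * ln(pi * N0) is omitted,
    leaving only the scaled squared-distance term.
    """
    metric = -sum((xj - sj) ** 2 for xj, sj in zip(x, s)) / N0
    if keep_constant:
        metric -= 0.5 * len(x) * math.log(math.pi * N0)
    return metric

# Hypothetical constellation (M = 4, N = 2) and observation vector.
constellation = [(1.0, 1.0), (-1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]
x = (0.8, -0.3)
N0 = 0.5

short = [log_likelihood(x, s, N0) for s in constellation]
full = [log_likelihood(x, s, N0, keep_constant=True) for s in constellation]

# The symbol with the largest metric is the same in both cases.
best_short = max(range(len(short)), key=short.__getitem__)
best_full = max(range(len(full)), key=full.__getitem__)

# The two metrics differ by the same offset for every symbol.
offsets = [f - s for f, s in zip(full, short)]
```

Every entry of `offsets` is the same number, so the ordering of the metrics, and hence any decision based on the largest one, is unaffected by the omitted term.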

7.4 Optimum Receivers Using Coherent Detection

Maximum Likelihood Decoding

Suppose that, in each time slot of duration T seconds, one of the M possible signals s1(t), s2(t),…,sM(t) is transmitted with equal probability, 1/M.

For geometric signal representation, the signal si(t), i = 1,2,…,M, is applied to a bank of correlators with a common input and supplied with an appropriate set of N orthonormal basis functions, as depicted in Figure 7.2b. The resulting correlator outputs define the signal vector si. Since knowledge of the signal vector si is as good as knowing the transmitted signal si(t) itself, and vice versa, we may represent si(t) by a point in a Euclidean space of dimension N ≤ M. We refer to this point as the transmitted signal point, or message point for short. The set of message points corresponding to the set of transmitted signals $\{s_i(t)\}_{i=1}^{M}$ is called a message constellation.

However, representation of the received signal x(t) is complicated by the presence of additive noise w(t). We note that when the received signal x(t) is applied to the bank of N correlators, the correlator outputs define the observation vector x. According to (7.43), the vector x differs from the signal vector si by the noise vector w, whose orientation is completely random, as it should be.

The noise vector w is completely characterized by the channel noise w(t); the converse of this statement, however, is not true, as explained previously. The noise vector w represents that portion of the noise w(t) that will interfere with the detection process; the remaining portion of this noise, denoted by w'(t), is tuned out by the bank of correlators and, therefore, irrelevant.

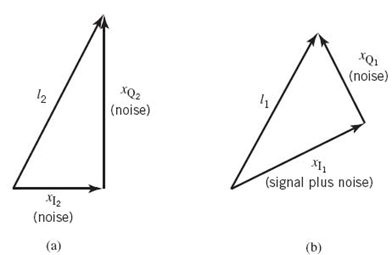

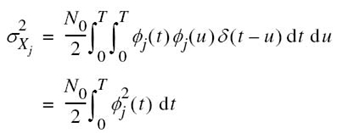

Based on the observation vector x, we may represent the received signal x(t) by a point in the same Euclidean space used to represent the transmitted signal. We refer to this second point as the received signal point. Owing to the presence of noise, the received signal point wanders about the message point in a completely random fashion, in the sense that it may lie anywhere inside a Gaussian-distributed “cloud” centered on the message point. This is illustrated in Figure 7.6a for the case of a three-dimensional signal space. For a particular realization of the noise vector w (i.e., a particular point inside the random cloud of Figure 7.6a) the relationship between the observation vector x and the signal vector si is as illustrated in Figure 7.6b.

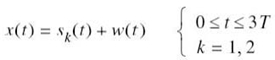

We are now ready to state the signal-detection problem:

Given the observation vector x, perform a mapping from x to an estimate m̂ of the transmitted symbol, mi, in a way that would minimize the probability of error in the decision-making process.

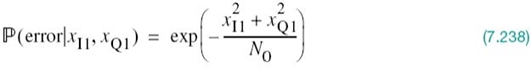

Given the observation vector x, suppose that we make the decision m̂ = mi. The probability of error in this decision, which we denote by Pe(mi|x), is simply

$$P_e(m_i \mid \mathbf{x}) = 1 - P(m_i\ \text{sent} \mid \mathbf{x}) \tag{7.47}$$

The requirement is to minimize the average probability of error in mapping each given observation vector x into a decision. On the basis of (7.47), we may, therefore, state the optimum decision rule:

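$$\text{Set } \hat{m} = m_i \text{ if } P(m_i\ \text{sent} \mid \mathbf{x}) \ge P(m_k\ \text{sent} \mid \mathbf{x}) \text{ for all } k \neq i \text{ and } k = 1, 2, \ldots, M \tag{7.48}$$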

The decision rule described in (7.48) is referred to as the maximum a posteriori probability (MAP) rule. Correspondingly, the system used to implement this rule is called a maximum a posteriori decoder.

Figure 7.6 Illustrating the effect of (a) noise perturbation on (b) the location of the received signal point.

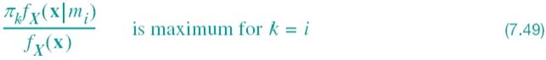

The requirement of (7.48) may be expressed more explicitly in terms of the prior probabilities of the transmitted signals and the likelihood functions, using Bayes’ rule discussed in Chapter 3. For the moment, ignoring possible ties in the decision-making process, we may restate the MAP rule as follows:

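$$\text{Set } \hat{m} = m_i \text{ if } \frac{\pi_k\,f_{\mathbf{X}}(\mathbf{x} \mid m_k)}{f_{\mathbf{X}}(\mathbf{x})} \text{ is maximum for } k = i \tag{7.49}$$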

where πk is the prior probability of transmitting symbol mk, fX(x|mk) is the conditional probability density function of the random observation vector X given the transmission of symbol mk, and fX(x) is the unconditional probability density function of X.

In (7.49), we now note the following points:

- the denominator term fX(x) is independent of the transmitted symbol;

- the prior probability πk = πi when all the source symbols are transmitted with equal probability; and

- the conditional probability density function fX(x|mk) bears a one-to-one relationship to the log-likelihood function L(mk).

Accordingly, we may simply restate the decision rule of (7.49) in terms of L(mk) as follows:

$$\text{Set } \hat{m} = m_i \text{ if } L(m_k) \text{ is maximum for } k = i \tag{7.50}$$

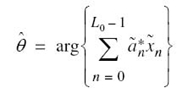

The decision rule of (7.50) is known as the maximum likelihood rule, discussed previously in Chapter 3; the system used for its implementation is correspondingly referred to as the maximum likelihood decoder. According to this decision rule, a maximum likelihood decoder computes the log-likelihood functions as metrics for all the M possible message symbols, compares them, and then decides in favor of the maximum. Thus, the maximum likelihood decoder is a simplified version of the maximum a posteriori decoder, in that the M message symbols are assumed to be equally likely.
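
The relationship between the two decoders can be illustrated for the AWGN case. Taking the logarithm of πk fX(x|mk) and using the Gaussian form of the conditional density, a MAP decoder can compare the metric ln πk − ||x − sk||²/N0 across symbols; with equal priors the first term is common to all symbols and the rule collapses to maximum likelihood. The sketch below is a minimal example under assumed values: the one-dimensional constellation, the observation, the priors, and `N0` are hypothetical.

```python
import math

def map_decode(x, constellation, priors, N0):
    """Return the index k maximising ln(pi_k) - ||x - s_k||^2 / N0."""
    def metric(k):
        d2 = sum((xj - sj) ** 2 for xj, sj in zip(x, constellation[k]))
        return math.log(priors[k]) - d2 / N0
    return max(range(len(constellation)), key=metric)

# Hypothetical binary constellation on a single axis (M = 2, N = 1).
constellation = [(-1.0,), (1.0,)]
x = (0.1,)      # observation slightly closer to the second message point
N0 = 2.0

# Equal priors: the MAP rule coincides with the maximum likelihood rule.
ml_choice = map_decode(x, constellation, [0.5, 0.5], N0)

# Strongly unequal priors can pull the decision toward the likelier symbol.
map_choice = map_decode(x, constellation, [0.9, 0.1], N0)
```

In this example the observation lies nearer the second message point, so the equal-prior decision selects it, whereas a heavy prior on the first symbol is enough to reverse the decision.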

It is useful to have a graphical interpretation of the maximum likelihood decision rule. Let Z denote the N-dimensional space of all possible observation vectors x. We refer to this space as the observation space. Because we have assumed that the decision rule must say m̂ = mi, where i = 1,2,…,M, the total observation space Z is correspondingly partitioned into M decision regions, denoted by Z1,Z2,…,ZM. Accordingly, we may restate the decision rule of (7.50) as

$$\text{Observation vector } \mathbf{x} \text{ lies in region } Z_i \text{ if } L(m_k) \text{ is maximum for } k = i \tag{7.51}$$

Aside from the boundaries between the decision regions Z1,Z2,…,ZM, it is clear that this set of regions covers the entire observation space. We now adopt the convention that all ties are resolved at random; that is, the receiver simply makes a random guess. Specifically, if the observation vector x falls on the boundary between any two decision regions, Zi and Zk, say, the choice between the two possible decisions m̂ = mk and m̂ = mi is resolved a priori by the flip of a fair coin. Clearly, the outcome of such an event does not affect the ultimate value of the probability of error since, on this boundary, the condition of (7.48) is satisfied with the equality sign.

The maximum likelihood decision rule of (7.50) or its geometric counterpart described in (7.51) assumes that the channel noise w(t) is additive. We next specialize this rule for the case when w(t) is both white and Gaussian.

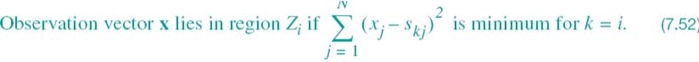

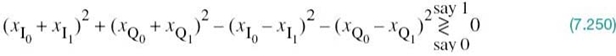

From the log-likelihood function defined in (7.46) for an AWGN channel, we note that L(mk) attains its maximum value when the summation term $\sum_{j=1}^{N}(x_j - s_{kj})^2$ is minimized by the choice k = i. Accordingly, we may formulate the maximum likelihood decision rule for an AWGN channel as

$$\text{Observation vector } \mathbf{x} \text{ lies in region } Z_i \text{ if } \sum_{j=1}^{N}(x_j - s_{kj})^2 \text{ is minimum for } k = i \tag{7.52}$$

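Note we have used “minimum” as the optimizing condition in (7.52) because the minus sign in (7.46) has been ignored. Next, we note from the discussion presented in Section 7.2 that

$$\sum_{j=1}^{N}(x_j - s_{kj})^2 = \|\mathbf{x} - \mathbf{s}_k\|^2 \tag{7.53}$$

where ||x–sk|| is the Euclidean distance between the observation vector x at the receiver input and the transmitted signal vector sk. Accordingly, we may restate the decision rule of (7.53) as

$$\text{Observation vector } \mathbf{x} \text{ lies in region } Z_i \text{ if the Euclidean distance } \|\mathbf{x} - \mathbf{s}_k\| \text{ is minimum for } k = i \tag{7.54}$$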

In words, (7.54) states that the maximum likelihood decision rule is simply to choose the message point closest to the received signal point, which is intuitively satisfying.

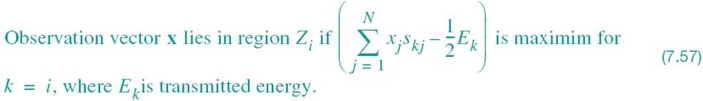

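In practice, the decision rule of (7.54) is simplified by expanding the summation on the left-hand side of (7.53) as

$$\sum_{j=1}^{N}(x_j - s_{kj})^2 = \sum_{j=1}^{N} x_j^2 - 2\sum_{j=1}^{N} x_j s_{kj} + \sum_{j=1}^{N} s_{kj}^2 \tag{7.55}$$

The first summation term of this expansion is independent of the index k pertaining to the transmitted signal vector sk and, therefore, may be ignored. The second summation term is the inner product of the observation vector x and the transmitted signal vector sk. The third summation term is the transmitted signal energy

$$E_k = \sum_{j=1}^{N} s_{kj}^2 \tag{7.56}$$

Accordingly, we may reformulate the maximum-likelihood decision rule one last time:

$$\text{Observation vector } \mathbf{x} \text{ lies in region } Z_i \text{ if } \left(\sum_{j=1}^{N} x_j s_{kj} - \frac{1}{2}E_k\right) \text{ is maximum for } k = i \tag{7.57}$$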

Figure 7.7 Illustrating the partitioning of the observation space into decision regions for the case when N = 2 and M = 4; it is assumed that the M transmitted symbols are equally likely.

From (7.57) we infer that, for an AWGN channel, the M decision regions are bounded by linear hyperplane boundaries. The example in Figure 7.7 illustrates this statement for M = 4 signals and N = 2 dimensions, assuming that the signals are transmitted with equal energy E and equal probability.
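The equivalence between the minimum-distance rule of (7.54) and the correlation-metric rule of (7.57) can be checked numerically. The sketch below is only an illustration: the function names, the four signal vectors (chosen with unequal energies so that the Ek/2 correction matters), and the observation vectors are all assumed values.

```python
import math

def min_distance_decode(x, signals):
    """Rule (7.54): choose the signal vector closest to x."""
    return min(range(len(signals)), key=lambda k: math.dist(x, signals[k]))

def correlation_decode(x, signals):
    """Rule (7.57): choose k that maximizes <x, s_k> - E_k / 2."""
    def metric(k):
        s = signals[k]
        inner = sum(xj * sj for xj, sj in zip(x, s))
        energy = sum(sj * sj for sj in s)
        return inner - 0.5 * energy
    return max(range(len(signals)), key=metric)

# Hypothetical constellation with N = 2 dimensions and M = 4 points.
signals = [(1.0, 1.0), (-2.0, 0.5), (-1.0, -1.0), (0.5, -3.0)]
observations = [(0.9, 0.7), (-1.4, 0.1), (0.2, -1.9), (-0.6, -0.8)]

decisions = [min_distance_decode(x, signals) for x in observations]
same = decisions == [correlation_decode(x, signals) for x in observations]
print(decisions, same)
```

Both decoders differ only by the term Σ xj², which is common to all k, so they return the same index for every observation that does not fall on a decision boundary.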

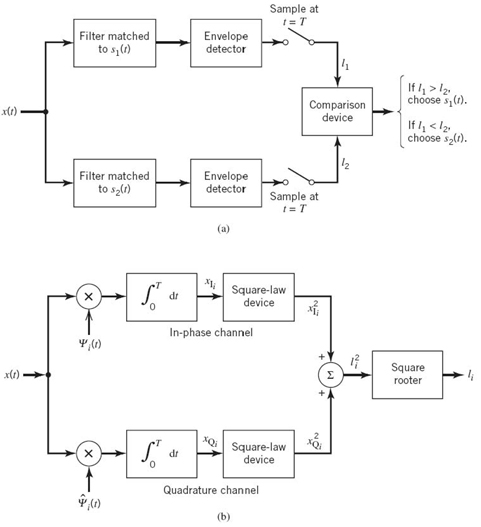

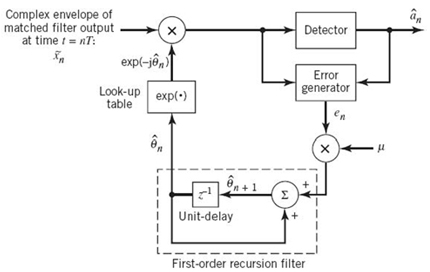

Correlation Receiver

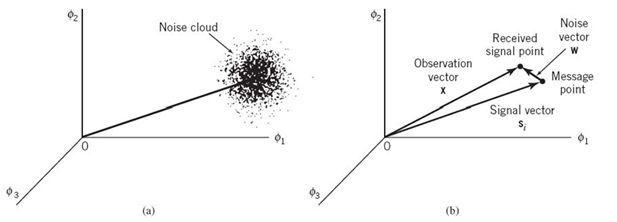

In light of the material just presented, the optimum receiver for an AWGN channel and for the case when the transmitted signals s1(t), s2(t),…,sM(t) are equally likely is called a correlation receiver; it consists of two subsystems, which are detailed in Figure 7.8:

1. Detector (Figure 7.8a), which consists of N correlators supplied with a set of orthonormal basis functions ϕ1(t), ϕ2(t),…,ϕN(t) that are generated locally; this bank of correlators operates on the received signal x(t), 0 ≤ t ≤ T, to produce the N-dimensional observation vector x.

2. Maximum-likelihood decoder (Figure 7.8b), which operates on the observation vector x to produce an estimate m̂ of the transmitted symbol mi, i = 1,2,…,M, in such a way that the average probability of symbol error is minimized.

In accordance with the maximum likelihood decision rule of (7.57), the decoder multiplies the N elements of the observation vector x by the corresponding N elements of each of the M signal vectors s1, s2,…, sM. Then, the resulting products are successively summed in accumulators to form the corresponding set of inner products $\{\mathbf{x}^T\mathbf{s}_k \mid k = 1, 2, \ldots, M\}$.

Figure 7.8 (a) Detector or demodulator. (b) Signal transmission decoder.

Next, the inner products are corrected for the fact that the transmitted signal energies may be unequal. Finally, the largest one in the resulting set of numbers is selected, and an appropriate decision on the transmitted message is thereby made.

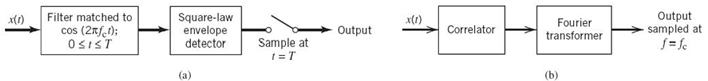

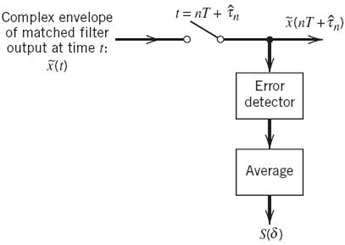

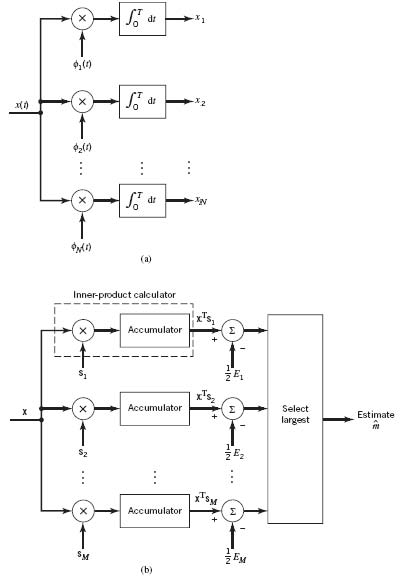

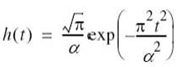

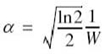

Matched Filter Receiver

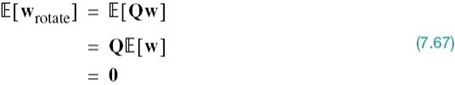

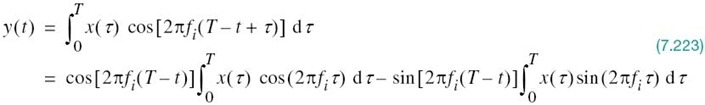

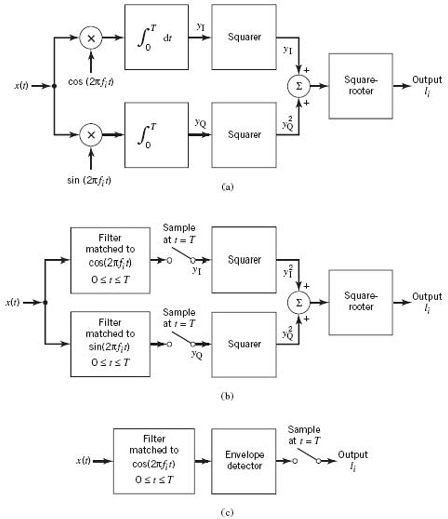

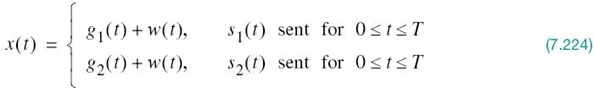

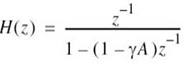

The detector shown in Figure 7.8a involves a set of correlators. Alternatively, we may use a different but equivalent structure in place of the correlators. To explore this alternative method of implementing the optimum receiver, consider a linear time-invariant filter with impulse response hj(t). With the received signal x(t) operating as input, the resulting filter output is defined by the convolution integral

$$y_j(t) = \int_{-\infty}^{\infty} x(\tau)\, h_j(t - \tau)\, d\tau$$

To proceed further, we evaluate this integral over the duration of a transmitted symbol, namely 0 ≤ t ≤ T. With time t restricted in this manner, we may replace the variable τ with t and go on to write

$$y_j(T) = \int_{0}^{T} x(t)\, h_j(T - t)\, dt \tag{7.58}$$

Consider next a detector based on a bank of correlators. The output of the jth correlator is defined by the first line of (7.25), reproduced here for convenience of presentation:

$$x_j = \int_{0}^{T} x(t)\, \phi_j(t)\, dt \tag{7.59}$$

For yj(T) to equal xj, we find from (7.58) and (7.59) that this condition is satisfied provided that we choose

$$h_j(T - t) = \phi_j(t) \quad \text{for } 0 \le t \le T \text{ and } j = 1, 2, \ldots, N$$

Equivalently, we may express the condition imposed on the desired impulse response of the filter as

$$h_j(t) = \phi_j(T - t) \quad \text{for } 0 \le t \le T \text{ and } j = 1, 2, \ldots, N \tag{7.60}$$

We may now generalize the condition described in (7.60) by stating:

Given a pulse signal ϕ(t) occupying the interval 0 ≤ t ≤ T, a linear time-invariant filter is said to be matched to the signal ϕ(t) if its impulse response h(t) satisfies the condition

$$h(t) = \phi(T - t) \quad \text{for } 0 \le t \le T \tag{7.61}$$

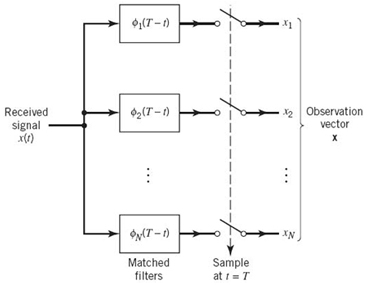

A time-invariant filter defined in this way is called a matched filter. Correspondingly, an optimum receiver using matched filters in place of correlators is called a matched-filter receiver. Such a receiver is depicted in Figure 7.9, shown below.

Figure 7.9 Detector part of matched filter receiver; the signal transmission decoder is as shown in Figure 7.8(b).
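The equivalence between a correlator and a matched filter sampled at t = T can be illustrated in discrete time. The sketch below approximates the integrals by midpoint Riemann sums; the sampling grid, the choice of basis function, and the received waveform (a scaled basis function plus an interfering sinusoid) are assumptions made purely for illustration.

```python
import math

T = 1.0                      # symbol duration (assumed)
n = 200                      # samples per symbol (assumed)
dt = T / n
t = [(i + 0.5) * dt for i in range(n)]   # midpoint sampling instants

# Hypothetical unit-energy basis function with two carrier cycles per symbol.
phi = [math.sqrt(2.0 / T) * math.cos(2.0 * math.pi * 2.0 * ti / T) for ti in t]

# Hypothetical received signal: 0.7*phi(t) plus an orthogonal interferer.
x = [0.7 * p + 0.3 * math.sin(2.0 * math.pi * 5.0 * ti / T)
     for p, ti in zip(phi, t)]

# Correlator output: x_j = integral of x(t) phi(t) over [0, T].
correlator = sum(xi * pi for xi, pi in zip(x, phi)) * dt

# Matched filter: h(t) = phi(T - t), i.e. the time-reversed basis function.
h = phi[::-1]

# Filter output sampled at the end of the symbol: y(T) = sum_k x[k] h[n-1-k] dt.
matched = sum(x[k] * h[n - 1 - k] for k in range(n)) * dt

print(correlator, matched)
```

Because h[n−1−k] equals phi[k], the two sums contain the same terms, and both recover the coefficient 0.7 of the basis function in the assumed received signal.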

7.5 Probability of Error

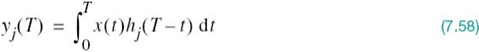

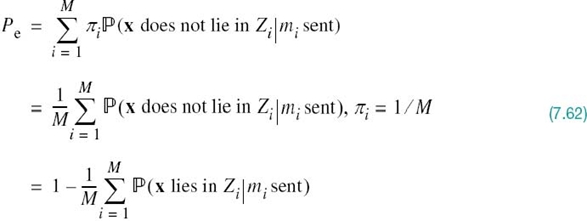

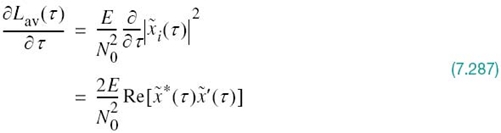

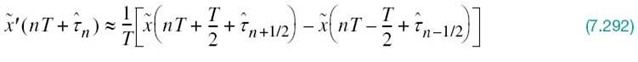

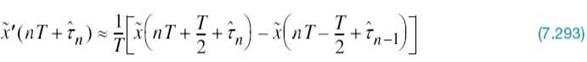

To complete the statistical characterization of the correlation receiver of Figure 7.8a or its equivalent, the matched filter receiver of Figure 7.9, we need to evaluate its performance in the presence of AWGN. To do so, suppose that the observation space Z is partitioned into a set of regions, $\{Z_i\}_{i=1}^{M}$, in accordance with the maximum likelihood decision rule. Suppose also that symbol mi (or, equivalently, signal vector si) is transmitted and an observation vector x is received. Then, an error occurs whenever the received signal point represented by x does not fall inside region Zi associated with the message point si. Averaging over all possible transmitted symbols assumed to be equiprobable, we see that the average probability of symbol error is

$$\begin{aligned} P_e &= \sum_{i=1}^{M} \pi_i\, P(\mathbf{x} \text{ does not lie in } Z_i \mid m_i \text{ sent}) \\ &= \frac{1}{M}\sum_{i=1}^{M} P(\mathbf{x} \text{ does not lie in } Z_i \mid m_i \text{ sent}) \\ &= 1 - \frac{1}{M}\sum_{i=1}^{M} P(\mathbf{x} \text{ lies in } Z_i \mid m_i \text{ sent}) \end{aligned} \tag{7.62}$$

where we have used the standard notation to denote the conditional probability of an event. Since x is the sample value of random vector X, we may rewrite (7.62) in terms of the likelihood function as follows, given that the message symbol mi is sent:

$$P_e = 1 - \frac{1}{M}\sum_{i=1}^{M} \int_{Z_i} f_{\mathbf{X}}(\mathbf{x}|m_i)\, d\mathbf{x} \tag{7.63}$$

For an N-dimensional observation vector, the integral in (7.63) is likewise N-dimensional.

Invariance of the Probability of Error to Rotation

There is a uniqueness to the way in which the observation space Z is partitioned into the set of regions Z1,Z2, …, ZM in accordance with the maximum likelihood detection of a signal in AWGN; that uniqueness is defined by the message constellation under study. In particular, we may make the statement:

Changes in the orientation of the message constellation with respect to both the coordinate axes and origin of the signal space do not affect the probability of symbol error Pe defined in (7.63).

This statement embodies the invariance property of the average probability of symbol error Pe with respect to rotation and translation, which is the result of two facts:

1. In maximum likelihood detection, the probability of symbol error Pe depends solely on the relative Euclidean distance between a received signal point and message point in the constellation.

2. The AWGN is spherically symmetric in all directions in the signal space.

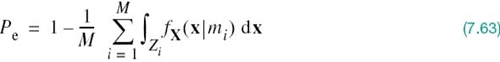

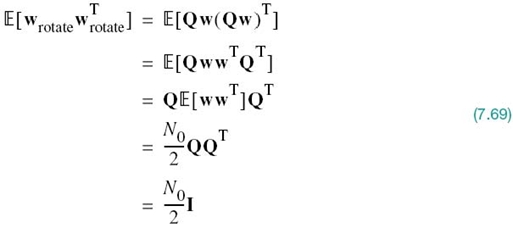

To elaborate, consider first the invariance of Pe with respect to rotation. The effect of a rotation applied to all the message points in a constellation is equivalent to multiplying the N-dimensional signal vector si by an N-by-N orthonormal matrix denoted by Q for all i. By definition, the matrix Q satisfies the condition

$$\mathbf{Q}\mathbf{Q}^T = \mathbf{I} \tag{7.64}$$

where the superscript T denotes matrix transposition and I is the identity matrix whose diagonal elements are all unity and whose off-diagonal elements are all zero. According to (7.64), the inverse of the real-valued orthonormal matrix Q is equal to its own transpose. Thus, in dealing with rotation, the message vector si is replaced by its rotated version

$$\mathbf{s}_{i,\text{rotate}} = \mathbf{Q}\mathbf{s}_i, \quad i = 1, 2, \ldots, M \tag{7.65}$$

Correspondingly, the N-by-1 noise vector w is replaced by its rotated version

$$\mathbf{w}_{\text{rotate}} = \mathbf{Q}\mathbf{w} \tag{7.66}$$

However, the statistical characteristics of the noise vector are unaffected by this rotation for three reasons:

1. From Chapter 4 we recall that a linear combination of Gaussian random variables is also Gaussian. Since the noise vector w is Gaussian, by assumption, then it follows that the rotated noise vector wrotate is also Gaussian.

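2. Since the noise vector w has zero mean, the rotated noise vector wrotate also has zero mean, as shown by

$$\mathbb{E}[\mathbf{w}_{\text{rotate}}] = \mathbb{E}[\mathbf{Q}\mathbf{w}] = \mathbf{Q}\,\mathbb{E}[\mathbf{w}] = \mathbf{0} \tag{7.67}$$

3. The covariance matrix of the noise vector w is equal to (N0/2)I, where N0/2 is the power spectral density of the AWGN w(t) and I is the identity matrix; that is

$$\mathbb{E}[\mathbf{w}\mathbf{w}^T] = \frac{N_0}{2}\mathbf{I} \tag{7.68}$$

Hence, the covariance matrix of the rotated noise vector is

$$\begin{aligned} \mathbb{E}[\mathbf{w}_{\text{rotate}}\mathbf{w}_{\text{rotate}}^T] &= \mathbb{E}[\mathbf{Q}\mathbf{w}(\mathbf{Q}\mathbf{w})^T] \\ &= \mathbb{E}[\mathbf{Q}\mathbf{w}\mathbf{w}^T\mathbf{Q}^T] \\ &= \mathbf{Q}\,\mathbb{E}[\mathbf{w}\mathbf{w}^T]\,\mathbf{Q}^T \\ &= \frac{N_0}{2}\mathbf{Q}\mathbf{Q}^T \\ &= \frac{N_0}{2}\mathbf{I} \end{aligned} \tag{7.69}$$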

where, in the last two lines, we have made use of (7.68) and (7.64).

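In light of these three reasons, we may, therefore, express the observation vector in the rotated message constellation as

$$\mathbf{x}_{\text{rotate}} = \mathbf{Q}\mathbf{s}_i + \mathbf{w}, \quad i = 1, 2, \ldots, M \tag{7.70}$$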

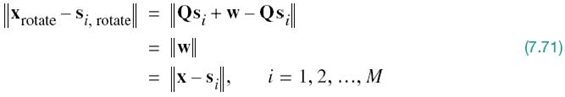

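Using (7.65) and (7.70), we may now express the Euclidean distance between the rotated vectors xrotate and srotate as

$$\begin{aligned} \|\mathbf{x}_{\text{rotate}} - \mathbf{s}_{i,\text{rotate}}\| &= \|\mathbf{Q}\mathbf{s}_i + \mathbf{w} - \mathbf{Q}\mathbf{s}_i\| \\ &= \|\mathbf{w}\| \\ &= \|\mathbf{x} - \mathbf{s}_i\|, \quad i = 1, 2, \ldots, M \end{aligned} \tag{7.71}$$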

where, in the last line, we made use of (7.43).

We may, therefore, formally state the principle of rotational invariance:

If a message constellation is rotated by the transformation

$$\mathbf{s}_{i,\text{rotate}} = \mathbf{Q}\mathbf{s}_i, \quad i = 1, 2, \ldots, M$$

where Q is an orthonormal matrix, then the probability of symbol error Pe incurred in maximum likelihood signal-detection over an AWGN channel is completely unchanged.
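The two ingredients of rotational invariance, namely that Q is orthonormal and that Euclidean distances are preserved, can be checked numerically. The sketch below uses a 45° rotation in N = 2 dimensions; the four-point constellation, the noise sample w, and the helper names are assumptions chosen for illustration only.

```python
import math

def rotation_matrix(theta):
    """2-by-2 orthonormal matrix Q for a rotation through angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def mat_vec(q, v):
    """Matrix-vector product Q v for a 2-by-2 matrix."""
    return tuple(sum(q[r][c] * v[c] for c in range(2)) for r in range(2))

def pairwise_distances(points):
    """All distances d_ik with i < k."""
    return [math.dist(points[i], points[k])
            for i in range(len(points)) for k in range(i + 1, len(points))]

Q = rotation_matrix(math.pi / 4)

# Condition (7.64): Q Q^T should equal the identity matrix.
QQt = [[sum(Q[r][j] * Q[c][j] for j in range(2)) for c in range(2)]
       for r in range(2)]

# Hypothetical constellation and its rotated version, as in (7.65).
signals = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
rotated = [mat_vec(Q, s) for s in signals]

# Hypothetical noise sample and its rotated version, as in (7.66).
w = (0.35, -0.2)
w_rot = mat_vec(Q, w)

print(pairwise_distances(signals))
print(pairwise_distances(rotated))
print(math.hypot(*w), math.hypot(*w_rot))
```

The pairwise distances dik and the noise norm are unchanged by the rotation, which is why every distance-based quantity in the error analysis is unaffected.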

EXAMPLE 3 Illustration of Rotational Invariance

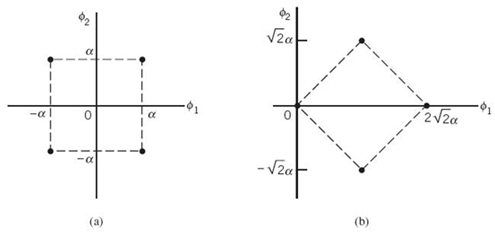

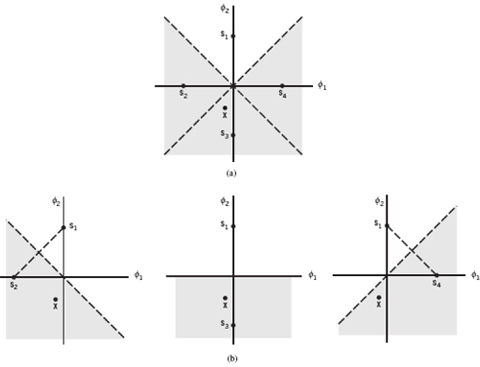

To illustrate the principle of rotational invariance, consider the signal constellation shown in Figure 7.10a. The constellation is the same as that of Figure 7.10b, except for the fact that it has been rotated through 45°. Although these two constellations do indeed look different in a geometric sense, the principle of rotational invariance teaches us immediately that the Pe is the same for both of them.

Figure 7.10 A pair of signal constellations for illustrating the principle of rotational invariance.

Invariance of the Probability of Error to Translation

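Consider next the invariance of Pe to translation. Suppose all the message points in a signal constellation are translated by a constant vector amount a, as shown by

$$\mathbf{s}_{i,\text{translate}} = \mathbf{s}_i - \mathbf{a}, \quad i = 1, 2, \ldots, M \tag{7.72}$$

The observation vector is correspondingly translated by the same vector amount, as shown by

$$\mathbf{x}_{\text{translate}} = \mathbf{x} - \mathbf{a} \tag{7.73}$$

From (7.72) and (7.73) we see that the translation a is common to both the translated signal vector si and translated observation vector x. We, therefore, immediately deduce that

$$\|\mathbf{x}_{\text{translate}} - \mathbf{s}_{i,\text{translate}}\| = \|\mathbf{x} - \mathbf{s}_i\| \quad \text{for } i = 1, 2, \ldots, M \tag{7.74}$$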

and thus formulate the principle of translational invariance:

If a signal constellation is translated by a constant vector amount, then the probability of symbol error Pe incurred in maximum likelihood signal detection over an AWGN channel is completely unchanged.

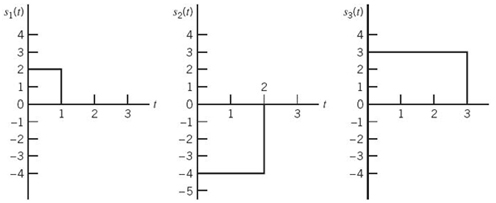

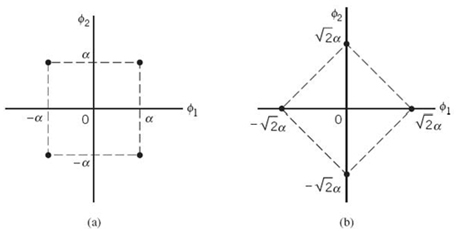

EXAMPLE 4 Translation of Signal Constellation

As an example, consider the two signal constellations shown in Figure 7.11, which pertain to a pair of different four-level PAM signals. The constellation of Figure 7.11b is the same as that of Figure 7.11a, except for a translation of 3α/2 to the right along the ϕ1-axis. The principle of translational invariance teaches us that Pe is the same for both of these signal constellations.

Figure 7.11 A pair of signal constellations for illustrating the principle of translational invariance.
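A quick numerical check of Example 4 is sketched below. It assumes that the constellation of Figure 7.11a places its four PAM points at ±α/2 and ±3α/2 on the ϕ1-axis, so that a shift of 3α/2 to the right gives 0, α, 2α, 3α; these coordinates, the value of α, and the helper names are assumptions rather than values read from the figure.

```python
alpha = 1.0                                   # PAM spacing (assumed)

# Assumed symmetric four-level PAM constellation and its translated copy.
pam_a = [-1.5 * alpha, -0.5 * alpha, 0.5 * alpha, 1.5 * alpha]
shift = 1.5 * alpha
pam_b = [s + shift for s in pam_a]

def distance_table(points):
    """Matrix of Euclidean distances d_ik between one-dimensional points."""
    return [[abs(p - q) for q in points] for p in points]

def average_energy(points):
    """Average energy of equally likely message points."""
    return sum(p * p for p in points) / len(points)

same_distances = distance_table(pam_a) == distance_table(pam_b)
print(pam_b, same_distances)
print(average_energy(pam_a), average_energy(pam_b))
```

Every distance dik is unchanged, so the error probability is the same for both constellations, even though the translated constellation needs more average energy under these assumed coordinates.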

Union Bound on the Probability of Error

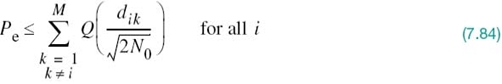

For AWGN channels, the formulation of the average probability of symbol error Pe is conceptually straightforward, in that we simply substitute (7.41) into (7.63). Unfortunately, however, numerical computation of the integral so obtained is impractical, except in a few simple (nevertheless, important) cases. To overcome this computational difficulty, we may resort to the use of bounds, which are usually adequate to predict the SNR (within a decibel or so) required to maintain a prescribed error rate. The approximation to the integral defining Pe is made by simplifying the integral or simplifying the region of integration. In the following, we use the latter procedure to develop a simple yet useful upper bound, called the union bound, as an approximation to the average probability of symbol error for a set of M equally likely signals (symbols) in an AWGN channel.

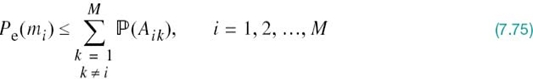

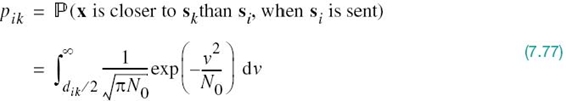

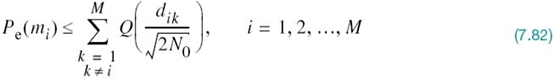

Let Aik, with i, k = 1,2,…,M, denote the event that the observation vector x is closer to the signal vector sk than to si, when the symbol mi (message vector si) is sent. The conditional probability of symbol error when symbol mi is sent, Pe(mi), is equal to the probability of the union of events, defined by the set $\{A_{ik}\}_{k=1,\,k\neq i}^{M}$. Probability theory teaches us that the probability of a finite union of events is overbounded by the sum of the probabilities of the constituent events. We may, therefore, write

$$P_e(m_i) \le \sum_{\substack{k=1 \\ k \ne i}}^{M} P(A_{ik}), \quad i = 1, 2, \ldots, M \tag{7.75}$$

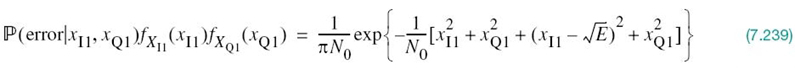

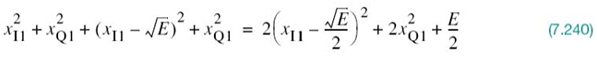

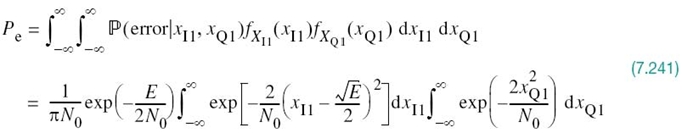

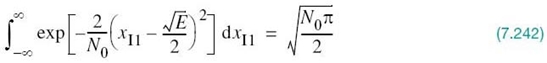

EXAMPLE 5 Constellation of Four Message Points

To illustrate applicability of the union bound, consider Figure 7.12 for the case of M = 4. Figure 7.12a shows the four message points and associated decision regions, with the point s1 assumed to represent a transmitted symbol. Figure 7.12b shows the three constituent signal-space descriptions where, in each case, the transmitted message point s1 and one other message point are retained. According to Figure 7.12a the conditional probability of symbol error, Pe(mi), is equal to the probability that the observation vector x lies in the shaded region of the two-dimensional signal-space diagram. Clearly, this probability is less than the sum of the probabilities of the three individual events that x lies in the shaded regions of the three constituent signal spaces depicted in Figure 7.12b.

Figure 7.12 Illustrating the union bound. (a) Constellation of four message points. (b) Three constellations with a common message point and one other message point retained from the original constellation.

Pairwise Error Probability

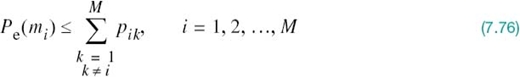

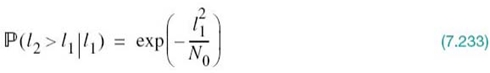

It is important to note that, in general, the probability $P(A_{ik})$ is different from the probability $P(\hat{m} = m_k \mid m_i)$, which is the probability that the observation vector x is closer to the signal vector sk (i.e., symbol mk) than every other when the vector si (i.e., symbol mi) is sent. On the other hand, the probability $P(A_{ik})$ depends on only two signal vectors, si and sk. To emphasize this difference, we rewrite (7.75) by adopting pik in place of $P(A_{ik})$. We thus write

$$P_e(m_i) \le \sum_{\substack{k=1 \\ k \ne i}}^{M} p_{ik}, \quad i = 1, 2, \ldots, M \tag{7.76}$$

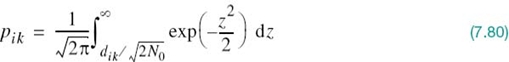

The probability pik is called the pairwise error probability, in that if a digital communication system uses only a pair of signals, si and sk, then pik is the probability of the receiver mistaking sk for si.

Consider then a simplified digital communication system that involves the use of two equally likely messages represented by the vectors si and sk. Since white Gaussian noise is identically distributed along any set of orthogonal axes, we may temporarily choose the first axis in such a set as one that passes through the points si and sk; for three illustrative examples, see Figure 7.12b. The corresponding decision boundary is represented by the bisector that is perpendicular to the line joining the points si and sk. Accordingly, when the vector si (i.e., symbol mi) is sent, and if the observation vector x lies on the side of the bisector where sk lies, an error is made. The probability of this event is given by

$$\begin{aligned} p_{ik} &= P(\mathbf{x} \text{ is closer to } \mathbf{s}_k \text{ than } \mathbf{s}_i, \text{ when } \mathbf{s}_i \text{ is sent}) \\ &= \int_{d_{ik}/2}^{\infty} \frac{1}{\sqrt{\pi N_0}} \exp\left(-\frac{v^2}{N_0}\right) dv \end{aligned} \tag{7.77}$$

where dik in the lower limit of the integral is the Euclidean distance between signal vectors si and sk; that is,

$$d_{ik} = \|\mathbf{s}_i - \mathbf{s}_k\| \tag{7.78}$$

To change the integral of (7.77) into a standard form, define a new integration variable

$$z = \sqrt{\frac{2}{N_0}}\, v \tag{7.79}$$

Equation (7.77) is then rewritten in the desired form

$$p_{ik} = \frac{1}{\sqrt{2\pi}} \int_{d_{ik}/\sqrt{2N_0}}^{\infty} \exp\left(-\frac{z^2}{2}\right) dz \tag{7.80}$$

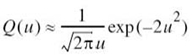

The integral in (7.80) is the Q-function of (3.68) that was introduced in Chapter 3. In terms of the Q-function, we may now express the probability pik in the compact form

$$p_{ik} = Q\left(\frac{d_{ik}}{\sqrt{2N_0}}\right) \tag{7.81}$$

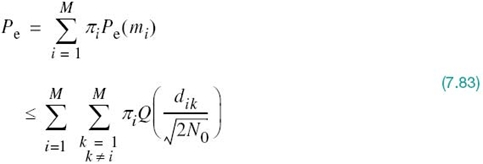

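Correspondingly, substituting (7.81) into (7.76), we write

$$P_e(m_i) \le \sum_{\substack{k=1 \\ k \ne i}}^{M} Q\left(\frac{d_{ik}}{\sqrt{2N_0}}\right), \quad i = 1, 2, \ldots, M \tag{7.82}$$

The probability of symbol error, averaged over all the M symbols, is, therefore, over-bounded as follows:

$$P_e = \sum_{i=1}^{M} \pi_i P_e(m_i) \le \sum_{i=1}^{M} \sum_{\substack{k=1 \\ k \ne i}}^{M} \pi_i\, Q\left(\frac{d_{ik}}{\sqrt{2N_0}}\right) \tag{7.83}$$

where πi is the probability of sending symbol mi.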

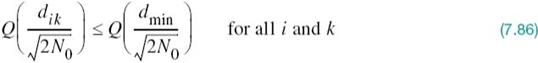

There are two special forms of (7.83) that are noteworthy:

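1. Suppose that the signal constellation is circularly symmetric about the origin. Then, the conditional probability of error Pe(mi) is the same for all i, in which case (7.83) reduces to

$$P_e \le \sum_{\substack{k=1 \\ k \ne i}}^{M} Q\left(\frac{d_{ik}}{\sqrt{2N_0}}\right) \quad \text{for all } i \tag{7.84}$$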

Figure 7.10 illustrates two examples of circularly symmetric signal constellations.

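2. Define the minimum distance of a signal constellation dmin as the smallest Euclidean distance between any two transmitted signal points in the constellation, as shown by

$$d_{\min} = \min_{k \ne i} d_{ik} \quad \text{for all } i \text{ and } k \tag{7.85}$$

Then, recognizing that the Q-function is a monotonically decreasing function of its argument, we have

$$Q\left(\frac{d_{ik}}{\sqrt{2N_0}}\right) \le Q\left(\frac{d_{\min}}{\sqrt{2N_0}}\right) \quad \text{for all } i \text{ and } k \tag{7.86}$$

Therefore, in general, we may simplify the bound on the average probability of symbol error in (7.83) as

$$P_e \le (M - 1)\, Q\left(\frac{d_{\min}}{\sqrt{2N_0}}\right) \tag{7.87}$$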

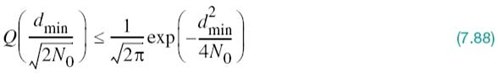

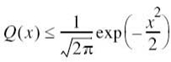

The Q-function in (7.87) is itself upper bounded as

Accordingly, we may further simplify the bound on Pe in (7.87) as

In words, (7.89) states the following:

In an AWGN channel, the average probability of symbol error Pe decreases exponentially with the squared minimum distance, d²min.
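To get a feel for how tight the union bound is, the sketch below evaluates the pairwise bound of (7.83) and the minimum-distance bound of (7.87) for a four-point constellation with equally likely symbols. The constellation (points at (±√(E/2), ±√(E/2))), the values of E and N0, and the function names are assumptions; the comparison value 2Q(√(E/N0)) − Q²(√(E/N0)) is the standard closed-form symbol error probability for this particular square constellation, quoted here as a known result rather than derived from the text above.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x), expressed through math.erfc."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def union_bound(signals, n0):
    """Bound (7.83) with equal priors pi_i = 1/M."""
    total = 0.0
    for i, s_i in enumerate(signals):
        for k, s_k in enumerate(signals):
            if k != i:
                total += q_function(math.dist(s_i, s_k) / math.sqrt(2.0 * n0))
    return total / len(signals)

def min_distance_bound(signals, n0):
    """Bound (7.87): (M - 1) Q(d_min / sqrt(2 N0))."""
    m = len(signals)
    d_min = min(math.dist(signals[i], signals[k])
                for i in range(m) for k in range(i + 1, m))
    return (m - 1) * q_function(d_min / math.sqrt(2.0 * n0))

energy, n0 = 1.0, 0.1                   # assumed E and N0 (E/N0 = 10 dB)
a = math.sqrt(energy / 2.0)
square = [(a, a), (-a, a), (-a, -a), (a, -a)]

q = q_function(math.sqrt(energy / n0))
exact = 2.0 * q - q * q                 # closed form for this constellation
bound_pairwise = union_bound(square, n0)
bound_dmin = min_distance_bound(square, n0)

print(exact, bound_pairwise, bound_dmin)
```

At this signal-to-noise ratio the three numbers are close to one another, which is consistent with the remark that such bounds usually predict the required SNR to within a decibel or so.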

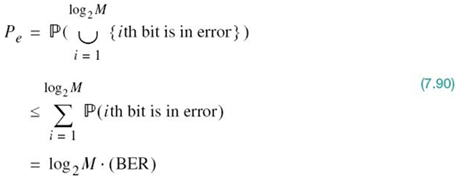

Bit Versus Symbol Error Probabilities

Thus far, the only figure of merit we have used to assess the noise performance of a digital communication system in AWGN has been the average probability of symbol (word) error. This figure of merit is the natural choice when messages of length m = log2 M are transmitted, such as alphanumeric symbols. However, when the requirement is to transmit binary data such as digital computer data, it is often more meaningful to use another figure of merit called the bit error rate (BER). Although, in general, there are no unique relationships between these two figures of merit, it is fortunate that such relationships can be derived for two cases of practical interest, as discussed next.

Case 1: M-tuples Differing in Only a Single Bit

Suppose that it is possible to perform the mapping from binary to M-ary symbols in such a way that the two binary M-tuples corresponding to any pair of adjacent symbols in the M-ary modulation scheme differ in only one bit position. This mapping constraint is satisfied by using a Gray code. When the probability of symbol error Pe is acceptably small, we find that the probability of mistaking one symbol for either one of the two “nearest” symbols is greater than any other kind of symbol error. Moreover, given a symbol error, the most probable number of bit errors is one, subject to the aforementioned mapping constraint. Since there are log2M bits per symbol, it follows that the average probability of symbol error is related to the BER as follows:

$$\begin{aligned} P_e &= P\left(\bigcup_{i=1}^{\log_2 M} \{i\text{th bit is in error}\}\right) \\ &\le \sum_{i=1}^{\log_2 M} P(i\text{th bit is in error}) \\ &= \log_2 M \cdot (\text{BER}) \end{aligned} \tag{7.90}$$

where, in the first line, ⋃ is the symbol for “union” as used in set theory. We also note that

$$P_e \ge P(i\text{th bit is in error}) = \text{BER} \tag{7.91}$$

It follows, therefore, that the BER is bounded as follows:

$$\frac{P_e}{\log_2 M} \le \text{BER} \le P_e \tag{7.92}$$

Case 2: Number of Symbols Equal to Integer Power of 2

Suppose next M = 2^K, where K is an integer. We assume that all symbol errors are equally likely and occur with probability

$$\frac{P_e}{M - 1} = \frac{P_e}{2^K - 1}$$

where Pe is the average probability of symbol error. To find the probability that the ith bit in a symbol is in error, we note that there are 2^(K−1) cases of symbol error in which this particular bit is changed and there are 2^(K−1) − 1 cases in which it is not. Hence, the BER is

$$\text{BER} = \left(\frac{2^{K-1}}{2^K - 1}\right) P_e \tag{7.93}$$

or, equivalently,

$$\text{BER} = \left(\frac{M/2}{M - 1}\right) P_e \tag{7.94}$$

Note that, for large M, the BER approaches the limiting value of Pe/2. Note also that the bit errors are not independent in general.
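The two relationships between BER and Pe can be tabulated with a few lines of code. The sketch below also verifies the counting argument of Case 2 by brute force: taking the all-zero K-bit symbol as the one transmitted, it counts how many of the 2^K − 1 possible wrong symbols differ from it in a given bit position. The function names and the value of Pe are illustrative assumptions.

```python
import math

def ber_bounds_gray(pe, m):
    """Case 1 (Gray mapping): Pe / log2(M) <= BER <= Pe."""
    return pe / math.log2(m), pe

def ber_equally_likely_errors(pe, m):
    """Case 2 (all symbol errors equally likely): BER = (M/2)/(M-1) * Pe."""
    return (m / 2) / (m - 1) * pe

def count_bit_flips(k_bits, bit):
    """Number of wrong K-bit symbols (relative to all zeros) that flip `bit`."""
    return sum(1 for symbol in range(1, 2 ** k_bits) if (symbol >> bit) & 1)

pe = 7e-3                              # assumed symbol error probability
for m in (4, 8, 64, 1024):
    lower, upper = ber_bounds_gray(pe, m)
    print(m, lower, upper, ber_equally_likely_errors(pe, m))

# For K = 3, four of the seven wrong symbols change any given bit.
print(count_bit_flips(3, 0), 2 ** 3 - 1)
```

As M grows, the Case 2 value drifts down toward Pe/2, in line with the limiting behavior noted above.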

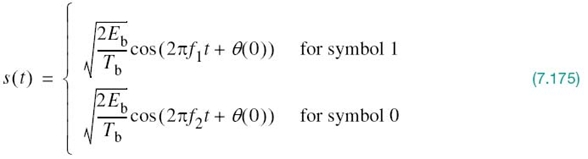

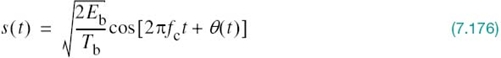

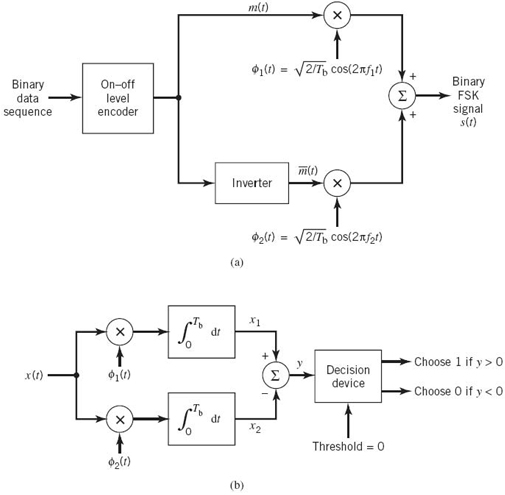

7.6 Phase-Shift Keying Techniques Using Coherent Detection

With the background material on the coherent detection of signals in AWGN presented in Sections 7.2–7.4 at our disposal, we are now ready to study specific passband data-transmission systems. In this section, we focus on the family of phase-shift keying (PSK) techniques, starting with the simplest member of the family discussed next.

Binary Phase-Shift Keying

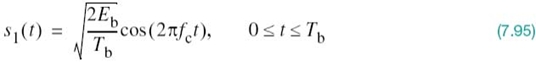

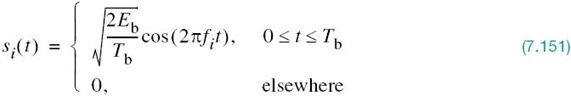

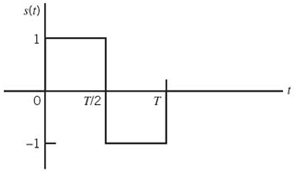

In a binary PSK system, the pair of signals s1(t) and s2(t) used to represent binary symbols 1 and 0, respectively, is defined by

$$s_1(t) = \sqrt{\frac{2E_b}{T_b}} \cos(2\pi f_c t), \quad 0 \le t \le T_b \tag{7.95}$$

$$s_2(t) = \sqrt{\frac{2E_b}{T_b}} \cos(2\pi f_c t + \pi) = -\sqrt{\frac{2E_b}{T_b}} \cos(2\pi f_c t), \quad 0 \le t \le T_b \tag{7.96}$$

where Tb is the bit duration and Eb is the transmitted signal energy per bit. We find it convenient, although not necessary, to assume that each transmitted bit contains an integral number of cycles of the carrier wave; that is, the carrier frequency fc is chosen equal to nc/Tb for some fixed integer nc. A pair of sinusoidal waves that differ only in a relative phase-shift of 180°, defined in (7.95) and (7.96), are referred to as antipodal signals.

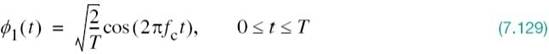

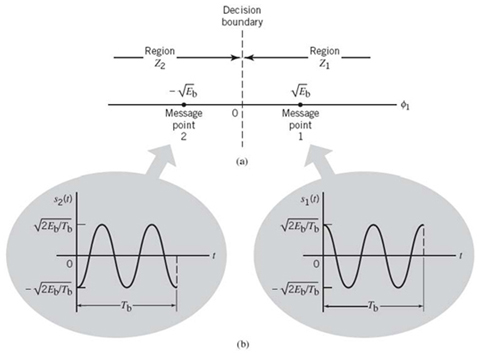

Signal-Space Diagram of Binary PSK Signals

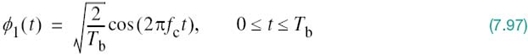

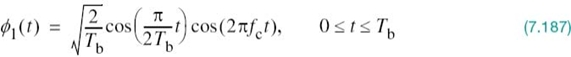

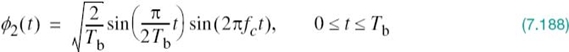

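From this pair of equations it is clear that, in the case of binary PSK, there is only one basis function of unit energy:

$$\phi_1(t) = \sqrt{\frac{2}{T_b}} \cos(2\pi f_c t), \quad 0 \le t \le T_b \tag{7.97}$$

Then, we may respectively express the transmitted signals s1(t) and s2(t) in terms of ϕ1(t) as

$$s_1(t) = \sqrt{E_b}\, \phi_1(t), \quad 0 \le t \le T_b \tag{7.98}$$

$$s_2(t) = -\sqrt{E_b}\, \phi_1(t), \quad 0 \le t \le T_b \tag{7.99}$$

A binary PSK system is, therefore, characterized by having a signal space that is one-dimensional (i.e., N = 1), with a signal constellation consisting of two message points (i.e., M = 2). The respective coordinates of the two message points are

$$s_{11} = \int_0^{T_b} s_1(t)\, \phi_1(t)\, dt = +\sqrt{E_b} \tag{7.100}$$

$$s_{21} = \int_0^{T_b} s_2(t)\, \phi_1(t)\, dt = -\sqrt{E_b} \tag{7.101}$$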

In words, the message point corresponding to s1(t) is located at $s_{11} = +\sqrt{E_b}$ and the message point corresponding to s2(t) is located at $s_{21} = -\sqrt{E_b}$. Figure 7.13a displays the signal-space diagram for binary PSK and Figure 7.13b shows example waveforms of antipodal signals representing s1(t) and s2(t). Note that the binary constellation of Figure 7.13 has minimum average energy.

Figure 7.13 (a) Signal-space diagram for coherent binary PSK system. (b) The waveforms depicting the transmitted signals s1(t) and s2(t), assuming nc = 2.

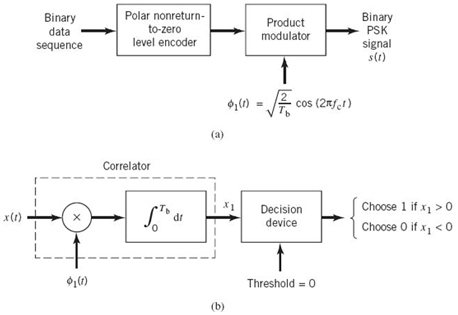

Generation of a binary PSK signal follows readily from (7.97) to (7.99). Specifically, as shown in the block diagram of Figure 7.14a, the generator (transmitter) consists of two components:

1. Polar NRZ-level encoder, which represents symbols 1 and 0 of the incoming binary sequence by amplitude levels $+\sqrt{E_b}$ and $-\sqrt{E_b}$, respectively.

2. Product modulator, which multiplies the output of the polar NRZ encoder by the basis function ϕ1(t); in effect, the sinusoidal ϕ1(t) acts as the “carrier” of the binary PSK signal.

Accordingly, binary PSK may be viewed as a special form of DSB-SC modulation that was studied in Section 2.14.

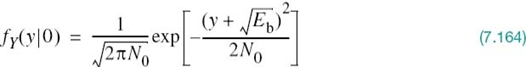

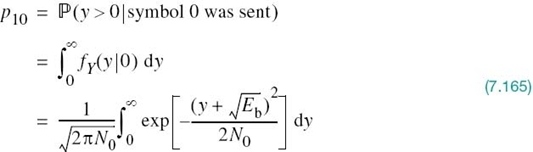

Error Probability of Binary PSK Using Coherent Detection

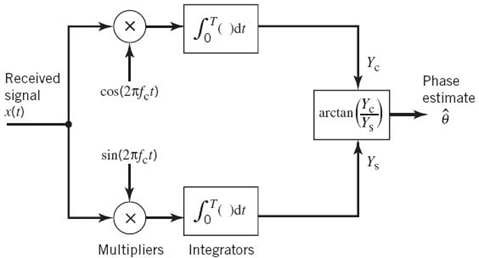

To make an optimum decision on the received signal x(t) in favor of symbol 1 or symbol 0 (i.e., estimate the original binary sequence at the transmitter input), we assume that the receiver has access to a locally generated replica of the basis function ϕ1(t). In other words, the receiver is synchronized with the transmitter, as shown in the block diagram of Figure 7.14b. We may identify two basic components in the binary PSK receiver:

1. Correlator, which correlates the received signal x(t) with the basis function ϕ1(t) on a bit-by-bit basis.

2. Decision device, which compares the correlator output against a zero-threshold, assuming that binary symbols 1 and 0 are equiprobable. If the threshold is exceeded, a decision is made in favor of symbol 1; if not, the decision is made in favor of symbol 0. Equality of the correlator output with the zero-threshold is decided by the toss of a fair coin (i.e., in a random manner).

With coherent detection in place, we may apply the decision rule of (7.54). Specifically, we partition the signal space of Figure 7.13 into two regions:

- the set of points closest to message point 1 at $+\sqrt{E_b}$; and

- the set of points closest to message point 2 at $-\sqrt{E_b}$.

This is accomplished by constructing the midpoint of the line joining these two message points and then marking off the appropriate decision regions. In Figure 7.13, these two decision regions are marked Z1 and Z2, according to the message point around which they are constructed.

The decision rule is now simply to decide that signal s1(t) (i.e., binary symbol 1) was transmitted if the received signal point falls in region Z1 and to decide that signal s2(t) (i.e., binary symbol 0) was transmitted if the received signal point falls in region Z2. Two kinds of erroneous decisions may, however, be made:

1. Error of the first kind. Signal s2(t) is transmitted but the noise is such that the received signal point falls inside region Z1; so the receiver decides in favor of signal s1(t).

2. Error of the second kind. Signal s1(t) is transmitted but the noise is such that the received signal point falls inside region Z2; so the receiver decides in favor of signal s2(t).

Figure 7.14 Block diagrams for (a) binary PSK transmitter and (b) coherent binary PSK receiver.

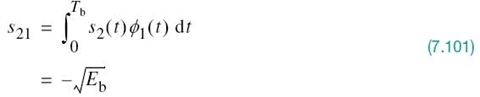

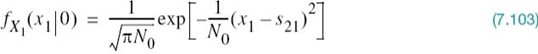

To calculate the probability of making an error of the first kind, we note from Figure 7.13a that the decision region associated with symbol 1 or signal s1(t) is described by

$$Z_1: 0 < x_1 < \infty$$

where the observable element x1 is related to the received signal x(t) by

$$x_1 = \int_0^{T_b} x(t)\, \phi_1(t)\, dt \tag{7.102}$$

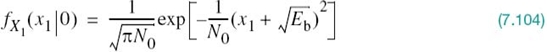

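The conditional probability density function of random variable X1, given that symbol 0 (i.e., signal s2(t)) was transmitted, is defined by

$$f_{X_1}(x_1|0) = \frac{1}{\sqrt{\pi N_0}} \exp\left[-\frac{1}{N_0}(x_1 - s_{21})^2\right] \tag{7.103}$$

Using (7.101) in this equation yields

$$f_{X_1}(x_1|0) = \frac{1}{\sqrt{\pi N_0}} \exp\left[-\frac{1}{N_0}\left(x_1 + \sqrt{E_b}\right)^2\right] \tag{7.104}$$

The conditional probability of the receiver deciding in favor of symbol 1, given that symbol 0 was transmitted, is therefore

$$p_{10} = \int_0^{\infty} f_{X_1}(x_1|0)\, dx_1 = \frac{1}{\sqrt{\pi N_0}} \int_0^{\infty} \exp\left[-\frac{1}{N_0}\left(x_1 + \sqrt{E_b}\right)^2\right] dx_1 \tag{7.105}$$

Putting

$$z = \sqrt{\frac{2}{N_0}}\left(x_1 + \sqrt{E_b}\right) \tag{7.106}$$

and changing the variable of integration from x1 to z, we may compactly rewrite (7.105) in terms of the Q-function:

$$p_{10} = \frac{1}{\sqrt{2\pi}} \int_{\sqrt{2E_b/N_0}}^{\infty} \exp\left(-\frac{z^2}{2}\right) dz \tag{7.107}$$

Using the formula of (3.68) in Chapter 3 for the Q-function in (7.107) we get

$$p_{10} = Q\left(\sqrt{\frac{2E_b}{N_0}}\right) \tag{7.108}$$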

Consider next an error of the second kind. We note that the signal space of Figure 7.13a is symmetric with respect to the origin. It follows, therefore, that p01, the conditional probability of the receiver deciding in favor of symbol 0, given that symbol 1 was transmitted, also has the same value as in (7.108).

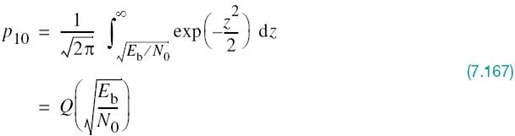

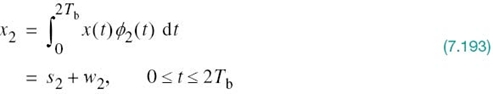

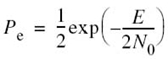

Thus, averaging the conditional error probabilities p10 and p01, we find that the average probability of symbol error or, equivalently, the BER for binary PSK using coherent detection and assuming equiprobable symbols is given by

$$P_e = Q\left(\sqrt{\frac{2E_b}{N_0}}\right) \tag{7.109}$$

As we increase the transmitted signal energy per bit Eb for a specified noise spectral density N0/2, the message points corresponding to symbols 1 and 0 move further apart and the average probability of error Pe is correspondingly reduced in accordance with (7.109), which is intuitively satisfying.
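The closed-form result Pe = Q(√(2Eb/N0)) can be checked against a simple Monte Carlo experiment carried out directly in the one-dimensional signal space. The sketch below assumes the correlator output is x1 = ±√Eb + w with w Gaussian of zero mean and variance N0/2; the function names, the seed, the number of trials, and the values of Eb and N0 are illustrative assumptions.

```python
import math
import random

def q_function(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def simulate_bpsk(eb, n0, n_bits, seed=1):
    """Estimate the BER of coherent binary PSK by simulation."""
    rng = random.Random(seed)
    sigma = math.sqrt(n0 / 2.0)          # noise standard deviation
    amplitude = math.sqrt(eb)
    errors = 0
    for _ in range(n_bits):
        bit = rng.getrandbits(1)
        s = amplitude if bit == 1 else -amplitude   # message point
        x1 = s + rng.gauss(0.0, sigma)              # correlator output
        decided = 1 if x1 > 0.0 else 0              # zero-threshold decision
        if decided != bit:
            errors += 1
    return errors / n_bits

eb, n0 = 1.0, 0.5                        # assumed values: Eb/N0 = 2
theory = q_function(math.sqrt(2.0 * eb / n0))
estimate = simulate_bpsk(eb, n0, n_bits=100_000)
print(theory, estimate)
```

Increasing Eb for a fixed N0 in this experiment moves the two message points further apart and lowers both the simulated and the theoretical error rate.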

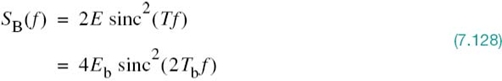

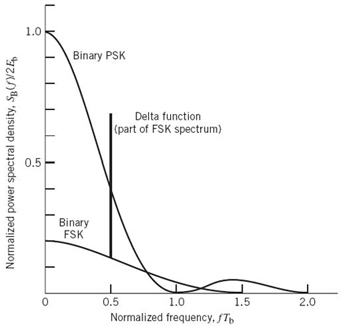

Power Spectra of Binary PSK Signals

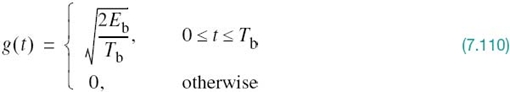

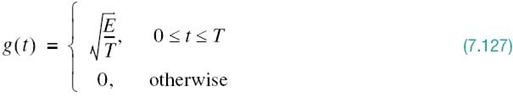

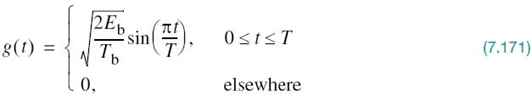

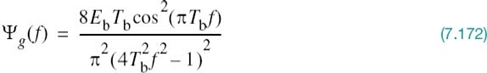

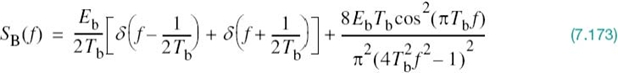

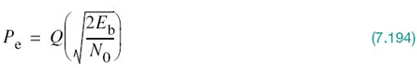

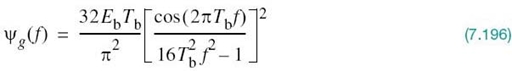

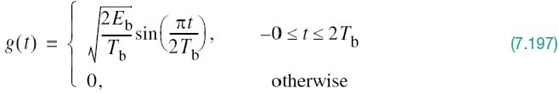

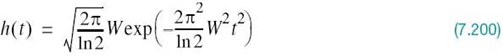

Examining (7.97) and (7.98), we see that a binary PSK wave is an example of DSB-SC modulation that was discussed in Section 2.14. More specifically, it consists of an in-phase component only. Let g(t) denote the underlying pulse-shaping function defined by

$$g(t) = \begin{cases} \sqrt{\dfrac{2E_b}{T_b}}, & 0 \le t \le T_b \\ 0, & \text{otherwise} \end{cases} \tag{7.110}$$

Depending on whether the transmitter input is binary symbol 1 or 0, the corresponding transmitter output is +g(t) or –g(t), respectively. It is assumed that the incoming binary sequence is random, with symbols 1 and 0 being equally likely and the symbols transmitted during the different time slots being statistically independent.

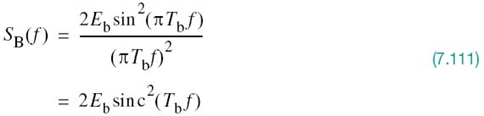

In Example 6 of Chapter 4, it was shown that the power spectral density of a random binary wave so described is equal to the energy spectral density of the symbol shaping function divided by the symbol duration. The energy spectral density of a Fourier-transformable signal g(t) is defined as the squared magnitude of the signal’s Fourier transform. For the binary PSK signal at hand, the baseband power spectral density is, therefore, defined by

$$S_B(f) = \frac{2E_b \sin^2(\pi T_b f)}{(\pi T_b f)^2} = 2E_b\, \text{sinc}^2(T_b f) \tag{7.111}$$

Examining (7.111), we may make the following observations on binary PSK:

1. The power spectral density SB(f) is symmetric about the vertical axis, as expected.

2. SB(f) goes through zero at multiples of the bit rate; that is, f = ±1/Tb, ±2/Tb,…

3. With sin²(πTbf) limited to a maximum value of unity, SB(f) falls off as the inverse square of the frequency, f.

These three observations are all embodied in the plot of SB(f) versus f, presented in Figure 7.15.

Figure 7.15 also includes a plot of the baseband power spectral density of a binary frequency-shift keying (FSK) signal, details of which are presented in Section 7.8. Comparison of these two spectra is deferred to that section.
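The three observations on the binary PSK spectrum can be confirmed numerically. The sketch below assumes the baseband power spectral density takes the form SB(f) = 2Eb sin²(πTbf)/(πTbf)², which follows from the rectangular pulse of amplitude √(2Eb/Tb) and duration Tb; the function name and the values of Eb and Tb are illustrative assumptions.

```python
import math

def bpsk_baseband_psd(f, eb, tb):
    """S_B(f) = 2 Eb sinc^2(Tb f), with the limit 2 Eb taken at f = 0."""
    u = math.pi * tb * f
    if u == 0.0:
        return 2.0 * eb
    return 2.0 * eb * (math.sin(u) / u) ** 2

eb, tb = 1.0, 1e-3                      # assumed energy per bit and bit duration

peak = bpsk_baseband_psd(0.0, eb, tb)

# Observation 2: spectral nulls at multiples of the bit rate 1/Tb.
nulls = [bpsk_baseband_psd(n / tb, eb, tb) for n in (1, 2, 3)]

# Observation 3: the spectrum never exceeds the envelope 2 Eb / (pi Tb f)^2.
f_test = 2.5 / tb
envelope = 2.0 * eb / (math.pi * tb * f_test) ** 2

print(peak, nulls)
print(bpsk_baseband_psd(f_test, eb, tb), envelope)
```

The value at f = 2.5/Tb sits on the inverse-square envelope because sin²(πTbf) equals one there, which is the midpoint of a sidelobe.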

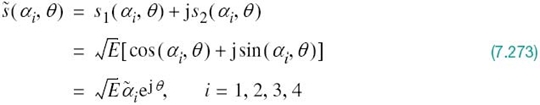

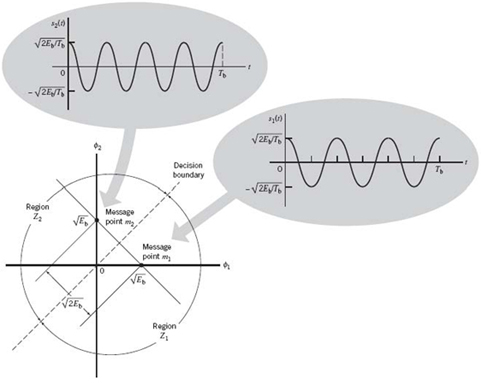

Quadriphase-Shift Keying

The provision of reliable performance, exemplified by a very low probability of error, is one important goal in the design of a digital communication system. Another important goal is the efficient utilization of channel bandwidth. In this subsection we study a bandwidth-conserving modulation scheme known as quadriphase-shift keying (QPSK), using coherent detection.

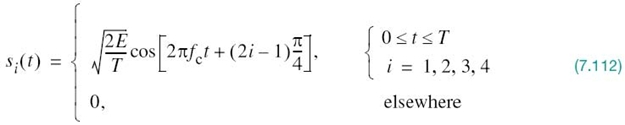

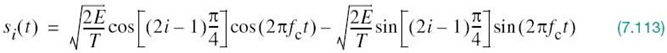

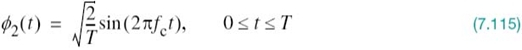

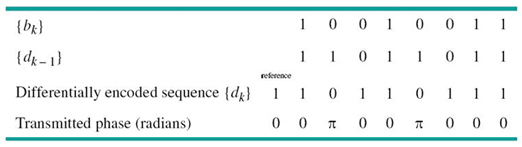

As with binary PSK, information about the message symbols in QPSK is contained in the carrier phase. In particular, the phase of the carrier takes on one of four equally spaced values, such as π/4, 3π/4, 5π/4, and 7π/4. For this set of values, we may define the transmitted signal as

where E is the transmitted signal energy per symbol and T is the symbol duration. The carrier frequency fc equals nc/T for some fixed integer nc. Each possible value of the phase corresponds to a unique dibit (i.e., pair of bits). Thus, for example, we may choose the foregoing set of phase values to represent the Gray-encoded set of dibits 10, 00, 01, and 11, where only a single bit is changed from one dibit to the next.

Figure 7.15 Power spectra of binary PSK and FSK signals.

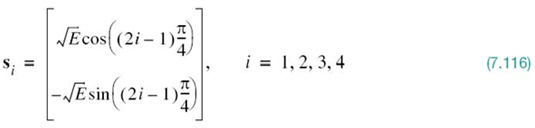

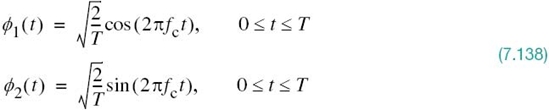

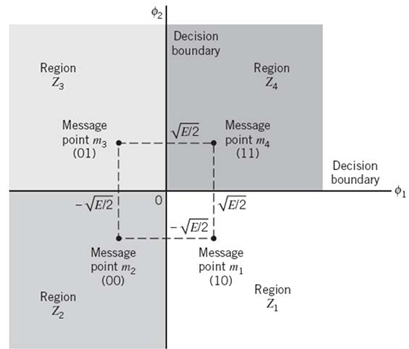

Signal-Space Diagram of QPSK Signals

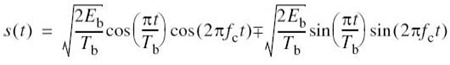

Using a well-known trigonometric identity, we may expand (7.112) to redefine the transmitted signal in the canonical form:

where i = 1, 2, 3, 4. Based on this representation, we make two observations:

1. There are two orthonormal basis functions, defined by a pair of quadrature carriers:

Figure 7.16 Signal-space diagram of QPSK system.

Table 7.2 Signal-space characterization of QPSK

2. There are four message points, defined by the two-dimensional signal vector

Elements of the signal vectors, namely si1 and si2, have their values summarized in Table 7.2; the first two columns give the associated dibit and phase of the QPSK signal.

Accordingly, a QPSK signal has a two-dimensional signal constellation (i.e., N = 2) and four message points (i.e., M = 4) whose phase angles increase in a counterclockwise direction, as illustrated in Figure 7.16. As with binary PSK, the QPSK signal has minimum average energy.
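To make the signal-space characterization concrete, the following sketch computes the four message points from the phase values (2i – 1)π/4 and checks three properties: every point has energy E, neighboring points are separated by the same distance, and neighboring dibits differ in one bit. It is an illustration under stated assumptions rather than a reproduction of Table 7.2: the sign of the second coordinate assumes that ϕ2(t) is a sine carrier, so that expanding the cosine gives si2 = –√E sin[(2i – 1)π/4], and the value of E is arbitrary.

```python
import math

# Dibits in the Gray-encoded order quoted in the text for the phases
# pi/4, 3pi/4, 5pi/4, 7pi/4 (i = 1, 2, 3, 4).
DIBITS = ["10", "00", "01", "11"]

def message_point(i: int, E: float) -> tuple:
    """Signal vector (s_i1, s_i2) for i = 1..4.

    Assumption: s_i2 = -sqrt(E)*sin(theta_i), which follows from expanding
    cos(2*pi*fc*t + theta_i) when phi_2(t) is taken to be a sine carrier.
    """
    theta = (2 * i - 1) * math.pi / 4.0
    return (math.sqrt(E) * math.cos(theta), -math.sqrt(E) * math.sin(theta))

def hamming(a: str, b: str) -> int:
    """Number of bit positions in which two dibits differ."""
    return sum(x != y for x, y in zip(a, b))

E = 4.0  # illustrative symbol energy (assumption)
points = [message_point(i, E) for i in range(1, 5)]

# Squared length of each signal vector, i.e., the energy of each signal.
energies = [x * x + y * y for x, y in points]

# Distance between each message point and the next one around the circle.
adjacent_distances = [
    math.dist(points[k], points[(k + 1) % 4]) for k in range(4)
]

# Number of bits that change between neighboring dibits (Gray property).
gray_steps = [hamming(DIBITS[k], DIBITS[(k + 1) % 4]) for k in range(4)]

for dibit, (s1, s2) in zip(DIBITS, points):
    print(dibit, round(s1, 6), round(s2, 6))
```

Each coordinate has magnitude √(E/2), so the four points sit at the corners of a square centered on the origin.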

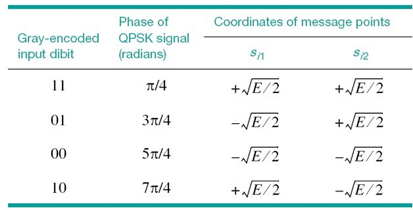

EXAMPLE 6 QPSK Waveforms

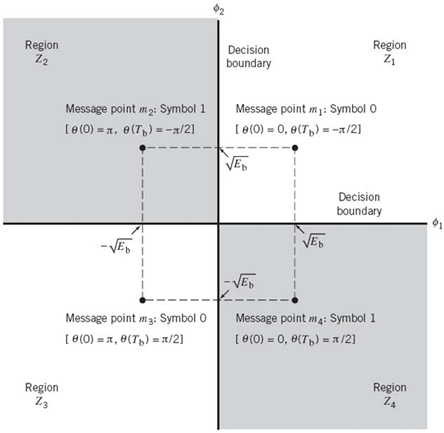

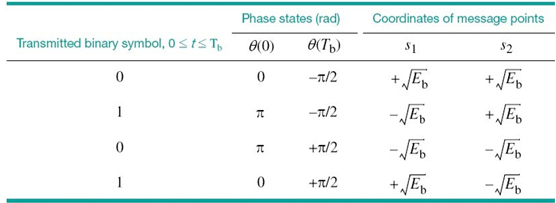

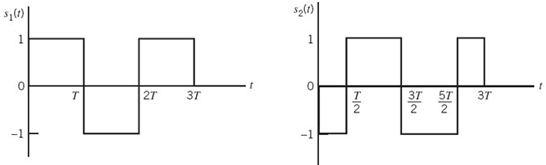

Figure 7.17 illustrates the sequences and waveforms involved in the generation of a QPSK signal. The input binary sequence 01101000 is shown in Figure 7.17a. This sequence is divided into two other sequences, consisting of odd- and even-numbered bits of the input sequence. These two sequences are shown in the top lines of Figure 7.17b and c. The waveforms representing the two components of the QPSK signal, namely si1ϕ1(t) and si2ϕ2(t) are also shown in Figure 7.17b and c, respectively. These two waveforms may individually be viewed as examples of a binary PSK signal. Adding them, we get the QPSK waveform shown in Figure 7.17d.
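The steps of this example can be sketched in a few lines. The code below splits the input sequence 01101000 into its odd- and even-numbered bits, maps them to polar amplitudes ±√(E/2), and adds the two binary PSK components. The helper names, the choice E = 2, T = 1, nc = 2, and the form of the basis functions (cosine and sine carriers of amplitude √(2/T)) are assumptions made for illustration.

```python
import math

def demultiplex(bits: str):
    """Split a bit string into its odd- and even-numbered bits (1-based)."""
    return bits[0::2], bits[1::2]

def polar_nrz(bits: str, amplitude: float):
    """Map symbol 1 to +amplitude and symbol 0 to -amplitude."""
    return [amplitude if b == "1" else -amplitude for b in bits]

def qpsk_symbol_samples(s1: float, s2: float, T: float, nc: int, n: int):
    """n samples of s(t) = s1*phi1(t) + s2*phi2(t) over one symbol.

    Assumption: phi1 and phi2 are cosine and sine carriers of amplitude
    sqrt(2/T) at the carrier frequency fc = nc/T.
    """
    a = math.sqrt(2.0 / T)
    w = 2.0 * math.pi * nc / T
    return [
        s1 * a * math.cos(w * k * T / n) + s2 * a * math.sin(w * k * T / n)
        for k in range(n)
    ]

bits = "01101000"
E, T, nc, n = 2.0, 1.0, 2, 400   # illustrative values (assumptions)

odd_bits, even_bits = demultiplex(bits)
amp = math.sqrt(E / 2.0)
si1 = polar_nrz(odd_bits, amp)    # in-phase amplitudes
si2 = polar_nrz(even_bits, amp)   # quadrature amplitudes
dibits = [o + e for o, e in zip(odd_bits, even_bits)]

# Concatenate the per-symbol waveforms to obtain the QPSK signal.
waveform = []
for a1, a2 in zip(si1, si2):
    waveform.extend(qpsk_symbol_samples(a1, a2, T, nc, n))

# Numerical energy of the first symbol; it should be close to E.
first_symbol_energy = sum(x * x for x in waveform[:n]) * (T / n)

print(odd_bits, even_bits, dibits)
print(si1, si2, first_symbol_energy)
```

Each of the two amplitude sequences on its own drives a binary PSK signal at half the input bit rate, which is the view taken in the example.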

To define the decision rule for the coherent detection of the transmitted data sequence, we partition the signal space into four regions, in accordance with Table 7.2. Each region is the set of points closest to one of the message points represented by the signal vectors s1, s2, s3, and s4. This is readily accomplished by constructing the perpendicular bisectors of the sides of the square formed by joining the four message points and then marking off the appropriate regions. We thus find that the decision regions are quadrants whose vertices coincide with the origin. These regions are marked Z1, Z2, Z3, and Z4 in Figure 7.16, according to the message point around which they are constructed.

Figure 7.17 (a) Input binary sequence. (b) Odd-numbered bits of input sequence and associated binary PSK signal. (c) Even-numbered bits of input sequence and associated binary PSK signal. (d) QPSK waveform defined as s(t) = si1ϕ1(t) + si2ϕ2(t).

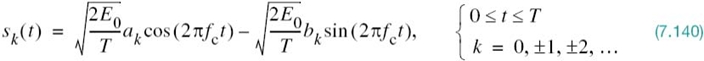

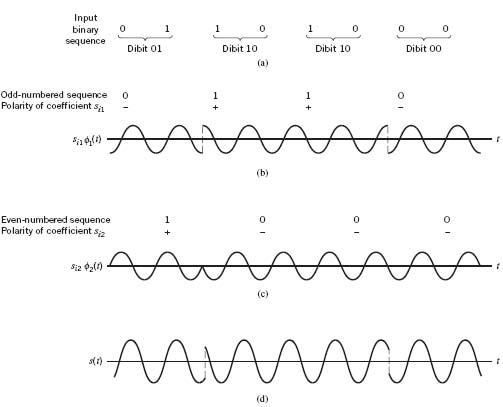

Generation and Coherent Detection of QPSK Signals

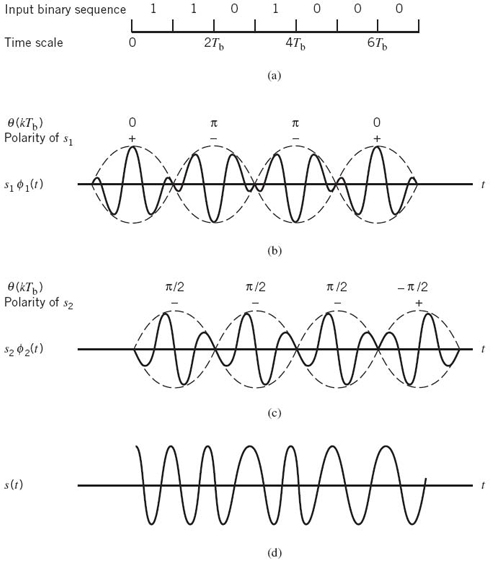

Expanding on the binary PSK transmitter of Figure 7.14a, we may build on (7.113) to (7.115) to construct the QPSK transmitter shown in Figure 7.18a. A distinguishing feature of the QPSK transmitter is the block labeled demultiplexer. The function of the demultiplexer is to divide the binary wave produced by the polar NRZ-level encoder into two separate binary waves, one of which represents the odd-numbered bits in the incoming binary sequence and the other represents the even-numbered bits. Accordingly, we may make the following statement:

The QPSK transmitter may be viewed as two binary PSK generators that work in parallel, each at a bit rate equal to one-half the bit rate of the original binary sequence at the QPSK transmitter input.

Figure 7.18 Block diagram of (a) QPSK transmitter and (b) coherent QPSK receiver.

Expanding on the binary PSK receiver of Figure 7.14b, we find that the QPSK receiver is structured in the form of an in-phase path and a quadrature path, working in parallel as depicted in Figure 7.18b. The functional composition of the QPSK receiver is as follows:

1. Pair of correlators, which have a common input x(t). The two correlators are supplied with a pair of locally generated orthonormal basis functions ϕ1(t) and ϕ2(t), which means that the receiver is synchronized with the transmitter. The correlator outputs, produced in response to the received signal x(t), are denoted by x1 and x2, respectively.

2. Pair of decision devices, which act on the correlator outputs x1 and x2 by comparing each one with a zero-threshold; here, it is assumed that the symbols 1 and 0 in the original binary stream at the transmitter input are equally likely. If x1 > 0, a decision is made in favor of symbol 1 for the in-phase channel output; on the other hand, if x1 < 0, then a decision is made in favor of symbol 0. Similar binary decisions are made for the quadrature channel.

3. Multiplexer, the function of which is to combine the two binary sequences produced by the pair of decision devices. The resulting binary sequence so produced provides an estimate of the original binary stream at the transmitter input.
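The three functional blocks just listed can be sketched as follows. This is a simplified, noise-free illustration, not the receiver of Figure 7.18b itself: the correlator integrals are approximated by Riemann sums, the basis functions are assumed to be cosine and sine carriers of amplitude √(2/T) at fc = nc/T, a tie at exactly zero is resolved in favor of symbol 0 (the text does not address this case), and all names and parameter values are hypothetical.

```python
import math

def basis(t: float, T: float, nc: int):
    """Assumed quadrature carriers: sqrt(2/T)*cos and sqrt(2/T)*sin at nc/T."""
    a = math.sqrt(2.0 / T)
    w = 2.0 * math.pi * nc / T
    return a * math.cos(w * t), a * math.sin(w * t)

def correlate(x, T: float, nc: int):
    """Approximate the two correlator integrals over one symbol interval."""
    n = len(x)
    dt = T / n
    x1 = x2 = 0.0
    for k, xk in enumerate(x):
        p1, p2 = basis(k * dt, T, nc)
        x1 += xk * p1 * dt
        x2 += xk * p2 * dt
    return x1, x2

def decide(value: float) -> str:
    """Zero-threshold decision device (a tie at 0 goes to symbol 0)."""
    return "1" if value > 0.0 else "0"

def detect(symbol_blocks, T: float, nc: int) -> str:
    """Correlate, decide, and multiplex the in-phase and quadrature bits."""
    out = []
    for block in symbol_blocks:
        x1, x2 = correlate(block, T, nc)
        out.append(decide(x1) + decide(x2))
    return "".join(out)

T, nc, n = 1.0, 2, 200   # illustrative values (assumptions)

def symbol(s1: float, s2: float):
    """Noise-free samples of s1*phi1(t) + s2*phi2(t) over one symbol."""
    samples = []
    for k in range(n):
        p1, p2 = basis(k * T / n, T, nc)
        samples.append(s1 * p1 + s2 * p2)
    return samples

# Signal-vector elements for the dibits 01, 10, 10, 00 with E = 2.
blocks = [symbol(-1.0, 1.0), symbol(1.0, -1.0),
          symbol(1.0, -1.0), symbol(-1.0, -1.0)]
estimate = detect(blocks, T, nc)
print(estimate)
```

Because the two basis functions are orthonormal over the symbol interval, each correlator recovers only the amplitude carried by its own path, which is what allows the two binary decisions to be made separately.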

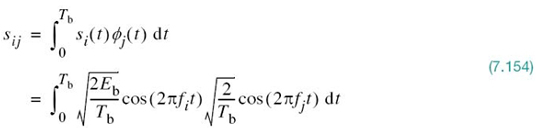

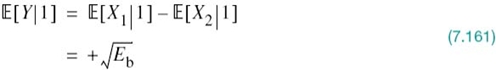

Error Probability of QPSK

In a QPSK system operating on an AWGN channel, the received signal x(t) is defined by

where w(t) is the sample function of a white Gaussian noise process of zero mean and power spectral density N0/2.

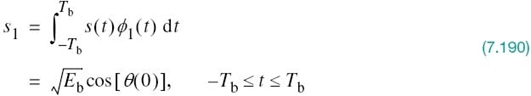

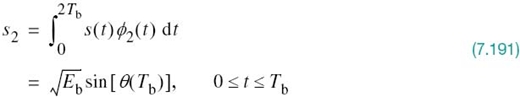

Referring to Figure 7.18b, we see that the two correlator outputs, x1 and x2, are respectively defined as follows:

and

Thus, the observable elements x1 and x2 are sample values of independent Gaussian random variables with mean values of magnitude $\sqrt{E/2}$, whose signs are determined by the transmitted dibit (see Table 7.2), and with a common variance equal to N0/2.

The decision rule is now simply to say that s1(t) was transmitted if the received signal point associated with the observation vector x falls inside region Z1; say that s2(t) was transmitted if the received signal point falls inside region Z2, and so on for the other two regions Z3 and Z4. An erroneous decision will be made if, for example, signal s4(t) is transmitted but the noise w(t) is such that the received signal point falls outside region Z4.

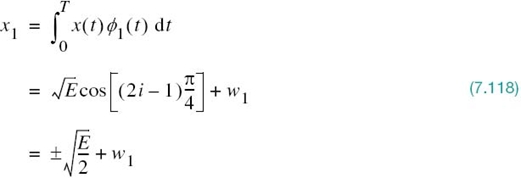

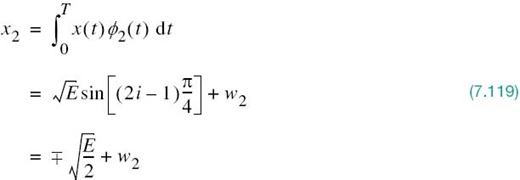

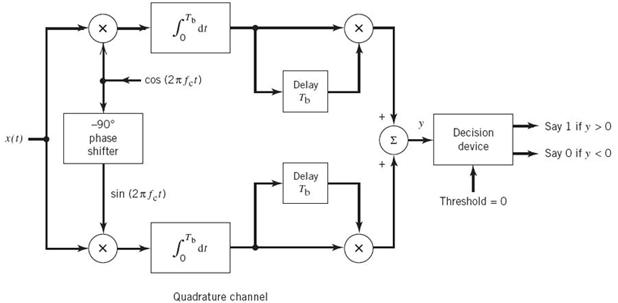

To calculate the average probability of symbol error, recall that a QPSK receiver is in fact equivalent to two binary PSK receivers working in parallel and using two carriers that are in phase quadrature. The in-phase channel output x1 and the quadrature channel output x2 (i.e., the two elements of the observation vector x) may be viewed as the individual outputs of two binary PSK receivers. Thus, according to (7.118) and (7.119), these two binary PSK receivers are characterized as follows:

- signal energy per bit equal to E/2, and

- noise spectral density equal to N0/2.

Hence, using (7.109) for the average probability of bit error of a coherent binary PSK receiver, we may express the average probability of bit error in the in-phase and quadrature paths of the coherent QPSK receiver as

where E is written in place of 2Eb. Another important point to note is that the bit errors in the in-phase and quadrature paths of the QPSK receiver are statistically independent. The decision device in the in-phase path accounts for one of the two bits constituting a symbol (dibit) of the QPSK signal, and the decision device in the quadrature path takes care of the other bit. Accordingly, the average probability of a correct detection resulting from the combined action of the two channels (paths) working together is

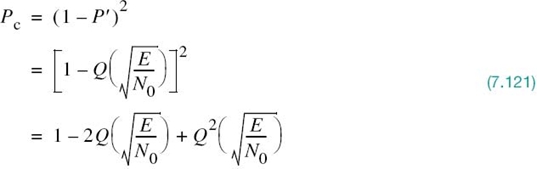

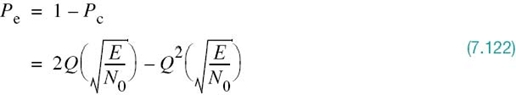

The average probability of symbol error for QPSK is therefore

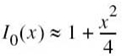

In the region where (E/N0) >> 1, we may ignore the quadratic term on the right-hand side of (7.122), so the average probability of symbol error for the QPSK receiver is approximated as

Equation (7.123) may also be derived in another insightful way, using the signal-space diagram of Figure 7.16. Since the four message points of this diagram are circularly symmetric with respect to the origin, we may apply the approximate formula of (7.85) based on the union bound. Consider, for example, message point m1 (corresponding to dibit 10) chosen as the transmitted message point. The message points m2 and m4 (corresponding to dibits 00 and 11) are the closest to m1. From Figure 7.16 we readily find that m1 is equidistant from m2 and m4 in a Euclidean sense, as shown by

$$d_{12} = d_{14} = \sqrt{2E}$$

Assuming that E/N0 is large enough to ignore the contribution of the most distant message point m3 (corresponding to dibit 01) relative to m1, we find that the use of (7.85) with the equality sign yields an approximate expression for Pe that is the same as that of (7.123). Note that in mistaking either m2 or m4 for m1, a single bit error is made; on the other hand, in mistaking m3 for m1, two bit errors are made. For a high enough E/N0, the likelihood of both bits of a symbol being in error is much less than that of a single bit being in error, which is a further justification for ignoring m3 in calculating Pe when m1 is sent.
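The two routes to the symbol error probability can be compared numerically. The sketch below assumes the Q-function notation, with per-path bit error P′ = Q(√(E/N0)), exact symbol error 1 – (1 – P′)², and a nearest-neighbor estimate formed by summing Q(d/√(2N0)) over the two closest message points at distance d = √(2E). These forms follow from the argument in the text, but the equations themselves are not reproduced here, so the function names and the exact shape of (7.85) should be read as assumptions.

```python
import math

def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = 0.5*erfc(x/sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def qpsk_symbol_error_exact(E: float, N0: float) -> float:
    """1 - (1 - P')^2 with P' = Q(sqrt(E/N0)), the per-path bit error."""
    p = q_function(math.sqrt(E / N0))
    return 2.0 * p - p * p

def qpsk_symbol_error_approx(E: float, N0: float) -> float:
    """High-E/N0 form obtained by dropping the quadratic term."""
    return 2.0 * q_function(math.sqrt(E / N0))

def nearest_neighbor_estimate(distances, N0: float) -> float:
    """Sum of pairwise error probabilities Q(d/sqrt(2*N0)) over the listed
    neighbors (assumed form of the union-bound approximation)."""
    return sum(q_function(d / math.sqrt(2.0 * N0)) for d in distances)

N0 = 1.0  # illustrative noise level (assumption)
for E in (4.0, 10.0, 20.0):
    exact = qpsk_symbol_error_exact(E, N0)
    approx = qpsk_symbol_error_approx(E, N0)
    # Two nearest neighbors (m2 and m4), each at distance sqrt(2E) from m1.
    nn = nearest_neighbor_estimate([math.sqrt(2.0 * E)] * 2, N0)
    print(E, exact, approx, nn)
```

The approximation always overestimates the exact value by P′², which becomes negligible relative to 2P′ as E/N0 grows.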

In a QPSK system, we note that since there are two bits per symbol, the transmitted signal energy per symbol is twice the signal energy per bit, as shown by

Thus, expressing the average probability of symbol error in terms of the ratio Eb/N0, we may write

With Gray encoding used for the incoming symbols, we find from (7.120) and (7.124) that the BER of QPSK is exactly

We may, therefore, state that a QPSK system achieves the same average probability of bit error as a binary PSK system for the same bit rate and the same Eb/N0, but uses only half the channel bandwidth. Stated in another way:

For the same Eb/N0 and, therefore, the same average probability of bit error, a QPSK system transmits information at twice the bit rate of a binary PSK system for the same channel bandwidth.

For a prescribed performance, QPSK uses channel bandwidth better than binary PSK, which explains the preferred use of QPSK over binary PSK in practice.
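The equality of the two bit error rates is easy to confirm numerically. The sketch below assumes the standard result Q(√(2Eb/N0)) for coherent binary PSK and the per-path result Q(√(E/N0)) for QPSK, and substitutes E = 2Eb; the function names and the Eb/N0 values are illustrative.

```python
import math

def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = 0.5*erfc(x/sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def bpsk_ber(eb_n0: float) -> float:
    """Coherent binary PSK bit error rate, Q(sqrt(2*Eb/N0))."""
    return q_function(math.sqrt(2.0 * eb_n0))

def qpsk_path_ber(e_n0: float) -> float:
    """Bit error rate in either path of coherent QPSK, Q(sqrt(E/N0))."""
    return q_function(math.sqrt(e_n0))

def db_to_linear(db: float) -> float:
    """Convert a ratio in decibels to a linear ratio."""
    return 10.0 ** (db / 10.0)

for eb_n0_db in (4.0, 8.0, 9.6):
    eb_n0 = db_to_linear(eb_n0_db)
    e_n0 = 2.0 * eb_n0          # two bits per symbol, so E = 2*Eb
    print(eb_n0_db, bpsk_ber(eb_n0), qpsk_path_ber(e_n0))
```

The two columns agree at every Eb/N0, while the QPSK symbol lasts two bit durations; this is the numerical counterpart of the statement that QPSK carries twice the bit rate in the same bandwidth.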

Earlier we stated that the binary PSK may be viewed as a special case of DSB-SC modulation. In a corresponding way, we may view the QPSK as a special case of the quadrature amplitude modulation (QAM) in analog modulation theory.

Power Spectra of QPSK Signals

Assume that the binary wave at the modulator input is random with symbols 1 and 0 being equally likely, and with the symbols transmitted during adjacent time slots being statistically independent. We then make the following observations pertaining to the in-phase and quadrature components of a QPSK signal:

1. Depending on the dibit sent during the signaling interval –Tb ≤ t ≤ Tb, the in-phase component equals +g(t) or –g(t), and similarly for the quadrature component. The g(t) denotes the symbol-shaping function defined by

Hence, the in-phase and quadrature components have a common power spectral density, namely, E sinc²(Tf).

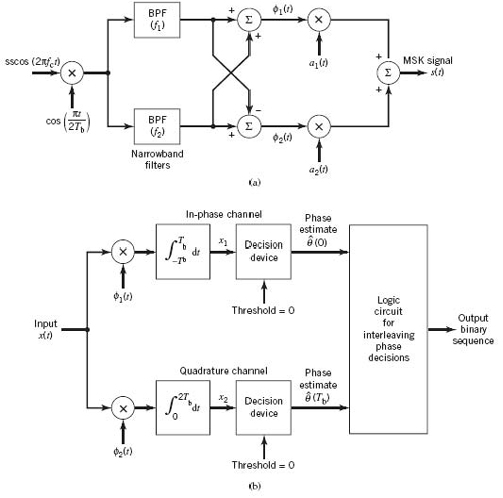

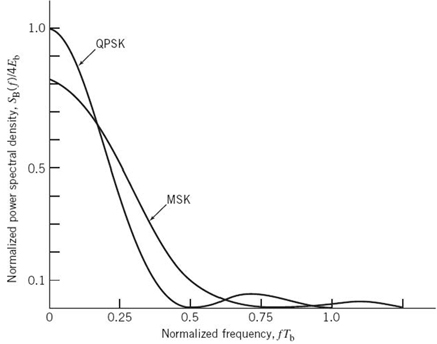

Figure 7.19 Power spectra of QPSK and MSK signals.

2. The in-phase and quadrature components are statistically independent. Accordingly, the baseband power spectral density of the QPSK signal equals the sum of the individual power spectral densities of the in-phase and quadrature components, so we may write

Figure 7.19 plots SB(f), normalized with respect to 4Eb, versus the normalized frequency Tbf. This figure also includes a plot of the baseband power spectral density of a certain form of binary FSK called minimum shift keying, the evaluation of which is presented in Section 7.8. Comparison of these two spectra is deferred to that section.
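A few points on the normalized QPSK curve can be computed directly. The sketch below adds the two component spectra E sinc²(Tf) and substitutes E = 2Eb and T = 2Tb, which gives 4Eb sinc²(2Tbf); this closed form is inferred from the text and the stated normalization, and the function names and sample frequencies are illustrative.

```python
import math

def sinc(x: float) -> float:
    """Normalized sinc function sin(pi*x)/(pi*x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def qpsk_baseband_psd(f: float, Eb: float, Tb: float) -> float:
    """Sum of the in-phase and quadrature spectra, each E*sinc^2(T*f)."""
    E, T = 2.0 * Eb, 2.0 * Tb          # two bits per symbol
    component = E * sinc(T * f) ** 2   # common PSD of each component
    return 2.0 * component

Eb, Tb = 1.0, 1.0  # illustrative values (assumptions)

# Pairs (Tb*f, S_B(f)/(4*Eb)) at a few normalized frequencies.
normalized = [
    (x, qpsk_baseband_psd(x / Tb, Eb, Tb) / (4.0 * Eb))
    for x in (0.0, 0.25, 0.5, 0.75, 1.0)
]
for x, value in normalized:
    print(x, value)
```

The first null falls at Tbf = 0.5, that is, at f = 1/T, which is half the frequency of the first null of the binary PSK spectrum for the same bit rate.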

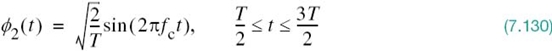

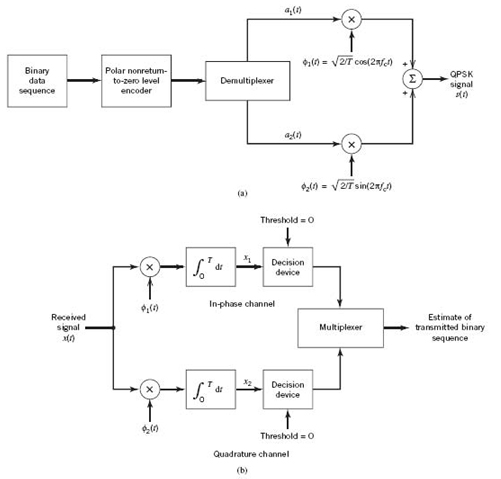

Offset QPSK

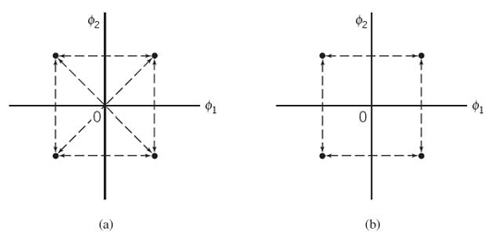

For a variation of QPSK, consider the signal-space diagram of Figure 7.20a, which embodies all the possible phase transitions that can arise in the generation of a QPSK signal. More specifically, examining the QPSK waveform illustrated in Figure 7.17 for Example 6, we may make three observations:

1. The carrier phase changes by ±180° whenever both the in-phase and quadrature components of the QPSK signal change sign. An example of this situation is illustrated in Figure 7.17 when the input binary sequence switches from dibit 01 to dibit 10.

2. The carrier phase changes by ±90° whenever the in-phase or quadrature component changes sign. An example of this second situation is illustrated in Figure 7.17 when the input binary sequence switches from dibit 10 to dibit 00, during which the in-phase component changes sign, whereas the quadrature component is unchanged.

3. The carrier phase is unchanged when neither the in-phase component nor the quadrature component changes sign. This last situation is illustrated in Figure 7.17 when dibit 10 is transmitted in two successive symbol intervals.

Situation 1 and, to a much lesser extent, situation 2 can be of particular concern when the QPSK signal is filtered during the course of transmission, prior to detection. Specifically, the 180° and 90° shifts in carrier phase can result in changes in the carrier amplitude (i.e., envelope of the QPSK signal) during the course of transmission over the channel, thereby causing additional symbol errors on detection at the receiver.

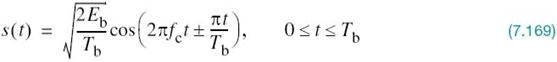

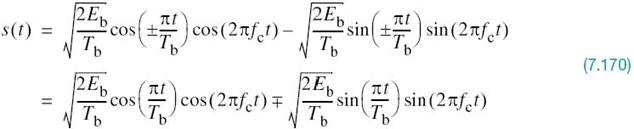

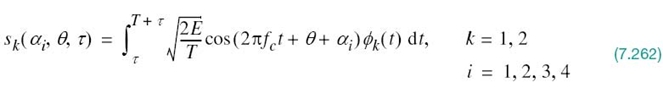

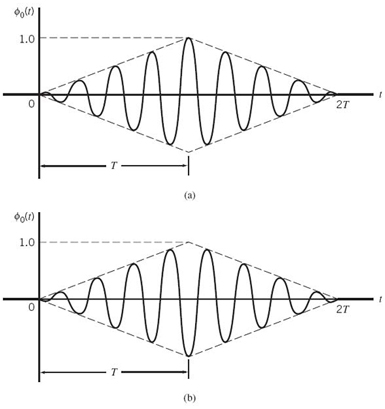

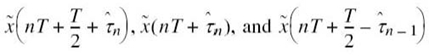

To mitigate this shortcoming of QPSK, we need to reduce the extent of its amplitude fluctuations. To this end, we may use offset QPSK.4 In this variant of QPSK, the bit stream responsible for generating the quadrature component is delayed (i.e., offset) by half a symbol interval with respect to the bit stream responsible for generating the in-phase component. Specifically, the two basis functions of offset QPSK are defined by

and

The ϕ1(t) of (7.129) is exactly the same as that of (7.114) for QPSK, but the ϕ2(t) of (7.130) is different from that of (7.115) for QPSK. Accordingly, unlike QPSK, the phase transitions likely to occur in offset QPSK are confined to ±90°, as indicated in the signal-space diagram of Figure 7.20b. However, ±90° phase transitions in offset QPSK occur twice as frequently but with half the intensity encountered in QPSK. Since, in addition to ±90° phase transitions, ±180° phase transitions also occur in QPSK, we find that amplitude fluctuations in offset QPSK due to filtering have a smaller amplitude than in the case of QPSK.

Figure 7.20 Possible paths for switching between the message points in (a) QPSK and (b) offset QPSK.

Despite the delay T/2 applied to the basis function ϕ2(t) in (7.130) compared with that in (7.115) for QPSK, the offset QPSK has exactly the same probability of symbol error in an AWGN channel as QPSK. The equivalence in noise performance between these PSK schemes assumes the use of coherent detection at the receiver. The reason for the equivalence is that the statistical independence of the in-phase and quadrature components applies to both QPSK and offset QPSK. We may, therefore, say that Equation (7.123) for the average probability of symbol error applies equally well to the offset QPSK.
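The difference in phase transitions between the two schemes can be illustrated with the input sequence 01101000 of Example 6. The sketch below tracks the message point from one interval to the next and records the magnitude of each phase change; for offset QPSK the point is sampled every half symbol, with the quadrature bit stream delayed by half a symbol. The helper names are hypothetical, and the phase is measured in the (ϕ1, ϕ2) plane, so only the magnitudes of the jumps are meaningful here, not their signs.

```python
import math

def phase_deg(point) -> float:
    """Angle of a message point in the (phi1, phi2) plane, in degrees."""
    return math.degrees(math.atan2(point[1], point[0]))

def phase_jumps(points):
    """Magnitudes (in degrees) of successive phase changes, wrapped to 180."""
    jumps = []
    for p, q in zip(points, points[1:]):
        d = phase_deg(q) - phase_deg(p)
        d = (d + 180.0) % 360.0 - 180.0   # wrap into [-180, 180)
        jumps.append(abs(round(d)))
    return jumps

def qpsk_points(i_bits, q_bits):
    """One message point per symbol; both components switch together."""
    return list(zip(i_bits, q_bits))

def offset_qpsk_points(i_bits, q_bits):
    """One point per half symbol; the quadrature stream lags by T/2, so
    at most one component can switch at any half-symbol boundary."""
    n = len(i_bits)
    return [(i_bits[h // 2], q_bits[(h - 1) // 2]) for h in range(1, 2 * n)]

# Polar amplitudes (in units of sqrt(E/2)) for the dibits 01, 10, 10, 00.
I = [-1, 1, 1, -1]   # odd-numbered bits 0 1 1 0
Q = [1, -1, -1, -1]  # even-numbered bits 1 0 0 0

qpsk_result = phase_jumps(qpsk_points(I, Q))
offset_result = phase_jumps(offset_qpsk_points(I, Q))
print(qpsk_result)
print(offset_result)
```

For this sequence the conventional QPSK signal exhibits one 180° transition, whereas every transition in the offset version is limited to 90° at the cost of occurring at half-symbol spacing.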

M-ary PSK

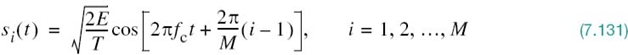

QPSK is a special case of the generic form of PSK commonly referred to as M-ary PSK, where the phase of the carrier takes on one of M possible values: θi = 2(i – 1)π/M, where i = 1,2,…,M. Accordingly, during each signaling interval of duration T, one of the M possible signals

is sent, where E is the signal energy per symbol. The carrier frequency fc = nc/T for some fixed integer nc.

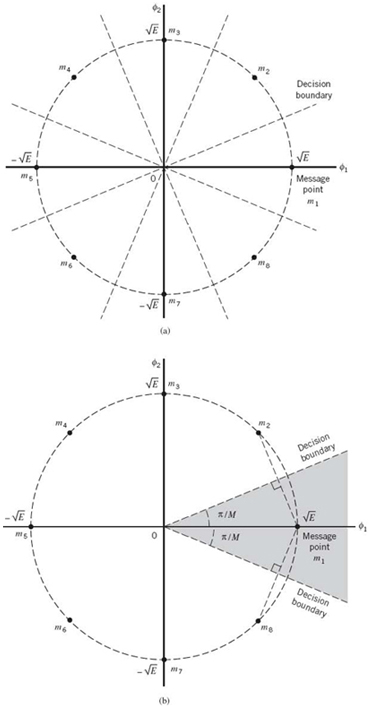

Each si(t) may be expanded in terms of the same two basis functions ϕ1(t) and ϕ2(t); the signal constellation of M-ary PSK is, therefore, two-dimensional. The M message points are equally spaced on a circle of radius $\sqrt{E}$ and center at the origin, as illustrated in Figure 7.21a for the case of octaphase-shift keying (i.e., M = 8).

From Figure 7.21a, we see that the signal-space diagram is circularly symmetric. We may, therefore, apply (7.85), based on the union bound, to develop an approximate formula for the average probability of symbol error for M-ary PSK. Suppose that the transmitted signal corresponds to the message point m1, whose coordinates along the ϕ1- and ϕ2-axes are $+\sqrt{E}$ and 0, respectively. Suppose that the ratio E/N0 is large enough to consider only the nearest two message points, one on either side of m1, as potential candidates for being mistaken for m1 due to channel noise. This is illustrated in Figure 7.21b for the case of M = 8. The Euclidean distance for each of these two points from m1 is (for M = 8)

$$d_{12} = d_{18} = 2\sqrt{E}\,\sin\left(\frac{\pi}{M}\right)$$

Hence, the use of (7.85) yields the average probability of symbol error for coherent M-ary PSK as

where it is assumed that M ≥ 4. The approximation becomes extremely tight for fixed M, as E/N0 is increased. For M = 4, (7.132) reduces to the same form given in (7.123) for QPSK.
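As a numerical check on this argument, the sketch below builds the M-ary PSK constellation from θi = 2(i – 1)π/M, confirms that the two nearest neighbors of m1 lie at distance 2√E sin(π/M), and evaluates the resulting estimate 2Q(√(2E/N0) sin(π/M)). That closed form is what the nearest-neighbor argument yields when each pairwise error probability is Q(d/√(2N0)); treating it as the content of (7.132) is an assumption, as are the function names and parameter values.

```python
import math

def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = 0.5*erfc(x/sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def mpsk_points(M: int, E: float):
    """Message points on a circle of radius sqrt(E) at angles 2(i-1)pi/M.

    The sign convention of the second coordinate does not affect distances.
    """
    r = math.sqrt(E)
    return [
        (r * math.cos(2.0 * math.pi * i / M), r * math.sin(2.0 * math.pi * i / M))
        for i in range(M)
    ]

def mpsk_symbol_error_approx(M: int, E: float, N0: float) -> float:
    """Nearest-neighbor estimate: two neighbors at d = 2*sqrt(E)*sin(pi/M)."""
    d = 2.0 * math.sqrt(E) * math.sin(math.pi / M)
    return 2.0 * q_function(d / math.sqrt(2.0 * N0))

E, N0 = 10.0, 1.0  # illustrative values (assumptions)

pts = mpsk_points(8, E)
d12 = math.dist(pts[0], pts[1])    # m1 to m2
d18 = math.dist(pts[0], pts[7])    # m1 to m8
print(d12, d18, 2.0 * math.sqrt(E) * math.sin(math.pi / 8))

for M in (4, 8, 16):
    print(M, mpsk_symbol_error_approx(M, E, N0))
```

For M = 4 the factor sin(π/4) = 1/√2 turns the argument of the Q-function into √(E/N0), in agreement with the QPSK approximation; for larger M the message points crowd together and the estimate rises quickly at fixed E/N0.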

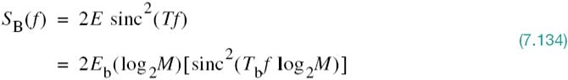

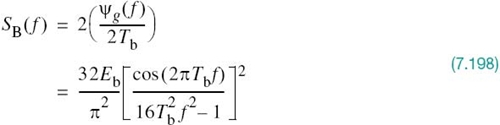

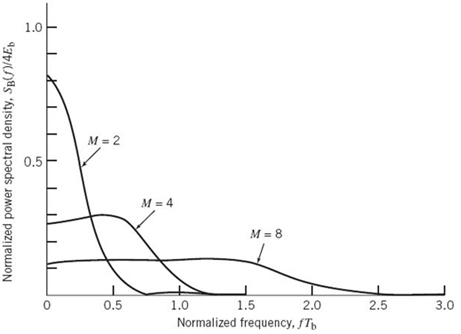

Power Spectra of M-ary PSK Signals

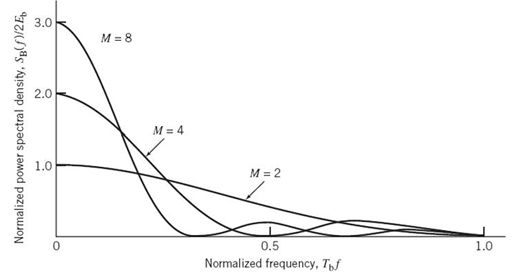

The symbol duration of M-ary PSK is defined by

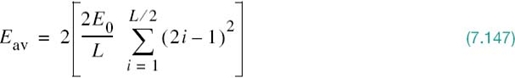

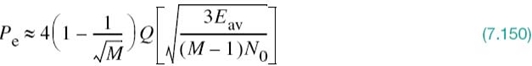

where Tb is the bit duration. Proceeding in a manner similar to that described for a QPSK signal, we may show that the baseband power spectral density of an M-ary PSK signal is given by

Figure 7.22 is a plot of the normalized power spectral density SB(f)/2Eb versus the normalized frequency Tbf for three different values of M, namely M = 2, 4, 8. Equation (7.134) includes (7.111) for M = 2 and (7.128) for M = 4 as two special cases.
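The two special cases can be verified with a short computation. The sketch below assumes that (7.134) has the form SB(f) = 2E sinc²(Tf) with E = Eb log2 M and T = Tb log2 M, which is consistent with the normalization by 2Eb used in Figure 7.22; the function names and numerical values are illustrative.

```python
import math

def sinc(x: float) -> float:
    """Normalized sinc function sin(pi*x)/(pi*x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def mpsk_baseband_psd(f: float, Eb: float, Tb: float, M: int) -> float:
    """Assumed form of (7.134): 2*Eb*log2(M)*sinc^2(Tb*f*log2(M))."""
    k = math.log2(M)   # bits per symbol
    return 2.0 * Eb * k * sinc(Tb * f * k) ** 2

Eb, Tb = 1.0, 1.0  # illustrative values (assumptions)

# Normalized spectra S_B(f)/(2*Eb) at a few values of Tb*f.
for M in (2, 4, 8):
    row = [mpsk_baseband_psd(x / Tb, Eb, Tb, M) / (2.0 * Eb)
           for x in (0.0, 0.2, 0.4)]
    print(M, row)

# Frequency of the first spectral null for each M, in units of 1/Tb.
first_nulls = {M: 1.0 / (Tb * math.log2(M)) for M in (2, 4, 8)}
print(first_nulls)
```

As M increases, the main lobe narrows in proportion to 1/log2 M while its peak grows in proportion to log2 M, so the same bit rate occupies less bandwidth.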

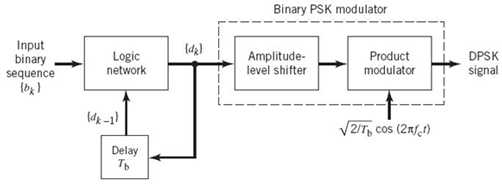

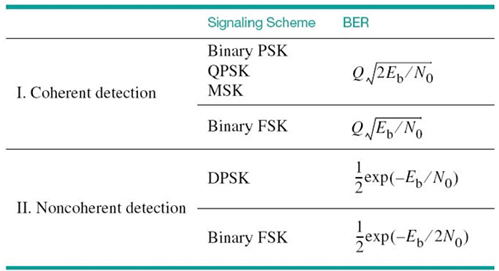

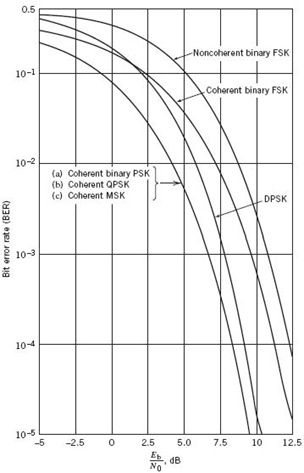

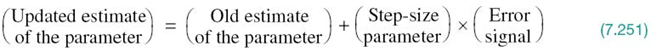

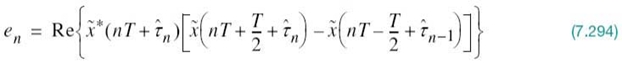

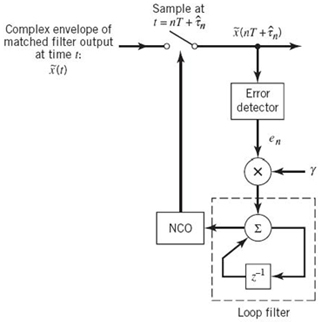

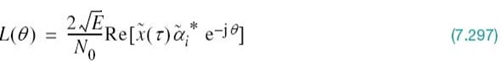

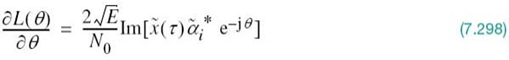

Figure 7.21 (a) Signal-space diagram for octaphase-shift keying (i.e., M = 8). The decision boundaries are shown as dashed lines. (b) Signal-space diagram illustrating the application of the union bound for octaphase-shift keying.