4

Investigation Methodology

So far, the last three chapters have set out the basics of incident response and how digital forensics plays a key role in understanding the nature of an incident. Another key component of incident response is the investigation component. An incident investigation is a methodology and process through which analysts form a hypothesis and test that hypothesis to answer questions regarding digital events. The main data that is fed into the digital investigation process comes from the proper handling and analysis of digital evidence. Figure 4.1 shows the relationship between digital forensics, incident response, and incident investigation.

Figure 4.1 – Relationship between digital forensics, incident investigation and incident response

This chapter will focus on the incident investigation as part of the overall incident response process. Through these methodologies, analysts will have a road map to follow that will allow them to approach an incident investigation according to an organized and systematic method.

This chapter will cover the following topics:

- An intrusion analysis case study: The Cuckoo’s Egg

- Types of incident investigation analysis

- Functional digital forensics methodology

- The cyber kill chain

- A diamond model of intrusion analysis

An intrusion analysis case study: The Cuckoo’s Egg

There have been many high-profile incidents in the last 30 years, so finding one that serves as a good case study for an incident investigation is not difficult. It may be beneficial to go back to the beginning and examine one of the first incident investigations, where someone had to create methods of gathering evidence and tracking adversaries across the globe. One aspect of this analysis to keep in mind is that even without a formal construct, these individuals were able to craft a hypothesis, test it, and analyze the results to come to a conclusion that ultimately helped find the perpetrators.

In August 1986, Cliff Stoll, an astronomer and systems administrator at the Lawrence Berkeley Laboratory (LBL), was handed a mystery by his supervisor. During a routine audit, the staff at LBL discovered a 75-cent accounting error. At that time, computer resources were expensive. Every amount of computing that was used had to be billed to a project or department within the laboratory. This required very detailed logs of user account activity to be maintained and audited. A quick check of the error revealed a user account that had been created without any corresponding billing information.

The mystery deepened when the LBL received a message from the National Computer Security Center. They indicated that a user at LBL was attempting to access systems on the MILNET, the Defense Department’s internal network. Stoll and his colleagues removed the unauthorized account but the still-unidentified intruder remained. Stoll’s initial hypothesis was that a student trickster from the nearby University of California was playing an elaborate prank on both the LBL and the various MILNET entities that were connected. Stoll then began to examine the accounting logs in greater detail to determine who the individual behind the intrusion was. What followed was a ten-month odyssey through which Stoll traced traffic across the United States and into Europe.

After tossing the trickster hypothesis, Stoll’s first step was to create a log of all the activities that he and the other staff at LBL carried out. This log would come in handy at a later stage of the investigation when a clearer picture of the events emerged. At the outset of his investigation, Stoll came upon an aspect of investigating network intrusions that other responders have had to learn the hard way: an intruder may be able to read emails and other communications while they have access to compromised systems. As a result, Stoll and his team resorted to in-person or telephone communications while keeping up a steady stream of fake emails to keep the intruder confident that they had still not been detected.

The first major challenge that Stoll and the team would have to contend with was the lack of any visibility into the LBL network and the intruder’s activity. In 1986, tools such as event logging, packet capture, and Intrusion Detection Systems (IDSs) did not exist. Stoll’s solution to this challenge was to string printers on all lines that were leading to the initial point of entry. These printers would serve as the logging for the unknown intruder’s activity. After examining one of the intruder’s connections, he was able to determine that the intruder was using the X.25 ports. From here, Stoll was able to set up a passive tap that captured the intruder’s keystrokes and output the data to either a floppy disk or a physical printer.

With the ability to monitor the network, Stoll then encountered another challenge: tracing the attacker back to their origin. At that time, remote connections were done over telephone lines and the ability to trace back a connection was dependent on a collection of phone companies. Compounding this challenge was the fact that the attacker would often use the LBL connection as a jumping-off point to other networks. This meant that the connections Stoll was hunting down would only last a few minutes. Stoll set up several alerts on the system that would page him via a belt pager, at which time he could remote into the LBL systems to begin another trace. Despite these steps, Stoll and his colleagues were still unable to maintain a connection long enough to determine the source.

The solution to this challenge was found in what the attacker was attempting to access. During his analysis, Stoll observed that the attacker was not only accessing the LBL network but had used the connection to search other networks belonging to the United States military and their associated defense contractors. The solution that Stoll came up with was based on a suggestion by his then-girlfriend. In order to keep the intruder connected, Stoll created a series of fake documents with titles that appeared to an outsider to be associated with the Strategic Defense Initiative (SDI) program. Anyone that was attempting to gain classified information would immediately recognize the strategic importance of these documents. Additionally, Stoll planted a form letter indicating that hard copy documents were also available by mail. Access to the files was strictly controlled with alerts placed on them to indicate when the attacker attempted to access them. This approach worked. Not only had the yet-to-be-identified intruder spent an hour reading the fictitious files, but they had also sent a request to be added to the mailing list via the post office.

The main hurdle that Stoll continually ran into during these 10 months was tracing the attacker’s connections to the LBL network. First, the intruder kept up a steady pattern of using a wide range of networks to connect through. Second, the intruder varied their connection times, staying on for only a few minutes at a time. Through his initial work, Stoll was able to identify a dial-up connection in Oakland, California. With cooperation from the telephone company, he was able to isolate a connection from a modem that belonged to a defense contractor in McLean, Virginia. With coordination from the data communications company Tymnet, Stoll and the team were able to trace the connection to the LBL network back to Germany.

It was after Stoll placed the fictitious documents on the LBL systems that he was able to complete his tracing. Even so, this tracing took a good deal of coordination between Stoll, Tymnet, various universities, and even German law enforcement. In the end, Stoll’s efforts paid off, as he was able to identify the perpetrators as members of the German Chaos Computer Club. The individuals were tied to additional break-ins associated with various universities and defense contractors in the United States.

It may seem odd to examine a network intrusion from 1986 but there is still a great deal we can learn from Stoll’s work. First, this was arguably the first publicly documented Advanced Persistent Threat (APT) attack. The subsequent investigation by the FBI and other governmental agencies determined that the Chaos Computer Club was attempting to gain financially by selling intelligence to the Komitet Gosudarstvennoy Bezopasnosti (KGB). An interesting aside is that initially, the KGB had very little experience in conducting network intrusions for intelligence purposes. Stoll uncovered a significant vulnerability that counterintelligence personnel should have been aware of: that adversarial intelligence agencies were now using the emerging internet as an intelligence collection method.

Cliff Stoll’s story

Cliff Stoll has written and spoken extensively on his experience tracking the LBL hacker. A copy of his article Stalking the Wily Hacker is included in the supplemental material for this chapter. Stoll also published a full book, The Cuckoo’s Egg. This is well worth reading, even though the events took place over 35 years ago.

A second lesson we can learn from this is that a comprehensive and detailed investigation can lead back to the origin of an attack. In this case, Stoll could have easily downed the connection and removed the attacker from the network. Instead, he set out to comprehensively detail the Tactics, Techniques, and Procedures (TTPs) of the attacker. With some deception, he was further able to tie an individual to a keyboard, a tall order in 1986. Overall, Stoll showed that through detailed investigation of an intrusion, he and others could uncover details of the wider scope of an attack that went far beyond a 75-cent accounting error into global espionage and Cold War politics. These lessons are as salient today as they were in 1986. Keep this in mind as we examine how intrusion analysis serves as a source for our own understanding of attackers today.

Types of incident investigation analysis

Digital investigations are not all the same. How far a Computer Security Incident Response Team (CSIRT) takes an investigation depends on the time allowed, the type of incident, and the overall goal of the investigation. It makes no sense for two or three CSIRT analysts to spend a full day investigating a small-scale malware outbreak. On the other hand, a network intrusion where the adversary has been in the network for three months will require a much more detailed examination of the evidence to determine how the adversary was able to gain access, what information they aggregated and exfiltrated, and what the impact on the organization has been.

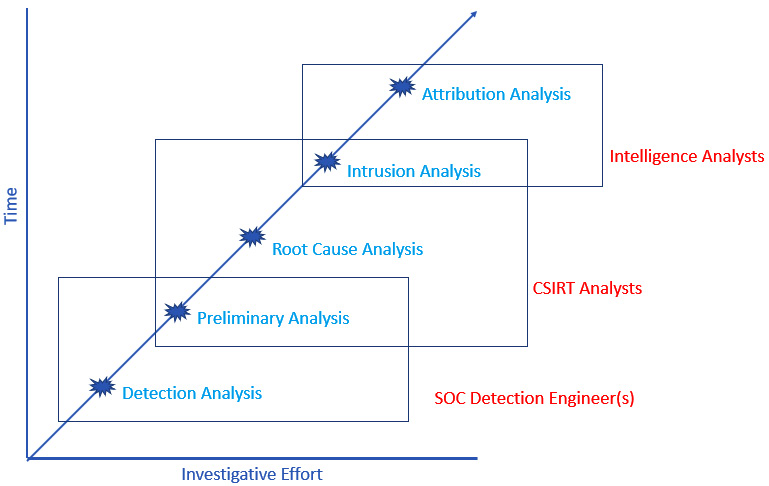

The result is that there are several different types of incident investigations conducted by various individuals within an organization. Figure 4.2 shows the five layers and the personnel involved, along with the corresponding time and the necessary investigative resources:

Figure 4.2 – Types of incident investigation

Let us discuss the five layers in detail:

- Detection analysis: This is the basic analysis that is often conducted at the first signs of a security event’s detection. For example, a security device’s telemetry indicates a network connection to a known Command-and-Control (C2) server. A quick check of the dashboard may indicate a localized event or potentially a wider incident. The detection analysis is often limited to telemetry and a secondary source, such as an external threat intelligence feed. The goal of this analysis is to determine whether the event is an incident that needs to be escalated to the CSIRT or not.

- Preliminary analysis: Security incidents are often ambiguous. CSIRT and SOC personnel need to develop insight into the initial infection, lateral movement, and how the adversary is maintaining control over the compromised system. The preliminary analysis utilizes tools that rapidly acquire selected evidence and analysis to determine the scope of an incident and provide information to the leadership, which can be used to contain an incident and gain time to decide on the next steps in investigation and response.

- Root-cause analysis: This type of investigation is usually executed in conjunction with containment steps. The main goal here is to acquire and analyze evidence to determine how the adversary was able to gain access to the network, what steps they took, and what the potential impact on the organization was. The aim of this type of investigation is to remediate vulnerabilities and improve the overall security of the enterprise to lessen the risk of future intrusions.

- Intrusion analysis: Organizations can glean a good deal of insight into the TTPs of an adversary through a root-cause analysis. An intrusion analysis goes into greater detail to present a comprehensive picture of how an adversary operated during the network intrusion. As Figure 4.2 shows, an intrusion analysis will often take much more time and investigative effort than is necessary to contain, eradicate, and recover from an incident. An intrusion analysis does benefit the organization, however, insofar as it provides comprehensive insight into the adversary’s behavior. This not only explains the adversary’s behavior but also provides valuable intelligence on more advanced adversaries in general.

- Attribution analysis: At the top end in terms of time and investigative effort is attribution. Attribution, simply put, ties an intrusion to a threat actor. This can be a group such as the Conti ransomware group, Fancy Bear, or, in some cases, such as with the Mandiant APT1 report (https://www.fireeye.com/content/dam/fireeye-www/services/pdfs/mandiant-apt1-report.pdf?source=post_page), intrusion activity may be tied to a specific unit of the Chinese military, along with specific individuals. The time and resources necessary for incident attribution are often outside the reach of CSIRTs. Attribution is most often reserved for cyber threat intelligence purposes. With that said, there are organizations that leverage the investigative experience, tools, and techniques of CSIRT members, so there is still a good chance that the team may be engaged in such an analysis.

Each one of these analyses has its place in incident investigations. Which type of analysis is conducted depends on several factors. The first is the overall goal of the organization. For example, a network intrusion may be investigated only to determine the root cause, as the organization does not have the time or resources to go any further. In other incidents, the organization may have legal or compliance requirements that dictate that incidents be fully investigated, no matter how long it takes.

Second, the evidence available will largely dictate how far analysis can go. Without a good deal of evidence sources across the network, the ability to conduct a full intrusion analysis will be limited, or even impossible. Finally, the time available to conduct more intensive analysis is often a factor. The organization may not have the time or personnel to go in as deeply as a full intrusion analysis could, or it may feel that a root-cause analysis that removes the adversary from the network and prevents future occurrences suffices.

Functional digital forensic investigation methodology

There are several different methodologies for conducting analysis. The following digital forensics investigation methodology is based on the best practices outlined in the NIST Special Publication 800-61, which covers incident response, along with Dr. Peter Stephenson’s End-to-End Digital Investigations methodology. These two methodologies were further augmented by the research publication Getting Physical with the Digital Investigation Process by Brian Carrier and Eugene H. Spafford.

The overall approach to this kind of methodology is to apply digital evidence and analysis to either prove or disprove a hypothesis. For example, an analyst may approach the intrusion based on the initial identification with the hypothesis that the adversary was able to gain an initial foothold on the network through a phishing email. What is necessary is for the analyst to gather the necessary information from the infected system, endpoint telemetry, and other sources to trace the introduction of malware through an email.

The following methodology utilizes 10 distinct phases of an incident investigation to ensure that the data acquired is analyzed properly and that the conclusion supports or refutes the hypothesis created.

Figure 4.3 – A ten-step investigation methodology

Identification and scoping

This is the first stage of an incident investigation, which begins once a detection is made and is declared an incident. The most likely scenario is that security telemetry such as an Endpoint Detection and Response (EDR) platform or an IDS indicates that either a behavior, an Indicator of Compromise (IOC), or some combination of the two has been detected within the environment. In other circumstances, the identification of an incident can come from a human source. For example, an individual may indicate that they clicked on a suspicious link or that their system files have been encrypted with ransomware. In other cases, an organization may be informed by law enforcement that its confidential information has been found on an adversary’s infrastructure.

In any of these cases, the initial identification should be augmented with an initial examination of telemetry to identify any other systems that may be part of the incident. This sets the scope or the limits of the investigation. For example, an organization does not need to address Linux systems if it has identified a Windows binary being used to encrypt Windows operating system hosts.

Collecting evidence

Once an incident has been identified and scoped, the next stage is to begin gathering evidence. Evidence that is short-lived should be prioritized, working down the order of volatility that was covered in Chapter 3. Incident response and digital forensics personnel should ensure that they preserve as much evidence as possible, even if they do not think that it will be useful in the early stages of an investigation. For example, if a network administrator knows that the firewall logs roll over every 24 hours, those logs should be captured immediately. If it is determined later that the firewall logs are of no use, they can be easily discarded, but if they would have been useful and were not acquired, the organization may have lost some key data points.

The volatility and overall availability of evidence should be addressed in the incident response plan. Policies and procedures should be in place to address the retention of log files for a defined period. For example, the Payment Card Industry Data Security Standard (PCI DSS) requires that organizations covered by this standard have one year of logs available, with at least 90 days immediately available. Organizations need to balance storage costs with the potential evidentiary value of certain logs.

Several chapters of this book delve into further detail about evidence acquisition. The main point regarding an incident investigation is to have as complete an evidence acquisition as possible. The saying goes “the more, the better.” The last thing that an incident response or digital forensics analyst wants is a critical piece of the incident missing because the organization was not prepared for or able to acquire the evidence in a timely manner.

The initial event analysis

After the evidence has been acquired, the next stage of the investigative process is to organize and begin to examine the individual events. This may be difficult given the volume of data, so this is not necessarily a detailed examination, which comes later, but rather a first analysis to determine which data points are of evidentiary value. For example, in a ransomware case, adversaries often make use of scripts written in Visual Basic Scripting (VBS) or of Base64-encoded PowerShell commands. A review of the Windows PowerShell logs may indicate the presence of an encoded command. A PDF or Word document found on an infected system may be examined for the presence of VBS attacks.
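As an illustration of this kind of first-pass review, the following minimal sketch decodes an encoded PowerShell command found in a logged command line. It assumes the payload follows PowerShell’s convention of Base64 over UTF-16LE text; the function name, the regular expression, and the sample command line are hypothetical and are not taken from any specific tool or case.

```python
import base64
import re
from typing import Optional

# Matches -EncodedCommand and a few common abbreviations, followed by a Base64 blob.
# This pattern is an assumption for illustration; real command lines vary widely.
ENCODED_FLAG = re.compile(
    r"-(?:e|ec|enc|encodedcommand)\s+([A-Za-z0-9+/=]{8,})", re.IGNORECASE
)


def decode_powershell_command(command_line: str) -> Optional[str]:
    """Return the decoded script text, or None if no encoded command is found."""
    match = ENCODED_FLAG.search(command_line)
    if match is None:
        return None
    raw = base64.b64decode(match.group(1))
    # PowerShell expects the Base64 payload to be UTF-16LE encoded text.
    return raw.decode("utf-16-le", errors="replace")


# Build a harmless sample command line to demonstrate the round trip.
sample_script = "Write-Output 'hello'"
sample_blob = base64.b64encode(sample_script.encode("utf-16-le")).decode("ascii")
sample_line = f"powershell.exe -NoProfile -enc {sample_blob}"

decoded = decode_powershell_command(sample_line)
print(decoded)
```

A decoded command that downloads or executes additional content would be a strong candidate for inclusion as a data point of evidentiary value.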

This first stage is looking for obvious IOCs. An IOC can be defined as a data point that indicates that a system or systems is or was under adversarial control. IOCs can be divided into three main categories:

- Atomic indicators: These are data points that are indicators in and of themselves that cannot be further broken down into smaller parts, for example, an IP address or domain name that ties back to an adversary’s C2 infrastructure.

- Computational indicators: These are data points that are processed through some computational means, for example, the SHA256 file hash of a suspected malware binary.

- Behavioral indicators: These are a combination of both atomic and computational indicators that form a profile of adversary activity. Behavioral indicators are often expressed as statements that tie the various IOCs together, for example: “The adversary used a web shell with the following SHA256 value: 7e1861e4bec1b8be6ae5633f87204a8bdbb8f4709b17b5fa14b63abec6c72132. An analysis of the web shell indicated that, once executed, it would call out to the baddomain.ru domain, which has been identified as the adversary’s C2 infrastructure.”
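The three categories above can be sketched as a small data structure. This is only one possible way to record IOCs; the class names, fields, and sample bytes are hypothetical, and the only real library call is Python’s standard `hashlib.sha256`.

```python
import hashlib
from dataclasses import dataclass
from enum import Enum


class IndicatorKind(Enum):
    ATOMIC = "atomic"                # cannot be broken down further (IP, domain)
    COMPUTATIONAL = "computational"  # derived by computation (file hash)
    BEHAVIORAL = "behavioral"        # statement combining other indicators


@dataclass(frozen=True)
class Indicator:
    kind: IndicatorKind
    value: str
    note: str = ""


def sha256_of(data: bytes) -> str:
    """Compute the SHA256 hex digest of a suspect file's contents."""
    return hashlib.sha256(data).hexdigest()


# Placeholder bytes standing in for a suspected web shell (hypothetical sample).
suspect_bytes = b"<?php /* placeholder for a suspected web shell */ ?>"

domain = Indicator(IndicatorKind.ATOMIC, "baddomain.ru", "suspected C2 domain")
file_hash = Indicator(
    IndicatorKind.COMPUTATIONAL, sha256_of(suspect_bytes), "SHA256 of suspect file"
)
behavior = Indicator(
    IndicatorKind.BEHAVIORAL,
    f"Web shell {file_hash.value} calls out to {domain.value}",
    "combines one computational and one atomic indicator",
)

for indicator in (domain, file_hash, behavior):
    print(indicator.kind.value, "->", indicator.value)
```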

During this stage of the investigation, there is an increased potential for false positive IOCs in the same data that true IOCs are in. This is expected at this stage. The key at this stage of the investigation is to determine what looks suspicious and include it in the investigation. There will be plenty of opportunities to remove false positives. A good rule to follow is if you have any doubt about an IOC, include it until such a time that you can positively prove it is either malicious or benign.

The preliminary correlation

At this point in the investigation, the analysts should start to detect some patterns, or at least see relationships in the IOCs. In the preliminary correlation phase, analysts start to marry up indicators that correlate. As Dr. Peter Stephenson states, correlation can be defined as:

The comparison of evidentiary information from a variety of sources with the objective of discovering information that stands alone, in concert with other information, or corroborates or is corroborated by other evidentiary information.

Simply put, the preliminary correlation phase takes the individual events and correlates them into a chain of events. For example, we can take the case of a web shell that has been loaded to a web server. In this case, there are several specific evidence points that are created. A web application firewall may see the HTTP POST in the logs. The POST would also create a date and time stamp on the web server. An analysis of the web shell may indicate that another external resource is under the control of the adversary. From these data points, the analyst would be able to determine the adversary’s IP address from the Internet Information Services (IIS) logs, when the web shell was posted, and whether there was any additional adversary infrastructure contained within it.

A good way to look at the preliminary correlation phase is as the first time that the analyst says “this happened, then this, and then this”.
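The web shell example can be sketched in code as grouping events from different sources by a shared data point and ordering them in time. The event records, field names, and IP addresses below are hypothetical (the addresses come from documentation ranges), and real logs would first need to be parsed into this shape.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical, already-parsed events from three evidence sources.
events = [
    {"source": "waf", "time": "2022-04-10T16:34:05+00:00",
     "src_ip": "203.0.113.7", "detail": "HTTP POST /uploads/shell.aspx"},
    {"source": "iis", "time": "2022-04-10T16:34:06+00:00",
     "src_ip": "203.0.113.7", "detail": "POST /uploads/shell.aspx 200"},
    {"source": "iis", "time": "2022-04-10T16:20:00+00:00",
     "src_ip": "198.51.100.20", "detail": "GET /index.html 200"},
    {"source": "filesystem", "time": "2022-04-10T16:34:06+00:00",
     "src_ip": None, "detail": "shell.aspx created"},
]


def correlate_by(records, key):
    """Group records sharing a value for `key`; keep groups seen by 2+ sources."""
    groups = defaultdict(list)
    for record in records:
        value = record.get(key)
        if value is not None:
            groups[value].append(record)
    chains = {}
    for value, members in groups.items():
        if len({m["source"] for m in members}) > 1:
            chains[value] = sorted(
                members, key=lambda m: datetime.fromisoformat(m["time"])
            )
    return chains


chains = correlate_by(events, "src_ip")
for ip, chain in chains.items():
    print(ip, [f'{e["source"]}: {e["detail"]}' for e in chain])
```

Correlating on a single key is a deliberate simplification; in practice, the analyst would repeat this over other shared data points such as file names, hashes, or user accounts.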

Event normalization

Adversary actions on a system may have multiple sources of data. For example, an adversary uses the Remote Desktop Protocol (RDP) and compromised credentials to gain access to the domain controller. There will be entries within the Windows event log related to the RDP connection and the use of the credentials. If the adversary traversed a firewall, there would also be a record of the connection in the firewall connection logs. Again, according to Dr. Stephenson, the event normalization phase is defined as the combination of evidentiary data of the same type from different sources with different vocabularies into a single, integrated terminology that can be used effectively in the correlation process.

In this stage, the duplicate entries are combined into a single syntax. In the previous case, the various entries in question can then be combined into a statement regarding the adversary gaining access to the system via the Windows RDP.

One challenge that has been an issue in the past with event normalization is the formulation of a global syntax of adversary behaviors, such as the one in the previous example. To address this, the MITRE Corporation has created the Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) framework. This knowledge base addresses the tactics and techniques used by adversaries to carry out network intrusions. This knowledge base provides a standard syntax to describe adversary actions and normalize various pieces of evidence. The ATT&CK knowledge base has become such a mainstay in describing adversaries that you will find it included in Chapter 17.
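To make the RDP example concrete, the following sketch normalizes a Windows logon record and a firewall record into one vocabulary keyed on an ATT&CK technique ID. The record layouts, field names, and asset inventory are hypothetical. The sketch relies on two facts: Windows Security Event ID 4624 with logon type 10 denotes a RemoteInteractive logon, and T1021.001 is the ATT&CK identifier for Remote Services: Remote Desktop Protocol.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical asset inventory used to translate IP addresses to host names.
ASSETS = {"10.0.0.5": "DC01"}

RDP_TECHNIQUE = "T1021.001"  # ATT&CK: Remote Services: Remote Desktop Protocol


@dataclass(frozen=True)
class NormalizedEvent:
    time_utc: str
    src_ip: str
    dst_host: str
    technique_id: str
    evidence_source: str


def from_windows_logon(record: dict) -> Optional[NormalizedEvent]:
    # Event ID 4624 with logon type 10 is a RemoteInteractive (RDP) logon.
    if record["EventID"] == 4624 and record["LogonType"] == 10:
        return NormalizedEvent(record["TimeCreated"], record["IpAddress"],
                               record["Computer"], RDP_TECHNIQUE,
                               "windows_security_log")
    return None


def from_firewall(record: dict) -> Optional[NormalizedEvent]:
    # TCP 3389 is the default RDP port; a non-default port would be missed here.
    if record["dst_port"] == 3389 and record["action"] == "allow":
        host = ASSETS.get(record["dst"], record["dst"])
        return NormalizedEvent(record["ts"], record["src"], host,
                               RDP_TECHNIQUE, "firewall")
    return None


windows_record = {"EventID": 4624, "LogonType": 10,
                  "TimeCreated": "2022-04-10T16:55:02Z",
                  "IpAddress": "10.0.0.44", "Computer": "DC01"}
firewall_record = {"ts": "2022-04-10T16:55:01Z", "src": "10.0.0.44",
                   "dst": "10.0.0.5", "dst_port": 3389, "action": "allow"}

normalized = [e for e in (from_windows_logon(windows_record),
                          from_firewall(firewall_record)) if e is not None]

# Both sources collapse into a single statement about adversary activity.
statements = {(e.src_ip, e.dst_host, e.technique_id) for e in normalized}
print(statements)
```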

Event deconfliction

There are also times when there are multiple events related to an adversary’s activity. For example, brute forcing passwords will produce a significant number of failed login event entries. A brute-force attempt that records 10,000 failures should be counted as a single event. Instead of listing all of them, the analyst can simply record the failures during a defined time, such as between 1634 and 1654 UTC on April 10, 2022. In this way, the overall intent and the adversary action are known without having to include all the raw data.
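A minimal sketch of this deconfliction step follows. It collapses a list of failed-login timestamps into one summary record with a count and a time window. The account name, source address, and generated timestamps are hypothetical stand-ins for parsed log entries.

```python
from datetime import datetime, timedelta, timezone


def summarize_failures(timestamps, account, src_ip):
    """Collapse many failed-login entries into a single deconflicted event."""
    if not timestamps:
        raise ValueError("no failed-login entries to summarize")
    ordered = sorted(timestamps)
    return {
        "event": "brute-force attempt",
        "account": account,
        "src_ip": src_ip,
        "count": len(ordered),
        "first_seen": ordered[0].isoformat(),
        "last_seen": ordered[-1].isoformat(),
    }


# Simulate 10,000 failures spread across the 1634-1654 UTC window.
start = datetime(2022, 4, 10, 16, 34, tzinfo=timezone.utc)
failures = [start + timedelta(seconds=i * 0.12) for i in range(10_000)]

summary = summarize_failures(failures, account="administrator",
                             src_ip="203.0.113.7")
print(summary)
```

The raw entries remain in the preserved evidence; only the working set used for correlation is reduced to the single summary record.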

The second correlation

Now that the data has gone through an initial correlation and subsequent normalization and deconfliction processes, the analysts have a set of data that is then fed through a second correlation. This second correlation should produce a much more refined set of data points that can then be fed into the next phase.

The timeline

One key output of an incident investigation is a timeline of events. Now that the analysts have the incident events normalized, deconflicted, and correlated, they should place the events in order. There is no specialized tool that analysts need; a simple spreadsheet or diagram can be used to lay out the sequence of events that led to the network intrusion.
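Since a spreadsheet is sufficient, one simple approach is to sort the processed events by time and write them out as CSV. The following sketch assumes each event is a dictionary with `time_utc`, `source`, and `description` keys; those field names and the sample events are hypothetical.

```python
import csv
import io
from datetime import datetime

FIELDS = ["time_utc", "source", "description"]

# Hypothetical events that have already been normalized and deconflicted.
events = [
    {"time_utc": "2022-04-10T16:55:02+00:00", "source": "windows_security_log",
     "description": "RDP logon to DC01 from 10.0.0.44"},
    {"time_utc": "2022-04-10T16:34:05+00:00", "source": "waf",
     "description": "Web shell uploaded via HTTP POST"},
    {"time_utc": "2022-04-10T16:34:00+00:00", "source": "auth_log",
     "description": "Brute-force attempt begins (10,000 failures)"},
]


def build_timeline(records):
    """Return the records ordered by their UTC timestamp."""
    return sorted(records, key=lambda r: datetime.fromisoformat(r["time_utc"]))


def to_csv(timeline):
    """Render the timeline as CSV text that can be opened in a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(timeline)
    return buffer.getvalue()


timeline = build_timeline(events)
csv_text = to_csv(timeline)
print(csv_text)
```

Keeping every timestamp in UTC before sorting avoids ordering errors when evidence sources record local time in different time zones.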

Kill chain analysis

At this stage, the analyst should have the necessary indicators extracted from the evidence, normalized and deconflicted, and in time sequence. The next phase is to place the IOCs and other evidence into a construct that guides the analyst through an understanding of the relationship of the events to the overall intrusion, along with the interaction between the adversary and the victim organization. A construct in common usage is the combination of the Lockheed Martin Cyber Kill Chain and the diamond model of intrusion analysis. Due to their importance, these two methods are covered in the next two sections.

Reporting

One critical piece that is often overlooked in incident investigations is reporting. Depending on the type of analysis, reports can be detailed and lengthy. It is therefore critical to keep detailed notes of an incident analyst’s actions and observations throughout the entire process. It is nearly impossible to reconstruct the entire analysis at the very end, several days after the fact.

Incident reporting is often divided into three sections and each one of these addresses the concerns and questions of a specific audience. The first section is often the executive summary. This one-to-two-page summary provides a high-level overview of the incident and analysis and details what the impact was. This allows senior leadership to make decisions for further improvement, along with reporting findings to the board or regulatory oversight bodies.

The second section of the report is the technical details. In this section, the incident response analysts will cover the findings of the investigation, the timeline of the events, and the IOCs. Another focus of the section will be on the TTPs of the adversary. This is critical information for both leadership and technical personnel, as it details the sequence of events the adversary took, along with the vulnerabilities that they were able to exploit. This information is useful for long-term remediation to reduce the likelihood of a similar attack in the future.

The final section of the report is the recommendations. As the technical section details, there are often vulnerabilities and other conditions that can be remediated, which would reduce the likelihood of a similar attack in the future. Detailed strategic and tactical recommendations assist the organization in prioritizing changes to the environment to strengthen its security.

Reporting will be covered in depth later, in Chapter 13.

The cyber kill chain

The timeline that was created as part of the incident investigation provides a view into the sequence of events that the adversary took. This view is useful but does not have the benefit of context for the events. Going back to the RDP example, the analyst can point to the date and time of the connection but lacks insight into at which stage of the attack the event took place. One construct that provides context is placing the events into a kill chain that describes the sequence of events the adversary took to achieve their goal.

The military has used the concept of kill chains to a great extent to describe the process that units must execute to achieve an objective. One version of this concept was outlined in the United States military’s targeting doctrine of Find, Fix, Track, Target, Engage, Assess (F2T2EA). This process is described as a chain because it allows a defender to disrupt the process at any one step. For example, an adversary that you can find and fix but that can slip tracking through subterfuge would go unengaged due to the chain being broken.

In the white paper Intelligence-Driven Computer Network Defense Informed by Analysis of Adversary Campaigns and Intrusion Kill Chains, Eric Hutchins, Michael Cloppert, and Rohan Amin outlined a kill chain that specifically addressed cyber intrusions. In this case, the group expanded on the existing F2T2EA model and created one that specifically addresses cyber attacks. At the heart of almost all network intrusions is a threat actor that must craft some form of payload, whether that is malware or another exploit, and have that payload or exploit breach perimeter defenses. Once inside, the adversary needs to establish persistent access with effective command and control. Finally, there is always some objective that must be satisfied, such as data theft or destruction. This chain can be seen in Figure 4.4, which outlines the seven stages of the cyber intrusion kill chain: Reconnaissance, Weaponization, Delivery, Exploitation, Installation, Command and Control, and Actions on Objectives.

Figure 4.4 – The cyber kill chain
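The seven stages can be represented as an ordered enumeration so that timeline events can be tagged with the stage they belong to. The sketch below is illustrative only: the sample events and their stage assignments are hypothetical, and assigning a stage to an event remains an analyst’s judgment.

```python
from enum import IntEnum


class KillChainStage(IntEnum):
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


# Hypothetical timeline entries: (time, description, analyst-assigned stage).
timeline = [
    ("2022-04-10T16:10:00+00:00", "Phishing email with Word attachment received",
     KillChainStage.DELIVERY),
    ("2022-04-10T16:12:30+00:00", "Macro executes encoded PowerShell",
     KillChainStage.EXPLOITATION),
    ("2022-04-10T16:13:05+00:00", "Scheduled task created for persistence",
     KillChainStage.INSTALLATION),
    ("2022-04-10T16:14:00+00:00", "Beacon to baddomain.ru",
     KillChainStage.COMMAND_AND_CONTROL),
]


def stages_without_evidence(events):
    """List kill chain stages for which no event has been recorded."""
    observed = {stage for _, _, stage in events}
    return [stage for stage in KillChainStage if stage not in observed]


gaps = stages_without_evidence(timeline)
print([stage.name for stage in gaps])
```

Listing the stages with no supporting evidence gives the analyst a quick view of where the investigation still has gaps.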

The first stage of the cyber kill chain is the Reconnaissance phase. On the surface, this stage may appear to simply be the identification of and initial information gathering about a target. The Reconnaissance phase involves a good deal more. It is best to think of this phase as the preparatory phase of the entire intrusion or campaign. For example, a nation-state APT group does not choose its targets. Rather, targets are selected for them by a command authority, such as a nation-state’s intelligence service. This focuses their reconnaissance against their target. Other groups such as those involved in ransomware attacks will often perform an initial round of reconnaissance against internet-connected organizations to see whether they are able to find a vulnerable target. Once a vulnerable target is identified, they will then conduct a more focused reconnaissance.

This stage also involves the acquisition of tools and infrastructure needed to carry out the intrusion. This can include the coding of exploits, registering domains, and configuring command-and-control infrastructure. To target a specific organization, the group may also compromise a third party as part of the overall intrusion. A perfect example of this was the SolarWinds compromise, where it is suspected that an APT group associated with the Russian SVR compromised the software manufacturer to carry out attacks on its customers.

Once the backend infrastructure is configured, the group will then conduct their reconnaissance of the target. This reconnaissance can be broken into two major categories. The first is a technical focus where the threat actor will leverage software tools to footprint the target’s infrastructure, including IP address spaces, domains, and software visible to the internet. This technical focus can also go even deeper, where threat actors research vulnerabilities in software that they have identified during their reconnaissance.

A second focus will often be the organization and its employees. One common way that threat actors will gain access to the internal network is through phishing attacks. Understanding the target’s primary business or function along with the key players can help them craft emails or other phishing schemes that appear legitimate. For example, identifying a key person in the accounts receivable department of the target organization may help the threat actor craft an email that appears legitimate to a target employee. This can be enhanced further with an understanding of specific products or services an organization offers through an examination of business documents that are made public or descriptions on the target’s website.

It can be very difficult to determine the specifics behind reconnaissance activity when conducting an intrusion investigation. First, much of the reconnaissance will not touch the target’s network or infrastructure. For example, domain records can be accessed by anyone. Searches of social media profiles on sites such as LinkedIn are not visible to the defenders or the analysts. Second, it is next to impossible to identify a threat actor’s IP address from the thousands that may have connected to the target’s website in the past 24 hours. Finally, the tasking and any other preparation take place outside the view of any network defender and may only be visible during a post-incident intrusion analysis.

The next stage of the kill chain is the Weaponization phase. During this phase, the adversary configures their malware or another exploit. For example, this may be repurposing a banking trojan such as Dridex, as is often seen in ransomware attacks. In other instances, Weaponization may be a long process in which custom malware is crafted for a specific purpose, as was seen with the Stuxnet malware. Weaponization also includes the packaging of the malware into a container such as a PDF or Microsoft Word document. For example, a malicious script that serves as the first stage of an intrusion may be packaged into a Word document.

Much like the first phase, Weaponization takes place outside the view of the defender. The important aspect of analyzing the Weaponization stage of an intrusion is that the analyst will often gain insight into an activity that took place days, weeks, or even months ago when the adversary was crafting their exploit or malware.

The third phase of the kill chain is the Delivery of the exploit or malware into the defender’s environment. Delivery methods vary from the tried-and-true phishing emails to drive-by downloads and even the use of physical devices such as USB drives. For analysts, understanding how the payload was delivered is an important part of determining the root cause of an incident. Successful delivery of a payload may be indicative of a failure of some security controls to detect and prevent the action.

Nearly all successful intrusions involve the exploitation of vulnerabilities. This can be a vulnerability in the human element that makes phishing attacks successful. In other circumstances, this can be a software vulnerability, including the feared zero-day. In either case, the fourth phase of the kill chain is Exploitation. At this stage, the adversary exploits a vulnerability in the software, the human, or a combination of both. For example, an adversary can identify a vulnerability within the Microsoft IIS application that they are able to exploit with shellcode. In some intrusions, multiple exploits against vulnerabilities are used in a loop until the adversary can fully exploit the system or systems. For example, an attacker crafts a phishing email directed at an employee in accounts payable. This email contains an Excel workbook that contains a Visual Basic for Applications (VBA) script. The first exploitation occurs when the individual opens the email and then the Excel spreadsheet. The next exploitation leverages the inherent vulnerability of Excel to execute the VBA script.

So far in the analysis of the kill chain, the adversary has conducted a reconnaissance of the target, crafted their exploit or malware, and then delivered them to the target. Leveraging any number of vulnerabilities, they were able to gain an initial foothold into the target network. The next stage is to maintain some sort of persistence. This is where the next stage of the kill chain, Installation, comes into play.

The initial infection of a system does not give the adversary the necessary long-term persistence that is needed for an extended intrusion. Even attacks such as ransomware require an adversary to have long-term access to the network for network discovery and to move laterally throughout. In the Installation phase, the adversary installs files on the system, makes changes to the registry to survive a reboot, or sets up more persistent mechanisms, such as a backdoor. One aspect to keep in mind when looking at the Installation stage is that not all actions will leave traces on the disk. There are tools that are leveraged by adversaries that limit the evidence left by running entirely in memory or remaining hidden by not communicating with any external resources.
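As a small illustration of the registry changes mentioned above, the following Python sketch flags registry key paths that end in one of two well-known Windows autorun locations. The list is deliberately short and far from exhaustive, and the `is_autorun_key` helper and the sample paths are assumptions made for this example.

```python
# Two well-known Windows autorun registry locations (lowercased).
# Illustrative only; real investigations cover many more locations.
AUTORUN_KEY_SUFFIXES = (
    r"\software\microsoft\windows\currentversion\run",
    r"\software\microsoft\windows\currentversion\runonce",
)


def is_autorun_key(key_path):
    """Return True if a registry key path ends with a known autorun location."""
    normalized = key_path.lower().rstrip("\\")
    return normalized.endswith(AUTORUN_KEY_SUFFIXES)


# Hypothetical key paths taken from a registry modification timeline.
modified_keys = [
    r"HKCU\Software\Microsoft\Windows\CurrentVersion\Run",
    r"HKLM\SYSTEM\CurrentControlSet\Control",
]
for key in modified_keys:
    print(key, is_autorun_key(key))
```

A check like this only addresses persistence that touches the registry; as noted above, tools that run entirely in memory will not leave these traces.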

Once the adversary can establish their persistence, they need to be able to interact with the compromised systems. This is where phase six, Command and Control, comes into play. In this stage, the adversary establishes and maintains network connectivity with the compromised systems. For example, post-exploitation frameworks such as Metasploit and Cobalt Strike allow an adversary to communicate and execute commands on impacted systems.

The final stage, Actions on Objectives, is where the adversary executes actions after they have effective control of the system. These actions can vary from sniffing network data for credit cards to the theft of intellectual property. In some instances, there may be multiple actions that take place. A sophisticated cybercriminal may gain access to a network and exfiltrate data over a day. Then, as they are completing their intrusion, they encrypt the systems with ransomware. The important consideration at the last stage is to include actions that take place after command and control has been established.

The diamond model of intrusion analysis

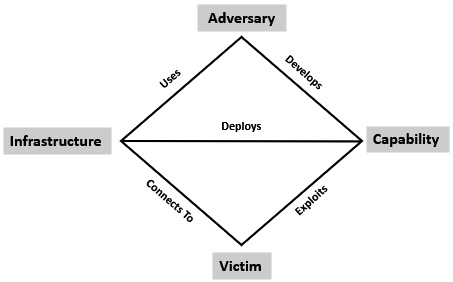

The cyber kill chain provides a construct to place adversarial action in the proper stages of an intrusion. Going deeper requires examining the relationship between the adversary and the victim organization. The diamond model of intrusion analysis provides an approach that considers much more detail than the cyber kill chain’s phases. The diamond model was first created by Sergio Caltagirone, Andrew Pendergast, and Christopher Betz in the white paper The Diamond Model of Intrusion Analysis. A simple way to understand the diamond model is this: an adversary deploys a capability over some infrastructure against a victim. These activities are called events and are the atomic features. What this model does is uncover the relationship between the adversary and the victim and attempt to determine the tools and techniques used to accomplish the adversary’s goal.

Figure 4.5 visualizes the basic structure of the diamond model with the following four vertices: Adversary, Capability, Victim, and Infrastructure. In addition to the four vertices, there are also five relationships: Uses, Develops, Exploits, Connects To, and Deploys. Coupled together, these provide the foundation for describing the relationship of the four vertices.

Figure 4.5 – The diamond model
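One way to make the four vertices concrete is to treat each event as a small record with one field per vertex. The following Python sketch is an assumption about how an analyst might store this data, not a structure defined by the diamond model's authors; the `DiamondEvent` class and its method are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class DiamondEvent:
    """One event: an adversary deploys a capability over infrastructure against a victim."""
    adversary: list = field(default_factory=list)
    capability: list = field(default_factory=list)
    infrastructure: list = field(default_factory=list)
    victim: list = field(default_factory=list)

    def populated_vertices(self):
        """Return the names of the vertices that hold at least one evidence item."""
        return [name for name, items in vars(self).items() if items]


# Hypothetical event in which only an IP address from firewall logs is known.
event = DiamondEvent(infrastructure=["203.0.113.10"])
print(event.populated_vertices())
```

Starting from a single populated vertex, the analyst's task is to pivot along the relationships to fill in the others.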

The Adversary vertex describes any information or data concerning the perpetrators of the intrusion activity. This can be either a group or an individual, which can be further broken down into whether the adversary is an operator or customer. An operator is the individual or group that actually carries out the activity, while a customer is the individual or group that will benefit from it. Sophisticated cyber threat actors will often leverage task division in which one group conducts a specific task. For example, a malware coder working as part of a ransomware gang would fall into the operator category. The party that makes use of the output of this activity can be thought of as the customer. In other circumstances, an operator can be a lone individual who is conducting their own activities independent of anyone else that will directly benefit.

Data about adversaries can include online personas, such as social media identifiers or email addresses. In other circumstances, intent or motivation can be brought into the Adversary vertex. It should be noted that while the intent may be very simple, such as a financially motivated ransomware attack, in other circumstances, determining the motivation from a single intrusion may be difficult.

The next vertex, Capability, describes what tools and tradecraft the adversary can leverage. The challenge with regard to Capability is that there is a wide spectrum in terms of tools and tradecraft. For example, a novice adversary may use scripts and well-known hacking tools such as Metasploit or Cobalt Strike to carry out an attack that takes a low degree of skill or experience. On the other side of the spectrum are the APT groups that can craft custom malware that exploits zero-day vulnerabilities and that can remain undetected for months or even years.

When discussing Capability, it is important to consider two facets. First, when discussing tools, keep in mind what falls into the tool category. Malware is easily categorized as a tool but what about legitimate tools? For example, PowerShell alone does not represent a capability but using PowerShell to execute an encoded script that downloads a secondary payload would. The adversary’s goal or intent has a direct impact on what can be considered a capability. If the tool’s usage furthers this intent, it should be identified as a capability.
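To show what examining an encoded PowerShell script can look like in practice, the following Python sketch decodes a Base64 string of the kind passed to PowerShell's `-EncodedCommand` parameter, which expects Base64 over UTF-16LE text. The sample command is a harmless string built for this example, and the helper name is an assumption.

```python
import base64


def decode_powershell_command(encoded):
    """Decode a Base64 string as UTF-16LE text, the encoding PowerShell expects."""
    return base64.b64decode(encoded).decode("utf-16-le")


# Build a harmless sample so that the round trip can be checked.
sample = base64.b64encode("Write-Output 'hello'".encode("utf-16-le")).decode("ascii")
print(decode_powershell_command(sample))
```

Decoding the argument is often what turns a generic PowerShell execution into a data point for the Capability vertex, because it reveals what the script was actually intended to do.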

Second, despite their sophistication, adversaries will often leverage a few patterns. For example, an adversary may use a combination of phishing emails and malicious scripts to establish a foothold on a system. Once a C2 link has been established, you may see commands such as whoami.exe in a specific order. It is important to make note of such activity, even though it may seem mundane.
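A pattern such as commands appearing in a specific order can be checked with a simple subsequence test. The following Python sketch is a minimal illustration; apart from whoami.exe, the command names and the `occurs_in_order` helper are hypothetical and chosen only for this example.

```python
def occurs_in_order(observed, pattern):
    """Return True if every item in pattern appears in observed in the same order.

    Other commands may appear in between (a subsequence match).
    """
    remaining = iter(observed)
    # Each membership test consumes the iterator up to the match, so later
    # steps can only match commands that came after earlier ones.
    return all(step in remaining for step in pattern)


# Hypothetical process executions recorded after a C2 link was established.
observed = ["whoami.exe", "hostname.exe", "ipconfig.exe", "net.exe"]
pattern = ["whoami.exe", "ipconfig.exe", "net.exe"]
print(occurs_in_order(observed, pattern))
```

Recording such patterns alongside the tools themselves gives later analyses something to compare against, even when the individual commands look mundane.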

The next vertex is Infrastructure. One aspect of threat actors that often gets lost in the sensationalism and mysticism surrounding the dreaded hacker or APT is this: threat actors are bound by the same constraints of software, hardware, and technology that everyone else is. They do not exist outside the four corners of technology and thus must operate as we all do. This is where Infrastructure comes into play. In this context, Infrastructure refers to a physical or logical mechanism that the adversary uses to deploy their tools or tradecraft. For example, an adversary may leverage public cloud computing resources, such as Amazon Web Services (AWS) or DigitalOcean. From here, they can configure a Cobalt Strike C2 server. From an analysis perspective, the IP address or domain registration for this server would serve as a data point under the Infrastructure vertex.

In Figure 4.6, we have a visualization of the relationship between the four vertices. The Adversary develops some capability, indicated by 1, and deploys that capability over an Infrastructure, indicated by 2, and finally connects to the victim, indicated by 3. When discussing the Victim vertex, it can be broken down into either an individual or an organization. Phishing attacks may target a single individual or may be directed at the organization at large. Adversary capabilities and infrastructure can also be directed at humans or systems. The diamond model delineates the human victim as an Entity and the system component as an Asset.

Figure 4.6 – The diamond model relationship

Let’s look at how the Adversary/Victim relationship can be expressed in the diamond model in the context of a real-world attack technique. In this case, we will look at the Drive-By Compromise malware delivery technique (T1189 in the MITRE ATT&CK framework, found at https://attack.mitre.org/techniques/T1189/). In this example, the adversary has set up a website that appears legitimate. When the site is accessed, the adversary then delivers malicious JavaScript that attempts to exploit a vulnerability in common internet browsers.

Figure 4.7 shows the potential data points that can be extracted during an analysis of the attack. Here, we will look at the Delivery phase of the cyber kill chain, which denotes how the adversary will deliver their exploit. The adversary leveraged internal capabilities (1) to configure a website with the functionality to deliver malicious JavaScript. Some adversaries have been known to use the JavaScript-based profiler RICECURRY to determine what vulnerabilities web browsers have and exploit them based on this data. After crafting the specific malware delivery mechanism, the infrastructure is configured to host the malicious site. In this example, the badsite.com domain (2) and the corresponding hosting provider can be included. Once a victim navigates to the site via a browser, they become infected. The victim can be represented as a system name (3), here Lt0769.acme.local. Depending on the analysis team’s ability, they may be able to trace the attack to the specific domain that was used in the watering hole attack. A review of the WHOIS registration information may provide details such as the email address (4) that the adversary used to register the site.

Figure 4.7 – An example of the diamond model
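The data points from this example can be written down as a simple record, which also makes the gaps obvious. The following Python sketch is one hypothetical way to do so; the dictionary layout and the `empty_vertices` helper are assumptions, and the Adversary vertex is left empty because the example does not state the registrant's email address.

```python
VERTICES = ("adversary", "capability", "infrastructure", "victim")

# Data points from the drive-by compromise example, keyed by vertex.
delivery_event = {
    "phase": "Delivery",
    "capability": ["website delivering malicious JavaScript"],  # data point 1
    "infrastructure": ["badsite.com"],                          # data point 2
    "victim": ["Lt0769.acme.local"],                            # data point 3
    "adversary": [],  # data point 4: WHOIS registrant email, not yet recovered
}


def empty_vertices(event):
    """Return the vertices that have no evidence recorded yet."""
    return [vertex for vertex in VERTICES if not event[vertex]]


print(empty_vertices(delivery_event))
```

Listing the empty vertices gives the analyst a short list of what to look for next, such as the WHOIS record for the domain.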

The diamond model serves as a good construct to show the relationship between the adversary, their capabilities and infrastructure, and the victim. What is required is the data from which to draw these relationships. The ability to leverage this construct is dependent on the ability to locate these data points and augment them with external data to map these relationships out. Regardless of whether the victim has this data, the following axioms apply directly to every intrusion.

The diamond model’s utility is derived from how it defines the relationship with each vertex. This provides a context to the overall event, as opposed to just seeing an IP address in the firewall logs. Digging deeper into that data point has the potential to uncover the infrastructure the adversary uses, its tools, and its capability. In addition to the construct, the authors of the diamond model have also defined several axioms to keep in mind.

Diamond model axioms

Axiom 1: For every intrusion event there exists an adversary taking a step towards an intended goal by using a capability over infrastructure against a victim to produce a result. This is the heart of the diamond model. The previous discussion of how indicators of compromise relate to each other in the model is represented by this axiom. The key here is that the adversary has a goal, whether that is to access confidential data or to deploy ransomware into the environment.

Axiom 2: There exists a set of adversaries (insiders, outsiders, individuals, groups, and organizations) that seek to compromise computer systems or networks to further their intent and satisfy their needs. The second axiom builds upon the key point found in the first axiom, which is that the adversary has a goal. The second axiom also relates to the previous discussion about the various levels of digital investigation. A root-cause analysis attempts to answer the how of an intrusion. An intrusion analysis attempts to answer the question of why.

Axiom 3: Every system, and by extension every victim asset, has vulnerabilities and exposures. It is often repeated that the only secure system is one that is turned off. Every system has vulnerabilities and exposures that an adversary can exploit. These vulnerabilities can manifest themselves as features within the operating system that an adversary exploits, such as the ability to dump the LSASS.exe process from memory and access credentials. Of course, there is also the dreaded zero-day vulnerability, which is exploited before it is even identified.

Axiom 4: Every malicious activity contains two or more phases that must be successfully executed in succession to achieve the desired result. An adversary may attempt to deploy the first-stage malware against an infrastructure but if the system’s antimalware protection blocks the execution of the malware, the model is incomplete and therefore no compromise has taken place.

Axiom 5: Every intrusion event requires one or more external resources to be satisfied prior to success. Keep in mind that the adversary is bound to the same rules and protocols as their target organization. There is no magical set of adversary tradecraft and tools that they use. In an intrusion, the adversary has to configure a C2 infrastructure, aggregate their tools, register domains, and host malware delivery platforms. These are all data points that should be incorporated into any intrusion analysis to gain as complete a picture of the adversary as possible.

Axiom 6: A relationship always exists between the Adversary and their Victim(s) even if distant, fleeting, or indirect. A concept that is often used in the investigation of criminal activity is victimology or the study of the victim. Specifically, investigators look at the aspects of the victim, their personality, habits, and lifestyle to determine why they were selected for victimization. The same thought process can be applied to intrusion analysis. Whether or not the adversary compromised a web server to mine bitcoin or conducted a months-long intrusion to gain access to confidential data, the adversary has a goal. This behavior creates a relationship.

Axiom 7: There exists a sub-set of the set of adversaries that have the motivation, resources, and capabilities to sustain malicious effects for a significant length of time against one or more victims while resisting mitigation efforts. The previous axiom set up the adversary-victim relationship. Some of these are short in duration, as with a ransomware case where the victim was able to recover. In other cases, the adversary is able to maintain access to the network despite the victim’s attempts to remove them. This type of relationship is often referred to as a persistent adversary relationship. This is the APT that is often discussed in relation to nation states and well-funded adversaries. In these cases, the adversary can maintain access for a long period of time and take steps to maintain that persistence, even as the victim tries to remove them.

Corollary: There exists varying degrees of adversary persistence predicated on the fundamentals of the Adversary-Victim relationship. Again, the adversary-victim relationship, along with the goal of the adversary, will dictate the degree of persistence. The point is that for each intrusion, this relationship is unique.

A combined diamond model and kill chain intrusion analysis

The kill chain provides a straightforward method of delineating specific adversary actions that take place during a network intrusion. What the kill chain lacks is a consistent structure for describing the relationship between the adversary and the victim. For example, an analysis of an intrusion may uncover a weaponized PDF document attached to an email sent to the comptroller of the company. While understanding the delivery method is useful in understanding the root cause, going deeper into the intrusion requires further detail. That is where combining the diamond model with the kill chain comes into play.

The diamond model represents an individual event, in this case, the data around the method the adversary used to deliver their payload. Integrating a diamond model into each phase of the kill chain provides a much more structured and comprehensive approach to intrusion analysis. In other words, as Figure 4.8 shows, for each stage of the kill chain, there is a corresponding diamond model in which evidence acquired during the analysis is placed.

Figure 4.8 – A combined kill chain and diamond model

The goal should be for each vertex of the diamond model to be identified for each phase of the cyber kill chain. Obviously, that may only be possible in a perfect world. Some evidence associated with an intrusion will be unavailable to analysts. Therefore, a more realistic benchmark is necessary. Robert Lee, the author of the SANS Cyber Threat Intelligence course, has provided the benchmark that, for an analysis to be considered complete, at least one vertex must contain an evidence item or items for each of phases two through six. This does not mean that other evidence uncovered does not have value, but that our confidence in the intrusion analysis is based on uncovering as much detail as possible while balancing the time and effort required.
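The benchmark of at least one populated vertex for each of phases two through six lends itself to a simple check. The following Python sketch assumes the analysis is stored as a dictionary of phases, each holding lists of evidence per vertex; that layout, the function name, and the sample evidence are hypothetical.

```python
PHASES = [
    "Reconnaissance", "Weaponization", "Delivery", "Exploitation",
    "Installation", "Command and Control", "Actions on Objectives",
]
VERTICES = ("adversary", "capability", "infrastructure", "victim")


def phases_missing_evidence(analysis, first=2, last=6):
    """Return the phases numbered first..last that have no populated vertex."""
    missing = []
    for number in range(first, last + 1):
        phase = PHASES[number - 1]
        vertices = analysis.get(phase, {})
        if not any(vertices.get(vertex) for vertex in VERTICES):
            missing.append(phase)
    return missing


# Hypothetical analysis with evidence for only two phases.
analysis = {
    "Delivery": {"infrastructure": ["badsite.com"]},
    "Exploitation": {"victim": ["Lt0769.acme.local"]},
}
print(phases_missing_evidence(analysis))
```

The phases returned are those where the analysis has not yet met the benchmark and where additional evidence collection may be worth the time.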

Another consideration that you may need to address is circumstances where analysts must investigate and analyze two intrusions into the network. For example, the first intrusion was a phishing email that contained a malicious document that downloaded a secondary payload designed to exploit a vulnerability in the Windows OS. This malware was stopped at the Exploitation stage by the antivirus and the intrusion was unsuccessful at reaching the Actions on Objectives stage.

The second intrusion was similar, but instead of the antivirus blocking the malware from executing, it was able to exploit a vulnerability and execute. The adversary was further able to install a persistence mechanism and configure a C2 channel before being discovered and the system isolated. In this case, the second intrusion takes precedence over the first and should be investigated first. If there are resources available, the first intrusion investigation can be run in parallel but if there are additional resources needed for the second investigation, again, that takes precedence.
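The prioritization described above can be expressed as a sort on how far each intrusion progressed along the kill chain. The following Python sketch is a simplified illustration; the record layout and names are assumptions, and a real triage decision would weigh other factors as well.

```python
PHASE_ORDER = [
    "Reconnaissance", "Weaponization", "Delivery", "Exploitation",
    "Installation", "Command and Control", "Actions on Objectives",
]


def prioritize(intrusions):
    """Sort intrusions so that the one that progressed furthest comes first."""
    return sorted(
        intrusions,
        key=lambda intrusion: PHASE_ORDER.index(intrusion["furthest_phase"]),
        reverse=True,
    )


# The two intrusions from the example above.
intrusions = [
    {"name": "first intrusion", "furthest_phase": "Exploitation"},
    {"name": "second intrusion", "furthest_phase": "Command and Control"},
]
for intrusion in prioritize(intrusions):
    print(intrusion["name"], "-", intrusion["furthest_phase"])
```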

The technical tools and techniques of intrusion analysis would take a whole book to cover in themselves. What the diamond model / cyber kill chain methodology does is provide a construct to both guide the analysis and place the evidence items within an appropriate relationship to each other, so that a more comprehensive analysis of the adversary is conducted.

This combined kill chain and diamond model analysis construct is useful for a full intrusion analysis. Again, the deciding factor of whether an intrusion analysis is successful is dependent on the organization’s ability to aggregate the necessary evidence to populate the specific vertices of the applicable diamond models in their appropriate kill chain phases. Often, constraints such as evidence volatility, visibility, and lack of expertise reduce the chances of being able to successfully investigate a network intrusion.

Attribution

The pinnacle of an incident investigation is attribution. In attribution, the analysts can directly tie an intrusion to a specific threat actor, whether that is an individual, group, or government organization. Given that threat actors, especially highly skilled ones, can cover their tracks, attributing an attack is extremely difficult and is largely reserved for governments or private entities that specialize in long-term analysis and have datasets from a large number of intrusions.

For example, the cyber threat intelligence provider Mandiant released the APT1 report in 2013. This report directly implicated individuals in the Second Bureau of the Chinese PLA General Staff’s Third Department. This conclusion took years to reach and was based on data from 300 separate indicators and 141 separate organizations that suffered an intrusion. These numbers are a testament to how much data is required for proper attribution, which is likely outside the reach of analysts working within a single organization.

These data points are combined with other intrusion analyses where patterns of overlapping capabilities, infrastructure, and victims are identified. From here, data about the adversary for each intrusion set is used to possibly determine who was behind an intrusion. Again, this is most often outside the purview of the analyst or analysts investigating intrusions but does come into play with organizations that leverage this type of incident analysis for threat intelligence purposes.
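Identifying overlap between intrusions is, at its simplest, a set intersection per vertex. The following Python sketch uses entirely hypothetical data (documentation IP addresses and made-up labels) and an assumed record layout; an overlap found this way is a lead to investigate, not proof of attribution.

```python
VERTICES = ("capability", "infrastructure", "victim")


def overlapping_data_points(first, second):
    """Return, for each vertex, the data points that appear in both intrusions."""
    return {
        vertex: sorted(set(first.get(vertex, [])) & set(second.get(vertex, [])))
        for vertex in VERTICES
    }


# Hypothetical data points from two separate intrusion analyses.
intrusion_a = {
    "capability": ["encoded PowerShell downloader", "Cobalt Strike"],
    "infrastructure": ["203.0.113.10", "badsite.com"],
    "victim": ["finance sector"],
}
intrusion_b = {
    "capability": ["Cobalt Strike"],
    "infrastructure": ["203.0.113.10", "198.51.100.7"],
    "victim": ["healthcare sector"],
}
print(overlapping_data_points(intrusion_a, intrusion_b))
```

Shared commodity tools such as Cobalt Strike carry little weight on their own, which is one reason attribution requires datasets from a large number of intrusions.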

Summary

Digital forensics does not exist in a vacuum. The tools and techniques that this book focuses on exist as part of a larger effort. Without a methodology to test an analyst’s hypothesis, digital forensics is merely the gathering and extraction of data. Rather, it is critical to understand what type of incident investigation is needed and to determine what methodology is applicable. Answering the key questions related to an intrusion requires the incorporation of the incident investigation methodology and the diamond model of intrusion analysis. The combination of these two constructs provides the structure in which analysts can properly examine the evidence and test their hypothesis.

The next chapter will begin the process of evidence acquisition by examining tools and techniques focused on network evidence.

Questions

- The type of incident investigation that is concerned with determining whether an event is an incident or not is:

  - Attribution

  - Root cause

  - Detection

  - Intrusion analysis

- What is the first phase of the cyber kill chain?

  - Reconnaissance

  - Weaponization

  - Command and Control

  - Delivery

- Obtaining data during the Reconnaissance phase of the cyber kill chain is often difficult due to the lack of any connection to the target network.

  - True

  - False