A final task to complete before beginning the actual process of planning your server installation and post-implementation management is selecting and categorizing all the software you plan to deploy on the Terminal Servers. This includes not only the applications you want to deliver to your users but also any server support software you’ll use to manage the environment.

After developing the two application lists, you need to prioritize the order in which the applications will be installed. I usually arrange applications for installation as follows:

Common application components: These usually consist of low-level middleware components, such as ODBC drivers or SQL drivers (Sybase, Oracle, and the like), that are required by the applications, the administrative utilities, and possibly the server software itself (MetaFrame, for example). The Microsoft Data Access Components (MDAC) package is a good example of a component installed during this step.

NOTE:

MDAC 2.5 ships with Windows 2000 Server, while MDAC 2.8 ships with Windows Server 2003. The version appropriate for your implementation will depend on your application needs and any security updates that may have become available. For example, Service Pack 3 for MDAC 2.5 is currently available and a recommended update for a Windows 2000 deployment if you are not going to a newer MDAC version. This update would include bug fixes as well as patches for any known security issues. Find out more about MDAC on the MSDN Web site: http://msdn.microsoft.com/data/default.aspx.

Administrative support software: Next I install the support software that administrators will use. This usually includes tools such as the Server Resource Kit and resource management tools such as Microsoft Operations Manager (MOM) or third-party products such as NetIQ’s AppManager.

Common-user-application software: Next comes the common-user-application software that will be installed on all Terminal Servers, along with any software required by one or more other applications. In most cases this includes office productivity tools, Adobe Acrobat Reader, and so on. Alternative Web browsers such as Opera or Mozilla could also be installed during this phase so they are available if required.

User applications: The final installation step handles the remaining user applications. Microsoft Office, Lotus Notes, and custom client/server applications are examples.

Chapter 21, “Application Integration,” discusses the steps involved in installation and configuration of applications on Terminal Server.

Administration and system support tools are an important part of software planning, but their need in the environment is often overlooked until near the end of the project or when an issue arises requiring these tools. I always recommend that administrative support tools be identified early on, so any additional hardware or software requirements can be addressed and resolved prior to the project entering any form of piloting or testing phase. Some components, such as service packs or hotfixes, need to be addressed even before you begin the operating system installation, so you have a clear and documented idea of how the server should be built.

TIP:

During the investigation of your planned support software, maintain a list of all the common components and additional resources it may require. For example, Citrix’s Resource Manager, a component of MetaFrame Presentation Server Enterprise Edition, requires a Microsoft SQL Server or Oracle database server to store historical application and server metrics.

Many organizations look to leverage the management features of Terminal Server to centralize their operations. This may require supporting users who need access to non-English versions of software. Using the Multilingual User Interface (MUI), you can configure a Windows Terminal Server so that simultaneous users each access the Windows desktop in the language of their choice. Users can select a language through the Regional Settings option in Control Panel, or the language can be defined within a group policy object.

To utilize this feature, you must purchase the MUI version of Windows Server 2003 or Windows 2000 Server. After installing the base English version of the server OS, the desired MUI components are installed on the server to enable access to those language options. You can find out more information about Windows MUI on the Microsoft Web site at http://www.microsoft.com/globaldev/DrIntl/faqs/MUIFaq.mspx.

Both Microsoft and Citrix periodically release service packs, hotfixes, and security patches for their products. Tools and source code that take advantage of a known vulnerability in an operating system, particularly a Microsoft operating system, are created and distributed on the Internet very quickly, so every administrator is responsible for ensuring that their servers are properly secured. I strongly recommend that you keep up-to-date on these patches as they become available. Chapter 9, “Service Pack and Hotfix Management,” discusses this patching process in detail.

Microsoft and Citrix provide load-balancing solutions specific to their environments, which I discussed briefly in Chapter 1, “Microsoft Windows Terminal Services,” and Chapter 2, “Citrix MetaFrame Presentation Server.” Microsoft has the Network Load Balancing (NLB) component of Windows Clustering, and Citrix has its Load Manager. If your deployment involves anything other than a single-server implementation, I recommend that you plan to include the appropriate load-balancing solution. Load balancing lets you better utilize existing resources by automatically distributing users across all available servers and by providing a way for users to quickly reconnect to the environment if a server becomes unavailable. It also eases support by letting you schedule removal of hardware for servicing without having to modify the clients. With load balancing, users simply connect to the environment and log on to one of the remaining available servers.
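As a rough illustration of the distribution idea only, the following Python sketch applies a generic least-loaded policy: each new user goes to the available server with the fewest sessions, and a server pulled for servicing is simply skipped. This is an assumption-level model, not how NLB or Citrix Load Manager actually calculate load; the server names and session counts are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Server:
    name: str
    sessions: int           # current number of user sessions
    available: bool = True  # False when the server is down or removed for servicing


def pick_server(farm: List[Server]) -> Server:
    """Return the available server with the fewest sessions (ties go to the first listed)."""
    candidates = [s for s in farm if s.available]
    if not candidates:
        raise RuntimeError("no servers available")
    return min(candidates, key=lambda s: s.sessions)


# Hypothetical three-server farm; TS03 has been pulled for hardware maintenance.
farm = [Server("TS01", 42), Server("TS02", 17), Server("TS03", 5, available=False)]
print(pick_server(farm).name)  # TS02
```

Because clients connect to the farm rather than to TS03 by name, nothing changes on the client side while TS03 is out of service.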

Even if your current environment contains only a single server, planning to support a load-balanced environment now can make the transition much smoother in the future. For example, referencing the server using an alias that has been defined in the DNS for the environment instead of the actual host name can make switching to a cluster name with NLB much easier. Or publishing all applications on your MetaFrame server and not having users connect directly to the server name will mean that client changes will not be required when moving to a load-balanced MetaFrame environment. Chapter 22, “Server Operations and Support,” provides details on implementation of Microsoft NLB and Citrix Load Management.

One key to successfully managing a large-scale implementation is being able to build a server quickly from a standard image. This capability is important for two reasons. First, it provides the basis for an effective disaster-recovery plan that lets you quickly re-create your exact environment, given the proper hardware and supporting infrastructure. Second, it lets you expand the environment or roll back to a previous configuration easily and consistently.

A number of means exist for cloning a server. Utilities such as Symantec’s Norton Ghost let you capture an entire snapshot of a drive and save it to a file that can be imaged onto another machine. Other methods of cloning involve use of backup software in conjunction with a second operating system installation used to restore the backup server image onto the new hardware. The exact process depends on the software used.

I’ve found cloning to be an invaluable process for managing a Terminal Server environment. Using cloning, a Terminal Server can typically be built from an image in 30–60 minutes. The result is a completely functional server containing all applications installed prior to creation of the image. Details on the planning and process of server cloning are discussed in Chapter 8, “Server Installation and Management Planning.”

NOTE:

Issues have been raised in the past about a lack of support on Microsoft’s part for cloning Windows 2000 or 2003 servers. Foremost is the question of SID uniqueness on the cloned server. When Windows is installed, a unique security identifier (SID) is generated for that machine. This SID, combined with an incremented value known as the relative ID (RID), is used to create the SIDs of local security groups and users. If an image of this server is cloned onto another computer, that second computer will have the same computer and account SIDs that existed on the first computer, introducing a potential security problem in a workgroup environment. The local SID is also important in a Windows 2000/2003 domain, as it uniquely identifies a computer in the domain.

Fortunately, Microsoft does support a technique for cloning complete installations of Windows from one server to another. Using Sysprep, a utility available as a free download from the Microsoft Web site, you can prepare a Windows Terminal Server for replication and have a unique SID automatically generated on the target server when it reboots. Sysprep doesn’t perform the actual imaging; it only prepares a server to be imaged. Once Sysprep has been run, any imaging tool can be used to create and distribute the server clone.
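The following Python sketch illustrates why an unprepared clone is a problem. It models a local account SID as the machine SID with a RID appended, matching the S-1-5-21-... form used for local accounts; the machine SID shown is made up. RID 500 is the built-in Administrator account.

```python
def account_sid(machine_sid: str, rid: int) -> str:
    """Form a local account SID by appending a relative ID (RID) to the machine SID."""
    return f"{machine_sid}-{rid}"


# Hypothetical machine SID of the server used to build the image.
original = "S-1-5-21-1111111111-2222222222-3333333333"
# Imaging the drive without running Sysprep copies the machine SID unchanged.
clone = original

print(account_sid(original, 500))  # S-1-5-21-1111111111-2222222222-3333333333-500
print(account_sid(clone, 500) == account_sid(original, 500))  # True
```

Running Sysprep before capturing the image causes the clone to generate a new machine SID at its next boot, so every account SID derived from it differs as well.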

Depending on the size of the Terminal Server environment you plan to deploy, you may want to consider using application deployment tools to assist in timely and consistent delivery of applications to all your Terminal Servers. The key consideration here is consistency. In a small environment (two to five servers), you have a fairly good chance of being able to perform manual installations of an application on each of the servers and maintain a consistent configuration. But if you have a medium or large Terminal Server environment (10, 20, or more servers), the odds of making a mistake on at least one of these machines during application installation go up dramatically.
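A simple probability model shows why the risk climbs with server count. Assume, purely for illustration, that each manual installation independently has a probability p of ending up misconfigured. The chance that at least one of n servers is inconsistent is then:

```latex
P(\text{at least one misconfigured server}) = 1 - (1 - p)^{n}
```

With an assumed p = 0.02, five servers give 1 - 0.98^5 ≈ 0.096 (about 10 percent), while 20 servers give 1 - 0.98^20 ≈ 0.33 (about one in three).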

In these types of environments, it can be advantageous to look at developing an application deployment process involving use of some form of automated tool. Examples of tools that can be used include the following:

SysDiff: Provided as part of the Resource Kit, the SysDiff tool generates what’s called a difference file, which contains information on an application installation that can then be applied to another machine to reproduce the exact installation. Using SysDiff involves several steps: generate a base snapshot of the server before the application is installed, install the desired application, generate the difference file based on the snapshot and the current state, and then use the difference file to install the application on another machine. You can also use SysDiff to generate a list of differences in only the registry and INI files if desired.

Citrix Installation Manager: The Installation Manager (IM), provided as part of the Enterprise Edition of MetaFrame Presentation Server, lets you install an application on a single MetaFrame server and have that application automatically installed on any other MetaFrame servers in the server farm. Conceptually it works much like SysDiff in that it generates what’s called a package, containing the information on how an application was installed on the server. The difference is that IM then pushes that application installation to the target MetaFrame server(s) and is integrated into Citrix’s published application model.

Wise Solutions’ Wise Package Studio: Provides robust support for centralized package creation and distribution. Conceptually similar to Citrix’s Installation Manager. For more information, see http://www.wise.com.

Altiris Software Delivery Solution: An advanced software distribution solution, this product allows centralized distribution of software packages to remote systems. For more information, see http://www.altiris.com.
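SysDiff’s own snapshot and difference-file formats aren’t reproduced here, but the snapshot-and-compare idea behind it can be sketched in Python for the file system only (registry and INI handling are left out). The directory used in the commented usage lines is an assumption for illustration.

```python
import hashlib
import os
from typing import Dict, List


def snapshot(root: str) -> Dict[str, str]:
    """Map each file under root (as a relative path) to a SHA-256 digest of its contents."""
    result = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            with open(full_path, "rb") as fh:
                result[os.path.relpath(full_path, root)] = hashlib.sha256(fh.read()).hexdigest()
    return result


def difference(before: Dict[str, str], after: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify files as added, removed, or changed between two snapshots."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "changed": sorted(p for p in before.keys() & after.keys() if before[p] != after[p]),
    }


# Usage: take a snapshot, install the application, take a second snapshot, then compare.
# base = snapshot(r"Y:\Program Files")
# ... run the application's setup program ...
# print(difference(base, snapshot(r"Y:\Program Files")))
```

A real difference package must also capture registry changes and file metadata, which is the work the dedicated tools listed above take on.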

Automating deployment of applications within a Terminal Server environment can take significant planning in order to properly develop and configure the environment. Thorough documentation on installation and configuration of the application is a key requirement to ensure the application is prepared properly for deployment. Chapter 21 provides details on these planning and implementation processes.

TIP:

Due to permission restrictions and the simple fact that Terminal Server is a multiuser environment, the Windows Installer feature of IntelliMirror is not available to users running on a Terminal Server. You can still create MSI packages or custom transform files (when supported) to preconfigure an installation that can run without any administrator intervention, but you need to make sure that all necessary components of an application are actually installed, not just the installation points. One area where IntelliMirror can be used is in deploying the Terminal Services or MetaFrame clients.
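For the preconfigured installations described above, the Windows Installer command line can run a package with a transform and no user interface. The following Python sketch only builds the msiexec argument list; the package, transform, and log paths are hypothetical. /qn suppresses the UI, /l*v writes a verbose log, TRANSFORMS applies an .mst file, and ALLUSERS=1 requests a per-machine installation.

```python
from typing import List, Optional


def msi_install_command(package: str, transform: Optional[str] = None,
                        log: Optional[str] = None) -> List[str]:
    """Build an unattended, per-machine msiexec command line."""
    cmd = ["msiexec", "/i", package, "/qn"]
    if log:
        cmd += ["/l*v", log]           # verbose log for troubleshooting
    cmd.append("ALLUSERS=1")           # per-machine rather than per-user install
    if transform:
        cmd.append(f"TRANSFORMS={transform}")
    return cmd


# Hypothetical administrative share and file names.
cmd = msi_install_command(r"\\deploy\apps\app.msi", r"\\deploy\apps\ts.mst", r"C:\Logs\app.log")
print(" ".join(cmd))
```

On the Terminal Server itself, an administrator could run the resulting list with subprocess.run(cmd, check=True).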

After implementation, there will likely be times when you want to make a change to the registry or file system on each of your Terminal Servers. Consistency is as important here as it is with application installation. Many tools and scripting languages exist that you can use to automate replication of file or registry changes to all your servers.

For example, the ROBOCOPY (Robust Copy) utility from the Windows Server Resource Kit provides an automated way to maintain an identical set of files between two servers and, because of the logging functionality it provides, is an excellent aid for developing scripted tasks. Another option is to use XCOPY, which is included with all versions of Windows and can be used to script replication of files from a source to a target server.
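To push the same master copy of files to several servers, the ROBOCOPY calls can be generated per server. This Python sketch only builds the command lines; the source folder, server names, and share are hypothetical. /MIR mirrors the source tree, including deleting target files that no longer exist in the source, so point it only at folders you intend to keep identical; /R and /W cap retries and wait time, and /LOG writes one log file per server.

```python
from typing import List


def robocopy_commands(source: str, servers: List[str], share_path: str,
                      log_dir: str) -> List[List[str]]:
    """Build one ROBOCOPY mirror command per target server, each with its own log file."""
    commands = []
    for server in servers:
        target = "\\\\" + server + "\\" + share_path  # UNC path: \\SERVER\share_path
        log_file = log_dir + "\\robocopy_" + server + ".log"
        commands.append(["robocopy", source, target, "/MIR", "/R:2", "/W:5", "/LOG:" + log_file])
    return commands


for cmd in robocopy_commands(r"D:\Master\Apps", ["TS01", "TS02"], r"Y$\Apps", r"C:\Logs"):
    print(" ".join(cmd))
```

Each command could be run with subprocess.run on a machine where ROBOCOPY is available. Avoid check=True here: ROBOCOPY returns nonzero exit codes (values below 8) even when the copy succeeds, so inspect the return code and the per-server log instead.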

Using the REGDMP and REGINI utilities from the Resource Kit, you can extract the desired registry changes from one server’s registry and then import those changes into another. Scripting languages such as VBScript or JScript, which provide robust registry editing support, can also be used to create customized scripts that replicate the necessary changes to the remote servers. Chapter 20 discusses an example of these utilities in use.
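REGINI uses its own script syntax, which isn’t shown here. As an alternative sketch, the following Python function writes changes in the .reg format understood by Registry Editor, which can be imported silently on each server with regedit /s. The key and value names are hypothetical; integers become REG_DWORD values and everything else becomes a REG_SZ string.

```python
from typing import Dict, Union


def to_reg(key: str, values: Dict[str, Union[int, str]]) -> str:
    """Render one registry key and its values in Registry Editor 5.00 (.reg) format."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key}]"]
    for name, value in values.items():
        if isinstance(value, int):
            if not 0 <= value <= 0xFFFFFFFF:
                raise ValueError(f"{name}: {value} does not fit in a REG_DWORD")
            lines.append(f'"{name}"=dword:{value:08x}')
        else:
            # Backslashes and double quotes must be escaped inside .reg string values.
            escaped = value.replace("\\", "\\\\").replace('"', '\\"')
            lines.append(f'"{name}"="{escaped}"')
    return "\r\n".join(lines) + "\r\n"


print(to_reg(r"HKEY_LOCAL_MACHINE\SOFTWARE\ExampleVendor\ExampleApp",
             {"CacheSize": 256, "InstallDir": r"Y:\Program Files\ExampleApp"}))
```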

NOTE:

A number of commercial products also provide file and/or registry replication features. An excellent example is the Reflect replication tool from Tricerat Software (http://www.tricerat.com), which supports replicating both registry-based and file-based data.

Being proactive about the overall health and stability of your Terminal Servers before a system failure occurs is an important part of Terminal Server administration. Unfortunately, I’ve seen many implementations of Terminal Server in which inadequate consideration has been given to the tools and processes that would aid in managing the environment. Reacting to problems after they’ve brought down a server is far too common.

Numerous tools exist that have been specifically designed to run on a Terminal Server. The following list describes some key considerations for choosing a monitoring tool:

Terminal Server- and MetaFrame-“aware” tools: These tools tend to be well tuned to operate in a Terminal Server environment without introducing too much additional overhead. They also usually have default configurations better suited to monitoring a Terminal Server than to monitoring a regular Windows server.

A robust notification system: A monitoring tool is of little use if there’s no way to configure it to send a notification to one or more administrators when a system problem is detected. At a minimum, it should support SNMP (Simple Network Management Protocol) and SMTP (Simple Mail Transfer Protocol).

Proactive error-correcting logic: Some of the more robust monitoring tools can react to problems by attempting to correct them. An excellent example is a situation where a service fails and the monitoring software automatically tries to restart it. A little-known feature of Windows 2000 and 2003 is the ability to configure a service to automatically attempt a restart or perform some other action when a failure is detected. While the functionality is somewhat limited, this is an example of proactive error correction. Figure 7.1 shows the Recovery tab for a service on a Windows 2000 server.

Report generation: Reports should be easy to produce from the collected performance data. This information is helpful not only for troubleshooting but also for trend analysis and future capacity planning.
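The service Recovery settings mentioned in the list above can also be applied from the command line with sc failure, which makes it easy to configure the same recovery actions on every server. sc.exe is included with Windows Server 2003; on Windows 2000 it may need to be obtained separately. This Python sketch only builds the command; the Print Spooler service (Spooler) and the delays are example choices. sc.exe expects a space after each option= token, so each option and its value are passed as separate arguments.

```python
from typing import List, Tuple


def sc_failure_command(service: str, reset_seconds: int,
                       actions: List[Tuple[str, int]]) -> List[str]:
    """Build an 'sc failure' command that sets recovery actions for a service.

    reset_seconds: time without failures after which the failure count resets.
    actions: (action, delay_ms) pairs for successive failures, where action is
    'restart' or 'reboot' (the 'run' action also needs command=, not handled here).
    """
    action_str = "/".join(f"{action}/{delay_ms}" for action, delay_ms in actions)
    return ["sc", "failure", service, "reset=", str(reset_seconds), "actions=", action_str]


# Restart the Print Spooler one minute after the first failure and two minutes after the second.
cmd = sc_failure_command("Spooler", 86400, [("restart", 60000), ("restart", 120000)])
print(" ".join(cmd))  # sc failure Spooler reset= 86400 actions= restart/60000/restart/120000
```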

Two common tools I’ve used are Citrix’s Resource Manager (CRM) and NetIQ’s AppManager. AppManager is a robust systems management and application management product that provides support for both Terminal Server and MetaFrame. It provides all the functionality summarized in the preceding list in addition to a number of other features, such as event log monitoring, automatic resetting of Terminal Server sessions, and the ability to push tasks out to each server where it’s run. Using such a feature, you could have maintenance or application scripts scheduled to run at a given time locally on a server. NetIQ’s AppManager is discussed in Chapter 22, “Server Operations and Support.”

The Citrix Resource Manager, another component included as part of the Enterprise Edition of MetaFrame, provides detailed information on the health of a MetaFrame server as well as information such as which applications have been run, how often, by whom, and what resources they consumed (percent of CPU, RAM, and so on). CRM integrates into the Management Console for MetaFrame Presentation Server, letting you quickly monitor the status of the servers in your farm; see the example in Figure 7.2.

Figure 7.2. Citrix’s Resource Manager integrates into the Management Console for MetaFrame Presentation Server.

A common requirement of most resource-monitoring tools is a database management system (DBMS) to store the collected data. The size and type of DBMS supported depends on the product being used. For example, Citrix Resource Manager requires a Microsoft SQL Server or Oracle database to store its information.

Make sure that such requirements are flagged early and tasked in your project plan. You will likely need to engage the assistance of a database administrator so the necessary tables can be created and made available for the monitoring product to populate.

With the proliferation of computer viruses, particularly the continued success of e-mail-borne viruses, the desire for an antivirus solution as part of a Terminal Server implementation is growing stronger. While a properly locked-down Terminal Server prevents a regular (non-administrator) user who accidentally comes across an infected file from introducing a problem onto the server, there’s still the possibility that the user’s personal files or user profile will become infected and in some way interrupt or impair the user’s ability to work.

Fortunately, as popularity of Terminal Server grows, so does support from antivirus software manufacturers for the Terminal Server environment. The impact on server performance is a major factor in selecting the right antivirus solution. A poorly configured antivirus program can easily consume huge amounts of system resources, particularly when live scanning is enabled. Trend Micro’s ServerProtect is one example of an antivirus software package fully supported on a Terminal Server. When looking for an antivirus product to use, always make sure it is supported on Terminal Server. Trend Micro’s Web site is http://www.antivirus.com.

When testing an antivirus product on a Terminal Server, always try to work with the latest version available. Older product versions are more likely to produce issues that can ultimately result in system downtime or impaired performance. Look only at solutions that provide integrated security that prevents users from being able to modify the configuration or otherwise make changes that affect performance on the entire server. After the antivirus software has been installed, spend some time collecting performance data to get an idea as to the overhead the program introduces. Practically all antivirus product companies provide some form of limited trial download, so you should be able to evaluate a few products to get a fairly good idea of which product will work best in your environment.
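Once you have comparable samples with and without the antivirus product running, the overhead is simply the relative change in the average. This Python sketch assumes you have already exported a metric such as % Processor Time under a similar user load in both cases; the sample values are made up.

```python
from statistics import mean
from typing import Sequence


def overhead_percent(baseline: Sequence[float], with_av: Sequence[float]) -> float:
    """Percentage increase in the mean of a metric after enabling antivirus scanning."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero; relative overhead is undefined")
    return (mean(with_av) - base) / base * 100.0


# Hypothetical % CPU samples taken under comparable load.
print(round(overhead_percent([20, 22, 24], [26, 28, 30]), 1))  # 27.3
```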

I’ve found the Windows Server Resource Kit to be one of the most valuable tools to assist in administering a Terminal Server environment. Tools such as Sysprep (for use with server cloning) or REGDMP and REGINI (registry changes and replication) are just a few examples of what’s available. Many of these tools can be downloaded at no charge from Microsoft’s Web site.

A number of tools are designed specifically for Terminal Server. This list describes a few tools available with the Windows 2000 and 2003 Resource Kit Tools:

LSView and LSReport (Windows 2003): These tools provide the ability to query for Terminal Services licensing information in your environment. Their use is discussed in Chapter 12, “License Server Installation and Configuration.”

RDPClip (Windows 2000): The RDPClip tool adds file-copy functionality to the Windows 2000 Terminal Services clipboard. On its own, Windows 2000 supports only text and graphics cut-and-paste between the server and the client. With this tool you can also cut and paste files. This is not required with Windows Server 2003, which includes support for file cut-and-paste.

TSCTst (Windows 2003): This tool dumps all the Terminal Services license information stored on a client, letting you see what temporary and permanent licenses may have been assigned.

TSReg (Windows 2000): This tool allows very granular tuning of the sections of the RDP client’s registry that pertain to bitmap and glyph caching. Using this tool, you can modify settings such as the cache size.

Winsta (Windows 2000): This simple GUI tool lists the users logged on to the Terminal Server where the tool is run, providing a quick way to see who is on the server. It’s similar to the QWINSTA command-line tool that’s part of the Terminal Server command set, but it doesn’t allow you to query information on a remote server.

Terminal Services Scalability Planning Tools (tsscaling.exe): Slightly different versions of these tools exist for both Windows 2000 and Windows 2003.

This self-extracting executable contains utilities and scripts used to simulate the load on a Terminal Server. Additional documentation relating to Windows 2000 can be found at the following URL:

http://www.microsoft.com/windows2000/library/technologies/terminal/tscaling.asp

Scripting can play an important role in the development and management of your Terminal Servers, particularly when deploying a large number of applications across multiple servers. Scripting is particularly important for the creation of domain or machine logon scripts, as certain per-user options can be set to assist in the smooth operation of an application. A number of scripting languages are available, and the decision of which to use is really a matter of personal preference and familiarity on the administrator’s part, since you need to be able to manage and update these scripts as necessary. Here are some of the supported features you should look for in a scripting language:

Registry manipulation: This is a very useful feature when creating customized application-compatibility scripts.

File read and write: The ability to read and write a text file can be very useful for such tasks as information logging or application-file configuration.

Structured programming: Basic programming features such as IF...ENDIF and LOOP structures are important for developing robust and useful scripts.
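The following Python sketch stands in for a logon script written in whatever language you choose (KiXtart, VBScript, and so on). It exercises two of the features above, file read/write and IF-style logic: it creates a per-user INI file for an application and applies default settings only where the user hasn’t already set a value. The application name, section name, and settings are hypothetical, and the home directory is passed in rather than looked up.

```python
import configparser
import logging
import os
from typing import Dict


def apply_user_defaults(home_dir: str, app_name: str, defaults: Dict[str, object]) -> str:
    """Create or update <home_dir>/<app_name>.ini, adding only settings that are missing."""
    path = os.path.join(home_dir, f"{app_name}.ini")
    # Interpolation is disabled so values such as %USERNAME% are stored literally.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is silently skipped
    if not config.has_section("Settings"):
        config.add_section("Settings")
    added = 0
    for key, value in defaults.items():
        if not config.has_option("Settings", key):  # keep any value the user already changed
            config.set("Settings", key, str(value))
            added += 1
    with open(path, "w") as fh:
        config.write(fh)
    logging.info("Added %d default(s) to %s", added, path)
    return path
```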

In addition to third-party scripting languages such as KiXtart or Perl, Microsoft provides robust scripting support through its Windows Script Host (WSH). WSH is language independent and includes support for both VBScript and JScript. Using VBScript with WSH, you can produce robust scripting solutions, including development of your own custom COM objects. Details on scripting are beyond the scope of this book, but a number of excellent resources exist both on the Web and in print. The best place to start is the Microsoft Scripting Web site: http://www.microsoft.com/scripting.

While the exact number and type of applications you’ll be deploying is dependent on the requirements of your project, a standard methodology can be applied to capture the required information, making scheduling and actual implementation of the applications more manageable. When planning to install an application, gather the following information:

Are any customization tools available for the application to automate installation and/or lock down the user’s access to certain components that may not be suitable for Terminal Server?

Two such utilities I commonly use are the Internet Explorer Administration Kit (IEAK) and the Microsoft Office Resource Kit. Both allow customization of their respective products before or after installation. Certain features, such as the animated Office Assistant or the Internet Connection Wizard, can be turned off. Customized group policy object templates can also be used to provide granular control over the appearance and functionality of Internet Explorer and Microsoft Office. Chapter 15, “Group Policy Configuration,” provides more details about group policies.

How is user-specific information for the application maintained?

Applications can maintain user-specific information in a number of places: in the registry, in INI files, or in other customized or proprietary files. Depending on the original specifications for the application, it may assume that only one user can run the program at a time and not maintain per-user information. These types of applications, usually custom-built applications or commercial applications from smaller companies delivering vertical market solutions, can be troublesome in a Terminal Server environment. Understanding how this user information is managed will help you assess how well the application can be expected to function in the Terminal Server environment.

What additional drivers does this program need?

Components such as ODBC drivers, the Borland database engine, or Oracle SQLNet drivers are a few examples. Collect this information so you can develop a list of common components you will install on the server prior to installing the application.

Are any other applications dependent on this program? Are certain configuration features assumed to be available?

The most common scenarios involve Web browsers or office productivity tools such as Microsoft Excel or Microsoft Access. When an application has been custom built for your organization, either internally or under contract, dependencies on external components are not uncommon. Custom-built applications may interact with programs like Excel for displaying data results or creating graphs. These dependencies need to be flagged so these applications can be installed first on the Terminal Server. If multiple applications depend on a common component, this information will help to ensure that the requirements of one don’t conflict with the requirements of another.

After collecting the necessary information, arrange and prioritize installation of each component, ensuring that all dependencies have been accounted for. From the results, you can schedule dates and times for installation of each component. This method can be extremely valuable, particularly if additional human resources must be scheduled to assist in the application installation. Table 7.1 shows a sample prioritization for a project. The numbers in the “Dependencies” column refer to any other listed applications an application is dependent on. For example, the Custom Visual Basic Client/Server application is dependent on application numbers 1, 2, and 3.

Table 7.1. Sample Application Installation Priority List

| No. | Application | Dependencies |
|---|---|---|
| 1 | Microsoft Data Access Components (MDAC) 2.7 with Service Pack 1 | None |
| 2 | Custom Forms Management package | 1 |
| 3 | Internet Explorer 6 with latest service packs | None |
| 4 | Microsoft Office Professional 2003 | None |
| 5 | Custom Visual Basic Client/Server application | 1, 2, 3 |
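Working out an installation order from a dependency list like Table 7.1 is a topological sort. As a sketch (Python 3.9 or later, using the standard graphlib module), the table’s dependencies can be fed in directly. Any order it returns installs every application after the ones it depends on, though where independent items such as Office land in the sequence is not fixed.

```python
from graphlib import TopologicalSorter

# Table 7.1: application number -> name, and number -> the numbers it depends on.
apps = {
    1: "Microsoft Data Access Components (MDAC) 2.7 with Service Pack 1",
    2: "Custom Forms Management package",
    3: "Internet Explorer 6 with latest service packs",
    4: "Microsoft Office Professional 2003",
    5: "Custom Visual Basic Client/Server application",
}
depends_on = {1: set(), 2: {1}, 3: set(), 4: set(), 5: {1, 2, 3}}

# static_order() raises graphlib.CycleError if the dependencies are circular.
order = list(TopologicalSorter(depends_on).static_order())
for step, number in enumerate(order, start=1):
    print(f"{step}. {apps[number]}")
```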

Although the actual process for application installation is covered in Chapter 21, one area that should be flagged during the planning stage is a standard on the location of installed application files.

Many commercial Windows applications install by default to the %SystemDrive%\Program Files directory. My standard Terminal Server configuration uses two drives (or partitions), one containing the system/boot partition and the other containing the applications and static data available to the user. Whenever possible, all applications are installed onto this dedicated application volume.

Depending on the number of custom or in-house applications being maintained, a separate installation folder for them can be designated, resulting in the following target folders. Note that the Y: drive represents my application volume.

Y:\Program Files for commercial applications such as Microsoft Office.

Y:\Custom Program Files for custom-developed applications specific to your company. I like to use this name because the eight-character short name will be custom~1, something easy to remember.
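The short name mentioned above follows the familiar 8.3 pattern. This Python function is a simplified approximation for illustration only: it drops spaces, keeps the first six characters in uppercase, and appends ~1. Real Windows short-name generation has more rules (extensions, invalid characters, and different behavior once several similar names exist), and if another folder on the volume already starts with the same six characters, the new folder gets ~2 or higher. Windows displays short names in uppercase, but they are case-insensitive.

```python
def simple_short_name(long_name: str, index: int = 1) -> str:
    """Approximate the 8.3 short name of a long folder name with no extension (simplified)."""
    base = long_name.replace(" ", "").upper()
    return f"{base[:6]}~{index}"


print(simple_short_name("Program Files"))         # PROGRA~1
print(simple_short_name("Custom Program Files"))  # CUSTOM~1
```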

All associated administrators involved in the project should also clearly understand these standards, to help eliminate accidental deployment of an application on the system/boot drive. Unless an administrator is told otherwise, they will most likely install an application using the default target folder, which in most cases will be Program Files on the system/boot drive. Here are a couple of other application-related settings to consider:

Hard-coded target folders: Be aware that some applications (most often, custom-built in-house applications) may have been hard-coded to expect their application files in a specific location, usually a folder on the C: drive. This is an obvious problem when you have separated your system/boot partition from your application partition, because the program will continually want to reference your system/boot drive. This can be even more troublesome for a Terminal Server administrator, especially if you have remapped your drives using the MetaFrame DriveRemap utility located on the root of the installation CD-ROM. In this configuration, there is no local C: drive on the Terminal Server, meaning that an application depending on this specific drive will fail unless a network drive mapping or the SUBST command is used to make it available.

Changes to the system path: Pay particular attention to updates to the system path. Many in-house developed applications assume their specific application directory will be listed in the system path. In a Terminal Server environment, you should avoid this whenever possible. By keeping the system path to a minimum, you reduce the chances of a misplaced DLL or other application file affecting the functionality of another program. It also reduces the overhead of directory searches, because when the system searches for a file it may check every entry in the path.
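A simplified model makes both path-related points concrete: a search walks the directory list in order and stops at the first match, so a stray copy of a file in an earlier directory wins, and every extra entry is one more place to check on a miss. The real Windows DLL search order also checks locations such as the application’s directory and the system directories before the path, and it varies between versions, so treat this Python sketch as an illustration only. The directory and file names are hypothetical.

```python
import os
from typing import List, Optional


def find_first(filename: str, search_dirs: List[str]) -> Optional[str]:
    """Return the path of filename in the first directory that contains it, or None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, filename)
        if os.path.isfile(candidate):
            return candidate
    return None


# Example: if an installer drops dbdriver.dll into a directory searched earlier than the
# standard driver directory, every application now picks up that copy instead.
# find_first("dbdriver.dll", [r"C:\WINDOWS\system32", r"Y:\DBDrivers"])
```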

TIP:

Make certain that these standards are conveyed to all development teams working on applications targeted for your Terminal Server environment. While hard-coded paths and the like are poor programming practice, proactively making development teams aware of this will help reduce the time required for the teams to fix it or for you to find ways to work around it in your implementation.

As part of your software planning, you should also develop change and release management procedures. A critical part of maintaining a stable Terminal Server environment is having the proper software testing and validation procedures in place that must be completed prior to the software’s deployment. System downtime as a result of a poor software deployment is completely avoidable in a Terminal Server environment.

NOTE:

I once encountered an incident that underscores the need for change and release management procedures. A custom-developed application was presented to me for deployment into a production environment. I informed the project team that the application would have to go onto a test server before it would even be scheduled for deployment into production. Even though the developers insisted that the program was very small and had caused no problems on the desktop, I wouldn't allow a production deployment without the new application going onto a test server first. I informed them that desktop validation was not a substitute for Terminal Server validation.

After installation on a test server, the program was working perfectly. Only a short time later, however, I discovered that a number of other applications had stopped functioning properly, including a mission-critical application for the organization. The reason was that the install program for the new application had placed a database driver file in the SYSTEM32 directory that was being picked up by the new application (and others), instead of the file from the standard database driver directory on the Terminal Server. This was a simple mistake made by the programming team while creating the install program and hadn’t been picked up during testing on the desktop (since they hadn’t run the other applications). To correct the problem, I just moved the database driver file into the new application’s working directory, where it would be available to only this application.

Luckily, this problem was detected on a test server, and I was able to correct it before the actual production deployment, which went without incident. This certainly wouldn’t have been the case had I not insisted on the testing in the first place. A simple change-management process helped me avoid impacting my production users and saved me the grief of having to troubleshoot the problem while under the added pressure of trying to recover a downed production environment. The main rule of change management is, “Test before you release.”

An area where you should start planning early is the selection of your test and pilot users. Choosing the right users for this task is an important ingredient in implementing an application successfully. These users are not the same ones you might employ for load testing prior to deployment. These are the veteran users who will become involved in the early days of your implementation. They'll be key in validating the acceptable level of performance and functionality for an application and will help pave the way for success of the software implementation. Choose these people with careful consideration. A suitable pilot user should have the following qualifications:

Advanced user of the application—. An advanced user can immediately recognize any deviation from an application's normal behavior. When looking for the advanced user, talk with both the desktop support staff who serve these users and the manager and other staff in that department.

Comfortable with change—. You may find that many advanced users are resistant to change. Such people don’t make good pilot users. In many cases, they know how to do their jobs very well but are unable to adapt well to new situations. They’ve learned their jobs through repetition more than through knowledge.

Calm under pressure or stress—. There are times when testing a new environment or application can become very stressful, particularly when trying to perform production-type work. Querying data, accessing a Web site, running a report, or printing information are all examples of tasks that may require additional tweaking to correct minor problems. If a user trying to work in the new environment continuously encounters these problems, the potential for becoming discouraged is high. A calm disposition can be valuable for overcoming these types of problems. Obviously your goal is to avoid these problems as much as possible, but choosing a user who can cope with them if they do arise is certainly smart planning.

WARNING:

No matter how tempting it may be, don’t make false promises to solicit cooperation of an advanced user who is uncomfortable with change. He or she will expect you to live up to those promises. More specifically, don’t promise better performance unless you can deliver it. I say this because it’s done so often that it’s almost cliché. Always be honest about why you’re doing something and set realistic expectations. It is much better to tell users up front that there may be some downtime, so that they are prepared for it, than to have them surprised and annoyed when an application repeatedly fails to start.

Positive attitude toward success of the project—. The user needs to have a clear idea as to why the project is being undertaken and must believe in the benefits it will bring, regardless of the problems that may arise in getting there. Because this user will most likely be well known and respected among his or her peers, you can’t have this person complaining loudly to anyone who will listen about problems he or she may have encountered during testing. This is a surefire way of planting seeds of resistance to your Terminal Server implementation. At the same time, positive feedback may increase the desire to move to the new environment. If it appears that your test user is operating in a “superior” environment, then very quickly his or her peers will want to get involved. Any negative experiences your test user has need to be dealt with quickly, to reassure the user that problems are being resolved in a timely fashion.

Excellent listener and communicator—. Not only must pilot users be able to relay accurately any problems they may be having, but they also must be able to follow instructions given to them.

Don’t hesitate to drop or replace a pilot user who hasn’t measured up to your expectations. This is not to say that he or she should be removed just for being critical of the system. On the contrary, objective criticism can be beneficial in delivering a robust environment. Users who provide little or no feedback or who abuse the testing privileges should be prime candidates for immediate replacement.

Software testing and deployment in your Terminal Server environment will go through two distinct phases. The first is during the Terminal Server implementation, and the second is once you’ve gone into a production mode:

Terminal Server implementation phase—. During this phase, you’ll be migrating one or more applications from the user’s desktop into the Terminal Server environment, as well as introducing new applications. The key difference between this phase and the next is that the Terminal Server environment, being new, is more open to change now than it will be later. Until Terminal Server is rolled into production, its configuration is very malleable. This provides you with the flexibility to ensure not only that the environment is robust and stable but also that whatever is required to get the applications working is done properly. The biggest benefit is that you have some room for error. You can make some mistakes and not bring the world crashing down with you. Use this opportunity to its fullest potential. Take the time to understand what’s happening and experiment with more than one solution to a problem. When your Terminal Server environment goes live, you’ll no longer have this luxury.

Production maintenance phase—. At this point you’re running a production Terminal Server environment with many users running key applications. The number-one priority now is to maintain stability. Any changes you introduce must not have a negative effect on the existing users. You need to have a process in place for testing new software before it gets near the production environment. Production is no longer the place for testing or last-minute changes. A set of one or more non-production Terminal Servers should be available for testing all changes.

The actual approach you take to implement software is the same in both phases, and Figure 7.3 outlines the process of adding and testing software in the Terminal Server environment. Details on the individual tasks are described in the next sections.

NOTE:

An important part of every step is proper documentation of what was done to configure an application to run on Terminal Server. Even if the installation was straightforward and without issues, record that fact so that, if the software ever needs to be reinstalled, someone can look up this information and quickly re-create the installation.

I’ve worked with some organizations that develop detailed “build books” that include information on all components of the server build, including the individual applications. While this may appear to be substantial work upfront, the benefits of having such information available can be invaluable if the server ever needs to be re-created at some point in the future.

During the implementation phase the production mirror will not necessarily be a clone of a production server, but it should be a clone of the most recent build you’re currently working with. See the earlier section “Cloning and Server Replication” for more information.

By using a cloned image of your latest “production” server, you ensure that if the application installs and functions properly, it will work in the production environment. Testing a new application on a generic installation of Terminal Server is not sufficient to guarantee its stability in your production configuration.

TIP:

It’s certainly reasonable to use this test server to test more than one software package concurrently, although I suggest that you handle this with care. It’s best if you can keep to only two or three packages being tested on a single server at a time—fewer, if possible. The problem with having many applications being tested at once is that if a problem occurs, such as intermittent performance degradations or crashes, it becomes more difficult to narrow down the actual culprit.

Many applications, particularly custom applications or older 16-bit or 32-bit applications, need to be configured differently or augmented with scripting to get them to function properly or to resolve performance issues when run on Terminal Server. Installation and configuration of applications in your environment will usually take up the majority of the project time. See Chapter 20 for a complete discussion on installing and configuring applications.

It is worth reiterating the importance of documentation, particularly during installation of your applications, since it’s unlikely that you will remember subtle changes made to an application or that such changes can be easily re-created by someone else.

After you have the software installed on your test server, you should do some initial testing. You’ll be better able to examine general-purpose applications such as Microsoft Word than custom applications developed for a specific department. Depending on the sensitivity of the data within an application, you may not be able to test much more than the logon screen. Although you may not be able to run the application, when possible you should attempt to verify connectivity to back-end database systems such as SQL Server prior to having the test user become involved.
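A simple TCP connection test is one quick way to verify that the Terminal Server can reach a back-end database before any user logs on. This Python sketch only confirms that the database port accepts connections; it does not validate credentials or database permissions. The function name is hypothetical, and 1433 is the default TCP port for a default SQL Server instance, so adjust it for named instances or other database products.

```python
import socket


def can_reach(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, run `can_reach("sqlserver01", 1433)` (a hypothetical server name) from the test server. A False result points to name resolution, routing, or firewall problems that are worth fixing before the test user becomes involved.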

TIP:

One additional test you can perform, especially if this application may be scheduled for an update or replaced in the near future, is to verify that the uninstall works properly. Be aware that when an application is installed from a network location, it may refer back to this location to perform the software uninstall. If that network point does not exist in the future, the software uninstall may fail.

Before you can run, you need to learn to walk. This is why initially you should introduce only a single user to the software on Terminal Server. Make sure that the process of switching back and forth between Terminal Server and the desktop is clear to the user (when implementing a desktop-replacement scenario). This will minimize the impact if the application in Terminal Server is not working properly. I highly recommend that you demonstrate the back-out procedures to anyone who may have concerns with downtime, such as a tester’s manager. The ability to quickly roll back to the original desktop application is another benefit of deploying software during the Terminal Server implementation.

WARNING:

If an application is being migrated from a user’s desktop, don’t remove the user’s desktop version of the application until the Terminal Server version is in production. During testing, the desktop software will be the user’s immediate back-out if there are problems on Terminal Server.

A frequent question is, “How long should I test with one user?” My response is typically, “Until you’re comfortable that multiple users could work in the same environment with the same or fewer issues than the single user.” If a single user is having frequent problems, there’s no reason to believe that adding more users will make the problem disappear. More likely, multiple users will start to exhibit the same problem, and you will have succeeded only in increasing your support responsibilities. This is not usually what a busy Terminal Server administrator wants to do. Testing with a single user will very often eliminate the majority of the issues that would also be encountered by multiple users.

TIP:

When performing your application testing, be sure you’re replicating how the application will be run in the production environment. If users will be accessing a full desktop, make sure they’re working in a full desktop session. If the application will run in a seamless window, test in a seamless window. This will help eliminate surprises later on and ensure that your testing results are actually a valid representation of the production environment.

When the application is working properly for the single test user, the next step is to increase this to two or three users. The reason is to ensure that the software continues to function when multiple instances are being run on the same computer (the Terminal Server). A classic problem area is applications that create temp files or user files in a location that is not unique for each user on a Terminal Server. The hard-coded folder C:\Temp is a common culprit: instead of using the %TEMP% environment variable, which is unique for each Terminal Server user, the application writes to C:\Temp, which every user shares.
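The difference is easy to model. The following Python sketch, using hypothetical user names and profile paths, compares the temp folder each session would use when an application honors %TEMP% against one that writes to a fixed C:\Temp folder, and reports any folder shared by more than one user.

```python
def temp_folder(env, hard_coded=None):
    """Return the temp folder an application writes to in one user session.

    A well-behaved application reads %TEMP% (falling back to %TMP%); a
    poorly written one ignores the environment and uses a fixed folder.
    """
    if hard_coded:
        return hard_coded
    return env.get("TEMP") or env.get("TMP")


def shared_temp_folders(sessions, hard_coded=None):
    """Map each temp folder used by two or more sessions to those users."""
    owners = {}
    for user, env in sessions.items():
        folder = temp_folder(env, hard_coded)
        if folder is None:
            continue  # no temp folder configured for this session
        # Windows paths are case-insensitive, so compare them in lowercase.
        owners.setdefault(folder.lower(), []).append(user)
    return {folder: users for folder, users in owners.items() if len(users) > 1}


# Hypothetical per-user environments on one Terminal Server.
sessions = {
    "alice": {"TEMP": r"C:\Profiles\alice\Temp"},
    "bob": {"TEMP": r"C:\Profiles\bob\Temp"},
}
print(shared_temp_folders(sessions))                         # {}
print(shared_temp_folders(sessions, hard_coded=r"C:\Temp"))  # {'c:\\temp': ['alice', 'bob']}
```

The second call shows why the problem only surfaces with two or more users: a single tester never collides with anyone.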

An application’s binary folder can also be a trouble spot, but you’ll normally discover this when testing with a single user since he or she should not have write access to the folder by default. Don’t let yourself fall into the trap of trying to add too many users too quickly. Always start out slowly when performing your initial testing. The more users you have testing, the more work required to ensure they are working properly and to provide any required user support.

Another potential problem area is the registry, where the software may place user-specific application information in HKEY_LOCAL_MACHINE instead of HKEY_CURRENT_USER. All users can then access the same information, causing problems in the application. Again, if access to the registry is being controlled, this problem will be uncovered during single-user testing. This issue is becoming less and less of a concern as most Windows-based applications have been certified to run on at least Windows 2000. In order to pass certification, certain minimum standards must be met. One of these standards is that user-specific information be maintained in the HKEY_CURRENT_USER registry hive and not in HKEY_LOCAL_MACHINE.
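If you capture the registry keys an application writes to during a normal user session (with whatever registry-monitoring tool you prefer), a short filter can flag user-specific settings that land in the shared machine hive. This Python sketch assumes the capture is a plain list of full key paths; the function names and example keys are hypothetical.

```python
MACHINE_PREFIXES = ("HKEY_LOCAL_MACHINE\\", "HKLM\\")
USER_PREFIXES = ("HKEY_CURRENT_USER\\", "HKCU\\")


def classify_key(key):
    """Return 'machine', 'user', or 'other' for a full registry key path."""
    upper = key.upper()
    if upper.startswith(MACHINE_PREFIXES):
        return "machine"  # shared by every user on the Terminal Server
    if upper.startswith(USER_PREFIXES):
        return "user"  # loaded separately from each user's profile
    return "other"


def machine_wide_writes(keys):
    """Return keys written during a user session that every user will share."""
    return [key for key in keys if classify_key(key) == "machine"]


writes = [
    r"HKEY_CURRENT_USER\Software\ExampleApp\Window",
    r"HKEY_LOCAL_MACHINE\SOFTWARE\ExampleApp\LastOpenedFile",
]
print(machine_wide_writes(writes))
```

Any key this returns, such as a "last opened file" setting, is a candidate for the conflicts described above and a specific question to put to the vendor.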

NOTE:

Unfortunately, many software manufacturers can provide only minimal (if any) support for their product when run on a Terminal Server. You’ll have much more success if you can describe the problem specifically for the manufacturer. For example, if a software package insists on writing a temporary file into a specific location and this is causing conflicts on Terminal Server, ask the vendor if there’s a way to modify the location of this file. The vendor will find this question much easier to answer than why the program won’t run on Terminal Server.

In a number of situations, I haven’t revealed to the support people that I’m attempting to use their product on Terminal Server. When I do, the response is usually, “Sorry, we don’t support our product on that platform,” even though the issue usually has to do with a user having more restrictive access and not with the host operating system itself.

Because of the increased possibility of problems when you first begin testing with multiple users, don’t add too many users during initial testing. “Too many” usually means more than five. With five or fewer users, ideally located in the same geographic area, the support responsibilities are manageable by a single support person. More than five, and your testing group may become much more difficult to support.

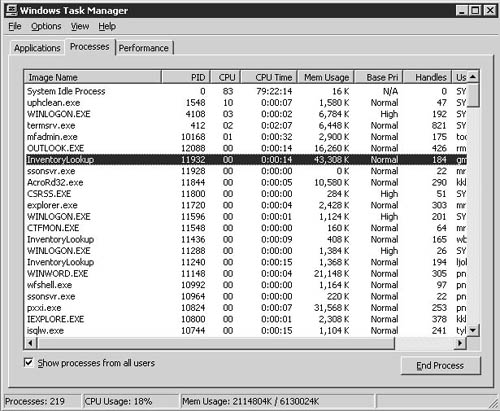

After the application has gone through multiuser testing successfully, the only outstanding task is to examine the load that the software will introduce to the Terminal Server. This can actually be started during single-user testing because severe performance problems may be noticed immediately. Performance Monitor is one tool that can be used to capture performance data for a specific application as well as the server. Even using only Task Manager, you can get an idea of the typical processor and memory utilization of an application. See Figure 7.4.
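Performance Monitor can log counters to a comma-separated file, and a short script can reduce such a log to average and peak values per counter. This Python sketch assumes a CSV layout with a timestamp in the first column and one counter per remaining column, and treats blank cells as missing samples; the function name is hypothetical, so check the layout against your actual logs before relying on it.

```python
import csv
import io


def summarize_counters(csv_text):
    """Return {counter name: (average, peak)} from a CSV performance log."""
    reader = csv.reader(io.StringIO(csv_text))
    counters = next(reader)[1:]  # skip the timestamp column header
    samples = {name: [] for name in counters}
    for row in reader:
        for name, cell in zip(counters, row[1:]):
            cell = cell.strip()
            if cell:  # blank cells mean no sample was collected
                samples[name].append(float(cell))
    return {
        name: (sum(values) / len(values), max(values))
        for name, values in samples.items()
        if values
    }
```

Running it over logs captured during single-user and multiuser testing (for example, `summarize_counters(open("app_test.csv", newline="").read())`, with a hypothetical file name) lets you compare how the application's processor and memory load grows as users are added.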

As part of the development of your change-management procedures, document the process that application developers and others need to follow if they want to have a program implemented within the Terminal Server environment. Regardless of which release procedures exist today, you need to establish a guideline that everyone will have to follow. Otherwise you run the risk of allowing releases to be scheduled before they’ve been properly tested. Figure 7.5 describes the release management process that I commonly implement.

A key requirement is insisting that the development team clearly document exactly how the application should be installed. You shouldn’t necessarily expect the documentation to explain how to deploy the application onto Terminal Server, but it should explain how the development team would normally install it on a desktop. You can then review this procedure and make any necessary adjustments to accommodate your Terminal Server requirements.