8.1 ![]() . (In fact, we always get doubles with probability

. (In fact, we always get doubles with probability ![]() when at least one of the dice is fair.) Any two faces whose sum is 7 have the same probability in distribution Pr1, so S = 7 has the same probability as doubles.

when at least one of the dice is fair.) Any two faces whose sum is 7 have the same probability in distribution Pr1, so S = 7 has the same probability as doubles.

8.2 There are 12 ways to specify the top and bottom cards and 50! ways to arrange the others; so the probability is 12.50!/52! = 12/(51.52) = ![]() .

.

8.3 ![]() (3 + 2 + · · · + 9 + 2) = 4.8;

(3 + 2 + · · · + 9 + 2) = 4.8; ![]() (32 + 22 + · · · + 92 + 22 – 10(4.8)2) =

(32 + 22 + · · · + 92 + 22 – 10(4.8)2) = ![]() , which is approximately 8.6. The true mean and variance with a fair coin are 6 and 22, so Stanford had an unusually heads-up class. The corresponding Princeton figures are 6.4 and

, which is approximately 8.6. The true mean and variance with a fair coin are 6 and 22, so Stanford had an unusually heads-up class. The corresponding Princeton figures are 6.4 and ![]() . 12.5 (This distribution has κ4 = 2974, which is rather large. Hence the standard deviation of this variance estimate when n = 10 is also rather large,

. 12.5 (This distribution has κ4 = 2974, which is rather large. Hence the standard deviation of this variance estimate when n = 10 is also rather large, ![]() 20.1 according to exercise 54. One cannot complain that the students cheated.)

20.1 according to exercise 54. One cannot complain that the students cheated.)

8.4 This follows from (8.38) and (8.39), because F(z) = G(z)H(z). (A similar formula holds for all the cumulants, even though F(z) and G(z) may have negative coefficients.)

8.5 Replace H by p and T by q = 1 – p. If SA = SB =![]() we have p2qN =

we have p2qN = ![]() and pq2N =

and pq2N = ![]() q +

q + ![]() ; the solution is p = 1/φ2, q = 1/φ.

; the solution is p = 1/φ2, q = 1/φ.

8.6 In this case X|y has the same distribution as X, for all y, hence E(X|Y) = EX is constant and V(E(X|Y)) = 0. Also V(X|Y) is constant and equal to its expected value.

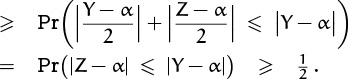

8.7 We have ![]() by Chebyshev’s monotonic inequality of Chapter 2.

by Chebyshev’s monotonic inequality of Chapter 2.

8.8 Let p = Pr(ω ![]() A ∩ B), q = Pr(ω ∉A), and r = Pr(ω ∉B). Then p + q + r = 1, and the identity to be proved is p = (p + r)(p + q) – qr.

A ∩ B), q = Pr(ω ∉A), and r = Pr(ω ∉B). Then p + q + r = 1, and the identity to be proved is p = (p + r)(p + q) – qr.

8.9 This is true (subject to the obvious proviso that F and G are defined on the respective ranges of X and Y), because

8.10 Two. Let x1 < x2 be distinct median elements; then 1 ≤ Pr(X ≤ x1) + Pr(X ≥ x2) ≤ 1, hence equality holds. (Some discrete distributions have no median elements. For example, let Ω be the set of all fractions of the form ±1/n, with Pr(+1/n) = Pr(–1/n)= ![]() .)

.)

8.11 For example, let K = k with probability 4/(k + 1)(k + 2)(k + 3), for all integers k ≥ 0. Then EK = 1, but E(K2) = ∞. (Similarly we can construct random variables with finite cumulants through κm but with κm+1 = ∞.)

8.12 (a) Let pk = Pr(X = k). If 0 < x ≤ 1, we have Pr(X ≤ r) = ∑k≤rpk ≤ ∑k≤r xk–rpk ≤ ∑k xk–rpk = x–rP(x). The other inequality has a similar proof. (b) Let x = α/(1–α) to minimize the right-hand side. (A more precise estimate for the given sum is obtained in exercise 9.42.)

8.13 (Solution by Boris Pittel.) Let us set Y = (X1 + · · · + Xn)/n and Z = (Xn+1 + · · · + X2n)/n. Then

The last inequality is, in fact, ‘>’ in any discrete probability distribution, because Pr(Y = Z) > 0.

8.14 Mean(H) = p Mean(F) + q Mean(G); Var(H) = p Var(F) + q Var(G) + pq (Mean(F)–Mean(G))2. (A mixture is actually a special case of conditional probabilities: Let Y be the coin, let X|H be generated by F(z), and let X|T be generated by G(z). Then VX = EV(X|Y) + VE(X|Y), where EV(X|Y) = pV(X|H) + qV(X|T) and VE(X|Y) is the variance of pzMean(F)+ qzMean(G).)

8.15 By the chain rule, H′(z) = G′(z)F′ (G(z)); H″(z) = G″(z)F′ (G(z)) + G′(z)2F′′ (G(z)). Hence

Mean(H) = Mean(F) Mean(G);

Var(H) = Var(F) Mean(G)2 + Mean(F) Var(G).

(The random variable corresponding to probability distribution H can be understood as follows: Determine a nonnegative integer n by distribution F; then add the values of n independent random variables that have distribution G. The identity for variance in this exercise is a special case of (8.106), when X has distribution H and Y has distribution F.)

8.16 ew(z–1)/(1 – w).

8.17 Pr(Yn,p ≤ m) = Pr(Yn,p + n ≤ m + n) = probability that we need ≤ m + n tosses to obtain n heads = probability that m + n tosses yield ≥ n heads = Pr(Xm+n,p ≥ n). Thus

and this is (5.19) with n = r, x = q, y = p.

8.18 (a) GX(z) = eμ(z–1). (b) The mth cumulant is μ, for all m ≥ 1. (The case μ = 1 is called F∞ in (8.55).)

8.19 (a) GX1+X2(z) = GX1(z)GX2(z) = e(μ1+μ2)(z–1). Hence the probability is e–μ1–μ2 (μ1 + μ2)n/n!; the sum of independent Poisson variables is Poisson. (b) In general, if KmX denotes the mth cumulant of a random variable X, we have Km(aX1 + bX2) = am(KmX1) + bm(KmX2), when a, b ≥ 0. Hence the answer is 2mμ1 + 3mμ2.

8.20 The general pgf will be G(z) = zm/F(z), where

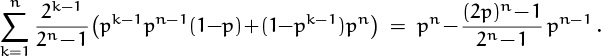

8.21 This is ∑n≥0 qn, where qn is the probability that the game between Alice and Bill is still incomplete after n flips. Let pn be the probability that the game ends at the nth flip; then pn + qn = qn–1. Hence the average time to play the game is ∑n≥1npn = (q0 – q1) + 2(q1 – q2) + 3(q2 – q3) + · · · = q0 + q1 + q2 + · · · = N, since limn→∞ nqn = 0.

Another way to establish this answer is to replace H and T by ![]() z. Then the derivative of the first equation in (8.78) tells us that

z. Then the derivative of the first equation in (8.78) tells us that ![]() .

.

By the way, ![]() .

.

8.22 By definition we have V(X|Y) = E(X2|Y)–(E(X|Y))2) and V(E(X|Y)) = E((E(X|Y))2)–(E(E(X|Y)))2; hence E(V(X|Y))+ V(E(X|Y))= E(E(X2|Y))–(E(E(X|Y)))2. But E(E(X|Y))= ∑y Pr(Y = y)E(X|y) = ∑x,y Pr(Y = y) × Pr((X|y) = x)x = EX and E(E(X2|Y)) = E(X2), so the result is just VX.

8.23 Let ![]() and

and ![]() ; and let Ω2 be the other 16 elements of Ω. Then

; and let Ω2 be the other 16 elements of Ω. Then ![]() according as ω

according as ω ![]() Ω0, Ω1, Ω2. The events A must therefore be chosen with kj elements from Ωj, where (k0, k1, k2) is one of the following: (0, 0, 0), (0, 2, 7), (0, 4, 14), (1, 4, 4), (1, 6, 11), (2, 6, 1), (2, 8, 8), (2, 10, 15), (3, 10, 5), (3, 12, 12), (4, 12, 2), (4, 14, 9), (4, 16, 16). For example, there are

Ω0, Ω1, Ω2. The events A must therefore be chosen with kj elements from Ωj, where (k0, k1, k2) is one of the following: (0, 0, 0), (0, 2, 7), (0, 4, 14), (1, 4, 4), (1, 6, 11), (2, 6, 1), (2, 8, 8), (2, 10, 15), (3, 10, 5), (3, 12, 12), (4, 12, 2), (4, 14, 9), (4, 16, 16). For example, there are ![]() events of type (2, 6, 1). The total number of such events is [z0](1 + z20)4(1 + z–7)16(1 + z2)16, which turns out to be 1304872090. If we restrict ourselves to events that depend on S only, we get 40 solutions S

events of type (2, 6, 1). The total number of such events is [z0](1 + z20)4(1 + z–7)16(1 + z2)16, which turns out to be 1304872090. If we restrict ourselves to events that depend on S only, we get 40 solutions S ![]() A, where

A, where ![]() , and the complements of these sets. (Here the notation ‘

, and the complements of these sets. (Here the notation ‘![]() ’ means either 2 or 12 but not both.)

’ means either 2 or 12 but not both.)

8.24 (a) Any one of the dice ends up in J’s possession with probability ![]() ; hence

; hence ![]() . Let

. Let ![]() . Then the pgf for J’s total holdings is (q + pz)2n+1, with mean (2n + 1)p and variance (2n + 1)pq, by (8.61). (b)

. Then the pgf for J’s total holdings is (q + pz)2n+1, with mean (2n + 1)p and variance (2n + 1)pq, by (8.61). (b) ![]() .

.

8.25 The pgf for the current stake after n rolls is Gn(z), where

(The noninteger exponents cause no trouble.) It follows that Mean(Gn) = Mean(Gn–1), and ![]() . So the mean is always A, but the variance grows to

. So the mean is always A, but the variance grows to ![]() .

.

This problem can perhaps be solved more easily without generating functions than with them.

8.26 The pgf Fl,n(z) satisfies ![]() ; hence

; hence ![]() and

and ![]() ; the variance is easily computed. (In fact, we have

; the variance is easily computed. (In fact, we have

which approaches a Poisson distribution with mean 1/l as n → ∞.)

8.27 (n2Σ3 – 3nΣ2Σ1 + 2Σ![]() )/n(n – 1)(n – 2) has the desired mean, where

)/n(n – 1)(n – 2) has the desired mean, where ![]() . This follows from the identities

. This follows from the identities

| EΣ3 = | nμ3; |

| E(Σ2Σ1) = | nμ3 + n(n – 1)μ2μ1; |

| E(Σ |

nμ3 + 3n(n – 1)μ2μ1 + n(n – 1)(n – 2)μ |

Incidentally, the third cumulant is κ3 = E((X–EX)3), but the fourth cumulant does not have such a simple expression; we have κ4 = E((X – EX)4)– 3(VX)2.

8.28 (The exercise implicitly calls for p = q =![]() , but the general answer is given here for completeness.) Replace

, but the general answer is given here for completeness.) Replace H by pz and T by qz, getting SA(z) = p2qz3/(1 – pz)(1 – qz)(1 – pqz2) and SB(z) = pq2z3/(1 – qz)(1 – pqz2). The pgf for the conditional probability that Alice wins at the nth flip, given that she wins the game, is

This is a product of pseudo-pgf’s, whose mean is 3+p/q+q/p+2pq/(1–pq). The formulas for Bill are the same but without the factor q/(1 – pz), so Bill’s mean is 3 + q/p + 2pq/(1 – pq). When p = q =![]() , the answer in case (a) is

, the answer in case (a) is ![]() ; in case (b) it is

; in case (b) it is ![]() . Bill wins only half as often, but when he does win he tends to win sooner. The overall average number of flips is

. Bill wins only half as often, but when he does win he tends to win sooner. The overall average number of flips is ![]() , agreeing with exercise 21. The solitaire game for each pattern has a waiting time of 8.

, agreeing with exercise 21. The solitaire game for each pattern has a waiting time of 8.

8.29 Set H = T = ![]() in

in

1 + N(H + T) = N + SA + SB + SC

N HHTH = SA(HTH + 1) + SB(HTH + TH) + SC(HTH + TH)

N HTHH = SA(THH + H) + SB(THH + 1) + SC(THH)

N THHH = SA(HH) + SB(H) + SC

to get the winning probabilities. In general we will have SA + SB + SC = 1 and

SA(A:A) + SB(B:A) + SC(C:A) = SA(A:B) + SB(B:B) + SC(C:B)

= SA(A:C) + SB(B:C) + SC(C:C).

In particular, the equations 9SA + 3SB + 3SC = 5SA + 9SB + SC = 2SA + 4SB + 8SC imply that ![]() ,

, ![]() ,

, ![]() .

.

8.30 The variance of P(h1, . . . , hn; k)|k is the variance of the shifted binomial distribution ((m – 1 + z)/m)k–1z, which is ![]() by (8.61). Hence the average of the variance is Mean(S)(m – 1)/m2. The variance of the average is the variance of (k – 1)/m, namely Var(S)/m2. According to (8.106), the sum of these two quantities should be VP, and it is. Indeed, we have just replayed the derivation of (8.96) in slight disguise. (See exercise 15.)

by (8.61). Hence the average of the variance is Mean(S)(m – 1)/m2. The variance of the average is the variance of (k – 1)/m, namely Var(S)/m2. According to (8.106), the sum of these two quantities should be VP, and it is. Indeed, we have just replayed the derivation of (8.96) in slight disguise. (See exercise 15.)

8.31 (a) A brute force solution would set up five equations in five unknowns:

A = 1; B = ![]() zA +

zA + ![]() zC; C =

zC; C = ![]() zB +

zB + ![]() zD;

zD;

D = ![]() zC +

zC + ![]() zE; E =

zE; E = ![]() zD +

zD + ![]() zA.

zA.

But positions C and D are equidistant from the goal, as are B and E, so we can set C = D and B = E in these generating functions for the probabilities. Only two equations now remain to be solved:

B = ![]() z +

z + ![]() zC; C =

zC; C = ![]() zB +

zB + ![]() zC.

zC.

Hence C = z2/(4 – 2z – z2); we have Mean(C) = 6 and Var(C) = 22. (Rings a bell? In fact, this problem is equivalent to flipping a fair coin until getting heads twice in a row: Heads means “advance toward the apple” and tails means “start over.”) (b) Chebyshev’s inequality says that Pr(C ≥ 100) = Pr((C – 6)2 ≥ 942) ≤ 22/942 ≈ .0025. (c) The second tail inequality says that Pr(C ≥ 100) ≤ 1/(x98(4 – 2x – x2)) for all x ≥ 1, and we get the upper bound 0.00000005 when ![]() . (The actual probability is ∑n≥100 Fn–1/2n = F101/299 ≈ 0.0000000009, according to exercise 37.)

. (The actual probability is ∑n≥100 Fn–1/2n = F101/299 ≈ 0.0000000009, according to exercise 37.)

8.32 By symmetry, we can reduce each month’s situation to one of four possibilities:

D, the states are diagonally opposite;

A, the states are adjacent and not Kansas;

K, the states are Kansas and one other;

S, the states are the same.

“Toto, I’ve a feeling we’re not in Kansas anymore.”

—Dorothy

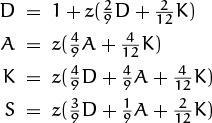

Considering the Markovian transitions, we get four equations

whose sum is D + K + A + S = 1 + z(D + A + K). The solution is

but the simplest way to find the mean and variance may be to write z = 1+w and expand in powers of w, ignoring multiples of w2:

Now ![]() , and

, and ![]() . The mean is

. The mean is ![]() and the variance is

and the variance is ![]() . (Is there a simpler way?)

. (Is there a simpler way?)

8.33 First answer: Clearly yes, because the hash values h1, . . . , hn are independent. Second answer: Certainly no, even though the hash values h1, . . . , hn are independent. We have Pr(Xj = 0) = ![]() sk [j ≠ k](m–1)/m = (1 – sj)(m – 1)/m, but Pr(X1 = X2 = 0) =

sk [j ≠ k](m–1)/m = (1 – sj)(m – 1)/m, but Pr(X1 = X2 = 0) = ![]() sk[k > 2](m – 1)2/m2 = (1 – s1 – s2)(m – 1)2/m2 ≠ Pr(X1 = 0) Pr(X2 = 0).

sk[k > 2](m – 1)2/m2 = (1 – s1 – s2)(m – 1)2/m2 ≠ Pr(X1 = 0) Pr(X2 = 0).

8.34 Let [zn] Sm(z) be the probability that Gina has advanced < m steps after taking n turns. Then Sm(1) is her average score on a par-m hole; [zm] Sm(z) is the probability that she loses such a hole against a steady player; and 1 – [zm–1] Sm(z) is the probability that she wins it. We have the recurrence

S0(z) = 0;

Sm(z) = 1 + pzSm–2(z) + qzSm–1(z)/(1 – rz), for m > 0.

To solve part (a), it suffices to compute the coefficients for m, n ≤ 4; it is convenient to replace z by 100w so that the computations involve nothing but integers. We obtain the following tableau of coefficients:

| S0 | 0 | 0 | 0 | 0 | 0 |

| S1 | 1 | 4 | 16 | 64 | 256 |

| S2 | 1 | 95 | 744 | 4432 | 23552 |

| S3 | 1 | 100 | 9065 | 104044 | 819808 |

| S4 | 1 | 100 | 9975 | 868535 | 12964304 |

Therefore Gina wins with probability 1 – .868535 = .131465; she loses with probability .12964304. (b) To find the mean number of strokes, we compute

(Incidentally, S5(1) ≈ 4.9995; she wins with respect to both holes and strokes on a par-5 hole, but loses either way when par is 3.)

8.35 The condition will be true for all n if and only if it is true for n = 1, by the Chinese remainder theorem. One necessary and sufficient condition is the polynomial identity

(p2+p4+p6 + (p1+p3+p5)w)(p3+p6 + (p1+p4)z + (p2+p5)z2) = (p1wz + p2z2 + p3w + p4z + p5wz2 + p6),

but that just more-or-less restates the problem. A simpler characterization is

| (p2 + p4 + p6)(p3 + p6) = p6, | (p1 + p3 + p5)(p2 + p5) = p5, |

which checks only two of the coefficients in the former product. The general solution has three degrees of freedom: Let a0 + a1 = b0 + b1 + b2 = 1, and put p1 = a1b1, p2 = a0b2, p3 = a1b0, p4 = a0b1, p5 = a1b2, p6 = a0b0.

8.36 (a) ![]() (b) If the kth die has faces with s1, . . . , s6 spots, let pk(z) = zs1 + · · · + zs6. We want to find such polynomials with p1(z) . . . pn(z) = (z + z2 + z3 + z4 + z5 + z6)n. The irreducible factors of this polynomial with rational coefficients are zn(z + 1)n × (z2 + z + 1)n(z2 – z + 1)n; hence pk(z) must be of the form zak (z + 1)bk × (z2 + z + 1)ck (z2 – z + 1)dk. We must have ak ≥ 1, since pk(0) = 0; and in fact ak = 1, since a1 + · · · + an = n. Furthermore the condition pk(1) = 6 implies that bk = ck = 1. It is now easy to see that 0 ≤ dk ≤ 2, since dk > 2 gives negative coefficients. When d = 0 and d = 2, we get the two dice in part (a); therefore the only solutions have k pairs of dice as in (a), plus n – 2k ordinary dice, for some

(b) If the kth die has faces with s1, . . . , s6 spots, let pk(z) = zs1 + · · · + zs6. We want to find such polynomials with p1(z) . . . pn(z) = (z + z2 + z3 + z4 + z5 + z6)n. The irreducible factors of this polynomial with rational coefficients are zn(z + 1)n × (z2 + z + 1)n(z2 – z + 1)n; hence pk(z) must be of the form zak (z + 1)bk × (z2 + z + 1)ck (z2 – z + 1)dk. We must have ak ≥ 1, since pk(0) = 0; and in fact ak = 1, since a1 + · · · + an = n. Furthermore the condition pk(1) = 6 implies that bk = ck = 1. It is now easy to see that 0 ≤ dk ≤ 2, since dk > 2 gives negative coefficients. When d = 0 and d = 2, we get the two dice in part (a); therefore the only solutions have k pairs of dice as in (a), plus n – 2k ordinary dice, for some ![]() .

.

8.37 The number of coin-toss sequences of length n is Fn–1, for all n > 0, because of the relation between domino tilings and coin flips. Therefore the probability that exactly n tosses are needed is Fn–1/2n, when the coin is fair. Also qn = Fn+1/2n–1, since ∑k≥n Fkzk = (Fnzn + Fn–1zn+1)/(1 – z – z2). (A systematic solution via generating functions is, of course, also possible.)

8.38 When k faces have been seen, the task of rolling a new one is equivalent to flipping coins with success probability pk = (m – k)/m. Hence the pgf is ![]() . The mean is

. The mean is ![]() ; the variance is

; the variance is ![]() ; and equation (7.47) provides a closed form for the requested probability, namely

; and equation (7.47) provides a closed form for the requested probability, namely ![]() . (The problem discussed in this exercise is traditionally called “coupon collecting.”)

. (The problem discussed in this exercise is traditionally called “coupon collecting.”)

8.39 E(X) = P(–1); V(X) = P(–2) – P(–1)2; E(ln X) = –P′(0).

8.40 (a) We have ![]() , by (7.49). Incidentally, the third cumulant is npq(q–p) and the fourth is npq(1–6pq). The identity q+pet = (p+qe–t)et shows that fm(p) = (–1)mfm(q)+[m = 1]; hence we can write fm(p) = gm(pq)(q – p)[m odd], where gm is a polynomial of degree

, by (7.49). Incidentally, the third cumulant is npq(q–p) and the fourth is npq(1–6pq). The identity q+pet = (p+qe–t)et shows that fm(p) = (–1)mfm(q)+[m = 1]; hence we can write fm(p) = gm(pq)(q – p)[m odd], where gm is a polynomial of degree ![]() m/2

m/2![]() , whenever m > 1. (b) Let p =

, whenever m > 1. (b) Let p = ![]() and F(t) = ln(

and F(t) = ln( ![]() +

+ ![]() et). Then ∑m≥1 κmtm –1/(m–1)! = F′(t) = 1–1/(et+1), and we can use exercise 6.23.

et). Then ∑m≥1 κmtm –1/(m–1)! = F′(t) = 1–1/(et+1), and we can use exercise 6.23.

8.41 If G(z) is the pgf for a random variable X that assumes only positive integer values, then ![]() G(z) dz/z = Pk–1 Pr(X = k)/k = E(X–1). If X is the distribution of the number of flips to obtain n + 1 heads, we have G(z) = (pz/(1 – qz))n+1 by (8.59), and the integral is

G(z) dz/z = Pk–1 Pr(X = k)/k = E(X–1). If X is the distribution of the number of flips to obtain n + 1 heads, we have G(z) = (pz/(1 – qz))n+1 by (8.59), and the integral is

if we substitute w = pz/(1 – qz). When p = q the integrand can be written (–1)n((1+w)–1–1+w–w2+· · ·+(–1)nwn–1), so the integral is ![]() . We have

. We have ![]() by (9.28), and it follows that

by (9.28), and it follows that ![]() .

.

8.42 Let Fn(z) and Gn(z) be pgf’s for the number of employed evenings, if the man is initially unemployed or employed, respectively. Let qh = 1 – ph and qf = 1 – pf. Then F0(z) = G0(z) = 1, and

Fn(z) = phzGn–1(z) + qhFn–1(z);

Gn(z) = pfFn–1(z) + qfzGn–1(z).

The solution is given by the super generating function

where B(w) = w(qf –(qf –ph)w)/(1–qhw) and A(w) =(1–B(w))/(1–w).

Now ∑n≥0![]() (1)wn = αw/(1 – w)2 + β/(1 – w) – β/1 – (qf – ph)w) where

(1)wn = αw/(1 – w)2 + β/(1 – w) – β/1 – (qf – ph)w) where

hence ![]() (1) = αn + β(1 – (qf – ph)n). (Similarly

(1) = αn + β(1 – (qf – ph)n). (Similarly ![]() (1) = α2n2 + O(n), so the variance is O(n).)

(1) = α2n2 + O(n), so the variance is O(n).)

8.43 Gn(z) = ∑k≥0 ![]() zk/n! = z

zk/n! = z![]() /n!, by (6.11). This is a product of binomial pgf’s,

/n!, by (6.11). This is a product of binomial pgf’s, ![]() , where the kth has mean 1/k and variance (k – 1)/k2; hence Mean(Gn) = Hn and Var (Gn) = Hn –

, where the kth has mean 1/k and variance (k – 1)/k2; hence Mean(Gn) = Hn and Var (Gn) = Hn – ![]() .

.

8.44 (a) The champion must be undefeated in n rounds, so the answer is pn. (b,c) Players x1, . . . , x2k must be “seeded” (by chance) in distinct subtournaments and they must win all 2k(n – k) of their matches. The 2n leaves of the tournament tree can be filled in 2n! ways; to seed it we have 2k!(2n–k)2k ways to place the top 2k players, and (2n – 2k)! ways to place the others. Hence the probability is (2p)2k(n–k)/![]() . When k = 1 this simplifies to (2p2)n–1/(2n – 1). (d) Each tournament outcome corresponds to a permutation of the players: Let y1 be the champ; let y2 be the other finalist; let y3 and y4 be the players who lost to y1 and y2 in the semifinals; let (y5, . . . , y8) be those who lost respectively to (y1, . . . , y4) in the quarterfinals; etc. (Another proof shows that the first round has 2n!/2n–1! essentially different outcomes; the second round has 2n–1!/2n–2!; and so on.) (e) Let Sk be the set of 2k–1 potential opponents of x2 in the kth round. The conditional probability that x2 wins, given that x1 belongs to Sk, is

. When k = 1 this simplifies to (2p2)n–1/(2n – 1). (d) Each tournament outcome corresponds to a permutation of the players: Let y1 be the champ; let y2 be the other finalist; let y3 and y4 be the players who lost to y1 and y2 in the semifinals; let (y5, . . . , y8) be those who lost respectively to (y1, . . . , y4) in the quarterfinals; etc. (Another proof shows that the first round has 2n!/2n–1! essentially different outcomes; the second round has 2n–1!/2n–2!; and so on.) (e) Let Sk be the set of 2k–1 potential opponents of x2 in the kth round. The conditional probability that x2 wins, given that x1 belongs to Sk, is

Pr(x1 plays x2)·pn–1(1 – p) + Pr(x1 doesn’t play x2)·pn

= pk–1pn–1(1 – p) + (1 – pk–1)pn.

The chance that x1 ![]() Sk is 2k–1/(2n – 1); summing on k gives the answer:

Sk is 2k–1/(2n – 1); summing on k gives the answer:

(f) Each of the 2n! tournament outcomes has a certain probability of occurring, and the probability that xj wins is the sum of these probabilities over all (2n – 1)! tournament outcomes in which xj is champion. Consider interchanging xj with xj+1 in all those outcomes; this change doesn’t affect the probability if xj and xj+1 never meet, but it multiplies the probability by (1 – p)/p < 1 if they do meet.

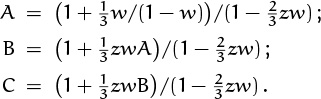

8.45 (a) A(z) = 1/(3 – 2z); B(z) = zA(z)2; C(z) = z2A(z)3. The pgf for sherry when it’s bottled is z3A(z)3, which is z3 times a negative binomial distribution with parameters n = 3, p = ![]() . (b) Mean(A) = 2, Var(A) = 6; Mean(B) = 5, Var(B) = 2 Var(A) = 12; Mean(C) = 8, Var(C) = 18. The sherry is nine years old, on the average. The fraction that’s 25 years old is

. (b) Mean(A) = 2, Var(A) = 6; Mean(B) = 5, Var(B) = 2 Var(A) = 12; Mean(C) = 8, Var(C) = 18. The sherry is nine years old, on the average. The fraction that’s 25 years old is ![]() . (c) Let the coefficient of wn be the pgf for the beginning of year n. Then

. (c) Let the coefficient of wn be the pgf for the beginning of year n. Then

Differentiate with respect to z and set z = 1; this makes

The average age of bottled sherry n years after the process started is 1 greater than the coefficient of wn–1, namely 9–(![]() )n(3n2+21n+72)/8. (This already exceeds 8 when n = 11.)

)n(3n2+21n+72)/8. (This already exceeds 8 when n = 11.)

8.46 (a) P(w, z) = 1 + ![]() (wP(w, z) + zP(w, z)) = (1 –

(wP(w, z) + zP(w, z)) = (1 – ![]() (w + z))–1, hence pm,n = 2–m–n

(w + z))–1, hence pm,n = 2–m–n![]() . (b) Pk(w, z) =

. (b) Pk(w, z) = ![]() (wk + zk)P(w, z); hence

(wk + zk)P(w, z); hence

(c) ![]() ; this can be summed using (5.20):

; this can be summed using (5.20):

(The methods of Chapter 9 show that this is ![]() – 1 + O(n–1/2).

– 1 + O(n–1/2).

8.47 After n irradiations there are n + 2 equally likely receptors. Let the random variable Xn denote the number of diphages present; then Xn+1 = Xn + Yn, where Yn = –1 if the (n + 1)st particle hits a diphage receptor (conditional probability 2Xn/(n + 2)) and Yn = +2 otherwise. Hence

EXn+1 = EXn + EYn = EXn – 2EXn/(n+2) + 2(1 – 2EXn/(n+2)).

The recurrence (n+2)EXn+1 = (n–4)EXn+2n+4 can be solved if we multiply both sides by the summation factor (n + 1)5; or we can guess the answer and prove it by induction: EXn = (2n + 4)/7 for all n > 4. (Incidentally, there are always two diphages and one triphage after five steps, regardless of the configuration after four.)

8.48 (a) The distance between frisbees (measured so as to make it an even number) is either 0, 2, or 4 units, initially 4. The corresponding generating functions A, B, C (where, say, [zn] C is the probability of distance 4 after n throws) satisfy

A = ![]() zB, B =

zB, B = ![]() zB +

zB + ![]() zC, C = 1 +

zC, C = 1 + ![]() zB +

zB + ![]() zC.

zC.

It follows that A = z2/(16 – 20z + 5z2) = z2/F(z), and we have Mean(A) = 2 – Mean(F) = 12, Var(A) = – Var(F) = 100. (A more difficult but more amusing solution factors A as follows:

where ![]() , and p1 + q1 = p2 + q2 = 1. Thus, the game is equivalent to having two biased coins whose heads probabilities are p1 and p2; flip the coins one at a time until they have both come up heads, and the total number of flips will have the same distribution as the number of frisbee throws. The mean and variance of the waiting times for these two coins are respectively

, and p1 + q1 = p2 + q2 = 1. Thus, the game is equivalent to having two biased coins whose heads probabilities are p1 and p2; flip the coins one at a time until they have both come up heads, and the total number of flips will have the same distribution as the number of frisbee throws. The mean and variance of the waiting times for these two coins are respectively ![]() and

and ![]() , hence the total mean and variance are 12 and 100 as before.)

, hence the total mean and variance are 12 and 100 as before.)

(b) Expanding the generating function in partial fractions makes it possible to sum the probabilities. (Note that ![]() /(4ϕ) + ϕ2/4 = 1, so the answer can be stated in terms of powers of φ.) The game will last more than n steps with probability 5(n–1)/24–n(φn+2 – φ–n–2); when n is even this is 5n/24–nFn+2. So the answer is 5504–100F102 ≈ .00006.

/(4ϕ) + ϕ2/4 = 1, so the answer can be stated in terms of powers of φ.) The game will last more than n steps with probability 5(n–1)/24–n(φn+2 – φ–n–2); when n is even this is 5n/24–nFn+2. So the answer is 5504–100F102 ≈ .00006.

8.49 (a) If n > 0, PN(0, n) = ![]() [N = 0] +

[N = 0] + ![]() PN–1(0, n) +

PN–1(0, n) + ![]() PN–1(1, n–1); PN(m, 0) is similar; PN(0, 0) = [N = 0]. Hence

PN–1(1, n–1); PN(m, 0) is similar; PN(0, 0) = [N = 0]. Hence

gm,n = ![]() zgm–1,n+1 +

zgm–1,n+1 + ![]() zgm,n +

zgm,n + ![]() zgm+1,n–1;

zgm+1,n–1;

g0,n = ![]() +

+ ![]() zg0,n +

zg0,n + ![]() g1,n–1; etc.

g1,n–1; etc.

(b) ![]() ;

; ![]() ; etc. By induction on m, we have

; etc. By induction on m, we have ![]() for all m, n ≥ 0. And since

for all m, n ≥ 0. And since ![]() , we must have

, we must have ![]() . (c) The recurrence is satisfied when mn > 0, because

. (c) The recurrence is satisfied when mn > 0, because

this is a consequence of the identity sin(x – y) + sin(x + y) = 2 sin x cos y. So all that remains is to check the boundary conditions.

8.50 (a) Using the hint, we get

now look at the coefficient of z3+l. (b) ![]() . (c) Let

. (c) Let ![]() . One can show that (z–3+r)(z–3–r) = 4z, and hence that (r/(1 – z) + 2)2 = (13 – 5z + 4r)/(1 – z) = (9 – H(z))/(1 – H(z)).

. One can show that (z–3+r)(z–3–r) = 4z, and hence that (r/(1 – z) + 2)2 = (13 – 5z + 4r)/(1 – z) = (9 – H(z))/(1 – H(z)).

(d) Evaluating the first derivative at z = 1 shows that Mean(H) = 1. The second derivative diverges at z = 1, so the variance is infinite.

8.51 (a) Let Hn(z) be the pgf for your holdings after n rounds of play, with H0(z) = z. The distribution for n rounds is

Hn+1(z) = Hn(H(z)),

so the result is true by induction (using the amazing identity of the preceding problem). (b) gn = Hn(0) – Hn–1(0) = 4/n(n + 1)(n + 2) = 4(n – 1)–3. The mean is 2, and the variance is infinite. (c) The expected number of tickets you buy on the nth round is Mean(Hn) = 1, by exercise 15. So the total expected number of tickets is infinite. (Thus, you almost surely lose eventually, and you expect to lose after the second game, yet you also expect to buy an infinite number of tickets.) (d) Now the pgf after n games is Hn(z)2, and the method of part (b) yields a mean of 16 – ![]() π2 ≈ 2.8. (The sum ∑k≥11/k2 = π2/6 shows up here.)

π2 ≈ 2.8. (The sum ∑k≥11/k2 = π2/6 shows up here.)

8.52 If ω and ω′ are events with Pr(ω) > Pr(ω′), then a sequence of n independent experiments will encounter ω more often than ω′, with high probability, because ω will occur very nearly n Pr(ω) times. Consequently, as n → ∞, the probability approaches 1 that the median or mode of the values of X in a sequence of independent trials will be a median or mode of the random variable X.

8.53 We can disprove the statement, even in the special case that each variable is 0 or 1. Let p0 = Pr(X = Y = Z = 0), ![]() ,

, ![]() , where

, where ![]() . Then p0 + p1 + · · · + p7 = 1, and the variables are independent in pairs if and only if we have

. Then p0 + p1 + · · · + p7 = 1, and the variables are independent in pairs if and only if we have

(p4 + p5 + p6 + p7)(p2 + p3 + p6 + p7) = p6 + p7,

(p4 + p5 + p6 + p7)(p1 + p3 + p5 + p7) = p5 + p7,

(p2 + p3 + p6 + p7)(p1 + p3 + p5 + p7) = p3 + p7.

But Pr(X + Y = Z = 0) ≠ Pr(X + Y = 0) Pr(Z = 0) ![]() p0 ≠ (p0 + p1)(p0 + p2 + p4 + p6). One solution is

p0 ≠ (p0 + p1)(p0 + p2 + p4 + p6). One solution is

p0 = p3 = p5 = p6 = 1/4; p1 = p2 = p4 = p7 = 0.

This is equivalent to flipping two fair coins and letting X = (the first coin is heads), Y = (the second coin is heads), Z = (the coins differ). Another example, with all probabilities nonzero, is

p0 = 4/64, p1 = p2 = p4 = 5/64,

p3 = p5 = p6 = 10/64, p7 = 15/64.

For this reason we say that n variables X1, . . . , Xn are independent if

Pr(X1 = x1 and · · · and Xn = xn) = Pr(X1 = x1) . . . Pr(Xn = xn);

pairwise independence isn’t enough to guarantee this.

8.54 (See exercise 27 for notation.) We have

E(Σ![]() ) = nμ4 + n(n–1)μ

) = nμ4 + n(n–1)μ![]() ;

;

E(Σ2Σ![]() ) = nμ4 + 2n(n–1)μ3μ1 + n(n–1)μ

) = nμ4 + 2n(n–1)μ3μ1 + n(n–1)μ![]() + n(n–1)(n–2)μ2μ

+ n(n–1)(n–2)μ2μ![]() ;

;

E(Σ![]() ) = nμ4 + 4n(n–1)μ3μ1 + 3n(n–1)μ

) = nμ4 + 4n(n–1)μ3μ1 + 3n(n–1)μ![]()

+ 6n(n–1)(n–2)μ2μ![]() + n(n–1)(n–2)(n–3)μ

+ n(n–1)(n–2)(n–3)μ![]() ;

;

it follows that V(![]() X) = κ4/n + 2κ

X) = κ4/n + 2κ![]() /(n – 1).

/(n – 1).

8.55 There are ![]() permutations with X = Y, and

permutations with X = Y, and ![]() permutations with X ≠ Y. After the stated procedure, each permutation with X = Y occurs with probability

permutations with X ≠ Y. After the stated procedure, each permutation with X = Y occurs with probability ![]() , because we return to step S1 with probability

, because we return to step S1 with probability ![]() . Similarly, each permutation with X ≠ Y occurs with probability

. Similarly, each permutation with X ≠ Y occurs with probability ![]() . Choosing p =

. Choosing p = ![]() makes Pr(X = x and Y = y) =

makes Pr(X = x and Y = y) = ![]() for all x and y. (We could therefore make two flips of a fair coin and go back to S1 if both come up heads.)

for all x and y. (We could therefore make two flips of a fair coin and go back to S1 if both come up heads.)

8.56 If m is even, the frisbees always stay an odd distance apart and the game lasts forever. If m = 2l + 1, the relevant generating functions are

| Gm | = | ||

| A1 | = | ||

| Ak | = | for 1 < k < l, | |

| Al | = |

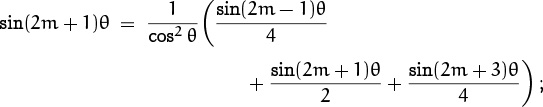

(The coefficient [zn] Ak is the probability that the distance between frisbees is 2k after n throws.) Taking a clue from the similar equations in exercise 49, we set z = 1/cos2 θ and A1 = X sin 2θ, where X is to be determined. It follows by induction (not using the equation for Al) that Ak = X sin 2kθ. Therefore we want to choose X such that

It turns out that X = 2 cos2 θ/sin θ cos(2l + 1)θ, hence

The denominator vanishes when θ is an odd multiple of π/(2m); thus 1–qkz is a root of the denominator for 1 ≤ k ≤ l, and the stated product representation must hold. To find the mean and variance we can write

Trigonometry wins again. Is there a connection with pitching pennies along the angles of the m-gon?

because tan2 θ = z – 1 and tan θ = θ + ![]() θ3 + · · ·. So we have Mean(Gm) =

θ3 + · · ·. So we have Mean(Gm) = ![]() (m2 –1) and Var(Gm) =

(m2 –1) and Var(Gm) = ![]() m2(m2 –1). (Note that this implies the identities

m2(m2 –1). (Note that this implies the identities

The third cumulant of this distribution is ![]() m2 (m2–1) (4m2 – 1); but the pattern of nice cumulant factorizations stops there. There’s a much simpler way to derive the mean: We have Gm + A1 + · · · + Al = z(A1 + · · · + Al) + 1, hence when z = 1 we have

m2 (m2–1) (4m2 – 1); but the pattern of nice cumulant factorizations stops there. There’s a much simpler way to derive the mean: We have Gm + A1 + · · · + Al = z(A1 + · · · + Al) + 1, hence when z = 1 we have ![]() . Since Gm = 1 when z = 1, an easy induction shows that Ak = 4k.)

. Since Gm = 1 when z = 1, an easy induction shows that Ak = 4k.)

8.57 We have A:A ≥ 2l–1 and B:B < 2l–1 + 2l–3 and B:A ≥ 2l–2, hence B:B – B:A ≥ A:A – A:B is possible only if A:B > 2l–3. This means that ![]() , τ1 = τ4, τ2 = τ5, . . . , τl–3 = τl. But then A:A ≈ 2l–1 + 2l–4 + · · ·, A:B ≈ 2l–3 +2l–6 +· · ·, B:A ≈ 2l–2 +2l–5 +· · ·, and B:B ≈ 2l–1 +2l–4 +· · ·; hence B:B – B:A is less than A:A – A:B after all. (Sharper results have been obtained by Guibas and Odlyzko [168], who show that Bill’s chances are always maximized with one of the two patterns

, τ1 = τ4, τ2 = τ5, . . . , τl–3 = τl. But then A:A ≈ 2l–1 + 2l–4 + · · ·, A:B ≈ 2l–3 +2l–6 +· · ·, B:A ≈ 2l–2 +2l–5 +· · ·, and B:B ≈ 2l–1 +2l–4 +· · ·; hence B:B – B:A is less than A:A – A:B after all. (Sharper results have been obtained by Guibas and Odlyzko [168], who show that Bill’s chances are always maximized with one of the two patterns Hτ1 . . . τl–1 or Tτ1 . . . τl–1. Bill’s winning strategy is, in fact, unique; see the following exercise.)

8.58 (Solution by J. Csirik.) If A is Hl or Tl, one of the two sequences matches A and cannot be used. Otherwise let  = τ1 . . . τl–1, H = HÂ, and T = T Â. It is not difficult to verify that H:A = T:A = Â:Â, H:H + T:T = 2l–1 + 2( Â:Â) + 1, and A:H + A:T = 1 + 2(A:A) – 2l. Therefore the equation

implies that both fractions equal

Then we can rearrange the original fractions to show that

where pq > 0 and p + q = gcd(2l–1 + 1, 2l – 1) = gcd(3, 2l – 1); so we may assume that l is even and that p = 1, q = 2. It follows that A:A – A:H = (2l – 1)/3 and A:A–A:T = (2l+1–2)/3, hence A:H–A:T = (2l – 1)/3 ≥ 2l–2. We have A:H ≥ 2l–2 if and only if A = (TH)l/2. But then H:H – H:A = A:A – A:H, so 2l–1 + 1 = 2l – 1 and l = 2.

(Csirik [69] goes on to show that, when l ≥ 4, Alice can do no better than to play HTl–3H2. But even with this strategy, Bill wins with probability nearly ![]() .)

.)

8.59 According to (8.82), we want B:B – B:A > A:A – A:B. One solution is A = TTHH, B = HHH.

8.60 (a) Two cases arise depending on whether hk ≠ hn or hk = hn:

(b) We can either argue algebraically, taking partial derivatives of G(w, z) with respect to w and z and setting w = z = 1; or we can argue combinatorially: Whatever the values of h1, . . . , hn–1, the expected value of P(h1, . . . , hn–1, hn; n) is the same (averaged over hn), because the hash sequence (h1, . . . , hn–1) determines a sequence of list sizes (n1, n2, . . . , nm) such that the stated expected value is ((n1+1)+(n2+1)+· · ·+(nm+1) /m (n – 1 + m)/m. Therefore the random variable EP(h1, . . . , hn; n) is independent of (h1, . . . , hn–1), hence independent of P(h1, . . . , hn; k).

8.61 If 1 ≤ k < l ≤ n, the previous exercise shows that the coefficient of sksl in the variance of the average is zero. Therefore we need only consider the coefficient of ![]() , which is

, which is

the variance of ((m – 1 + z)/m)k–1z; and this is (k – 1)(m – 1)/m2 as in exercise 30.

8.62 The pgf Dn(z) satisfies the recurrence

We can now derive the recurrence

which has the solution ![]() (n+2) (26n + 15) for all n ≥ 11 (regardless of initial conditions). Hence the variance comes to

(n+2) (26n + 15) for all n ≥ 11 (regardless of initial conditions). Hence the variance comes to ![]() (n + 2) for n ≥ 11.

(n + 2) for n ≥ 11.

8.63 (Another question asks if a given sequence of purported cumulants comes from any distribution whatever; for example, κ2 must be nonnegative, and ![]() must be at least

must be at least ![]() , etc. A necessary and sufficient condition for this other problem was found by Hamburger [6], [175].)

, etc. A necessary and sufficient condition for this other problem was found by Hamburger [6], [175].)

9.1 True if the functions are all positive. But otherwise we might have, say, f1(n) = n3 + n2, f2(n) = –n3, g1(n) = n4 + n, g2(n) = –n4.

9.2 (a) We have nlnn ≺ cn ≺ (ln n)n, since (ln n)2 ≺ n ln c ≺ n ln ln n.

(b) nlnlnlnn ≺ (ln n)! ≺ nlnlnn. (c) Take logarithms to show that (n!)! wins. (d) ![]() ; HFn ∼ n ln φ wins because φ2 = φ + 1 < e.

; HFn ∼ n ln φ wins because φ2 = φ + 1 < e.

9.3 Replacing kn by O(n) requires a different C for each k; but each O stands for a single C. In fact, the context of this O requires it to stand for a set of functions of two variables k and n. It would be correct to write ![]() .

.

9.4 For example, limn→∞ O(1/n) = 0. On the left, O(1/n) is the set of all functions f(n) such that there are constants C and n0 with |f(n)| ≤ C/n for all n ≥ n0. The limit of all functions in that set is 0, so the left-hand side is the singleton set {0}. On the right, there are no variables; 0 represents {0}, the (singleton) set of all “functions of no variables, whose value is zero.” (Can you see the inherent logic here? If not, come back to it next year; you probably can still manipulate O-notation even if you can’t shape your intuitions into rigorous formalisms.)

9.5 Let f(n) = n2 and g(n) = 1; then n is in the left set but not in the right, so the statement is false.

9.6 nln + γn + O(![]() lnn).

lnn).

9.7 (1 – e–1/n)–1 = nB0 – B1 + B2n–1/2! + · · · = n + ![]() + O(n–1).

+ O(n–1).

9.8 For example, let f(n) = ![]() n/2

n/2![]() !2 + n, g(n) = (

!2 + n, g(n) = (![]() n/2

n/2![]() – 1) !

– 1) ! ![]() n/2

n/2![]() ! + n. These functions, incidentally, satisfy f(n) = O (ng(n)) and g(n) = O) nf(n)); more extreme examples are clearly possible.

! + n. These functions, incidentally, satisfy f(n) = O (ng(n)) and g(n) = O) nf(n)); more extreme examples are clearly possible.

9.9 (For completeness, we assume that there is a side condition n → ∞, so that two constants are implied by each O.) Every function on the left has the form a(n) + b(n), where there exist constants m0, B, n0, C such that |a(n) |≤ B |f(n) |for n ≥ m0 and |b(n) |≤ C |g(n) |for n ≥ n0. Therefore the left-hand function is at most max(B, C) (|f(n)| +| g(n)|), for n ≥ max(m0, n0), so it is a member of the right side.

9.10 If g(x) belongs to the left, so that g(x) = cos y for some y, where |y| ≤ C|x| for some C, then 0 ≤ 1 – g(x) = 2 sin2(y/2) ≤ ![]() y2 ≤

y2 ≤ ![]() C2x2; hence the set on the left is contained in the set on the right, and the formula is true.

C2x2; hence the set on the left is contained in the set on the right, and the formula is true.

9.11 The proposition is true. For if, say, |x| ≤ |y|, we have (x + y)2 ≤ 4y2. Thus (x + y)2 = O(x2) + O(y2). Thus O(x + y)2 = O ((x + y)2) = O(O(x2) + O(y2)) = O(O(x2)) + O(O(y2)) = O(x2) + O(y2).

9.12 1 + 2/n + O(n–2) = (1 + 2/n) (1 + O(n–2)/(1 + 2/n)) by (9.26), and 1/(1 + 2/n) = O(1); now use (9.26).

9.13 nn(1 + 2n–1 + O(n–2))n = nn exp (n(2n–1 + O(n–2))) = e2nn + O(nn–1).

9.14 It is nn+β exp ((n + β) (α/n – ![]() α2/n2 + O(n–3))).

α2/n2 + O(n–3))).

(It’s interesting to compare this formula with the corresponding result for the middle binomial coefficient, exercise 9.60.)

9.15 ![]() , so the answer is

, so the answer is

9.16 If l is any integer in the range a ≤ l < b we have

Since l + x ≥ l + 1 – x when ![]() , this integral is positive when f(x) is nondecreasing.

, this integral is positive when f(x) is nondecreasing.

9.18 The text’s derivation for the case α = 1 generalizes to give

the answer is 22nα(πn)(1–α)/2α–1/2(1 + O(n–1/2+3![]() )).

)).

9.19 H10 = 2.928968254 ≈ 2.928968256; 10! = 3628800 ≈ 3628712.4; B10 = 0.075757576 ≈ 0.075757494; π(10) = 4 ≈ 10.0017845; e0.1 = 1.10517092 ≈ 1.10517083; ln 1.1 = 0.0953102 ≈ 0.0953083; 1.1111111 . . . ≈ 1.1111; 1.10.1 = 1.00957658 ≈ 1.00957643. (The approximation to π(n) gives more significant figures when n is larger; for example, π(109) = 50847534 ≈ 50840742.)

9.20 (a) Yes; the left side is o(n) while the right side is equivalent to O(n). (b) Yes; the left side is e · eO(1/n). (c) No; the left side is about ![]() times the bound on the right.

times the bound on the right.

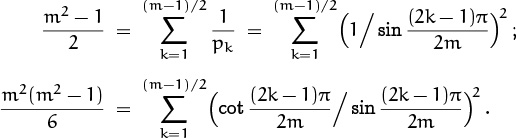

9.21 We have Pn = p = n (ln p – 1 – 1/ln p + O(1/log n)2), where

It follows that

(A slightly better approximation replaces this O(1/log n)2 by the quantity –5.5/(ln n)2 + O(log log n/log n)3; then we estimate P1000000 ≈ 15480992.8.)

What does a drowning analytic number theorist say?

log log log log . . .

9.22 Replace O(n–2k) by – ![]() n–2k + O(n–4k) in the expansion of Hnk ; this replaces O(∑3(n2)) by

n–2k + O(n–4k) in the expansion of Hnk ; this replaces O(∑3(n2)) by ![]() in (9.53). We have

in (9.53). We have

hence the term O(n–2) in (9.54) can be replaced by – ![]() n–2 + O(n–3).

n–2 + O(n–3).

9.23 nhn = ∑0≤k<n hk/(n–k)+2cHn/(n+1)(n+2). Choose c = eπ2/6 = ∑k≥0 gk so that ∑k≥0 hk = 0 and hn = O(log n)/n3. The expansion of ∑0≤k<n hk/(n – k) as in (9.60) now yields nhn = 2cHn/(n + 1)(n + 2) + O(n–2), hence

9.24 (a) If ∑k≥0|f(k)| < ∞ and if f(n – k) = O(f(n)) when 0 ≤ k ≤ n/2, we have

which is 2O(f(n) ∑k≥0|f(k)|), so this case is proved. (b) But in this case if an = bn = α–n, the convolution (n + 1)α–n is not O(α–n).

9.25 ![]() . We may restrict the range of summation to 0 ≤ k ≤ (log n)2, say. In this range

. We may restrict the range of summation to 0 ≤ k ≤ (log n)2, say. In this range ![]() and

and ![]() , so the summand is

, so the summand is

Hence the sum over k is 2–4/n + O(1/n2). Stirling’s approximation can now be applied to ![]() , proving (9.2).

, proving (9.2).

9.26 The minimum occurs at a term B2m/(2m)(2m–1)n2m–1 where 2m ≈ 2πn+ ![]() , and this term is approximately equal to

, and this term is approximately equal to ![]() . The absolute error in ln n! is therefore too large to determine n! exactly by rounding to an integer, when n is greater than about e2π+1.

. The absolute error in ln n! is therefore too large to determine n! exactly by rounding to an integer, when n is greater than about e2π+1.

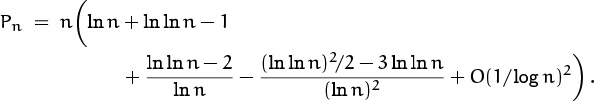

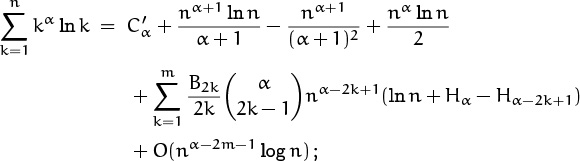

9.27 We may assume that α ≠ –1. Let f(x) = xα; the answer is

(The constant Cα turns out to be ζ(–α), which is in fact defined by this formula when α > –1.)

In particular, ζ(0) = –1/2, and ζ(–n) = –Bn+1/(n+1) for integer n > 0.

9.28 In general, suppose f(x) = xα ln x in Euler’s summation formula, when α ≠ –1. Proceeding as in the previous exercise, we find

the constant ![]() can be shown [74, §3.7] to be –ζ′(–α). (The log n factor in the O term can be removed when α is a positive integer ≤ 2m; in that case we also replace the kth term of the right sum by B2kα! (2k – 2 – α)! × (–1)αnα–2k+1/(2k)! when α < 2k – 1.) To solve the stated problem, we let α = 1 and m = 1, taking the exponential of both sides to get

can be shown [74, §3.7] to be –ζ′(–α). (The log n factor in the O term can be removed when α is a positive integer ≤ 2m; in that case we also replace the kth term of the right sum by B2kα! (2k – 2 – α)! × (–1)αnα–2k+1/(2k)! when α < 2k – 1.) To solve the stated problem, we let α = 1 and m = 1, taking the exponential of both sides to get

Qn = A · nn2/2+n/2+1/12e–n2/4(1 + O(n–2)),

where A = e1/12–ζ ′(–1) ≈ 1.2824271291 is “Glaisher’s constant.”

9.29 Let f(x) = x–1 ln x. A slight modification of the calculation in the previous exercise gives

where γ1 ≈ –0.07281584548367672486 is a “Stieltjes constant” (see the answer to 9.57). Taking exponentials gives

9.30 Let g(x) = xle–x2 and ![]() . Then n–l/2 ∑k≥0 kle–k2/n is

. Then n–l/2 ∑k≥0 kle–k2/n is

Since g(x) = xl – x2+l/1! + x4+l/2! – x6+l/3! + · · · , the derivatives g(m)(x) obey a simple pattern, and the answer is

9.31 The somewhat surprising identity 1/(cm–k + cm) + 1/(cm+k + cm) = 1/cm makes the terms for 0 ≤ k ≤ 2m sum to ![]() . The remaining terms are

. The remaining terms are

and this series can be truncated at any desired point, with an error not exceeding the first omitted term.

9.32 ![]() by Euler’s summation formula, since we know the constant; and Hn is given by (9.89). So the answer is

by Euler’s summation formula, since we know the constant; and Hn is given by (9.89). So the answer is

The world’s top three constants, (e, π, γ), all appear in this answer.

neγ+π2/6 (1 – ![]() n–1 + O(n–2)).

n–1 + O(n–2)).

9.33 We have ![]() ; dividing by k! and summing over k ≥ 0 yields e – en–1 +

; dividing by k! and summing over k ≥ 0 yields e – en–1 + ![]() en–2 + O(n–3).

en–2 + O(n–3).

9.34 ![]() .

.

9.35 Since 1/k(ln k + O(1)) = 1/k ln k + O(1/k(log k)2), the given sum is ![]() . The remaining sum is ln ln n + O(1) by Euler’s summation formula.

. The remaining sum is ln ln n + O(1) by Euler’s summation formula.

9.36 This works out beautifully with Euler’s summation formula:

Hence ![]() .

.

9.37 This is

The remaining sum is like (9.55) but without the factor μ(q). The same method works here as it did there, but we get ζ(2) in place of 1/ζ(2), so the answer comes to ![]() .

.

9.38 Replace k by n – k and let ![]() . Then ln ak(n) = n ln n – ln k! – k + O(kn–1), and we can use tail-exchange with bk(n) = nne–k/k!, ck(n) = kbk(n)/n, Dn = {k | k ≤ ln n }, to get

. Then ln ak(n) = n ln n – ln k! – k + O(kn–1), and we can use tail-exchange with bk(n) = nne–k/k!, ck(n) = kbk(n)/n, Dn = {k | k ≤ ln n }, to get ![]() .

.

9.39 Tail-exchange with ![]() , ck(n) = n–3(ln n)k+3/k!, Dn = { k | 0 ≤ k ≤ 10 ln n }. When k ≈ 10 ln n we have

, ck(n) = n–3(ln n)k+3/k!, Dn = { k | 0 ≤ k ≤ 10 ln n }. When k ≈ 10 ln n we have ![]() , so the kth term is O(n–10 ln(10/e) log n). The answer is

, so the kth term is O(n–10 ln(10/e) log n). The answer is ![]() .

.

9.40 Combining terms two by two, we find that ![]() plus terms whose sum over all k ≥ 1 is O(1). Suppose n is even. Euler’s summation formula implies that

plus terms whose sum over all k ≥ 1 is O(1). Suppose n is even. Euler’s summation formula implies that

hence the sum is ![]() . In general the answer is

. In general the answer is ![]() .

.

9.41 Let ![]() . We have

. We have

The latter sum is ∑k>n O(αk) = O(αn). Hence the answer is

φn (n+1)/25–n/2C + O(φn(n–3)/25–n/2),

where C = (1 – α)(1 – α2)(1 – α3) . . . ≈ 1.226742.

9.42 The hint follows since ![]() . Let m =

. Let m = ![]() αn

αn![]() = αn –

= αn – ![]() . Then

. Then

So ![]() , and it remains to estimate

, and it remains to estimate ![]() . By Stirling’s approximation we have

. By Stirling’s approximation we have ![]() = = – 12 ln n–(αn–

= = – 12 ln n–(αn–![]() ) ln(α–

) ln(α–![]() /n)–– (1–α)n+

/n)–– (1–α)n+![]() – × ln(1 – α +

– × ln(1 – α + ![]() /n) + O(1) = – 12 ln n – αn ln α – (1 – α)n ln(1 – α) + O(1).

/n) + O(1) = – 12 ln n – αn ln α – (1 – α)n ln(1 – α) + O(1).

9.43 The denominator has factors of the form z – ω, where ω is a complex root of unity. Only the factor z – 1 occurs with multiplicity 5. Therefore by (7.31), only one of the roots has a coefficient Ω(n4), and the coefficient is c = 5/(5!·1·5·10·25·50) = 1/1500000.

9.44 Stirling’s approximation says that ln(x–αx!/(x – α)!) has an asymptotic series

in which each coefficient of x–k is a polynomial in α. Hence x–αx!/(x – α)! = c0(α) + c1(α)x–1 + · · · + cn(α)x–n + O(x–n–1) as x → ∞, where cn(α) is a polynomial in α. We know that ![]() whenever α is an integer, and

whenever α is an integer, and ![]() is a polynomial in α of degree 2n; hence

is a polynomial in α of degree 2n; hence ![]() for all real α. In other words, the asymptotic formulas

for all real α. In other words, the asymptotic formulas

(See [220] for further discussion.)

generalize equations (6.13) and (6.11), which hold in the all-integer case.

9.45 Let the partial quotients of α be ![]() a1, a2, . . .

a1, a2, . . . ![]() , and let αm be the continued fraction 1/(am + αm+1) for m ≥ 1. Then D(α, n) = D(α1, n) < D(α2,

, and let αm be the continued fraction 1/(am + αm+1) for m ≥ 1. Then D(α, n) = D(α1, n) < D(α2, ![]() α1n

α1n![]() ) + a1 + 3 < D (α3,

) + a1 + 3 < D (α3, ![]() α2

α2![]() α1n

α1n![]()

![]() ) + a1 + a2 + 6 < · · · < D(αm+1,

) + a1 + a2 + 6 < · · · < D(αm+1, ![]() αm

αm![]() . . .

. . . ![]() α1n

α1n![]() . . .

. . .![]()

![]() ) +a1 +· · ·+am +3m < α1 . . . αm n+a1 +· · ·+am +3m, for all m. Divide by n and let n → ∞; the limit points are bounded above by α1 . . . αm for all m. Finally we have

) +a1 +· · ·+am +3m < α1 . . . αm n+a1 +· · ·+am +3m, for all m. Divide by n and let n → ∞; the limit points are bounded above by α1 . . . αm for all m. Finally we have

9.46 For convenience we write just m instead of m(n). By Stirling’s approximation, the maximum value of kn/k! occurs when k ≈ m ≈ n/ln n, so we replace k by m + k and find that

Actually we want to replace k by ![]() m

m![]() + k; this adds a further O(km–1 log n). The tail-exchange method with |k| ≤ m1/2+

+ k; this adds a further O(km–1 log n). The tail-exchange method with |k| ≤ m1/2+![]() now allows us to sum on k, giving a fairly sharp asymptotic estimate in terms of the quantity Θ in (9.93):

now allows us to sum on k, giving a fairly sharp asymptotic estimate in terms of the quantity Θ in (9.93):

A truly Bell-shaped summand.

The requested formula follows, with relative error O(log log n/log n).

9.47 Let logm n = l + θ, where 0 ≤ θ < 1. The floor sum is l(n + 1) + 1 – (ml + 1 – 1)/(m – 1); the ceiling sum is (l + 1)n – (ml+1 – 1)/(m – 1); the exact sum is (l + θ)n – n/ln m + O(log n). Ignoring terms that are o(n), the difference between ceiling and exact is (1–f(θ))n, and the difference between exact and floor is f(θ)n, where

This function has maximum value f(0) = f(1) = m/(m – 1) – 1/ln m, and its minimum value is ln ln m/ln m + 1 – (ln(m – 1))/ln m. The ceiling value is closer when n is nearly a power of m, but the floor value is closer when θ lies somewhere between 0 and 1.

9.48 Let dk = ak + bk, where ak counts digits to the left of the decimal point. Then ak = 1 + ![]() log Hk

log Hk![]() = log log k + O(1), where ‘log’ denotes log10. To estimate bk, let us look at the number of decimal places necessary to distinguish y from nearby numbers y –

= log log k + O(1), where ‘log’ denotes log10. To estimate bk, let us look at the number of decimal places necessary to distinguish y from nearby numbers y – ![]() and y +

and y + ![]() ′: Let δ = 10–b be the length of the interval of numbers that round to ŷ. We have

′: Let δ = 10–b be the length of the interval of numbers that round to ŷ. We have ![]() ; also

; also ![]() and

and ![]() . Therefore

. Therefore ![]() +

+ ![]() ′ > δ. And if δ < min(

′ > δ. And if δ < min(![]() ,

, ![]() ′), the rounding does distinguish ŷ from both y –

′), the rounding does distinguish ŷ from both y – ![]() and y +

and y + ![]() ′. Hence 10–bk < 1/(k – 1) + 1/k and 101–bk ≥ 1/k; we have bk = log k + O(1). Finally, therefore,

′. Hence 10–bk < 1/(k – 1) + 1/k and 101–bk ≥ 1/k; we have bk = log k + O(1). Finally, therefore, ![]() , which is n log n + n log log n + O(n) by Euler’s summation formula.

, which is n log n + n log log n + O(n) by Euler’s summation formula.

9.49 We have ![]() , where f(x) is increasing for all x > 0; hence if n ≥ eα–γ we have Hn ≥ f(eα–γ) > α. Also

, where f(x) is increasing for all x > 0; hence if n ≥ eα–γ we have Hn ≥ f(eα–γ) > α. Also ![]() , where g(x) is increasing for all x > 0; hence if n ≤ eα–γ we have Hn–1 ≤ g(eα–γ) < α. Therefore Hn–1 ≤ α ≤ Hn implies that eα–γ + 1 > n > eα+γ – 1. (Sharper results have been obtained by Boas and Wrench [33].)

, where g(x) is increasing for all x > 0; hence if n ≤ eα–γ we have Hn–1 ≤ g(eα–γ) < α. Therefore Hn–1 ≤ α ≤ Hn implies that eα–γ + 1 > n > eα+γ – 1. (Sharper results have been obtained by Boas and Wrench [33].)

9.50 (a) The expected return is ![]() , and we want the asymptotic value to O(N–1):

, and we want the asymptotic value to O(N–1):

The coefficient (6 ln 10)/π2 ≈ 1.3998 says that we expect about 40% profit.

(b) The probability of profit is ![]() , and since

, and since ![]() this is

this is

actually decreasing with n. (The expected value in (a) is high because it includes payoffs so huge that the entire world’s economy would be affected if they ever had to be made.)

9.51 Strictly speaking, this is false, since the function represented by O(x–2) might not be integrable. (It might be ‘[x ![]() S]/x2’, where S is not a measurable set.) But if we stipulate that f(x) is an integrable function such that f(x) = O(x–2) as x → ∞, then

S]/x2’, where S is not a measurable set.) But if we stipulate that f(x) is an integrable function such that f(x) = O(x–2) as x → ∞, then ![]() .

.

(As opposed to an execrable function.)

9.52 In fact, the stack of n’s can be replaced by any function f(n) that approaches infinity, however fast. Define the sequence ![]() m0, m1, m2, . . .

m0, m1, m2, . . . ![]() by setting m0 = 0 and letting mk be the least integer > mk–1 such that

by setting m0 = 0 and letting mk be the least integer > mk–1 such that

Now let A(z) = ∑k≥1(z/k)mk. This power series converges for all z, because the terms for k > |z| are bounded by a geometric series. Also A(n + 1) ≥ ((n + 1)/n)mn ≥ f(n + 1)2, hence limn→∞ f(n)/A(n) = 0.

9.53 By induction, the O term is ![]() . Since f(m+1) has the opposite sign to f(m), the absolute value of this integral is bounded by

. Since f(m+1) has the opposite sign to f(m), the absolute value of this integral is bounded by ![]() ; so the error is bounded by the absolute value of the first discarded term.

; so the error is bounded by the absolute value of the first discarded term.

9.54 Let g(x) = f(x)/xα. Then g′(x) ∼ –αg(x)/x as x → ∞. By the mean value theorem, ![]() for some y between

for some y between ![]() and

and ![]() . Now g(y) = g(x) 1 + O(1/x), so

. Now g(y) = g(x) 1 + O(1/x), so ![]() . Therefore

. Therefore

Sounds like a nasty theorem.

9.55 The estimate of (n + k + ![]() ) ln (1 + k/n) + (n – k +

) ln (1 + k/n) + (n – k + ![]() ) ln (1 – k/n) is extended to k2/n + k4/6n3 + O(n–3/2+5

) ln (1 – k/n) is extended to k2/n + k4/6n3 + O(n–3/2+5![]() ), so we apparently want to have an extra factor e–k4/6n3 in bk(n), and ck(n) = 22nn–2+5

), so we apparently want to have an extra factor e–k4/6n3 in bk(n), and ck(n) = 22nn–2+5![]() e–k2/n. But it turns out to be better to leave bk(n) untouched and to let

e–k2/n. But it turns out to be better to leave bk(n) untouched and to let

ck(n) = 22nn–2+5![]() e–k2/n + 22nn–5+5

e–k2/n + 22nn–5+5![]() k4e–k2/n,

k4e–k2/n,

thereby replacing e–k4/6n3 by 1+O(k4/n3). The sum Σkk4e–k2/n is O(n5/2), as shown in exercise 30.

9.56 If k ≤ n1/2+![]() we have

we have ![]() by Stirling’s approximation, hence

by Stirling’s approximation, hence

nk/nk = e–k2/2n (1 + k/2n – ![]() k3/(2n)2 + O(n–1+4

k3/(2n)2 + O(n–1+4![]() )).

)).

Summing with the identity in exercise 30, and remembering to omit the term for k = 0, gives ![]() .

.

9.57 Using the hint, the given sum becomes ![]() . The zeta function can be defined by the series

. The zeta function can be defined by the series

where γ0 = γ and γm is the Stieltjes constant [341, 201]

Hence the given sum is

ln n + γ – 2γ1(ln n)–1 + 3γ2(ln n)–2 – · · ·.

9.58 Let 0 ≤ θ ≤ 1 and f(z) = e2πizθ/(e2πiz – 1). We have

Therefore |f(z)| is bounded on the contour, and the integral is O(M1–m). The residue of 2πif(z)/zm at z = k ≠ 0 is e2πikθ/km; the residue at z = 0 is the coefficient of z–1 in

namely (2πi)mBm(θ)/m!. Therefore the sum of residues inside the contour is

This equals the contour integral O(M1–m), so it approaches zero as M → ∞.

9.59 If F(x) is sufficiently well behaved, we have the general identity

where ![]() . (This is “Poisson’s summation formula,” which can be found in standard texts such as Henrici [182, Theorem 10.6e].)

. (This is “Poisson’s summation formula,” which can be found in standard texts such as Henrici [182, Theorem 10.6e].)

9.60 The stated formula is equivalent to

by exercise 5.22. Hence the result follows from exercises 6.64 and 9.44.

9.61 The idea is to make α “almost” rational. Let ak = 222k be the kth partial quotient of α, and let ![]() , where qm = K(a1, . . . , am) and m is even. Then 0 < {qmα} < 1/K(a1, . . . , am+1) < 1/(2n), and if we take v = am+1/(4n) we get a discrepancy

, where qm = K(a1, . . . , am) and m is even. Then 0 < {qmα} < 1/K(a1, . . . , am+1) < 1/(2n), and if we take v = am+1/(4n) we get a discrepancy ![]() . If this were less than n1–

. If this were less than n1–![]() we would have

we would have ![]() ; but in fact

; but in fact ![]() .

.

9.62 See Canfield [48]; see also David and Barton [71, Chapter 16] for asymptotics of Stirling numbers of both kinds.

9.63 Let c = φ2–φ. The estimate cnφ–1+o(nφ–1) was proved by Fine [150]. Ilan Vardi observes that the sharper estimate stated can be deduced from the fact that the error term e(n) = f(n) – cnφ–1 satisfies the approximate recurrence cφn2–φe(n) ≈ – ∑k e(k)[1 ≤ k < cnφ–1 ]. The function

satisfies this recurrence asymptotically, if u(x + 1) = –u(x). (Vardi conjectures that

Additional progress on this problem has been made by Jean-Luc Rémy, Journal of Number Theory, vol. 66 (1997), 1–28.

for some such function u.) Calculations for small n show that f(n) equals the nearest integer to cnφ–1 for 1 ≤ n ≤ 400 except in one case: f(273) = 39 > c · 273φ –1 ≈ 38.4997. But the small errors are eventually magnified, because of results like those in exercise 2.36. For example, e(201636503) ≈ 35.73; e(919986484788) ≈ –1959.07.

9.64 (From this identity for B2(x) we can easily derive the identity of exercise 58 by induction on m.) If 0 < x < 1, the integral ![]() can be expressed as a sum of N integrals that are each O(N–2), so it is O(N–1); the constant implied by this O may depend on x. Integrating the identity

can be expressed as a sum of N integrals that are each O(N–2), so it is O(N–1); the constant implied by this O may depend on x. Integrating the identity ![]() and letting N → ∞ now gives

and letting N → ∞ now gives ![]() , a relation that Euler knew ([107] and [110, part 2, §92]). Integrating again yields the desired formula. (This solution was suggested by E. M. E. Wermuth [367]; Euler’s original derivation did not meet modern standards of rigor.)

, a relation that Euler knew ([107] and [110, part 2, §92]). Integrating again yields the desired formula. (This solution was suggested by E. M. E. Wermuth [367]; Euler’s original derivation did not meet modern standards of rigor.)

9.65 Since a0+a1n–1 +a2n–2 +· · · = 1+(n–1)–1(a0+a1(n–1)–1 +a2(n– 1)–2 + · · · ), we obtain the recurrence ![]() , which matches the recurrence for the Bell numbers. Hence am = ϖm.

, which matches the recurrence for the Bell numbers. Hence am = ϖm.

A slightly longer but more informative proof can be based on the fact that 1/(n-1)…(n-m)=![]() , by (7.47).

, by (7.47).

“The paradox is now fully established that the utmost abstractions are the true weapons with which to control our thought of concrete fact.”

—A. N. Whitehead [372]

9.66 The expected number of distinct elements in the sequence 1, f(1), f(f(1)), . . . , when f is a random mapping of {1, 2, . . . , n} into itself, is the function Q(n) of exercise 56, whose value is ![]() ; this might account somehow for the factor

; this might account somehow for the factor ![]() .

.

9.67 It is known that ![]() ; the constant e–π/6 has been verified empirically to eight significant digits.

; the constant e–π/6 has been verified empirically to eight significant digits.

9.68 This would fail if, for example, ![]() for some integer m and some

for some integer m and some ![]() ; but no counterexamples are known.

; but no counterexamples are known.