Chapter 11. Cisco Unified Computing Systems Overview

The Cisco Unified Computing System (UCS) is the industry’s first converged data center platform. The Cisco UCS delivers smart, programmable infrastructure that simplifies and speeds enterprise-class applications and service deployment in bare-metal, virtualized, and cloud-computing environments.

The Cisco UCS is an integrated computing infrastructure with intent-based management to automate and accelerate deployment of all applications, including virtualization and cloud computing, scale-out and bare-metal workloads, and in-memory analytics, in addition to edge computing that supports remote and branch locations and massive amounts of data from the Internet of Things (IoT).

This chapter covers the following key topics:

• Cisco UCS Architecture: This section provides an overview of UCS B-Series, C-Series, and Fabric Interconnect (FI) architecture and connectivity.

• Cisco UCS Initial Setup and Management: This section covers UCS B-Series and C-Series initial setup and configuration.

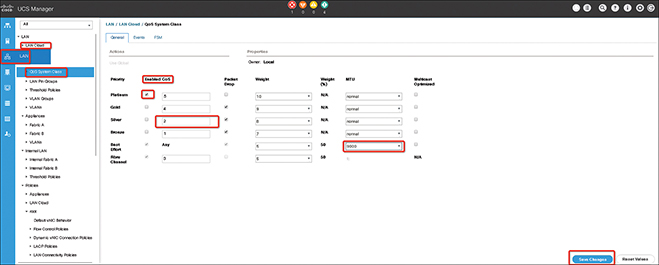

• Network Management: This section discusses UCS LAN management, including VLANs, pools, policies, quality of service (QoS), and templates.

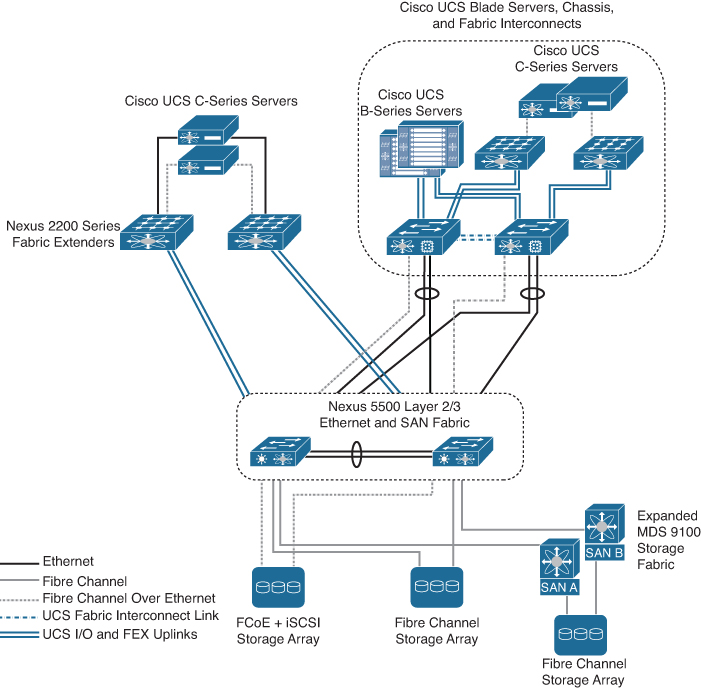

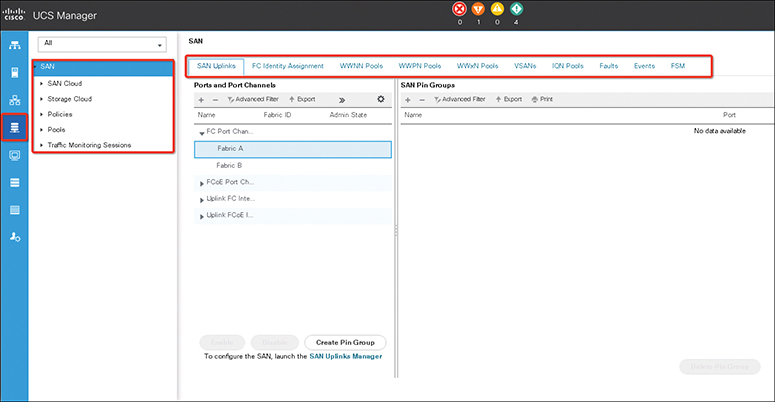

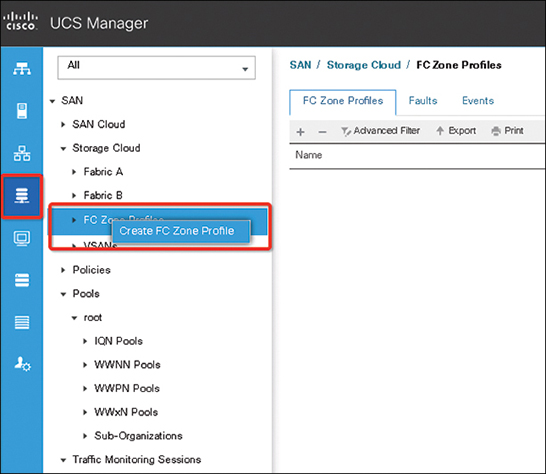

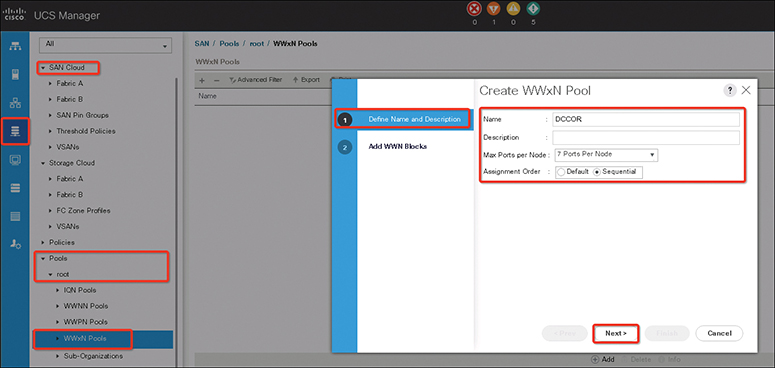

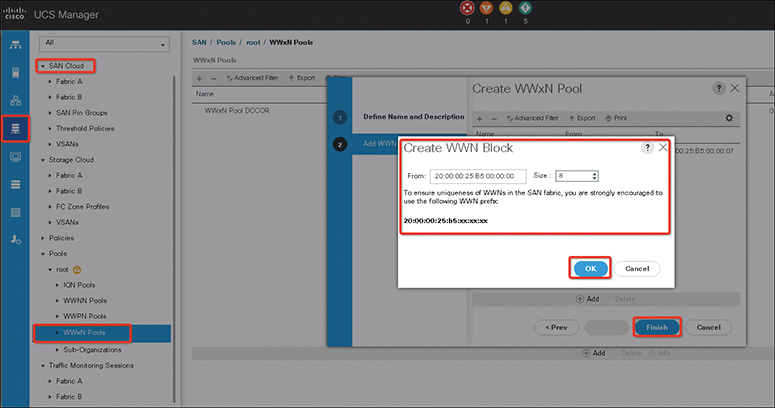

• UCS Storage Management: This section discusses UCS SAN management, including SAN connectivity (iSCSI, Fibre Channel, FCoE), VSANs, WWN pools, and zoning.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz enables you to assess whether you should read this entire chapter thoroughly or jump to the “Exam Preparation Tasks” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 11-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes.”

Table 11-1 “Do I Know This Already?” Section-to-Question Mapping

Caution

The goal of self-assessment is to gauge your mastery of the topics in this chapter. If you do not know the answer to a question or are only partially sure of the answer, you should mark that question as wrong for purposes of the self-assessment. Giving yourself credit for an answer you correctly guess skews your self-assessment results and might provide you with a false sense of security.

1. What are the Cisco UCS Mini main infrastructure components? (Choose two answers.)

a. Fabric Interconnect

b. Blade Server

c. Power Supply

d. I/O module

2. What type of connection does UCS blade chassis FEX fabric support? (Choose two answers.)

a. Basic mode

b. Discrete mode

c. Port mode

d. Port channel mode

3. When a host firmware package policy is created, what must it be associated with to upgrade the BIOS?

a. Blade or blade pool

b. Boot policy

c. Service profile

d. Service template

4. Which commands allow you to view the state of high availability between the two clustered fabric interconnects? (Choose two answers.)

a. show cluster HA status

b. show cluster state extended

c. show cluster extended-state

d. show cluster state

5. What is the correct path to verify the overall status of Chassis 1 | Server 3?

a. Servers tab > Chassis > Server 3 General tab

b. Status tab > Chassis > Chassis 1 > Servers > Server 3

c. Equipment tab > Chassis > Chassis 1 > Servers > Server 3 FSM tab

d. Equipment tab > Chassis > Chassis 1 > Servers > Server 3 Status tab

e. Admin tab > Chassis > Chassis 1 > Servers > Server 3 General tab

f. Equipment tab > Chassis > Chassis 1 > Servers > Server 3 General tab

6. If the virtual local-area network (VLAN) is deleted from the fabric interconnect using the Cisco UCS Manager, what happens?

a. The port belonging to the VLAN is assigned to the default VLAN.

b. The port belonging to the VLAN is pinned to a native VLAN.

c. You cannot delete the VLAN because an interface member belongs to that VLAN.

d. The port changes to a shutdown state.

7. Which of the following characteristics are true in the Cisco UCS unicast traffic path in end-host switching mode? (Choose two answers.)

a. Each server link is pinned as one-to-many uplink ports.

b. Server-to-server Layer 2 traffic is pinned to an uplink port.

c. Server-to-network traffic goes out on its pinned uplink port.

d. Server-to-server Layer 2 traffic is locally switched.

e. Server-to-network traffic is locally switched.

8. Which method is a TCP/IP-based protocol for establishing and managing connections between IP-based storage devices, hosts, and clients?

a. iFCP

b. FCIP

c. iSCSI

d. FCoE

9. Which important feature on the front end is provided to clients by multiple servers that access the same storage devices across a storage-area network?

a. Security

b. Storage

c. Redundancy

d. Recovery

Foundation Topics

Cisco UCS Architecture

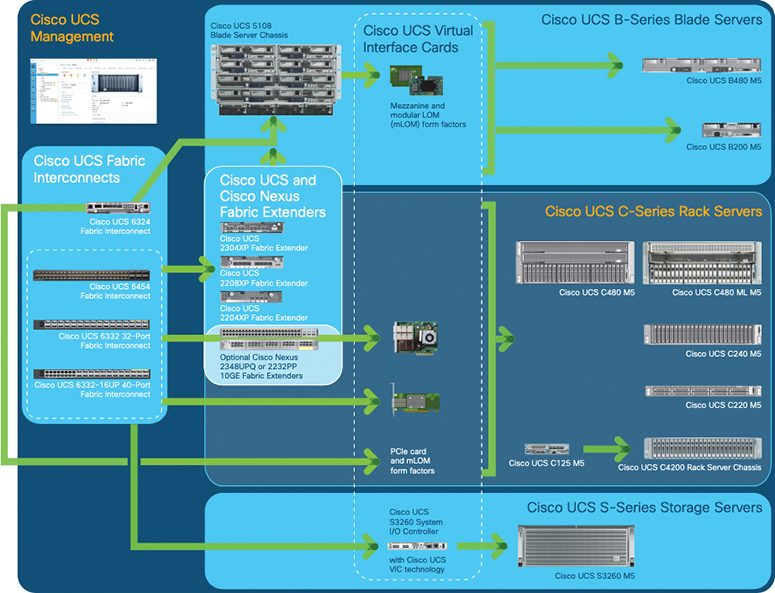

The Cisco Unified Computing System (UCS) has a unique architecture that integrates compute, data network access, and storage network access into a common set of components under a single management portal (single-pane-of-glass portal). The Cisco UCS combines access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability. The hardware and software components support Cisco’s unified fabric, which runs multiple types of data center traffic over a single converged network adapter. Figure 11-1 shows UCS management and network connectivity.


Figure 11-1 Cisco Unified Computing System Architecture

The simplified architecture of the Cisco UCS reduces the number of required devices and centralizes switching resources. By eliminating switching inside a chassis, Cisco significantly reduced the network access layer fragmentation. The Cisco UCS implements a Cisco unified fabric within racks and groups of racks, supporting Ethernet and Fibre Channel protocols. This simplification reduces the number of switches, cables, adapters, and management points by up to two-thirds. All devices in a Cisco UCS domain remain under a single management domain, which remains highly available through the use of redundant components. The Cisco UCS architecture provides the following features (see Figure 11-2):

• High availability: The management and data plane of the Cisco UCS is designed for high availability and redundant access layer fabric interconnects. In addition, the Cisco UCS supports existing high-availability and disaster recovery solutions for the data center, such as data replication and application-level clustering technologies.

• Scalability: A single Cisco UCS domain supports multiple chassis and their servers, all of which are administered through one Cisco UCS Manager.

• Flexibility: A Cisco UCS domain allows you to quickly align computing resources in the data center with rapidly changing business requirements. This built-in flexibility is determined by whether you choose to fully implement the stateless computing feature. Pools of servers and other system resources can be applied as necessary to respond to workload fluctuations, support new applications, scale existing software and business services, and accommodate both scheduled and unscheduled downtime. Server identity can be abstracted into a mobile service profile that can be moved from server to server with minimal downtime and no need for additional network configuration. With this level of flexibility, you can quickly and easily scale server capacity without having to change the server identity or reconfigure the server, LAN, or SAN. During a maintenance window, you can quickly do the following:

• Deploy new servers to meet unexpected workload demand and rebalance resources and traffic.

• Shut down an application, such as a database management system, on one server and then boot it up again on another server with increased I/O capacity and memory resources.

• Optimized for server virtualization: The Cisco UCS has been optimized to implement VM-FEX technology. This technology provides improved support for server virtualization, including better policy-based configuration and security, conformance with a company’s operational model, and accommodation for VMware vMotion.


Figure 11-2 Cisco UCS Components and Connectivity


Cisco UCS Components and Connectivity

The main components of the Cisco UCS are as follows:

• Cisco UCS Manager: The Cisco UCS Manager is the centralized management interface for the Cisco UCS.

• Cisco UCS Fabric Interconnects: The Cisco UCS Fabric Interconnect is the core component of Cisco UCS deployments, providing both network connectivity and management capabilities for the Cisco UCS system. The Cisco UCS Fabric Interconnects run the Cisco UCS Manager control software and consist of the following components:

• Cisco UCS 6200 Series Fabric Interconnects, Cisco UCS 6332 Series Fabric Interconnects, and Cisco UCS Mini

• Transceivers for network and storage connectivity

• Expansion modules for the various Fabric Interconnects

• Cisco UCS Manager software

• Cisco UCS I/O modules and Cisco UCS Fabric Extender: I/O modules (or IOMs) are also known as Cisco Fabric Extenders (FEXs) or simply FEX modules. These modules serve as line cards to the FIs in the same way that Nexus series switches can have remote line cards. I/O modules also provide interface connections to blade servers. They multiplex data from blade servers and provide this data to FIs and do the same in the reverse direction. In production environments, I/O modules are always used in pairs to provide redundancy and failover.

Note

The 40G backplane setting is not applicable for 22xx IOMs.

• Cisco UCS blade server chassis: The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco UCS, delivering a scalable and flexible architecture for current and future data center needs, while helping reduce total cost of ownership.

• Cisco UCS blade servers: Cisco UCS blade servers are at the heart of the Cisco UCS solution. They come in various system resource configurations in terms of CPU, memory, and hard disk capacity. All blade servers are based on Intel Xeon processors. There is no AMD option available.

• Cisco UCS rack servers: The Cisco UCS rack-mount servers are standalone servers that can be installed and controlled individually. Cisco provides FEXs for the rack-mount servers. FEXs can be used to connect and manage rack-mount servers from FIs. Rack-mount servers can also be directly attached to the fabric interconnect. Small and medium businesses (SMBs) can choose from different server configurations as per business needs.

• Cisco UCS S-Series: Storage servers are modular servers that support up to 60 large-form-factor internal drives to support storage-intensive workloads including big data, content streaming, online backup, and storage-as-a-service applications. The servers support one or two computing nodes with up to two CPUs each, and with up to 160 Gbps of unified fabric connectivity per node. These features simplify the process of deploying just the right amount of resources to most efficiently support your applications.

• Cisco UCS Mini solutions: These solutions can be created by using Cisco UCS 6324 Fabric Interconnects in the blade server chassis instead of rack-mount FEXs. This creates a standalone Cisco UCS instance that can connect to blade servers, rack servers, and external storage systems.

Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high, can mount in an industry-standard 19-inch rack, and uses standard front-to-back cooling (see Figure 11-3). A chassis can accommodate up to eight half-width or four full-width Cisco UCS B-Series blade servers, or a combination of both form factors, within the same chassis. By incorporating unified fabric and fabric-extender technology, the Cisco Unified Computing System enables the chassis to

• Have fewer physical components.

• Require no independent management.

• Be more energy efficient than a traditional blade server chassis.

The Cisco UCS 5108 Blade Server Chassis is supported with all generations of fabric interconnects.

Figure 11-3 UCS 5108 Blade Server Chassis

UCS Blade Servers

Cisco UCS B-Series blade servers are based on Intel Xeon processors (see Figure 11-4). They work with virtualized and nonvirtualized applications to increase performance, energy efficiency, flexibility, and administrator productivity.

Figure 11-4 UCS B200 M5 and B480 M5 Blade Servers

With the Cisco UCS blade server, you can quickly deploy stateless physical and virtual workloads, with the programmability that the Cisco UCS Manager and Cisco Single Connect technology enables.

Cisco UCS B480 M5 is a full-width server that uses second-generation Intel Xeon Scalable processors or Intel Xeon Scalable processors with up to 6 TB of memory or 12 TB of Intel Optane DC persistent memory; up to four SAS, SATA, and NVMe drives; M.2 storage; up to four GPUs; and 160-Gigabit Ethernet connectivity. It offers exceptional levels of performance, flexibility, and I/O throughput to run the most demanding applications.

Cisco UCS B200 M5 is a half-width server that uses second-generation Intel Xeon Scalable processors or Intel Xeon Scalable processors with up to 3 TB of memory or 6 TB of Intel Optane DC persistent memory; up to two SAS, SATA, and NVMe drives; plus M.2 storage; up to two GPUs; and up to 80-Gigabit Ethernet. The Cisco UCS B200 M5 blade server offers exceptional levels of performance, flexibility, and I/O throughput to run applications.

Note

The central processing unit (CPU) is designed to control all computer parts, improve performance, and support parallel processing. Current CPUs are multicore processors. A graphics processing unit (GPU) is used in graphics cards and for image processing, and it can also serve as a coprocessor that accelerates the CPU. In today’s IT world, distributed applications such as artificial intelligence (AI) and deep learning require high-speed parallel processing. GPUs suit these applications because they have a much higher core density (256 cores or more) than CPUs, which contain 8, 16, or at most 32 cores. The CPU can offload the compute-intensive and time-consuming portions of the code to the GPU.

Cisco UCS Rack Servers

UCS C-Series rack servers deliver unified computing in an industry-standard form factor to increase agility (see Figure 11-5). Each server addresses varying workload challenges through a balance of processing, memory, I/O, and internal storage resources.

Figure 11-5 UCS C-Series Rack Servers

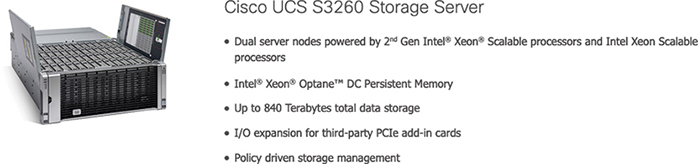

Cisco UCS Storage Servers

The Cisco UCS S3260 storage server is a modular dual-node x86 server designed for investment protection (see Figure 11-6). Its architectural flexibility provides high performance or high capacity for your data-intensive workloads. Using a storage server combined with the Cisco UCS Manager, you can easily deploy storage capacity from terabytes to petabytes within minutes.

Figure 11-6 Cisco UCS Storage Server

The Cisco UCS S3260 helps you achieve the highest levels of performance and capacity. With a dual-node capability that is based on the Intel Xeon Scalable processors or Intel Xeon processor E5-2600 v4 series, it features up to 720 TB of local storage in a compact 4-rack-unit (4RU) form factor. The drives can be configured with enterprise-class Redundant Array of Independent Disks (RAID) redundancy or as “just a bunch of disks” (JBOD) in pass-through mode. Network connectivity is provided with dual-port 40G per server node, with expanded unified I/O capabilities for connectivity between NAS and SAN environments. This high-capacity storage server comfortably fits in a standard 32-inch depth rack, such as the Cisco R42612 rack.


Cisco UCS Mini

The Cisco UCS Mini solution extends the Cisco UCS architecture into environments that require smaller domains, including branch and remote offices, point-of-sale locations, and smaller IT environments.

The Cisco UCS Mini has three main infrastructure components:

• Cisco UCS 6324 Fabric Interconnect

• Cisco UCS blade server chassis

• Cisco UCS blade or rack-mount servers

In the Cisco UCS Mini solution, the Cisco UCS 6324 Fabric Interconnect is collapsed into the I/O module form factor and is inserted into the IOM slot of the blade server chassis. The Cisco UCS 6324 Fabric Interconnect provides the equivalent of 24 × 10G ports. Sixteen of these ports are server facing, with two 10G ports dedicated to each of the eight half-width blade slots. The remaining eight are external: four 1/10G Enhanced Small Form-Factor Pluggable (SFP+) ports and one 40G Quad Small Form-Factor Pluggable (QSFP) port, called the scalability port, which accounts for the other four 10G ports. Figure 11-7 shows UCS Mini connectivity.
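The port budget described above can be checked with a few lines of arithmetic. The following Python sketch is illustrative only; the constant and function names are hypothetical, and it assumes that the single 40G QSFP scalability port is counted as four 10G ports.

```python
# Port budget of the Cisco UCS 6324 Fabric Interconnect, using the figures
# quoted in the text. All names here are illustrative, not a Cisco API.
HALF_WIDTH_SLOTS = 8   # half-width blade slots in the chassis
PORTS_PER_SLOT = 2     # 10G ports dedicated to each slot
SFP_PLUS_PORTS = 4     # external 1/10G SFP+ ports
QSFP_PORTS = 1         # 40G scalability port
LANES_PER_QSFP = 4     # assumption: one 40G QSFP counts as 4 x 10G


def ucs_6324_port_budget() -> dict:
    """Return server-facing, external, and total 10G-equivalent port counts."""
    server_facing = HALF_WIDTH_SLOTS * PORTS_PER_SLOT
    external = SFP_PLUS_PORTS + QSFP_PORTS * LANES_PER_QSFP
    return {
        "server_facing": server_facing,
        "external": external,
        "total": server_facing + external,
    }


print(ucs_6324_port_budget())
```

Under that assumption, the 16 server-facing ports and the 8 external ports add up to the 24 × 10G figure.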

Note

Currently, the Cisco UCS Manager supports only one extended chassis for the Cisco UCS Mini.

Figure 11-7 The Cisco UCS Mini Infrastructure

Cisco UCS Fabric Infrastructure

Cisco UCS Fabric Interconnects are top-of-rack devices and provide unified access to the Cisco UCS domain. The Cisco UCS Fabric Interconnect hardware is now in its fourth generation. The following fabric interconnects are available in the Cisco UCS Fabric Interconnects product family:

• Cisco UCS 6454 Fabric Interconnects

• Cisco UCS 6300 Series Fabric Interconnects

• Cisco UCS 6200 Series Fabric Interconnects

• Cisco UCS 6324 Fabric Interconnects

The Cisco UCS 6200 Series supports expansion modules that can be used to increase the number of 10G, Fibre Channel over Ethernet (FCoE), and Fibre Channel ports:

• The Cisco UCS 6248 UP has 32 ports on the base system. It can be upgraded with one expansion module providing an additional 16 ports.

• The Cisco UCS 6296 UP has 48 ports on the base system. It can be upgraded with three expansion modules providing an additional 48 ports.
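As a quick sanity check on these numbers, the following Python sketch models the two 6200 Series systems and computes their maximum port counts. The class and field names are hypothetical. The figure of 16 ports per expansion module is inferred from the text (16 ports from one module, 48 ports from three) and is not stated directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FabricInterconnect6200:
    """Illustrative model of a 6200 Series fabric interconnect (not a Cisco API)."""
    name: str
    base_ports: int
    expansion_slots: int
    # Assumption inferred from the text: 16 ports per expansion module.
    ports_per_expansion_module: int = 16

    def max_ports(self) -> int:
        """Base ports plus the ports added when every expansion slot is filled."""
        return self.base_ports + self.expansion_slots * self.ports_per_expansion_module


UCS_6248UP = FabricInterconnect6200("UCS 6248 UP", base_ports=32, expansion_slots=1)
UCS_6296UP = FabricInterconnect6200("UCS 6296 UP", base_ports=48, expansion_slots=3)

for fi in (UCS_6248UP, UCS_6296UP):
    print(f"{fi.name}: up to {fi.max_ports()} ports")
```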

Cisco UCS 6454 Fabric Interconnect

The Cisco UCS 6454 Fabric Interconnect provides both network connectivity and management capabilities to the Cisco UCS system. The fabric interconnect provides Ethernet and Fibre Channel to the servers in the system. The servers connect to the fabric interconnect and then to the LAN or SAN.

Each Cisco UCS 6454 Fabric Interconnect runs the Cisco UCS Manager to fully manage all Cisco UCS elements. The fabric interconnect supports 10/25-Gigabit Ethernet ports in the fabric with 40/100-Gigabit Ethernet uplink ports. High availability can be achieved when a Cisco UCS 6454 Fabric Interconnect is connected to another Cisco UCS 6454 Fabric Interconnect through the L1 or L2 port on each device. UCS 6454 FI is a 1RU top-of-rack switch that mounts in a standard 19-inch rack, such as the Cisco R Series rack. It has 48 10/25-Gigabit Ethernet SFP28 ports (16 unified ports) and 6 40/100-Gigabit Ethernet QSFP28 ports. Each 40/100-Gigabit Ethernet port can break out into 4 × 10/25-Gigabit Ethernet uplink ports. The 16 unified ports support 10/25-Gigabit Ethernet or 8/16/32-Gbps Fibre Channel speeds.

Note

The Cisco UCS 6454 Fabric Interconnect supported 8 unified ports (ports 1–8) with Cisco UCS Manager 4.0(1) and 4.0(2), but with release 4.0(4) and later it supports 16 unified ports (ports 1–16).

The Cisco UCS 6454 Fabric Interconnect supports a maximum of eight FCoE port channels or four SAN port channels, or a maximum of eight SAN port channels and FCoE port channels (four each). It also has one network management port, one console port for setting the initial configuration, and one USB port for saving or loading configurations. The FI also includes L1/L2 ports for connecting two fabric interconnects for high availability. The fabric interconnect contains a CPU board that consists of the following:

• Intel Xeon D-1528 v4 Processor, 1.6 GHz

• 64 GB of RAM

• 8 MB of NVRAM (4 × NVRAM chips)

• 128-GB SSD (bootflash)

The ports on the Cisco UCS 6454 Fabric Interconnect can be configured to carry either Ethernet or Fibre Channel traffic. You can configure only the first 16 ports to carry Fibre Channel traffic. The ports cannot be used by a Cisco UCS domain until you configure them.

Note

When you configure a port on a fabric interconnect, the administrative state is automatically set to enabled. If the port is connected to another device, this may cause traffic disruption. The port can be disabled and enabled after it has been configured.

Ports on the Cisco UCS 6454 Fabric Interconnect are numbered and grouped according to their function. The ports are numbered top to bottom and left to right. Figure 11-8 shows the port numbering, which is as follows:

Figure 11-8 Cisco UCS 6454 Fabric Interconnect

1. Ports 1–16: Unified ports can operate as 10/25-Gigabit Ethernet or 8/16/32-Gbps Fibre Channel. FC ports are converted in groups of four.

2. Ports 17–44: Each port can operate as either a 10-Gbps or 25-Gbps SFP28 port.

Note

When you use Cisco UCS Manager releases earlier than 4.0(4), ports 9–44 are 10/25-Gbps Ethernet or FCoE.

3. Ports 45–48: Each port can operate as a 1-Gigabit Ethernet, 10-Gigabit Ethernet, or 25-Gigabit Ethernet or FCoE port.

4. Uplink Ports 49–54: Each port can operate as either a 40-Gbps or 100-Gbps Ethernet or FCoE port. When you use a breakout cable, each of these ports can operate as 4 × 10-Gigabit Ethernet or 4 × 25-Gigabit Ethernet or FCoE ports. Ports 49–54 can be used only to connect to Ethernet or FCoE uplink ports, and not to UCS server ports.
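The four port groups above lend themselves to a small lookup function. The following Python sketch is a study aid only; the function name and the returned group labels are hypothetical. The `unified_ports` parameter reflects the note that Cisco UCS Manager 4.0(1) and 4.0(2) expose 8 unified ports, whereas release 4.0(4) and later expose 16.

```python
def classify_6454_port(port: int, unified_ports: int = 16) -> str:
    """Return an illustrative group label for a Cisco UCS 6454 port number.

    unified_ports is 16 for UCS Manager 4.0(4) and later, or 8 for the
    earlier 4.0(1) and 4.0(2) releases, as described in the text.
    """
    if unified_ports not in (8, 16):
        raise ValueError("unified_ports must be 8 or 16")
    if not 1 <= port <= 54:
        raise ValueError("the UCS 6454 has ports 1 through 54")
    if port <= unified_ports:
        return "unified"            # 10/25G Ethernet or 8/16/32G Fibre Channel
    if port <= 44:
        return "ethernet_10_25"     # 10/25G SFP28
    if port <= 48:
        return "ethernet_1_10_25"   # 1/10/25G
    return "uplink_40_100"          # 40/100G, breakout capable


for p in (1, 16, 17, 45, 49):
    print(p, classify_6454_port(p))
```

Passing `unified_ports=8` moves ports 9 through 16 into the 10/25G Ethernet group, matching the behavior of the earlier releases.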

Cisco UCS 6454 Fabric Interconnects support splitting a single 40/100 Gigabit Ethernet QSFP port into four 10/25 Gigabit Ethernet ports using a supported breakout cable. These ports can be used only as uplink ports connecting to a 10/25G switch. On the UCS 6454 Fabric Interconnect, by default, there are six ports in the 40/100G mode. These are ports 49 to 54. These 40/100G ports are numbered in a 2-tuple naming convention. For example, the second 40G port is numbered as 1/50. The process of changing the configuration from 40G to 10G, or from 100G to 25G is called breakout, and the process of changing the configuration from 4 × 10G to 40G or from 4 × 25G to 100G is called unconfigure.

When you break out a 40G port into 10G ports or a 100G port into 25G ports, the resulting ports are numbered using a 3-tuple naming convention. For example, the breakout ports of the second 40-Gigabit Ethernet port are numbered as 1/50/1, 1/50/2, 1/50/3, and 1/50/4. Figure 11-8 shows the rear view of the Cisco UCS 6454 Fabric Interconnect and includes the ports that support breakout port functionality (Group 4).
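The 2-tuple and 3-tuple naming conventions can be illustrated with a short Python sketch. The function name is hypothetical, and the code only generates the port names; it does not configure anything on a fabric interconnect.

```python
def breakout_port_names(slot: int, port: int, lanes: int = 4) -> list:
    """Return the 3-tuple names produced when a slot/port is broken out."""
    return [f"{slot}/{port}/{lane}" for lane in range(1, lanes + 1)]


# The second 40/100G port on the UCS 6454 is 1/50 in 2-tuple form.
print(breakout_port_names(1, 50))  # ['1/50/1', '1/50/2', '1/50/3', '1/50/4']
```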

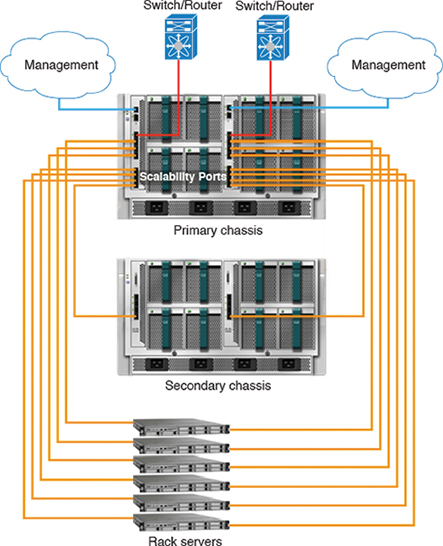

Cisco UCS 6300 Series Fabric Interconnects

The Cisco UCS 6300 Series Fabric Interconnect joins next-generation UCS products, including the following hardware:

• Cisco UCS 6332 Fabric Interconnect, an Ethernet or Fibre Channel over Ethernet (FCoE) chassis with 32 40-Gigabit Ethernet QSFP+ ports

• Cisco UCS 6332-16UP Fabric Interconnect, an Ethernet, FCoE, and Fibre Channel chassis with 16 1- or 10-Gigabit Ethernet SFP+ ports or 16 4-, 8-, or 16-Gbps Fibre Channel ports, and 24 40-Gigabit Ethernet QSFP+ ports

• Cisco 2304 IOM or Cisco 2304V2, I/O modules with eight 40-Gigabit backplane ports and four 40-Gigabit Ethernet uplink ports

• Multiple VICs

UCS 6332 Fabric Interconnect is a 1RU, top-of-rack switch with 32 40-Gigabit Ethernet QSFP+ ports, one 100/1000 network management port, one RS-232 console port for setting the initial configuration, and two USB ports for saving or loading configurations (see Figure 11-9). The switch also includes an L1 port and an L2 port for connecting two fabric interconnects to provide high availability. The switch mounts in a standard 19-inch rack, such as the Cisco R-Series rack. Cooling fans pull air front-to-rear. That is, air intake is on the fan side, and air exhaust is on the port side.

Figure 11-9 Cisco UCS Fabric Interconnect 6332

Ports on the Cisco UCS 6300 Series Fabric Interconnects can be configured to carry either Ethernet or Fibre Channel traffic. These ports are not reserved. They cannot be used by a Cisco UCS domain until you configure them. When you configure a port on a fabric interconnect, the administrative state is automatically set to enabled. If the port is connected to another device, this may cause traffic disruption. You can disable the port after it has been configured.

The Cisco UCS Fabric Interconnect 6300 Series supports splitting a single QSFP port into four 10-Gigabit Ethernet ports using a supported breakout cable. By default, there are 32 ports in the 40-Gigabit mode. These 40-Gigabit Ethernet ports are numbered in a 2-tuple naming convention. For example, the second 40-Gigabit Ethernet port is numbered as 1/2. The process of changing the configuration from 40-Gigabit Ethernet to 10-Gigabit Ethernet is called breakout, and the process of changing the configuration from 4 × 10-Gigabit Ethernet to 40-Gigabit Ethernet is called unconfigure. When you break out a 40-Gigabit Ethernet port into 10-Gigabit Ethernet ports, the resulting ports are numbered using a 3-tuple naming convention. For example, the breakout ports of the second 40-Gigabit Ethernet port are numbered as 1/2/1, 1/2/2, 1/2/3, and 1/2/4. Table 11-2 summarizes the constraints for breakout functionality for Cisco UCS 6300 Series Fabric Interconnects.

Table 11-2 Cisco UCS 6300 Port Breakout Summary

Note

Up to four breakout ports are allowed if QoS jumbo frames are used.


Fabric Interconnect and Fabric Extender Connectivity

Fabric Extenders (FEXs) are extensions of the fabric interconnects (FIs) and act as remote line cards to form a distributed modular fabric system. The fabric extension is accomplished through the FEX fabric link, which is the connection between the fabric interconnect and the FEX. A minimum of one connection between the FI and FEX is required to provide server connectivity. Depending on the FEX model, up to eight links can be connected, which provides added bandwidth to the servers.

The Cisco UCS 2304 IOM (Fabric Extender) is an I/O module with 8 × 40-Gigabit backplane ports and 4 × 40-Gigabit uplink ports (see Figure 11-10). It can be hot-plugged into the rear of a Cisco UCS 5108 blade server chassis. A maximum of two UCS 2304 IOMs can be installed in a chassis. The Cisco UCS 2304 IOM provides chassis management control and blade management control, including control of the chassis, fan trays, power supply units, and blades. It also multiplexes and forwards all traffic from the blade servers in the chassis to the 40-Gigabit Ethernet uplink network ports that connect to the fabric interconnect. The IOM can also connect to a peer IOM to form a cluster interconnect.

Figure 11-10 Cisco UCS 2300 IOM

Figure 11-11 shows how the FEX modules in the blade chassis connect to the FIs. The 5108 chassis accommodates the following FEXs:

• Cisco UCS 2304

Note

The Cisco UCS 2304 Fabric Extender is not compatible with the Cisco UCS 6200 Fabric Interconnect series.

• Cisco UCS 2208XP

• Cisco UCS 2204XP


Figure 11-11 Connecting Blade Chassis Fabric Extenders to Fabric Interconnect

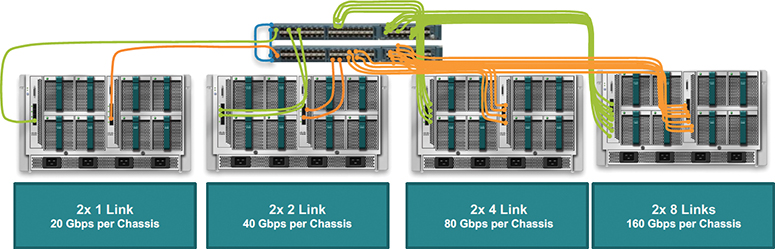

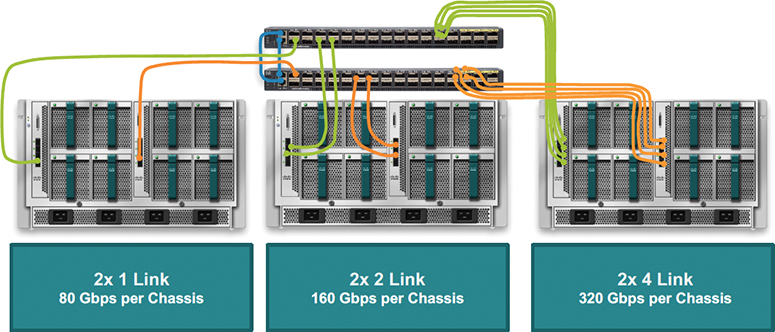

In a blade chassis, the FEX fabric link (the link between the FEX and the FI) supports two different types of connections:

• Discrete mode

• Port channel mode

In discrete mode, a half-width server slot is pinned to a given FEX fabric link. The supported numbers of links are 1, 2, 4, and 8, as shown in Table 11-3. Figure 11-12 shows an example of four FEX fabric link connections in discrete mode, and Figures 11-13 and 11-14 show 10-Gigabit Ethernet and 40-Gigabit Ethernet FEX-to-FI connectivity, respectively.
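To make the idea of slot pinning concrete, the following Python sketch computes which fabric link a slot uses for each supported link count. It assumes a simple round-robin assignment (slot 1 to link 1, slot 2 to link 2, and so on, wrapping around), which is how discrete-mode pinning is commonly documented. Treat that rule as an assumption here and Table 11-3 as the authoritative mapping. The function name is hypothetical.

```python
SUPPORTED_LINK_COUNTS = (1, 2, 4, 8)
SLOTS_PER_CHASSIS = 8


def pinned_fabric_link(slot: int, links: int) -> int:
    """Return the FEX fabric link a half-width slot is pinned to.

    Assumption: round-robin pinning, link = ((slot - 1) mod links) + 1.
    """
    if links not in SUPPORTED_LINK_COUNTS:
        raise ValueError(f"link count must be one of {SUPPORTED_LINK_COUNTS}")
    if not 1 <= slot <= SLOTS_PER_CHASSIS:
        raise ValueError("slot must be between 1 and 8")
    return (slot - 1) % links + 1


for links in SUPPORTED_LINK_COUNTS:
    mapping = {slot: pinned_fabric_link(slot, links) for slot in range(1, 9)}
    print(f"{links} link(s): {mapping}")
```

With four links, for example, this rule pins slots 1 and 5 to link 1. Because the pinning is static, the slots pinned to a link depend on that link, which is the behavior that port channel mode is designed to avoid.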


Table 11-3 Blade Chassis Slot to Link Mapping

Figure 11-12 Discrete Mode FEX Fabric Link Slot

Figure 11-13 UCS 10-Gigabit Ethernet FEX to FI Connectivity

Figure 11-14 UCS 40-Gigabit Ethernet FEX to FI Connectivity

In port channel mode, the FEX fabric links are bundled into a single logical link (see Figure 11-15) to provide higher bandwidth to the servers. Depending on the FEX, up to eight links can be port channeled.

Figure 11-15 FEX Fabric Links in Port Channel Mode

The Adapter-FEX uses a mechanism to divide a single physical link into multiple virtual links or channels, as shown in Figure 11-16. Each channel is identified by a unique channel number, and its scope is limited to the physical link.


Figure 11-16 UCS FEX Virtual Links

The physical link connects a port on a server network adapter with an Ethernet port on the device, which allows the channel to connect a virtual network interface card (vNIC) on the server with a virtual Ethernet interface on the device.

Packets on each channel are tagged with a virtual network tag (VNTag) that carries a specific source virtual interface identifier (VIF). The VIF allows the receiver to identify the channel that the source used to transmit the packet.
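Because a channel number is meaningful only within its physical link, a receiver effectively looks up the pair (physical link, source VIF). The following Python sketch illustrates that scoping with a plain dictionary. The link names, vNIC names, and function are hypothetical, and the sketch does not model the actual VNTag header format.

```python
from typing import Dict, Tuple

# Key: (physical link, channel number carried as the source VIF).
# All names below are made up for illustration.
ChannelKey = Tuple[str, int]

channel_table: Dict[ChannelKey, str] = {
    ("link-A", 1): "server1-vnic0",
    ("link-A", 2): "server1-vnic1",
    ("link-B", 1): "server2-vnic0",  # same channel number, different link
}


def lookup_vnic(link: str, source_vif: int) -> str:
    """Identify the vNIC behind a tagged packet received on a given link."""
    try:
        return channel_table[(link, source_vif)]
    except KeyError:
        raise LookupError(f"no channel {source_vif} on {link}") from None


print(lookup_vnic("link-A", 1))
print(lookup_vnic("link-B", 1))
```

Channel 1 resolves to a different vNIC on each link, which is what it means for a channel number's scope to be limited to the physical link.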

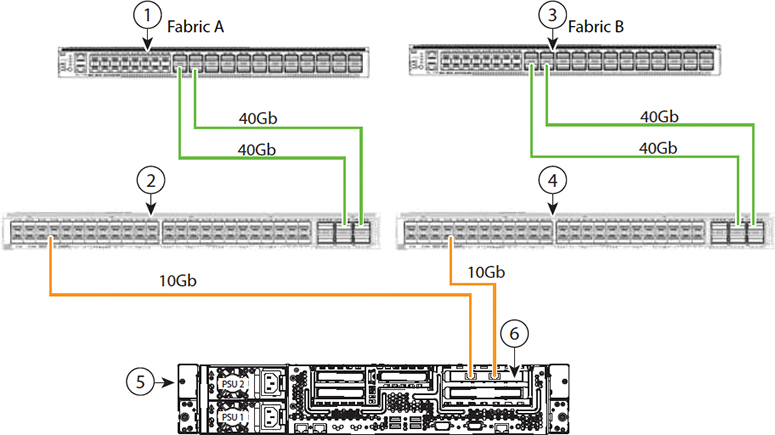

A rack-mount server has a different connectivity method. Cisco C-Series servers support two types of connections:

• Single-wire management

• Dual-wire management

Cisco UCS Manager single-wire management supports an additional option to integrate the C-Series rack-mount server with the Cisco UCS Manager using the Network Controller Sideband Interface (NC-SI). This option enables the Cisco UCS Manager to manage the C-Series rack-mount servers using a single wire for both management traffic and data traffic. When you use the single-wire management mode, one host-facing port on the FEX is sufficient to manage one rack-mount server instead of the two ports you would use in the Shared-LOM (LAN On Motherboard) mode. This connection method allows you to connect more rack-mount servers with the Cisco UCS Manager for integrated server management. You should make sure you have the correct server firmware for integration with the Cisco UCS Manager. If not, upgrade your server firmware before integrating the server with the Cisco UCS Manager. Figure 11-17 shows how a C-Series rack-mount server connects to the FEXs and FIs for single-wire management, with numbered elements as follows:


Figure 11-17 C-Series Rack Chassis with Single-Wire Management

1. Cisco UCS 6332-16UP FI (Fabric A)

2. Cisco Nexus 2232PP, 2232TM-E, or 2348UPQ (Fabric A)

3. Cisco UCS 6332-16UP FI (Fabric B)

4. Cisco Nexus 2232PP, 2232TM-E, or 2348UPQ (Fabric B)

5. Cisco UCS C-Series server

6. Cisco UCS VIC1225 in PCIe slot 1
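The port savings of single-wire management can be expressed as simple arithmetic: one host-facing FEX port per server instead of the two used in Shared-LOM mode. In the following Python sketch, the function name and the 32-port FEX are hypothetical values chosen for illustration.

```python
# Host-facing FEX ports consumed per rack-mount server, per the text.
FEX_PORTS_PER_SERVER = {"single-wire": 1, "shared-lom": 2}


def max_servers_per_fex(host_facing_ports: int, mode: str) -> int:
    """Return how many rack-mount servers one FEX can attach in a given mode."""
    if mode not in FEX_PORTS_PER_SERVER:
        raise ValueError(f"mode must be one of {sorted(FEX_PORTS_PER_SERVER)}")
    return host_facing_ports // FEX_PORTS_PER_SERVER[mode]


# Hypothetical FEX with 32 host-facing ports.
print(max_servers_per_fex(32, "single-wire"))  # 32
print(max_servers_per_fex(32, "shared-lom"))   # 16
```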

Cisco UCS Manager dual-wire management supports the existing rack server integration and management option through Shared-LOM, using two separate cables for data traffic and management traffic. The prerequisites for integration with the Cisco UCS Manager are built into the C-Series servers. You should make sure you have the correct server firmware for integration with the Cisco UCS Manager. If not, you need to upgrade your server firmware before integrating the server with the Cisco UCS Manager. Figure 11-18 shows how a C-Series rack-mount server connects to the FEXs and FIs for dual-wire management, with numbered elements as follows:


Figure 11-18 C-Series Rack-Mount Chassis with Dual-Wire Management

1. Cisco UCS 6332-16UP FI (Fabric A)

2. GLC-TE transceiver in FEX port (Fabric A)

3. Cisco Nexus 2232PP, 2232TM-E, or 2348UPQ (Fabric A)

4. Cisco UCS 6332-16UP FI (Fabric B)

5. GLC-TE transceiver in FEX port (Fabric B)

6. Cisco Nexus 2232PP, 2232TM-E, or 2348UPQ (Fabric B)

7. Cisco UCS C-Series server

8. 1-Gb Ethernet LOM ports

9. 10-Gb Adapter card in PCIe slot 1


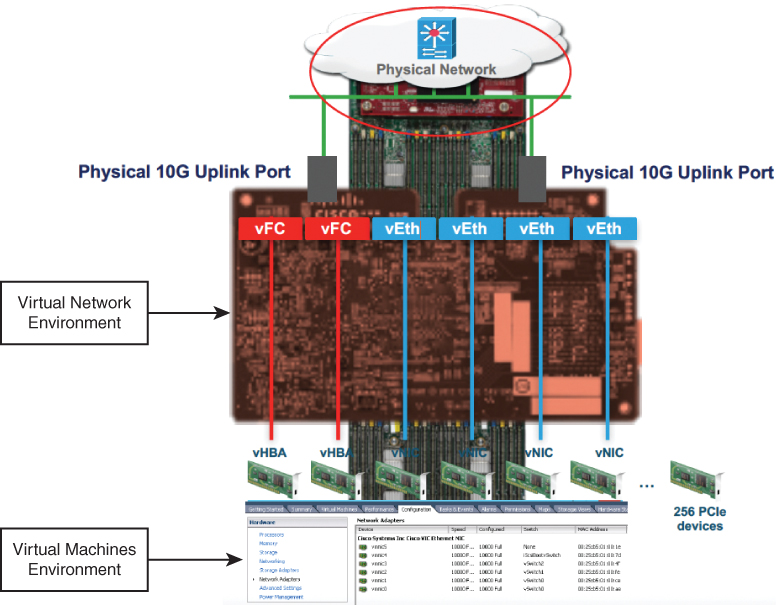

Cisco UCS Virtualization Infrastructure

The Cisco UCS is a single integrated system with switches, cables, adapters, and servers all tied together and managed by unified management software. Thus, you are able to virtualize every component of the system at every level. The switch port, cables, adapter, and servers can all be virtualized.

Because of the virtualization capabilities at every component of the system, you have the unique ability to provide rapid provisioning of any service on any server on any blade through a system that is wired once. Figure 11-19 illustrates these virtualization capabilities.

Figure 11-19 UCS Virtualization Infrastructure

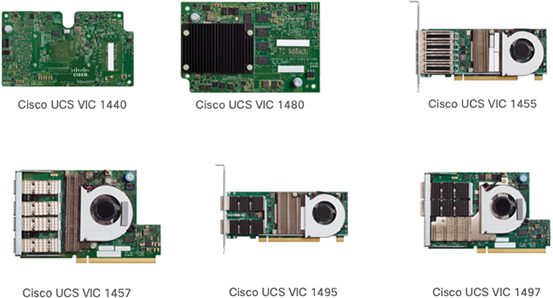

The Cisco UCS Virtual Interface Card 1400 Series (Figure 11-19) extends the network fabric directly to both servers and virtual machines so that a single connectivity mechanism can be used to connect both physical and virtual servers with the same level of visibility and control. Cisco VICs provide complete programmability of the Cisco UCS I/O infrastructure, with the number and type of I/O interfaces configurable on demand with a zero-touch model.

Cisco VICs support Cisco Single Connect technology, which provides an easy, intelligent, and efficient way to connect and manage computing in your data center. Cisco Single Connect unifies LAN, SAN, and systems management into one simplified link for rack servers, blade servers, and virtual machines. This technology reduces the number of network adapters, cables, and switches needed and simplifies the network. Cisco VICs can support 256 PCI Express (PCIe) virtual devices, either virtual network interface cards (vNICs) or virtual host bus adapters (vHBAs), with a high rate of I/O operations per second (IOPS), support for lossless Ethernet, and 10/25/40/100-Gbps connection to servers. The PCIe Generation 3 x16 interface helps ensure optimal bandwidth to the host for network-intensive applications, with a redundant path to the fabric interconnect. Cisco VICs support NIC teaming with fabric failover for increased reliability and availability. In addition, they provide a policy-based, stateless, agile server infrastructure for your data center.

The VIC 1400 Series is designed exclusively for the M5 generation of UCS B-Series blade servers, C-Series rack servers, and S-Series storage servers. The adapters are capable of supporting 10/25/40/100-Gigabit Ethernet and Fibre Channel over Ethernet. The series incorporates Cisco’s next-generation converged network adapter (CNA) technology and offers a comprehensive feature set, providing investment protection for future feature software releases. In addition, the VIC supports Cisco’s Data Center Virtual Machine Fabric Extender (VM-FEX) technology. This technology extends the Cisco UCS Fabric Interconnect ports to virtual machines, simplifying server virtualization deployment.

The Cisco UCS VIC 1400 Series provides the following features and benefits (see Figure 11-20):

• Stateless and agile platform: The personality of the card is determined dynamically at boot time using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, bandwidth, and quality of service (QoS) policies of the PCIe interfaces are all determined using the service profile. The capability to define, create, and use interfaces on demand provides a stateless and agile server infrastructure.

• Network interface virtualization: Each PCIe interface created on the VIC is associated with an interface on the Cisco UCS Fabric Interconnect, providing complete network separation for each virtual cable between a PCIe device on the VIC and the interface on the fabric interconnect.
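The stateless model described above can be sketched as a small data structure: the service profile, not the card, holds the number, type, and identity of the PCIe interfaces. This is an illustrative model only. The class names, field names, and the MAC and WWN values are hypothetical, and the real service profile schema in the Cisco UCS Manager is far richer.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MAX_VIRTUAL_DEVICES = 256  # PCIe virtual devices per VIC, as stated in the text


@dataclass(frozen=True)
class VirtualInterface:
    name: str
    kind: str      # "vNIC" or "vHBA"
    identity: str  # MAC address for a vNIC, WWN for a vHBA
    fabric: str    # "A" or "B"
    failover: bool = False


@dataclass
class ServiceProfile:
    name: str
    interfaces: List[VirtualInterface] = field(default_factory=list)

    def add(self, iface: VirtualInterface) -> None:
        """Add an interface definition, enforcing type and device-count limits."""
        if iface.kind not in ("vNIC", "vHBA"):
            raise ValueError(f"unknown interface type: {iface.kind}")
        if len(self.interfaces) >= MAX_VIRTUAL_DEVICES:
            raise ValueError("VIC virtual device limit reached")
        self.interfaces.append(iface)

    def personality(self) -> Dict[str, int]:
        """Count of each interface type the VIC would present at boot."""
        counts = {"vNIC": 0, "vHBA": 0}
        for iface in self.interfaces:
            counts[iface.kind] += 1
        return counts


# Hypothetical identities, for illustration only.
profile = ServiceProfile("web-server-01")
profile.add(VirtualInterface("eth0", "vNIC", "00:25:B5:00:00:01", "A", failover=True))
profile.add(VirtualInterface("eth1", "vNIC", "00:25:B5:00:00:02", "B", failover=True))
profile.add(VirtualInterface("fc0", "vHBA", "20:00:00:25:B5:00:00:01", "A"))
profile.add(VirtualInterface("fc1", "vHBA", "20:00:00:25:B5:00:00:02", "B"))
print(profile.personality())
```

Because the identities live in the profile, associating the same profile with a different server would present the same interfaces there, which is what makes the server hardware interchangeable.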

Figure 11-20 Cisco UCS Virtual Interface Cards (VICs)

UCS M5 B-Series VIC:

• Cisco VIC 1440

• Single-port 40-Gigabit Ethernet or 4 × 10-Gbps Ethernet/FCoE capable modular LAN On Motherboard (mLOM).

• Cisco UCS VIC 1440 capabilities are enabled for two ports of 40-Gbps Ethernet.

• UCS VIC 1440 enables a policy-based, stateless, agile server infrastructure that can present to the host PCIe standards-compliant interfaces that can be dynamically configured as either NICs or HBAs.

• Cisco VIC 1480

• Single-port 40-Gigabit Ethernet or 4 × 10-Gigabit Ethernet/FCoE capable mezzanine card (mezz).

• UCS VIC 1480 enables a policy-based, stateless, agile server infrastructure that can present PCIe standards-compliant interfaces to the host that can be dynamically configured as either NICs or HBAs.

UCS M5 C-Series VIC:

• Cisco VIC 1455

• Quad-port Small Form-Factor Pluggable (SFP28) half-height PCIe card.

• Supports 10/25-Gigabit Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

• Cisco VIC 1457

• Quad-port Small Form-Factor Pluggable (SFP28) mLOM card.

• Supports 10/25-Gigabit Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

• Cisco VIC 1495

• Dual-port Quad Small Form-Factor (QSFP28) PCIe card.

• Supports 40/100-Gigabit Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as NICs or HBAs.

• Cisco VIC 1497

• Dual-port Quad Small Form-Factor (QSFP28) mLOM card.

• The card supports 40/100-Gigabit Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as NICs or HBAs.

Cisco UCS Initial Setup and Management

The Cisco UCS Manager enables you to manage general and complex server deployments. For example, you can manage a general deployment with a pair of fabric interconnects, the redundant server access layer that you get with the first chassis, which can scale up to 20 chassis and up to 160 physical servers. This can be a combination of blades and rack-mount servers to support the workload in your environment. As you add more servers, you can continue to perform server provisioning, device discovery, inventory, configuration, diagnostics, monitoring, fault detection, and auditing.

Beginning with release 4.0(2a), the Cisco UCS Manager extends support for all existing features on the following Cisco UCS hardware unless specifically noted:

• Cisco UCS C480 M5 ML Server

• Cisco UCS VIC 1495

• Cisco UCS VIC 1497

• Cisco UCS 6454 Fabric Interconnect

• Cisco UCS VIC 1455

• Cisco UCS VIC 1457

• Cisco UCS C125 M5 Server

By default, the Cisco UCS 6454 Fabric Interconnect, the Cisco UCS 6332 FIs, the Cisco UCS Mini 6324 FIs, and the UCS 6200 Series FIs include centralized management. You can manage the Cisco UCS blade servers and rack-mount servers that are in the same domain from one console. You can also manage the Cisco UCS Mini from the Cisco UCS Manager.

To ensure optimum server performance, you can configure the amount of power that you allocate to servers. You can also set the server boot policy, the location from which the server boots, and the order in which the boot devices are invoked. You can create service profiles for the Cisco UCS B-Series blade servers and the Cisco UCS Mini to assign to servers. Service profiles enable you to assign BIOS settings, security settings, the number of vNICs and vHBAs, and anything else that you want to apply to a server. Initial configuration of fabric interconnects is performed using the console connection. It is essential to maintain symmetric Cisco UCS Manager versions between the fabric interconnects in a domain.

Follow these steps to perform the initial configuration for the Cisco UCS Manager:

Step 1. Power on the fabric interconnect. You see the power-on self-test messages as the fabric interconnect boots.

Step 2. If the system obtains an IPv4 or IPv6 address lease, go to step 6; otherwise, continue to the next step.

Step 3. Connect to the console port.

Step 4. At the installation method prompt, enter GUI.

Step 5. If the system cannot access a DHCP server, you are prompted to enter the following information:

• IPv4 or IPv6 address for the management port on the fabric interconnect

• IPv4 subnet mask or IPv6 prefix for the management port on the fabric interconnect

• IPv4 or IPv6 address for the default gateway assigned to the fabric interconnect

Note

In a cluster configuration, both fabric interconnects must be assigned the same management interface address type during setup.

Step 6. Copy the web link from the prompt into a web browser and go to the Cisco UCS Manager GUI launch page.

Step 7. On the Cisco UCS Manager GUI launch page, select Express Setup.

Step 8. On the Express Setup page, select Initial Setup and click Submit.

Step 9. In the Cluster and Fabric Setup area, do the following:

• Click the Enable Clustering option.

• For the Fabric Setup option, select Fabric A.

• In the Cluster IP Address field, enter the IPv4 or IPv6 address that the Cisco UCS Manager will use.

Step 10. In the System Setup area, complete the following fields:

Step 11. Click Submit. A page then displays the results of your setup operation.

Another option is to use the command-line interface (CLI) to configure the primary fabric interconnect as follows:

Step 1. Connect to the console port.

Step 2. Power on the fabric interconnect. You see the power-on self-test messages as the fabric interconnect boots.

Step 3. When the unconfigured system boots, it prompts you for the setup method to be used. Enter console to continue the initial setup using the console CLI.

Step 4. Enter setup to continue as an initial system setup.

Step 5. Enter y to confirm that you want to continue the initial setup.

Step 6. Enter the password for the admin account.

Step 7. To confirm, reenter the password for the admin account.

Step 8. Enter yes to continue the initial setup for a cluster configuration.

Step 9. Enter the fabric interconnect fabric (either A or B).

Step 10. Enter the system name.

Step 11. Enter the IPv4 or IPv6 address for the management port of the fabric interconnect. If you enter an IPv4 address, you are prompted to enter an IPv4 subnet mask. If you enter an IPv6 address, you are prompted to enter an IPv6 network prefix.

Step 12. Enter the respective IPv4 subnet mask or IPv6 network prefix; then press Enter. You are prompted for an IPv4 or IPv6 address for the default gateway, depending on the address type you entered for the management port of the fabric interconnect.

Step 13. Enter either of the following:

• IPv4 address of the default gateway

• IPv6 address of the default gateway

Step 14. Enter yes if you want to specify the IP address for the DNS server or no if you do not.

Step 15. (Optional) Enter the IPv4 or IPv6 address for the DNS server. The address type must be the same as the address type of the management port of the fabric interconnect.

Step 16. Enter yes if you want to specify the default domain name or no if you do not.

Step 17. (Optional) Enter the default domain name.

Step 18. Review the setup summary and enter yes to save and apply the settings, or enter no to go through the Setup wizard again to change some of the settings. If you choose to go through the Setup wizard again, it provides the values you previously entered, and the values appear in brackets. To accept previously entered values, press Enter.

Example 11-1 sets up the first fabric interconnect for a cluster configuration using the console to set IPv4 management addresses.

Example 11-1 UCS FI IPv4 Initialization

    Enter the installation method (console/gui)? console
    Enter the setup mode (restore from backup or initial setup) [restore/setup]? setup
    You have chosen to setup a new switch. Continue? (y/n): y
    Enter the password for "admin": adminpassword
    Confirm the password for "admin": adminpassword
    Do you want to create a new cluster on this switch (select 'no' for standalone setup or if you want this switch to be added to an existing cluster)? (yes/no) [n]: yes
    Enter the switch fabric (A/B): A
    Enter the system name: dccor
    Mgmt0 IPv4 address: 192.168.10.11
    Mgmt0 IPv4 netmask: 255.255.255.0
    IPv4 address of the default gateway: 192.168.0.1
    Virtual IPv4 address: 192.168.0.10
    Configure the DNS Server IPv4 address? (yes/no) [n]: yes
      DNS IPv4 address: 198.18.133.200
    Configure the default domain name? (yes/no) [n]: yes
      Default domain name: domainname.com
    Join centralized management environment (UCS Central)? (yes/no) [n]: no
    Following configurations will be applied:
      Switch Fabric=A
      System Name=dccor
      Management IP Address=192.168.0.11
      Management IP Netmask=255.255.255.0
      Default Gateway=192.168.0.1
      Cluster Enabled=yes
      Virtual Ip Address=192.168.0.10
      DNS Server=198.18.133.200
      Domain Name=domainname.com
    Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Example 11-2 sets up the first fabric interconnect for a cluster configuration using the console to set IPv6 management addresses:

Example 11-2 UCS FI IPv6 Initialization

    Enter the installation method (console/gui)? console
    Enter the setup mode (restore from backup or initial setup) [restore/setup]? setup
    You have chosen to setup a new switch. Continue? (y/n): y
    Enter the password for "admin": adminpassword
    Confirm the password for "admin": adminpassword
    Do you want to create a new cluster on this switch (select 'no' for standalone setup or if you want this switch to be added to an existing cluster)? (yes/no) [n]: yes
    Enter the switch fabric (A/B): A
    Enter the system name: dccor
    Mgmt0 address: 2020::207
    Mgmt0 IPv6 prefix: 64
    IPv6 address of the default gateway: 2020::1
    Configure the DNS Server IPv6 address? (yes/no) [n]: yes
      DNS IP address: 2020::201
    Configure the default domain name? (yes/no) [n]: yes
      Default domain name: domainname.com
    Join centralized management environment (UCS Central)? (yes/no) [n]: no
    Following configurations will be applied:
      Switch Fabric=A
      System Name=dccor
      Enforced Strong Password=no
      Physical Switch Mgmt0 IPv6 Address=2020::207
      Physical Switch Mgmt0 IPv6 Prefix=64
      Default Gateway=2020::1
      Ipv6 value=1
      DNS Server=2020::201
      Domain Name=domainname.com
    Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes
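Before you type values into the setup dialog, it can help to sanity-check them offline. The following sketch uses Python's standard `ipaddress` module and is not part of the Cisco UCS Manager. The function name `validate_mgmt_settings` is hypothetical. The address-type rule for the DNS server comes from the steps above; the check that the default gateway sits inside the management subnet is a general networking assumption added here, not a documented setup-wizard rule.

```python
import ipaddress
from typing import List, Optional


def validate_mgmt_settings(mgmt_ip: str, mask_or_prefix: str, gateway: str,
                           dns: Optional[str] = None) -> List[str]:
    """Return a list of problems found in proposed management settings."""
    problems = []
    # Accepts both "255.255.255.0" style masks and "64" style prefix lengths.
    iface = ipaddress.ip_interface(f"{mgmt_ip}/{mask_or_prefix}")
    gw = ipaddress.ip_address(gateway)
    if gw.version != iface.version:
        problems.append("gateway address type differs from management port")
    elif gw not in iface.network:
        # Assumption: an on-link gateway is expected.
        problems.append("gateway is outside the management subnet")
    if dns is not None and ipaddress.ip_address(dns).version != iface.version:
        problems.append("DNS address type differs from management port")
    return problems


# Values taken from the configuration summaries in Examples 11-1 and 11-2.
ipv4_problems = validate_mgmt_settings(
    "192.168.0.11", "255.255.255.0", "192.168.0.1", "198.18.133.200")
ipv6_problems = validate_mgmt_settings("2020::207", "64", "2020::1", "2020::201")
print(ipv4_problems, ipv6_problems)
```

A management address on a different subnet from the gateway, or a DNS server of the other address family, would be reported as a problem string rather than an empty list.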

To configure the subordinate fabric interconnect using the GUI, follow these steps:

Step 1. Power up the fabric interconnect. You see the power-on self-test message as the fabric interconnect boots.

Step 2. If the system obtains a lease, go to step 6; otherwise, continue to the next step.

Step 3. Connect to the console port.

Step 4. At the installation method prompt, enter GUI.

Step 5. If the system cannot access a DHCP server, you are prompted to enter the following information:

• IPv4 or IPv6 address for the management port on the fabric interconnect

• IPv4 subnet mask or IPv6 prefix for the management port on the fabric interconnect

• IPv4 or IPv6 address for the default gateway assigned to the fabric interconnect

Note

In a cluster configuration, both fabric interconnects must be assigned the same management interface address type during setup.

Step 6. Copy the web link from the prompt into a web browser and go to the Cisco UCS Manager GUI launch page.

Step 7. On the Cisco UCS Manager GUI launch page, select Express Setup.

Step 8. On the Express Setup page, select Initial Setup and click Submit. The fabric interconnect should detect the configuration information for the first fabric interconnect.

Step 9. In the Cluster and Fabric Setup Area, do the following:

• Select the Enable Clustering option.

• For the Fabric Setup option, make sure Fabric B is selected.

Step 10. In the System Setup Area, enter the password for the Admin account into the Admin Password of Master field. The Manager Initial Setup Area is displayed.

Step 11. In the Manager Initial Setup Area, the field that is displayed depends on whether you configured the first fabric interconnect with an IPv4 or IPv6 management address. Complete the field that is appropriate for your configuration, as follows:

• Peer FI is IPv4 Cluster enabled. Please Provide Local fabric interconnect Mgmt0 IPv4 Address: Enter an IPv4 address for the Mgmt0 interface on the local fabric interconnect.

• Peer FI is IPv6 Cluster Enabled. Please Provide Local fabric interconnect Mgmt0 IPv6 Address: Enter an IPv6 address for the Mgmt0 interface on the local fabric interconnect.

Step 12. Click Submit. A page displays the results of your setup operation.

To configure the subordinate fabric interconnect using the CLI, follow these steps:

Step 1. Connect to the console port.

Step 2. Power up the fabric interconnect. You see the power-on self-test messages as the fabric interconnect boots.

Step 3. When the unconfigured system boots, it prompts you for the setup method to be used. Enter console to continue the initial setup using the console CLI.

Note

The fabric interconnect should detect the peer fabric interconnect in the cluster. If it does not, check the physical connections between the L1 and L2 ports, and verify that the peer fabric interconnect has been enabled for a cluster configuration.

Step 4. Enter y to add the subordinate fabric interconnect to the cluster.

Step 5. Enter the admin password of the peer fabric interconnect.

Step 6. Enter the IP address for the management port on the subordinate fabric interconnect.

Step 7. Review the setup summary and enter yes to save and apply the settings, or enter no to go through the Setup wizard again to change some of the settings. If you choose to go through the Setup wizard again, it provides the values you previously entered, and the values appear in brackets. To accept previously entered values, press Enter.

Example 11-3 sets up the second fabric interconnect for a cluster configuration using the console and the IPv4 address of the peer.

Example 11-3 UCS Second FI IPv4 Initialization

    Enter the installation method (console/gui)? console
    Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y
    Enter the admin password of the peer Fabric Interconnect: adminpassword
    Peer Fabric interconnect Mgmt0 IPv4 Address: 192.168.10.11
    Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Example 11-4 sets up the second fabric interconnect for a cluster configuration using the console and the IPv6 address of the peer.

Example 11-4 UCS Second FI IPv6 Initialization

    Enter the installation method (console/gui)? console
    Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y
    Enter the admin password of the peer Fabric Interconnect: adminpassword
    Peer Fabric interconnect Mgmt0 IPv6 Address: 2020::207
    Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

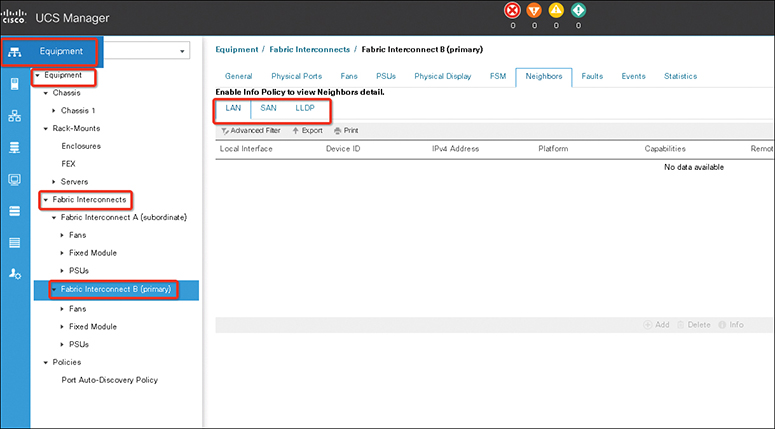

You can verify that both fabric interconnect configurations are complete by logging in to the fabric interconnect via SSH or the GUI and checking the cluster status, either through the CLI with the commands in Table 11-4 or through the GUI, as shown in Figure 11-21.

Table 11-4 Cluster Verification CLI

Figure 11-21 Cisco UCS Manager Cluster Verification

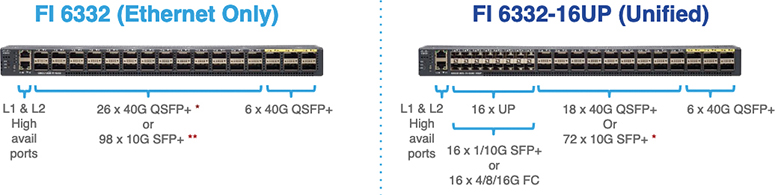

Fabric Interconnect Connectivity and Configurations

A fully redundant Cisco Unified Computing System consists of two independent fabric planes: Fabric A and Fabric B. Each plane consists of a central fabric interconnect (Cisco UCS 6300 or 6200 Series Fabric Interconnects) connected to an I/O module (Fabric Extender) in each blade chassis. The two fabric interconnects are completely independent from the perspective of the data plane; the Cisco UCS can function with a single fabric interconnect if the other fabric is offline or not provisioned (see Figure 11-22).

Figure 11-22 UCS Fabric Interconnect (FI) Status

The following steps show how to determine the primary fabric interconnect:

Step 1. In the Navigation pane, click Equipment.

Step 2. Expand Equipment > Fabric Interconnects.

Step 3. Click the fabric interconnect for which you want to identify the role.

Step 4. In the Work pane, click the General tab.

Step 5. In the General tab, click the down arrows on the High Availability Details bar to expand that area.

Step 6. View the Leadership field to determine whether the fabric interconnect is primary or subordinate.

Note

If the admin password is lost, you can determine the primary and secondary roles of the fabric interconnects in a cluster by opening the Cisco UCS Manager GUI from the IP addresses of both fabric interconnects. The subordinate fabric interconnect fails with the following message: “UCSM GUI is not available on secondary node.”

The fabric interconnect is the core component of the Cisco UCS. Cisco UCS Fabric Interconnects provide uplink access to LAN, SAN, and out-of-band management segments, as shown in Figure 11-23. Cisco UCS infrastructure management is handled through the embedded management software, the Cisco UCS Manager, for both hardware and software management. The Cisco UCS Fabric Interconnects are top-of-rack devices and provide unified access to the Cisco UCS domain.

Figure 11-23 Cisco UCS Components Logical Connectivity

All network endpoints, such as host bus adapters (HBAs) and management entities such as Cisco Integrated Management Controllers (CIMCs; formerly referred to as baseboard management controllers, or BMCs), are dual-connected to both fabric planes and thus can work in an active-active configuration.

Virtual port channels (vPCs) are not supported on the fabric interconnects, although the upstream LAN switches to which they connect can be vPC or Virtual Switching System (VSS) peers.

Cisco UCS Fabric Interconnects provide network connectivity and management for the connected servers. They run the Cisco UCS Manager control software and consist of expansion modules for the Cisco UCS Manager software.

Uplink Connectivity

Fabric interconnect ports configured as uplink ports are used to connect to upstream network switches. You can connect these uplink ports to upstream switch ports as individual links or as links configured as port channels. Port channel configurations provide bandwidth aggregation as well as link redundancy.

You can achieve northbound connectivity from the fabric interconnect through a standard uplink, a port channel, or a virtual port channel configuration. The port channel name and ID configured on the fabric interconnect should match the name and ID configuration on the upstream Ethernet switch.

It is also possible to configure a port channel as a vPC, where port channel uplink ports from a fabric interconnect are connected to different upstream switches. After all uplink ports are configured, you can create a port channel for these ports.

Downlink Connectivity

Each fabric interconnect is connected to I/O modules in the Cisco UCS chassis, which provides connectivity to each blade server. Internal connectivity from blade servers to IOMs is transparently provided by the Cisco UCS Manager using the 10GBASE-KR Ethernet standard for backplane implementations, and no additional configuration is required. You must configure the connectivity between the fabric interconnect server ports and IOMs. Each IOM, when connected with the fabric interconnect server port, behaves as a line card to the fabric interconnect; hence, IOMs should never be cross-connected to the fabric interconnect. Each IOM is connected directly to a single fabric interconnect.

The Fabric Extender (also referred to as the IOM, or FEX) logically extends the fabric interconnects to the blade server. The best analogy is to think of it as a remote line card that’s embedded in the blade server chassis, allowing connectivity to the external world. IOM settings are pushed via the Cisco UCS Manager and are not managed directly. The primary functions of this module are to facilitate blade server I/O connectivity (internal and external), multiplex all I/O traffic up to the fabric interconnects, and help monitor and manage the Cisco UCS infrastructure. You should configure fabric interconnect ports that should be connected to downlink IOM cards as server ports. You need to make sure there is physical connectivity between the fabric interconnect and IOMs. You must also configure the IOM ports and the global chassis discovery policy.

Fabric Interconnect Port Modes

The port mode determines whether a unified port on the fabric interconnect is configured to carry Ethernet or Fibre Channel traffic. The fabric interconnect does not automatically discover the port mode; you configure it in the Cisco UCS Manager.

Changing the port mode deletes the existing port configuration and replaces it with a new logical port. Any objects associated with that port configuration, such as VLANs and VSANs, are also removed. There is no restriction on the number of times you can change the port mode for a unified port.

When you set the port mode to Ethernet, you can configure the following Ethernet port types:

• Server ports

• Ethernet uplink ports

• Ethernet port channel members

• Appliance ports

• Appliance port channel members

• SPAN destination ports

• SPAN source ports

Note

For SPAN source ports, you configure one of the port types and then configure the port as a SPAN source.

For Fibre Channel, you can configure the following port types:

• Fibre Channel uplink ports

• Fibre Channel port channel members

• Fibre Channel storage ports

• FCoE uplink ports

• SPAN source ports

A port must be explicitly defined as a specific type, and this type defines the port behavior. For example, discovery of components such as Fabric Extenders or blades is performed only on server ports. Similarly, uplink ports are automatically configured as IEEE 802.1Q trunks for all VLANs defined on the fabric interconnect.
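The port-mode rules above can be summarized in a small model: the mode restricts which port types are valid, and changing the mode discards the existing configuration. This is a sketch for study purposes only. The `UnifiedPort` class, its method names, the short type labels, and the choice of Ethernet as the starting mode are all assumptions, not the Cisco UCS Manager object model.

```python
ALLOWED_TYPES = {
    "ethernet": {
        "server", "ethernet uplink", "ethernet port channel member",
        "appliance", "appliance port channel member",
        "span destination", "span source",
    },
    "fc": {
        "fibre channel uplink", "fibre channel port channel member",
        "fibre channel storage", "fcoe uplink", "span source",
    },
}


class UnifiedPort:
    """Toy model of a unified port on a fabric interconnect."""

    def __init__(self, port_id: str, mode: str = "ethernet"):
        if mode not in ALLOWED_TYPES:
            raise ValueError(f"unknown port mode: {mode}")
        self.port_id = port_id
        self.mode = mode
        self.port_type = None  # a port has no behavior until a type is set
        self.vlans = set()
        self.vsans = set()

    def set_type(self, port_type: str) -> None:
        """Explicitly define the port type; it must be valid for the mode."""
        if port_type not in ALLOWED_TYPES[self.mode]:
            raise ValueError(f"{port_type!r} is not valid in {self.mode} mode")
        self.port_type = port_type

    def set_mode(self, mode: str) -> None:
        """Change the port mode; the old configuration is deleted."""
        if mode not in ALLOWED_TYPES:
            raise ValueError(f"unknown port mode: {mode}")
        if mode != self.mode:
            self.mode = mode
            self.port_type = None
            self.vlans.clear()
            self.vsans.clear()


port = UnifiedPort("1/1")
port.set_type("ethernet uplink")
port.vlans.update({10, 20})
port.set_mode("fc")  # VLANs and the port type are gone after this call
print(port.mode, port.port_type, port.vlans)
```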

The following steps show how to verify fabric interconnect neighbors:

Step 1. In the Navigation pane, click Equipment.

Step 2. In the Equipment tab, expand Equipment > Fabric Interconnects.

Step 3. Click the fabric interconnect for which you want to view the LAN, SAN, or LLDP neighbors.

Step 4. In the Work pane, click the Neighbors tab.

Step 5. Click the LAN, SAN, or LLDP subtab. This subtab lists all the LAN, SAN, or LLDP neighbors of the specified fabric interconnect. (See Figure 11-24.)

Figure 11-24 UCS Fabric Interconnect (FI) Neighbors Detail

Note

In either Ethernet switching mode, a fabric interconnect does not require an upstream switch for Layer 2 traffic between two servers connected to it on the same fabric.

An external switch is required for switching Layer 2 traffic between servers if vNICs belonging to the same VLAN are mapped to different fabric interconnects (see Figure 11-25).

Figure 11-25 UCS FI to External LAN Connection
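The rule in the preceding note and paragraph reduces to a comparison of fabric and VLAN. The sketch below illustrates that decision; the `Vnic` class and `l2_path` function are hypothetical names used only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vnic:
    name: str
    vlan: int
    fabric: str  # "A" or "B": the fabric interconnect the vNIC maps to


def l2_path(src: Vnic, dst: Vnic) -> str:
    """Where Layer 2 traffic between two vNICs is switched."""
    if src.vlan != dst.vlan:
        return "not layer 2"      # different VLANs need routing; out of scope here
    if src.fabric == dst.fabric:
        return "local"            # switched by the fabric interconnect itself
    return "upstream switch"      # must cross an external switch between fabrics


a1 = Vnic("server1-eth0", vlan=100, fabric="A")
a2 = Vnic("server2-eth0", vlan=100, fabric="A")
b1 = Vnic("server3-eth0", vlan=100, fabric="B")
print(l2_path(a1, a2), "|", l2_path(a1, b1))
```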

Fabric Failover for Ethernet: High-Availability vNIC

To understand the switching mode behavior, you need to understand the fabric-based failover feature for Ethernet in the Cisco UCS. Each adapter in the Cisco UCS is a dual-port adapter that connects to both fabrics (A and B). The two fabrics in the Cisco UCS provide failover protection in the event of planned or unplanned component downtime in one of the fabrics. Typically, host software—such as NIC teaming for Ethernet and PowerPath or multipath I/O (MPIO) for Fibre Channel—provides failover across the two fabrics (see Figure 11-26).

Figure 11-26 UCS Fabric Traffic Failover Example

A vNIC in the Cisco UCS is a host-presented PCI device that is centrally managed by the Cisco UCS Manager. The fabric-based failover feature, which you enable by selecting the high-availability vNIC option in the service profile definition, allows network interface virtualization (NIV)-capable adapters (Cisco virtual interface card, or VIC) and the fabric interconnects to provide active-standby failover for Ethernet vNICs without any NIC-teaming software on the host.

For unicast traffic failover, the fabric interconnect in the new path sends gratuitous Address Resolution Protocol (gARP) messages. This process refreshes the forwarding tables on the upstream switches.

For multicast traffic, the new active fabric interconnect sends an Internet Group Management Protocol (IGMP) Global Leave message to the upstream multicast router. The upstream multicast router responds by sending an IGMP query that is flooded to all vNICs. The host OS responds to these IGMP queries by rejoining all relevant multicast groups. This process forces the hosts to refresh the multicast state in the network in a timely manner.

Cisco UCS fabric failover is an important feature because it reduces the complexity of defining NIC teaming software for failover on the host. It does this transparently in the fabric based on the network property that is defined in the service profile.
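The failover sequence described in this section can be traced with a simplified simulation. It is a sketch under stated assumptions: the `Vnic` class, the `handle_fabric_failure` function, and the event strings are hypothetical, and a real fabric interconnect performs these actions in hardware and firmware rather than per-vNIC software steps.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vnic:
    name: str
    active_fabric: str     # "A" or "B"
    fabric_failover: bool  # high-availability vNIC option in the service profile
    up: bool = True


def peer_fabric(fabric: str) -> str:
    return "B" if fabric == "A" else "A"


def handle_fabric_failure(vnics: List[Vnic], failed: str) -> List[str]:
    """Move failover-enabled vNICs off the failed fabric and list the
    notifications the surviving fabric interconnect sends upstream."""
    survivor = peer_fabric(failed)
    events = []
    moved = False
    for vnic in vnics:
        if vnic.active_fabric != failed:
            continue
        if vnic.fabric_failover:
            vnic.active_fabric = survivor
            moved = True
            # Unicast: gARP refreshes upstream forwarding tables.
            events.append(f"FI-{survivor}: gARP for {vnic.name}")
        else:
            # Without fabric failover, host software (NIC teaming) must react.
            vnic.up = False
    if moved:
        # Multicast: prompts an IGMP query so hosts rejoin their groups.
        events.append(f"FI-{survivor}: IGMP Global Leave to upstream multicast router")
    return events


vnics = [
    Vnic("eth0", "A", fabric_failover=True),
    Vnic("eth1", "A", fabric_failover=False),
    Vnic("eth2", "B", fabric_failover=True),
]
events = handle_fabric_failure(vnics, failed="A")
for line in events:
    print(line)
```

In this run only `eth0` moves to Fabric B; `eth1` goes down because it relies on host-side teaming, and `eth2` is unaffected.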

Ethernet Switching Mode

The Ethernet switching mode determines how the fabric interconnect behaves as a switching device between the servers and the network. The fabric interconnect operates in either of the following Ethernet switching modes:

• End-host mode

• Switching mode

In end-host mode, the Cisco UCS presents an end host to an external Ethernet network. The external LAN sees the Cisco UCS Fabric Interconnect as an end host with multiple adapters (see Figure 11-27).

Figure 11-27 UCS FI End-Host Mode Ethernet

End-host mode allows the fabric interconnect to act as an end host to the network, representing all servers (hosts) connected to it through vNICs. This behavior is achieved by pinning (either dynamically pinning or hard pinning) vNICs to uplink ports, which provides redundancy to the network, and makes the uplink ports appear as server ports to the rest of the fabric.

In end-host mode, the fabric interconnect does not run the Spanning Tree Protocol (STP). Instead, it avoids loops by preventing uplink ports from forwarding traffic to each other and by denying egress server traffic on more than one uplink port at a time. End-host mode is the default Ethernet switching mode and should be used if either of the following is used upstream:

• Layer 2 switching for Layer 2 aggregation

• vPC or VSS aggregation layer

Note

When you enable end-host mode, if a vNIC is hard pinned to an uplink port and this uplink port goes down, the system cannot repin the vNIC, and the vNIC remains down.

Server links (vNICs on the blades) are associated with a single uplink port, which may also be a port channel. This association process is called pinning, and the selected external interface is called a pinned uplink port. The pinning process can be statically configured when the vNIC is defined or dynamically configured by the system. In end-host mode, pinning is required for traffic flow to a server.

Static pinning is performed by defining a pin group and associating the pin group with a vNIC. Static pinning should be used in scenarios in which a deterministic path is required. When the target (as shown in Figure 11-28) on Fabric Interconnect A goes down, the corresponding failover mechanism of the vNIC goes into effect, and traffic is redirected to the target port on Fabric Interconnect B.

Figure 11-28 UCS LAN Pinning Group Configuration

If the pinning is not static, the vNIC is pinned to an operational uplink port on the same fabric interconnect, and the vNIC failover mechanisms are not invoked until all uplink ports on that fabric interconnect fail. In the absence of Spanning Tree Protocol, the fabric interconnect uses various mechanisms for loop prevention while preserving an active-active topology.
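The difference between static and dynamic pinning can be sketched as an uplink-selection function for one fabric interconnect. This is a simplification: the function name `select_uplink` and the port names are hypothetical, and the dynamic case simply picks the first operational uplink in name order, whereas the actual distribution algorithm used by the system is not described in this chapter.

```python
from typing import Dict, Optional


def select_uplink(uplinks: Dict[str, bool],
                  pin_target: Optional[str] = None) -> Optional[str]:
    """Pick the uplink a vNIC is pinned to on one fabric interconnect.

    uplinks maps uplink name -> operational state.
    Returns None when no uplink can carry the vNIC on this fabric; the vNIC
    then relies on fabric failover (if enabled) or stays down.
    """
    if pin_target is not None:
        # Static (hard) pinning: no repinning to another uplink on this FI.
        return pin_target if uplinks.get(pin_target, False) else None
    # Dynamic pinning: any operational uplink on the same FI will do.
    for name in sorted(uplinks):
        if uplinks[name]:
            return name
    return None


fi_a = {"Eth1/1": True, "Eth1/2": True}
before = select_uplink(fi_a)                       # dynamic pin
static_before = select_uplink(fi_a, "Eth1/1")      # static pin to Eth1/1

fi_a["Eth1/1"] = False                             # pinned uplink fails
after = select_uplink(fi_a)                        # repinned on the same FI
static_after = select_uplink(fi_a, "Eth1/1")       # cannot repin

fi_a["Eth1/2"] = False                             # all uplinks on FI A fail
none_left = select_uplink(fi_a)
print(before, after, static_before, static_after, none_left)
```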

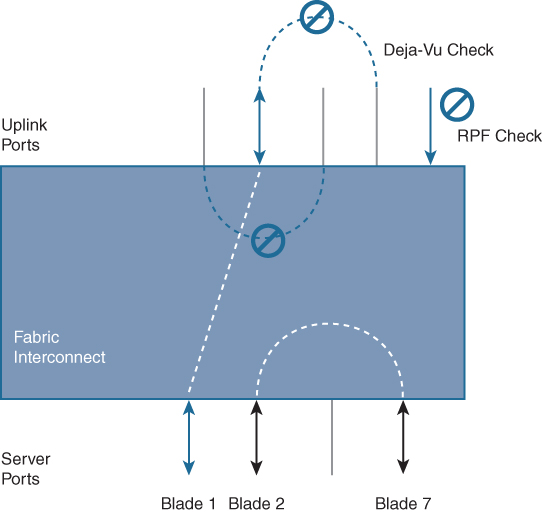

In the Cisco UCS, two types of Ethernet traffic paths have different characteristics: unicast and multicast/broadcast.

• Unicast traffic paths in the Cisco UCS are shown in Figure 11-29. Characteristics of unicast traffic in the Cisco UCS include the following:

• Each server link is pinned to exactly one uplink port (or port channel).

• Server-to-server Layer 2 traffic is locally switched.

• Server-to-network traffic goes out on its pinned uplink port.

• Network-to-server unicast traffic is forwarded to the server only if it arrives on a pinned uplink port. This feature is called the Reverse Path Forwarding (RPF) check.

• Server traffic received on any uplink port, except its pinned uplink port, is dropped (called the deja-vu check).

• The server MAC address must be learned before traffic can be forwarded to it.

Figure 11-29 UCS Unicast Traffic Path

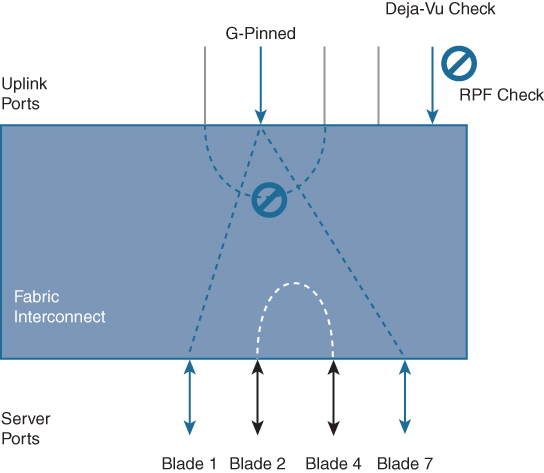

• Multicast/broadcast traffic paths in the Cisco UCS are shown in Figure 11-30. Characteristics of multicast/broadcast traffic in the Cisco UCS include the following:

• Broadcast traffic is pinned on exactly one uplink port in the Cisco UCS Manager, and the incoming broadcast traffic is pinned on a per-VLAN basis, depending on uplink port VLAN membership.

• IGMP multicast groups are pinned based on IGMP snooping. Each group is pinned to exactly one uplink port.

• Server-to-server multicast traffic is locally switched.

• RPF and deja-vu checks also apply to multicast traffic.

Figure 11-30 Multicast and Broadcast Traffic Summary
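The RPF and deja-vu checks for unicast traffic arriving on an uplink can be sketched as follows. This is an illustrative model only: the `Frame` class, the `accept_from_uplink` function, and the MAC and port names are hypothetical. It also assumes one reading of the deja-vu check, namely that a frame whose source MAC address belongs to a local server is dropped when it arrives on an uplink other than that server's pinned uplink.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str


def accept_from_uplink(frame: Frame, ingress_uplink: str, pinning: Dict[str, str]) -> bool:
    """Decide whether a unicast frame received on an uplink is forwarded to a server.

    pinning maps each learned server MAC address to its pinned uplink.
    """
    # Deja-vu check (assumed interpretation): server traffic that comes back
    # on an uplink other than the server's pinned uplink is dropped.
    src_pin = pinning.get(frame.src_mac)
    if src_pin is not None and src_pin != ingress_uplink:
        return False
    # The destination server MAC must already be learned.
    dst_pin = pinning.get(frame.dst_mac)
    if dst_pin is None:
        return False
    # RPF check: forward only if the frame arrived on the destination's pinned uplink.
    return dst_pin == ingress_uplink


pinning = {"00:25:b5:00:00:0a": "Eth1/1", "00:25:b5:00:00:0b": "Eth1/2"}
frame = Frame(src_mac="00:00:0c:07:ac:01", dst_mac="00:25:b5:00:00:0a")
print(accept_from_uplink(frame, "Eth1/1", pinning))  # prints True
print(accept_from_uplink(frame, "Eth1/2", pinning))  # prints False
```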

In switching mode, the fabric interconnect runs STP to avoid loops, and broadcast and multicast packets are handled in the traditional way. You should use the switching mode only if the fabric interconnect is directly connected to a router or if either of the following is used upstream:

• Layer 3 aggregation

• VLAN in a box

In Ethernet switching mode (see Figure 11-31), the Cisco UCS Fabric Interconnects act like traditional Ethernet switches with support for Spanning Tree Protocol on the uplink ports.

Figure 11-31 UCS FI Switch Mode Ethernet

The following are Ethernet switching mode features:

• Spanning Tree Protocol is run on the uplink ports per VLAN as defined by Cisco Per-VLAN Spanning Tree Plus (PVST+).

• Configuration of Spanning Tree Protocol parameters (such as bridge priority and hello timers) is not supported.

• VLAN Trunking Protocol (VTP) is not supported.

• MAC address learning and aging occur on both the server and uplink ports as in a typical Layer 2 switch.

• Upstream links are blocked according to Spanning Tree Protocol rules.

In most cases, end-host mode is preferable because it offers scalability and simplicity for server administrators when connecting to an upstream network. However, there are other factors to consider when selecting the appropriate switching mode, including the following:

• Scalability

• Efficient use of bandwidth

• Fabric failover

• Active-active link utilization

• Disjoint Layer 2 domain or a loop-free topology

• Optimal network behavior for the existing network topology

• Application-specific requirements

Note

For both Ethernet switching modes, even when vNICs are hard-pinned to uplink ports, all server-to-server unicast traffic in the server array is sent only through the fabric interconnect and is never sent through uplink ports. Server-to-server multicast and broadcast traffic is sent through all uplink ports in the same VLAN.

Note

Cisco UCS Manager Release 4.0(2) and later releases support Ethernet and Fibre Channel switching modes on Cisco UCS 6454 Fabric Interconnects.

To configure Ethernet switching mode (see Figure 11-32), follow these steps:

Step 1. In the Navigation pane, click Equipment.

Figure 11-32 Cisco UCS Switch Fabric Mode Configuration

Step 2. Expand Equipment > Fabric Interconnects > Fabric_Interconnect_Name.

Step 3. In the Work pane, click the General tab.

Step 4. In the Actions area of the General tab, click one of the following links:

• Set Ethernet Switching Mode

• Set Ethernet End-Host Mode

Note

The link for the current mode is dimmed.

Step 5. In the dialog box, click Yes. The Cisco UCS Manager restarts the fabric interconnect, logs you out, and disconnects the Cisco UCS Manager GUI.

Note

When you change the Ethernet switching mode, the Cisco UCS Manager logs you out and restarts the fabric interconnect. For a cluster configuration, the Cisco UCS Manager restarts both fabric interconnects. The subordinate fabric interconnect reboots first as a result of the change in switching mode. The primary fabric interconnect reboots only after you acknowledge it in Pending Activities. The primary fabric interconnect can take several minutes to complete the change in Ethernet switching mode and become system ready. The existing configuration is retained. While the fabric interconnects are rebooting, all blade servers lose LAN and SAN connectivity, causing a complete outage of all services on the blades. This might cause the operating system to fail.

In some commonly deployed LAN topologies, switch mode provides the best network behavior. A typical example is a switch directly connected to a pair of Hot Standby Router Protocol (HSRP) routers that are the Spanning Tree Protocol roots on different VLANs. Switch mode provides the optimal path because of the use of Spanning Tree Protocol. In end-host mode, by contrast, a vNIC belonging to an odd-numbered VLAN can be dynamically pinned to link X on Fabric Interconnect A (see Figure 11-33). As a result of this pinning, traffic traverses an extra hop to the HSRP master.

Figure 11-33 VLANs Load-Balanced Across a Pair of Switches

When a switch is directly connected to a pair of HSRP routers, the recommended Ethernet switching mode is switch mode because it provides the optimal path. End-host mode can be used if static pinning is employed.

UCS Device Discovery

The chassis connectivity policy determines whether a specific chassis is included in a fabric port channel after chassis discovery. This policy is helpful for users who want to configure one or more chassis differently from what is specified in the global chassis discovery policy. The chassis connectivity policy also allows for different connectivity modes per fabric interconnect, further expanding the level of control offered with regard to chassis connectivity.

By default, the chassis connectivity policy is set to global. This means that connectivity control is configured when the chassis is newly discovered, using the settings configured in the chassis discovery policy. Once the chassis is discovered, the chassis connectivity policy controls whether the connectivity control is set to none or port channel.
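The relationship between the two policies can be sketched as a simple resolution rule. This is a minimal illustration, not UCS Manager code: the function name `effective_link_grouping` and the string labels `"global"`, `"none"`, and `"port-channel"` are hypothetical stand-ins for the settings described above.

```python
ALLOWED_GROUPING = {"none", "port-channel"}


def effective_link_grouping(connectivity_policy: str, discovery_policy_grouping: str) -> str:
    """Return the link grouping that applies to one chassis on one fabric interconnect.

    connectivity_policy is "global", "none", or "port-channel" (hypothetical labels).
    discovery_policy_grouping is the setting from the chassis discovery policy.
    """
    if discovery_policy_grouping not in ALLOWED_GROUPING:
        raise ValueError(f"unknown discovery policy grouping: {discovery_policy_grouping!r}")
    if connectivity_policy == "global":
        # Default: inherit whatever the chassis discovery policy specifies.
        return discovery_policy_grouping
    if connectivity_policy in ALLOWED_GROUPING:
        # Per-chassis override of the global setting.
        return connectivity_policy
    raise ValueError(f"unknown connectivity policy: {connectivity_policy!r}")


print(effective_link_grouping("global", "port-channel"))  # prints port-channel
print(effective_link_grouping("none", "port-channel"))    # prints none
```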

Chassis/FEX Discovery

The chassis discovery policy determines how the system reacts when you add a new chassis. The Cisco UCS Manager uses the settings in the chassis discovery policy to determine the minimum threshold for the number of links between the chassis and the fabric interconnect and whether to group links from the IOM to the fabric interconnect in a fabric port channel. In a Cisco UCS Mini setup, chassis discovery policy is supported only on the extended chassis.

The Cisco UCS Manager cannot discover any chassis that is wired for fewer links than are configured in the chassis/FEX discovery policy. For example, if the chassis/FEX discovery policy is configured for four links, the Cisco UCS Manager cannot discover any chassis that is wired for one link or two links. Reacknowledgement of the chassis resolves this issue.
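The link threshold in the preceding example can be expressed as a one-line comparison. The sketch below is illustrative only; the function name `discoverable_chassis` and the chassis names are hypothetical, and the model covers only the rule stated above (a chassis wired for fewer links than the policy requires is not discovered).

```python
from typing import Dict, List


def discoverable_chassis(wired_links: Dict[str, int], policy_links: int) -> List[str]:
    """Return the chassis that meet the minimum link count of the discovery policy.

    wired_links maps a chassis name to the number of links cabled to the fabric interconnect.
    """
    return sorted(name for name, links in wired_links.items() if links >= policy_links)


wired = {"chassis-1": 1, "chassis-2": 2, "chassis-3": 4}
print(discoverable_chassis(wired, policy_links=4))  # prints ['chassis-3']
```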

Rack Server Discovery Policy

The rack server discovery policy determines how the system reacts when you add a new rack-mount server. The Cisco UCS Manager uses the settings in the rack server discovery policy to determine whether any data on the hard disks is scrubbed and whether server discovery occurs immediately or needs to wait for explicit user acknowledgment.

The Cisco UCS Manager cannot discover any rack-mount server that has not been correctly cabled and connected to the fabric interconnects. The steps to configure rack server discovery are as follows:

Step 1. In the Navigation pane, click Equipment.

Step 2. Click the Equipment node. In the Work pane, click the Policies tab.

Step 3. Click the Global Policies subtab.

Step 4. In the Rack Server Discovery Policy area, specify the action that you want to occur when a new rack server is added and specify the scrub policy. Then click Save Changes.

Initial Server Setup for Standalone UCS C-Series

Use the following procedure to perform initial setup on a UCS C-Series server:

Step 1. Power up the server. Wait for approximately two minutes to let the server boot in standby power during the first bootup. You can verify power status by looking at the Power Status LED:

• Off: There is no AC power present in the server.

• Amber: The server is in standby power mode. Power is supplied only to the CIMC and some motherboard functions.

• Green: The server is in main power mode. Power is supplied to all server components.

Note

Verify server power requirements because some servers (UCS C-240, for example) require 220V instead of 110V.

Note

During bootup, the server beeps once for each USB device that is attached to the server. Even if no external USB devices are attached, there is a short beep for each virtual USB device, such as a virtual floppy drive, CD/DVD drive, keyboard, or mouse. A beep is also emitted if a USB device is hot-plugged or hot-unplugged during the BIOS power-on self-test (POST), or while you are accessing the BIOS Setup utility or the EFI shell.

Step 2. Connect a USB keyboard and VGA monitor by using the supplied keyboard, video, and mouse (KVM) cable connected to the KVM connector on the front panel. Alternatively, you can use the VGA and USB ports on the rear panel. However, you cannot use the front-panel VGA and the rear-panel VGA at the same time. If you are connected to one VGA connector and you then connect a video device to the other connector, the first VGA connector is disabled.

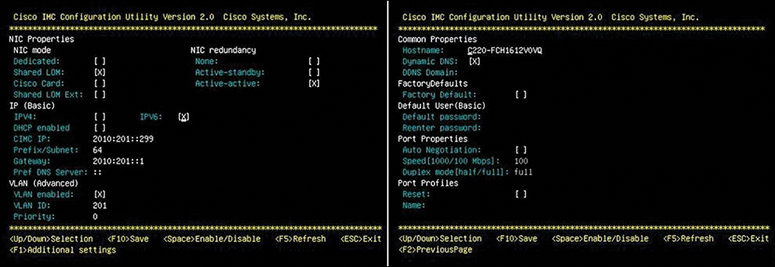

Step 3. Open the Cisco IMC Configuration Utility as follows:

• Press the Power button to boot the server. Watch for the prompt to press F8.

• During bootup, press F8 when prompted to open the Cisco IMC Configuration Utility, as shown in Figure 11-34.

Figure 11-34 Standalone UCS CIMC Configuration Utility

Note

The first time that you enter the Cisco IMC Configuration Utility, you are prompted to change the default password. The default password is password.

The following are the requirements for a strong password:

• The password must have a minimum of 8 characters and a maximum of 14 characters.

• The password must not contain the user’s name.

• The password must contain characters from three of the following four categories:

• English uppercase letters (A through Z)

• English lowercase letters (a through z)

• Base 10 digits (0 through 9)

• Nonalphabetic characters (!, @, #, $, %, ^, &, *, -, _, =, “)
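The strong-password requirements can be checked with a short function such as the following. This is a sketch under stated assumptions, not the Cisco IMC implementation: the function name `is_strong_password` is hypothetical, the user name comparison is assumed to be case-insensitive, and the last character in the nonalphabetic list is assumed to be a straight double quotation mark.

```python
import string

# Nonalphabetic characters from the list above (final character assumed to be ").
SPECIALS = set('!@#$%^&*-_="')


def is_strong_password(password: str, username: str) -> bool:
    """Return True if the password meets the length, user name, and category rules."""
    if not 8 <= len(password) <= 14:
        return False
    # Assumption: the user name check ignores letter case.
    if username and username.lower() in password.lower():
        return False
    categories = [
        any(c in string.ascii_uppercase for c in password),
        any(c in string.ascii_lowercase for c in password),
        any(c in string.digits for c in password),
        any(c in SPECIALS for c in password),
    ]
    # At least three of the four character categories must be present.
    return sum(categories) >= 3


print(is_strong_password("Passw0rd!", "admin"))  # prints True
print(is_strong_password("password", "admin"))   # prints False
```

The default password fails the check because it uses only one character category, which is consistent with the prompt to change it on first use.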

Step 4. Set NIC mode and NIC redundancy as follows:

• Set the NIC mode to your choice for which ports to use to access the CIMC for server management:

• Shared LOM EXT (default): This is shared LOM extended mode. This is the factory-default setting, along with Active-active NIC redundancy and DHCP-enabled. With this mode, the shared LOM and Cisco card interfaces are both enabled.

In this mode, DHCP replies are returned to both the shared LOM ports and the Cisco card ports. If the system determines that the Cisco card connection is not getting its IP address from a Cisco UCS Manager system because the server is in standalone mode, further DHCP requests from the Cisco card are disabled. Use the Cisco card NIC mode if you want to connect to the CIMC through a Cisco card in standalone mode.

• Dedicated: The dedicated management port is used to access the CIMC. You must select a NIC redundancy and IP setting.

• Shared LOM: The 1-Gigabit Ethernet ports are used to access the CIMC. You must select a NIC redundancy and IP setting.

• Cisco Card: The ports on an installed Cisco UCS virtual interface card are used to access the CIMC. You must select a NIC redundancy and IP setting.

• Use this utility to change the NIC redundancy to your preference. This server has three possible NIC redundancy settings:

• None: The Ethernet ports operate independently and do not fail over if there is a problem.

• Active-standby: If an active Ethernet port fails, traffic fails over to a standby port.

• Active-active: All Ethernet ports are utilized simultaneously.

Step 5. Choose whether to enable DHCP for dynamic network settings or to enter static network settings. The static IPv4 and IPv6 settings include the following:

• The Cisco IMC IP address.

• The prefix/subnet. For IPv6, valid values are 1–127.

• The gateway. For IPv6, if you do not know the gateway, you can set it as none by typing :: (two colons).

• The preferred DNS server address. For IPv6, you can set this as none by typing :: (two colons).
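The static IPv6 rules in this step (a prefix length of 1 through 127, and :: meaning "none" for the gateway and preferred DNS server) can be validated with the Python standard library `ipaddress` module. This is a minimal sketch; the names `Ipv6Settings` and `parse_ipv6_settings` and the sample addresses are hypothetical and are not part of the Cisco IMC Configuration Utility.

```python
import ipaddress
from typing import NamedTuple, Optional


class Ipv6Settings(NamedTuple):
    address: ipaddress.IPv6Address
    prefix_length: int
    gateway: Optional[ipaddress.IPv6Address]
    preferred_dns: Optional[ipaddress.IPv6Address]


def _optional(value: str) -> Optional[ipaddress.IPv6Address]:
    """Parse an IPv6 address, treating :: (the unspecified address) as none."""
    parsed = ipaddress.IPv6Address(value)
    return None if parsed.is_unspecified else parsed


def parse_ipv6_settings(address: str, prefix_length: int,
                        gateway: str = "::", preferred_dns: str = "::") -> Ipv6Settings:
    """Validate static IPv6 settings; raises ValueError on invalid input."""
    if not 1 <= prefix_length <= 127:
        raise ValueError("IPv6 prefix length must be in the range 1-127")
    return Ipv6Settings(
        ipaddress.IPv6Address(address),
        prefix_length,
        _optional(gateway),
        _optional(preferred_dns),
    )


settings = parse_ipv6_settings("2001:db8::10", 64, gateway="2001:db8::1")
print(settings.gateway)        # prints 2001:db8::1
print(settings.preferred_dns)  # prints None
```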

Step 6. (Optional) Use this utility to make VLAN settings.

Step 7. (Optional) Set a host name for the server.

Step 8. (Optional) Enable dynamic DNS and set a dynamic DNS (DDNS) domain.

Step 9. (Optional) If you select the Factory Default check box, the server is set back to the factory defaults.

Step 10. (Optional) Set a default user password.

Step 11. (Optional) Enable autonegotiation of port settings or set the port speed and duplex mode manually. Autonegotiation is applicable only when you use the Dedicated NIC mode. Autonegotiation sets the port speed and duplex mode automatically based on the switch port to which the server is connected. If you disable autonegotiation, you must set the port speed and duplex mode manually.

Step 12. (Optional) Reset port profiles and the port name.

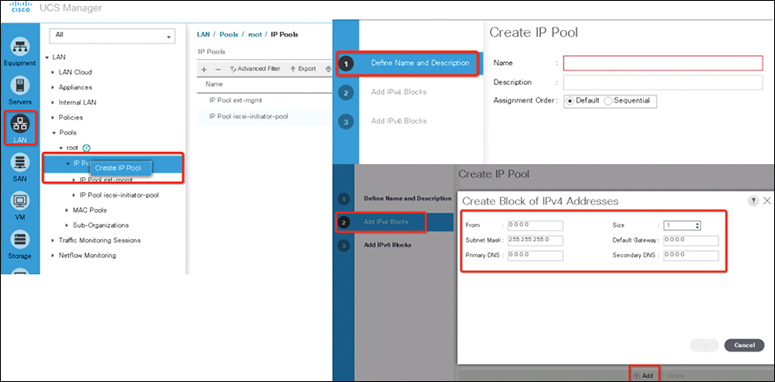

Step 13. Press F5 to refresh the settings you made. You might have to wait about 45 seconds until the new settings appear and the “Network settings configured” message is displayed before you reboot the server in the next step.