9 Evaluating the Outcomes of Information Systems Plans

Managing information technology evaluation – techniques and processes*

As far as I am concerned we could write off our IT expenditure over the last five years to the training budget. (Senior executive, quoted by Earl, 1990)

. . . the area of measurement is the biggest single failure of information systems while it is the single biggest issue in front of our board of directors. I am frustrated by our inability to measure cost and benefit. (Head of IT: AT & T quoted in Coleman and Jamieson, 1991)

Introduction

Information Technology (IT) now represents substantial financial investment. By 1993, UK company expenditure on IT was exceeding £12 billion per year, equivalent to an average of over 1.5% of annual turnover. Public sector IT spend, excluding Ministry of Defence operational equipment, was over £2 billion per year, or 1% of total public expenditure. The size and continuing growth in IT investments, coupled with a recessionary climate and concerns over cost containment from early 1990, have served to place IT issues above the parapet in most organizations, perhaps irretrievably. Understandably, senior managers need to question the returns from such investments and whether the IT route has been, and can be, a wise decision.

This is reinforced in those organizations where IT investment has been a high risk, hidden cost process, often producing disappointed expectations. This is a difficult area about which to generalize, but research studies suggest that at least 20% of expenditure is wasted and between 30% and 40% of IT projects realize no net benefits, however measured (for reviews of research see Willcocks, 1993). The reasons for failure to deliver on IT potential can be complex. However, major barriers, identified by a range of studies, occur in how the IT investment is evaluated and controlled (see for example Grindley, 1991; Kearney, 1990; Wilson, 1991). These barriers are not insurmountable. The purpose of this chapter is to report on recent research and indicate ways forward.

Evaluation: emerging problems

Taking a management perspective, evaluation is about establishing by quantitative and/or qualitative means the worth of IT to the organization. Evaluation brings into play notions of costs, benefits, risk and value. It also implies an organizational process by which these factors are assessed, whether formally or informally.

There are major problems in evaluation. Many organizations find themselves in a Catch 22. For competitive reasons they cannot afford not to invest in IT, but economically they cannot find sufficient justification, and evaluation practice cannot provide enough underpinning, for making the investment. One thing all informed commentators agree on: there are no reliable measures for assessing the impact of IT. At the same time, there are a number of common problem areas that can be addressed. Our own research shows the following to be the most common:

• inappropriate measures

• budgeting practice conceals full costs

• understating human and organizational costs

• understating knock-on costs

• overstating costs

• neglecting ‘intangible’ benefits

• not fully investigating risk

• failure to devote evaluation time and effort to a major capital asset

• failure to take into account time-scale of likely benefits.

This list is by no means exhaustive of the problems faced (a full discussion of these problems and others appears in Willcocks, 1992a). Most occur through neglect, and once identified are relatively easy to rectify. A more fundamental and all too common failure is in not relating IT needs to the information needs of the organization. This relates to the broader issue of strategic alignment.

Strategy and information systems

The organizational investment climate has a key bearing on how investment is organized and conducted, and what priorities are assigned to different IT investment proposals. This is affected by:

• the financial health and market position of the organization

• industry sector pressures

• the organizational business strategy and direction

• the management and decision-making culture.

As an example of the second, 1989–90 research by Datasolve showed IT investment priorities in the retail sector focusing mainly on achieving more timely information, in financial services around better quality service to customers, and in manufacturing on more complete information for decision-making. As to decision-making culture, senior management attitude to risk can range from conservative to innovative, their decision-making styles from directive to consensus-driven (Butler Cox, 1990). As one example, conservative consensus-driven management would tend to take a relatively slow, incremental approach, with large-scale IT investment being unlikely. The third factor will be focused on here, that is creating a strategic climate in which IT investments can be related to organizational direction. Shaping the context in which IT evaluation is conducted is a necessary, frequently neglected prelude to then applying appropriate evaluation techniques and approaches. This section focuses on a few valuable pointers and approaches that work in practice to facilitate IT investment decisions that add value to the organization.

Alignment

A fundamental starting point is the need for alignment of business/organizational needs, what is done with IT, and plans for human resources, organizational structures and processes. The highly publicized 1990 Landmark Study tends to conflate these into alignment of business, organizational and IT strategies (Scott Morton, 1991; Walton, 1989). A simpler approach is to suggest that the word ‘strategy’ should be used only when these different plans are aligned. There is much evidence to suggest that such alignment rarely exists. In a study of 86 UK companies, Ernst and Young (1990) found only two aligned. Detailed research also shows lack of alignment to be a common problem in public sector informatization (Willcocks, 1992b). The case of an advertising agency (cited by Willcocks and Mason, 1994) provides a useful illustrative example:

In the mid-1980s, this agency installed accounting and market forecasting systems at a cost of nearly £100,000. There was no real evaluation of the worth of the IT to the business. It was installed largely because one director had seen similar systems running at a competitor. Its existing systems had been perfectly adequate and the market forecasting system ended up being used just to impress clients. At the same time as the system was being installed, the agency sacked over 36 staff and asked its managers not to spend more than £200 a week on expenses. The company was taken over in 1986. Clearly there had been no integrated plan on the business, human resource, organizational and IT fronts. This passed on into its IT evaluation practice. In the end, the IT amplifier effect may well have operated. IT was not used to address the core, or indeed any, of the needs of the business. A bad management was made correspondingly worse by the application of IT.

One result of such lack of alignment is that IT evaluation practice tends to become separated from business needs and plans on the one hand, and from organizational realities that can influence IT implementation and subsequent effectiveness on the other. Both need to be included in IT evaluation, and indeed are in the more comprehensive evaluation methods, notably the information economics approach (see below).

Another critical alignment is that between what is done with IT and how it fits with the information needs of the organization. Most management attention has tended to fall on the ‘technology’ rather than the ‘information’ element in what is called IT. Hochstrasser and Griffiths (1991) found in their sample no single company with a fully developed and comprehensive strategy on information. Yet it would seem to be difficult to perform a meaningful evaluation of IT investment without some corporate control framework establishing information requirements in relationship to business/organizational goals and purpose, prioritization of information needs and, for example, how cross-corporate information flows need to be managed. An information strategy directs IT investment, and establishes policies and priorities against which investment can be assessed. It may also help to establish that some information needs can be met without the IT vehicle.

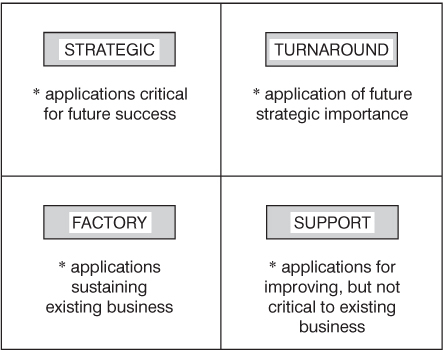

IT Strategic grid

The McFarlan and McKenney (1983) grid is a much-travelled, but useful framework for focusing management attention on the IT evaluation question: where does and will IT give us added value? A variant is shown below in Figure 9.1.

Figure 9.1 Strategic grid analysis

Cases: Two manufacturing companies

When the author used the grid with a group of senior managers in a pharmaceutical company, it was found that too much investment had been allowed on turnaround projects. In a period of downturn in business, it was recognized that the investment in the previous three years should have been in strategic systems. It was resolved to tighten and refocus IT evaluation practice. In a highly decentralized multinational mainly in the printing/publishing industry, it was found that most of the twenty businesses were investing in factory and support systems. In a recessionary climate, competitors were not forcing the issue on other types of system, the company was not strong on IT know-how, and it was decided that the risk-averse policy on IT evaluation, with strong emphasis on cost justification, should continue.

The strategic grid is useful for classifying systems then demonstrating, through discussion, where IT investment has been made and where it should be applied. It can help to demonstrate that IT investments are not being made into core systems, or into business growth or competitiveness. It can also help to indicate that there is room for IT investment in more speculative ventures, given the spread of investment risk across different systems. It may also provoke management into spending more, or less, on IT. One frequent outcome is a demand to reassess which evaluation techniques are more appropriate to different types of system.
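The classification exercise described above can be sketched in a few lines of Python. This is only an illustration: it assumes the two axes usually associated with the McFarlan and McKenney grid (the strategic impact of existing systems and of planned systems), which may differ from the variant in Figure 9.1, and the system names and spend figures are invented.

```python
from enum import Enum


class Quadrant(Enum):
    SUPPORT = "support"
    FACTORY = "factory"
    TURNAROUND = "turnaround"
    STRATEGIC = "strategic"


def classify(current_impact_high: bool, future_impact_high: bool) -> Quadrant:
    """Place a system on the grid from two yes/no management judgements."""
    if current_impact_high and future_impact_high:
        return Quadrant.STRATEGIC
    if current_impact_high:
        return Quadrant.FACTORY
    if future_impact_high:
        return Quadrant.TURNAROUND
    return Quadrant.SUPPORT


def spend_by_quadrant(portfolio):
    """Total the investment falling in each quadrant."""
    totals = {quadrant: 0.0 for quadrant in Quadrant}
    for _name, current_high, future_high, spend in portfolio:
        totals[classify(current_high, future_high)] += spend
    return totals


# Hypothetical portfolio: (system, current impact high?, future impact high?, spend)
portfolio = [
    ("payroll", False, False, 100.0),
    ("plant scheduling", True, False, 250.0),
    ("customer profiling pilot", False, True, 400.0),
    ("order-entry network", True, True, 150.0),
]

totals = spend_by_quadrant(portfolio)
for quadrant, spend in totals.items():
    print(f"{quadrant.value:<11} {spend:8.1f}")
```

A table of this kind is only a prompt for discussion. The judgements about impact remain management's own, and the grid does not by itself say which spread of investment is right.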

Value chain

Porter and Millar (1991) have also been useful in establishing the need for value chain analysis. This looks at where value is generated inside the organization, but also in its external relationships, for example with suppliers and customers. Thus the primary activities of a typical manufacturing company may be: inbound logistics, operations, outbound logistics, marketing and sales, and service. Support activities will be: firm infrastructure, human resource management, technology development and procurement. The question here is what can be done to add value within and across these activities? As every value activity has both a physical and an information-processing component, it is clear that the opportunities for value-added IT investment may well be considerable. Value chain analysis helps to focus attention on where these will be.

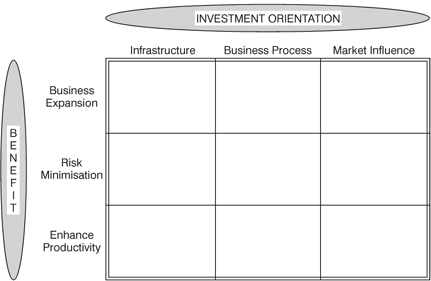

IT investment mapping

Another method of relating IT investment to organizational/business needs has been developed by Peters (1993). The basic dimensions of the map were arrived at after reviewing the main investment concerns arising in over 50 IT projects. The benefits to the organization appeared as one of the most frequent attributes of the IT investment (see Figure 9.2).

Figure 9.2 Investment mapping

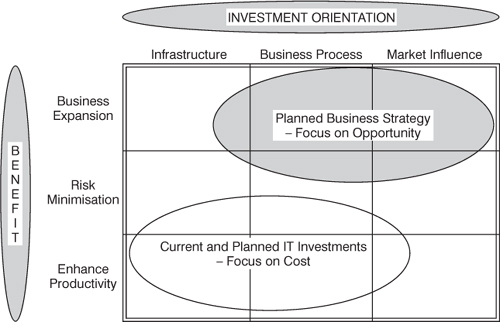

Thus one dimension of the map is benefits ranging from the more tangible arising from productivity enhancing applications to the less tangible from business expansion applications. Peters found that the orientation of the investment toward the business was also frequently used in evaluation. He classifies these as infrastructure, e.g., telecommunications, software/hardware environment; business operations, e.g., finance and accounts, purchasing, processing orders; and market influencing, e.g., increasing repeat sales, improving distribution channels. Figure 9.3 shows the map being used in a hypothetical example to compare current and planned business strategy in terms of investment orientation and benefits required, against current and planned IT investment strategy.

Figure 9.3 Investment map comparing business and IT plans

Mapping can reveal gaps and overlaps in these two areas and help senior management to get them more closely aligned. As a further example:

a company with a clearly defined, product-differentiated strategy of innovation would do well to reconsider IT investments which appeared to show undue bias towards a price-differentiated strategy of cost reduction and enhancing productivity.

Multiple methodology

Finally, Earl (1989) wisely opts for a multiple methodology approach to IS strategy formulation. This again helps us in the aim of relating IT investment more closely with the strategic aims and direction of the organization and its key needs. One element here is a top-down approach. Thus a critical success factors analysis might be used to establish key business objectives, decompose these into critical success factors, then establish the IS needs that will drive these CSFs. A bottom-up evaluation would start with an evaluation of current systems. This may reveal gaps in the coverage by systems, for example in the marketing function or in terms of degree of integration of systems across functions. Evaluation may also find gaps in the technical quality of systems and in their business value. This permits decisions on renewing, removing, maintaining or enhancing current systems. The final leg of Earl's multiple methodology is ‘inside-out innovation’. The purpose here is to ‘identify opportunities afforded by IT which may yield competitive advantage or create new strategic options’. The purpose of the whole threefold methodology is, through an internal and external analysis of needs and opportunities, to relate the development of IS applications to business/organizational need and strategy.

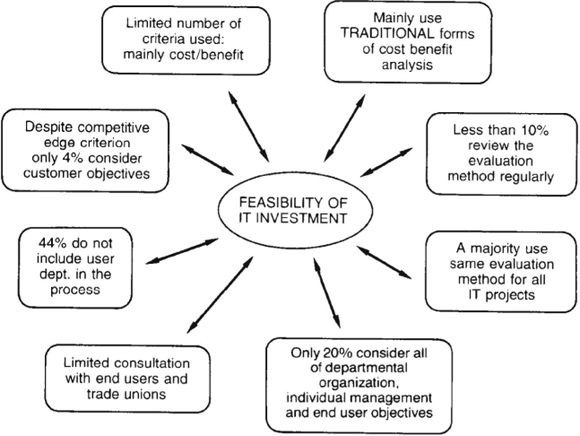

Evaluating feasibility: findings

The right ‘strategic climate’ is a vital prerequisite for evaluating IT projects at their feasibility stage. Here, we find out how organizations go about IT feasibility evaluation and what pointers for improved practice can be gained from the accumulated evidence. The picture is not an encouraging one. Organizations have found it increasingly difficult to justify the costs surrounding the purchase, development and use of IT. The value of IT/IS investments is more often justified by faith alone, or perhaps what adds up to the same thing, by understating costs and using mainly notional figures for benefit realization (see Farbey et al., 1992; PA Consulting, 1990; Price Waterhouse, 1989; Strassman, 1990; Willcocks and Lester, 1993).

Willcocks and Lester (1993) looked at 50 organizations drawn from a cross-section of private and public sector manufacturing and services. Subsequently this research was extended into a follow-up interview programme. Some of the consolidated results are recorded in what follows. We found all organizations completing evaluation at the feasibility stage, though there was a fall off in the extent to which evaluation was carried out at later stages. This means that considerable weight falls on getting the feasibility evaluation right. High levels of satisfaction with evaluation methods were recorded. However, these perceptions need to be qualified by the fact that only 8% of organizations measured the impact of the evaluation, that is, could tell us whether the IT investment subsequently achieved a higher or lower return than other non-IT investments. Additionally there emerged a range of inadequacies in evaluation practice at the feasibility stage of projects. The most common are shown in Figure 9.4.

Figure 9.4 IT evaluation: feasibility findings

Senior managers increasingly talk of, and are urged toward, the strategic use of IT. This means doing new things, gaining a competitive edge, and becoming more effective, rather than using IT merely to automate routine operations, do existing things better, and perhaps reduce the headcount. However, only 16% of organizations used over four criteria on which to base their evaluation. Cost/benefit was used by 62% as their predominant criterion in the evaluation process. The survey evidence here suggests that organizations may be missing IS opportunities, but also taking on large risks, through utilizing narrow evaluation approaches that do not clarify and assess less tangible inputs and benefits. There was also little evidence of a concern for assessing risk in any formal manner. However, the need to see and evaluate risks and ‘soft’ hidden costs would seem to be essential, given the history of IT investment as a ‘high risk, hidden cost’ process.

A sizable minority of organizations (44%) did not include the user department in the evaluation process at the feasibility stage. This cuts off a vital source of information and critique on the degree to which an IT proposal is organizationally feasible and will deliver on user requirements. Only a small minority of organizations accepted IT proposals from a wide variety of groups and individuals. In this respect most ignored the third element in Earl's multiple methodology (see above). Despite the large amount of literature emphasizing consultation with the workforce as a source of ideas, know-how and as part of the process of reducing resistance to change, only 36% of organizations consulted users about evaluation at the feasibility stage, while only 18% consulted unions. While the majority of organizations (80%) evaluated IT investments against organizational objectives, only 22% acted strategically in considering objectives from the bottom to the top, that is, evaluated the value of IT projects against all of organization, departmental, individual management, and end-user objectives. This again could have consequences for the effectiveness and usability of the resulting systems, and the levels of resistance experienced.

Finally, most organizations endorsed the need to assess the competitive edge implied by an IT project. However, somewhat inconsistently, only 4% considered customer objectives in the evaluation process at the feasibility stage. This finding is interesting in relationship to our analysis that the majority of IT investment in the respondent organizations was directed at achieving internal efficiencies. It may well be that not only the nature of the evaluation techniques, but also the evaluation process adopted, had influential roles to play in this outcome.

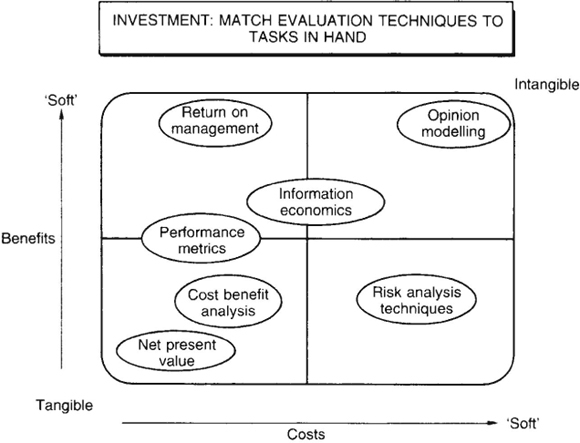

Linking strategy and feasibility techniques

Much work has been done to break free from the limitations of the more traditional, finance-based forms of capital investment appraisal. The major concerns seem to be to relate evaluation techniques to the type of IT project, and to develop techniques that relate the IT investment to business/organization value. A further development is in more sophisticated ways of including risk assessment in the evaluation procedures for IT investment. A method of evaluation needs to be reliable, consistent in its measurement over time, able to discriminate between good and indifferent investments, able to measure what it purports to measure, and be administratively/organizationally feasible in its application.

Return on management

Strassman (1990) has done much iconoclastic work in the attempt to modernize IT investment evaluation. He concludes that:

Many methods for giving advice about computers have one thing in common. They serve as a vehicle to facilitate proposals for additional funding . . . the current techniques ultimately reflect their origins in a technology push from the experts, vendors, consultants, instead of a ‘strategy’ pull from the profit centre managers.

He has produced the very interesting concept of Return on Management (ROM). ROM is a measure of performance based on the added value to an organization provided by management. Strassman's assumption here is that, in the modern organization, information costs are the costs of managing the enterprise. If ROM is calculated before and then after IT is applied to an organization, then the IT contribution to the business, so difficult to isolate using more traditional measures, can be assessed. ROM is calculated in several stages. First, using the organization's financial results, the total value-added is established. This is the difference between net revenues and payments to external suppliers. The contribution of capital is then separated from that of labour. Operating costs are then deducted from labour value-added to leave management value-added. ROM is management value-added divided by the costs of management. There are some problems with how this figure is arrived at, and whether it really represents what IT has contributed to business performance. For example, there are difficulties in distinguishing between operational and management information. Perhaps ROM is merely a measure in some cases, and a fairly indirect one, of how effectively management information is used. A more serious criticism lies with the usability of the approach and its attractiveness to practising managers. This may be reflected in its lack of use, at least in the UK, as identified in different surveys (see Butler Cox, 1990; Coleman and Jamieson, 1991; Willcocks and Lester, 1993).
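The stages of the ROM calculation, as summarized above, can be followed through in a short Python sketch. It is a reading of this chapter's summary rather than Strassman's own procedure: the text does not say how the contribution of capital is estimated, so it is simply passed in as a figure, and all parameter names and amounts are invented for illustration.

```python
def return_on_management(net_revenues, external_purchases, capital_contribution,
                         operating_costs, management_costs):
    """Follow the ROM stages as summarized in the text (illustrative only)."""
    # Stage 1: total value-added = net revenues less payments to external suppliers.
    total_value_added = net_revenues - external_purchases
    # Stage 2: separate the contribution of capital from that of labour.
    labour_value_added = total_value_added - capital_contribution
    # Stage 3: deduct operating costs to leave management value-added.
    management_value_added = labour_value_added - operating_costs
    if management_costs <= 0:
        raise ValueError("management_costs must be positive")
    # Stage 4: ROM = management value-added / costs of management.
    return management_value_added / management_costs


# Hypothetical figures for one organization before and after an IT investment.
rom_before = return_on_management(
    net_revenues=1000.0, external_purchases=600.0, capital_contribution=100.0,
    operating_costs=200.0, management_costs=50.0)
rom_after = return_on_management(
    net_revenues=1100.0, external_purchases=620.0, capital_contribution=110.0,
    operating_costs=210.0, management_costs=64.0)

print(f"ROM before IT: {rom_before:.2f}")
print(f"ROM after IT:  {rom_after:.2f}")
```

With these invented figures ROM rises from 2.0 to 2.5. As the criticisms above suggest, attributing the whole of such a change to IT is a further assumption that the arithmetic itself cannot justify.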

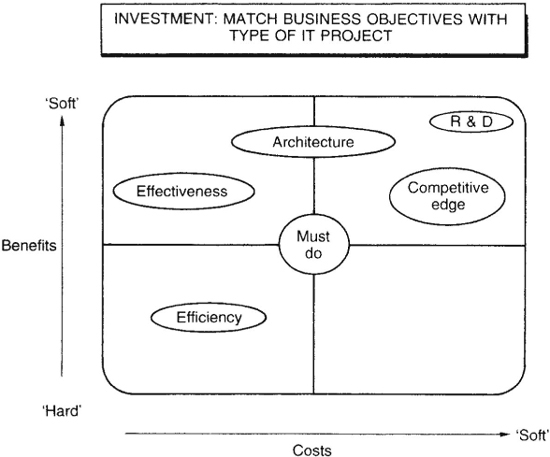

Matching objectives, projects and techniques

A major way forward on IT evaluation is to match techniques to objectives and types of projects. A starting point is to allow business strategy and purpose to define the category of IT investment. Butler Cox (1990) suggests five main purposes:

1 surviving and functioning as a business;

2 improving business performance by cost reduction/increasing sales;

3 achieving a competitive leap;

4 enabling the benefits of other IT investments to be realized;

5 being prepared to compete effectively in the future.

The matching IT investments can then be categorized, respectively, as:

1 Mandatory investments, for example accounting systems to permit reporting within the organization, regulatory requirements demanding VAT recording systems; competitive pressure making a system obligatory, e.g., EPOS amongst large retail outlets.

2 Investments to improve performance, for example, Allied Dunbar and several UK insurance companies have introduced laptop computers for sales people, partly with the aim of increasing sales.

3 Competitive edge investments, for example SABRE at American Airlines, and Merrill Lynch's cash management account system in the mid-1980s.

4 Infrastructure investments. These are important to make because they give organizations several more degrees of freedom to manoeuvre in the future.

5 Research investments. In our sample we found a bank and three companies in the computer industry waiving normal capital investment criteria on some IT projects, citing their research and learning value. The amounts were small and referred to case tools in one case, and expert systems in the others.
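The pairing of the five purposes with the five investment categories can be held as a simple lookup, which is one way an organization might record the category of each proposal before choosing an evaluation technique. The sketch below is illustrative only: the dictionary keys paraphrase the Butler Cox purposes, and the function name and proposal names are hypothetical.

```python
# Business purpose -> category of IT investment, in the order given in the text.
CATEGORY_BY_PURPOSE = {
    "surviving and functioning as a business": "mandatory",
    "improving business performance": "performance improvement",
    "achieving a competitive leap": "competitive edge",
    "enabling the benefits of other IT investments": "infrastructure",
    "being prepared to compete in the future": "research",
}


def categorize(proposals):
    """Map each (proposal, purpose) pair to its investment category."""
    return {name: CATEGORY_BY_PURPOSE[purpose] for name, purpose in proposals}


# Hypothetical proposals, each tagged with the purpose management assigns to it.
proposals = [
    ("VAT recording system", "surviving and functioning as a business"),
    ("laptops for the sales force", "improving business performance"),
    ("expert systems trial", "being prepared to compete in the future"),
]

categories = categorize(proposals)
for name, category in categories.items():
    print(f"{name}: {category}")
```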

There seems to be no shortage of such classifications now available. One of the more simple but useful is the sixfold classification shown in Figure 9.5.

Once assessed against, and accepted as aligned with required business purpose, a specific IT investment can be classified, then fitted on to the cost benefit map (Figure 9.5 is meant to be suggestive only). This will assist in identifying where the evaluation emphasis should fall. For example, an ‘efficiency’ project could be adequately assessed utilizing traditional financial investment appraisal approaches; a different emphasis will be required in the method chosen to assess a ‘competitive edge’ project. Figure 9.6 is one view of the possible spread of appropriateness of some of the evaluation methods now available.

Figure 9.5 Classifying IT investments

Figure 9.6 Matching projects to techniques

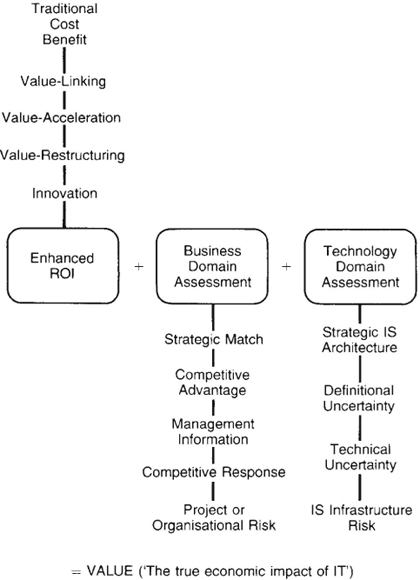

From cost-benefit to value

A particularly ambitious attempt to deal with many of the problems in IT evaluation – both at the level of methodology and of process – is represented in the information economics approach (Parker et al., 1988). This builds on the critique of traditional approaches, without jettisoning where the latter may be useful.

Information economics looks beyond benefit to value. Benefit is a ‘discrete economic effect’. Value is seen as a broader concept based on the effect IT investment has on the business performance of the enterprise. How value is arrived at is shown in Figure 9.7. The first stage is building on traditional cost benefit analysis with four highly relevant techniques to establish an enhanced return on investment calculation. These are:

(a) Value linking. This assesses IT costs which create additional benefits to other departments through ripple, knock-on effects.

(b) Value acceleration. This assesses additional benefits in the form of reduced time-scales for operations.

(c) Value restructuring. Techniques are used to measure the benefit of restructuring a department, jobs or personnel usage as a result of introducing IT. This technique is particularly helpful where the relationship to performance is obscure or not established. R&D, legal and personnel are examples of departments where this may be usefully applied.

(d) Innovation valuation. This considers the value of gaining and sustaining a competitive advantage, while calculating the risks or cost of being a pioneer and of the project failing.

Figure 9.7 The information economics approach

Information economics then enhances the cost-benefit analysis still further through business domain and technology domain assessments. These are shown in Figure 9.7. Here strategic match refers to assessing the degree to which the proposed project corresponds to established goals; competitive advantage to assessing the degree to which the proposed project provides an advantage in the marketplace; management information to assessing the contribution toward the management need for information on core activities; competitive response to assessing the degree of corporate risk associated with not undertaking the project; and strategic architecture to measuring the degree to which the proposed project fits into the overall information systems direction.

Case: Truck leasing company

As an example of what happens when such factors and business domain assessment are neglected in the evaluation, Parker et al. (1988) point to the case of a large US truck leasing company. Here they found that on a ‘hard’ ROI analysis, IT projects on preventative maintenance, route scheduling and despatching went top of the list. When a business domain assessment was carried out by line managers, a customer/sales profile system was evaluated as having the largest potential effect on business performance. An important infrastructure project – a Database 2 conversion/installation – also scored highly where previously it was scored bottom of eight project options. Clearly the evaluation technique and process can have a significant business impact where economic resources are finite and prioritization and drop decisions become inevitable.

The other categories in Figure 9.7 can be briefly described:

• Organizational risk – looking at how equipped the organization is to implement the project in terms of personnel, skills and experience.

• IS infrastructure risk – assessing how far the entire IS organization needs, and is prepared to support, the project.

• Definitional uncertainty – assessing the degree to which the requirements and/or the specifications of the project are known. Incidentally, research into more than 130 organizations shows this to be a primary barrier to the effective delivery of IT (Willcocks, 1993). Also assessed are the complexity of the area and the probability of non-routine changes.

• Technical uncertainty – evaluating a project's dependence on new or untried technologies.

Information economics provides an impressive array of concepts and techniques for assessing the business value of proposed IT investments. The concern for fitting IT evaluation into a corporate planning process and for bringing both business managers and IS professionals into the assessment process is also very welcome.

Some of the critics of information economics suggest that it may be over-mechanistic if applied to all projects. It can be time-consuming and may lack credibility with senior management, particularly given the subjective basis of much of the scoring. The latter problem is also inherent in the process of arriving at the weighting of the importance to assign to the different factors before scoring begins. Additionally there are statistical problems with the suggested scoring methods. For example, a scoring range of 1–5 may do little to differentiate between the ROI of two different projects. Moreover, even if a project scores nil on one risk, e.g. organizational risk, and in practice this risk may sink the project, the overall assessment by information economics may cancel out the impact of this score and show the IT investment to be a reasonable one. Clearly much depends on careful interpretation of the results, and much of the value for decision-makers and stakeholders may well come from the raised awareness of issues from undergoing the process of evaluation rather than from its statistical outcome. Another problem may lie in the truncated assessment of organizational risk. Here, for example, there is no explicit assessment of the likelihood of a project to engender resistance to change because of, say, its job reduction or work restructuring implications. This may be compounded by the focus on bringing user managers, but one suspects not lower level users, into the assessment process.

Much of the criticism, however, ignores how adaptable the basic information economics framework can be to particular organizational circumstances and needs. Certainly this has been a finding in trials in organizations as varied as British Airports Authority, a Central Government Department and a major food retailer.
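The criticism that an aggregate score can mask a single fatal weakness is easy to reproduce with a small weighted-scoring sketch in Python. Everything here is an assumption made for illustration: the weights are invented, each factor is scored from 0 to 5 with 0 taken as the least favourable rating (so a nil score on a risk factor is the worst case), and the check for nil scores is one possible safeguard, not part of the information economics method itself.

```python
# Hypothetical weights; in practice management would agree these before scoring.
WEIGHTS = {
    "enhanced_roi": 3,
    "strategic_match": 2,
    "competitive_advantage": 2,
    "management_information": 1,
    "competitive_response": 1,
    "strategic_architecture": 1,
    "organizational_risk": 2,
    "is_infrastructure_risk": 1,
    "definitional_uncertainty": 1,
    "technical_uncertainty": 1,
}


def weighted_score(scores, weights=WEIGHTS):
    """Sum of weight * score over every factor (scores assumed to be 0..5)."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"unscored factors: {sorted(missing)}")
    return sum(weights[factor] * scores[factor] for factor in weights)


def nil_factors(scores):
    """Factors scored at zero, which the weighted total alone would hide."""
    return sorted(factor for factor, score in scores.items() if score == 0)


# Project A rates well on most factors but scores nil on organizational risk.
project_a = {
    "enhanced_roi": 4, "strategic_match": 5, "competitive_advantage": 4,
    "management_information": 3, "competitive_response": 4,
    "strategic_architecture": 3, "organizational_risk": 0,
    "is_infrastructure_risk": 4, "definitional_uncertainty": 3,
    "technical_uncertainty": 4,
}
# Project B is middling on every factor.
project_b = {factor: 3 for factor in WEIGHTS}

for name, scores in (("A", project_a), ("B", project_b)):
    print(name, weighted_score(scores), nil_factors(scores))
```

Under these invented weights project A still outranks project B on the total, which is why the totals need to be read alongside the individual factor scores rather than in place of them.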

Case: Retail food company

In the final case, Ong (1991) investigated a three-phase branch stock management system. Some of the findings are instructive. Managers suggested including the measurement of risk associated with interfacing systems and the difficulties in gaining user acceptance of the project. In practice few of the managers could calculate the enhanced ROI because of the large amount of data required and, in a large organization, its spread across different locations. Some felt the evaluation was time-dependent; different results could be expected at different times. The assessment of risk needed to be expanded to include not only technical and project risk but also the risk impact of failure to an organization of its size. In its highly competitive industry, any unfavourable venture can have serious knock-on impacts and most firms tend to be risk-conscious, even risk-averse.

Such findings tend to reinforce the view that information economics provides one of the more comprehensive approaches to assessing the potential value to the organization of its IT investments, but that it needs to be tailored, developed, and in some cases extended, to meet evaluation needs in different organizations. Even so, information economics remains a major contribution to advancing modern evaluation practice.

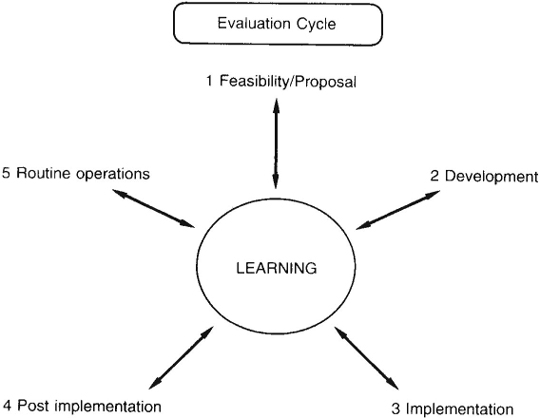

CODA: From development to routine operations

This chapter has focused primarily on the front-end of evaluation practice and how it can be improved. In research on evaluation beyond the feasibility stage of projects, we have found evaluation variously carried on through four main additional stages. Respondent organizations supported the notion of an evaluation learning cycle, with evaluation at each stage feeding into the next to establish a learning spiral across time – useful for controlling a specific project, but also for building organizational know-how on IT and its management (see Figure 9.8). The full research findings are detailed elsewhere (see Willcocks and Lester, 1993). However, some of the limitations in evaluation techniques and processes discovered are worth commenting on here.

We found only weak linkage between evaluations carried out at different stages. As one example, 80% of organizations had experienced abandoning projects at the development stage due to negative evaluation. The major reasons given were changing organizational or user needs and/or ‘gone over budget’. When we reassembled the data, abandonment clearly related to underplaying these objectives at the feasibility stage. Furthermore, all organizations abandoning projects because ‘over budget’ depended heavily on cost-benefit in their earlier feasibility evaluation, thus probably understating development and second-order costs. We found only weak evidence of organizations applying their development stage evaluation, and indeed their experiences at subsequent stages, to improving feasibility evaluation techniques and processes.

Figure 9.8 The evaluation cycle

Key stakeholders were often excluded from the evaluation process. For example, only 9% of organizations included the user departments/users in development evaluation. At the implementation stage, 31% do not include user departments, 52% exclude the IT department, and only 6% consult trade unions. There seemed to be a marked fall-off across later stages in the attention given to evaluation, and to its results. Thus 20% do not carry out evaluation at the post-implementation stage, some claiming there was little point in doing so. Of the 56% who learn from their mistakes at this stage, 25% do so from ‘informal evaluation’. At the routine operations stage, only 20% include systems capability, systems availability, organizational needs and departmental needs among their evaluation criteria.

These, together with our detailed findings, suggest a number of guidelines on how evaluation practice can be improved beyond the feasibility stage. At a minimum these include:

1 Linking evaluation across stages and time – this enables ‘islands of evaluation’ to become integrated and mutually informative, while building into the overall evaluation process possibilities for continuous improvement.

2 Many organizations can usefully reconsider the degree to which key stakeholders are participants in evaluation at all stages.

3 The relative neglect given to assessing the actual against the posited impact of IT, and the fall-off in interest in evaluation at later stages, mean that the effectiveness of feasibility evaluation becomes difficult to assess and difficult to improve. The concept of learning would seem central to evaluation practice, but tends to be applied in a fragmented way.

4 The increasing clamour for adequate evaluation techniques is necessary, but may reveal a quick-fix orientation to the problem. It can shift attention from what may be a more difficult, but in the long term more value-added area, which is getting the process right.
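As one way of picturing guidelines 1 to 3, the following is a minimal sketch, not a method taken from the research, of how evaluations at each stage might be recorded against a common project log so that later results can be compared with the feasibility estimates. The class names, fields and all figures are hypothetical and purely illustrative; only the stage names follow the chapter.

```python
from dataclasses import dataclass, field

# Stage names follow the chapter: feasibility plus four later stages.
STAGES = ["feasibility", "development", "implementation",
          "post-implementation", "routine operations"]


@dataclass
class StageEvaluation:
    """One evaluation of a project at a given stage (hypothetical structure)."""
    stage: str
    cost: float      # estimated cost at feasibility, cost to date afterwards
    benefit: float   # estimated or realized benefit, in the same units as cost
    stakeholders: set = field(default_factory=set)  # groups consulted


@dataclass
class ProjectEvaluationLog:
    """Keeps every stage evaluation for one project in one place."""
    name: str
    evaluations: dict = field(default_factory=dict)

    def record(self, evaluation):
        if evaluation.stage not in STAGES:
            raise ValueError(f"unknown stage: {evaluation.stage}")
        self.evaluations[evaluation.stage] = evaluation

    def missing_stages(self):
        """Stages with no recorded evaluation, in stage order."""
        return [s for s in STAGES if s not in self.evaluations]

    def variance_from_feasibility(self, stage):
        """Cost and benefit at `stage` minus the feasibility estimates."""
        base = self.evaluations["feasibility"]
        later = self.evaluations[stage]
        return {"cost": later.cost - base.cost,
                "benefit": later.benefit - base.benefit}

    def excluded_stakeholders(self, expected):
        """For each evaluated stage, the expected groups not consulted."""
        return {s: set(expected) - e.stakeholders
                for s, e in self.evaluations.items()}


# Illustrative figures only; they are not drawn from the survey data.
log = ProjectEvaluationLog("example project")
log.record(StageEvaluation("feasibility", 100.0, 150.0, {"IT", "users"}))
log.record(StageEvaluation("development", 130.0, 140.0, {"IT"}))
print(log.variance_from_feasibility("development"))
print(log.missing_stages())
print(log.excluded_stakeholders({"IT", "users"}))
```

Keeping every stage in one log is a design choice meant to mirror the learning cycle: the variance against feasibility makes the actual-versus-posited comparison explicit, and the lists of missing stages and excluded stakeholders show where evaluation has fallen off.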

Conclusions

The high expenditure on IT, growing usage that goes to the core of organizational functioning, together with disappointed expectations about its impact, have all served to raise the profile of how IT investment can be evaluated. It is not only an underdeveloped, but also an undermanaged area which organizations can increasingly ill afford to neglect. There are well-established traps that can now be avoided. Organizations need to shape the context in which effective evaluation practice can be conducted. Traditional techniques cannot be relied upon in themselves to assess the types of technologies and how they are increasingly being applied in organizational settings. A range of modern techniques can be tailored and applied. However, techniques can only complement, not substitute for, developing evaluation as a process, and the deeper organizational learning about IT that entails. Past evaluation practice has been geared to asking questions about the price of IT. Increasingly, it produces less than useful answers. The future challenge is to move to the problem of the value of IT to the organization, and build techniques and processes that can go some way to answering the resulting questions.

References

Butler Cox (1990) Getting value from information technology. Research Report 75, June, Butler Cox Foundation, London.

Coleman, T. and Jamieson, M. (1991) Information systems: evaluating intangible benefits at the feasibility stage of project appraisal. Unpublished MBA thesis, City University Business School, London.

Earl, M. (1989) Management Strategies for Information Technology, Prentice Hall, London.

Earl, M. (1990) Education: The foundation for effective IT strategies. IT and the new manager conference. Computer Weekly/Business Intelligence, June, London.

Ernst and Young (1990) Strategic Alignment Report: UK Survey, Ernst and Young, London.

Farbey, B., Land, F. and Targett, D. (1992) Evaluating investments in IT. Journal of Information Technology, 7(2), 100–112.

Grindley, K. (1991) Managing IT at Board Level, Pitman, London.

Hochstrasser, B. and Griffiths, C. (1991) Controlling IT Investments: Strategy and Management, Chapman and Hall, London.

Kearney, A. T. (1990) Breaking the Barriers: IT Effectiveness in Great Britain and Ireland, A. T. Kearney/CIMA, London.

McFarlan, F. and McKenney, J. (1983) Corporate Information Systems Management: The Issues Facing Senior Executives, Dow Jones Irwin, New York.

Ong, D. (1991) Evaluating IS investments: a case study in applying the information economics approach. Unpublished thesis, City University, London.

PA Consulting Group (1990) The Impact of the Current Climate on IT – The Survey Report, PA Consulting Group, London.

Parker, M., Benson, R. and Trainor, H. (1988) Information Economics, Prentice Hall, London.

Peters, G. (1993) Evaluating your computer investment strategy. In Information Management: Evaluation of Information Systems Investments (ed. L. Willcocks), Chapman and Hall, London, pp. 99–112.

Porter, M. and Millar, V. (1991) How information gives you competitive advantage. In Revolution in Real Time: Managing Information Technology in the 1990s (ed. W. McGowan), Harvard Business School Press, Boston, pp. 59–82.

Price Waterhouse (1989) Information Technology Review 1989/90, Price Waterhouse, London.

Scott Morton, M. (ed.) (1991) The Corporation of the 1990s, Oxford University Press, Oxford.

Strassman, P. A. (1990) The Business Value of Computers, The Information Economics Press, New Canaan, CT.

Walton, R. (1989) Up and Running, Harvard Business School Press, Boston.

Willcocks, L. (1992a) Evaluating information technology investments: research findings and reappraisal. Journal of Information Systems, 2(3), 242–268.

Willcocks, L. (1992b) The manager as technologist? In Rediscovering Public Services Management (eds L. Willcocks and J. Harrow), McGraw-Hill, London.

Willcocks, L. (ed.) (1993) Information Management: Evaluation of Information Systems Investments, Chapman and Hall, London.

Willcocks, L. and Lester, S. (1993) Evaluation and control of IS investments. OXIIM Research and Discussion Paper 93/5, Templeton College, Oxford.

Willcocks, L. and Mason, D. (1994) Computerising Work: People, Systems Design and Workplace Relations, 2nd edn, Alfred Waller Publications, Henley-on-Thames.

Wilson, T. (1991) Overcoming the barriers to implementation of information systems strategies. Journal of Information Technology, 6(1), 39–44.

Adapted from Willcocks, L. (1992) IT evaluation: managing the catch 22. The European Management Journal, 10(2), 220–229. Reprinted by permission of the author and Elsevier Science.

Questions for discussion

1 The value of IT/IS investments is more often justified by faith alone, or perhaps what adds up to the same thing, by understating costs and using mainly notional figures for benefit realization. Discuss the reasons for which IT evaluation is rendered so difficult.

2 Evaluate the three major evaluation techniques the author discusses – ROM, matching objectives, projects and techniques, and information economics.

3 Again refer back to the revised stages of growth model introduced in Chapter 2: might different evaluation techniques be appropriate at different phases?

4 Who should be involved in the IT evaluation process?

5 Given the two approaches to SISP (impact and alignment) proposed by Lederer and Sethi in Chapter 8, what evaluation approach might be appropriate for the two SISP approaches?

* An earlier version of this chapter appeared in the European Management Journal, Vol. 10, No. 2, June, pp. 220–229.