5.3 Building Theories

Students in the physical sciences often think of theories as mathematical equations that sum up the essential characteristics of a certain class of phenomena. Are such mathematical laws what we must aspire to if we want to contribute to theory? Molander [4] concisely defines theories as “systems of statements, some of which are regarded as laws, that describe and explain the phenomena within a certain area of investigation in an integrated and coherent way” (author's translation). By this definition the answer to our question is no, because statements are not necessarily made in the language of mathematics. It is, in fact, quite easy to find examples of important theories that are not mathematical, such as plate tectonics in geology or evolution in biology.

Theories make statements about what we will call theoretical concepts. These represent certain aspects of reality and are used to facilitate our thinking about the world. Theories can be seen as rules that apply to the concepts. Newton's third law, for example, states that “the forces of two bodies on each other are always equal and in opposite directions”. This is clearly a strict and general rule that applies to the concepts of force and body. But we do not need to come up with something like Newton's laws to make valuable contributions to theory. It is enough to contribute good, general knowledge that may in due course be integrated into a greater, more general theory. The established theories we learn about in textbooks did not emerge from a vacuum. Most of them build on a multitude of contributions from various researchers. For example, both Galileo and Kepler contributed to the development of Newton's mechanics, and they both built on the work of others.

The building of theories begins by looking at the world and describing it. Organizing the characteristics of a phenomenon is a necessary preparation for trying to understand its underlying mechanisms. The theoretical physicist Richard Feynman uses Mendeleev's periodic table of the elements as a good example of this [5]. The table is based on the observation that some chemical elements behave more alike than others and that their properties recur periodically as atomic mass increases. The table does not explain why the pattern exists, but it makes the pattern readily visible. The explanation for the periodic behavior came later, with atomic theory.

According to Feynman, the search for a new theory begins with a guess about the reason behind a certain phenomenon. The next step is to work out its various consequences and see whether they tally with reality: “If it disagrees with experiment it is wrong. In that simple statement is the key to science” [5]. This sounds very much like the hypothetico-deductive approach introduced in Chapter 2, but this, Feynman adds, will give us the wrong impression of science because

[…] this is to put experiment into a rather weak position. In fact experimenters have a certain individual character. They like to do experiments even if nobody has guessed yet, and they very often do their experiments in a region in which people know the theorist has not made any guesses. […] In this way experiment can produce unexpected results, and that starts us guessing again [5].

This description sums up the point that we made in Chapter 2 about science not being purely hypothetico-deductive: sometimes observation precedes theory and sometimes it is the other way around.

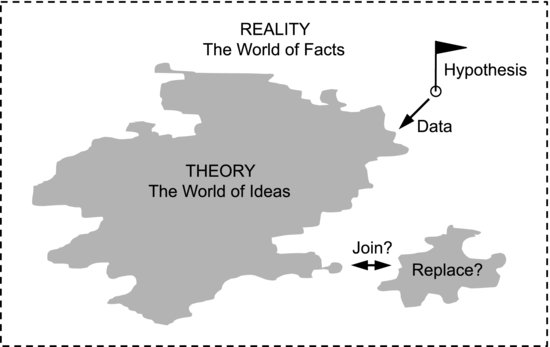

The aim of developing theories is to come as close as possible to a general understanding of the world. We wish to represent reality by ideas or rules that are so general that we may, in some cases, even call them Laws of Nature. This can be seen as a mapping process in which theory is the map and the real world is the terrain to be surveyed. The process is sketched in Figure 5.3. Parts of reality that are not yet covered by any theory are white areas on the map. To expand our theoretical knowledge we need to know its boundaries, and we also need strategies for moving them forward. In the hypothetico-deductive approach this is done by formulating hypotheses, which can be seen as theoretical outposts in the uncharted terrain. We collect data to see how well the hypotheses represent reality; a wide variety of observational support is generally required to incorporate a hypothesis into the body of established theory. But this gives a limited view of how theory grows. Previous examples in this book show that growth is not necessarily an additive process in which new parts are added to the existing ones. Sometimes we suspect that two areas of theory may be joined into a larger one, as when Maxwell joined electricity and magnetism into a unified electromagnetic theory that explained more than the two separate theories had. Older theories may also be replaced with new ones that explain more aspects of a given phenomenon.

Figure 5.3 Development of theory can be seen as a mapping process. In the hypothetico-deductive approach new areas are charted by suggesting hypotheses and comparing them with data. Theory can also grow in other ways.

Example 5.1: To illustrate the role of theoretical concepts in the development of theory, let us look at a phenomenon that has been known to humanity for a long time: fire. The complexity of combustion is daunting. In a flame, thousands of chemical reactions occur at the same time, often in a turbulent flow. The detailed description of both the turbulence and the chemistry goes beyond the state of the art of these sciences, and in combustion they are coupled in one problem: the turbulence affects the chemistry of the flame and vice versa. How do we even begin to formulate theories about something as complex as fire?

To illustrate this I will use a very brief (and simplified) example from my own field of expertise, diesel engines. As noted above, theories begin by looking at the world and describing what we see. Looking at a burning jet of diesel fuel, we may observe that it always burns as a lifted flame. The opposite would be an attached flame, such as the flame sitting on the wick of a candle or on a Bunsen burner. If you increase the gas flow in a Bunsen burner sufficiently, the flame detaches from the nozzle and becomes lifted. This happens when the flow speed at the nozzle exceeds the flame speed, which is the speed at which a flame propagates through a stationary gas mixture. Already at this point we have introduced the simple theoretical concepts of attached and lifted flames to describe a process that is, at bottom, enormously complex.

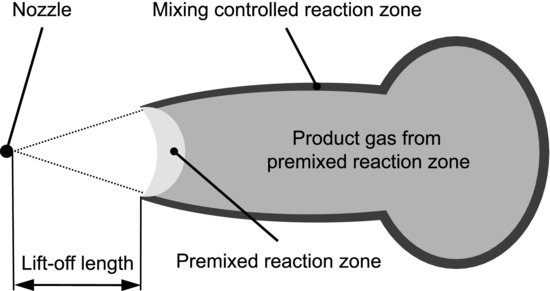

In a lifted flame, the distance from the nozzle to the flame is called the lift-off length. This is another theoretical concept that is important for the understanding of diesel combustion. Combustion in diesel jets occurs in two stages [6], which are schematically described in Figure 5.4. Fuel flows into the combustion chamber from the nozzle at the left and starts to react at the lift-off position. At the center of the jet is a premixed reaction zone – another theoretical concept used to describe the situation when fuel and oxidant are mixed prior to combustion. The product gas from this zone is surrounded by a second, mixing-controlled reaction zone at the periphery of the jet. “Mixing-controlled” refers to fuel and oxidant being mixed during the course of combustion. The reason for the two-stage process is that the premixed stage is fuel rich – there is not enough air present to completely oxidize the fuel. Partially oxidized products from this stage mix and react with fresh air at the jet periphery, giving rise to the mixing-controlled zone. We have now used four more theoretical concepts to concisely characterize some essential features of a process that, at first sight, seems too complex to describe at all.

Figure 5.4 Schematic description of a burning diesel jet. Fuel is injected into the combustion chamber from the nozzle. Flame reactions commence at the lift-off position and occur in two stages. The mushroom shape arises from fluid phenomena.

After this sort of characterization we can try to explain interesting aspects using the concepts. For example, diesel engines are often associated with black smoke, which mainly consists of soot formed during combustion. The lift-off length is believed to be important for understanding the formation of soot in the diesel jet. Soot can be seen as carbon atoms from the fuel that escape complete oxidation and instead lump together into particles. As the first, premixed stage of combustion is fuel rich, it produces large amounts of soot. The more air that is entrained into the diesel jet upstream of the lift-off position, the less fuel rich the premixed zone becomes and the less soot is formed. It is no use trying to entrain air into the jet downstream of the lift-off position, because that air will be consumed in the mixing-controlled zone. Air entrainment prior to lift-off should, therefore, control the formation of soot.

It might seem that we now have a good understanding of the factors limiting soot formation in diesel jets. Theory says that the lift-off position is stabilized at a certain distance from the nozzle by the balance between the flow speed and the flame speed: the flame speed is more or less constant, whereas the flow speed decreases with distance from the nozzle, so the flame settles where the two are equal. The lift-off length, in turn, determines how fuel rich the premixed zone becomes, and this determines how much soot is formed. That is what we thought, but some recent experimental findings complicate the situation. They indicate that, under the conditions prevailing in diesel engines, the lift-off length is not stabilized through a balance between flow and flame speed. Instead, it seems to stabilize through a process more closely related to auto-ignition [7]. The old theory is thus inadequate under these conditions, and at present we have no good theory to explain how the lift-off length is stabilized in diesel engines. The situation echoes Feynman's words: “experiment can produce unexpected results, and that starts us guessing again”.
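The balance described by the older theory can be made concrete with a toy calculation. The sketch below is only an illustration under stated assumptions: the flame speed is a fixed constant, and the jet speed follows a hypothetical simplified profile that stays at the nozzle exit speed `u0` out to `k*d` (with `d` the nozzle diameter) and then decays as `k*u0*d/x`, roughly like the far field of a round turbulent jet. The function names, the constant `k = 6` and all numbers are illustrative choices, not diesel data; and, as the text explains, this balance is not what actually stabilizes lift-off under diesel conditions.

```python
def centerline_speed(x: float, u0: float, d: float, k: float = 6.0) -> float:
    """Hypothetical jet profile (assumption): speed stays at u0 out to x = k*d,
    then decays as k*u0*d/x."""
    if x <= k * d:
        return u0
    return k * u0 * d / x


def lift_off_length(u0: float, d: float, flame_speed: float,
                    k: float = 6.0, x_max: float = 10.0, tol: float = 1e-12) -> float:
    """Distance from the nozzle at which the flow speed falls to the flame speed.

    Returns 0.0 when the nozzle speed does not exceed the flame speed,
    i.e. the flame stays attached.
    """
    if u0 <= flame_speed:
        return 0.0  # attached flame: the flame can propagate back to the nozzle

    def excess(x: float) -> float:
        # Positive where the flow outruns the flame, negative where the flame wins.
        return centerline_speed(x, u0, d, k) - flame_speed

    if excess(x_max) > 0:
        raise ValueError("flow is still faster than the flame at x_max")

    # Bisection: excess(lo) > 0 and excess(hi) <= 0 throughout the loop.
    lo, hi = 0.0, x_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical burner-like numbers: 3 m/s exit speed, 10 mm nozzle, 0.4 m/s flame speed.
print(lift_off_length(u0=3.0, d=0.01, flame_speed=0.4))  # close to 6*3*0.01/0.4 = 0.45 m
print(lift_off_length(u0=0.3, d=0.01, flame_speed=0.4))  # 0.0 (attached flame)
```

Bisection is used instead of the closed-form answer `k*u0*d/flame_speed` so that the same search would work for any other monotonically decaying speed profile one might assume.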

The example shows that it is important to find ways to reduce the complexity of a problem before trying to understand it. We do this by looking for general patterns and describing them using concepts that can guide our thinking.

When it comes to theories, simplicity is not only desirable, it is a requirement. This is often called the principle of parsimony. It is also popularly called Occam's razor, after the mediaeval philosopher William of Occam. It states that when we try to explain a phenomenon we should not increase the complexity of our explanations beyond what is necessary. Naturally, some problems require more complex solutions than others but that is not a contradiction. The principle merely states that we should simplify as much as possible, not more. Parsimony is fundamentally a question of intellectual hygiene. We should never add unnecessary features to a theory, because complexity makes it difficult to see underlying patterns. The need to patch a theory up with various features is often a sign that something is wrong with the idea behind it.

We have already seen how the principle of parsimony works in connection with the development of the Copernican system in Chapter 3. This system was favored not because it was more accurate than the Ptolemaic one – which it initially was not – but because it was easier to understand. The problem was that it incorrectly represented the planetary orbits by circles. The first attempt to correct the prediction errors was to add epicycles to the circular orbits, which made the model less parsimonious. When Kepler realized that the orbits were ellipses he decreased the complexity again and improved the accuracy at the same time. In retrospect we see that this was a sign that he was closer to the truth.

What characterizes a good theory apart from simplicity? Good theories should, of course, be testable: if we cannot test their validity, they tell us nothing about the world. They should also be both good and useful representations of reality. A good representation is general, meaning that it applies to a wide variety of conditions. For a predictive theory, “good” also means accurate. Not all theories are mathematical, but to make quantitative predictions a theory must be expressed in mathematical form. The usefulness aspect has more to do with how easy the theory is to apply. For example, Newton's mechanics may not be quite as general as quantum mechanics, but we still use it, for instance, to calculate the orbits of satellites. We could, in principle, calculate such orbits with quantum mechanics as well, but that would be much more complicated and less intuitive. Newton's theory is simply more useful for this purpose.

We can roughly divide theories into two groups. The first is explanatory theories, which explain a class of phenomena as consequences of a few fundamental principles. The second is phenomenological theories, which treat relationships between observable phenomena without making assumptions about their underlying causes. Thermodynamics and optics are examples of phenomenological theories. Thermodynamics comprises laws such as Boyle's law, which states that the pressure of a gas is inversely proportional to its volume when temperature and mass are kept constant. That law was established directly from observation and is purely descriptive. Phenomenological laws are also common in applied areas. A medical researcher may, for example, establish from observation that a certain feature in an ECG (electrocardiogram) indicates a certain heart disorder without knowing exactly why. This would be a general rule that applies to a theoretical concept (the specific ECG feature), but in itself it does not explain the connection between the feature and the disorder. Such phenomenological laws must often be formulated before deeper, explanatory theories can be developed.
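Used purely as a descriptive rule, Boyle's law can be checked against measurements and applied to predictions without any statement about why it holds. A minimal sketch, assuming pressure and volume readings taken at constant temperature and mass; the function names and the readings are made up for illustration and are not real measurements.

```python
from statistics import mean


def boyle_constant(measurements):
    """Estimate C in p * V = C from (pressure, volume) pairs
    recorded at constant temperature and mass."""
    return mean(p * v for p, v in measurements)


def max_relative_deviation(measurements):
    """Largest relative departure of p * V from the fitted constant;
    a small value means the readings are consistent with Boyle's law."""
    c = boyle_constant(measurements)
    return max(abs(p * v - c) / c for p, v in measurements)


def predict_pressure(p1, v1, v2):
    """Apply the law: pressure after changing the volume from v1 to v2."""
    return p1 * v1 / v2


# Illustrative (made-up) readings in kPa and litres.
readings = [(100.0, 2.0), (200.0, 1.0), (50.0, 4.0)]
print(boyle_constant(readings))           # 200.0
print(max_relative_deviation(readings))   # 0.0
print(predict_pressure(100.0, 2.0, 0.5))  # 400.0
```

Nothing in this code says why the product of pressure and volume stays constant; it only records and applies the regularity, which is exactly what makes the law phenomenological.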

The best explanatory theories are based on a few simple and general rules from which phenomenological laws can eventually be explained. The kinetic theory of gases, for example, explains why the temperatures, pressures and volumes of gases behave as they do by treating the gas as a system of small particles in constant, random motion. From this and other assumptions, Boyle's law and other thermodynamic laws may be derived by deduction. Another example of a good explanatory theory is Maxwell's equations, from which the laws of optics can be derived.
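The deduction of Boyle's law can be sketched with the standard textbook argument. Assume $N$ identical particles of mass $m$ moving randomly in a container of volume $V$, colliding elastically with the walls, and identify the temperature $T$ with the mean kinetic energy through Boltzmann's constant $k_B$. Each collision with a wall normal to $x$ transfers momentum $2mv_x$, and random motion gives $\langle v_x^2 \rangle = \langle v^2 \rangle / 3$, so

$$
p = \frac{N}{V}\, m \langle v_x^2 \rangle = \frac{1}{3}\,\frac{N}{V}\, m \langle v^2 \rangle ,
\qquad
\left\langle \tfrac{1}{2} m v^2 \right\rangle = \tfrac{3}{2} k_B T
$$

$$
\Longrightarrow\quad pV = \frac{2}{3} N \left\langle \tfrac{1}{2} m v^2 \right\rangle = N k_B T .
$$

Holding the amount of gas ($N$) and the temperature ($T$) fixed makes the right-hand side constant, so $pV$ is constant and $p \propto 1/V$, which is Boyle's law, now obtained as a consequence of the particle picture rather than read off from observation.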

The line between phenomenological and explanatory theory is not sharp. Returning to the burning diesel jet, we noted that we do not fully understand why the lift-off length is established at a certain position. Based on a wide range of observations, we may still formulate a phenomenological law that describes how the lift-off position is affected by various variables. Despite its phenomenological nature, this law will help us understand trends in soot formation, as described in the example. Even phenomenological laws thus have some explanatory power. Similarly, we could say that explanatory theories like Maxwell's equations have phenomenological features. Although they explain everything from optics to radar as consequences of a general set of basic rules, we do not know the fundamental reason why the rules work. During the last century, physicists worked hard to unify the theory of electromagnetism with that of gravity to find a common cause for the two, just as Maxwell had unified electricity and magnetism before them. But even if that had been achieved, the reason for the unified theory would still be unknown. The problem threatens to degenerate into an infinite regress of unknown causes. At one level or another, all theoretical laws become phenomenological.
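A phenomenological law for the lift-off length would typically be obtained by fitting an empirical correlation to measurements, and power laws are a common form for such correlations. A minimal sketch, assuming a single hypothetical governing variable `x` and a law of the form y = c·x^a; the function name, the variable and the data are illustrative only, not published lift-off correlations or measurements.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = c * x**a on log-transformed data.

    Returns (c, a). Requires positive xs and ys and at least two distinct xs.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    # Ordinary least squares for the straight line log y = log c + a * log x.
    sxx = sum((u - mean_x) ** 2 for u in lx)
    sxy = sum((u - mean_x) * (w - mean_y) for u, w in zip(lx, ly))
    a = sxy / sxx
    c = math.exp(mean_y - a * mean_x)
    return c, a


# Synthetic "observations" generated from y = 3 * x**0.5, only to show that
# the fit recovers a known law.
xs = [1.0, 2.0, 4.0, 8.0]
ys = [3.0 * x ** 0.5 for x in xs]
c, a = fit_power_law(xs, ys)
print(round(c, 6), round(a, 6))  # 3.0 0.5
```

The fitted `c` and `a` summarize how the quantity responds to the variable without saying why, which is the sense in which such a law is phenomenological; yet the exponent alone already supports reasoning about trends.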

As researchers we should not be disheartened by the fact that we may never find the ultimate cause of everything. That would keep us from realizing that our most general theories actually constitute very powerful knowledge of Nature's machinery. The fact that we can identify such a wide variety of phenomena as consequences of such a small number of general rules is quite awe-inspiring. It is, for example, a direct, logical consequence of Newton's laws of motion and gravitation that distant galaxies are shaped as they are. At the same time, these laws explain why your bread usually falls with the buttered side down when you drop it from your breakfast table. Neat, isn't it?