20

Protecting the Privacy of Electricity Consumers in the Smart City

Binod Vaidya1 and Hussein T. Mouftah1

1School of Electrical Engineering and Computer Science (EECS), University of Ottawa, Ottawa, ON, Canada

20.1 Introduction

An intelligent energy infrastructure, which links together various elements of city operations, is one of the essential features of a smart city.

A smart grid is an electric power grid that employs information and communication technologies (ICTs) to improve its efficiency, reliability, resiliency, and flexibility. Such a grid not only provides consumers with information about their energy usage and costs so that they can make informed decisions independently, but also transforms the electric power grid through remote monitoring and control, automation, and self‐healing, and it enables the safe and reliable integration of distributed renewable energy resources (Strasser et al., 2015).

Smart meters are one of the key enablers of smart grids. An advanced metering infrastructure (AMI) supports bidirectional energy flows and uses two‐way communication, enabling energy service providers (ESPs) to receive consumers' energy consumption data and to send pricing or control signals back to consumers in real time. By measuring consumers' near real‐time energy consumption at high temporal resolution, AMI enables ESPs to control and optimize supply and distribution and even to offer their customers pricing schemes based on current supply and demand (Siano, 2014). Distribution system operators can monitor the electric power grid at a higher sampling rate and granularity than before. Electricity customers can also benefit from smart meter deployment by receiving timely information about the power they consume and managing their consumption accordingly.

Furthermore, the smart grid allows consumers who produce electricity with photovoltaic panels or wind turbines to sell it to the grid or to other consumers (Depuru, Wang, and Devabhaktuni, 2011b). Similarly, the smart grid includes vehicle‐to‐grid (V2G) systems, in which electric vehicles (EVs) communicate with power grid operators to trade demand response services by delivering stored electricity into the grid (Yu et al., 2016).

AMI is a predominant and fundamental component in the development and deployment of the smart grid in smart cities; meanwhile, V2G networks for charging and discharging EVs are also expanding rapidly in urban areas.

Although the smart grid delivers numerous performance benefits to the electric power industry and also enables consumers to optimize their power consumption, the smart grid infrastructure (i.e., AMI and V2G) in the smart city has become increasingly susceptible to a wide range of cyber‐threats.

Smart grid technologies capture customer data containing sensitive information that is used for purposes such as real‐time pricing and demand response; however, privacy can be threatened and breached by a number of practices that are normally considered unacceptable (Finster and Baumgart, 2015; Li et al., 2012).

If effective security and privacy‐preserving approaches are not employed in the smart grid, new challenges to security, privacy, and data protection will emerge.

The objective of this chapter is to provide insights into the privacy protection of electricity consumers in the smart city. It focuses mainly on privacy principles, including privacy by design (PbD), and provides a basis for a better understanding of the current state of the art in privacy engineering, privacy impact assessment, and privacy‐enhancing technologies. The rest of the chapter is organized as follows. Section 20.2 discusses privacy concerns in the smart grid, including AMI and V2G. Section 20.3 discusses privacy principles, while section 20.4 presents privacy engineering, focusing on privacy protection goals and pertinent frameworks. Section 20.5 describes privacy impact assessment, and section 20.6 explores privacy‐enhancing technologies. Finally, section 20.7 concludes the chapter.

20.2 Privacy in the Smart Grid

The smart grid (i.e., AMI and V2G) introduces substantial benefits and opportunities to the smart city, but it also raises several privacy challenges. For instance, fine‐grained smart metering data and control messages give ESPs information about consumers' real‐time electricity consumption. However, a wide range of information about the consumer's premises can be inferred from fine‐grained consumption data, which increases the probability of leaking customers' private information, including personal details, daily activities, and individual behaviors (Lisovich, Mulligan, and Wicker, 2010; Jokar, Arianpoo, and Leung, 2016).
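To make this inference risk concrete, the following minimal Python sketch (an illustration, not a method from the cited works) flags large step changes in a fine‐grained power trace. The readings and the 500 W threshold are hypothetical. Even this naive edge detector reveals when a high‐power appliance is switched on and off, which hints at occupancy and daily routines; published load‐monitoring techniques are far more capable.

```python
from typing import List, Tuple


def detect_switch_events(readings_w: List[float],
                         threshold_w: float = 500.0) -> List[Tuple[int, str, float]]:
    """Flag step changes between consecutive power readings.

    Returns (index, "on" or "off", step size in watts) for every jump whose
    magnitude is at least threshold_w; index is the first reading at the new level.
    """
    events = []
    for i in range(1, len(readings_w)):
        step = readings_w[i] - readings_w[i - 1]
        if step >= threshold_w:
            events.append((i, "on", step))
        elif step <= -threshold_w:
            events.append((i, "off", -step))
    return events


# Hypothetical 1-minute readings (W): base load, then a ~2 kW appliance runs for 3 minutes.
trace = [150.0, 160.0, 2150.0, 2140.0, 2160.0, 170.0, 150.0]
print(detect_switch_events(trace))  # [(2, 'on', 1990.0), (5, 'off', 1990.0)]
```

Coarser readings (for example, one value per day) would hide these individual events, which is why granularity matters so much for privacy.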

Indeed, security and privacy are both crucial to the success of smart grid networks, including AMI and V2G networks. Privacy emphasizes the individual's ability to control the collection, use, and dissemination of his or her personally identifiable information (PII), whereas security offers mechanisms to ensure the confidentiality and integrity of information and the availability of ICT systems. The two concepts nonetheless intersect, and ensuring privacy is more complicated than ensuring security (SGIPCSWG‐V1, 2014; SGIPCSWG‐V2, 2014; SGIPCSWG‐V3, 2014).

Privacy considerations in the smart grid involve examining the rights, values, and interests of individuals. According to NIST IR 7628v2 (SGIPCSWG‐V2, 2014), four dimensions of privacy are considered: 1) privacy of personal information; 2) privacy of the person; 3) privacy of personal behavior; and 4) privacy of personal communications. Most smart grid stakeholders directly address the first dimension, since most data protection laws and regulations mainly cover privacy of personal information; nevertheless, the remaining three dimensions are also essential privacy considerations in the smart grid.

Not only can vulnerabilities in AMI become entry points for external adversaries, but insider risks can also escalate to a magnified threat level. Serious privacy risks in AMI include eavesdropping, traffic analysis, statistical disclosure, and consumption profiling (Jiang et al., 2014; Krishna, Weaver, and Sanders, 2015). Malicious entities could exploit personal smart metering data for identity theft, burglary, vandalism, stalking, and similar purposes.

Privacy concerns are also one of the obstacles to the successful deployment of V2G networks, and privacy protection is more challenging in V2G networks than in AMI networks. Because of electric mobility (E‐mobility), EVs may join or leave the EV‐charging network frequently, so their privacy requirements are more stringent. Privacy issues in V2G networks include the location privacy of EVs and privacy‐leaking attacks such as eavesdropping, man‐in‐the‐middle (MiTM) attacks, impersonation attacks, Sybil attacks, and physical attacks.

The primary potential privacy consequences of smart grid systems (i.e., AMI and V2G) include identity theft; determination of personal behavior patterns; determination of the specific appliances used; real‐time surveillance; revelation of activities through residual data; targeted home invasions; accidental invasions; activity censorship; decisions and actions based on inaccurate data; profiling; unwanted publicity and embarrassment; tracking the behavior of renters or lessees; behavior tracking (possibly combined with personal behavior patterns); location tracking; and public aggregated searches revealing individual behavior (Asghar et al., 2017; Cintuglu et al., 2017).

20.2.1 Privacy Concerns over Customer Electricity Data Collected by the Utility

A primary data flow in AMI carries customer electricity data from the smart meter to the utility. Smart meters take fine‐grained readings of customer electricity consumption and send them to the utility, which uses the data for a variety of purposes. For instance, customer electricity data can be used to apply time‐variant pricing (i.e., time‐of‐use rates, critical peak pricing, real‐time pricing), to better understand customer demand, or to detect meter tampering (Depuru, Wang, and Devabhaktuni, 2011a; Amin et al., 2015). Utilities also make the data available to customers so that they can optimize their power consumption (Hubert and Grijalva, 2012). However, this information flow in AMI raises the following privacy concerns.

- Smart meters store meter data internally. Under the existing AMI architecture, the utility controls the meter located at the customer's premises and does not grant access to other parties without the customer's consent. However, adversaries who obtain some data about a specific electricity customer may remotely access his or her smart meter to acquire an energy consumption pattern, which may in turn serve as key information for other malicious activities.

- Consumer meter data move from the smart meter to the utility, typically via a private network operated by the utility but sometimes through a public network (i.e., the Internet). Although data confidentiality is mandatory for communications between the smart meter and the utility in existing AMI systems, encryption in transit does not prevent the utility, or anyone with access to its systems, from learning each customer's fine‐grained consumption. User privacy is therefore not protected, and high privacy risks remain in such AMI systems.

- When consumer meter data are stored at the utility's premises, the customer's privacy interests should be legally protected. An adversary may be able to penetrate the AMI network to access the meter data stored there, and privacy risks are even higher if third parties are allowed to access energy usage information directly from the utilities.
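To illustrate why time‐variant pricing depends on interval data, the following minimal Python sketch bills 24 hourly readings under a hypothetical two‐period time‐of‐use tariff. The function name, rates, and peak window are illustrative assumptions, not any utility's actual tariff. Because the bill depends on when energy is used and not only on how much, the utility (or the meter itself) must work with time‐resolved consumption, which is the same data that gives rise to the privacy concerns above.

```python
from typing import Dict, List


def tou_bill(hourly_kwh: List[float], rates: Dict[str, float], peak_hours: range) -> float:
    """Bill one day of hourly readings under a simple two-period time-of-use tariff.

    hourly_kwh[h] is the energy used during hour h (0..23); `rates` maps
    "peak" and "off_peak" to a price per kWh. All values are hypothetical.
    """
    if len(hourly_kwh) != 24:
        raise ValueError("expected 24 hourly readings")
    total = 0.0
    for hour, kwh in enumerate(hourly_kwh):
        period = "peak" if hour in peak_hours else "off_peak"
        total += kwh * rates[period]
    return round(total, 2)


# Same daily total (24 kWh), different timing, different bill.
rates = {"peak": 0.30, "off_peak": 0.10}
flat = [1.0] * 24
evening_heavy = [0.6] * 17 + [3.0] * 4 + [0.6] * 3
print(tou_bill(flat, rates, range(17, 21)))           # 3.2
print(tou_bill(evening_heavy, rates, range(17, 21)))  # 4.8
```

A meter that computed the bill locally and reported only the total would reduce the data the utility receives, at the cost of requiring the utility to trust the meter's computation.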

20.2.2 Privacy Concerns on Energy Usage Information Collected by a Non‐Utility‐Owned Metering Device

A significant shift in the smart grid, relative to its predecessor, is that third parties will be able to collect metering data. In V2G networks, for example, charging station operators collect and process meter data, which may then be transferred to the utility company for third‐party billing. Hence, when energy usage information is collected by a non‐utility‐owned metering device, the customer's privacy is a prime concern.

20.2.3 Privacy Protection

Cyber security is a critical concern owing to the growing potential of cyber‐attacks and incidents against critical energy infrastructures in the smart city. The cyber‐security solution for such an energy infrastructure must tackle not only intentional attacks from industrial espionage and hackers but also unintentional compromises of the electric power grid infrastructure due to user errors, equipment failures, and natural disasters.

Since private information about consumers (e.g., home energy consumption) is included in the energy usage data exchanged among multiple smart grid stakeholders, it could harm particular individuals if it is not used with appropriate protection measures. Thus, the privacy risks and challenges introduced by the smart grid (i.e., AMI and V2G) have to be properly addressed. Protecting critical energy infrastructures in the smart city has to be given high priority, and technical measures to protect customers' privacy should be prioritized accordingly.

Privacy protection consists of preventing any valuable information (i.e., private information) related to the identity of an entity from becoming known to other entities. Only well‐protected smart grid systems can be considered robust and secure within the smart city ecosystem.

The degree of privacy protection should be carefully planned. Applying PbD in conjunction with engineering practice is critical so that a privacy impact assessment (PIA) can be properly conducted and appropriate privacy‐enhancing technologies (PETs) can be deployed.
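As a hedged illustration of how a PET can address the concern that the utility learns every household's readings, the toy Python sketch below uses additive masking for privacy‐preserving aggregation, a technique chosen here for illustration rather than one prescribed by the sources cited in this chapter. Each meter adds a random mask to its reading; because the masks sum to zero, the aggregator recovers only the total. The modulus, the readings, and the generation of all masks in one place are simplifying assumptions; a deployed scheme would derive the masks through key agreement among meters so that no single party knows them.

```python
import secrets
from typing import List

MODULUS = 2**32  # assumption: readings (in Wh) and their sum fit below this bound


def zero_sum_masks(n: int) -> List[int]:
    """Generate n random masks that sum to 0 modulo MODULUS (toy, centralized)."""
    masks = [secrets.randbelow(MODULUS) for _ in range(n - 1)]
    masks.append((-sum(masks)) % MODULUS)
    return masks


def mask_reading(reading_wh: int, mask: int) -> int:
    """What a meter would send: its reading hidden by its own mask."""
    return (reading_wh + mask) % MODULUS


def aggregate(masked: List[int]) -> int:
    """What the aggregator computes: the masks cancel, leaving only the total."""
    return sum(masked) % MODULUS


readings = [420, 1310, 75]  # hypothetical per-household readings (Wh)
masks = zero_sum_masks(len(readings))
reports = [mask_reading(r, m) for r, m in zip(readings, masks)]
print(aggregate(reports))  # 1805
```

Each individual report looks uniformly random to the aggregator, yet the sum is exact, which is often sufficient for load forecasting and grid balancing.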

20.3 Privacy Principles

Several privacy principles can help enforce privacy protection in ICT‐based systems, including smart grid networks.

Because system designs and architectures may collect PII, they can potentially violate privacy. A better methodology for protecting the privacy of consumer data is to apply a PbD approach (IPCO, 2009; Cavoukian, 2011). The original PbD approach was proposed by Ann Cavoukian in the 1990s. It identifies a set of foundational principles that should be followed when designing and developing privacy‐sensitive applications (IPCO, 2009). These seven foundational principles are depicted in Figure 20.1.

Figure 20.1 Seven foundational principles of PbD.

With its proactive approach, PbD embeds privacy directly into the design of technologies, business practices, and networked infrastructures. It treats privacy as a foundational requirement, thereby preventing privacy‐invasive events before they happen. By making privacy the default setting within an organization, the organization can protect its customers' privacy effectively (Cavoukian, 2011).

As an early framework for interpreting privacy and personal information, the Fair Information Practice Principles (FIPPs) originated in a 1973 report of the U.S. Department of Health, Education, and Welfare. The Federal Trade Commission (FTC), USA, later stated their core principles as: 1) notice/awareness; 2) choice/consent; 3) access/participation; 4) integrity/security; and 5) enforcement/redress (Landesberg et al., 1998).

Various adaptations of the FIPPs have been developed. For instance, concerning privacy and data protection, the Organization for Economic Cooperation and Development (OECD) developed the Guidelines on the Protection of Privacy and Transborder Flows of Personal Data in 1980 and revised them in 2013 as the OECD Privacy Framework (OECD, 2013). The principles in the OECD documents have been widely adopted.

To provide a universal privacy framework, the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) published ISO/IEC 29100 as an international standard in 2011 (ISO/IEC JTC 1/SC 27 IT Security techniques, 2011). The privacy principles described in the ISO/IEC 29100:2011 Privacy Framework are mainly derived from existing principles developed by various bodies, such as the FTC and the OECD. The framework targets organizations, helping them define their privacy preservation requirements, and also aims to enhance existing security standards with a privacy perspective wherever PII is processed. Hence, these privacy principles are used to guide the design, development, and implementation of privacy policies and privacy controls.

The American Institute of Certified Public Accountants (AICPA) and the Canadian Institute of Chartered Accountants (CICA) have developed privacy principles and associated criteria, known as the Generally Accepted Privacy Principles (GAPPs), to assist organizations in designing and implementing comprehensive privacy practices and policies (Cornelius, 2009).

The relationship among three major sets of privacy principles, namely, the OECD Privacy Framework principles, the privacy principles of the ISO/IEC 29100:2011 Privacy Framework, and the AICPA Generally Accepted Privacy Principles (GAPPs), is shown in Figure 20.2.

Figure 20.2 The relationship among OECD Privacy Framework, ISO/IEC 29100:2011 Privacy Framework, and AICPA GAPPs.

By studying the privacy principles of other industries that handle and store sensitive information, smart grid actors such as utilities can better protect consumer electricity data.

The National Institute of Standards and Technology (NIST), USA, is one of the central players in promoting the growth of the smart grid; it develops a framework for the smart grid that embraces interoperable standards and protocols so that all smart grid components can work together. In this regard, NIST published Smart Grid Cyber Security Strategy and Requirements (NIST IR 7628) in 2010 and revised it in 2014 (SGIPCSWG‐V1, 2014; SGIPCSWG‐V2, 2014; SGIPCSWG‐V3, 2014). Volume 2 of NIST IR 7628 (SGIPCSWG‐V2, 2014) is dedicated to privacy in the smart grid. NIST IR 7628 applies the FIPPs when deliberating privacy considerations for the smart grid and uses the GAPPs as one of its sets of privacy principles.

By adapting the seven foundational PbD principles to the smart grid context, IPCO (2010) created best practices for smart grid PbD: smart grid systems should not only proactively embed privacy requirements into their designs and make privacy the default, but also be visible and transparent to consumers and be designed with respect for consumer privacy. A related approach that emphasizes embedding privacy into the design of electricity conservation is described by Cavoukian, Polonetsky, and Wolf (2010). Guidance on applying privacy by design to third‐party access to customer energy usage data is provided by Cavoukian and Polonetsky (2013).

Wicker and Thomas (2011) have advocated a framework of privacy‐aware design practices for embedding privacy awareness into information networks, consisting of a set of principles derived from the FIPPs. This approach, which comprises five privacy‐aware principles, is specifically intended for the demand response platform (Wicker and Schrader, 2011) and is summarized in Table 20.1.

Table 20.1 Five Privacy‐Aware Principles for the Demand Response Platform.

| Privacy‐aware principle | Requirement |
|---|---|
| Provide full disclosure of data collection | |
| Require consent to data collection | |
| Minimize collection of personal data | |
| Minimize identification of data with individuals | |
| Minimize and secure data retention | |
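The principle of minimizing the collection of personal data can be applied at the meter itself. The Python sketch below is an illustrative assumption, not taken from Wicker and Schrader (2011): it sums fine‐grained energy readings into coarser intervals before they leave the premises, so short appliance events blur while billing‐relevant totals are preserved. The function name and interval lengths are hypothetical; the appropriate granularity depends on the tariff and the demand response program.

```python
from typing import List


def coarsen(readings_kwh: List[float], factor: int) -> List[float]:
    """Sum consecutive groups of `factor` energy readings into coarser intervals.

    For example, factor=15 turns 1-minute kWh readings into 15-minute totals.
    A trailing partial group is dropped rather than reported.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    usable = len(readings_kwh) - len(readings_kwh) % factor
    return [round(sum(readings_kwh[i:i + factor]), 6)
            for i in range(0, usable, factor)]


# One hypothetical hour of 1-minute readings, with a 2-minute kettle spike at minutes 10-11.
minute_kwh = [0.002] * 60
minute_kwh[10] = minute_kwh[11] = 0.035
print(coarsen(minute_kwh, 15))  # [0.096, 0.03, 0.03, 0.03]
```

The spike survives only as a higher first total rather than as a precisely timed event; energy readings are summed here, whereas power readings would have to be averaged instead.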

20.4 Privacy Engineering

Adopting a PbD approach alone is not sufficient, owing to the lack of holistic and systematic methodologies that address the intricacy of privacy and the absence of a translation of privacy principles into engineering activities (Alshammari and Simpson, 2016). Privacy engineering deals with designing, implementing, adapting, and evaluating guidelines, protocols, and techniques to systematically capture and address privacy issues in the development of ICT systems (Gurses and Del Alamo, 2016; Spiekermann and Cranor, 2009). Hence, privacy engineering focuses on providing guidance that enables organizations to make purposeful decisions about resource allocation and the effective deployment of controls in ICT systems in order to decrease privacy risks (Cavoukian, Shapiro, and Cronk, 2014).

There have been several efforts to formulate concepts for privacy engineering, as well as approaches that define the engineering, technical, and operational aspects of PbD (Hoepman, 2014; Kroener and Wright, 2014; Antignac and Le Metayer, 2015; Bringer et al., 2015).

20.4.1 Privacy Protection Goals

Protection goals serve as authoritative reference points when evaluating the information security of ICT systems and choosing appropriate technical and operational safeguards across technologies (Meis, Wirtz, and Heisel, 2015).

Classically, information security in ICT systems features three security protection goals, namely confidentiality, integrity, and availability, commonly known as the CIA triad, which is typically deemed critical for evaluating the security of ICT systems. Confidentiality means preserving authorized restrictions on information access and disclosure, including means for protecting personal privacy and sensitive information from malicious parties. Integrity means guarding against unauthorized or improper modification or destruction of information, and it includes ensuring information non‐repudiation and authenticity. Availability means ensuring timely and reliable access to information by authorized people.

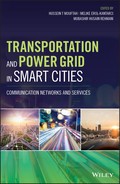

To strengthen the privacy perspective, three privacy‐specific protection goals, namely unlinkability, transparency, and intervenability (the UTI triad) (Hansen, Jensen, and Rost, 2015), have been identified to complement the security protection goals of the CIA triad.

- Unlinkability ensures that privacy‐relevant data cannot be linked across privacy domains, where each domain is constituted by a common purpose and context. This implies that processes must operate in such a way that privacy‐relevant data cannot be linked to any privacy‐relevant information outside the domain. It is related not only to the principles of necessity and data minimization but also to the principle of purpose binding. Unlinkability encompasses anonymity and the various ways of realizing it, and the protection goal of unlinkability also aims at unobservability and undetectability.

- Transparency ensures that all legal, technical, and organizational aspects of privacy‐relevant data processing can be understood and reconstructed at any time. Information about privacy‐relevant data processing needs to be available at all times. Transparency is linked to the principles concerning openness and is a prerequisite for accountability.

- Intervenability ensures that intervention is possible concerning all privacy‐relevant data processing, in particular by data owners. Its purpose is to allow corrective measures and counterbalances to be applied on demand. It is related to the principles concerning customers' rights. Furthermore, data controllers should have effective control over data processors and their ICT systems so that they can influence the data processing at any time.
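Unlinkability across privacy domains can be approximated with domain‐specific pseudonyms. In the minimal Python sketch below (an illustration with hypothetical identifiers, domain names, and key, not a scheme from Hansen, Jensen, and Rost), the same customer receives a different keyed‐hash (HMAC) pseudonym in each domain, so billing records cannot be joined with demand response records without the key held by the data controller.

```python
import hashlib
import hmac


def domain_pseudonym(customer_id: str, domain: str, key: bytes) -> str:
    """Derive a stable pseudonym for customer_id that is specific to one privacy domain.

    The same inputs always give the same pseudonym, so records can be linked
    within a domain, but pseudonyms from different domains cannot be matched
    without the secret key.
    """
    message = f"{domain}:{customer_id}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:16]


KEY = b"hypothetical-key-held-by-the-data-controller"
billing_id = domain_pseudonym("customer-0042", "billing", KEY)
dr_id = domain_pseudonym("customer-0042", "demand-response", KEY)
print(billing_id, dr_id)  # two unrelated-looking 16-hex-digit identifiers
```

This provides pseudonymity rather than anonymity: the consumption patterns themselves may still re‐identify a household, so pseudonyms are usually combined with the aggregation or minimization measures discussed elsewhere in this chapter.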

Meaningful protection goals should be defined so as to balance the requirements derived from both sets of protection goals (i.e., the CIA triad and the UTI triad) across legal, technical, and organizational processes. Considerations of fairness, impartiality, and accountability provide guidance for balancing these requirements and for determining better strategies and appropriate protections.

In a similar manner, NIST has provided three privacy engineering objectives, namely disassociability, predictability, and manageability (the DPM triad), for developing and operating privacy‐preserving ICT systems (Brooks et al., 2017). These objectives are designed to enable ICT designers to build systems capable of implementing an organization's privacy protection goals and to support the management of privacy risk.

- Disassociability enables the processing of personal information or events without association with individuals or devices beyond the operational requirements of the ICT system. It recognizes that privacy risks can result from exposure even when access is authorized or occurs as a consequence of a transaction. The effectiveness of disassociability could be further increased by constructing a taxonomy of existing identity‐related categories, including anonymity, de‐identification, unlinkability, unobservability, and pseudonymity, among others. Such a taxonomy could support more precise privacy risk mitigation.

- Predictability enables reliable assumptions by individuals, owners, and operators about personal information and its processing by the ICT system. It accommodates a wide range of organizational interpretations of transparency, from a value statement about the importance of open processes to a requirements‐based view that specific information must be shared. Predictability helps preserve trusted relationships between ICT systems and individuals and supports individuals' capability for self‐determination.

- Manageability provides the capability for granular administration of personal information, including alteration, deletion, and selective disclosure. It is a primary property for enabling self‐determination and the fair treatment of individuals. When an ICT system permits fine‐grained control over data, organizations can implement key privacy principles, including maintaining data quality and integrity and honoring individuals' privacy preferences. Manageability could also support the mapping of technical controls such as data tagging and emerging identity management standards related to attribute transmission.
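The manageability capabilities above (alteration, deletion, and selective disclosure supported by data tagging) can be sketched as follows. This Python example is an assumption‐based illustration, not a NIST‐specified design: the `CustomerRecord` class, the attribute names, and the purpose tags are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class CustomerRecord:
    # Hypothetical layout: each attribute is tagged with the purposes it may serve.
    attributes: Dict[str, str] = field(default_factory=dict)
    purposes: Dict[str, Set[str]] = field(default_factory=dict)

    def update(self, name: str, value: str, allowed_purposes: Set[str]) -> None:
        """Alter (or add) one attribute together with its purpose tags."""
        self.attributes[name] = value
        self.purposes[name] = set(allowed_purposes)

    def delete(self, name: str) -> None:
        """Remove one attribute and its tags, leaving the rest of the record intact."""
        self.attributes.pop(name, None)
        self.purposes.pop(name, None)

    def disclose(self, purpose: str) -> Dict[str, str]:
        """Selective disclosure: release only the attributes tagged for `purpose`."""
        return {k: v for k, v in self.attributes.items()
                if purpose in self.purposes.get(k, set())}


rec = CustomerRecord()
rec.update("account_id", "A-17", {"billing"})
rec.update("tariff", "time-of-use", {"billing", "demand_response"})
rec.update("ev_charging_schedule", "22:00-06:00", {"demand_response"})
print(rec.disclose("demand_response"))  # {'tariff': 'time-of-use', 'ev_charging_schedule': '22:00-06:00'}
```

Because access is decided per attribute and per purpose, a customer's request to withdraw one item (for example, the EV charging schedule) can be honored without affecting billing.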

Together, the three privacy engineering objectives (i.e., the DPM triad), complemented by the CIA triad to address unauthorized access to personal information, provide a core set of information system capabilities that support a balanced realization of business goals and privacy goals and assist in mapping controls to mitigate identified privacy risks.

The UTI triad and DPM triad can be loosely associated to provide privacy protection goals for a particular privacy‐preserving ICT system. A mapping between UTI and DPM triads is shown in Figure 20.3.

Figure 20.3 Mapping between UTI and DPM triads.

20.4.2 Privacy Engineering Framework and Guidelines

Numerous methodologies for designing privacy into modern ICT systems have been proposed (Notario et al., 2014; Notario et al., 2015; Kung, 2014). Privacy engineering can be put into practice by incorporating privacy requirements into the stages of the systems engineering life cycle (SELC), which can facilitate core privacy protection objectives as well as other organizational objectives. For some organizations, the prime motivation for privacy engineering is regulatory compliance or the reduction of organizational risk.

Furthermore, the MITRE Corporation has formulated a privacy engineering framework in which privacy engineering operationalizes the PbD framework within ICT systems (MITRE‐CoP, 2014) by:

- decomposing PbD into activities aligned with those of the SELC and supported by specific methods that account for privacy's distinct characteristics;

- defining and applying privacy requirements in terms of implementable system functionality and properties, so that privacy risks are recognized and sufficiently addressed within the SELC; and

- supporting deployed ICT systems by aligning system usage and enhancement with a broader privacy program.

Figure 20.4 shows how the fundamental privacy engineering activities map onto the stages of a typical SELC. Such a mapping applies to any SELC, including agile development.

Figure 20.4 Mapping of the fundamental privacy engineering activities into stages of the typical SELC (MITRE‐CoP, 2014).

Table 20.2 Privacy Engineering Activities and Methods.

| Life Cycle Activity | Privacy Method |
|---|---|
| Privacy requirements definition | Baseline and custom privacy system requirements |
| | Privacy empirical theories and abstract concepts |
| Privacy design and development | Fundamental privacy design concepts |
| | Privacy empirical theories and abstract concepts |
| | Privacy design tools |
| | Privacy heuristics |
| Privacy verification and validation | Privacy testing and review |
| | Operational synchronization |

The primary life cycle activities and privacy methods of privacy engineering are listed in Table 20.2, and the life cycle activities of the privacy engineering framework are briefly discussed as follows.

- Privacy requirements definition. This phase specifies the system's privacy properties in a way that supports system design and development. It includes the following activities: selection and fine‐tuning of baseline privacy requirements and tests, privacy risk analysis of functional requirements, and development of custom privacy requirements and tests based on the results of the privacy risk analysis.

- Privacy design and development. This phase provides representation and implementation of those elements of the system that support defined privacy requirements. It includes the following activities: identification of privacy design strategies and patterns; identification of architectural, technical point, and policy privacy controls; development of data and process models reflecting identified privacy controls; alignment, integration, and implementation of privacy controls with functional elements; and privacy risk analysis of overall design.

- Privacy verification and validation. This phase is intended to confirm that the defined privacy requirements have been correctly implemented and reflect stakeholder expectations. Typically, it encompasses the following activities: privacy test case development/refinement, privacy test case execution, and checking operational behavior against applicable privacy policies and procedures.
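A privacy test case of the kind described in the verification and validation phase can be as simple as an automated check on data leaving the system. The Python sketch below assumes a hypothetical policy that records shared with third parties must not contain direct identifiers; the field names, the policy set, and the sample export are illustrative, not drawn from the MITRE framework.

```python
from typing import Dict, Iterable, List

# Hypothetical policy: fields that must never appear in records shared with third parties.
DIRECT_IDENTIFIERS = {"name", "street_address", "account_id", "email"}


def find_violations(records: Iterable[Dict[str, object]]) -> List[str]:
    """Return one message per direct identifier found in the outgoing records."""
    problems = []
    for i, record in enumerate(records):
        leaked = DIRECT_IDENTIFIERS.intersection(record)  # iterating a dict yields its keys
        for field_name in sorted(leaked):
            problems.append(f"record {i}: contains '{field_name}'")
    return problems


def test_third_party_export_has_no_direct_identifiers() -> None:
    export = [  # hypothetical export produced by the system under test
        {"pseudonym": "3f9a", "interval_kwh": 1.2},
        {"pseudonym": "b71c", "interval_kwh": 0.8},
    ]
    assert find_violations(export) == []
```

Such a test can run in a continuous integration pipeline, so that a later code change that starts exporting, say, account numbers fails before deployment; it complements, but does not replace, review of operational behavior.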

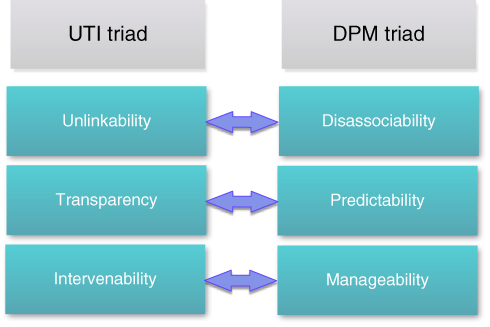

Fhom and Bayarou (2011) proposed a step‐by‐step engineering flow for addressing privacy concerns in smart grid systems. The overall privacy‐aware engineering flow is depicted in Figure 20.5, and the steps and guidelines of the proposed methodology are outlined as follows.

- Identify relevant high‐level privacy requirements. High‐level policies relevant to the designed smart grid system should be identified. In general, these policies, typically imposed by agencies and regulatory bodies, include privacy requirements that all smart grid stakeholders must comply with when dealing with privacy‐sensitive data. They may include contractual agreements as well as privacy and data protection guidelines.

- System modeling. A detailed model of the designed smart grid system should be developed in which every element, its functionalities, and the interdependencies among relevant elements are clearly specified.

- Privacy risks and impacts assessment. As part of an overall threat and risk assessment process, a privacy impact assessment (PIA) of the smart grid should be conducted. This phase provides a better understanding of the privacy threats and risks associated with access to sensitive information within the designed smart grid system.

- Identify privacy goals and elicit and analyze low‐level privacy requirements. During this phase, privacy goals and requirements for the designed smart grid are captured and analyzed. They include not only traditional properties such as confidentiality and integrity and anonymity‐related properties such as unlinkability and unobservability, but may also explicitly target user‐centric privacy management.

- Select, integrate, and evaluate appropriate PETs. In this phase, privacy‐preserving mechanisms, including PETs, that can serve as countermeasures against the identified privacy risks and threats and that satisfy the related privacy requirements are selected and incorporated into the designed smart grid system. Finally, these PETs must be evaluated to confirm that they fulfill the privacy protection goals.

Figure 20.5 Overall privacy‐aware engineering flow.

20.5 Privacy Risk and Impact Assessment

To comply effectively with privacy protection goals, privacy principles, and other regulations, organizations need a better understanding of the privacy risks in demand response systems (i.e., the smart grid).

20.5.1 System Privacy Risk Model

A privacy risk model aims to provide a structured, repeatable, and quantifiable method for addressing privacy risk in ICT systems. The model may be defined by an equation and a series of inputs designed to enable (i) the identification of problems that may arise from the processing of personal information and (ii) the calculation of how such problems can be reflected in an organizational risk management approach that supports prioritization and resource allocation to achieve organizational goals while minimizing adverse events.

In general, system privacy risk is determined by three inputs: the personal information collected or generated, the data actions performed on that information, and the context surrounding the collection, generation, and processing of this personal information (Brooks et al., 2017).

Since system privacy risk is the risk of problematic data actions occurring, its inputs can be explained as follows.

- Data actions: They are any information system operations that process personal information. Processing of personal information may include the collection, retention, logging, generation, transformation, disclosure, transfer, and disposal of personal information.

- Personal information and context: These are two critical inputs that modify the privacy risk of any given data action. For every data action, an organization should identify the associated personal information at a granular level. Both the context and the associated personal information contribute to whether a data action has the potential to cause privacy problems. Based on this information, an organization can document its initial observations about each data action.

The system privacy risk model can be expressed as the equation shown in Figure 20.6.

Figure 20.6 Equation expression for a system privacy risk model.

A system privacy risk model can therefore help an organization identify privacy risks that are distinct from security risks. Because the model emphasizes the operations a system performs while processing personal information, the privacy risk of an ICT system can be expressed as a function of the likelihood that a data action causes problems for individuals.
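As a minimal sketch of how such a model can support prioritization, the Python code below records each data action with its personal information and context and ranks the actions by a score obtained by multiplying a likelihood rating by an impact rating. The 1 to 10 scales, the multiplication, the class and function names, and the example data actions are illustrative assumptions following a common likelihood‐times‐impact convention; they do not reproduce the equation of Figure 20.6.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataAction:
    """One operation that processes personal information (hypothetical example)."""
    name: str
    personal_info: List[str]  # PI involved, identified at a granular level
    context: str              # circumstances surrounding the processing
    likelihood: int           # assumed scale: 1 (rare) .. 10 (almost certain)
    impact: int               # assumed scale: 1 (negligible) .. 10 (severe)

    @property
    def risk(self) -> int:
        # Assumption: risk scored as likelihood x impact, used only for ranking.
        return self.likelihood * self.impact


def prioritize(actions):
    """Return data actions ordered from highest to lowest risk score."""
    return sorted(actions, key=lambda a: a.risk, reverse=True)


actions = [
    DataAction("collect 1-min readings", ["consumption trace", "meter ID"],
               "readings reveal occupancy and appliance use", likelihood=7, impact=8),
    DataAction("monthly billing", ["monthly total", "account ID"],
               "expected by customers", likelihood=2, impact=3),
    DataAction("disclose to third party", ["consumption trace", "address"],
               "secondary use without consent", likelihood=4, impact=9),
]

for a in prioritize(actions):
    print(f"{a.name}: {a.risk}")
```

Ranking by score is the point of the exercise: it indicates where the organization should spend mitigation effort first.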

20.5.2 Privacy Impact Assessment (PIA)

A PIA is a comprehensive and methodical process for identifying and addressing privacy issues in an ICT system that processes PII. It is used to determine the privacy, confidentiality, and security risks associated with the collection, use, and disclosure of PII and to assess the potential effects of a process or an information system on privacy. The PIA also describes the measures that can be used to mitigate, and possibly eliminate, the identified risks.

The main goals of the PIA are: (i) to ensure that information handling conforms to the pertinent legal, regulatory, and policy requirements regarding privacy; (ii) to determine the risks and effects of collecting, maintaining, and disseminating PII within the ICT system; and (iii) to examine and evaluate protections and alternative processes for handling information so as to mitigate potential privacy risks.

Because the PIA is integral to privacy risk treatment, serving to evaluate and manage privacy impacts and to ensure compliance with privacy protection rules and responsibilities, it is regarded as a means of making privacy engineering more concrete and effective.

In recent years, risk management focusing on PIA has started to take on a more prominent role in privacy and data protection (Wright, Finn, and Rodrigues, 2013; Kalogridis et al., 2014; Engage, 2011). Several organizations and regulatory entities have developed PIA guidelines and strategies, which often differ in scope, context, and goal, depending on the concerned organizations and their purposes (IPCO, 2015).

ISO/IEC 29134 (ISO/IEC JTC 1/SC 27 IT Security techniques, 2017) is a standard on privacy impact assessment that provides a set of guidelines for managing PIAs. Its PIA guidelines can be summarized as follows: (i) determine whether a PIA is required for the given system and define the information flows and other privacy impacts; (ii) identify privacy risks and possible solutions; (iii) formulate and implement the PIA‐related recommendations; (iv) conduct a third‐party review and/or audit of the PIA; (v) update the PIA if revisions occur; and (vi) embed privacy awareness throughout the organization and ensure accountability. These guidelines assist an organization in conducting the PIA.

In NIST IR 7628 v2 (SGIPCSWG‐V2, 2014), NIST describes a comprehensive consumer‐to‐utility PIA for the smart grid. This smart grid PIA provides a structured, repeatable analysis aimed at determining how collected meter data can reveal personal information about individuals; its focus can be a segment of the electric power grid or the grid as a whole. The privacy principles and corresponding recommendations for the high‐level consumer‐to‐utility smart grid PIA in NIST IR 7628 v2 are shown in Table 20.3.

Table 20.3 Privacy Principles and Corresponding Recommendations for Smart Grid High‐Level Consumer‐to‐Utility Privacy Impact Assessment.

| Principle | Recommendations |
|---|---|
| Management and accountability | • assign privacy responsibility • establish privacy audits • establish or amend incident response and law enforcement request policies and procedures |
| Notice and purpose | • provide notification for personal information collected • provide notification for new information use purposes and collection |
| Choice and consent | • provide notification about choices |
| Collection and scope | • limit the collection of data to only that necessary for smart grid operations • obtain the data by lawful and fair means |
| Use and retention | • review privacy policies and procedures • limit information retention |
| Individual access | • access to energy usage data • dispute resolution |
| Disclosure and limiting use | • limit information use • disclosure |
| Security and safeguards | • associate energy data with individuals only when and where required • de‐identify information • safeguard personal information • do not use personal information for research purposes |
| Accuracy and quality | • keep information accurate and complete |
| Openness, monitoring, and challenging compliance | • policy challenge procedures • perform regular privacy impact assessments • establish breach notice practices |

Hofer et al. (2013) propose a PIA for the e‐Mobility system that mainly focuses on the ISO/IEC 15118 standard. The e‐Mobility PIA includes the following steps: (i) defining the scope and purpose; (ii) identifying stakeholders; (iii) determining information assets; (iv) identifying information requirements and use; (v) determining information handling and other considerations; and (vi) conducting the evaluation.

In the EU, several privacy risk assessment methodologies have been developed (Papakonstantinou and Kloza, 2015), for instance, the privacy risk management methodology of the French Commission Nationale de l'Informatique et des Libertés (CNIL) (CNIL, 2015) and the privacy impact assessment code of practice of the UK Information Commissioner's Office (ICO). Most prominently, a Data Protection Impact Assessment (DPIA) template for smart grid and smart metering systems has been developed so that smart grid actors can conduct an assessment prior to deploying any smart metering application (EU‐SGTF, 2014).

20.6 Privacy Enhancing Technologies

Privacy enhancing technologies (PETs; Senicar, Jerman‐Blazic, and Klobucar, 2003) are methods that work in accordance with data protection laws to prevent situations that might result in privacy violations. For instance, PETs allow electricity consumers to protect the privacy of the PII they provide to, and that is handled by, other stakeholders in the smart grid. PETs protect electricity consumers largely against activity and behavioral analysis. They thus aim to protect privacy by minimizing personal data and preventing its excessive processing, without loss of the functionality of the smart grid system (Jawurek, Kerschbaum, and Danezis, 2012).

The main objective of PETs is to protect personal data and to assure customers that their information remains confidential and that data protection is a priority for the service providers responsible for handling PII. In this regard, PETs aim to deliver functionality and benefits to all stakeholders and to make personal data available to third parties without disclosing any sensitive information (Kement et al., 2017; Jo, Kim, and Lee, 2016; Tonyali et al., 2017).

To address privacy concerns in the smart grid, several privacy‐preserving protocols (PPPs) and PETs have been proposed and deployed (Souri et al., 2014; Ferrag et al., 2016; Han and Xiao, 2016b). Most PETs focus on AMI networks (Diao et al., 2015; Birman et al., 2015; Li et al., 2015; Wang, Mu, and Chen, 2016); however, some are dedicated to V2G networks (Liu et al., 2014; Wang et al., 2015; Han and Xiao, 2016a; Liu et al., 2016).

Design of innovative PETs should assure consumers' privacy and allow ESPs to monitor and control the grid securely (Abdallah and Shen, 2016; He et al., 2017; Li et al., 2017; Liao et al., 2017). PETs can be classified into several categories: anonymization, trusted computation, cryptographic computation, perturbation, and verifiable computation (Jawurek, Kerschbaum, and Danezis, 2012).

20.6.1 Anonymization

The basic notion of anonymization is that the data consumer (e.g., ESP or utility) can still perform the needed calculations even though the direct association between a data item (i.e., a smart meter reading of consumed electricity) and the data producer (e.g., household or customer) has been severed. In other words, an anonymization technique removes user‐specific features from metering data before sending it to the authorized data consumer, so that the ESP obtains anonymous metering data (i.e., data without any PII) that cannot easily be attributed to any specific customer. The ESP can thus still perform statistical processing and the required computations, whereas it is difficult or impossible for it to associate the received metering data with a specific smart meter, household, or electric vehicle.

The data consumer's inability to attribute information derived from electricity customers' data items to their producers is a substantial privacy‐enhancing effect of anonymization. Anonymity is thus a fundamental and beneficial form of privacy protection, and several privacy‐preserving mechanisms based on anonymization have been proposed.

Efthymiou and Kalogridis (2010) propose a mechanism for anonymizing customers' metering data using pseudonymous IDs managed through an escrow service run by a trusted third party (TTP), so that data consumers can be assured of the legitimacy of the received metering data but cannot link the data to a specific customer. Similarly, Gong et al. (2016) propose using two different IDs (i.e., an anonymous ID and an attributable ID) to provide privacy‐preserving incentive‐based demand response.
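The two‐identifier idea can be sketched as follows, loosely modeled on the escrow‐based approach above. The class names, the random‐token pseudonym, and the split between high‐frequency operational data and low‐frequency billing data are illustrative assumptions, not the cited authors' protocols, which also involve authentication steps omitted here.

```python
import secrets


class EscrowTTP:
    """Hypothetical escrow service: the only party able to map pseudonyms to meters."""

    def __init__(self):
        self._mapping = {}  # pseudonym -> attributable meter ID (kept secret)

    def issue_pseudonym(self, meter_id: str) -> str:
        pseudonym = secrets.token_hex(8)  # random token, unlinkable to meter_id
        self._mapping[pseudonym] = meter_id
        return pseudonym

    def is_valid(self, pseudonym: str) -> bool:
        # Lets a data consumer check that data come from some legitimate meter
        # without learning which one.
        return pseudonym in self._mapping


class SmartMeter:
    def __init__(self, meter_id: str, escrow: EscrowTTP):
        self.meter_id = meter_id                           # attributable ID (billing)
        self.pseudonym = escrow.issue_pseudonym(meter_id)  # anonymous ID (operations)
        self._readings = []

    def report_fine_grained(self, kwh: float):
        """High-frequency reading, sent under the anonymous ID only."""
        self._readings.append(kwh)
        return (self.pseudonym, kwh)

    def report_billing_total(self):
        """Low-frequency total, sent under the attributable ID."""
        return (self.meter_id, round(sum(self._readings), 3))


escrow = EscrowTTP()
meter = SmartMeter("MTR-001", escrow)
operational_stream = [meter.report_fine_grained(x) for x in (0.2, 0.5, 0.3)]
billing_record = meter.report_billing_total()
```

The operations side sees only the pseudonym and the billing side sees only a period total; linking the two requires the mapping held by the escrow.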

Badra and Zeadally (2014) propose a different approach to providing authorized data consumers with anonymous metering data, namely a virtual ring architecture. Finster and Baumgart (2013), in contrast, propose a pseudonymous smart metering protocol without a TTP, in which a smart meter uses a blinded pseudonym signed by the authorized data consumer, so the smart meter does not reveal its identity.

Rottondi, Mauri, and Verticale (2015) propose a data pseudonymization protocol that uses a secret‐splitting scheme to construct a unique pseudonym via different intermediate trusted nodes, such that once the data consumer receives all the shares tagged with the same pseudonym, it can recover the metering data associated with that pseudonym. Furthermore, Vaidya, Makrakis, and Mouftah (2014) propose an ID‐based partially restrictive blind signature scheme for V2G networks, in which the blindness property of the e‐token keeps the EV's real ID anonymous to the local aggregator.

Clearly, an anonymization technique is practical only if the computation result does not have to be attributed to a specific data producer. The technique can also be ineffective, since it is sometimes possible to re‐identify the owner of the data. Jawurek, Johns, and Rieck (2011) find that pseudonymized consumption traces (i.e., traces separated from PII) can still be attributed to individuals using auxiliary information, such as correlations between power events and physical events observed in a household.
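To illustrate why pseudonymization alone can fail, the toy sketch below links pseudonymous traces to a household by correlating each trace with auxiliary observations of activity at that household. It is a deliberately simplified stand‐in rather than the method of Jawurek, Johns, and Rieck (2011), and all traces and observations are made up.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def link_trace(observations, pseudonymous_traces):
    """Return the pseudonym whose trace best matches the auxiliary observations."""
    scores = {p: pearson(observations, t) for p, t in pseudonymous_traces.items()}
    return max(scores, key=scores.get)


# Hypothetical auxiliary knowledge: 1 = activity observed at the house in that slot.
observed = [0, 0, 1, 1, 0, 1, 0, 0]
# Hypothetical pseudonymized traces (kWh per slot) with no PII attached.
traces = {
    "a3f9": [0.1, 0.1, 1.2, 1.1, 0.2, 1.3, 0.1, 0.2],
    "7c21": [0.5, 0.6, 0.4, 0.5, 0.6, 0.5, 0.4, 0.6],
    "e044": [1.0, 0.9, 0.2, 0.1, 1.1, 0.3, 0.9, 1.0],
}
print(link_trace(observed, traces))
```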

20.6.2 Trusted Computation

In trusted computation approaches, the data consumer does not have direct access to the electricity usage information of individual consumers. Instead, it receives only an aggregate of metering data, computed either by the data producers themselves or by an additional TTP introduced as an external aggregator. Because it receives only aggregation results, the data consumer cannot recover consumers' personal details (i.e., PII), yet it still obtains sufficiently accurate aggregated metering data. Metering data are mostly aggregated either temporally (i.e., the power trace of a single user over time) or spatially (i.e., the power traces of multiple users in a given time interval).
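A minimal sketch of the two aggregation modes, with a hypothetical aggregator class and made‐up readings in watt‐hours. The trust assumption discussed next is visible in the code: the aggregator holds every individual trace, and only convention stops it from releasing them.

```python
from typing import Dict, List


class TrustedAggregator:
    """Hypothetical trusted aggregator: holds individual traces,
    but releases only aggregates to the data consumer."""

    def __init__(self, readings: Dict[str, List[int]]):
        # readings[meter_id][t] = Wh consumed by the meter in interval t
        self._readings = readings

    def temporal(self, meter_id: str) -> int:
        """Temporal aggregate: one user's trace summed over time."""
        return sum(self._readings[meter_id])

    def spatial(self, t: int) -> int:
        """Spatial aggregate: all users' consumption in interval t."""
        return sum(trace[t] for trace in self._readings.values())


agg = TrustedAggregator({
    "meter_A": [400, 900, 1200, 300],
    "meter_B": [200, 200, 800, 600],
    "meter_C": [500, 1100, 700, 400],
})
print(agg.temporal("meter_A"), agg.spatial(2))
```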

In this approach, TTPs are mainly used to provide unlinkability between readings and smart meters and to support fraud/loss detection. However, one of the major threats is that the aggregating entities themselves disclose individual data to the data consumer. These privacy‐preserving protocols therefore typically require strong assumptions about the trustworthiness of the aggregating entities.

Li, Luo, and Liu (2010) propose a mechanism that aggregates Paillier‐encrypted data while routing it through a minimal spanning tree of smart meters toward the authorized data consumer. Similarly, Ruj, Nayak, and Stojmenovic (2011) propose a two‐tier system for aggregating smart metering data and for subsequent access by authorized data consumers with the help of Paillier encryption.

Chen, Lu, and Cao (2015) propose a privacy‐preserving data aggregation scheme with fault tolerance (so‐called PDAFT) for smart grid communications that uses a homomorphic Paillier encryption technique to encrypt sensitive consumer data such that the data consumer can obtain the aggregated data without knowing individual ones.

20.6.3 Cryptographic Computation

In the cryptographic computation approach, either encryption schemes with a homomorphic property or secret‐sharing schemes can be deployed. Metering data items arriving at the data consumer are either ciphertexts or secret shares, and the privacy‐preserving protocol must ensure that the data consumer can decrypt only the aggregate of the data items, not any individual data item.

In the homomorphic encryption technique, the data producers (i.e., smart meters) encrypt individual power consumption items using the public key of the data consumer (Tonyali, Saputro, and Akkaya, 2015). The encrypted data items are then combined through a homomorphic operation before reaching the data consumer; if the individual data items are to be aggregated, a homomorphic addition operation is employed. Finally, after receiving the aggregated ciphertext, the data consumer decrypts the result using its private key.
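The steps above can be sketched with a textbook Paillier construction. The tiny primes make it insecure and are for illustration only; the choice g = n + 1, the helper names, and the readings are assumptions, and a real deployment would use a vetted library with moduli of at least 2048 bits.

```python
import math
import secrets
from functools import reduce


def keygen(p=1009, q=1013):
    """Toy Paillier keys from two small primes (INSECURE, illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    g = n + 1                                          # standard simple choice of g
    mu = pow(lam, -1, n)  # with g = n + 1, L(g^lam mod n^2) = lam mod n
    return (n, g), (lam, mu, n)


def encrypt(pub, m):
    """Smart meter side: E(m) = g^m * r^n mod n^2 with fresh random r."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def add(pub, c1, c2):
    """Aggregator side: E(m1) * E(m2) mod n^2 decrypts to m1 + m2."""
    n, _ = pub
    return (c1 * c2) % (n * n)


def decrypt(priv, c):
    """Data consumer side: m = L(c^lam mod n^2) * mu mod n, with L(x) = (x - 1) / n."""
    lam, mu, n = priv
    n2 = n * n
    return ((pow(c, lam, n2) - 1) // n * mu) % n


pub, priv = keygen()
readings_wh = [350, 1200, 875, 40]  # hypothetical per-meter readings
ciphertexts = [encrypt(pub, m) for m in readings_wh]
aggregate = reduce(lambda a, b: add(pub, a, b), ciphertexts)
print(decrypt(priv, aggregate))  # 2465
```

The aggregator multiplies ciphertexts without ever holding a decryption key, and the aggregate must stay below n for the decrypted sum to be exact.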

In a secret‐sharing scheme, a secret is split into multiple parts (shares), and each part is given to an authorized participant. All of these participants, or a sufficiently large subset of them, must contribute their shares to reconstruct the secret. In the smart grid context, a consumer's electricity consumption reading from a smart meter can serve as the secret, with its shares distributed among several aggregators.
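The simplest instance is n‐out‐of‐n additive secret sharing, in which every participant must contribute. In this sketch, each meter splits its reading into random shares modulo a prime and sends one share to each of three aggregators; the modulus, the number of aggregators, and the readings are illustrative assumptions.

```python
import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime); an illustrative choice


def share(reading, n_aggregators):
    """Split one reading into n additive shares that sum to it mod PRIME.
    Any n - 1 shares are uniformly random and reveal nothing about the reading."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_aggregators - 1)]
    shares.append((reading - sum(shares)) % PRIME)
    return shares


def aggregate(all_shares):
    """all_shares[i][j] is share j of meter i; aggregator j sums column j,
    and the data consumer adds the partial sums to obtain the total."""
    n = len(all_shares[0])
    partial_sums = [sum(row[j] for row in all_shares) % PRIME for j in range(n)]
    return sum(partial_sums) % PRIME


readings_wh = [350, 1200, 875, 40]                     # hypothetical readings
per_meter_shares = [share(r, 3) for r in readings_wh]  # 3 aggregators
print(aggregate(per_meter_shares))  # 2465
```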

Garcia and Jacobs (2011) propose a privacy‐friendly energy metering system that uses an aggregation protocol based on a homomorphic property of encryption and specifically targets the detection of energy theft at substations. Likewise, Nateghizad, Erkin, and Lagendijk (2016) put forward a privacy‐preserving cryptographic protocol based on homomorphic encryption for smart metering that can reduce communication cost and improve efficiency of the protocol with encrypted inputs.

Tonyali et al. (2016a) investigate the feasibility and performance of fully homomorphic encryption (FHE)‐based aggregation in smart grid AMI networks using the reliable transport protocol TCP, and propose a novel packet reassembly mechanism for TCP to overcome the packet reassembly problem.

Rottondi, Verticale, and Capone (2012) describe an approach in which trusted privacy‐preserving nodes and a central configurator are introduced into the smart metering system and Shamir's secret‐sharing scheme is used. Similarly, Rottondi, Fontana, and Verticale (2014) propose employing Shamir's secret‐sharing scheme in a privacy‐preserving mechanism for V2G networks, in which three types of EV data (i.e., plug‐in time period, battery charge level, and amount of recharged electricity) are split into shares. Each local aggregator in a set holds one share of the data, and the required information is reconstructed from the shares contributed by all the local aggregators.
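A (t, n) Shamir scheme can be sketched as below. Because shares are points on a random polynomial, aggregators can add the shares they hold from different EVs and jointly reconstruct only the sum. The field modulus, the threshold, and the EV values are illustrative assumptions; the sketch does not reproduce the cited protocols.

```python
import secrets

PRIME = 2**61 - 1  # prime field for the polynomial arithmetic (illustrative)


def make_shares(secret, threshold, n):
    """Shamir (threshold, n) sharing: points of a random polynomial f
    of degree threshold - 1 with f(0) = secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, f(x)) for x in range(1, n + 1)]


def reconstruct(shares):
    """Recover f(0) by Lagrange interpolation from at least `threshold` shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Two EVs share their recharged energy (kWh, hypothetical) among 3 local aggregators.
ev1 = make_shares(12, threshold=3, n=3)
ev2 = make_shares(30, threshold=3, n=3)
# Each aggregator adds the shares it holds; it never sees 12 or 30 in the clear.
summed = [(x, (y1 + y2) % PRIME) for (x, y1), (_, y2) in zip(ev1, ev2)]
print(reconstruct(summed))  # 42
```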

20.6.4 Perturbation

Privacy‐enhancing protocols using a perturbation technique add a certain amount of noise to individual data items or to the final aggregate, such that the data consumer can still perform the required computation but cannot use the result to derive any sensitive information about the data producer (i.e., the privacy of the data producer is sufficiently protected).

One option for perturbation‐based PETs is differential privacy, in which a differentially private aggregation function adds a sufficient amount of random noise to its result so that individual input data items cannot be inferred from the function's result.
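A minimal sketch of the standard Laplace mechanism applied to an aggregate: the noise scale is the sensitivity divided by the privacy parameter ε. The clipping bound, ε, and the readings are illustrative assumptions. Note that a single party adding the noise, as here, still sees the raw readings; the distributed variants discussed next remove that requirement.

```python
import random


def laplace_noise(scale, rng=random):
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials with mean scale."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_sum(readings, max_reading, epsilon, rng=random):
    """epsilon-differentially private sum of readings.
    Readings are clipped to [0, max_reading], so adding or removing one
    household changes the true sum by at most max_reading (the sensitivity)."""
    clipped = [min(max(r, 0), max_reading) for r in readings]
    return sum(clipped) + laplace_noise(max_reading / epsilon, rng)


rng = random.Random(7)  # seeded only to make the demo repeatable
readings_wh = [350, 1200, 875, 40]  # hypothetical readings
print(dp_sum(readings_wh, max_reading=2000, epsilon=1.0, rng=rng))
```

A smaller ε means a larger noise scale and therefore stronger privacy but a less accurate aggregate.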

Some PETs add noise to every measurement in a distributed manner such that the combined noise is just sufficient to achieve differential privacy (Jawurek and Kerschbaum, 2012).

Acs and Castelluccia (2011) put forward a PET that guarantees differential privacy by adding appropriate noise to the computed aggregate in a novel way. Their distributed noise generation mechanism removes the need for a TTP to act as an aggregator. Similarly, Shi et al. (2011) describe an aggregation protocol in which individual data producers add random noise in such a way that the sum of the noise from all data producers ensures differential privacy for the aggregate outcome. Every data producer encrypts its noisy measurement before sending it to the aggregator, and the aggregator uses its own final key share to decrypt only the differentially private aggregate of all measurements.
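The distributed idea can be sketched using the infinite divisibility of the Laplace distribution: if each of n meters adds G1 − G2, with G1 and G2 drawn independently from a Gamma distribution with shape 1/n and scale b, the sum of all n contributions follows Laplace(0, b). This is one known way to realize distributed noise generation and is presented as an assumption; the sketch omits the encryption layer that hides each lightly perturbed reading from the aggregator, and the total noise falls short of the target if some meters fail to report.

```python
import random


def local_noise(n_meters, scale, rng=random):
    """Noise share for ONE meter: G1 - G2 with G1, G2 ~ Gamma(shape=1/n, scale).
    The sum of n such shares is distributed as Laplace(0, scale)."""
    shape = 1 / n_meters
    return rng.gammavariate(shape, scale) - rng.gammavariate(shape, scale)


def noisy_reports(readings, max_reading, epsilon, rng=random):
    """Each meter perturbs its own reading; no single party adds all the noise."""
    n = len(readings)
    scale = max_reading / epsilon
    return [r + local_noise(n, scale, rng) for r in readings]


rng = random.Random(42)
reports = noisy_reports([350, 1200, 875, 40], max_reading=2000, epsilon=1.0, rng=rng)
print(sum(reports))  # true total 2465 plus Laplace(0, 2000)-distributed noise
```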

Bao and Lu (2015) propose a data aggregation scheme for smart metering, named differentially private data aggregation with fault tolerance (DPAFT), which can ensure differential privacy of data aggregation along with fault tolerance by applying the Boneh–Goh–Nissim cryptosystem.

Because smart meters in AMI networks periodically send fine‐grained power consumption data to the utility company, consumer privacy is a prime concern. Tonyali et al. (2016b) propose a meter data obfuscation scheme that protects consumer privacy from eavesdroppers and utility companies while preserving the utility companies' ability to use the data for state estimation.

20.6.5 Verifiable Computation

In the verifiable computation paradigm, the aggregator provides not only an aggregation outcome but also a proof that the computation has been performed as claimed. Such privacy‐preserving protocols can therefore be deployed in untrusted environments (i.e., with untrusted aggregators): the untrusted aggregators perform the required computations while the integrity of the aggregation outcome is still guaranteed. These protocols are normally based on zero‐knowledge proof (ZKP) protocols, in which the verifier becomes convinced that the statement made by the prover is true without learning any private information beyond its validity.
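The prover/verifier pattern can be illustrated with the classic Schnorr proof of knowledge of a discrete logarithm, made non‐interactive with the Fiat‐Shamir heuristic (hashing to derive the challenge). The group parameters are toy values chosen for readability and are insecure; the function names are illustrative, and this is not any specific smart grid protocol from the literature.

```python
import hashlib
import secrets

# Toy Schnorr group (INSECURE sizes): P = 2Q + 1 with Q prime, and G = 4
# generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4


def challenge(*values):
    """Fiat-Shamir challenge: hash the public transcript into Z_Q."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x):
    """Prover: show knowledge of x with y = G^x mod P without revealing x."""
    y = pow(G, x, P)
    k = secrets.randbelow(Q - 1) + 1  # one-time random nonce
    t = pow(G, k, P)                  # commitment
    c = challenge(G, y, t)
    s = (k + c * x) % Q               # response
    return y, (t, s)


def verify(y, proof):
    """Verifier: accept iff G^s == t * y^c mod P."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


secret_x = 123  # hypothetical prover secret
y, proof = prove(secret_x)
print(verify(y, proof))  # True
```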

Verifiable computation–based PETs are well suited to billing, because verifiable computation protocols using ZKP can guarantee the integrity and accuracy of an aggregate result used for billing without disclosing any private information.

In the smart grid, the data producer acts as the prover and the data consumer as the verifier. The data producer (i.e., smart meter) computes the total energy consumption for a certain period and sends it to the data consumer (i.e., utility company). The data consumer can then verify the validity of the result without the individual smart meter readings being disclosed. Furthermore, the ZKP enables the data consumer to verify the cumulative price, i.e., that the total amount charged for the energy consumption has been calculated correctly.

Rial and Danezis (2011) propose an approach in which a smart meter produces metering readings, commitments to them, and a signature over the commitments, and sends them to a user device. The user device obtains the tariff from the service provider and calculates the fee together with the required proof. The approach implements ZKP protocols for various tariffs, including cumulative tariffs, interval linear tariffs, and even cumulative polynomial tariffs.
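The core of the linear‐tariff case can be sketched with Pedersen commitments, which are additively homomorphic: raising each reading's commitment to its interval price and multiplying the results yields a commitment to the bill, which the user can open without revealing individual readings. This is a simplified illustration under stated assumptions (toy, insecure group parameters; hypothetical readings and tariff; the meter's signature and the full zero‐knowledge machinery for non‐linear tariffs are omitted), not the construction of Rial and Danezis (2011).

```python
import secrets

# Toy group (INSECURE, illustration only): P = 2Q + 1 with Q prime; G and H
# generate the order-Q subgroup. In practice log_G(H) must be unknown to all.
P, Q, G, H = 2039, 1019, 4, 9


def commit(value, opening):
    """Pedersen commitment G^value * H^opening mod P."""
    return (pow(G, value, P) * pow(H, opening, P)) % P


# Meter: commits to each interval reading in kWh (a real meter also signs these).
readings = [3, 5, 2, 4]
openings = [secrets.randbelow(Q) for _ in readings]
commitments = [commit(r, o) for r, o in zip(readings, openings)]

# User device: computes the bill and the matching combined opening.
tariff = [12, 20, 20, 12]  # price per kWh in each interval (hypothetical)
bill = sum(price * r for price, r in zip(tariff, readings)) % Q
bill_opening = sum(price * o for price, o in zip(tariff, openings)) % Q


def verify_bill(commitments, tariff, bill, bill_opening):
    """Provider: checks the bill using only commitments, tariff, and the opening."""
    combined = 1
    for c, price in zip(commitments, tariff):
        combined = (combined * pow(c, price, P)) % P
    return combined == commit(bill, bill_opening)


print(bill, verify_bill(commitments, tariff, bill, bill_opening))  # 224 True
```

The provider learns the bill and a random‐looking combined opening, but not the individual interval readings.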

Wan, Zhu, and Wang (2016) propose a privacy‐preserving mechanism called PRAC (privacy via randomized anonymous credentials) for V2G communication, which ensures anonymous authentication and rewarding and guarantees unlinkable credentials and rewards. They use zero‐knowledge proofs to show that the scheme ensures integrity and anonymous authentication. Likewise, Rahman et al. (2017) propose a solution that establishes a secure, privacy‐preserving communication channel between a bidder and a registration manager and uses ElGamal public key encryption and the Schnorr signature scheme to realize zero‐knowledge proofs.

Acknowledgment

This work was funded by The Ontario Ministry of Energy Smart Grid Fund.

References

- Abdallah, A., and Shen, X., 2016, ‘A Lightweight Lattice‐based Homomorphic Privacy‐Preserving Data Aggregation Scheme for Smart Grid’, IEEE Trans. on Smart Grid.

- Acs, G. and Castelluccia, C., 2011, ‘I Have a DREAM! (DiffeRentially privatE smArt Metering)’, In: Filler, T., Pevny, T., Craver, S., and Ker, A. (eds.), Information Hiding, IH 2011, Lecture Notes in Computer Science, LNCS 6958, Berlin, Heidelberg: Springer, 118–132.

- Alshammari, M. and Simpson, A., 2016, ‘Towards a Principled Approach for Engineering Privacy by Design’, Oxford University Research, Report No.: RR‐16‐05, viewed Jan 10, 2017.

- Amin, S., Schwartz, G.A., Cardenas, A.A., and Sastry, S.S., 2015, ‘Game‐Theoretic Models of Electricity Theft Detection in Smart Utility Networks: Providing New Capabilities with Advanced Metering Infrastructure’, IEEE Control Systems 35(1), 66–81.

- Antignac, T. and Le Metayer, D., 2015, ‘Trust Driven Strategies for Privacy by Design’, In: Damsgaard Jensen, C., Marsh, S., Dimitrakos, T., Murayama, Y. (eds.), ‘Trust Management IX. IFIP International Federation for Information Processing (IFIPTM 2015)’, IFIP Advances in Information and Communication Technology 454, Springer, Cham, 60–75.

- Asghar, M.R., Dan, G., Miorandi, D., and Chlamtac, I., 2017, ‘Smart Meter Data Privacy: A Survey’, IEEE Communications Surveys & Tutorials.

- Badra, M. and Zeadally, S., 2014, ‘Design and performance analysis of a virtual ring architecture for smart grid privacy’, IEEE Trans. on Information Forensics and Security 9(2), 321–329.

- Bao, H., and Lu, R., 2015, ‘A New Differentially Private Data Aggregation With Fault Tolerance for Smart Grid Communications’, IEEE Internet of Things Journal 2(3), 248–258.

- Birman, K., Jelasity, M., Kleinberg, R., and Tremel, E., 2015, ‘Building a Secure and Privacy‐Preserving Smart Grid’, ACM SIGOPS Operating Systems Review—Special Issue on Repeatability and Sharing of Experimental Artifacts Archive 49(1), 131–136.

- Bringer, J., Chabanne, H., Le Metayer, D., and Lescuyer, R., 2015, ‘Privacy by Design in Practice: Reasoning about Privacy Properties of Biometric System Architectures’, In: Bjorner and de Boer (eds.), ‘FM 2015: Formal Methods’, Lecture Notes in Computer Science 9109, Springer, Cham, 90–107.

- Brooks, S., Garcia, M., Lefkovitz, N., Lightman, S., and Nadeau, E., 2017, ‘An Introduction to Privacy Engineering and Risk Management in Federal Systems’, National Institute of Standards and Technology, NIST IR No.: 8062, viewed Jun 11, 2017.

- Cavoukian, A. and Polonetsky, J., 2013, ‘Privacy by Design and Third Party Access to Customer Energy Usage Data’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- Cavoukian, A., 2011, ‘Privacy by Design: The 7 Foundational Principles’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- Cavoukian, A., Polonetsky, J., and Wolf, C., 2010, ‘SmartPrivacy for the Smart Grid: embedding privacy into the design of electricity conservation’, Identity in the Information Society 3(2), 275–294.

- Cavoukian, A., Shapiro, S., and Cronk, R.J., 2014, ‘Privacy Engineering: Proactively Embedding Privacy, by Design’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- Chen, L., Lu, R., and Cao, Z., 2015, ‘PDAFT: A privacy‐preserving data aggregation scheme with fault tolerance for smart grid communications’, Peer‐to‐Peer Networking and Applications 8(6), 1122–1132.

- Cintuglu, M.H., Mohammed, O.A., Akkaya, K., and Uluagac, A.S., 2017, ‘A Survey on Smart Grid Cyber‐Physical System Testbeds’, IEEE Communications Surveys & Tutorials 19(1), 446–464.

- Commission Nationale de l'Informatique et des Libertés (CNIL), 2015, ‘Privacy impact assessment (PIA) Methodology—How to carry out a PIA’, viewed Jan 10, 2017.

- Cornelius, D., 2009, ‘AICPA's Generally Accepted Privacy Principles’, Compliance Building, viewed Jan 10, 2017, URL: http://www.compliancebuilding.com/2009/01/09/aicpas-generally-accepted-privacy-principles/

- Depuru, S.S.S.R., Wang, L., and Devabhaktuni, V., 2011a, ‘Electricity theft: Overview, issues, prevention and a smart meter based approach to control theft’, Energy Policy 39, 1007–1015.

- Depuru, S.S.S.R., Wang, L., and Devabhaktuni, V., 2011b, ‘Smart meters for power grid: Challenges, issues, advantages and status’, Renewable and Sustainable Energy Reviews 15(6), 2736–2742.

- Diao, F., Zhang, F., and Cheng, X., 2015, ‘A Privacy‐Preserving Smart Metering Scheme Using Linkable Anonymous Credential’, IEEE Trans. on Smart Grid 6(1), 461–467.

- Efthymiou, C. and Kalogridis, G., 2010, ‘Smart Grid Privacy via Anonymization of Smart Metering Data’, In: Proceedings of the 1st IEEE International Conference on Smart Grid Communications (SmartGridComm 2010), Gaithersburg, MD, USA, Oct. 2010, IEEE, 238–243.

- Engage Consulting Ltd., 2011, ‘Privacy Impact Assessment: Use of Smart Metering data by Network Operators’, Ref No.: ENA‐CF002‐007‐1.0, viewed Jan 10, 2017.

- EU Smart Grid Task Force (EU‐SGTF), 2014, ‘Data Protection Impact Assessment Template for Smart Grid and Smart Metering systems’, viewed Jan 10, 2017.

- Ferrag, M.A., Maglaras, L.A., Janicke, H., and Jiang, J., 2016, ‘A Survey on Privacy‐preserving Schemes for Smart Grid Communications’, Cornell University Library, arXiv: 1611.07722, viewed Jan 20, 2017, URL: https://arxiv.org/abs/1611.07722.

- Fhom, H.S. and Bayarou, K.M., 2011, ‘Towards a Holistic Privacy Engineering Approach for Smart Grid Systems’, In: Proceedings of 2011 International Joint Conference of IEEE TrustCom‐11/IEEE ICESS‐11/FCST‐11 2011.

- Finster, S. and Baumgart, I., 2013, ‘Pseudonymous smart metering without a trusted third party’, In: Proceedings of 12th IEEE International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Melbourne, Australia, Jul 2013, IEEE, 1723–1728.

- Finster, S. and Baumgart, I., 2015, ‘Privacy‐Aware Smart Metering: A Survey’, IEEE Communications Surveys & Tutorials 17(2), 1088–1101.

- Garcia, F.D., and Jacobs, B., 2011, ‘Privacy‐Friendly Energy‐Metering via Homomorphic Encryption’, In: Cuellar, J., Lopez, J., Barthe, G., and Pretschner, A. (eds.), Security and Trust Management, STM 2010, Lecture Notes in Computer Science, LNCS 6710, Berlin, Heidelberg: Springer, 226–238.

- Gong, Y., Cai, Y., Guo, Y., and Fang, Y., 2016, ‘A Privacy‐Preserving Scheme for Incentive‐Based Demand Response in the Smart Grid’, IEEE Trans. on Smart Grid 7(3), 1304–1313.

- Gurses, S. and Del Alamo, J.M., 2016, ‘Privacy Engineering: Shaping an Emerging Field of Research and Practice’, IEEE Security & Privacy 14(2), 40–46.

- Han, W. and Xiao, Y., 2016a, ‘Privacy preservation for V2G networks in smart grid: A survey’, Computer Communications 91–92, 17–28.

- Han, W. and Xiao, Y., 2016b, ‘IP2DM: integrated privacy‐preserving data management architecture for smart grid V2G networks’, Wireless Communications and Mobile Computing 16(17), 2956–2974.

- Hansen, M., Jensen, M., and Rost, M., 2015, ‘Protection Goals for Privacy Engineering’, in Proceedings of 2015 IEEE CS Security and Privacy Workshops, San Jose, CA, USA, May 2015, IEEE, 159–166.

- He, D., Kumar, N., Zeadally, S., Vinel, A., and Yang, L.T., 2017, ‘Efficient and Privacy‐Preserving Data Aggregation Scheme for Smart Grid against Internal Adversaries’, IEEE Trans. on Smart Grid.

- Hoepman, J.H., 2014, ‘Privacy Design Strategies’, in Proceedings of IFIP International Information Security Conference SEC 2014: ICT Systems Security and Privacy Protection, IFIPAICT, vol. 428, Marrakech, Morocco, 446–459.

- Hofer, C., Petit, J., Schmidt, R., and Kargl, F., 2013, ‘Popcorn: privacy‐preserving charging for e‐mobility’, In: Proceedings of the 2013 ACM workshop on Security, privacy & dependability for cyber vehicles, ACM, 37–48.

- Hubert, T., and Grijalva, S., 2012, ‘Modeling for Residential Electricity Optimization in Dynamic Pricing Environments’, IEEE Trans. on Smart Grid 3(4), 2224–2231.

- Information and Privacy Commissioner of Ontario (IPCO), 2009, ‘Privacy by Design: The 7 Foundational Principles’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- Information and Privacy Commissioner of Ontario (IPCO), 2010, ‘Privacy by Design: Achieving the Gold Standard in Data Protection for the Smart Grid’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- Information and Privacy Commissioner of Ontario (IPCO), 2015, ‘Planning for Success: Privacy Impact Assessment Guide’, Information and Privacy Commissioner of Ontario, viewed Jan 10, 2017.

- ISO/IEC JTC 1/SC 27 IT Security techniques, 2011, ISO/IEC 29100:2011 Information technology — Security techniques — Privacy framework, 1st ed, International Organization for Standardization (ISO), viewed Jan 10, 2017.

- ISO/IEC JTC 1/SC 27 IT Security techniques, 2017, ISO/IEC 29134:2017 Information technology ‐ Security techniques ‐ Guidelines for privacy impact assessment, International Organization for Standardization (ISO), viewed Jun 11, 2017.

- Jawurek, M. and Kerschbaum, F., 2012, ‘Fault‐Tolerant Privacy‐Preserving Statistics’, In: Fischer‐Hubner, S., and Wright, M. (eds.), Privacy Enhancing Technologies, PETS 2012, Lecture Notes in Computer Science, LNCS 7384, Berlin, Heidelberg: Springer.

- Jawurek, M., Johns, M., and Rieck, K., 2011, ‘Smart metering depseudonymization’, in Proceedings of the 27th Annual Computer Security Applications Conference (ACSAC'11), New York, NY, USA, 227–236.

- Jawurek, M., Kerschbaum, F., and Danezis, G., 2012, ‘SoK: Privacy technologies for Smart grids—a survey of options’, technical report, Microsoft Research, viewed Jan 10, 2017.

- Jiang, R., Lu, R., Wang, Y., Luo, J., Shen, C., and Shen, X.S., 2014, ‘Energy‐theft detection issues for advanced metering infrastructure in smart grid’, Tsinghua Science and Technology 19(2), 105–120.

- Jo, H.J., Kim, I.S., and Lee, D.H., 2016, ‘Efficient and Privacy‐Preserving Metering Protocols for Smart Grid Systems’, IEEE Trans. on Smart Grid 7(3), 1732–1742.

- Jokar, P., Arianpoo, N., and Leung, V.C.M., 2016, ‘Electricity Theft Detection in AMI Using Customers' Consumption Patterns’, IEEE Trans. on Smart Grid 7(1), 216–226.

- Kalogridis, G., Sooriyabandara, M., Fan, Z., and Mustafa, M.A., 2014, ‘Toward Unified Security and Privacy Protection for Smart Meter Networks’, IEEE Systems Journal 8(2), 641–654.

- Kement, C.E., Gultekin, H., Tavli, B., Girici, T., and Uludag, S., 2017, ‘Comparative Analysis of Load Shaping Based Privacy Preservation Strategies in Smart Grid’, IEEE Trans. on Industrial Informatics.

- Krishna, V.B., Weaver, G.A., and Sanders, W.H., 2015, ‘PCA‐Based Method for Detecting Integrity Attacks on Advanced Metering Infrastructure’, In: Campos, J. and Haverkort, B.R. (eds.), Proceedings of Quantitative Evaluation of Systems: 12th International Conference, QEST 2015, Lecture Notes in Computer Science (LNCS) 9259, New York: Springer‐Verlag, 70–85.

- Kroener, I. and Wright, D., 2014, ‘A Strategy for Operationalizing Privacy by Design’, The Information Society 30(5), 355–365.

- Kung, A., 2014, ‘PEARs: Privacy Enhancing Architectures’, In: Preneel, B., and Ikonomou, D. (eds.), Privacy Technologies and Policy, APF 2014, Lecture Notes in Computer Science, LNCS 8450, Springer, Cham, 18–29.

- Landesberg, M.K., Levin, T.M., Curtin, C.G., and Lev, O., 1998, ‘Privacy Online: A Report to Congress’, Federal Trade Commission, viewed Jan 10, 2017.

- Li, C., Lu, R., Li, H., Chen, L., and Chen, J., 2015, ‘PDA: a privacy‐preserving dual‐functional aggregation scheme for smart grid communications’, Wiley Security and Communication Networks 8, 2494–2506.

- Li, F., Luo, B., and Liu, P., 2010, ‘Secure information aggregation for Smart grids using homomorphic encryption’, In: Proceedings of the 1st IEEE International Conference on Smart Grid Communications (SmartGridComm 2010), Gaithersburg, MD, USA, Oct. 2010, IEEE, 327–332.

- Li, H., Gong, S., Lai, L., Han, Z., Qiu, R.C., and Yang, D., 2012, ‘Efficient and Secure Wireless Communications for Advanced Metering Infrastructure in Smart Grids’, IEEE Trans. on Smart Grid 3(3), 1540–1551.

- Li, S., Xue, K., Yang, Q., and Hong, P., 2017, ‘PPMA: Privacy‐Preserving Multi‐Subset Aggregation in Smart Grid’, IEEE Trans. on Industrial Informatics.

- Liao, X., Srinivasan, P., Formby, D., and Beyah, A.R., 2017, ‘Di‐PriDA: Differentially Private Distributed Load Balancing Control for the Smart Grid’, IEEE Trans. on Dependable and Secure Computing.

- Lisovich, M.A., Mulligan, D.K., and Wicker, S.B., 2010, ‘Inferring Personal Information from Demand‐Response Systems’, IEEE Security & Privacy 8(1), 11–20.

- Liu, H., Ning, H., Zhang, Y., Xiong, Q., and Yang, L.T., 2014, ‘Role‐Dependent Privacy Preservation for Secure V2G Networks in the Smart Grid’, IEEE Trans. on Information Forensics and Security 9(2), 208–220.

- Liu, J.K., Susilo, W., Yuen, T.H., Au, M.H., Fang, J., Jiang, Z.L., and Zhou, J., 2016, ‘Efficient Privacy‐Preserving Charging Station Reservation System for Electric Vehicles’, The Computer Journal 59(7), 1040–1053.

- Meis, R., Wirtz, R., and Heisel, M., 2015, ‘A Taxonomy of Requirements for the Privacy Goal Transparency’, In: Fischer‐Hubner, S., Lambrinoudakis, C., and Lopez, J. (eds.), Trust, Privacy and Security in Digital Business, TrustBus 2015, Lecture Notes in Computer Science, LNCS 9264, Springer International Publishing, 195–209.

- MITRE Privacy Community of Practice (CoP) 2014, ‘Privacy Engineering Framework’, viewed Jan 10, 2017.

- Nateghizad, M., Erkin, Z., and Lagendijk, R.L., 2016, ‘An efficient privacy‐preserving comparison protocol in smart metering systems’, EURASIP Journal on Information Security.

- Notario, N., Crespo, A., Kung, A., Kroener, I., Le Metayer, D., Troncoso, C., Del Alamo, J.M., and Martin, Y.S., 2014, ‘PRIPARE: A New Vision on Engineering Privacy and Security by Design’, In: Cleary, F., and Felici, M. (eds.), Cyber Security and Privacy, CSP 2014, Communications in Computer and Information Science, CCIS 470, Springer, Cham, 65–76.

- Notario, N., Crespo, A., Martin, Y.S., Del Alamo, J.M., Le Metayer, D., Antignac, T., Kung, A., Kroener, I., and Wright, D., 2015, ‘PRIPARE: Integrating Privacy Best Practices into a Privacy Engineering Methodology’, In: Proceedings of 2015 IEEE CS Security and Privacy Workshops, San Jose, CA, USA, May 2015, IEEE, 151–158.

- Organisation for Economic Co‐operation and Development (OECD) 2013, ‘The OECD Privacy Framework’, Organisation for Economic Co‐operation and Development, viewed Jan 10, 2017.

- Papakonstantinou, V. and Kloza, D., 2015, ‘Legal Protection of Personal Data in Smart Grid and Smart Metering Systems from the European Perspective’, In: Goel, S., Hong, Y., Papakonstantinou, V., and Kloza, D. (eds.), Smart Grid Security, SpringerBriefs in Cybersecurity, ISSN 2193‐973X, London: Springer, 41–129.

- Rahman, M.S., Basu, A., Kiyomoto, S., and Bhuiyan, M.Z.A., 2017, ‘Privacy‐friendly secure bidding for smart grid demand‐response’, Information Sciences 379, 229–240.

- Rial, A. and Danezis, G., 2011, ‘Privacy‐preserving smart metering’, In: Proceedings of the 10th Annual ACM workshop on Privacy in the Electronic Society, WPES'11, Chicago, IL, USA, Oct 2011, ACM, 49–60.

- Rottondi, C., Fontana, S., and Verticale, G., 2014, ‘Enabling privacy in vehicle‐to‐grid interactions for battery recharging’, Energies 7(5), 2780–2798.

- Rottondi, C., Mauri, G., and Verticale, G., 2015, ‘A protocol for metering data pseudonymization in smart grids’, Wiley Trans. on Emerging Telecommunications Technologies 26(5), 876–892.

- Rottondi, C., Verticale, G., and Capone, A., 2012, ‘A security framework for smart metering with multiple data consumers’, In: Proceedings of 2012 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Orlando, FL, USA, IEEE, 103–108.

- Ruj, S., Nayak, A., and Stojmenovic, I., 2011, ‘A security architecture for data aggregation and access control in smart grids’, Cornell University Library, arXiv: 1111.2619, viewed Jan 20, 2017, URL: https://arxiv.org/abs/1111.2619.

- Senicar, V., Jerman‐Blazic, B., and Klobucar, T., 2003, ‘Privacy‐Enhancing Technologies—approaches and development’, Computer Standards & Interfaces 25(2), 147–158.

- Shi, E., Chan, T.H., Rieffel, E.G., Chow, R., and Song, D., 2011, ‘Privacy‐preserving aggregation of time‐series data’, In: Proceedings of the 18th Annual Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, Feb 2011.

- Siano, P., 2014, ‘Demand response and smart grids—A survey’, Elsevier Renewable and Sustainable Energy Reviews 30, 461–478.

- Souri, H., Dhraief, A., Tlili, S., Drira, K., and Belghith, A., 2014, ‘Smart Metering privacy‐preserving techniques in a nutshell’, Procedia Computer Science 32, 1087–1094.

- Spiekermann, S. and Cranor, L.F., 2009, ‘Engineering Privacy’, IEEE Trans. on Software Engineering 35(1), 67–82.

- Strasser, T., Andren, F., Kathan, J., Cecati, C., Buccella, C., Siano, P., Leitao, P., Zhabelova, G., Vyatkin, V., Vrba, P., and Marik, V., 2015, ‘A Review of Architectures and Concepts for Intelligence in Future Electric Energy Systems’, IEEE Trans. on Industrial Electronics 62(4), 2424–2438.

- The Smart Grid Interoperability Panel Cyber Security Working Group 2014, Guidelines for Smart Grid Cybersecurity: Vol. 1, (SGIPCSWG‐V1) Smart Grid Cybersecurity Strategy, Architecture, and High‐Level Requirements, National Institute of Standards and Technology, U.S. Department of Commerce, NIST IR No.: 7628 Rev 1, viewed Jan 6, 2017.

- The Smart Grid Interoperability Panel Cyber Security Working Group 2014, Guidelines for Smart Grid Cybersecurity: Vol. 2, (SGIPCSWG‐V2) Privacy and the Smart Grid, National Institute of Standards and Technology, U.S. Department of Commerce, NIST IR No.: 7628 Rev 1, viewed Jan 6, 2017.

- The Smart Grid Interoperability Panel Cyber Security Working Group 2014, Guidelines for Smart Grid Cybersecurity: Vol. 3, (SGIPCSWG‐V3) Supportive Analyses and References, National Institute of Standards and Technology, U.S. Department of Commerce, NIST IR No.: 7628 Rev 1, viewed Jan 6, 2017.

- Tonyali, S., Akkaya, K., Saputro, N., and Uluagac, A.S., 2016a, ‘A reliable data aggregation mechanism with Homomorphic Encryption in Smart Grid AMI networks’, In: Proceedings of 2016 13th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, Jan 2016, IEEE.

- Tonyali, S., Akkaya, K., Saputro, N., Uluagac, A.S., and Nojoumian, M., 2017, ‘Privacy‐preserving protocols for secure and reliable data aggregation in IoT‐enabled Smart Metering systems’, Future Generation Computer Systems.

- Tonyali, S., Cakmak, O., Akkaya, K., Mahmoud, M.M.E.A., and Guvenc, I., 2016b, ‘Secure Data Obfuscation Scheme to Enable Privacy‐Preserving State Estimation in Smart Grid AMI Networks’, IEEE Internet of Things Journal 3(5), 709–719.

- Tonyali, S., Saputro, N., and Akkaya, K., 2015, ‘Assessing the feasibility of fully homomorphic encryption for Smart Grid AMI networks’, In: Proceedings of 2015 Seventh International Conference on Ubiquitous and Future Networks (ICUFN), Sapporo, Japan, Jul 2015, IEEE.

- Vaidya, B., Makrakis, D., and Mouftah, H.T., 2014, ‘Security and Privacy‐Preserving Mechanism for Aggregator Based Vehicle‐to‐Grid Network’, In: Mitton, N., Gallais, A., Kantarci, M., and Papavassiliou, S. (eds.), Ad Hoc Networks, ADHOCNETS 2014, Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, LNICST 140, Springer, Cham, 75–85.

- Wan, Z., Zhu, W.T., and Wang, G., 2016, ‘PRAC: Efficient privacy protection for vehicle‐to‐grid communications in the smart grid’, Computers & Security 62, 246–256.

- Wang, H., Qin, B., Wu, Q., Xu, L., and Domingo‐Ferrer, J., 2015, ‘TPP: Traceable Privacy‐Preserving Communication and Precise Reward for Vehicle‐to‐Grid Networks in Smart Grids’, IEEE Trans. on Information Forensics and Security 10(11), 2340–2351.

- Wang, X.F., Mu, Y., and Chen, R.M., 2016, ‘An efficient privacy‐preserving aggregation and billing protocol for smart grid’, Wiley Security and Communication Networks 9, 4536–4547.

- Wicker, S. and Thomas, R., 2011, ‘A Privacy‐Aware Architecture For Demand Response Systems’, In: Proceedings of the 44th Hawaii International Conference on System Sciences (HICSS'11), Hawaii, USA, Jan 2011, 1–9.

- Wicker, S.B. and Schrader, D.E., 2011, ‘Privacy‐Aware Design Principles for Information Networks’, Proc. of the IEEE 99(2), 330–350.

- Wright, D., Finn, R., and Rodrigues, R., 2013, ‘A Comparative Analysis of Privacy Impact Assessment in Six Countries’, Journal of Contemporary European Research 9(1).

- Yu, R., Zhong, W., Xie, S., Yuen, C., Gjessing, S., and Zhang, Y., 2016, ‘Balancing Power Demand Through EV Mobility in Vehicle‐to‐Grid Mobile Energy Networks’, IEEE Trans. on Industrial Informatics 12(1), 79–90.