6

Software Defined Networking and Virtualization for Smart Grid

Hakki C. Cankaya

Fujitsu Network Communications, Richardson, TX

6.1 Introduction

The smart grids that connect smart homes and smart buildings have been built to collectively shape smart cities and will facilitate many new applications and services. The new and/or improved services and applications over smart‐grid infrastructure will include, but not be limited to, Internet access, video surveillance, power distribution automation, security, and many more that we have not even thought about yet. All these new services and applications will shape smart cities and improve lives. They require collecting ambient intelligence, situation awareness, and frequent and reliable communication between devices and distributed control centers (Rehmani, 2015). Machine‐to‐machine communication and IoT (Internet of Things) will be utilized regularly, and the large volumes of data (big data) that are collected will have to be stored in the cloud and analyzed to make actionable decisions. Data centers and public and/or private clouds are already becoming part of the IT infrastructure of the utilities and energy sector. Security and privacy of the data and the vulnerability of this complex and heterogeneous communication system have been the main concerns for some time now, and there have been discussions on how to address them. Repeated attacks on the networks of such systems are inevitable; however, correlating these individual attacks and understanding their patterns are valuable means of intelligently preventing planned attacks with known prediction algorithms.

For security reasons and because of specific application requirements (delay, jitter, QoS, etc.), utilities and power grids do not rely on the public Internet for their communication needs; they mostly have their own telecommunications networks and/or receive dedicated private services from area service providers. Wireless access and point‐to‐point services are popular choices. One of the challenges for power grids is to constantly maintain and improve their dedicated telecommunications networks, which span multiple administrative domains and technologies (Erol‐Kantarci, 2015). Domains include the core network (WAN), the access networks (AN), the field area networks (FAN), and/or the neighborhood area networks (NAN) over multiple technologies. A FAN is mostly a broadband wireless network that provides connectivity to ICT‐enabled new‐generation utility field devices in a coverage area. A NAN provides last‐mile outdoor access to smart meters from the WAN. These networks are owned by utilities and/or distribution system operators or leased from network operators. A combination of owned and leased networks is also possible, which introduces additional administrative and management complexities. A variety of wireline and wireless technologies are used in these networks.

In this chapter, we describe the current status of the power grid and the motivation for modernization. We review software‐defined networking and virtualization for both networking and functions. We then look at the challenges of the smart grid and how SDN/NFV will benefit the new applications. Last, we share some use cases and existing demo proposals of SDN/NFV for the smart grid from other groups.

6.2 Current Status of Power Grid and Smart Grid Modernization

Power and energy have been the main enablers of the growth and evolution of human societies and civilization. We use energy for everything from basic human needs such as lighting and heating to modern needs that include powering data centers for cloud applications and connecting people via internetworks. The power grid is basically an infrastructure that moves power from generation sites (power plants) to end consumers including homes, enterprises, production facilities, etc. In general, the power grid is made up of subsystems including (1) power generation, (2) transmission, (3) distribution, and (4) consumption (see Figure 6.1).

Figure 6.1 Traditional power grid.

Electricity is first generated at power stations from different resources such as hydro, coal, fuel oil, wind, solar, or nuclear. It is then transmitted over high‐voltage transmission lines from generation sites to residential and industrial areas, where substations terminate the high‐voltage feed and transform it to low‐voltage power. The distribution power network distributes this low‐voltage power to the consumers in the designated area that is served by the substation. Residential and commercial users then use the electricity to power their electrical devices. Users expect this power service to be reliable and available.

The operation of this rather large and complex system requires a large number of sensors, measurement devices, and controllers. All these devices need to communicate with each other through a series of communication networks and deliver information to a control center, where decisions to accommodate recent changes in the power grid are made and fed back to the devices in the grid.

6.2.1 Smart Grid

The introduction of the smart grid increases the amount of information exchange and the functionalities. The smart grid has the following information‐generating and ‐consuming functions.

- Consumers can now be producers of power. Therefore, power production information should be collected from consumers as well. This makes consumers a new source of information.

- Accurate load balancing of the production requires dynamic load information exchange from all power generation stations.

- Smart sensors collecting information from power transmission lines, transformers, distribution substations, etc.

- Advanced smart meters collecting power usage information.

- Information collected from electric vehicles (EV) and high‐capacity energy storage devices, such as large batteries in EVs.

With the integration of the IoT into the smart grid, virtually all assets from anywhere can connect, and efficiencies and productivity can increase. This ubiquitous connectivity will transform our businesses; new services that improve our lives will be possible. IoT will offer new value to utilities, enabling them to make better business decisions, use their resources more efficiently, and be the best service provider possible to their customers.

Multiple types of data with different requirements are collected and moved in the smart grid: (1) time‐critical data and (2) volume data. Time‐critical data has a stringent delay budget and is needed for decisions such as protection switching. Volume data does not have such strict delay requirements but needs high throughput; meter data is one example.

The geographical footprint of the smart grid communication network is large and therefore employs heterogeneous networks in different sections of the entire coverage. Optical fiber networks are commonly used for connecting generation sites to substations and deployed along with transmission lines and are called core or wide area networks (WAN). In field area networks (FAN) and neighborhood area networks (NAN), the bandwidth is not high; however, flexibility and access are important. Power line communication and/or wireless access technologies are frequently used in distribution areas.

In the traditional power grid, the devices and protocols used in substations in the distribution areas were proprietary and closed systems. To increase the interoperability of the many new devices and functionalities that are coming into the picture with the smart grid, standardization of substation automation became necessary. IEC Technical Committee 57 (TC 57) released a family of standards called IEC 61850 (IEC), in which inter‐ and intra‐substation devices, communication, and functions have been reviewed and standardized.

In the process of modernizing the traditional power grid toward the smart grid, there is an increasing need for communication between machines as well as between machines and humans. This need gives information and communication technologies (ICT) a vital role in making this evolution and modernization happen smoothly.

In the smart grid, there are technology and business challenges. The integration of renewable distributed energy resources (DER) will require frequent and close monitoring of the entire system.

Some of the technology challenges of the smart grid include the following integration of systems and functionalities:

- demand side management (DSM);

- automated meter reading (AMR);

- customer energy management systems (CEMS) to manage new prosumers (consumer and producer) into the smart grid architecture;

- hybrid and plug‐in electric vehicles with large batteries that require coordination for storage from the grid;

- intelligent electronic devices (IED) that are used for collecting, monitoring, and processing data;

- self‐healing capability against failures and disasters (earthquakes, hurricanes, tornados, etc.);

- quick isolation of failures in microgrid to prevent large blackouts;

- providing predictive information to utilities as well as subscribers with recommendations about efficiencies in usage as well as production;

- interoperability among multiple heterogeneous networks and domains; the smart grid should be able to work across multiple networks and platforms with no inconvenience to users and other systems; and

- real‐time analytic information processing systems to determine the current status and predictions for what is coming next.

Business challenges of smart grid include:

- increasing energy trade, not only for traditional power plants, but also for individual consumers/producers (prosumers); and

- developing business cases for new services and applications (predictive and/or historical analysis of usage and production for example).

The potential solutions for these challenges lead to a high volume of data collection, transfer, and processing, which increases the complexity and the requirements of the ICT infrastructure. In addition to this complexity, the ICT infrastructure still requires even higher performance, availability, reliability (Abhishek, 2016), dependability, stability, and security. To combat this complexity, researchers and industry practitioners have made proposals that include new networking concepts such as virtualization and the softwarization of functionalities. For a successful implementation of the smart grid system, one needs to seamlessly integrate these new concepts into the existing grid system with ICT.

Moving to the smart grid requires a carrier‐grade communication network, which enables scalability, self‐healing and self‐organizing (Nakata, 2016), quality of service, management and monitoring, and security (confidentiality, integrity, and availability). The smart grid is a large‐scale, heterogeneous, and distributed system. In the smart grid, power distribution networks have a large number of distributed energy resources (DER), which are enabled by the new energy market where any consumer can become a producer of power. The information and communication infrastructure should be enhanced to match these technological challenges of the smart grid.

In the smart grid, large data collection is required to make actionable smart decisions for the dynamic control of the intelligent devices. The new generation of devices such as phasor measurement units (PMUs) measure power quality with high accuracy. The measurements are frequent, resulting in up to hundreds of measurements per second, collecting large amounts of data.
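To give a feel for the data volumes involved, the following back‐of‐the‐envelope sketch estimates the aggregate PMU traffic. The number of PMUs, the reporting rate, and the message size are illustrative assumptions, not figures from this chapter; the function name `pmu_data_rate` is likewise hypothetical.

```python
def pmu_data_rate(num_pmus, samples_per_second, bytes_per_sample):
    """Estimate aggregate PMU traffic from assumed (illustrative) parameters."""
    bytes_per_second = num_pmus * samples_per_second * bytes_per_sample
    return {
        "mbit_per_second": bytes_per_second * 8 / 1e6,      # sustained link load
        "gbyte_per_day": bytes_per_second * 86_400 / 1e9,   # storage needed per day
    }


# Assumed deployment: 100 PMUs, 120 measurements/s each, 100 bytes per measurement.
estimate = pmu_data_rate(num_pmus=100, samples_per_second=120, bytes_per_sample=100)
print(estimate)
```

Even with these modest assumptions, the estimate reaches roughly ten megabits per second of sustained traffic and on the order of a hundred gigabytes of stored data per day.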

6.3 Network Softwarization in Smart Grids

6.3.1 Software Defined Networking (SDN) as Next‐Generation Software‐Centric Approach to Telecommunications Networks

The traditional communication networks are already quite complex and costly in operations and management. They are based on purpose‐built hardware for each network function and use technology‐specific interfaces. These issues and difficulties have motivated many researchers and industry toward new concepts and architectures. A relatively new paradigm change in communications networking seems to be taking the lead. Many startup companies, incumbent communication and networking equipment vendors, and medium and large network operators and service providers have invested both time and money in these new ways of designing and managing communications networks, called software‐defined networking (SDN) and network function virtualization (NFV) as their next‐generation software‐centric approach to networking.

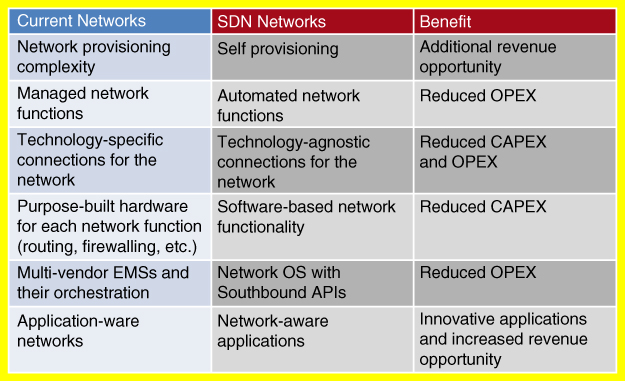

When SDN first emerged, different organizations offered multiple definitions of it. Recently, these definitions have been converging on some common points. Among all the organizations, the Open Networking Foundation (ONF) has been one of the main organizations behind SDN. It organizes the education and implementation of this new paradigm and has working groups that make recommendations on different aspects of it. SDN is a programmable approach to designing, building, and managing reliable networks (Abhishek, 2016). It decouples network control from forwarding in network devices and offloads the control functions to logically centralized SDN controller software, as seen in Figure 6.2.

Figure 6.2 SDN framework.
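The decoupling of control from forwarding can be illustrated with a minimal sketch. The `Switch` and `Controller` classes below are hypothetical teaching constructs, not part of any SDN controller product or of the OpenFlow protocol: the switch only looks up rules, and every rule is decided and installed by the controller.

```python
class Switch:
    """Forwarding plane only: looks up a rule, never computes one."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination -> output port

    def forward(self, destination):
        # Unknown destinations return None; a real switch would
        # typically hand such packets to the controller.
        return self.flow_table.get(destination)


class Controller:
    """Logically centralized control plane that programs all switches."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def install_rule(self, switch_name, destination, out_port):
        self.switches[switch_name].flow_table[destination] = out_port


controller = Controller()
s1 = Switch("s1")
controller.register(s1)
controller.install_rule("s1", "substation-7", out_port=2)
print(s1.forward("substation-7"))
```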

Moving the control intelligence into logically centralized SDN controller(s) captures the global view of the entire network and provides vendor‐independent control to the day‐to‐day operators of the network. An immediate benefit is simplification, which leads to cost reduction for network devices. Operating SDN‐capable networks will be less complex. Network administrators can programmatically configure the entire network at the SDN control layer, instead of having to manually enter all configurations into devices scattered around the network. The SDN controller will provide common network services such as routing, access control, dynamic bandwidth management, QoS, storage optimization, and policy management to different application‐specific functions through open northbound APIs. SDN's unified control plane allows network abstraction and lets these applications be implemented easily and efficiently with the required customization and optimization. The expectation is that these abstractions and simplifications will create a fruitful environment for innovation: turning up a new service would be an easy task once the service itself has been invented. The same unified control plane facilitates the creation of virtualized networks and the slicing of the physical network, or any other hardware resource, for specific functions and their virtualization, called virtualized functions. In this context, combined with IT virtualization in data centers, network function virtualization (NFV) has been proposed and supported by major network operators and service providers as a platform to host and operate network services as virtual network functions (VNFs). There have been studies suggesting that NFV results in considerable cost efficiencies in both Capex and Opex (Yu, 2015; Cerroni, 2014).

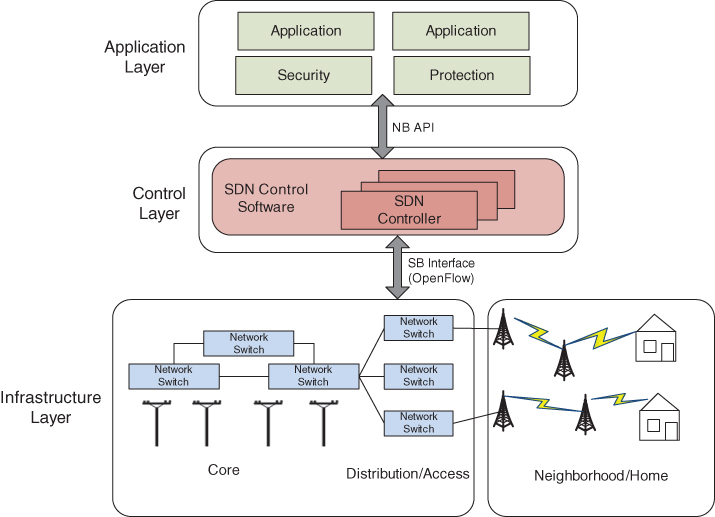

Flexibility is another benefit that SDN with NFV offers; mix‐and‐match solutions from different vendors can be accomplished easily with SDN. A non‐exhaustive summary of how SDN can benefit current networks is tabulated in Figure 6.3 (Fujitsu, 2015).

Figure 6.3 Generic SDN benefits.

6.3.2 Adaptation of SDN for Smart Grid and City

There has been very focused research on alternative next‐generation networking infrastructures and architectures such as SDN/NFV producing encouraging results; however, these are unlikely to be deployed by utility companies and distribution system operators without an implementation and demonstration of a successful large‐scale prototype under real‐world conditions to build smart cities.

Another reason for the slow pace of adopting these existing new communications concepts and prototypes into the real production network of any utility or energy company is the regulations and hefty fees enforced by NERC (North American Electric Reliability Corporation) for each day that the utility is in violation of standards. This is an understandable risk to be avoided because utilities networks are part of the critical infrastructure of smart cities.

The pathway to the adoption of these new concepts and architectures for smart grid and smart cities should involve the following steps:

- idea generation on how SDN/NFV can be used to address the problems of utilities networks and smart city applications in general;

- proof of concept in a lab environment;

- testing with simulators/emulators;

- testing under real conditions in a sliced isolated real network in field trials; and

- approval of results and migration to production network in incremental phases.

6.3.3 Opportunities for SDN in Smart Grid

Current challenges and push for modernization require open standards and non‐proprietary solutions to mitigate the risk of rapid technology shifts and to reduce high OPEX cost. Following this trend, SDN and NFV not only provide solutions to some of the challenges of the utilities and energy sector for their networks but could also be a solid option for future‐proof modernization including the smart grid.

SDN, as defined, provides a global end‐to‐end view of the network; therefore, with this new approach, the complexity of multiple domains and networks can be dealt with. SDN adopts mostly open standards and introduces technology abstraction, which provides a vendor‐agnostic approach to configuring and maintaining various types of network elements. Hardware virtualization is one of the goals of SDN and is used for partitioning a physical network into virtual slices, which makes it easier to manage different networks while using resources efficiently. It will help connect multiple data centers in different physical locations and make their use efficient. The programmatic network control of SDN serves service abstraction and will simplify all configuration activities. Due to its holistic view of the network, an SDN network will be able to have better control over delay and jitter. This will help with teleprotection and control traffic, including SCADA. The bandwidth‐on‐demand feature of SDN will address the elastic bandwidth needs of new and emerging apps in the smart grid. This will also create an opportunity to increase revenue through accelerated service velocity in cases where the utility also serves as a service provider in the coverage area. It has been observed that more and more utility companies serve as Internet service providers in rural areas.

In this new networking infrastructure (see Figure 6.4), the utility‐specific apps on the applications layer will have the opportunity to blossom. These apps could facilitate power/energy peak‐shaving, analytics of system and customer data, M2M communication, fast restoration from a failure, better management of heterogeneous network devices, rapid diagnostics on a large inventory of network devices, distributed automation enablement, AMI network monitoring, and many more one could think of.

Figure 6.4 SDN‐based smart grid communication infrastructure.

SDN for resiliency and protection in smart grids: The smart grid maintains multiple large‐scale heterogeneous communication networks to deliver services. One challenge is automatic fast recovery when failures occur due to manmade or natural causes. There have been studies that demonstrate self‐healing from link failures in inter‐substation communication by using the SDN architecture (Aydeger, 2016; Sydney, 2014; Ren, 2016; Ghosh, 2016). All these failure recoveries are possible because SDN has an overall topology view of the entire network. To support multi‐network fast recovery, there are proposed algorithms that are integrated into the SDN control layer (Dorsch, 2014). The algorithm proactively configures alternate routes for all possible failure scenarios and coordinates with the SDN controller's monitoring function to be notified of a port status change, which happens at a failure. Then, automatic switching takes place, and traffic starts flowing on a re‐routed path. Some studies report improved recovery times compared to MPLS‐based techniques (Aydeger, 2016). In addition to link failures, self‐recovery from node failures has also been reported by using the SDN architecture (Zhang, 2016).

Simple management: The simplicity of the management is a direct product of the separation of the control layer from the device infrastructure layer of the SDN architecture (see SDN architecture in Figure 6.2). An SDN controller at the control layer collects all topology information from devices and makes the collective management decision for the entire network. An example implementation of this management simplicity for the smart grid has been reported by Kim (2015) for an AMI application. AMI meters need forwarding information for the metered data they receive. In an SDN‐based architecture, the SDN controller pushes the forwarding policy to all AMI devices at the data/infrastructure level, so that AMI meters do not carry the burden of making their own forwarding decisions; this reduces the computational burden on AMI devices as well as the topological updates AMI devices need to receive to make those decisions.
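The proactive recovery idea described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm of the cited studies: it handles single link failures only, uses hop count as the path metric, and the function names (`shortest_path`, `precompute_backups`, `on_port_down`) and the four‐node topology are hypothetical.

```python
from collections import deque


def shortest_path(graph, src, dst, failed_link=None):
    """Breadth-first search that ignores one failed (undirected) link."""
    banned = frozenset(failed_link) if failed_link else None
    queue, parent = deque([src]), {src: None}
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt in parent or frozenset((node, nxt)) == banned:
                continue
            parent[nxt] = node
            queue.append(nxt)
    return None  # destination unreachable


def precompute_backups(graph, src, dst):
    """Compute the primary path and one backup path per primary link."""
    primary = shortest_path(graph, src, dst)
    backups = {}
    for a, b in zip(primary, primary[1:]):
        backups[frozenset((a, b))] = shortest_path(graph, src, dst, failed_link=(a, b))
    return primary, backups


def on_port_down(backups, failed_link):
    """Handler for a port status change: return the preconfigured backup path."""
    return backups.get(frozenset(failed_link))


# Hypothetical inter-substation topology with two disjoint routes from A to D.
graph = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}
primary, backups = precompute_backups(graph, "A", "D")
print(primary, on_port_down(backups, ("B", "D")))
```

Because the backup paths are computed before any failure occurs, the handler only performs a lookup when the port status change arrives, which is the property that makes fast switchover possible.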

Interoperability and programmatic interfaces: The SDN architecture offers standardized interfaces that can be used seamlessly with different communications devices at the infrastructure layer. The northbound interfaces are used for the communication between the SDN controller and the applications running for different network services. Due to the variety of such applications, there is no single northbound interface. In fact, multiple interfaces are expected in addition to NETCONF and REST. The southbound interface is used for the communication between the SDN controller and the network switches and routers. It lets the SDN controller dynamically control switches and routers according to the network traffic demand. OpenFlow is the first and most widely used southbound interface in the field. Regardless of vendor and model, these devices could be performing different functions as physical devices or be implemented in software as virtual devices. All of the information provided by such devices can be transparently interpreted by the control layer and used by the service provider and/or network operator in an interoperable way; the operator does not need to maintain a special control stack at the control level. This would give smart grid operators great flexibility for all the different kinds of devices they need in their communications infrastructure. Because it is designed around software, the SDN architecture also makes it easy to adopt changes in the middleware control layer. Considering the rapidly changing protocols and new interfaces the smart grid needs in its growth cycle, this feature of SDN could be a good asset.

Network awareness: With SDN, all devices in the infrastructure layer may provide constant feedback to the controller in the control layer. The operator can define the network events based on the collective information, such as low performance, congestion, large delays, etc. These events could dynamically trigger functions such as load balancing, re‐routing, and new arrangements of QoS parameters. The direct application of this in the smart grid could be the readjustments of AMI and IED devices in terms of reading frequencies and reconfigurations. These would not require any human interventions and eliminate any fat‐finger problems.
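Operator‐defined network events of this kind can be sketched as simple threshold rules evaluated against device reports. The metric names, thresholds, and action names below are illustrative assumptions; a real controller would expose its own monitoring and policy interfaces.

```python
RULES = [
    # (metric name, threshold, action) -- all values are illustrative assumptions
    ("link_utilization", 0.9, "rebalance_load"),
    ("delay_ms", 50.0, "reroute"),
    ("packet_loss", 0.01, "adjust_qos"),
]


def triggered_actions(report, rules=RULES):
    """Return the actions whose metric exceeds its threshold in a device report."""
    return [action for metric, limit, action in rules
            if report.get(metric, 0.0) > limit]


# A congested link with acceptable delay triggers load balancing only.
print(triggered_actions({"link_utilization": 0.95, "delay_ms": 12.0}))
```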

Hardware as commodity: Currently, there are different types of equipment from multiple vendors in the traditional network architecture requiring frequent installs for updates and bug fixes. With the introduction of the new SDN architecture, hardware becomes a commodity, and types and vendors will not make a difference at the control layer. All changes and updates will be done at the control layer and pushed to all hardware at once by using the standardized interfaces.

Load balancing and quality of service: SDN offers load balancing based on flow information that is shared between the controller and application profiles. The traditional packet‐based load balancing cannot relate the load balancing functionality to the applications through service profiles. This capability makes the new SDN‐based network more application aware and able to prioritize flows for more efficient resource use. This feature of the SDN architecture could be quite useful to secure the minimum bandwidth and resource levels for high‐priority information flows such as power‐quality event warning messages so that these types of emergency event handlings can be prioritized. There are some specific studies that report such benefits of flow‐based priority differentiation in smart grid applications (Sydney et al., 2014). Flow information that is used for load balancing in the SDN architecture can be easily leveraged for QoS and DiffServ applications. Once flow is recognized and prioritized by the SDN controller, depending on the arrival rate and delay requirements of the flow, appropriate queues can be modelled to accommodate any QoS mechanism in a dynamic manner. This flexibility and control in terms of QoS are quite useful in smart grid applications. There are proposals on heuristic methods for smart grid QoS applications where smart grid–specific AMI flows can be treated differently based on the application service profiles (Qin et al., 2014).
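Flow‐based prioritization can be illustrated with a small strict‐priority scheduler. This is a sketch under simplifying assumptions, not the method of the cited studies: the flow types and priority values are hypothetical, and strict priority alone does not reserve a minimum bandwidth for any class.

```python
import heapq
from itertools import count


class FlowScheduler:
    """Serve packets in order of the priority assigned to their flow type."""

    def __init__(self, priorities):
        self.priorities = priorities  # flow type -> priority (lower is served first)
        self._heap = []
        self._order = count()         # tie-breaker keeps FIFO order within a class

    def enqueue(self, flow_type, packet):
        # Unknown flow types are placed behind every known class.
        lowest = max(self.priorities.values()) + 1
        priority = self.priorities.get(flow_type, lowest)
        heapq.heappush(self._heap, (priority, next(self._order), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2] if self._heap else None


scheduler = FlowScheduler(
    {"power_quality_alarm": 0, "scada_control": 1, "meter_reading": 2}
)
scheduler.enqueue("meter_reading", "reading-1")
scheduler.enqueue("power_quality_alarm", "alarm-1")
scheduler.enqueue("meter_reading", "reading-2")
served = [scheduler.dequeue() for _ in range(3)]
print(served)
```

In this sketch the alarm is served before the meter readings that arrived earlier. Guaranteeing a minimum bandwidth per class would require a weighted or rate‐limited scheduler instead of strict priority.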

Security: A lot of attacks target critical infrastructures including utilities communications networks. More concerning is the fact that these types of activities are increasing, which justifies the ongoing awareness in and around the security of critical infrastructure networks and assets. These networks require large security coverage due to their footprint with multiple domains and numerous end points (Cankaya, 2015). Each end point counts for a potential point of attack and increases the risk and vulnerability of the system. There are many guidelines, best practices, and standards for security of utilities and energy sector administered by multiple organizations.

For a long time, we have been using the same technologies and practices for security, which have been strictly defense oriented. The “block all” vision that we had has been forcing us to drop a firewall appliance wherever the function is needed in the network. SDN has triggered a change for moving away from this trend. It can enable security developers to implement more innovative solutions for perceived threats as it provides better visibility of the entire network for both control and data. With logically centralized control, the confusion about where to place a security appliance will come to an end. SDN's security‐enabled infrastructure allows network security administrators to route all traffic to a logically central firewall that can virtually be present wherever and whenever needed in the network (Ghosh et al., 2016). In addition to conventional brute‐force techniques, the change will feed innovation for intelligent security applications such as smarter quarantine systems, faster emergency broadcasts, advanced Honeynets, and context‐aware detection algorithms.

In general, SDN can provide security in software, without specialized hardware. There are proposals to use role‐based access control systems in the SDN architecture (Pfeiffenberger and Du, 2014), where an SDN controller uses digital signatures with shared keys for all control packets. Kloti et al. (2013) provide a good analysis of OpenFlow‐based security for an SDN‐architected network and make recommendations on security issues within OpenFlow deployments in SDN architectures.
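One common way to authenticate control messages with a shared key is a keyed hash (HMAC). The sketch below uses Python's standard `hmac` module to show the idea; it is an assumption‐laden illustration and not the scheme of the cited proposal. Note that an HMAC is a message authentication code rather than a public‐key digital signature, and the key and message format shown are hypothetical.

```python
import hashlib
import hmac


def sign(shared_key: bytes, message: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a control message."""
    return hmac.new(shared_key, message, hashlib.sha256).hexdigest()


def verify(shared_key: bytes, message: bytes, tag: str) -> bool:
    """Check the tag in constant time to avoid timing side channels."""
    return hmac.compare_digest(sign(shared_key, message), tag)


key = b"demo-key-not-for-production"                   # hypothetical shared key
control_message = b"install_rule s1 substation-7 port=2"  # hypothetical format
tag = sign(key, control_message)

print(verify(key, control_message, tag))             # genuine message
print(verify(key, b"install_rule s1 attacker", tag))  # tampered message
```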

Flexibility in network routing: A centralized SDN controller can continuously keep track of all network devices and maintain the topology of the network. Using this topology, it can also construct a routing information base from which it can compute any route within the network, instead of letting devices run their own copies of distributed routing algorithms to build the routes. In this case, if a device needs a route, it receives forwarding information from the SDN controller that tells it where to send the packet. Dorsch et al. (2014) proposed a purpose‐built SDN architecture for smart grids, called SDN4SmartGrid. In this new SDN architecture, the topology and the routes between the smart grid devices are constructed by the centralized SDN controller, and the forwarding information is disseminated to all required devices for next‐hop decisions. The article also claims superior performance of SDN4SmartGrid routing over traditional open shortest path first (OSPF) routing.
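Centralized route computation can be sketched with Dijkstra's algorithm run by the controller over its topology view, producing a next‐hop table for each device. This is a generic illustration, not the SDN4SmartGrid method; the topology, link costs, and the function name `next_hop_table` are assumptions.

```python
import heapq


def next_hop_table(topology, source):
    """Dijkstra from `source`; returns {destination: first hop on the cheapest path}."""
    dist = {source: 0}
    first_hop = {}
    heap = [(0, source, None)]
    while heap:
        cost, node, hop = heapq.heappop(heap)
        if cost > dist.get(node, float("inf")):
            continue  # stale entry: a cheaper path to this node was already found
        if hop is not None:
            first_hop.setdefault(node, hop)
        for neighbor, weight in topology[node].items():
            new_cost = cost + weight
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost
                # Neighbors of the source are their own first hop.
                heapq.heappush(heap, (new_cost, neighbor, neighbor if hop is None else hop))
    return first_hop


# Hypothetical topology with symmetric link costs.
topology = {
    "ctrl-center": {"sub-A": 1, "sub-B": 4},
    "sub-A": {"ctrl-center": 1, "sub-B": 1, "meter-hub": 5},
    "sub-B": {"ctrl-center": 4, "sub-A": 1, "meter-hub": 1},
    "meter-hub": {"sub-A": 5, "sub-B": 1},
}

# The controller computes one table per device and would then push it down.
tables = {node: next_hop_table(topology, node) for node in topology}
print(tables["ctrl-center"])
```

The devices never run a routing protocol themselves in this model; they only receive the table computed for them.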

Ease of deploying new services and applications: The SDN architecture makes the new service and application deployment relatively easy. Service providers and operators have the direct control of the SDN controller. The business case for a new service and/or application starts with simply a policy development for the new service. After the policy has been developed, the policy needs to be provisioned directly to the SDN controller without any hardware dependency in terms of installation and configuration on the infrastructure. The controller layer then monitors and manages the service continuously and decides to make changes only on the control layer to be pushed to the devices.

6.4 Virtualization for Networks and Functions

6.4.1 Network Virtualization

Network virtualization (NV) is a technique to create an independent, isolated instance of a network service, called a virtual network (VN), over a shared physical network infrastructure with customizable performance levels. A VN is composed of virtual nodes and links that form its virtual topology. A virtual node is mapped to a physical node; however, a virtual link can be mapped to a section of the physical network that may include multiple physical links and nodes. The owner of the VN can choose to use any protocol, mechanism, and/or control and management planes in this virtual network. The benefits of VNs are many. First, multiple VNs can share the same physical network infrastructure or multiple heterogeneous physical network infrastructures without functionally affecting each other. VNs are also isolated from each other, meaning that a failure, misconfiguration, or security threat in one VN should not propagate to the others. Another motivation for VNs is to ameliorate heterogeneity, which complicates management and service delivery. One form is the heterogeneity of the underlying network technologies. WDM, Ethernet, and/or wireless technologies may be used together at the physical infrastructure layer. A VN is, by definition, transparent to these multiple technologies; only the topological connectivity is important to the administrator of the specific VN. Similarly, multiple VNs should be able to run different protocol stacks and deliver different services.

In the smart grid, the amount of information being exchanged is quite large compared to the traditional grid. With the open platform that the smart grid provides, the sources of information have also been increasing. Power generation equipment, including equipment at subscriber sites, reports its status and load conditions. All smart sensors monitoring transmission lines, substations, and transformers send information to control centers. Advanced meters are read frequently. Large‐capacity power storage at subscriber generation sites, including electric vehicle batteries, needs to communicate its status to the controllers. Not only the number and size of these information sources matter, but also where they communicate to. There are also different applications, mainly control and operations applications and data collection applications, and their requirements differ. Control and operations applications are small in data volume but require real‐time communication with limits on delay and availability. Data collection may arrive in large bursts and does not have strict requirements on delay, but it may benefit from high throughput.

Information exchange occurs on multiple physical network technologies, i.e., heterogeneous networks. Optical networking technology is used in the transmission part of the grid to connect substations and head‐end power stations in a wide area. Power line communication (PLC) and wireless mesh (WM) are used in the distribution part of the grid due to the favorable cost point and flexibility of these technologies.

Measured against the requirements of these applications, optical transmission offers reliability, low latency, and high data volume capabilities; however, it does not cover the distribution part of the grid in neighborhood areas and home networks because of high cost and ROI issues. On the other hand, PLC and WM are ubiquitous in home and neighborhood areas but do not provide the required robustness and reliability by themselves.

Because the smart grid delivers very diverse applications with different sets of requirements over heterogeneous networks and technologies, one can argue that NV is quite applicable to smart grid communication, with considerable benefits. For example, a specific application can run on a tailored VN without affecting any other application running on a different VN. The main challenge for NV in the smart grid, as in any other implementation of NV, is the mapping of virtual networks onto the physical network. There have been several proposals for the mapping problem (Cai et al., 2010; Zhu et al., 2006; Chowdhury et al., 2009; Lischka and Karl, 2009).
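
To make the mapping problem concrete, the following minimal sketch pins each virtual node to a physical node and maps each virtual link onto a hop‐shortest physical path found by breadth‐first search. The topology, the node names, and the `map_virtual_links` helper are hypothetical, and the sketch is not one of the cited algorithms, which also handle capacity constraints and node placement.

```python
from collections import deque


def shortest_path(adjacency, src, dst):
    """Breadth-first search; returns the hop-shortest path as a node list, or None."""
    parents = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in adjacency.get(node, ()):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


def map_virtual_links(adjacency, node_mapping, virtual_links):
    """Map each virtual link (u, v) to a physical path between the hosts of u and v."""
    link_mapping = {}
    for u, v in virtual_links:
        path = shortest_path(adjacency, node_mapping[u], node_mapping[v])
        if path is None:
            raise ValueError(f"no physical path for virtual link {(u, v)}")
        link_mapping[(u, v)] = path
    return link_mapping


# Hypothetical physical topology (undirected, stored as adjacency lists).
physical = {
    "sub1": ["r1"],
    "r1": ["sub1", "r2", "r3"],
    "r2": ["r1", "cc"],
    "r3": ["r1", "cc"],
    "cc": ["r2", "r3"],
}
# Assumed node mapping: one virtual node per physical host.
node_mapping = {"v_substation": "sub1", "v_control": "cc"}
mapping = map_virtual_links(physical, node_mapping, [("v_substation", "v_control")])
```

Here a single virtual link between the two virtual nodes is carried by three physical links and two intermediate physical nodes.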

In the smart grid, there are even multiple physical networks, i.e., PLC, WM, and optical, to consider in this mapping exercise. Houidi et al. (2011) report on mapping to multiple physical networks. Specifically for the smart grid, Pin et al. (2014) implement NV for smart grid applications by considering WM and PLC as the two underlying physical networks and report benefits. The study provisions two VNs: one for real‐time applications, where latency and reliability are the priority, and one for a data collection application, where throughput is more important. This multi‐objective optimization is an NP‐hard problem, and the authors propose a suboptimal heuristic solution to map these application‐specific VNs onto the PLC and WM physical network infrastructures. They report a considerable improvement in reliability from mapping logical links onto diverse physical links from both the PLC and WM infrastructures. A similar diversification has been employed to increase throughput for data collection application VNs.
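
The reliability gain from diversity can be illustrated with a short calculation. The sketch below assumes that link failures are independent, and the availability figures are hypothetical rather than values from the cited study; it is not the authors' heuristic.

```python
def path_availability(link_availabilities):
    """Availability of a series path: every link on the path must be up."""
    result = 1.0
    for availability in link_availabilities:
        result *= availability
    return result


def diverse_availability(path_availabilities):
    """Availability of a logical link backed by parallel paths: at least one path is up."""
    all_down = 1.0
    for availability in path_availabilities:
        all_down *= 1.0 - availability
    return 1.0 - all_down


plc_path = path_availability([0.95, 0.95])  # hypothetical two-hop PLC path
wm_path = path_availability([0.90])         # hypothetical single-hop WM path
combined = diverse_availability([plc_path, wm_path])
```

Under these assumptions, the logical link backed by both paths is more available than either path alone, because it fails only when both fail at the same time.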

6.4.2 Network Function Virtualization

Traditionally, network functions have been delivered by hardware products and devices; for example, various vendors make routers, firewalls, switches, load balancers, etc., each for a specific network function and each to be installed at a certain physical location in the network. This traditional way of accomplishing network functions has been challenged by a new concept called network function virtualization (NFV), supported by the SDN framework. NFV is more than just a concept: the number of successful implementations in data center networks, wireless backhaul networks, and content delivery networks has been on the rise, along with obvious benefits (Network Function Virtualisation, 2013; Chiosi et al., 2012).

The NFV framework has an extended coverage that includes NV and softwarization of functions (implementation of functions in software) to deliver network services. This liberates service offerings from the strict dependency on purpose‐built hardware devices found in traditional networking. The trend is to move the special functionalities into software and run that software on commodity compute, storage, and network hardware resources. The benefit is flexibility and agility in offering new and more complex services by using chains of virtualized network functions (VNFs). In this context, the coordination and life cycle management of VNFs are done by the VNF orchestrator/manager. Its responsibilities include creating an instance of a VNF, monitoring its performance, and terminating it.
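
The life cycle steps and the idea of a function chain can be sketched in a few lines. The `Orchestrator` class, the `firewall` and `tagger` functions, and the port and VLAN values below are hypothetical illustrations and do not correspond to any particular NFV platform or its API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Packet = dict


@dataclass
class VNFInstance:
    name: str
    function: Callable[[Packet], Optional[Packet]]
    processed: int = 0  # simple performance counter


class Orchestrator:
    """Toy VNF manager: instantiate, monitor, and terminate software functions."""

    def __init__(self):
        self.instances: Dict[str, VNFInstance] = {}

    def instantiate(self, name, function):
        self.instances[name] = VNFInstance(name, function)

    def terminate(self, name):
        del self.instances[name]

    def monitor(self):
        return {name: vnf.processed for name, vnf in self.instances.items()}

    def run_chain(self, chain: List[str], packet: Packet) -> Optional[Packet]:
        """Pass a packet through the named VNFs in order; None means dropped."""
        for name in chain:
            vnf = self.instances[name]
            vnf.processed += 1
            packet = vnf.function(packet)
            if packet is None:
                return None
        return packet


def firewall(packet):
    # Assumed policy for illustration: only traffic to port 102 is allowed.
    return packet if packet.get("port") == 102 else None


def tagger(packet):
    # Adds an illustrative VLAN tag without modifying the input packet.
    return {**packet, "vlan": 10}


orch = Orchestrator()
orch.instantiate("fw", firewall)
orch.instantiate("tag", tagger)
allowed = orch.run_chain(["fw", "tag"], {"port": 102})
dropped = orch.run_chain(["fw", "tag"], {"port": 23})
stats = orch.monitor()
orch.terminate("tag")
```

Because each function is ordinary software, a chain can be reordered or extended by changing the list of names rather than by recabling hardware.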

Niedermeier and Meer (2016) use NFV‐based virtualization to implement a virtualized advanced metering infrastructure (AMI) and report increased reliability and availability at reduced cost, all of which were pain points for the smart grid AMI application before virtualization.

6.5 Use Cases of SDN/NFV in the Smart Grid

One of the features of the smart grid is the ability to respond dynamically to varying levels of power demand. Over the 24‐hour daily cycle there are peak hours, during which the demand for power increases for a period of time and then returns to its regular level. There are options to deal with these peaks. One option is to engage extra power generation temporarily, such as turning up generators at multiple locations. The other option is to add intelligence to the control system by introducing power control agents, which dynamically collect measurements and decide which devices should use power and which devices should be delayed in receiving power from the grid. For example, when people come back from work, their electric vehicles do not necessarily need an immediate charge during the peak hours of 6:00–8:00 p.m.; charging could be delayed until sometime after midnight and still be complete by the next morning. Of course, in these cases the subscribers need to opt in to the application, which is a contractual issue. Collecting the measurements from smart devices for this application requires a reliable, real‐time network and a control agent that can oversee the entire infrastructure. This application, therefore, is a good candidate for an SDN‐based smart grid network infrastructure. In particular, an SDN‐based network is able to provide the protection and load balancing for dynamic traffic that this application needs. Sydney et al. (2014) propose an SDN‐based platform for demand response, implement it experimentally, and report benefits from automatic fail‐over, load balancing, and QoS, which are leveraged for the demand response use case of the smart grid.
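
A control agent of this kind can be sketched as a simple greedy scheduler that shifts deferrable loads, such as electric vehicle charging, to the earliest hour with enough headroom. The capacity, the load figures, and the `schedule_deferrable` function are hypothetical; this is an illustration of the idea, not the method of the cited platform.

```python
def schedule_deferrable(base_load, capacity, requests):
    """Place each deferrable request in the earliest hour between its arrival
    and its deadline where adding it keeps the total load within capacity.

    base_load: dict mapping hour -> non-deferrable load in kW
    requests:  list of (name, arrival_hour, deadline_hour, kw); hours run on one
               increasing axis, e.g. 18 is 6 p.m. and 25 is 1 a.m. the next day
    Returns a dict mapping name -> assigned hour, or None if no hour fits.
    """
    load = dict(base_load)
    plan = {}
    for name, arrival, deadline, kw in requests:
        plan[name] = None
        for hour in range(arrival, deadline + 1):
            if load.get(hour, 0) + kw <= capacity:
                load[hour] = load.get(hour, 0) + kw
                plan[name] = hour
                break
    return plan


# Hypothetical feeder: 100 kW capacity, evening peak that decays toward midnight.
base = {18: 96, 19: 98, 20: 97, 21: 95, 22: 90, 23: 80, 24: 60}
requests = [
    ("ev1", 18, 30, 7),
    ("ev2", 18, 30, 7),
    ("ev3", 18, 30, 11),
]
plan = schedule_deferrable(base, 100, requests)
```

In this example none of the vehicles is charged during the 6:00–8:00 p.m. peak, yet all are scheduled well before their morning deadline.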

Another challenge for the smart grid is moving a high volume of data across domains and devices in the smart grid network. The smart grid has a large number of devices, including PMUs in the grid, intelligent electronic devices (IEDs) in substations, and smart meters at homes. These devices generate high‐frequency data flows, at hundreds of reads per second, which creates a scalability problem for the communication network. To address this complexity, middleware solutions have been proposed. In GridDataBus (Kim et al., 2012), producers and consumers of data are grouped based on certain features such as type and location. GridStat (Gjermundrod et al., 2009) manages the complexity by separating the data and management planes: the management plane manages the communication network resources and failures, and the data plane provides efficient data flow through data brokers. The software‐based control of the SDN architecture is inherently a good platform for such middleware‐based solutions. Koldehofe et al. (2012) implement a similar middleware on the SDN architecture by using the OpenFlow protocol. In this proposal the authors implement the middleware in the SDN controller as a control agent (handler), which benefits from the holistic view of the network at the SDN controller. It can therefore provide efficient connections between data producers and consumers by leveraging distributed systems tools such as group communication, while enjoying the other benefits of SDN such as load balancing and fail‐over. It is reasonable to expect growth in these kinds of use cases to reduce the complexity of smart grid communication (Hannon et al., 2016).
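
The grouping of producers and consumers by features such as type and location can be illustrated with a minimal in‐memory broker. The `GridBroker` class and the topic names are hypothetical; the sketch does not reproduce the GridDataBus or GridStat interfaces, nor the OpenFlow‐based implementation cited above.

```python
from collections import defaultdict


class GridBroker:
    """Toy data broker that groups traffic by (data type, location)."""

    def __init__(self):
        # Maps a (data_type, location) group to its list of consumer callbacks.
        self.subscribers = defaultdict(list)

    def subscribe(self, data_type, location, callback):
        self.subscribers[(data_type, location)].append(callback)

    def publish(self, data_type, location, reading):
        """Deliver a reading to every consumer in the group; return the count."""
        delivered = 0
        for callback in self.subscribers.get((data_type, location), []):
            callback(reading)
            delivered += 1
        return delivered


broker = GridBroker()
received = []
broker.subscribe("pmu", "substation-7", received.append)
pmu_deliveries = broker.publish("pmu", "substation-7", {"freq_hz": 59.98})
meter_deliveries = broker.publish("meter", "home-12", {"kwh": 1.2})
```

Readings published to a group with no consumers are simply not forwarded, which is the property that keeps high‐rate sources from flooding uninterested receivers.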

Rapid failure recovery and resiliency represent another use case for SDN in smart grids. Aydeger et al. (2015) report a successful demonstration that integrates an emulated OpenDaylight controller‐based SDN platform with smart grid applications such as Manufacturing Message Specification (MMS) data flows over TCP. The demo setup uses Mininet for simulating the OpenFlow protocol and the ns‐3 network simulator to generate the network topology and traffic. The aim was to increase redundancy by using wireless connections as back‐up for failures in the primary wireline connections. The switch‐over from the wireline to the wireless infrastructure is reported to be fast enough to sustain the connections for smart grid applications.
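
The switch‐over logic can be sketched as a priority‐ordered path selection that a controller re‐evaluates when link state changes. The path names, link names, and the `select_path` function are hypothetical; the sketch does not use the OpenDaylight or OpenFlow interfaces of the cited demonstration.

```python
def select_path(paths, link_up):
    """Return the name of the first path, in priority order, whose links are all up."""
    for name, links in paths:
        if all(link_up.get(link, False) for link in links):
            return name
    return None


# Paths are listed in priority order: wireline first, wireless as back-up.
paths = [
    ("wireline-primary", ["sw1-sw2", "sw2-sw3"]),
    ("wireless-backup", ["sw1-ap1", "ap1-sw3"]),
]
link_up = {"sw1-sw2": True, "sw2-sw3": True, "sw1-ap1": True, "ap1-sw3": True}

before = select_path(paths, link_up)
link_up["sw2-sw3"] = False  # simulated failure on the wireline path
after = select_path(paths, link_up)
```

In a real deployment the time between the failure and the installation of the back‐up forwarding rules determines whether application connections survive.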

MicroGrids (Lasseter, 2010; Alparslan et al., 2017) and Virtual Power Plants (VPP) (Lukovic et al., 2010) have been proposed to alleviate the complexity of the distributed energy resources (DERs) in the smart grid, where the main trend is a move away from centralized generation toward distributed generation. The expectation for this move is to capture efficiencies in the distribution of power and to deliver high reliability that builds trust. However, the trade‐off is the complexity in the management of such distributed systems. In both MicroGrid and VPP the idea is to aggregate the DERs based on any criterion that makes sense for the specific application. There are proposals to aggregate based on the geographical locations of DERs or on the technology used at these locations. However, the control and management of such clusters of DERs require a high volume of information exchange, where topology and data volume may be dynamic, and require high availability of the networks. This use case could therefore benefit from virtual networking, in which changes in demand and topology are accommodated by adapting virtual networks and moving resources. Thus, the SDN infrastructure is an apparent fit for the solution. The parallel between VPPs and virtual networks is a natural subject for future studies in smart grid communication.

Among the enablers of the smart grid are the intelligent electronic devices, which have microprocessors and control software. These devices perform all metering, monitoring, protection, and control functions for the smart grid. Following the same idea that underlies compute virtualization, the software of all these devices could be removed from the individual devices and moved into grid data centers (the grid cloud) on a virtual machine (VM)‐based infrastructure, where it could be controlled and managed in a centralized way.

This would turn all these devices into commodity hardware in substations and across the smart grid; the SDN benefits that have already been realized for data centers and the cloud would then become applicable to the smart grid as well. Along with virtualized compute, SDN‐enabled VNs would create a multi‐tenant environment for the smart grid, where a physical transmission system could serve multiple utilities, each with virtual compute and network resources of its own, called a slice of compute and a slice of network, with the slices isolated so that tenants do not expose one another to security risks. This would create new business cases and revenue streams for smart grid administrators as well as for utilities, which would use the virtual infrastructure as a service to create their own services for end customers (subscribers) in an innovative and agile way.

6.6 Challenges and Issues with SDN/NFV‐Based Smart Grid

Because SDN creates a logically centralized control plane that keeps track of all devices in the infrastructure layer on a one‐to‐one communication basis, it places an overhead on the communication infrastructure of the smart grid, which is not resource‐rich. Therefore, an SDN‐based smart grid could benefit from studies on reducing this overhead.

SDN can use centralized routing instead of distributed OSPF‐like routing algorithms. An SDN controller computes the routes and distributes them, as flow‐based forwarding information, to the devices in the infrastructure layer. This works well except during certain events, such as network initialization or recovery after a failure, when all or many devices need to be updated with new forwarding information at once, which creates a burst of data exchange. For such cases, traditional distributed routing may be kept as an alternative that runs in parallel to the centralized routing. Another solution is to employ multiple SDN controllers, each managing and controlling a segment of the network. The coordination of multiple SDN controllers can use master‐slave synchronization algorithms from the distributed systems field.
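
The effect of splitting the network among several controllers on the update burst can be illustrated with simple arithmetic. The switch names, the update counts, and the `updates_per_controller` function below are hypothetical.

```python
from collections import Counter


def updates_per_controller(switch_updates, assignment):
    """Sum the pending flow-table updates handled by each controller.

    switch_updates: dict mapping switch -> number of pending updates
    assignment:     dict mapping switch -> controller responsible for it
    """
    totals = Counter()
    for switch, count in switch_updates.items():
        totals[assignment[switch]] += count
    return dict(totals)


# Hypothetical burst of pending updates after a failure.
pending = {"s1": 40, "s2": 25, "s3": 35, "s4": 20}

single = updates_per_controller(pending, {switch: "c0" for switch in pending})
split = updates_per_controller(
    pending, {"s1": "c1", "s2": "c1", "s3": "c2", "s4": "c2"}
)
```

With one controller the whole burst lands on a single node, whereas with two controllers each handles only the updates of its own segment, at the price of having to keep the controllers synchronized.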

IEC 61850 provides a set of standards for substation automation and communication specifications for smart grid applications (Molina et al., 2015). IEC 61850 also has recommendations for a configuration language and an information model for data representation. These recommendations should be integrated into the SDN‐based smart grid communication architecture for vendor‐agnostic implementations. Northbound interfaces between smart grid applications and the SDN controller layer also need standardization to preserve the vendor‐agnostic nature of this trend.

Storing and processing the high volume of collected data, and securing it over a large footprint, are also challenges for the smart grid. To address them, there have been proposals that offer consolidated and comprehensive solutions for the smart grid by leveraging the principles of SDN. Jararweh et al. (2015) propose a new architecture for the smart grid that includes software‐defined storage, software‐defined security, and software‐defined IoT along with SDN.

6.7 Conclusion

In conclusion, softwarization and virtualization of networks and functions using SDN and NFV are inevitable developments for any communications network. The smart grid operates on multiple large‐scale heterogeneous communications networks to deliver new applications and services. To realize that, the operators of the smart grid need to work out how best to utilize the benefits of these emerging technologies for their day‐to‐day needs in communication networking and share their expertise to shape the future. There are technological and business challenges to address, and there are promising recent academic and industrial proofs of concept and demonstrations to leverage. Moving forward, this is an exciting time for building smart cities and grids, where innovation and opportunities will blossom in creating new applications that improve human lives and societies.

References

- Abhishek, R. et al., 2016, ‘SPArTaCuS: Service priority adaptiveness for emergency traffic in smart cities using software‐defined networking’, Proceedings of IEEE International Smart Cities Conference (ISC2), Trento, 2016, 1–4.

- Alparslan, M., et al., 2017, ‘Impacts of microgrids with renewables on secondary distribution networks’, Applied Energy (2017).

- Aydeger, A., Akkaya, K., and Uluagac, A.S., 2015, ‘SDN‐based Resilience for Smart Grid Communications’, Proceedings of IEEE Conference on Network Function Virtualization and SDN, 2015 Demo Track.

- Aydeger, A., Akkaya, K., Cintuglu, M.H., Uluagac, A.S., and Mohammed, O., 2016, ‘Software Defined Networking for Resilient Communications in Smart Grid Active Distribution Networks’, Proceedings of IEEE ICC SAC Communications for the Smart Grid.

- Cai, Z., Liu, F., Xiao, N., Liu, Q., and Wang, Z., 2010, ‘Virtual network embedding for evolving networks’, Proceedings of IEEE GLOBECOM, 2010, 1–5.

- Cankaya, H.C., 2015, ‘SDN as a Next‐Generation Software‐Centric Approach to Communications Networks’, OSP Feb. 2015, vol. 33, issue 2.

- Cerroni, W., and Callegati, F., 2014, ‘Live migration of virtual network functions in cloud‐based edge networks’, Proceedings of the IEEE International Conference on Communications (ICC 2014), 2963–2968.

- Chiosi, M., Clarke, D., Willis, P., Feger, J., Bugenhagen, M., Khan, W., Fargano, M., Chen, C., Huang, J., Benitez, J., Michel, U., Damker, H., Ogaki, K., Fukui, M., Shimano, K., Delisle, D., Loudier, Q., Kolias, C., Guardini, I., Demaria, E., López, D., Salguero, Ramón, F.J., Ruhl, F., and Sen, P., 2012, ‘Network functions virtualisation’, introductory white paper. Proceedings of SDN and OpenFlow World Congress.

- Chowdhury, N., Rahman, M., and Boutaba, R. 2009, ‘Virtual network embedding with coordinated node and link mapping’, Proceedings of IEEE INFOCOM, 2009, 783–791.

- Dorsch, N., Kurtz, F., Georg, H., Hagerling, C., and Wietfeld, C., 2014, ‘Software‐defined networking for Smart Grid communications: Applications, challenges and advantages’, Proceedings of the 2014 IEEE International Conference on Smart Grid Communications (Smart GridComm), Venice, Italy, 3–6 November 2014, 422–427.

- Erol‐Kantarci, M., et al., 2015, ‘Energy‐Efficient Information and Communication Infrastructures in the Smart Grid: A Survey on Interactions and Open Issues’, IEEE Communications Surveys and Tutorials, vol. 17, no. 1, 2015, 179–197.

- Fujitsu 2015, ‘Software Defined Networking for the Utilities and Energy Sector’, URL: http://www.fujitsu.com/us/Images/SDN‐for‐Utilities.pdf.

- Gjermundrod, H., Bakken, D.E., Hauser, C.H., and Bose, A., 2009, ‘Gridstat: A flexible qos‐managed data dissemination framework for the power grid’, IEEE Transactions on Power Delivery, vol. 24, no. 1, 2009, 136–143.

- Ghosh, U., et al. 2016, ‘A Simulation Study on Smart Grid Resilience under Software‐Defined Networking Controller Failures’, Proceedings of the 2nd ACM International Workshop on Cyber‐Physical System Security (CPSS), ACM, 2016.

- Hannon, C., et al., 2016, ‘DSSnet: A smart grid modeling platform combining electrical power distribution system simulation and software defined networking emulation’, Proceedings of the 2016 annual ACM Conference on SIGSIM Principles of Advanced Discrete Simulation.

- Houidi, I., Louati, W., Ben Ameur, W., and Zeghlache, D., 2011, ‘Virtual network provisioning across multiple substrate networks’, Computer Networks, vol. 55, no. 4, Mar. 2011, 1011–1023.

- IEC, Communication networks and systems in substation—Specific communication service mapping, IEC 61850.

- Jararweh, Y., Darabseh, A., Al‐Ayyoub, M., Bousselham, A., Benkhelifa, B. 2015, ‘Software Defined Based Smart Grid Architecture’, Proceedings of 12th IEEE International Conference of Computer Systems and Applications (AICCSA).

- Kim, Y.J., Lee, J., Atkinson, G., and Thottan, M., 2012, ‘Griddatabus: Information‐centric platform for scalable secure resilient phasor‐data sharing’, Proceedings of IEEE Computer Communications Workshops (INFOCOM WKSHPS), 115–120.

- Kim, J., Filali, F., and Ko, Y., 2015, ‘Trends and Potentials of the Smart Grid Infrastructure: From ICT Sub‐System to SDN‐Enabled Smart Grid Architecture’, Applied Sciences, 5, 2015, pp 706–727.

- Kloti, R., Kotronis, V., and Smith, P., 2013, ‘Openflow: A security analysis’, Proceedings of the 2013 21st IEEE International Conference on Network Protocols (ICNP), Göttingen, Germany, 7–10 October 2013, 1–6.

- Koldehofe, B., et al. 2012, ‘The power of software‐defined networking: line‐rate content‐based routing using OpenFlow’, Proceedings of 7th Workshop on Middleware for Next Generation Internet Computing, Montreal, Canada, December 2012.

- Lasseter, R.H., 2010, ‘Microgrids and distributed generation’, Proceedings of Intelligent Automation & Soft Computing, 2010, 16(2): 225–234.

- Lischka, J., and Karl, H., 2009, ‘A virtual network mapping algorithm based on subgraph isomorphism detection’, Proceedings of the 1st ACM Workshop on Virtualized Infrastructure System Architecture, 2009, 81–88.

- Lukovic, S., et al. 2010, ‘Virtual power plant as a bridge between distributed energy resources and smart grid’, Proceedings of 43rd Hawaii International Conference on System Sciences (HICSS), Kauai, HI, USA, January 2010.

- Molina, E., Jacob, E., Matias, J., Moreira, N., and Astarloa, A., 2015, ‘Using software defined networking to manage and control IEC 61850‐based systems’, Computers & Electrical Engineering, 43, 142–154.

- Nakata S., et al., 2016, ‘A smart grid technology for electrical power transmission lines by a self‐organized optical network using LED’, Proceedings of SPIE 9948, Novel Optical Systems Design and Optimization XIX, 99481E, September 2016.

- Network Functions Virtualisation (NFV) 2013, ‘Use Cases. Technical report’, ETSI GS NFV 001 V1.1.1 (2013‐10), 2013.

- Niedermeier, M., and Meer, H., 2016, ‘Constructing Dependable Smart Grid Networks using Network Functions Virtualization’, Journal of Network and Systems Management, 24, 2016, 449–469.

- Pfeiffenberger, T., and Du, J.L., 2014, ‘Evaluation of software‐defined networking for power systems’, Proceedings of the 2014 IEEE International Conference on Intelligent Energy and Power Systems (IEPS), Kyiv, Ukraine, 2–6 June 2014, 181–185.

- Pin L., Wang, X., Yang, Y., and Xu, M., 2014, ‘Network Virtualization for Smart Grid Communication’, IEEE Systems Journal, Vol. 8, No.2, June 2014.

- Qin, Z., Denker, G., Giannelli, C., Bellavista, P., and Venkatasubramanian, N. A., 2014, ‘Software defined networking architecture for the internet‐of‐things’, Proceedings of the 2014 IEEE Network Operations and Management Symposium (NOMS), Krakow, Poland, 5–9 May 2014, 1–9.

- Rehmani, M.H., et al. 2015, ‘Smart Grids: a Hub of Interdisciplinary Research’, IEEE Access, vol. 3, 2015, 3114–3118.

- Ren, L., et al., 2016, ‘Enabling Resilient Microgrid through Programmable Network’, IEEE Transactions on Smart Grid, Issue 99, 2016.

- Sydney, A., Ochs, D.S., Scoglio, C., Gruenbacher, D., and Miller, R., 2014, ‘Using GENI for experimental evaluation of Software Defined Networking in Smart Grids’, Computer Networks, n. 63, 2014, 5–16.

- Yu, R., Xue, G., Kilari, V.T., and Zhang, X., 2015, ‘Function virtualization in the multi‐tenant cloud’, IEEE Network, vol. 29(3): 42–47.

- Zhang, X., Wei, K., Guo, L., Hou, W., and Wu, J., 2016, ‘SDN‐based Resilience Solutions for Smart Grids’, Software Networking (ICSN), 2016 International Conference on, May 2016.

- Zhu Y., and Ammar, M., 2006, ‘Algorithms for assigning substrate network resources to virtual network components’, Proceedings of IEEE INFOCOM, 2006, vol. 2, 1–12.