Chapter 8

Consonance and Dissonance

The Evolution of Line

Introduction: The Roots of Who We Become

I got my first guitar for Christmas when I was five or six years old, a Roy Rogers children’s toy. A couple of years later, it was deemed that my older brother Tom would take lessons at Willis Music, the local music store on Gratiot Avenue a couple of miles from my house. My parents bought him a Gibson ES-120T Thinline Fully Hollow Body Electric Archtop. It was the cheapest electric guitar that Gibson made, but to me it seemed like it must have cost a million dollars. I became infatuated with it, and snuck into my brother’s room to play it whenever he wasn’t around. Eventually he lost interest in guitar playing, and I grew bolder about playing it, finally claiming some kind of ownership, a point that remains in dispute to this day. I have proven the old adage, however, that “possession is nine-tenths of the law.”

In high school I joined our church folk group; folk music became permissible and popular in church almost simultaneously with my shift from altar boy to musician. Folk music is all about melody, and I was an avid songwriter, having written a whole collection of songs in junior high. Years later I would go back to my church for a wedding and find one of my old songs still in the church hymnal. I can remember the tune to this day, even though it’s one of those tunes you really wish you could forget (see my discussion about “banal” hooks in Chapter 10). This background, however, gave me an affinity for melody, and would contribute enormously to my early career as a sound designer, until I discovered that a gift for memorable tunes isn’t always a desired commodity in theatre composition.

In 1977, my first year as a graduate student, I used some Fripp and Eno music in a sound score for Sam Shepard’s Cowboys #2, a sound score that would eventually find its way to my first professional production at the Perry Street Theatre in New York that same year. A pharmacy student saw the production, and was shocked to find out that someone else at Purdue University knew who Fripp and Eno were. He contacted our chair at the time, Dr. Dale Miller, and Dr. Miller put him in touch with me. That’s how I met my first business partner, Brad Garton, who went on to become director of the Computer Music Center at Columbia University, and a marvelous composer in his own right. Brad and I decided to work on a production of Shakespeare’s The Tempest at Purdue together, a major endeavor that would require an almost continuous sound score. Brad was a godsend, quite frankly, because he had a boatload of gear, including a Teac 3340 four-track reel-to-reel tape recorder and a Micro Moog synthesizer, and I was a poor kid from Detroit who had spent his entire salary from the previous summer’s work at the Black Hills Playhouse in Custer, South Dakota, to buy a Teac 2300 two-track reel-to-reel. Even better, he knew how to use his equipment. What became immediately obvious was that I had a really nice affinity for melody and Brad was a genius at orchestration. We complemented each other really, really well. The show was a big hit, and Dr. Miller used the sound track to demonstrate the power of music in theatre in his Theatre Appreciation classes for many years.

Brad and I started a business together, Zounds Productions. We operated quite successfully for five years, until Brad decided to study computer music at Princeton University with the legendary computer music composer Paul Lansky. It was at that moment that I realized that Brad had a huge advantage over me in orchestration: he had studied classical piano as a child, and that taught him how to manage multiple simultaneous ideas in both his mind and his fingers. You can do that with guitar, but in a much more limited way. I started studying classical piano with Caryl Matthews at the ripe old age of 30, and continued for another 20 years. This would be too late to become a concert pianist, of course; our brains and muscles are just not plastic enough anymore at that age. But studying classical piano changed my life and taught me an incredible amount about orchestrating music. There is a reason why students in music schools are required to study piano in addition to their primary instrument. To this day, I recommend that students who want to become serious composers for theatre study classical piano, not so much for performance, but to learn how to think contrapuntally, and to study how other great composers have done so.

Around the same time I worked with Brad, I read a book about modern composition that said that, in the twentieth century, all composition was really a matter of managing consonance and dissonance. So while I was studying all the “rules” of voice leading and chord progressions and so forth, I was also experimenting with simply letting my ear be my guide, and manipulating tension and release using varying amounts of consonance and dissonance. It would be many years before I would be able to let go of melody enough to simply explore inciting emotion and creating/releasing tension through consonance and dissonance. Eventually I learned that theatre sound scoring is fundamentally about managing consonance and dissonance. We manage consonance and dissonance both vertically through simultaneously sounded notes (chords) and horizontally through sequences of notes (line/melody). The combination of these two practices is harmony, and every composer and sound designer needs to understand as much as they possibly can about how consonance and dissonance work in both visual music and audible music if they want to learn to manipulate them to carry the ideas of the play.

Most of us start to learn about consonance and dissonance by learning about Western music practice. This is fine and highly recommended, but it may also help to consider how consonance and dissonance came to be, and how our brain works in its most primitive way to perceive both, and how that, in turn, affects us emotionally. You don’t have to be Rachmaninoff to create effective sound scores. But you do have to understand how to manipulate consonance and dissonance over time to create and release tension and to manipulate emotions.

In this chapter, we’ll start by considering the next step in our evolutionary development, the near simultaneous development of language, visual-spatial arts, and music: not just proto-music, but music in the fullest sense of our original definition. For the first time, we’ll find evidence of non-referential manipulations of time as a form of art. That evidence comes in the form of primitive flutes capable of playing discrete pitches, which naturally introduces the possibility of consonance and dissonance. We’ll examine our modern brain to look for clues as to how the brain processes consonance and dissonance. Finally, we’ll consider how we use consonance and dissonance in theatre to incite emotions in our audience while simultaneously immersing them in the dreamlike world of the play.

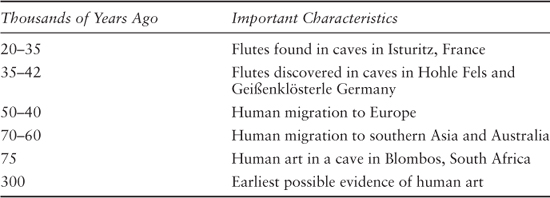

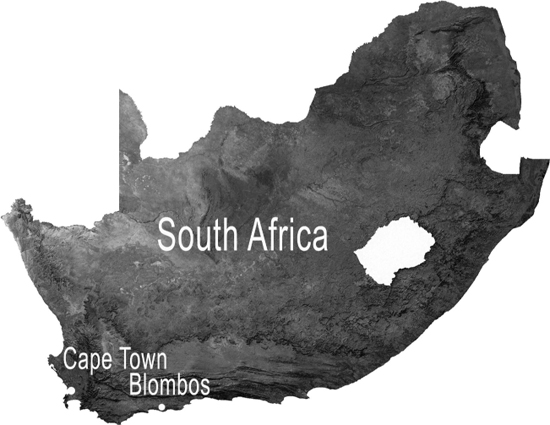

The Evolution of Line

When we last left our Homo sapiens sapiens ancestors, they were struggling with another mini ice age coupled with a giant volcanic eruption near Lake Toba on Sumatra in Indonesia 70,000 years ago. The eruption once again nearly wiped out the small human population. But this was a vastly improved iteration of Homo sapiens sapiens, one that had developed sophisticated tools, advanced language, and art. The emergence of art was most likely not a sudden discovery. Archaeologists have discovered other artifacts that may provide evidence of art created by Homo erectus as early as 300,000 years ago (Brahic 2014). But, starting around 100,000 years ago, archaeologists have discovered an increasing amount of evidence of the use of ochre (iron oxide that can be made into a pigment), bone, and charcoal in artistic applications. One very early and very significant example was found in Blombos Cave, South Africa, dating back 75,000 years (Henshilwood et al. 2016).

Of course, as with language, the evidence for the origins of fully developed music is often contested. Nevertheless, the Blombos cave provides uncontested evidence of human artistic endeavor, and there is general consensus that early humans had established a long tradition of geometrically engraving stones and other artistic activity by then (Henshilwood, d’Errico and Watts 2009).

During the next 40,000 years, humans developed the ability to draw pictures of themselves and the world around them. This was a monumental achievement, because for the first time, it allowed humans to record their stories outside of their memories. Merlin Donald calls it the third major cognitive breakthrough, after the mimesis of Australopithecines and Homo erectus and language development in Homo sapiens (Donald 1993a, 160; Donald 1993b, 35). Imagine the consequences of the slow movement from the biologically based, limited storage capacity of the human brain that expired with its human host, to the permanence of cave paintings. Such cave paintings would ultimately develop into written languages that used symbols to communicate and preserve ideas among members of each tribe (d’Errico et al. 2003, 31–32).

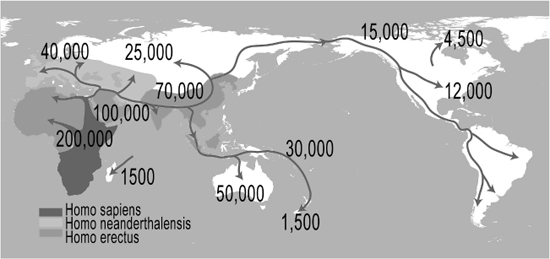

Humans once again spread out from Africa as one of the worst periods of the ice age, about 70,000 to 60,000 years ago, began to ease1 (see Figure 7.8).

They first followed the coast through Yemen and into southern Asia, and then migrated into Australia at a time when sea levels were probably about 300 feet lower, creating a land bridge that allowed easier migration (National Geographic 2016). They were well-equipped to travel, having clearly developed much more sophisticated forms of language, music, ritual and visual art (among many other things). The development of language would have allowed them to pass their stories from one generation to another, thus beginning ancient “oral traditions” that would couple language and music in complementary ways essential to the development of theatre, especially in ritual. The sculptures, engravings, and cave and rock paintings that became commonplace would become the basis for written language that would eventually supplant the oral tradition.

Figure 8.4 Map of human migrations shows periods when Homo sapiens migrated throughout the world.

Credit: Map re-drawn by Altaileopard, Urutseg, and NordNordWest at https://commons.wikimedia.org/wiki/File:Spreading_homo_sapiens_la.svg, with data from Göran Burenhult, The First People, Weltbild Verlag, 2000. ISBN 3-8289-0741-5. Accessed July 21, 2017. Adapted by Richard K. Thomas.

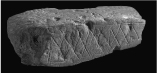

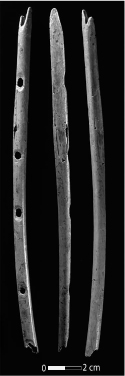

By about 50,000 to 40,000 years ago, the earth’s climate had warmed sufficiently for humans to migrate to Europe (Higham et al. 2011; Benazzi et al. 2011). This particular migration pattern is important to us for two reasons. First, we are marching toward the establishment of the first autonomous theatre in ancient Greece, which is definitely in the direction of Europe from Africa. Second, the first hard evidence of human musical instruments, dating to about 35,000 years ago, comes from caves in Germany and France. The German flutes were discovered in the Hohle Fels cave in southwestern Germany and dated to about 35,000 years ago (Conard, Malina and Münzel 2009), while flutes found in the nearby Geißenklösterle caves dated to between 35,000 and 42,000 years ago (Higham et al. 2012).

The French flutes were discovered in a cave in Isturitz, France. They are estimated to be between 20,000 and 35,000 years old and are reasonably complete (d’Errico et al. 2003, 39). The flutes from the above archaeological sites are generally accepted as authentic musical instruments.2 These flutes indicate a distinct change in the way humans made music, including a knowledge of discrete pitches and scales necessary for the creation of melodic lines, and even harmonies if two are played simultaneously.

Consider how the development of musical line corresponds to a certain degree with the development of visual line in art. At first glance it appears that visual line predates auditory line by tens of thousands of years or more. But this may not necessarily be the case. One of the archaeologists who discovered the German bone flutes, Nicholas J. Conard, suggested that the “finds demonstrate the presence of a well-established musical tradition at the time when modern humans colonized Europe” (Conard, Malina and Münzel 2009). It seems likely that the flutes weren’t invented in the German caves, but were brought by the migrants who traveled and settled there. d’Errico and others have noted a distinct similarity between the German and the French flutes, even though they were separated “in time by hundreds or even thousands of years, making a common, much earlier origin ‘increasingly possible’” (d’Errico et al. 2003, 45–46).

Figure 8.5 Map of Germany shows location of Hohle Fels and Geißenklösterle caves.

Credit: Best-Backgrounds/Shutterstock.com. Adapted by Richard K. Thomas.

Consider also the technical challenges of crafting a workable flute compared with picking up a burned stick and creating a charcoal drawing. We should also expect that the evolution of a sophisticated instrument like a flute came much later than simpler developments in music. Many potential primitive musical instruments consist of materials that decay over time (stretched animal skins over hollowed-out logs for drum heads, for example). A flute could indicate the presence of an advanced musical mode of expression that developed sometime after vocal expression evolved beyond unfixed pitch (such as that of gibbons) and before fixed-pitch instruments appeared (as reflected in the bone flutes). It’s hard to imagine fixed-pitch vocal music coming after the engineering of flutes, particularly when, as we will see in later sections, the human brain had evolved a predisposition to consonance and dissonance much earlier. In the final analysis, given the ongoing controversies as to what constitutes visual art and what constitutes music, the dates and evidence are murky enough to allow us to imagine that music and line, in both their visual and auditory manifestations, may have developed side by side as human cognitive abilities developed. In the big picture of evolution, both visual and auditory line appear at very similar points in time.

Figure 8.6 A bone flute from Hohle Fels.

Credit: Adapted by permission from Macmillan Publishers Ltd., Nature. Conard, N.J., M. Malina, and S.C. Münzel. “New Flutes Document the Earliest Musical Tradition in Southwestern Germany.” Nature 460: 737–740. doi:10.1038/nature08169, copyright 2009.

It is one thing to understand that music evolved alongside visual art in these prehistoric cultures. However, in this book we are even more interested in the answers to the questions: how was music used, and for what purpose? Caves in which both art objects and musical instruments were found were often, if not typically, used as gathering places. The cave at Isturitz, for example, seems to have been used as a large gathering place in the spring and fall. Ian Morley cites additional studies suggesting that the acoustics of the caves themselves would have been “highly significant.” You can probably imagine the reverberant possibilities of a flute played inside a cave of this sort (remembering that space is also an element of music), not to mention those of the human singing voice. Morley also describes a veritable orchestra of bones, beaters and rattles, dating to about 20,000 years ago, at another cave in Mezin, Ukraine, where they were found alongside piles of red and yellow ochre. By this time ochre was widely used symbolically throughout the ancient world, including in burials, cave and rock paintings and so forth (Henshilwood, d’Errico and Watts 2009, 28). Morley suggests these caves were used for large-scale gatherings over a very extended period of time. He concludes that it would be hard to imagine that ritual did not play an important role in the gatherings of this culture, and that music would play an important role in the rituals: “The performance and perception of music can provide the perfect medium for carrying symbolic (including religious) associations because of its combination of having no fixed meaning [italics mine] … whilst having the potential to stimulate powerful emotional reactions” (Morley 2009, 170–171). Music as a Chariot.

In this period between 100,000 and 30,000 years ago, we witness the development of art, music and ritual on a scale unprecedented in any other species. While hard evidence is scarce due to the ephemeral nature of the events and practices, evidence does exist of art in burial sites dating back to 100,000 years ago. Henshilwood and others suggest that “elaborated burials … are considered to be the archaeological expression of symbolically mediated behaviors” (Henshilwood, d’Errico and Watts 2009, 27). Even the simple quartz dropped into the Sima de los Huesos cave at Atapuerca in Northern Spain (discussed in Chapter 7) suggests that humans were considering where they came from, and where they went after they died. It is hard to imagine a ritual that humans developed to assist in the transition from death to whatever came after that did not involve music. It seems much more than chance that line (or its audible manifestation in melody) created possibilities for the social expression and experiencing of emotion unmatched in other species.

Consonance and Dissonance

Something as simple as holes in a pipe can mean quite a lot in human development. Such holes provide concrete evidence of humans sequencing sounds by discrete pitch steps. Remember how, when we defined music in Chapter 4, we marveled that music, unlike visual objects or language, has the unique property of being both sequential and simultaneous? With these pipes we now have evidence of an ability to produce discrete pitches both sequentially and simultaneously. Sequential production of notes on a single pipe produces an audible line (melody), with characteristics similar to the lines on the red ochre pieces uncovered in the cave at Blombos. Simultaneous production of notes (on different pipes) results in harmony. The foundation of harmony is not scales or modes, as you might think. That’s because scales and modes are culturally derived ways of dividing up pitches; they vary from country to country and culture to culture. What doesn’t change wherever you go, and is as much a foundation of language and theatre as it is of music, is consonance and dissonance.

What Is Consonance and Dissonance?

The musical concept of consonance and dissonance appears to hold across all cultures and time periods. Roederer specifically indicates this in his book The Physics and Psychophysics of Music, “Tonal music of all cultures seems to indicate that the human auditory system possesses a sense for certain special frequency intervals—the octave, fifth, fourths, etc.” (Roederer 2008, 170). This is important because in order for the phenomenon to be evolutionary, we should see it across all cultures, and it should have been that way for a long period of time. We should see evidence of it everywhere. And this is exactly what we find with consonance and dissonance. A wide variety of research has shown that cultures around the world perceive consonance and dissonance in similar ways. This applies, according to Tramo and others, “over a wide range of musical styles enjoyed by people throughout much of the industrialized world: contemporary pop and theatre (including rock, rhythm and blues, country and Latin-American), European music from the Baroque, Classical, and Romantic eras (1600–1900), children’s songs, and many forms of ritualistic music (e.g., church songs, processionals, anthems, and holiday music).” Tramo’s studies suggest that all of us have “common basic auditory mechanisms” for perceiving consonance and dissonance (Tramo et al. 2003, 128; Fritz et al. 2009). In this part of the chapter, we’ll attempt to identify those mechanisms.

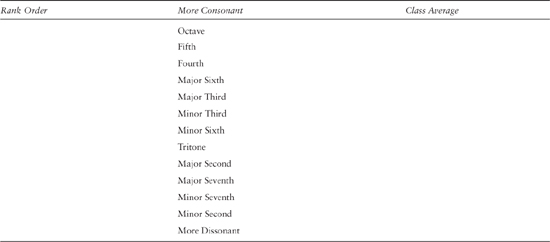

What exactly is consonance? According to Tramo, consonance means “harmonious, agreeable, and stable,” while dissonance means “disagreeable, unpleasant, and in need of resolution” (2003, 128). Not everyone, including me, fully agrees that dissonance is a negative thing, and so I tend to simply think of consonance as eliciting a state of calm, rest and resolution, and of dissonance as eliciting a state of arousal. Consonance is determined by the simplicity of the ratio of two pitches (frequencies) x:y, where y is the lower of the two pitches. The simplest of these ratios is 1:1 (unison), followed by the octave (2:1), fifth (3:2), fourth (4:3), major third (5:4) and minor third (6:5). At the other extreme, dissonant intervals do not have simple numerical ratios; examples include the minor second (16:15) and the tritone (45:32 for the augmented fourth, with the diminished fifth approximately the same size). Humans can tolerate about 0.9%–1.2% of error in determining pitches, however. That tolerance allows Western music to use the equal-tempered scale, which compensates for the problems caused by building every interval in an octave from simple numerical ratios (as in so-called Pythagorean tuning) (2003, 132). These constraints apply to frequencies from about 25 Hz to 5 kHz (2003, 130).
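The tolerance argument can be checked with a little arithmetic. The sketch below assumes standard 12-tone equal temperament, in which every semitone multiplies the frequency by 2^(1/12), and compares each equal-tempered interval with the simple ratio given above, reporting the mismatch as a percentage. It is an illustration of the idea, not Tramo's procedure.

```python
from fractions import Fraction

# (interval, simple ratio x:y from the text, size in equal-tempered semitones)
INTERVALS = [
    ("unison", Fraction(1, 1), 0),
    ("minor second", Fraction(16, 15), 1),
    ("minor third", Fraction(6, 5), 3),
    ("major third", Fraction(5, 4), 4),
    ("fourth", Fraction(4, 3), 5),
    ("tritone", Fraction(45, 32), 6),
    ("fifth", Fraction(3, 2), 7),
    ("octave", Fraction(2, 1), 12),
]

def tempering_error_percent(ratio, semitones):
    """Percent by which the equal-tempered interval misses the simple ratio."""
    tempered = 2 ** (semitones / 12)  # assumption: 12-tone equal temperament
    return abs(tempered / float(ratio) - 1) * 100

for name, ratio, semitones in INTERVALS:
    error = tempering_error_percent(ratio, semitones)
    print(f"{name:<13} {str(ratio):>6}  off by {error:.2f}%")
```

Every mismatch stays under 1%: the largest, the minor third, is about 0.9%, while the fifth and fourth miss by only about 0.11%, small enough to fall inside the tolerance described above.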

Subcortical Consonance and Dissonance Perception

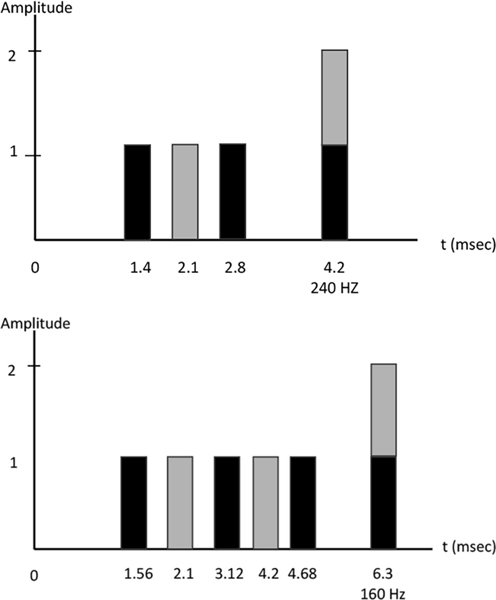

As we’ve discussed before, the basilar membrane in the cochlea converts sound waves into neural pulses (see Figure 7.10). Different frequencies stimulate different places along the basilar membrane, so these pulses are separated by frequency. Once separated, the neural pulses fire at intervals matching the period of the sound wave, the time it takes to complete one cycle. A 480 Hz (cycles per second) tone would produce a neural spike along the particular neural fibers dedicated to that frequency every 1/480 second, or every 2.1 milliseconds (msec). The greater the amplitude of the sound wave, the greater the number of neural pulses firing at that time interval. Now if we simultaneously play a tone a musical fifth above that, at 720 Hz, the neural fibers dedicated to that frequency will also start firing every 1/720 second, or every 1.39 msec.

But here’s where things start to get interesting. Every so often, the pulses on the two sets of fibers fire at the same moment. The 480 Hz tone triggers neural pulses at 2.1 and then 4.2 milliseconds; the 720 Hz tone at 1.4, 2.8 and 4.2 milliseconds. Because the two frequencies stand in a simple 3:2 ratio, their pulses coincide on every second pulse of the 480 Hz tone and every third pulse of the 720 Hz tone, that is, about every 4.2 msec (exactly 1/240 second).

Note, too, that the simultaneous pulses continue at 4.2 msec (240 Hz), 8.3 msec (120 Hz), 12.5 msec (80 Hz), and so forth, for as long as the stimulus lasts (although they soon fall below the frequency range of human hearing). This all happens in the cochlea, before the sound ever reaches the brain, but it turns out that the brain really likes these short interspike intervals, or ISI, as they are called.3
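The coincidences described above can be reproduced with exact fractions. This sketch idealizes each nerve fiber as firing exactly once per cycle with no timing jitter (real fibers skip cycles and jitter, so treat this as an assumption) and lists the moments within an arbitrary 13 msec window when the 480 Hz and 720 Hz trains fire together.

```python
from fractions import Fraction

def spike_times(freq_hz, window_s):
    """Idealized phase-locked spikes: one per cycle, at k / freq_hz seconds."""
    times = []
    k = 1
    while Fraction(k, freq_hz) <= window_s:
        times.append(Fraction(k, freq_hz))
        k += 1
    return times

def coincidences(f1_hz, f2_hz, window_s):
    """Instants (exact fractions of a second) when both trains fire together."""
    return sorted(set(spike_times(f1_hz, window_s)) & set(spike_times(f2_hz, window_s)))

window = Fraction(13, 1000)  # first 13 msec
for t in coincidences(480, 720, window):
    print(f"both fibers fire at {float(t) * 1000:.2f} msec "
          f"(an interval corresponding to {1 / t} Hz)")
```

With exact values the coincidences fall at about 4.17, 8.33 and 12.50 msec, corresponding to 240, 120 and 80 Hz; the figures in the text are the same series, rounded.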

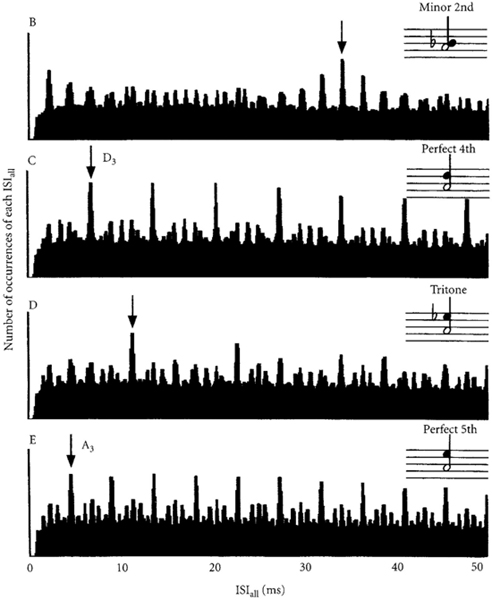

Now consider what happens for the musical interval of the fourth, having a basic frequency ratio of 4:3, such as 640 Hz and 480 Hz (see Figure 8.7). These tones will only fire simultaneously on every fourth cycle of 640 Hz (1/640 second = 1.5625 msec; 4 × 1.5625 msec = 6.25 msec), and every third cycle of 480 Hz (1/480 second ≈ 2.08 msec; 3 × 2.08 msec ≈ 6.25 msec). So they still fire at the same time every 6.25 msec (160 Hz), 12.5 msec (80 Hz), and so forth. The interspike intervals of the fourth take longer to repeat than those of the fifth, and humans subjectively perceive the fourth to be less consonant. In musical terms, when two frequencies in a simple numerical ratio combine, you will often perceive a frequency that is the difference between them (in this example, 640 Hz − 480 Hz = 160 Hz, the same rate at which the pulses coincide), even if that lower frequency (160 Hz) is not acoustically sounded! This is called the missing fundamental, a phenomenon particularly observed in the way frequencies are emitted by certain bells when struck. Now we begin to see a neurological basis for it.
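For tones whose frequencies are whole numbers of hertz, the shared firing period has a tidy shortcut: it is the reciprocal of the two frequencies' greatest common divisor, and for ratios like 3:2 and 4:3 that divisor also equals the difference between them. The sketch below assumes integer frequencies and the idealized one-spike-per-cycle firing described in the text; the function name is ours.

```python
import math

def shared_firing(f_low_hz, f_high_hz):
    """Period (msec) after which idealized spike trains realign, and its frequency."""
    common_hz = math.gcd(f_low_hz, f_high_hz)  # candidate "missing fundamental"
    return 1000 / common_hz, common_hz

for name, low, high in [("fifth", 480, 720), ("fourth", 480, 640)]:
    period_ms, fundamental_hz = shared_firing(low, high)
    print(f"{name}: realign every {period_ms:.2f} msec -> {fundamental_hz} Hz "
          f"(difference tone {high - low} Hz)")
```

The fourth realigns every 6.25 msec, half again as long as the fifth's 4.17 msec, and in both cases the implied fundamental equals the difference between the two tones.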

Finally, consider an interval that everyone perceives to be dissonant, the minor second, which has a frequency ratio of 16:15. We’ll spare you the math, but imagine how long it takes for the higher tone to fire 16 times while the lower tone fires 15 times, which is what must happen before their pulses coincide again.4 Much research has demonstrated that this periodicity is extremely important in consonance and dissonance perception (Stolzenburg 2015, 216).
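Here is the math the text spares you, as a sketch under the same idealization (one spike per cycle, no jitter). If an interval's ratio in lowest terms is x:y, with y the lower tone as defined earlier, the upper tone fires x times while the lower fires y times, so the two coincide once every y cycles of the lower tone. Assuming a 480 Hz lower tone, the code sorts the chapter's intervals by that coincidence period.

```python
from fractions import Fraction

RATIOS = {  # x:y ratios quoted earlier in the chapter
    "fifth": Fraction(3, 2),
    "fourth": Fraction(4, 3),
    "major third": Fraction(5, 4),
    "minor third": Fraction(6, 5),
    "minor second": Fraction(16, 15),
    "tritone": Fraction(45, 32),
}

def coincidence_period_ms(ratio, lower_hz=480):
    """Both tones fire together once every `ratio.denominator` cycles of the lower tone."""
    return ratio.denominator * 1000 / lower_hz

ranked = sorted(RATIOS, key=lambda name: coincidence_period_ms(RATIOS[name]))
for name in ranked:
    ratio = RATIOS[name]
    print(f"{name:<13} {ratio.numerator}:{ratio.denominator}  "
          f"coincide every {coincidence_period_ms(ratio):.2f} msec")
```

The minor second's 31.25 msec (16 cycles of a 512 Hz tone against 15 cycles of 480 Hz) is seven and a half times the fifth's period, and the tritone takes longer still.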

In the preceding examples, we’ve mentioned such Western concepts as musical intervals of the fifth, the fourth and the second. We must remember, however, that we used these as convenient examples from a system with which most everyone is familiar. We could just as easily have used examples from other cultures (OK, it would have taken a bit more work). The biological phenomenon we are investigating is that there appears to be a continuum in all music and cultures from more consonant to more dissonant.

But periodicity is not the only factor the brain perceives relative to consonance and dissonance. Another factor that appears to contribute to the perception of dissonance is called “roughness.” As early as 1862, Hermann Helmholtz identified roughness as a function of the cochlea in his landmark book On the Sensations of Tone (Helmholtz 1877, 159–173). Roughness arises when two frequencies very close together produce a fluctuation in the loudness of the sound. If one note is tuned to 220 Hz and the other plays at 225 Hz, we will hear the amplitude of the combined tone rise and fall at a rate of 5 Hz, cycling from loud to quiet and back every 200 msec (225 Hz − 220 Hz = 5 Hz; 1/(5 Hz) = 200 msec).

This is called a “beat” frequency. If the difference between the two frequencies is between 20 and 200 Hz, the resulting combination will sound “rough.”5 For small frequency differences, within the just noticeable difference threshold of about 1%, the two tones will be perceived as a single pitch fluctuating in amplitude (getting louder and softer) (Tramo et al. 2003, 138).
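The 220 Hz and 225 Hz example can be checked numerically. The sketch below sums two equal-amplitude sine waves (an idealization; real instruments add harmonics), sampled at an assumed 8,000 samples per second, and measures the peak level in short 5 msec windows. The combined tone should be loud at 0 and 200 msec and nearly silent halfway between, at 100 and 300 msec.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed; ample for tones this low)

def two_tones(f1_hz, f2_hz, t_s):
    """Instantaneous value of two equal-amplitude sine tones played together."""
    return math.sin(2 * math.pi * f1_hz * t_s) + math.sin(2 * math.pi * f2_hz * t_s)

def peak_level(f1_hz, f2_hz, start_s, length_s=0.005):
    """Largest absolute sample in a short window, a crude stand-in for loudness."""
    first = round(start_s * SAMPLE_RATE)
    last = round((start_s + length_s) * SAMPLE_RATE)
    return max(abs(two_tones(f1_hz, f2_hz, n / SAMPLE_RATE)) for n in range(first, last))

beat_hz = abs(225 - 220)
print(f"beat frequency {beat_hz} Hz, one loud-soft cycle every {1000 / beat_hz:.0f} msec")
for start_ms in (0, 100, 200, 300):
    print(f"{start_ms:>3} msec: peak level {peak_level(220, 225, start_ms / 1000):.2f}")
```

The printed levels swing between nearly 2 (both waves reinforcing) and nearly 0 (the waves cancelling), tracing the 5 Hz beat the paragraph describes.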

Guitar players use beat frequencies to tune their guitars. As they bring two strings closer together in pitch, they can hear the pitch difference until, at a certain point, they no longer hear two pitches, just a single pitch varying in amplitude at the beat frequency (i.e., the difference in frequency between the two strings). To tune the guitar, they simply slow the beat frequency until it stops altogether. At that point, the two strings are exactly in tune.
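The tuning procedure is, in effect, a search that uses only the beat rate as feedback. In the hypothetical model below, the player hears how fast the beats are but not which string is sharp, so each turn of the peg is kept if the beats slow down, and reversed with a finer touch if they speed up. The function name `tune_by_ear`, the 110 Hz reference (an open A string) and the starting pitch are illustrative assumptions, not a description of any particular tuning method.

```python
def tune_by_ear(reference_hz, string_hz, step_hz=1.0, tolerance_hz=0.05, max_turns=1000):
    """Hypothetical beat-driven tuning loop; returns final pitch and beat history."""
    direction = 1.0
    beat_hz = abs(reference_hz - string_hz)  # all the player can hear
    history = [beat_hz]
    for _ in range(max_turns):
        if beat_hz <= tolerance_hz:           # beats have (practically) stopped
            break
        candidate_hz = string_hz + direction * step_hz
        candidate_beat = abs(reference_hz - candidate_hz)
        if candidate_beat < beat_hz:          # beats slowed: keep this turn
            string_hz, beat_hz = candidate_hz, candidate_beat
            history.append(beat_hz)
        else:                                 # beats sped up: turn back, smaller step
            direction = -direction
            step_hz /= 2
    return string_hz, history

final_hz, beats = tune_by_ear(110.0, 107.3)
print(f"tuned to {final_hz:.3f} Hz; beat rates heard: {[round(b, 2) for b in beats]}")
```

Starting 2.7 Hz flat, the simulated player settles within 0.05 Hz of the reference, and every turn it keeps makes the beats slower.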

Unlike beat frequencies and roughness, which appear to be detected only in the cochlea, the periodicities of consonances and dissonances can also be detected in parts of the neural pathway beyond the cochlea (Fritz et al. 2013, 3099–3104). Tramo and others wanted to see how these periodicities manifested themselves in other parts of the auditory system, so they measured the responses of more than 100 auditory nerve fibers in our reasonably close mammalian cousins, cats.

Keep in mind that the human auditory nerve contains about 30,000 of these fibers, but Tramo’s group measured fiber responses to just the most consonant and most dissonant intervals in cats (fifth, fourth, minor second and tritone). Their results indicate that, for the consonant intervals, if you integrate the measurements from the individual nerve fibers, you get the same periodicities as in the acoustic waveforms, including the subharmonic frequencies generated (i.e., the missing fundamental). However, when Tramo’s group measured dissonant intervals like the minor second and the tritone (diminished fifth), they found little or no correlation to the pitches in the acoustic intervals (Tramo et al. 2003, 136).

Fritz and others presented the two acoustic tones to separate ears so that neither cochlea received both frequencies (Fritz et al. 2013). With the cochlea out of the way, they found that the inferior colliculi in the midbrain were also involved in the perception of consonance and dissonance (see Figures 7.10 and 7.11). Remember that the inferior colliculus is the area in the midbrain between the pons (lateral lemniscus) and the thalamus that integrates the two streams of auditory data from both ears.6

Credit: Hellwig, Ansgar. 2005. “Beating of Two Frequencies.” Accessed June 20, 2017. https://commons.wikimedia.org/wiki/File:Beating_Frequency.svg. CC-BY-2.5. https://creativecommons.org/licenses/by/2.5/deed.en. Adapted by Richard K. Thomas.

All of this research further supports the well-established conclusions that consonance and dissonance perception is biologically based, and probably not related to “musical training, long-term enculturation, (or) memory/cognitive capacity” (Fritz et al. 2013, 3100). Even in pitch and consonance perception, it’s all about time. The consonance of two tones is the amount of periodicity (synchronization in time) that exists between tones, and according to Stolzenburg, it does not matter whether the tones occur simultaneously or sequentially.7 Our brains determine consonance by determining periodic patterns of neuronal firings; the shorter the time between the repetitions of the periodic pattern, the more consonance we perceive (Stolzenburg 2015, 216–217).

There is one problem with many of these tests: they have been undertaken almost exclusively using dyads (two-note chords). Yet we also perceive triads and other sound structures as being more or less consonant. Stolzenburg proposed a mathematical solution that explained how the brainstem can still perceive periodic patterns even for three- and four-note chords, including for sequential notes in melody. Remember that the hair cells in the cochlea only have a finite amount of resolution; that is, they can only discriminate pitch to a certain degree, about 0.9%–1.2%. A typical person will not, in practice, be able to tell the difference between a 991 Hz tone and a 1,000 Hz tone. Because the brain has this kind of “slop” in determining pitches, it is possible that the cochlea and brainstem work together to smooth our perception of intervals to the simplest numerical ratios possible within that ~1% range.8 As it turns out, when you have a little more room to work with like that, it’s easier to find simple ratios (Stolzenburg 2015, 222).
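Stolzenburg's own formulation is more involved, but the basic idea of finding simple ratios within a tolerance can be sketched as a search: for each denominator y = 1, 2, 3 and so on, take the nearest numerator and accept the first fraction that falls within the tolerance. Treating "simplest" as "smallest denominator," and the function name `simplest_ratio`, are our assumptions for illustration, not Stolzenburg's exact definition.

```python
from fractions import Fraction

def simplest_ratio(ratio, tolerance=0.01, max_denominator=100):
    """Smallest-denominator fraction x/y lying within a relative tolerance of `ratio`."""
    for y in range(1, max_denominator + 1):
        x = round(ratio * y)  # the nearest numerator is the only candidate worth testing
        if x > 0 and abs(x / y - ratio) <= tolerance * ratio:
            return Fraction(x, y)
    return None  # nothing within tolerance up to max_denominator

for name, semitones in [("minor second", 1), ("minor third", 3), ("major third", 4),
                        ("fourth", 5), ("tritone", 6), ("fifth", 7)]:
    tempered = 2 ** (semitones / 12)  # assumption: 12-tone equal temperament
    print(f"{name:<13} {tempered:.4f} -> {simplest_ratio(tempered)} at 1%, "
          f"{simplest_ratio(tempered, 0.012)} at 1.2%")
```

At a 1% tolerance the sketch recovers 6:5, 5:4, 4:3 and 3:2 for the equal-tempered thirds, fourth and fifth, and 16:15 for the minor second; the equal-tempered tritone lands on 17:12 rather than 45:32. Widening the tolerance to 1.2% simplifies the tritone to 7:5 and the minor second to 15:14, which illustrates the passage's point: a little slop makes simple ratios easier to find.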

Credit: Tramo, M.J., P.A. Cariani, B. Delgutte and L.D. Braida. 2001. “Neurobiological Foundations for the Theory of Harmony in Western Tonal Music.” Annals of the New York Academy of Sciences 930: 92–116. doi:10.1111/j.1749-6632.2001.tb05727.x. Copyright © 1999–2017 John Wiley & Sons, Inc. All rights reserved.

Stolzenburg demonstrated that even when there are multiple pitches generated either simultaneously in chords or sequentially in melodies, there is still a relative periodicity that arises due to complex relationships between the tones that can be simplified not only on paper mathematically, but also by the human auditory system. How does this happen? Stolzenburg suggests that the brain does not need to perform the sort of complex mathematical manipulation he performs in his paper. Rather, our neural system may find the relatively simple numerical ratios available within pitch differences too small for us to notice. This may occur through synchronization with so-called oscillator neurons. Basically, oscillator neurons are neurons in the brain that communicate with each other by firing at a limited set of frequencies. According to Stolzenburg, “the external signal is synchronized with that of the oscillator neurons, which limits signal precision.”

Does all this sound familiar? In case you haven’t noticed, we seem to be describing consonance as a form of entrainment, very similar to entrainment in rhythm, but with much shorter time periods, and correspondingly much higher frequencies. Just such a model for neural entrainment has been proposed by Heffernan and Longtin. And while there are inherent problems in the model, “for simple integer ratios of stimulus frequencies … the oscillators entrain one another” (Heffernan and Longtin 2009, 104).

Such a conclusion argues once again for an understanding of music, and subsequently theatre (inasmuch as theatre can be shown to be a specific kind of music), as something much more than a cognitive process. Music and theatre involve a unique experience that functions at the subconscious level. Both harmonic periodicity and rhythmic entrainment are related to the temporal separation of neural spikes, and both seem to originate at very primitive levels of brain function.

Consonance and dissonance are such fundamentally perceived phenomena that we may consider them to be to line (melody) and texture (harmony) as pulse is to rhythm. They help to immerse us into the musical dreamlike world of theatre even without our consciously paying attention to them.

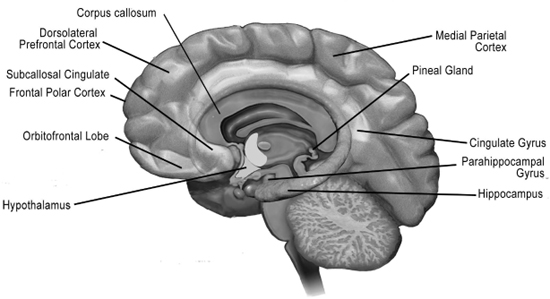

Cortical Consonance and Dissonance Perception

In the last section, we explored how much processing has been accomplished in the cochlea, the brainstem, and the midbrain even before the stimulus reaches the auditory cortex in our very human “new brain.” This processing probably includes transducing the signal into discrete frequencies in the cochlea, and then determining the simplest numerical frequency ratios possible through entrainment of oscillatory neurons in the auditory nerve, including further processing in the inferior colliculus and other organs in the brainstem and the midbrain (Heffernan and Longtin 2009, 97). The connections that we have with our emotional reactions, however, require greater processing power at higher levels of the brain. At some point, our neocortex also gets involved, and it must communicate not only with the auditory pathway, but also with our more primitive limbic system, the parts of our brain responsible for emotions and memory (see Figure 5.9).

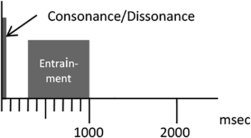

The auditory cortex first sorts out elements such as pitch and roughness within about 100 msec of the ear perceiving the sound (Koelsch and Siebel 2005, 578), with the right front of the auditory cortex activating for dissonance (Foo et al. 2016, 16). Experiments also show that people with lesions in the auditory cortex have difficulty perceiving consonance (Tramo et al. 2003, 144–146).

The extracted features next enter echoic memory, a short-term holding space, primarily in the auditory cortex (depending on who you talk to), that temporarily stores the sound so that the dorsolateral prefrontal cortices can analyze it9 (Sabri et al. 2004, 69; Alain, Woods and Knight 1998, 23). Echoic memory is the auditory form of sensory memory. Memory researcher Nelson Cowan defines sensory memory as “any memory that preserves the characteristics of a particular sensory modality: the way an item looks, sounds, feels, and so on” (Cowan 2015). As an example, iconic memory, the visual form of sensory memory, is limited to about 200 msec.10 We experience iconic memory when we watch children twirl sparklers; if they twirl them fast enough, we see the patterns sustain as circles, loops and so forth. The auditory cortex holds information in echoic memory long enough for it to confer with the rest of the brain and process the sound.

In a 1999 study, Blood and others demonstrated that different parts of the brain activate after the initial auditory processing depending on whether the stimulus is consonant or dissonant.

Dissonance activated the parahippocampal gyrus and the medial part of the parietal cortex (both associated with memory). Consonance activated the orbitofrontal lobe (decision making), the medial subcallosal cingulate (which plays a key role in processing emotions), and the right frontal polar cortex (right behind our forehead, whose function is not well understood). The Blood group’s research also supported the general thinking that the right hemisphere of the brain activates more for emotional processing of music, further demonstrating that the brain processes emotional responses to music separately from more perceptual processes (Blood et al. 1999). In theatre, we often don’t want the audience to be consciously aware of the sound score; we just want to use the score to incite an emotional reaction, so it is good news that emotional and perceptual responses can be separated.

Credit: Blausen.com staff. 2014. “Medical Gallery of Blausen Medical 2014.” WikiJournal of Medicine 1 (2). DOI:10.15347/wjm/2014.010. ISSN 2002-4436. CC-BY-3.0 (https://creativecommons.org/licenses/by/3.0/deed.en). Adapted by Richard K. Thomas.

A decade later in 2009, Dellacherie and many others were able to better identify the key cognitive processes in perceiving consonance and dissonance, and to document the order in which different parts of the brain processed both. Their work confirmed the early involvement of the auditory cortex we have already discussed, especially showing that dissonance is processed early in the right auditory cortex. About 500–1,000 msec later, the orbitofrontal cortex became activated as it analyzed the nature of the sounds. Finally, at a very late 1,200–1,400 msec, our old friend the amygdala activated, indicating a subconscious emotional reaction to the stimulus music. The researchers suspected that the late activation of the amygdala occurred because the test subjects’ attention was consciously focused on a different task, and their perception of the emotional properties of the stimulus was subconscious (Dellacherie et al. 2009, 339).

Finally, a 2015 study by Omigie and others looked very closely at how signals flow between three areas: the auditory cortex, the orbitofrontal cortex and the amygdala. The subjects had been implanted with depth electrodes (placed deep within the brain) for presurgical evaluation of epilepsy. This allowed the researchers to pinpoint where and when interactions took place more precisely than is possible with normal electroencephalography (EEG), which places electrodes on the scalp. The researchers confirmed the general results of Dellacherie and many others who came before, but this group was able to show that the amygdala actually modulates (or modifies) the processes of the orbitofrontal cortex and the auditory cortex, indicating that emotional responses and memory have a strong influence over our conscious perception of consonance and dissonance.

How the different parts of the brain, such as the auditory cortex, the amygdala, and the orbitofrontal lobes, communicate with each other turns out to be quite important. Neural oscillations called brain waves fire between cortical areas to synchronize processes in different parts of the brain. Two frequency bands, theta (4–8 Hz) and alpha (8–13 Hz), were found to be especially active in Omigie’s tests. The theta band is noted for processing emotions. In their study, auditory cortex activity peaked at 100 msec, and then the orbitofrontal cortex and amygdala became increasingly active between 200 and 600 msec, with the amygdala modulating (changing and influencing) both the orbitofrontal and the auditory cortices (Omigie et al. 2015, 4038–4039).

In plain and simple terms, Omigie and others found that it was our old friend the amygdala, the center of emotional reactions, that was modulating the orbitofrontal lobe, the center of decision making, not the other way around: “the amygdala is a critical hub in the musical emotion processing network … one part of this role … might be to influence higher order areas which are involved in the evaluation of a stimulus’ value” (2015, 4044). The researchers found that “the causal flow from the amygdala to the orbitofrontal cortex [was] greater than in the reverse direction.” That is why, in theatre, we can use consonance and dissonance as an emotional stimulant to modulate (change) what our audience thinks about what they hear. If the flow ran mainly in the reverse direction, we would analyze the intervals and use that information to tell us whether we felt happy, sad, aroused and so forth. In acting, we call such a technique “indicating”: an actor indicates an emotion by first thinking about it and then creating an action that points to the emotion, rather than truly feeling the emotion and inciting us to feel it, too. We almost always (never say never!) want to avoid indicating emotion with music. That’s why I avoid describing music as a “communication.” We work hard as composers and sound designers to incite emotion in our audience, not to tell them what emotions they should be feeling. Isn’t it curious that our reaction to this can actually be measured in how the brain processes it?

Consonance and Dissonance in Theatre

The work of Omigie and others brings us to the fundamental relationship between consonance/dissonance and theatre. Let’s start by breaking this relationship down into absurdly simple terms: consonance = resolution; dissonance = conflict. Plays are about conflict, most typically a protagonist in conflict with an antagonist. The arc of a simple play is that the general situation at the beginning of the play is fairly resolved, and then something happens (the inciting incident) to create a conflict between the protagonist and the antagonist. The conflict builds throughout the play until the climax, where, one way or another, the conflict gets resolved. In its simplest musical form, then, a play goes from consonance to dissonance to consonance again, taking the audience on an emotional journey to which we attach the fundamental ideas/story/meaning of the play. In real-world plays that last for hours, such a simple scheme would be hard to sustain. So, in actuality, there is a lot of back and forth between consonance and dissonance in a play, and many shades along the continuum between them. As composers and sound designers, we don’t necessarily track the conflicts tit for tat. We might have much more sophisticated and devious agendas for the emotional journey of our audience.

For example, in the opening scene of Arthur Kopit’s Indians, for which I composed the score at Purdue University in 1992, we started out with a grand and very consonant circus theme at an impossibly slow tempo of maybe 30 bpm, slowly revealing Buffalo Bill 70 feet away, beyond the fire curtain and at the back of the paint shop in the old Loeb Theatre. Over the next minute and a half, we brought Buffalo Bill, tiny due to his distance from the audience, to life, slowly increasing the tempo as he moved from slow motion to live action toward downstage center, never giving up the triumphant consonance of the musical theme. By the time he got downstage, at the final tempo of 130 bpm, he was much larger than life, much grander than humanly possible, and very, very subtly shallow and hollow, isolated in the spotlight with a creeping look of horror in his eyes. The music never made us feel that; quite the contrary, the music was boundlessly happy and triumphant. It was the contrast between the consonance of the music and director Jim O’Connor’s brilliant visualization that created such a powerful feeling of uneasiness in the audience from the very opening of the show. And all with a very happy, consonant sound score. The sound score does not always need to follow the emotional journey of the audience in order to fundamentally affect that journey. The great filmmaker Kurosawa suggested that techniques such as this actually increase the power of the medium:

I changed my thinking about musical accompaniment from the time Hayasaka Fumio began working with me as a composer of my film scores. Up until that time film music was nothing more than accompaniment: for a sad scene there was always sad music. This is the way most people use music, and it is ineffective. [Ebrahimian 87] …

Kurosawa ultimately came to the following perhaps not-so-surprising conclusion:

Cinematic strength derives from the multiplier effect of sound and visual being brought together.

(Kurosawa 1983, 107)

Line/Melody

As our discussion of the relationship between theatre and music develops, it is inevitable that our attention should turn to line. Stravinsky said, “I am beginning to think, in full agreement with the general public, that melody must keep its place at the summit of the hierarchy of elements that make up music … Melody is the most essential of these elements…. What survives every change of system is melody” (Stravinsky 1947, 41–43). The musical line is contained in melody by definition: Webster’s states that line is “a succession of musical notes esp. considered in melodic phrases” (1963, 491). We are fortunate, as in the case of rhythm and color, to be sharing, from the beginning, a coincidental vocabulary with the visual artists.

Unfortunately, defining melody as “any succession of single tones” (Goetschius 1970, 64) is simply not adequate if we are to include the “mystique” of melody. Since the musical world is so sharply divided over whether a melody is achieved by intuition or by logical manipulation, a more precise definition is impossible. A better definition, first postulated by F. Busoni, may, however, include a “list of ingredients”:

A row of repeated ascending and descending intervals which, organized and moving rhythmically, contains in itself a latent harmony and gives back a certain atmosphere of feeling; which can and does exist independent of text for expression and independent of accompanying voices for form, and in the performance of which the choice of pitch and of instruments exercises no change over its essence.

(Holst 1966, 13)

The smallest element of the melodic line is the single tone. I have discussed these single tones individually in the section on color. It is important to make the distinction that melody is only concerned with the relative pitch of the perceived color, and exists completely independently from the timbre of the tone-producing device.

Western intervals divide the octave into a series of well-defined steps that proceed in neurologically well-defined ways. This division fairly meticulously follows the brain’s perception, in terms of increasingly complex ratios, from consonance to dissonance. Perfect consonances include the unison (1:1), then the octave (2:1), then the fifth (3:2) and finally the fourth (4:3). Imperfect consonances come next, starting with the major third (5:4), then the major sixth (5:3), then the minor third (6:5), and finally the minor sixth (8:5). These progress to increasing dissonances: the major second (9:8), the major seventh (15:8), the minor second (16:15), the minor seventh (16:9) and finally the tritone (45:32) (LoPresto 2009, 146). It is interesting to note, however, that of the 1,500 possible pitches that can be distinguished by the average human at medium intensities, Western music uses only about 100 (Lundin 1967, 68). Seashore believed that the reason for this was that “our present half-tone step is as small a step as the average of an unselected population can hear with reasonable assurance, enjoy, and reproduce in the flow of melody and harmony in music” (Seashore 1967, 128).
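One simple way to put a number on “increasing complexity of ratios” is to multiply each ratio’s numerator by its denominator (the base-2 logarithm of this product is sometimes called Tenney height). The Python sketch below ranks the intervals listed above by that product. The choice of measure is an assumption for illustration, not necessarily the one behind LoPresto’s ordering, and the minor seventh is taken as 16:9.

```python
from fractions import Fraction

# Just-intonation ratios for the intervals named in the text (minor seventh assumed 16:9)
INTERVALS = {
    "unison": Fraction(1, 1),
    "octave": Fraction(2, 1),
    "perfect fifth": Fraction(3, 2),
    "perfect fourth": Fraction(4, 3),
    "major third": Fraction(5, 4),
    "major sixth": Fraction(5, 3),
    "minor third": Fraction(6, 5),
    "minor sixth": Fraction(8, 5),
    "major second": Fraction(9, 8),
    "major seventh": Fraction(15, 8),
    "minor second": Fraction(16, 15),
    "minor seventh": Fraction(16, 9),
    "tritone": Fraction(45, 32),
}


def complexity(ratio: Fraction) -> int:
    """Numerator times denominator: a larger product means a more complex ratio."""
    return ratio.numerator * ratio.denominator


def rank_by_complexity(intervals):
    """Interval names sorted from simplest to most complex ratio."""
    return sorted(intervals, key=lambda name: complexity(intervals[name]))


if __name__ == "__main__":
    for name in rank_by_complexity(INTERVALS):
        r = INTERVALS[name]
        print(f"{name:15s} {r.numerator}:{r.denominator}  complexity={complexity(r)}")
```

This measure puts the major sixth (15) just ahead of the major third (20), and the minor seventh (144) just ahead of the minor second (240), so its ranking is close to, but not identical with, the traditional order given above.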

It is equally important for us to not consider melody simply in terms of Western intervals. In some cultures, you can have 70 or more points marked off within an octave. In all cultures you still have the same basic relationship, moving on a scale from consonant to dissonant, from calm to arousing. The general physiological sensations produced in the brain work on all sound, not just traditional Western music intervals. The more that frequencies combine in simple numeric ratios, the greater the perceived emotional stability. Tension is introduced as fundamental frequencies combine that are close to each other, or generate harmonics that are close to one another, and loud enough to factor into the perceived timbre. A fricative consonant is more dissonant than the simpler harmonic structure of the vowel “ooh.”

Modern composition and sound design provide us with endless opportunities to explore consonance and dissonance, whether in the well-established, culturally acquired forms of Western music, in the scales of other cultures, or, more primitively, in simply exploring the range of pitch and harmonic relationships that we discover on the continuum between consonance and dissonance. We don’t normally associate consonance and dissonance with naturally occurring sounds or human speech, but we should, because it’s important in understanding how we combine music and speech in theatre. The screech of a crow is naturally more dissonant than the purer tones of a robin or a sparrow.

We must also consider prosody as a type of consonance and dissonance. A mother attempting to soothe her child will use more consonant tones, with a simpler harmonic structure, than a person shrieking in anger at the checkout counter, whose tonal structure is dissonant. We generally determine the gender and size of a person subjectively through our perception of the fundamental frequency of their voice. We distinguish vowels by the relative positions of the first and second formants (the resonant frequency bands) of vocalizations (Giraud and Poeppel 2012, 228–229). And of course, we humans can create all sorts of inharmonic frequencies with our voices when we get agitated. Consonance and dissonance apply as much to the prosody of an actor’s voice as they do to the music that orchestrates their speech melody.

Beyond the inherent consonance and dissonance of complex sounds, it is only when we associate these single tones together that line is formed, just as a visual line cannot be formed by a single point. In theatre, we have melodies and we have tunes. I learned this from Imogen Holst, who wrote a whole book about the latter, called Tune. Melodies don’t exist well without their orchestrations; they fulfill and reveal themselves in their accompaniment, so we will talk about them more in the next section, when we discuss harmony. Tunes, on the other hand, are self-contained; after hearing one a few times, we can hum or sing it on its own without any accompaniment, and it still captures us. We refer to the part of the tune that deliciously sticks in our memory as a “hook.” In popular music it’s most often the chorus or a part of a chorus. Since hooks have a lot to do with memory, we’ll talk much more about them in Chapter 10 when we talk about memory. For the time being, let me just attempt to put this little hook into your memory: tunes have been very important to me in composing for theatre, because it is so easy to attach to them important ideas and meanings of the play that the audience can then carry out of the theatre.

Harmony

For me, creating melodies and then orchestrating them to score scenes is much more of a craft than finding a tune that metaphysically transcends “any succession of single tones.” I learned this from Jack Smalley, or more properly from his book Composing Music for Film (Smalley 2005). Jack Smalley is a prolific composer of music for film and television. Unlike this author, Smalley is a man of few words, but he chooses them carefully and makes every one count. He wrote a short, amazingly concise book that provides a wealth of precise techniques for composing and orchestrating music for film. You have to order the book directly from Mr. Smalley, but it’s well worth it, and I highly recommend it. The most important thing he taught me was how to craft a score, and not to worry about inspiration. Smalley starts from the development of a theme: not a tune, but the smallest group of notes you can form into an identifiable, usable shape, typically one, two or three measures long. One then develops this theme systematically into a complete movie score. As I’ve mentioned before, the opportunities to create and use a memorable tune in theatre are somewhat rare. The opportunities for crafting a cohesive sound score in theatre are almost endless.

Line (melody) usually plays a somewhat diminished role in theatre scoring due to the simple fact that the actors, playwright and director have already crafted the lead melodies using the prosody of dialogue. Our job as composers and sound designers then becomes to orchestrate those melodies, and there’s plenty we can do with consonance and dissonance to incite emotions in our audience beyond melody, and—significantly differing from film—in our actors. In film the score happens after the actors have “left the building,” so to speak. In theatre, the actors also experience the underscores we create, a point that theatre composers must never forget. It is a much more intimate experience composing music for live theatre, because we share the character with the actor in a much more concrete way. Get the scoring right and the actor will adore you. Mess it up and the actor may try to get you fired.

We don’t necessarily need musical instruments to score the actor’s prosody either. The room tone, that is, a “silent” recording of the ambience in a room (which is not really silent at all!), can be manipulated for consonance and dissonance over long periods of time. I used such a technique with the (synthesized) wind in the cave for Joel Fink’s production of Edgar Allan Poe’s The Cask of Amontillado in Chicago, manipulating it from relative consonance when Montresor and Fortunato first entered to stronger dissonance as the scene turned ugly and Montresor walled up Fortunato in the niche of the wine cellar. I almost never let my ambiences just sit in a scene. I actually learned that trick from my old partner Brad Garton, who taught me never to let sustained tones sit unchanged in music synthesis: always put some sort of subtle movement into them; otherwise, their constancy will draw attention to itself. And as long as we are putting some subtle movement in our sustained tones, why not take the opportunity to very, very subtly manipulate the emotional mood in the scene?

The most common compositional technique using traditional music instruments is the pad. We first met pads back in Chapter 2, which should be just as good an indication as any that they reach the most primitive parts of our brains. A pad is simply a sustained tone, chord, or complex texture that can be sustained for as long as one desires in a scene. Pads are insanely popular, and quite frankly, hopelessly overused, because they work so well. If you want to create tension in a horror scene what do you do? You take two violins and you put them a minor second apart, right? And then you just sustain that dissonance to habituate the audience in an endlessly tense suspension until whatever startle effect you need. Everybody knows it and everybody’s done it, and the thing is, it works, without us thinking about it. I distinctly remember using it in a world premiere of Ross Maxwell’s play His Occupation, and then mischievously watching the audience physically jump each night at the startle moment. We don’t have to intellectually think, “oh my, here comes the monster jumping out of the closet!” You don’t have to know anything about music in order for it to work. It is quite easy to manipulate emotions using pads; one simply has to migrate the tonal clusters from increasing consonance to increasing dissonance based on the simple numerical ratios discussed earlier. Along the way, a wide range of emotions can be achieved and important ideas attached without ever pulling focus from the lead melodies of the actors. More sophisticated composers, however, will put great effort into their pads, burying extremely subtle but powerful rhythms and textures within them that nevertheless appear at first listen unchanging and habituating.
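The migration of a pad from consonance toward dissonance can be sketched as a simple table of frequencies. The Python example below assumes a two-voice pad over a fixed 220 Hz root and a hypothetical sequence of just-intonation intervals drawn from this chapter; in practice a composer would crossfade or glide between these upper-voice frequencies over the length of a scene. The printed difference frequency between the two voices is included only as a rough cue to beating, not as a model of roughness.

```python
from fractions import Fraction

ROOT_HZ = 220.0  # assumed pad root (A3); any root works the same way

# A hypothetical path from consonance toward dissonance, using ratios from this chapter
PAD_PATH = [
    ("perfect fifth", Fraction(3, 2)),
    ("perfect fourth", Fraction(4, 3)),
    ("major third", Fraction(5, 4)),
    ("minor third", Fraction(6, 5)),
    ("major second", Fraction(9, 8)),
    ("minor second", Fraction(16, 15)),
]


def pad_steps(root_hz, path):
    """For each step, return (interval name, upper-voice Hz, difference frequency in Hz)."""
    steps = []
    for name, ratio in path:
        upper = root_hz * float(ratio)
        steps.append((name, upper, upper - root_hz))  # difference between the two voices
    return steps


if __name__ == "__main__":
    for name, upper, diff in pad_steps(ROOT_HZ, PAD_PATH):
        print(f"{name:15s} {ROOT_HZ:.1f} Hz + {upper:.2f} Hz (difference {diff:.2f} Hz)")
```

With a 220 Hz root, the final minor second puts the upper voice at about 234.67 Hz, only about 14.67 Hz above the root.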

It might not be going too far to suggest that most underscoring either starts with a pad, emphasizing consonance and dissonance, a pulse, emphasizing rhythm, or both. If one can capture the emotion in a scene with one or both of those simple tools, then the challenge is to simply not draw attention with all of the harmonic decorations one puts on top to develop emotional nuance (unless that’s what needs to be done, of course). And, of course, to avoid the banal contrivances that also draw attention to themselves for their hokiness.

As we discussed in the chapter on pulse, consonance and dissonance can also be used to effect transitions between scenes: a transition can resolve a scene by tending toward consonance, or leave it unresolved by tending toward dissonance. In the exposition of a play, everything on the surface may seem to be right between the main characters, but the underscoring tells us that there is a lot more going on just below the surface. Shakespeare provided just such an opportunity in the opening line of Twelfth Night: “If music be the food of love, play on,” which is followed within a very few lines by the indication, “Enough; no more: ’Tis not so sweet now as it was before.” In the horror scene example above, the monster gets killed in the end, and we expect the scene to resolve musically, but it doesn’t. We immediately know there will be a sequel.

Conclusion: Consonance and Dissonance and Time

In this chapter, we traced the evolution of our human species to the discovery of the first undisputed musical instruments, which somewhat coincided with the discovery of visual art, and an emerging cognition of a world beyond the simple day to day living of our Homo sapiens ancestors, which perhaps raised the question, “Where does life come from, and where does it go when it ends here?” This led to an increased use of ritual to explore such questions, and we argue that ritual proceeds from a musical base that helps transport us into that other world. With music as a fully evolved human activity that now included the ability to create discrete pitches on primitive instruments such as bone flutes, we examined how the brain evolved to sort those pitches on a scale from consonant and calm to dissonant and aroused. We then examined how we can use those qualities to create sound scores for theatre.

Subtly throughout this chapter, however, we have returned to our discussion about how we perceive time, and how we use time to modulate the emotional states of our audience. Consonance and dissonance represent some of the shortest time spans we humans perceive in sonic stimuli. The time intervals we create in consonance are much shorter than they are in pulse and entrainment, which is the next major temporal region we use to manipulate the sense of time and emotional states of our audience.

We hold both of these in our echoic, sensory memory, a significant evolutionary development that allows us humans to consciously examine the immediate past when need be, perhaps referring to the amygdala to discover if there is anything in our emotional memory that triggers a strong emotional reaction to the auditory stimulus.

In the next chapter, we will turn our attention to the matter of attention itself: what grabs and holds our attention, how we arouse our audience, how we manipulate their conscious perception, and the rewards we provide them for going along on the journey. We will explore ritual, one of the first activities music was put to that bears a strong resemblance to theatre, and how we use music in ritual to grab hold of the conscious experience of an audience and transport them into the dreamlike worlds of our dramatic imagination.

Ten Questions

- Name three human activities that developed 50,000 to 100,000 years ago that signaled a dawning consciousness in humans that would forever separate them from their ape ancestors.

- What are Donald’s three major cognitive breakthroughs, and why is the third one so important—so critically important—in advancing human cognition?

- Provide four reasons why researchers think that flutes weren’t invented in the German caves but were brought by the people who settled in the area, and explain how these reasons contribute to a general conclusion that language, music, ritual and visual art all developed alongside each other.

- Why do researchers think that musical instruments and visual art appear so often in prehistoric caves?

- Why does the brain perceive the fifth to be consonant and the minor second to be dissonant?

- What is roughness, where does it occur, and what is the sensation with which it is associated?

- What does relative periodicity have to do with entrainment?

- Describe three main areas of the brain that process consonance and dissonance for emotion, and describe the order in which they process sound stimuli.

- How do consonance and dissonance specifically relate to the typical plot structure of a play?

- Why is it so important to learn the craft of composition in theatre?

Things to Share

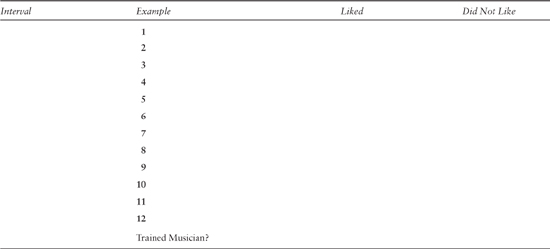

- An in-class experiment: the instructor draws the names of each of the 12 intervals of the chromatic scale out of a hat, and plays each interval in succession, always using Middle C as the lowest tone. After the instructor plays each interval, students record whether they liked or didn’t like the sound in the spreadsheet provided.

Student responses are tabulated (averaged) during a break, and then compared against the simple numeric ratios to find out how well the class correlated consonance and dissonance on a continuum with the expected results.

See (LoPresto 2009) for more information on this experiment.

- Find a movie that has won an Academy Award for sound editing or music composition. Pick a movie that you love and wouldn’t mind seeing again. Now get hold of the movie (perhaps buy it, because you can use it for other projects in this class; rent it from Netflix; or even check it out from your local library). Watch it with an ear toward a composer consciously using consonance and dissonance, and especially for examples in which they transition from one into the other. Make a note of the time in the movie where the transition occurs so we can cue it up in class and you can share it with us. Pay careful attention to how the sound artists manipulate changes in the consonance and dissonance of the sound score to manipulate the sense of calm and arousal in the listener. Be prepared to describe for us how you think the sound team is manipulating us. Bring at least two examples with you in case someone uses your first example.

- Having explored the wonderful world of rhythm (rhythm, beat, pulse, tempo, meter, phrasing, duration), now it’s time for you to explore these concepts in composition. Compose or design a 1:00–1:30 piece that explores rhythm in all its glory: expressive or abrupt tempo changes, rhythmic nuance, multiple and perhaps even compound meters, a variety of approaches to phrasing your rhythms and many layers of durations—pads, whole, half, quarter, eighth and sixteenth notes, and so forth. If you don’t consider yourself a composer, consider using samples and exploring a drum machine app of some sort. Stick to a relatively small color palette, so that your rhythm will predominate. As always, play and experiment, but still work to create a cohesive composition.
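For the in-class interval experiment described above, the drawing and tabulation steps can be sketched in Python. Everything here is an assumption for illustration: the 261.63 Hz middle C, the just ratios (including 16:9 for the minor seventh and 45:32 for the tritone), numerator × denominator as the measure of ratio complexity, and Spearman’s rank correlation as the comparison; LoPresto’s own procedure may differ. All function names are hypothetical.

```python
import random
from fractions import Fraction
from statistics import mean

MIDDLE_C_HZ = 261.63  # approximate equal-tempered middle C (C4)

# Semitones above middle C, plus an assumed just-intonation ratio for each interval
INTERVALS = {
    "minor second": (1, Fraction(16, 15)),
    "major second": (2, Fraction(9, 8)),
    "minor third": (3, Fraction(6, 5)),
    "major third": (4, Fraction(5, 4)),
    "perfect fourth": (5, Fraction(4, 3)),
    "tritone": (6, Fraction(45, 32)),
    "perfect fifth": (7, Fraction(3, 2)),
    "minor sixth": (8, Fraction(8, 5)),
    "major sixth": (9, Fraction(5, 3)),
    "minor seventh": (10, Fraction(16, 9)),
    "major seventh": (11, Fraction(15, 8)),
    "octave": (12, Fraction(2, 1)),
}


def draw_order(seed=None):
    """Shuffle the interval names, like drawing them out of a hat."""
    names = list(INTERVALS)
    random.Random(seed).shuffle(names)
    return names


def upper_frequency(name):
    """Equal-tempered frequency (Hz) of the upper tone, with middle C as the lowest tone."""
    semitones, _ = INTERVALS[name]
    return MIDDLE_C_HZ * 2 ** (semitones / 12)


def ranks(values):
    """Rank values 1..n (smallest = 1); tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman's rank correlation: the Pearson correlation of the two rank lists."""
    rx, ry = ranks(xs), ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when every value is identical")
    return cov / (sx * sy)


def compare_with_ratios(avg_liking):
    """Correlate class liking with ratio complexity (numerator * denominator).

    `avg_liking` maps interval name -> class average (e.g. 1 = liked, 0 = didn't).
    A strongly negative result means the class preferred the simpler ratios.
    """
    names = list(avg_liking)
    complexity = [INTERVALS[n][1].numerator * INTERVALS[n][1].denominator for n in names]
    return spearman([avg_liking[n] for n in names], complexity)


if __name__ == "__main__":
    for name in draw_order():
        print(f"{name:15s} C4 {MIDDLE_C_HZ:.2f} Hz + {upper_frequency(name):.2f} Hz")
```

After the break, build a dictionary of the class averages and pass it to `compare_with_ratios`; a value near -1 would suggest the class tracked the simple-ratio ordering closely, while a value near 0 would suggest little relationship.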

Notes

1Technically, the “ice age” is a glacial period called the Würm that occurred between 115,000 and 11,700 years ago. It is part of a much larger ice age that dates back 2,588,000 years. See Clayton et al. (2006).

2Note that earlier specimens have been discovered, one from the femur of a cave bear found in a cave in western Slovenia dated between 82,000 and 67,000 years ago. However, archaeologists dispute the authenticity of these earlier flutes (d’Errico et al. 1998).

3For those of you with calculators blazing, thinking, “Hey, the numbers don’t add up!” Relax. We’ve rounded, of course, but much more importantly, remember that the basilar membrane only discriminates frequencies that are greater than 0.9%–1.2% apart. Can you tell the difference between a 1,000 Hz tone and a 1,001 Hz tone? Probably not, at least most people can’t. Human hearing is highly subjective, and it’s always a good idea to know what our tolerances are before getting too wrapped up in endless digits beyond the decimal points.

4. 31.25 msec (32 Hz) for a 480 Hz tone and the minor second above it, 512 Hz, if you must know! And since you’re reading this, also note that the next interspike interval would occur at 62.5 msec (16 Hz), below the threshold of human frequency perception, which might also help explain why this interval is not perceived as consonant.

5. This assumes the difference frequencies do not share a simple numerical ratio with the stimulus frequencies. For example, a 100 Hz tone and a 150 Hz tone would not sound rough, because the difference frequency, 50 Hz, divides evenly into both tones (100 = 2 × 50 and 150 = 3 × 50), reflecting the simple 3:2 relationship between them.

6. The left pulvinar in the thalamus may also help an individual focus attention on the dissonances presented to the inferior colliculus.

7. Roughness, according to Tramo, can only occur in the vertical, or simultaneous, dimension (Tramo et al. 2003, 143–144).

8. Note that Stolzenburg cites a different study suggesting that the “just noticeable difference” increases to about 3.6% at 100 Hz and decreases to 0.7% at higher frequencies (Stolzenburg 2015, 222).

9. Echoic memory may store sound for anywhere from a few hundred milliseconds to three or four seconds, or even as long as 30 seconds, also depending on whom you talk to! See Cowan (1997, 27).

10. Cowan suggested that auditory and visual sensory memory do not differ in how long they persist: “The conclusion was that the persistence of sensory storage does not appear to differ among modalities” (1997, 27). More about this in Chapter 10, when we explore memory.
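
If you’d like to check the arithmetic in note 3 yourself, here is a minimal Python sketch. It expresses the gap between two frequencies as a percentage of the lower one and compares that figure with the 0.9%–1.2% discrimination range cited in the note. The function names and the choice of 0.9% as the default cutoff are our own illustrative assumptions. This is a back-of-the-envelope check, not a model of the basilar membrane.

```python
def percent_difference(f1_hz: float, f2_hz: float) -> float:
    """Gap between two frequencies, as a percentage of the lower one."""
    low, high = sorted((f1_hz, f2_hz))
    return (high - low) * 100.0 / low


def likely_distinguishable(f1_hz: float, f2_hz: float, threshold_pct: float = 0.9) -> bool:
    """Illustrative rule of thumb (an assumption, not a hearing model):
    treat two tones as distinguishable only if they differ by more than
    threshold_pct percent. The 0.9 default is the low end of note 3's range."""
    return percent_difference(f1_hz, f2_hz) > threshold_pct


print(percent_difference(1000, 1001))      # 0.1
print(likely_distinguishable(1000, 1001))  # False
print(likely_distinguishable(1000, 1015))  # True
```

With the default cutoff, 1,000 Hz and 1,001 Hz (0.1% apart) come out as indistinguishable. By contrast, 1,000 Hz and 1,015 Hz (1.5% apart) clear the threshold.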
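
The figures in note 4 follow from a simple idea. If two tones have whole-number frequencies, the pattern they form together repeats at the rate given by their greatest common divisor, so the shared period is one divided by that rate. The Python sketch below assumes integer frequencies in hertz and treats that shared period as the interval the note refers to. It reproduces the 32 Hz and 16 Hz rates quoted in note 4, with exact periods of 31.25 and 62.5 msec. It is an arithmetic illustration only, not a model of auditory nerve firing.

```python
from math import gcd


def common_period_ms(f1_hz: int, f2_hz: int) -> tuple[int, float]:
    """Return (repetition rate in Hz, period in msec) for two whole-number
    frequencies. The combined waveform repeats gcd(f1, f2) times per second,
    so one full cycle lasts 1000 / gcd milliseconds."""
    rate_hz = gcd(f1_hz, f2_hz)
    return rate_hz, 1000.0 / rate_hz


rate, period = common_period_ms(480, 512)
print(rate, period)  # 32 31.25

# One more full period later the pattern lines up again, at twice the interval.
next_interval = 2 * period
print(next_interval, 1000.0 / next_interval)  # 62.5 16.0
```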
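
You can verify the example in note 5 by computing the difference frequency and checking whether it divides evenly into both tones. The Python sketch below does that and uses the standard library’s `fractions.Fraction` to reduce the frequency ratio. The function name and the divides-evenly test are our own illustration of the note’s reasoning, and they assume whole-number frequencies. The sketch does not itself predict how rough a pair will sound.

```python
from fractions import Fraction


def difference_tone_check(f_low_hz: int, f_high_hz: int) -> tuple[int, Fraction, bool]:
    """Return (difference frequency, reduced frequency ratio, and whether the
    difference frequency divides evenly into both tones).

    Illustrative only; assumes whole-number frequencies in hertz. When the
    difference divides both tones, it is also their greatest common divisor,
    i.e. the implied common fundamental.
    """
    if f_high_hz <= f_low_hz:
        raise ValueError("f_high_hz must be greater than f_low_hz")
    diff_hz = f_high_hz - f_low_hz
    ratio = Fraction(f_high_hz, f_low_hz)  # automatically reduced, e.g. 150/100 -> 3/2
    divides_both = f_low_hz % diff_hz == 0 and f_high_hz % diff_hz == 0
    return diff_hz, ratio, divides_both


print(difference_tone_check(100, 150))  # (50, Fraction(3, 2), True)
print(difference_tone_check(440, 467))  # (27, Fraction(467, 440), False)
```

The first call reproduces note 5’s 100 Hz and 150 Hz example. In the second, the 27 Hz difference divides neither tone, which is the kind of pair the note’s opening assumption describes.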

Bibliography

Alain, Claude, David L. Woods, and Robert T. Knight. 1998. “A Distributed Cortical Network for Auditory Sensory Memory in Humans.” Brain Research 812 (1): 23–27.

Benazzi, Stefano, Katerina Douka, Cinzia Fornai, Catherine Bauer, Ottmar Kullmer, Jiří Svoboda, Ildikó Pap, et al. 2011. “Early Dispersal of Modern Humans in Europe and Implications for Neanderthal Behaviour.” Nature 479 (7374): 525–529.

Blood, Anne J., Robert J. Zatorre, Patrick Bermudez, and Alan C. Evans. 1999. “Emotional Responses to Pleasant and Unpleasant Music Correlate With Activity in Paralimbic Brain Regions.” Nature Neuroscience 2 (4): 382–387.

Brahic, Catherine. 2014. “Shell ‘Art’ Made 300,000 Years Before Humans Evolved.” New Scientist, December 2014.

Carroll, Sean. 2011. “Ten Things Everyone Should Know About Time.” September 1. Accessed February 15, 2016. http://blogs.discovermagazine.com/cosmicvariance/2011/09/01/ten-things-everyone-should-know-about-time/#.VsI99cdTLct.

Clayton, Lee, John W. Attig, David M. Mickelson, Mark D. Johnson, and Kent M. Syverson. 2006. “Glaciation of Wisconsin.” UW Extension. Accessed July 26, 2017. http://www.geology.wisc.edu/~davem/abstracts/06-1.pdf.

Conard, Nicholas J., Maria Malina, and Susanne C. Münzel. 2009. “New Flutes Document the Earliest Musical Tradition in Southwestern Germany.” Nature 460 (7256): 737–740.

Cowan, Nelson. 2015. “Things We See, Hear, Feel or Somehow Sense.” In Mechanisms of Sensory Working Memory, edited by Pierre Jolicoeur, Christine Lefebvre, and Julio Martinez-Trujillo, 5–22. New York: Academic Press/Elsevier.

D’Errico, Francesco, Christopher Henshilwood, Graeme Lawson, Marian Vanhaeren, Anne-Marie Tillier, Marie Soressi, Frédérique Bresson, et al. 2003. “Archaeological Evidence for the Emergence of Language, Symbolism, and Music—An Alternative Multidisciplinary Perspective.” Journal of World Prehistory 17 (1): 1–70.

D’Errico, Francesco, Paola Villa, Ana C. Pinto Llona, and Rosa Ruiz Idarraga. 1998. “A Middle Palaeolithic Origin of Music? Using Cave-Bear Bone Accumulations to Assess the Divje Babe I Bone ‘Flute’.” Antiquity 72 (275): 65–79.

Dellacherie, Delphine, Micha Pfeuty, Dominique Hasboun, Julien Lefèvre, Laurent Hugueville, Denis P. Schwartz, Michel Baulac, Claude Adam, and Séverine Samson. 2009. “The Birth of Musical Emotion: A Depth Electrode Case Study in a Human Subject with Epilepsy.” Annals of the New York Academy of Sciences 1169: 336–341.

Donald, Merlin. 1993a. “Human Cognitive Evolution.” Social Research 60 (1): 143–170.

———. 1993b. “Précis of Origins of the Modern Mind.” Behavioral and Brain Sciences 16 (4): 737–791.

Foo, Francine, David King-Stephens, Peter Weber, Kenneth Laxer, Josef Parvizi, and Robert T. Knight. 2016. “Differential Processing of Consonance and Dissonance Within the Human Superior Temporal Gyrus.” Frontiers in Human Neuroscience 10: 1–12.

Fritz, Thomas, Sebastian Jentschke, Nathalie Gosselin, Daniela Sammler, Isabelle Peretz, Robert Turner, Angela D. Friederici, and Stefan Koelsch. 2009. “Universal Recognition of Three Basic Emotions in Music.” Current Biology 19 (7): 573–576.

Fritz, Thomas Hans, Wiske Renders, Karsten Müller, Paul Schmude, Marc Leman, Robert Turner, and Arno Villringer. 2013. “Anatomical Differences in the Human Inferior Colliculus Relate to the Perceived Valence of Musical Consonance and Dissonance.” European Journal of Neuroscience 38 (1): 3099–3105.

Giraud, Anne-Lise, and David Poeppel. 2012. “Speech Perception From a Neurophysiological Perspective.” In The Human Auditory Cortex, edited by David Poeppel, Tobias Overath, Arthur N. Popper, and Richard R. Fay, 225–260. New York: Springer.

Goetschius, Percy. 1970. The Structure of Music. Westport, CT: Greenwood Press.

Heffernan, B., and A. Longtin. 2009. “Pulse-Coupled Neuron Models as Investigative Tools for Musical Consonance.” Journal of Neuroscience Methods 183 (1): 95–106.

Helmholtz, Hermann L.F. 1877. On the Sensations of Tone as a Physiological Basis for the Theory of Music. 4th Edition. London: Longmans, Green.

Henshilwood, Christopher S., Francesco d’Errico, and Ian Watts. 2009. “Engraved Ochres From the Middle Stone Age Levels at Blombos Cave, South Africa.” Journal of Human Evolution 57 (1): 27–47.

Henshilwood, Christopher S., Francesco d’Errico, Karen L. van Niekerk, Yvan Coquinot, Zenobia Jacobs, Stein-Erik Lauritzen, Michel Menu, and Renata García-Moreno. 2016. “A 100,000-Year-Old Ochre-Processing Workshop at Blombos Cave, South Africa.” Science 334 (6053): 219–222.

Higham, Thomas, Laura Basell, Roger Jacobi, Rachel Wood, Christopher Bronk Ramsey, and Nicholas J. Conard. 2012. “Testing Models for the Beginnings of the Aurignacian and the Advent of Figurative Art and Music: The Radiocarbon Chronology of Geißenklösterle.” Journal of Human Evolution 62 (6): 664–676.

Higham, Tom, Tim Compton, Chris Stringer, Roger Jacobi, Beth Shapiro, Erik Trinkaus, Barry Chandler, et al. 2011. “The Earliest Evidence for Anatomically Modern Humans in Northwestern Europe.” Nature 479 (7374): 521–524.

Holst, Imogen. 1966. Tune. New York: October House.

Koelsch, Stefan, and Walter A. Siebel. 2005. “Towards a Neural Basis of Music Perception.” Trends in Cognitive Sciences 9 (12): 578–584.

Kurosawa, Akira. 1983. Something Like an Autobiography. New York: Vintage Books Edition.

LoPrestor, Michael C. 2009. “Experimenting With Consonance and Dissonance.” Physics Education 44 (2): 145–150.

Lundin, Robert W. 1967. An Objective Psychology of Music. Westport, CT: Ronald Press.

Morley, Ian. 2009. “Ritual and Music: Parallels and Practice, and the Palaeolithic.” In Becoming Human: Innovation in Prehistoric Material and Spiritual Cultures, edited by Colin Renfrew and Ian Morley, 159–175. Cambridge: Cambridge University Press.

National Geographic. 2016. “The Human Journey: Migration Routes.” Accessed May 2, 2016. https://genographic.nationalgeographic.com/human-journey/.

Omigie, Diana, Delphine Dellacherie, Dominique Hasboun, Nathalie George, Sylvain Clément, Michel Baulac, Claude Adam, and Séverine Samson. 2015. “An Intracranial EEG Study of the Neural Dynamics of Musical Valence Processing.” Cerebral Cortex 25 (11): 4038–4047.

Roederer, Juan G. 2008. The Physics and Psychophysics of Music. 4th Edition. New York: Springer Science+Business Media, LLC.

Seashore, Carl. 1967. The Psychology of Music. Mineola, NY: Dover.

Sebri, Merav, David A. Kareken, Mario Dzemidzic, Mark J. Lowe, and Robert D. Melara. 2004. “Neural Correlates of Auditory Sensory Memory and Automatic Change Detection.” NeuroImage 21 (1): 69–74.

Smalley, Jack. 2005. Composing Music for Film. 3rd Edition. JackSmalley.com: JPS.

Stolzenburg, Frieder. 2015. “Harmony Perception by Periodicity Detection.” Journal of Mathematics and Music 9 (3): 215–238.

Stravinsky, Igor. 1947. Poetics of Music. Translated by Arthur Knodel and Ingolf Dahl. New York: Vintage Books.

Tramo, Mark Jude, Peter A. Cariani, Bertrand Delgutte, and Louis D. Braida. 2003. “Neurobiology of Harmony Perception.” In The Cognitive Neuroscience of Music, edited by Isabelle Peretz and Robert J. Zatorre, 128–151. Oxford: Oxford University Press.

Webster’s Seventh New Collegiate Dictionary. 1963. Springfield, MA: G&C Merriam.