Chapter 2

Let There Be a Big Bang

Introduction: If a Tree Falls in the Universe …

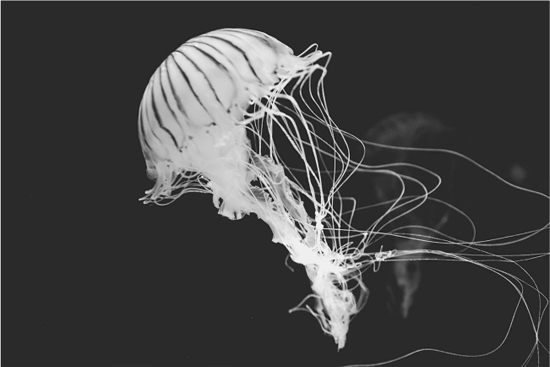

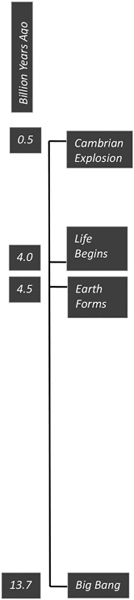

About 13.7 billion years ago, the universe exploded into existence with the Big Bang. As far as we know, there was very little music and theatre at that particular moment. There is quite a bit of controversy about what came before the Big Bang. One line of thought says, “nothing,” which is hard to wrap our brains around. But we do call it the “dawn of time.” Before the Big Bang, there was no time. There was no matter. Or if there was something, it is irrelevant, because it cannot possibly have any effect on the present moment. This monumental event set into motion everything that came after it, not from a deterministic point of view in which every future moment is inevitable, but from the vantage point that all the rules of the universe, including how sound and music work, proceed from this singularity, this singular moment in time.

Now, the Bible also says in Genesis 1:3, “And God said, let there be light: and there was light.” Notwithstanding that the surrounding verses paint a decidedly different picture of creation, the Bible has a good point. The Big Bang must have been quite a spectacular burst of light, but could it have made a sound? In my very first Introduction to Sound Design class in the spring of 1977, I offered two distinct definitions of the word “sound.” I got the first out of David Collison’s 1976 book, Stage Sound. At the time, it was really the only book devoted to sound for the theatre other than Harold Burris-Meyer’s 1959 book, Sound in the Theatre (Burris-Meyer 1959). Collison’s definition states: “sound is essentially the movement of air in the form of pressure waves radiating from the source at a speed of about 1,130 feet (350 meters) per second” (Collison 1976, 10). The second definition came from Howard Tremaine’s Audio Cyclopedia: (sound) “is a wave motion propagated in an elastic medium, traveling in both transverse and longitudinal directions, producing an auditory sensation in the ear by the change of pressure at the ear” (Tremaine 1969, 11). Besides the obvious difference in technical specificity between the two definitions, I couldn’t help but notice a critically important distinction: one definition insisted that it’s not a sound if no one hears it; the other, not so much. A light bulb went on as I realized that the answer to the age-old question of whether a tree falling in the woods makes a sound when no one is around to hear it depends on how you define sound! Even in my first classes, I became aware of how important definitions are in making one’s case. This may seem like a small thing now, but we will return to its importance in Chapter 4, when we define music, and in Chapter 12, as we bring together many of the ideas of this book into what I hope is a consistently argued and well-supported thesis about the origins of theatre in music.

Leaving aside the “if a tree falls in the woods, and no one is around to hear it, does it make a sound?” cocktail party chestnut, we are still left to deal with any definition of sound that describes it as a vibration that propagates through a medium, such as air. While the singularity that produced the Big Bang was infinitely dense, the moments that followed produced a great deal of empty space. And sound doesn’t travel in a vacuum. So it’s debatable how big a “bang” there actually was. As a pragmatic reality, while the potential for sound had existed throughout the universe since the dawn of time, it would not be until about 9 billion years later, when the earth formed, that sound would begin to have any practical significance.

Now this might seem like nit-picking, but in actuality it reveals an incredibly significant difference between light and sound that has tremendous implications for this book and for the art we create. Light and sound provide two of the most important stimuli to our senses that help us apprehend the external world. In theatre, they are almost solely responsible for providing the sensory input that informs our aesthetic. If we consider theatre to be an art form in which we seek to divine the great secrets of the universe, then we must accept that we will be doing this primarily through these two senses. Before we can understand how we use these senses in the creation, mediation and experience of theatre, it will help tremendously to first understand a bit more about them and how they reveal our universe to us.

In this chapter, we will investigate the fundamental differences between light and sound and how evolution advanced so that we could perceive them. We will see how light transmits through electromagnetic waves that are perceived by the eye as primarily spatial and secondarily temporal, and how sound transmits through mechanical waves that are perceived by the ear as primarily temporal and secondarily spatial. Understanding these differences is essential to understanding the role that time and music play in theatre. We will investigate that role in later chapters.

The Nature of Light and Sound

When I first started teaching sound design back in the mid-1970s, there was great pressure to think, and therefore teach, about sound as if it were visual design, like scenery, costumes and lights. Sound as a design element, when anyone considered it at all, was thought of as “the fourth design element,” similar to the other three. My job quickly became to provide lectures on sound design in introductory scenography classes based on this premise.

In one of the beginning scenography classes, taught by my major professor, Van Phillips, and legendary Broadway lighting designer, Lee Watson, we investigated fundamental elements of visual design: line, color, mass, rhythm, space and texture. It wasn’t a far leap for me to find strong equivalents of these elements in sound. I liked the process Van and Lee used, and developed my own course based on their class. I’ve been developing these ideas ever since, and they serve as the foundation not only for this book but also for my workshops and class projects based on it. I am indebted to them both for planting the seed that would later become my life’s work.

Finding a common language to talk about both visual design and sound design seemed surprisingly easy. Much later in life I would discover why. Sound and light provide our senses with clues to the nature of the universe in the form of waves that emanate from matter in that universe. These waves might come from a distant star, or from a molten eruption on our newly forming earth. Waves are vibrations that transfer energy from one place to another. There are two relevant media that transmit waves: space and mass. Generally speaking, light waves are electromagnetic vibrations that transmit through space, and sound waves are mechanical vibrations that transmit through mass. In order for us to know anything about the universe that surrounds us, we have to take in energy that has been transmitted to us through space and mass.

Waves transmitted through space and mass share a great number of similarities. They have frequencies and wavelengths that are related to each other by the speed at which they travel through the medium. They share properties of reflection (the angle of incidence equals the angle of reflection), diffraction (the ability to bend around objects), absorption (typically turning the energy into heat, as in your microwave), transmission (going right through an object) and Doppler shift (a phenomenon in which the received frequency differs from the source frequency because the source, the receiver or both are moving). So, from the vantage point that sound and light are both wave transmissions with similar properties, it makes perfect sense that we would want to consider sound design as similar to its visual design counterparts: scenery, costumes and lights. I suspect, however, that in my beginning scenography classes, and in the theatre world at large, this was more a marriage of convenience than a matter of scientific inquiry.
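The Doppler shift mentioned above can be made concrete with a small calculation. The sketch below uses the standard textbook formula for sound in still air. It assumes the 344 meters per second figure used later in this chapter and motion directly along the line between source and receiver. Light obeys a different (relativistic) formula, which is not shown. The function name and the 440 Hz horn example are hypothetical illustrations.

```python
def doppler_frequency(f_source_hz, speed_of_sound=344.0,
                      v_receiver=0.0, v_source=0.0):
    """Frequency heard by a receiver, for sound in still air (assumed 344 m/s).

    Velocities are in meters per second along the line joining source and
    receiver; positive means moving toward the other party.
    """
    if v_source >= speed_of_sound:
        raise ValueError("source must move slower than sound")
    return f_source_hz * (speed_of_sound + v_receiver) / (speed_of_sound - v_source)


# Hypothetical example: a 440 Hz horn on a car moving at 20 m/s.
approaching = doppler_frequency(440.0, v_source=20.0)
receding = doppler_frequency(440.0, v_source=-20.0)
print(f"approaching: {approaching:.1f} Hz, receding: {receding:.1f} Hz")
```

With these numbers, the approaching horn sounds roughly 27 Hz sharp and the receding horn roughly 24 Hz flat. That is the familiar drop in pitch as a car passes.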

But the waves that travel through space and those that travel through mass also have significant differences. To highlight the differences between sound and light, I developed the practice of starting each guest lecture by writing Einstein’s famous equation, E = mc², on the chalkboard. “This is also how theatre works,” I would say; “dramatic energy is equal to mass put into motion by ‘c’ which is space and time, light and sound.” I really knew nothing about Einstein’s theories, but for some reason, the equation seemed to fit. Only much later would I realize the significance of this statement, and much of this book will lay out the argument for including this concept in one’s aesthetic.

Electromagnetic waves that travel through space, such as light, are carried by massless photons that stream from the source to the receiver. This type of transmission allows electromagnetic waves to travel at the fastest speed possible in our universe, 186,300 miles per second.1 This lack of mass allows photons to oscillate at superfast frequencies: 311,000 to 737,000 gigacycles per second for light, with wavelengths of 16–38 millionths of an inch. Because these wavelengths are so tiny, light typically reflects in all directions when it encounters an object, which is what makes objects visible from many directions simultaneously. But light also travels only in a straight line, meaning that the receiver must be pointed at the source in order to perceive the stream of photons. This does have its advantages, however: it allows the receiver to determine precisely the direction of the incoming light. Light is very well-suited to reveal the spatial characteristics of mass. Why? Because light involves extremely fast vibrations that can convey a tremendous amount of information about the spatial characteristics of a source, especially direction and shape.

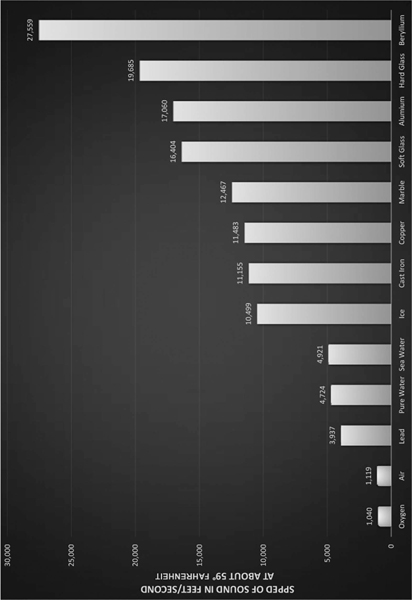

Mechanical waves that travel through mass, such as sound, work in quite the opposite way from the fast oscillations of light. They are much closer to the sense of touch, which requires direct contact between the source and the receiver. Mechanical waves generated by the source vibrate neighboring molecules in all directions. Those molecules vibrate their neighbors, and so on, until the wave is either absorbed or reaches the receiver and, in turn, vibrates it. This type of transmission takes far longer than the transmission of light: sound travels at only about 1,130 feet (344 meters) per second in air.

Figure 2.1 The speed of sound traveling through various materials.

Credit: Davis, Don, and Carolyn Davis. 1987. Sound System Engineering. Indianapolis: Howard W. Sams. 149.

The types of vibrations that can be transmitted through mass work best at frequencies that are very low compared with light, less than 20,000 vibrations per second for sound. They have wavelengths that can exceed 50 feet! As frequency increases, the medium itself absorbs more and more of the wave, often turning it into heat before it effectively reaches the receiver. Mechanical waves transmit best over short distances, much closer to the zero-distance requirement of touch.
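The wavelength figures in the last few paragraphs all follow from one relation: wavelength equals propagation speed divided by frequency. Here is a minimal sketch. It assumes 344 meters per second for sound in air (the chapter's figure) and the exact defined speed of light in a vacuum. The constant and function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact, in a vacuum
SPEED_OF_SOUND_M_S = 344.0           # assumed figure for air, as in this chapter
METERS_PER_FOOT = 0.3048
METERS_PER_MICROINCH = 0.0254e-6     # one millionth of an inch


def wavelength_m(speed_m_s, frequency_hz):
    """Wavelength in meters = propagation speed / frequency."""
    return speed_m_s / frequency_hz


# Approximate audible range in air, from the lowest to the highest pitches.
for f in (20.0, 1_000.0, 20_000.0):
    feet = wavelength_m(SPEED_OF_SOUND_M_S, f) / METERS_PER_FOOT
    print(f"sound {f:>8.0f} Hz -> {feet:8.3f} ft")

# The light frequencies quoted in the text, in gigacycles (GHz).
for ghz in (311_000.0, 737_000.0):
    microinches = wavelength_m(SPEED_OF_LIGHT_M_S, ghz * 1e9) / METERS_PER_MICROINCH
    print(f"light {ghz:>8.0f} GHz -> {microinches:5.1f} millionths of an inch")
```

At 20 Hz, near the bottom of the audible range, the sound wavelength comes out to roughly 56 feet. The two light frequencies quoted earlier give about 38 and 16 millionths of an inch. So the longest audible sound waves are tens of millions of times longer than light waves.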

Sound waves that travel through mass constantly change perceptibly over time. The wavelengths that reflect off of objects do not provide detailed spatial characteristics about the source itself. The mass through which sound transmits also degrades the ability of the sound wave to provide detailed information about the spatial characteristics of the source. Unlike light, sound sources emit waves over a wide spherical area, especially at lower frequencies; the receiver does not need to be oriented in any particular direction in order to receive information from the source. This “omnidirectionality” tends to compromise the receiver’s ability to localize to the specific direction of the source, especially at low frequencies. Sound does provide the receiver with amplitude information that varies in time in an analogous manner to the way the source vibrated, but at a later time than the source because the vibrations are traveling at substantially less than the speed of light. Sound waves provide less detailed information regarding the spatial characteristics of the source, but very detailed temporal information.2 Therefore, sound waves are very well-suited to revealing the temporal properties of mass, that is, how mass vibrates in time.

In this way, electromagnetic vibrations such as light complement mechanical vibrations such as sound. Together they provide a more detailed picture of the four primary dimensions of our universe (three of space and one of time) than either provides individually. Potsdam is either 40 kilometers or 30 minutes from Berlin; if you know both, you know more about the journey to Potsdam than if you know only one. Scientists use the speed of light to define the precise length of a meter: the distance light travels in a vacuum in 1/299,792,458 of a second. Conversely, our first concepts of time (the hours, minutes and seconds of a day) were based on the spatial position of the sun in the sky (ibn-Ahmad al-Bīrūnī 1879, 148). We understand, then, that light reveals space by defining it relative to time, and sound reveals time by defining it relative to space3 (Thomas 2010; Landau 2001).

Consider how magnificently light and sound complement each other and how important they have been to our survival. Our 4.5-billion-year-old earth must have had its share of spectacular storms producing lightning and thunder. The photons emitted from a lightning flash provided very specific information about the spatial direction of the lightning. The time it took for the thunder to travel to the receiver would also provide important information: how far away, and therefore how imminent, the danger was. From an evolutionary vantage point, it would certainly make sense for organisms to develop an ability to perceive both electromagnetic waves that transmit through space and mechanical waves that transmit through mass. Organisms that did this would stand a much better chance of surviving.
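The lightning-and-thunder example rests on simple arithmetic. The flash arrives almost instantly, so the delay before the thunder is essentially the sound's travel time. Here is a minimal sketch, again assuming 344 meters per second for sound in air. The function name is hypothetical.

```python
SPEED_OF_SOUND_M_S = 344.0          # assumed figure for air, as in this chapter
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, in a vacuum


def lightning_distance_km(delay_s):
    """Estimate distance to a lightning strike from the flash-to-thunder delay.

    The light's own travel time is included for completeness, though it is
    negligible: delay = d / v_sound - d / v_light, solved here for d.
    """
    distance_m = delay_s / (1.0 / SPEED_OF_SOUND_M_S - 1.0 / SPEED_OF_LIGHT_M_S)
    return distance_m / 1000.0


for delay in (1.0, 3.0, 10.0):
    print(f"{delay:4.1f} s delay -> about {lightning_distance_km(delay):.2f} km")
```

A three-second delay works out to roughly one kilometer. That is the basis of the familiar rule of thumb of about three seconds per kilometer (roughly five seconds per mile).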

The Evolution of Hearing and Seeing

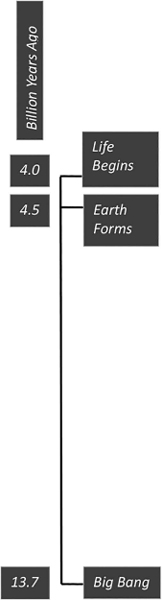

It would take another half billion years or so before life would begin to appear on earth, about 4 billion years ago. Scientists are pretty sure that these organisms didn’t sing, dance, play music or go to the theatre, being single-celled creatures. But they did develop a rudimentary ability to sense vibrations from the outside world. Prokaryotes, among the earliest forms of life, evolved in the oceans of the earth. They had mechanoreceptors that sensed motion, mechanical vibrations that varied over time (Fritzsch and Beisel 2001, 712).

Notice the ultra-primitive connection to sound? Eventually single-celled organisms evolved into multicellular ones, and then groups of these cells started to specialize. The result was multi-organ creatures like jellyfish, perhaps the oldest multi-organ animals on earth, dating back 600–700 million years (Angier 2011).

These organisms also developed the ability to perceive a small frequency band of the electromagnetic spectrum that penetrated water, what we now call visible light. Early eyes could only tell light from dark, but that was enough to develop a sense of direction based on where the light was. Perception of time via these primitive eyes was pretty much limited to circadian rhythms, the slowly changing 24-hour cycle of light from the moon and stars at night and the sun during the day. At this earliest stage of evolution in the animal kingdom, electromagnetic waves provided spatial information (light from above) that allowed animals to perceive time (circadian rhythms). Mechanical waves provided temporal information (low frequency waves) that allowed animals to perceive space (e.g., the boundaries of their environment). Again, notice the complementary relationship between primitive perception of time and space.

Another significant development during this early period that would affect how we perceive the world was evolution’s symmetrical approach to developing organisms that would eventually result in two eyes, two ears and two halves of the brain (among many other features) (Martindale and Henry 1998). Two eyes provide either the advantage of increased depth perception when located at the front of the head like many predators, or a wider field of vision when located on each side of the head like many prey. Two ears seem to have evolved primarily to allow creatures to discern temporal differences that helped localize a sound source. At first the brain appeared as a cluster of neurons near the ears and eyes, but it too would evolve two symmetrical sections (New Scientist 2011). Those two symmetrical halves of the brain will play an important role later in this book.
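We can sketch how two ears turn a time difference into a direction with a simplified geometric model. For a distant source, the sound reaches the far ear later by the extra path length divided by the speed of sound. This is an illustration under stated assumptions: a plane wave, 344 meters per second, and a hypothetical ear spacing of 0.18 meters. It ignores the shadowing and diffraction of the head that more refined models include. The function names are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 344.0   # assumed figure for air, as in this chapter


def interaural_time_difference_s(azimuth_deg, ear_spacing_m):
    """Arrival-time difference between two ears for a distant source.

    Simplified plane-wave model: the far ear's extra path is
    ear_spacing * sin(azimuth); head shadowing and diffraction are ignored.
    """
    return ear_spacing_m * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND_M_S


def azimuth_from_itd_deg(itd_s, ear_spacing_m):
    """Invert the same model: estimate direction from a measured time difference."""
    ratio = itd_s * SPEED_OF_SOUND_M_S / ear_spacing_m
    ratio = max(-1.0, min(1.0, ratio))   # clamp against measurement noise
    return math.degrees(math.asin(ratio))


EAR_SPACING_M = 0.18   # hypothetical, roughly a human-sized head
for azimuth in (0, 30, 90):
    itd_us = interaural_time_difference_s(azimuth, EAR_SPACING_M) * 1e6
    print(f"source at {azimuth:2d} deg -> time difference of about {itd_us:5.0f} microseconds")
```

Even at its maximum, with the source directly to one side, the difference is only about half a millisecond. This model also cannot tell front from back. A source at 30 degrees and one at 150 degrees produce the same time difference, one reason two ears alone localize sound only approximately.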

About 500 million years ago, there was a sudden, tremendous spate of evolutionary development called the Cambrian Explosion, sometimes referred to as the “Big Bang” of evolution. Some evolutionary biologists argue that the catalyst for this was the sudden evolution of eyes that could perceive space much better: direction more precisely, shapes more clearly, and color more diversely, especially reflections in the blue-green wavelengths of light that transmit through water. This all happened in the relatively short span of about 1 million years and was a huge game changer, resulting in a sudden explosion of phyla. Phylum (plural, phyla) is the term scientists use for the broadest grouping of animals below the level of kingdom. Most of the 37 phyla that exist today evolved in this remarkably short time period.

One extraordinary characteristic shared by some lucky creatures in the 37 phyla was the ability to synchronize pulses rhythmically. Rhythmic movement is commonplace in the animal kingdom; how would animals move through their environment without it? But two animals synchronizing their movements with each other is much rarer. It requires that each animal perceive the intervals between beats well enough to predict where the next beat will occur. A number of arthropods have figured this out. For example, a group of fiddler crabs looking for love have discovered that they are more likely to attract females if they all signal together in joint repetitive displays. This amplifies their signal in what is called the beacon effect. Ditto for North American meadow crickets. Once females join the group, the males start competing for their favor. Smart males adjust their timing so that their signal occurs just slightly before those of other males. The females will orient toward the male that signals first, or plays “ahead of the beat” (Merker, Madison and Eckerdal 2009, 5).4 Ants, crickets, frogs and fireflies all seem to have evolved this common ability to synchronize their sound (or, in the case of fireflies, their light) with each other. It is hard to imagine that this ability to synchronize to a beat, the same ability some of us display so marvelously on the dance floor, is found in so many other species. But it does tell us something about how important the ability to “organize time” is in the animal kingdom. We’ll explore the human version of this phenomenon, called entrainment, in Chapters 5 and 6. It’s worth pointing out now, though, that its basis may lie in our most distant past, over 500 million years ago.
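The synchronization described above depends on predicting where the next beat will fall. This toy sketch assumes a steady tempo. It predicts the next beat by adding the average interval between past beats to the most recent one, then picks whichever caller signals first, mimicking the “ahead of the beat” preference. The onset times and the 30-millisecond lead are hypothetical values, not data from the cited study.

```python
from statistics import mean


def predict_next_beat(onsets_s):
    """Predict the next beat time from past onsets, assuming a steady tempo.

    The mean inter-onset interval is added to the most recent onset.
    """
    if len(onsets_s) < 2:
        raise ValueError("need at least two onsets to estimate an interval")
    intervals = [later - earlier for earlier, later in zip(onsets_s, onsets_s[1:])]
    return onsets_s[-1] + mean(intervals)


def first_signaler(signal_times_s):
    """Return the caller whose signal came first (the female's choice in this toy model)."""
    return min(signal_times_s, key=signal_times_s.get)


chorus = [0.0, 0.50, 1.01, 1.49, 2.00]      # hypothetical onsets of a steady chorus
next_beat = predict_next_beat(chorus)
calls = {"on the beat": next_beat, "ahead of the beat": next_beat - 0.03}
print(f"next beat predicted at {next_beat:.2f} s; first to call: {first_signaler(calls)}")
```

Averaging several intervals, rather than using only the last one, keeps the prediction steady when individual beats wobble slightly, as they do in the hypothetical onsets above.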

Our particular branch of evolution descends from the phylum Chordata, whose subphylum Vertebrata (the vertebrates) includes our predecessors, the bony fish (Parker 2011). The ability of these fish to sense mechanical vibrations evolved along a much more linear course than that of eyes. The mechanoreceptors responsible for sensing external vibrations developed into the lateral line.

The lateral line is an organ containing hair cells that run lengthwise down each side of the fish, allowing the fish to detect movement, that is, vibrations in time (DOSITS 2016). The lateral line sensed only vibrations below about 160–200 Hz (think bass; fish would love hip hop!), and only within a couple of body lengths. It allowed fish to sense other creatures nearby and perhaps any atmospheric disturbances that made their way through the water. Not much by human standards, but one can imagine that these two primitive senses proved very useful in helping a fish pursue its single most critical need (finding food) and avoid its single most critical mistake (becoming food).

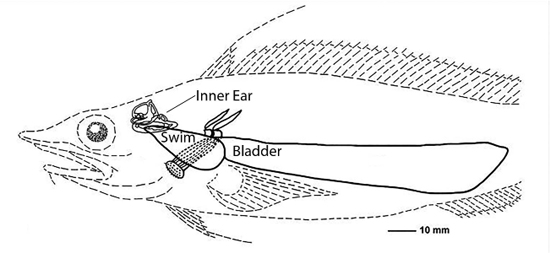

Credit: Reprinted from Deep Sea Research Part I: Oceanographic Research Papers, Vol 58, Issue 1, Xiaohong Deng, Hans-Joachim Wagner, Arthur N. Popper “The Inner Ear and Its Coupling to the Swim Bladder in the Deep-Sea Fish Antimora Rostrata (Teleostei: Moridae),” 27–37, Copyright 2011 with permission from Elsevier.

In fish, we again see a distinct difference in how early creatures perceived time and space. Fish eyes sensed space as simultaneous reflections of light from countless objects, on which the fish had to focus one at a time in order to perceive any of them. Its lateral line, however, perceived space as time: a disturbance that occurred suddenly, probably did not last long, and ended just as quickly. Certainly, the eyes became the most useful organ for perceiving space after the Big Bang of the Cambrian Explosion. Cambrian eyes gave animals a much better ability to perceive space than the lateral line did. The lateral line could only provide spatial information about the general direction and size of a wave, with very little information about the object itself, in a “who made that sound?” sort of way.

Still, the ability of the lateral line to perceive temporal relationships between objects in space had its uses. Since light waves travel in a straight line, fish eyes evolved to focus in a particular direction. A lateral line could prove very useful in alerting the fish to a potential predator coming from a direction other than the one the eyes were focused on (e.g., from behind). Eyes were somewhat useless once the sun went down or if the water was murky, but fish could sense mechanical vibrations in the water 24/7, regardless of how murky the water was (Butler and Hodos 2005, 194). So there were good reasons for the lateral line to continue to evolve. Even so, mechanisms for sensing mechanical vibrations evolved slowly; if eye evolution was the hare of the Cambrian Explosion, ear evolution was certainly the tortoise (Parker 2011, 328).

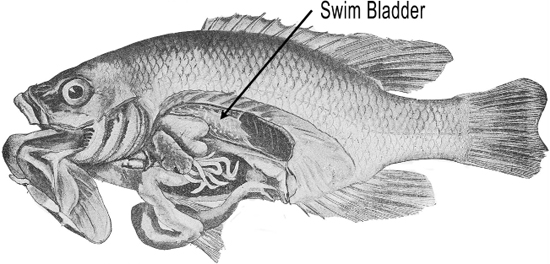

Some fish evolved a swim bladder, an organ that provided them with buoyancy, the ability to stay afloat in the water.

It turns out that the swim bladder was also very receptive to mechanical vibrations (Jourdain 1997, 9). Eventually fish evolved an inner ear at around the same time as the lateral line. Some researchers argue that the inner ear evolved from the lateral line; others argue that both evolved from a common ancestral structure (Popper, Platt and Edds 1992). The inner ear had hair cells similar to those of the lateral line that allowed fish to sense somewhat higher frequencies, but still less than about 1,000 Hz, not exactly what we would call high-fidelity.

But if the swim bladder was located close to the inner ear, higher-frequency reception improved, as the fish’s bones conducted the vibrations of the swim bladder to the inner ear. This extended frequency range would provide greater information about the organisms generating the sound waves.

Because water has about the same density as a fish’s body, sound passes right through the body and into the inner ear (DOSITS 2016). That was all well and good, but about 365 million years ago, fish started wandering out of the water and onto dry land. Now there was a problem. The mechanical vibrations were in air, and vibrations do not pass easily from one medium (air) into another of very different density (the animal’s body). These tetrapods, as they are called (literally “four feet”), needed to adapt their hearing apparatus to accommodate this change of density, at least the ones that wanted to survive!

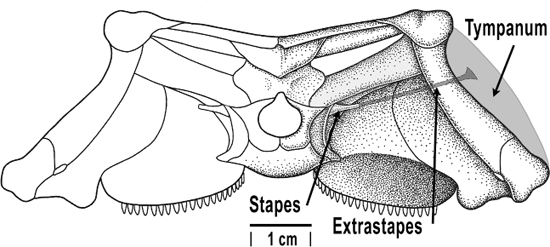

To help out, a single bone from the jaw of the earliest tetrapods migrated to become attached to the inner ear (Clack 1994). This bone, which we would eventually refer to as the stapes, better matched the impedance (resistance to vibrations) between the air and the fluids of the inner ear.
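We can estimate why the move from water to air mattered so much. The standard formula gives how much sound intensity crosses a flat boundary, head-on, between two media of different characteristic acoustic impedance. This sketch assumes approximate textbook impedances for air and water; fish bodies and other soft tissues are close to water. The names are hypothetical.

```python
import math


def intensity_transmission(z1, z2):
    """Fraction of sound intensity transmitted across a flat boundary, at normal
    incidence, between media with characteristic impedances z1 and z2 (rayls)."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


Z_AIR = 415.0      # approximate, air at room temperature
Z_WATER = 1.48e6   # approximate, water; soft tissue is similar

fraction = intensity_transmission(Z_AIR, Z_WATER)
loss_db = 10.0 * math.log10(fraction)
print(f"{fraction:.2%} of the intensity crosses the boundary ({loss_db:.0f} dB)")
```

Only about a tenth of a percent of the intensity crosses from air into water, a loss of roughly 30 dB. This suggests why an impedance-matching bone such as the stapes was such a valuable adaptation.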

About 200–250 million years ago, some tetrapods developed an eardrum, a thin vibrating layer of tissue that connected the outside air to the stapes (Manley and Köppl 1998). Now when the eardrum vibrated, the stapes vibrated, and that, in turn, vibrated the inner ear. Slowly evolving in this inner ear was the basilar membrane, the part of the inner ear that transduces the mechanical vibrations of sound into the electrical pulses of neurons. The basilar membrane is a long structure that tends to vibrate in different places depending on which frequencies are exciting it via the middle ear bones (see Figure 7.10 for a drawing of the human version). The hair cells along the basilar membrane vibrate in turn and generate electrical impulses that correlate with the original source wave, with different frequencies stimulating different parts of this stiff, resonant structure. Tetrapods with eardrums and more highly evolved inner ears could sense temporal changes such as frequency and envelope (how a sound unfolds in time) on land much better than animals without them.

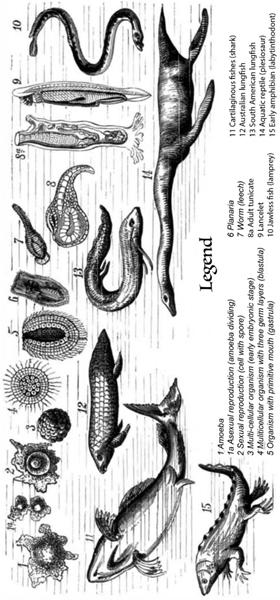

Credit: Haeckel, Ernst. 1876. “Haeckel on the Human Pedigree.” Scientific American 34 (11): 167. Adapted by Richard K. Thomas

Credit: Müller, J., and L. A. Tsuji. 2007. “Impedance-Matching Hearing in Paleozoic Reptiles: Evidence of Advanced Sensory Perception at an Early Stage of Amniote Evolution.” PLOS ONE 2 (9): e889. Accessed July 20, 2017. https://doi.org/10.1371/journal.pone.0000889. CC-BY-2.5, https://creativecommons.org/licenses/by/2.5/deed.en. Adapted by Richard K. Thomas.

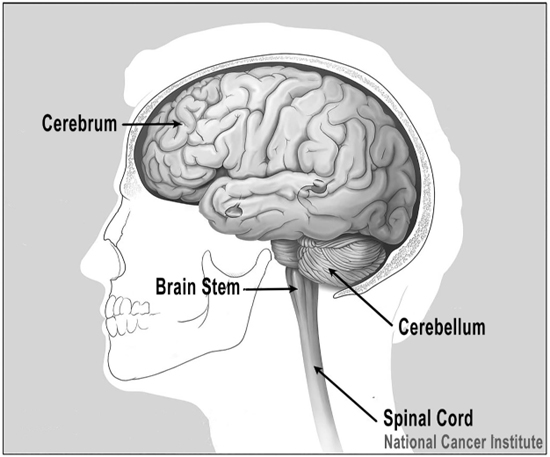

Figure 2.11 The major parts of the brain.

Credit: From the National Cancer Institute, Alan Hoofring, Illustrator. Adapted by Richard K. Thomas.

The Evolution of the Brain Leads to the Ability to Express Emotions

As tetrapods evolved, their brains grew in size and complexity. The tetrapod brain had long ago acquired its brainstem and cerebellum. The brainstem sits at the top of our spinal cord, the cerebellum right behind it.

The brainstem relays nerve impulses back and forth between the body and the brain. It plays a central role in our most biologically fundamental functions, such as breathing, heart rate, sleeping and eating. Within the brainstem are mechanisms for reflexive actions, all things we tend to do more or less unconsciously. The cerebellum, on the other hand, plays a major role in motor control, the brain’s coordination of our muscles and limbs. These are some of the oldest parts of our brains, and in most vertebrates, the nerves of the inner ear connect directly to the brainstem and cerebellum, which can produce some interesting responses (Butler and Hodos 2005, 197). It gets a lot more complicated in humans, but the basic results are similar. Don’t worry; we’ll explore the auditory pathway from the ear to the brain in much greater detail in Chapter 7.

Sound stimulates the brainstem and then our muscles directly (Davis et al. 1982, 791). That’s a hugely important point. A sudden loud sound bypasses all of our analytical circuits and immediately triggers a reflexive muscle reaction. Makes good sense, doesn’t it? A sudden loud sound shouldn’t just go to the parts of the brain that analyze things, because sometimes you have to react without thinking. Rock falling? Yikes! At that basic level, we can’t afford to think about sound; we just react. Fortunately, the transmission from ear to muscles happens very fast, within about 14–40 milliseconds in humans (Parham and Willott 1990, 831), well before we have a chance to stop and think about it. This type of reaction is called the startle response or startle reflex. To demonstrate this phenomenon in my lectures, I typically shout “HEY!” at the top of my lungs at some random moment. Everybody jumps, of course; it works every time! The downside is that it can be a bit hard to get back to the lecture because everyone is so, well, startled!

Startle response doesn’t have a lot to do with what an animal consciously thinks. As far as we know, tetrapods didn’t spend much time consciously thinking about things anyway, not having evolved much of that part of their brain. Startle response happens without conscious effort; it’s reflexive. Francis Crick, co-discoverer of DNA’s structure, postulated that our hearing evolved this “bypass” mechanism strictly for “fight or flight.” In primitive worlds, stopping to think could cost you your life! Today we are most likely simply “startled,” hence the name for the phenomenon, the “startle” response (Crick 1994; Levitin 2007, 183). In our modern brains, we typically react physically first, and then our thinking brain takes over and lets us know that we are not in any real danger. Primitive creatures don’t have much of a thinking brain; they just react.

Related to the startle response is another effect called habituation. Habituation means that if I just go HEY! HEY! HEY! HEY! …, you very quickly stop jumping because you’ve gotten used to the sound. Habituation is a condition in which we subconsciously learn to ignore sounds that persist and don’t change. Even the simplest organisms experience habituation (Wood 1988). Basically, habituation occurs because the more you stimulate auditory neurons, the less they respond (Jourdain 1997, 54). They don’t need to; the brain already knows the sound is there and it’s not changing. Primitive animals needed to separate threatening sounds from all of the other constant sounds around them. Habituation accomplishes this, allowing animals to distinguish sounds that don’t change (like crickets at night) from sounds that do (like the twig snapped by an approaching predator).
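Habituation can be illustrated with a deliberately simple toy model. It is not a description of real auditory neurons. Each identical repeat of a sound draws a weaker response, and any change restores full strength. The decay factor of 0.5 and the function name are hypothetical choices made for illustration.

```python
def habituating_responses(stimuli, decay=0.5):
    """Toy habituation model: each identical repeat of a stimulus elicits a
    weaker response (multiplied by `decay`); any change resets it to full strength.

    `decay` is a hypothetical parameter, not a measured neural constant.
    """
    responses = []
    strength = 1.0
    previous = None
    for stimulus in stimuli:
        strength = strength * decay if stimulus == previous else 1.0
        responses.append(strength)
        previous = stimulus
    return responses


sounds = ["HEY!", "HEY!", "HEY!", "HEY!", "twig snap"]
for sound, response in zip(sounds, habituating_responses(sounds)):
    print(f"{sound:10s} response {response:.3f}")
```

The repeated “HEY!”s fade to an eighth of the original response by the fourth shout, while the novel twig snap gets the full response.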

Without habituation and startle, there would probably be no horror movies. If you introduce the underscore in the appropriate place, and it doesn’t draw too much attention to itself, then the audience will forget it’s there. Then when the creature jumps out of the closet, we startle the audience with a very loud sonic punctuation. I mean, who among us has never used a sustained droning tone to habituate an audience, and followed up with a good startle effect?!! These really are the oldest tricks in the book—hundreds of millions of years old!

Of course, there would be no point in introducing underscore unless we had a purpose for it beyond making the audience forget it’s there. To understand more about what we can do with habituation, we’ll have to explore another amazing evolutionary development, one that came in the long wake of the Cambrian Explosion.

When fish first wandered out of the water and onto dry land, they faced a new problem: how to breathe on dry land! To venture out on land, they needed organs that would allow them to directly breathe air: lungs. They also needed to develop a mechanism that would prevent water from entering those newly evolved lungs when they went back into the water. Lungfish evolved about 380 million years ago with just such a mechanism (Allen, Midgley and Allen 2002, 54–55).

A very simple sphincter-type muscle prevented water from entering the lungs. That muscle could also vibrate and produce sound when the lungfish exhaled (Fitch 2010, 222–224). Of course, this didn’t necessarily mean that they immediately started singing Wagnerian operas. Imagine the sound you get when you pull the opening of an air-filled balloon tight and allow air to squeak through as the opening vibrates against itself. Not the best form of vocalizing, but without some sort of sphincter-type muscle that would evolve into the larynx, there would never have been a Hello, Dolly! or a Hamilton.

Figure 2.13 Australian lungfish.

Credit: From Günther, Albert. 1888. Guide to the Galleries of Reptiles and Fishes in the Department of Zoology of the British Museum (Natural History). London: Taylor and Francis 98.

Perhaps as a consequence of wandering out onto land, perhaps as an enhancement that enabled wandering out onto land, tetrapods found themselves with a most unusual ability: the ability to communicate with each other by manipulating sound waves in time. Such an ability to communicate with one’s own kind would be a very favorable evolutionary adaptation! Imagine an early tetrapod bleating out into the distance: “Anybody out there?” Now another tetrapod, who had also wandered out onto dry land, takes a quick look around, but does not readily see the first tetrapod. Streaming photons are like that. They reflect off trees and foliage that hide what is behind, whereas sound waves travel right through or bend around. Sound waves can be hard to stop! So, the second tetrapod hears the sound wave vibration and uses the somewhat limited ability provided by its two ears to localize to the direction of the sound: “Over here!” it calls back. To which the first tetrapod croaks “Where?” “Here!” calls the second. This goes on for quite some time until one of the tetrapods uses its superior visual acuity to identify the other. And then they get a hotel room and make beautiful music together, if you know what I mean.

Calling out in a lovesick lament must surely have been one of the earliest examples of animals communicating with each other; mating is pretty important in survival of the fittest. Expressing anger was probably another early example. Tetrapods expressed emotion? Yes, yet another of the evolutionary miracles of the Cambrian Explosion was the development of the amygdala.

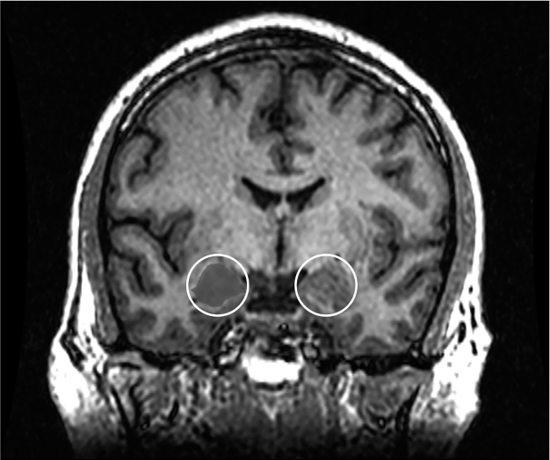

The amygdala is the part of our brain responsible for processing emotions, making decisions about them, and storing and retrieving them in our emotional memory. It is a key part of a larger system, called the limbic system (see Figure 5.9). The limbic system is involved in emotions and memory, perhaps the two most important brain functions we encounter in sound design and music composition, so we’ll be revisiting this area a lot throughout the book. In humans, the nerves from the auditory system connect directly to the amygdala through the brainstem and the thalamus (the primitive part of our brain responsible for routing signals from our senses). The amygdala has been around since the tetrapods. This means that we are entirely capable of emotionally reacting to an auditory stimulus without ever even consciously thinking about it (Robson 2011; Di Marino, Etienne and Niddam 2016, 91–95)!

Figure 2.14 MRI coronal view of the amygdala in the human brain.

Credit: Amber Rieder, Jenna Traynor, Geoffrey B Hall.

The amygdala, two small almond-shaped clusters of nuclei buried deep in the temporal lobes, has a profound significance for us in theatre. Darwin was perhaps the first to propose that even the most primitive creatures were capable of feeling and expressing emotion (Darwin 1904, 106). We can surmise, of course, that like everything else about these prehistoric creatures, the range of emotions and expressions must have been decidedly limited. Anyone who has sat in a traffic jam and attempted to communicate with the car in front using just their horn probably understands the dilemma of our primitive lungfish. Nevertheless, even in some of the most primitive creatures on earth, we can see an ability to manipulate pitch, amplitude and duration, the elements we associate with music, to express emotion. This is significant because these elements are not evidence of the use of symbols like we use in language. They are evidence of the use of musical elements, existing predominantly in the temporal domain, that we use to express emotions and incite them in others.

Think about how we use the amygdala to process emotions and emotional memory in theatre. First, we play music, which stimulates the amygdala directly, contributing to the rising of an emotion in the listener. Then we create a “theme” out of that music and reuse it when we want to recall emotions. We play the music again in another section of the play, perhaps as a variation, and all of a sudden, we take people emotionally back to where they were the last time they heard the music. The audience associates, consciously or not, what is happening now with what happened before. This is much more than telling the audience, “Oh yeah, remember that?” We want the audience to recall and re-experience the emotions and attach them to the new scene, possibly creating an even more complex emotional response based on both what happens in the current scene and how it relates to a specific musical memory. This is a hugely important technique in theatre, and its basis lies in our old friend, the amygdala, which has been around since the tetrapods. We’ll explore it more in Chapter 10.

Now consider the power that we humans have when we combine habituation and startle with the ability to incite emotions and to effect recollections of them with the amygdala. Underscoring suddenly has a purpose. Now instead of simply habituating the audience in the horror movie, we can both habituate them, and simultaneously make them feel very uneasy before we smack them with that startle effect. And the next time the monster appears, we can repeat the habituating underscore to quickly transport the audience back emotionally to the last time it happened—and that, as they will emotionally recall, was not good!

We must be careful when and where we introduce underscoring, because it will initially not habituate and will draw attention to itself. I’ve had many directors ask me to just “sneak it in under the dialogue,” but all too often, I find this just means that the change in sound distracts different audience members at different points in the action; we relinquish control over how we stimulate the audience. We typically start an underscore in its own acting beat, for example, during a pause between spoken lines. We also work very hard to localize the underscore to where the eyes are already focused: the dramatic scene. If we localize it to another space, above the proscenium or on the side of the proscenium, for example, our audience will reflexively shift their attention, if only momentarily, to the source of the sound. It’s a reflex that’s very hard to stop, and it’s one of the reasons why a lot of underscoring is so distracting before it ever really gets a chance to work in a scene.

Once we’ve established the underscore, we work hard to habituate our audience immediately by carefully controlling the amount of variation in the music that could draw attention to itself. We tend to use droning sounds and musical keyboard “pads” in our underscores because they evolve slowly and don’t call attention to themselves, yet still have powerful abilities to stimulate emotions. As a matter of fact, they work so well that we’ll discuss them more in Chapter 8. We are careful about how we move underscore sound around in space, because spatial changes draw attention. When we add a new stimulus to the habituated sound, we run the risk of dishabituating (yes, that really is a word; it simply means adding to or changing a habituated sound in a way that causes the original sound to be consciously heard again!). We’re careful about using melody in underscoring because it really has a tendency to draw attention to itself. I’ll often advise students that they must consider the actors’ vocalizations as being the main melodies they are orchestrating. Don’t fight actors’ melodies with your own melodies; habituate under them. If you can do that successfully, you open up a whole world for yourself in terms of manipulating the audience’s emotions, first in the simple emotional stimulus you provide with the underscoring, and then in your ability to recall strong prior emotions by attaching new actions to them in scenes. All of this happens without the audience becoming too consciously aware of how or when it is being done, thanks to habituation. Harold Burris-Meyer, one of the pioneering fathers of modern theatre sound, was fond of saying, “you can shut your eyes, but the sound comes out to get you!”

In a larger sense, we must realize that habituation and startle are somewhat extreme examples of our ability to subtly use sound to manipulate the audience’s focus in time, in the same way we might use lighting to manipulate the audience’s focus in space. One of the characteristics of habituation is that as the stimulus gets weaker, the onset of habituation comes quicker and is more pronounced. A very loud continuous sound such as a fire alarm will probably produce no habituation at all. A very soft continuous sound like a well-designed ventilation system might never be noticed in the first place (Rankin et al. 2009, 137). In between are wonderful opportunities to manipulate the attention and focus of the audience, subtly drawing their attention to important lines or stage business by introducing small changes in the scoring. A chord change may barely be noticed and habituate quickly, but dramatically change the focus on the stage, or even bring the audience back if they have somehow drifted away. When we consider habituation and startle in this manner, we start to understand the dramatic possibilities we have available to us through careful manipulation of the dynamics of sound scores.

Eyes and Ears, Space and Time

It should not be lost on us that both habituation and startle are phenomena that happen primarily in the time domain, as does inciting an emotion. Where the startle sound occurs in space won’t have as much effect on creating a startle response as when the sound happens. Where the sound happens is secondary. It is still very important to potential prey once they’ve been alerted that there may be a problem, but at that point the typical response is for the prey to look around to identify the direction of the disturbance, a primary response for the eyes. As we continue to move up the evolutionary ladder, we will continue to see the differentiation of hearing as primarily a temporal sense and secondarily a spatial sense. Evolution has brilliantly complemented hearing with vision, which is primarily a spatial sense and secondarily a temporal one.

Even in my earliest lectures, I began to understand that the eyes were the dominant sense when perceiving space, and the ears the dominant sense when perceiving time. The eyes perceive mass through reflected light in space; the ears perceive mass through changes of air pressure in time. The eyes perceive time as a series of three-dimensional (spatial) images; the ears perceive space as a series of sequential (temporal) pressure variations. When the eyes perceive the reflection of an object, the position of the object in space correlates to a position on the retina of the eye (Jourdain 1997, 20). The ears perceive the position of an object through differences in time: the difference between the arrival of the sound at the two ears, or the delay (typically measured in milliseconds) between the direct sound and the reflected sound in a space.

We see this complementary nature of sight and sound even in our ability to perceive the location of an object. It takes two eyes to perceive depth with some degree of accuracy, because the brain must interpret the slight variations in the spatial position of the object on the two retinas. It takes only one ear, because a single ear can judge the closeness of a source by comparing the level of the direct sound coming from the source with the level of the indirect sound of the room (the part of the sound that takes longer to decay, such as the reverb in a cathedral). Conversely, it takes two ears to locate the direction of a source in the horizontal plane accurately, because the brain compares the arrival times of the sound at each ear; it takes only one eye to do that because spatial positioning occurs on the retina.
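The two hearing cues in this paragraph can be put into rough numbers. The sketch below is a minimal illustration under stated assumptions, not a model the author proposes: it uses Woodworth’s classic spherical-head approximation for the difference in arrival time between the ears, an assumed head radius of 8.75 cm and speed of sound of 343 m/s, and a simplified room in which the direct sound drops 6 dB per doubling of distance while the reverberant level stays constant, so that the two are equal at the room’s “critical distance” (a hypothetical input here).

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 °C
HEAD_RADIUS = 0.0875    # m; assumed typical adult head radius


def interaural_time_difference_s(azimuth_deg):
    """Woodworth's spherical-head approximation, ITD = (r / c)(theta + sin theta).

    Intended for azimuths from 0 degrees (straight ahead) to 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


def direct_to_reverberant_db(distance_m, critical_distance_m):
    """Simplified diffuse-field room: the direct level falls 6 dB per doubling of distance,
    the reverberant level is constant, and the two are equal at the critical distance."""
    return 20.0 * math.log10(critical_distance_m / distance_m)


print(f"{interaural_time_difference_s(90) * 1000:.2f} ms")  # source directly to one side
print(f"{direct_to_reverberant_db(8.0, 4.0):.1f} dB")        # listener at twice the critical distance
```

With these assumptions, sound from a source directly to one side reaches the nearer ear roughly 0.66 ms before the farther one, and a listener at twice the critical distance hears the direct sound about 6 dB below the room’s reverberation.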

We know about this eye-ear complement intuitively, too. We can take a photo and freeze a moment in time, and then study its spatial characteristics. We cannot freeze a moment of sound in time and examine its spatial characteristics by listening to that moment. In a sports bar, we can focus on one video display because we can single out one particular stream of photons, even though displays in different places are all emitting light simultaneously. But if the bartender turns up the volume for every video display, we will have a very hard time understanding just one of the sound sources, because the sounds are all occurring at the same time and our ears perceive all of them at once.

We use spatial terms like length, width and depth to describe visual art. But we use temporal terms such as tempo, meter, rhythm, phrasing and duration to describe sound art. Scientists routinely specify light in wavelengths, but sound in cycles per second. When we want to measure objects we see, we use a tape measure. When we want to measure objects we hear, we use a stopwatch. Consider the old saying, “a picture is worth a thousand words.” Yes, but how long does it take to say a thousand words?

The bottom line? The eyes have it when perceiving space. But when it comes to having a keen perception of time, as we shall see throughout this book, the ears excel.

Not exclusively, just dominantly. Obviously, the eyes can perceive temporal relationships, and the ear, spatial relationships. As we have seen throughout this chapter, sound and light function in a complementary fashion, providing a more complete apprehension of four of the dimensions of our universe than either can provide by itself. As we saw in the first part of this chapter, sound and light share many similar properties. It should not surprise us, then, that the eyes and ears evolved to perceive light and sound in many similar ways.

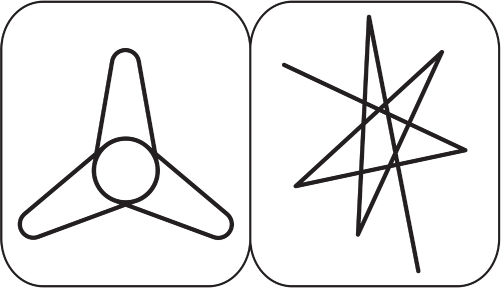

Consider the two types of images offered by John Booth Davies in 1978 (Davies 1978, 104). One is called takete, the other uloomu. Can you match the correct name with the correct picture? I’ve done this test with students and with international attendees at my lectures for almost 40 years. Overwhelmingly, participants will call the left one “uloomu” and the right one “takete.” It appears that there is, indeed, a correlation between what the eyes perceive in space and what the ears perceive in time.

Figure 2.15 Uloomu versus takete.

Credit: Data from Davies, John Booth. 1978. The Psychology of Music. Stanford, CA: Stanford University Press 104. Adapted by Richard K. Thomas.

This correlation between eyes and ears should come as no surprise. We witnessed a similar correlation between light and sound waves earlier in this chapter, and we should expect that our eyes and ears would evolve to favor perception of light and sound, respectively. The eyes perceive light using photoreceptor cells, the rods and cones (the cones being responsible for color), while the ear perceives color using hair cells on the basilar membrane (although sonic color is localized along specific parts of the basilar membrane, while the color-sensing cones of the eye are more or less distributed across the retina).

Both the rods and cones and the basilar membrane can be fatigued by overstimulation, resulting in a temporary inability to perceive overstimulated frequencies. We call the combination of all frequencies “white light” visually and “white noise” audibly. When colors don’t go together, we say they either “clash” visually or are “dissonant” sonically. We know that the human brain has an “integration constant” of about 50 milliseconds: delay a reflection of a sound by more than about 50 milliseconds and we’ll start to hear an echo in space; slow the frame rate of a film projector below 20 frames per second (i.e., delay the next picture by more than 1/20th of a second, or 50 milliseconds) and we start seeing separate images in time.
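The arithmetic behind the roughly 50-millisecond integration constant can be checked directly. This sketch assumes a speed of sound of 343 m/s and treats 50 ms as a hard cutoff, which simplifies the approximate figure given in the text; it converts a frame rate into the interval between pictures, and a reflection delay into the extra distance the reflected sound must travel.

```python
SPEED_OF_SOUND = 343.0          # m/s; assumed value for air at about 20 °C
INTEGRATION_CONSTANT_S = 0.050  # the approximate 50 ms figure from the text, treated as a hard cutoff


def frame_interval_s(frames_per_second):
    """Time between successive pictures at a given frame rate."""
    return 1.0 / frames_per_second


def extra_path_m(reflection_delay_s):
    """How much farther a reflection travels than the direct sound to arrive this much later."""
    return SPEED_OF_SOUND * reflection_delay_s


def heard_as_separate_event(delay_s):
    """Crude rule of thumb: gaps longer than the integration constant stop fusing."""
    return delay_s > INTEGRATION_CONSTANT_S


print(frame_interval_s(20))                           # 1/20 s between pictures
print(round(extra_path_m(0.050), 2))                  # extra path for a 50 ms reflection, in metres
print(heard_as_separate_event(frame_interval_s(12)))  # 12 fps: about 83 ms per frame
```

At 20 frames per second the pictures arrive 0.05 s apart, and a reflection arriving 50 ms late has traveled roughly 17 m farther than the direct sound, which is why echoes tend to show up in large halls rather than small rooms.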

Technology often prevented artists from exploring the temporal promises of visual art, but advances in the twentieth century found artists increasingly exploring time in the visual arts. A significant example is Tharon Musser, the famous lighting designer, who became known for her ability to treat light like a “living entity” by moving “light and stage picture in synchronized rhythm with the dialogue of the performance” (Unruh 2006, 59). In other words, she started treating light like music. At the same time, the invention of film finally made the possibility of moving pictures in time a reality, and stage scenery, which had remained relatively static for most of the history of theatre, began to experiment with time in the form of moving projections. In Chapter 4 we’ll explore this emergence of the musicality of light in more detail, as we wrestle with the very definition of music.

While the visual designers in theatre work to develop methods of manipulating time that are as facile as their methods of manipulating space, we find a complementary problem with sound: manipulating space as effortlessly as we manipulate time. The obvious solution to getting the sound to orient correctly to a specific location has always been to simply put a loudspeaker there. But what about trying to recreate the acoustic signature of the space in which the sound originated? Until the late twentieth century, that was impossible even to conceive. Tomlinson Holman famously suggested that it would take about a million loudspeakers to fully reproduce the sound of one space in another (Holman 2001). Modern spatial imaging systems have found ways to reduce that number using tricks similar to those employed in 3D film, but like 3D, they are still tricks. Neither for time in visual art nor for space in sonic art have we yet been able to create all four dimensions fully, free of physical limitations.

But is a medium that is perfectly fluid in all four dimensions necessary? As we journey through the rest of this book, we will wrestle with the very nature of theatre itself. That struggle hinges on the nature of time. In Einstein’s famous equation, E = mc², we see a definitive statement about the relationship of space and time to mass and energy: c, the speed of light, is the ratio of distance (space) to time. Since we can now confidently suggest that sight is weighted toward space and sound toward time, we can begin to consider sound’s strong suit, time, more seriously. As we continue our evolutionary journey in the next chapter, we’ll uncover some extraordinary discoveries related to our understanding of time that have had a profound influence on the medium we know as theatre.

Ten Questions

- What is the incredibly significant difference between light and sound that has tremendous implications for this book?

- Describe the fundamental difference between electromagnetic waves and mechanical waves.

- Light waves are ideally suited to reveal mass in ________, while sound waves are ideally suited to reveal mass in ________.

- What was the most significant development during the Cambrian Explosion that would change the course of evolution?

- What is the startle response?

- What is habituation?

- What is the amygdala and why is it important?

- Give an example of how we could use the brainstem, the cerebellum and the amygdala in a sound score for theatre.

- What are three lessons habituation teaches us about creating effective underscoring?

- Provide three examples showing that light also has a temporal component and three examples showing that sound also has a spatial component.

Things to Share

- Find a partner. One partner wears a blindfold; the other partner wears earplugs. Make a note about what time it is. Now go out and explore your environment. Make mental notes of the spatial and temporal aspects of what you discover. How do you describe the information your senses are providing you? When you think 15 minutes have elapsed, stop, record the actual time and length of your exploration and then your observations. Now, switch places so that whoever had earplugs now has a blindfold and whoever had a blindfold now has earplugs. Repeat the process. What did you discover about your perception of the passage of time?

- Find a movie that has won an Academy Award for sound. Pick a movie that you love and wouldn’t mind seeing again. Now get hold of the movie (perhaps buy it, because you can use it for other projects in this class, rent it from Netflix, or even check it out from your local library). Watch it with an eye toward habituation and startle, the use of dynamics to create an emotional response in the listener. If you can find some big startle effects, fine. Make a note of the time in the movie where the effect occurs so we can cue it up in class and you can share it with us. But also, feel free to consider subtler uses of habituation and dishabituation, as we discussed in this chapter. Pay careful attention to how the sound artists use changes in the dynamics of the sound score to manipulate emotions in the listener. Be prepared to describe for us how you think the sound team is manipulating us. Bring at least two examples with you in case someone uses your first example.

Notes

1 This assertion, however, is constantly being challenged by new scientific inquiries.

2 Assuming all of that information is transmitted without significant loss in the medium. This is more likely to happen for sound when the source is close to the receiver. Light travels farther without significant distortion; sound is closer to human touch, requiring the source to be closer to the receiver in order to transmit without significant distortion.

3 This is on planet Earth, where we’ll confine most of our investigation! When it comes to light traveling from distant stars, scientists also use time to measure light in the form of light years, a further indication of the complementary relationship between light and sound. More on this complementary relationship later in this chapter.

4 As a side note, this particular effect is called the Haas or Precedence Effect, and we use it all the time in theatre. For example, consider a sound that travels directly from a source to the listener, and a reflection that arrives at the listener a certain amount of time later. According to Ahnert and Steffen, “the ear always localizes first the signal of the source from which the sound waves first arrive” (1999, 53). For humans, when one sound arrives less than about 30–50 milliseconds after another, the listener will perceive the spatial location of the sound to be that of the earlier arriving one. What’s even more amazing is that the later sound can actually be up to about 10 dB louder than the earlier sound (roughly twice as loud), and we will still localize to the earlier arriving sound. At pop festivals, we delay the sound from the remote loudspeakers covering the rear of the audience to the main loudspeakers on stage so that the sound from the stage arrives first. In theatre, we delay the sound from the loudspeakers under the front lip of the stage and from the proscenium and balcony loudspeakers to loudspeakers buried on stage, or to the human voices on the stage, to keep the sound score localized to the dramatic action. Curiously, I have found that this technique works well even when the loudspeakers buried on the stage are small: as long as we delay the larger loudspeakers elsewhere in the theatre back to the smaller stage loudspeakers, we tend to localize to the stage. One of the most distracting things a sound designer can do is to localize the sound outside of the dramatic space; we are naturally wired to focus our attention in the direction of a sound. Of course, we will habituate to this, but every time the habituation breaks down, we will be distracted from the dramatic action on the stage.
I can’t tell you how many sound designers have had perfectly good cues cut from shows because the director did not realize that the cue was distracting because of its localization, not because of some other characteristic of the sound.
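The delay setting described in this note can be sketched as simple geometry. This is a minimal illustration, not a replacement for measurement or a processor’s alignment tools: positions are hypothetical (x, y, z) coordinates in metres, the speed of sound is assumed to be 343 m/s, and the extra 10 ms `margin_ms` is an assumed value chosen to keep the stage source arriving first while staying well inside the 30–50 millisecond window described above. It requires Python 3.8 or later for `math.dist`.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 °C


def loudspeaker_delay_ms(stage_source, loudspeaker, listener, margin_ms=10.0):
    """Delay to apply to a loudspeaker so that, at this listener, the sound
    from the stage source arrives first (precedence effect).

    Positions are (x, y, z) tuples in metres; margin_ms is an assumed extra
    delay that keeps the loudspeaker just behind the stage arrival.
    """
    stage_arrival_ms = math.dist(stage_source, listener) / SPEED_OF_SOUND * 1000.0
    speaker_arrival_ms = math.dist(loudspeaker, listener) / SPEED_OF_SOUND * 1000.0
    # Align the loudspeaker to the stage arrival, add the margin, never return a negative delay.
    return max(0.0, stage_arrival_ms - speaker_arrival_ms + margin_ms)


# Hypothetical layout: actor on stage, balcony-front loudspeaker, listener 20 m back.
actor = (0.0, 0.0, 1.5)
balcony_speaker = (0.0, 14.0, 5.0)
seat = (0.0, 20.0, 1.2)
print(f"{loudspeaker_delay_ms(actor, balcony_speaker, seat):.1f} ms")
```

Because the margin places the loudspeaker just behind the actor at this seat, the listener should still localize to the stage even if the loudspeaker is somewhat louder; in practice a designer would confirm the result by ear and by measurement at several seats, since one delay cannot be exactly right for every listener.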

Bibliography

Ahnert, Wolfgang, and Frank Steffen. 1999. Sound Reinforcement Engineering: Fundamentals and Practice. London: E&FN Spon.

Allen, Gerald R., Stephen Hamar Midgley, and Mark Allen. 2002. Field Guide to Freshwater Fishes of Australia. Collingwood, VIC: W.A. Perth.

Angier, Natalie. 2011. “So Much More Than Plasma and Poison.” New York Times June 6: D1.

Burris-Meyer, Harold. 1959. Sound in the Theatre. New York: Radio Magazines.

Butler, Anne B., and William Hodos. 2005. Comparative Vertebrate Neuroanatomy: Evolution and Adaptation. 2nd Edition. Hoboken, NJ: Wiley-Interscience.

Clack, J.A. 1994. “Earliest Known Tetrapod Braincase and the Evolution of the Stapes and Fenestra Ovalis.” Nature 369 (2): 392–394.

Collison, David. 1976. Stage Sound. New York: Drama Book Specialists.

Crick, Francis. 1994. The Astonishing Hypothesis. New York: Scribner/Maxwell Macmillan.

Darwin, Charles. 1904. The Expression of the Emotions in Man and Animals. London: John Murray, Albemarle Street.

Davies, John Booth. 1978. The Psychology of Music. Stanford, CA: Stanford University Press.

Davis, Michael, David S. Gendelman, Marc D. Tischler, and Phillip M. Gendelman. 1982. “A Primary Acoustic Startle Circuit: Lesion and Stimulation Studies.” Journal of Neuroscience 2 (6): 791–805.

Di Marino, Vincent, Yves Etienne, and Maurice Niddam. 2016. The Amygdaloid Nuclear Complex. New York: Springer.

DOSITS. 2016. “How Do Fish Hear?” Accessed January 28, 2013. www.dosits.org/animals/soundreception/fishhear/.

Fitch, W. Tecumseh. 2010. The Evolution of Language. Cambridge: Cambridge University Press.

Fritzsch, B., and K.W. Beisel. 2001. “Evolution of the Nervous System.” Brain Research Bulletin 55 (6): 711–721.

Holman, Tomlinson. 2001. “Future History.” Surround Professional, March–April: 58.

Ibn-Ahmad al-Bīrūnī, Muhammad. 1879. The Chronology of Ancient Nations: An English Version of the Arabic Text of the Athâr-ul-Bâkiya of Albîrûnî, Or “Vestiges of the Past.” London: William H. Allen.

Jourdain, Robert. 1997. Music, the Brain and Ecstasy. New York: William Morrow.

Landau. 2001. Accessed May 7, 2001. http://Landau1.phys.Virginia.EDU/classes/109/lectures/spec_rel.html.

Levitin, Daniel J. 2007. This Is Your Brain On Music. New York: Penguin Group/Plume.

Manley, Gerald A., and Christine Köppl. 1998. “Phylogenetic Development of the Cochlea and Its Innervation.” Current Opinion in Neurobiology 8 (4): 468–474.

Martindale, Mark Q., and Jonathan Q. Henry. 1998. “The Development of Radial and Biradial Symmetry: The Evolution of Bilaterality.” American Zoologist 38 (4): 672–684.

Merker, Bjorn H., Guy S. Madison, and Patricia Eckerdal. 2009. “On the Role and Origin of Isochrony in Human Rhythmic Entrainment.” Cortex 45 (1): 4–17.

New Scientist. 2011. “A Brief History of the Brain.” September 21. Accessed January 31, 2016. www.newscientist.com/article/mg21128311-800-a-brief-history-of-the-brain/.

Parham, Kourosh, and James F. Willott. 1990. “Effects of Inferior Colliculus Lesions on the Acoustic Startle Response.” Behavioral Neuroscience 104 (6): 831–840.

Parker, Andrew R. 2011. “On the Origin of Optics.” Optics & Laser Technology 43 (2): 323–329.

Popper, Arthur N., Christopher Platt, and Peggy L. Edds. 1992. “Evolution of the Vertebrate Inner Ear: An Overview of Ideas.” In The Evolutionary Biology of Hearing, edited by Douglas B. Webster, Richard R. Fay, and Arthur N. Popper. New York: Springer-Verlag.

Rankin, Catharine H., Thomas Abrams, Robert J. Barry, Seema Bhatnagar, David F. Clayton, John Colombo, Gianluca Coppola, et al. 2009. “Habituation Revisited: An Updated and Revised Description of the Behavioral Characteristics of Habituation.” Neurobiology of Learning and Memory 92 (2): 135–138.

Robson, David. 2011. “A Brief History of the Brain.” September 24. Accessed January 29, 2015. www.newscientist.com/article/mg21128311-800-a-brief-history-of-the-brain/.

Thomas, Richard. 2010. “The Sounds of Time.” In Sound: A Reader in Theatre Practice, edited by Ross Brown, 177–187. London: Palgrave Macmillan.

Tremaine, Howard M. 1969. Audio Cyclopedia. 2nd Edition. Indianapolis: Howard Sams.

Unruh, Delbert. 2006. The Designs of Tharon Musser. Syracuse, NY: Broadway Press.

Wood, David C. 1988. “Habituation in Stentor: Produced by Mechanoreceptor Channel Modification.” Journal of Neuroscience 8 (7): 2254–2258.