In the previous chapters, you installed your host and got to know your way around it. You then learned how to add and configure storage hardware. Now it’s time to look at how to make the software work for you. In this chapter, we will cover the infrastructure services that help you manage the basics of your network.

We’ll first describe how to keep the time on your systems synchronized, which is important because a lot of applications rely on your host having the correct time. In the process, we’ll introduce you to the Network Time Protocol (NTP).

We’ll also cover the Domain Name System (DNS), which is the glue that allows networks like the Internet to function by allowing hosts to find one another. We’ll detail the components of DNS and how to set up and manage a DNS server.

We’ll then discuss the Dynamic Host Configuration Protocol (DHCP), which is used to assign addresses and network configuration to your hosts. Using DHCP means you don’t have to configure individual network settings for clients in your network; rather, this can be automatically provided. You’ll learn about how to use DHCP and how to set up address allocation and pass network configuration information to your hosts.

Note

We’ll look at other ways to automatically configure hosts in Chapter 19.

Finally, we’ll expand on the Secure Shell (SSH) service and show you how to easily access hosts and how to transfer files between hosts using SSH.

Keeping Time

Of course, having systems keep time is very important. Imagine having your Linux host powering your solid rocket boosters were out of sync with your main thrusters? When you timed both to burn you could be seconds to minutes out of sync. In the more mundane world imagine the horror of database transactions having incorrect timestamps! I don’t even want to think about it.

Let’s look at keeping time, or at least our server with the same relative time. The general-purpose tool for keeping time is NTP which you will find on many systems. On Red Hat-derived systems you find Chrony.

Time with timedatectl

We would like to show you how to manage time on your Linux server with the timedatactl command. This comes as part of the systemd system. With it we can do the following:

Set the current time

Set the date

Set the time zone

First let’s look at the current status:

$ sudo timedatectl statusLocal time: Fri 2016-09-30 21:22:26 EDTUniversal time: Sat 2016-10-01 01:22:26 UTCRTC time: Fri 2016-09-30 18:06:27Time zone: America/New_York (EDT, -0400)NTP enabled: yesNTP synchronized: yesRTC in local TZ: noDST active: yesLast DST change: DST began atSun 2016-03-13 01:59:59 ESTSun 2016-03-13 03:00:00 EDTNext DST change: DST ends (the clock jumps one hour backwards) atSun 2016-11-06 01:59:59 EDTSun 2016-11-06 01:00:00 EST

There we have our current time and date status. You can see the local time, UTC (Coordinated Universal Time), time zone, and daylight saving information.

You can see from that information that our time zone is set to New York. Let’s demonstrate how to change that to our local time zone.

First we are going to list the time zones, but because the result is a very long list we are going to use grep to return only the result for Melbourne.

$ timedatectl list-timezones |grep MelbAustralia/Melbourne

Now to set the time zone we issue the following:

$ sudo timedatectl set-timezone Australia/MelbourneNow when we check our status we have the correct time zone set.

$ timedatectl statusLocal time: Sat 2016-10-01 11:29:01 AESTUniversal time: Sat 2016-10-01 01:29:01 UTCRTC time: Fri 2016-09-30 18:13:03Time zone: Australia/Melbourne (AEST, +1000)NTP enabled: yes

You can use the timedatectl --help option to list more options. For example, to set the system time you would issue timedatectl set-time. Managing your time with timedatectlis very easy. Let’s move on to how we manage keeping your hosts time in sync with world clocks automatically.

Network Time Protocol

We’ll start by showing you how to keep all system clocks on your hosts synchronized. Though this might seem a trivial issue, having system clocks match means your log entries will all carry consistent timestamps. This in turn means you can easily correlate log entries from different hosts, should the need arise. Synchronized system clocks are also a prerequisite for the functionality we’ll be enabling later. You can’t simply rely on your host’s motherboard’s onboard clocks, as their quality varies a lot and some can run out of sync by as much as several minutes each day.

We have explained a little about NTP already in Chapter 2. Time services are provided by a service called the Network Time Protocol. NTP servers provide synchronization services to a client that connects to them, and they also synchronize themselves with upstream time servers. The layers in this model are called strata, with the highest level, stratum 0, consisting of dedicated time hardware such as atomic clocks or satellite receivers. Servers connected to these stratum 0 time sources are called stratum 1 servers. Servers that synchronize off stratum 1 servers are stratum 2 servers, and so on.

Note

You can read more about NTP strata at http://www.akadia.com/services/ntp_synchronize.html .

You can make use of NTP servers in two ways. One is by running a client utility called ntpdate that synchronizes the system clock each time you run it. The other is to run an NTP service that automatically synchronizes whenever the system clock runs too far out of sync with the actual time. A lot of systems actually use both methods. If the system clock and atomic time differ too much, it can take a while for a system to synchronize with an upstream time server. To overcome this, the ntpdate utility is invoked and the clock is synchronized before the NTP service is started.

Let’s have a look at the ntpdate utility first. On both CentOS and Ubuntu it is provided by the ntpdate package. To update the system time, run the utility with the upstream server address as the only command-line parameter. It needs to be run as root, in order to be able to update the system clock.

$ sudo ntpdate pool.ntp.org24 Jun 21:25:35 ntpdate[1565]: step time server 220.233.156.30 offset 1.810551 sec

Note

If you already have an ntpd daemon running, the ntpdate command will fail with a message similar to this: “24 Jun 23:53:05 ntpdate[22609]: the NTP socket is in use, exiting.” You can use systemctl ntpd stop and try again.

The ntpdateutility connected to one of the pool.ntp.org servers and adjusted our system time by 1.810551 seconds. There are two effect ways to make sure the system clock remains synchronized, you can add an entry in /etc/crontab that runs ntpdate once, say, every two hours. Or you can use the more effective and accurate ntp daemon.

If you are going to use the cron method , you should redirect standard input and standard output to /dev/null, so you don’t receive twice-hourly e-mails.

0 */2 * * * root /usr/sbin/ntpdate pool.ntp.org > /dev/null 2>&1However, you’d need to install and maintain such a crontab entry on each of your hosts, and even then, depending on the quality of the hardware, the system clock can skew quite a lot over the course of two hours. You can ensure that the system clock is adjusted whenever it attempts to run out of sync by installing and running an NTP daemon on your host. This will keep your host synchronized and also allow you to use it to synchronize other hosts on your network.

The NTP daemon and some associated utilities are provided by the ntp package. You need to install it via yum install ntp on CentOS or sudo aptitude install ntp on Ubuntu. However each distribution has a slightly different ntp.conf file and we will show you the Ubuntu version. When it starts, the ntpd service will read its options from the /etc/ntp.conf file and listen on UDP port 123. When you look at this configuration file, you can see it consists of two main sections: first is the actual time source configuration and second is the authorization configuration. We’ll start with the reporting and time source configuration as shown in Listing 10-1.

Listing 10-1. ntp.conf

# /etc/ntp.conf, configuration for ntpd; see ntp.conf(5) for helpdriftfile /var/lib/ntp/ntp.drift# Enable this if you want statistics to be logged.#statsdir /var/log/ntpstats/statistics loopstats peerstats clockstatsfilegen loopstats file loopstats type day enablefilegen peerstats file peerstats type day enablefilegen clockstats file clockstats type day enable# Specify one or more NTP servers.# Use servers from the NTP Pool Project. Approved by Ubuntu Technical Board# on 2011-02-08 (LP: #104525). See http://www.pool.ntp.org/join.html for# more information.pool 0.ubuntu.pool.ntp.org iburstpool 1.ubuntu.pool.ntp.org iburstpool 2.ubuntu.pool.ntp.org iburstpool 3.ubuntu.pool.ntp.org iburst# Use Ubuntu's ntp server as a fallback.pool ntp.ubuntu.com

The driftfile directive gives the server a place to store information about the idiosyncrasies of your local system clock. It stores the clock frequency offset every hour , depending on the tolerance of drift, and uses this information when the daemon is started. If the file is not there it sets the frequency offset to zero. Over time, it will use this information to report the time more precisely between synchronization attempts, as the daemon knows how the local clock behaves.

Statistics reporting is not enabled by default, as the statsdir option is not enabled. However, if you were to uncomment that line, the next directive, statistics, would enable loopstats, peerstats, and clockstats reporting to files in /var/log/ntpstats.

loopstatscollects information on the updates made to the local clock by the ntpd server. peerstats logs information about all peers—upstream servers as well as clients that use your server to synchronize. Finally, clockstats writes statistical information about the local clock to the log file.

The filegen directive tells the daemon which file you want this statistical information written to and how often the file needs to be changed. In our example, a new version of each of these files is created each day due to the type day directive.

Finally, the pool option tells ntpd which upstream servers to use for synchronization. To make sure your host stays in sync, it is generally a good idea to add multiple server directives with multiple different servers. You can specify individual time servers with the server option or a pool of servers as we have done here. The iburst option tells ntp to send an extra eight packets if it doesn’t get an initial response, originally for devices like modems and ISDN that can be slow in establishing connections. We’ll explain more about the pool.ntp.org servers in a moment.

First let’s quickly look at the next section in the /etc/ntp.conf file, which defines which hosts may access your NTP server . On CentOS, this section is listed at the top of the file, as you can see in Listing 10-2.

Listing 10-2. Access Control in ntp.conf

# By default, exchange time with everybody, but don't allow configuration.restrict -4 default kod notrap nomodify nopeer noquery limitedrestrict -6 default kod notrap nomodify nopeer noquery limited# Local users may interrogate the ntp server more closely.restrict 127.0.0.1restrict ::1# Needed for adding pool entriesrestrict source notrap nomodify noquery

The restrict keyword is used to define access classes. The same access levels are defined for IPv4 and IPv6 clients here, by using the -4 and -6 parameters.

default is a wildcard keyword that matches all possible addresses. Kod, “kiss-of-death” is used to slow down clients that exceed a defined rate limit, by sending a special response packet. These limits are defined with the discard option, and since we haven’t defined such limits, it’s not used here. notrap rejects any control packets that get sent, while nomodify disallows attempts to modify the time on the server. nopeer ensures your server doesn’t start using a connecting client as an upstream NTP server. The noquery prevents your server from being queried for peer and other statistics. Finally, limited is used to deny the time service if packets violate the limit set in the discard option.

The second set of restrict directives ensures that connections from the local machine, 127.0.0.1 (IPv4) and ::1(IPv6), can interrogate and reconfigure the NTP server. None of these prevent a client from synchronizing with your NTP server, though.

The last set of restrict directives allow for the pool server to become peers, meaning we can query them for time information.

restrict source notrap nomodify noqueryHere the source refers to the the pool servers and you will notice that nopeer is not set but the other restricts are still in place.

You can further restrict your ntp servers by using cryptographic keys to ensure that only servers that can participate in exchanging public key encrypted packets can use your ntp services . It is uncommon in most scenarios and impossible when using the pool.ntp.org servers. If you would like more information, please see www.ntp.org/ntpfaq/NTP-s-algo-crypt.htm .

Note

You can find more information on NTP configuration and access control here: http://doc.ntp.org/4.1.1/confopt.htm .

The Global NTP Server Pool

Many organizations run their own time servers and make them accessible to third parties. Microsoft and Apple run time servers that are used by default by their respective operating systems, and many Linux vendors do the same.

However, when you want to add extra servers (using the server configuration) to your own ntp.conf file, you will need to know their addresses. Luckily, there is an open source project that aims to provide a pool of local NTP servers for all continents. This project is called pool.ntp.org , and the participants are individual users and organizations that allow third parties to use their servers for synchronization.

The project provides DNS-based groups for various server strata and geographical locations—for instance, 1.pool.ntp.org is provided by stratum 1 servers, au.pool.ntp.org contains only servers located in Australia, and us.pool.ntp.org is provided by servers located in the United States. By adding a selection of pool.ntp.org servers, you are assured of always having up-to-date and nearby servers available for synchronization.

Note

You can read more about the project and join the pool at www.pool.ntp.org/ .

Using the host command, we are going to see what 0.ubuntu.pool.ntp.org will return as shown in Listing 10-3. Listing 10-3 showspossible servers our ntpd daemon will try to synchronize against.

Listing 10-3. Ubuntu ntp Pool Servers

$ host 0.ubuntu.pool.ntp.org0.ubuntu.pool.ntp.org has address 129.250.35.2500.ubuntu.pool.ntp.org has address 129.250.35.2510.ubuntu.pool.ntp.org has address 27.124.125.2520.ubuntu.pool.ntp.org has address 121.0.0.41

Compare that with what is returned from CentOS’s 0.centos.pool.ntp.org.

$ host 0.centos.pool.ntp.org0.centos.pool.ntp.org has address 27.124.125.2520.centos.pool.ntp.org has address 121.0.0.410.centos.pool.ntp.org has address 129.250.35.2500.centos.pool.ntp.org has address 129.250.35.251

Hey look, they contain the same servers. And what if we looked at 0.au.pool.ntp.org

$ host 0.au.pool.ntp.org0.au.pool.ntp.org has address 129.250.35.2510.au.pool.ntp.org has address 27.124.125.2520.au.pool.ntp.org has address 121.0.0.410.au.pool.ntp.org has address 129.250.35.250

Well isn’t that interesting. You should test the results from 0.us.pool.ntp.org and see what you get. It is a common practice to set your local network hosts to two or three local ntp servers, which then synchronize with servers from the ntp pool.

If you choose to change these settings, your ntp.conf can restart the NTP server with sudo service ntp restart on Ubuntu or sudo systemctl restart ntpd on CentOS. The server writes any status updates to the system logger; you can find them in /var/log/syslog on Ubuntu or in /var/log/messages on CentOS. Listing 10-4 shows you the output of a server that is started and then synchronizes with upstream servers.

Listing 10-4. ntpd Status in the Ubuntu System Log

Jun 25 09:07:00 ubuntu ntp[13644]: * Starting NTP server ntpdJun 25 09:07:00 ubuntu ntpd[13653]: ntpd [email protected] Fri Apr 8 20:58:07 UTC 2016 (1): StartingJun 25 09:07:00 ubuntu ntpd[13653]: Command line: /usr/sbin/ntpd -p /var/run/ntpd.pid -g -u 112:116Jun 25 09:07:00 ubuntu ntp[13644]: ...done.Jun 25 09:07:00 ubuntu systemd[1]: Started LSB: Start NTP daemon.Jun 25 09:07:00 ubuntu ntpd[13655]: proto: precision = 0.059 usec (-24)Jun 25 09:07:00 ubuntu ntpd[13655]: Listen and drop on 0 v6wildcard [::]:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listen and drop on 1 v4wildcard 0.0.0.0:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listen normally on 2 lo 127.0.0.1:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listen normally on 3 enp0s3 10.0.2.15:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listen normally on 4 lo [::1]:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listen normally on 5 enp0s3 [fe80::ff:86ff:fe2d:ca23%2]:123Jun 25 09:07:00 ubuntu ntpd[13655]: Listening on routing socket on fd #22 for interface updatesJun 25 09:07:01 ubuntu ntpd[13655]: Soliciting pool server 203.122.222.45Jun 25 09:07:02 ubuntu ntpd[13655]: Soliciting pool server 27.124.125.251Jun 25 09:07:02 ubuntu ntpd[13655]: Soliciting pool server 103.51.68.133Jun 25 09:07:03 ubuntu ntpd[13655]: Soliciting pool server 130.102.128.23Jun 25 09:07:03 ubuntu ntpd[13655]: Soliciting pool server 150.101.217.196Jun 25 09:07:03 ubuntu ntpd[13655]: Soliciting pool server 121.0.0.41Jun 25 09:07:04 ubuntu ntpd[13655]: Soliciting pool server 121.0.0.42Jun 25 09:07:04 ubuntu ntpd[13655]: Soliciting pool server 202.127.210.36Jun 25 09:07:04 ubuntu ntpd[13655]: Soliciting pool server 202.127.210.37Jun 25 09:07:04 ubuntu ntpd[13655]: Soliciting pool server 200.160.7.186

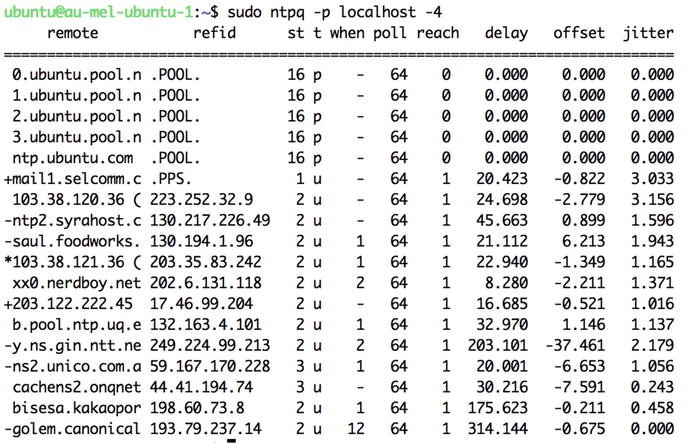

We can also verify that our host is synchronized by querying the NTP server from the local host via the ntpq command, as shown in Listing 10-4. We use the -p option to list any peers we are connected to and the -4 option to resolve the hostname to an IPv4 address.

In Figure 10-1 you can see the output of our peer listing. The remote host starting with a ‘*’ is the current time source and those with a ‘+’ are hosts that have been selected to be used in the final set for the weighted average computation (do you remember that from Chapter 2?); those with a ‘-‘ have been discarded. The st column is the server stratum and we are peered to both stratum 1 and 2 servers via the Ubuntu stratum 16 servers (you shouldn’t need to peer to any peer 1 stratum servers directly). You can also see other details about the delay, offset, and jitter.

Figure 10-1. Listing connected peers

You can now configure the hosts on your network to use the bastion host as their upstream NTP server and you can verify that they work via the ntpq command.

Chrony

Chrony is an alternative to the NTP service daemon that keeps hosts in sync with world clocks. There are several differences between Chrony and NTP in their implementation but both use the same upstream time sources. The main differences between the two implementations are the following:

Chrony does not support multicast and manycast.

Chrony is useful in situations where networks are intermittent.

Chrony works better in congested networks and virtual hosts.

Install and Configure Chrony

Chrony is quick and easy to install and is available on both CentOS and Ubuntu. Let’s quickly show how to install and set it up.

Installation is simple via package management on both distributions.

$ sudo yum install –y chrony$ sudo aptitude install –y chrony

There is a configuration file placed in /etc/chrony.conf on CentOS and /etc/chrony/chrony.conf on Ubuntu. In that file you will find the public time servers and other settings.

Listing 10-5. /etc/chrony.conf from CentOS

# Use public servers from the pool.ntp.org project.# Please consider joining the pool (http://www.pool.ntp.org/join.html).server 0.centos.pool.ntp.org iburstserver 1.centos.pool.ntp.org iburstserver 2.centos.pool.ntp.org iburstserver 3.centos.pool.ntp.org iburst# Ignore stratum in source selection.stratumweight 0# Record the rate at which the system clock gains/losses time.driftfile /var/lib/chrony/drift# Enable kernel RTC synchronization.rtcsync# In first three updates step the system clock instead of slew# if the adjustment is larger than 10 seconds.makestep 10 3# Allow NTP client access from local network.#allow 192.168/16# Listen for commands only on localhost.bindcmdaddress 127.0.0.1bindcmdaddress ::1# Serve time even if not synchronized to any NTP server.#local stratum 10keyfile /etc/chrony.keys# Specify the key used as password for chronyc.commandkey 1# Generate command key if missing.generatecommandkey# Disable logging of client accesses.noclientlog# Send a message to syslog if a clock adjustment is larger than 0.5 seconds.logchange 0.5logdir /var/log/chrony#log measurements statistics tracking

The settings listed in Listing 10-5 are similar to the ntp.conf file we have shown earlier. By default on both Ubuntu and CentOS we don’t allow clients to synchronize from this time service. To do that we need to set the allow setting as follows:

allow 192.168/16You would only set this if you were going to use these hosts in your networks as local network time clocks. Otherwise you can leave it commented out.

You may also need to add the cmdallow setting on CentOS to enable the chronyc command to access the service. We will look at chronyc shortly.

Now we use the systemctl command to enable and start our service. Replace chronyd for chrony on Ubuntu.

$ sudo systemctl enable chronyd && sudo systemctl start chronydThen check the service is running with

$ sudo systemctl status chronydManaging Chrony with Chronyc

Chrony provides a command-line interface tool to query and manage Chrony called chronyc. Again it is similar to how you can use the ntp tool.

You can enter the command-line interface by just typing chronyc or you can access the subcommands from the Linux command shell directly. You can get help with the following:

$ chronyc helpChrony provides tracking information, different metrics about the time service, from the command line:

$ chronyc trackingReference ID : 192.189.54.33 (warrane.connect.com.au)Stratum : 3Ref time (UTC) : Sat Oct 1 00:51:03 2016System time : 0.000043108 seconds slow of NTP timeLast offset : -0.000094345 secondsRMS offset : 0.027604111 secondsFrequency : 459.036 ppm slowResidual freq : -0.012 ppmSkew : 0.430 ppmRoot delay : 0.075154 secondsRoot dispersion : 0.012796 secondsUpdate interval : 260.5 secondsLeap status : Normal

We can see from the the foregoing that we have various metrics describing our system time and our clock accuracy. We can see the stratum we are syncing against, the system time, and the offset.

Next we can view the clock sources using the following:

$ chronyc sources210 Number of sources = 4MS Name/IP address Stratum Poll Reach LastRx Last sample===========================================================================================^+ 0.time.itoc.com.au 2 9 377 446 +798us[ +798us] +/- 73ms^+ dns02.ntl01.nsw.privatecl 2 9 377 249 +1507us[+1507us] +/- 52ms^* warrane.connect.com.au 2 9 377 504 -1782us[-1876us] +/- 50ms^+ 203.122.222.45 2 9 377 183 -200us[ -200us] +/- 85ms

You can use the chronyc command to add and deny access to chrony and to add and remove peers also.

For more information, see the following:

Domain Name System

In Chapter 2 we suggested using descriptive names for hosts. We also provided an IP (Internet Protocol ) address for the host. We can, of course, use the IP address to access our host; however, humans tend to like to use names more than than “strange” numbers. But how do we map the hostname to the IP address? We can use local host’s files to do address-to-name mappings, as we showed you in Chapter 4. But once your network grows beyond more than a handful of hosts, making sure that all copies of this file remain synchronized becomes an effort.

So a way of translating these names to the IP addresses of the hosts was devised. This is called Domain Name System or DNS. A DNS server maintains lists of address-to-hostname (and vice versa) mappings and can be queried by other hosts, or by users directly, using various utilities. DNS can be used to find the IP addresses of hosts on your local network or hosts on networks anywhere in the world. Let’s see how it does this, starting with the root servers.

Note

Before DNS existed, a single hosts.txt file was used. This file was maintained by the Network Information Center (NIC) and distributed to all ARPANET-connected machines via FTP (File Transfer Protocol).

Root Servers

Somehow, a DNS server needs to know which host or hosts to query for the correct address. An apple.com DNS server has no idea about a google.com host, so how does our own DNS server know where to look?

The entire DNS structure is like a large upside-down tree. Each period in a domain name is like a branch in this tree . As you read a domain name from left to right, each period indicates a split to a lower level in the tree, which is closer to the root. These levels are called zones, and for each zone a domain is a part of, a query is done to find out what the name servers are for that zone. One of these servers is then queried in turn to obtain a DNS server for the next zone. The lowest-level zone—the one that all other zones are members of—is called the root zone. We indicate this zone with a single period. The next level consists of top-level domains (TLDs), including generic domains such as net, com, org, and edu, as well as country codes such as au, nz, uk, and us. Figure 10-2 shows a small part of this tree structure.

Figure 10-2. DNS tree structure

When writing a hostname or domain name, you usually leave off the trailing period for the root zone, but when you’re dealing with DNS servers you should explicitly include it, as leaving it off may lead to unexpected results. The DNS information for these TLDs is stored in so-called root servers.

There are presently 13 globally distributed root name servers in the world that return the list of authoritive name servers for the TLDs. There are not actually just 13 servers chugging away at this but a highly distributed cluster of dedicated servers in multiple datacenters. We shall see the role these servers play in the delegation path in the upcoming “dig” section.

Note

Since the root servers are an absolute critical core of the Internet, they have been the target for network attacks. Following is summary list: https://en.wikipedia.org/wiki/Distributed_denial-of-service_attacks_on_root_nameservers .

WHOIS

When an organization or person buys a domain, the root DNS servers need to know which DNS servers further down the tree have been delegated to respond to queries for that domain. The organization in charge of the .com domain is the Internet Corporation for Assigned Names and Numbers (ICANN), and it manages the registrars.

When you buy a domain from a registrar, you can specify the name servers the domain is delegated to. The registrar then ensures that your DNS servers are added in the correct TLD zone, so third parties can use your DNS servers to look up hostnames on your domain. This is called the delegation path.

You can obtain a listing of DNS servers for a given domain by querying the registrar’s database directly. The tool used for this, whois, is handy for making sure DNS delegations are correct. On CentOS and Ubuntu it’s provided by the whois package. After installing the package, we can look at the delegation’s detail for the google.com domain, as shown in Listing 10-6.

Listing 10-6. Using whois to Check Delegation Details

$ whois 'domain google.com'Whois Server Version 2.0Domain names in the .com and .net domains can now be registeredwith many different competing registrars. Go to http://www.internic.netfor detailed information.Domain Name: GOOGLE.COMRegistrar: MARKMONITOR INC.Sponsoring Registrar IANA ID: 292Whois Server: whois.markmonitor.comReferral URL: http://www.markmonitor.comName Server: NS1.GOOGLE.COMName Server: NS2.GOOGLE.COMName Server: NS3.GOOGLE.COMName Server: NS4.GOOGLE.COMStatus: clientDeleteProhibited https://icann.org/epp#clientDeleteProhibitedStatus: clientTransferProhibited https://icann.org/epp#clientTransferProhibitedStatus: clientUpdateProhibited https://icann.org/epp#clientUpdateProhibitedStatus: serverDeleteProhibited https://icann.org/epp#serverDeleteProhibitedStatus: serverTransferProhibited https://icann.org/epp#serverTransferProhibitedStatus: serverUpdateProhibited https://icann.org/epp#serverUpdateProhibitedUpdated Date: 20-jul-2011Creation Date: 15-sep-1997Expiration Date: 14-sep-2020>>> Last update of whois database: Sat, 25 Jun 2016 12:34:50 GMT <<<

We issue the whois command and we specify that we are looking only for the domain google.com. If we search without specifying the domain we are after, every domain that contains google.com in the domain name will be returned. You can see we have retrieved some information about Google’s domain, including the name of the registrar; the name servers it is delegated to; and creation, modification, and expiration dates.

Some registrars also provide contact details for the domain owner via whois. This is something to keep in mind when choosing a registrar to buy your domain from, as it’s a relatively convenient way for spammers to collect e-mail addresses .

Note

Most registrars allow only a limited number of lookups per day from a specific address against their database, to discourage address harvesting.

Querying Name Servers

You will already be using the DNS server or servers run by your Internet service provider to look up addresses for hosts on the Internet. Typing www.google.com is a lot more convenient than having to remember 74.125.19.147 whenever you want to do a web search. The addresses for these DNS servers are stored in the /etc/resolv.conf file. We’ve included ours in Listing 10-7; yours will, of course, be different.

Listing 10-7. /etc/resolv.conf

$ cat /etc/resolv.confsearch example.comnameserver 192.168.1.1nameserver 192.168.1.254

When you visit a web site or connect to a host via SSH, the application in question performs a host lookup using these DNS servers. These applications use a system library, which first checks your /etc/hosts file and then queries a name server only if needed.

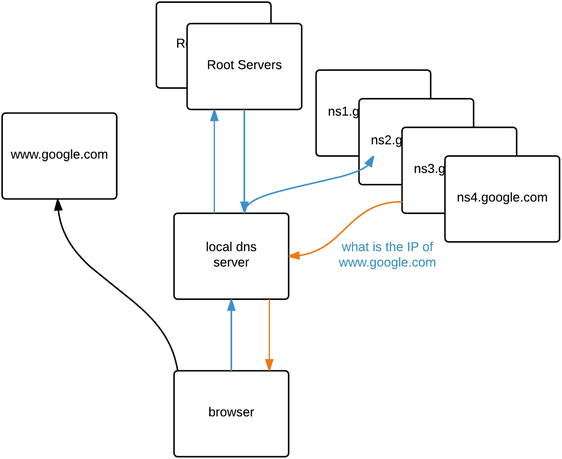

In the following Figure 10-3 we can see a how a browser will request an IP address from their local dns, it will then query a list of root servers to find the name servers it needs to ask for the google.com domain.

Figure 10-3. Simple recursive DNS query

The local dns server will first need to ask the ‘.’ root servers for a server that can answer for the ‘.com.’ zone. The local dns server will then ask one of the ‘.com.’ servers which server can answer queries for ‘‘.google.com.’. We then query one of the nsX.google.com. servers for the ‘www’ record. The browser now knows which IP address to use when looking for www.google.com.

The host Command

You can also query DNS servers manually. The DNS-related tools are provided by the bind-utils package on CentOS and the dnsutils package on Ubuntu, so install them. Direct host or address lookups can be done via the host utility.

Note

You may be used to using the deprecated nslookup utility. The host command is its replacement.

You pass the hostname or address you want to look up and optionally the DNS server you want to query, as shown in Listing 10-8. If you leave off the DNS server, the utility will use one defined in /etc/resolv.conf.

Listing 10-8. Querying a DNS Server with host

$ host www.google.com 192.168.1.1Using domain server:Name: 192.168.1.1Address: 192.168.1.1#53Aliases:www.google.com has address 150.101.161.167www.google.com has address 150.101.161.173www.google.com has address 150.101.161.174www.google.com has address 150.101.161.180www.google.com has address 150.101.161.181www.google.com has address 150.101.161.187www.google.com has address 150.101.161.146www.google.com has address 150.101.161.152www.google.com has address 150.101.161.153www.google.com has address 150.101.161.159www.google.com has address 150.101.161.160www.google.com has address 150.101.161.166www.google.com has IPv6 address 2404:6800:4006:800::2004

In Listing 10-8, we’ve asked the DNS server running on 192.168.1.1 to look up an address for www.google.com it returns several different IP addresses, including an IPv6 address. The Google web site will respond on any of these addresses and is used in a round robin, where the order of the returned list will change every so often (like we saw with the ntp pool servers earlier).

Conversely, we can do a dns lookup of a hostname for an IP addresses as well.

$ host 205.251.193.236236.193.251.205.in-addr.arpa domain name pointer ns-492.awsdns-61.com.

What that has done is query the DNS server asking if it knows about any records for the address 205.251.193.236 and what host that refers to. It’s called a reverse name lookup and may not always return any results as you don’t have to record that information in your DNS records, it is just sometimes nice to have.

The dig Command

Though host is useful, it does not generally provide enough information to help resolve any DNS problem you might have, especially when you run your own DNS servers. A more flexible utility is dig, which is also provided by the bind-utils or dnsutils package.

At its most basic level, dig also does name- or address-based lookups, but it provides additional information with each lookup. Let’s do the same lookup we did in Listing 10-8, but use dig instead (see Listing 10-9).

Listing 10-9. Querying a DNS Server with dig

$ dig www.google.com; <<>> DiG 9.10.3-P4-Ubuntu <<>> www.google.com;; global options: +cmd;; Got answer:;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 33352;; flags: qr rd ra; QUERY: 1, ANSWER: 12, AUTHORITY: 4, ADDITIONAL: 5;; OPT PSEUDOSECTION:; EDNS: version: 0, flags:; udp: 4096;; QUESTION SECTION:;www.google.com. IN A;; ANSWER SECTION:www.google.com. 41 IN A 150.101.161.153www.google.com. 41 IN A 150.101.161.159www.google.com. 41 IN A 150.101.161.160www.google.com. 41 IN A 150.101.161.166www.google.com. 41 IN A 150.101.161.167www.google.com. 41 IN A 150.101.161.173www.google.com. 41 IN A 150.101.161.174www.google.com. 41 IN A 150.101.161.180www.google.com. 41 IN A 150.101.161.181www.google.com. 41 IN A 150.101.161.187www.google.com. 41 IN A 150.101.161.146www.google.com. 41 IN A 150.101.161.152;; AUTHORITY SECTION:google.com. 2071 IN NS ns4.google.com.google.com. 2071 IN NS ns1.google.com.google.com. 2071 IN NS ns3.google.com.google.com. 2071 IN NS ns2.google.com.;; ADDITIONAL SECTION:ns1.google.com. 179 IN A 216.239.32.10ns2.google.com. 4851 IN A 216.239.34.10ns3.google.com. 186 IN A 216.239.36.10ns4.google.com. 8300 IN A 216.239.38.10;; Query time: 11 msec;; SERVER: 192.168.1.1#53(192.168.1.1);; WHEN: Sun Jun 26 00:11:48 UTC 2016;; MSG SIZE rcvd: 371

In Listing 10-9 you can see that dig outputs the query results in distinct sections. First comes some information about the command you’re running, including whether or not the query succeeded (opcode: QUERY, status: NOERROR, id: 33352). Next is the query section, which shows you what you actually sent to the DNS server. In this case, we are looking for an A record for the host www.google.com .

;; QUESTION SECTION:;www.google.com. IN A

An A record is one that maps names to addresses. We’ll cover record types in more detail shortly.

Note

If a record doesn’t exist you will get a status response of “NXDOMAIN.” For a list of all the possible responses see www.iana.org/assignments/dns-parameters/dns-parameters.xhtml#dns-parameters-6 .

The answer section holds the response to your query . In this case, it says that www.google.com has multiple A records assigned to it.

;; ANSWER SECTION:www.google.com. 41 IN A 150.101.161.153www.google.com. 41 IN A 150.101.161.159...

In the authority section, dig lists the authoritative name servers for this query. Here you can see that authoritative responses for the google.com. zone can be obtained from four DNS servers.

;; AUTHORITY SECTION:google.com. 2071 IN NS ns4.google.com.google.com. 2071 IN NS ns1.google.com.google.com. 2071 IN NS ns3.google.com.google.com. 2071 IN NS ns2.google.com.

dig provides us with the IP addresses of these four servers in the additional section.

;; ADDITIONAL SECTION:ns1.google.com. 179 IN A 216.239.32.10...

Finally, dig tells us how long the query took, which server was queried, when the query was run, and how much data it received.

;; Query time: 11 msec;; SERVER: 192.168.1.1#53(192.168.1.1);; WHEN: Sun Jun 26 00:11:48 UTC 2016;; MSG SIZE rcvd: 371

The actual response data is displayed in five columns. This format is identical to the way Berkeley Internet Name Domain (BIND) defines domains internally, where records are defined using five fields and semicolons are used for comments. These five fields are the record name, the time until the data expires (better known as time to live or TTL), the record class (which is virtually always IN for Internet), the record type, and finally the data for this record.

<record name> <ttl> <class> <type> <data>

www.google.com. 41 IN A 150.101.161.153You can use dig to query any DNS server for specific record types as well. Table 10-1 lists the most commonly used record types. We’ll set up some of these later as well.

Table 10-1. DNS Record Types

Type | Used For |

|---|---|

SOA | Defines a serial number and expiration information for the domain |

A | IPv4 hostname to address maps |

AAAA | IPv6 hostname to address maps |

CNAME | Adds an alias for an existing A or AAAA record |

MX | Specifies mail servers for the domain |

TXT | Text records, often used with SPF or DKIM (MX record validation) and other machine-readable data |

SRV | Specifies service records associated with services |

NS | Specifies DNS servers for the domain |

PTR | Maps an address to a hostname |

DS | Delegation Signer—used in DNSSEC |

DNSKEY | DNSKEY record used in DNSSEC |

RRSIG | Resource record signature used in DNSSEC |

Armed with this knowledge, you can now make use of the more advanced features of dig. We previously invoked it with just a hostname as parameter, but a full command usually looks like dig @server name type. In the case of our first example, the full explicit command would have been dig @192.168.1.1 www.google.com A.

Note

To use the host utility for the same kind of lookup, enter host -v -t <type> <name> <server>.

We found out the main DNS servers for the google.com domain via whois earlier. To check that these DNS servers are configured properly, we can query them for all records of the NS type in the google.com domain, as shown in Listing 10-10.

Listing 10-10. Querying a DNS Server for a Specific Record Type

$ dig @ns1.google.com google.com NS; <<>> DiG 9.10.3-P4-Ubuntu <<>> google.com NS;; global options: +cmd;; Got answer:;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 44887;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 5;; OPT PSEUDOSECTION:; EDNS: version: 0, flags:; udp: 4096;; QUESTION SECTION:;google.com. IN NS;; ANSWER SECTION:google.com. 10158 IN NS ns2.google.com.google.com. 10158 IN NS ns3.google.com.google.com. 10158 IN NS ns1.google.com.google.com. 10158 IN NS ns4.google.com.;; ADDITIONAL SECTION:ns1.google.com. 8267 IN A 216.239.32.10ns2.google.com. 12939 IN A 216.239.34.10ns3.google.com. 8274 IN A 216.239.36.10ns4.google.com. 1987 IN A 216.239.38.10;; Query time: 9 msec;; SERVER: 10.0.2.3#53(10.0.2.3);; WHEN: Sun Jun 26 01:57:01 UTC 2016;; MSG SIZE rcvd: 175

Listing 10-10 shows us that the ns1.google.com DNS server does indeed have information about four name servers for the google.com domain, so it appears to be configured correctly.

Sometimes you need to know the delegation path (Listing 10-11) to your name servers. To find out that information we can use dig with the +trace option.

Listing 10-11. Seeing the Delegation Path

$ dig +trace www.google.com; <<>> DiG 9.10.3-P4-Ubuntu <<>> +trace www.google.com;; global options: +cmd. 9903 IN NS a.root-servers.net.. 9903 IN NS d.root-servers.net.. 9903 IN NS j.root-servers.net.. 9903 IN NS e.root-servers.net.. 9903 IN NS k.root-servers.net....<snip>.... 9903 IN NS h.root-servers.net....<snip>...;; Received 913 bytes from 10.0.2.3#53(10.0.2.3) in 12 mscom. 172800 IN NS a.gtld-servers.net.com. 172800 IN NS b.gtld-servers.net.com. 172800 IN NS c.gtld-servers.net.com. 172800 IN NS d.gtld-servers.net.com. 172800 IN NS e.gtld-servers.net....<snip>...com. 172800 IN NS m.gtld-servers.net....<snip>...;; Received 738 bytes from 192.58.128.30#53(j.root-servers.net) in 25 msgoogle.com. 172800 IN NS ns2.google.com.google.com. 172800 IN NS ns1.google.com.google.com. 172800 IN NS ns3.google.com.google.com. 172800 IN NS ns4.google.com....<snip>...;; Received 664 bytes from 192.52.178.30#53(k.gtld-servers.net) in 176 mswww.google.com. 300 IN A 150.101.213.166www.google.com. 300 IN A 150.101.213.159...<snip>...www.google.com. 300 IN A 150.101.213.174;; Received 224 bytes from 216.239.34.10#53(ns2.google.com) in 177 ms

We have removed some of the authentication records listed in the output to make it clearer. With the +trace option we can see that the ‘.’ root zone delegates to the servers in the ‘com.’ zone which then delegate to the name servers at ‘google.com.’. If you are setting up a DNS server as the authority for your domain, you can validate that your delegation path is correct with the +trace option.

Tip

There is often a “propagation delay” as the world of DNS reorganizes itself with updates. This delay is dependent on record TTLs and how often name servers update their records. Using dig is useful in displaying the world of DNS.

Zone Metadata

We mentioned earlier that one of the columns listed in dig results is TTL. This field defines how long DNS records are valid for, which allows your local applications to cache the results of a DNS lookup for a certain time. This way, there is no need to perform several DNS lookups for each connection you make (remember, one or more lookups are performed to find an authoritative DNS server first), which speeds up the process of establishing network connections considerably.

The other important type is called SOA, for Start of Authority . This record contains meta-information about the zone. For instance, it includes a serial number so servers can check if the zone was changed, and it defines a contact e-mail for the server administrator as well.

Let’s ask one of the Google servers for the SOA record of the google.com domain (Listing 10-12). We’ve left the authoritative and extra sections off the output.

Listing 10-12. Querying a DNS Server for an SOA Record

$ dig google.com @ns1.google.com SOA; <<>> DiG 9.10.3-P4-Ubuntu <<>> google.com @ns1.google.com SOA;; global options: +cmd;; Got answer:;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 43145;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 4, ADDITIONAL: 4;; WARNING: recursion requested but not available;; QUESTION SECTION:;google.com. IN SOA;; ANSWER SECTION:google.com. 60 IN SOA ns2.google.com. dns-admin.google.com. 125880391 900 900 1800 60

Listing 10-12 shows that the SOA record consists of seven fields, which define how other DNS servers interact with this zone. After the DNS type (SOA) you will see seven fields.

<name server> <admin email address> serial # refresh retry expiry nx ttlns2.google.com. dns-admin.google.com. 125880391 900 900 1800 60

We will look at them in greater detail shortly but we would like to mention the last item in the list, which is the negative cache TTL, or nx ttl. This tells other the dns servers to cache negative results (“NXDOMAIN”) to prevent authoritive name servers from continuously performing a lookup for a host that does not exist. In this case, a remote server should keep responding with “No such host” for 60 seconds after the initial query before it queries the authoritative name servers again. This period can be longer than 60 seconds and some might be as long as a week.

Running Caching DNS

Not all ISPs’ name servers are equally reliable, and some can be slow, so why don’t we run our own? A few DNS server software packages are available, but the most commonly used and well-known is Berkeley Internet Name Domain (BIND) .

Note

BIND is named after the place where it was developed, the University of California at Berkeley.

The software is provided by the bind package on CentOS. You install these via yum install bind. On Ubuntu, these are provided by the bind9 package, which you add via sudo aptitude install bind9. The DNS server binary itself is called named—because it is name(server) daemon.

The main configuration file shipped on Ubuntu is /etc/bind/named.conf, while on CentOS the /etc/named.conf file is used. Listing 10-13 shows you the basic file that ships with Ubuntu.

Listing 10-13. The Top of /etc/bind/named.conf in Ubuntu

// This is the primary configuration file for the BIND DNS server named.//// Please read /usr/share/doc/bind9/README.Debian.gz for information on the// structure of BIND configuration files in Debian, *BEFORE* you customize// this configuration file.//// If you are just adding zones, please do that in /etc/bind/named.conf.localinclude "/etc/bind/named.conf.options";include "/etc/bind/named.conf.local";include "/etc/bind/named.conf.default-zones";

This file contains references to other files, which contain the actual configuration settings and (optionally) information about domains that are hosted locally. Comments in these configuration files are prefixed with a double slash (//) and all directives and blocks are terminated with a semicolon (;).

The include directive tells named to read the specified file and process any directives it contains, including nested include commands. In this case, the named.conf.options file contains the options section, which affects the way named operates. This is the file you would edit to make changes to your configuration on Ubuntu (see Listing 10-14).

Listing 10-14. Default named Options in Ubuntu

options {directory "/var/cache/bind";// If there is a firewall between you and nameservers you want// to talk to, you may need to fix the firewall to allow multiple// ports to talk. See http://www.kb.cert.org/vuls/id/800113// If your ISP provided one or more IP addresses for stable// nameservers, you probably want to use them as forwarders.// Uncomment the following block, and insert the addresses replacing// the all-0's placeholder.// forwarders {// 0.0.0.0;// };//========================================================================// If BIND logs error messages about the root key being expired,// you will need to update your keys. See https://www.isc.org/bind-keys//========================================================================dnssec-validation auto;auth-nxdomain no; # conform to RFC1035listen-on-v6 { any; };};

The directorydirective determines that the location named will be used to look for files and also to write any files, if it’s configured to do so. You can override this for individual files by specifying a full system path starting with /.

Forwardersare what named calls upstream DNS servers. If you want your caching name server to use only your ISP’s name server or a set of other name servers, you can list their IP addresses in the forwarders block, each on a line by itself and terminated by a semicolon.

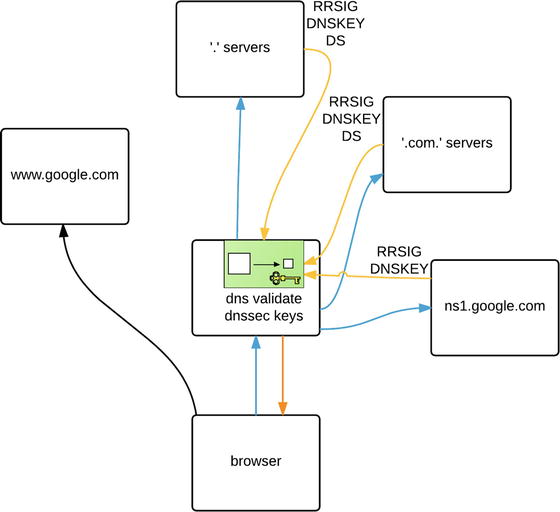

The next option, dnssec-validation, defaults to auto. This means it will try to validate the replies from dns servers with DNSSEC enabled if it receives a delegated signer record. DNSSEC is designed to prevent response tampering or dns cache poisoning, where a malicious actor can inject fake records into dns cache servers and route Internet traffic to hosts of their choosing.

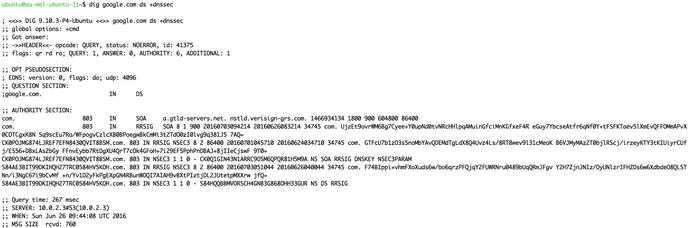

In Figure 10-4 we use the dig tool to find out more information about the google.com dnssec records.

Figure 10-4. Google’s DNSSEC records

We apologize if you have to squint, but we used the dig google.com ds +dnssec command to query for the delegated signing records for dnssec. Take a look at the following sidebar for more information about what they mean.

The next option, auth-nxdomain, is set to no. This controls how the name server responds to lookups for domains that it thinks do not exist, which means that your local DNS server will not claim to be authoritative if it cannot find information about a domain. This in turn means that a client can continue querying other DNS servers, if this one cannot find information about a domain.

Finally, the listen-on-v6 option tells BIND that it should listen for queries on all available IPv6 addresses on all network interfaces.

To avoid the chicken-and-egg problem, a caching DNS server ships with a built-in listing of root servers. You can find them in /var/named/named.ca on CentOS and /etc/bind/db.root on Ubuntu. You can also use dig to obtain a current list of root servers, by querying a root server for all records of type NS in the “.” zone.

$ dig @a.root-servers.net . NS > db.root.Let’s now take a look at the /etc/named.conf file (see Listing 10-15) that is installed when we install the BIND package on CentOS.

Listing 10-15. CentOS /etc/named.conf

//// named.conf//// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS// server as a caching only nameserver (as a localhost DNS resolver only).//// See /usr/share/doc/bind*/sample/ for example named configuration files.//options {listen-on port 53 { 127.0.0.1; };listen-on-v6 port 53 { ::1; };directory "/var/named";dump-file "/var/named/data/cache_dump.db";statistics-file "/var/named/data/named_stats.txt";memstatistics-file "/var/named/data/named_mem_stats.txt";allow-query { localhost; };/*- If you are building an AUTHORITATIVE DNS server, do NOT enable recursion.- If you are building a RECURSIVE (caching) DNS server, you need to enablerecursion.- If your recursive DNS server has a public IP address, you MUST enable accesscontrol to limit queries to your legitimate users. Failing to do so willcause your server to become part of large scale DNS amplificationattacks. Implementing BCP38 within your network would greatlyreduce such attack surface*/recursion yes;dnssec-enable yes;dnssec-validation yes;/* Path to ISC DLV key */bindkeys-file "/etc/named.iscdlv.key";managed-keys-directory "/var/named/dynamic";pid-file "/run/named/named.pid";session-keyfile "/run/named/session.key";};logging {channel default_debug {file "data/named.run";severity dynamic;};};zone "." IN {type hint;file "named.ca";};include "/etc/named.rfc1912.zones";include "/etc/named.root.key";

The major difference between the CentOS and Ubuntu files is the location you use to store data for named. The dump-file directive allows named to write transient data to a file when it exits. It can then reread this data when it is started again. The statistics-filedefines where named writes statistical information about the types and number of queries it receives.

This bind server is set to listen on localhost for IPv4 and IPv6. With this configuration, other hosts on the network will not be able to use this service. We will need to change the listen-on(-v6) to listen on a reachable IP address.

listen-on port 53 { 127.0.0.1; 192.168.1.1; };listen-on-v6 port 53 { ::1; };

The next settings in Listing 10-5 are recursion (should be ‘yes’) as we are not using this dns server as an authoritative name server (meaning it won’t be queried for any zones itself, but rather ask other dns servers). We enable dnssec and dnssec-validation by setting both to ‘yes’. The bindkeys-file points to the iscdlv.key path. This key is used in dnssec validation when there is no DS record (this is less common now that DNSSEC is rolled out nearly everywhere).

We then have logging options. You can view the logs in /var/named/data/named.run on CentOS and you can dynamically adjust the severity levels.

The root zone file is next. That is output you received from dig @a.root-servers.net . NS and you would place it in the file named /var/named/named.ca. Bind uses this to find the root servers for recursive lookups.

Finally, like we saw in the Ubuntu named.conf file, we can include other configuration files with the include directive.

We can now start the name server via the sudo systemctl start named command. On Ubuntu, the name server is started automatically when it is installed, but if it were not running we could start it via sudo service bind9 start.

So that our new DNS server can be queried, we need to ensure the firewall is not blocking traffic. DNS defaults to using the UDP protocol on port number 53, but it will switch to the TCP if responses contain a large amount of data. Add the appropriate rules to the correct Netfilter chain for your network layout.

$ sudo /sbin/iptables –t filter –A INPUT -p udp --dport 53 -j ACCEPT$ sudo /sbin/iptables –t filter –A INPUT -p tcp --dport 53 -j ACCEPT

Note

Make sure to configure the firewall on the DNS host to permit outgoing DNS responses, too. We covered firewalls and iptables in Chapter 7.

We now have our own caching DNS server, which we can use to do lookups. We call it a caching DNS server because it keeps the answers to any queries we do, so the next time we perform the same query, it can respond immediately with the cached information.

To make sure it works, we will query it directly, as shown in Listing 10-16.

Listing 10-16. Querying Our Local Caching DNS Server

$ host www.google.com localhostUsing domain server:Name: localhostAddress: 127.0.0.1#53Aliases:www.google.com has address 216.58.220.132www.google.com has IPv6 address 2404:6800:4006:806::2004

We asked the DNS server running on localhost to look up the address for www.google.com and it responded, so it works!

With a working caching DNS, we can replace the nameserver entries in our /etc/resolv.conf file with nameserver 192.168.0.1 to use our own server. We can also add this DNS server to the resolv.conf files on any other hosts we have in our local network.

Authoritative DNS

If you need to map hostnames to IP addresses for hosts in your own network, then a DNS caching server will not do. You will need to have an authoritative DNS server. An authoritive DNS server is an authoritative source of information for a zone. An authoritive DNS will provide DNS resolution for our local network, which will house our example.com domain. We do this by defining two zones: one to provide mappings from name to address and one to provide reverse mappings, from address to name.

Rezoning

Domains are described as zones. Zones are defined in zone files, much like the root zone file we mentioned earlier. Zone files always contain a header, also known as the SOA record. This header is optionally followed by DNS records that define services and hosts. We’ve included a sample zone file header in Listing 10-17.

Listing 10-17. Zone File Header for the example.com Domain

$ORIGIN example.com.$TTL 86400@ IN SOA example.com. root.example.com. (2016070100 ; Serial604800 ; Refresh86400 ; Retry2419200 ; Expire3600 ) ; Negative Cache TTL

This header defines some meta-information about our zone that is used by caching DNS servers and also by any slave servers we may have defined. Slave serversare authoritative DNS servers that automatically retrieve their zone information from a master DNS server. You would use them to provide redundant DNS services, like your ISP does.

Note

DNS is a read heavy database, few writes, so it can easily scale to many slaves.

We’ve listed the fields from our zone header and their use in Table 10-2. In our example we’ve listed all times in seconds, but you can also use 1d instead of 86400 to indicate one day, or 4w instead of 2419200 to indicate four weeks.

Table 10-2. Zone Header Fields

Field | Use |

|---|---|

$ORIGIN | Defines the start of the zone |

$TTL | Time to live , which is the default expiration for records in this zone that do not have their own expiration time set |

SOA | Start of Authority, which contains seven records of zone metadata |

Master | Primary authoritative DNS server for this domain |

Contact | E-mail address of the contact for this domain, with the at sign (@) replaced by a period |

Serial | Defines the version of this zone file, used by slave name servers |

Refresh | Defines how often slave servers should update their copy of this zone |

Retry | Defines the interval between attempts to refresh a slave server |

Expire | Defines how long a slave server is allowed to use any version of this zone file |

Negative Cache TTL | Defines how long a failed lookup result may be cached |

It’s also worth noting that we’re using a serial number based on the current date. You can use the YYYYMMDD for the current year, month, and day, followed by a two-digit number . This allows people to easily see when the zone was last changed, while still allowing for 99 changes each day. You can also choose to use a date based on unix epoch time (number of seconds since 01/01/1970) for the zone. The format is a ten-digit string and it can be generated with the following command: $ date +%s. For another alternative, you can use a simple incrementing number as the serial number. The at symbol (@) in front of the SOA evaluates to the name of the current zone. We could have also typed example.com. in its place.

The TTL for the zone can vary greatly. Smaller values mean that records will be cached by other DNS servers for a shorter period of time. That means your DNS servers will be queried more often, whereas longer TTLs means less queries to your DNS servers. If your host IPs are changing regularly you will want to have this value small. If they are not, then longer times are suitable.

Forward Lookup Zones

It is easy to create zones files via a text editor and add their definitions to the /etc/named.conf.local file. We’re going to store our forward lookup zone in a file called example.com.db.

Before we go on there are are few differences between CentOS and Ubuntu to be aware of. Zone files are stored in /var/named/ and Ubuntu in /var/cache/bind. The daemon that runs the DNS service on CentOS is called named and is run by the named user. Ubuntu, on the other hand, runs the bind daemon with the bind user. In the following example we will be using an Ubuntu server. Because only the root user may write to the zone file directory, we start our editor using sudo.

$ sudo vim /var/cache/bind/master/example.com.dbWe place these authoritive zone files in the master directory. We will be showing you how the slaves operate shortly. You will need to make sure that the directory /var/cache/bind/master or /var/named/master exists, depending on your distribution. Now, we simply copy and paste the zone header from Listing 10-18 into this file and save it. With the header done, we can start to add actual host and service records into this file.

You need two basic service record types to be present in your zone. One is the NS record, which defines which hosts act as DNS server for this domain, and the other is the MX record, which defines mail servers for this domain. Both records start with a blank field, as they do not define hostnames.

Listing 10-18. Our Service Records

IN NS ns.example.com.IN MX 10 mail.example.com.

The data for the MX record consists of a priority number and then the hostname that remote servers should try to deliver mail to. A properly configured remote mail server will work through a list of MX records, starting with the lowest priority number, and try to deliver e-mail. Note that we’ve specified a fully qualified domain name (FQDN; which is the hostname plus the full domain name) with a trailing period for these entries. If we’d left off the trailing period, the DNS server would assume we had defined hostnames only and would automatically append the $ORIGIN to the end of these records.

We’ve used the ns and mail hostnames in these definitions, but we’ve not yet defined these hosts in the zone file, so let’s do that next (see Listing 10-19). Host-to-address records are called A records and remember that AAAA records are for IPv6. We’ll also add an A record for our current hostname.

Listing 10-19. Creating A Records for Our Domain

@ IN A 192.168.0.1ns IN A 192.168.0.254mail IN A 192.168.0.1au-mel-ubuntu-1 IN A 192.168.0.1

We did not specify an FQDN in the host column for these records, so the DNS server will treat them as if they have $ORIGIN (example.com.) appended to them, which is exactly what we want. The @ symbol is replaced with the origin, too, so users will be able to access a host by going to just the domain as well.

You’ll note that both these names will now resolve to the same address. An IP address can have as many A records associated with it as you like. The other type ofd record in a forward zone is called a CNAME, also known as an alias.

You use a CNAME when you want to associate a number of aliased names with a single host and still be able to change the address for that host without needing to then change a long list of A records . For instance, our host au-mel-ubuntu-1 needs to provide web and SQL services, and the mail server will also provide POP and IMAP access. We can create some CNAME entries to provide aliases that all point to the mail A entry (see Listing 10-20). In the future if we migrate mail services to a different host, we only need to change the A record and all CNAME entries will automatically point at the new address as well.

Listing 10-20. Adding Some CNAME Entries

gateway IN CNAME ns.example.com.headoffice IN CNAME au-mel-ubuntu-1.example.com.smtp IN CNAME mail.example.com.pop IN CNAME mail.example.com.imap IN CNAME mail.example.com.www IN CNAME au-mel-ubuntu-1.example.com.sql IN CNAME au-mel-ubuntu-1.example.com.

We’ve also created CNAMEs called gateway and headoffice, which we will use when we set up a Virtual Private Network in Chapter 15. That’s all we need for now. We’ll save the file and create an accompanying reverse zone file, which will provide an address-to-name mapping.

Reverse Lookup Zones

In order to set up a reverse zone, you need to first find out what it is called. Unlike a forward zone, it has no domain name, but it does have a unique address range. To provide lookups for addresses, a special domain named in-addr.arpa. is used. This is essentially the root zone for reverse mappings.

Just like forward zones, you prepend the parts of your network address to this zone, with the most significant parts to the right. For our network of 192.168.0.x, this results in a 0.168.192.in-addr.arpa. reverse zone name.

Note

In-addr.arpa. zones are always prefixed with up to three-quarters of a dotted quad. There is no standard way of having reverse zones for a subnet with fewer than 255 addresses.

We once again fire up our editor (as the root user) to create a new zone file.

$ sudo vim /var/cache/bind/192.168.0.dbThe header in this file needs to be a bit different from our forward zone, as the zone name is different. Add in the contents of Listing 10-21.

Listing 10-21. The Reverse Zone Header

$ORIGIN 0.168.192.in-addr.arpa.$TTL 86400@ IN SOA ns.example.com. root.example.com. (2016070100 ; Serial604800 ; Refresh86400 ; Retry2419200 ; Expire3600 ) ; Negative Cache TTL

With the header created, we can now start adding PTR records, which map addresses to names. Let’s add one for our bastion host and one for our host on 192.168.0.254 and the mail A records, as shown in Listing 10-22.

Listing 10-22. Adding PTR Records for Our Hosts

IN NS ns.example.com.1 PTR mail.example.com1 PTR au-mel-ubuntu-1.example.com.254 PTR ns.example.com.

We save the reverse zone file and exit the editor. All that’s left to do now is add the zone definitions for these two zones to /etc/bind/named.conf.local. We open this file and add in the definitions, as shown in Listing 10-23. Each zone directive block contains a reference to the file that defines the zone. By default, the server expects these in the directory specified in the main configuration file. Since we’re the authoritative DNS server providing these zones, we need to set the zone type to master.

Listing 10-23. Adding Zone Definitions

zone "example.com" {type master;file "master/example.com.db";};zone "0.168.192.in-addr.arpa" {type master;file "master/192.168.0.db";};

Then we save the file and quit the editor. We need to tell the server to reload its configuration, either by restarting the server via systemctl reload or service, or using the rndc utility. The latter is much faster and does not interrupt services , so let’s do that.

$ sudo rndc reloadserver reload successful

The rndc utility is used to control the named (bind) server . Not only can it reload the named configuration (after you have edited files), it can reload particular zones, change logging levels, and flush caches. We will show you more uses for rndc throughout the chapter.

The name server should know about our new zones, and we can query it to check this. Let’s start by looking up the address for ns.example.com, as shown in Listing 10-24.

Listing 10-24. Testing Forward Name Resolution

$ host ns.example.com localhostUsing domain server:Name: localhostAddress: 127.0.0.1#53Aliases:ns.example.com has address 192.168.0.254

That works fine. Let’s also check that the reverse zone works by looking up the name associated with the 192.168.0.1 address, as shown in Listing 10-25.

Listing 10-25. Testing Reverse Name Resolution

$ host 192.168.0.1 localhostUsing domain server:Name: localhostAddress: 127.0.0.1#53Aliases:1.0.168.192.in-addr.arpa domain name pointer au-mel-ubuntu-1.example.com.1.0.168.192.in-addr.arpa domain name pointer mail.example.com.0.168.192.in-addr.arpa.

That isn’t quite right! The name server has appended the reverse zone name to the mail.example.com host. We know what usually causes this, though, so if we go and check the reverse zone file , we can see that we did indeed forget the trailing period at the end of the entry for mail.example.com.

But let’s take the time to investigate how we can see the query logs on our bind server . Using the rndc utility again we can issue the following command:

$ sudo rndc querylog onIf we tail the /var/log/syslog (on Ubuntu) we will see the following:

Jun 28 12:23:26 localhost named[2548]: received control channel command 'querylog on'Jun 28 12:23:26 localhost named[2548]: query logging is now on

We will now be able to see the DNS queries in the system log as follows:

Jun 28 12:25:31 localhost named[2548]: client 127.0.0.1#47616 (1.0.168.192.in-addr.arpa): query: 1.0.168.192.in-addr.arpa IN PTR + (127.0.0.1)To turn off the query logging we simply issue the following:

$ sudo rndc querylog offWe’ll add the period now and increment the zone serial number and the records should now look as follows:

$ORIGIN 0.168.192.in-addr.arpa.$TTL 86400@ IN SOA ns.example.com. root.example.com. (2016070100 ; Serial604800 ; Refresh86400 ; Retry2419200 ; Expire3600 ) ; Negative Cache TTLIN NS ns.example.com.1 PTR mail.example.com.1 PTR au-mel-ubuntu-1.example.com.254 PTR ns.example.com.

When we finish, we issue the sudo rndc reload command again. If we test the reverse resolution again, we can see the problem has been fixed.

$ host 192.168.0.1 localhostUsing domain server:Name: localhostAddress: 127.0.0.1#53Aliases:1.0.168.192.in-addr.arpa domain name pointer au-mel-ubuntu-1.example.com.1.0.168.192.in-addr.arpa domain name pointer mail.example.com.

Security Considerations

We’re now running the DNS server on the bastion host as both an authoritative and caching DNS server. Though the software can handle this fine, there are some security considerations. The main one of these is due to an attack known as DNS cache poisoning, which allows an attacker to make your caching DNS server hand out incorrect addresses. This can cause a user to click a malicious web link or open an e-mail with an embedded link.

Note

You can read more about DNS cache poisoning at http://en.wikipedia.org/wiki/ DNS_cache_poisoning.

Luckily BIND provides a way for us to mitigate this problem with DNSSEC, the security extension of the DNS protocol. With recent versions of bind (bind v9.7 and above), we can use auto key signing which will automatically sign and resign zones at the defined intervals. From version 9.9 onward we can use a feature called “inline” key signing.

There are two kinds of records we can create, NSEC and NSEC3. Depending on your requirements you can implement whichever is appropriate. They both provide “authenticated denial of existence,” meaning that if a record is not available then you can trust the response from the server. The main difference between the two records is that NSEC3 prevents zone walking, where you can build a map of the domain by what records are not there. You can read more about the differences at www.internetsociety.org/deploy360/resources/dnssec-nsec-vs-nsec3/ .

Tip

The next couple of steps will require a good amount of system entropy to create good-quality encryption keys. You should install haveged which is a daemon that will provide an additional source of entropy. See details at www.issihosts.com/haveged/ .

We are going to create and store our keys in the /etc/bind/keys directory. We will need create that directory and then change our bind configuration file for the local zones to be like the following:

zone "example.com" {type master;file "master/example.com.db";key-directory "/etc/bind/keys";auto-dnssec maintain;inline-signing yes;};zone "0.168.192.in-addr.arpa" {type master;file "master/192.168.0.db";key-directory "/etc/bind/keys";auto-dnssec maintain;inline-signing yes;};

We use the key-directorydirective to tell bind where to find the keys we are going to creating. We will create those keys now. To do that we need the dnssec-keygen command to create a zone signing key (ZSK) and a key signing key (KSK). Change to the /etc/bind/keys directory and we will create our ZSK.

$ sudo dnssec-keygen -a RSASHA256 -b 2048 -3 example.comGenerating key pair...............................................+++ ...............+++Kexample.net.+008+50331

Now we need a KSK to verify our zone key.

$ sudo dnssec-keygen -a RSASHA256 -b 2048 -f KSK -3 example.comGenerating key pair........................................................................+++ ...........................+++Kexample.net.+008+62695

Looking inside the directory we see that there are four keys produced. Two private keys, ending with .private, and two public keys, ending in .key.

-rw-r--r-- 1 root bind 606 Jul 3 02:41 Kexample.net.+008+50331.key-rw------- 1 root bind 1776 Jul 3 02:41 Kexample.net.+008+50331.private-rw-r--r-- 1 root bind 605 Jul 3 02:44 Kexample.net.+008+62695.key-rw------- 1 root bind 1776 Jul 3 02:44 Kexample.net.+008+62695.private

A copy of the private keys should be stored somewhere safe like we do with all highly sensitive information. You will notice that the keys are owned by the root user. We will need to change the owner of the files to bind (Ubuntu). With the keys in place we will start up our bind server using the $ sudo systemctl start bind9 command or if your bind server is already running we can run $ sudo rndc reconfig. We can view the logs using the $ sudo journalctl –x –u bind9 command in another terminal window or shell.

We will issue the following commands to make sure our zones are signed.

$ sudo rndc sign example.com$ sudo rndc signing -list example.comDone signing with key 814/RSASHA256Done signing with key 62760/RSASHA256

In Listing 10-26 we can see the logs from the journalctl command.

Listing 10-26. bind journalctl Log

Jul 06 13:01:59 ubuntu-xenial named[12461]: received control channel command 'sign example.com'Jul 06 13:01:59 ubuntu-xenial named[12461]: zone example.com/IN (signed): reconfiguring zone keysJul 06 13:01:59 ubuntu-xenial named[12461]: zone example.com/IN (signed): next key event: 06-Jul-2016 14:01:59.665Jul 06 13:02:09 ubuntu-xenial named[12461]: received control channel command 'signing -list example.com'Jul 06 13:04:40 ubuntu-xenial named[12461]: received control channel command 'sign 0.168.192.in-addr.arpa'Jul 06 13:04:40 ubuntu-xenial named[12461]: zone 0.168.192.in-addr.arpa/IN (signed): reconfiguring zone keysJul 06 13:04:40 ubuntu-xenial named[12461]: zone 0.168.192.in-addr.arpa/IN (signed): next key event: 06-Jul-2016 14:04:40.598

You can see that the bind server has automatically signed our zones (zone example.com/IN (signed): loaded serial 2016070100). You can also see that we have a “next key event,” which is when the keys will be regenerated. Now take a look at our zone file directory.

$ ll /var/cache/bind/master/total 48drwxr-xr-x 2 bind bind 4096 Jul 3 12:46 ./drwxrwxr-x 3 root bind 4096 Jul 3 12:47 ../-rw-r--r-- 1 bind bind 346 Jul 3 12:35 192.168.0.db-rw-r--r-- 1 bind bind 512 Jul 3 12:35 192.168.0.db.jbk-rw-r--r-- 1 bind bind 349 Jul 3 12:35 192.168.0.db.signed-rw-r--r-- 1 bind bind 899 Jul 3 12:25 example.com.db-rw-r--r-- 1 bind bind 512 Jul 3 12:35 example.com.db.jbk-rw-r--r-- 1 bind bind 839 Jul 3 12:35 example.com.db.signed-rw-r--r-- 1 bind bind 13384 Jul 3 12:46 example.com.db.signed.jnl

We now have zone files that have been signed (example.com.db.signed). We can now validate that our keys are signed as we expect.

$ dig +dnssec +multiline @127.0.0.1 mail.example.commail.example.com. 86400 IN A 192.168.0.1mail.example.com. 86400 IN RRSIG A 8 3 86400 (20160802120825 20160703120630 6513 example.com.<snip>TorDjrwEutOJnt1HLxoJ/+EVJ6K9l+sZfrfG4ZM4lB5ieVxmZe3quQ3M+HHDHPVwZu1XwJkNz97Kuw== )mail .example.com. 86400 IN RRSIG A 8 3 86400 (20160802124245 20160703120630 65028 example.com.<snip>qGxaP6lJ+WKbIhw3NoqSd++E6bVUU5L46qaxczIhact3xZEOwrAnAQ2MSq9Qx1b41ghbwfVBUOMQZQ== )

Adding a Slave Server

In order to provide reliable DNS services, virtually all domain registrars require you to enter a minimum of two DNS servers for any domain. It is, of course, possible to maintain multiple copies of all your zone files, but you can make use of the master/slave functionality in BIND to automate this process.

In this section we are going to add a DNS slave using our CentOS server. First we will show you how to configure the master.

Note

We do note that some people object to using the “master/slave” terminology when describing leader/follower relationships. BIND still uses this terminology and we have chosen to use the same in order not to confuse readers, rather than out of indifference to the subject.

Ubuntu

If you want to set up the master to allow transfers from slave hosts on Ubuntu , you need to change the zone definitions in /etc/bind/named.conf.local. You need to ensure that the master server contacts the slaves when a zone is updated, and you do this by adding the notify yes directive. This means you don’t need to wait until the slave reaches the zone expiration time, as any zone changes on the master server will be replicated to the slave immediately.

Next, you add an allow-transfer directive, which should contain the IP address of the slave server. We’ve included the new definition for the example.com zone in Listing 10-27.

Listing 10-27. Adding Zone Definitions

acl "transfer-hosts" {192.168.0.254 ;127.0.0.1 ;};zone "example.com" {type master;file "master/example.com.db";notify yes;allow-transfer {transfer-hosts ;};key-directory "/etc/bind/keys";auto-dnssec maintain;inline-signing yes;};

What we have done in Figure 10-26 is use a variable called “transfer-hosts,” which we created with the acl option in our bind zone configuration file . We have then used that in the allow-transfer section to allow the IP addresses we have defined in transfer-hosts. Creating acl variables is good practice as you can quickly update your configuration in one place. It makes reading and validation of your configuration easier. If we add more slaves, we can add them to the transfer-hosts acl.

When you’ve added the addresses for all slaves, save the file and then tell BIND to reload its configuration via sudo rndc reload.

Tip

To test the master configuration, you can use dig on the slave to simulate a zone transfer. Use the AXFR query type: dig example.com @127.0.0.1 AXFR.

By default, the bind server listens on the localhost address (127.0.0.1). You will need to set the listen-on named.conf option to get the bind server to listen on the local network interface. For the master you will need to add the following to the /etc/bind/named.conf.options file.

listen-on port 53 { 127.0.0.1; 192.168.0.1; };On the slave you will need to add the following to the /etc/named.conf file:

listen-on port 53 { 127.0.0.1; 192.168.0.254; };...allow-query { localhost; 192.168.0.0/24; };

The next step is to tell the slave server where to find the master. Open the /etc/named/named.conf.local file on the slave server and add a zone definition for the example.com domain. Set the zone type to slave. To make sure that the server can retrieve the zone data, you need to specify the address for the master server in the masters configuration block. We’ve included the configuration for our network in Listing 10-28.

Listing 10-28. Slave Server Zone Configuration

zone "example.com" {type slave;masters {192.168.0.1;};file "slaves/example.com.db";};

When you’ve finished, save the configuration file and tell the slave server to reload it via sudo rndc reload. You can check the /var/log/syslog file to verify that the zone is being transferred, or you can query the slave server using host or dig to ensure the zone data is present.