Now that you have learned how to build the components for a few systems, we are going to show you how you can build thousands of them quickly, at the same time, and all having the right configuration for each type of system! In the last 18 chapters we wanted to show you just how each part of the Linux system was configured, what switch applied to what command, and what outcome each switch had. All this was essential to understanding what automated provisioning and configuration management are now going to do for you.

In this chapter, we’re going to look at three facets of configuration management.

Automated provisioning and installation of new hosts

Automated management of your configuration including files, users, and packages

How to test your configuration as code

The first process we’re going to examine, automated provisioning or installation of new hosts, is sometimes called bootstrapping. In the CentOS world, bootstrapping is often referred to as kickstarting (after the Kickstart tool used to perform it). On Ubuntu and Debian, the process is called preseeding.

Provisioning is a way of automatically installing a distribution to a host. When we first looked at installing distributions in Chapter 2, we demonstrated how to do it manually. You inserted a DVD and followed the onscreen prompts to install your distribution. Automated provisioning is a way of installing a distribution without being prompted by the configuration questions. This makes provisioning quick and simple, and it also has the advantage of ensuring every build is identical.

Tip

You can use provisioning for both server hosts and desktop hosts. Not only is it a quick way of building (or rebuilding) server hosts, but it can also be a quick way to automatically install desktops for your users.

The second process we’re going to examine is configuration management and automation. By now you’ve seen that you can accumulate a lot of installed packages, users, configuration files, and other settings. Your environment can quickly get complicated and difficult to manage if you don’t take steps to control and automate it. Configuration management allows you to centralize your configuration, document it, and automate it. This allows you to manage and control changes to your environment and protects you against accidental or malicious configuration changes.

The third is testing your infrastructure just like you would your application code. Because configuration management is just code, this makes testing your configurations easier and helps to ensure fewer bugs creep into your production servers. This can lead to fewer administration mistakes as things can be tested and reviewed prior to being pushed into production. This can be all hooked into your normal Jenkins or other continuous integration/continuous deployment (CI/CD) infrastructure to make this a seamless operation.

Provisioning, configuration management, and testing are meant to be used in a workflow. In your system provisioning process you can install the operating system with Cobbler, or different provisioning system, and then use Ansible or Puppet to apply the tested configuration as part of that process. This means that by the time you first get access to your console, it has the right disk layout, it has the right operating system, it has the right network configuration, it has the right users and packages, it has the right configuration for services, and those services have been started. Not only that, but every system you build after that is also just right, even if it consists of thousands of them. And it can be done automatically for you after you have completed your CI builds!

Provisioning

We’ve talked a little about what provisioning is, but how you go about it varies between distributions. We are going to explain how to automatically provision both CentOS and Ubuntu hosts .

Provisioning is usually a two-stage process.

Boot your host and send it the files required for installation.

Automate the installation steps.

The process starts with a host booting up. Remember in Chapter 5 when we told you about the boot sequence? On many hosts, you can configure that boot sequence to look in alternative places to get its boot instructions; for example, you can boot from a DVD or a USB stick. In addition to these methods, you can also get your boot instructions from a network source.

The technology behind this boot process is called Preboot Execution Environment (PXE) . A network boot server is hence called a PXE boot (pronounced “pixie boot”) server. The host that we intend to build uses a network query to find a PXE boot server, usually a network query to a DHCP server, that might offer it the files required to boot and then transfers those files to the host using a file transfer protocol called Trivial File Transfer Protocol (TFTP) .

Note

You can read more about PXE at http://en.wikipedia.org/wiki/Preboot_Execution_Environment .

Once this initial boot takes place, your provisioning process continues by installing a prepackaged version of your distribution, usually with a series of automated scripted responses to the various configuration questions you are prompted for when installing.

Note

We’re using network-based provisioning to create our hosts rather than any of the alternatives, such as CD or DVD. This is because we believe network-based provisioning is the simplest, easiest, and most efficient way to automatically build hosts.

Provisioning with CentOS Cobbler

CentOS has a variety of tools for provisioning hosts, ranging from the most basic, Kickstart, which automates installations, to full-featured GUI management tools for host configuration such as Cobbler ( http://cobbler.github.io/ ) and Spacewalk ( http://spacewalk.redhat.com/ ).

We’re going to look at a combination of three tools:

Kickstart: An installation automation tool for Red Hat–based operating systems

Preseed: An installation automation tool for Debian-based operating systems

Cobbler: A provisioning server that provides a PXE boot server

We’ll take you through the process of creating a Cobbler server and a build to install. Later in this chapter, we’ll show you how to configure Kickstart to automate your configuration and installation options.

Installing Cobbler

Let’s start by installing Cobbler on your host. To run Cobbler, you need to install the EPEL repositories.

$ sudo yum install –y epel-releaseThen we need to install Cobbler.

$ sudo yum install –y cobblerThis will install some additional YUM utilities and the createrepo package , which assist in repository management. We’ve also installed some additional packages Cobbler uses: the DHCP daemon, a TFTP server, and the Apache web server. You may already have these packages installed, in which case YUM will skip them.

Once everything is installed, we need to enable cobblerd, the daemon process, at boot and start it.

$ sudo systemctl enable cobblerd httpd$ sudo systemctl start cobblerd$ sudo systemctl start httpd

Cobbler requires access to the Apache server to be started. Also, we need to ensure that the cobblerd service can access the httpd server port. SELinux by default will prevent this, so we need to issue the following:

$ sudo setsebool -P httpd_can_network_connect trueCobbler has some specific SELinux settings , and you can view them with the following command:

$ sudo getsebool -a|grep cobblercobbler_anon_write --> offcobbler_can_network_connect --> offcobbler_use_cifs --> offcobbler_use_nfs --> offhttpd_can_network_connect_cobbler --> offhttpd_serve_cobbler_files --> off

We will enable the following SELinux Booleans:

$ sudo setsebool -P httpd_serve_cobbler_files on$ sudo setsebool -P httpd_can_network_connect_cobbler on

Configuring Cobbler

After you’ve installed the required packages, you need to configure Cobbler. Cobbler comes with a handy check function that tells you what needs to be done to configure it. To see what needs to be done, run the following:

The following are potential configuration items that you may want to change:1 : The 'server' field in /etc/cobbler/settings must ... by all machines that will use it.2 : For PXE to be functional, the 'next_server' field ... the IP of the boot server on the PXE network.3 : SELinux is enabled. Please review the following ... https://github.com/cobbler/cobbler/wiki/Selinux4 : change 'disable' to 'no' in /etc/xinetd.d/tftp5 : some network boot-loaders are missing from /var/lib/cobbler/loaders, ...is the easiest way to resolve these requirements.6 : debmirror package is not installed, it will be required to manage debian deployments and repositories7 : ksvalidator was not found, install pykickstart8 : The default password used by the sample templates ... 'your-password-here'" to generate new one9 : fencing tools were not found, and are required to use ... cman or fence-agents to use themRestart cobblerd and then run 'cobbler sync' to apply changes.

You can see there are a few things you need to do to get Cobbler running. Let’s work through each of these issues.

First, you configure the /etc/cobbler/settings file . You need to update two fields in this file: server and next_server. You need to replace the existing values (usually 127.0.0.1) with the IP address of your host so a PXE-booted host can find your Cobbler host. In our case, we specify the following:

server 192.168.0.1next_server 192.168.0.1

To update Cobbler’s configuration, you then run this:

$ sudo cobbler syncNote

You need to run the $ sudo cobbler sync command any time you change the /etc/cobbler/settings file. Common errors include leaving trailing spaces after options in the settings file. Make sure you delete any extra spaces from the file.

You also need to configure a DHCP server (like the one we introduced in Chapter 10). You have two choices here: you can get Cobbler to manage your existing DHCP server, or you can tell your existing DHCP server to point to Cobbler.

After you have run cobbler sync and rerun cobbler check, you will notice the list of outstanding things to check has been reduced. We are going to now install the Cobbler loaders and debmirror binary.

$ sudo cobbler get-loadersFor debmirror, you need to download the file from Debian, untar it, and copy it to a common location (alternative, you could use FPM, like we showed you in Chapter 9, to create a package to do this for you in a repeatable way!).

We need at least these Perl modules installed:

$ sudo yum install -y perl-LockFile-Simple perl-IO-Zlib perl-Digest-MD5 perl-Net-INET6Glue perl-LWP-Protocol-httpsNext we will download and install the debmirror package , untar it, and place it in the /usr/local/bin directory.

$ curl -s http://archive.ubuntu.com/ubuntu/pool/universe/d/debmirror/debmirror_2.25ubuntu2.tar.xz -o debmirror_2.25.tar.xz$ tar xf debmirror_2.25.tar.xz && sudo cp debmirror-2.25ubuntu2/debmirror /usr/local/bin/

To test we have everything installed correctly for debmirror, run debmirror --help and make sure you don’t get any Perl module errors.

Lastly, we are going to change the default root password that gets placed on the hosts. First you can create a secure SHA-512 password using python3with the following:

python3 -c 'import crypt; print(crypt.crypt("yourpasswordhere", crypt.mksalt(crypt.METHOD_SHA512)))'$6$KnsQG.tEetSCSmid$HpqUNyEk1UPkt9Dc9MPcwPY...guKOGdUeNXoA7.ugUBGGaDIk8RY8FRYVOwzmsM.u01

Then you need to update the default_password_crypted: setting in the /etc/cobbler/settings file. Remember to run cobbler sync after each change.

Note

Python 3 is not installed by default on CentOS but can be available on Ubuntu. The previous script for generating the password can be run on any host that has Python 3 installed already and can be copied across.

Now when we run $ sudo cobbler check, the list contains only three items, which we don’t need to address.

Cobbler Managing Your DHCP

If you want to enable Cobbler to manage your DHCP server, then you need to enable another option in the /etc/cobbler/settings file.

manage_dhcp: 1You also need to update a template file that Cobbler will use to configure your DHCP server, /etc/cobbler/dhcp.template. Listing 19-1 shows an example of this file.

Listing 19-1. The /etc/cobbler/dhcp.template File

# ******************************************************************# Cobbler managed dhcpd.conf file## generated from cobbler dhcp.conf template ($date)# Do NOT make changes to /etc/dhcpd.conf. Instead, make your changes# in /etc/cobbler/dhcp.template, as /etc/dhcpd.conf will be# overwritten.## ******************************************************************ddns-update-style interim;allow booting;allow bootp;ignore client-updates;set vendorclass = option vendor-class-identifier;option pxe-system-type code 93 = unsigned integer 16;key dynamic-update-key {algorithm hmac-sha256;secret "RZqM/JutbhgHiBR8ICG0LDyN+9c1LpNU83ycuU9LPaY=";}zone 0.168.192.in-addr.arpa. {key dynamic-update-key;primary 192.168.0.1;}zone example.com. {key dynamic-update-key;primary 192.168.0.1;}subnet 192.168.0.0 netmask 255.255.255.0 {option routers 192.168.0.254;option domain-name "example.com";option domain-name-servers 192.168.0.1;option broadcast-address 192.168.0.255;next-server $next_server;filename "/pxelinux.0";group "static" {use-host-decl-names on;host au-mel-rhel-1 {hardware ethernet 00:16:3E:15:3C:C2;fixed-address au-mel-rhel-1.example.com;}}pool {range 192.168.0.101 192.168.0.150;deny unknown clients;}pool {range 192.168.0.151 192.168.0.200;allow unknown clients;default-lease-time 7200;max-lease-time 21600;}}

If you have an existing DHCP server with a configuration, you should update this template to reflect that configuration. You can see we’ve adjusted the template in Listing 19-1 to reflect the DHCP configuration we used in Chapter 10. We’ve added two settings.

allow booting;allow bootp;

These two options tell the DHCP server to respond to queries from hosts who request network boots.

The other two important settings to note in Listing 19-1 are next-server and filename configuration options. The next-server option is set to $next_server. This value will be replaced by the IP address we just configured in the next_server option in the /etc/cobbler/settings file. This tells our DHCP server where to route hosts that request a net boot.

The filename option is set to /pxelinux.0, which is the name of the boot file that PXE-booted hosts should look for to start their boot process. We’ll set up this file shortly.

Now, after changing these files, you need to run the following command:

$ sudo cobbler syncCaution

If you have an existing DHCP server, this template will overwrite its configuration by overwriting the /etc/dhcpd.conf configuration file. Only do this if you are sure you know what you are doing, and make a copy of your existing /etc/dhcpd.conf file before running the command.

Cobbler Not Managing Your DHCP

If you don’t want Cobbler to manage your DHCP, then you just need to adjust your existing DHCP configuration file, /etc/dhcpd.conf, to add the next-server and filename options. Let’s update the relevant portions of the configuration we created in Chapter 9 with this option, as shown in Listing 19-2.

Listing 19-2. Existing dhcpd.conf Configuration File

allow booting;allow bootp;subnet 192.168.0.0 netmask 255.255.255.0 {option routers 192.168.0.254;option domain-name "example.com";option domain-name-servers 192.168.0.1;option broadcast-address 192.168.0.255;filename "/pxelinux.0";next-server 192.168.0.1;group "static" {use-host-decl-names on;host au-mel-rhel-1 {hardware ethernet 00:16:3E:15:3C:C2;fixed-address au-mel-rhel-1.example.com;}}pool {range 192.168.0.101 192.168.0.150;deny unknown clients;}pool {range 192.168.0.151 192.168.0.200;allow unknown clients;default-lease-time 7200;max-lease-time 21600;}}

You can see we’ve added two options to the start of the DHCP section.

allow booting;allow bootp;

These two options tell the DHCP server to respond to queries from booting clients.

We’ve also added the next-server option to our subnet definition.

next-server 192.168.0.1The next-server option tells DHCP where to send hosts that request a PXE network boot. We need to specify the IP address of our Cobbler server.

Lastly, we’ve added the filename option, set to /pxelinux.0, which is the name of the boot file that PXE-booted hosts should look for to start their boot process. We’ll set up this file shortly.

Tip

After configuring your DHCP server, you will need to restart the Cobbler server for the new configuration to be applied.

Configuring TFTP

Once the daemon is started, you need to enable your TFTP server to send your boot file to the host to be installed. To do this, you edit the /etc/xinet.d/tftp file to enable a TFTP server. Inside this file, find this line:

disable = yesand change it to this:

disable = noNext, you enable the TFTP server like so:

$ sudo systemctl enable tftp$ sudo systemctl start tftp

You need to ensure your hosts can connect to the Cobbler server through your firewall by opening some required ports, 69, 80, 25150, and 25151, for example, by creating firewalld rules such as the following:

$ sudo firewall-cmd --zone=public --add-service=tftp --permanent$ sudo firewall-cmd --zone=public --add-service=httpd –permanent$ sudo firewall-cmd --zone=public --add-port=25150:25151/tcp –permanent

These rules allow access for any host on the 192.168.0.0/24 subnet to the boot server on the appropriate ports. You can find more information on firewall rules in Chapter 7.

Using Cobbler

Once you’ve configured Cobbler, you can start to make use of it. Cobbler allows you to specify a distribution you’d like to build hosts with, imports that distribution’s files, and then creates a profile. You can then build hosts using this distribution and profile.

We have mounted our ISO files to /mnt/centos and /mnt/ubuntu, respectively. This is done like so:

$ sudo mount –o loop /path/to/downloaded.iso /path/to/mountpointLet’s start by creating our first profile using the import command .

$ sudo cobbler import --path=/mnt/centos --name=CentOS7 --arch=x86_64task started: 2016-12-22_055922_importtask started (id=Media import, time=Thu Dec 22 05:59:22 2016)Found a candidate signature: breed=redhat, version=rhel6Found a candidate signature: breed=redhat, version=rhel7Found a matching signature: breed=redhat, version=rhel7Adding distros from path /var/www/cobbler/ks_mirror/CentOS7-x86_64:creating new distro: CentOS7-x86_64trying symlink: /var/www/cobbler/ks_mirror/CentOS7-x86_64 -> /var/www/cobbler/links/CentOS7-x86_64creating new profile: CentOS7-x86_64associating reposchecking for rsync repo(s)checking for rhn repo(s)checking for yum repo(s)starting descent into /var/www/cobbler/ks_mirror/CentOS7-x86_64 for CentOS7-x86_64processing repo at : /var/www/cobbler/ks_mirror/CentOS7-x86_64need to process repo/comps: /var/www/cobbler/ks_mirror/CentOS7-x86_64looking for /var/www/cobbler/ks_mirror/CentOS7-x86_64/repodata/*comps*.xmlKeeping repodata as-is :/var/www/cobbler/ks_mirror/CentOS7-x86_64/repodata*** TASK COMPLETE ***

We will import our Ubuntu ISO also.

$ sudo cobbler import --path=/mnt/ubuntu --name Ubuntu-16.04 --breed=ubuntu --os-version=xenialYou issue the cobbler command with the import option. The --path option specifies the source of the distribution you want to import—in our case, /mnt/ubuntu and /mnt/centos. The --name is any name you want to give the distribution, and you can add --breed and --os-version to help the import command find the right signature to match your distribution.

Note

If you get errors when doing an import, make sure you run $ sudo cobbler signature update and try again. Learn more about signatures here: http://cobbler.github.io/manuals/2.8.0/3/2/3_-_Distro_Signatures.html .

You can also sync with online repositories. Here’s an example:

$ sudo cobbler reposynctask started: 2016-12-22_063019_reposynctask started (id=Reposync, time=Thu Dec 22 06:30:19 2016)hello, reposyncrun, reposync, run!running: /usr/bin/debmirror --nocleanup --verbose --ignore-release-gpg --method=http --host=archive.ubuntu.com --root=/ubuntu --dist=xenial,xenial-updates,xenial-security --section=main,universe /var/www/cobbler/repo_mirror/Ubuntu-16.04-x86_64 --nosource -a amd64

This will sync with online repositories and, in this case, uses the debmirror binary we installed earlier to sync our Ubuntu Xenial release.

Tip

You will need sufficient disk space on your host to copy whatever distributions you want to keep. Hosting your own syncs of repositories will speed up your deployments greatly and reduce online network downloads.

Cobbler will run the import process and then return you to the prompt. Depending on the performance of your host (and, if you are importing over the network, the speed of your connection), this may take some time.

You can view the distributions install via this command:

$ sudo cobbler distro listCentOS7-x86_64Ubuntu-16.04-x86_64

The import will create two profiles as well; we can see them with this command:

$ sudo cobbler profile listCentOS7-x86_64Ubuntu-16.04-x86_64

After you’ve created your distribution and profile, you can see the full details in Cobbler using the report option, as shown in Listing 19-3.

Listing 19-3. A Cobbler Report

$ sudo cobbler reportdistros:==========Name : CentOS7-x86_64Architecture : x86_64TFTP Boot Files : {}Breed : redhatComment :Fetchable Files : {}Initrd : /var/www/cobbler/ks_mirror/CentOS7-x86_64/images/pxeboot/initrd.imgKernel : /var/www/cobbler/ks_mirror/CentOS7-x86_64/images/pxeboot/vmlinuzKernel Options : {}Kernel Options (Post Install) : {}Kickstart Metadata : {'tree': 'http://@@http_server@@/cblr/links/CentOS7-x86_64'}Management Classes : []OS Version : rhel7Owners : ['admin']Red Hat Management Key : <<inherit>>Red Hat Management Server : <<inherit>>Template Files : {}Name : Ubuntu-16.04-x86_64Architecture : x86_64TFTP Boot Files : {}Breed : ubuntuComment :Fetchable Files : {}Initrd : /var/www/cobbler/ks_mirror/Ubuntu-16.04/install/netboot/ubuntu-installer/amd64/initrd.gzKernel : /var/www/cobbler/ks_mirror/Ubuntu-16.04/install/netboot/ubuntu-installer/amd64/linuxKernel Options : {}Kernel Options (Post Install) : {}Kickstart Metadata : {'tree': 'http://@@http_server@@/cblr/links/Ubuntu-16.04-x86_64'}Management Classes : []OS Version : xenialOwners : ['admin']Red Hat Management Key : <<inherit>>Red Hat Management Server : <<inherit>>Template Files : {}

This option displays all the distributions, and their profiles are currently imported into Cobbler.

Note

You may see more than one distribution and profile created from importing a distribution.

Listing 19-3 shows our vanilla CentOS7-x86_64 distribution and the profile we created, CentOS7-x86_64. Most of the information in Listing 19-3 isn’t overly important to us; we are going to use these profiles to create a new system shortly.

Note

You can see the other options you can edit on your profile by looking at the cobbler command’s man page or visiting the documentation at http://cobbler.github.io/manuals/2.8.0/ .

Building a Host with Cobbler

Now that you’ve added a profile and a distribution, you can boot a host and install your distribution. Choose a host (or virtual machine) you want to build and reboot it. Your host may automatically search for a boot device on your network, but more likely you will need to adjust its BIOS settings to adjust the boot order. To boot from Cobbler, you need to specify that your host boots from the network first.

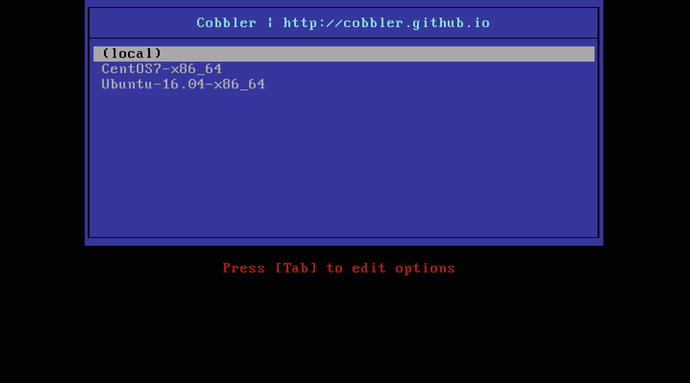

We have created a VirtualBox host on the same Host-only Adapter interface as our Cobbler server. We created VirtualBox hosts in Chapter 3 and created a basic host with an 8Gb hard drive. In the System settings, we are going to select Network for the boot device.

In Figure 19-1 we are selecting the Network Boot option. We will come back to here and set it to Hard Disk when we are finished. We now start the hosts like we would normally.

Figure 19-1. Setting Network Boot

When your host boots, it will request an IP address from the network and get an answer from your DHCP server, as you can see in Figure 19-2.

Figure 19-2. Requesting DHCP address

Your host will boot to the Cobbler boot menu. You can see an example of this menu in Figure 19-3.

Figure 19-3. The Cobbler menu

From this menu, you can select the profile you’d like to install (e.g., CentOS7-x86_64). If you don’t select a profile to be installed, Cobbler will automatically launch the first item on the menu (local), which continues the boot process on the localhost.

Note

If you don’t have an operating system installed on this host, the local boot process will obviously fail.

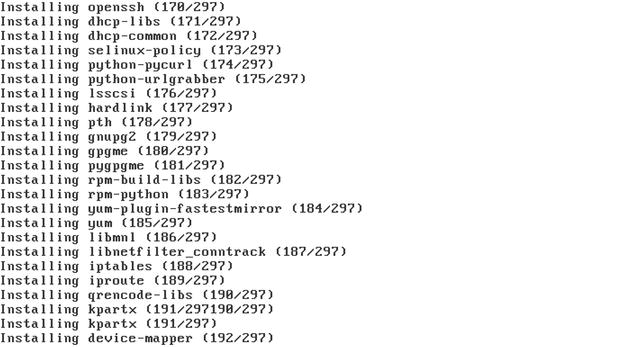

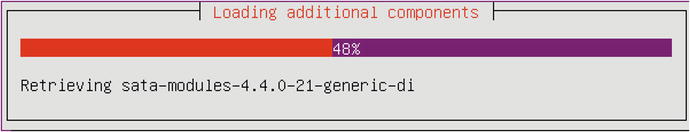

We will select CentOS7-x86_64, which will automatically install CentOS onto our host. If we select Ubuntu-16.04-x86_64, we will install Ubuntu; Figures 19-4 through 19-6 tell that story.

Figure 19-4. Installing CentOS

Figure 19-5. Installing Ubuntu

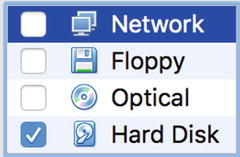

Figure 19-6. Selecting Boot from Hard Disk

Remember, when we have finished installing our host on VirtualBox, we need power on the host and need to change the boot device and then start the host again.

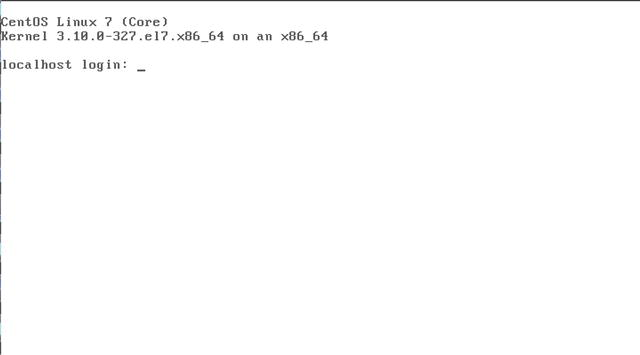

Figure 19-7. CentOS installed

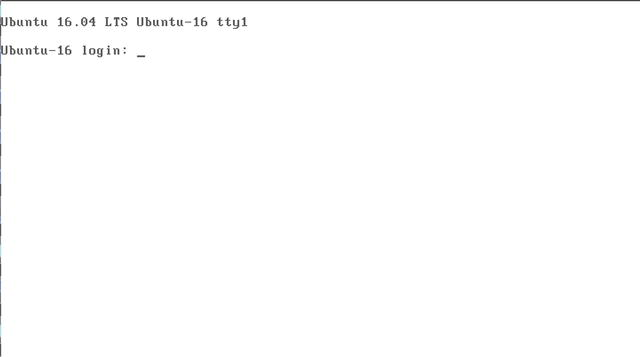

Figure 19-8. Ubuntu installed

We selected a profile, and then this profile started the installation process using the instructions contained in the associated Kickstart or Preseed file. If you are watching your installation process, you will see the installation screens progress—all without requiring input from you to continue or select options.

Using Cobbler, you can also specify configuration options for particular hosts. You don’t need to do this, but it is useful if you have a specific role in mind for a host and want to specify a particular profile or Kickstart configuration.

To do this, you add hosts to Cobbler, identifying them via their MAC or IP addresses , using the system command.

$ sudo cobbler system add --name=web1.example.com --mac=08:00:27:66:EF:C2--profile=CentOS7-x86_64 --interface eth1

Here we’ve added a system named web1.example.com with the specified MAC address.

Note

You can usually see your MAC address during the network boot process, or you can often find it printed on a label on your network card. Alternatively, you may have a way of seeing your virtual interfaces, like you can in VirtualBox.

The new host uses the CentOS7-x86_64 profile . So far it is no different from the hosts we have built previously. If a host with the appropriate MAC address connects to our Cobbler host, then Cobbler will use these configuration settings to provision the host.

If you need to change the way you build a host, you can create a new profile. Your new profiles can inherit settings from other profiles, which are regarded as their parents. We are going to create a profile called centos-base that will inherit the distro and other settings from the parent CentOS7-x86_64.

$ sudo cobbler profile add --name centos-base --parent CentOS7-x86_64This is how we can use different common Kickstart or preseed files for different host groups. Kickstart and Preseed files may have different disk configurations or package lists that are tailored for particular profiles. To add a particular Kickstart or Preseed file, you first copy and modify any existing one to the way you like it and add it to the /var/lib/cobbler/kickstarts directory. Then you can add it to the profile with the --kickstart option.

You can list the configured hosts using the list and report options .

$ sudo cobbler system listweb1.example.com

A full listing of the gateway.example.com system definition can be seen using the report option.

$ sudo cobbler system report –name=web1.example.comWe can also delete a system using the remove command.

$ sudo cobbler system remove --name=web1.example.comNote

You can read about additional Cobbler capabilities on the cobbler command’s man page.

Cobbler Web Interface

Cobbler also has a simple web interface you can use to manage some of its options. It’s pretty simple at this stage, and the command-line interface is much more fully featured, but it is available if you want to implement it. You can find instructions at http://cobbler.github.io/manuals/2.8.0/5_-_Web_Interface.html .

Troubleshooting Cobbler

You can troubleshoot the network boot process by monitoring elements on your host, including your log files, and by using a network monitoring tool like the tcpdump or tshark command.

You can start by monitoring the output of the DHCP process by looking at the /var/log/messages log files. Cobbler also logs to the /var/log/cobbler/cobbler.log file and the files contained in the kicklog and syslog directories also under /var/log/cobbler.

You can also monitor the network traffic passing between your booting host and the boot server. You can use a variety of network monitoring tools for this.

$ sudo tcpdump port tftpCobbler has a wiki page available that contains documentation at http://cobbler.github.io/manuals . The documentation includes some useful tips for troubleshooting at http://cobbler.github.io/manuals/2.8.0/7_-_Troubleshooting.html . The Cobbler community also has a mailing list and other support channels that you can see here: http://cobbler.github.io/community.html .

Kickstart

On CentOS, the language used to automatically install your host is called Kickstart . On Ubuntu, it is called Preseed . For simplicity’s sake and because it’s an easier language to use, we’re going to show you how to use Kickstart to automate your installation for both CentOS and Ubuntu. Where something isn’t supported on Ubuntu, we’ll show you how to use Preseed to configure it.

A Kickstart configuration file contains the instructions required to automate the installation process. It’s a simple scripted process for most installation options, but it can be extended to do some complex configuration.

You can find the latest detailed documentation for Kickstart at http://pykickstart.readthedocs.io/en/latest/kickstart-docs.html .

You can find documentation on Preseed and its directives at https://wiki.debian.org/DebianInstaller/Preseed . We’ll work with a few of these directives later in this section.

You’ve already seen how to specify Kickstart files to your provisioning environments, using Cobbler. Let’s start by looking at some of the contents of a simple Kickstart file in Listing 19-4.

Listing 19-4. A Kickstart File

install# System authorization informationauth --enableshadow --passalgo sha512# System bootloader configurationbootloader --location=mbr# Partition clearing informationclearpart --all --initlabel# Use text mode installtext

Listing 19-4 shows a list of configuration directives starting with the install option, which dictates the behavior of the installation process by performing an installation.

You can then see configuration directives with options, for example, auth --enableshadow --passalgo sha512, which tell Kickstart how to answer particular installation questions. The auth statement has the values --enableshadow and --passalgo sha512 here, which enable shadow passwords and specify that passwords hashes must use the SHA512 password algorithm, respectively.

The option that follows, bootloaderwith a value of --location=mbr, tells Kickstart to install the boot loader into the MBR. Next is the directive clearpart, which clears all partitions on the host and creates default labels for them. The final option, text, specifies we should use text-based installation as opposed to the GUI.

Tip

You can use Kickstart to upgrade hosts as well as install them. If you have an existing host, you can network boot from a new version of your operating system and use a Kickstart file to script and upgrade.

There are too many directives to discuss them individually, so we show you in Table 19-1 the directives that must be specified and some of the other major directives that you may find useful.

Table 19-1. Required Kickstart Directives

Directive | Description |

|---|---|

auth | Configures authentication. |

bootloader | Configures the boot loader. |

keyboard | Configures the keyboard type. |

lang | Configures the language on the host. |

part | Configures partitions. This is required for installation, but not if upgrading. |

rootpw | Specifies the password of the root user. |

timezone | Specifies the time zone the host is in. |

You can also find a useful list of the available directives with explanations at http://pykickstart.readthedocs.io/en/latest/kickstart-docs.html#chapter-2-kickstart-commands-in-fedora .

Tip

If you are on CentOS, you can see an example Kickstart file that was created when you installed your host in the /root/anaconda-ks.cfg file. This will show you how your current host is built and can be used as an example to build similar hosts.

Installation Source

You’ve already seen the install and upgrade directives that specify the behavior of the installation. You can also specify the source of your installation files.

url --url http://192.168.0.1/centos/For Cobbler, we define a variable to specify the location of our installation source.

url --url=$treeThe url directive can also be used to specify an FTP server.

url --url ftp://jsmith:[email protected]/centosWe can specify some alternative sources, including cdrom, when installing from a locally mounted CD or DVD and hard drive to install from a local partition.

harddrive --dir=/centos --partition=/installsourceKeyboard, Language, and Time Zone

The next snippet we’re going to show you configures our keyboard, language, and time zone.

# System keyboardkeyboard us# System languagelang en_AU# System timezonetimezone Australia/Melbourne

Here we’ve specified us as the value for the keyboarddirective to indicate a U.S. keyboard. We’ve specified our language as en_AU (English Australian) and our time zone as Australia/ Melbourne.

Managing Users

You can also set the root user’s password with the Kickstart rootpw directive.

rootpw --iscrypted $6$D7CxLkSBeC9.k.k3$S8G9s3/Y5LJ4dio....S5GS78p2laxALxaJ.lCN9tzKB1zIpYz38Fs9/The rootpw directive is a required Kickstart option for all Kickstart files. It can take either a plain-text value or an encrypted value for the root user’s password when the --iscrtypted option is specified. You can lock the root user account so that no one can log in with it using the --lock option too (if --lock is specified, then you don’t need a password as well).

You can create a new user with Kickstart using the user directive.

user jsmith --password passwordThe preceding code creates a new user called jsmith, with a password of password. By adding the --iscrypted option, you can add a user with an encrypted password. We would create our encrypted password as we did with the rootpw directive.

Firewall and Network

On CentOS, you can configure your host’s initial firewall and network configuration.

# Firewall configurationfirewall --enabled --http --ssh --smtp# SELinux configurationselinux --enabled

Here we enabled the firewall with the firewall option and allowed access via HTTP, SSH, and SMTP. (You can disable the firewall with the --disabled option.) We also enabled SELinux—if you really need to, you can disable using the selinux --disabled option.

You can configure your network connections with Kickstart like so:

# Network informationnetwork --bootproto=static --device=eth0 --gateway=192.168.0.254--ip=192.168.0.1 --nameserver=192.168.0.1 --netmask=255.255.255.0 --onboot=on

You can also specify network configuration for one or more interfaces using the network option. You can see we’ve set the various options required to configure the eth0 interface. You can also specify DHCP, for example:

network --bootproto=dhcp --device=eth0 --onboot=onOn CentOS with Cobbler, if you’re working with a specific host (one created with the cobbler system command), you can pass specific network configuration values to the Cobbler system configuration.

$ sudo cobbler system edit --name=gateway.example.com --mac=00:0C:29:3B:22:46--profile=centos-base --interface=eth0 --ip=192.168.0.1 --subnet=255.255.255.0 --gateway=192.168.0.254 --hostname=gateway --bootproto=static

Here we’ve specified the edit command to change an existing Cobbler-defined system and passed network configuration values to our system. This would define a static network configuration for interface eth0. We specify that the boot protocol is static using the --static=1 option; we would specify --static=0 for a DHCP configuration. The interface to be configured is specified using the --interface=eth0 option.

Then, instead of specifying a network line, in our Kickstart file we specify what Cobbler calls a snippet.

$SNIPPET('network_config')When building your host, Cobbler passes the network configuration you’ve specified to this snippet and a template it contains. This is then converted into the appropriate network line, and your host is configured.

Tip

This snippet is a simple use of Cobbler’s snippet system. You can define a variety of other actions using snippets, and you can see a selection of these in the /var/lib/cobbler/snippets directory, including the network_config snippet we used in this section. You can see how to use these snippets in the sample.ks file, and you can find instructions on how to make use of templates and snippets at http://cobbler.github.io/manuals/2.8.0/3/5_-_Kickstart_Templating.html and http://cobbler.github.io/manuals/2.8.0/3/6_-_Snippets.html .

Disks and Partitions

You’ve already seen one option Kickstart uses to configure disks and partitions, clearpart, which clears the partitions on the host. You can then use the part option to configure partitions on the host like so:

# Partition clearing informationclearpart --all --initlabelpart /boot --asprimary --bytes-per-inode=4096 --fstype="ext4" --size=150part / --asprimary --bytes-per-inode=4096 --fstype="ext4" --size=4000part swap --bytes-per-inode=4096 --fstype="swap" --size=512

Note

On CentOS, you can create a similar configuration just by specifying the autopart option. The autopart option automatically creates three partitions. The first partition is a 1GB or larger root (/) partition, the second is a swap partition, and the third is an appropriate boot partition for the architecture. One or more of the default partition sizes can be redefined with the part directive.

You use the part option to create specific partitions. In the preceding code, we first created two partitions , /boot and /, both ext4. We specified a size of 150MB for the /boot partition and a size of 4000MB (or 4GB) for the / or root partition. We also created a swap partition with a size of 512MB.

Using Kickstart on CentOS, we can create software RAID configurations, for example:

part raid.01 --asprimary --bytes-per-inode=4096 --fstype="raid" --grow --ondisk=sda--size=1part raid.02 --asprimary --bytes-per-inode=4096 --fstype="raid" --grow --ondisk=sdb--size=1part raid.03 --asprimary --bytes-per-inode=4096 --fstype="raid" --grow --ondisk=sdc--size=1part raid.04 --asprimary --bytes-per-inode=4096 --fstype="raid" --grow --ondisk=sdd--size=1part raid.05 --asprimary --bytes-per-inode=4096 --fstype="raid" --grow --ondisk=sde--size=1raid / --bytes-per-inode=4096 --device=md0 --fstype="ext4" --level=5 raid.01 raid.02raid.03 raid.04 raid.05

We specified five RAID disks, and each disk uses its entire contents as indicated by the --grow option. The respective disk to be used is specified with the --ondisk option, here ranging from sda to sde. Lastly, we used the raid option to specify the md0 RAID disk as the / or root partition.

You can also create partitions using LVM during an automated installation. On CentOS, for example, you would create them like so:

part /boot --fstype ext4 --size=150part swap --size=1024part pv1 --size=1 --growvolgroup vg_root pv1logvol / --vgname=vg_root --size=81920 --name=lv_root

In the preceding sample, we created a 150MB boot partition, a 1GB swap partition, and a physical volume called pv1 on the remainder of the disk, using the --grow option to fill the rest of the disk. We then created an 80GB LVM logical volume called vg_root.

Package Management

Using Kickstart, you can specify the packages you want to install. On CentOS, you specify a section starting with %packages and then the list of package groups or packages you want to install.

%packages@ Administration Tools@ Server Configuration Tools@ System Tools@ Text-based Internetdhcp

We specify an at symbol (@), a space , and then the name of the package group we want to install, for example, Administration Tools. We can also specify individual packages by listing them by name without the @ symbol and space, as we have here with the dhcp package.

Ubuntu uses a similar setup.

%packages@ kubuntu-desktopdhcp-client

Here we’ve installed the Kubuntu-Desktop package group and the dhcp-client package. For more information, see http://pykickstart.readthedocs.io/en/latest/kickstart-docs.html#chapter-7-package-selection .

Note

We discuss package groups in Chapter 8.

Pre- and Post-installation

You can run scripts before and after Kickstart installs your host. The prerun scripts run after the Kickstart configuration file has been parsed, but before your host is configured. Any prerun script is specified at the end of the Kickstart file and prefixed with the line %pre.

Each %post and %pre section must have a corresponding %end.

The postrun scripts are triggered after your configuration is complete and your host is installed. They should also be specified at the end of the Kickstart file and prefixed by a %post line. This is the %post section from our sample.ks configuration file:

%post$SNIPPET('post_install_kernel_options')$SNIPPET('post_install_network_config')%end

Here we’ve specified two postrun Cobbler snippets that configure kernel and network options.

This postrun scripting space is useful to run any required setup applications or scripts.

Preseed

Preseed is the Debian installation automation tool. It is more opaque than Kickstart, but it performs the same function of automating an installation.

To provide some context , each d-i line that you see is a call to the Debian installer. The format of the file is the same as instructions passed to the debconf-set-selection command. It takes the following form:

<owner> <question name> <question type> <value>So something like setting the locale for your system would look something like this:

d-i debian-installer/locale string enThe owner is the debian-installer, the question is debian-installer/locale, the type is string, and the value is en, or English.

Installation Source

Cobbler is selected as the initial installation source via the live-installer question.

d-i live-installer/net-image string http://$http_server/cobbler/links/$distro_name/install/filesystem.squashfsDuring the install, you can set up your apt repositories. This points your apt sources to the Ubuntu mirrors.

d-i mirror/country string manuald-i mirror/http/hostname string archive.ubuntu.comd-i mirror/http/directory string /ubuntud-i mirror/http/proxy string

You can choose the different apt pools with these settings to get to packages available from backports and the like:

#d-i apt-setup/restricted boolean true#d-i apt-setup/universe boolean true#d-i apt-setup/backports boolean true

You can just uncomment what pool you would like.

Keyboard, Language, and Time Zone

Setting the keyboard and language can be a time-consuming process but not with the installer. You can select the following in Preseed:

d-i debian-installer/locale string end-i debian-installer/country string AUd-i debian-installer/locale string en_AU.UTF-8d-i debian-installer/language string end-i console-setup/ask_detect boolean falsed-i console-setup/layoutcode string usd-i console-setup/variantcode stringd-i keyboard-configuration/layoutcode string usd-i clock-setup/ntp boolean trued-i clock-setup/ntp-server string ntp.ubuntu.comd-i time/zone string UTCd-i clock-setup/utc boolean true

Here we set the locale and country, and we disabled the keyboard prompt to ask for our selection and answered all the questions concerning keyboard layout. Then we enable NTP for our clocks and set their time to UTC.

Managing Users

With Cobbler and Preseed we enable the root user, which Ubuntu doesn’t normally do.

d-i passwd/root-login boolean trued-i passwd/root-password-crypted password $default_password_cryptedd-i passwd/make-user boolean false

So when you build your hosts, you will need to sign in as root. To keep your familiar setup, you could add a user ubuntu either in the Preseed or in a SNIPPET at the end of the installation.

#d-i passwd/user-fullname string Ubuntu User#d-i passwd/username string ubuntu

Firewall and Network

You can set up the network in whichever fashion suits you with Preseed, but you cannot do any firewall configurations. You can also add firewall configurations in post-install scripts in Cobbler if that is a requirement.

# IPv4 example#d-i netcfg/get_ipaddress string 192.168.1.42#d-i netcfg/get_netmask string 255.255.255.0#d-i netcfg/get_gateway string 192.168.1.1#d-i netcfg/get_nameservers string 192.168.1.1#d-i netcfg/confirm_static boolean true

You can set a static IP or allow for DHCP in your network settings.

Disks and Partitions

Disk partition can be fairly complex in Preseed. Here we are just creating a simple LVM partition setup:

d-i partman-auto/method string lvmd-i partman-lvm/device_remove_lvm boolean trued-i partman-lvm/confirm boolean trued-i partman-auto/choose_recipe select atomicd-i partman/confirm_write_new_label boolean trued-i partman/choose_partition select finishd-i partman/confirm boolean true

Package Management

Using Preseed, you can specify the packages you want to install. For group packages, you can use tasksel (or task select owner) to multiselect a package group—like ubuntu-desktop.

tasksel tasksel/first multiselect ubuntu-desktopFor individual packages, you can just use the following:

d-i pkgsel/include string openssh-serverYou can have more than one package selected if you like.

d-i pkgsel/include string openssh-server build-essentialMAAS

We’re going to briefly introduce you to the MAAS tool—or Metal As A Service by Ubuntu. You may remember we saw “Install MAAS rack or region servers” in the initial splash screen when we first created our Ubuntu installation. This is an Ubuntu service used for managing bare metal, or physical computers, like you manage virtual computers. This service is able to install bare-metal Ubuntu and CentOS servers—as well as RHEL and Windows.

The MAAS service strives to provide cloud functionality on your metal servers. The technology underlying this tool is not new. It uses PXE, Trivial File Transfer Protocol (TFTP), and Debian Preseed to build up nodes. It is designed to manage physical data centers and is very scalable to manage thousands of nodes.

Once MAAS spins up a host, you can provision it with Juju, an Ubuntu provision framework. This can install software, user accounts, and other resources onto your server, or you can use other provisioning services like Puppet or Ansible. You can read more about that here: https://maas.ubuntu.com/docs/juju-quick-start.html .

You can see how it works here: https://maas.io/how-it-works . There is a quick get-started tutorial here: https://maas.io/get-started . You can even try to run up a Vagrant test suite here: https://github.com/battlemidget/vagrant-maas-in-a-box .

Configuration Management

We’ve shown you throughout this book that configuring a Linux server includes quite a few tasks, for example, configuring hosts; creating users; and managing applications, daemons, and services. These tasks can be repeated many times in the life cycle of one host in order to add new configurations or remedy a configuration that has changed through error, entropy, or development. They can also be time-consuming and are generally not an effective use of time and effort.

The usual first response to this issue is to try to automate the tasks, which leads to the development of custom-built scripts and applications. Few scripts developed in this ad hoc manner are ever published, documented, or reused, so the same tool is developed over and over again. These scripts also tend not to scale well, and they often require frequent maintenance.

Configuration management tools can automate these tasks efficiently and allow a consistent and repeatable life cycle for your hosts. We’re going to show you how to use one of these tools, Puppet, to automate your configuration.

Introducing Puppet

Puppet ( https://puppet.com/ , formerly Puppetlabs) is an open source configuration management tool that in most installations relies on a client/server deployment model. Puppet is as available as open source or the commercial enterprise product. The enterprise product combines the multiple Puppet open source products and provides an enterprise dashboard to coordinate and configure your resources as well as commercial support agreements. The open source version doesn’t have the fancy enterprise features, is community supported, and is licensed using the Apache 2.0 license. We’re going to give you an overview of Puppet and how to use it to configure your environment and your hosts.

Note

At the time of writing, the Puppet world is changing from version 3.x to version 4.x. Version 3.x will be end of lifed by the end of 2016, so you should be using version 4.x. Version 4 is substantially different under the hood than version 3. The current version at the time of writing is v4.8.

When using Puppet, central servers, called Puppet masters, are installed and configured. Client software is then installed on the target hosts, called nodes, that you want to manage. When a node connects to the Puppet master, a configuration manifest for that node is compiled on the master, sent to the node, and then applied on the node by the Puppet agent.

Tip

There is another way to apply the manifest to a node, and that is called master-less puppet, or a puppet apply. It does not rely on the Puppet master architecture and certificate signing.

To provide client/server connectivity, Puppet uses RESTful web services running over HTTPS on TCP port 8140. In version 4.x, the Puppet server is a JVM-based application. To provide security, the sessions are encrypted and authenticated with internally generated self-signed certificates. Each Puppet client generates a self-signed certificate that is then validated and authorized on the Puppet master.

Thereafter, each client contacts the server—by default every 30 minutes, but this interval is customizable—to confirm that its configuration is up-to-date. If a new configuration is available or the configuration has changed on the node, the configuration manifest is recompiled and then applied to the client. This way, if any existing configuration has varied on the client, it is corrected with the expected configuration from the server. The results of any activity are logged and transmitted to the master.

At the heart of how Puppet works is a language that allows you to articulate and express your configuration. This is called the Puppet Declarative Scripting Language (Puppet DSL). Your configuration components are organized into entities called resources, which in turn can be grouped together in collections. Resources consist of the following:

Type

Title

Attributes

Listing 19-5 shows an example of a simple resource.

Listing 19-5. A Puppet Resource

file { '/etc/passwd':owner => 'root',group => 'root',mode => '0644',}

The resource in Listing 19-5 is a file type resource. The file resource configures the attributes of files under management. In this case, it configures the /etc/passwd file and sets its owner and group to the root user and its permissions to 0644.

Tip

There is a style guide for writing your Puppet manifests that you should become familiar with early on. You can see it here: https://docs.puppet.com/guides/style_guide.html .

The resource type tells Puppet what kind of resource you are managing—for example, the user and file types are used for managing user and file operations on your nodes, respectively. Puppet comes with a number of resource types by default, including types to manage files, services, packages, cron jobs, and repositories, among others.

Tip

You can see a full list of the built-in resource types at https://docs.puppet.com/puppet/4.8/type.html . You can also develop your own types in the Ruby programming language.

The resource’s title identifies it to Puppet. Each title is made up of the name of the resource type (e.g., file) and the name of the resource (e.g., /etc/passwd). These two values are combined to make the resource’s title (e.g., File['/etc/passwd']).

Note

In a resource title, the name of the resource type is capitalized (File), and the name of the resource is encapsulated in block brackets and single quotes (['/etc/passwd']).

Here the name, /etc/passwd, also tells Puppet the path of the file to be managed. Each resource managed by Puppet must be unique—for example, there can be only one resource called File['/etc/passwd'].

The attributes of a resource describe the configuration details being managed, such as defining a particular user and the attributes of that user (e.g., the groups the user belongs to or the location of the user’s home directory). In Listing 19-5, we are managing the owner, group, and mode (or permissions) attributes of the file. Each attribute is separated from its value with the => symbols and is terminated with a comma.

Puppet also uses the concept of collections, which allow you to group together many resources. For example, an application such as Apache is made up of a package, a service, and a number of configuration files. In Puppet, each of these components would be represented as a resource (or resources) and then collected and applied to a node. We’ll look at some of these collection types later in this chapter.

Installing Puppet

Let’s start by installing Puppet. For Puppet, the client and server installations are slightly different, and we’ll show you how to install each.

The Puppet master will require at least 3Gb of memory for the JVM and operating system to have enough room. Also, TCP port 8140 needs to be open on the Puppet master.

CentOS Installation

With the latest packages from Puppet, all the required packages are installed by default when we install the server. On CentOS, on both servers and clients, you will need to add the Puppet repositories for Red Hat–based machines.

$ sudo yum install -y https://yum.puppetlabs.com/puppetlabs-release-pc1-el-7.noarch.rpmThere are several components that make up the Puppet ecosystem such as Facter, a tool for discovering system facts on nodes. System facts are things like operating system, IP addresses, and any custom facts. Another one is Hiera, a key/value lookup database for declaring Puppet configuration data. Finally, there’s MCollective, an orchestration tool for managing Puppet nodes.

On the master, you install the puppetserver, and this will install facter, hiera, the agent, and other required packages from the Puppet repository.

$ sudo yum install puppetserverOn the Puppet nodes, or clients, we can install the puppet-agent package by itself, and it will contain or require all that it needs to run.

$ sudo yum install –y puppet-agentThis of course will require the installation of the YUM repository, like earlier, first.

Ubuntu Installation

On Ubuntu, we again install the apt repository and then install puppetserver on the master server, which will bring down all the necessary Puppet components, like Facter and Hiera and the agent.

On the server or master, we need to do this:

$ wget https://apt.puppetlabs.com/puppetlabs-release-pc1-xenial.deb -O xenial.deb && sudo dpkg -i xenial.deb$ sudo apt-get update$ sudo apt-get install -y puppetserverOn the client, you need the following:$ sudo apt-get install –y puppet-agent

You will now have all the necessary components installed on your systems.

Configuring Puppet

We’ll start configuring Puppet by setting up our Puppet master. Our configuration for the Puppet master will be located under the /etc/puppetlabs directory.

As we have said, Puppet has several components that make up the ecosystem. Puppet’s principal server configuration file is located at /etc/puppetlabs/puppetserver/conf.d/puppetserver.conf. You will rarely need to edit this file, but it has things like various path settings and TLS ciphers being used.

The other main configuration file is on both the agent and the master. It is located at /etc/puppetlabs/puppet/puppet.conf. You can define global configuration settings or service-specific settings under the [service] sections, such as [main], [master], [agent], or [user].

Configuring the Master

Typically the master’s /etc/puppetlabs/puppet/puppet.conf will look something like this:

[main]certname = puppetmaster.example.comserver = puppetenvironment = productionruninterval = 30mstrict_variables = true[master]dns_alt_names = puppetmaster,puppet,puppet.example.com

The [main] section contains the defaults for both the master and agents. Here we determine the certname, which will be the common name specified in the TLS certificate that we will generate on startup. This is related to the dns_alt_names setting that provides alternative DNS names that agents can use to verify the Puppet master. The server = puppet is the name of the Puppet master this Puppet agent will try to connect to. You can see that this matches one of the dns_alt_names.

The Puppet agents can specify the environment that they should use to collect their catalog when they connect to the Puppet master. This is often used to test version control system (VCS) branches of your Puppet code or can be used to multihome your Puppet master for more than one organization.

Do not fall into the mistake of making environments that mirror the roles your hosts may perform. That is, don’t have an environment for development, UAT, staging, and production and assign relevant hosts to those environments. It is easier to treat all your hosts as production and handle the different roles and profiles these hosts may take on in the Puppet manifest itself. It often leads to a horrible divergence of your Puppet code between systems and VCS branches. By all means create an environment to test your Puppet code, but roll that as soon as you can into the production branch. Use alternatives like Hiera and the “roles and profiles” patterns to achieve this. See https://docs.puppet.com/hiera/3.2/ and https://docs.puppet.com/pe/2016.4/r_n_p_full_example.html .

The runinterval is the time between each Puppet run, that is, when the agent will call into the Puppet master for its catalog. strict_variables means that the parse will raise an error when referencing unknown variables.

In the [master] section, we define setting for the Puppet master server. We are not going to set anything here except the dns_alt_names value. Settings that might belong in here are codedir, where Puppet will look for the Puppet code, or the manifest, which we are going to write. However, we are going to take the defaults, which means our codedir will be /etc/puppetlabs/code.

It is in here you will set reporting settings and configuration for using PuppetDB. Using PuppetDB is a great idea as it allows you to do complex catalogs as it collects data from multiple nodes but is outside the scope of this exercise. See here for more details: https://docs.puppet.com/puppetdb/ .

We recommend you create a DNS CNAME for your Puppet host (e.g., puppet.example.com) or add it to your /etc/hosts file.

# /etc/hosts127.0.0.1 localhost192.168.0.1 au-mel-ubuntu-1 puppet puppetmaster puppet.example.com puppetmaster.example.com

Note

We cover how to create CNAMEs in Chapter 10.

Writing the Manifest

We’re going to store our actual configuration in a directory called manifestsunder the /etc/puppetlabs/code/environments/production directory. In this directory, you will most likely see the following directories and files:

ll /etc/puppetlabs/code/environments/production/-rw-r--r-- 1 root root 879 Dec 5 23:53 environment.confdrwxr-xr-x 2 root root 4096 Dec 5 23:53 hieradata/drwxr-xr-x 2 root root 4096 Dec 5 23:53 manifests/drwxr-xr-x 2 root root 4096 Dec 5 23:53 modules/

There is an environment.conf file that the Puppet server will read to determine the specific settings this environment will need. The hieradata directory will contain the Hiera database for variable lookups. The manifest directory is where the Puppet looks for the site.pp file. This file is used to create the root of our configuration. The modules directory is where we install Puppet modules. Modules are collections of Puppet files that perform a specific set of tasks. We will explain them in greater detail shortly.

The manifests directory needs to contain a file called site.pp that is the root of our configuration. Let’s create that now.

$ sudo touch /etc/puppetlabs/code/environments/production/manifests/site.ppNote

Manifest files containing configuration have a suffix of .pp.

We’re also going to create three more directories at the base of our production directory, first site and in that directory profile and role.

$ sudo mkdir -p /etc/puppetlabs/code/environments/production/site/{profile,role}The site directory is actually another module and, like role, will be used to contain specific role and profile information for this particular environment. We will need to edit our environment.conf file to get Puppet to see these. We need to add the following to the modulepath directive:

$ sudo vi /etc/puppetlabs/code/environments/production/environment.confmodulepath = ./sites:./modules:$basemodulepath

We are now going to create a node definition so that we can match each of our nodes to a profile. A profile can be described as the kind of host it is. A role in comparison is like the service that host performs. For example, we can have a role of web_server. We can have a profileof UAT web_server. That is, it is a web server that has things that the UAT people require that might make it slightly different from our production web servers—different database back-end configurations, different authentication requirements, or the like—but still essentially it still has a role of being a web server that might have our application deployed to it.

It can take a bit to get your head around, and there are no perfect answers for how you should implement this structure into your Puppet manifest. Individual companies will have different implementations based on practices that work best for their companies. For a greater discussion of the Role and Profile pattern, see https://www.youtube.com/watch?v=RYMNmfM6UHw .

We’ll continue our configuration by defining our site.pp file, as shown in Listing 19-6.

Listing 19-6. The site.pp File

sudo vi /etc/puppetlabs/code/environments/production/manifests/site.ppnode /^webd+.example.com$/ {include profile::web_server}

The node declaration in Listing 19-6 is how the Puppet master knows what to do with nodes when they “check in.” Here we have used a regular expression, but you can also use plan strings like the following:

node 'web1.example.com' { ... }node 'web1.example.com', 'web2.example.com' { ... }

In our declaration we are saying any node that checks in with a TLS certificate name starts with web (^ web) and has one or more numbers following that (^web d+) and then the domain name (.example.com) and nothing more (.com $). Then we provide this node with the profile::web_server profile.

There is a special node declaration for when there is no match, the default node definition.

node default { ... }You can use this default node declaration to notify people that a node has no definition or to apply a set of default security restrictions. If there is no default node, and no matching definition for a node, Puppet will fail to compile a manifest for this node.

Note

You can find more information on defining nodes here: https://docs.puppet.com/puppet/latest/lang_node_definitions.html .

Starting Puppet Server with the RAL

Here is a neat trick. You can use the Puppet resource command to start your Puppet master server (puppetserver). The Puppet resource command allows you to directly interact with the Puppet Resource Abstraction Layer (RAL) . The RAL is how Puppet interacts and manages the system. With the Puppet resource we will start puppetserver and make it start on boot like this:

sudo /opt/puppetlabs/bin/puppet resource service puppetserver ensure=running enable=trueWe have not yet described how Puppet manages resources, and you will get a deeper understanding of what this command is doing shortly, but briefly what this is doing is the following:

Starting a service (ensure=running)

Making the necessary changes to start a service at boot (enable=true)

Using whatever underlying system to start the service (service puppetserver)

In our case, it will use systemctl commands (start and enable) under the hood. You can run this same command on CentOS, Ubuntu, or any other supported system, and it will start the puppetserver process. If you were on a Mac and starting an Apache service, it would use launchctl—it uses whatever is appropriate for the system it is run on.

We can see if it has started using the normal systemctl command, and we can see the logs here:

$ sudo journalctl -u puppetserver -f-- Logs begin at Tue 2016-12-20 09:24:04 UTC. --Dec 21 09:25:29 puppetmaster systemd[1]: Starting puppetserver Service...Dec 21 09:25:29 puppetmaster puppetserver[4877]: OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=256m; support was removed in 8.0Dec 21 09:26:30 puppetmaster systemd[1]: Started puppetserver Service.

Also, the running server logs can be found in /var/log/puppetlabs.

We can use this for keeping an eye on the tasks in the next section.

Connecting Our First Client

Once you have the Puppet master configured and started, you can configure and initiate your first client. On the client, as we mentioned earlier, you need to install the puppet-agent package using your distribution’s package management system. We’re going to install a client on the web.example.com host and then connect to our puppet.example.com host. This installation will also create a /etc/puppetlabs/puppet/ directory with a puppet.conf configuration file.

When connecting our client, we first want to run the Puppet client from the command line rather than as a service. This will allow us to see what is going on when we connect. The Puppet client binary is called puppet agent, and you can see a connection to the master initiated in Listing 19-7.

Listing 19-7. Puppet Client Connection to the Puppet Master

web$ sudo /opt/puppetlabs/bin/puppet agent --server puppet.example.com --test --waitforcert 15Info: Creating a new SSL key for web1.example.comInfo: Caching certificate for caInfo: csr_attributes file loading from /etc/puppetlabs/puppet/csr_attributes.yamlInfo: Creating a new SSL certificate request for web1.example.comInfo: Certificate Request fingerprint (SHA256): 3E:D9:02:08:98:79:FB:8C:40:65:75:4E:15:7C:51:89:4C:14:25:90:16:2A:DB:29:D6:3C:F4:82:64:7E:C8:62Info: Caching certificate for caNotice: Did not receive certificate

In Listing 19-7, we executed the puppet agent binary with a number of options. The first option, --server, specifies the name or address of the Puppet master to connect to. We can also specify this in the main section of the /etc/puppetlabs/puppet/puppet.conf configuration file on the client.

[main]server=puppet.example.com

The --test option runs the Puppet client in the foreground and prevents it from running as a daemon, which is the default behavior. The --test is commonly mistaken, and people think that it only “tests” a Puppet run and isn’t destructive. This sadly misnamed option is actually a meta parameter for onetime, verbose, no-daemonize, no-usecacheonfailure, detailed-exitcodes, no-splay, show_diff, and no-use_cached_catalog. If you want a nondestructive Puppet run, you need to specify --noop.

Tip

The --debug option provides further output that is useful for troubleshooting.

In Listing 19-7, you can see the output from our connection. The client has created a certificate signing request and a private key to secure our connection. Puppet uses TLS certificates to authenticate connections between the master and the client. The client is now waiting for the master to sign its certificate and enable the connection. At this point, the client is still running and awaiting the signed certificate. It will continue to check for a signed certificate every 15 seconds until it receives one or is canceled (using Ctrl+C or the like).

Note

You can change the time the Puppet client will wait using the --waitforcert option like we have done. You can specify a time in seconds or 0 to not wait for a certificate.

Now on the master, we need to sign the certificate. We do this using the puppet cert command.

puppet$ sudo /opt/puppetlabs/puppet/bin/puppet cert list"web1.example.com" (SHA256) 3E:D9:02:08:98:79:FB:8C:40:65:75:4E:15:7C:51:89:4C:14:25:90:16:2A:DB:29:D6:3C:F4:82:64:7E:C8:62

The --list option displays all the certificates waiting to be signed. We can then sign our certificate using the sign option. You can use the certificate fingerprint to verify you are signing the correct certificate.

puppet$ sudo /opt/puppetlabs/puppet/bin/puppet cert sign web1.example.comSigning Certificate Request for:"web1.example.com" (SHA256) 3E:D9:02:08:98:79:FB:8C:40:65:75:4E:15:7C:51:89:4C:14:25:90:16:2A:DB:29:D6:3C:F4:82:64:7E:C8:62Notice: Signed certificate request for web1.example.comNotice: Removing file Puppet::SSL::CertificateRequest web1.example.com at '/etc/puppetlabs/puppet/ssl/ca/requests/web1.example.com.pem'

Note

You can sign all waiting certificates with the puppet cert sign --all command.

On the client, after we’ve signed our certificate , we should see the following entries:

Notice: Did not receive certificateInfo: Caching certificate for web1.example.comInfo: Caching certificate_revocation_list for caInfo: Using configured environment 'production'Info: Retrieving pluginfactsInfo: Retrieving pluginInfo: Loading factsError: Could not retrieve catalog from remote server: Error 500 on SERVER: Server Error: Evaluation Error: Error while evaluating a Function Call, Could not find class ::profile::web_server for web1.example.com at /etc/puppetlabs/code/environments/production/manifests/site.pp:2:3 on node web1.example.comWarning: Not using cache on failed catalogError: Could not retrieve catalog; skipping run

The client is now authenticated with the master, but we have an error, and nothing has been applied.

Error: Could not retrieve catalog from remote server: Error 500 on SERVER: Server Error: Evaluation Error: Error while evaluating a Function Call, Could not find class ::profile::web_server for web1.example.com at /etc/puppetlabs/code/environments/production/manifests/site.pp:2:3 on node web1.example.comWarning: Not using cache on failed catalogError: Could not retrieve catalog; skipping run

The error is fairly detailed. We expected this error, so let’s see what it is telling us. It says that on line 2 in /etc/puppetlabs/code/environments/production/manifests/site.pp we could not find the class ::profile::web_server for web1.example.com. Looking on line 2 of the site.pp file we see the following:

include profile::web_serverWe have told it to include a profile that we have not created yet. We have to create it. Let’s do that next.

Tip

In the error do you notice that ::profile is preceded by ::? That indicates that the error is in the top scope in Puppet.

Creating Our First Configuration

Now our client has connected, and we’re going to add some configuration for it. On the Puppet master, we need to add our profile module and add some configuration to apply to our client.

A module should have the following structure:

modulename/|- manifests|- init.pp|- files|- templates

At the least, you need the manifests directory; you may see other modules with more directories, like spec and lib as well—for testing and module code, respectively.

We have created the profile module directory in /etc/puppetlabs/code/environments/production/sites already. Let’s create a manifests file inside the profile directory. In that directory we will create a file called init.pp. This file is not technically necessary and will not hold any configuration. You can see the contents of this file in Listing 19-8.

Listing 19-8. Our init.pp Configuration

class profile {}

It is simply an empty Puppet file. This is the standard format of a Puppet file. The class declaration is a Puppet type. The profile is a title. Then the class type expects the Puppet code in that class to be between curly braces {...}. See https://docs.puppet.com/puppet/4.8/lang_resources.html for the basics on the Puppet language.

Now inside the profile directory, we will create our web_server.pp file. The Puppet master autoloader, the mechanism that looks for and loads the Puppet files, will, when it sees include profile::web_server, look in its module path for first the directory profile and then in the manifests directory in that. Then it will load all the *.pp files until it finds the class profile::webserver { ... } directive like declared here:

$ sudo vi /etc/puppetlabs/code/environments/production/sites/profile/manifests/web_server.ppclass profile::web_server {}

In this file, between the {...} we are going to declare a resource. This resource is called notify, and a resource is declared like the following:

notify { "profile::webserver – loaded": }The notify is the resource type. The "profile::webserver – loaded": is the title. What it does is print a message in the runtime log of the Puppet run. As with all resource types, you can add attributes, and notify can take a name, message, and withpath attributes. So, you could write it like this:

notify { "profile::webserver – loaded":name => 'a name',message => 'this is our message'}

Feel free to experiment . You will find all the resources and their attributes at the following link: https://docs.puppet.com/puppet/latest/type.html . Save that file, and let’s run the Puppet agent on web1.example.com again.

$ sudo /opt/puppetlabs/bin/puppet agent --server puppet.example.com --testInfo: Using configured environment 'production'Info: Retrieving pluginfactsInfo: Retrieving pluginInfo: Loading factsInfo: Caching catalog for web1.example.comInfo: Applying configuration version '1482320904'Notice: profile::webserver – loadedNotice: /Stage[main]/Profile::Web_server/Notify[profile::webserver – loaded]/message: defined 'message' as 'profile::webserver – loaded'Notice: Applied catalog in 0.01 seconds

There you can see the output of our notify. Notify can be a handy way to debug your Puppet code as you can print out variables and the like to see that your code is working as you expect.

We now have our profile::web_server module working. Now inside this profile we are going to soon include the role apache_web. This is maybe starting to sound a little like Russian dolls, but the idea is that you abstract designation logic from the type of server it is. For the time being, let’s move on to configuring our role.

Create the following directory: /etc/puppetlabs/code/environments/production/sites/role/manifests. In there we create the file in Listing 19-9.

Listing 19-9. The role::apache_web Class

$ sudo vi /etc/puppetlabs/code/environments/production/sites/role/manifests/apache_web.ppclass role::apache_web (String $vhost_name,String $vhost_doc_root,Numeric $vhost_port) {include apacheapache::vhost { $vhost_name:port => $vhost_port,docroot => $vhost_doc_root,}}

This class has a bit more meat to it. Here we declare the class role::apache_web, and we provide a list of parameters we expect this class to be provided with when it is used. Class parameters in Puppet can either be declared when we create the class or be looked up from a key/value database like Hiera at the time this class is compiled for a node. They are declared right after the class name and inside parentheses separated by a comma. Read more about class parameters here: https://docs.puppet.com/puppet/4.8/lang_classes.html .

In Puppet you can define the data types of the variables you are passing in, and if they are not the correct types, Puppet will error. We have used String and Numeric, but the others, like Boolean, Array, and Hash are described here: https://docs.puppet.com/puppet/4.8/lang_data_type.html .

In Listing 9-11 we have included the apache module . This is a module we are now going to install using the Puppet module command like we described earlier, and it will be the puppetlab/apache module. Looking at the documentation, we can declare a virtual host by giving a name, a port, and doc_root, and we have used the parameters provided to the class.

The apache::vhost is what is called a defined resource type. Defined resource types are normal blocks of Puppet code that can be evaluated multiple times. You still cannot have multiple defined resources with the same name, so you could not have two declarations of apache::vhost {' www.example.com ': } for instance, but you can declare apache::vhost {' www.example.com ': } and apache::vhost {'api.example.com': } in the same manifest just fine.

Let’s install the puppetlabs-apache module on the Puppet server now.