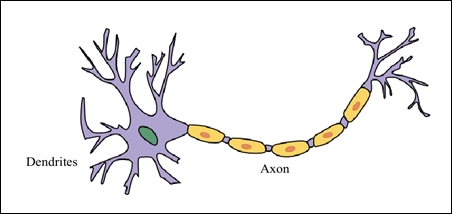

ANNs are modeled on the behavior of the central nervous system of organisms that are capable of learning, such as mammals and reptiles. The central nervous system of these organisms comprises the brain, the spinal cord, and a network of supporting neural tissues. The brain processes information and generates electrical signals that are transported through the network of neural fibers to the organism's various organs. Although the brain performs a great deal of complex processing and control, it is essentially a collection of neurons, and the actual processing of sensory signals is performed by complex combinations of these neurons. Each individual neuron processes only an extremely small portion of the information handled by the brain. The brain functions by routing electrical signals from the body's sensory organs to its motor organs through this complex network of neurons. An individual neuron has a cellular structure as illustrated in the following diagram:

A neuron has several dendrites extending from the cell body, which contains the nucleus, and a single axon that carries signals away from the cell body. The dendrites receive signals from other neurons and can be thought of as the inputs to the neuron. Similarly, the axon is analogous to the output of the neuron. A neuron can thus be represented mathematically as a function that processes several inputs and produces a single output.

Several of these neurons are interconnected, and this network is termed a neural network. A neuron performs its function by relaying weak electrical signals to and from other neurons. The junction between two neurons, across which these signals are passed, is called a synapse.

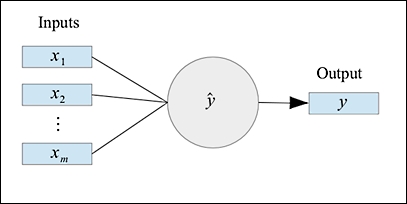

An ANN comprises several interconnected neurons. Each neuron can be represented by a mathematical function that consumes several input values and produces an output value, as shown in the following diagram:

A single neuron can be illustrated by the preceding diagram. In mathematical terms, it's simply a function $f$ that maps a set of input values $(x_1, x_2, \ldots, x_n)$ to an output value $y$. The function $f$ is called the activation function of the neuron, and its output value $y$ is called the activation of the neuron. This representation of a neuron is termed a perceptron. A perceptron can be used on its own and is expressive enough to represent supervised machine learning models such as linear regression and logistic regression. However, complex nonlinear data can be better modeled with several interconnected perceptrons.

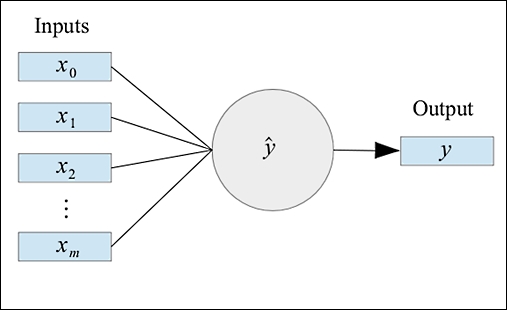

Generally, a bias input is added to the set of input values supplied to a perceptron. For the input values $(x_1, x_2, \ldots, x_n)$, we add the term $x_0$ as a bias input such that $x_0 = 1$. A neuron with this added bias value can be illustrated by the following diagram:

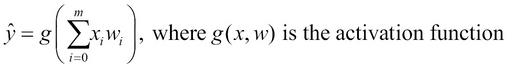

Each input value $x_i$ supplied to the perceptron has an associated weight $w_i$. This weight is analogous to the coefficient of a feature in a linear regression model. The activation function is applied to the sum of the input values multiplied by their corresponding weights. We can formally define the estimated output value $\hat{y}$ of the perceptron in terms of the input values, their weights, and the perceptron's activation function as follows:

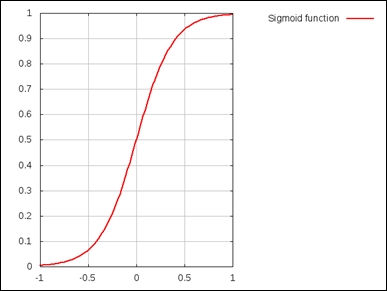
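As a rough illustration, the following Python sketch computes a perceptron's output assuming the usual weighted-sum form $f\left(\sum_{i=0}^{n} w_i x_i\right)$, with the bias input $x_0 = 1$ prepended to the inputs. The function name `perceptron_output` and the weight values are hypothetical and chosen only for illustration. Swapping the activation function also shows why a single perceptron can stand in for the regression models mentioned earlier: an identity activation gives a linear-regression-style prediction, while a logistic sigmoid gives a logistic-regression-style probability.

```python
import math
from typing import Callable, Sequence


def identity(z: float) -> float:
    """Identity activation: the perceptron then behaves like linear regression."""
    return z


def sigmoid(z: float) -> float:
    """Logistic sigmoid: the perceptron then behaves like logistic regression."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # for z < 0 this is small, so it cannot overflow
    return e / (1.0 + e)


def perceptron_output(weights: Sequence[float],
                      inputs: Sequence[float],
                      activation: Callable[[float], float]) -> float:
    """Return activation(sum_i w_i * x_i) with the bias input x_0 = 1 prepended."""
    xs = [1.0, *inputs]  # x_0 = 1 is the bias input
    if len(weights) != len(xs):
        raise ValueError("need one weight per input plus one bias weight w_0")
    z = sum(w * x for w, x in zip(weights, xs))
    return activation(z)


# Made-up weights [w_0, w_1, w_2] and inputs [x_1, x_2].
w = [0.5, 2.0, -1.0]
x = [3.0, 4.0]
print(perceptron_output(w, x, identity))  # 0.5*1 + 2.0*3.0 - 1.0*4.0 = 2.5
print(perceptron_output(w, x, sigmoid))   # sigmoid(2.5), roughly 0.924
```

Here the bias weight $w_0$ plays the role of the intercept term in a regression model, because it is always multiplied by $x_0 = 1$.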

The activation function used by the nodes of an ANN depends greatly on the sample data to be modeled. Generally, the sigmoid or hyperbolic tangent function is used as the activation function for classification problems (for more information, refer to Wavelet Neural Network (WNN) approach for calibration model building based on gasoline near infrared (NIR) spectr). The sigmoid function behaves like a smooth threshold: its output stays close to 0 for large negative inputs, rises steeply around an input of 0, and approaches 1 for large positive inputs, so the neuron can be said to activate once its input crosses this threshold.
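To make this threshold-like behavior concrete without a plot, the following Python sketch tabulates the logistic sigmoid $1/(1 + e^{-z})$ and the hyperbolic tangent at a few inputs around $z = 0$. The helper name `sigmoid` is our own; writing it in two branches is a common way to avoid overflow in `math.exp` for large negative inputs.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # for z < 0, e^z is small, so no overflow
    return e / (1.0 + e)


# Tabulate both activations around z = 0, where they change most sharply.
for z in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"z = {z:+.1f}   sigmoid = {sigmoid(z):.3f}   tanh = {math.tanh(z):+.3f}")
```

Both functions share the same S shape: $\tanh(z) = 2\,\sigma(2z) - 1$, so the hyperbolic tangent is a sigmoid rescaled to the range $(-1, 1)$.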

We can plot the sigmoid function to depict this behavior, as shown in the following plot:

ANNs can be broadly classified into feed-forward neural networks and recurrent neural networks (for more information, refer to Bidirectional recurrent neural networks). The difference between these two types of ANNs is that in a feed-forward neural network, the connections between the nodes do not form a directed cycle, whereas in a recurrent neural network they do. In a typical layered feed-forward network, each node in a given layer receives input only from the nodes in the immediately preceding layer.
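The layered structure of a feed-forward network can be sketched as a single forward pass. This Python example assumes fully connected layers, sigmoid activations, and a bias input $x_0 = 1$ for every node; the `Layer` representation (a list of weight rows, one row per node), the `forward` function, and the weights are all hypothetical. Because the connections contain no cycles, the activations of each layer depend only on those of the preceding layer, so one loop over the layers is enough to compute the network's output.

```python
import math
from typing import List, Sequence

# Hypothetical representation: a layer is a list of weight rows, one per node,
# each row laid out as [w_0 (bias weight), w_1, ..., w_n].
Layer = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(layers: Sequence[Layer], inputs: Sequence[float]) -> List[float]:
    """Propagate inputs through a layered feed-forward network, one layer at a time."""
    activations = list(inputs)
    for layer in layers:
        xs = [1.0, *activations]  # bias input x_0 = 1, then the previous layer's outputs
        next_activations = []
        for row in layer:
            if len(row) != len(xs):
                raise ValueError("each weight row needs one weight per input plus a bias weight")
            next_activations.append(sigmoid(sum(w * x for w, x in zip(row, xs))))
        activations = next_activations  # only this layer feeds the next one
    return activations


# A hypothetical 2-3-1 network with arbitrary weights.
hidden = [[0.1, 0.4, -0.2], [-0.3, 0.2, 0.5], [0.0, -0.6, 0.1]]
output = [[0.2, 0.7, -0.4, 0.3]]
print(forward([hidden, output], [1.0, 0.5]))
```

A recurrent network could not be evaluated with a single pass like this, since a cycle makes a node's input depend on its own earlier output, so its activations have to be tracked across time steps instead.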

There are several ANN models that have practical applications, and we will explore a few of them in this chapter.