In this chapter, we will cover the following recipes:

- Configuring Visual Studio 2010 to use OpenGL

- Initializing and preparing OpenGL

- Reading and showing a frame from the image sensor (color/IR)

- Reading and showing a frame from the depth sensor

- Controlling the player when opening a device from file

- Recording streams to file (ONI file)

- Event-based reading of data

In the previous chapter, we learned how to initialize sensors and configure their output in the way we want. In this chapter, we will introduce ways to read this data and show it to the user.

This chapter is not about advanced or high-level (middleware) outputs. For now, we are going to talk only about native OpenNI outputs: color, IR, and depth.

But before anything, we need to know about some of OpenNI's related classes.

Unlike OpenNI 1.x, which used a different class (the MetaData types) to read frames from each kind of sensor, OpenNI 2.x has a single class for reading data from any sensor: openni::VideoFrameRef. This mirrors what we saw in the previous chapter, where a single openni::VideoStream class gave access to different sensors instead of one Generator class per sensor.

But let's forget about OpenNI 1.x. We are here to talk about OpenNI 2.x. In OpenNI 2.x, you don't need different classes to access frames; the only thing you need is the openni::VideoFrameRef class.

Every time we want to read a frame from a sensor's stream, we ask its openni::VideoStream object to read that frame from the device and give us access to it. This is done by calling the openni::VideoStream::readFrame() method. The method returns an openni::Status value that tells us whether the operation succeeded. If it did, we can access the frame data through the openni::VideoFrameRef variable that we passed to the method as a parameter.

That's it. Simple enough, but you need to know that this operation may not finish immediately if there is no data available to read. To understand this behavior, it helps to think about network streams (sockets). In socket programming, a socket can work in one of two modes: blocking or non-blocking. In blocking mode, a read request waits until there is some data to return. OpenNI 2.x behaves the same way. When we ask an openni::VideoStream object, which acts as a proxy for the physical sensor, to read a frame, OpenNI either returns the last unread frame instantly, if there is one, or waits for a new frame to become ready.

Let's talk a bit more about openni::VideoFrameRef. It has a number of methods that return information about the frame, but the most important one is openni::VideoFrameRef::getData(). This method returns an untyped pointer (void*) to the first pixel of the frame.

We must know the data type of the returned data before reading and processing it. To find out what it is, we can use the openni::VideoFrameRef::getVideoMode() method and check the pixel format. We can also request another data type by setting a new openni::VideoMode for the sensor. The following list shows the data types for the different pixel formats:

- unsigned char: This is used when we want to read from a frame with the openni::PixelFormat::PIXEL_FORMAT_GRAY8 pixel format. Each pixel in 8-bit grayscale is 1 byte (in C++ we know bytes as chars) with a range of 0-255.

- OniDepthPixel: As its name suggests, this is the main data type for reading depth data from a frame with the openni::PixelFormat::PIXEL_FORMAT_DEPTH_1_MM or openni::PixelFormat::PIXEL_FORMAT_DEPTH_100_UM pixel format. OniDepthPixel is actually an unsigned short data type (also known as UINT16 or uint16_t).

- OniRGB888Pixel: This is a structure containing three unsigned char values that represent the color of a pixel. It is used when the pixel format of a frame is openni::PixelFormat::PIXEL_FORMAT_RGB888. Red, green, and blue (the r, g, and b fields, in that order) are the three values of this structure.

- OniGrayscale16Pixel: Just as with OniDepthPixel, this data type is only an alias for unsigned short (also known as UINT16 or uint16_t). It is used when the frame has the openni::PixelFormat::PIXEL_FORMAT_GRAY16 pixel format.

- OniYUV422DoublePixel: This is a structure containing four unsigned char values that represent the colors of two pixels. It is used when the pixel format of a frame is openni::PixelFormat::PIXEL_FORMAT_YUV422.

Once you know the data type of the returned frame, you can cast the pointer to that type and advance it by the size of one pixel at a time to retrieve the following pixels one by one.
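The pointer walk described above can be sketched in plain C++. This is a minimal illustration, not OpenNI code: DepthPixel is a stand-in alias for the unsigned 16-bit type described for OniDepthPixel, nearestDepth is a hypothetical helper, and the buffer is assumed to have no row padding. Treating a value of 0 as "no reading" is also an assumption of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for OniDepthPixel, which the text describes as an unsigned short.
using DepthPixel = std::uint16_t;

// Walk `pixelCount` depth pixels starting at `data` and return the smallest
// non-zero value, or 0 if every pixel is zero.
// `data` plays the role of the untyped pointer returned by getData().
DepthPixel nearestDepth(const void* data, std::size_t pixelCount) {
    assert(data != nullptr || pixelCount == 0);
    // Cast the untyped pointer to the pixel type before reading anything.
    const DepthPixel* pixel = static_cast<const DepthPixel*>(data);
    DepthPixel nearest = 0;
    for (std::size_t i = 0; i < pixelCount; ++i, ++pixel) {
        // ++pixel moves forward by sizeof(DepthPixel) bytes, that is, one pixel.
        if (*pixel != 0 && (nearest == 0 || *pixel < nearest)) {
            nearest = *pixel;
        }
    }
    return nearest;
}
```

With a real frame, the pixel count for an unpadded image would be the frame's width multiplied by its height.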

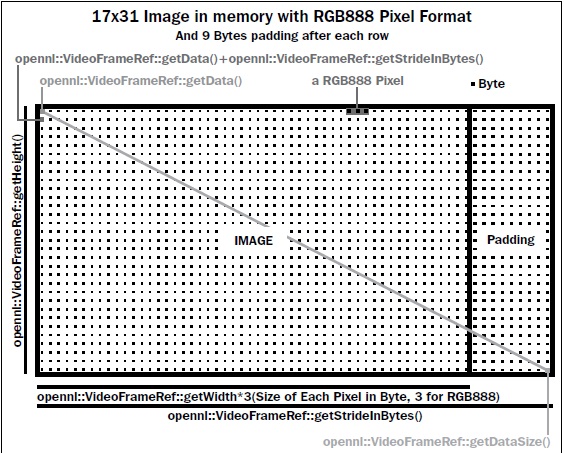

Another useful method is openni::VideoFrameRef::getStrideInBytes(). It returns an int holding the number of bytes in each row of the image, including padding, if any.

To understand this better, let's review how images are stored in memory. Although we think of an image as a two-dimensional array of pixels, it is actually stored as a one-dimensional array, with no marker showing where one row ends and the next begins. The program itself must work that out from the width and height of the image before it starts reading the data. In the simple case, the length of a row is the image width multiplied by the size of one pixel in bytes, which depends on the image's pixel format; for example, an image in RGB24 has 3 bytes of data per pixel. So a 640 x 480 image in RGB24 (also called RGB888) has 921600 (ImageWidth x ImageHeight x SizeOfEachPixel) bytes of data, and each row starts 1920 (ImageWidth x SizeOfEachPixel) bytes after the previous one.

This simple approach does not always work. An image can have padding (unused bytes) after each row, and this can break our code. Only the program that wrote the image to memory knows whether there is padding and how large it is; in this case, only OpenNI knows how the data must be read. That's why we have openni::VideoFrameRef::getStrideInBytes(). It returns the number of bytes that you must add to the position of the current row's first pixel to reach the next row's first pixel. Even though this number equals ImageWidth x SizeOfEachPixel most of the time, it is much safer to use this method.
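A stride-aware loop over a 3-bytes-per-pixel image might look like the following sketch. It uses only standard C++; sumFirstChannel and kBytesPerRgb888Pixel are hypothetical names, and the data, width, height, and strideInBytes arguments stand in for the values a frame would report through getData(), getWidth(), getHeight(), and getStrideInBytes().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr int kBytesPerRgb888Pixel = 3;

// The arithmetic from the text for a 640 x 480 image without padding.
static_assert(640 * kBytesPerRgb888Pixel == 1920, "bytes per row");
static_assert(640 * 480 * kBytesPerRgb888Pixel == 921600, "bytes per image");

// Sum the first channel byte of every visible pixel, skipping row padding.
unsigned long sumFirstChannel(const void* data, int width, int height,
                              int strideInBytes) {
    assert(data != nullptr);
    assert(strideInBytes >= width * kBytesPerRgb888Pixel);
    const std::uint8_t* firstByte = static_cast<const std::uint8_t*>(data);
    unsigned long total = 0;
    for (int y = 0; y < height; ++y) {
        // Jump to the start of row y using the stride, not width * pixel size.
        const std::uint8_t* row =
            firstByte + static_cast<std::size_t>(y) * strideInBytes;
        for (int x = 0; x < width; ++x) {
            total += row[x * kBytesPerRgb888Pixel];  // first byte of pixel x
        }
    }
    return total;
}
```

If the rows were instead assumed to be exactly width x 3 bytes long, every padded row would shift the following rows and the padding bytes would be read as pixel data.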

There are other methods too, such as the following:

- openni::VideoFrameRef::getDataSize(): This returns the size of the frame's data in bytes.

- openni::VideoFrameRef::getSensorType(): This returns an openni::SensorType value showing the type of the parent sensor (depth, IR, or color).

- openni::VideoFrameRef::getWidth(): This returns the number of pixels in each row of the frame.

- openni::VideoFrameRef::getHeight(): This returns the number of rows of the frame.

- openni::VideoFrameRef::getVideoMode(): This returns an object of type openni::VideoMode, which holds the current frame's video mode information, including the expected width, height, pixel format, and fps. The only value to rely on here is the pixel format, because the width and height are not trustworthy. It is better to use openni::VideoFrameRef::getWidth() and openni::VideoFrameRef::getHeight() instead.

- openni::VideoFrameRef::getTimestamp(): This returns the time at which the frame was generated, in microseconds, measured from an implementation-specific starting point.

- openni::VideoFrameRef::getFrameIndex(): This returns the index of the returned frame, counted from the start of the sensor.

There are plenty of other methods that we will discuss in other chapters.

For now, let's take a look at the following image to get an idea about each method's return value:

Please note that this image has a width of 17 pixels, a height of 31 pixels, and an RGB888 (also called RGB24) pixel format, meaning that every 3 bytes is equivalent to 1 pixel. Also, this sample has 9 bytes of padding after each row; in reality, though, a frame may have no padding at all.

We already know about the openni::OpenNI object from the previous chapter. We are going to introduce a method of openni::OpenNI here that we have not talked about before.

In the previous section, we saw that openni::VideoStream::readFrame() may not return immediately. This is not a big problem if you use one thread for each receiving process; for example, one thread for receiving depth frames, another for receiving color frames, and a third to get input from the user and control the other two. In this case, the two reading threads wait for new frames independently of each other and of the third thread. But what if you want to wait for a new frame from two different sources (different sensors or different devices) in the same thread? In this case, one read may block the other, and this may cause a big drop in frame rate. It becomes worse if you decide to receive the user's input in the same thread too.

Yet this is exactly what we want to do in all the recipes of this chapter. Writing a multithreaded application makes sense on current multicore PCs, but it is not always necessary, especially here, where no thread performs heavy calculations and each is idle most of the time, waiting for a new frame. We also don't want to make our code harder to read or understand by using third-party libraries for multithreading.

To solve this problem, we need a method that tells us whether one of the streams has new data to read. OpenNI provides such a method, named openni::OpenNI::waitForAnyStream(). This method receives one or more openni::VideoStream objects and tells you which one has new data to read. It also has a time-out parameter that sets how long to wait for one of the streams to have data. For example, if you want to read from two or more openni::VideoStream objects and want to know which one has a new frame, you can call openni::OpenNI::waitForAnyStream() in a loop with a high time-out value, since you don't need to do anything else except read frames. But if you want to do something else at the same time (for example, check user input while waiting for a new frame to become ready), you can call openni::OpenNI::waitForAnyStream() with a time-out of 0 or a very small value. We will be doing this in the recipes of this chapter.
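The zero time-out polling pattern can be sketched without the library. Everything in the block below is a stand-in: FakeStream is not openni::VideoStream, and waitForAny is a hypothetical function modeled on the general shape of the wait call (an array of stream pointers, a count, an output index, and a time-out). It never blocks, so it only illustrates the control flow of a single-threaded loop.

```cpp
#include <cassert>

// Hypothetical stand-in for a stream: it only tracks how many frames are
// waiting to be read and how many have been read so far.
struct FakeStream {
    int pendingFrames = 0;
    int framesRead = 0;
    void readFrame() {
        assert(pendingFrames > 0);
        --pendingFrames;
        ++framesRead;
    }
};

// Hypothetical stand-in for the wait call. It reports the index of the first
// stream with a pending frame through `readyIndex` and returns true, or
// returns false when nothing is ready. A real wait would block for up to
// `timeoutMs`; this sketch never blocks, so the value is ignored.
bool waitForAny(FakeStream** streams, int streamCount, int* readyIndex,
                int timeoutMs) {
    (void)timeoutMs;
    for (int i = 0; i < streamCount; ++i) {
        if (streams[i]->pendingFrames > 0) {
            *readyIndex = i;
            return true;
        }
    }
    return false;
}

// One pass of a single-threaded main loop: poll with a zero time-out, read at
// most one frame, then return so the caller can handle user input.
// Returns the index of the stream that was read, or -1 if none was ready.
int pollOnce(FakeStream** streams, int streamCount) {
    int readyIndex = -1;
    if (!waitForAny(streams, streamCount, &readyIndex, 0)) {
        return -1;
    }
    streams[readyIndex]->readFrame();
    return readyIndex;
}
```

A main loop would call pollOnce repeatedly, use the returned index to decide which image to refresh, and check for user input between calls. Because the stream that is read is always one that reported a pending frame, the read itself does not stall the loop.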